Abstract

To minimize the variance of Monte Carlo estimators, we develop a novel exponential embedding technique that extends the classical concept of sufficient statistics in importance sampling. Our method demonstrates bounded relative error and logarithmic efficiency when applied to normal and gamma distributions, especially in rare event scenarios. To illustrate this innovative technique, we address the problem of credit risk measurement in portfolios and present an efficient simulation algorithm to estimate the likelihood of significant portfolio losses, leveraging multi-factor models with a normal mixture copula. Finally, supported by comprehensive simulation studies, our approach offers a more effective and efficient way to simulate moderately rare events.


1 Introduction

Many scientific and statistical applications involve calculating a small probability and/or the expectation of a complicated function of a given random variable, as discussed in Asmussen and Glynn (2007). Often, the quantity in question is too complex for an analytical solution, necessitating the use of Monte Carlo simulation methods. More specifically, consider a probability space \(( \Omega , \mathscr {F}, P)\). Here, \(\Omega \) is the sample space corresponding to the outcomes of a certain experiment (which may be hypothetical), \(\mathscr {F}\) is the \(\sigma \)-algebra of subsets of \(\Omega \), and P is a probability measure defined on these subsets. Now let \(X=(X_1,\ldots ,X_d)^\intercal \) be a d-dimensional random vector on \((\Omega , \mathscr {F}, P)\) and let \(\mathscr {P}(\cdot )\) be a real-valued function from \(\mathbbm {R}^d\) to \(\mathbbm {R}\), where \(~^\intercal \) denotes the transpose. The problem of interest is to calculate a functional of the form

$$\begin{aligned} m = E_P[\mathscr {P}(X)], \end{aligned}$$(1)

where \(E_P[\cdot ]\) is the expectation operator under the probability measure P. When \(\mathscr {P}(X)\) is an indicator function \(\mathbbm {1}_{\{X \in A\}}\) for a Borel set \(A \subset \mathbbm {R}^d\), then \(m = P\{X \in A\}.\)

Because \(\mathscr {P}\) is typically complicated, analytical calculation of \(E_P[\mathscr {P}(X)]\) is often unavailable, and one must apply simulation methods. However, naive Monte Carlo methods are inefficient in computing small probabilities because the estimator's standard deviation becomes large relative to its mean as the probability shrinks, so that many repeated trials are needed to achieve acceptable accuracy. Consequently, importance sampling based on exponential tilting was proposed by Siegmund (1976) as a variance reduction technique in rare-event simulation for the case of independent and identically distributed (i.i.d.) random variables. The basic idea is to sample under an alternative probability and adjust by the likelihood ratio (Radon–Nikodym derivative). Usually, the alternative distribution is designed based on a certain criterion within a family of parameterized distributions. Introducing this change of measure always yields an unbiased estimator. The likelihood ratio function must be positive and hence is usually constructed as the exponential function. This family of alternative distributions is also known as the exponential tilting family. For various extensions and applications, see, e.g., Glynn and Iglehart (1989), Sadowsky and Bucklew (1990), Ben Rached et al. (2018), Owen et al. (2017), and references therein. A comprehensive introduction can be found in Asmussen and Glynn (2007) and Owen (2013).
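The inefficiency of naive Monte Carlo for small probabilities can be quantified with a short calculation. The sketch below is illustrative only: it assumes the naive estimator is the sample mean of n i.i.d. indicators with success probability m, so that its relative standard error is \(\sqrt{(1-m)/(mn)}\). The function names are hypothetical and do not come from the paper.

```python
import math


def naive_relative_error(m: float, n: int) -> float:
    """Relative standard error of the mean of n i.i.d. indicators with P(hit) = m."""
    return math.sqrt((1.0 - m) / (m * n))


def samples_for_relative_error(m: float, target: float) -> int:
    """Smallest n for which the naive relative standard error is at most `target`."""
    return math.ceil((1.0 - m) / (m * target ** 2))
```

For a fixed target relative error, the required sample size grows roughly like 1/m, which is why even moderately rare events (m around \(10^{-2}\) to \(10^{-3}\)) benefit from variance reduction.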

From a theoretical point of view, importance sampling calls for algorithms that efficiently simulate the right tail of a given random variable. In the case of distributions with light right tails (i.e., those that decay at an exponential rate or faster), under some regularity assumptions, the standard exponential tilting importance sampling estimator, cf. Asmussen and Glynn (2007), satisfies the logarithmic efficiency property, which is a useful metric for assessing the efficiency of an estimator. In contrast, for heavy-tailed distributions, such as lognormal distributions and Weibull distributions with shape parameter strictly less than 1, the exponential tilting method cannot be applied. Therefore, efficient algorithms have been developed to estimate tail probabilities involving heavy tails. In this regard, Asmussen and Binswanger (1997) provide the first logarithmically efficient estimator for such probabilities. Asmussen and Kroese (2006) propose an estimator with a bounded relative error under distributions with regularly varying tails.

In addition to state-independent importance sampling algorithms, more complex state-dependent importance sampling has been proposed in the literature over the last few years to estimate probabilities for sums of heavy-tailed independent random variables (e.g., Dupuis and Wang 2004; Dupuis et al. 2007; Blanchet and Liu 2008; Blanchet and Li 2011; Blanchet and Lam 2012; Amar et al. 2023). Some researchers address the left-tail region, that is, the probability that sums of nonnegative random variables fall below a sufficiently small threshold (e.g., Asmussen et al. 2016; Ben Issaid et al. 2017; Ben Rached et al. 2015, 2018, 2020a, b, 2021; Fuh et al. 2023a).

Importance sampling has also been applied in other fields. To name a few, it has been used to improve the convergence rate of stochastic gradient-based optimization algorithms in Zhao and Zhang (2015), Richtárik and Takáč (2016), Johnson and Guestrin (2018), Katharopoulos and Fleuret (2018), Csiba and Richtárik (2018), and Fuh et al. (2023b). Metelli et al. (2018) and Metelli et al. (2020) apply importance sampling in the target policy.

A useful tool in importance sampling for rare event simulation (\(m = P\{X \in A\}\)) is exponential tilting (e.g., Siegmund 1976; Sadowsky and Bucklew 1990), which is more efficient for large-deviation rare events (m of the order \(10^{-5}\) or less) than for events that are not rare. Examples of such events occur in telecommunications (m = bit-loss rate, probability of buffer overflow) and reliability (m = the probability of failure before time t). Efficient Monte Carlo simulation of such events is proposed by Sadowsky and Bucklew (1990) based on the large deviations theory given by Ney (1983).

In contrast to the above-mentioned simulation of extremely rare events, in this paper we are interested in simulating moderately rare events, that is, when \(m = P\{X \in A\}\) is small, say, of the order \(10^{-2}\) or \(10^{-3}\); i.e., \(\{ X \in A\}\) is a moderate-deviation rare event. Such problems appear in the construction of confidence regions for asymptotically normal statistics (e.g., Efron and Tibshirani 1994), and in the computation of value-at-risk (VaR) in risk management (e.g., Duffie and Singleton 2003; Glasserman et al. 2000, 2002; Fuh et al. 2011). For the calculation of VaR in financial risk management, reducing the computational time is essential, cf. Chapter 9 of Glasserman (2004). For a more general \(E_P[\mathscr {P}(X)]\) such as portfolio loss (e.g., Li 2000; Glasserman and Li 2005; Glasserman et al. 2007, 2008; Bassamboo et al. 2008; Chan and Kroese 2010), see Sect. 3 for details.

To provide a more accurate simulation algorithm for a moderately rare event, instead of using large-deviation exponential tilting, an alternative is to choose the tilting parameter by directly minimizing the variance of the importance sampling estimator. For example, Johns (1988), Do and Hall (1991), and Fuh and Hu (2004) study efficient simulations for bootstrapping the sampling distribution of statistics of interest. Su and Fu (2000) and Fu and Su (2002) minimize the variance under the original probability measure. Fuh et al. (2011) apply the importance sampling method for VaR computation under a multivariate t-distribution, and show that their proposed method consistently outperforms, in the sense of variance reduction, existing methods derived from large deviations theory under various settings.

Our criterion in the search for desirable tilting parameters is to minimize the variance of the estimator. Numerically, this procedure is based on a pre-sampling stochastic approximation algorithm. This problem has been well studied in Egloff et al. (2005), Kawai (2009), and Kawai (2018). Overall, our algorithm has two stages. We first search for the desired tilting parameters and then obtain samples under the alternative distribution. This is similar to adaptive importance sampling with stochastic approximation in Ahamed et al. (2006) in the sense that both approaches use stochastic approximation to learn the optimal sampling distribution. However, our method treats the search step as a separate stage rather than an adaptive online procedure. Moreover, the proposed exponential tilting family is based on sufficient statistics.

Note that for events of large deviations \(P\{X \in A = (a, \infty )\}\) for some \(a > 0\), Sadowsky and Bucklew (1990) show that the asymptotically optimal alternative distribution is obtained through exponential tilting; that is, \(Q(dx) = C \exp (\theta x)P(dx)\), where C is a normalizing constant and \(\theta \) determines the amount of tilting. The optimal amount of tilting \(\theta \) is such that the expectation of X under the Q-measure equals the dominating point, which is located at the boundary a of A. However, for a moderately deviating rare event, Do and Hall (1991) and Fuh and Hu (2004) show that typically the tilting point \(\theta ^*\) of the optimal alternative distribution is inside \((a,\infty )\). This is different from that given by large deviations theory. However, it is shown in Fuh and Hu (2004) that \(\theta ^* - a\) approaches 0 as \(a \rightarrow \infty \); see Example 1 in Sect. 2.

Along this line, the proposed exponential embedding is based on the idea of sufficient statistics. To illustrate the concept of sufficient exponential embedding, we consider a one-dimensional normally distributed random variable. Let \(X \sim N(0,1)\) be a random variable with the standard normal distribution, with probability density function (pdf) \(e^{-x^2/2}/\sqrt{2\pi }\). Then the sufficient exponential embedding is

$$\begin{aligned} Q(dx) = C \exp (\theta x + \eta x^2)P(dx), \end{aligned}$$

where C is a normalizing constant. Note that here for the normal distribution, the sufficient statistic is \(T(x) = [x \,\,x^2]\). In contrast to the classical one-parameter exponential embedding, the sufficient exponential embedding is based on two parameters \((\theta , \eta )\) corresponding to the sufficient statistic \(T(x)= [x \,\,x^2]\). A systematic study of this technique will be given in Sect. 2, in which we also use the idea of the conjugate measure of Q, \(\bar{Q} (dx) = C \exp (- (\theta x + \eta x^2))P(dx)\), to characterize the optimal tilting \((\theta ,\eta )\) by solving the equation wherein the expectation of \((X,X^2)\) under the Q-measure equals the conditional expectation of \((X,X^2)\) under the \(\bar{Q}\)-measure given the rare event. To illustrate the technique, we tackle the problem of assessing credit risk in portfolios that include financial instruments such as loans and bonds. We propose a streamlined simulation algorithm to efficiently estimate the probability of substantial portfolio losses.

There are two aspects to this study. To begin with, based on the criterion of minimizing the variance of the importance sampling estimator, we propose an importance sampling algorithm based on an unconventional sufficient (statistic) exponential embedding. We term this sufficient exponential tilting because the form of the embedding is selected based on the sufficient statistic of the underlying distribution, so that more than one parameter (usually two parameters) can be tilted in our method; for instance, the tilting parameters can be the location and scale parameters in the multivariate normal distribution. Theoretical investigations, including bounded relative error and logarithmic efficiency analysis (Theorems 5–6), and numerical studies are given to support the proposed importance sampling method. In Theorem 5 for the normal distribution, to simulate \(\{X > a\}\) for large \(a > 0\), we show that optimal sufficient exponential tilting outperforms traditional optimal one-parameter exponential tilting in the sense of reducing asymptotic variance. In Theorem 6 for the gamma distribution, to simulate \(\{X > a\}\) and \(\{X < 1/a\}\) for large \(a > 0\), we show that optimal sufficient exponential tilting outperforms traditional optimal one-parameter exponential tilting. Furthermore, for the normal, multivariate normal, and gamma distributions, our simulation study shows that sufficient exponential tilting performs 2 to 5 times better than classical one-parameter exponential tilting for some simple rare event simulations. Note that the proposed tilting formula is more suitable for the grouped normal mixture copula model, and is of independent interest. In particular, when applying sufficient exponential tilting in the normal mixture models, the tilting parameter can be either the shape or the rate parameter of the underlying gamma distribution, which results in a more efficient simulation.

Next, by combining a fast computational method that characterizes how the rare event occurs with the proposed importance sampling method, we provide an efficient simulation algorithm for a multi-factor model with the normal mixture copula to estimate the probability that the portfolio incurs large losses. To be more precise, in this stage, we use the inverse Fourier transform to handle the distribution of total losses, i.e., the sum of n “weighted” independent but non-identically distributed Bernoulli random variables. An automatic variant of Newton’s method is introduced to determine the optimal tilting parameters. Note that the proposed simulation device is based on importance sampling for a joint probability rather than the conditional probability used in previous studies, and is also shown to achieve variance reduction in the asymptotic sense in terms of bounded relative error (Theorem 7). Finally, to illustrate the applicability of our method, we report numerical results of the proposed algorithm under various copula settings, and highlight insights into the trade-off between reduced variance and increased computational time.

The remainder of this paper is organized as follows. Section 2 presents a general account of importance sampling based on sufficient exponential embedding. Section 3 formulates the problem of estimating large portfolio losses, presents the normal mixture copula model, and studies the proposed optimal importance sampling for portfolio loss under the normal mixture copula model. Section 4 concludes. Some proofs and numerical results are deferred to “Appendices A–D”.

2 Sufficient exponential tilting

To calculate the value of (1) using importance sampling, one selects a sampling probability measure Q under which X has a pdf \(q(x) = q(x_1,\ldots ,x_d)\) with respect to the Lebesgue measure \(\mathscr {L}\). Q is assumed to be absolutely continuous with respect to the original probability measure P. Let f(x) denote the pdf of X under P. Then Eq. (1) can be written as

$$\begin{aligned} \int _{\mathbbm {R}^d} \mathscr {P}(x)f(x)dx = \int _{\mathbbm {R}^d} \mathscr {P}(x)\frac{f(x)}{q(x)}q(x)dx = E_Q\bigg [\mathscr {P}(X)\frac{f(X)}{q(X)}\bigg ], \end{aligned}$$(2)

where \(\text{ E}_Q[\cdot ]\) is the expectation operator under which X has a pdf q(x) with respect to the Lebesgue measure \(\mathscr {L}\). The ratio f(x)/q(x) is called the importance sampling weight, the likelihood ratio, or the Radon–Nikodym derivative.
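As a concrete instance of the identity \(m = E_Q[\mathscr {P}(X)f(X)/q(X)]\), the following minimal sketch estimates \(P\{X > a\}\) for \(X \sim N(0,1)\) by sampling from a shifted normal \(N(\mu _q, 1)\) and averaging the weighted indicator. The choice of proposal, the function names, and the sample size are assumptions made for illustration; they are not the tuned algorithm of this paper.

```python
import math
import random


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def is_tail_probability(a, n, mu_q, seed=0):
    """Importance sampling estimate of P{X > a}, X ~ N(0,1), proposal N(mu_q, 1).

    Returns the estimate and its estimated standard error.
    """
    rng = random.Random(seed)
    s1 = 0.0  # running sum of weighted indicators
    s2 = 0.0  # running sum of their squares
    for _ in range(n):
        x = rng.gauss(mu_q, 1.0)
        if x > a:
            w = normal_pdf(x) / normal_pdf(x, mu_q)  # likelihood ratio f/q
            s1 += w
            s2 += w * w
    estimate = s1 / n
    variance = max(s2 / n - estimate ** 2, 0.0)
    return estimate, math.sqrt(variance / n)


def exact_tail(a):
    """Exact P{X > a} for the standard normal, via the complementary error function."""
    return 0.5 * math.erfc(a / math.sqrt(2.0))
```

Calling `is_tail_probability(3.0, 20000, 3.0)` and comparing with `exact_tail(3.0)` gives a quick check that the weighted estimator is consistent with the closed-form tail probability.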

Here, we focus on the exponentially tilted probability measure of P. Instead of considering the commonly adopted one-parameter exponential tilting in the literature (Asmussen and Glynn 2007), we propose a sufficient exponential tilting algorithm. To the best of our knowledge, the use of sufficient exponential embedding is novel in the literature. As will be seen in the examples in Sect. 2.1, the tilting probabilities for existing two-parameter distributions, such as the gamma and normal distributions, can be obtained by solving simple formulas.

Let \(Q_{\theta ,\eta }\) be the tilting probability measure, where the subscripts \(\theta =(\theta _1,\ldots ,\theta _p)^\intercal \in \mathbbm {R}^p\) and \(\eta =(\eta _1,\ldots ,\eta _\kappa )^\intercal \in \mathbbm {R}^\kappa \) are the tilting parameters. Here p and \(\kappa \) denote the dimensions of \(\theta \) and \(\eta \), respectively. Let \(h_1(x)\) be a function from \(\mathbbm {R}^d\) to \(\mathbbm {R}^p\), and \(h_2(x)\) be a function from \(\mathbbm {R}^d\) to \(\mathbbm {R}^\kappa \). Denote \(\Theta \) and H as the parameter spaces such that the moment-generating function \(\Psi (\theta ,\eta ):= E_P [\text{ e}^{\theta ^\intercal h_1(X) + \eta ^\intercal h_2(X)}]\) of \((h_1(X),h_2(X))\) exists for \(\theta \in \Theta \subset \mathbbm {R}^p \) and \(\eta \in H \subset \mathbbm {R}^\kappa \). Let \(f_{\theta ,\eta }(x)\) be the pdf of X under the exponentially tilted probability measure \(Q_{\theta ,\eta }\), defined by

$$\begin{aligned} f_{\theta ,\eta }(x) = \exp \big (\theta ^\intercal h_1(x) + \eta ^\intercal h_2(x) - \psi (\theta ,\eta )\big )f(x), \end{aligned}$$(3)

where \(\psi (\theta ,\eta )=\ln \Psi (\theta ,\eta )\) is the cumulant function. Note that in (3), we present one type of parameterization which is suitable for our derivation. Explicit representations of \(h_1(x)\) and \(h_2(x)\) for specific distributions are given in the examples and remarks in Sect. 2.1, which include the normal and multivariate normal distributions as well as the gamma distribution.

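Consider the sufficient exponential embedding. Equation (2) becomes

$$\begin{aligned} \int _{\mathbbm {R}^d} \mathscr {P}(x)f(x)dx = E_{Q_{\theta ,\eta }}\Big [\mathscr {P}(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )}\Big ]. \end{aligned}$$

Because of the unbiasedness of the importance sampling estimator, its variance is

$$\begin{aligned} \textrm{Var}_{Q_{\theta ,\eta }}\Big [\mathscr {P}(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )}\Big ] = E_{Q_{\theta ,\eta }}\Big [\Big (\mathscr {P}(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )}\Big )^2\Big ] - m^2, \end{aligned}$$(4)

where \(m=\int _{\mathbbm {R}^d} \mathscr {P}(x)f(x)dx.\) For simplicity, we assume that the variance of the importance sampling estimator exists. A simple example is the case in which \(\mathscr {P}(X)\) is bounded and the parameters (\(\theta ,\eta \)) lie in the domain discussed above. For instance, in the one-dimensional Gaussian case with \(\mathscr {P}(X) = \mathbbm {1}_{\{X>a\}}\) or \(\mathscr {P}(X) = \mathbbm {1}_{\{X<a\}}\), it is possible to determine the exact domain on which the variance is finite. The same is true in the case where X follows a gamma distribution. We will consider these two cases in Theorems 5 and 6.

Define the first term of the right-hand side (RHS) of (4) by \(G(\theta ,\eta )\). Then minimizing the variance in (4) is equivalent to minimizing \(G(\theta ,\eta )\), because \(m^2\) does not depend on \((\theta ,\eta )\). Standard algebra gives a simpler form of \(G(\theta ,\eta )\):

$$\begin{aligned} G(\theta ,\eta ) = E_P\Big [\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )}\Big ], \end{aligned}$$(5)

which is used to find the optimal tilting parameters. In the following theorem, we show that \(G(\theta ,\eta )\) in (5) is a convex function in \(\theta \) and \(\eta \). This property rules out multiple local minima in the search stage when determining the optimal tilting parameters.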
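The "standard algebra" behind this simplification is a single change of measure. The derivation below is our own expansion of that step, assuming the tilted density has the form \(f_{\theta ,\eta }(x) = \exp (\theta ^\intercal h_1(x) + \eta ^\intercal h_2(x) - \psi (\theta ,\eta ))f(x)\), where f is the pdf of X under P; the shorthand \(t(x) := \theta ^\intercal h_1(x) + \eta ^\intercal h_2(x)\) is introduced here only for brevity.

$$\begin{aligned} E_{Q_{\theta ,\eta }}\Big [\big (\mathscr {P}(X)\text{ e}^{-t(X)+\psi (\theta ,\eta )}\big )^2\Big ]&= \int _{\mathbbm {R}^d} \mathscr {P}^2(x)\,\text{ e}^{-2t(x)+2\psi (\theta ,\eta )}\,\text{ e}^{t(x)-\psi (\theta ,\eta )}f(x)\,dx \\&= \int _{\mathbbm {R}^d} \mathscr {P}^2(x)\,\text{ e}^{-t(x)+\psi (\theta ,\eta )}f(x)\,dx \\&= \Psi (\theta ,\eta )\,E_P\big [\mathscr {P}^2(X)\,\text{ e}^{-t(X)}\big ]. \end{aligned}$$

In words, the second moment under \(Q_{\theta ,\eta }\) is the product of the moment-generating function \(\Psi (\theta ,\eta )\) and a payoff-weighted expectation under P with the sign of the tilt reversed.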

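To minimize \(G(\theta ,\eta )\), the first-order condition requires the solution of \(\theta , \eta \), denoted by \(\theta ^*,\eta ^*\), to satisfy \(\nabla _\theta G(\theta ,\eta )\mid _{\theta =\theta ^*}=0\), and \(\nabla _\eta G(\theta ,\eta )\mid _{\eta =\eta ^*}=0\), where \(\nabla _\theta \) denotes the gradient with respect to \(\theta \) and \(\nabla _\eta \) denotes the gradient with respect to \(\eta \). Under the condition that X is in an exponential family, and \(\psi (\theta , \eta )\) is a bounded continuously differentiable function with respect to \(\theta \) and \(\eta \), by the dominated convergence theorem, simple calculation yields

$$\begin{aligned} \nabla _\theta G(\theta ,\eta )&= E_P\Big [\mathscr {P}^2(X)\big (\nabla _\theta \psi (\theta ,\eta ) - h_1(X)\big )\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )}\Big ], \\ \nabla _\eta G(\theta ,\eta )&= E_P\Big [\mathscr {P}^2(X)\big (\nabla _\eta \psi (\theta ,\eta ) - h_2(X)\big )\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )}\Big ]; \end{aligned}$$

therefore, \((\theta ^*, \eta ^*)\) is the root of the following system of nonlinear equations:

$$\begin{aligned} \nabla _\theta \psi (\theta ,\eta ) = \frac{E_P\big [\mathscr {P}^2(X)h_1(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}{E_P\big [\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}, \end{aligned}$$(6)

$$\begin{aligned} \nabla _\eta \psi (\theta ,\eta ) = \frac{E_P\big [\mathscr {P}^2(X)h_2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}{E_P\big [\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}. \end{aligned}$$(7)

To simplify the RHS of (6) and (7), we define the conjugate measure \(\bar{Q}^{\mathscr {P}}_{\theta ,\eta }\) of the measure Q with respect to the payoff function \(\mathscr {P}\) as

$$\begin{aligned} \frac{d\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}{dP} = \frac{\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}}{E_P\big [\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]} = \mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))-\bar{\psi }(\theta ,\eta )}, \end{aligned}$$

where \(\bar{\psi }(\theta ,\eta )\) is \(\log \bar{\Psi }(\theta ,\eta )\) with \(\bar{\Psi } (\theta ,\eta )=E_P[\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X) +\eta ^\intercal h_2(X))}\!]\). Then the RHS of (6) equals \(E_{\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}[h_1(X)\!]\), the expected value of \(h_1(X)\) under \(\bar{Q}^{\mathscr {P}}_{\theta ,\eta }\), and the RHS of (7) equals \(E_{\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}[ h_2(X)]\), the expected value of \(h_2(X)\) under \(\bar{Q}^{\mathscr {P}}_{\theta ,\eta }\).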

The following theorem establishes the existence, uniqueness, and characterization for the minimizer of (5). Before that, to ensure the finiteness of the moment-generating function \(\Psi (\theta ,\eta )\), we add a condition that \(\Psi (\theta ,\eta )\) is steep, cf. Asmussen and Glynn (2007). To define steepness, let \(\theta ^{-}_i= (\theta _1,\ldots , \theta _i,\ldots ,\theta _p) \in \Theta \) such that all \(\theta _k\) are fixed for \(k=1,\ldots ,i-1,i+1,\ldots ,p\) except the i-th component. Denote \(\eta _j^- \in H\) correspondingly for \(j=1,\ldots ,{\kappa }\). Now, let \(\theta _{i,\max }:= \sup \{ \theta _i: \Psi (\theta ^{-}_i,\eta ) < \infty \}\) for \(i=1,\ldots ,p\), and \(\eta _{j,\max }:= \sup \{ \eta _j: \Psi (\theta ,\eta ^-_j) < \infty \}\) for \(j=1,\ldots ,{\kappa }\) (for light-tailed distributions, we have \(0 < \theta _{i,\max } \le \infty \) for \(i=1,\ldots ,p\), and \(0 < \eta _{j,\max } \le \infty \) for \(j=1,\ldots ,{\kappa }\)). Then steepness means \(\Psi (\theta ,\eta ) \rightarrow \infty \) as \(\theta _i \rightarrow \theta _{i,\max }\) for \(i=1,\ldots ,p\), or \(\eta _j \rightarrow \eta _{j,\max }\) for \(j=1,\ldots ,{\kappa }\).

The following conditions are used in Theorem 1:

i) \(\bar{\Psi }(\theta ,\eta )\Psi (\theta ,\eta ) \rightarrow \infty \) as \(\theta _i \rightarrow \theta _{i,\max }\) for \(i=1,\ldots ,p\), or \(\eta _j \rightarrow \eta _{j,\max }\) for \(j=1,\ldots ,{\kappa }\);

ii) \(G(\theta ,\eta )\) is a continuously differentiable function on \(\Theta \times H\), and

$$\begin{aligned} \max _{i=1,\ldots ,p,~ j = 1,\ldots , {\kappa }} \bigg \{ \lim _{ \theta _i \rightarrow \theta _{i,\max } } \frac{\partial G(\theta ,\eta )}{\partial \theta _i}, \lim _{ \eta _j \rightarrow \eta _{j,\max } } \frac{\partial G(\theta ,\eta )}{ \partial \eta _j} \bigg \} > 0.\nonumber \\ \end{aligned}$$(8)

Note that condition i) or ii) is used to guarantee the existence of the minimum point. More details can be found in the proof of Theorem 1.

Theorem 1

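Suppose the moment-generating function \(\Psi (\theta ,\eta )\) of \((h_1(X),h_2(X))\) exists for \(\theta \in \Theta \subset {\mathbbm {R}^p}\) and \(\eta \in H \subset {\mathbbm {R}^\kappa }\). Assume \(\Psi (\theta ,\eta )\) is steep. Furthermore, assume either i) or ii) holds. Then \(G(\theta ,\eta )\) defined in (5) is a convex function in \((\theta ,\eta )\), and there exists a unique minimizer of (5), which satisfies

$$\begin{aligned} \nabla _\theta \psi (\theta ,\eta ) = E_{\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}[h_1(X)], \end{aligned}$$(9)

$$\begin{aligned} \nabla _\eta \psi (\theta ,\eta ) = E_{\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}[h_2(X)]. \end{aligned}$$(10)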

Proof

To prove Theorem 1, we require the following three propositions. Proposition 2 is taken from Theorem VI.3.4. of Ellis (1985), Proposition 3 is a standard result from convex analysis, and Proposition 4 is taken from Theorem 1 of Soriano (1994). Note that although the function domain is the whole space in Propositions 3 and 4, the results for a subspace still hold with similar proofs.

Proposition 2

Let f be a convex function on \(\mathscr {X} \subset \mathbbm {R}^d\). Then f(x) is differentiable at \( x \in int(\mathscr {X})\) if and only if the d partial derivatives \(\frac{\displaystyle \partial f(x)}{\displaystyle \partial x_{i}}\) for \(i =1,\ldots , d\) exist at x and are finite.

Proposition 3

Let \(f: \mathbbm {R}^d \rightarrow \mathbbm {R}\) be continuous on all of \(\mathbbm {R}^d\). If f is coercive (in the sense that \(f({x}) \rightarrow \infty \) if \(\Vert {x}\Vert \rightarrow \infty \)), then f has at least one global minimizer.

Proposition 4

Let \(f: \mathbbm {R}^d \rightarrow \mathbbm {R}\) be a continuously differentiable, convex function that satisfies (8). Then a minimum point for f exists.

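Below, we first show that \(G(\theta ,\eta )\) is a strictly convex function. For any given \(\lambda \in (0,1)\), and distinct \((\theta ,\eta ), (\theta ',\eta ') \in \Theta \times H \subset \mathbbm {R}^p\times \mathbbm {R}^\kappa \), by the convexity of \(\psi (\cdot ,\cdot )\), we have

$$\begin{aligned} \psi \big (\lambda (\theta ,\eta ) + (1 - \lambda )(\theta ',\eta ')\big ) \le \lambda \psi (\theta ,\eta ) + (1-\lambda )\psi (\theta ',\eta '). \end{aligned}$$

Note that \( \lambda (\theta ,\eta ) + (1 - \lambda )(\theta ',\eta ') = (\lambda \theta + (1 - \lambda )\theta ', \lambda \eta + (1 - \lambda )\eta ')\) is a \((p+\kappa )\)-dimensional vector. Then

$$\begin{aligned} G\big (\lambda (\theta ,\eta ) + (1 - \lambda )(\theta ',\eta ')\big )&= E_P\Big [\mathscr {P}^2(X)\text{ e}^{-((\lambda \theta + (1 - \lambda )\theta ')^\intercal h_1(X)+(\lambda \eta + (1 - \lambda )\eta ')^\intercal h_2(X))+\psi (\lambda (\theta ,\eta ) + (1 - \lambda )(\theta ',\eta '))}\Big ] \\&\le E_P\Big [\mathscr {P}^2(X)\text{ e}^{\lambda (-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))+\psi (\theta ,\eta )) + (1-\lambda )(-(\theta '^\intercal h_1(X)+\eta '^\intercal h_2(X))+\psi (\theta ',\eta '))}\Big ] \\&< \lambda G(\theta ,\eta ) + (1-\lambda )G(\theta ',\eta '), \end{aligned}$$

where the last step uses the strict convexity of the exponential function.

Next, we prove the existence of a minimizer in the optimization problem (5). To obtain the global minimum of \(G(\theta ,\eta )\), we note that \(G(\theta ,\eta )\) is strictly convex from the above argument, and \(\frac{\displaystyle \partial G(\theta ,\eta )}{\displaystyle \partial \theta _{i}}\) and \(\frac{\displaystyle \partial G(\theta ,\eta )}{\displaystyle \partial \eta _{j}}\) exist for \(i =1,\ldots , p\), \(j=1,\ldots , {\kappa }\). Proposition 2 establishes that \(G(\theta ,\eta )\) is continuously differentiable for \((\theta ,\eta ) \in \Theta \times H\). By the definition of \(G(\theta ,\eta )\) in (5), it is easy to see that condition i) implies that \(G(\theta ,\eta )\) is coercive. Then by Proposition 3, \(G(\theta ,\eta )\) has a global minimizer, which is unique by strict convexity. Clearly, ii) implies that the conditions in Proposition 4 hold, so a minimizer exists in that case as well and is again unique by strict convexity.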

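To prove (9) and (10), we simplify the right-hand side of (6) and (7) under \(\bar{Q}^{\mathscr {P}}_{\theta ,\eta }\). Writing \(h_{1,i}\) and \(h_{2,j}\) for the i-th component of \(h_1\) and the j-th component of \(h_2\), standard algebra yields

$$\begin{aligned} \frac{E_P\big [\mathscr {P}^2(X)h_{1,i}(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}{E_P\big [\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}&= E_{\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}[h_{1,i}(X)], \\ \frac{E_P\big [\mathscr {P}^2(X)h_{2,j}(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}{E_P\big [\mathscr {P}^2(X)\text{ e}^{-(\theta ^\intercal h_1(X)+\eta ^\intercal h_2(X))}\big ]}&= E_{\bar{Q}^{\mathscr {P}}_{\theta ,\eta }}[h_{2,j}(X)], \end{aligned}$$

for \(i=1,\ldots ,p,~j=1,\ldots ,{\kappa }\). This implies the desired result. \(\square \)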

Remark 1

(a) To keep the exponentially tilted probability measure \(Q_{\theta ,\eta }\) within the same exponential family as the original probability measure P, one possible selection of \(h_1(x)\) and \(h_2(x)\) is based on the sufficient statistic of the original probability distribution. For example, for the normal distribution, the sufficient statistic is \(T(x)=[x\,\,x^2]\) and thus \(h_1(x)=x\) and \(h_2(x)=x^2\); for the gamma distribution, the sufficient statistic is \(T(x)=[\log x\,\, x]\) and thus \(h_1(x)=\log x\) and \(h_2(x)=x\), and for the beta distribution, the sufficient statistic is \(T(x)=[\log x\,\, \log (1-x)]\) and thus \(h_1(x)=\log x\) and \(h_2(x)=\log (1-x)\). Such a device can be applied to other distributions as well, such as the lognormal distribution, the inverse Gaussian distribution, and the inverse gamma distribution.

(b) Now, we provide a heuristic explanation for this device. The idea of using a sufficient statistic for exponential tilting is that we can treat this tilting as a sufficient exponential tilting within the same given parametric family. Furthermore, by using the Fisher–Neyman factorization theorem in the exponential family, we note that this device provides the maximum degree of freedom for exponential tilting within the same exponential family. We also expect an analogue of the Rao–Blackwell theorem to hold for sufficient exponential tilting: it minimizes the mean square loss among all importance sampling schemes in the same exponential embedding.

2.1 Examples for multivariate normal and gamma distributions

To illustrate the proposed sufficient exponential tilting, we present examples of the multivariate normal distribution and the gamma distribution. We choose these two distributions to indicate the location and scale properties of the sufficient exponential tilting used in our general framework. In these examples, by using a suitable re-parameterization, we obtain neat tilting formulas for each distribution based on its sufficient statistic. Our simulation studies also show that the proposed sufficient exponential tilting performs 2 to 5 times better than classical one-parameter exponential tilting for some simple rare events.

Here we check the validity of applying Theorem 1 to these examples. First, we note that both the multivariate normal distribution and the gamma distribution are steep. Next, it is easy to see that the sufficient conditions \(\bar{\Psi }(\theta ,\eta )\Psi (\theta ,\eta ) \rightarrow \infty \) as \(\theta _i \rightarrow \theta _{i,\max }\) for \(i=1,\ldots ,p\), or \(\eta _j \rightarrow \eta _{j,\max }\) for \(j=1,\ldots ,{\kappa }\) in Theorem 1 hold in each example. For example, when \(X\sim N_d({\textbf {0}},\Sigma )\), then \(\Psi (\theta ) = O(e^{\Vert \theta \Vert ^2})\) approaches \(\infty \) sufficiently quickly. Another simple example is when \(\mathscr {P}(X) = \mathbbm {1}_{\{X \in A\}}\) and \(A:= [a_1, \infty ) \times \cdots \times [a_d,\infty )\), with \(a_i > 0\) for all \(i=1,\ldots ,d\), and X has a d-dimensional standard normal distribution; in this case it is easy to verify that the sufficient conditions in Theorem 1 hold. For the gamma distribution \(X \sim \texttt{Gamma}(\alpha ,\beta )\), we have \(\Psi (\theta ,\eta )=\frac{(1/\beta )^{-\alpha }\Gamma (\alpha +\theta )}{\Gamma (\alpha )(\beta -\eta )^{\alpha +\theta }}\) and \(\bar{\Psi }(\theta ,\eta )=\frac{(1/\beta )^{-\alpha }\Gamma (\alpha -\theta )}{\Gamma (\alpha )(\beta +\eta )^{\alpha -\theta }}\). Then it is easy to see that \(\Psi (\theta ,\eta ) \bar{\Psi }(\theta ,\eta ) \rightarrow \infty \) as \(\eta \uparrow \beta \) or \(\eta \downarrow -\beta \).
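The gamma formula for \(\Psi (\theta ,\eta )\) can be put to work directly. The sketch below is a hedged illustration, assuming that \(\texttt{Gamma}(\alpha ,\beta )\) is parameterized by shape \(\alpha \) and rate \(\beta \) (as the form of \(\Psi \) suggests) and that \(h_1(x)=\log x\), \(h_2(x)=x\), so that the tilted law is \(\texttt{Gamma}(\alpha +\theta ,\beta -\eta )\). It estimates \(P\{X>a\}\) with the weight \(\exp (-\theta \log x - \eta x + \psi (\theta ,\eta ))\). The function names and the hand-picked tilt are assumptions for illustration, not the optimal parameters of the paper.

```python
import math
import random


def log_psi_gamma(theta, eta, alpha, beta):
    """Cumulant function log E[X^theta * exp(eta * X)] for X ~ Gamma(shape alpha, rate beta)."""
    return (alpha * math.log(beta) + math.lgamma(alpha + theta)
            - math.lgamma(alpha) - (alpha + theta) * math.log(beta - eta))


def gamma_tail_is(a, n, alpha, beta, theta, eta, seed=0):
    """Importance sampling estimate of P{X > a} using a tilted gamma proposal.

    The proposal is Gamma(shape alpha + theta, rate beta - eta).
    Returns the estimate and its estimated standard error.
    """
    rng = random.Random(seed)
    shape = alpha + theta
    rate = beta - eta
    psi = log_psi_gamma(theta, eta, alpha, beta)
    s1 = 0.0
    s2 = 0.0
    for _ in range(n):
        # random.gammavariate takes (shape, scale), so pass scale = 1 / rate.
        x = rng.gammavariate(shape, 1.0 / rate)
        if x > a:
            w = math.exp(-theta * math.log(x) - eta * x + psi)  # likelihood ratio
            s1 += w
            s2 += w * w
    estimate = s1 / n
    variance = max(s2 / n - estimate ** 2, 0.0)
    return estimate, math.sqrt(variance / n)
```

For shape 2 and rate 1 the exact tail is \((1+a)e^{-a}\), which gives a convenient reference value when experimenting with different choices of \((\theta ,\eta )\).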

Example 1

Multivariate normal distribution.

To illustrate the concept of sufficient exponential embedding, we first consider a one-dimensional normally distributed random variable as an example. Let X be a random variable with the standard normal distribution, denoted by N(0, 1), with probability density function (pdf) \(\frac{dP}{d\mathscr {L}}=e^{-{x^2}/{2}}/\sqrt{2\pi }.\) By using the sufficient exponential embedding in (3) with \(h_1(x):=x\) and \(h_2(x):=x^2\), we have

In this case, the tilting probability measure \(Q_{\theta ,\eta }\) is \(N(\theta /(1-2\eta ), 1/(1-2 \eta ))\), with \(\eta < 1/2,\) a location-scale family.
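Completing the square makes this location-scale form explicit. The short derivation below is our own expansion of that step, using only \(h_1(x)=x\), \(h_2(x)=x^2\), and the standard normal density; it also gives the moment-generating and cumulant functions for the one-dimensional case.

$$\begin{aligned} \text{ e}^{\theta x+\eta x^2}\,\frac{\text{ e}^{-x^2/2}}{\sqrt{2\pi }}&= \frac{1}{\sqrt{2\pi }}\exp \bigg (-\frac{1-2\eta }{2}\Big (x-\frac{\theta }{1-2\eta }\Big )^2\bigg )\exp \bigg (\frac{\theta ^2}{2(1-2\eta )}\bigg ), \\ \Psi (\theta ,\eta )&= (1-2\eta )^{-1/2}\exp \bigg (\frac{\theta ^2}{2(1-2\eta )}\bigg ), \qquad \psi (\theta ,\eta ) = \frac{\theta ^2}{2(1-2\eta )}-\frac{1}{2}\log (1-2\eta ). \end{aligned}$$

Dividing the first line by \(\Psi (\theta ,\eta )\) leaves the \(N(\theta /(1-2\eta ), 1/(1-2\eta ))\) density, and the integral defining \(\Psi \) is finite exactly when \(\eta < 1/2\).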

For the event of \(\mathscr {P}(X)=\mathbbm {1}_{\{X > a\}}\) for \(a > 0\), define \(\bar{Q}_{\theta ,\eta }\) as \(N(-\theta /(1+2\eta ), 1/(1+2 \eta ))\) with \(\eta > -1/2\). Applying Theorem 1, \((\theta ^*,\eta ^*)\) is the root of

which is equivalent to

under the standard parameterization.

Take the one-parameter exponential embedding case, with \(\sigma \) fixed. Standard calculation gives \(\Psi (\theta ) =\text {e}^{\theta ^2/2}\), \(\psi (\theta ) = \theta ^2/2\), and \(\psi '(\theta ) = \theta \). Using the fact that \(X\mid \{X>a\}\) is a truncated normal distribution with minimum value a under \(\bar{Q}\), \(\theta ^*\) must satisfy \(\theta =\frac{\phi (a+\theta )}{1-\Phi (a+\theta )}-\theta \), cf. Fuh and Hu (2004).
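The one-parameter fixed-point equation can be solved numerically in a few lines. The sketch below is illustrative only: it rewrites the condition as \(2\theta = \phi (a+\theta )/(1-\Phi (a+\theta ))\) and applies plain bisection rather than the automatic Newton method used in the paper. The function names and the bracketing interval \([0, a+5]\) are assumptions, and the routine is intended for moderate values of a.

```python
import math


def std_normal_pdf(t):
    """Standard normal density phi(t)."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)


def std_normal_sf(t):
    """Standard normal survival function 1 - Phi(t)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))


def one_parameter_tilt(a, tol=1e-12, max_iter=200):
    """Solve 2*theta = phi(a + theta) / (1 - Phi(a + theta)) for theta by bisection."""
    def g(theta):
        t = a + theta
        return 2.0 * theta - std_normal_pdf(t) / std_normal_sf(t)

    lo, hi = 0.0, a + 5.0  # g(lo) < 0 < g(hi) for a >= 0
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0.0:
            hi = mid
        else:
            lo = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)
```

For a = 2 the root lies slightly above the boundary point a, which is consistent with the observation that the optimal tilting point for a moderately rare event falls inside \((a,\infty )\), and the gap \(\theta ^* - a\) shrinks as a grows.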

Table 1 presents numerical results for the normal distribution. As demonstrated in the table, using sufficient exponential tilting for the simple event, \(\mathbbm {1}_{\{X>a\}}\), yields performance 2 to 3 times better than one-parameter exponential tilting in terms of variance reduction factors. Note that here we adopt the automatic Newton method (Teng et al. 2016) to search for the optimal parameters in all experiments.

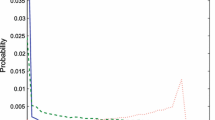

Owing to the convexity of \(G(\theta ,\eta )\) and the uniqueness of the optimal tilting parameters, the optimal tilting formula is robust and not sensitive to the initial value in most cases. To illustrate this phenomenon, we consider the \(G(\theta ,\eta )\) function for the simple event \(X>2\) under the standard normal distribution in Fig. 1. As shown in the figure, \(\theta \) is not sensitive to the initial values; note that the range of the initial values becomes vital for finding the optimal \(\eta \) due to the constraint \(\eta <1/2\). Moreover, there exists a flat area for simultaneously tilting both parameters \(\theta ,\eta \).
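For the one-dimensional normal case, \(G(\theta ,\eta ) = \Psi (\theta ,\eta )\bar{\Psi }(\theta ,\eta )\) has a closed form obtained by completing the square in both factors, which makes it easy to explore the surface numerically. The sketch below is our own illustration under that derivation, valid for \(-1/2< \eta < 1/2\). The function names and the trial points are hypothetical and hand-picked; they are not the optimal values reported in Table 1 or the surface shown in Fig. 1.

```python
import math


def normal_sf(t):
    """Standard normal survival function 1 - Phi(t)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))


def second_moment_G(theta, eta, a):
    """G(theta, eta) = Psi * Psi_bar for X ~ N(0,1) and payoff 1{X > a}."""
    if not -0.5 < eta < 0.5:
        raise ValueError("this closed form requires -1/2 < eta < 1/2")
    r = 1.0 - 2.0 * eta
    s = 1.0 + 2.0 * eta
    # log Psi = -0.5*log(1 - 2*eta) + theta^2 / (2*(1 - 2*eta))
    log_psi = -0.5 * math.log(r) + theta * theta / (2.0 * r)
    # log Psi_bar: same completion of the square with the sign of the tilt
    # reversed, times the tail mass of N(-theta/s, 1/s) above a.
    log_psi_bar = (-0.5 * math.log(s) + theta * theta / (2.0 * s)
                   + math.log(normal_sf(math.sqrt(s) * (a + theta / s))))
    return math.exp(log_psi + log_psi_bar)


def variance_reduction_factor(theta, eta, a):
    """Naive per-sample variance divided by the importance sampling per-sample variance."""
    m = normal_sf(a)
    return (m - m * m) / (second_moment_G(theta, eta, a) - m * m)
```

With a = 2, comparing `second_moment_G(0.0, 0.0, 2.0)` (no tilting), `second_moment_G(2.2, 0.0, 2.0)` (mean shift only), and `second_moment_G(11/3, -1/3, 2.0)` (a proposal \(N(2.2, 0.6)\) in the standard parameterization) shows how adjusting the scale together with the location can lower the second moment further.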

We now proceed to a d-dimensional multivariate normal distribution. Let \(X=(X_1,\ldots ,X_d)^{\intercal }\) be a random vector with the standard multivariate normal distribution, denoted by \(N(0,\mathbb {I})\), with pdf \(\textrm{det}(2\pi \mathbb {I})^{-1/2}\, \ e^{-(1/2)\,x^{\intercal }\mathbb {I}^{-1}x},\) where \(\mathbb {I}\) is the identity matrix. By using the sufficient exponential embedding in (3), we have

where \(|\cdot |\) denotes the determinant of a matrix, and \(M=\left( a_{ij}\right) \in \mathbb {R}^{d\times d}\),

with \(a_{ij}=\eta _i\) for \(i=j\) and \(a_{ij}=\eta _{d+1}\) for \(i\ne j\). In this case, the tilting probability measure \(Q_{\theta ,\eta }\) is \(N((\mathbb {I}-2M)^{-1}\theta ,(\mathbb {I}-2M)^{-1})\).

For the event of \(\mathscr {P}(X)=\mathbbm {1}_{\{X \in A\}}\), define \(\bar{Q}_{\theta ,\eta }\) as \(N((\mathbb {I}+2M)^{-1}(-\theta ),(\mathbb {I}+2M)^{-1})\). Similar to the above one-dimensional normal distribution, we consider the standard parameterization by letting \(\mu :=(\mathbb {I}-2\,M)^{-1}\theta \), \(\Sigma := (\mathbb {I}-2\,M)^{-1}\), and define \(\bar{Q}_{\mu ,\Sigma }\) as \(N(-(2\mathbb {I}-\Sigma ^{-1})^{-1}(\Sigma ^{-1})\mu ,(2\mathbb {I}-\Sigma ^{-1})^{-1})\). Applying Theorem 1, \((\mu ^*,\Sigma ^*)\) is the root of

where

Here in (16), \({{\,\textrm{Tr}\,}}(A)\) is the trace of matrix A; the value of \(b_{jk}\) is defined as follows: for each \(i=1,\ldots ,d\),

and for \(i=d+1\),

Remark 2

The left-hand sides (LHSs) of (14) and (15) are derivatives of the cumulant function \(\psi _{MN}(\theta ,\eta ):= \log (\sqrt{\mid (\mathbb {I}-2\,M)^{-1}\mid })+\frac{1}{2}(\theta ^{\intercal }(\mathbb {I}-2\,M)^{-1}\theta )\) for a multivariate normal with respect to parameters \(\theta \) and \(\eta \), respectively. Note that in the RHS of (17), Jacobi’s formula is adopted for the derivative of the determinant of the matrix \((\mathbb {I}-2\,M)^{-1}\) and \(\nabla _{\eta _i}\Sigma ^{-1}\) is

where for each \(i=1,\ldots ,d\),

and for \(i=d+1\),

Table 2 presents the numerical results for the standard bivariate normal distribution. As shown in the table, for event types \(\mathbbm {1}_{\{ X_1+X_2>a\}}, \mathbbm {1}_{\{X_1>a,X_2>a\}}\), and \(\mathbbm {1}_{\{X_1X_2>a,X_1>0,X_2>0\}}\), tilting different parameters results in different variance reduction performance. Although tilting the variance parameter or the correlation parameter alone sometimes performs poorly, combining them with mean parameter tilting yields 2 to 3 times better performance than one-parameter exponential tilting.

Note that for easy implementation, we here consider the standard parameterization and directly tilt \({\mu }=(\mu _1,\mu _2)\), \({\sigma }=(\sigma _1,\sigma _2)\), and \(\rho \) in this example; that is, in this case, \((\mathbb {I}-2M)^{-1}\theta ={\mu }\) and \((\mathbb {I}-2\,M)^{-1}= \begin{pmatrix} \sigma _1^2 &{} \rho \sigma _1\sigma _2\\ \rho \sigma _1\sigma _2&{}\sigma _2^2 \end{pmatrix} \). In addition, we illustrate the \(G(\mu , \sigma , \rho )\) function for simple event \(X + Y> 3\) under the standard bivariate normal distribution in Fig. 2, where neither \(\mu \) nor \(\sigma \) are sensitive to the initial values; although the range of the initial values becomes vital for finding optimal \(\rho \), \(G(\rho )\) is flat for most \(\rho \).Footnote 2

Example 2

Gamma distribution. Let X be a random variable with a gamma distribution, denoted by \(\texttt{Gamma}(\alpha ,\beta )\), with pdf \(\frac{dP}{d\mathscr {L}}=(\beta ^{\alpha }/\Gamma (\alpha ))\, x^{\alpha -1}e^{-\beta x}\). By using the sufficient exponential embedding in (3) with \(h_1(x):=\log (x)\) and \(h_2(x):=x\), we have

In this case, the tilting probability measure \(Q_{\theta ,\eta }\) is \(\texttt{Gamma}(\alpha +\theta , \beta -\eta )\). For the event of \(\mathscr {P}(X)=\mathbbm {1}_{\{X > a\}}\) for \(a > 0\), define \(\bar{Q}_{\theta ,\eta }\) as \(\texttt{Gamma}(\alpha -\theta , \beta +\eta )\). Applying Theorem 1, \((\theta ^*,\eta ^*)\) is the root of

where \(\Upsilon (\alpha +\theta )\) is a digamma function equal to \(\Gamma '(\alpha +\theta )/\Gamma (\alpha +\theta )\).
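
To make the gamma tilting concrete, the sketch below estimates \(P(X>a)\) by sampling from \(\texttt{Gamma}(\alpha +\theta ,\beta -\eta )\) and weighting each sample by the likelihood ratio \(f(x)/f_{\theta ,\eta }(x)\), which is proportional to \(x^{-\theta }e^{-\eta x}\). The function name and the parameter values in the usage part are illustrative assumptions, not the optimal values reported in Table 3; the tilting parameters are simply chosen so that the tilted mean \((\alpha +\theta )/(\beta -\eta )\) equals a. Note that Python's `random.gammavariate` takes a scale parameter, i.e., the reciprocal of the rate.

```python
import math
import random


def tilted_gamma_is(a, alpha, beta, theta, eta, n, seed=0):
    """Importance sampling estimate of P(X > a) for X ~ Gamma(alpha, rate=beta).

    Samples come from Gamma(alpha + theta, rate=beta - eta).
    """
    shape, rate = alpha + theta, beta - eta
    if shape <= 0.0 or rate <= 0.0:
        raise ValueError("need -theta < alpha and eta < beta")
    rng = random.Random(seed)
    # log of the ratio of normalizing constants of the two gamma densities
    log_c = (alpha * math.log(beta) - math.lgamma(alpha)) - (
        shape * math.log(rate) - math.lgamma(shape)
    )
    total = 0.0
    for _ in range(n):
        x = rng.gammavariate(shape, 1.0 / rate)  # second argument is the scale
        if x > a:
            # likelihood ratio f(x) / f_{theta,eta}(x)
            total += math.exp(log_c - theta * math.log(x) - eta * x)
    return total / n


if __name__ == "__main__":
    a, alpha, beta = 10.0, 2.0, 1.0
    exact = math.exp(-a) * (1.0 + a)  # closed form for shape 2, rate 1
    rate_only = tilted_gamma_is(a, alpha, beta, theta=0.0, eta=0.8, n=20000)
    both = tilted_gamma_is(a, alpha, beta, theta=3.0, eta=0.5, n=20000)
    print(exact, rate_only, both)
```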

Table 3 presents the numerical results for the gamma distribution. Note that the commonly used one-parameter exponential tilting involves a change only for the parameter \(\beta \) (i.e., changing \(\beta \) to \(\beta -\eta ^*\)) in the case of the gamma distribution. However, observe that tilting the other parameter \(\alpha \) (i.e., \(\alpha \rightarrow \alpha +\theta ^*\)) for some cases yields 2 to 3 times better performance than the one-parameter exponential tilting in terms of variance reduction factors. This is due to the \(-\theta <\alpha \) and \(\eta <\beta \) constraint. For instance, consider the case in which we tilt only one parameter, either \(\theta \) or \(\eta \), as follows. For the simple event \(\mathbbm {1}_{\{X>a\}}\), we can either choose parameter \(\eta \) such that \(- \beta<\eta <\beta \) or parameter \(\theta \) such that \(-\alpha < \theta \) and \(\alpha -\theta \in \mathbbm {R}{\setminus }\{0,-1,-2,\ldots \}\) (see “Appendix B”), to obtain a larger mean for the tilted gamma distribution. In this case, it is clear that \(\theta \)-tilting yields a larger parameter search space and thus achieves better performance than \(\eta \)-tilting. For simple event \(\mathbbm {1}_{\{1/X>a\}}\), the optimal tilting parameters \(\theta ^*\) and \(\eta ^*\) can be obtained by solving the revised version of (19) and (20), where the condition is revised from \(X>a\) to \(1/X>a\). Note that event \(\mathbbm {1}_{\{1/X>a\}}\) shows the opposite effect.

Additionally, Fig. 3 illustrates the \(G(\theta ,\eta )\) function for simple event \(X>10\) under the gamma distribution. The range of the initial values becomes vital for finding optimal \(\theta , \eta \) due to the \(\theta >-\alpha \) and \(\eta <\beta \) constraint. Moreover, similar to the case in Fig. 1c, there exists a flat area for simultaneously tilting both parameters \(\theta ,\eta \).

Remark 3

Observed from the above examples, the proposed sufficient exponential tilting yields improvements in terms of variance reduction in two aspects. First, in some cases, tilting multiple parameters simultaneously via our sufficient exponential tilting algorithm greatly improves the variance reduction performance; for example, in the case of simulating simple moderate-deviation rare events, for the normal distribution, although changing \(\sigma \) or \(\rho \) alone may not help much in variance reduction, changing them together with \(\mu \) can yield 3 to 4 times better performance than traditional mean-shift one-parameter tilting. Second, in some cases, tilting other parameters results in better performance; for instance, for the gamma distribution, changing the shape parameter \(\alpha \) leads to better performance for some rare events, though traditional one-parameter tilting always changes the rate parameter \(\beta \). Moreover, for the simple cases, the computational time of such two-parameter tilting is almost the same as that of one-parameter tilting since our algorithm always converges in 3 or 4 iterations when locating optimal tilting parameters. Analyses for more complex mixture distributions regarding the computational cost are provided in Sect. 4.

For other events of interest, however, e.g., \(E(X\mathbbm {1}_{\{0<X<a\}})\), other than the presented rare event cases, the proposed sufficient exponential tilting method always yields better performance than one-parameter tilting because optimal two-parameter tilting includes the solution of one-parameter tilting. For example, in the case of simulating \(E(X\mathbbm {1}_{\{0<X<1\}})\) for the standard normal distribution, tilting the standard deviation together with the mean via our method yields 10 times better variance reduction performance than tilting the mean only.

2.2 Bounded relative error and logarithmic efficiency analysis

Here we state the theoretical results of both bounded relative error and logarithmic efficiency for the normal and gamma distributions under the case of simple rare events. Note that the optimal tilting parameters in Theorems 5 and 6 are approximately optimal tilting parameters, in the standard large deviation sense, to be defined in “Appendices A and B”.

Theorem 5

Let X be a random variable from the standard normal distribution N(0, 1) with pdf \(\varphi (x)\). Denote \(\varphi _{\theta ,\eta }(x)\) as the pdf of \(N(\theta /(1-2\eta ),1/(1-2\eta ))\).

For the simulation of the rare event probability \(p=P(X>a)\), the domains of the tilting parameters \(\theta \) and \(\eta \) are \(\Theta =(-\infty ,\infty )\) and \(H=[-1/2,1/2)\), respectively, and the approximated optimal tilting parameters are \(\theta ^*=a(1-2\eta ^*)\) and \(\eta ^* \rightarrow -1/2\) as \(a \rightarrow \infty \). Moreover, the approximated optimal sufficient exponential tilting entails logarithmic efficiency but not bounded relative error; i.e., for all \(\varepsilon >0\), we have

Moreover, optimal sufficient exponential tilting outperforms traditional optimal one-parameter exponential tilting in the sense of reducing asymptotic variance.
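
The following sketch illustrates the estimator behind Theorem 5 under stated assumptions: it samples from \(N(\theta /(1-2\eta ),1/(1-2\eta ))\) and weights each sample by \(\exp (-\theta x-\eta x^2+\psi (\theta ,\eta ))\) with \(\psi (\theta ,\eta )=\theta ^2/(2(1-2\eta ))-\frac{1}{2}\log (1-2\eta )\). The function name is hypothetical, and the usage part takes the boundary value \(\eta =-1/2\) with \(\theta =a(1-2\eta )\) purely for illustration; these are not the finite-a optimal parameters.

```python
import math
import random


def tilted_normal_is(a, theta, eta, n, seed=0):
    """Importance sampling estimate of P(X > a) for X ~ N(0, 1).

    Samples come from N(theta / (1 - 2 eta), 1 / (1 - 2 eta)).
    """
    if eta >= 0.5:
        raise ValueError("need eta < 1/2 for a positive variance")
    rng = random.Random(seed)
    var = 1.0 / (1.0 - 2.0 * eta)
    mean = theta * var
    sd = math.sqrt(var)
    # cumulant function: log E_P[exp(theta X + eta X^2)]
    psi = 0.5 * theta * theta * var + 0.5 * math.log(var)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(mean, sd)
        if x > a:
            # likelihood ratio dP/dQ evaluated at x
            total += math.exp(-theta * x - eta * x * x + psi)
    return total / n


if __name__ == "__main__":
    a = 3.0
    exact = 0.5 * math.erfc(a / math.sqrt(2.0))
    mean_only = tilted_normal_is(a, theta=a, eta=0.0, n=20000)
    mean_and_var = tilted_normal_is(a, theta=2.0 * a, eta=-0.5, n=20000)
    print(exact, mean_only, mean_and_var)
```

Both calls shift the sampling mean to a; the second additionally halves the sampling variance, which is the direction indicated by \(\eta ^* \rightarrow -1/2\).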

Theorem 6

Let X be a random variable from the gamma distribution \(\texttt{Gamma}(\alpha ,\beta )\) with pdf f(x). Denote \(f_{\theta ,\eta }(x)\) as the pdf of \(\texttt{Gamma}(\alpha +\theta ,\beta -\eta )\).

(i) For the simulation of rare event probability \(p=P(X>a)\), the domains of the tilting parameters \(\theta \) and \(\eta \) are \(\Theta =\{\theta \mid -\alpha < \theta ~\text {and}~\alpha -\theta \in \mathbbm {R}{\setminus }\{0,-1,-2,\ldots \}\}\) and \(H=(-\beta ,\beta )\), and the approximated optimal tilting parameters are \(\eta ^* = (a \beta - \alpha - \theta ^*)/a\) and \(\theta ^* \rightarrow \infty \) as \(a \rightarrow \infty \), such that \(\theta ^*/a \rightarrow c\) with \(c \in (0,2\beta )\).

Moreover, the approximated optimal sufficient exponential tilting entails bounded relative error; i.e.,

Therefore, optimal sufficient exponential tilting outperforms traditional optimal one-parameter exponential tilting, which has only logarithmic efficiency for simulating \(\{X>a\}\).

(ii) For the simulation of rare event probability \(p=P(X<1/a)\), the domains of the tilting parameters \(\theta \) and \(\eta \) are \(\Theta =\{\theta \mid -\alpha<\theta <\alpha ~\text {and}~\alpha -\theta \in \mathbbm {R}{\setminus }\{0,-1,-2,\ldots \}\}\) and \(H=(-\infty ,\beta )\), and the approximated optimal tilting parameters are \(\eta ^* = \beta - a(\alpha + \theta ^*)\) and \(\theta ^* \rightarrow \alpha \) as \(a \rightarrow \infty \).

Moreover, approximated optimal sufficient exponential tilting entails bounded relative error; i.e.,

Furthermore, the ratio of the expected squared estimators between optimal sufficient exponential tilting and optimal one-parameter tilting is less than one, indicating that the former outperforms the latter.

The proofs of Theorems 5 and 6 are given in “Appendices A and B”, respectively.

Remark 4

Note that two matters affect the domains of the tilting parameter spaces \(\Theta \) and H. First, it is necessary to ensure valid parameters for the tilted distribution; e.g., the \(\eta < 1/2\) constraint for the normal distribution (\(\eta <\beta \) for the gamma distribution) guarantees that the variance (the rate parameter) of the tilted distribution is positive. Second, other constraints are needed to ensure the finiteness of the expected squared estimator for the given event; e.g., in the simulation of rare event \(\{X>a\}\), \(\eta \ge -1/2\) is needed for the normal distribution and \(\eta >-\beta \) for the gamma distribution.

3 An application: portfolio loss under the normal mixture copula model

Consider a portfolio of loans consisting of n obligors, each of whom has a small probability of default. We further assume that the loss resulting from the default of the k-th obligor, denoted as \(c_k\) (monetary units), is known. In copula-based credit models, dependence among the default indicators of the obligors is introduced through a vector of latent variables \({X}=(X_1,\ldots , X_n)\), where the k-th obligor defaults if \(X_k\) exceeds some chosen threshold \(\chi _k\). The total loss from defaults is then denoted by

\[ L_n = \sum _{k=1}^{n} c_k \mathbbm {1}_{\{X_k > \chi _k\}}, \tag{21} \]

where \(\mathbbm {1}\) is the indicator function. Particularly, the problem of interest is to estimate the probability of losses, \(P(L_n > \tau )\), especially at large values of \(\tau \).

As mentioned earlier in the introduction, the widely-used normal copula model might assign an inadequate probability to the event of many simultaneous defaults in a portfolio. In view of this, Bassamboo et al. (2008) and Chan and Kroese (2010) set forth the t-copula model for modeling portfolio credit risk. In this paper, we further consider the normal mixture model (McNeil et al. 2015), including the normal copula and t-copula models as special cases, for the generalized d-factor model of the form

in which

- \({Z}=(Z_1,\ldots ,Z_d)^\intercal \) follows a d-dimensional multivariate normal distribution with zero mean and covariance matrix \(\Sigma \), where \(~^\intercal \) denotes vector transpose;

- \({V}=(V_1,\ldots ,V_{d+1})\) are non-negative scalar-valued random variables that are independent of Z, and the shock variables \(V_j\), \(j=1,\ldots ,d+1\), are independent of one another;

- \(\epsilon _k\sim N(0,\sigma _\epsilon ^2)\) is an idiosyncratic risk associated with the k-th obligor, for \(k=1,\ldots ,n\);

- \(\rho _{k1},\ldots ,\rho _{kd}\) are the factor loadings for the k-th obligor, and \(\rho _{k1}^2+\cdots +\rho _{kd}^2\le 1\);

- \(\rho _k=\sqrt{1-\left( \rho _{k1}^2+\cdots +\rho _{kd}^2\right) }\), for \(k=1,\ldots ,n\).

Model (22) is the so-called grouped normal mixture copula in McNeil et al. (2015), which is constructed by drawing randomly from this set of component multivariate normals based on a set of weights controlled by the distribution of V. This model enables us to blend in multiplicative shocks via the variables V, which could be interpreted as shocks that arise from new information. Note that in model (22), we consider a multi-factor model to capture the effect of different factors. In addition, rather than multiplying all components of a correlated Gaussian vector Z with a single V, we instead multiply different subgroups with different variates \(V_j\); the \(V_j\) are themselves comonotonic [see Section 7.2.1 in McNeil et al. (2015)].
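
As a rough illustration of how such a model can be simulated, the sketch below runs a crude (untilted) Monte Carlo estimate of \(P(L_n>\tau )\). It rests on several assumptions that are not taken from the text: the latent variable is taken to have the form \(X_k=\rho _{k1}V_1Z_1+\cdots +\rho _{kd}V_dZ_d+\rho _kV_{d+1}\epsilon _k\), the factor covariance is the identity, \(\sigma _\epsilon =1\), the shocks are of the t-copula type \(V_j=\sqrt{\nu _j/W_j}\) with independent \(W_j\sim \texttt{Gamma}(\nu _j/2,1/2)\), and all function and argument names are hypothetical.

```python
import math
import random


def crude_loss_probability(rho, c, chi, nu, tau, n_samples, seed=0):
    """Crude Monte Carlo estimate of P(L_n > tau).

    rho: n x d factor loadings, c: losses c_k, chi: thresholds chi_k,
    nu: d + 1 degrees-of-freedom parameters (one per shock variable).
    Assumed latent form: X_k = sum_j rho_kj V_j Z_j + rho_k V_{d+1} eps_k.
    """
    rng = random.Random(seed)
    n, d = len(rho), len(nu) - 1
    # rho_k = sqrt(1 - sum_j rho_kj^2)
    resid = [math.sqrt(max(0.0, 1.0 - sum(r * r for r in row))) for row in rho]
    hits = 0
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]  # assumes identity covariance
        # W_j ~ Gamma(nu_j / 2, rate 1/2); gammavariate takes scale = 2
        v = [math.sqrt(nj / rng.gammavariate(nj / 2.0, 2.0)) for nj in nu]
        loss = 0.0
        for k in range(n):
            systematic = sum(rho[k][j] * v[j] * z[j] for j in range(d))
            x_k = systematic + resid[k] * v[d] * rng.gauss(0.0, 1.0)
            if x_k > chi[k]:
                loss += c[k]
        if loss > tau:
            hits += 1
    return hits / n_samples


if __name__ == "__main__":
    # Hypothetical toy portfolio: 5 obligors, one factor.
    rho = [[0.5]] * 5
    p = crude_loss_probability(rho, c=[1.0] * 5, chi=[2.0] * 5,
                               nu=[4.0, 4.0], tau=2.5, n_samples=20000)
    print("P(L_n > 2.5) is approximately", p)
```

For large \(\tau \) this crude estimator needs very many samples per hit, which is the inefficiency that importance sampling is meant to address.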

Let \(W=(W_1,\dots ,W_{d+1})\) and \(V_j=g(W_j)\) for \(j=1,\ldots ,d+1\). Note that in this paper, we mainly focus on the class of \(V_j\) with \(W_j\sim \texttt{Gamma}(\alpha _j,\beta _j)\), \(j=1,\ldots ,d+1\). With \(V_j=g(W_j)=\sqrt{\nu _j/W_j}\) and \(W_j\sim \texttt{Gamma}(\nu _j/2,1/2)\) (for \(j=1,\ldots ,d+1\)), we therefore create subgroups whose dependence properties are described by normal mixture copulas with different \(\nu _j\) parameters. The groups may even consist of a single member for each \(\nu _j\) parameter, as indicated in Section 7.3 of McNeil et al. (2015) and references therein. With the above setting, in the degenerate case that \(W_1 = W_2 = \cdots = W_{d+1}\) is a common gamma random variable (i.e., \(W_j\sim \texttt{Gamma}(\nu /2,1/2)\) for \(j=1,\ldots ,d+1\)), X forms a multivariate t-distribution, which is the most popular form in financial modeling. In addition, we consider the case that \(V_j=g(W_j)=\sqrt{W_j}\), \(j=1,\ldots , d+1\), and \(W_j\) follows a generalized inverse Gaussian (GIG) distribution. The GIG mixing distribution, a special case of the symmetric generalized hyperbolic (GH) distribution, is very flexible for modeling financial returns. Moreover, GH distributions also include the symmetric normal inverse Gaussian (NIG) distribution and a symmetric multivariate distribution with hyperbolic distribution for its one-dimensional marginal as interesting examples. Note that this class of distributions has become popular in the financial modeling literature. An important reason is their link to Lévy processes (such as Brownian motion or the compound Poisson process) that are used to model price processes in continuous time. For example, the generalized hyperbolic distribution has been used to model financial returns in Eberlein and Keller (1995) and Eberlein et al. (1998). The reader is referred to McNeil et al. (2015) for more details.

Note that the number of factors used for model (22) usually depends on the number of obligors in the credit portfolio and their characteristics, e.g., the number of sectors that the obligors belong to. In addition, in practice we would expect the factor loadings to be fairly sparse, cf. Glasserman et al. (2008).

We now define the tail probability of total portfolio losses conditional on Z and V. Specifically, the tail probability of total portfolio losses conditional on the factors Z and V, denoted as \(\varrho (Z,V)\) is defined as

\[ \varrho (Z,V) = P(L_n > \tau \mid Z, V). \tag{23} \]

The desired probability of losses can be represented as

\[ P(L_n > \tau ) = E\left[ \varrho (Z,V)\right] . \tag{24} \]

For an efficient Monte Carlo simulation of the probability of total portfolio losses (24), we apply importance sampling to the distributions of the factors \({Z} =(Z_1,\ldots ,Z_d)^\intercal \) and \({V}=(V_1,\ldots ,V_{d+1})^\intercal =(g(W_1),\ldots ,g(W_{d+1}))^\intercal \) (see (22)). In other words, we attempt to choose importance sampling distributions for both Z and W that reduce the variance in estimating the integral \(E[\varrho (Z,V)]\) against the original densities of Z and W.

As noted in Glasserman and Li (2005) for normal copula models, the simulation of (24) involves two rare events: the default event and the total portfolio loss event. For the normal mixture model (22), this makes the simulation of (24) even more challenging. For a general simulation algorithm for this type of problem, we simulate \(P(L_n > \tau )\) as the expected value of \(\varrho (Z,V)\) in (24). Our device is based on a joint probability simulation rather than the conditional probability simulation considered in the literature. Moreover, we note that the simulated distributions—the multivariate normal distribution Z and the commonly adopted multivariate gamma distribution for W—are both two-parameter distributions.Footnote 3 This motivates us to study a sufficient exponential tilting in the next section.

3.1 Sufficient exponential tilting for normal mixture distributions

Recall that in (22), the latent random vector X follows a multivariate normal mixture distribution. In this section, for simplicity, we consider a one-dimensional normal mixture distribution as an example to demonstrate the proposed sufficient exponential tilting. Let X be a one-dimensional normal mixture random variable with only one factor (i.e., \(d=1\)) such that \({X=\xi {V}Z=\xi g(W)Z}\), where \(\xi \in \mathbb {R}\), \(Z\sim N(0,1)\), \(W\sim \texttt{Gamma}(\alpha ,\beta )\), and V (as well as W) is a non-negative and scalar-valued random variable which is independent of Z. Since the random variable W is independent of Z, by using sufficient exponential embedding with \(h_1(z)\) and \(h_2(z)\) for Z and \(\tilde{h}_1(w)\) and \(\tilde{h}_2(w)\) for W, we have

where \(\theta _1,\eta _1\) are the tilting parameters for Z and \(\theta _2,\eta _2\) are the tilting parameters for W.

As Z follows a standard normal distribution N(0, 1) and W follows a gamma distribution \(\texttt{Gamma}(\alpha ,\beta )\), from (12) and (18), Eq. (25) becomes

where \(\mu =\theta _1/(1-2\eta _1)\) and \(\sigma ^2= 1/(1-2\eta _1)\).Footnote 4 The optimal tilting parameters \(\theta _1^*, \eta _1^*\) for Z can be obtained by solving the modified version of (13) for the normal distribution; \(\theta _2^*\) and \(\eta _2^*\) for W are the solutions of the modified version of (20) and (19) for the gamma distribution, where condition \(X>a\) is modified to accommodate the event. For example, condition \(X>a\) is modified to \(\sqrt{W}Z>a\) in (13), (20), and (19) for the example in Table 4. Note that due to the fact that W is independent of Z, the optimal tilting can be done separately for the normal and gamma distributions. Moreover, for demonstration and to promote reproducibility, we release our implementationFootnote 5 for searching the optimal parameters for the normal, gamma, and normal mixture distributions (corresponding to the results in Tables 1, 3, and 4, respectively); the optimal parameters for each setting are also listed in the released code.

Table 4 shows the numerical results for this one-dimensional, one-factor normal mixture distribution with \({V=g(W)}{=\sqrt{W}}\). Since for a normal mixture random variable, the variance is associated with the random variable W, tilting the standard deviation-related parameter \(\eta _1\) with \(\theta _1\) of the normal random variable Z is relatively insignificant in comparison to tilting the parameters of W (i.e., \(\theta _2\) or \(\eta _2\)) with \(\theta _1\). This is shown in Table 4. In addition, similar to the case demonstrated in Example 2, in this case, tilting \(\theta _2\) with \(\theta _1\) also yields better performance than tilting \(\eta _2\) with \(\theta _1\) in the sense of variance reduction, which is consistent with the theoretical results in “Appendices A and B” and the numerical results in Table 3 (see Remark 7 also).

Next, we summarize tilting for the event in which the k-th obligor defaults if \(X_k\) exceeds a given threshold \(\chi _k\) as “ABC-event tilting,” which involves the calculation of tail event

where A denotes the normally distributed part of the systematic risk factors, B denotes the idiosyncratic risk associated with each obligor, and C denotes the non-negative and scalar-valued random variables which are independent of A and B.Footnote 6 For example, for the normal mixture copula model in (22), the d-dimensional multivariate normal random vectors \(Z=(Z_1,\ldots ,Z_d)\) are associated with A, \(\epsilon _k\) is associated with B, and the non-negative and scalar-valued random variables W are associated with C. Table 5 summarizes the exponential tilting used in Glasserman and Li (2005), Bassamboo et al. (2008), Chan and Kroese (2010), Scott and Metzler (2015) and our paper. Note that Glasserman and Li (2005) consider the normal copula model so that there is no need for tilting C, and that Bassamboo et al. (2008), Chan and Kroese (2010), and Scott and Metzler (2015) only consider the one-dimensional t-distribution, whereas we consider the multi-dimensional normal mixture distribution. Moreover, except for the proposed model, the other four methods adopt so-called one-parameter tilting. For example, even though Scott and Metzler (2015) consider the tilting of A and C, the same as our setting, only one parameter is tilted for each of the two distributions (i.e., mean for the normal distribution and shape for the gamma distribution); in our method, however, the tilting parameter can be either the shape or the rate parameter for the underlying gamma distribution, which results in a more efficient simulation.

Remark 5

When considering the t-distribution for \(X_k\) (i.e., \(V_j=\sqrt{\nu _j/W_j}\) with \(W_j\sim \texttt{Gamma}(\nu _j/2,1/2)\) for \(j=1,\ldots ,d+1\)), traditional one-parameter tilting for the gamma distribution has bounded relative error, whereas it has only logarithmic efficiency for the normal distribution. This fact explains why Bassamboo et al. (2008) tilt only the gamma distribution (i.e., event \(\texttt{C}\) in Table 5). However, in this paper, we additionally tilt the normal distribution to improve the “second order” efficiency via the proposed sufficient exponential tilting (see Theorem 6(ii)). Moreover, when considering \(V_j=\sqrt{W_j}, j=1,\ldots ,d+1\) with \(W_j\sim \texttt{Gamma}(\alpha _j,\beta _j)\), sufficient exponential tilting outperforms traditional one-parameter exponential tilting, which has only logarithmic efficiency (see Theorem 6(i)).

3.2 Exponential tilting for \(\varrho ({Z},{V})\)

In this subsection, we use notation similar to that in Sect. 2. Let \({Z} = (Z_1,\ldots ,Z_d)^{\intercal }\) be a d-dimensional multivariate normal random variable with zero mean and identity covariance matrix \(\mathbb {I}\),Footnote 7 and denote \({V}=(V_1,\ldots ,V_{d+1})^{\intercal }=(g(W_1),\ldots ,g(W_{d+1}))^{\intercal }\) as non-negative scalar-valued random variables, which are independent of Z. Under the probability measure P, let \(f_z({ z})=f_z(z_1,\ldots ,z_d)\) and \(f_w({w})=f_w(w_1,\ldots ,w_{d+1})\) be the probability density functions of Z and W, respectively, with respect to the Lebesgue measure \(\mathscr {L}\). As alluded to earlier, our aim is to calculate the expectation of \(\varrho ({Z},{V})\),

under the probability measure P.Footnote 8

To evaluate (26) via importance sampling, we choose a sampling probability measure Q, under which Z and W have the corresponding probability density functions \(q_z({z})=q_z(z_1,\ldots ,z_d)\) and \(q_w({w})=q_w(w_1,\ldots ,w_{d+1})\). Assume that Q is absolutely continuous with respect to P; Eq. (26) can then be written as

Let \(Q_{\mu ,\Sigma ,\theta ,\eta }\) be the sufficient exponential tilted probability measure of P. Here subscripts \({\mu }=(\mu _1,\ldots ,\mu _d)^{\intercal }\), and \(\Sigma \), constructed via \(\rho \) and \({\sigma }=(\sigma _1,\ldots ,\sigma _d)^{\intercal }\), are the tilting parameters for random vector Z,Footnote 9 and \({\theta }=(\theta _1,\ldots ,\theta _{d+1})^{\intercal }\) and \({\eta }=(\eta _1,\ldots ,\eta _{d+1})^{\intercal }\) are the tilting parameters for W. Define the likelihood ratios

where \(q_{z,{\mu },\Sigma }(z)\) and \(q_{w,{\theta },{\eta }}(w)\) denote the probability density functions corresponding to \(q_z(z)\) with tilting parameters \({\mu }\) and \(\Sigma \) and \(q_w(w)\) with tilting parameters \({\theta }\) and \({\eta }\), respectively. Then, combined with (28), Eq. (27) becomes

Denote \(G(\!{\mu },\!\Sigma ,\!{\theta },\!{\eta }){=} E_{P}\left[ \varrho ^2(Z,V){r_{z,{\mu }\!,\Sigma }(Z)}{r_{w,{\theta },\!{\eta }}(W)\!}\right] \), which is assumed to be finite. By using the same argument as that in Sect. 2, we minimize \(G({\mu },\Sigma ,{\theta },{\eta })\) to get the tilting formula. That is, tilting parameters \({\mu }^*\), \(\Sigma ^*\), \({\theta }^*\), and \({\eta }^*\) are chosen to satisfyFootnote 10

3.2.1 (Fast) inverse Fourier transform for non-identical \(c_k\)

To obtain the optimal tilting parameters in (29) and (30), we must calculate the conditional default probability \(\varrho (z,v)=P(L_n>\tau \mid Z=z, V=v)\) in (21). We here apply the fast inverse Fourier transform (FFT) method described as follows. Note that using the definition of the latent factor \(X_k\) for the k-th obligor in (22), the conditional default probability \(P(X_k > \chi _k \mid Z=z, V=v)\) given \(Z=(z_1,\ldots ,z_d)^\intercal \) and \(V=(v_1,\ldots ,v_{d+1})^\intercal \) becomes

With non-identical \(c_k\), the distribution of the sum of n independent but non-identically distributed weighted Bernoulli random variables becomes difficult to evaluate. Here we adopt the inverse Fourier transform to calculate \(\varrho (z,v)\) (Oberhettinger 2014). Recall that \(L_n \mid (Z=z,V=v)\) equals

where \(H_{\ell }^{z,v}\sim \texttt{Bernoulli}(p_{z,v,\ell })\) (see Eq. (31)), and the support of \(L^{z,v}_n\) is a discrete set with a finite number of values. Its Fourier transform is

where \(\phi _{H^{z,v}_{\ell }}(s)=1-p_{z,v,\ell }+p_{z,v,\ell }e^{is}\). For random variable \(L^{z,v}_n\), we can recover \(q^{z,v}_k\) = \(P(L^{z,v}_n = k)\) by inverting the Fourier series:

where \(k=1,2,\ldots ,\infty \).

An FFT algorithm computes the discrete Fourier transform (DFT) of a sequence, or its inverse. To reduce the computational time, this paper uses the FFT to approximate the probability in (32). With Euler’s relation \(e^{i\theta }=\cos \theta +i\sin \theta \), we can confirm that \(\phi _{L^{z,v}_n}(t)\) has a period of \(2\pi \); i.e., \(\phi _{L^{z,v}_n}(t)=\phi _{L^{z,v}_n}(t+2\pi )\) for all t, due to the fact that \(e^{i(t+2\pi )k}=e^{itk}\).

With this periodic property, we now evaluate the characteristic function \(\phi _{L^{z,v}_n}\) at N equally spaced values in the interval \([0,2\pi ]\) as

which defines the DFT of the sequence of probabilities \(q^{z,v}_k\). By using the corresponding sequence of characteristic function values above, we can recover the sequence of probabilities; that is, we aim for the sequence of \(q^{z,v}_k\)’s from the sequence of \(b^{z,v}_m\)’s, which can be achieved by employing the inverse DFT operation

Finally, the approximation of \(\varrho (z,v)\) can be calculated as

where \(P_{\text {FFT}}(\cdot )\) denotes the probability approximated using a fast inverse Fourier transform.
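
The inversion described above can be sketched as follows for integer-valued losses \(c_\ell \). The code evaluates the characteristic function \(\prod _\ell (1-p_{z,v,\ell }+p_{z,v,\ell }e^{itc_\ell })\) on an equally spaced grid and applies an inverse DFT. To stay dependency-free it uses a naive \(O(N^2)\) inverse DFT rather than an FFT routine, so it illustrates the identity only and not the computational speed-up; the function names and the grid size \(N=1+\sum _\ell c_\ell \) are assumptions made for this example.

```python
import cmath
import math


def loss_pmf(probs, weights):
    """PMF of L = sum_l c_l H_l, H_l ~ Bernoulli(p_l) independent, c_l integer >= 0.

    Returns [P(L = 0), ..., P(L = sum_l c_l)].
    """
    size = sum(weights) + 1  # number of support points 0, 1, ..., sum c_l
    # characteristic function evaluated at t_m = 2 pi m / size
    b = []
    for m in range(size):
        t = 2.0 * math.pi * m / size
        value = 1.0 + 0.0j
        for p, c in zip(probs, weights):
            value *= (1.0 - p) + p * cmath.exp(1j * t * c)
        b.append(value)
    # naive inverse DFT: q_k = (1/N) sum_m b_m exp(-2 pi i m k / N)
    pmf = []
    for k in range(size):
        acc = sum(b[m] * cmath.exp(-2j * math.pi * m * k / size)
                  for m in range(size))
        pmf.append(max(acc.real / size, 0.0))  # clip tiny negative round-off
    return pmf


def tail_probability(probs, weights, tau):
    """P(L > tau) obtained by summing the recovered PMF."""
    return sum(q for k, q in enumerate(loss_pmf(probs, weights)) if k > tau)


if __name__ == "__main__":
    # Equal c_l = 1 reduces to a binomial distribution, which gives a check.
    n, p = 20, 0.1
    binom_tail = sum(math.comb(n, k) * p**k * (1 - p) ** (n - k)
                     for k in range(5, n + 1))
    print(tail_probability([p] * n, [1] * n, 4), binom_tail)
```

In practice the double loop would be replaced by a library FFT call, which lowers the cost of the inversion from \(O(N^2)\) to \(O(N\log N)\).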

Note that even “exact” FFT algorithms have errors when using finite-precision floating-point arithmetic, but these errors are typically very small. Most FFT algorithms have outstanding numerical properties; for example, the bound on the relative error for the Cooley–Tukey algorithm is \(O(\epsilon \log N)\). To assess the approximation performance, Table 6 provides several examples showing the approximation error and computational time of the inverse Fourier transform. In the table, we set the number of obligors \(n=250\) and assume \(p_{z,v,\ell }=0.1\) (i.e., \(H_{\ell }^{z,v}\sim \texttt{Bernoulli}(0.1)\)) for simplicity. To check the approximation performance, we first consider the case with equal \(c_i=1\), where the probability (denoted as \(P_{\text {Binomial}}(\cdot )\)) is evaluated analytically via the cumulative distribution function of the binomial distribution with parameters \(n=250\) and \(p=0.1\). Observe that the differences between the approximated probabilities (\(P_{\text {FFT}}(\cdot )\)) and the analytical ones (\(P_{\text {Binomial}}(\cdot )\)) are negligible, i.e., the bias is extremely small. Moreover, we investigate the case with five different \(c_i\), in which we compare the approximated probabilities with those generated via simulation with 500,000 samples; as shown in Table 6, the approximated probabilities all lie within the corresponding 95% confidence intervals. We note also that the computational time grows linearly with the number of different \(c_i\).Footnote 11

3.2.2 Bounded relative error analysis

To show that optimal sufficient exponential tilting has bounded relative error when simulating the portfolio loss probability \(P(L_n > \tau )\) in (24), we first introduce some notation. Recall \(L_n\) in (21). Let u(x) be a function that increases at a subexponential rate such that \(u(x) \rightarrow \infty \) as \(x \rightarrow \infty \), and set the default threshold for the k-th obligor to be \(\chi _k = a_k u(n)\), where \(a_k > 0\) is a constant; that is, we consider

Let \(W=(W_1,\ldots ,W_d,W_{d+1}):=(\tilde{W},W_{d+1})\). Due to the independence of \(\{W_j,j=1,\ldots ,d+1\}\), we can define

If \(W_{d+1}\) follows a gamma distribution \(\texttt{Gamma}(\alpha ,\beta )\), we have

Here the domains of the tilting parameter spaces are the same as those in Theorems 5 and 6.

The asymptotically optimal tilting parameters \(\mu ^*, \sigma ^*\) for Z can be obtained by the same method used to solve (14) and (15), in which the indicator \(\mathbbm {1}_{\{X \in A\}}\) is replaced by \(\varrho (Z,V)\) in (27); \(\theta ^*\) and \(\eta ^*\) for W can be obtained by the same method used to solve (19) and (20), in which the indicator \(\mathbbm {1}_{\{X > a \}}\) is replaced by \(\varrho (Z,V)\) in (27).Footnote 12

Theorem 7

Under the setting in model (22) with \(V_j=g(W_j)\) for \(j=1,\ldots ,d+1\), and the assumption that \(W_{d+1}\sim \texttt{Gamma}(\alpha ,\beta )\), let the sequence \(\{(c_k,a_k): k \ge 1\}\), defined in (34), take values in a finite set \(\mathcal{D}\). In addition, the proportion of each element \((c_k,a_k) \in \mathcal{D}\) in the portfolio converges to \(q_j > 0\) as \(n \rightarrow \infty \). Considering \(\tau = nb\) for some \(b > 0\), let \(A_n = \{L_n > nb\}\). Then, we have

where \(E^*\) denotes the expectation under the probability measure Q in (35). In other words, optimal sufficient exponential tilting has bounded relative error.

The proof of Theorem 7 is given in “Appendix C”.

3.3 Algorithms

This subsection summarizes the steps for implementing the proposed sufficient exponential importance sampling algorithm, which consists of two components: tilting parameter search and tail probability calculation. The aim of the first component is to determine the optimal tilting parameters. We implement the search phase using an automatic Newton method (Teng et al. 2016). We first define the conjugate measures \(\bar{Q}_{\mu ,\Sigma }\) for Z and \(\bar{Q}_{\theta ,\eta }\) for W of the measure Q.Footnote 13 With these two conjugate measures and the results in (14), (15), (20), and (19), we define functions \(g_{\mu }(\mu )\), \(g_{\Sigma }(\Sigma )\), \(g_{\theta }(\theta )\), and \(g_{\eta }(\eta )\) as

where \(\nabla _{\eta _i}M\) in (37) is defined in (16). To find the optimal tilting parameters, we must find the roots of the above four equations. With Newton’s method, the roots of (36), (37), (38), and (39) are found iteratively by

where the Jacobian of \(g_{\delta }(\delta )\) is defined as

In (40) and (41), \(\delta \) can be replaced with \(\mu \), \(\Sigma \), \(\theta \), and \(\eta \), and \(J^{-1}_{\delta }\) is the inverse of the matrix \(J_\delta \).

To measure how precisely an iterate solves (36), (37), (38), and (39), we define the sum of squared errors of \(g_\delta (\delta )\) as \(\Vert g_\delta (\delta )\Vert =g'_\delta (\delta )g_\delta (\delta )\); an iterate \(\delta ^{(n)}\) is accepted when \(\Vert g_\delta (\delta ^{(n)})\Vert \) is less than a predetermined precision level \(\epsilon \). To illustrate the algorithm, we use the setting \(V_j=g(W_j)=\sqrt{\nu _j/W_j}\) and \(W_j\sim \texttt{Gamma}(\nu _j/2,1/2)\) (for \(j=1,\ldots ,d+1\)) as an example. The detailed procedure of the first component is as follows:

Determine optimal tilting parameters:

(1) Generate independent samples \(z^{(i)}\) from \(N(0,\mathbb {I})\) and \(w_j^{(i)}\) from \(\texttt{Gamma}(\nu _j/2,1/2)\) for \(i=1,\ldots ,\mathscr {B}_1\); calculate \(v_j^{(i)}=\sqrt{\nu _j/w_j^{(i)}}\) for \(j=1,\dots ,d+1\).

(2) Set \(\mu ^{(0)}\), \(\Sigma ^{(0)}\), \(\theta ^{(0)}\), and \(\eta ^{(0)}\) properly; \(k=1\).

(3) Calculate \(g_{\delta ^{(0)}}\) functions via (36), (37), (38), and (39).

(4) Calculate the \({J}_\delta \) and their inverse matrices for the \(g_\delta \) functions.

(5) Calculate \(\delta ^{(k)}=\delta ^{(k-1)}-{J}_{\delta ^{(k-1)}}^{-1}{g}_\delta (\delta ^{(k-1)})\) in (40) for \(\delta =\mu , \Sigma , \theta , \eta \).

(6) Calculate \(g_{\delta ^{(k)}}\) functions via (36), (37), (38), and (39). If \(\forall \,\,\delta \in \{\mu ,\Sigma ,\theta ,\eta \},\,\, \Vert {g}_\delta (\delta ^{(k)})\Vert <\epsilon \), set \(\delta ^*=\delta ^{(k)}\) and stop. Otherwise, set \(k=k+1\) and return to step (4).
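The Newton search in steps (2) to (6) can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the functions `newton_root`, `solve_linear`, `toy_g`, and `toy_jac` are hypothetical names introduced here, and the toy system stands in for the functions in (36) to (39), which in the actual algorithm have no closed form and must be approximated by Monte Carlo simulation. The stopping rule mirrors the criterion \(\Vert g_\delta (\delta ^{(k)})\Vert <\epsilon \) with \(\Vert g\Vert =g'g\).

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b]; copied so the inputs are left untouched.
    m = [list(a[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-14:
            raise ValueError("Jacobian is numerically singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def newton_root(g, jac, delta0, eps=1e-12, max_iter=50):
    """Iterate delta <- delta - J^{-1} g(delta) until g'g < eps."""
    delta = list(delta0)
    g_val = g(delta)
    k = 0
    while sum(v * v for v in g_val) >= eps:
        if k == max_iter:
            raise RuntimeError("Newton iteration did not converge")
        # Solving J step = g avoids forming the inverse Jacobian explicitly.
        step = solve_linear(jac(delta), g_val)
        delta = [d - s for d, s in zip(delta, step)]
        g_val = g(delta)
        k += 1
    return delta, k


# Hypothetical toy system (NOT equations (36)-(39)): root at (sqrt 2, sqrt 2).
def toy_g(d):
    return [d[0] ** 2 + d[1] ** 2 - 4.0, d[0] - d[1]]


def toy_jac(d):
    return [[2.0 * d[0], 2.0 * d[1]], [1.0, -1.0]]


if __name__ == "__main__":
    root, n_iter = newton_root(toy_g, toy_jac, [1.0, 2.0])
    print(root, n_iter)
```

Solving the linear system at each step, rather than inverting \(J_\delta \) as written in step (4), is a design choice of this sketch; the two are mathematically equivalent.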

We proceed to describe the second component that calculates the probability of losses, in which optimal tilting parameters \(\mu ^*\), \(\Sigma ^*\), \(\theta ^*\) and \(\eta ^*\) are used (see step (6) for the first component above). The detailed procedures of the second component are summarized as follows:

Calculate the probability of losses, \(P(L_n>\tau )\):

(1) Generate independent samples \(z^{(i)}\) from \(N(\mu ^*,\Sigma ^*)\) and \(w_j^{(i)}\) from \(\texttt{Gamma}(\nu _j/2-\theta ^*_j,1/2+\eta ^*_j)\) for \(i=1,\ldots ,\mathscr {B}_2\); calculate \(v_j^{(i)}=\sqrt{\nu _j/w_j^{(i)}}\) for \(j=1,\dots ,d+1\).

(2) Estimate m by \(\hat{m}=\frac{1}{\mathscr {B}_2}\sum _{i=1}^{\mathscr {B}_2}\tilde{\varrho }(z^{(i)}, v^{(i)})\,r_{z,\mu ^*,\Sigma ^*}(z^{(i)})\,r_{w,\theta ^*,\eta ^*}(w^{(i)})\) in (28), where \(\tilde{\varrho }(z,v)\) is calculated by the analytical form from (33) and \(\mu ^*,\Sigma ^*,\theta ^*\), and \(\eta ^*\) are obtained from step (6) of the first component of the algorithm.
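The structure of the estimator in step (2), an average of the payoff multiplied by the two likelihood ratios, can be illustrated on a toy problem. The sketch below is an assumption-laden stand-in rather than the portfolio model: it replaces \(\tilde{\varrho }\) with the indicator of the hypothetical event \(\{Z>c,\,W>a\}\) for independent \(Z\sim N(0,1)\) and \(W\sim \texttt{Gamma}(\alpha ,\beta )\) (rate parameterization), samples Z from \(N(\mu ,1)\) and W from \(\texttt{Gamma}(\alpha +\theta ,\beta -\eta )\), and uses hand-picked rather than optimized tilting parameters. All function names are hypothetical.

```python
import math
import random


def log_lr_normal(z, mu):
    """log dP/dQ at z for P = N(0, 1) and Q = N(mu, 1)."""
    return -mu * z + 0.5 * mu * mu


def log_lr_gamma(w, alpha, beta, theta, eta):
    """log dP/dQ at w for P = Gamma(alpha, rate beta) and
    Q = Gamma(alpha + theta, rate beta - eta)."""
    log_norm = (alpha * math.log(beta) - math.lgamma(alpha)
                - (alpha + theta) * math.log(beta - eta)
                + math.lgamma(alpha + theta))
    return log_norm - theta * math.log(w) - eta * w


def is_tail_probability(c, a, alpha, beta, mu, theta, eta, n_samples, seed=0):
    """Importance sampling estimate of P(Z > c, W > a) (toy payoff)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = rng.gauss(mu, 1.0)
        # random.gammavariate takes (shape, scale), so scale = 1 / rate.
        w = rng.gammavariate(alpha + theta, 1.0 / (beta - eta))
        if z > c and w > a:
            total += math.exp(log_lr_normal(z, mu)
                              + log_lr_gamma(w, alpha, beta, theta, eta))
    return total / n_samples


if __name__ == "__main__":
    # Hand-picked tilts: mean of Z moved to c, mean of W moved to a.
    est = is_tail_probability(c=3.0, a=10.0, alpha=1.0, beta=1.0,
                              mu=3.0, theta=2.0, eta=0.7,
                              n_samples=200_000, seed=1)
    # For alpha = 1 the exact value is available in closed form.
    exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0)) * math.exp(-10.0)
    print(est, exact)
```

With \(\alpha =1\) the target probability factorizes into a normal tail and an exponential tail, which makes the toy estimate easy to check against a closed form.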

As a side note, in the above sufficient exponential tilting algorithm, a component-wise Newton method is adopted to determine the optimal tilting parameters \(\mu ,\Sigma ,\theta \), and \(\eta \); this differs from Algorithm 2 in Teng et al. (2016), which involves only one-parameter tilting for \(\mu \).

Remark 6

To implement the proposed importance sampling, we require an additional search stage. The search stage employs the recursive formula in (40), in which the function \(g_\delta (\cdot )\) and the Jacobian \(J_\delta \) do not have closed-form formulas and must be approximated by Monte Carlo simulation. For ease of presentation, and because V (or W) dominates the performance [as stated in Sect. 3.3 of Bassamboo et al. (2008)], we use the case of estimating \(\eta ^*\) (see Eq. (39)) to illustrate the approximation.

Let \(\hat{g}_\eta (\eta )\) be the Monte Carlo estimator of \(g_\eta (\eta )\), defined as

where \(Y^{(1)},\ldots ,Y^{(\mathscr {B}_1)}\) are random samples under \(\bar{Q}_{\theta ,\eta }\). However, it is difficult to generate samples from \(\bar{Q}_{\theta ,\eta }\) because it involves the payoff function \(\varrho (Z,V)\). Recall that substituting (8) for \(\bar{Q}_{\theta ,\eta }\) in (39) yields

Therefore, we can estimate \(g_\eta (\eta ) \) by

where \(Z^{(1)}, \ldots , Z^{(\mathscr {B}_1)}\) and \(V^{(1)},\ldots ,V^{(\mathscr {B}_1)}\) (and \(W^{(1)},\ldots ,W^{(\mathscr {B}_1)}\)) are i.i.d. samples under P. Note that under the finiteness of the second moment assumption in (4), the standard strong law of large numbers implies that the second term on the right-hand side of (42) converges P-almost surely to

which is \(E_{\bar{Q}_{\theta ,\eta }}[W \mid L_n>\tau ]\) by the definition of conjugate measure \(\bar{Q}_{\theta ,\eta }\).

To approximate the second term on the right-hand side of (42), we apply a similar technique to that in Fuh and Hu (2004), which uses a small value of \(\mathscr {B}_1\) to locate the optimal tilting parameters. By (39), (42), and a similar argument to that in Theorem 1 of Bassamboo et al. (2008), we have \(\mathbbm {1}_{\{L_n>\tau \}} \sim \mathbbm {1}_{\{W_{d+1}>a\}}\) when \(V_j=g(W_j)=\sqrt{W_j}\) for \(j=1,\ldots ,d+1\). This implies that

Since \(P(W_{d+1}>a) \) is small when a is large, the preceding equation indicates that we may lose numerical/simulation precision. Therefore, we multiply both the numerator and denominator of the preceding equation by \(\exp (\eta x + \theta \log x)\), for suitable \(\theta = \xi \), \(\eta = \beta - (\alpha +\xi )/a\) for a large \(\xi \) as suggested in (B20) and (B21), and compute

Then we run the simulation in (42) based on (43). Note that over an important part of the set \([a,\infty )\), \(x^{\alpha + \theta -1} \exp (- (\beta - \eta ) x)\) is a moderately sized number, so the conditional expectation in (43) can be simulated quite accurately with a reasonably small \(\mathscr {B}_1\). Similar techniques can be used for the case \(V_j=g(W_j)=\sqrt{\nu _j/W_j}\); that is, we instead estimate \(E_{\bar{Q}_{\theta ,\eta }}\left[ W \,\mid \, L_n>\tau \right] \sim E_{\bar{Q}_{\theta ,\eta }}\left[ W \,\mid \, W_{d+1} < 1/a \right] \) (see “Appendix B”).
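The reweighting idea in this remark can be sketched for a single gamma variable. The code below is a minimal illustration, not the authors' implementation: it estimates \(E[W \mid W>a]\) for \(W\sim \texttt{Gamma}(\alpha ,\beta )\) (rate parameterization) by sampling from the density proportional to \(x^{\alpha +\theta -1}\exp (-(\beta -\eta )x)\) with \(\theta =\xi \) and \(\eta =\beta -(\alpha +\xi )/a\), then forming a self-normalized ratio so that the normalizing constants cancel. The function name and the choice of a self-normalized estimator are assumptions made here for illustration.

```python
import math
import random


def conditional_mean_tilted(a, alpha, beta, xi, n_samples, seed=0):
    """Estimate E[W | W > a] for W ~ Gamma(alpha, rate beta) using samples
    from the tilted law Gamma(alpha + theta, rate beta - eta)."""
    theta = xi
    eta = beta - (alpha + xi) / a
    shape = alpha + theta
    rate = beta - eta  # equals (alpha + xi) / a, so the tilted mean is a
    rng = random.Random(seed)
    xs, log_ws = [], []
    for _ in range(n_samples):
        # random.gammavariate takes (shape, scale), so scale = 1 / rate.
        x = rng.gammavariate(shape, 1.0 / rate)
        if x > a:
            xs.append(x)
            # Unnormalized log weight: original density over tilted density.
            log_ws.append(-theta * math.log(x) - eta * x)
    if not xs:
        raise ValueError("no sample exceeded the threshold a")
    shift = max(log_ws)  # subtract the maximum for numerical stability
    ws = [math.exp(lw - shift) for lw in log_ws]
    return sum(w * x for w, x in zip(ws, xs)) / sum(ws)


if __name__ == "__main__":
    # With alpha = 1 (exponential case) the exact value is a + 1 / beta.
    print(conditional_mean_tilted(a=20.0, alpha=1.0, beta=1.0, xi=20.0,
                                  n_samples=100_000, seed=2))
```

In this toy setting \(P(W>20)=e^{-20}\), so crude sampling under the original law would almost never reach the conditioning set, whereas roughly half of the tilted samples do.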

3.4 Numerical results

We compare the performance of our method with the methods proposed in Bassamboo et al. (2008) and Chan and Kroese (2010). For comparison purposes, we adopt the same sets of parameter values as those in Table 1 of Bassamboo et al. (2008), where the latent variables \(X_k\) in (22) follow a t-distribution, i.e., \(V_1=V_2=\sqrt{\nu _1/W_1}\) and \(W_1\sim \texttt{Gamma}(\nu _1/2,1/2)\). The model parameters were chosen to be \(n=250\), \(\rho _{11}=0.25\), the default threshold for each individual obligor \(\chi _i =0.5\times \sqrt{n}\), each \(c_i=1\), \(\tau =250\times b\), \(b=0.25\), and \(\sigma _{\epsilon }=3\). Table 7 reports the results of the exponential change of measure (ECM) proposed in Bassamboo et al. (2008) and conditional Monte Carlo simulation without and with cross entropy (CondMC and CondMC-CE, respectively) in Chan and Kroese (2010). As observed from the table, the proposed algorithm (the last four columns) offers substantial variance reduction compared with crude simulation; in general, it compares favorably to the ECM and CondMC estimators. Moreover, for a fair comparison with the results of CondMC-CE, we first follow the CondMC method by integrating out the shock variable analytically; then, instead of using the cross-entropy approach, we apply the proposed importance sampling method for variance reduction. Under this setting, the proposed method yields variance reductions comparable to those of CondMC-CE.

In addition to the above results, we conducted further experiments with the following setups, the results of which are reported and discussed in “Appendix D”. First, we compare the performance of the proposed method with crude simulation under three-factor normal mixture models, in which the t-distribution or the GIG distribution for \(X_k\) is considered. (Note that we compare the performance of our method only with crude Monte Carlo simulation henceforth, as most of the literature focuses on simulating one-dimensional cases.) The cases with different losses resulting from default of the obligors are also investigated. Finally, we compare the computational time of crude Monte Carlo simulation with that of the proposed importance sampling under several scenarios, and provide insight into the trade-off between reduced variance and increased computational time. Detailed setups for all the experiments for portfolio losses are also found in Appendix 3.4.

4 Conclusion

This paper introduces a comprehensive framework for a sufficient exponential tilting algorithm, supported by both theoretical foundations and empirical evidence from numerical experiments. We employ this approach in a multi-factor model featuring a normal mixture copula. Traditional methods for calculating portfolio credit risk, such as direct analysis or basic Monte Carlo simulation, are insufficient due to the large portfolio size, diverse obligor characteristics, and the interconnected yet infrequent nature of default events. To address these challenges, we offer an optimized simulation algorithm that more accurately estimates the likelihood of significant portfolio losses when a normal mixture copula is involved. In summary, our computational method offers two main innovations. First, from a statistical standpoint, we introduce an unconventional sufficient (statistic) exponential embedding that serves as a versatile tool in importance sampling. Second, our work appears to be the first to specifically tackle high-dimensional importance sampling within multi-factor models that go beyond the standard normal copula assumptions. In the broader context, the relevance and application of our work extend beyond credit risk to extreme event modeling in various domains, such as pandemics, highlighting its importance and versatility. The computational challenges associated with extreme event modeling are well-known, and our paper contributes significantly to this arena.

There are several possible future directions based on this model. To name a few, first, we will explore more properties of the proposed sufficient exponential tilting, and apply it to more practical cases, to see how far it can go. Second, as suggested by a reviewer, it could be helpful to discuss computing the value-at-risk or expected shortfall for general financial risk management or for insurance company interests when managing the large number of obligors involved, as these remain demanding topics in practice. Third, although in this paper the default time is fixed and the default boundary is exogenous, the default time could be any time before a pre-fixed time T and the default boundary could depend on firm characteristics and may be state- and time-dependent. To capture these phenomena, we will consider more complicated dynamic models, for which importance sampling should be more sophisticated. Before that, it would be interesting to develop an importance sampling algorithm for first-passage time events (i.e., the probability that sums of non-negative random variables fall below a sufficiently small threshold), and study the performance of sufficient exponential tilting in the domain of state-dependent importance sampling. Finally, as credit derivatives are among the fastest growing contracts in the derivatives market (Chen and Sopranzetti 2003; Ericsson et al. 2009; Hirtle 2009), another interesting future research direction would be to apply the proposed technique to credit derivative valuation.

Notes

Note that to draw the figure, we assume \(\mu =\mu _1=\mu _2\) and \(\sigma =\sigma _1=\sigma _2\) for easy presentation.

Here we treat the mean vector and variance-covariance matrix of Z as two parameters.

To simplify the notation, we here use \(\mu \) and \(\sigma ^2\).

Here we omit the coefficients before A, B, and C for simplicity.

Although here we consider the identity covariance matrix for simplicity, it is straightforward to extend this to any valid covariance matrix \(\Sigma \).

Note that Theorem 1 works for a random variable from an exponential family. In this section, we simply apply the proposed importance sampling to simulate the portfolio loss. In the case of simulating a rare event probability, in which X is in a non-convex set, further decomposition based on mixture distribution tilting is required, cf. Fuh and Hu (2004), Glasserman et al. (2008). This line of research will be studied further in a separate paper.

To simplify the notation, we use \(\mu \) to denote one dimensional and high dimensional parameters.

This partial derivative is componentwise.

All of the experiments were obtained by running programs via Mathematica 11 on a MacBook Pro with a 2.6 GHz Intel Core i7 CPU.

Note that here we do not tilt the correlation parameters.

Note that these two conjugate measures are different from that defined in Sect. 2, which is additionally defined with respect to a general payoff function \(\mathscr {P}\). As the payoff function here is the probability of losses in (24) and thus can be represented as the expectation of an indicator function (which is similar to the simple examples in Sect. 2.1), we here follow the conjugate measure in Fuh et al. (2018) for the following calculation: the event of the probability becomes the condition of the expectation (see Equations (36)–(39)).

For our experiments, the software utilized was Mathematica 13.1; the hardware platform was a MacBook Pro (16-inch, 2021) (M1 Pro Chip with a 10-core CPU).

Moreover, increasing the number of tilting parameters only affects the search time for the optimal tilting parameters, the time complexity of which grows linearly with the number of considered parameters.