Abstract

Combinatorial estimation is a new area of application for sequential Monte Carlo methods. We use ideas from sampling theory to introduce new without-replacement sampling methods in such discrete settings. These without-replacement sampling methods allow the addition of merging steps, which can significantly improve the resulting estimators. We give examples showing the use of the proposed methods in combinatorial rare-event probability estimation and in discrete state-space models.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Importance sampling is a widely used Monte Carlo technique that involves changing the probability distribution under which simulation is performed. Importance sampling algorithms have been applied to a variety of discrete estimation problems, such as estimating the locations of change-points in a time series (Fearnhead and Clifford 2003), the permanent of a matrix (Kou and McCullagh 2009), the \(\mathcal {K}\)-terminal network reliability (L’Ecuyer et al. 2011) and the number of binary contingency tables with given row and column sums (Chen et al. 2005).

Sequential importance resampling algorithms (Doucet et al. 2001; Liu 2001; Del Moral et al. 2006; Rubinstein and Kroese 2017) combine importance sampling with some form of resampling. The aim of the resampling step is to remove samples that have an extremely low importance weight. In the case that the random variables of interest take on only finitely many values, forms of resampling that involve without-replacement sampling can be used (Fearnhead and Clifford 2003).

The resulting algorithms are similar to particle-based algorithms with resampling, but the sampling and resampling steps are replaced by a single without-replacement sampling step. In the approach of Fearnhead and Clifford (2003), the authors use what we characterize as a probability proportional to size sampling design. These ideas have recently been incorporated into quasi Monte Carlo (Gerber and Chopin 2015), as sequential quasi Monte Carlo. The stochastic enumeration algorithm of Vaisman and Kroese (2015) is another without-replacement sampling method, based on simple random sampling.

Use of without-replacement sampling has a number of advantages. This type of sampling tends to automatically compensate for deficiencies in the importance sampling density. If the importance sampling density wrongly assigns high probability to some values, then the consequence of this mistake is limited, as those values can still only be sampled once. This type of sampling can in principle reduce the effect of sample impoverishment (Gilks and Berzuini 2001), as there is a lower limit to the number of distinct particles.

The first contribution of this paper is to highlight the links between the field of sampling theory and sequential Monte Carlo, in the discrete setting. In particular, we view the use of without-replacement sampling as an application of the famous Horvitz–Thompson estimator (Horvitz and Thompson 1952), unequal probability sampling designs (Brewer and Hanif 1983; Tillé 2006) and multistage sampling. The links between these fields have received limited attention in the literature (Fearnhead 1998; Carpenter et al. 1999; Douc et al. 2005), and the link with the Horvitz–Thompson estimator has not been made previously.

Our application of methods from sampling theory would likely be considered unusual by practitioners in that field. For example, in the Monte Carlo context, physical data collection is replaced by computation, so huge sample sizes become quite feasible. Also, it has traditionally been unusual to apply multistage methods with more than three stages of sampling, but in the Monte Carlo context we apply such methods with thousands of stages.

The second contribution of this paper is to describe a new method of without-replacement sampling, using results from sampling theory. Specifically, we use the Pareto design (Rosén 1997a, b) as a computationally efficient unequal probability sampling design. Our use of the Pareto design relies on results from Bondesson et al. (2006).

The rest of this paper is organized as follows. Section 2 describes importance sampling and related particle algorithms. Section 3 gives an overview of sampling theory. Section 4 introduces the new sequential Monte Carlo method incorporating sampling without replacement, and lists some advantages and disadvantages of the proposed methodology. Section 5 gives some numerical examples of the effectiveness of without-replacement sampling. Section 6 summarizes our results and gives directions for further research.

2 Sequential importance resampling

2.1 Importance sampling

Let \({\mathbf {X}}_d = \left( X_1, \ldots , X_d\right) \) be a random vector in \(\mathbb {R}^d\), having density f with respect to a measure \(\mu \), e.g., the Lebesgue measure or a counting measure. Let \({\mathbf {X}}_t = \left( X_1, \ldots , X_t\right) \) be the first t components of \({\mathbf {X}}_d\). We wish to estimate the value of \(\ell = \mathbb {E}_f\left[ h\left( {\mathbf {X}}_d\right) \right] \), for some real-valued function h.

The crude Monte Carlo approach is to simulate \(n\) iid copies \({\mathbf {X}}_d^1, \ldots , {\mathbf {X}}_d^n\) according to f, and estimate \(\ell \) by \(n^{-1}\sum _{i=1}^nh\left( {\mathbf {X}}_d^i\right) \). However, there is no particular reason to use f as the sampling density. For any other density g such that \(g({\mathbf {x}}) = 0\) implies \(h({\mathbf {x}})f({\mathbf {x}}) = 0\),

where \(w\left( {\mathbf {x}}_d\right) \,{\buildrel {\mathrm {def}} \over =}\,\frac{f\left( {\mathbf {x}}_d\right) }{g\left( {\mathbf {x}}_d\right) }\) is the importance weight. If \({\mathbf {X}}_d^1, \ldots , {\mathbf {X}}_d^n\) are iid with density g, then the estimator

is unbiased. This estimator is known as an importance sampling estimator (Marshall 1956), with g being the importance density.

The quality of the importance sampling estimator depends on a good choice for the importance density. If h is a nonnegative function, then the optimal choice is

and the estimator has zero variance.

If the normalizing constant of f is unknown, then we can replace the weight function w with the unnormalized version \(w_{\mathrm {r}}\left( {\mathbf {x}}\right) = \frac{c f\left( {\mathbf {x}}_d\right) }{g\left( {\mathbf {x}}_d\right) }\), where cf is a known function but c and f are unknown individually. In that case we use the asymptotically unbiased ratio estimator

2.2 Sequential importance sampling

Let \({\mathbf {x}}_{t} = \left( x_1, \ldots , x_t\right) \). We adopt Bayesian notation, so that the interpretation of \(f\left( \ldots \right) \) depends on its arguments, e.g., \(f\left( x_3 \;\vert \;{\mathbf {x}}_2\right) \) is the density of \(X_3\) conditional on \({\mathbf {X}}_2 = {\mathbf {x}}_2\). It can be difficult to directly specify an importance density on a high-dimensional space. The simplest method is often to build the distributions of the components sequentially. We first specify \(g\left( x_1\right) \), then \(g\left( x_2 \;\vert \;x_1\right) , g\left( x_3 \;\vert \;{\mathbf {x}}_{2}\right) \), etc. If g is then used as an importance density, the importance weight is

Early applications of this type of sequential build-up include Hammersley and Morton (1954) and Rosenbluth and Rosenbluth (1955). More recent uses include Kong et al. (1994), Liu and Chen (1995). See Liu et al. (2001) for further details.

It is often convenient to calculate the importance weights recursively as \(u_1\left( x_1\right) = \frac{f\left( x_1\right) }{g(x_1)}\) and

It is clear that \(u_d\left( {\mathbf {x}}_d\right) = w\left( {\mathbf {x}}_d\right) \). Note that computing \(u_t\) requires the factorization of \(f\left( {\mathbf {x}}_t\right) \) in order to compute \(f\left( x_t \;\vert \;{\mathbf {x}}_{t-1}\right) \), which can be difficult. An alternative is to use a family \(\left\{ f_t\left( {\mathbf {x}}_t\right) \right\} _{t = 1}^d\) of auxiliary densities, where it is required that \(f_d = f\). Using these densities, we can compute the importance weights as \(v_1 = \frac{f_1(x_1)}{g(x_1)}\) and

Note that \(u_d\left( {\mathbf {x}}_d\right) = v_d\left( {\mathbf {x}}_d\right) = w\left( {\mathbf {x}}_d\right) \). We obtain \(u_t\) as a special case of \(v_t\), where the auxiliary densities are the marginals of f. As \(v_t\) is more general, we use it to define our importance weights (unless otherwise stated). If the auxiliary densities are only known up to constant factors, then the unnormalized version of (5) involves setting \(v_1\left( x_1\right) = \frac{c_1 f_1\left( x_1\right) }{g\left( x_1\right) }\) and

where the functions \(\left\{ c_t f_t\left( {\mathbf {x}}_t\right) \right\} \) are known, but the normalized functions \(\left\{ f_t\left( {\mathbf {x}}_t\right) \right\} \) may be unknown.

If \(c_d = 1\) it is possible to evaluate \(f_d\), and we can use the estimator \(\widehat{\ell }_{\mathrm {ub}}\) defined in (1), regardless of whether \(c_t \ne 1\) for \(t < d\). Otherwise, if \(f_d\) is known only up to a constant factor, we must use \(\widehat{\ell }_{\mathrm {ratio}}\). The variance of the corresponding importance sampling estimator is independent of the choice of auxiliary densities and of the constants \(\left\{ c_t\right\} \), but dependent on g. This will change in Sect. 2.3 with the introduction of resampling steps.

Sequential importance sampling can be performed by simulating all d components of \({\mathbf {X}}_d\) and repeating this process \(n\) times. Alternatively, we can simulate the first component of all \(n\) copies of \({\mathbf {X}}_d\). Then, we simulate the second components conditional on the first, and so on. We adopt the second approach, as it leads naturally to sequential importance resampling.

2.3 Sequential importance resampling

It is often clear before all d components have been simulated that the final importance weight will be small. Samples with a small final importance weight will not contribute significantly to the final estimate. It makes sense to remove these samples before the full d components have been simulated. One way of achieving this is by resampling from the set of partially observed random vectors. In this context, the partially observed vectors are known as particles.

Let \(\left\{ {\mathbf {X}}_t^i \right\} _{i=1}^n\) be the set of particles for a sequential importance sampling algorithm, and let \(W_t^i = v_t\left( {\mathbf {X}}_t^i\right) \) be the importance weights in Sect. 2.2. Let \(\left\{ {\mathbf {Y}}_t^i \right\} _{i=1}^n\) be a sample of size \(n\) chosen with replacement from \(\left\{ {\mathbf {X}}_t^i\right\} _{i=1}^n\) with probabilities proportional to \(\left\{ W_t^i\right\} _{i=1}^n\), and let \(\overline{W}_t = n^{-1}\sum _{i=1}^nW_t^i\). We can replace the variables \(\left\{ \left( {\mathbf {X}}_t^i, W_t^i\right) \right\} _{i=1}^n\) by \(\left\{ \left( {\mathbf {Y}}_t^i, \overline{W}_t\right) \right\} _{i=1}^n\) and continue the sequential importance sampling algorithm. This type of resampling is called multinomial resampling. The most famous use of multinomial resampling is in the bootstrap filter (Gordon et al. 1993). There are numerous other types of resampling, such as splitting or enrichment (Wall and Erpenbeck 1959), stratified resampling and residual resampling (Liu and Chen 1995; Carpenter et al. 1999). See Liu et al. (2001) for a recent overview.

3 Sampling theory

Sampling theory aims to provide estimates about a finite population by examining a randomly chosen set of elements of the population, known as a sample. The population consists of \(N\) different objects known as units, denoted by the numbers \(1, 2, \ldots , N\). We will assume that the size \(N\) of the population is known.

We assume that for each unit \(i \in \left\{ 1, \ldots , N\right\} \) there is a fixed scalar value \(y\left( i\right) \). These values are known only for the units selected in the sample. We wish to estimate some function \(F\left( y\left( 1\right) , \ldots , y\left( N\right) \right) \) of the values, most often the mean \(\overline{y} = N^{-1}\sum _{i=1}^Ny\left( i\right) \).

In its most abstract form, sampling theory is concerned with constructing random variables taking values in certain product sets. For example, a sample chosen with replacement corresponds to a random vector taking values in \(\bigcup _{n=1}^\infty \left\{ 1, \ldots , N\right\} ^n\). A sample of fixed size \(n\) chosen with replacement corresponds to a random variable taking values in \(\left\{ 1, \ldots , N\right\} ^n\). Define the power set \(\mathcal {P}\left( X\right) \) as the set of all subsets of the set X. A sample without replacement corresponds to a random variable taking values in the power set \(\mathcal {P}\left( \left\{ 1, \ldots , N\right\} \right) \), and a sample without replacement of fixed size \(n\) corresponds to a random variable taking values in

These random variables have some distribution, and these types of distribution are known as sampling designs.

Units may be included in the sample with equal probability or unequal probability. Our focus in this section is on without-replacement sampling with a fixed sample size \(n\) and unequal probabilities. The probability of including unit i in the sample is called the inclusion probability of unit i, and denoted by \(\pi \left( i\right) \). We assume that all the inclusion probabilities are strictly positive. The probability that both units i and j are included in the sample is denoted by \(\pi (i, j)\). This is referred to as the second-order inclusion probability.

In order to apply unequal probability sampling designs, we assume that there are positive values \(\left\{ p\left( i\right) \right\} _{i = 1}^{N}\) (known as size variables). For reasons specific to the application domain, these values are assumed to be positively correlated with the values in \(\left\{ y\left( i\right) \right\} _{i = 1}^{N}\). In traditional sampling applications, \(\left\{ p\left( i\right) \right\} _{i = 1}^{N}\) might correspond to (financially expensive) census of the population at a previous time, or estimates of the \(\left\{ y\left( i\right) \right\} _{i=1}^N\) which are easily obtainable but highly variable. In our setting, the \(\left\{ p\left( i\right) \right\} _{i = 1}^{N}\) play a similar role to the importance density in traditional importance sampling.

Unlike the \(\left\{ y\left( i\right) \right\} _{i=1}^{N}\), the \(\left\{ p\left( i\right) \right\} _{i = 1}^{N}\) are known before sampling is performed. We aim to have \(\left\{ \pi \left( i\right) \right\} _{i=1}^N\) approximately proportional to \(\left\{ p\left( i\right) \right\} _{i = 1}^{N}\), and therefore approximately proportional to the \(\left\{ y\left( i\right) \right\} _{i=1}^N\). For these reasons, unequal probability designs are also known as probability proportional to size (PPS) designs. Calculation of the inclusion probabilities for these designs is often difficult. See Tillé (2006) or Cochran (1977) for further details on general sampling theory.

3.1 The Horvitz–Thompson estimator

Assume that we are using a without-replacement sampling design with fixed size \(n\), and wish to estimate the total \(N \overline{y}\) of the population values. If \(\mathbf {s} \in \mathcal {S}_n\) is the chosen sample, then the Horvitz–Thompson estimator (Horvitz and Thompson 1952) of the total is

3.2 Sampling with probability proportional to size

In the next section, we discuss some common PPS designs. In order to apply PPS designs, we often need conditions on the size variables \(\left\{ p\left( i\right) \right\} _{i = 1}^{N}\). The most common condition is \(0< p\left( i\right) < 1\), although some designs are more restrictive.

3.2.1 The Poisson and conditional Poisson designs

For the Poisson design, we require \(0< p\left( i\right) < 1\), and every unit i is included independently with probability \(p\left( i\right) \). We emphasize that this design selects a sample with a random size. The density for the Poisson design is

where \(\mathbf {s} \in \mathcal {P}\left( \left\{ 1, \ldots , N\right\} \right) \). The inclusion probabilities are \(\pi \left( i\right) = p\left( i\right) \). This design is trivial to sample from.

The Conditional Poisson (CP) design is the Poisson design conditional on the sample having size \(n\).

3.2.2 The Pareto design

The Pareto design was first proposed by Rosén (1997a, b). Assume that \(0< p\left( i\right) < 1\). We generate iid random variables \(U_1, \ldots , U_N\), distributed uniformly on \(\left[ 0, 1\right] \). These are used to generate the ranking variables

The \(n\) units with the smallest ranking variables are selected as the sample of size \(n\), making this design trivial to implement. The computational complexity of generating a Pareto sample is \(O\left( N\log N\right) \).

The density of this design is given in Bondesson et al. (2006) as

where \(\mathbf {s} \in \mathcal {S}_n\), and

The inclusion probabilities are much more difficult to compute than for the previous designs.

3.2.3 The Sampford design

Assume that \(0< p\left( i\right) < 1\) and \(\sum _{i=1}^Np\left( i\right) = n\). This condition is far more restrictive than the conditions for the previously mentioned designs, and the \(\left\{ p\left( i\right) \right\} \) cannot necessarily be rescaled to satisfy this condition. The Sampford design (Sampford 1967) has pdf

where \(\mathbf {s} \in \mathcal {S}_n\). This design has the advantage that \(\pi \left( i\right) = p\left( i\right) \), so the inclusion probabilities can be exactly specified. This design is difficult to sample from.

3.2.4 Systematic sampling

Assume that \(0 < p\left( i\right) \), and let \(K = n^{-1}\sum _{i=1}^Np\left( i\right) \). We assume that all the \(p\left( i\right) \) are smaller than K. Simulate U uniformly on \(\left[ 0, K\right] \). The sample contains every unit j such that

We have described systematic sampling (Madow and Madow 1944) using a fixed ordering of units, in which case some pairwise inclusion probabilities are zero. Systematic sampling can also be performed using a random ordering, in which case every pairwise inclusion probability is positive.

The complexity of generating a systematic sample is \(O\left( N\right) \) (Fearnhead and Clifford 2003), which is asymptotically faster than generation of a Pareto sample.

3.2.5 Adjusting the population

The existence of units with large size variables may preclude the existence of a sampling design with sample size \(n\), for which \(\pi \left( i\right) \propto p\left( i\right) \). As \(\sum _{i=1}^N\pi \left( i\right) = n\), proportionality would require

This may contradict \(\pi \left( i\right) \le 1\).

More generally, if a population does not satisfy the conditions for a particular design, units can be removed from the population and the sample size adjusted, until the conditions are satisfied. For example, consider the case where the Sampford design cannot be applied, because even though the \(\left\{ p\left( i\right) \right\} _{i=1}^N\) are positive, they cannot be rescaled to satisfy the conditions in Sect. 3.2.3. We iteratively remove the units with the largest size variable from the population, until the Sampford design can be applied with sample size \(n- k\), where k is the number of units removed. The k removed units are deterministically included in the sample, and the Sampford design is applied to the remaining units, with sample size \(n- k\).

4 Sequential Monte Carlo for finite problems

Our aim in this section is to develop a new sequential Monte Carlo technique that uses sampling without replacement. The algorithms we develop are based on the Horvitz–Thompson estimator and can be interpreted as an application of multistage sampling methods from the field of sampling theory.

We begin in Sect. 4.1 by describing our new sequential Monte Carlo technique without reference to any specific sampling design. In Sect. 4.2, we argue for the use of the Pareto design, with the inclusion probabilities being approximated by the inclusion probabilities of a related Sampford design. Section 4.5 gives some advantages and disadvantages of without-replacement sampling methods.

4.1 Sequential Monte Carlo without replacement

Assume that \({\mathbf {X}}_d = \left( X_1, \ldots , X_d\right) \) is a random vector in \(\mathbb R^d\), taking values in the finite set \(\mathscr {S}_d\) and having density f with respect to the counting measure on \(\mathscr {S}_d\). We wish to estimate the value of

Let \(\mathbf {S}_i\) be a subset of the support of \({\mathbf {X}}_i = \left( X_1, \ldots , X_i\right) \). For \(d \ge t > i \ge 1\), define \(\mathscr {S}_{t}\left( \mathbf {S}_{i}\right) \) as

That is, \(\mathscr {S}_{t} \left( \mathbf {S}_{i}\right) \) is the set of all extensions of a vector in \(\mathbf {S}_i\) to a possible value for \({\mathbf {X}}_t\). For any value \({\mathbf {x}}_i\) of \({\mathbf {X}}_i\), let

It will simplify our algorithms to define

We begin by drawing a without-replacement sample from the set of all possible values of the first coordinate, \(X_1\). That is, we select a sample \(\mathbf {S}_1\) (of fixed or random size) from \(\mathscr {S}_1\) according to a sampling design. For any \(x_1\in \mathscr {S}_1\) let \(\pi ^1\left( x_1\right) \) be the inclusion probability for element \(x_1\) under this design. The specific choice of the sampling design is deferred to Sect. 4.2.

We now repeat this sampling process by drawing a without-replacement sample from the possible values of \({\mathbf {X}}_2\), conditional on the value of \(X_1\) being contained in \(\mathbf {S}_1\). That is, we select a without-replacement sample \(\mathbf {S}_2\) from \(\mathscr {S}_{2} \left( \mathbf {S}_{1}\right) \) according to a second sampling design. If \({\mathbf {x}}_2 \in \mathscr {S}_{2}\left( \mathbf {S}_1\right) \), let \(\pi ^2\left( {\mathbf {x}}_2\right) \) be the inclusion probability of element \({\mathbf {x}}_2\) under this second design, and so on.

In general, we draw a without-replacement sample \(\mathbf {S}_t\) from \(\mathscr {S}_{t}\left( \mathbf {S}_{t-1}\right) \) according to a sampling design, and calculate the inclusion probabilities \(\pi ^t\left( {\mathbf {x}}_t\right) \). This process continues until a sample from \(\mathscr {S}_{d}\left( \mathbf {S}_{d-1}\right) \) is generated.

Abusing notation slightly, if \({\mathbf {x}}\) is a vector of dimension greater than t, then \(\pi ^t\left( {\mathbf {x}}\right) \) will be interpreted as applying \(\pi ^t\) to the first t coordinates. The only way for \(\left( x_1, \ldots , x_d\right) \) to be selected as a member of \(\mathbf {S}_d\) is if \(x_1\) is contained in \(\mathscr {S}_1, \left( x_1, x_2\right) \) is contained in \(\mathbf {S}_2, \left( x_1, x_2, x_3\right) \) is contained in \(\mathbf {S}_3\), etc. The final sample \(\mathbf {S}_d\) is generated by a sampling design, for which the inclusion probability of \({\mathbf {x}}_d \in \mathbf {S}_d\) is \(\prod _{t=1}^d \pi ^t\left( {\mathbf {x}}_d\right) \). The Horvitz–Thompson estimator [see (7)] of \(\ell \) is therefore

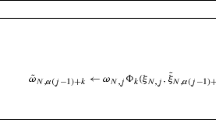

Computation of this estimator is described in Algorithm 1. The inclusion probabilities \(\pi ^t\) depend on the sampling designs at the intermediate steps and the chosen samples. So the estimator is a function of the final set \(\mathbf {S}_d\) and implicitly a function of \(\mathbf {S}_1, \ldots , \mathbf {S}_{d-1}\). “Appendix 1” shows that this estimator is unbiased. In practice, Algorithm 1 is implemented by maintaining a weight for each particle, and updating the particle weights by multiplying by \(\frac{f\left( x_t \;\vert \;{\mathbf {x}}_{t-1}\right) }{\pi ^t\left( {\mathbf {x}}_t\right) }\) every time sampling is performed. That is,

Note the similarities between (11) and (4). The only difference is that the inclusion probabilities replace the importance density in the formula.

Example 1

To illustrate this methodology, assume that \(d = 3\), that \({\mathbf {X}}_3\) is a random vector in \(\left\{ 0, 1, 2\right\} ^3\) with density f and that all our sampling designs select exactly two units. One possible realization of our proposed algorithm is shown in Fig. 1. There are three possible values of \(X_1\), and there are three possible samples of size 2. We select a sample \(\mathbf {S}_1\) according to some sampling design. Assume that units 0 and 1 are chosen. So the initial sample \(\mathbf {S}_1\) from \(\mathscr {S}_1\) will be \(\mathbf {S}_1 = \left\{ 0, 1\right\} \). We compute the inclusion probabilities \(\pi ^1\left( 0\right) \) and \(\pi ^1\left( 1\right) \) of each of these units being contained in the sample \(\mathbf {S}_1\).

Conditional on these values of \(X_1\) there are six possible values of \({\mathbf {X}}_2\), which are

The next step is to select a sample \(\mathbf {S}_2\) of size 2 from these six units, according to some sampling design. Assume that the units \(\left( 0,1\right) \) and \(\left( 1, 1\right) \) are chosen. We compute the inclusion probabilities \(\pi ^2\left( 0, 1\right) \) and \(\pi ^2\left( 1, 1\right) \) of each of these units being contained in the sample \(\mathbf {S}_2\).

The final step is to sample \({\mathbf {X}}_3\) conditional on \({\mathbf {X}}_2\) being one of the values in \(\mathbf {S}_2\). In this case, \(\mathscr {S}_3\left( \mathbf {S}_2\right) \) is

Assume that the sample of size 2 chosen is

and compute the inclusion probabilities \(\pi ^3\left( 0, 1, 1\right) \) and \(\pi ^3\left( 1, 1, 1\right) \).

The overall inclusion probabilities of the two units in \(\mathbf {S}_3\) are

and

In this case the Horzitz–Thompson estimator of \(\ell \) is therefore

\(\square \)

We refer to the elements of the sets \(\mathbf {S}_1, \ldots , \mathbf {S}_d\) as particles. A particle refers to an object that is chosen in a sampling step. We refer to elements of the sets \(\mathscr {S}_1, \ldots , \mathscr {S}_d\left( \mathbf {S}_{d-1}\right) \) as units to distinguish them from the particles. The term “unit” is traditional in survey sampling to refer to an element of a population, from which a sample is drawn.

Illustration of the without-replacement sampling methodology, in the case that \(d = 3\) and \({\mathbf {X}}_3\) is a random vector in \(\left\{ 0, 1,2 \right\} ^3\). The marked subsets of \(X_1, {\mathbf {X}}_2\) and \({\mathbf {X}}_3\) are \({\mathbf {S}}_1,\,{\mathbf {S}}_2\) and \({\mathbf {S}}_3\)

If h is a nonnegative function and

we find that the estimator has zero variance. This formula is similar to the zero-variance importance sampling density given in (2). An alternative method of obtaining a zero-variance estimator is to choose the d sampling designs, such that at every sampling step, with probability 1 all the possible units are sampled. In this case, the estimator corresponds to exhaustive enumeration of all the possible values of \({\mathbf {X}}_d\).

We can generalize to the case where \(c f\left( {\mathbf {x}}_d\right) \) is known but the normalizing constant c is unknown, and the aim is to estimate c. The final estimator returned by Algorithm 1 should be changed to

If the aim is to estimate \(\mathbb {E}_{f}\left[ h_d\left( {\mathbf {X}}_d\right) \right] \) but only \(c f\left( {\mathbf {x}}_d\right) \) is known for some unknown constant c, then as in standard sequential Monte Carlo, we use the estimator

This estimator is no longer unbiased.

4.2 Choice of sampling design

So far we have not discussed the choice of the sampling design. Our preferred choice is to simulate from the Pareto design, due to the ease of simulation. The inclusion probabilities are difficult to calculate, but we use the connections to the Sampford design, for which the inclusion probabilities are easy to calculate, to avoid this problem.

The pdfs of the Sampford and Pareto designs [Eqs. (8) and (9)] differ only in the last term of the product. Bondesson et al. (2006) shows that if

then the constants \(c\left( i\right) \) in (8) are approximately equal to \(1 - p\left( i\right) \), which is the corresponding term in (9). This implies that the Pareto and Sampford designs are almost identical in this case. The condition that D be large is generally equivalent to requiring that \(n\) and \(N- n\) are not small. More importantly, if (13) holds then the inclusion probabilities of the Pareto design are approximately \(\left\{ p\left( i\right) \right\} _{i=1}^N\).

We normalize the size variables to sum to \(n\), simulate directly from the Pareto design and assume that the inclusion probabilities are the normalized size variables. This choice has very significant computational advantages. It allows for fast sampling and fast computation of the inclusion probabilities.

In theory, this approximation to the inclusion probabilities will introduce bias into our algorithms, but empirically this bias is found to be negligible. We emphasize that it is the approximation of the inclusion probabilities that is important. The fact that the designs themselves are almost identical is only a means of obtaining this approximation for the inclusion probabilities.

In general, the condition

required by the Sampford design will not hold, and this cannot always be fixed by rescaling the \(\left\{ p\left( i\right) \right\} \). In these cases, we take the approach outlined in Sect. 3.2.5. We deterministically select the unit corresponding to the largest size variable \(p\left( i\right) \). If the \(\left\{ p\left( i\right) \right\} \) for the remaining units (suitably rescaled to sum to \(n-1\)) lie between 0 and 1 then the remaining \(n-1\) units are selected according to the Pareto design. Otherwise, units are chosen deterministically until these conditions are met, and the design can be applied. The units chosen deterministically will have inclusion probability 1.

Example 2

We let \(N= 1000\) and simulated the size variables \(\left\{ p\left( i\right) \right\} _{i=1}^{N}\) uniformly on [0, 1]. For a fixed value of \(n\), these size variables were rescaled to sum to \(n\) and used as the size variables for Pareto and Sampford designs. The inclusion probabilities \(\left\{ \pi ^{\mathrm {Pareto}}_{n}\left( i\right) \right\} _{i=1}^{N}\) of the Pareto design were computed. Recalling that the inclusion probabilities of the Sampford design are \(\left\{ p\left( i\right) \right\} _{i=1}^N\), we calculated

This was repeated for different values of \(n\), and the results are shown in Fig. 2. It is clear that the inclusion probabilities for the Pareto design and the Sampford design are extremely close. Calculating the Pareto inclusion probabilities out to \(n= 200\) required 1000 base-10 digits of accuracy. As a result, these calculations were extremely slow. \(\square \)

Maximum relative error [as measured by (15)] when approximating the Pareto inclusion probabilities by \(\left\{ p\left( i\right) \right\} _{i=1}^N\). The x axis is the sample size \(n\).

It remains to specify the size variables \(\left\{ p\left( i\right) \right\} \) for the design. If we wish to use an importance sampling density g to specify the size variables, then for sampling at step t we propose (with a slight abuse of notation) to use size variables

The size variables can also be written recursively as

Equation (17) is similar to (4).

These size variables give a straightforward method for converting an importance sampling algorithm into a sequential Monte Carlo without replacement algorithm, shown in Algorithm 2. For simplicity, Algorithm 2 omits the details relating to the deterministic inclusion of some units if (14) fails to hold. If the sample size \(n\) is greater than the number \(N\) of units, then the entire population is sampled and every inclusion probability is 1.

4.3 Merging of equivalent units

When applying without-replacement sampling algorithms, there are often multiple values which will have identical contributions to the final estimator. Let \(h^*\left( {\mathbf {x}}_t\right) = \mathbb {E}\left[ h\left( {\mathbf {X}}_d\right) \;\vert \;{\mathbf {X}}_t = {\mathbf {x}}_t\right] \). That is, when the sample is taken on Line 3 of Algorithm 1, there may be values \({\mathbf {x}}_t\) and \({\mathbf {x}}_t'\) in \(\mathscr {S}_t\left( \mathbf {S}_{t-1}\right) \), for which \(h^*_t\left( {\mathbf {x}}_t'\right) = h^*_t\left( {\mathbf {x}}_t\right) \). In such a case, the units can be merged, reducing the set of units to which the sampling design is applied. Before continuing, we give a short example illustrating how this idea works.

Illustration of merging of units in Example 3. Here \(d = 3\) and \({\mathbf {X}}_3\) is a random vector in \(\left\{ 0, 1,2 \right\} ^3\). The merged unit is represented by (0, 1), but could also be represented by (1, 1). The marked subsets of \(X_1, {\mathbf {X}}_2\) and \({\mathbf {X}}_3\) are \({\mathbf {S}}_1,\,{\mathbf {S}}_2\) and \({\mathbf {S}}_3\)

Example 3

Consider again the example shown in Fig. 1 of a random vector taking values in \(\left\{ 0, 1, 2\right\} ^3\). For simplicity, we use the conditional Poisson sampling design. Let

and let h be equal to 2 for all other values of \({\mathbf {X}}_3\). Assume that f is the uniform density on \(\left\{ 0, 1, 2\right\} ^3\), so that the value we aim to estimate is 2.015. Let \(g\left( x_1\right) = \frac{1}{3},\,g\left( {\mathbf {x}}_2\right) = \frac{1}{9}\) and \(g\left( {\mathbf {x}}_3\right) = \frac{1}{27}\). This implies that the inclusion probabilities at iteration \(t = 1\) are \(\frac{2}{3}\), and the inclusion probabilities of all the units in \(\mathscr {S}_2\left( \mathbf {S}_2\right) \) are \(\frac{1}{3}\).

At iteration \(t = 2\), the sampling design is applied to \(\mathscr {S}_2\left( \mathbf {S}_2\right) \), which includes \(\left( 0, 1\right) \) and \(\left( 1, 1\right) \). In this example, we have

Both units have the same expected contribution to the final estimator, and if this was known, we could replace the pair of units by a single unit \(\left( 0, 1\right) + \left( 1, 1\right) \), where the merged unit is represented by \(\left( 0, 1\right) \) or \(\left( 1, 1\right) \). After the merging, we have the situation shown in Fig. 3, where we have chosen to represent the merged unit as (0, 1). We could choose to represent the merged unit by (1, 1), in which case the units underneath the merged unit would be (1, 1, 0), (1, 1, 1) and (1, 1, 2). The value of the size variable for the merged unit is

We must also double the contribution of the merged unit to the final estimator, as it represents two units.

If units (0, 1, 0) and (0, 1, 1) are chosen in the third step, the value of the estimator is

The bolded values are \(2 h\left( 0, 1, 0\right) f\left( 0, 1, 0\right) \) and

\(2 h\left( 0, 1, 1\right) f\left( 0, 1, 1\right) \), where the factor of 2 accounts for the merging.

Assume that units 0 and 1 are initially selected. If no merging is performed, then the variance of estimator is 0.52. If the merging step is performed, and the merged unit is represented by (0, 1), then the variance of the estimator is 1.04. If the merged unit is represented by (1, 1), then the variance of the estimator is 0.0048. \(\square \)

As in Sect. 4.2, let g be the importance function, for simplicity assumed to be normalized. In order to formalize the idea of merging equivalent units, we add additional information to all the sample spaces and the samples chosen from them. The new units will be triples, where the first entry \({\mathbf {x}}_t\) represents the value of the unit, the second entry w can be interpreted as the importance weight, and the third entry p can be interpreted as the size variable.

With slight abuse of notation, we redefine the sets \(\mathbf {S}_0, \ldots , \mathbf {S}_d\) to account for this extra structure. Let

The initial sample \(\mathbf {S}_0\) is chosen from \(\mathscr {T}_1\), with probability proportional to the third component. Assume that sample \(\mathbf {S}_{t-1}\) has been chosen, and let

Note that (18) incorporates the recursive equations in (11) and (17). Using these definitions, we can sample \(\mathbf {S}_2\) from \(\mathscr {T}_2\left( \mathbf {S}_1\right) ,\,\mathbf {S}_3\) from \(\mathscr {T}_3\left( \mathbf {S}_2\right) \), etc. We can now state Algorithm 3. If the merging step on Line 4 is omitted, then this algorithm is in fact a restatement of Algorithm 1 using different notation. The merging rule on Line 4 is given in Proposition 1.

Proposition 1

If units \(\left( {\mathbf {x}}_t, w, p\right) \) and \(\left( {\mathbf {x}}_t', w', p'\right) \) in

\(\mathscr {T}_t\left( \mathbf {S}_{t-1}\right) \) satisfy \(h^*\left( {\mathbf {x}}_t\right) = h^*\left( {\mathbf {x}}_t'\right) \), they can be removed and replaced by the unit

The final estimator is still unbiased.

Proof

See “Appendix 2.”

The value \(p + p'\) in the third component of the merged unit can be replaced by any positive value, without biasing the resulting estimator. We gave an example of this type of merging in Example 3. Example 3 is unusual, as it merges units for which the function h takes very different values. A more common way for \(h^*\left( {\mathbf {x}}_t\right) = h^*\left( {\mathbf {x}}_t'\right) \) to occur is if

Example 4

We now continue Example 3, using the new definitions of \(\mathscr {T}_1\) and \(\mathscr {T}_t\left( \mathbf {S}_{t-1}\right) \). As shown in Fig. 3, the six units in \(\mathscr {T}_2\left( \mathbf {S}_1\right) \) become five after the merging step. Of these, two units are chosen to be in \(\mathbf {S}_2\); these units are

and

The other possible value for the merged unit is \(\left( \left( 1, 1\right) , \frac{1}{3}, \frac{1}{3}\right) \). \(\square \)

Algorithm 3 does not specify a sampling design. We suggest the use of a Pareto design, with the inclusion probabilities being approximated by those of a Sampford design, as discussed in Sect. 4.2. However, these types of merging step can applied with any sampling design, including the systematic sampling suggested in Fearnhead and Clifford (2003).

4.4 Links with the work of Fearnhead and Clifford (2003)

Carpenter et al. (1999) and Fearnhead and Clifford (2003) propose a resampling method which they name “stratified sampling.” This method is systematic sampling (Sect. 3.2.4) with probability proportional to size, with large units included deterministically. This method has a long history in sampling theory (Madow and Madow 1944; Madow 1949; Hartley and Rao 1962; Iachan 1982). That large units must be included deterministically in a PPS design is well known in the sampling theory literature (Sampford 1967; Rosén 1997b; Aires 2000).

From a sampling theory point of view, the optimality result of Fearnhead and Clifford (2003) can be paraphrased as “sampling with probability proportional to size is optimal.” As the optimality criteria relates only to the inclusion probabilities, the Sampford design satisfies this condition just as well as systematic sampling. The conditional Poisson and Pareto designs will approximately satisfy this condition, especially when \(n\) is large.

In the approach of Fearnhead and Clifford (2003), units with large weights are included deterministically, and their weights are unchanged by the sampling step. All other units are selected stochastically, and are assigned the same weight if they are chosen.

This can be interpreted as an application of the Horvitz–Thompson estimator. With these observations, the approach of Fearnhead and Clifford (2003) can be interpreted as an application of Algorithm 1 using systematic sampling.

4.5 Advantages and disadvantages

Like many methods that involve interacting particles (e.g., multinomial resampling algorithms), the sample size used to generate the estimator is fixed at the start and cannot be increased without recomputing the entire estimator. By contrast, additional samples can be added to an importance sampling estimator and some sequential Monte Carlo estimators (Brockwell et al. 2010; Paige et al. 2014), if a lower variance estimator is desired.

Without-replacement sampling allows the use of particle merging steps, which can dramatically improve the variance of the resulting estimators, while also lowering the simulation effort required. Such merging steps are not possible with more classical types of resampling.

If particle merging is used then the resulting estimator is specialized to the particular function h, as the units that can be merged depend on the function h. By contrast, the weighted sample generated by an importance sampling estimator can, in theory, be used to estimate the expectation of a different function h. In practice, even importance sampling estimators can be optimized by discarding particles as soon as they are known to make a contribution of zero to the final estimator. In such cases, even the importance sampling algorithm is specialized to the function h.

The choice of the sample size is far more complicated than for traditional importance sampling algorithms. A large enough sample size will return a zero-variance estimator, but this sample size is generally impractical. However, it is unclear whether the variance of the estimator must decrease as \(n\) decreases. This is particularly true when merging steps are added to the algorithm. The following simple example illustrates this.

Example 5

Take the example shown in Fig. 4, where \({\mathbf {X}}_2\) takes on eight values and the values of \(h\left( {\mathbf {x}}_2\right) \) are as given. Assume that \(f\left( {\mathbf {x}}_2\right) = \frac{1}{8}\) for each of these values. Let the size variables be \(p\left( x_1\right) = p\left( {\mathbf {x}}_2\right) = 1\). if \(n= 1\) the estimator has zero variance. However, with \(n= 2\) the estimator has nonzero variance; the value to be estimated is \(\frac{18}{8}\), but if units \(\left( 0, 0\right) \) and \(\left( 0, 1\right) \) are selected, the estimator is \(2.8125 \ne \frac{18}{8}\). So increasing the sample size has increased the variance from zero to some nonzero value.

Despite the previous remarks about choice of sample size, in practice the variance of the estimator decreases as \(n\) increases. As the variance of the estimator will reach 0 for finite \(n\), it must be possible to observe a better than \(n^{-1}\) decay in the variance of the estimator. This is in some sense a trivial statement, as there exists a sample size k, such that the estimator has nonzero variance with this sample size, but for sample size \(k+1\) the estimator has zero variance. However, we observe more rapid decreases in practical applications of these types of estimators. For an example, see the simulation results in Sect. 5.2.

5 Examples

In our examples, we compare estimators using their work-normalized variance, defined as

where T is the simulation time to compute the estimator. In practice, the terms in the definitions of WNRV are replaced by their estimated values.

5.1 Change-point detection

We consider the discrete-time change-point model used in the example in Section 5 of Fearnhead and Clifford (2003). In this model, there is some underlying real-valued signal \(\left\{ U_t \right\} _{t=1}^\infty \). At each time-step, this signal may maintain its value from the previous time, or change to a new value. The observations \(\left\{ Y_t \right\} _{t=1}^\infty \) combine \(\left\{ U_t \right\} _{t=1}^\infty \) with some measurement error. This measurement error will sometimes generate outliers, in which case \(Y_t\) is conditionally independent of \(U_t\). This model is a type of hidden Markov model.

Let \(X_t = \left( C_t, O_t\right) \) be the underlying Markov chain, where both \(C_t\) and \(O_t\) take values in \(\left\{ 1, 2\right\} \), and let \(\left\{ V_t \right\} _{t=1}^\infty \) and \(\left\{ W_t \right\} _{t=1}^\infty \) be independent sequences of standard normal random variables. Let

If \(C_t = 2\), the signal changes to a new value, distributed according to \(N\left( \mu , \sigma ^2\right) \). Otherwise, the signal maintains the previous value. Let

If \(O_t = 2\), the observed value is an outlier and is distributed according to \(N\left( \nu , \tau _2^2\right) \). Otherwise, the measurement reflects the underlying signal, with error distributed according to \(N\left( 0, \tau _1^2\right) \).

It remains to specify the distribution of the Markov chain \(\left\{ X_t \right\} _{t=1}^\infty \). In the example given in Fearnhead and Clifford (2003), the \(\left\{ C_t \right\} _{t=1}^\infty \) are assumed iid, and \(\left\{ O_t\right\} _{t=1}^\infty \) is a Markov chain, with

In this example, there is some integer \(d > 1\), and the aim is to estimate the marginal distributions of \(\left\{ C_t \right\} _{t=1}^d\) and \(\left\{ O_t \right\} _{t=1}^d\), conditional on \({\mathbf {Y}}_d = \left\{ Y_t \right\} _{t=1}^d\).

For the purposes of this example, we apply a version of Algorithm 3 that involves some minor changes. See “Appendix 1” for further details. The final algorithm is given as Algorithm 6 in “Appendix 3.” This algorithm contains the merging steps outlined in Fearnhead and Clifford (2003), which operate on principles similar to those described in Sect. 4.3.

For this example, we used the well-log data from Ó Ruanaidh and Fitzgerald (1996) and Fearnhead and Clifford (2003), and aimed to estimate the posterior probabilities

which are the posterior probabilities that there is a change or an outlier at time t, respectively. For this dataset \(d = 4050\). The data are shown in Fig. 5.

The well-log data from Ó Ruanaidh and Fitzgerald (1996)

We applied two methods to this problem. The first was the method of Fearnhead and Clifford (2003), and the second was our without-replacement sampling method, using a Pareto design as an approximation to the Sampford design. Both of these methods can be viewed as specializations of Algorithm 6, where the method of Fearnhead and Clifford (2003) uses systematic sampling. Both methods were applied 1000 times with \(n= 100\). Each run of either method produces 4050 outlier probability estimates and 4050 change-point probability estimates, so we provide a summary of the results. Note that the sample size required to produce a zero-variance estimator is on the order of \(2^{4050}\) in this case, which is clearly infeasible.

For the 4050 outlier probabilities, our method had a lower variance for 1656 estimates, and a higher variance for 2393 estimates. For the 4050 change-point estimates, our method had a lower variance for 1915 estimates, and a higher variance for 2121. This suggests that systematic sampling performs better than our approximation. Figure 6 shows the variances of every outlier probability estimate, under both methods. This plot suggests that if systematic sampling performs better, the improvement is small. The results for the change-points are similar.

Recall from Sect. 4.5 that the optimality condition of Fearnhead and Clifford (2003) can be paraphrased as “sampling with probability proportional to size is optimal.” So, to the extent that the approximation for the inclusion probabilities of the Pareto design (see Sect. 4.2) holds, we expect that both methods should have similar performance. This is reflected in the simulation results. There is some discrepancy for estimates of the outlier probabilities, where systematic sampling performs slightly better. This may be due to the somewhat small sample size.

Fearnhead and Clifford (2003) also applied the mixture Kalman filter (Chen and Liu 2000) and a multinomial resampling algorithm. They showed that the without-out replacement sampling approach significantly outperformed the alternatives. As our approach has equivalent performance to the method of Fearnhead and Clifford (2003), we do not consider these alternatives further.

5.2 Network reliability

5.2.1 Without particle merging

We now give an application of without-replacement sampling to the \(\mathcal {K}\)-terminal network reliability estimation problem. Assume we have some known graph \(\mathcal {G}\) with m edges, which are enumerated as \(e_1, \ldots , e_m\). We define a random subgraph \(\mathbf {X}\) of \(\mathcal {G}\), with the same vertex set. Let \(X_1, \ldots , X_m\) be independent binary random variables representing the states of the edges of \(\mathcal {G}\). With probability \(\theta _i\) variable \(X_i = 1\), in which case edge \(e_i\) of \(\mathcal {G}\) is included in \(\mathbf {X}\). For a fixed set \(\mathcal {K} = \left\{ v_1, \ldots , v_k\right\} \) of vertices of \(\mathcal {G}\), the \(\mathcal {K}\)-terminal network unreliability is the probability \(\ell \) that these vertices are not connected; that is, they do not all lie in the same connected component of \(\mathbf {X}\). As computation of this quantity is in general \(\#P\) complete, it often cannot be computed and must be estimated. If the probabilities \(\left\{ \theta _i \right\} \) are close to 1 then the unreliability is close to zero, and the problem is one of estimating a rare-event probability.

One of the best methods currently available for estimating the unreliability \(\ell \) is approximate zero-variance importance sampling (L’Ecuyer et al. 2011). This method is based on mincuts. In the \(\mathcal {K}\)-terminal reliability context a cut of a graph \(g\) is a set \({\mathbf {c}}\) of edges of \(g\) such that the vertices in \(\mathcal {K}\) do not all lie in the same component of \(g\setminus {\mathbf {c}}\). A mincut is a cut \({\mathbf {c}}\) such that no proper subset of \({\mathbf {c}}\) is also a cut.

In L’Ecuyer et al. (2011) the states of the edges are simulated sequentially using state-dependent importance sampling. Assume that the values \(x_1, \ldots , x_t\) of \(X_1, \ldots , X_t\) are already known. Let \(\mathcal {G}\left( x_1, \ldots , x_t\right) \) be the subgraph of \(\mathcal {G}\) obtained by removing all edges \(e_i\) where \(i \le t\) and \(x_i = 0\). Let \(\mathcal {C}\left( x_1, \ldots , x_t\right) \) be the set of all mincuts of \(\mathcal {G}\left( x_1, \ldots , x_t\right) \) that do not contain edges \(e_1, \ldots , e_t\). Let \(E\left( \cdot \right) \) be the event that a set of edges is missing from \(\mathbf {X}\). Define

Under the importance sampling density, \(X_{t+1} = 1\) with probability

instead of \(\theta _{t+1}\) under the original distribution. We add a without-replacement resampling step to this importance sampling algorithm by implementing Algorithm 2. We refer to this algorithm as WOR. As this algorithm is a fairly straightforward specialization of Algorithm 2, we do not describe the details of the algorithm here.

5.2.2 With particle merging

In order to apply Algorithm 3, we only need to specify the particle merging step. We do this by marking some of the missing edges in each unit as present, once it has been determined that this change makes no difference to the connectivity properties of the graph.

An example of this situation is shown in Fig. 7. In this case edge \(\left\{ 3, 8 \right\} \) is known to be missing, but vertices 3 and 8 are already known to be connected. So whether edge \(\left\{ 3, 8\right\} \) is present or absent cannot change the connectivity properties of the final graph, regardless of the states of the remaining edges.

Assume that we have some unit \(\left( {\mathbf {x}}_t, w, p\right) \), and for some \(1< i < t,\,x_i = 0\). Let \(\left\{ v, v'\right\} = e_i\). Assume that v and \(v'\) are in the same connected component of \(\mathcal {G}\left( x_1, \ldots , x_t\right) \), so that these vertices are already connected by a path that does not include edge \(e_i\). Regardless of the states \(x_{t+1}, \ldots , x_m\) of the remaining edges, setting \(x_i = 1\) will never change whether the vertices in \(\mathcal {K}\) to lie in the same connected component. So if

it can be shown that \(h^*\left( {\mathbf {x}}_t\right) = h^*\left( {\mathbf {x}}_t'\right) \). This observation leads to the particle merging step in Algorithm 4.

It is interesting to note that this algorithm is in some sense similar to the turnip (Lomonosov 1994), which is a variation on permutation Monte Carlo (Elperin et al. 1991). In the case of the turnip, the states of some edges are ignored. In our case, the merging step also tends to ignore the states of certain edge.

5.2.3 Results

We performed a simulation study to compare four different methods, all based on the importance sampling scheme of L’Ecuyer et al. (2011). This importance sampling scheme by itself is method IS. Adding without-replacement sampling (Algorithm 2) is method WOR. Adding without-replacement sampling and particle merging (Algorithm 3) is method WOR-Merge. Adding the resampling method of Fearnhead and Clifford (2003) is method Fearnhead. We used sample sizes 10, 20, 100, 1000 and 10,000.

We also implemented a residual resampling method (Carpenter et al. 1999). However, this method was found to perform uniformly worse than vanilla importance sampling on all the network reliability examples tested. The resampling step has the affect of “negating” the importance sampling scheme. The results for this method are not shown in the figures for this section, as they cannot reasonably be shown on the same scale.

The first graph tested was the dodecahedron graph (Fig. 8), with \(\mathcal {K} = \left\{ 1, 20\right\} \) and \(\theta _i = 0.99\). Results are given in Fig. 9. In this case, the true value of \(\ell \) is known to be \(2.061891\times 10^{-6}\). All the without-replacement sampling methods have the property that the WNRV decreases as the sample size increases. Method WOR-Merge clearly outperforms the other methods. Application of a residual resampling algorithm to this problem resulted in an estimator with a work-normalized variance on the order of \(10^{-9}\), many orders of magnitude worse than the results for the other four methods.

The second graph tested was a modification of the \(9 \times 9\) grid graph (Fig. 10), where \(\mathcal {K}\) contains the highlighted vertices. The modified grid graph is a somewhat pathological case for this importance sampling density, as in the limit as \(p \rightarrow 1\) one of the 9 minimum cuts has a very low probability of being selected. Results in Fig. 11 show that the WOR-Merge estimator significantly outperforms the other estimators.

The third graph tested was three dodecahedron graphs arranged in parallel (Fig. 12), with \(\theta _i = 0.9999\). Simulation results are shown in Fig. 13. It is interesting to see that the performance of method WOR-Merge does not change significantly when increasing the sample size from 20 to 100, or from 1000 to 10,000.

The fourth graph tested was three dodecahedron graphs arranged in series (Fig. 14), with \(\theta _i = 0.9999\). Simulation results are given in Fig. 15.

6 Concluding remarks

This article has described the incorporation of ideas from sampling theory into sequential Monte Carlo methods. Taking a sampling theory approach provides a new perspective on the use of without-replacement sampling methods. It shows how the inclusion probabilities of the sampling designs take the place of the importance density in a standard importance resampling algorithm.

This article shows that the sampling method of Fearnhead and Clifford (2003) is systematic sampling, and that the optimality result of Fearnhead and Clifford (2003) relates to probability proportional to size sampling. The stochastic enumeration algorithm of Vaisman and Kroese (2015) is also a special case of the methods described in this paper. It uses simple random sampling without importance sampling, and introduces some merging ideas, which they term tree reductions.

Adding a resampling step to an importance sampling algorithm has the potential to increase the variance of the resulting estimator. If the importance sampling density is sufficiently different from the zero-variance density, adding a without-replacement resampling step can result in significant improvement. We illustrated this with reference to the \(\mathcal {K}\)-terminal network reliability problem, and a hidden Markov model.

In the case of the network reliability example, adding a without-replacement sampling step improved the variance of the importance sampling estimator proposed by L’Ecuyer et al. (2011) by an order of magnitude. The without-replacement algorithms have the property that the work-normalized variance decreases as the sample size increases; the importance sampling algorithm on which they are based do not have this property. In our experience, the importance sampling estimator was (previously) the best known estimation method for this problem.

We also applied a residual resampling method to the network reliability example, and found that its performance was an order of magnitude worse than the original importance sampling scheme. This is because in this case the resampling step tends to “negate” the importance sampling step. This highlights an important distinction between the without-replacement sampling methods we describe, and more traditional forms of resampling. In the methods we propose, the true density f does not enter into the resampling step. This works extremely well, where the importance density is well designed. The true density could be incorporated into the sampling step by changing the definition of the size variables.

In this article, we suggested the use of a Pareto sampling design, where the inclusion probabilities are approximated by those of a Sampford design. This results in a design which is easy to simulate from, and which has inclusion probabilities which are easy to calculate. In this sense, the proposed design is similar to systematic sampling. The performance of the Pareto approximation to the Sampford design is found to be similar to the systematic sampling design suggested by Fearnhead and Clifford (2003).

The approximation we suggest has the advantage that the joint inclusion probability of every pair of units is positive. This condition is known to be desirable in the sampling design literature, as it allows the estimation of the variance of the Horvitz–Thompson estimator. In future, this may allow the estimation of the variance of the without-replacement sampling estimator, without the need to construct independent estimates.

Caution is potentially needed when applying an approximation within an iterative procedure, as it is possible that approximation errors will accumulate. Our numerical results suggest that such an accumulation of errors is not significant. Ultimately, such concerns must be balanced against the advantages of the proposed methods. Further work in the field of sampling design may make the use of approximations unnecessary.

When using without-replacement sampling, the merging of equivalent units can significantly reduce the variance of the resulting estimators. However, this also has disadvantages. When equivalent units are merged, it becomes even less certain that the variance of the resulting estimator always decreases as \(n\) increases. Merging also specializes the resulting algorithm to the function h; in general, it is not possible to use the final sample generated by the algorithm to estimate the expectation of a different function.

Particle merging is a way of incorporating problem-specific information into a particle filtering algorithm, in a way that is similar to the design of an importance sampling density. Proposition 1 is not the only way this can occur. Another possibility is that \(h^*\left( {\mathbf {x}}_t\right) = m\left( {\mathbf {x}}_t\right) h^*\left( {\mathbf {x}}_t'\right) \), where \(m\left( {\mathbf {x}}_t\right) \) is some known function, but both \(h^*\left( {\mathbf {x}}_t\right) \) and \(h^*\left( {\mathbf {x}}_t'\right) \) are unknown.

Our examples also illustrated the extreme flexibility of without-replacement sampling algorithms; the importance density, sampling design and merging steps can all be changed. In both our examples, this allowed us to use problem-specific information in the resulting algorithm. The downside is that customizing these algorithms to this extent is nontrivial; it essentially requires the design of an entirely new algorithm for every application.

The sampling theory approach is a promising direction for future work on without-replacement sampling methods; there is a large literature which probably contains many more relevant ideas. As an example, recall that in order to apply these types of methods, it must be possible to enumerate all the possible values of the particles at the next step. In some cases, this set may so large that this is impractical. In the field of sampling theory, this is referred to as a case where the sampling frame (set of all possible units) is missing. These types of problems have been studied in the relevant literature, so solutions to this problem may already exist.

The sampling theory approach also provides some insight into the optimality result of Fearnhead and Clifford (2003). The optimality result is given for only a single sampling step. When multiple such resampling steps are performed, the variance of the resulting estimator will depend in a complicated way on the joint inclusion probabilities of the sampling designs which are applied. These joint inclusion probabilities do not enter into the optimality result. While the result of Fearnhead and Clifford (2003) is the strongest statement that can be made in the general case, there may be specific cases where sampling designs that do not satisfy the optimality condition result in a lower variance estimator.

References

Aires, N.: Comparisons between conditional Poisson sampling and Pareto \(\pi \)ps sampling designs. J. Stat. Plan. Inference 88(1), 133–147 (2000)

Bondesson, L., Traat, I., Lundqvist, A.: Pareto sampling versus Sampford and conditional Poisson sampling. Scand. J. Stat. 33(4), 699–720 (2006)

Brewer, K.R.W., Hanif, M.: Sampling with Unequal Probabilities, vol. 15. Springer, New York (1983)

Brockwell, A., Del Moral, P., Doucet, A.: Sequentially interacting Markov chain Monte Carlo methods. Ann. Stat. 38(6), 3387–3411 (2010)

Carpenter, J., Clifford, P., Fearnhead, P.: Improved particle filter for nonlinear problems. IEE Proc. Radar Sonar Navig. 146(1), 2–7 (1999)

Chen, R., Liu, J.S.: Mixture Kalman filters. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 62(3), 493–508 (2000)

Chen, Y., Diaconis, P., Holmes, S.P., Liu, J.S.: Sequential Monte Carlo methods for statistical analysis of tables. J. Am. Stat. Assoc. 100(469), 109–120 (2005)

Cochran, W.G.: Sampling Techniques, 3rd edn. Wiley, New York (1977)

Del Moral, P., Doucet, A., Jasra, A.: Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 68(3), 411–436 (2006)

Douc, R., Cappé, O., Moulines, E.: Comparison of resampling schemes for particle filtering. In: ISPA 2005. In: Proceedings of the 4th International Symposium on Image and Signal Processing and Analysis, pp 64–69 (2005)

Doucet, A., de Freitas, N., Gordon, N. (eds.): Sequential Monte Carlo Methods in Practice. Statistics for Engineering and Information Science. Springer, New York (2001)

Elperin, T.I., Gertsbakh, I., Lomonosov, M.: Estimation of network reliability using graph evolution models. IEEE Trans. Reliab. 40(5), 572–581 (1991)

Fearnhead, P.: Sequential Monte Carlo Methods in Filter Theory. Ph.D. thesis, University of Oxford (1998)

Fearnhead, P., Clifford, P.: On-line inference for hidden Markov models via particle filters. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 65(4), 887–899 (2003)

Gerber, M., Chopin, N.: Sequential quasi Monte Carlo. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 77(3), 509–579 (2015)

Gilks, W.R., Berzuini, C.: Following a moving target-Monte Carlo inference for dynamic Bayesian models. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 63(1), 127–146 (2001)

Gordon, N., Salmond, D., Smith, A.: Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proc. F Radar Signal Process. 140(2), 107–113 (1993)

Hammersley, J.M., Morton, K.W.: Poor man’s Monte Carlo. J. R. Stat. Soc. Ser. B (Methodol.) 16(1), 23–38 (1954)

Hartley, H.O., Rao, J.N.K.: Sampling with unequal probabilities and without replacement. Ann. Math. Stat. 33(2), 350–374 (1962)

Horvitz, D.G., Thompson, D.J.: A generalization of sampling without replacement from a finite universe. J. Am. Stat. Assoc. 47(260), 663–685 (1952)

Iachan, R.: Systematic sampling: a critical review. Int. Stat. Rev. 50(3), 293–303 (1982)

Kong, A., Liu, J.S., Wong, W.H.: Sequential imputations and Bayesian missing data problems. J. Am. Stat. Assoc. 89(425), 278–288 (1994)

Kou, S.C., McCullagh, P.: Approximating the \(\alpha \)-permanent. Biometrika 96(3), 635–644 (2009)

L’Ecuyer, P., Rubino, G., Saggadi, S., Tuffin, B.: Approximate zero-variance importance sampling for static network reliability estimation. IEEE Trans. Reliab. 60(3), 590–604 (2011)

Liu, J.S.: Monte Carlo Strategies in Scientific Computing. Springer, New York (2001)

Liu, J.S., Chen, R.: Blind deconvolution via sequential imputations. J. Am. Stat. Assoc. 90(430), 567–576 (1995)

Liu, J.S., Chen, R., Logvinenko, T.: A theoretical framework for sequential importance sampling with resampling. In: Doucet, A., de Freitas, N., Gordon, N. (eds.) Sequential Monte Carlo Methods in Practice. Statistics for Engineering and Information Science, pp. 225–246. Springer, New York (2001)

Lomonosov, M.: On Monte Carlo estimates in network reliability. Probab. Eng. Inf. Sci. 8, 245–264 (1994)

Madow, W.G.: On the theory of systematic sampling, II. Ann. Math. Stat. 20(3), 333–354 (1949)

Madow, W.G., Madow, L.H.: On the theory of systematic sampling, I. Ann. Math. Stat. 15(1), 1–24 (1944)

Marshall, A.: The use of multi-stage sampling schemes in Monte Carlo computations. In: Meyer, H.A. (ed.) Symposium on Monte Carlo Methods. Wiley, Hoboken (1956)

Ó Ruanaidh, J.J.K., Fitzgerald, W.J.: Numerical Bayesian Methods Applied to Signal Processing. Springer, New York (1996)

Paige, B., Wood, F., Doucet, A., Teh, Y.W.: Asynchronous anytime sequential Monte Carlo. In: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K. (eds.) Advances in Neural Information Processing Systems, vol. 27, Curran Associates, Inc., pp 3410–3418 (2014)

Rosén, B.: Asymptotic theory for order sampling. J. Stat. Plan. Inference 62(2), 135–158 (1997a)

Rosén, B.: On sampling with probability proportional to size. J. Stat. Plan. Inference 62(2), 159–191 (1997b)

Rosenbluth, M.N., Rosenbluth, A.W.: Monte Carlo calculation of the average extension of molecular chains. J. Chem. Phys. 23(2), 356–359 (1955)

Rubinstein, R.Y., Kroese, D.P.: Simulation and the Monte Carlo Method, 3rd edn. Wiley, New York (2017)

Sampford, M.R.: On sampling without replacement with unequal probabilities of selection. Biometrika 54(3–4), 499–513 (1967)

Tillé, Y.: Sampling Algorithms. Springer, New York (2006)

Vaisman, R., Kroese, D.P.: Stochastic enumeration method for counting trees. Methodol. Comput. Appl. Probab. 19(1), 31–73 (2017)

Wall, F.T., Erpenbeck, J.J.: New method for the statistical computation of polymer dimensions. J. Chem. Phys. 30(3), 634–637 (1959)

Acknowledgements

This work was supported by the Australian Research Council Centre of Excellence for Mathematical & Statistical Frontiers, under grant number CE140100049. The authors would like to thank the reviewers for their valuable comments, which improved the quality of this paper.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: Unbiasedness of sequential without-replacement Monte Carlo

Let \(h^*\left( {\mathbf {x}}_t\right) = \mathbb {E}\left[ h\left( {\mathbf {X}}_d\right) \;\vert \;{\mathbf {X}}_t = {\mathbf {x}}_t\right] \). Note that

Consider the expression

where \(1 \le t < d\). Let \(I\left( {\mathbf {x}}_t\right) \) be a binary variable, where \(I\left( {\mathbf {x}}_t\right) = 1\) indicates the inclusion of element \({\mathbf {x}}_{t}\) of \(\mathscr {S}_{t}\left( \mathbf {S}_{t-1}\right) \) in \(\mathbf {S}_t\). We can rewrite (20) as

Recall that \(\mathbb {E}\left[ I_t\left( {\mathbf {x}}_t\right) \;\vert \;\mathbf {S}_{t-1}\right] = \pi ^t\left( {\mathbf {x}}_t\right) \). So the expectation of (21) conditional on \(\mathbf {S}_1, \ldots , \mathbf {S}_{t-1}\) is

So

Applying Eq. (22) d times to

shows that \(\mathbb {E}\left[ \widehat{\ell }\right] = \ell \).

Appendix 2: Unbiasedness of sequential without-replacement Monte Carlo, with merging

The proof is similar to “Appendix 1.” In this case, all the sample spaces and samples are sets of triples. Consider any expression of the form

It is clear that if the proposed merging rule is applied to \(\mathscr {T}_t\left( \mathbf {S}_{t-1}\right) \), then the value of (23) is unchanged. Using the definition of \(\mathscr {T}_t\left( \mathbf {S}_{t-1}\right) \), Eq. (23) can be written as

The expectation of (24) conditional on \(\mathbf {S}_{t-2}\) is

So

Applying Eq. (26) \(d-1\) times to

shows that \(\widehat{\ell }\) is unbiased.

Appendix 3: Without-replacement sampling for the change-point example

We now give the details of the application of without-replacement sampling to the change-point example in Sect. 1. Recall that \({\mathbf {X}}_d = \left\{ X_t \right\} _{t=1}^d\) is a Markov chain and \({\mathbf {Y}}_d = \left\{ Y_t \right\} _{t=1}^d\) are the observations. Let f be the joint density of \({\mathbf {X}}_d\) and \({\mathbf {Y}}_d\). Note that

for some unknown constants \(\left\{ c_t\right\} _{t=1}^d\). Define the size variables recursively as

This updating rule is slightly different from that given in (17). Equations (30) and (27) require an initial distribution for \(X_1 = \left( C_1, O_1\right) \), which we take to be

Define

and let \(\mathbf {S}_1\) be a sample chosen from \(\mathscr {U}_1\), with probability proportional to the last component. Assume that sample \(\mathbf {S}_{t-1}\) has been chosen, and let

We account for the unknown normalizing constants in (27) by using an estimator of the form (12). This results in Algorithm 5.

Proposition 2

The set \(\mathbf {S}_d\) generated by Algorithm 5 has the property that

Proof

Define

Using (27),

Consider any expression of the form

Equation (31) can be written as

The expectation of (32) conditional on \(\mathbf {S}_{t-2}\) is

So

Applying Eq. (33) \(d-1\) times to

completes the proof. \(\square \)

We now describe the merging step outlined in Fearnhead and Clifford (2003), applied to the estimation of the posterior change-point probabilities

The method we describe here can be extended fairly trivially to also estimate \(\left\{ \mathbb {P}\left( O_t = 2 \;\vert \;{\mathbf {Y}}_d = {\mathbf {y}}_d\right) \right\} _{t=1}^d\).

In order to perform this merging, we must add more information to all the sample spaces and the samples chosen from then. The extended space will have \({\mathbf {x}}_t\) as the first entry, the particle weight w as the second entry, and a vector \(\mathbf m_t\) of t values as the third entry. The last entry will be an estimate of \(\left\{ \mathbb {P}\left( C_i = 2 \;\vert \;{\mathbf {y}}_t\right) \right\} _{i=1}^t\). Let

Note that the third component of every element of \(\mathscr {V}_1\) is either 0 or 1. Let \(\mathbf {S}_1\) be a sample drawn from \(\mathscr {V}_1\), with probability proportional to the second element. Assume that sample \(\mathbf {S}_{t-1}\) has been chosen, and let \(\mathscr {V}_t\left( \mathbf {S}_{t-1}\right) \) be

We can now define Algorithm 6, which uses the merging step outlined in Proposition 4.

Proposition 3

If the merging step is omitted, then the set \(\mathbf {S}_d\) generated by Algorithm 6 has the property that

Proof

Define

It can be shown that

Consider any expression of the form

Equation (34) can be written as

The expectation of (35) conditional on \(\mathbf {S}_{t-2}\) is

So

Applying Eq. (36) \(d-1\) times to

completes the proof. \(\square \)

Proposition 4

Assume we have two units \(\left( {\mathbf {x}}_t, w, \mathbf m_t\right) \) and \(\left( {\mathbf {x}}_t', w', \mathbf m_t'\right) \), both corresponding to paths of the Markov chain with \(C_t = 2\) and \(O_t = 2\). Then, we can remove these units, and replace them with the single unit

This rule also applies if both units correspond to \(C_t = 2\) and \(O_t = 1\).

Proof

Under the specified conditions on \({\mathbf {x}}_t\) and \({\mathbf {x}}_t'\),

This shows that

So replacement of this pair of units by the specified single unit does not bias the resulting estimator. \(\square \)

Rights and permissions

About this article

Cite this article

Shah, R., Kroese, D.P. Without-replacement sampling for particle methods on finite state spaces. Stat Comput 28, 633–652 (2018). https://doi.org/10.1007/s11222-017-9752-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-017-9752-8