Abstract

Principal component analysis (PCA) is a well-established tool for identifying the main sources of variation in multivariate data. However, as a linear method it cannot describe complex nonlinear structures. To overcome this limitation, a novel nonlinear generalization of PCA is developed in this paper. The method obtains the nonlinear principal components from ridges of the underlying density of the data. The density is estimated by using Gaussian kernels. Projection onto a ridge of such a density estimate is formulated as a solution to a differential equation, and a predictor-corrector method is developed for this purpose. The method is further extended to time series data by applying it to the phase space representation of the time series. This extension can be viewed as a nonlinear generalization of singular spectrum analysis (SSA). Ability of the nonlinear PCA to capture complex nonlinear shapes and its SSA-based extension to identify periodic patterns from time series are demonstrated on climate data.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In practical applications, one is often dealing with high-dimensional data that is confined to some low-dimensional subspace. Since its introduction by Pearson (1901), principal component analysis (PCA, e.g. Jolliffe 1986) has become a ubiquitous tool for identifying such subspaces. The method uses an orthogonal transformation to separate the directions of maximal variance. PCA and its variants have appeared in various contexts such as empirical orthogonal functions (EOF) in climate analysis (e.g. Weare et al. 1976), proper orthogonal decomposition (POD) in fluid mechanics (e.g. Berkooz et al. 1993) and the Karhunen-Loève transform (KLT) in the theory of stochastic processes (e.g. Loève 1955).

However, as a linear method, PCA is insufficient for describing complex nonlinear data. Several nonlinear extensions have been developed to overcome this limitation. The most prominent of these are the neural network-based nonlinear PCA (NLPCA, e.g. Hsieh 2004; Kramer 1991; Monahan 2001; Scholz et al. 2005, 2008) and kernel PCA (KPCA, e.g. Schölkopf et al. 1997). These methods, however, have shortcomings. NLPCA requires a large number of user-supplied parameters that need to be carefully tuned for the application at hand. Furthermore, the transformation of the input data into the high-dimensional kernel space in KPCA incurs a significant computational cost. A careful choice of kernel function is also needed when using KPCA.

Some variants of PCA, where the principal components are obtained by restricting the analysis to local neighbourhoods of the data points, have been developed (e.g. Kambhatla and Leen 1997; Einbeck et al. 2005, 2008). However, this approach leads to the problem of determining a global coordinate system. A well-known approach to this problem is local tangent space alignment (LTSA, Zhang and Zha 2004) that determines a coordinate system by solving an eigenvalue problem constructed from the local principal component coordinates. However, this method and other neighbourhood-based methods are in general sensitive to noise and the choice of the neighbourhoods.

The contribution of this paper is the development of kernel density principal component analysis (KDPCA). The proposed method builds on the idea of using ridges of the underlying density of the data to estimate nonlinear structures (Ozertem and Erdogmus 2011). This idea has later been refined by Pulkkinen et al. (2014) and Pulkkinen (2015). In the proposed approach, the ridges are interpreted as nonlinear counterparts of principal component hyperplanes. The density is estimated by using Gaussian kernels.

In the linear PCA, principal component scores (i.e. coordinates) of a given sample point are obtained as projections along principal component axes. Generalizing the concept of a principal component axis, the projections in KDPCA are done along curvilinear trajectories onto ridges of a Gaussian kernel density estimate. Based on the theory of ridges, it is shown that such projections can be done in a well-defined coordinate system. A projection trajectory is formulated as a solution to a differential equation, and a predictor-corrector algorithm is developed for tracing its solution curve.

A strategy for choosing the kernel bandwidth is critical for the practical applicability of KDPCA. Use of an automatic bandwidth selector for this purpose is demonstrated. It is also shown that the nonlinear principal components are well-defined for any sufficiently large bandwidth. In addition, they converge to the linear ones when the kernel bandwidth approaches infinity, thus making the linear PCA as a special case of KDPCA.

Finally, KDPCA is extended to time series analysis. In analogy with the well-known singular spectrum analysis (SSA, e.g. Golyandina et al. 2001; Vautard et al. 1992), it is applied to the phase space representation of the time series. This approach addresses the main shortcoming of the linear SSA. That is, being based on the linear PCA, it cannot separate different components of a time series when its trajectory in the phase space forms a closed loop. This is the case for quasiperiodic (i.e. approximately periodic) time series that form an important special class appearing in many applications. Examples include climate analysis (e.g. Hsieh and Hamilton 2003; Hsieh 2004) and medical applications such as electrocardiography and electroencephalography (e.g. Rangayyan 2002).

The remaining of this paper is organized as follows. In Sect. 2 we recall the linear PCA. Section 3 is devoted to development of KDPCA, and in Sect. 4 it is extended to time series data. Test results on a synthetic dataset, simulated climate dataset and an atmospheric time series are given in Sect. 5. The computational complexity of KDPCA is also analyzed and a comparison with related methods is given. Finally, Sect. 6 concludes this paper. The more involved proofs are deferred to Appendix.

2 The linear PCA

As the proposed method is a generalization of the linear PCA (e.g. Jolliffe 1986), we briefly recall the theoretical background of this method in this section.

The linear PCA attempts to capture the variability of a given data

by transforming the data into a lower-dimensional coordinate system via an orthogonal transformation. In this coordinate system, the axes point along directions of maximal variance.

For the formulation of PCA, we denote the mean-centered samples by

Assume that the mean-centered samples \(\tilde{\varvec{y}}_i\) are transformed into an \(m\)-dimensional space via the mapping

where \(\varvec{A}\) is a \(d\times m\) matrix with \(0<m<d\) and with orthonormal columns. Conversely, for the given coordinates \(\varvec{\theta }_i\) in the \(m\)-dimensional space, the corresponding reconstruction (i.e. projection onto the hyperplane spanned by the \(m\) first principal components) of \(\varvec{y}_i\) in the input space is obtained as

With the above definitions, it can be shown that finding the matrix \(\varvec{A}\) that minimizes the reconstruction error is equivalent to maximizing the variance in the transformed coordinate system (Jolliffe 1986). That is,

where \(O(d,m)\) denotes the set of \(d\times m\) matrices having orthonormal columns. Furthermore, any \(i\)-th principal component corresponds to the direction of the \(i\)-th largest variance, and these directions form an orthogonal set.

The solution to the above optimization problems is the matrix \(\varvec{V}_m= [\varvec{v}_1\quad \varvec{v}_2\quad \cdots \quad \varvec{v}_m]\), where the column vectors \(\varvec{v}_i\) are the (normalized) eigenvectors of the \(d\times d\) sample covariance matrix

corresponding to the \(m\) largest eigenvalues. Thus, projection of the mean-centered sample set \(\tilde{\varvec{Y}}\) onto the \(m\)-dimensional subspace corresponding to the directions of largest variance is given by

In statistical literature, the coordinates \(\varvec{\varTheta }\in \mathbb {R}^{n \times m}\) obtained in this way are called principal component scores (e.g. Jolliffe 1986).

3 Nonlinear kernel density PCA

In this section we develop the kernel density principal component analysis (KDPCA). The method is based on estimation of the underlying density of the data with Gaussian kernels. It is shown that the nonlinear principal component scores of given sample points can be obtained one by one by successively projecting them onto ridges of the underlying density. The projection curves are defined as a solution to a differential equation, and a predictor-corrector method is developed for this purpose.

3.1 Statistical model and ridge definition

In order to formally define the underlying density of the data points, we assume that they are sampled from an \(m\)-dimensional manifold \(M\) embedded in \(\mathbb {R}^d\). The sampling is done with normally distributed additive noise having variance \(\sigma ^2\). Denoting the points on \(M\) by the random variable \(\varvec{Z}\), the model is written as

The marginal density for the observed variable \(\varvec{X}\) is

where

and \(w\) denotes the density of \(\varvec{Z}\) supported on \(M\). The idea is to estimate the manifold \(M\) from \(m\)-dimensional ridges of the marginal density \(p\). A detailed theoretical analysis of this approach is given in Genovese et al. (2014).

We adapt the definition of a ridge set from Pulkkinen et al. (2014). An \(r\)-dimensional ridge point of a probability density is a local maximum in a subspace spanned by a subset of the eigenvectors of its Hessian matrix. These eigenvectors correspond to the \(d-r\) algebraically smallest eigenvalues. The one-dimensional ridge set (i.e. ridge curve) of the density of a point set sampled from the above model is illustrated in Fig. 1.

Definition 3.1

A point \(\varvec{x}\in \mathbb {R}^d\) belongs to the \(r\) -dimensional ridge set \(\mathcal {R}_p^r\), where \(0\le r<d\), of a twice differentiable probability density \(p:\mathbb {R}^d\rightarrow \mathbb R\) if

where \(\lambda _1(\varvec{x})\ge \lambda _2(\varvec{x})\ge \dots \ge \lambda _d(\varvec{x})\) and \(\{\varvec{v}_i(\varvec{x})\}_{i=1}^d\) denote the eigenvalues and the corresponding eigenvectors of \(\nabla ^2 p (\varvec{x})\), respectively.

The following result shows a connection between ridge sets and linear principal components when the underlying density of the data is normal. This result follows trivially from the following lemma (Ozertem and Erdogmus 2011) and the fact that the logarithm of a normal density with mean \(\varvec{\mu }\) and covariance \(\varvec{\Sigma }\) is a quadratic function whose gradient and Hessian are

respectively.

Lemma 3.1

If \(p:\mathbb {R}^d\rightarrow \mathbb {R}\) is twice differentiable, then \(\mathcal {R }^r_{\log {p}}=\mathcal {R}^r_p\) for all \(r=0,1,2,\dots ,d-1\).

Proposition 3.1

Let \(p:\mathbb {R}^d\rightarrow \mathbb {R}\) be a \(d\)-variate normal density with mean \(\varvec{\mu }\) and positive definite covariance matrix \(\varvec{\Sigma }\). Denote the eigenvalues of \(\varvec{\Sigma }\) by \(\lambda _1\ge \lambda _2 \ge \dots \ge \lambda _d\) and the corresponding eigenvectors by \(\{\varvec{v }_i\}_{i=1}^d\). Then for any \(0\le r<d\) such that \(\lambda _1>\lambda _2>\cdots >\lambda _{r+1}\) we have

For a linear model with \(\varvec{Z}=\varvec{\mu }+\varvec{W} \varvec{\varPhi }\),

and a matrix \(\varvec{W}\in \mathbb {R}^{d\times m}\) having orthogonal columns, the marginal density (5) is normal. Namely, the observed variable \(\varvec{X}\) is distributed according to

(Tipping and Bishop 1999). From the above we observe that the \(m\)-dimensional ridge set of the density of \(\varvec{X}-\varvec{\mu }\) coincides with the subspace spanned by the columns of \(\varvec{W}\).

Proposition 3.1 and the above observation suggest an approach for computing the principal component scores \(\varvec{ \theta }\), as defined in Sect. 2, of a given point having an underlying density \(p\). The idea is to project the point onto \(\mathcal {R}^m_{ \log {p}}\) in the subspace spanned by the eigenvectors \(\{\varvec{v}_i\}_{i= m+1}^d\) of \(\nabla ^2 \log {p}\) and then obtain projection coordinates along the first \(m\) eigenvectors. The remaining \(d-m\) components, that are interpreted as noise, are discarded. The point in \(\mathcal {R}^0_{\log {p}}\), that is the maximum of \(\log {p}\), is chosen as the origin of the coordinate system. This idea will be generalized to the nonlinear case in the next section.

However, when the manifold \(M\) is nonlinear, the estimate for \(M\) obtained from ridges of the marginal density (5) is biased. In Pulkkinen (2015), this is analyzed with a representative example In this example, the manifold \(M\) is the unit circle parametrized as \(\varvec{f}(\varPhi )=( \cos (\varPhi ),\sin (\varPhi ))\) and \(\varPhi \) is uniformly distributed on the interval \([0,2\pi ]\). With normally distributed noise, the bias is small, as illustrated in Fig. 2 with \(\sigma =0.2\). It occurs towards the center of curvature and is proportional to the ratio between \(\sigma \) and the curvature radius. In addition, for any compact and closed manifold \(M\), the ridge estimate converges to \(M\) when \(\sigma \) tends to zero (Genovese et al. 2014).

3.2 Obtaining principal component scores from ridge sets

Based on Proposition 3.1, we now develop the theoretical basis for computing the first \(m\) nonlinear principal component scores of a given point \(\varvec{y}\). The idea is to obtain the scores one by one by successively projecting the point onto lower-dimensional ridge sets of its underlying density (5). The projections are done along eigenvector curves that are defined by a differential equation. The arc lengths of the curves are interpreted as the principal component scores. As a special case of this approach, we obtain an orthogonal projection onto a linear PCA hyperplane.

For now, we assume that a given point \(\varvec{y}\in \mathbb {R}^d\) has already been projected onto an \(m\)-dimensional ridge set of its underlying density \(p\) with \(m\le d\). For \(r=1,2,\dots ,m\), we define a projection curve \(\varvec{\gamma }_r:\mathbb {R}\rightarrow \mathbb {R}^d\) onto the \(r-1\)-dimensional ridge set as a solution to the initial value problem

where \(\varvec{P}_r(\cdot )=\varvec{I}-\varvec{v}_r(\cdot )\varvec{v}_r(\cdot )^T\) and \(\{\varvec{v}_i(\cdot )\}_{i=1}^d\) denote the eigenvectors corresponding to the eigenvalues \(\lambda _1(\cdot )\ge \lambda _2(\cdot )\ge \dots \ge \lambda _d(\cdot )\) of \(\nabla ^2\log {p}\).

We begin with a special case that motivates the above definition and shows its connection to the linear PCA projection. Namely, for any \(d\)-dimensional normal density \(p\), a ridge point \(\varvec{x}_0\in \mathcal {R}^r_{\log {p}}\), where \(1\le r\le m\), can be projected onto the lower-dimensional ridge set \(\mathcal {R}^{r-1}_{\log {p}}\) by following the solution curve of (7) that is a straight line parallel to the eigenvector \(\varvec{v}_r\). This property follows trivially from the definitions of the normal density and the ridge set because \(\log {p}\) is in this case a quadratic function.

Proposition 3.2

Let \(p\) be a \(d\)-variate normal density with symmetric and positive definite covariance matrix \(\varvec{\Sigma }\) and let \(1\le r\le d\). If the eigenvalues of \(\varvec{\Sigma }\) satisfy the condition \(\lambda _1> \lambda _2>\dots >\lambda _{r+1}\), then for any solution curve \(\varvec{\gamma }_r\) of the initial value problem (7) we have

for all \(t\ge 0\). Furthermore, if the sign of \(\varvec{\gamma }'_r\) is chosen such that

then \(\log {p}\) has a unique maximum point \(\varvec{x}^*\in \mathcal {R}_{ \log {p}}^{r-1}\) along the curve \(\varvec{\gamma }_r\).

However, when the density \(p\) defined by Eq. (5) is not normal, we need the following assumption. It is needed to guarantee that the ridge sets of \(p\) induce a well-defined coordinate system. This assumption is reasonable when the data follows some unimodal distribution with clearly distinguishable major and minor axes as in Figs. 1 and 3. A higher-dimensional example will be given in Sect. 5.

Assumption 3.1

Define the set

Let \(\lambda _1(\cdot )\ge \lambda _2(\cdot )\ge \dots \ge \lambda _d(\cdot )\) denote the eigenvalues of \(\nabla ^2\log {p}\). Assume that for all \(\varvec{x}\in U_{\varvec{y}}\) we have

and that \(U_{\varvec{y}}\) is compact and connected.

The density \(p\) defined by Eq. (5) is a \(C^{\infty }\)-function. Thus, compactness and connectedness of the set \(U_{ \varvec{y}}\) together with condition (9) guarantees unimodality of \(\log {p}\) in the set \(U_{\varvec{y}}\). Damon (1998) and Miller (1998) give a rigorous treatment of ridge sets of \(C^{\infty }\)-functions in a differential geometric framework. When Assumption 3.1 is satisfied, their results guarantee that the \(r\)-dimensional ridge set of \(\log {p}\) induces a connected manifold in \(U_{\varvec{y}}\) for any \(1\le r \le m\). In addition, condition (9) implies differentiability of the Hessian eigenvectors (e.g. Magnus 1985), which is essential for the definition of the initial value problem (7).

Remark 3.1

When \(p\) is multimodal, a separate coordinate system can be obtained for each disjoint component of a superlevel set \(\mathcal {L}_c=\{\varvec{x}\in \mathbb {R}^d\mid \log {p}(\varvec{x})\ge c\}\) in which condition (9) is satisfied.

When the density \(p\) is not normal, obtaining an expression for the tangent vector \(\varvec{\gamma }'_r(t)\) is nontrivial. However, by utilizing the formula for the derivatives of eigenvectors (e.g. Magnus 1985), Eq. (7) for a general density \(p\) can after some calculation be rewritten as

where

and

and the operator “\(^+\)” denotes the Moore-Penrose pseudoinverse (e.g. Golub and Loan 1996).

For a general density \(p\), projection onto the ridge set \(\mathcal {R}^{r-1}_{ \log {p}}\) by maximizing \(\log {p}\) along the curve \(\varvec{\gamma }_r\) requires additional justification. To this end, we first note that under Assumption 3.1 choosing the sign of \(\varvec{\gamma }'_r (t)\) according to (8) is sufficient to guarantee that \(\varvec{\gamma }_r\) with \(r=m,m-1,\dots ,1\) yield projection curves in a well-defined coordinate system. This is illustrated in Fig. 3. Second, we show that when \(\varvec{\gamma }_r\) approaches a ridge point \(\varvec{x}^*\in \mathcal {R}^{r-1}_{\log {p}}\), the tangent vector \(\varvec{\gamma }'_r\) becomes parallel to the eigenvector \(\varvec{v}_r\).

Proposition 3.3

Let \(1\le r\le d\) and let \(\varvec{\gamma }'_r\) denote the normalized tangent vector of a solution curve of (7). If Assumption 3.1 and condition (8) are satisfied and

for some \(t^*>0\), then

Proof

Define the set

The range of the matrix in the second term of \(\varvec{F}_r(\varvec{x })\) defined by Eq. (12), that is

is clearly spanned by the vector \(\varvec{v}_r(\varvec{x})\) for all \(\varvec{x}\in U\). Furthermore, \(\varvec{v}_r(\varvec{x})\) is uniquely determined by condition (16). We also note that the range of the first term of the matrix \(\varvec{A}_r(\varvec{x})\) defined by Eq. (11), that is

is the set \(\{\varvec{w}\in \mathbb {R}^d\mid \varvec{w}^T\varvec{v }_r(\varvec{x})=0\}\) for all \(\varvec{x}\in U\). This follows from the definition of the matrix \(\varvec{P}_r(\varvec{x})\), the eigendecomposition of \(\nabla ^2\log {p}(\varvec{x})\) and condition (15) that guarantees nonsingularity of \(\nabla ^2\log { p}(\varvec{x})\).

On the other hand, by the limit (13) the first term of the matrix \(\varvec{F}_r(\varvec{\gamma }_r(t))\) defined by Eq. (12), that is

converges to zero as \(t\) approaches \(t^*\). In view of the above observation that the ranges of the matrices \(\varvec{B}(\varvec{x})\) and \(\varvec{G} (\varvec{x})\) are orthogonal for all \(\varvec{x}\in U\), Eqs. (10)–(12) and Assumption 3.1 together with condition (8) thus imply that

The claim follows from the first of the above limits because the range of the symmetric matrix \(\varvec{B}(\varvec{x})\) is orthogonal to its null space. \(\square \)

Proposition 3.3 implies the following properties that motivate seeking for a lower-dimensional ridge point by maximizing \(\log {p }\) along the curve \(\varvec{\gamma }_r\).

Proposition 3.4

If \(\varvec{\gamma }_r\) is a solution to (7) for some \(1\le r\le d\) and Assumption 3.1 and condition (8) are satisfied, then either \(\varvec{\gamma }_r(t)\in \mathcal {R}^r_{\log {p}}\setminus \mathcal {R}^{r-1}_{\log {p}}\) for all \(t\ge 0\) or \(\lim _{t\rightarrow t^*}\varvec{\gamma }_r(t)\in \mathcal {R}^{r-1}_{ \log {p}}\) for some \(t^*>0\). In the latter case, \(\log {p}\) attains its local maximum along \(\varvec{\gamma }_r\) at the limit point \(\varvec{\gamma }_r(t^*)\).

Proof

By Eq. (7), the choice of \(\varvec{x}_0\) and the definition of the matrix \(\varvec{P}_r(\cdot )\), for all \(i\ne r\) and \(t \ge 0\) we have

for some constants \(c_i\ne 0\). By Assumption 3.1, condition (8) and Definition 3.1 this implies that either \(\varvec{\gamma }_r(t)\in \mathcal {R}^r_{\log {p}} \setminus \mathcal {R}^{r-1}_{\log {p}}\) for all \(t\ge 0\) or \(\lim _{t\rightarrow t^*} \varvec{\gamma }_r(t)\in \mathcal {R}^{r-1}_{\log {p}}\) for some \(t^*>0\). In the latter case we have

Thus, the limit (14) implies that

Furthermore, by condition (6b) the point \(\varvec{ \gamma }_r(t^*)\) is a local maximum of \(\log {p}\) along \(\varvec{\gamma }_r\).\(\square \)

Finally, the arc length of a curve \(\varvec{\gamma }_r\) gives the (curvilinear) distance of its starting point to the ridge set \(\mathcal {R}^{r-1}_{\log {p}}\). Assume that we have projected the given point \(\varvec{y}\) onto the ridge set \(\mathcal {R}^m_{\log {p}}\). Starting from such a point, computing the arc lengths successively for \(r=m,m-1,\dots ,1\) then yields the first \(m\) principal component scores of \(\varvec{y}\). When Assumption 3.1 is satisfied, imposing the conditions (cf. Proposition 3.2)

for all \(r=m,m-1,\dots ,1\) and \(t\ge 0\) guarantees that the curves \(\varvec{\gamma }_r\) lie in the set \(U_{\varvec{y}}\).

Denote the projection of the point \(\varvec{y}\) onto the set \(\mathcal {R}^m_{\log {p}}\) as \(\tilde{\varvec{y}}\) and the starting points of the curves \(\varvec{\gamma }_r\) as \(\varvec{x}_0^r\). The \(m\) first principal component scores of \(\varvec{y}\) are then obtained recursively as

where

Here we assume that for each \(r\) there exists \(t^*_r\ge 0\) such that \(\varvec{\gamma }_r(t^*_r)\in \mathcal {R}^{r-1}_{\log {p}}\). The multiplier \(\displaystyle s^*_r=\lim _{t\rightarrow t^*_r-}s_r(t)\), where

is introduced to ensure that the principal component score \(\theta _r\) has the correct sign.

3.3 Density estimation with Gaussian kernels

In practice, the target density \(p\) is unknown and it needs to be estimated from the observations that we shall denote as \(\varvec{Y}=\{\varvec{y}_i\}_{ i=1}^n\). To this end, we use Gaussian kernels, and in this section we recall the necessary theoretical results. We also show that the bandwidth can be interpreted as a free scale parameter and that choosing a sufficiently large bandwidth yields a unimodal kernel density with connected ridge sets. This is a key result since the target density might not in all cases satisfy the these assumptions. Finally, we show that the linear PCA can be obtained as a special case of the above approach.

Definition 3.2

The Gaussian kernel density estimate \(\hat{p}_{\varvec{H}}\) obtained by drawing a set of samples \(\varvec{Y}=\{\varvec{y}_i\}_{i=1 }^n\subset \mathbb {R}^d\) from a probability density \(p:\mathbb {R}^d\rightarrow \mathbb { R}\) is

where the kernel \(K_{\varvec{H}}:\mathbb {R}^d\rightarrow ]0,\infty [\) is the Gaussian function

with symmetric and positive definite bandwidth matrix \(\varvec{H}\).

The problem of finding an optimal bandwith matrix \(\varvec{H}\) is well-studied in the literature. Under mild assumptions on the target density \(p\), asymptotic convergence of several bandwidth selectors for the target density and its derivatives can be proven (e.g. Chacón et al. 2011). That is, for a given order \(k\), the \(k\)-th derivative of the estimator \(\hat{p}_{\varvec{H}}\) converges to the \(k\)-th derivative of \(p\) in probability as the number of samples \(n\) tends to infinity. Extending these results, Genovese et al. (2014) show that any ridge set of a kernel density estimate \(\hat{p}_{h^2\varvec{I}}\) converges to the ridge set of \(p\) as \(n\rightarrow \infty \) for an appropriately chosen sequence of bandwidths \(h\). In addition, several bandwidth selectors have been implemented in the ks package for R (Duong 2007).

Using the function \(\hat{p}_{\varvec{H}}\) as a density estimate might not be appropriate in all cases. The target density \(p\) might not be unimodal or have connected ridge sets, as assumed in Sect. 3.2. To address this issue, the bandwidth \(\varvec{H}\) can be given an alternative interpretation when parametrized as \(\varvec{H}=h^2 \varvec{I}\). Namely, the following result shows that a ridge point lies on a locally defined principal component hyperplane. This hyperplane is determined by a weighted sample mean and the eigenvectors of a weighted sample covariance matrix, where the weights are Gaussian functions. For the following, we introduce the notation \(\hat{p}_h=\hat{p}_{h^2\varvec{I}}\).

Theorem 3.1

Let \(\hat{p}_h:\mathbb {R}^d\rightarrow \mathbb {R}\) be a Gaussian kernel density, let \(0<r<d\) and denote the eigenvectors of \(\nabla ^2\log {\hat{p}_h}(\cdot )\) corresponding to the \(r\) greatest eigenvalues by \(\{\varvec{v}_i(\cdot ) \}_{i=1}^r\). Define

where

Assume that the eigenvalues of \(\nabla ^2\log {\hat{p}_h}(\varvec{x})\) satisfy the condition \(\lambda _1(\varvec{x})>\lambda _2(\varvec{x})> \dots >\lambda _{r+1}(\varvec{x})\). Then

if and only if

where \(\{\tilde{\varvec{v}}_i(\varvec{x})\}_{i=1}^r\) denote the eigenvectors of \(\tilde{\varvec{\Sigma }}(\varvec{x})\) corresponding to the \(r\) greatest eigenvalues.

Proof

First, we note the formulae

and

By a straightforward calculation we then obtain that

and

By Eq. (25), the matrices \(\nabla ^2\log {\hat{p}_h}\) and \(\tilde{\varvec{\Sigma }}(\varvec{x})\) have the same eigenvectors. Hence, by Eq. (24) the condition that

is equivalent to

from which the claim follows by the orthogonality of the eigenvectors \(\tilde{\varvec{v}}_i(\varvec{x})\).\(\square \)

Ridges of the Gaussian kernel density \(\hat{p}_h\) can be used in an exploratory fashion by adjusting the bandwidth \(h\). As suggested by Theorem 3.1, this parameter determines the scale of the structures sought from the data. However, differently to the normal density in Proposition 3.2, the density \(\hat{p}_h\) is not generally unimodal or have connected ridge sets as requred by Assumption 3.1. For instance, it becomes multimodal when \(h\) is too small.

Fortunately, Assumption 3.1 for \(\hat{p}_h\) and the superlevel set

can be satisfied by choosing a sufficiently large \(h\). This is guaranteed by the following theorem that is proven in Appendix. Although \(\hat{p}_h\) does not necessarily reflect the underlying density with such bandwidth choices, its ridges can still capture nonlinear structure that cannot be described by the linear PCA.

Assumption 3.2

The \(r+1\) greatest eigenvalues of the sample covariance matrix \(\hat{\varvec{\Sigma }}_{\varvec{Y}}\) defined by equation (3) satisfy the conditions \(\lambda _1>\lambda _2>\dots >\lambda _{r+1}>0\).

Theorem 3.2

Under Assumption 3.2 for \(r=m\) and given \(\varvec{Y}= \{\varvec{y}_i\}_{i=1}^N\), for a Gaussian kernel density \(\hat{p}_h\) there exists \(h_0>0\) such that Assumption 3.1 is satisfied for \(U_{\varvec{Y}}\) and \(\hat{p}_h\) for all \(h\ge h_0\).

An important special case arises when \(h\) approaches infinity. At this limit, any \(r\)-dimensional ridge set of the Gaussian kernel density approaches the \(r\)-dimensional PCA hyperplane, which can be readily observed from Eqs. (22)–(25). A rigorous proof of this property is deferred to Appendix. As a result, the linear principal components are a special case of those obtained from kernel density ridges.

Theorem 3.3

For \(\varvec{Y}=\{\varvec{y}_i\}_{i=1}^N\subset \mathbb {R}^d\), let \(\hat{p}_h:\mathbb {R}^d\rightarrow \mathbb {R}\) be a Gaussian kernel density, let \(0\le r<d\) and let Assumption 3.2 be satisfied. Define the set

where \(\hat{\varvec{\mu }}\) denotes the sample mean (1) and \(\{\varvec{v}_i\}_{i=1}^r\) denote the eigenvectors of the sample covariance matrix \(\hat{\varvec{\Sigma }}_{\varvec{Y}}\) corresponding to the eigenvalues \(\{\lambda _i\}_{i=1}^r\). Then for any compact set \(U\subset \mathbb {R}^d\) such that \(U\cap S^r_{\infty }\ne \emptyset \) and \(\varepsilon >0\) there exists \(h_0>0\) such that

where

3.4 Algorithm for estimating principal component scores

Based on the theory developed in Sects. 3.2 and 3.3, we now develop the algorithm for computing the nonlinear principal component score estimates

of a given sample set

for a given \(0<m\le d\). This amounts to first projecting the samples \(\varvec{y}_i\) onto the ridge set \(\mathcal {R}^m_{\log {\hat{p}_{\varvec{H}}}}\) of the kernel density \(\log {\hat{p}_{\varvec{H}}}\) and then successively projecting them onto the lower-dimensional ridge sets \(\mathcal {R}^r_{\log {\hat{p}_{ \varvec{H}}}}\) until \(r=0\). The latter projections are done by tracing the curves denoted by \(\hat{\varvec{\gamma }}_r\) by using a predictor-corrector method As a by-product, the principal component scores are obtained from a numerical approximation of the integral (18).

A pseudocode of the algorithm is listed as Algorithm 1. It involves the initial projection onto the ridge set \(\mathcal {R}^m_{\log {\hat{p} }_{\varvec{H}}}\) (lines 2 and 3), and after that \(m\times n\) loops. Each iteration for \(r=m,m-1,\dots ,1\) projects each of the \(n\) sample points onto the ridge set \(\mathcal {R}^{r-1}_{\log {\hat{p} }_{\varvec{H}}}\). The intermediate projections are stored in the variables \(\{\varvec{x}^*_i\}_{i=1}^n\). For the initial ridge projection and the corrector steps, the algorithm utilizes the trust region Newton method developed by Pulkkinen et al. (2014) (the GTRN algorithm). This method is briefly described at the end of this subsection.

In the following, we describe the steps for carrying out one ridge projection (i.e. one iteration of the loop over the index \(i\)) for a given \(r\). The starting point \(\varvec{x}_0\) for \(\hat{\varvec{\gamma }}_r\) is chosen as \(\varvec{x}^*_i\) representing the projection of the sample point \(\varvec{y}_i\) onto the set \(\mathcal {R}^r_{\log {\hat{p}_{\varvec{H}}}}\). Assuming that there exists a monotoneously increasing sequence \(\{t_k\}\) such that \(\hat{ \varvec{\gamma }}_r(t_{k^*})\in \mathcal {R}^{r-1}_{\log {\hat{p}_{\varvec{ H}}}}\) for some \(k^*\), we introduce the notation \(\varvec{x}_k=\hat{ \varvec{\gamma }}_r(t_k)\) for the iterates along the curve \(\hat{\varvec{\gamma }}_r\). With this notation, an approximation to the integral (18) is given by

The algorithm uses a predictor–corrector method to generate the iterates \(\varvec{x}_k\). At the predictor step (line 23), the algorithm proceeds along a tangent vector \(\varvec{u}_k=\hat{\varvec{ \gamma }}'_r(t_k)\) solved from Eq. (10). That is,

where \(\tau >0\) is some user-supplied step size, \(\Vert \varvec{u}_k\Vert =1\) and the multiplier

(lines 9–12) is introduced to impose conditions (17). To project the predictor estimate \(\tilde{\varvec{x}}_k\) back to the ridge set \(\mathcal {R }^r_{\log {\hat{p}_{\varvec{H}}}}\), the algorithm takes a corrector step (line 24).

A stopping criterion is imposed to terminate the tracing of the curve \(\hat{ \varvec{\gamma }}_r\) when a maximum of \(\log {\hat{p}_{\varvec{H}}}\) along \(\hat{\varvec{\gamma }}_r\) is encountered (line 14). For \(k>0\), the condition

tests whether the gradient changes sign along the curve. When this condition is met, the algorithm projects the midpoint of the current and previous iterate onto a nearby ridge point \(\varvec{x}^*_i\in \mathcal {R}^{r-1}_{\log {\hat{p }_{\varvec{H}}}}\) (line 16). At lines 18–19, the algorithm computes the sign \(s^*_r\) for the integral (18) by approximately testing condition (19). The inner iteration (i.e. iteration of the loop over the index \(k\)) is then terminated, and the point \(\varvec{x}^*_i\) is retained as a starting point for projection onto a lower-dimensional ridge set.

Tests for unimodality or connectedness of ridge sets are not included in Algorithm 1 for simplicity. Unimodality can be tested by finding all modes of the density \(\hat{p}_{\varvec{H}}\) by using the GTRN algorithm, as described in Pulkkinen (2015). Disconnectedness can be tested if a curve \(\hat{\varvec{\gamma }}_r\) crosses a point \(\varvec{x}\) where \(\lambda _{r+1}(\varvec{x})=0\) or \(\lambda _i(\varvec{x})= \lambda _j(\varvec{x})\) for some \(i,j=1,2,\dots ,r+1\) such that \(i\ne j\), where \(\lambda _i(\cdot )\) denote the eigenvalues of \(\nabla ^2\log {\hat{p}_{ \varvec{H}}}\) (Miller 1998). When multimodality or a disconnected ridge set is detected, the algorithm can be restarted with larger \(h\) or smaller initial ridge dimension \(m\).

An alternative approach to amend the above situation is to relax the requirement of having a single coordinate system. This can in particular occur when \(\hat{p }_{\varvec{H}}\) is used as a density estimate and the target density \(p\) does not satisfy the above assumptions. In such case, the points can be given as many coordinate systems as there are clusters in the data identified by the modes of \(\hat{p}_{\varvec{H}}\) (see e.g. Pulkkinen 2015 for the case \(m=1\)). However, this does not address the more difficult case when the density is unimodal but not all of its first \(m\) ridge sets are connected.

The GTRN algorithm (Pulkkinen et al. 2014) utilized in Algorithm 1 implements a Newton-type method for projecting a \(d\)-dimensional point onto an \(r\)-dimensional ridge set of a probability density. The method successively maximizes a quadratic model of the objective function. The maximization is constrained within a trust region to guarantee convergence. To obtain a ridge projection, it is done in the subspace spanned by the Hessian eigenvectors corresponding to the \(d-r\) smallest eigenvalues. That is, at each iteration \(l\) the next iterate \(\varvec{z}_{ l+1}=\varvec{z}_l+\varvec{s}_l\) is obtained by solving the subproblem

where \(Q_l\) denotes the quadratic model at the current iterate \(\varvec{z }_l\), \(\{\varvec{v}_i(\varvec{z}_l)\}_{i=r+1}^d\) denote the eigenvectors and \(\Delta _l\le \Delta _{\max }\) denotes the current trust region radius that is updated after each iteration. For each call of GTRN, Algorithm 1 uses the experimentally chosen \(\Delta _{\max }=0.5\tau \) (\(\tau \) for the initial projection) and stopping criterion threshold \(\varepsilon _{pr}=10^{-5}\).

Remark 3.2

The GTRN algorithm can be viewed as an approximate solution method to an initial value problem of the form (7), where \(\varvec{P}_r(\cdot )=\sum _{i=1}^r\varvec{v}_i(\cdot )\varvec{v}_i(\cdot )^T\). As Algorithm 1, GTRN yields an orthogonal projection when applied to the logarithm of a normal density. Differently to Algorithm 1, projection of a \(d\)-dimensional point onto an \(r\)-dimensional ridge set with this algorithm only requires continuity of the first \(r\) Hessian eigenvectors. That is, when the \(r+1\) greatest eigenvalues are distinct in the set \(U_{\varvec{Y}}\).

Remark 3.3

Consistent orientation of the eigenvectors \(\varvec{v}_r(\varvec{x }^*_i)\) at the projected points is necessary for the principal component scores \(\hat{\theta }_{i,r}\) to have correct signs. However, in practice the signs of the eigenvectors depend on the numerical algorithm for computing them. Therefore, the implementation of Algorithm 1 uses an Euclidean minimum spanning tree (e.g. Jaromczyk and Toussaint 1992) to align the eigenvectors after each iteration of the outer loop.

4 Nonlinear extension of SSA to time series data

In this section, the KDPCA method developed in Sect. 3 is extended to time series data. The method, that we call KDSSA, is based on the singular spectrum analysis (SSA) that is an extension of the linear PCA. In SSA, a time series is embedded in a multidimensional phase space. This is done by constructing a trajectory matrix from time-lagged copies of the time series. Formally, the trajectory matrix of a time series \(\varvec{x}=( x_1,x_2,\dots ,x_n)\) is defined as

where \(L\) is some user-supplied time window length.

Applying the linear PCA to the above matrix, one can obtain the principal components and the reconstructed time series by using the formulae given by Vautard et al. (1992). Generalizing their approach, we minimize the reconstruction error

using the first \(m\) nonlinear principal components, where \(m\le L\). Here the vectors \(\tilde{\varvec{y}}_i\) denote the projections of the row vectors \(\varvec{y}_i\) of \(\varvec{Y}_{\varvec{x},L}\) onto the \(m\)-dimensional ridge set of their Gaussian kernel density.

A straightforward calculation shows that by equating the gradient \(\nabla E( \varvec{x})\) to zero, we obtain the formulae

for the elements of the reconstructed time series such that \(E(\varvec{x}^* )\) minimizes the reconstruction error (27).

In this paper the nonlinear SSA is applied to quasiperiodic time series (i.e. noisy time series having some underlying periodic pattern). The motivation is as follows. Assuming that a time series follows the model

for some periodic function \(f\) and \(\varepsilon \) representing the noise, it is reasonable to model the trajectory samples (i.e. the rows of the matrix \(\varvec{Y}_{\varvec{x},L}\)) as a point set that is randomly distributed around a closed curve (cf. Fig. 10 in Sect. 5).

When the aim is to obtain a noise-free time series from a reconstructed phase space trajectory, only an approximate projection onto the ridge curve (i.e. one-dimensional ridge set) of the trajectory density suffices. The GTRN algorithm developed by Pulkkinen et al. (2014) is appropriate for this purpose. On the other hand, a parametrization of the reconstructed trajectory can be obtained by the algorithm developed by Pulkkinen (2015). Differently to Algorithm 1, this algorithm yields a continuous parametrization even when the trajectory density is multimodal, provided that the set of its ridge curves forms a single closed loop. Both of the aforementioned approaches are demonstrated in the next section.

5 Practical applications

This section is devoted to practical applications of KPDCA. The method is applied to a synthetic dataset and climate model output that exhibit highly nonlinear behaviour. In addition, its SSA-based extension is applied to an atmospheric time series. Finally, computational complexity analysis and comparison with related methods is given.

5.1 Test setup

Algorithm 1 as well as the algorithms developed by Pulkkinen et al. (2014) and Pulkkinen (2015) used in the tests were implemented in Fortran 95. Algorithm 1 was run with \(m=d\) and \(\tau =0.05h\). For the nonlinear SSA, the above algorithms were run with their default parameters, except for GTRN the parameters \(\Delta _{\max }\) and \(\varepsilon _{pr}\) were chosen as \(0.25h\) and \(10^{-4}\), respectively.

5.2 Synthetic dataset

In the first experiments we consider a synthetic dataset generated from the model described in Sect. 3.1. Here the three-dimensional data points represented by the random variable \(\varvec{X}\) are sampled from a two-dimensional surface with additive normally distributed noise. The model is given by

with

and \(\varvec{\varepsilon }\sim \mathcal {N}_2(\varvec{0},0.01^2\varvec{I})\). The point set containing \(5000\) samples and its coordinates along the surface parametrized by \(\varvec{f}\) (scaled by arc length) are plotted in Fig. 4.

The one-dimensional KDPCA reconstruction of the above dataset and the principal component scores are plotted in Fig. 5. The bandwidth matrix \(\varvec{H}\) was chosen by using the Hns plug-in selector described in Chacón et al. (2011) and implemented in Duong (2007). In this case, the automatic bandwidth selector gives the desired result. Furthermore, the principal component scores give an accurate representation of the manifold coordinates shown in Fig. 4 (the orientation of the coordinate system is adjusted to match that of Fig. 4).

For comparison, the principal component scores obtained with the hierarchical inverse NLPCA described in Scholz et al. (2005, 2008) are shown in Fig. 6. This method is a variant of a neural network-based PCA. Nowadays, such methods have become popular particularly in climate analysis (e.g. Kramer 1991; Monahan 2001; Hsieh 2004). These methods fit a surface to the given data by using a series of nonlinear mappings. The weights determining the shape of the surface are obtained by optimization of a least-squares goodness of fit criterion. Typically, the cost function has a number of local solutions, and thus multiple trial runs are needed to obtain the optimal weights.

Figure 6 shows the best result from five experiments with randomly chosen initial weights. Weight decay coefficient (i.e. regularization parameter) \(0.1\) was used in order to avoid overfitting. From Fig. 6 we observe that the scores obtained with NLPCA roughly reflect the structure of the dataset. However, there is significant distortion in the scores and the mapping \(\varvec{\varPsi }:\mathbb {R}^2\rightarrow \mathbb {R}^3\) from the principal component space to the input space. The latter can be seen from Fig. 6 showing a uniform grid in \(\mathbb {R}^2\) mapped to \(\mathbb {R}^3\) via \(\varvec{\varPsi }\).

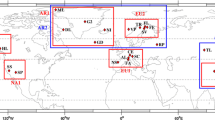

5.3 Application to simulated climate model data

In the second experiment, KDPCA was applied to a simulated sea surface temperature dataset. This dataset is provided by the National Oceanic and Atmospheric Administration (NOAA). The data was obtained from the Coupled Model Intercomparison Project phase 3 (CMIP3) simulations of the GFDL-CM2.1 climate model (Delworth et al. 2006). All preprocessing steps were done as in Ross (2008) and Ross et al. (2008), where this dataset has been analyzed in detail. The preprocessed dataset consisting of \(6,000\) samples represents temperature anomalies (i.e. data from which seasonality has been removed by subtracting the monthly mean values).

To make estimation of the nonlinear principal components computationally feasible, the high-dimensional data (\(d=10073\), one dimension for each ocean grid point) was first projected onto the first ten principal components obtained by PCA. As these principal components explain 87.3 % of variance, a significant amount of information was not lost by carrying out this preprocessing step.

For the GFDL-CM2.1 dataset, the bandwidth choosers implemented in Duong (2007) failed to yield a unimodal density with connected ridge sets. Therefore the kernel bandwidth was chosen as \(\varvec{H}=h^2\varvec{I}\) with \(h=40\). This choice is approximately \(\sqrt{\lambda _1}\), where \(\lambda _1\) denotes the greatest eigenvalue of the matrix \(\varvec{H}\) obtained by the Hns bandwidth selector.

The dataset and its first principal component obtained from kernel density ridge are plotted in Fig. 7. This figure shows cross-sections of the data and the principal component curve along the first linear principal component axes. Projection of the GFDL-CM2.1 data onto the surface spanned by the first two principal components obtained by Algorithm 1 is shown in Fig. 8. The corresponding principal component scores are plotted in Fig. 9.

Compared to the linear principal component projection shown in Fig. 7, it is clear that the nonlinear principal components represent the ”unfolded” dataset and they are better able to capture the variance in the data. Comparison of explained variances of the first eight linear and nonlinear principal components listed in Table 1 also supports this claim. The variance explained by KDPCA is more concentrated towards the first principal component than the variance explained by PCA.Footnote 1

A typical application of principal component analysis (and its nonlinear extensions) is to explain the variance in the given data by some small set of variables. This has been done in Ross (2008) and Ross et al. (2008) for the GFDL-CM2.1 data, and the two main sources of variation were identified. The first principal component correlates with the so-called NINO3 index that is related to the El Niño Southern Oscillation (ENSO) phenomenon. The second one correlates with the Pacific warm water volume. The analysis done here could be carried out further, but we do not attempt repeat the earlier experiments by Ross (2008) and Ross et al. (2008), as using KDPCA would yield similar results than the earlier nonlinear PCA extensions. Of more interest are the differences between KDPCA and the previously proposed methods. A discussion of potential advantages of using KDPCA is given in Sect. 5.6.

5.4 Application to atmospheric time series

The quasi-biennial oscillation (QBO) is one of the most well-studied atmospheric phenomena. The QBO is a quasiperiodic oscillation of the equatorial zonal wind between easterlies and westerlies in the tropical stratosphere with a mean period of 28–29 months. Motivated by an earlier neural network-based nonlinear SSA approach of Hsieh and Hamilton (2003), the nonlinear SSA (KDSSA) described in Sect. 4 was applied to a QBO time series. The time series is provided by the institute of meteorology at the University of Berlin. It consists of monthly mean zonal winds between 1953–2013 constructed from balloon observations at seven different pressure levels corresponding to the altitude range 20–30 km. Here we use a simplified test setup and analyze only the observations from the 30 Hpa level, resulting to a univariate time series.

The trajectory matrix (26) was obtained from the QBO time series with \(L=18\) months. The linear PCA was first applied in the 18-dimensional phase space so that the first four principal components were retained. These principal components explain 95.2 % of the variance, and thus a significant amount of information was not lost by doing this step. The resulting samples were then projected onto the kernel density ridges by using the GTRN algorithm (Pulkkinen et al. 2014). The bandwidth was chosen as \(\varvec{H}=h^2 \varvec{I}\) with \(h=260\) by the heuristic rule used in Sect. 5.3. The reconstructed time series was obtained by transforming the projected samples from the four-dimensional space back to the \(18\)-dimensional phase space and using the formulae (28).

The trajectory samples and their kernel density ridge projections in the reduced four-dimensional phase space are plotted in Fig. 10. This figure shows a cross-section along the first three linear principal components. Due to the underlying periodic structure present in the time series, its reconstructed phase space trajectory forms a closed loop that passes through the middle of the point cloud. The QBO time series and the reconstructed time series obtained by using the reconstructed phase space trajectory are plotted in Fig. 11. For comparison, the reconstructed time series obtained by using the first linear SSA component and the first and second linear components combined are also plotted in this figure.

The conclusion from Figs. 10 and 11 is that the nonlinear SSA is able to capture the underlying periodic structure in the QBO time series. It is clear that the closed loop found by the nonlinear approach, as shown in Fig. 10, cannot be described by any combination of linear principal components. Consequently, it can be seen from Fig. 11 that the linear SSA reconstruction by using only the first principal component is inadequate to describe the structure of the time series. On the other hand, by adding more principal components in the analysis, the linear SSA only captures noise and not the underlying periodic pattern.

In Sect. 5.4, the principal component scores (i.e. the coordinates along the nonlinear principal components) were of main interest. Also, in the nonlinear SSA tracking the coordinates of a time series along its reconstructed trajectory curve in the phase space may provide useful information. Namely, when the time series is close to periodic, anomalously short or long cycles can be identified by carrying out such analysis. For the QBO time series, this has been done in Hsieh and Hamilton (2003) by using the neural network-based NLPCA.

Obtaining the coordinates of a time series along its reconstructed phase space trajectory is also possible by using the ridge-based approach. In order to demonstrate this, an approximate parametrization of the trajectory was obtained by using the algorithm developed by Pulkkinen (2015). The projection coordinates were obtained for each sample by finding the nearest point along line segments connecting the trajectory points and computing its distance along the approximate curve to a point fixed as the origin.

Due to its very regular period, the QBO time series progresses along its reconstructed phase space trajectory at a nearly constant rate. This can be seen from Fig. 12 showing the trajectory coordinate \(t\) scaled to the interval \([-\pi ,\pi ]\) as a function of time. In addition, following Hsieh and Hamilton (2003), anomalies (i.e. deviations from the constant rate) were calculated. This was done by fitting a regression line to the \(t\)-time series obtained by concatenating the individual cycles and then subtracting the regression line from the concatenated time series. The normalized \(t\)-anomaly time series obtained in this way is also plotted in Fig. 12.

Comparison of the \(t\)-anomaly time series to the \(t\)-time series and Fig. 11 shows its relation to fluctuations from the mean period length. Namely, up- and downward trends in the \(t\)-anomalies correspond to abnormally short and long cycles, respectively. This can be seen, for instance, by comparing the long periods during 1962–1969, 1984–1993 and 2000–2002 and the short periods during 1955–1962, 1969–1975 and 2004–2009 to the \(t\)-anomaly time series.

5.5 Complexity Analysis

This subsection is devoted to discussion of computational complexity of Algorithm 1 and comparison of KDPCA with existing nonlinear dimensionality reduction methods. After the initial projection step by using the GTRN algorithm (Pulkkinen et al. 2014) having computational cost \(\mathcal {O}( n^2 d^2+nd^3)\), the computational cost of Algorithm 1 is \(\mathcal {O}(n^2d^3 m+d^3 nm+n^2 dm)\), which is explained in the following paragraphs.

The main source of computational cost is the evaluation of the Gaussian kernel density and its derivatives. For each of the \(m\) projection steps, this needs to be done for all \(n\) sample points a number of times that depends on the chosen step size \(\tau \). For the third derivative that dominates the computational cost, the cost of a single evaluation is \(\mathcal {O}(nd^3)\). This makes the total complexity of evaluations \(\mathcal {O}(n^2d^3 m)\). When \(d\) is small, this cost can be reduced by order of \(n\) by using the fast Gauss transform or related techniques (Greengard and Strain 1991; Yang et al. 2003).

Computation of the tangent vector in Algorithm 1 and obtaining the trust region step in the corrector involve eigendecomposition of a \(d\times d\) matrix (Pulkkinen et al. 2014). The cost of this operation is \(\mathcal {O}(d^3)\), and this is done \(\mathcal {O}(nm)\) times in the algorithm, making the total cost of eigendecompositions \(\mathcal {O}(d^3 nm)\). Finally, the cost of traversing the Euclidean minimum spanning tree by using a basic implementation is \(\mathcal {O}(n^2d)\). This is done \(m\) times in the algorithm, after each projection of all the sample points, and thus the total cost of traversal of such trees is \(\mathcal {O}(n^2 dm)\).

Computational efficiency of Algorithm 1 can be improved by replacing the projection curve tangent by a Hessian eigenvector (cf. Propositions 3.2 and 3.3). In practice, this leads to slightly worse approximations for the higher-dimensional principal component scores (the first principal component is not affected). This approximation reduces the evaluation cost by order of \(d\) since third derivatives are not needed.

When only the first nonlinear principal component (i.e. principal curve) is sought, a significant speedup can be achieved by using a specialized algorithm developed by Pulkkinen (2015). Using this algorithm requires choosing the kernel bandwidth so that the ridge curve set of the density consists of one connected curve. Under this assumption, it suffices to use one starting point, and the total computational cost of tracing the ridge curve is \(\mathcal {O}(nd^3)\). The principal component scores can be obtained from projections onto the line segments forming the approximate curve as in Sect. 5.4 at a cost of \(\mathcal {O}(ndk)\), where \(k\) is the number of line segments.

5.6 Comparison to other methods

The neural network-based methods are among the most popular nonlinear PCA methods applied in climate analysis. However, they have several shortcomings. Some of them are discussed below with comparison to KDPCA.

-

NLPCA involves minimization of a cost function that generally has several local minima. This problem is typically addressed by using multiple starting points, which may incur a high computational cost. KDPCA is not affected by this issue because it does not attempt to minimize a single cost function. Instead, it performs local maximizations from each sample point. The projection curves are uniquely defined when the ridge sets are connected.

-

The principal components obtained by KDPCA have a statistical interpretation. This is not the case for NLPCA that is based on an artificially constructed neural network. In fact, the NLPCA principal curves and surfaces are not guaranteed to follow regions of high concentration of the data points. Examples of this are given by Christiansen (2005). Due to this issue, drawing statistical inferences from the NLPCA output should be done with extreme caution.

-

NLPCA uses artificial penalty terms to avoid overfitting. Despite this, the density of the data along the first nonlinear principal component can exhibit spurious multimodality (Christiansen 2005). This can occur even when the underlying density of the data is close to normal. On the other hand, KDPCA performs no worse than the linear PCA when the kernel bandwidth is chosen sufficiently large.

-

When using NLPCA, the type of a curve (open or closed) to be fitted to the data needs to be specified a priori in the neural network structure. KDPCA can determine this automatically when the principal curve is traced by using the algorithm developed by Pulkkinen (2015).

-

The curves fitted by NLPCA are not parametrized by arc length. This may introduce a significant bias to reconstructions and principal component scores. When drawing statistical inferences from a curve fitted by NLPCA, arc length reparametrization should be done to remove the bias (Newbigging et al. 2003). However, this approach has not been generalized to higher dimensions. On the other hand, KDPCA produces an arc length parametrization for principal component curves and surfaces of any dimension due to its construction.

KDPCA has also certain advantages compared to other commonly used nonlinear dimensionality reduction methods. This is because it seems to perform well in the presence of noise and it operates directly in the input space.

-

Graph-based methods such as Isomap (Tenenbaum et al. 2000), Hessian eigenmaps (Donoho and Grimes 2003) and maximum variance unfolding (Weinberger and Saul 2006) are based on the assumption that the data lies directly on a low-dimensional manifold. Thus, they are sensitive to noise, and blindly applying such methods to noisy data may lead to undesired results.

-

The aforementioned methods and kernel-based methods such as KPCA do not produce a reconstruction of the data in the original input space. This would be a very desired feature, for instance, in climate analysis where plots of reconstructed grid data or time series provide information about the main sources of variation.

The main difficulty in KDPCA is the choice of kernel bandwith \(\varvec{H}\). When the data follows the model described in Sect. 3.1, the number of samples is sufficiently large and the data dimension is small, an automatic bandwidth selector can be used. This was successfully done in Sect. 5.2. Unfortunately, the above requirements might be unrealistic for practical applications, as observed in Sects. 5.3 and 5.4. As a result, the kernel density may become multimodal or have disconnected ridge sets. In such cases, the bandwidth parametrization \(\varvec{H}=h^2\varvec{I}\) can be used and a sufficiently large \(h\) can be chosen by visual inspection of principal components in two or three dimensions (cf. Figs. 7, 8 and 10). As shown in Sects. 5.3 and 5.4, a plug-in bandwidth estimate can be utilized for obtaining a first guess.

6 Conclusions and discussion

Principal component analysis (PCA) is a well-established tool for exploratory data analysis. However, as a linear method it cannot describe complex nonlinear structure in the given data. To address this deficiency, a novel nonlinear generalization of the linear PCA was developed in this paper.

The proposed KDPCA method is based on the idea of using ridges of the underlying density of the data to estimate nonlinear structures. It was shown that the principal component coordinates of a given point set can be obtained one by one by successively projecting the points onto lower-dimensional ridge sets of the density. Such a projection was defined as a solution to a differential equation. A predictor-corrector method using a Newton-based corrector was developed for this purpose.

Gaussian kernels were used for estimation of the density from the data. This choice has several advantages. First, this choice allows automatic estimation of an appropriate bandwidth from the data. This was demonstrated by numerical experiments, although the currently available bandwidth estimation methods have only limited applicability. Second, a fundamental result was derived showing that by choosing the bandwidth as \(\varvec{H}=h^2\varvec{I}\) and letting \(h\) approach infinity, KDPCA reduces to the linear PCA when desired.Third, the theory of ridge sets ensures that any ridge set of a Gaussian kernel density has a well-defined coordinate system when \(h\) is sufficiently large.

Based on the linear singular spectrum analysis (SSA), KDPCA was extended to time series data. It was shown that when a time series is (quasi)periodic, the first nonlinear principal component of its phase space representation can be used to reconstruct the underlying periodic pattern from noise. Though the periodicity assumption is restrictive, such time series are relevant for many practical applications. Examples include climate analysis and medical applications such as electrocardiography and electroencephalography.

The proposed KDPCA method and its SSA-based variant were applied to a highly nonlinear dataset obtained from a climate model and to an atmospheric time series. The method is superior to the linear PCA in capturing the complex nonlinear structure of the data. It also has several advantages compared to the existing nonlinear dimensionality reduction methods. In particular, KDPCA requires only one parameter, that is, the kernel bandwidth \(\varvec{H}\). When parametrized as \(\varvec{H}=h^2\varvec{I}\), the bandwidth has an intuitive interpretation as a scale parameter.

While KDPCA showed convincing results on the test datasets, its applicability to real-world data remains to be fully confirmed. When the data is noisy and sparse, which is typical for observational data, the additional information obtained by KDPCA might not justify its high computational cost. However, using the techniques discussed in Sect. 5.5 could significantly improve the scalability of KDPCA to large datasets. Computational difficulties due to high dimensionality of the data can also be circumvented. In many situations, the variance is confined to some low-dimensional subspace that can be identified by using a simpler method as a preprocessing step (as in Sects. 5.3 and 5.4).

Notes

The explained variances for the nonlinear principal components were obtained from the covariance matrix of the corresponding scores.

References

Berkooz, G., Holmes, P., Lumley, J.L.: The proper orthogonal decomposition in the analysis of turbulent flows. Annu. Rev. Fluid Mech. 25, 539–575 (1993)

Chacón, J.E., Duong, T., Wand, M.P.: Asymptotics for general multivariate kernel density derivative estimators. Stat. Sin. 21, 807–840 (2011)

Christiansen, B.: The shortcomings of nonlinear principal component analysis in identifying circulation regimes. J. Clim. 18(22), 4814–4823 (2005)

Damon, J.: Generic structure of two-dimensional images under Gaussian blurring. SIAM J. Appl. Math. 59(1), 97–138 (1998)

Delworth, T.L., Broccoli, A.J., Rosati, A., Stouffer, R.J., Balaji, V.: GFDL’s CM2 global coupled climate models. Part I: formulation and simulation characteristics. J. Clim. 19(5), 643–674 (2006)

Donoho, D.L., Grimes, C.: Hessian eigenmaps: locally linear embedding techniques for high-dimensional data. Proc. Natl. Acad. Sci. 100(10), 5591–5596 (2003)

Duong, T.: ks: Kernel density estimation and kernel discriminant analysis for multivariate data in R. J. Stat. Softw. 21(7), 1–16 (2007)

Einbeck, J., Tutz, G., Evers, L.: Local principal curves. Stat. Comput. 15(4), 301–313 (2005)

Einbeck, J., Evers, L., Bailer-Jones, C.: Representing complex data using localized principal components with application to astronomical data. In: Gorban, A.N., Kégl, B., Wunsch, D.C., Zinovyev, A.Y. (eds.) Principal Manifolds for Data Visualization and Dimension Reduction, volume 58 of Lecture Notes in Computational Science and Engineering, pp. 178–201. Springer, Berlin, Heidelberg (2008)

Genovese, C.R., Perone-Pacifico, M., Verdinelli, I., Wasserman, L.: Nonparametric ridge estimation. Ann. Stat. 42(4), 1511–1545 (2014)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. The Johns Hopkins University Press, Baltimore (1996)

Golyandina, N., Nekrutkin, V., Zhigljavsky, A.A.: Analysis of Time Series Structure: SSA and Related Techniques. Chapman and Hall/CRC Press, London (2001)

Greengard, L., Strain, J.: The fast Gauss transform. SIAM J. Sci. Stat. Comput. 12(1), 79–94 (1991)

Higham, N.J.: Functions of Matrices: Theory and Computation. SIAM, Philadelphia (2008)

Hsieh, W.W.: Nonlinear multivariate and time series analysis by neural network methods. Rev. Geophys. 42(1), 1–25 (2004)

Hsieh, W.W., Hamilton, K.: Nonlinear singular spectrum analysis of the tropical stratospheric wind. Quart. J. R. Meteorol. Soc. 129(592), 2367–2382 (2003)

Jaromczyk, J.W., Toussaint, G.T.: Relative neighborhood graphs and their relatives. Proc. IEEE 80(9), 1502–1517 (1992)

Jolliffe, I.T.: Principal Component Analysis. Springer-Verlag, Berlin (1986)

Kambhatla, N., Leen, K.T.: Dimension reduction by local principal component analysis. Neural Comput. 9(7), 1493–1516 (1997)

Kramer, M.A.: Nonlinear principal component analysis using autoassociative neural networks. AIChE J. 37(2), 233–243 (1991)

Loève, M.: Probability Theory: Foundations, Random Sequences. van Nostrand, Princeton (1955)

Magnus, J.R.: On differentiating eigenvalues and eigenvectors. Econ. Theory 1(2), 179–191 (1985)

Miller, J.: Relative critical sets in \(R^n\) and applications to image analysis. PhD thesis, University of North Carolina (1998)

Monahan, A.H.: Nonlinear principal component analysis: tropical Indo-Pacific sea surface temperature and sea level pressure. J. Clim. 14(2), 219–233 (2001)

Newbigging, S.C., Mysak, L.A., Hsieh, W.W.: Improvements to the non-linear principal component analysis method, with applications to ENSO and QBO. Atmos.-Ocean 41(4), 291–299 (2003)

Ortega, J.M.: Numerical Analysis: A Second Course. SIAM, Philadelphia (1990)

Ozertem, U., Erdogmus, D.: Locally defined principal curves and surfaces. J. Mach. Learn. Res. 12, 1249–1286 (2011)

Pearson, K.: On lines and planes of closest fit to systems of points in space. Philos. Mag. Ser. 6 2(11), 559–572 (1901)

Pulkkinen, S.: Ridge-based method for finding curvilinear structures from noisy data. Comput. Stat. Data Anal. 82, 89–109 (2015)

Pulkkinen, S., Mäkelä, M.M., Karmitsa, N.: A generative model and a generalized trust region Newton method for noise reduction. Comput. Optim. Appl. 57(1), 129–165 (2014)

Rangayyan, R.M.: Biomedical Signal Analysis: A Case-Study Approach. IEEE Press, New York (2002)

Renardy, M., Rogers, R.C.: An introduction to partial differential equations. In: Marsden, J.E., Sirovich, L., Antman, S.S. (eds.) Texts in Applied Mathematics, vol. 13, 2nd edn. Springer-Verlag, New York (2004)

Ross, I.: Nonlinear dimensionality reduction methods in climate data analysis. PhD thesis, University of Bristol, United Kingdom (2008)

Ross, I., Valdes, P.J., Wiggins, S.: ENSO dynamics in current climate models: an investigation using nonlinear dimensionality reduction. Nonlinear Process. Geophys. 15, 339–363 (2008)

Schölkopf, B., Smola, A., Müller, K.-R.: Kernel principal component analysis. In: Gerstner, W., Germond, A., Hasler, M., Nicoud, J.-D. (eds.) Artificial Neural Networks-ICANN’97, volume 1327 of Lecture Notes in Computer Science, pp. 583–588. Springer, Berlin (1997)

Scholz, M., Kaplan, F., Guy, C.L., Kopka, J., Selbig, J.: Non-linear PCA: a missing data approach. Bioinformatics 21(20), 3887–3895 (2005)

Scholz, M., Fraunholz, M., Selbig, J.: Nonlinear principal component analysis: Neural network models and applications. In: Gorban, A.N., Kégl, B., Wunsch, D.C., Zinovyev, A.Y. (eds.) Principal Manifolds for Data Visualization and Dimension Reduction, volume 58 of Lecture Notes in Computational Science and Engineering, pp. 44–67. Springer, Berlin (2008)

Tenenbaum, J.B., de Silva, V., Langford, J.C.: A global geometric framework for nonlinear dimensionality reduction. Science 290(5500), 2319–2323 (2000)

Tipping, M.E., Bishop, C.M.: Probabilistic principal component analysis. J. R. Stat. Soc. 61(3), 611–622 (1999)

Vautard, R., Yiou, P., Ghil, M.: Singular-spectrum analysis: a toolkit for short, noisy chaotic signals. Physica D 58(1–4), 95–126 (1992)

Weare, B.C., Navato, A.R., Newell, E.R.: Empirical orthogonal analysis of Pacific sea surface temperatures. J. Phys. Oceanogr. 6(5), 671–678 (1976)

Weinberger, K., Saul, L.: Unsupervised learning of image manifolds by semidefinite programming. Int. J. Comput. Vis. 70(1), 77–90 (2006)

Whittlesey, E.F.: Fixed points and antipodal points. Am. Math. Mon. 70(8), 807–821 (1963)

Yang, C., Duraiswami, R., Gumerov, N.A., Davis, L.: Improved fast gauss transform and efficient kernel density estimation. In Ninth IEEE International Conference on Computer Vision. volume 1, pp. 664–671. Nice, France (2003)

Zhang, Z., Zha, H.: Principal manifolds and nonlinear dimensionality reduction via tangent space alignment. SIAM J. Sci. Comput. 26(1), 313–338 (2004)

Acknowledgments

The author was financially supported by the TUCS Graduate Programme, the Academy of Finland grant No. 255718 and the Finnish Funding Agency for Technology and Innovation (Tekes) grant No. 3155/31/2009. He would also like to thank Prof. Marko Mäkelä, Doc. Napsu Karmitsa and Prof. Hannu Oja (University of Turku) and the reviewers for their valuable comments.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

In this appendix we give proofs of Theorems 3.2 and 3.3. In the following, we assume that the given set of sample points \(\varvec{Y}=\{\varvec{y}_i\}_{i=1}^N\subset \mathbb { R}^d\) is fixed. The proofs are carried out by making the following simplifying assumption that can be made without loss of generality.

Assumption 7.1

The points \(\varvec{y}_i\) satisfy the condition \(\sum _{i=1}^n\varvec{y }_i=\varvec{0}\).

First, we recall the density estimate defined by equations (20) and (21) with the bandwidth parametrization \(\varvec{H}= h^2\varvec{I}\). That is,

The following limits hold for the logarithm of the Gaussian kernel density estimate and its derivatives as \(h\) approaches infinity. Uniform convergence in a given compact set \(U\) can be verified by showing that the functions are uniformly bounded (i.e. bounded with respect to all \(\varvec{x}\in U\) and all \(h\ge h_0\) for some \(h_0>0\)) and that they have a uniform Lipschitz constant in \(U\) for all \(h\ge h_0\). Under these conditions, this follows from the Arzelà-Ascoli theorem (e.g. Renardy and Rogers 2004). The proof of the following lemma is omitted due to space constraints.

Lemma 7.1

Let \(\hat{p}_h:\mathbb {R}^d\rightarrow \mathbb {R}\) be a Gaussian kernel density estimate and assume \(7.1\). Then

for all \(\varvec{x}\in \mathbb {R}^d\). Furthermore, convergence to these limits is uniform in any compact set.

The following two lemmata facilitate the proof of Theorem 3.3.

Lemma 7.2

Let \(\hat{p}_h:\mathbb {R}^d\rightarrow \mathbb {R}\) be a Gaussian kernel density estimate and let Assumptions \(3.2\) and \(7.1\) be satisfied. Denote the eigenvalues of \(\nabla ^2\log {\hat{p}_h}\) by \(\lambda _1(\cdot ;h)\ge \lambda _2(\cdot ;h)\ge \dots \ge \lambda _d(\cdot ;h)\) and the corresponding eigenvectors by \(\{\varvec{w}_i(\cdot ;h)\}_{i=1}^d\). Then for any compact set \(U\subset \mathbb {R}^d\) there exists \(h_0>0\) such that

for all \(\varvec{x}\in U, h\ge h_0\) and \(i,j=1,2,\dots ,r+1\) such that \(i\ne j\). Furthermore, if we define

and

where \(\{\varvec{v}_i\}_{i=1}^r\) denote the eigenvectors of the matrix \(\hat{\varvec{\Sigma }}_{\varvec{Y}}\) defined by Eq. (3) corresponding to its \(r\) greatest eigenvalues, then for all \(\varepsilon >0\) there exists \(h_0>0\) such that

Proof

Let \(\tilde{\lambda }_1\ge \tilde{\lambda }_2\ge \dots \ge \tilde{\lambda }_d\) denote the eigenvalues of the matrix \(\hat{\varvec{\Sigma }}_{\varvec{ Y}}\) and let \(\{h_k\}\) be some sequence such that \(\lim _{k\rightarrow \infty }h_k= \infty \). By uniform convergence to the limit (32) under Assumption 7.1 and continuity of eigenvalues of a matrix as a function of its elements (e.g. Ortega 1990 Theorem 3.1.2), for all \(\varepsilon >0\) there exists \(k_0\) such that

for all \(i=1,2,\dots ,r+1\), \(\varvec{x}\in U\) and \(k\ge k_0\). Consequently, condition (33) holds for all \(\varvec{x}\in U\) for any sufficiently large \(h\) by Assumption 3.2. It also follows from Assumption 3.2, condition (36) and the reverse triangle inequality that for all \(\varepsilon >0\) and \(i,j=1,2,\dots ,r+1\) such that \(i\ne j\) and \(|\tilde{ \lambda }_i-\tilde{\lambda }_j|>\varepsilon \) there exists \(k_1\) such that

for all \(\varvec{x}\in U\) and \(k\ge k_1\). This implies condition (34). Similarly, condition (35) follows from uniform convergence to the limit (32) under Assumption 7.1, condition (34) and continuity of eigenvectors as a function of matrix elements when the eigenvalues are distinct (e.g. Ortega 1990 Theorem 3.1.3). \(\square \)

Lemma 7.3

Assume \(3.2\) and \(7.1\) and define the function

where the function \(\varvec{W}\) is defined as in Lemma 7.2, and the set \(S^r_{\infty }\) as in Theorem 3.3. Then the limit

exists for all \(\varvec{x}\in \mathbb {R}^d\). Furthermore, \(\varvec{ x}\in S^r_{\infty }\) if and only if the limit (37) is zero.

Proof

By the limits (31) and (35) the limit (37) exists for all \(\varvec{x} \in \mathbb {R}^d\). Furthermore, for any \(\varvec{x}\in \mathbb {R}^d\), the condition that the limit (37) is zero is equivalent to the condition that

where the vectors \(\varvec{v}_i\) are defined as in Lemma 7.2. By the orthogonality of the vectors \(\varvec{v}_i\), the definition of the set \(S^r_{\infty }\) and Assumption 7.1, this condition is equivalent to the condition that \(\varvec{x}\in S^r_{ \infty }\).\(\square \)

For the proof of Theorem 3.3, we define the set

where the function \(\tilde{\varvec{W}}\) is defined as in Lemma 7.3. Under Assumption 7.1, we prove both claims of Theorem 3.3 by the following two lemmata.

Lemma 7.1

Let \(U\subset \mathbb {R}^d\) be a compact set such that \(U\cap S^r_{\infty }\ne \emptyset \) for some \(0\le r<d\). If Assumptions \(3.2\) and \(7.1\) are satisfied, then for all \(\varepsilon >0\) there exists \(h_0>0\) such that

Proof

The proof is by contradiction. Let \(0\le r<d\) and let \(U\subset \mathbb {R}^d\) be a compact set such that \(U\cap S^r_{\infty }\ne \emptyset \). Assume that there exists \(\varepsilon _1>0\) such that for all \(h_0>0\) there exists \(h\ge h_0\) such that condition (39) is not satisfied. This implies that for all \(h_0>0\) there exists \(h\ge h_0\) such that

Let \(\{\varvec{x}_k\}\) denote a sequence of such points \(\varvec{x}\) with the corresponding sequence \(h_k\). Since the set \(S^r_h\cap U\) is compact by the compactness of \(U\) and the continuity of \(\tilde{\varvec{W}}(\cdot , h)\) in \(U\) for any sufficiently large \(h\), the sequence \(\{\varvec{x}_k\}\) has a convergent subsequence \(\{\varvec{z}_k\}\) whose limit point we shall denote as \(\varvec{z}^*\). Clearly \(\varvec{z}^*\notin S^r_{\infty }\) by condition (40). Thus, by Lemma 7.3 we deduce that for some \(c>0\),

In view of the definition (38), the above limit implies that there exists \(\varepsilon _2>0\) and \(k_0\) such that for all \(k\ge k_0\),

On the other hand, if we define the function \(\varvec{F}^*(\varvec{x}) =-(\varvec{I}-\varvec{V}\varvec{V}^T)\varvec{x}\), the triangle inequality yields

Combining this with the inequality

and noting the convergence of \(\varvec{F}(\cdot ;h_k)\) to the function \(\varvec{F}^*\) (that is uniform in \(U\)) as \(k\rightarrow \infty \) (by Lemmata 7.1 and 7.2), we deduce from (41) that for all \(\varepsilon _2> \varepsilon _3>0\) there exists \(k_1\) such that

for all \(\varvec{y}\in S^r_{h_k}\cap U\) and \(k\ge k_1\).

Condition (42) implies that for all \(0< \varepsilon _3<\varepsilon _2\) there exists \(k_1\) such that

On the other hand, for all \(\varepsilon >0\) we have \(\varvec{z}_k\in B( \varvec{z}^*;\varepsilon )\) for any sufficiently large \(k\) due to the assumption that \(\varvec{z}_k\) converges to \(\varvec{z}^*\). If we choose \(0<\varepsilon <\varepsilon _2\), then the sequence \(\{\varvec{x}_k \}\), whose subsequence is \(\{\varvec{z}_k\}\), has an element \(\varvec{x}_k\notin S^r_{h_k}\cap U\) for some \(k\) by condition (43). This leads to a contradiction with the construction of the sequence \(\{\varvec{x}_k\}\), which states that \(\varvec{x}_k\in S^r_{h_k}\cap U\) for all \(k\). \(\square \)

Lemma 7.5

Let \(\hat{p}_h\) be a Gaussian kernel density estimate, let \(0\le r<d\), let Assumptions \(3.2\) and \(7.1\) be satisfied and define the set \(S^r_{\infty }\) as in Theorem 3.3. Then for any compact set \(U\subset \mathbb {R}^d\) such that \(U\cap S^r_{\infty } \ne \emptyset \) and \(\varepsilon >0\) there exists \(h_0>0\) such that

Proof

Let \(0\le r<d\) and let \(\{\varvec{v}_i\}_{i=r+1}^d\) denote a set of orthonormal eigenvectors of the matrix \(\hat{\varvec{\Sigma }}_{\varvec{ Y}}\) corresponding to the \(d-r\) smallest eigenvalues. The vectors \(\{\varvec{v}_i\}_{i=r+1}^d\) are uniquely determined up to the choice of basis because the eigenvectors \(\{\varvec{v}_i\}_{i=1}^r\) spanning their orthogonal complement are uniquely determined by Assumption 3.2. Define the sets

and

for some orthonormal eigenvectors \(\{\varvec{v}_i\}_{i=r+1}^d\) spanning the orthogonal complement of \(\text{ span }(\varvec{v}_1,\varvec{v}_2,\dots , \varvec{v}_r)\).

Let \(\{\varvec{u}_i(\cdot ;h)\}_{i=1}^d\) denote a set of orthonormal vectors that are orthogonal to the eigenvectors \(\{\varvec{w}_i( \cdot ;h)\}_{i=1}^r\) of \(\nabla ^2\log {\hat{p}_h}\) corresponding to the \(r\) greatest eigenvalues. Define the functions

and

where

and \(\bar{\varvec{V}}=[\varvec{v}_{r+1}\quad \varvec{v}_{r+2}\quad \cdots \quad \varvec{v}_d]\) assuming that the orientation is chosen so that \(\text{ det }(\bar{\varvec{V}})=1\). To fix the orientation of the vectors \(\varvec{u}_i(\varvec{x};h)\), we impose the constraint

Here \(\Vert \cdot \Vert _F\) denotes the Frobenius norm,

\(O(d,d-r)\) denotes a \(d\times (d-r)\) matrix having orthonormal columns and the matrix \(\varvec{W}(\varvec{x};h)\) is defined as in Lemma 7.2. It can be shown that the function \(\varvec{U }(\cdot ;h)\) is well-defined for any \(h>0\).Footnote 2 Spanning the orthogonal complement of the columns of \(\varvec{W}(\cdot ;h)\), the columns of \(\varvec{U}(\cdot ;h)\) are also continuous in a given compact set when \(\varvec{W}(\cdot ;h)\) is continuous. That is, when condition (34) is satisfied in such a set by Lemma 7.2.

The above definitions and condition (35) in the compact set \(D_{\varepsilon }\) imply that for all \(\varepsilon _1,\varepsilon _2 >0\) there exists \(h_0>0\) such that

Consequently, uniform convergence to the limit (31) as \(h\rightarrow \infty \) by Lemma 7.3 together with the property that

following from Assumption 7.1 implies that for all \(\varepsilon _1,\varepsilon _2>0\) there exists \(h_0>0\) such that

where \(\tilde{D}_{\varepsilon }=\{\varvec{y}\in \mathbb {R}^{d-r}\mid \Vert \varvec{y}\Vert \le \varepsilon \}\).