Abstract

Positive judgments about the Self are good predictors of academic achievement. An instrument able to assess how pupils elaborate self-judgments would therefore be useful, but so far no such tool exists. The purpose of the present study was to develop a self-report measure of social judgment for children at school (the School Social Judgment Scale, SSJS). A total of 660 pupils completed a questionnaire addressing 12 socio-academic behaviors. An exploratory factor analysis highlighted a four-factor structure of social judgment (Assertiveness, Competence, Effort and Agreeability). A confirmatory factor analysis (CFA) provided further support for this model, in terms of both factorial and construct validity. Reliability ranged from questionable to good depending on the SSJS subscale. Multigroup CFA revealed invariance of the SSJS across gender and showed that boys had higher scores than girls on the Assertiveness scale. Overall, the SSJS represents an efficient tool to better understand how children’s social judgment works. As such, it could help education professionals to develop suitable educational support for children who perform poorly at school.

1 Introduction

Understanding the possible role of subjective factors that foster learning and achievement represents a stimulating challenge for researchers and for teachers. From this perspective, social psychology has taken a growing role in academic sciences, in particular in relating subjective judgments on the Self to academic achievement (Bakadorova and Raufelder 2015; Bong and Skaalvik 2003; D’Amico and Cardaci 2003; Frost and Ottem 2016; Wouters et al. 2011). A well-documented finding is that many children have difficulty in school not because they are objectively unable to perform successfully but because they have come to believe that they are poorly performing pupils (see Pajares and Schunk 2001, for a review). However, what is the meaning of “I feel I can do well” or “I know I will fail” for a young child? In the present research, our aim was to initiate an exploration of this intriguing question by identifying the latent structure of children’s judgments on the Self at school. In our view, knowing the set of beliefs that young individuals hold about their own school-related abilities represents the necessary first step to understand these beliefs and, subsequently, to work on them to foster positive attitudes towards the Self, towards the Others, and finally, towards engagement with learning and achievement.

2 Theoretical framework

2.1 Current research on social judgment

An important aim of research on social judgment is to explore how individuals determine their behaviors and modulate their attitudes towards various objects and situations. A central question is how someone builds beliefs in himself or herself and in others, and whether these beliefs might harm or help him or her in life (Fiske 2015). There is growing evidence in the international literature that two recurrent and relatively independent dimensions underlie these beliefs, regardless of whether targets are Self or Others (Yzerbyt 2016). The first dimension (communal dimension) refers to agreeability, equality and harmony in relationships, that is, the individual’s desire for affiliation with others. It covers characteristics such as friendliness, kindness, politeness, honesty, or trustworthiness. The second dimension (agentic dimension) refers to the human pursuit of differences and individualization, that is, the individual’s desire to advance personal interests and to succeed. It covers characteristics such as independence, ambition, dominance, competence, or intelligence (Abele and Wojciszke 2007, 2013; Paulhus and Trapnell 2008). Research in this area has consistently shown a relationship between the agentic dimension and success in life. For example, individuals who are assigned a position of power (high social status) were found to be more focused on agentic characteristics than on communal qualities (Cislak 2013). In the same way, individuals presented as high-income earners are perceived as having more abilities and more intelligence (agentic traits) than those presented as low-income earners; no such relation was found for communal traits (Oldmeadow and Fiske 2007).

Following on from these findings, recent research suggests that the agentic dimension actually encompasses two different components, one instrumental and the other motivational, which represent two distinct ways of being successful (Abele, Cuddy, Judd and Yzerbyt 2008; Carrier, Louvet, Chauvin and Rohmer 2014; Mollaret and Miraucourt 2016). The instrumental component reflects skills and efficiency and encompasses a set of traits like intelligent or capable; in short, it is linked to the field of competence. The motivational component is related to the pursuit of goals and can be illustrated by traits like ambition or perseverance. Previous research focused on achievement goals (defined as motivational processes affecting learning) showed that two kinds of goals should be distinguished: one can be motivated to perform a task as well as possible or motivated to do better than others (Dweck 1986). In the first case, the motivation is task-oriented: it is crucial for individuals to make an effort to get there, and they are highly engaged to give of their best even if they don’t like what they are doing (Rohmer and Louvet 2013; Schwinger et al. 2009; Smeding et al. 2015). In the second case, the motivation is self-oriented: the goal is to take advantage of opportunities in order to increase personal success and leadership (Carrier et al. 2014; Smeding et al. 2015). In line with previous work, we propose to name Competence the instrumental component, Effort the task-oriented motivational component, and Assertiveness the self-oriented motivational component. Several recent findings provide evidence for the relevance of distinguishing the three components. Perrin and Testé (2010) reported that individuals described as making effort were evaluated more positively than those described as competent with regard to future professional success. Carrier et al. (2014) demonstrated that people presented as competitive, as leaders, or as self-confident (assertive people) were perceived as having more ability to access prestigious positions than people presented as competent or efficient (competent people). Cohen-Laloum et al. (2017) found that individuals who described themselves as assertive had the highest financial resources in real life; at the same time, effort was not a predictor of actual financial resources. All in all, these results suggest that the three components represent three distinct meanings of the agentic dimension related to distinct valued situations.

2.2 Social judgment for children

Social judgment has traditionally been studied in adult populations and generally focused on the relation between the components of judgment and social or economic success. However, recent work has yielded some insight into evaluative judgments of children, and suggested some discrepancies between adults and children. Whereas communal qualities are not related to achievement in adult populations (Cislak 2013; Fiske et al. 2002; Oldmeadow and Fiske 2007), for children, by contrast, Communion (as characterized by traits like kind or nice) is judged to be a noticeable part of academic achievement (Blackwell et al. 2007; Spinath et al. 2014). With regard to the agentic dimension (at a global level), results are congruent with those obtained with adults. Generally speaking, agentic characteristics are judged relevant for achievement (Smeding et al. 2015). In a longitudinal study, some authors have even highlighted that students who valued agentic qualities showed the best academic performance (Dompnier et al. 2009). However, at a more specific level, the pattern of relationships between Assertiveness, Competence and Effort (on the one hand) and achievement (on the other hand) appears to be inverted in the school context, mainly during primary and secondary school: being competitive, self-assured, or the leader (assertive child) is often considered as a potential cause of problems in performing at school. These characteristics are perceived by teachers as an impediment to being positively evaluated (Heyder and Kessels 2013; Ommundsen et al. 2005; Verniers et al. 2016). Conversely, intelligence and effort, considered as two kinds of abilities (innate and fixed for the first one; nurtured and malleable for the second one), are consistently judged as key factors to achieve high academic performances (Blackwell et al. 2007; Spinath et al. 2014).

3 The current study

Altogether, these findings provide some valuable insight into how social judgment for children works. However, all these subjective judgments are made by adults about children. Therefore, in our opinion, the main issue is still missing: how does social judgment work from the child’s perspective? In other words, when children make a social judgment about themselves, to what extent do they differentiate the dimensions of social judgment, especially the components of the agentic dimension?

The purpose of the present research was to address this key issue directly. We investigated the relevance of considering distinct conceptual components of the agentic dimension (i.e. Assertiveness, Competence and Effort) and Communion (i.e. Agreeability) as appropriate dimensions in the judgments that pupils form about themselves, and emphasize at school. Gaining some insight into how pupils elaborate self-judgments across a full set of relevant dimensions can have important implications for education practice. For that purpose, we aimed to develop a self-report measure of social judgment at school, as assessed by four distinct components (Assertiveness, Competence, Effort and Agreeability). We propose to name this tool the School Social Judgment Scale (SSJS). Our approach first involved exploring the dimensionality of the SSJS (exploratory phase). Then, we planned to test its factorial validity, construct validity, as well as reliability; this also involved testing the multigroup invariance of the SSJS, specifically multigroup invariance across gender (confirmatory phase). Generally speaking, multigroup invariance is an important issue in the psychometric development of an assessment tool (Byrne 2012). Moreover, multigroup invariance is a logical requirement in the context of valid cross-group comparisons (Sass 2011). As educational research often focuses on score comparison across different groups, especially boys and girls (e.g. Heyder and Kessels 2013; Spinath et al. 2014), testing for invariance of the SSJS across gender is of prime importance.

4 Methods

4.1 Participants and procedure

A total of 660 pupils from elementary or middle schools in the East of France, in grades 1 to 9 (aged 6 to 16 years), participated in this study on a voluntary basis. All of them studied in regular education classes. A sample of 250 children and adolescents (122 boys and 128 girls; M = 9.98; SD = 2.64) was first recruited to complete the exploratory phase. An independent sample of 410 children and adolescents who were similar to the first sample regarding their gender and age (198 boys and 212 girls; M = 10.21; SD = 2.17) was then recruited to complete the confirmatory phase.

Prior to data collection, an information letter about our research program was sent to the parents a few weeks before the research began. In this letter, it was stressed that participation was anonymous and the data were confidential. Parents were asked to provide informed consent for their child’s participation in the study. Pupils then completed a paper and pencil questionnaire about their usual behavior at school. The language used in the survey, as well as the native language of all the participants, was French.

4.2 Instrument

In our scale development process, we adopted a systematic approach to design a self-report measure able to capture the most relevant aspects of social judgment for children in a brief and pragmatic manner. As a first step, we considered both (a) the conceptual definitions of the two fundamental dimensions (Communion and Agency) used in the literature (Abele and Wojciszke 2007, 2013; see above for details) and (b) the most commonly used traits for operationalizing them in the literature (see Carrier et al. 2014 or Cohen-Laloum et al. 2017). Based on these data, we established an initial list of pilot items consisting of 24 traits known to best capture Agreeability (e.g. warm, friendly, likeable), Assertiveness (e.g. ambitious, dominant, leader), Competence (e.g. competent, capable, efficient), and Effort (e.g. hard-working, courageous, persevering). As a second step, during the item writing process, each trait was converted into a socio-academic behavior representing a familiar situation at school. As a third step, the 24-item content was refined in an iterative process that involved review and commentary by teachers and by experts in the field of social judgment. Teachers were asked to assess to what extent each behavior is both common in the school context and understandable by children. Whenever this was not the case, teachers were asked to reformulate the item. In order to maximally reduce the length of the final scale to make it suitable for young children, experts were asked to detect and rule out all the redundant items which did not provide additional information compared to others. They were also asked to rate each behavior on the basis of common definitions related to Agreeability, Assertiveness, Competence, and Effort. These definitions were all presented with the stem “In this category you will sort out behaviors which correspond to a pupil who…” followed by the definitions. 
We computed the number of times each behavior had been classified in each category, and we selected the three behaviors that obtained the highest level of agreement. Only three items per target construct were retained in order to reduce test burden for young children. The 12 resulting items can be viewed in the “Appendix”. Each behavior was rated on a 4-point Likert-type scale ranging from 1 (strongly disagree) to 4 (strongly agree).

4.3 Data analysis

All the analyses were performed using Mplus (version 7.3, Muthén and Muthén 1998–2012). Initial inspection of the data revealed that 0.30% of the item scores were missing (for a total of 22 missing values), resulting in a minimum coverage of .988 for any item. Tests for normality revealed a slight, but not excessive, departure from normality (one item exhibited a skewness value of 2.2 and two items had kurtosis values of 2.0 and 4.2, respectively). In such a case, when variables have four (or more) categories, Bentler and Chou (1987) argued that it may be more appropriate to correct the test statistic rather than use a different mode of estimation. Accordingly, as recommended by Brown (2006), we used for all analyses the T2* χ2 test statistic of Yuan and Bentler (2000) from a robust Maximum Likelihood estimator (abbreviated MLR in Mplus), which provides test statistics that are robust to non-normal and missing data (Muthén and Muthén 1998–2012).

To determine the number and nature of factors that account for the covariation among the 12 items, an Exploratory Factor Analysis (EFA) using MLR as the extraction method and GEOMIN as the oblique rotation method (allowing factors to be correlated) was first conducted in the first sample. EFA was used because it is the preferred method in the early stages of scale development, in combination with CFA used in later phases (Brown 2006). In addition to Parallel Analysis (PA), factor selection was guided by goodness-of-fit indices and Chi square difference tests. Regarding goodness-of-fit indices, we used the MLR Chi square statistic (MLR χ2). However, because this statistic is affected by sample size (Brown 2006), alternative fit indices (and cut-off values) recommended by Hu and Bentler (1999) were used: the comparative fit index (CFI) and the Tucker–Lewis fit Index (TLI) (both good when ≥ .95), the Root Mean Square Error of Approximation (RMSEA) (close fit ≤ .06), and the Standardized Root Mean Square Residual (SRMR) (close fit ≤ .08). Regarding Chi square difference testing, a scaled difference in MLR χ2s test (named TRd) was conducted because the difference between two MLR χ2 values for nested models is not distributed as χ2 (Muthén and Muthén 1998–2012). If the difference test is non-significant, the fit of the two tested models is considered as equivalent.
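The scaled difference test mentioned above can be sketched as follows. This is a minimal illustration, assuming that TRd follows the usual scaled-difference formula documented for robust estimators such as MLR: cd = (d0·c0 − d1·c1)/(d0 − d1) and TRd = (T0·c0 − T1·c1)/cd, where T, c and d are the scaled χ2 value, the scaling correction factor and the degrees of freedom of the more constrained (0) and less constrained (1) models. The function name and the numeric inputs are hypothetical; the scaling correction factors of the present models are not reported in the text.

```python
def scaled_chi2_difference(t0, c0, d0, t1, c1, d1):
    """Scaled chi-square difference between two nested models.

    t0, c0, d0: scaled chi-square, scaling correction factor and df of the
                more constrained (nested) model.
    t1, c1, d1: same quantities for the less constrained (comparison) model.
    Returns (TRd, delta_df). All argument names are illustrative.
    """
    if d0 <= d1:
        raise ValueError("the nested model must have more degrees of freedom")
    # Scaling correction for the difference test
    cd = (d0 * c0 - d1 * c1) / (d0 - d1)
    if cd <= 0:
        raise ValueError("non-positive difference scaling correction")
    # Multiplying a scaled chi-square by its correction recovers the
    # uncorrected ML chi-square; the difference is then rescaled by cd.
    trd = (t0 * c0 - t1 * c1) / cd
    return trd, d0 - d1


# Hypothetical inputs, for illustration only (not values from this study)
trd, delta_df = scaled_chi2_difference(50.0, 1.2, 33, 20.0, 1.1, 24)
print(round(trd, 3), delta_df)
```

When both correction factors equal 1, the statistic reduces to the ordinary difference between the two χ2 values, which is a convenient sanity check. The resulting TRd is referred to a χ2 distribution with Δdf degrees of freedom.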

To examine the validity of the model highlighted through the EFA and confirm its meaningfulness in an independent sample (cross-validation), a CFA was computed in a second sample. For this analysis, latent factors were defined by their respective items (as found in the EFA) and correlations between latent factors were permitted. Goodness-of-fit indices and tests for Chi square differences were the same as those previously used for the EFA. To test for multigroup invariance across gender, we used the stepwise analysis outlined by Brown (2006): prior to conducting the multigroup CFAs, we ensured that our posited model was acceptable in both groups; subsequently, multigroup invariance was tested with the least restricted solution, i.e. equal factor structure across groups (or configural invariance), evaluated first. Nested models were then used to test subsequent models that both maintained previous equality constraints and added more restrictive constraints across groups in the following order: equal factor loadings (or weak factorial invariance), equal item intercepts (or strong factorial invariance), equal item residual variances (or strict factorial invariance), equal factor variances (or variance invariance), equal factor covariances (or covariance invariance), and equal latent factor means (or mean invariance). A non-significant Chi square difference has traditionally been used to demonstrate evidence of invariance between groups (Byrne 2012). However, basing invariance testing solely on a Chi square difference test may be too stringent because this test is very sensitive to sample size and model complexity (Sass 2011). For these reasons, we assessed invariance not only with the scaled difference in MLR χ2s test (the same as for EFA: TRd) but also with alternative fit criteria (RMSEA value and decrement in CFI) first proposed by Cheung and Rensvold (2002) (see Table 2 for details).
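The stepwise logic described above can be sketched as a small decision helper. This is an illustration only: the text does not state how the two criteria are combined when they disagree, so the three-way rule below (supported, not supported, mixed evidence) is an assumption, and the names `Step`, `judge` and `LADDER` as well as the example numbers are hypothetical. Cut-off values are passed in per step because those listed in Table 2 are not reproduced in the text.

```python
from typing import NamedTuple

# Constraints added in turn after the configural baseline, in the stated order
LADDER = [
    "equal factor loadings (weak)",
    "equal item intercepts (strong)",
    "equal item residual variances (strict)",
    "equal factor variances",
    "equal factor covariances",
    "equal latent factor means",
]


class Step(NamedTuple):
    name: str
    trd_p: float       # p value of the scaled difference test (TRd)
    cfi_drop: float    # decrement in CFI relative to the previous model
    cfi_cutoff: float  # cut-off for this step (supplied by the analyst)


def judge(step: Step, alpha: float = 0.05) -> str:
    """Combine both criteria; the combination rule is an assumption."""
    chi2_ok = step.trd_p >= alpha                # non-significant TRd
    cfi_ok = step.cfi_drop < step.cfi_cutoff     # CFI decrement below cut-off
    if chi2_ok and cfi_ok:
        return "supported"
    if not chi2_ok and not cfi_ok:
        return "not supported"
    return "mixed evidence"


# Hypothetical inputs, for illustration only (not the values of Table 2)
examples = [
    Step(LADDER[1], 0.21, 0.002, 0.010),
    Step(LADDER[5], 0.003, 0.011, 0.006),
]
for s in examples:
    print(f"{s.name}: {judge(s)}")
```

Keeping the "mixed evidence" outcome separate makes explicit the cases where the analyst has to decide which criterion to weight more heavily.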

5 Results

5.1 Dimensionality of the SSJS

EFA with oblique rotation was performed on 12 items for our first sample. Based on a scree plot of the eigenvalues obtained from this sample data against the 95th percentile of eigenvalues produced by 50 sets of completely random data, PA suggested four factors. Fit indices indicated an excellent fit of the four-factor SSJS model to the data (MLR χ2 (df) = 15.824(24), ns; CFI = 1.000; TLI = 1.065; RMSEA = .000; SRMR = .019). Comparing the fit between consecutive models revealed that the four-factor model fitted the data significantly better than the (lower-level) three-factor model (TRd = scaled ∆MLRχ2 = 45.717, ∆df = 9, p < .001), and that the three-factor model fitted the data significantly better than the (lower-level) two-factor model (TRd = scaled ∆MLRχ2 = 63.651, ∆df = 10, p < .001). Table 1 presents the four-factor SSJS model. All primary factor loadings but one (equal to .39) were above .40 (recommended cut-off; Velicer et al. 1982) and all of them were significant at p < .05. No secondary loading exceeded .20. Correlations revealed that Agreeability was moderately related to Competence and Effort, but unrelated to Assertiveness. Assertiveness did not correlate with other components, while Competence was moderately related to Effort.
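The degrees of freedom reported above can be checked with the standard count for an exploratory factor model with p items and m factors, df = ((p − m)² − (p + m)) / 2. The short sketch below (the function name is illustrative) reproduces the df of the four-factor solution and the Δdf values of the two model comparisons.

```python
def efa_df(n_items: int, n_factors: int) -> int:
    """Degrees of freedom of an exploratory factor model.

    Uses the standard count df = ((p - m)^2 - (p + m)) / 2,
    where p is the number of items and m the number of factors.
    """
    p, m = n_items, n_factors
    return ((p - m) ** 2 - (p + m)) // 2


df4 = efa_df(12, 4)  # four-factor model
df3 = efa_df(12, 3)  # three-factor model
df2 = efa_df(12, 2)  # two-factor model

print(df4)        # df of the four-factor solution
print(df3 - df4)  # delta df, three- vs four-factor model
print(df2 - df3)  # delta df, two- vs three-factor model
```

The three printed values are 24, 9 and 10, which agree with the df and ∆df reported for the EFA.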

5.2 Validity and reliability of the SSJS

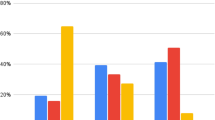

From the second sample ratings of 12 items, a CFA was computed to examine the validity of the four-factor SSJS model. Fit indices indicated an excellent model fit (MLR χ2 (df) = 55.340(48), ns; CFI = .986; TLI = .980; RMSEA = .019; SRMR = .039). Figure 1 represents the SSJS model. As in the EFA, all standardized factor loadings were higher than .40 (except for one equal to .39) and significant at p < .05. Correlations between the four latent factors were very similar in magnitude to those previously observed among the four factors resulting from EFA. Reliability as estimated by the rho coefficient was good for Effort (ρ = .71), acceptable for Competence (ρ = .63), but questionable for Assertiveness (ρ = .59), and Agreeability (ρ = .54).
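As a quick check, the cut-off values adopted in the data analysis section (CFI and TLI ≥ .95, RMSEA ≤ .06, SRMR ≤ .08) can be applied mechanically to the indices reported for the EFA and the CFA. The helper below is a minimal sketch; the names `CUTOFFS` and `check_fit` are illustrative and not part of any statistical package.

```python
import operator

# Cut-offs as stated in the data analysis section (Hu and Bentler 1999)
CUTOFFS = {
    "CFI": (operator.ge, 0.95),
    "TLI": (operator.ge, 0.95),
    "RMSEA": (operator.le, 0.06),
    "SRMR": (operator.le, 0.08),
}


def check_fit(indices):
    """Return, for each index, whether it meets its cut-off."""
    return {
        name: CUTOFFS[name][0](value, CUTOFFS[name][1])
        for name, value in indices.items()
    }


# Values reported in the text for the four-factor model
efa = {"CFI": 1.000, "TLI": 1.065, "RMSEA": 0.000, "SRMR": 0.019}
cfa = {"CFI": 0.986, "TLI": 0.980, "RMSEA": 0.019, "SRMR": 0.039}

for label, fit in (("EFA", efa), ("CFA", cfa)):
    print(label, check_fit(fit))
```

Both sets of indices satisfy all four criteria, consistent with the description of the fit as excellent.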

Fig. 1 Four-factor model for the 12-item SSJS. The values above one-headed arrows are standardized factor loadings. The values at double-headed arrows are correlations. The numbers in brackets above the circles are rho coefficients of reliability (rho = ratio of a scale’s estimated true score variance to the scale’s estimated total score variance; Yang and Green 2011).
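The rho coefficient defined in the caption can be illustrated with a common computation of composite reliability from standardized loadings. The sketch assumes a congeneric model with uncorrelated residuals, so that each residual variance equals 1 − λ² and rho = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The exact computation used in the study may differ, and the loadings below are hypothetical, since item-level loadings are given only in the figure.

```python
def composite_rho(loadings):
    """Composite reliability from standardized factor loadings.

    Assumes uncorrelated residuals, so each residual variance is
    1 - loading**2 (an assumption of this sketch).
    """
    true_var = sum(loadings) ** 2                      # true score variance
    error_var = sum(1.0 - lam ** 2 for lam in loadings)  # residual variance
    return true_var / (true_var + error_var)


# Hypothetical standardized loadings for a three-item subscale
example_loadings = [0.70, 0.65, 0.60]
print(round(composite_rho(example_loadings), 3))
```

With only three items per subscale, even loadings in the .60 to .70 range give a modest rho, which helps explain why short subscales rarely reach high reliability.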

5.3 Multigroup invariance across gender of the SSJS

A preliminary CFA conducted separately for each group indicated an excellent fit for boys (CFI = .998; TLI = .997; RMSEA = .008; SRMR = .051) and girls (CFI = .966; TLI = .953; RMSEA = .029; SRMR = .053). With this prerequisite met, we tested for multigroup invariance across gender. Table 2 shows the goodness-of-fit statistics for each model, the scaled difference in MLR χ2 test (TRd), and the CFI decrease (with cut-off values). Results provided evidence for configural invariance (excellent model fit indices and RMSEA value < .093 cut-off) as well as for all subsequent invariance models (excellent model fit indices, non-significant TRd, and decrement in CFI less than cut-off values), except for two models. There was only partial weak factorial invariance (due to a single non-invariant factor loading across groups, which did not prevent the invariance evaluation from continuing; Byrne 2012) and no factor mean invariance (significant TRd and decrease in CFI more than the .006 cut-off value). One latent mean differed between boys and girls. Whereas the means of Agreeability, Competence, and Effort were equivalent between boys and girls, the mean of Assertiveness differed across groups. Specifically, girls had lower scores than boys for this dimension (M = − .39, critical ratio = − 2.84, p < .01).
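The reported latent mean difference can be unpacked with a short calculation. The sketch assumes that the critical ratio is the estimate divided by its standard error and is referred to a standard normal distribution, which is the usual reading of this statistic. The standard error is therefore implied by the two reported values rather than taken from the text.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


estimate = -0.39        # latent mean difference for Assertiveness (reported)
critical_ratio = -2.84  # estimate / standard error (reported)

implied_se = estimate / critical_ratio  # implied, not reported in the text
p_value = two_sided_p(critical_ratio)

print(round(implied_se, 3))
print(round(p_value, 4))
```

The implied standard error is about .14 and the two-sided p value is about .0045, consistent with the reported p < .01.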

6 Discussion

The aim of the present study was to develop a self-report measure of social judgment for children at school, namely the School Social Judgment Scale (SSJS).

Designing this scale, we intended to explore the latent structure of social judgment in children, a rarely investigated population in this area. To be suitable for young children, SSJS needed to be brief, clear and pragmatic; accordingly, in the SSJS, each of the four components of social judgment was operationalized through (only) three socio-academic behaviors which were situations typically encountered at school. We also intended to have an instrument encompassing dimensions which are both relevant within the context of social judgment (as evidenced on adult populations, see Cohen-Laloum et al. 2017, for an example) and strongly related to subjective qualities valued in school (e.g. Verniers et al. 2016). In line with recent literature on social judgment, SSJS needed to distinguish four fundamental components of social judgment—Agreeability, Assertiveness, Competence, and Effort—which are consensually considered as relevant individual differences within an educational context (Dompnier et al. 2007; Pansu and Dubois 2013; Qureshi et al. 2016; Verniers et al. 2016). Taken together, these two points are real assets of the SSJS because they make it the first assessment tool of subjective scholastic judgments to deal successfully with these challenging requirements.

In the first stage of the scale development, we explored the dimensionality of the SSJS through an EFA. Results (1) clarified the optimum number and nature of latent factors for the SSJS, and (2) provided support for the relevance of distinguishing various components within the social judgment for children: the best solution to structure social judgments made by children about themselves was a four-factor model consisting of Assertiveness, Competence, Effort and Agreeability. Compared with lower-level models, the four-factor model provided the best fit to the data. In other words, to capture the specific dimensions underlying the social judgment that children form about themselves, a four-factor solution as highlighted by the SSJS seems more appropriate than any other solution, in particular a two-factor solution including only the communal and the agentic dimensions (without any distinction between the components of the latter). These results match those obtained with adult participants (Cohen-Laloum et al. 2017).

In the next phase, we carried out a CFA in order to cross-validate the four-factor structure of the SSJS. Results showed (1) an excellent fit of the four-factor model to the data, (2) a pattern of primary loadings which were adequately high—both of them providing evidence of factorial validity of the four-factor solution of the SSJS; (3) a set of low to medium-sized correlations between scales which represents a valuable clue for construct validity of the SSJS. In addition, the values of the rho coefficient provided mixed support for the reliability of the SSJS subscales. As a whole, this set of results supports the four-factor structure of the SSJS.

Nevertheless, some findings deserve specific attention. First, the reliability of two scales (Agreeability and Assertiveness) was somewhat questionable. However, given the breadth and brevity of the scales (only three items per scale), their reliability was not expected to be extremely high, as with most short-form scales. In addition, such a level of reliability is not uncommon for a self-report measure in the initial step of its development (Nunnally and Bernstein 1994). Finally, when reliability is measured using ρ rather than α (as in the present study), values over .50 have already been considered as acceptable (Raines-Eudy 2000). Future studies will have to examine to what extent the standard cut-off values for Cronbach’s alpha coefficient also apply to the rho coefficient. Altogether, given the questionable level of reliability for the Agreeability and Assertiveness subscales, future studies using the SSJS may benefit from increasing the number of items on these two scales from three to four; for example, “you like to assist other children with their homework” and “you like to be the first one” might be good candidates for depicting Agreeability and Assertiveness, respectively. This may serve the dual function of achieving high levels of reliability (Nunnally and Bernstein 1994) without unduly increasing the workload of young children. Otherwise, given that such reliability values result in attenuated correlations between predictors and criteria, researchers should be cautious regarding the predictive validity of these SSJS subscales.
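Two classical formulas give a rough sense of the magnitudes involved in this argument. The sketch below is not part of the study's analyses: it applies the Spearman-Brown prophecy formula to project the reliability of a four-item subscale from the reported three-item values, assuming that the added item behaves like the existing ones, and the attenuation formula to show how an observed correlation shrinks with unreliable measures. The "true" correlation of .50 is hypothetical.

```python
import math


def spearman_brown(reliability: float, length_factor: float) -> float:
    """Projected reliability when test length is multiplied by length_factor."""
    k = length_factor
    return k * reliability / (1.0 + (k - 1.0) * reliability)


def attenuated_r(true_r: float, rel_x: float, rel_y: float = 1.0) -> float:
    """Observed correlation implied by a true correlation and two reliabilities."""
    return true_r * math.sqrt(rel_x * rel_y)


# Reported rho values for the two weaker subscales
reported = {"Agreeability": 0.54, "Assertiveness": 0.59}

for scale, rho in reported.items():
    projected = spearman_brown(rho, 4 / 3)  # three items -> four items
    # Hypothetical true correlation of .50 with a perfectly reliable criterion
    observed = attenuated_r(0.50, rho)
    print(scale, round(projected, 2), round(observed, 2))
```

Under these assumptions, a fourth item would raise rho to roughly .61 for Agreeability and .66 for Assertiveness, and a true correlation of .50 would be observed as roughly .37 and .38 respectively. These projections are only indicative, because the Spearman-Brown formula assumes parallel items.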

Second, the low to moderate level of cross-scale correlations is a good point for the construct validity of the SSJS because it provides further empirical support for considering Assertiveness, Competence, and Effort as three distinct components of the agentic dimension (as suggested by Cohen-Laloum et al. 2017). As a matter of fact, Assertiveness was not correlated with Competence or Effort, while the two latter shared a moderate and positive relation. This pattern of relationship probably reflects what each of these components actually refers to (at school and elsewhere). On the one hand, both Effort (being courageous and assiduous) and Competence (being intelligent and capable) (a) are associated with task-oriented behaviors which are expected and rewarded at school (Dompnier and Pansu 2010; Verniers et al. 2016), and (b) represent two sets of intellectual abilities (one is nurtured, the other is innate; see Dweck 1999; Ommundsen et al. 2005). On the other hand, Assertiveness is self-oriented and refers to the intent to satisfy personal interests, which is perceived as a potential cause of problems at school (Dweck 1986; Heyder and Kessels 2013). Regarding correlations between Agreeability and the three other components, a mixed pattern emerged: (a) as consistently shown in previous research (e.g. Paulhus and Trapnell 2008), Agreeability was not related to Assertiveness because they emphasize two different fundamental aspects of human existence. Assertiveness characteristics involve the motivation to go ahead, whereas agreeability traits involve the will to go along (Hogan 1982); (b) at the same time, Agreeability was moderately and positively associated with Competence and Effort. Both points—(a) and (b)—can be interpreted within the context of Piche and Plante’s (1991) values applied to the educational context. 
School promotes self-transcendence values closely related to Agreeability, Competence and Effort—namely cooperation, rigorousness, or hardworking, but not self-enhancement values as represented by Assertiveness—like domination, competition, or ambition (Heyder and Kessels 2013; Verniers et al. 2016).

The last phase was to assess multigroup invariance of the SSJS across gender. Up to the test for factor mean invariance, multigroup CFA revealed no significant between-group differences in any parameter (except for one factor loading). However, the results indicated that the factor means were not invariant across groups. In other words, a significant gender effect was found for one factor mean: girls scored significantly lower than boys on Assertiveness. This finding is in line with studies showing that boys are more prone than girls to promote themselves and to be self-confident in a variety of contexts, including school (Abele and Wojciszke 2013; Halim et al. 2016; Spinath et al. 2014). This could be interpreted as the result of gender differences in socialization (Heyder and Kessels 2013). Boys are socialized to display more self-enhancement than girls, leading them to be more sensitive to assertiveness-related values in a self-evaluation process.

This study has some limitations. First, the present study used a French sample of children to develop the SSJS, which limits the generalizability of the results to other populations, such as English-speaking pupils. Widespread use of the SSJS requires future research in various languages in order to replicate our findings and test for the measurement invariance of the SSJS across countries (Ziegler and Bensch 2013). Second, reliability of the SSJS should be improved. As previously discussed, this could be achieved by increasing the number of items on the two less reliable subscales of the SSJS (Agreeability and Assertiveness). Further aspects of validity also need to be examined before professionals can confidently use the SSJS as a self-report measure of social judgment and, further, as an assessment tool for better understanding academic achievement. In particular, future studies should examine the construct validity of the SSJS more thoroughly. This could be done through Multitrait-multimethod (MTMM) analyses as a method for assessing the convergent and discriminant validity of the SSJS, especially in relation to (1) other ways to assess the dimensions of social judgment (see Rohmer and Louvet 2013, for example) and (2) teacher and/or parent report formats of the SSJS for characterizing children. In addition, criterion validity should be investigated to provide evidence for the predictive power of the SSJS regarding criteria related to academic achievement. Future studies should investigate to what extent Agreeability, Assertiveness, Competence and Effort (as measured by the SSJS) are related to success at school and, if so, how strong and distinctive their relations with academic achievement are. This step is fundamental because it has been demonstrated by Schwinger et al. (2009) that intelligence as well as self-reported perception of effort were equally strong predictors of academic achievement as operationalized by report cards.
However, in this study, intelligence was measured by objective measures, but self-perceived competence was not assessed, and self-evaluations of assertiveness as well as agreeability were not included. All these elements serve as the next logical step in the psychometric evaluation of the SSJS. A third limitation concerns the sample size. Although it was adequate for performing our analyses, future studies could be more extensive by including larger samples, therefore allowing for additional investigations such as multigroup invariance and test for mean differences across age. Such analyses were beyond the scope of the present study but would be of primary interest in the educational context.
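The suggestion to lengthen the less reliable subscales can be made concrete with the Spearman-Brown prophecy formula, which predicts the reliability of a scale once it is lengthened with comparable items. The sketch below is only illustrative: the formula is a standard psychometric result but was not used in this study, the function names are hypothetical, and the example values (a reliability of .60 for a three-item subscale) are assumptions chosen for illustration, not results reported here.

```python
import math


def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability when a scale is lengthened by `length_factor`.

    `length_factor` is new length / current length (2.0 means twice as many items).
    """
    if not 0.0 < reliability < 1.0:
        raise ValueError("reliability must lie strictly between 0 and 1")
    if length_factor <= 0.0:
        raise ValueError("length_factor must be positive")
    return (length_factor * reliability) / (1.0 + (length_factor - 1.0) * reliability)


def items_needed(current_reliability: float, target_reliability: float,
                 current_items: int) -> int:
    """Smallest number of comparable items predicted to reach the target reliability."""
    # Spearman-Brown formula solved for the length factor.
    factor = (target_reliability * (1.0 - current_reliability)) / (
        current_reliability * (1.0 - target_reliability)
    )
    return math.ceil(factor * current_items)


# Hypothetical values, NOT taken from the study: a 3-item subscale with reliability .60.
doubled = spearman_brown(0.60, 2.0)      # predicted reliability with 6 items
required = items_needed(0.60, 0.70, 3)   # items needed to reach .70
print(round(doubled, 2), required)
```

Under these assumed values, doubling a three-item subscale would raise the predicted reliability from .60 to .75, and five comparable items would be needed to reach .70. The formula assumes that the added items are parallel to the existing ones, a condition that real items rarely satisfy exactly.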

7 Conclusion

The results of the present study support the SSJS as a brief and psychometrically sound four-dimensional measure of social judgment. The SSJS offers attractive features for school teachers, psychologists or researchers looking for an instrument able to identify the specific components through which children form a social judgment about themselves. Moreover, the SSJS provides the opportunity to test whether these components are the same for young people with or without disability, for instance, or for pupils and college students. In line with this, recent research has suggested that the relationship between achievement and subjective feelings of competence or motivation to boost efforts may differ for students with dyslexia and average readers (Soriano-Ferrer and Morte-Soriano 2017). Further, Cvencek et al. (2017) found less positive self-judgments and lower performance among U.S. pupils belonging to ethnic minorities (as compared to pupils from the White majority), especially in older samples (8–10-year-old children). Insofar as these components seem to be involved in academic achievement versus school failure, beyond other features usually considered in school (such as standardized intelligence quotient tests or level of education), the SSJS is a promising instrument that could help such professionals develop suitable educational support for children who underperform at school. For example, it would be useful to know how pupils evaluate themselves on effort. This knowledge could allow professionals to help pupils take this dimension on board, since effort is both more malleable than intelligence and related to positive attitudes towards school that promote greater investment in learning (Corbières et al. 2006; Scholtens et al. 2013; Wouters et al. 2011).

Moreover, as already pointed out, pupils' self-evaluations are closely related to how teachers subjectively evaluate them. Social support from teachers would thus appear to be an important factor in enhancing the judgments children make about themselves (Hascoët et al. 2017). By providing specific information on the features to promote, the SSJS could help to point this fundamental support in the right direction. All in all, giving greater weight to self-judgments at school, rather than emphasizing only objective indicators of school achievement (academic performance), could help support children throughout their schooling.

References

Abele, A. E., Cuddy, A. J., Judd, C. M., & Yzerbyt, V. Y. (2008). Fundamental dimension of social judgment. European Journal of Social Psychology, 38, 1063–1065.

Abele, A. E., & Wojciszke, B. (2007). Agency and communion from the perspective of self versus others. Journal of Personality and Social Psychology, 93, 751–753.

Abele, A. E., & Wojciszke, B. (2013). The big two in social judgment and behavior. Social Psychology, 44, 61–63.

Bakadorova, O., & Raufelder, D. (2015). Perception of teachers and peers during adolescence: Does school self-concept matter? Results of a qualitative study. Learning and Individual Differences, 43, 218–225.

Bentler, P. M., & Chou, C.-P. (1987). Practical issues in structural modeling. Sociological Methods & Research, 16, 78–117.

Blackwell, L. S., Trzesniewski, K. H., & Dweck, C. S. (2007). Implicit theories of intelligence predict achievement across an adolescent transition: A longitudinal study and intervention. Child Development, 78, 246–263.

Bong, M., & Skaalvik, E. M. (2003). Academic self-concept and self-efficacy: How different are they really? Educational Psychology Review, 15, 1–40.

Brown, T. A. (2006). Confirmatory factor analysis for applied research. New York: The Guilford Press.

Byrne, B. M. (2012). Structural equation modeling with Mplus. New York: Psychology Press.

Carrier, A., Louvet, E., Chauvin, B., & Rohmer, O. (2014). The primacy of agency over competence in status perception. Social Psychology, 45, 347–356.

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling, 9, 233–255.

Cislak, A. (2013). Effects of power and social perception. All your boss can see is agency. Social Psychology, 44, 138–146.

Cohen-Laloum, J., Mollaret, P., & Darnon, C. (2017). Distinguishing the desire to learn from the desire to perform: The social value of achievement goals. The Journal of Social Psychology, 157, 30–46.

Corbières, M., Fraccaroli, F. F., Mbekou, V., & Perron, J. (2006). Academic self-concept and academic interest measurement: A multi-sample European study. European Journal of Psychology of Education, 21, 3–15.

Cvencek, D., Fryberg, S. A., Covarrubias, R., & Meltzoff, A. N. (2017). Self-concepts, self-esteem, and academic achievement of minority and majority North American elementary school children. Child Development. https://doi.org/10.1111/cdev.12802.

D’Amico, A., & Cardaci, M. (2003). Relations among self-efficacy, self-esteem, and school achievement. Psychological Reports, 92, 745–754.

Dompnier, B., & Pansu, P. (2010). Social value of causal explanations in educational settings: Pupils’ self-presentation and teachers’ representations [La valeur sociale des explications causales en contexte éducatif : auto-présentation des élèves et représentations des enseignants]. Swiss Journal of Psychology, 69, 37–49.

Dompnier, B., Darnon, C., & Butera, F. (2009). Faking the desire to learn: A clarification of the link between mastery goals and academic achievement. Psychological Science, 20, 939–943.

Dompnier, B., Pansu, P., & Bressoux, P. (2007). Social utility, social desirability and scholastic judgments: Toward a personological model of academic evaluation. European Journal of Psychology of Education, 3, 333–350.

Dweck, C. S. (1986). Motivational processes affecting learning. American Psychologist, 41, 1040–1048.

Dweck, C. S. (1999). Self-theories: Their role in motivation, personality, and development. Philadelphia: Psychology Press.

Fiske, S. (2015). Intergroup biases: A focus on stereotype content. Current Opinion in Behavioral Sciences, 3, 45–50.

Fiske, S. T., Cuddy, A. J., Glick, P., & Xu, J. (2002). A model of (often mixed) stereotype content: Competence and warmth respectively follow from perceived status and competition. Journal of Personality and Social Psychology, 82, 878–902.

Frost, J., & Ottem, M. (2016). The value of assessing pupils’ academic self-concept. Scandinavian Journal of Educational Research. https://doi.org/10.1080/00313831.2016.1212397.

Halim, M. L., Zosuls, K. M., Ruble, D. N., Tamis-LeMonda, C. S., Baeg, A. S., Walsh, A. S., et al. (2016). Children’s dynamic gender identities across development and the influence of cognition, context, and culture. In C. S. Tamis-LeMonda & L. Balter (Eds.), Child psychology: A handbook of contemporary issues (3rd ed., pp. 193–218). New York: Psychology Press/Taylor & Francis.

Hascoët, M., Pansu, P., Bouffard, T., & Leroy, N. (2017). The harmful aspect of teacher conditional support on student’s self-perception of school competence. European Journal of Psychology of Education. https://doi.org/10.1007/s10212-017-0350-0.

Heyder, A., & Kessels, U. (2013). Is school feminine? Implicit gender stereotyping of school as a predictor of academic achievement. Sex Roles, 69, 605–617.

Hogan, R. (1982). A socioanalytic theory of personality. In M. M. Page (Ed.), 1982 Nebraska symposium on motivation: Personality-current theory and research (pp. 55–89). Lincoln: University of Nebraska Press.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55.

Mollaret, P., & Miraucourt, D. (2016). Is job performance independent from career success? A conceptual distinction between competence and agency. Scandinavian Journal of Psychology, 57, 607–617.

Muthén, L. K., & Muthén, B. O. (1998–2012). Mplus user’s guide (7th ed.). Los Angeles, CA: Muthén & Muthén.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). New York: McGraw Hill.

Oldmeadow, J., & Fiske, S. T. (2007). System justifying ideologies moderate status = competence stereotypes: Roles for belief in a just world and social dominance orientation. European Journal of Social Psychology, 37, 1135–1148.

Ommundsen, Y., Haugen, R., & Lund, T. (2005). Academic Self-concept, implicit theories of ability, and self-regulation strategies. Scandinavian Journal of Educational Research, 49, 461–474.

Pajares, F., & Schunk, D. H. (2001). Self-beliefs and school success: Self-efficacy, self-concept, and school achievement. In R. Riding & S. Rayner (Eds.), Self-perception (pp. 239–266). London: Ablex Publishing.

Pansu, P., & Dubois, N. (2013). The social origin of personality traits: An evaluative function. In E. F. Morris & M. A. Jackson (Eds.), Psychology of personality (pp. 1–23). New York: Nova Science Publishers.

Paulhus, D. L., & Trapnell, P. D. (2008). Self-presentation on personality scales: An agency-communion framework. In O. P. John, R. W. Robins, & L. A. Pervin (Eds.), Handbook of personality (pp. 493–517). New York: The Guilford Press.

Perrin, S., & Testé, B. (2010). Impact of the locus of causality and internal control on the social utility of causal explanations. Swiss Journal of Psychology, 69, 171–177.

Piche, C., & Plante, C. (1991). Perceived masculinity, femininity and androgyny among primary school boys: Relationships with the adaptation level of these students and the attitudes of the teachers towards them. European Journal of Psychology of Education, 6, 423–435.

Qureshi, A., Wall, H., Humphries, J., & Balani, A. B. (2016). Can personality traits modulate student engagement with learning and their attitude to employability? Learning and Individual Differences, 51, 349–358.

Raines-Eudy, R. (2000). Using structural equation modeling to test for differential reliability and validity: An empirical demonstration. Structural Equation Modeling, 7, 124–141.

Rohmer, O., & Louvet, E. (2013). Social utility and academic success of students with or without disability: The impact of competence and effort [Utilité sociale et réussite universitaire d’étudiants ayant ou non des incapacités motrices : rôles respectifs de la compétence et de l’effort]. Journal of Human Development, Disability, and Social Change, 21, 65–76.

Sass, D. A. (2011). Testing measurement invariance and comparing latent factor means within a Confirmatory Factor Analysis framework. Journal of Psychoeducational Assessment, 29, 347–363.

Scholtens, S., Rydell, A. M., & Wallentin, F. Y. (2013). ADHD symptoms, academic achievement, self-perception of academic competence and future orientation: A longitudinal study. Scandinavian Journal of Psychology, 3, 205–212.

Schwinger, M., Steinmayr, R., & Spinath, B. (2009). How do motivational regulation strategies affect achievement: Mediated by effort management and moderated by intelligence. Learning and Individual Differences, 19, 621–627.

Smeding, A., Dompnier, B., Meier, E., & Darnon, C. (2015). The motivation to learn as a self-presentation tool among Swiss high school students: The moderating role of mastery goals’ perceived social value on learning. Learning and Individual Differences, 43, 204–210.

Soriano-Ferrer, M., & Morte-Soriano, M. (2017). Teacher perceptions of reading motivation in children with developmental dyslexia and average readers. Procedia-Social and Behavioral Sciences, 237, 50–56.

Spinath, B., Eckert, C., & Steinmayr, R. (2014). Gender differences in school success: What are the roles of students’ intelligence, personality and motivation? Educational Research, 56, 230–243.

Velicer, W., Peacock, A., & Jackson, D. (1982). A comparison of component and factor patterns: A Monte Carlo approach. Multivariate Behavioral Research, 17, 371–388.

Verniers, C., Martinot, D., & Dompnier, B. (2016). The feminization of school hypothesis called into question among junior and high school students. British Journal of Educational Psychology, 86, 369–381.

Wouters, S., Germeijs, V., Colpin, H., & Verschueren, K. (2011). Academic self-concept in high school: Predictors and effects on adjustment in higher education. Scandinavian Journal of Psychology, 52, 586–594.

Yang, Y., & Green, S. B. (2011). Coefficient alpha: A reliability coefficient for the 21st century? Journal of Psychoeducational Assessment, 29, 377–392.

Yuan, K. H., & Bentler, P. M. (2000). Three likelihood-based methods for mean and covariance structure analysis with nonnormal missing data. In M. E. Sobel & M. P. Becker (Eds.), Sociological Methodology 2000 (pp. 165–200). Washington, DC: American Sociological Association.

Yzerbyt, V. (2016). Intergroup stereotyping. Current Opinion in Psychology, 11, 90–95.

Ziegler, M., & Bensch, D. (2013). Lost in translation: Thoughts regarding the translation of existing psychological measures into other languages. European Journal of Psychological Assessment, 29, 81–83.

Ethics declarations

Conflict of interest

The authors declared that they have no conflict of interest.

Appendix

1.1 The School Social Judgment Scale (SSJS) for children

1.1.1 Instructions and items

On the following pages, you will find a series of phrases about you. Please read each phrase and decide how much you agree or disagree with that phrase. Then indicate your response using the following scale:

1 = strongly disagree

2 = disagree

3 = agree

4 = strongly agree

Feel free to answer without cheating. Nobody will know about it.

1. In games, you always decide the rules
2. You do your schoolwork to the best of your ability
3. You like making your friends happy
4. The exercises that you have to do in class seem easy to you
5. You are usually the leader when you play with others
6. You understand quickly what you are asked to do in class
7. Whenever you have candy, you give some to your friends
8. You are often the leader of a group
9. You do everything you can to be a good pupil
10. You understand faster than other pupils in your class
11. You like doing favors to make people happy
12. You are working hard even though it is difficult
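
For readers who wish to score the 12 items above, the following sketch computes one mean score per subscale on the 1 to 4 response scale. The item-to-subscale key is an assumption inferred from the wording of the items (three items per dimension) and should be checked against the factor loadings reported in the article. Averaging the items, and the names `SUBSCALES` and `score_ssjs`, are likewise illustrative choices rather than the authors' documented procedure.

```python
from typing import Dict, Tuple

# ASSUMPTION: scoring key inferred from item wording, not stated in the appendix.
SUBSCALES: Dict[str, Tuple[int, ...]] = {
    "Assertiveness": (1, 5, 8),
    "Effort": (2, 9, 12),
    "Agreeability": (3, 7, 11),
    "Competence": (4, 6, 10),
}


def score_ssjs(responses: Dict[int, int]) -> Dict[str, float]:
    """Return the mean response per subscale for one pupil.

    `responses` maps item number (1-12) to an answer on the 1-4 scale
    (1 = strongly disagree, 4 = strongly agree).
    """
    expected_items = {item for items in SUBSCALES.values() for item in items}
    missing = expected_items - set(responses)
    if missing:
        raise ValueError(f"missing responses for items: {sorted(missing)}")
    for item in expected_items:
        if responses[item] not in (1, 2, 3, 4):
            raise ValueError(f"item {item}: response must be 1, 2, 3 or 4")
    # Mean of the items belonging to each subscale.
    return {
        name: sum(responses[item] for item in items) / len(items)
        for name, items in SUBSCALES.items()
    }


# Made-up answers for a single pupil, for illustration only.
example = {1: 4, 5: 4, 8: 4, 2: 3, 9: 3, 12: 3, 3: 2, 7: 2, 11: 2, 4: 1, 6: 1, 10: 1}
print(score_ssjs(example))
```

Mean scores keep each subscale on the original 1 to 4 metric, which makes subscales directly comparable; sum scores would rank pupils identically as long as every subscale has the same number of items.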

Cite this article

Chauvin, B., Demont, E. & Rohmer, O. Development and validation of the School Social Judgment Scale for children: Their judgment of the self to foster achievement at school. Soc Psychol Educ 21, 585–602 (2018). https://doi.org/10.1007/s11218-018-9430-5