Abstract

This paper deals with parametric inference for integrated continuous time signals embedded in additive Gaussian noise and observed at deterministic discrete instants which are not necessarily equidistant. The unknown parameter is multidimensional and composed of a signal-of-interest parameter and a variance parameter of the noise. We state the consistency and the minimax efficiency of the maximum likelihood estimator and of the Bayesian estimator when the time of observation tends to infinity and the delays between two consecutive observations tend to 0 or are only bounded. The class of signals under consideration contains, among others, almost periodic signals and also non-continuous periodic signals. However, the problem of frequency estimation is not considered here. Furthermore, in this paper the discretely observed signal-plus-noise model is considered as a particular case of a more general model of independent Gaussian observations forming a triangular array.

1 Introduction

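Consider the following integrated signal-plus-noise model

$$\begin{aligned} {\mathrm {d}}X_t=f(\varvec{\alpha },t)\,{\mathrm {d}}t+\sigma (\varvec{\beta },t)\,{\mathrm {d}}{\mathrm {W}}_t,\qquad t\ge 0, \end{aligned}\tag{1}$$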

where the functions \(f:\varvec{A}\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}\) and \(\sigma :\varvec{B}\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) are measurable, \(f(\varvec{\alpha },t)\), respectively \(\sigma (\varvec{\beta },t)\), is continuous in the first component \(\varvec{\alpha }\in \varvec{A}\), respectively in \(\varvec{\beta }\in \varvec{B}\); \(\varvec{A}\) is a bounded open convex subset of \({\mathbb {R}}^a\), \(\varvec{B}\) is a bounded open convex subset of \({\mathbb {R}}^b\), \(a,b\ge 0\), \(a+b>0\), and \(\{{\mathrm {W}}_t\}\) is a Wiener process defined over a probability space \((\varOmega ,{\mathcal {F}},{\mathrm {P}})\). We assume that the initial random variable \(X_0\) is independent of the Wiener process \(\{{\mathrm {W}}_t\}\) and that its distribution does not depend on the unknown parameter \(\varvec{\theta }:=(\varvec{\alpha },\varvec{\beta })\).

This model has long been the subject of considerable investigation. The statistical analysis of such signals has attracted much interest, with applications ranging from telecommunications and mechanics to econometrics and financial studies. For the continuous time observation framework, we cite the well-known work by Ibragimov and Has’minskii (1981) as well as the contributions by Kutoyants (1984), who studied the consistency and the minimax efficiency of the maximum likelihood estimator and the Bayesian estimator.

However, in practice it is difficult to record a continuous time process numerically, and the observations generally take place at discrete moments (Mishra and Prakasa Rao 2001). Most of the publications on discrete time observation concern regular sampling, that is, the instants of observation are equally spaced. Nevertheless, many applications make use of non-equidistant sampling. The sampled points can be associated with quantiles of some distribution (see e.g., in another context, Blanke and Vial 2014; see also Sacks and Ylvisaker 1968) or can be perturbed by some jitter effect (see e.g. Dehay et al. 2017).

In the present paper we study the asymptotic optimality of the maximum likelihood estimator and the Bayesian estimator of the unknown parameter \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\) built from a discrete time observation \(\{X_{t_0},\dots ,X_{t_n}\}\), \(0=t_0<t_1<\cdots <t_n=T_n\) of the process \(\{X_t\}\) as \(n\) and \(T_n\rightarrow \infty \), when the delays between two consecutive observations tend to 0 or are only bounded. The non-uniform sampling scheme is rarely taken into consideration in the usual literature on the inference of such an integrated signal-plus-noise model (1). For this scheme of observation we establish that the rate of convergence of the maximum likelihood estimator and the Bayesian estimator for the parameter \(\varvec{\alpha }\) of the signal-of-interest is \(\sqrt{T_n}\) while the rate of convergence for the parameter \(\varvec{\beta }\) of the noise variance is \(\sqrt{n}\), without any condition on the convergence to 0 of the delay between two observations as \(n\rightarrow \infty \), in contrast to the model of an ergodic diffusion (Florens-Zmirou 1989; Genon-Catalot 1990; Mishra and Prakasa Rao 2001; Uchida and Yoshida 2012). This fact is due to the non-randomness of the signal-of-interest \(f(\varvec{\alpha },t)\) and of the variance \(\sigma ^2(\varvec{\beta },t)\). Notice that model (1) is not ergodic, and the signal-of-interest is not necessarily continuous or periodic in time. The problem of frequency estimation is not tackled in this work.

The paper is organized as follows. The framework and the assumptions on the model are introduced in Sect. 2. We first consider a general parametric estimation problem for a triangular Gaussian array with independent components, of which the discretely observed signal-plus-noise model (1) is a particular case. Specific assumptions on the general model that fit this special case are then presented. These conditions are fulfilled for almost periodic signal-plus-noise Gaussian models.

The uniform local asymptotic normality of the log-likelihood in the setting of independent random variables has been stated in Theorem II.6.2 of Ibragimov and Has’minskii (1981) under a Lindeberg condition. However, in our framework this condition can be simplified using Hellinger-type distances (see Theorem 4.1 and relation (5.6) in Dzhaparidze and Valkeila 1990). Moreover, the Hellinger-type distances between two distributions of the observation under two different values of the parameter can be easily estimated (“Appendix A”, see also Gushchin and Küchler 2003). The local asymptotic normality of the log-likelihood for our parametric Gaussian model is then established in Sect. 3, following a methodology presented in Gushchin and Valkeila (2017).

Sections 4.1 and 4.2 are devoted to proving that the maximum likelihood estimator and the Bayesian estimator are consistent, asymptotically normal and asymptotically optimal, following the method of minimax efficiency from Chapter III in Ibragimov and Has’minskii (1981). Again, using Hellinger-type distances allows us to simplify the computations.

To illustrate the previous results, the trivial example of the linear model is given in Sect. 5.

In “Appendix A”, some elementary results on Hellinger-type distances between products of normal distributions, and on their Hellinger integrals, are provided. These results generalize results stated in Gushchin and Küchler (2003) for zero-mean normal distributions.

We complete this work in “Appendix B” by stating some expressions of the Fisher information matrices and of the identifiability functions in the cases of almost periodic and of periodic functions.

2 Framework

2.1 Triangular Gaussian array model

First consider that the observations come from a triangular random array formed for each \(n>0\) by independent Gaussian variables \(Y_{n,i}\), \(i=1,\dots ,n\), with mean \(F_{n,i}(\varvec{\alpha })\) and variance \(G_{n,i}^2(\varvec{\beta })\) which depend on the unknown parameter \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\in \varTheta =\varvec{A}\times \varvec{B}\subset {\mathbb {R}}^{a+b}\). Hence the likelihood of the observation \(\{Y_{n,i}:i=1,\dots ,n\}\) is equal to

$$\begin{aligned} Z_n(\varvec{\theta })=\prod _{i=1}^n\frac{1}{\sqrt{2\pi G_{n,i}^2(\varvec{\beta })}}\exp \left\{ -\frac{\big (Y_{n,i}-F_{n,i}(\varvec{\alpha })\big )^2}{2G_{n,i}^2(\varvec{\beta })}\right\} . \end{aligned}$$

Hereafter the properties of the likelihood \(Z_n(\varvec{\theta })\) will be obtained under the following assumptions.

- A1: For every \(n\ge 1\) and every \(i\in \{1,\dots ,n\}\), the functions \(\varvec{\alpha }\mapsto F_{n,i}(\varvec{\alpha })\) and \(\varvec{\beta }\mapsto G_{n,i}^2(\varvec{\beta })\) are differentiable. Moreover there exist a bounded family of positive numbers \(\{h_{n,i}: n\ge 1, i=1,\dots ,n\}\), and for every \(\varepsilon >0\) there is \(\eta >0\) such that for \(|\varvec{\alpha }-\varvec{\alpha }'|\le \eta \) and \(|\varvec{\beta }-\varvec{\beta }'|\le \eta \) we have that

$$\begin{aligned} \big |\nabla \!_{\varvec{\alpha }}F_{n,i}(\varvec{\alpha })-\nabla \!_{\varvec{\alpha }}F_{n,i}(\varvec{\alpha }')\big |\le \varepsilon h_{n,i} \quad \text{ and }\quad \big |\nabla \!_{\varvec{\beta }}G_{n,i}^2(\varvec{\beta })-\nabla \!_{\varvec{\beta }} G_{n,i}^2(\varvec{\beta }')\big |\le \varepsilon h_{n,i}. \end{aligned}$$

Here the \(a\)-dimensional vector \(\nabla \!_{\varvec{\alpha }}F_{n,i}(\varvec{\alpha })\) is the gradient of \(F_{n,i}(\varvec{\alpha })\) with respect to \(\varvec{\alpha }\) and the \(b\)-dimensional vector \(\nabla \!_{\varvec{\beta }}G_{n,i}^2(\varvec{\beta })\) is the gradient of \(G_{n,i}^2(\varvec{\beta })\) with respect to \(\varvec{\beta }\).

- A2: There exist constants \(0<\gamma _1<\gamma _2<\infty \) such that

$$\begin{aligned} 0<&\gamma _1h_{n,i}\le \inf _{\varvec{\beta }}G_{n,i}^2(\varvec{\beta })\le \sup _{\varvec{\beta }}G_{n,i}^2(\varvec{\beta })<\gamma _2h_{n,i},\\ \sup _{\varvec{\alpha }}|\nabla \!_{\varvec{\alpha }}F_{n,i}(\varvec{\alpha })|<&\gamma _2h_{n,i} \qquad \text{ and }\qquad \sup _{\varvec{\beta }}|\nabla \!_{\varvec{\beta }}G_{n,i}^2(\varvec{\beta })|<\gamma _2h_{n,i}. \end{aligned}$$

In the following we also assume that \(T_n:=\sum _{i=1}^nh_{n,i}\rightarrow \infty \) as \(n\rightarrow \infty \).

- A3: There exist two positive definite matrices \(\varvec{J}_a^{(\varvec{\theta })}\) and \(\varvec{J}_b^{(\varvec{\beta })}\) such that

$$\begin{aligned}&\varvec{J}_a^{(\varvec{\theta })} =\lim _{n\rightarrow \infty }\frac{1}{T_n}\sum _{i=1}^n \frac{\nabla \!_{\varvec{\alpha }}^{^{\,\,\varvec{*}\!\!}}F_{n,i}(\varvec{\alpha })\,\nabla \!_{\varvec{\alpha }}F_{n,i}(\varvec{\alpha })}{G_{n,i}^2(\varvec{\beta })} \end{aligned}$$

and

$$\begin{aligned}&\varvec{J}_b^{(\varvec{\beta })} = \lim _{n\rightarrow \infty }\frac{1}{2n}\sum _{i=1}^n \nabla \!_{\varvec{\beta }}^{^{\,\,\varvec{*}\!\!}}\ln G_{n,i}^2(\varvec{\beta })\,\nabla \!_{\varvec{\beta }}\ln G_{n,i}^2(\varvec{\beta }), \end{aligned}$$

the convergences being uniform with respect to \(\varvec{\theta }\) varying in \(\varTheta =\varvec{A}\times \varvec{B}\). Here and henceforth the superscript \(^{^{\varvec{*}\!}}\) designates the transpose operator for vectors and matrices.

- A4: For every \(\nu >0\) there exist \(\mu _{\nu }>0\) and \(n_{\nu }>0\) such that

$$\begin{aligned} \frac{1}{T_n}\sum _{i=1}^n \frac{\big (F_{n,i}(\varvec{\alpha })-F_{n,i}(\varvec{\alpha }')\big )^2}{h_{n,i}}\ge \mu _{\nu }\quad \text{ and }\quad \frac{1}{n}\sum _{i=1}^n \frac{\big (G_{n,i}^2(\varvec{\beta })-G_{n,i}^2(\varvec{\beta }')\big )^2}{(h_{n,i})^2}\ge \mu _{\nu } \end{aligned}$$

for any \(n\ge n_{\nu }\) and all \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\), \(\varvec{\theta }'=(\varvec{\alpha }',\varvec{\beta }')\) in \(\varTheta \) with \(|\varvec{\alpha }-\varvec{\alpha }'|\ge \nu \) and \(|\varvec{\beta }-\varvec{\beta }'|\ge \nu \).

Remarks

1. Assumptions A1 and A2 are technical conditions on the smoothness of the functions \(F_{n,i}(\varvec{\alpha })\) and \(G_{n,i}(\varvec{\beta })\).

2. With assumption A3 the asymptotic Fisher information \(d\times d\)-matrix \(\varvec{J}^{(\varvec{\theta })}\) of the model, \(d:=a+b\), is equal to

$$\begin{aligned} \varvec{J}^{(\varvec{\theta })}:={\mathrm {diag}}\Big [\varvec{J}_a^{(\varvec{\theta })},\varvec{J}_b^{(\varvec{\beta })}\Big ]=\left[ \begin{array}{cc}\varvec{J}_a^{(\varvec{\theta })}&{}{\mathbf {0}}_{a\times b}\\ {\mathbf {0}}_{b\times a}&{}\varvec{J}_b^{(\varvec{\beta })}\end{array}\right] . \end{aligned}$$

Under assumptions A1, A2 and A3, the function \(\varvec{\theta }\mapsto \varvec{J}^{(\varvec{\theta })}\) is continuous on \(\varTheta =\varvec{A}\times \varvec{B}\). Furthermore, as \(\varvec{J}^{(\varvec{\theta })}\) is a positive definite matrix, the square root \(\big (\varvec{J}^{(\varvec{\theta })}\big )^{\frac{-1}{2}}={\mathrm {diag}}\Big [\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{-1}{2}},\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{\frac{-1}{2}}\Big ]\) of its inverse \(\big (\varvec{J}^{(\varvec{\theta })}\big )^{-1}\) is well defined and continuous in \(\varTheta \).

3. Assumption A4 is an identifiability condition. Assume that the following lower limits are finite:

$$\begin{aligned}&\mu _a(\varvec{\alpha },\varvec{\alpha }') :=\liminf _{n\rightarrow \infty }\frac{1}{T_n}\sum _{i=1}^n \frac{\big (F_{n,i}(\varvec{\alpha })-F_{n,i}(\varvec{\alpha }')\big )^2}{h_{n,i}}, \end{aligned}\tag{2}$$

$$\begin{aligned}&\mu _b(\varvec{\beta },\varvec{\beta }') :=\liminf _{n\rightarrow \infty }\frac{1}{n}\sum _{i=1}^n \frac{\big (G_{n,i}^2(\varvec{\beta })-G_{n,i}^2(\varvec{\beta }')\big )^2}{h_{n,i}^2}, \end{aligned}\tag{3}$$

the convergences being uniform with respect to \(\varvec{\alpha }\), \(\varvec{\alpha }'\in \varvec{A}\), \(\varvec{\beta }\), \(\varvec{\beta }'\in \varvec{B}\) with \(|\varvec{\alpha }-\varvec{\alpha }'|\ge \nu \) and \(|\varvec{\beta }-\varvec{\beta }'|\ge \nu \), and assume that

$$\begin{aligned} \mu _{\nu }:=\frac{1}{2}\min \Big \{\inf _{|\varvec{\alpha }-\varvec{\alpha }'|\ge \nu }\mu _a(\varvec{\alpha },\varvec{\alpha }')\,,\,\inf _{|\varvec{\beta }-\varvec{\beta }'|\ge \nu }\mu _b(\varvec{\beta },\varvec{\beta }')\Big \}>0 \end{aligned}$$

for any \(\nu >0\). Then assumption A4 is fulfilled.
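The averages appearing in assumptions A3 and A4 can be evaluated numerically for a finite \(n\). The following is a minimal sketch under assumptions that are not taken from the text: scalar parameters (\(a=b=1\)), a hypothetical linear specification \(F_{n,i}(\alpha )=\alpha h_{n,i}\) and \(G_{n,i}^2(\beta )=\beta h_{n,i}\), and an arbitrary irregular grid of delays. The function names are illustrative. For this specification the A3 averages do not depend on \(n\) and equal \(1/\beta \) and \(1/(2\beta ^2)\), while the A4 sums equal \((\alpha -\alpha ')^2\) and \((\beta -\beta ')^2\).

```python
def fisher_averages(h, dF, G2, dlogG2):
    """Finite-n averages of assumption A3 in the scalar case (a = b = 1).

    h      : delays h_{n,i}
    dF     : derivatives of F_{n,i} with respect to alpha
    G2     : variances G_{n,i}^2(beta)
    dlogG2 : derivatives of ln G_{n,i}^2 with respect to beta
    """
    n = len(h)
    T_n = sum(h)
    J_a = sum(d * d / g for d, g in zip(dF, G2)) / T_n
    J_b = sum(d * d for d in dlogG2) / (2 * n)
    return J_a, J_b


def identifiability_sums(h, F1, F2, G2_1, G2_2):
    """Finite-n sums of assumption A4 for two parameter values."""
    n = len(h)
    T_n = sum(h)
    mu_a = sum((x - y) ** 2 / hi for x, y, hi in zip(F1, F2, h)) / T_n
    mu_b = sum((x - y) ** 2 / hi ** 2 for x, y, hi in zip(G2_1, G2_2, h)) / n
    return mu_a, mu_b


# Hypothetical irregular delays and linear specification (illustration only).
h = [0.5, 1.0, 0.25, 0.75]
alpha, beta = 2.0, 4.0
alpha2, beta2 = 3.0, 5.0

J_a, J_b = fisher_averages(
    h,
    dF=h,                              # d/d(alpha) of alpha * h_i
    G2=[beta * hi for hi in h],
    dlogG2=[1.0 / beta for _ in h],    # d/d(beta) of ln(beta * h_i)
)
mu_a, mu_b = identifiability_sums(
    h,
    [alpha * hi for hi in h], [alpha2 * hi for hi in h],
    [beta * hi for hi in h], [beta2 * hi for hi in h],
)
print(J_a, J_b, mu_a, mu_b)
```

In this toy case both identifiability sums are bounded away from 0 as soon as the parameters differ, which is the content of A4.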

2.2 Discrete time observation of the signal-plus-noise model

As an application of the previous model, we return to the continuous time integrated signal-plus-noise model (1), for which we assume that the observations during a time interval \([0,T_n]\) occur at instants \(0=t_{n,0}<t_{n,1}<\cdots <t_{n,n}=T_n\) of the interval \([0, T_n]\), where \(0<h_{n,i}:=t_{n,i}-t_{n,i-1}\le h_n:=\max _ih_{n,i}\). We also assume that \(T_n\rightarrow \infty \) as \(n\rightarrow \infty \) and that the set \(\{h_n:n\ge 1\}\) is bounded.

Then the observation of the sequence \(X_{t_{n,i}}\), \(i\in \{0,\dots ,n\}\), corresponds to the observation of \(Y_{n,0}:=X_0\) and of the increments defined by \(Y_{n,i}:=X_{t_{n,i}}- X_{t_{n,i-1}}\), \(i\in \{1,\dots ,n\}\). Since the initial random variable \(X_0\) is independent of the Wiener process \(\{W_t\}\) and its distribution does not depend on the parameter \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\), we disregard \(Y_{n,0}\) in the following.

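For \(i\in \{1,\dots ,n\}\), denote

$$\begin{aligned} F_{n,i}(\varvec{\alpha }):=\int _{t_{n,i-1}}^{t_{n,i}}f(\varvec{\alpha },t)\,{\mathrm {d}}t \qquad \text{ and }\qquad G_{n,i}^2(\varvec{\beta }):=\int _{t_{n,i-1}}^{t_{n,i}}\sigma ^2(\varvec{\beta },t)\,{\mathrm {d}}t. \end{aligned}$$

Thus, when the true value of the parameter is \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\), the increment \(Y_{n,i}\), \(i\ge 1\), is equal to

$$\begin{aligned} Y_{n,i}=F_{n,i}(\varvec{\alpha })+G_{n,i}(\varvec{\beta })\,W_{n,i}^{(\varvec{\beta })}, \end{aligned}$$

where

$$\begin{aligned} W_{n,i}^{(\varvec{\beta })}:=\frac{1}{G_{n,i}(\varvec{\beta })}\int _{t_{n,i-1}}^{t_{n,i}}\sigma (\varvec{\beta },t)\,{\mathrm {d}}W_t. \end{aligned}$$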

Notice that the random variables \(W_{n,i}^{(\varvec{\beta })}\), \(i=1,\dots ,n\), are independent with the same standard normal distribution \({\mathcal {N}}(0,1)\). Hence the triangular Gaussian array model introduced in Sect. 2.1 applies in the present situation.
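The reduction to a triangular Gaussian array can be illustrated by simulation. The sketch below is a minimal example and not the authors' code: it assumes the hypothetical choices \(f(\alpha ,t)=\alpha \cos t\) and \(\sigma ^2(\beta ,t)=\beta \), for which the mean and variance of each increment over an interval \([s,t]\) are \(\alpha (\sin t-\sin s)\) and \(\beta (t-s)\). The sampling grid is drawn once with bounded unequal delays and then treated as fixed. All function names are illustrative.

```python
import math
import random


def sampling_times(n, rng, h_min=0.1, h_max=0.5):
    """Grid 0 = t_0 < t_1 < ... < t_n with bounded, non-equidistant delays.

    The grid is generated once and then regarded as deterministic.
    """
    times = [0.0]
    for _ in range(n):
        times.append(times[-1] + rng.uniform(h_min, h_max))
    return times


def increment_moments(times, alpha, beta):
    """Means and variances of the increments for the assumed model
    f(alpha, t) = alpha * cos(t), sigma^2(beta, t) = beta."""
    pairs = list(zip(times, times[1:]))
    means = [alpha * (math.sin(t) - math.sin(s)) for s, t in pairs]
    variances = [beta * (t - s) for s, t in pairs]
    return means, variances


def simulate_increments(means, variances, rng):
    """Independent Gaussian increments with the given means and variances."""
    return [rng.gauss(m, math.sqrt(v)) for m, v in zip(means, variances)]


def log_likelihood(Y, means, variances):
    """Gaussian log-likelihood of independent observations."""
    return sum(
        -0.5 * math.log(2.0 * math.pi * v) - (y - m) ** 2 / (2.0 * v)
        for y, m, v in zip(Y, means, variances)
    )


rng = random.Random(0)
times = sampling_times(200, rng)
means, variances = increment_moments(times, alpha=1.5, beta=0.8)
Y = simulate_increments(means, variances, rng)
print(log_likelihood(Y, means, variances))
```

Only the increments enter the log-likelihood, in line with the fact that \(Y_{n,0}=X_0\) carries no information on the parameter.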

Moreover, assumptions A1 and A2 are fulfilled when the following assumptions A1’ and A2’, specific to model (1), are satisfied.

- A1’: The functions \(f:\varvec{A}\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}\) and \(\sigma :\varvec{B}\times {\mathbb {R}}^+\rightarrow {\mathbb {R}}^+\) are measurable. The functions \(\varvec{\alpha }\mapsto f(\varvec{\alpha },t)\) and \(\varvec{\beta }\mapsto \sigma ^2(\varvec{\beta },t)\) are differentiable. Furthermore, for every \(\varepsilon >0\) there exists \(\eta >0\) such that for \(|\varvec{\alpha }-\varvec{\alpha }'|\le \eta \) and \(|\varvec{\beta }-\varvec{\beta }'|\le \eta \) we have:

$$\begin{aligned} \sup _t\big |\nabla \!_{\varvec{\alpha }}f(\varvec{\alpha }, t)-\nabla \!_{\varvec{\alpha }}f(\varvec{\alpha }', t)\big |\le \varepsilon \quad \text{ and } \quad \sup _t \big |\nabla \!_{\varvec{\beta }}\sigma ^2(\varvec{\beta }, t)-\nabla \!_{\varvec{\beta }} \sigma ^2(\varvec{\beta }', t)\big |\le \varepsilon . \end{aligned}$$

- A2’: The function \(t\mapsto f(\varvec{\alpha },t)\) is locally integrable in \({\mathbb {R}}\) for any \(\varvec{\alpha }\in \varvec{A}\); moreover

$$\begin{aligned}&0<\inf _{\varvec{\beta },t}\sigma ^2(\varvec{\beta },t)\le \sup _{\varvec{\beta },t}\sigma ^2(\varvec{\beta },t)<\infty ,\\&\quad \sup _{\varvec{\alpha },t}|\nabla \!_{\varvec{\alpha }}f(\varvec{\alpha },t)|<\infty \qquad \text{ and }\qquad \sup _{\varvec{\beta },t}|\nabla \!_{\varvec{\beta }}\sigma ^2(\varvec{\beta },t)|<\infty . \end{aligned}$$

Remarks

1. We readily see that assumptions A1’ and A2’ (as well as A1 and A2) are fulfilled when the parameter set \(\varTheta =\varvec{A}\times \varvec{B}\) is compact and the functions \((\varvec{\alpha },t)\mapsto \big (f(\varvec{\alpha },t),\nabla \!_{\varvec{\alpha }}f(\varvec{\alpha },t)\big )\) and \((\varvec{\beta },t)\mapsto \big (\sigma ^2(\varvec{\beta },t),\nabla \!_{\varvec{\beta }}\sigma ^2(\varvec{\beta },t)\big )\) are almost periodic in t uniformly with respect to \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\in \varTheta \) (see “Appendix B”).

2. Neither assumption A1’ nor assumption A1 is satisfied when we consider the problem of frequency estimation for model (1). For example, the function \(f(\alpha ,t)=\sin (\alpha t)\) with \(\alpha \in \varvec{A}\), \(\varvec{A}\subset {\mathbb {R}}\), does not satisfy assumption A1 since \(\sup _t|\cos (\varvec{\alpha }t)-\cos (\varvec{\alpha }' t)|=2\) when \(\varvec{\alpha }\ne \varvec{\alpha }'\).

3. For model (1), the limits \(\varvec{J}_a^{(\varvec{\theta })}\) and \(\varvec{J}_b^{(\varvec{\beta })}\) in assumption A3 exist when the functions \(\nabla \!_{\varvec{\alpha }}f(\varvec{\alpha },t)\), \(\sigma ^2(\varvec{\beta },t)\) and \(\nabla \!_{\varvec{\beta }}\sigma ^2(\varvec{\beta },t)\) are almost periodic in time t and \(h_n\rightarrow 0\). This is also true when these functions are periodic with the same period \(P>0\) and the delay between two consecutive observations is constant and equal to \(h=P/\nu \) for some fixed positive integer \(\nu \) (see “Appendix B”).

4. For model (1), when the functions \(f(\varvec{\alpha },t)\), \(f(\varvec{\alpha }',t)\), \(\sigma ^2(\varvec{\beta },t)\) and \(\sigma ^2(\varvec{\beta }',t)\) are almost periodic in time t, the limits \(\mu _a(\varvec{\alpha },\varvec{\alpha }')\) and \(\mu _b(\varvec{\beta },\varvec{\beta }')\) in relations (2) and (3) exist. If in addition for \(\varvec{\alpha }\ne \varvec{\alpha }'\) we have \(\sup _t|f(\varvec{\alpha },t)-f(\varvec{\alpha }',t)|>0\), and for \(\varvec{\beta }\ne \varvec{\beta }'\), \(\sup _t|\sigma ^2(\varvec{\beta },t)-\sigma ^2(\varvec{\beta }',t)|>0\), then \(\mu _a(\varvec{\alpha },\varvec{\alpha }')\) and \(\mu _b(\varvec{\beta },\varvec{\beta }')\) are positive. See “Appendix B”.

5. Some explicit expressions of \(\varvec{J}_a^{(\varvec{\theta })}\), \(\varvec{J}_b^{(\varvec{\beta })}\), \(\mu _a(\varvec{\alpha },\varvec{\alpha }')\) and \(\mu _b(\varvec{\beta },\varvec{\beta }')\) are given in “Appendix B” for functions \(f(\varvec{\alpha },t)\) and \(\sigma ^2(\varvec{\beta },t)\) periodic in time t, both when the delays between two consecutive observations tend to 0 and when the delays are constant.

3 LAN property of the triangular Gaussian array model

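From now on, we consider the general model of triangular Gaussian array presented in Sect. 2.1. As already mentioned, the results stated in the following apply directly to the integrated signal-plus-noise model described in Sect. 2.2. Under assumptions A1, A2 and A3, the model of triangular Gaussian array is regular in the sense given in Ibragimov and Has’minskii (1981, p. 65). To establish the asymptotic normality and the asymptotic efficiency of the maximum likelihood estimator and of the Bayesian estimator, we will apply the minimax efficiency theory from Ibragimov and Has’minskii (1981). Thus, we study the asymptotic behaviour of the likelihood of the observation \(\{Y_{n,1},\dots ,Y_{n,n}\}\) in the neighbourhood of the true value of the parameter. For this purpose let \(Z_n^{(\varvec{\theta },\varvec{w})}\) be the likelihood ratio defined by

$$\begin{aligned} Z_n^{(\varvec{\theta },\varvec{w})}:=\frac{{\mathrm {d}}{\mathrm {P}}_n^{(\varvec{\theta }+\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })})}}{{\mathrm {d}}{\mathrm {P}}_n^{(\varvec{\theta })}}(Y_{n,1},\dots ,Y_{n,n})=\prod _{i=1}^n\frac{{\mathrm {d}}{\mathrm {P}}_{n,i}^{(\varvec{\theta }+\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })})}}{{\mathrm {d}}{\mathrm {P}}_{n,i}^{(\varvec{\theta })}}(Y_{n,i}) \end{aligned}$$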

for \(\varvec{\theta }\in \varTheta \) and \(\varvec{w}\in {\mathcal {W}}_{\varvec{\theta },n} :=\{\varvec{w}\in {\mathbb {R}}^d :\varvec{\theta }+\varvec{w}\varvec{\varPhi }^{(\varvec{\theta })}_n\in \varTheta \}\). Recall that \(d:=a+b\). Here the invertible \(d\times d\)-matrix (local normalizing matrix) \(\varvec{\varPhi }_n^{(\varvec{\theta })}\) is equal to \(\varvec{\varPhi }_n^{(\varvec{\theta })}:={\mathrm {diag}}\big [\varvec{\varphi }_n^{(\varvec{\theta })},\varvec{\psi }_n^{(\varvec{\beta })}\big ]\), setting \(\varvec{\varphi }^{(\varvec{\alpha },\varvec{\beta })}_n:=\big (T_n\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{-1}{2}}\) and \(\varvec{\psi }^{(\varvec{\beta })}_n:=\big (n\varvec{J}_b^{(\varvec{\beta })}\big )^{\frac{-1}{2}}\). Furthermore \({\mathrm {P}}_n^{(\varvec{\theta }')}\), respectively \({\mathrm {P}}_{n,i}^{(\varvec{\theta }')}\), is the distribution of \((Y_{n,1},\dots ,Y_{n,n})\), respectively of \(Y_{n,i}\), when the value of the parameter is \(\varvec{\theta }'\in \varTheta \). Next we state that the log-likelihood ratio \(\ln Z_n^{(\varvec{\theta },\varvec{w})}\) is asymptotically normal as \(n\rightarrow \infty \).

Proposition 1

Assume that \(\varTheta =\varvec{A}\times \varvec{B}\) is open and convex, and conditions A1, A2 and A3 are fulfilled. Then the family \(\{{\mathrm {P}}_n^{(\varvec{\theta })}:\varvec{\theta }\in \varTheta \}\) is uniformly locally asymptotically normal (uniformly LAN) in any compact subset \({\mathcal {K}}\) of \(\varTheta \). That is, for any compact subset \({\mathcal {K}}\) of \(\varTheta \), for arbitrary sequences \(\{\varvec{\theta }_n\}\subset {\mathcal {K}}\) and \(\{\varvec{w}_n\}\subset {\mathbb {R}}^d\) such that \(\varvec{\theta }_n+\varvec{w}_n\varvec{\varPhi }_n^{(\varvec{\theta }_n)}\in {\mathcal {K}}\) and \(\varvec{w}_n\rightarrow \varvec{w}\in {\mathbb {R}}^d\) as \(n\rightarrow \infty \), the log-likelihood ratio \(\ln Z_n^{(\varvec{\theta }_n,\varvec{w}_n)}\) can be decomposed as

where the random vector \(\varDelta ^{(\varvec{\theta }_n)}_n\) converges in law to the d-dimensional standard normal distribution and the random variable \(r_n(\varvec{\theta }_n,\varvec{w}_n)\) converges in \({\mathrm {P}}_{\varvec{\theta }_n}\)-probability to 0.
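For orientation, the generic shape of a LAN decomposition in the framework of Ibragimov and Has’minskii (1981) is recalled here. This is a sketch in generic notation: the inner product \(\langle \cdot ,\cdot \rangle \) on \({\mathbb {R}}^d\) is introduced for illustration only, and the arrangement of vectors and transposes in the paper's own display may differ.

$$\begin{aligned} \ln Z_n^{(\varvec{\theta }_n,\varvec{w}_n)}=\big \langle \varvec{w}_n,\varDelta _n^{(\varvec{\theta }_n)}\big \rangle -\frac{1}{2}|\varvec{w}_n|^2+r_n(\varvec{\theta }_n,\varvec{w}_n). \end{aligned}$$

The linear term is asymptotically Gaussian with identity covariance because the normalizing matrix \(\varvec{\varPhi }_n^{(\varvec{\theta })}\) already contains the inverse square root of the Fisher information.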

Proof

The weak convergence of the log-likelihood to a Gaussian limit holds if the Hellinger process (of order 1/2) converges to a corresponding constant and the Lindeberg condition holds, see Chapter 10 in Jacod and Shiryaev (1987). Dzhaparidze and Valkeila (1990, Theorem 4.1) have shown that the Lindeberg condition can be replaced by convergence of the so-called p-divergency process to 0. In the case of independent observations the Hellinger process and the p-divergency process are just the sum of squared Hellinger distances and of the corresponding Hellinger p-distances, \(p> 2\) integer (Jacod and Shiryaev 1987, Proposition IV.1.73). Moreover, in the Gaussian case, these distances can be expressed via the means and variances, and the Hellinger p-distances can be estimated via the Hellinger distance (Hellinger 2-distance \(\rho _2\)). See inequality (18) in “Appendix A” below (see also Gushchin and Küchler 2003). In the following we do not directly apply the notions of Hellinger process and p-divergency process, so we do not define them in the paper. For more information on these notions the reader is referred to Jacod and Shiryaev (1987) and Dzhaparidze and Valkeila (1990).

Hence, following Gushchin and Valkeila (2017), to prove that the family \(\{{\mathrm {P}}_n^{(\varvec{\theta })}:\varvec{\theta }\in \varTheta \}\) is LAN uniformly in any compact subset \({\mathcal {K}}\) of \(\varTheta \), it suffices to establish that

and

for any \(\{\varvec{\theta }_n\}\subset {\mathcal {K}}\) and any bounded \(\{\varvec{w}_{n,j}\}\subset {\mathbb {R}}^d\) such that \(\varvec{\theta }_n+\varvec{w}_{n,j}\varvec{\varPhi }_n^{(\varvec{\theta }_n)}\in \varTheta \), \(j=1,2\). The definition of the Hellinger distance \(\rho _2(\cdot ,\cdot )\) is recalled in “Appendix A”.

Let \(\varvec{\theta }_n=(\varvec{\alpha }_n,\varvec{\beta }_n)\in {\mathcal {K}}\), and let \(\{\varvec{w}_{n,j}\}\subset {\mathbb {R}}^d\) be bounded for \(j=1,2\). Denote \(\varvec{\theta }_n^{(j)}=(\varvec{\alpha }_n^{(j)},\varvec{\beta }_n^{(j)}):=\varvec{\theta }_n+\varvec{w}_{n,j}\varvec{\varPhi }_n^{(\varvec{\theta }_n)}\) for \(j=1,2\), and \(\varvec{w}_n=(\varvec{u}_n,\varvec{v}_n):=\varvec{w}_{n,2}-\varvec{w}_{n,1}\). Thanks to equality (12) in “Appendix A”, the Hellinger distance between the two normal distributions \({\mathrm {P}}_{n,i}^{(\varvec{\theta }_n^{(j)})}={\mathcal {N}}\big (F_{n,i}(\varvec{\alpha }_n^{(j)}),G_{n,i}^2(\varvec{\beta }_n^{(j)})\big )\), \(j=1,2\), is equal to

where

and

From assumption A2, we readily obtain that

and

By remark 2 in Sect. 2.1, we know that the functions \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{-1/2}\big |\) and \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{-1/2}\big |\) are well defined and continuous in \(\varTheta \), so they are bounded in the compact subset \({\mathcal {K}}\). Hence when \(\{\varvec{\theta }_n\}\subset {\mathcal {K}}\) and the sequence \(\{\varvec{w}_n=(\varvec{u}_n,\varvec{v}_n)\}\) is bounded, we deduce that condition (5) is fulfilled.

Next, thanks to the Taylor expansion formula with integral remainder in expressions (7) and (8), and to the asymptotic equality (15) in “Appendix A” applied to \(\rho _2^2\big ({\mathrm {P}}_{n,i}^{(\varvec{\theta }_n^{(1)})},{\mathrm {P}}_{n,i}^{(\varvec{\theta }_n^{(2)})}\big )\), assumptions A1, A2 and A3 imply that condition (6) is satisfied.

Hence the proposition is proved. \(\square \)
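The Hellinger quantities used in the proof have closed forms in the Gaussian case. The sketch below is illustrative only: it uses the classical formula for the Hellinger integral (affinity) of two normal distributions and the fact that the affinity of product measures is the product of the coordinate affinities. The convention \(\rho _2^2=2(1-H)\) for the squared distance is an assumption made here; the normalization adopted in “Appendix A” may differ by a constant factor. The function names are hypothetical.

```python
import math


def hellinger_affinity_normal(m1, v1, m2, v2):
    """Hellinger integral of N(m1, v1) and N(m2, v2), with v1, v2 the variances."""
    s = v1 + v2
    return math.sqrt(2.0 * math.sqrt(v1 * v2) / s) * math.exp(-(m1 - m2) ** 2 / (4.0 * s))


def hellinger_affinity_product(params1, params2):
    """Hellinger integral of two product measures of normal distributions.

    params1, params2 : lists of (mean, variance) pairs, one per coordinate.
    """
    H = 1.0
    for (m1, v1), (m2, v2) in zip(params1, params2):
        H *= hellinger_affinity_normal(m1, v1, m2, v2)
    return H


def squared_hellinger_distance(H):
    """Squared Hellinger distance under the assumed convention 2 * (1 - H)."""
    return 2.0 * (1.0 - H)


# Two coordinates, each with unit variance and means shifted by 2.
H = hellinger_affinity_product([(0.0, 1.0), (0.0, 1.0)], [(2.0, 1.0), (2.0, 1.0)])
print(H, squared_hellinger_distance(H))
```

Because the affinity factorizes over independent coordinates, bounds on the coordinate distances translate into bounds for the whole observation vector.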

Remarks

By a direct but tedious computation, we can prove the LAN decomposition (4) with the random vector \(\varDelta _n^{(\varvec{\theta })}=\sum _{i=1}^n \varDelta _{n,i}^{(\varvec{\theta })}\) where

and \(W_{n,i}^{(\varvec{\theta })}:=(Y_{n,i}-F_{n,i}(\varvec{\alpha }))/G_{n,i}(\varvec{\beta })\).

4 Efficient estimation

The Cramér-Rao lower bound of the mean square risk is not entirely satisfactory for defining the asymptotic efficiency of a sequence of estimators; see e.g. Section I.9 in Ibragimov and Has’minskii (1981) and Section 1.2 in Höpfner (2014). We therefore consider here the asymptotic optimality in the sense of the local asymptotic minimax lower bound of the risk for the sequence \(\{\bar{\varvec{\theta }}_n\} :=\{\bar{\varvec{\theta }}_n,n > 0\}\) for the estimation of \(\varvec{\theta }\), that is, of the risk

where \(\bar{\varvec{\theta }}_n\) is any statistic function of the observation \(\{Y_{n,i},i=1,\dots ,n\}\). The loss function \(L(\cdot )\) belongs to the set \({\mathcal {L}}\) of non-negative Borel functions on \({\mathbb {R}}^d\) which are continuous at 0 with \(L({\mathbf {0}}_d) = 0\), \(L(-x)=L(x)\), the set \(\{x:L(x)<c\}\) is a convex set for any \(c>0\), and we also assume that the function \(L(\cdot )\in {\mathcal {L}}\) admits a polynomial majorant.

Clearly all functions \(L(\varvec{\theta }) = |\varvec{\theta }|^r\), \(r>0\), as well as \(L(\varvec{\theta }) =1_{\{|\varvec{\theta }|>r\}}\), \(r > 0\), belong to \({\mathcal {L}}\). (Here \(1_{\{x > r\}}\) denotes the indicator function of \((r,\infty )\).)

Since the model of observation is locally asymptotically normal, the local asymptotic minimax risk \({\mathcal {R}}_{\varvec{\theta }}(\{\bar{\varvec{\theta }}_n\}) \) for any sequence \(\{\bar{\varvec{\theta }}_n=(\bar{\varvec{\alpha }}_n,\bar{\varvec{\beta }}_n)\}\) of estimators of \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\) admits a lower bound for any loss function \(L\in {\mathcal {L}}\). More precisely

where \(\varvec{\xi }^{(\varvec{\theta })}\) is a random d-dimensional vector whose distribution is zero-mean Gaussian with \(d\times d\)-matrix variance equal to \(\big (\varvec{J}^{(\varvec{\theta })}\big )^{-1}\) (see Le Cam 1969 and Hájek 1972; see also Ibragimov and Has’minskii 1981).

4.1 Maximum likelihood estimator

Recall that the maximum likelihood estimator \(\widehat{\varvec{\theta }}_n\) is any statistic defined from the observation such that

In the next theorem we establish that \(\widehat{\varvec{\theta }}_n\) is an efficient estimator of \(\varvec{\theta }\) in the sense that its asymptotic minimax risk \({\mathcal {R}}_{\varvec{\theta }}(\{\widehat{\varvec{\theta }}_n\})\) is equal to the lower bound \({\mathrm {E}}\big [L\big (\varvec{\xi }^{(\varvec{\theta })}\big )\big ]\).

Theorem 1

Let \(\varTheta =\varvec{A}\times \varvec{B}\) be open, convex and bounded. Assume that conditions A1–A4 are fulfilled. Then the maximum likelihood estimator \(\widehat{\varvec{\theta }}_n=(\widehat{\varvec{\alpha }}_n,\widehat{\varvec{\beta }}_n)\) of \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\) is consistent and asymptotically normal uniformly with respect to \(\varvec{\theta }\) varying in any compact subset \({\mathcal {K}}\) of \(\varTheta =\varvec{A}\times \varvec{B}\),

setting \(\varvec{J}^{(\varvec{\theta })}={\mathrm {diag}}\big [\varvec{J}_a^{(\varvec{\theta })},\varvec{J}_b^{(\varvec{\beta })}\big ]\). Moreover it is locally asymptotically minimax at any \(\varvec{\theta }\in \varTheta \) for any loss function \(L(\cdot )\in {\mathcal {L}}\), in the sense that inequality (11) becomes an equality for \(\bar{\varvec{\theta }}_n=\widehat{\varvec{\theta }}_n\).

Accordingly, under assumptions A1’, A2’, A3 and A4, the conclusions of the theorem are also valid for the integrated signal-plus-noise model (1) observed at discrete instants as presented in Sect. 2.2.
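The two rates in the theorem can be made concrete on a toy case where the maximum likelihood estimator is explicit. The sketch below assumes a hypothetical linear specification with scalar parameters, \(F_{n,i}(\alpha )=\alpha h_{n,i}\) and \(G_{n,i}^2(\beta )=\beta h_{n,i}\); this parametrization is an illustration and is not claimed to be the example treated in Sect. 5. Setting the derivatives of the Gaussian log-likelihood to zero gives \(\widehat{\alpha }_n=\sum _iY_{n,i}/T_n\) and \(\widehat{\beta }_n=n^{-1}\sum _i(Y_{n,i}-\widehat{\alpha }_nh_{n,i})^2/h_{n,i}\), which is the maximum likelihood estimator whenever this point lies in \(\varTheta \).

```python
def linear_model_mle(h, Y):
    """Closed-form maximizer of the Gaussian likelihood for the assumed
    linear model: mean alpha * h_i and variance beta * h_i.

    h : delays h_{n,i} (positive numbers)
    Y : observed increments Y_{n,i}
    """
    n = len(h)
    T_n = sum(h)
    alpha_hat = sum(Y) / T_n
    beta_hat = sum((y - alpha_hat * hi) ** 2 / hi for y, hi in zip(Y, h)) / n
    return alpha_hat, beta_hat


# Tiny deterministic illustration with two unequal delays.
h = [1.0, 2.0]
Y = [1.0, 5.0]
alpha_hat, beta_hat = linear_model_mle(h, Y)
print(alpha_hat, beta_hat)
```

In this toy model the variance of \(\widehat{\alpha }_n\) is \(\beta /T_n\), so the signal parameter is estimated at rate \(\sqrt{T_n}\), whereas \(\widehat{\beta }_n\) averages \(n\) terms and is estimated at rate \(\sqrt{n}\).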

Proof

To prove this theorem, we show that in our framework the conditions in Theorem 1.1 and Corollary 1.1 from Chapter III in Ibragimov and Has’minskii (1981) are fulfilled. To this end, we establish the following properties.

- N1: The family \(\{{\mathrm {P}}_n^{(\varvec{\theta })}:\varvec{\theta }\in \varTheta \}\) is uniformly LAN in any compact subset of \(\varTheta \).

- N2: For every \(\varvec{\theta }\in \varTheta \), the \(d\times d\)-matrix \(\varvec{\varPhi }_n^{(\varvec{\theta })}\) is positive definite, \(d=a+b\), and there exists a continuous \(d\times d\)-matrix valued function \((\varvec{\theta },\varvec{\theta }')\mapsto B(\varvec{\theta },\varvec{\theta }')\) such that for every compact subset \({\mathcal {K}}\) of \(\varTheta \)

$$\begin{aligned} \lim _{n\rightarrow \infty } \big |\varvec{\varPhi }_n^{(\varvec{\theta })}\big |=0\qquad \text{ and }\qquad \lim _{n\rightarrow \infty } \big (\varvec{\varPhi }_n^{(\varvec{\theta })}\big )^{-1}\varvec{\varPhi }_n^{(\varvec{\theta }')}=B(\varvec{\theta },\varvec{\theta }') \end{aligned}$$

where the convergences are uniform with respect to \(\varvec{\theta }\) and \(\varvec{\theta }'\) varying in \({\mathcal {K}}\).

- N3: For every compact subset \({\mathcal {K}}\) of \(\varTheta \), there exist \(s>a+b\), \(p\ge 1\), \(B=B({\mathcal {K}})>0\) and \(q=q({\mathcal {K}}) \in {\mathbb {R}}\), such that

$$\begin{aligned} \sup _{\varvec{\theta }\in {\mathcal {K}}}\sup _{\varvec{w}_1,\varvec{w}_2\in {\mathcal {W}}_{\varvec{\theta },r,n}}|\varvec{w}_1-\varvec{w}_2|^{-s}\rho _{p}^{p}\left( {\mathrm {P}}_n^{(\varvec{\theta }+\varvec{w}_1\varvec{\varPhi }_n^{(\varvec{\theta })})}, {\mathrm {P}}_n^{(\varvec{\theta }+\varvec{w}_2\varvec{\varPhi }_n^{(\varvec{\theta })})}\right) < B(1+ r^q) \end{aligned}$$

for any \(r>0\). Here \({\mathcal {W}}_{\varvec{\theta },r,n}:=\{\varvec{w}\in {\mathbb {R}}^d: |\varvec{w}|<r\,\,\text{ and }\,\,\varvec{\theta }+\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}\in \varTheta \}\).

- N4: For any compact subset \({\mathcal {K}}\) of \(\varTheta \), and for every \(N>0\), there exists \(n_1=n_1(N,{\mathcal {K}})>0\) such that

$$\begin{aligned} \sup _{\varvec{\theta }\in {\mathcal {K}}}\ \sup _{n>n_1}\ \sup _{\varvec{w}\in {\mathcal {W}}_{\varvec{\theta },n}}|\varvec{w}|^N H\left( {\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }+\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })})}\right) <\infty . \end{aligned}$$

Here \(\rho _p(\cdot ,\cdot )\) is the Hellinger p-distance, and \(H(\cdot ,\cdot )\) is the Hellinger integral. Their definitions are recalled in “Appendix A”. Recall that \({\mathcal {W}}_{\varvec{\theta },n}:=\{\varvec{w}\in {\mathbb {R}}^d: \varvec{\theta }+\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}\in \varTheta \}\).

-

(1)

Proposition 1 states that the family \(\{{\mathrm {P}}_n^{(\varvec{\theta })}, \, \varvec{\theta }\in \varTheta \}\) is uniformly LAN on any compact subset of \(\varTheta \), which is condition N1. In addition, since \(\varvec{\varPhi }_n^{(\varvec{\theta })}={\mathrm {diag}}\big [\varvec{\varphi }_n^{(\varvec{\theta })},\varvec{\psi }_n^{(\varvec{\beta })}\big ]\) with \(\varvec{\varphi }_n^{(\varvec{\theta })}:=\big (T_n \varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{-1}{2}}\) and \(\varvec{\psi }_n^{(\varvec{\beta })}:=\big (n \varvec{J}_b^{(\varvec{\beta })}\big )^{\frac{-1}{2}}\), the continuity of \(\varvec{\theta }\mapsto \varvec{J}_a^{(\varvec{\theta })}\) and \(\varvec{\beta }\mapsto \varvec{J}_b^{(\varvec{\beta })}\) implies that condition N2 is fulfilled with

$$\begin{aligned} B(\varvec{\theta },\varvec{\theta }')={\mathrm {diag}}\left[ \big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{1}{2}}\big (\varvec{J}_a^{(\varvec{\alpha }',\varvec{\beta }')}\big )^{\frac{-1}{2}}\,,\,\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{\frac{1}{2}}\big (\varvec{J}_b^{(\varvec{\beta }')}\big )^{\frac{-1}{2}}\right] . \end{aligned}$$ -

(2)

Now we check condition N3. Fix a compact subset \({\mathcal {K}}\subset \varTheta \), an even integer \(p\ge 2\) and \(r>0\). For \(i=1,2\), denote \(\varvec{\theta }_n^{(i)}=(\varvec{\alpha }_n^{(i)},\varvec{\beta }_n^{(i)}):=\varvec{\theta }+\varvec{w}_i\varvec{\varPhi }_n^{(\varvec{\theta })}\), and \(\varvec{w}=(\varvec{u},\varvec{v}):=\varvec{w}_2-\varvec{w}_1\). Thanks to the independence of the Gaussian random variables \(Y_{n,i}\), \(i=1,\dots ,n\), we have that (see inequality (18))

$$\begin{aligned} \rho _p^p\big ({\mathrm {P}}_n^{(\varvec{\theta }_n^{(1)})},{\mathrm {P}}_n^{(\varvec{\theta }_n^{(2)})}\big ) \le C_p \rho _2^p\big ({\mathrm {P}}_n^{(\varvec{\theta }_n^{(1)})},{\mathrm {P}}_n^{(\varvec{\theta }_n^{(2)})}\big ). \end{aligned}$$From inequalities (19) as well as (9) and (10) we obtain that

$$\begin{aligned} \rho _2^2\big ({\mathrm {P}}_n^{(\varvec{\theta }_n^{(1)})},{\mathrm {P}}_n^{(\varvec{\theta }_n^{(2)})}\big ) \le C\left( |\varvec{u}|^2\big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{-1/2}\big |^2 +|\varvec{v}|^2\big |\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{-1/2}\big |^2\right) \end{aligned}$$for some \(C>0\). Since the functions \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{-1/2}\big |\) and \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{-1/2}\big |\) are continuous (Remark 2 in Sect. 2.1), they are bounded on the compact subset \({\mathcal {K}}\subset \varTheta \). Hence there exists a constant \(b({\mathcal {K}})>0\) such that

$$\begin{aligned} \rho _2^2\big ({\mathrm {P}}_n^{(\varvec{\theta }_n^{(1)})},{\mathrm {P}}_n^{(\varvec{\theta }_n^{(2)})}\big ) \le b({\mathcal {K}})\,|\varvec{w}|^2 \end{aligned}$$and condition N3 is fulfilled with \(p\) an even integer, \(s=p>\min \{3,a+b\}\), \(q=0\), and \(B=C_p\,b({\mathcal {K}})^{p/2}\).

-

(3)

It remains to prove that condition N4 is fulfilled. For that purpose we study the Hellinger integral \(H\big ({\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }_n)}\big )\) where \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\) and \(\varvec{\theta }_n=(\varvec{\alpha }_n,\varvec{\beta }_n):=\varvec{\theta }+\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}\). The independence of the Gaussian random variables \(Y_{n,i}\), \(i=1,\dots ,n\), implies the equality:

$$\begin{aligned} H\big ({\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }_n)}\big ) = \prod _{i=1}^n \left( 1+\frac{y_{n,i}^2}{2(1+y_{n,i})}\right) ^{-1/4} \exp \left( \frac{-x_{n,i}^2}{4(2+y_{n,i})}\right) \end{aligned}$$where \(x_{n,i}\) and \(y_{n,i}\) are defined according to relations (7) and (8) with evident modifications.

-

(i)

Assume that \(\varvec{\theta }\in {\mathcal {K}}\) and \(|\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}|\le \gamma _1\gamma _2^{-1}/2\). Then equality (8) and assumption (A2) imply that \(|y_{n,i}|\le 1/2\) for every \(i\). Thanks to the inequality \((1+a)^{-1/4} \le e^{-a/8}\), valid for \(0\le a\le 1/2\), we deduce that

$$\begin{aligned} H\big ({\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }_n)}\big )\le & {} \prod _{i=1}^n \exp \left( \frac{-y_{n,i}^2}{16(1+y_{n,i})}\right) \exp \left( \frac{-x_{n,i}^2}{4(2+y_{n,i})}\right) \\\le & {} \exp \left( \frac{-1}{24}\sum _{i=1}^n(x_{n,i}^2+y_{n,i}^2)\right) . \end{aligned}$$First, the Taylor expansion formula applied to equalities (7) and (8), together with assumptions (A1) and (A2), entails that for any \(\epsilon >0\) there exists \(\nu _{\epsilon }>0\) such that for \(|\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}|\le \nu _{\epsilon }\) we have the inequality:

$$\begin{aligned} \left| x_{n,i}^2-\left( \frac{\varvec{u}\varvec{\varphi }_n^{(\varvec{\theta })}\nabla _{\varvec{\alpha }}^{^{\,\,\varvec{*}\!\!}}F_{n,i}(\varvec{\alpha })}{G_{n,i}(\varvec{\beta })}\right) ^2\right| \le T_{n}^{-1}h_{n,i}|\varvec{u}|^2\big |(\varvec{J}_a^{(\varvec{\theta })})^{\frac{-1}{2}}\big |^2 \epsilon . \end{aligned}$$Second, by assumption (A3), for every \(\epsilon >0\), there exists \(n_{\epsilon }>0\) such that for \(n\ge n_{\epsilon }\),

$$\begin{aligned} \left| \sum _{i=1}^n\left( \frac{\varvec{u}\varvec{\varphi }_n^{(\varvec{\theta })}\nabla _{\varvec{\alpha }}^{^{\,\,\varvec{*}\!\!}}F_{n,i}(\varvec{\alpha })}{G_{n,i}(\varvec{\beta })}\right) ^2-|\varvec{u}|^2\right| \le |\varvec{u}|^2\big |(\varvec{J}_a^{(\varvec{\theta })})^{\frac{-1}{2}}\big |^2\epsilon . \end{aligned}$$In the same way we can study \(y_{n,i}^2\). Then, by the continuity of the positive functions \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{-1/2}\big |\) and \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{-1/2}\big |\), we deduce the existence of \(n_1=n_1({\mathcal {K}})>0\) and \(\nu >0\) such that for every \(\varvec{\theta }\in {\mathcal {K}}\), \(n\ge n_1\) and \(\varvec{w}\in {\mathcal {W}}_{\varvec{\theta },n}\) with \(\big |\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}\big |\le \nu \) we have:

$$\begin{aligned} H\big ({\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }_n)}\big ) \le \exp \left( \frac{-1}{24}\sum _{i=1}^n(x_{n,i}^2+y_{n,i}^2)\right) \le e^{-|\varvec{w}|^2/48}. \end{aligned}$$ -

(ii)

Assumption (A2) implies that

$$\begin{aligned} |x_{n,i}|^2\ge \gamma _1^{-1}h_{n,i}^{-1}\big (F_{n,i}(\varvec{\alpha })-F_{n,i}(\varvec{\alpha }_n)\big )^2. \end{aligned}$$Then, from the identifiability assumption (A4), for every \(\nu >0\) there exist \(\mu _{\nu ,1}>0\) and \(n_{\nu ,1}>0\) such that for \(n>n_{\nu ,1}\) and \(|\varvec{u}\varvec{\varphi }_n^{(\varvec{\theta })}|=|\varvec{\alpha }_n-\varvec{\alpha }|\ge \nu \) we have:

$$\begin{aligned} \frac{1}{T_n}\sum _{i=1}^n |x_{n,i}|^2 \ge \mu _{\nu ,1}. \end{aligned}$$Since \(|\varvec{u}\varvec{\varphi }_n^{(\varvec{\theta })}|=|\varvec{\alpha }_n-\varvec{\alpha }|\le {\mathrm {diam}}(\varvec{A})\), we deduce that

$$\begin{aligned} \sum _{i=1}^n |x_{n,i}|^2 \ge \frac{T_n\mu _{\nu ,1}|\varvec{u}\varvec{\varphi }_n^{(\varvec{\theta })}|^2}{{\mathrm {diam}}(\varvec{A})^2} \ge \frac{|\varvec{u}|^2\mu _{\nu ,1}\big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{1}{2}}\big |^{-2}}{{\mathrm {diam}}(\varvec{A})^2}. \end{aligned}$$Notice that for the last inequality we have used the relation: \(|\varvec{u}\varvec{\varphi }_n^{(\varvec{\theta })}|^2=T_n^{-1}\big |\varvec{u}\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{-1}{2}}\big |^2 \ge T_n^{-1}|\varvec{u}|^2\big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{1}{2}}\big |^{-2}\), which follows from \(|\varvec{u}|\le \big |\varvec{u}\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{-1}{2}}\big |\,\big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{\frac{1}{2}}\big |\). Next, from the equality \(\ln (1+a)=a\int _0^1(1+ta)^{-1}dt\) for \(a>-1\), and thanks to assumption (A2) we easily establish that

$$\begin{aligned}&\ln \left( 1+\frac{y_{n,i}^2}{1+y_{n,i}}\right) =\int _0^1\frac{\big (G_{n,i}^2(\varvec{\beta }_n)-G_{n,i}^2(\varvec{\beta })\big )^2 dt }{tG_{n,i}^4(\varvec{\beta }_n)+tG_{n,i}^4(\varvec{\beta })+(1-t)G_{n,i}^2(\varvec{\beta }_n)G_{n,i}^2(\varvec{\beta })}\\&\quad \ge \frac{\big (G_{n,i}^2(\varvec{\beta }_n)-G_{n,i}^2(\varvec{\beta })\big )^2 }{2G_{n,i}^2(\varvec{\beta }_n)G_{n,i}^2(\varvec{\beta })} \ge \frac{\big (G_{n,i}^2(\varvec{\beta }_n)-G_{n,i}^2(\varvec{\beta })\big )^2 }{2\gamma _1^2h_{n,i}^2}. \end{aligned}$$The identifiability assumption (A4) entails that for every \(\nu >0\) there exist \(\mu _{\nu ,2}>0\) and \(n_{\nu ,2}>0\) such that for \(n>n_{\nu ,2}\) and \(|\varvec{v}\varvec{\psi }_n^{(\varvec{\beta })}|=|\varvec{\beta }_n-\varvec{\beta }|\ge \nu \) we have:

$$\begin{aligned} \frac{1}{n}\sum _{i=1}^{n}\ln \left( 1+\frac{y_{n,i}^2}{1+y_{n,i}}\right) \ge \mu _{\nu ,2}. \end{aligned}$$As previously we deduce that

$$\begin{aligned} \sum _{i=1}^{n}\ln \left( 1+\frac{y_{n,i}^2}{1+y_{n,i}}\right) \ge \frac{|\varvec{v}|^2\mu _{\nu ,2}\big |\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{\frac{1}{2}}\big |^{-2}}{{\mathrm {diam}}(\varvec{B})^2}. \end{aligned}$$Then, by the continuity of the positive functions \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_a^{(\varvec{\theta })}\big )^{-1/2}\big |\) and \(\varvec{\theta }\mapsto \big |\big (\varvec{J}_b^{(\varvec{\beta })}\big )^{-1/2}\big |\), we deduce the existence of \(\mu _{\nu }=\mu (\nu ,{\mathcal {K}})>0\) and \(n_2=n_2(\nu ,{\mathcal {K}})>0\) such that for every \(\varvec{\theta }\in {\mathcal {K}}\), \(n\ge n_2\) and \(\varvec{w}\in {\mathcal {W}}_{\varvec{\theta },n}\) with \(\big |\varvec{w}\varvec{\varPhi }_n^{(\varvec{\theta })}\big |\ge \nu \) we have

$$\begin{aligned} H\big ({\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }_n)}\big ) \le e^{-|\varvec{w}|^2\mu _{\nu }}. \end{aligned}$$ -

(iii)

From the previous parts (i) and (ii), there exist \(n_o>0\) and \(\mu >0\) such that for every \(\varvec{\theta }\in {\mathcal {K}}\), \(n\ge n_o\) and \(\varvec{w}\in {\mathcal {W}}_{\varvec{\theta },n}\), we have:

$$\begin{aligned} H\big ({\mathrm {P}}_n^{(\varvec{\theta })},{\mathrm {P}}_n^{(\varvec{\theta }_n)}\big ) \le e^{-|\varvec{w}|^2\mu }. \end{aligned}$$Hence condition N4 is satisfied. This completes the proof of the theorem. \(\square \)
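
The two elementary facts used in the proof, namely the inequality \((1+a)^{-1/4}\le e^{-a/8}\) on \([0,1/2]\) from part (i) and the identity \(\ln (1+a)=a\int _0^1(1+ta)^{-1}dt\) from part (ii), can be checked numerically. The following is only a sanity check on a grid, not a proof; the grid sizes and variable names are our own choices.

```python
import numpy as np

# Grid of values a in [0, 1/2], the range used in part (i).
a = np.linspace(0.0, 0.5, 501)

# Inequality (1 + a)^(-1/4) <= exp(-a/8): the gap should be nonnegative.
lhs = (1.0 + a) ** (-0.25)
rhs = np.exp(-a / 8.0)
gap = rhs - lhs

# Identity ln(1 + a) = a * int_0^1 dt / (1 + t a), using a midpoint rule in t.
m = 2000
t = (np.arange(m) + 0.5) / m
integral = np.mean(1.0 / (1.0 + np.outer(a, t)), axis=1)
identity_error = np.max(np.abs(np.log1p(a) - a * integral))

print("smallest gap:", gap.min())
print("largest identity error:", identity_error)
```

The inequality itself follows from \(\ln (1+a)\ge a/2\) for \(0\le a\le 1\), so the check merely confirms the constants.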

4.2 Bayesian estimator

In this section, the unknown parameter \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\) is supposed to be a random vector with known prior density \(\pi (\cdot )\) on the parameter set \(\varTheta =\varvec{A}\times \varvec{B}\). We are going to study the properties of the Bayesian estimator \(\widetilde{\varvec{\theta }}_n\) that minimizes the mean Bayesian risk defined as

where for simplicity of presentation the loss function \(l(\cdot )\) is equal to \(l(\varvec{\theta })=|\varvec{\theta }|^r\) for some \(r>0\) (see e.g. Ibragimov and Has’minskii 1981). Here \(\varvec{\delta }_n={\mathrm {diag}}\big [\sqrt{T_n}{\mathbb {I}}_{a\times a},\sqrt{n}{\mathbb {I}}_{b\times b}\big ]\). Then, we state that

Theorem 2

Let \(\varTheta =\varvec{A}\times \varvec{B}\) be open, convex and bounded. Assume that the conditions of Theorem 1 are fulfilled. Assume that the prior density \(\pi (\varvec{\theta })\) is continuous and positive on \(\varTheta \) and that the loss function is \(l(\varvec{\theta })=|\varvec{\theta }|^r\) for some \(r>0\). Then, uniformly with respect to \(\varvec{\theta }=(\varvec{\alpha },\varvec{\beta })\) varying in any compact subset \({\mathcal {K}}\) of \(\varTheta \), the corresponding Bayesian estimator \(\widetilde{\varvec{\theta }}_n=(\widetilde{\varvec{\alpha }}_n,\widetilde{\varvec{\beta }}_n)\) converges in probability and is asymptotically normal:

Moreover, the Bayesian estimator \(\widetilde{\varvec{\theta }}_n\) is locally asymptotically minimax at any \(\varvec{\theta }\in \varTheta \) for any loss function \(L(\cdot )\in {\mathcal {L}}\), in the sense that inequality (11) becomes an equality for \(\bar{\varvec{\theta }}_n=\widetilde{\varvec{\theta }}_n\).

Proof

This is a direct consequence of Theorem 2.1 in Chapter III of Ibragimov and Has’minskii (1981) and the proof of Theorem 1. \(\square \)
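
To make the Bayesian estimator concrete, here is a minimal numerical sketch for the quadratic loss \(r=2\), for which the minimizer of the posterior expected loss is the posterior mean (the diagonal normalisation \(\varvec{\delta }_n\) does not change this minimizer). The model is an assumption chosen for illustration: \(f(\alpha ,t)=\alpha \), \(\sigma ^2(\beta ,t)=\beta \), equidistant instants with step \(h\), so that the increments are independent \({\mathcal {N}}(\alpha h,\beta h)\) variables. The uniform prior, the parameter box and the function name `posterior_mean` are hypothetical.

```python
import numpy as np

def posterior_mean(y, h, alpha_grid, beta_grid):
    """Posterior mean of (alpha, beta) under a uniform prior on the grid box."""
    A, B = np.meshgrid(alpha_grid, beta_grid, indexing="ij")
    n = y.size
    s1, s2 = y.sum(), (y ** 2).sum()
    # sum_i (y_i - alpha*h)^2 expanded in terms of the sufficient statistics
    sq = s2 - 2.0 * A * h * s1 + n * (A * h) ** 2
    # Gaussian log-likelihood of increments Y_i ~ N(alpha*h, beta*h), up to a constant
    loglik = -0.5 * n * np.log(B * h) - 0.5 * sq / (B * h)
    w = np.exp(loglik - loglik.max())   # unnormalised posterior weights
    w /= w.sum()
    return (w * A).sum(), (w * B).sum()

rng = np.random.default_rng(0)
alpha0, beta0, h, n = 1.0, 0.5, 0.05, 20000   # observation time T_n = n*h = 1000
y = alpha0 * h + np.sqrt(beta0 * h) * rng.standard_normal(n)

alpha_grid = np.linspace(-2.0, 2.0, 401)      # assumed set A = (-2, 2)
beta_grid = np.linspace(0.2, 3.0, 561)        # assumed set B = (0.2, 3)
alpha_hat, beta_hat = posterior_mean(y, h, alpha_grid, beta_grid)
print(alpha_hat, beta_hat)
```

In this sketch the error on \(\alpha \) is of order \(T_n^{-1/2}\) and the error on \(\beta \) of order \(n^{-1/2}\), in line with the two normalising rates in \(\varvec{\delta }_n\).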

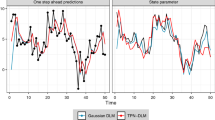

5 Linear parameter models

Consider the specific case where \(f(\varvec{\alpha },t)=\varvec{\alpha }\varvec{f}(t)^{^{\varvec{*}\!}}=\alpha _1 f_1(t)+\cdots +\alpha _af_a(t)\) and \(\sigma ^2(\beta ,t)=\beta \sigma ^2(t)\), with \(\varvec{\theta }=(\varvec{\alpha },\beta )\) and \(\varTheta =\varvec{A}\times \varvec{B}\subset {\mathbb {R}}^a\times {\mathbb {R}}^+\):

In this case \(a\ge 1\), \(b=1\). We assume that

and there exists a positive definite \(a\times a\)-matrix \(\varvec{J}_a\) which fulfils:

Here \(\varvec{f}(\cdot ):=\big (f_1(\cdot ),\dots ,f_a(\cdot )\big )\), \(\varvec{F}_{n,i}:=\int _{t_{n,i-1}}^{t_{n,i}}\varvec{f}(t)\,dt\) and \(G_{n,i}^2:=\int _{t_{n,i-1}}^{t_{n,i}}\sigma ^2(t)\,dt\).

Using the notation of Sect. 2 we have that \(F_{n,i}(\varvec{\alpha })=\varvec{\alpha }\varvec{F}_{n,i}\) and \(G_{n,i}^2(\beta )=\beta G_{n,i}^2\), \(\varvec{J}_a^{(\varvec{\theta })}=\beta ^{-2}\varvec{J}_a\), \(\varvec{J}_b^{(\beta )}=\beta ^{-2}/2\),

and

Then Theorems 1 and 2 can be applied in this case. Furthermore the maximum likelihood estimator \(\widehat{\varvec{\theta }}_n=(\widehat{\varvec{\alpha }}_n,\widehat{\beta }_n)\) has an explicit expression

and we readily obtain that \(\widehat{\varvec{\theta }}_n\) converges in norm \(L^r\) to \(\varvec{\theta }\) as \(n\rightarrow \infty \) for any \(r\ge 1\).
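
As an illustration of the linear parameter model, the sketch below computes the Gaussian maximum likelihood estimator in closed form, assuming that the increments \(Y_{n,i}\) are independent \({\mathcal {N}}(\varvec{\alpha }\varvec{F}_{n,i}^{^{\varvec{*}\!}},\beta G_{n,i}^2)\) variables and ignoring the constraint \(\varvec{\theta }\in \varTheta \). Under these assumptions, setting the score to zero gives a weighted least squares estimator for \(\varvec{\alpha }\) and a normalised residual sum of squares for \(\beta \); this is our own derivation and is not claimed to reproduce the displayed expression of the paper. The function name `linear_model_mle`, the signal \(\varvec{f}(t)=(1,\cos t)\), \(\sigma ^2(t)=1\) and the sampling scheme are hypothetical.

```python
import numpy as np

def linear_model_mle(y, F, G2):
    """Closed-form Gaussian MLE for independent Y_i ~ N(alpha . F_i, beta * G2_i).

    y  : (n,)   observed increments
    F  : (n, a) integrals of f over each sampling interval
    G2 : (n,)   integrals of sigma^2 over each sampling interval
    """
    W = F / G2[:, None]                              # rows F_i / G2_i
    alpha_hat = np.linalg.solve(W.T @ F, W.T @ y)    # weighted least squares
    resid = y - F @ alpha_hat
    beta_hat = np.mean(resid ** 2 / G2)              # normalised residual variance
    return alpha_hat, beta_hat

rng = np.random.default_rng(1)
n = 20000
# Non-equidistant deterministic-looking design: delays between 0.02 and 0.08.
t = np.concatenate(([0.0], np.cumsum(rng.uniform(0.02, 0.08, size=n))))
dt = np.diff(t)
F = np.column_stack((dt, np.diff(np.sin(t))))        # exact integrals of (1, cos t)
G2 = dt                                              # integral of sigma^2(t) = 1
alpha0, beta0 = np.array([0.5, -1.0]), 2.0
y = F @ alpha0 + np.sqrt(beta0 * G2) * rng.standard_normal(n)

alpha_hat, beta_hat = linear_model_mle(y, F, G2)
print(alpha_hat, beta_hat)
```

The sampling instants are drawn once and then treated as fixed, which keeps the design deterministic from the point of view of the estimator.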

References

Blanke D, Vial C (2014) Global smoothness estimation of a Gaussian process from general sequence designs. Electron J Stat 8:1152–1187

Corduneanu C (1968) Almost periodic functions. Interscience Publishers Wiley, Hoboken

Dehay D, Dudek AE, El Badaoui M (2017) Bootstrap for almost cyclostationary processes with jitter effect. Digital Signal Proc 73:93–105

Dzhaparidze K, Valkeila E (1990) On the Hellinger type distances for filtered experiments. Probab Theory Relat Fields 85:105–117

Florens-Zmirou D (1989) Approximate discrete-time schemes for statistics of diffusion processes. Statistics 20(4):547–557

Genon-Catalot V (1990) Maximum contrast estimation for diffusion process from discrete observations. Statistics 21:99–116

Gushchin AA, Küchler U (2003) On parametric statistical models for stationary solutions of affine stochastic delay differential equations. Math Methods Stat 12(1):31–61

Gushchin A, Valkeila E (2017) Quadratic approximation for log-likelihood ratio processes. In: Panov Vladimir (ed) Modern problems of stochastic analysis and statistics, selected contributions in Honor of Valentin Konakov, Springer Proceedings in Mathematics & Statistics 208, pp 179–215. Springer International Publishing AG, Cham

Hájek J (1972) Local asymptotic minimax and admissibility in estimation. In: Proceedings of the sixth Berkeley symposium on mathematical statistics and probability

Höpfner R (2014) Asymptotic statistics with a view to stochastic processes. De Gruyter, Berlin

Ibragimov IA, Has’minskii RZ (1981) Statistical estimation, asymptotic theory. Springer, New York

Jacod J, Shiryaev AN (1987) Limit theorems for stochastic processes. Springer, Berlin

Kutoyants Y (1984) Parameter estimation for stochastic processes. In: Research and exposition in mathematics, vol 6. Heldermann-Verlag, Berlin

Le Cam L (1969) Théorie Asymptotique de la Décision Statistique. Presses de l’Université de Montréal, Montréal

Mishra MN, Prakasa Rao BLS (2001) Asymptotic minimax estimation in nonlinear stochastic differential equations from discrete observations. Stat Decis 19:121–136

Sacks J, Ylvisaker D (1968) Designs for regression problems with correlated errors: many parameters. Ann Math Stat 39:49–69

Uchida M, Yoshida N (2012) Adaptive estimation of an ergodic diffusion process based on sampled data. Stoch Process Appl 122:2885–2924

Acknowledgements

The authors express their gratitude to an anonymous referee for a thorough reading of the manuscript and for the valuable suggestion to deduce the main results using Hellinger-type distances. The authors would also like to thank Prof. Yury Kutoyants for helpful discussions.

Appendices

Appendix A

First we recall the definitions of some notions that are needed in the paper. For more information the readers are referred to Jacod and Shiryaev (1987) and Dzhaparidze and Valkeila (1990).

Let \({\mathrm {P}}\) and \(\tilde{{\mathrm {P}}}\) be two probability measures on a measurable space \((\varOmega ,{\mathcal {F}})\), and let \(Q\) be any measure dominating \({\mathrm {P}}\) and \(\tilde{{\mathrm {P}}}\). Put \(Z=d{\mathrm {P}}/dQ\) and \({\tilde{Z}}=d\tilde{{\mathrm {P}}}/dQ\). The Hellinger p-distance \(\rho _p({\mathrm {P}},\tilde{{\mathrm {P}}})\) of order \(p\ge 1\) between \({\mathrm {P}}\) and \(\tilde{{\mathrm {P}}}\) is defined by \(\rho _p^p({\mathrm {P}},\tilde{{\mathrm {P}}}) :={\mathrm {E}}_Q\big [|Z^{1/p}-{\tilde{Z}}^{1/p}|^p\big ],\) and \(\rho _2({\mathrm {P}},\tilde{{\mathrm {P}}})\) is simply called the Hellinger distance. The Hellinger integral of order \(\alpha \in (0,1)\) of \({\mathrm {P}}\) and \(\tilde{{\mathrm {P}}}\) is equal to \(H(\alpha ;{\mathrm {P}},\tilde{{\mathrm {P}}}) :={\mathrm {E}}_Q\big [Z^{\alpha }{\tilde{Z}}^{1-\alpha }\big ]\). When \(\alpha =1/2\), \(H({\mathrm {P}},\tilde{{\mathrm {P}}}):=H(1/2;{\mathrm {P}},\tilde{{\mathrm {P}}})\) is the Hellinger integral of \({\mathrm {P}}\) and \(\tilde{{\mathrm {P}}}\), and \(H({\mathrm {P}},\tilde{{\mathrm {P}}})=1-\frac{1}{2}\rho _2^2({\mathrm {P}},\tilde{{\mathrm {P}}})\). Finally, we introduce the symmetrized Kullback-Leibler divergence by \(J({\mathrm {P}},\tilde{{\mathrm {P}}}):={\mathrm {E}}_Q\big [Z\ln (Z/{\tilde{Z}})+{\tilde{Z}}\ln ({\tilde{Z}}/Z)\big ]\) if \({\mathrm {P}}\sim \tilde{{\mathrm {P}}}\), and \(J({\mathrm {P}},\tilde{{\mathrm {P}}})=\infty \) otherwise. It is easy to verify that none of the previous definitions depends on the choice of \(Q\).
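
These definitions can be evaluated numerically for two normal distributions on \({\mathbb {R}}\), taking for \(Q\) the Lebesgue measure so that \(Z\) and \({\tilde{Z}}\) are the usual densities. The sketch below approximates \(\rho _2^2\) and \(H\) by a Riemann sum and compares \(H\) with the standard closed form of the Hellinger integral of two normal laws, \(\sqrt{2\sigma \tilde{\sigma }/(\sigma ^2+\tilde{\sigma }^2)}\,\exp \big (-(\mu -\tilde{\mu })^2/(4(\sigma ^2+\tilde{\sigma }^2))\big )\). The function names, the integration window and the parameter values are our own choices.

```python
import numpy as np

def normal_pdf(x, mu, var):
    """Density of N(mu, var) with respect to the Lebesgue measure."""
    return np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

def hellinger_quantities(mu, var, mu_t, var_t, p=2, half_width=40.0, m=400001):
    """Return (rho_p^p, H) for N(mu, var) and N(mu_t, var_t) by a Riemann sum."""
    x = np.linspace(-half_width, half_width, m)
    dx = x[1] - x[0]
    z, z_t = normal_pdf(x, mu, var), normal_pdf(x, mu_t, var_t)
    rho_pp = np.sum(np.abs(z ** (1.0 / p) - z_t ** (1.0 / p)) ** p) * dx
    H = np.sum(np.sqrt(z * z_t)) * dx
    return rho_pp, H

mu, var, mu_t, var_t = 0.0, 1.0, 0.7, 1.5
rho22, H = hellinger_quantities(mu, var, mu_t, var_t)

# Standard closed form of the Hellinger integral of two normal laws.
H_closed = np.sqrt(2.0 * np.sqrt(var * var_t) / (var + var_t)) \
    * np.exp(-(mu - mu_t) ** 2 / (4.0 * (var + var_t)))

print(H, H_closed, 1.0 - 0.5 * rho22)
```

The three printed values should agree up to the discretisation error, which illustrates the relation \(H=1-\frac{1}{2}\rho _2^2\).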

Next, we establish some technical results for non-centered normal distributions. These results have been stated in Lemmas 4.1 and 4.2 of Gushchin and Küchler (2003) in the case of zero-mean normal distributions.

First we consider two normal distributions on \({\mathbb {R}}\).

Lemma 1

Consider two normal distributions \(\varrho ={\mathcal {N}}(\mu ,\sigma ^2)\) and \(\tilde{\varrho }={\mathcal {N}}(\tilde{\mu },\tilde{\sigma }^2)\) on \({\mathbb {R}}\). Let \(\varrho _o={\mathcal {N}}(0,1)\) and \(\tilde{\varrho }'={\mathcal {N}}(x,1+y)\) where \(x=(\tilde{\mu }-\mu )/\sigma \) and \(y=(\tilde{\sigma }^2-\sigma ^2)/\sigma ^2\). Then,

and for every \(p\ge 1\) there exists \(C_p>0\), such that

Moreover,

as \(|x|+|y| \rightarrow 0\).

Proof

The lemma can be established with elementary computations. The proof is left to the reader (see Gushchin and Küchler 2003). Notice that \(1+y=\tilde{\sigma }^2/\sigma ^2>0\). \(\square \)

When \(\varOmega ={\mathbb {R}}^{n}\), \({\mathrm {P}}_n=\varrho _1\times \cdots \times \varrho _n\) and \(\tilde{{\mathrm {P}}}_n=\tilde{\varrho }_1\times \cdots \times \tilde{\varrho }_n\) where \(\varrho _i\) and \(\tilde{\varrho }_i\), \(i=1,\dots ,n\) are probability measures on \({\mathbb {R}}\), it is easy to verify

Lemma 2

If \(\varrho _i\) and \(\tilde{\varrho }_i\) are any normal distributions, then

For every even integer \(p\ge 2\) there exists \(C_p>0\) depending only on \(p\) such that

Moreover, there exists \(C>0\) such that, for \(\varrho _i={\mathcal {N}}(\mu _i,\sigma _i^2)\) and \(\tilde{\varrho }_i={\mathcal {N}}(\tilde{\mu }_i,\tilde{\sigma }_i^2)\), with \(x_i=(\tilde{\mu }_i-\mu _i)/\sigma _i\) and \(y_i=(\tilde{\sigma }_i^2-\sigma _i^2)/\sigma _i^2\), we have:

Proof

-

(i)

Inequalities (17) are a direct consequence of Lemma 1 and of the inequalities \(1+\sum _{i} a_i\le \prod _{i} (1+a_i)\le \exp \left( \sum _{i} a_i\right) \) for \(a_i\ge 0\), \(i=1,\dots \)

-

(ii)

To prove inequality (18) we adapt the proof given by Gushchin and Küchler (2003) for normal distributions with zero means. First, let \(p>2\) be an even integer. From relation (4.1) in Gushchin and Küchler (2003) (see also Corollary 3.1 in Dzhaparidze and Valkeila 1990), we know that

$$\begin{aligned} \rho _p^p({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\le & {} C_p\left( \left( \frac{1}{2}\sum _{i=1}^n\rho _2^2(\varrho _i,\tilde{\varrho }_i)\right) ^{p/2}+\sum _{i=1}^n\rho _p^p(\varrho _i,\tilde{\varrho }_i)\right) \end{aligned}$$for some \(C_p>0\). Thanks to equalities (12) and (13), we can assume without loss of generality that \(\varrho _i={\mathcal {N}}(0,1)\) and \(\tilde{\varrho }_i={\mathcal {N}}(x_i,1+y_i)\) with \(y_i>-1\). Then inequality (14) and the fact that \(p\ge 2\) imply that

$$\begin{aligned} \rho _p^p({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n) \le C_p'\left( \sum _{i=1}^n (x_i^2+y_i^2)\right) ^{p/2} \qquad (20) \end{aligned}$$for some \(C_p'>0\). Hence inequality (19) is proved. From relation (13) applied to \(J(\varrho _i,\tilde{\varrho }_i)\), we also deduce that

$$\begin{aligned} \rho _p^p({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\le C_p'(1+\sup _iy_i)^{p/2}\big (J({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\big )^{p/2}. \end{aligned}$$If \(\rho _2^2({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\ge 1\) we trivially have that \(\rho _p^p({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\le 2\le 2\rho _2^p({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\). Assume now that \(\rho _2^2({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\le 1\), that is, \(H({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\ge \frac{1}{2}\). In this case, relation (17) entails that \(J({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\le 30\rho _2^2({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\). Moreover, since Hellinger integrals are bounded by 1, from equalities (16) and (12) we obtain that

$$\begin{aligned} 2^4\ge H^{-4}({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n)\ge H^{-4}(\varrho _i,\tilde{\varrho }_i)\ge 1+\frac{y_i^2}{4(1+y_i)} \end{aligned}$$and \(y_i\le y_o:=30+8\sqrt{15}\), \(i=1,\dots ,n\). Then inequality (20) entails that

$$\begin{aligned} \rho _p^p({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n) \le 60C_p'(1+y_o)^{p/2}\rho _2^{p}({\mathrm {P}}_n,\tilde{{\mathrm {P}}}_n). \end{aligned}$$Hence inequality (18) is proved. \(\square \)

Appendix B

To illustrate assumptions (A3) and (A4) we state the following results, whose proofs are left to the reader (see also the particular case of the linear parameter model in Sect. 5).

Almost periodic functions. First recall that a function \(t\mapsto \phi (\varvec{\chi },t)\) is almost periodic in \({\mathbb {R}}\) uniformly with respect to \(\varvec{\chi }\) varying in a set \(X\) when for every \(\epsilon >0\), there exists \(l_{\epsilon }>0\) such that for any \(a\in {\mathbb {R}}\) there is \(\rho \in [a,a+l_{\epsilon }]\) for which

See e.g. Chapters II and IV in Corduneanu (1968). As an example, let \(k\) be a positive integer and let \(\lambda _1,\dots ,\lambda _k\) be \(k\) distinct real numbers. Then the function \(\phi (\varvec{\chi },t)=\chi _1\cos (\lambda _1t)+\cdots +\chi _k\cos (\lambda _kt)\) is almost periodic in \(t\) uniformly with respect to \(\varvec{\chi }=(\chi _1,\dots ,\chi _k)\) varying in any compact subset \(X\) of \({\mathbb {R}}^k\).
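
A key property of almost periodic functions is the existence of time averages, which is what makes limits such as \(\varvec{J}_a^{(\varvec{\theta })}\) well defined. The sketch below illustrates this on the trigonometric example above: under the additional assumption that the frequencies are positive and distinct, the mean square \(T^{-1}\int _0^T\phi (\varvec{\chi },t)^2\,dt\) tends to \(\frac{1}{2}\sum _k\chi _k^2\) as \(T\rightarrow \infty \). The coefficients, the frequencies and the quadrature step are arbitrary choices made for illustration.

```python
import numpy as np

def phi(chi, lam, t):
    """phi(chi, t) = sum_k chi_k * cos(lam_k * t), evaluated on an array of times."""
    return np.cos(np.outer(t, lam)) @ chi

chi = np.array([1.0, -0.5, 2.0])
lam = np.array([1.0, np.sqrt(2.0), np.pi])   # assumed distinct and positive

limit = 0.5 * np.sum(chi ** 2)               # theoretical mean square, here 2.625
for T in (10.0, 100.0, 1000.0):
    m = int(200 * T)
    t = (np.arange(m) + 0.5) * (T / m)       # midpoint rule on [0, T]
    mean_square = np.mean(phi(chi, lam, t) ** 2)
    print(T, mean_square, limit)
```

Since the frequencies are incommensurable the function is not periodic, yet the averages stabilise at rate \(1/T\).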

Now assume that the function \(t\mapsto \big (f(\varvec{\alpha },t),\nabla \!_{\varvec{\alpha }}f(\varvec{\alpha },t)\big )\) is almost periodic in \({\mathbb {R}}\) uniformly with respect to \(\varvec{\alpha }\) varying in \(\varvec{A}\), the function \(t\mapsto \big (\sigma ^2(\varvec{\beta },t),\nabla \!_{\varvec{\beta }}\sigma ^2(\varvec{\beta },t)\big )\) is almost periodic in \({\mathbb {R}}\) uniformly with respect to \(\varvec{\beta }\) varying in \(\varvec{B}\), \(\inf _{\varvec{\beta },t}\sigma ^2(\varvec{\beta },t)>0\) and \(h_n\rightarrow 0\) as \(n\rightarrow \infty \). Then \(\varvec{J}_a^{(\varvec{\theta })}\) and \(\mu _a(\varvec{\alpha },\varvec{\alpha }')\) exist and

the convergences being uniform with respect to \(\varvec{\alpha }\) and \(\varvec{\alpha }'\) varying in \(\varvec{A}\) and with respect to \(\varvec{\beta }\) varying in \(\varvec{B}\). Notice that \(\mu _a(\varvec{\alpha },\varvec{\alpha }')>0\) as soon as there exists t such that \(f(\varvec{\alpha },t)\ne f(\varvec{\alpha }',t)\). If in addition, \(t_{n,i}-t_{n,i-1}=h_n \) then \(\varvec{J}_b^{(\varvec{\beta })}\) and \(\mu _b(\varvec{\beta },\varvec{\beta }')\) exist and

the convergences being uniform with respect to \(\varvec{\beta }\) and \(\varvec{\beta }'\) varying in \(\varvec{B}\).

Periodic functions. When the functions \(f(\varvec{\alpha },t)\) and \(\sigma ^2(\varvec{\beta },t)\) are periodic in \(t\) with the same period \(P>0\), we obtain expressions for \(\varvec{J}_a^{(\varvec{\theta })}\), \(\mu _a(\varvec{\alpha },\varvec{\alpha }')\), \(\varvec{J}_b^{(\varvec{\beta })}\) and \(\mu _b(\varvec{\beta },\varvec{\beta }')\). For continuous functions when \(h_n\rightarrow 0\) we have the relations:

and

If in addition, \(t_{n,i}-t_{n,i-1}=h_n \) then

and

Now we no longer assume that the delays between two observations tend to 0, but we assume that the sampling scheme has a periodic structure, that is, the instants of observation are defined as \(0\le t_0<\cdots <t_\nu =P\), and \(t_{i+j\nu }=t_i+jP\), for some \(\nu \in {\mathbb {N}}\), and for any \(i=0,\dots ,\nu \) and any \(j\in {\mathbb {N}}\). Then we obtain that

and

where we have omitted the unnecessary index n. Furthermore

and

Furthermore when we assume that \(t_{i}-t_{i-1}=h>0\) fixed and \(P=\nu h\), \(\nu \in {\mathbb {N}}\), we obtain that

and

Cite this article

Dehay, D., El Waled, K. & Monsan, V. Efficient parametric estimation for a signal-plus-noise Gaussian model from discrete time observations. Stat Inference Stoch Process 24, 17–33 (2021). https://doi.org/10.1007/s11203-020-09225-1
