Abstract

The discrepancies among various global university rankings motivate us to compare and correlate their results. To this end, the 2015 results of six major global rankings are collected, compared and analyzed qualitatively and quantitatively using both the ranking orders and the scores of the top 100 universities. The six selected global rankings are: Academic Ranking of World Universities (ARWU), Quacquarelli Symonds World University Ranking (QS), Times Higher Education World University Ranking (THE), US News & World Report Best Global University Rankings (USNWR), National Taiwan University Ranking (NTU), and University Ranking by Academic Performance (URAP). Two indexes are used for comparison, namely the number of overlapping universities and Pearson’s/Spearman’s correlation coefficients between each pair of the six rankings. The study is extended to investigate the intra-correlation of ARWU results for the top 100 universities over a 5-year period (2011–2015) and the correlation of the ARWU overall score with its single indicators. The ranking results of the 49 universities that appear in the top 100 of all six rankings are compared and discussed. A careful analysis of the key performance indicators of these 49 universities makes it possible to define the common features of a world-class university. The findings indicate that, although each ranking system applies a different methodology, the correlations among the six rankings range from moderate to high. To see how the correlation behaves at different levels, correlations are also computed for the top 50 and the top 200 universities. The comparison indicates that the degree of correlation and the number of overlapping universities increase as the list length increases. URAP and NTU show the strongest correlation among the studied rankings.
In short, a careful understanding of the various ranking methodologies is of utmost importance before analyzing, interpreting and using ranking results. The findings of the present study could inform policy makers at various levels in developing policies aimed at improving performance and thereby enhancing ranking positions.

Introduction

Global university rankings have reshaped the context of higher education and have gained increasing interest and importance among a broad range of stakeholders and interest groups, including students, parents, institutions, academics, policy makers, political leaders, donors/funding agencies and the news media. These groups use ranking results for different purposes (Bastedo and Bowman 2011; Dill and Soo 2005; Hazelkorn 2008, 2009, 2014, 2015a, b; Marope et al. 2013; Salmi and Saroyan 2007). This interest is evident in many signs, such as the growing numbers of ranking systems, of conferences, meetings and training courses on rankings, and of visitors to ranking websites, as well as in advertising.

Global rankings benchmark institutions, fields, subjects and scientists worldwide according to various indicators. These indicators are largely research oriented, using bibliometric measures retrieved from the Web of Science, Scopus or Google Scholar databases. Because global rankings define university excellence in different ways, they use different criteria and weights. The Academic Ranking of World Universities (ARWU) is based on excellent academic achievement measured with ‘superstar’ indicators. The Quacquarelli Symonds World University Ranking (QS) and the Times Higher Education World University Ranking (THE) focus on the reputation and internationalization of universities. The University Ranking by Academic Performance (URAP) and the National Taiwan University Ranking (NTU) focus only on scientific research performance. The US News & World Report Best Global University Rankings (USNWR) emphasize academic research and overall reputation.

Inevitably, global rankings with different methodologies may produce different results. It is therefore of utmost importance for all stakeholders to know whether a university that is good in one ranking system is also good in others that apply different indicators. To answer this question concretely, the present study analyzes in depth the correlation among the 2015 results of six global rankings.

Related literature

Comparative studies of ranking systems have attracted considerable research interest, owing to the differences in methodologies that affect ranking results. These comparative studies have passed through several stages, starting with comparisons focused on methodologies, such as the indicators employed, their weights and their emphases. Bowden (2000) divided the indicators into teaching quality, research quality, educational infrastructure, etc. Dill and Soo (2005) classified the indicators into input, process and output/outcome, whereas Usher and Savino (2007) grouped ranking indicators into seven categories, namely beginning characteristics, learning inputs-staff, learning inputs-resources, learning outputs, financial outcomes, research, and reputation. As stated by Cakir et al. (2015), these comparative studies are mainly based on qualitative analysis rather than on quantitative measures such as correlation coefficients and overlap. However, several quantitative comparative studies have also been conducted (Aguillo et al. 2010; Chen and Liao 2012; Cheng 2011; Hou et al. 2011; Huang 2011; Khosrowjerdi and Seif Kashani 2013).

Aguillo et al. (2010) used three rank-order similarity measures to study the correlations among the 2008 results of five global ranking systems, namely ARWU, THES–QS, Webometrics, HEEACT (NTU in this study) and the ranking of the Center for Science & Technology Studies at Leiden University (CWTS). They estimated the degree of similarity in the top 10, 100, 200 and 500 universities. They found high similarity between ARWU and HEEACT, whereas the biggest differences were between THES–QS and Webometrics. Hou et al. (2011) studied the 2009 results of ARWU, THES–QS, THE and HEEACT. For universities between the top 30 and the top 100, they found a strong correlation between the ARWU total ranking and its single indicators ‘Highly cited researchers in 21 broad subject categories’ (HiCi, over 0.8) and ‘Articles published in Nature and Science’ (N&S, over 0.9). Moreover, the HEEACT total ranking had a strong correlation (0.8) with all of its single indicators except the ‘number of articles’ of the last 11 years. Huang (2011) used the top 20 universities of ARWU, THE, QS and HEEACT for 2010 and concluded that the ARWU and HEEACT ranking results were similar, as were the THE and QS results. Cheng (2011) analyzed the 2011 ranking results of the top 100 institutions in three global ranking systems, namely ARWU, QS and THE. He found that only 35 universities appeared in all three rankings. He also calculated correlation coefficients of 0.42, 0.54 and 0.7 for the QS/THE, QS/ARWU and ARWU/THE ranking pairs, respectively. The coefficients were positive and significant at p < 0.05.

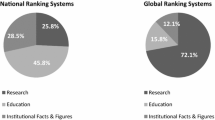

Chen and Liao (2012) investigated the correlation of the results of four global rankings and their indicators over a 4-year period (2007–2010). They found that the overlap rate of the top 200 in ARWU, THES–QS and HEEACT was 55 % and decreased to 41 % when the Webometrics results were also considered. The strongest correlation was between ARWU and HEEACT. They also observed strong correlations between the overall ranking and single indicators, such as between the ARWU overall ranking and ‘highly cited papers’ and ‘publications in Nature and Science.’ Khosrowjerdi and Seif Kashani (2013) studied the similarities and status of top Asian universities in the lists of the top 200 universities in six global rankings for 2010, namely ARWU, QS, THE, HEEACT, Webometrics and CWTS. They concluded that there were some parallels among these international rankings. The Spearman’s correlation coefficients were 0.78, 0.53 and 0.58 for the QS/Webometrics, QS/THE and ARWU/HEEACT ranking pairs, respectively. Cakir et al. (2015) conducted a comprehensive systematic comparison of national and global university ranking systems in terms of indicators, coverage and ranking results (rank order). They observed that national rankings had a large number of indicators, mainly focused on educational and institutional measures, whereas global rankings were characterized by fewer indicators focused on research.

Although previous studies argued that there are both similarities and differences among ranking systems, most of these studies are based on qualitative analysis. Moreover, the studies that employed quantitative indexes largely focused on rank-order correlations or overlap. Quantitative studies are clearly scarce, and more are needed to deepen our knowledge and understanding. In short, the literature review shows that comparative studies of ranking systems focus on three dimensions. The first is based on ranking methodologies, whereas the second and third are based on ranking results using rank order and scores, respectively. The first dimension has attracted many authors, whereas the second and third dimensions have emerged in recent years, with more focus on correlations using rank order.

To our knowledge, the present study is the first to use six global rankings for a quantitative correlation analysis based on both the rank orders and the scores of the top 100 universities in their lists. It is thus a comprehensive study that collects a broad range of global ranking results produced by different methodologies. The study focuses only on web-independent global rankings in order to analyze in depth and define the similarities among the six major ranking systems.

The present work therefore aims to explore the degree of consistency or inconsistency among the results of the six selected global rankings in evaluating and ranking the top 100 universities, using both quantitative and qualitative comparisons. The work is extended to suggest how ranking results can be used positively to enhance the performance and ranking of higher education institutions. Moreover, the universities common to all six global rankings are analyzed to identify the reasons behind their outstanding status. This is in line with the current open and healthy debate, which can help ranking providers improve their methodologies by responding to the views of experts and stakeholders.

Research questions

In light of the literature review, the following research questions are posed:

-

To what extent do the six most popular global ranking systems produce comparable ranking results and scores?

-

How does the correlation behave at different levels?

-

What are the reasons for consistency or inconsistency among the six ranking results?

-

What are the major indicators that influence the ranking results of ARWU?

-

Which universities are covered in the top 100 of all six studied rankings?

-

What are the main features of a world-class university (WCU)?

-

What are the policy implications of the findings of this study?

Study design, data and methods

The study focuses on the top 100 universities of six major global rankings, namely Academic Ranking of World Universities (ARWU), Quacquarelli Symonds World University Ranking (QS), Times Higher Education World University Ranking (THE), US News & World Report Best Global University Rankings (USNWR), National Taiwan University Ranking (NTU), and University Ranking by Academic Performance (URAP). These six rankings were selected because of their visibility, the availability of information about their methodologies, and the wide range of indicators they employ, which offers an opportunity for in-depth analysis and understanding. They have different characteristics, such as different critical indicators, numbers of indicators (6–13) and weights, data sources, purposes and publishers. These features enrich the present study and open different dimensions for interpretation. Four of the six rankings (ARWU, USNWR, NTU and URAP) base their methodologies on research metrics, whereas the other two (QS and THE) rely mainly on reputation surveys. The Leiden and SCImago rankings were excluded because overall ranking data were not available, and the Webometrics ranking was excluded because it does not publish scores for ranked universities. Complete methodologies and information are available on the official websites of the ranking providers (ARWU, NTU, URAP, USNWR, QS and THE).

The 2015 results of the six global rankings were collected for the top 100 universities, including the universities’ names, ranking positions and scores. Because ARWU publishes scores only for the top 100, the study was limited to the top 100 for all six ranking systems. In addition, data were collected for the top 50 (all six rankings) and the top 200 (five rankings, excluding ARWU) to test the effect of the list length on the correlation.

The data were analyzed with SPSS (version 22) by comparing each pair of ranking systems through Pearson’s and Spearman’s correlation coefficients, examined using both the ranking order and the score achieved by each university. Correlation coefficients were calculated only for the universities overlapping in each pair of global rankings.
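The pairwise comparison described above can be sketched in Python. This is a minimal illustration, not the SPSS procedure used in the study. It assumes that each ranking is given as a hypothetical dictionary mapping a university name to its (rank, overall score). The sketch restricts the comparison to universities present in both rankings, computes Spearman's rho on the rank positions (using average ranks for ties) and Pearson's r on the scores, and relies only on the Python standard library. The toy data are invented.

```python
import math


def pearson(x, y):
    """Pearson's product-moment correlation for two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2 items)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: Pearson's r computed on the re-ranked values."""
    return pearson(average_ranks(x), average_ranks(y))


def compare_pair(ranking_a, ranking_b):
    """Overlap count plus correlations, using only universities in both lists."""
    common = sorted(set(ranking_a) & set(ranking_b))
    ranks_a = [ranking_a[u][0] for u in common]
    ranks_b = [ranking_b[u][0] for u in common]
    scores_a = [ranking_a[u][1] for u in common]
    scores_b = [ranking_b[u][1] for u in common]
    return {
        "N": len(common),
        "spearman_rank": spearman(ranks_a, ranks_b),
        "pearson_score": pearson(scores_a, scores_b),
    }


# Hypothetical toy data (not from the study): university -> (rank, overall score)
system_a = {"U1": (1, 100.0), "U2": (2, 80.0), "U3": (3, 70.0), "U4": (4, 60.0)}
system_b = {"U1": (1, 98.0), "U3": (2, 90.0), "U2": (3, 85.0), "U5": (4, 80.0)}
result = compare_pair(system_a, system_b)
print(result["N"], round(result["spearman_rank"], 3))  # 3 0.5
```

Only U1, U2 and U3 appear in both toy lists, so N is 3. Universities found in just one list are dropped before any coefficient is computed, which mirrors the "overlapping universities only" rule stated above.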

Results and discussion

The six selected global ranking systems were compared using two indexes, namely the number of overlapping universities between each pair of rankings and the Pearson’s and Spearman’s correlation coefficients, calculated from the score or the ranking order of each university. Unless otherwise stated, all results and discussion refer to the top 100 universities in the six global rankings.

Inter-system overlapping of universities

The results for the top 100 (Table 1) indicate that the number of overlapping universities ranges from 61 to 91, depending on the ranking pair. The maximum overlap occurs between URAP and NTU, whereas the lowest is between QS and ARWU. This can be explained as follows: both the URAP and NTU methodologies are based 100 % on bibliometric indicators, and both use the Web of Science (WoS) database of Thomson Reuters. On the other hand, the lowest overlap, between QS and ARWU, is due to differences in their methodologies on several points:

-

ARWU emphasizes only academic achievement, whereas QS includes non-academic indicators

-

Both systems have different weights for research-based indicators

-

The data sources for research indicators differ: QS uses the Scopus database, whereas ARWU uses WoS

-

ARWU uses only quantitative data from open sources, whereas QS collects critical data (the numbers of overall and international academic staff and students) from the universities themselves and gathers people’s views through questionnaire surveys. Fifty percent of the QS score is based on two reputation surveys (academics and employers), whereas ARWU uses no surveys.

Although THE also relies on surveys, the weight of its reputation indicators (33 %) is lower than that of QS (50 %), and accordingly more universities overlap between ARWU and THE (68) than between ARWU and QS (61). Such opinion-based indicators are subjective and questionable with regard to the selection of academics and employers and their response rates (Chen and Liao 2012; Cheng 2011). Different respondents are invited to participate in the surveys; they are not familiar with all universities, and their opinions are influenced much more by a university’s popularity than by its actual performance or current degree of excellence (Cheng 2011).

USNWR, on the other hand, compares universities globally and focuses on academic research and overall reputation. It uses 10 indicators in three groups: two reputation indicators (25 %), six bibliometric indicators (65 %) and two school-level indicators (10 %). Thus eight of the ten indicators are research indicators, accounting for 90 % of the total score. It is therefore not surprising that USNWR shares more universities (77–81) with all the other studied rankings except QS (66). The numbers of overlapping universities between USNWR and URAP, NTU, THE, ARWU and QS are 81, 81, 78, 77 and 66, respectively.

Inter-system ranking correlation

Correlation based on rank order The results for the top 100 show that the rank-order correlation coefficients, Spearman’s rho (Table 1), are positive for all pairs of ranking systems. The coefficients range from 0.538 to 0.933, indicating moderate to very high correlations among the six ranking results.

Correlation based on scores Data treatment is a common problem for all ranking systems (Harvey 2008). Differences in overall scores are used to rank institutions even when they are so small that they can fairly be considered statistically insignificant (Cheng 2011; Harvey 2008). Taking the standard error of measurement into account, universities with small differences in scores might deserve the same ranking position (Cheng 2011; Soh 2011a, b). Table 2 summarizes the scores of the universities ranked 1, 2, 10, 50 and 100 in the six global rankings, as well as the score differences over four intervals, from rank 1 to ranks 2, 10, 50 and 100. The score differences for any interval are clearly not constant but vary with the ranking system. For example, the differences between the universities ranked 1 and 100 are 76.10, 34.00, 36.04, 38.84, 39.08 and 31.20 for ARWU, USNWR, URAP, NTU, THE and QS, respectively, and the same holds for the differences from rank 1 to ranks 2, 10 and 50. Notably, the difference between ranks 1 and 2 in ARWU is 26.70, whereas it ranges only from 1.05 to 5.70 in the other five rankings. These findings indicate that ranking positions alone do not give the full picture, and that scores provide a much better understanding of the performance of universities worldwide. The present study therefore also compares the six global rankings using scores.
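The interval arithmetic behind Table 2 can be illustrated with a short sketch. It assumes a hypothetical list of overall scores ordered by rank (index 0 is rank 1). For each target rank, it reports the score gap from rank 1 and the average score drop per rank position. The two toy score lists are invented. They show how two systems with identical rank orders can differ sharply in how far apart their universities actually are.

```python
def score_gaps(scores_by_rank, targets=(2, 10, 50, 100)):
    """Gap between the rank-1 score and the score at each target rank.

    scores_by_rank: overall scores sorted by rank (index 0 = rank 1).
    Returns {rank: (gap, average drop per rank position)}.
    """
    top = scores_by_rank[0]
    gaps = {}
    for rank in targets:
        if rank <= len(scores_by_rank):
            gap = top - scores_by_rank[rank - 1]
            gaps[rank] = (round(gap, 2), round(gap / (rank - 1), 3))
    return gaps


# Hypothetical systems with the same rank order but different score spreads
steep = [100.0] + [74.0 - 0.5 * i for i in range(99)]  # large drop after rank 1
flat = [100.0 - 0.3 * i for i in range(100)]           # evenly spaced scores

print(score_gaps(steep)[2])  # (26.0, 26.0)
print(score_gaps(flat)[2])   # (0.3, 0.3)
```

Both toy systems place the same university second. However, the first gap in `steep` is almost 90 times larger than the one in `flat`, which is the kind of information a rank position alone hides.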

Since the ranks assigned to universities depend on the overall scores they achieve, it is of interest to explore the correlation among the top 100 universities using the overall scores of the six global rankings. Accordingly, Pearson’s correlation coefficients were calculated (Table 1). Again, all Pearson’s r values are positive, ranging from 0.551 to 0.964, which indicates moderate to very high correlations among the six global ranking results. Furthermore, all correlation coefficients based on scores are higher than the corresponding rank-order values, except those between ARWU and THE and between ARWU and QS.

The following general findings can be concluded from Table 1:

-

QS ranking results (ranking order or score) are most consistent with those of THE. This is not surprising, since both emphasize university reputation and peer review.

-

In general, all four rankings (ARWU, URAP, NTU and USNWR) correlate more strongly with THE than with QS. QS places more emphasis on qualitative reputation (50 %) than THE (33 %) and less emphasis on evidence-based research indicators (20 % versus 38.5 %): QS uses citations per faculty (20 %), whereas THE uses citations (30 %), international collaboration papers (2.5 %) and publications per academic staff (6 %).

-

The non-survey-based rankings (ARWU, URAP and NTU) correlate with the survey-based rankings in the following order of strength: USNWR > THE > QS. This agrees with the weights allocated to reputation surveys: USNWR (25 %), THE (33 %) and QS (50 %). QS conducts two reputation surveys (academics 40 % and employers 10 %), THE conducts two reputation surveys (research excellence 18 % and teaching 15 %), and USNWR employs two research reputation surveys (global 12.5 % and regional 12.5 %).
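The weight arithmetic used in these findings can be checked with a short sketch. The percentages below are exactly those quoted in the text. The dictionary layout and the function name are illustrative only. Sorting by total reputation weight gives the order USNWR, THE, QS, which matches the order of correlation strength with the non-survey rankings reported above.

```python
# Reputation-survey weights (%) as quoted in the text
REPUTATION = {
    "QS": {"academic": 40, "employer": 10},
    "THE": {"research excellence": 18, "teaching": 15},
    "USNWR": {"global research": 12.5, "regional research": 12.5},
}

# Research (bibliometric) weights (%) quoted for QS and THE
RESEARCH = {
    "QS": {"citations per faculty": 20},
    "THE": {"citations": 30, "international collaboration papers": 2.5,
            "publications per academic staff": 6},
}


def total_weight(components):
    """Sum the percentage weights of one ranking's components."""
    return sum(components.values())


reputation_totals = {name: total_weight(c) for name, c in REPUTATION.items()}
# Lowest reputation weight first: the observed order of correlation strength
by_reputation = sorted(reputation_totals, key=reputation_totals.get)
print(reputation_totals)  # {'QS': 50, 'THE': 33, 'USNWR': 25.0}
print(by_reputation)      # ['USNWR', 'THE', 'QS']
print({name: total_weight(c) for name, c in RESEARCH.items()})  # {'QS': 20, 'THE': 38.5}
```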

Effect of the list length of top universities

To test the effect of the list length on the correlation, the number of overlapping institutions (N) and the correlation coefficients were calculated for the top 50, top 100 and top 200 (Table 1). The degree of correlation was assessed using the criteria suggested by Aguillo et al. (2010): very high for coefficients >0.9, high for values between 0.7 and 0.9, medium for values between 0.4 and 0.7, low for values between 0.2 and 0.4 and negligible for values <0.2. Applying these criteria to the top 100, six ranking pairs (QS/ARWU, QS/USNWR, QS/URAP, QS/NTU, THE/NTU, THE/URAP) show medium consistency, seven pairs (ARWU/THE, THE/QS, USNWR/NTU, THE/USNWR, ARWU/NTU, ARWU/URAP, URAP/USNWR) show high consistency and two pairs (ARWU/USNWR, URAP/NTU) show very high consistency. In other words, nine of the 15 ranking pairs (60 %) are highly or very highly consistent. Similarly, seven of 15 ranking pairs (46.7 %) are highly or very highly consistent for the top 50, whereas seven of 10 ranking pairs (70 %) are highly or very highly consistent for the top 200 (Table 3). ARWU is excluded from the top 200 because it publishes overall scores for only the top 100 universities. A careful inspection of the data for the three lists shows the following:

-

The percentage of overlapping universities increases with the list length for most pairs of rankings.

-

The correlation coefficients, both score-based (Pearson) and rank-based (Spearman), become higher as the list length increases for most pairs of rankings.

-

The share of pairs with high or very high consistency is ordered as follows: top 200 (70 %) > top 100 (60 %) > top 50 (46.7 %).

-

The share of pairs with medium or low correlation is ordered as follows: top 50 (53.3 %) > top 100 (40 %) > top 200 (30 %).

All of these findings indicate that the degree of correlation and the number of overlapping universities increase with the list length. This agrees well with the findings of Aguillo et al. (2010), who used four list lengths (top 10, 100, 200 and 500) for the 2008 results of four rankings (ARWU, THES–QS, Webometrics and NTU, previously HEEACT).
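One mechanism behind the list-length effect can be explored with a sketch that counts how many universities two rankings share within their top k, for several cutoffs. The sketch assumes that each ranking is a hypothetical Python list of university names in rank order. A university placed just inside one list and just outside the other counts as a mismatch at a short cutoff, but it becomes shared once the cutoff is extended. The toy lists are invented.

```python
def top_k_overlap(order_a, order_b, k):
    """Number of universities appearing in the top k of both rank-ordered lists."""
    return len(set(order_a[:k]) & set(order_b[:k]))


def overlap_profile(order_a, order_b, cutoffs):
    """Overlap count and its percentage of the list length for each cutoff k."""
    profile = {}
    for k in cutoffs:
        shared = top_k_overlap(order_a, order_b, k)
        profile[k] = (shared, round(100 * shared / k, 1))
    return profile


# Hypothetical rank orders (not from the study)
order_a = ["U1", "U2", "U3", "U4", "U5", "U6", "U7", "U8"]
order_b = ["U3", "U1", "U6", "U2", "U8", "U4", "U5", "U7"]
print(overlap_profile(order_a, order_b, cutoffs=(2, 4, 8)))
# {2: (1, 50.0), 4: (3, 75.0), 8: (8, 100.0)}
```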

Intra-system correlation patterns

ARWU was selected as the case study for the intra-system correlation patterns because it was the first global ranking, published in 2003. It has also been described as the most valid, credible and realistic ranking system (Marginson 2007) and as a good indicator of university excellence (Taylor and Braddock 2007). Two investigations were made: longitudinal and indicator-based.

Longitudinal intra-system correlation The year-to-year correlation coefficients of ARWU over the 5-year period (2011–2015) are all positive and ≥0.991, revealing that the overall ARWU scores are highly consistent between any two years in 2011–2015. Moreover, the numbers of universities common to the ARWU 2015 top 100 and the top 100 lists of 2014, 2013, 2012 and 2011 are 97, 93, 93 and 92, respectively. These findings indicate that the similarity among the results of a ranking system is very high, especially when its methodology does not change, as with ARWU over the studied period (2011–2015). They agree well with those of Aguillo et al. (2010), who used similarity measures for the ARWU ranking over the 4-year period 2005–2008.

Indicator-based intra-system correlation The correlation between the overall ARWU 2015 scores and the scores of its individual indicators was investigated for the top 100 universities. All indicators correlate positively with the total score, with Pearson’s r values greater than 0.6. The values are 0.930, 0.865, 0.839, 0.804, 0.712 and 0.607 for the correlations between the overall ARWU score and, respectively, Articles published in Nature and Science (N&S), Highly cited researchers in 21 broad subject categories (HiCi), Staff winning Nobel prizes and Fields medals (Award), Alumni of an institution winning Nobel prizes and Fields medals (Alumni), Per capita academic performance of an institution (PCP), and Papers indexed in Science Citation Index-Expanded and Social Science Citation Index (PUB). These values show a moderate correlation (0.607) and strong correlations (0.712, 0.804, 0.839 and 0.865). The strongest correlation (0.930) is between the overall ARWU score and its single indicator N&S.

The strong correlation between the ARWU total ranking and its single indicators N&S and HiCi agrees well with the findings of Chen and Liao (2012) and Hou et al. (2011). Each of these two indicators accounts for 20 % of the total ARWU score. Thus, N&S and HiCi, in that order, are the major indicators influencing the ARWU ranking results.
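How a weighted composite turns indicator scores into an overall score can be sketched as follows. The sketch rests on three assumptions. First, it uses ARWU's published indicator weights (Alumni 10 %, Award 20 %, HiCi 20 %, N&S 20 %, PUB 20 %, PCP 10 %). Second, indicator scores are taken as already scaled so that the best institution scores 100. Third, the weighted total is rescaled so that the top institution's total is 100. Any special adjustments ARWU applies to particular institutions are not modeled, and the three universities are hypothetical.

```python
# Assumed ARWU indicator weights (fractions of the total score)
ARWU_WEIGHTS = {"Alumni": 0.10, "Award": 0.20, "HiCi": 0.20,
                "N&S": 0.20, "PUB": 0.20, "PCP": 0.10}


def composite_scores(indicator_scores, weights=ARWU_WEIGHTS):
    """Weighted sum of indicator scores, rescaled so the top total equals 100.

    indicator_scores: {university: {indicator: score on a 0-100 scale}}
    """
    raw = {uni: sum(w * scores[ind] for ind, w in weights.items())
           for uni, scores in indicator_scores.items()}
    top = max(raw.values())
    return {uni: 100.0 * value / top for uni, value in raw.items()}


# Hypothetical indicator scores (not ARWU data)
data = {
    "U1": {"Alumni": 100, "Award": 100, "HiCi": 100, "N&S": 100, "PUB": 100, "PCP": 100},
    "U2": {"Alumni": 50, "Award": 50, "HiCi": 50, "N&S": 50, "PUB": 50, "PCP": 50},
    "U3": {"Alumni": 0, "Award": 0, "HiCi": 50, "N&S": 50, "PUB": 80, "PCP": 40},
}
for uni, total in composite_scores(data).items():
    print(uni, round(total, 1))
# U1 100.0
# U2 50.0
# U3 40.0
```

Under these weights, a 10-point change in a 20 % indicator such as N&S or HiCi moves the raw total by 2 points. That is twice the effect of the same change in a 10 % indicator such as Alumni or PCP.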

Furthermore, the correlations between the individual indicators of ARWU 2015 were also examined using Pearson’s test. The values of Pearson’s r for each pair of the six indicators are positive and statistically significant at the 0.01 level. The pairwise values are as follows: 0.883 (HiCi/N&S), 0.771 (Alumni/Award), 0.704 (Award/PCP), 0.674 (Award/N&S), 0.658 (HiCi/PUB), 0.657 (Alumni/PCP), 0.644 (Alumni/N&S), 0.621 (N&S/PCP), 0.601 (N&S/PUB), 0.548 (HiCi/Award), 0.502 (HiCi/Alumni), 0.487 (HiCi/PCP), 0.370 (Alumni/PUB), 0.186 (Award/PUB) and 0.126 (PUB/PCP). These values indicate three strong, nine moderate, one weak and two negligible correlations. The correlation between HiCi and N&S is the strongest among all pairs of indicators.
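The tally of strong, moderate, weak and negligible indicator correlations can be reproduced with a short sketch. It applies the Aguillo et al. (2010) bands quoted earlier to the 15 pairwise values reported above. The labels "high", "medium" and "low" in the code correspond to "strong", "moderate" and "weak" in the text. The text does not say how boundary values are assigned, so treating each lower bound as inclusive (e.g. 0.7 counts as high) is an assumption. None of the reported values falls exactly on a boundary.

```python
from collections import Counter

# Pairwise Pearson's r between ARWU 2015 indicators, as reported in the text
PAIRS = {
    "HiCi/N&S": 0.883, "Alumni/Award": 0.771, "Award/PCP": 0.704,
    "Award/N&S": 0.674, "HiCi/PUB": 0.658, "Alumni/PCP": 0.657,
    "Alumni/N&S": 0.644, "N&S/PCP": 0.621, "N&S/PUB": 0.601,
    "HiCi/Award": 0.548, "HiCi/Alumni": 0.502, "HiCi/PCP": 0.487,
    "Alumni/PUB": 0.370, "Award/PUB": 0.186, "PUB/PCP": 0.126,
}


def degree_of_correlation(r):
    """Aguillo et al. (2010) bands on |r|; lower bounds inclusive (assumption)."""
    r = abs(r)
    if r > 0.9:
        return "very high"
    if r >= 0.7:
        return "high"
    if r >= 0.4:
        return "medium"
    if r >= 0.2:
        return "low"
    return "negligible"


tally = Counter(degree_of_correlation(r) for r in PAIRS.values())
print(dict(tally))  # {'high': 3, 'medium': 9, 'low': 1, 'negligible': 2}
```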

Shared universities in the top 100 of all six global rankings

An examination of the top 100 universities in the studied six global rankings shows the following:

-

A total of 167 distinct universities make up the top 100 lists of the six global rankings.

-

Only 49 of these 167 universities are included in the top 100 of all six systems (Table 4); the remaining 118 universities appear in the top 100 of only one to five of the rankings.

Table 4 Comparison of the rankings of the top 100 universities overlapping in all six global rankings for 2015

-

Harvard, MIT, Stanford and Oxford hold Top 10 positions in all six ranking systems.

-

Cambridge is in the Top 10 on all of the six rankings except NTU, where it is ranked 14th.

-

Caltech, ranked 1st, 5th, 7th and 7th in THE, QS, ARWU and USNWR, respectively, is ranked 38th in NTU and 50th in URAP. Similar discrepancies can be observed for Princeton University, the University of California, Berkeley and the Swiss Federal Institute of Technology Zurich.

To test the degree of consistency of the results of the six global ranking systems, the 49 universities appearing in the top 100 of all six systems were analyzed and classified into four categories, using the following criteria based on ranking order:

-

Highly consistent universities in six global rankings: the inter-ranking system difference is in the range of 0–10 positions.

-

Reasonably consistent universities: the inter-ranking system difference is in the range of 11–20 positions.

-

Inconsistent universities: the inter-ranking system difference is in the range of 21–40 positions.

-

Highly inconsistent universities: the inter-ranking system difference is >40 positions.

Accordingly, Table 4 shows that 7, 10, 16 and 16 universities are highly consistent, reasonably consistent, inconsistent and highly inconsistent, respectively. Thus, 17 universities are consistent and 32 are inconsistent across the results of the six rankings. Therefore, although all 49 universities appear in the top 100 of all six systems, the degree of inconsistency in their ranking order is 65.3 %: there is some consistency (34.7 %, around one-third) and much more inconsistency (around two-thirds).
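The rank-based classification can be sketched as follows. The text does not define the "inter-ranking system difference" precisely, so this sketch assumes it is the gap between a university's best and worst positions across the six rankings. The Caltech positions are those quoted earlier in this section. The function names and dictionary layout are illustrative only.

```python
def rank_spread(positions):
    """Gap between the best (lowest) and worst (highest) rank positions."""
    return max(positions.values()) - min(positions.values())


def rank_consistency(positions):
    """Four categories used in the text, applied to the rank spread (assumed metric)."""
    spread = rank_spread(positions)
    if spread <= 10:
        return "highly consistent"
    if spread <= 20:
        return "reasonably consistent"
    if spread <= 40:
        return "inconsistent"
    return "highly inconsistent"


# Caltech's 2015 positions as quoted in the text
caltech = {"THE": 1, "QS": 5, "ARWU": 7, "USNWR": 7, "NTU": 38, "URAP": 50}
print(rank_spread(caltech), rank_consistency(caltech))  # 49 highly inconsistent

# Shares reported for Table 4: 17 consistent and 32 inconsistent out of 49
print(round(100 * 17 / 49, 1), round(100 * 32 / 49, 1))  # 34.7 65.3
```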

Similarly, to assess consistency using scores, the 49 universities appearing in the top 100 of all six ranking systems were also classified into four categories, using the following criteria based on normalized scores:

-

Highly consistent universities in six global rankings: the inter-ranking system difference is in the range of 0–0.20 normalized scores.

-

Reasonably consistent universities: the inter-ranking system difference is in the range of >0.20 to <0.3 normalized scores.

-

Inconsistent universities: the inter-ranking system difference is in the range of >0.30 to <0.40 normalized scores.

-

Highly inconsistent universities: the inter-ranking system difference is >0.40 normalized scores.

The normalized score was calculated using the following equation (Tofallis 2012):

$$X_{\text{norm}} = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

where X is a university’s overall score, and X_max and X_min are the largest and smallest scores in a given global ranking.

Accordingly, Table 5 shows that 7, 9, 14 and 19 universities are highly consistent, reasonably consistent, inconsistent and highly inconsistent, respectively. Thus, 16 universities are consistent and 33 are inconsistent across the results of the six rankings. Therefore, although all 49 universities appear in the top 100 of all six systems, the degree of inconsistency in their normalized scores is 67.3 %: there is some consistency (32.7 %, around one-third) and much more inconsistency (around two-thirds).
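The min–max normalization and the score-based classification can be sketched in the same way. The sketch makes three assumptions. X_min and X_max are taken over the universities supplied for each ranking. The "inter-ranking system difference" is again read as the gap between a university's highest and lowest normalized scores. Because the text leaves the exact end points of the bands open, the category boundaries are read as ≤0.20, ≤0.30 and ≤0.40. The three rankings and their scores are hypothetical.

```python
def min_max_normalize(scores):
    """(X - X_min) / (X_max - X_min) within one ranking."""
    lo, hi = min(scores.values()), max(scores.values())
    return {uni: (s - lo) / (hi - lo) for uni, s in scores.items()}


def score_consistency(normalized_by_ranking, university):
    """Classify one university by the spread of its normalized scores."""
    values = [norm[university] for norm in normalized_by_ranking.values()]
    spread = max(values) - min(values)
    if spread <= 0.20:
        return "highly consistent"
    if spread <= 0.30:
        return "reasonably consistent"
    if spread <= 0.40:
        return "inconsistent"
    return "highly inconsistent"


# Hypothetical overall scores in three rankings (not from the study)
raw = {
    "A": {"U1": 100.0, "U2": 60.0, "U3": 20.0},
    "B": {"U1": 90.0, "U2": 70.0, "U3": 40.0},
    "C": {"U1": 100.0, "U2": 10.0, "U3": 0.0},
}
normalized = {name: min_max_normalize(s) for name, s in raw.items()}
print(score_consistency({k: normalized[k] for k in ("A", "B")}, "U2"))  # highly consistent
print(score_consistency(normalized, "U2"))  # highly inconsistent
print(round(100 * 33 / 49, 1))  # 67.3
```

U2 normalizes to 0.5, 0.6 and 0.1 in the three toy rankings. Adding ranking C widens its spread from 0.1 to 0.5, which shows how a single divergent system can move a university into the highly inconsistent group.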

Comparing the consistency analyses based on ranking differences (Table 4) and normalized score differences (Table 5) shows that Harvard University keeps its top position in both. Moreover, 12 of the 49 universities keep their consistency category, whereas 14, 18 and 5 universities shift by one, two and three categories, respectively. Thus, only 24.5 % of the universities keep the same category in both analyses.

Key features of a world-class university

The key features of a world-class university can be defined by analyzing the characteristics shared by top-ranking universities worldwide. Therefore, the 49 universities (Table 4) that appear in all six studied global rankings were analyzed. Various indicators for these universities were collected from the InCites Web of Science database and from the QS and THE ranking results published on their official websites.

The findings indicate that all indicators for the 49 universities exceed the corresponding global baselines over the 10-year period 2005–2014. Over this period, the 49 universities covered in the top 100 of all six studied global rankings have the following indicators:

-

Their total WoS publication output comprises 3,207,523 papers, equivalent to 15.44 % of world research output.

-

Their shares of the world totals of various indicators are as follows: citations 29.7 %, international collaboration 31.7 %, highly cited papers 41.51 %, papers in the top 1 % 33.19 %, papers in the top 10 % 27.34 %, industry collaboration 31.66 %, and hot papers 30.9 %. All of these shares are much higher than their share of world research output (15.44 %), indicating that their research impact and excellence punch above their weight relative to their publication output.

-

The following indicators for the 49 universities are higher than the global baseline: the percentage of papers cited is 70 % (global baseline 57 %); citation impact is 15.9 (global baseline 8.26); and impact relative to the world is 1.92 (i.e., 15.9/8.26).

-

These 49 universities are also characterized by a low student-faculty ratio, a high proportion of postgraduate programs and students, high research income per academic staff member, high research income from industry per academic staff member, high institutional income per academic staff member, a high proportion of international students and academic staff, and a high number of doctoral degrees awarded per academic staff member.
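The "punch above their weight" argument compares each indicator share with the 15.44 % output share, and impact relative to the world is the group's citation impact divided by the world baseline. A minimal Python sketch of that arithmetic, using only the figures listed above (the helper names are illustrative; the implied world output is approximate because 15.44 % is itself rounded):

```python
# Shares of world totals (%) for the 49 universities, 2005–2014, as listed above.
OUTPUT_SHARE = 15.44
shares = {
    "citations": 29.7,
    "international collaboration": 31.7,
    "highly cited papers": 41.51,
    "papers in top 1 %": 33.19,
    "papers in top 10 %": 27.34,
    "industry collaboration": 31.66,
    "hot papers": 30.9,
}

def relative_to_output(shares, output_share=OUTPUT_SHARE):
    """How many times each share exceeds the share of world publication output."""
    return {name: round(s / output_share, 2) for name, s in shares.items()}

def impact_relative_to_world(citation_impact, world_citation_impact):
    """Citations per paper of the group divided by the world baseline."""
    return round(citation_impact / world_citation_impact, 2)

ratios = relative_to_output(shares)
print(ratios["highly cited papers"])         # 2.69
print(min(ratios.values()))                  # 1.77 (papers in top 10 %)
print(impact_relative_to_world(15.9, 8.26))  # 1.92

# Implied world WoS output in millions of papers (approximate).
print(round(3_207_523 / (OUTPUT_SHARE / 100) / 1e6, 1))  # 20.8
```

Every ratio exceeds 1, which is what "above their weight" means here; the highly cited share is roughly 2.7 times the output share.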

In brief, high research quantity, quality and excellence; a strong international outlook/visibility; very sound funds/finances; in-demand degree programs; large and diverse sources of endowment and income; and close cooperation with business, industry and the community give these 49 universities the highest teaching and research reputations, enabling them to attract and retain the best faculty/researchers and talented students from all nations. As a result, these 49 universities have maintained their positions in the top 100 of all six global rankings.

Policy implications

Because of intensifying global competition for funding, talented faculty and students, global rankings have become important in higher education. Although stakeholders’ views differ and are often controversial, university rankings are here to stay. Indeed, global rankings have reshaped, and will continue to reshape, the higher education landscape, as they have gained increasing interest and importance among a broad range of stakeholders and interest groups. Moreover, ranking results have a significant influence on nations, institutions and various stakeholders. These include current and prospective students and their parents; faculty and researchers; university administrators; alumni; business and industry; government and political leaders; and donors and funding bodies. Different stakeholders use rankings in different ways and derive different benefits from them. For example, students and parents use rankings to choose where to study and conduct research; political leaders use them to frame national education policies and regularly refer to them as a measure of economic strength and ambition; institutions use them to define performance targets, implement marketing activities, detect weaknesses that need to be addressed, gauge their overall standing relative to other HEIs, collaborate with globally well-ranked institutions, re-examine vision and mission statements, brand and advertise themselves, and provide evidence when seeking support and funding; academics use them to support their own professional reputation and status; donors and funding bodies use them to inform their decision making; governments use them to guide funding allocation and to restrict scholarships for study abroad to students admitted to highly ranked institutions; and employers (industry and business) use them to guide decisions about collaboration, funding, sponsorship and employment.

Many policy lessons can be derived from the methodologies of the global ranking systems and from the findings of the present study:

-

Global ranking is a global phenomenon that has emerged as a result of the increasing globalization of higher education, massive competition for funding and talented faculty/researchers/students, and the changing roles of universities in the knowledge-based society/economy.

-

No ideal global ranking system exists to date.

-

Despite many criticisms and comments, global rankings are here to stay. Global ranking has become an important business of interest to all stakeholders and to international higher education.

-

Various global rankings develop and apply different approaches to excellence. They use different criteria and indicators, which can be grouped into reputation, research, innovation/knowledge transfer, teaching/learning, international outlook/orientation, relevance/employability, resources/funds, and scientific activity on the web.

-

Global rankings can be used to enhance and promote the economic competitiveness of national higher education systems.

-

Rankings are biased towards English-language international publications/journals and towards older, larger, comprehensive universities with medical schools.

-

Moderate to high correlations were confirmed between the results of the six studied global rankings, although they use different criteria, indicators and weights.

-

The indicators of the six studied major global rankings complement each other. Integrated, these indicators can form a comprehensive metric covering most higher education activities/roles.

-

Global rankings are research-oriented (research indicators account for 60–100 % of the weight); Scopus and Web of Science are the two main databases used for bibliometric indicators.

-

Global ranking results differ markedly in the following cases:

-

Using two different database sources

-

Having different weights for research-based indicators

-

One ranking system uses only quantitative data, whereas the other combines quantitative data with people’s views (opinion-based indicators)

For these reasons, the 2015 results of ARWU and QS share the fewest overlapping universities among the six studied global rankings.

-

A high similarity (correlation coefficients ≥ 0.991) was confirmed among the results of a single ranking system (ARWU) over a 5-year period (2011–2015).

-

The key features of a world-class university are visionary leadership; a high-quality, supportive research and education environment; large and diverse sources of endowment and income; continuous benchmarking with top universities worldwide; close cooperation with business, industry and the community; in-demand degree programs (international profile); the ability to attract and retain the best faculty and researchers; a relatively low student-faculty ratio (≤ 10 students per faculty member); a high proportion of postgraduate programs and students; sufficient qualified administrative and technical staff to support teaching and research; very sound funds/finances; international visibility and presence; a strong international outlook; a high proportion of international faculty and students; many internationally co-authored papers; and a high proportion of researchers.
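The overlap index used in this study (the number of universities shared by two top-100 lists, as in the ARWU–QS comparison) and the set of universities common to all lists are plain set operations. A minimal Python sketch, assuming hypothetical top-5 lists with invented university labels (not the 2015 ranking data); the function names are illustrative:

```python
from itertools import combinations

def pairwise_overlap(top_lists):
    """Count universities shared by every pair of top-N lists."""
    return {
        (a, b): len(set(top_lists[a]) & set(top_lists[b]))
        for a, b in combinations(sorted(top_lists), 2)
    }

def common_to_all(top_lists):
    """Universities that appear in every list (cf. the 49 common universities)."""
    return set.intersection(*(set(v) for v in top_lists.values()))

# Hypothetical toy top-5 lists; NOT the study's data.
toy = {
    "ARWU": ["U1", "U2", "U3", "U4", "U5"],
    "QS": ["U1", "U6", "U7", "U3", "U9"],
    "URAP": ["U1", "U2", "U3", "U5", "U9"],
}

overlaps = pairwise_overlap(toy)
print(overlaps)  # {('ARWU', 'QS'): 2, ('ARWU', 'URAP'): 4, ('QS', 'URAP'): 3}
print(min(overlaps, key=overlaps.get))  # ('ARWU', 'QS')
print(sorted(common_to_all(toy)))       # ['U1', 'U3']
```

Repeating the same computation on top-50, top-100 and top-200 lists would show how overlap changes with list length.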

Clearly, global rankings are mainly research-oriented. This may be because research is the most globalized activity in higher education and research metrics are internationally comparable using objective quantitative criteria. Moreover, there is a positive relationship between research and economic growth (Marginson and van der Wende 2007). Accordingly, the European Union (EU) and national governments have initiated many policies and strategies that encourage additional investment in R&D. For example, there is greater investment in the EU higher education and research area (ERA), as well as more focus on developing research excellence and networking. The EU research framework program offers incentives for institutions in EU member states to conduct research in collaboration across countries. This EU-funded research network provides research funding only to researchers who collaborate across nations (Shehatta and Mahmood 2016).

Furthermore, rankings inform and guide the policy and decision-making processes of countries and HEIs (Hazelkorn 2014), and much evidence has emerged worldwide that rankings have a great influence on higher education systems and institutions. Some countries have used rankings as a benchmarking or quality assurance tool, as eligibility criteria for international research collaborations/partnerships, and to restrict student scholarships to top-ranked universities (e.g., Russia and Saudi Arabia) (Altbach 2012). Some countries (France, Germany, China, Russia, Spain, etc.) have developed policies to encourage mergers between universities, or between universities and research centers, in order to create and strengthen a small number of universities that can become world-class. Other countries (Romania, Albania, Serbia, etc.) use ranking indicators to classify and accredit universities and HEIs. Russia initiated the 5-100-2020 project, which aims to place at least five universities in the top 100 by 2020. Meanwhile, many universities have updated their ambitions and goals, for example to be among the top 25 (University of Manchester’s strategic plan 2020), the top 50 (University of Western Australia) or the top 100 (University of Toulouse and Novosibirsk State University). Japan developed policies and strategies to enhance the global visibility of Japanese institutions, such as developing new international partnerships, attracting and retaining international students, producing internationally competitive research, and developing global centers of excellence and graduate schools. Japan also established a policy to increase the number of international students from 100,000 to 300,000 by 2020 (IHEP 2009). In 2005, Germany introduced the Excellence Initiative (Gawellek and Sunder 2016), which aims to strengthen Germany’s research landscape, in response to the absence of German universities from the top of the ARWU.
Saudi Arabia (KSA) is one of the nations emerging in global rankings and is investing heavily in higher education (1.1 % of GDP; Al-Ohali and Shin 2013) to create world-class universities. A variety of KSA policies and strategies have been developed and implemented to promote higher education excellence. These policies include enhancing university capacities, a study-abroad policy, human capital development, research development and innovation, and collaboration with world-class universities. As a result of such policies, some Saudi universities have become internationally visible in the major global rankings. In brief, universities worldwide are aligning their strategic planning with global rankings. In general, countries have applied one of two approaches: (1) supporting the development of a world-class system across the country, or (2) focusing only on developing a small number of world-class universities. Many policies and strategies guided by ranking results are described by Hazelkorn (2014, 2015b).

Therefore, the policy implications for nations and institutions responding to rankings can be grouped into seven main interrelated areas: strategic planning; prestige and reputation; quality assurance and excellence; allocation of funding; admissions and financial aid; internationalization; and research excellence through inter- and intra-institutional collaboration and teamwork.

Investing heavily in R&D is the foundation of a knowledge-based society, which is essential for economic growth. Nations recognize that universities and HEIs are key players in shaping their knowledge power and innovation capacity by linking higher education and research, developing research strategies, promoting innovation capacity and embedding high-quality doctoral programs in universities. Each country strives to upgrade its national higher education system to obtain a significant number of world-class universities. Governments therefore support the development of internationally competitive universities and have developed various policies aimed at building world-class universities and supporting R&D in higher education. China is an interesting example: it developed an R&D growth policy under which its R&D spending would match that of the EU-27 by 2018 and that of the US by 2022 (Ritzen 2010). The EU also developed the Horizon 2020 program (2014–2020), with a budget of more than 70 billion EUR. Therefore, the following policy-related initiatives would help achieve national ambitions:

-

Developing a national R&D policy/strategy in the context of university rankings and a globally connected market and workforce.

-

Establishing strong collaboration and cooperation between higher education, government, business and industry.

-

Increasing the share of GDP spent on R&D.

-

Enhancing research capacity in higher education.

-

Centers of excellence

-

Skilled, talented researchers/scientists

-

Creating a policy culture/environment that supports economic development.

-

Developing incentive and motivation systems to encourage faculty, scientists and researchers to be fully engaged as knowledge workers.

These national and institutional policies would enhance knowledge production, application and diffusion, thereby improving the ranking positions of universities and contributing significantly to the country’s role in the global innovation economy.

Finally, the findings of the present study have many policy implications, such as developing national/institutional initiatives that use various ranking indicators to enhance the global competitiveness of higher education institutions and systems towards (a) integration and engagement in the world-class academic community and (b) the development of internationally competitive, cutting-edge research. These initiatives could include the following national and institutional programs, as examples:

-

Enhancing and sustaining the global vision

-

Aligning national/institutional policy with ranking indicators

-

Adopting and adapting an internal quality system that incorporates various ranking indicators

-

Benchmarking university performance using ranking indicators as sources of information to adopt reform initiatives and policies

-

Adopting institutional/national policies/strategies that enhance research output, impact and excellence

-

Improving the use of data-based decision-making

-

Rewarding high-achieving faculty and researchers

-

Ensuring prestige and reputation

-

Attracting talented faculty/researchers/students

-

Encouraging attendance at international conferences

-

Increasing the exposure of staff/institution

-

Sustaining interaction with peers

-

Keeping up with advances in their field

-

Enhancing internationalization (students, faculty and research)

-

Establishing and implementing an initiative to enhance the global competitiveness of universities and of the HE system

-

Conducting a self-assessment of each university’s performance by applying various ranking indicators, and developing improvement action plans accordingly (rankings as a diagnostic tool)

-

Promoting national and international collaborations

-

Resource allocation and fundraising

-

Expanding postgraduate programs/students

-

Promoting competition in research publications in international journals or in journals indexed in Web of Science and Scopus

-

Enhancing English-language facilities, infrastructure and capacity necessary for research

-

Establishing centers of research excellence and science parks (Techno valleys)

-

Motivating researchers

The success of such efforts depends mainly on the following critical determinants:

-

Understanding the various ranking systems and their methodologies

-

Designing improvement initiatives that cover the indicators of various rankings rather than being tied to a single ranking. The approach to excellence differs considerably from one ranking to another, and the rankings complement each other. Using multiple rankings rather than a single one yields a more comprehensive pool of indicators that can monitor and evaluate most HE activities.
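As a hypothetical illustration of why a multi-ranking view can differ from any single ranking (not a method used in this study), one could rescale each ranking’s scores to a common 0–1 range and average them per university. The min-max rescaling, the ranking labels R1/R2 and the scores below are all assumptions for the sketch:

```python
def min_max(scores):
    """Rescale one ranking's scores to 0-1 (an assumed normalization)."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {u: 1.0 for u in scores}
    return {u: (s - lo) / (hi - lo) for u, s in scores.items()}

def combined_profile(rankings):
    """Mean normalized score over the rankings in which each university appears."""
    normalized = [min_max(r) for r in rankings.values()]
    universities = set().union(*normalized)
    profile = {}
    for u in universities:
        values = [n[u] for n in normalized if u in n]
        profile[u] = sum(values) / len(values)
    return profile

# Hypothetical scores from two rankings that reward different things.
rankings = {
    "R1": {"A": 100, "B": 50, "C": 0},
    "R2": {"A": 10, "B": 30, "C": 20},
}
profile = combined_profile(rankings)
print(sorted(profile.items(), key=lambda kv: -kv[1]))
# [('B', 0.75), ('A', 0.5), ('C', 0.25)]
```

Here university A leads R1 alone, but the combined profile places B first, which is the kind of difference a single-ranking strategy would miss.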

In summary, it is very important to understand rankings’ methodologies in order to interpret their results correctly. This should be done before translating ranking results into actions at the national and institutional levels. Indeed, rankings have a profound influence on the strategic planning and attitudes of higher education systems worldwide, and this influence could lead to changes in the structure of systems and institutions (Hazelkorn 2014, 2015b; Marginson 2007; Salmi and Saroyan 2007).

Conclusion

A clear positive correlation exists in the ranking results (rank order and score) between each pair of the six studied major global rankings. The coefficients range from 0.538 to 0.933 using rank order and from 0.551 to 0.964 using scores, revealing a moderate to very high degree of correlation among the six ranking results.
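The coefficients above are Pearson’s and Spearman’s correlations between pairs of rankings. In practice, `scipy.stats.pearsonr` and `scipy.stats.spearmanr` compute them; the sketch below spells out the mechanics in plain Python, assuming hypothetical positions and scores for five universities (not the study’s data). Spearman’s coefficient is computed as Pearson’s coefficient on ranks, with tied values given their mean rank.

```python
from math import sqrt

def pearson(x, y):
    """Pearson's product-moment correlation of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def average_ranks(values):
    """Rank values (1 = smallest), giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho as Pearson's r on (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical positions of five universities in two rankings.
rank_a = [1, 2, 3, 4, 5]
rank_b = [2, 1, 4, 3, 5]
print(round(spearman(rank_a, rank_b), 3))  # 0.8

# Hypothetical overall scores for the same five universities.
score_a = [100, 80, 75, 60, 55]
score_b = [95, 85, 70, 65, 50]
print(round(pearson(score_a, score_b), 3))  # 0.952
```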

The highest degree of similarity is observed between URAP and NTU, because both are based on bibliometric indicators and use a common international database, namely Thomson Reuters Web of Science. On the other hand, the correlations of QS with NTU (0.538), USNWR (0.586) and URAP (0.594) show the lowest similarity. This is not surprising given the large difference between the methodology of QS and those of NTU, USNWR and URAP: QS allocates 60 % of its weight to research quality (of which 40 % comes from a reputation survey), 10 % to graduate employability, 10 % to international outlook and 20 % to teaching quality, whereas the other three rankings are mainly based on bibliometric data evaluating research output, impact/quality and excellence. Forty percent of the total QS score is based on a peer review system that is subjective and opinion-dependent, and it does not produce consistent results reflecting actual achievement (Dill and Soo 2005; Taylor and Braddock 2007). Moreover, QS uses the Scopus database for its research indicators, whereas the other three rankings employ Web of Science.
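To see how much the 40 % reputation weight can move a QS-style composite, the sketch below applies the weights as described above to two hypothetical universities that differ only in their survey score. The 20 % "other research indicators" share is inferred as 60 % minus 40 %, and the pillar names and scores are assumptions; the real QS indicator definitions differ in detail.

```python
# Weights (percent) as described in the text for QS; the 20 % "other research"
# share is inferred as the 60 % research weight minus the 40 % reputation survey.
QS_WEIGHTS = {
    "reputation survey": 40,
    "other research indicators": 20,
    "graduate employability": 10,
    "international outlook": 10,
    "teaching quality": 20,
}

def composite_score(pillar_scores, weights=QS_WEIGHTS):
    """Weighted sum of 0-100 pillar scores, returned on a 0-100 scale."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    return sum(w * pillar_scores[name] for name, w in weights.items()) / 100

# Two hypothetical universities with identical non-survey scores.
base = {name: 80 for name in QS_WEIGHTS}
low_survey = {**base, "reputation survey": 60}
high_survey = {**base, "reputation survey": 90}

print(composite_score(low_survey), composite_score(high_survey))  # 72.0 84.0
```

A 30-point gap in survey opinion alone produces a 12-point gap in the composite, a component that purely bibliometric rankings such as NTU and URAP do not contain.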

Although correlations are observed, relying on the results of a single ranking system to develop important policies and decisions may be misleading with respect to other rankings, because different rankings employ different indicators. Decisions may therefore differ depending on which ranking’s results are used. In brief, decisions based on the results of a specific ranking are valid only with respect to the ranking used as their basis.

Furthermore, it is of utmost importance for all stakeholders to read and understand all ranking information, such as scope, methodology, indicators, weights, data sources, scoring and limitations. This is the key to correctly understanding and evaluating the performance of universities. The indicators of the various ranking systems can be used as a starting point to identify strengths and weaknesses at the university and national levels in order to develop reform initiatives. Knowing the best practices of the top universities in various rankings, together with continuous benchmarking against peers nationally and internationally, can be a major source of learning, target setting and shaping the future.

The distinctive features of the universities featured in the top 100 lists of these rankings are derived from data collected from InCites. These features include high research quantity, quality and excellence; a strong international outlook/visibility; very sound funds/finances; in-demand degree programs; large and diverse sources of endowment and income; and close collaboration with business, industry and the community.

References

Aguillo, I. F., Bar-Ilan, J., Levene, M., & Ortega, J. L. (2010). Comparing university rankings. Scientometrics, 85(1), 243–256.

Al-Ohali, M., & Shin, I. C. (2013). International collaboration. In L. Smith & A. Abouammoh (Eds.), Higher education in Saudi Arabia (pp. 159–166). Dordrecht: Springer.

Altbach, P. G. (2012). The globalization of college and university rankings. Change, 1, 26–31.

Bastedo, M. N., & Bowman, N. A. (2011). College rankings as an inter-organizational dependency: Establishing the foundation for strategic and institutional account. Research in Higher Education, 52(1), 3–23.

Bowden, R. (2000). Fantasy higher education: University and college league tables. Quality in Higher Education, 6(1), 41–60.

Cakir, M. P., Acarturk, C., Alasehir, O., & Cilingir, C. (2015). A comparative analysis of global and national university ranking systems. Scientometrics, 103, 813–848.

Chen, K., & Liao, P. (2012). A comparative study on world university rankings: A bibliometric survey. Scientometrics, 92(1), 89–103.

Cheng, S. K. (2011). World university rankings: Take with a large pinch of salt. European Journal of Higher Education, 1(4), 369–381.

Dill, D., & Soo, M. (2005). Academic quality, league tables and public policy: A cross-national analysis of university ranking systems. Higher Education, 49(4), 494–533.

Gawellek, B., & Sunder, M. (2016). The German excellence initiative and efficiency change among universities, 2001-2011. http://econstor.eu/bitstream/10419/126581/1/846676990.pdf. Accessed June 27, 2016.

Harvey, L. (2008). Rankings of higher education institutions: A critical review. Quality in Higher Education, 14(3), 187–208.

Hazelkorn, E. (2008). Learning to live with league tables and ranking: The experience of institutional leaders. Higher Education Policy, 21, 193–215.

Hazelkorn, E. (2009). Rankings and the battle for world-class excellence: Institutional strategies and policy choices. Higher Education Management and Policy, 21(1), 1–22.

Hazelkorn, E. (2014). Reflections on a decade of global rankings: What we’ve learned and outstanding issues. European Journal of Education, 49(1), 12–28.

Hazelkorn, E. (2015a). Rankings and quality assurance: Do rankings measure quality? Policy Brief Number 4. Washington, DC: CHEA International Quality Group.

Hazelkorn, E. (2015b). Rankings and the reshaping of higher education: The battle for world-class excellence. New York: Palgrave Macmillan.

Hou, Y. Q., Morse, R., & Jiang, Z. L. (2011). Analyzing the movement of ranking order in world universities’ rankings: How to understand and use universities’ rankings effectively to draw up a universities’ development strategy. Evaluation Bimonthly, 30, 43–49.

Huang, M. X. (2011). The comparison of performance ranking of scientific papers for world universities and other ranking systems. Evaluation Bimonthly, 29, 53–59.

IHEP (Institute for Higher Education Policy). (2009). Impact of college rankings on institutional decision making: Four country case studies. http://www.ihep.org/sites/default/files/uploads/docs/pubs/impactofcollegerankings.pdf. Accessed June 26, 2016.

Khosrowjerdi, M., & Seif Kashani, Z. (2013). Asian top universities in six world university ranking systems. Webology, 10(2), Article 114. Retrieved from http://www.webology.org/2013/v10n2/a114.pdf.

Marginson, S. (2007). Global university rankings: Implications in general and for Australia. Journal of Higher Education Policy and Management, 29(2), 131–142.

Marginson, S., & van der Wende, M. (2007). Globalisation and higher education. OECD education working papers no. 8. OECD Publishing. http://dx.doi.org/10.1787/173831738240. Accessed June 25, 2016.

Marope, P. T. M., Wells, P. J., & Hazelkorn, E. (Eds.). (2013). Rankings and accountability in higher education: Uses and misuses. Paris: UNESCO.

Ritzen, J. (2010). A chance for European universities. Amsterdam: Amsterdam University Press.

Salmi, J., & Saroyan, A. (2007). League tables as policy instruments: Uses and misuses. Higher Education Management and Policy, 19, 1–39.

Shehatta, I., & Mahmood, K. (2016). Research collaboration in Saudi Arabia 1980–2014: Bibliometric patterns and national policy to foster research quantity and quality. Libri, 66(1), 13–29.

Soh, K. C. (2011a). Don’t read university rankings like reading football league tables: Taking a close look at the indicators. Higher Education Review, 44(1), 15–29.

Soh, K. C. (2011b). Mirror, mirror on the wall: A closer look at the top ten in university rankings. European Journal of Higher Education, 1(1), 77–83.

Taylor, P., & Braddock, R. (2007). International university ranking systems and the idea of university excellence. Journal of Higher Education Policy & Management, 29(3), 245–260.

Tofallia, C. (2012). A different approach to university rankings. Higher Education, 63, 1–18.

Usher, A., & Savino, M. (2007). A global survey of university league tables. Higher Education in Europe, 32(1), 5–15.

Official websites of ranking systems

Cite this article

Shehatta, I., Mahmood, K. Correlation among top 100 universities in the major six global rankings: policy implications. Scientometrics 109, 1231–1254 (2016). https://doi.org/10.1007/s11192-016-2065-4