Abstract

Recent interest in university rankings has led to the development of several ranking systems at national and global levels. Global ranking systems tend to rely on internationally accessible bibliometric databases and reputation surveys to develop league tables at a global level. Given their access and in-depth knowledge about local institutions, national ranking systems tend to include a more comprehensive set of indicators. The purpose of this study is to conduct a systematic comparison of national and global university ranking systems in terms of their indicators, coverage and ranking results. Our findings indicate that national rankings tend to include a larger number of indicators that primarily focus on educational and institutional parameters, whereas global ranking systems tend to have fewer indicators mainly focusing on research performance. Rank similarity analysis between national rankings and global rankings filtered for each country suggests that, with the exception of a few instances, global rankings do not strongly predict the national rankings.

Introduction

Performance analysis and benchmarking of universities have attracted increasing interest from researchers, policy makers and news media outlets in the past decade (IHEP 2007). University rankings are used for different purposes by various stakeholders in society (Dill and Soo 2005). Prospective students and their families are the foremost interested audience of university rankings, given the stakes involved with choosing an appropriate university in terms of time investment, future career prospects and financial resources (Bowman and Bastedo 2009; Griffith and Rask 2007). Rankings provide important information especially for those students who plan to go abroad for higher education. In addition, university administrators increasingly see rankings as a means to develop strategies for fostering the growth and development of their institutions (Bastedo and Bowman 2011; Hazelkorn 2008). At the national level, policy makers may employ the information provided by rankings to evaluate the trends in higher education systems in the world, as well as in their own countries (Hazelkorn 2007; Salmi and Saroyan 2007; Sponsler 2009). Finally, news media outlets utilize university rankings as a means to inform the public about the status of higher education institutions in the country and in the world. News about ranking results attracts interest from a wide variety of readers every year.

In order to meet the information needs of various stakeholders, several ranking systems have been developed by various types of organizations, including news media, university research labs, professional societies, governmental agencies and non-governmental organizations (Usher and Savino 2007). The development of citation databases such as Thomson Reuters' Web of Knowledge and Elsevier's Scopus has facilitated access to bibliometric data on academic output, which constitute the backbone of many existing ranking systems. In addition to data on academic output, data on fiscal resources, campus infrastructure and facilities, student quality and the quality of education have been other major components of existing ranking systems (Dill and Soo 2005).

In addition to global ranking systems such as the Shanghai Jiao Tong University's Academic Ranking of World Universities (ARWU) and Times Higher Education World University Rankings (THE Ranking), several national ranking systems have been developed by local institutions. Although most national rankings are not as well known as global rankings, given their access and in-depth knowledge about local institutions, they tend to include a more comprehensive set of indicators that are often excluded by global rankings due to the challenges involved with collecting such data at the global scale. In contrast, global ranking systems tend to rely on internationally accessible bibliometric/webometric data and reputation surveys conducted with academics and other stakeholders for ranking universities. The emphasis on publication-based bibliometric indicators in global rankings has been subjected to criticism since such indicators tend to favour large research universities without emphasizing other important missions of a university, such as education and service to the public (Federkeil et al. 2012). National rankings especially aim to target such aspects given their better access to national data sources. Another issue is the relatively narrow coverage of global rankings, which include only a select number of institutions located in a few developed countries. Citation databases provide another source of bias since publications in some fields and languages are better represented than others (Van Leeuven et al. 2001; van Raan 2005). Ranking methodologies including the aggregation of scores across possibly correlated indicators are also critiqued in terms of their explanatory role and the volatility they generate in the rankings (Saisana et al. 2011).
In short, there is a diversity among existing global and national rankings in terms of their information resources and ranking methodologies, which inevitably influence the outcomes of rankings in important ways.

The impact of such differences in methodologies on ranking outcomes and quality assessment has attracted interest in the higher education literature. Bowden (2000) focused on a comparative analysis of rankings published by news media in the UK (The Times, Sunday Times, Financial Times, Daily Telegraph and an independent website called Red Mole) and ranking systems published in Canada (Maclean), USA (US News), Asia (Asiaweek) and Europe (Der Spiegel, Liberation) by similar organizations. A qualitative comparison between these ranking systems was provided based on their methodological approaches and the ranking results. For instance, the UK rankings were compared in terms of the matches in their top/bottom 10 and how they ranked new universities in contrast to established institutions. International rankings were only analyzed in terms of the types of criteria they employed. The comparative analysis highlighted some similarities among the performance indicators employed, such as entry level grades, teaching quality, research quality, faculty to student ratios and educational infrastructure. The results also pointed out that there are differences among national rankings in terms of how they classify universities for ranking purposes. Finally, international rankings covered in the study were found to rely on reputation surveys.

Similarly, studies by van Dyke (2005) and Dill and Soo (2005) offer a comparative analysis of rankings published by newspapers/magazines and non-profit research institutes in Australia (The Good Universities Guide, Melbourne Institute), Canada (Maclean), Germany (CHE/Stern), Poland (Perspektywy), the UK (The Times Good University Guide and The Guardian University Guide) and the US (the US News and World Report America’s Best Colleges and TheCenter ranking) to explore if there is an emerging consensus among national rankings on the measurement of academic quality. Dill and Soo divided the indicators used in these rankings into input, process and output measures. The study indicated that all of the national rankings emphasize input indicators such as student staff ratio, minimum entrance scores, percent of top ranking students, measures of student diversity, percent of faculty with a PhD and financial resources/facilities, and there is homogeneity among rankings in terms of the specific input indicators they use. The emphasis on input indicators is especially strong in commercial rankings published by newspapers/magazines. On the other hand, process, output and reputation measures were found to be more diversely used and tend to carry less weight as compared to input indicators, with the exception of more graduate study oriented rankings such as Asiaweek, TheCenter and Perspektywy.

In a more comprehensive study, Usher and Savino (2007) examined 14 national and 3 global ranking systems by comparing their methods of data collection, data selection and indicator weights. The authors categorized ranking indicators based on the shared themes among systems, such as beginning characteristics, learning inputs-staff, learning inputs-resources, learning outputs, financial outcomes, research and reputation. The distribution of weights and indicators among different rankings revealed a plurality of approaches to university rankings, since no two rankings were found to be alike in terms of their methodologies. The authors also identified regional differences among ranking systems, e.g. rankings in China emphasize research output and reputation rather than student input, whereas in the UK reputation surveys tend to have a higher influence, and in North America national rankings focus on learning inputs/outputs and beginning characteristics rather than research performance. The comparisons among national and international rankings are mainly based on qualitative differences in terms of ranking indicators, not on quantitative measures of overlap or rank-order correlation.

In contrast to the studies reviewed so far, Aguillo et al. (2010) performed a comparative analysis of global ranking systems based on rank-order similarity measures. The study focused on five global rankings, namely ARWU, QS-Times Higher Education Supplement, WEBOMETRICS, LEIDEN and the Higher Education Evaluation and Accreditation Council of Taiwan (HEEACT) rankings. The authors employed percent overlap, Spearman's footrule and the inverse-rank similarity measure developed by Bar-Ilan et al. (2007) to evaluate the degree of similarity among global rankings in terms of the order of institutions in the top 10, 100, 200 and 500 lists. The findings indicated a higher degree of similarity between global rankings that emphasize research performance such as ARWU and HEEACT. The authors argued that QS-THE and WEBOMETRICS differed from these rankings as they emphasize additional factors, such as the reputation survey in the case of QS-THE, and web visibility and presence measures in the case of WEBOMETRICS. Aguillo et al.'s study focused only on similarities among global rankings. No comparisons were made between national and global rankings.

Existing studies in the literature pointed out several similarities as well as differences among existing ranking systems, but most of these studies do not employ quantitative techniques for comparing national and global ranking systems. Moreover, the comparative analysis of ranking indicators reported in these studies mainly focuses on either national or global rankings only. This study aims to address this gap in the literature by performing a quantitative analysis of twelve national and eight global ranking systems. The emergence of global rankings such as SCImago, WEBOMETRICS and URAP, which cover a considerably larger number of institutions worldwide, has made it possible to make more meaningful comparisons among global and national rankings. More specifically, in the present study, we aim at answering the following research questions:

- What are the differences and similarities among national ranking systems in terms of the indicators they employ?
- What are the differences and similarities between national ranking systems and global ranking systems in terms of the indicators they employ?
- Can global ranking systems be substitutes for national ranking systems?

In order to answer the proposed research questions, we compared national and global ranking systems in terms of the ranking indicators they employ, as well as in terms of their rank-similarity scores, as described in the analysis section. The rest of the paper is organized as follows. The next section provides an overview of our ranking selection process, the classification methodology and the rank-similarity measure we employed. This is followed by the results section that presents the findings of our categorization and rank-similarity analysis. The paper concludes with a discussion of our main findings.

Methodology

This section provides an overview of the criteria used to select national rankings, brief descriptions of the national and global ranking systems considered in this study, and the statistical measures employed to make comparisons among global and national rankings. Our analysis covered the 2012 edition of each national and global ranking in our sample.

National ranking system selection criteria

In order to ensure that the selected ranking systems constitute a representative sample of the national ranking systems in the world, we applied the following selection rules. If there is a single national ranking published in a country, we included that ranking system in the analysis only if the ranking covers institutions as a whole rather than subject-based rankings of departments within institutions. If there exists more than one ranking system in a country, we included only one of them in the analysis, based on the following criteria.

- The availability of sufficient published information about ranking methodology
- The sustainability of ranking, specified by being published for at least 2 years
- The presence of the ranking system as a system of ranking rather than benchmarking

National ranking system selection process

Our ranking system selection process began with a search over online publication databases and the World Wide Web, using search queries that target national ranking systems for each country. The retrieved items included the official websites of rankings, technical reports analyzing different systems, news articles related to those ranking systems and scientific publications in related journals such as Scientometrics, Informetrics and Higher Education. The main criterion for selecting a ranking system was the availability of detailed information about its ranking methodology. If the country has only one ranking system and if it is a system of ranking rather than benchmarking, that system was selected. If there is more than one national ranking system in a particular country, the sustainability of the ranking was considered as a tie-breaking factor. We chose those rankings that have been published consistently in the past 2 years. Following this selection process, 30 ranking systems from 22 countries were identified in the first step. The ranking systems excluded at this stage, together with the reasons for their exclusion, are listed in Table 1.

Consequently, 12 national ranking systems from 12 different countries were considered for further analysis.

Overview of selected national ranking systems

Twelve national ranking systems that satisfied our selection criteria were considered in this study. In this section we briefly introduce each national ranking system.

Brazil’s first national ranking was published in 2012 by a daily newspaper called Folha (2012). The ranking mainly focuses on four dimensions: education (20 %), market assessment (20 %), research (55 %), and innovation (5 %). The education dimension is based on a group of scientists’ subjective rankings of the top 10 institutions in their respective fields. Similar to education, the market assessment dimension is also based on subjective opinions of managers or directors regarding the best 3 institutions in the country. Quality of research is composed of 9 objective indicators which mainly focus on publication, citation, collaboration counts and their ratios per faculty. Finally, the innovation dimension is based on the number of patent applications made by the institutions.

Chilean universities were evaluated in 2011 by the Group of Advanced Studies, and the results were published as the El Mercurio University Ranking (2012). The ranking uses 18 indicators in total that are listed under the following groups: students, academics, training process, research, doctoral and institutional management. The majority of indicators are size independent.

In China, there are a number of university ranking systems. The ARWU group (2011) has recently published a comprehensive ranking named the Greater China Ranking, which evaluated universities in Mainland China, Taiwan, Hong Kong and Macau in 2011. The ranking employs 13 indicators under 4 broad categories: Education (35 %), Research (35 %), Faculty (25 %) and Resources (5 %).

Macedonian universities were also evaluated by the ARWU group (2012a, b). The Macedonian ranking has 18 indicators in total that are grouped under 3 main dimensions with the following weights: Teaching and Learning (42 %), Research (40 %) and Social Service (18 %).

The Independent Kazakhstan Quality Assurance Agency for Education (2011) published its first ranking of higher education institutions in 2008. The 2011 edition of the ranking has 7 main dimensions comprising 44 specific indicators, which focus heavily on national, size-independent measures. Education is given more importance than research.

Lithuanian universities have been ranked since 1997 by the Veidas magazine (2012). Universities are ranked according to a total of 39 indicators covering aspects such as quality of education, internationalization, the number of programmes, budget, research papers, library facilities and assessment by students.

In Malaysia, the Rating System for Malaysian Higher Education Institutions (SETARA) was first published in 2007 (Malaysian Qualifications Agency 2011). Subsequent rankings have been published biannually. It includes 26 criteria represented by 62 indicators. Subjective and size-independent indicators carry more weight in this ranking. The 5 main dimensions used in the ranking methodology are governance (12 %), physical and financial resources (3 %), talent (quality, experience and diversity) (5 %), process (40 %) and quality of graduates and graduate satisfaction (40 %).

Pakistani higher education institutions were first ranked in 2010 by the Higher Education Commission of Pakistan (2010). The main objectives of the ranking are summarized as increasing the quality of research and motivating higher education institutions to appear in world rankings. There are a total of 25 ranking indicators under 3 main dimensions: implementation status of quality assurance criteria (24 %), teaching quality (40 %) and research (36 %).

In Poland, the Perspektywy Education Foundation (2012) published the first national ranking in 2008. There are 5 dimensions assessed in the Perspektywy 2012 ranking: prestige (25 %), research (45 %), teaching and learning (10 %), innovation (5 %) and internationalization (15 %).

The Complete University Guide (2012) has been ranking higher education institutions in the UK since 2007. The ranking includes 9 indicators including student satisfaction survey score, research assessment/quality, entry standards (average of admissions test scores), student staff ratio, academic services spend (the expenditure per student on all academic services), facilities spend (the expenditure per student on staff and student facilities), good honours (number of graduates with first or upper second class degrees divided by total number of graduates), graduate prospects (a measure of the employability of graduates) and completion rate of students.

US News and World Report (2012) magazine has published a ranking of US colleges and universities since 1983. The current ranking is based on multiple categories tapping into different aspects of higher education such as assessment by administrators at peer institutions, retention of students, faculty resources, student selectivity, financial resources, alumni giving, graduation rate performance and high school counselor ratings of colleges. In particular, the US News national universities ranking is based on (1) an undergraduate academic reputation score derived from a peer review survey among academics (22.5 %), (2) a retention score based on the proportion of first year students who return to campus for the third year and eventually graduate (22.5 %), (3) a faculty resources score derived from sub-indicators including class size, adjusted faculty salary, student-faculty ratio, proportion of full time faculty and proportion of professors with the highest degree (20 %), (4) a student selectivity score based on admissions test scores of incoming students, proportion of enrolled first year students who were in the top 10 % in their high school classes and the university's acceptance rate (12.5 %), (5) financial resources based on average spending per student (10 %), (6) graduation rate performance (7.5 %) and (7) alumni giving rate as an indicator of long term student satisfaction (5 %).

Turkish higher education institutions have been ranked since 2009 by the University Ranking by Academic Performance (URAP) laboratory located at Middle East Technical University. The URAP-TR ranking (University Ranking by Academic Performance, 2012a) is based on 9 equally weighted indicators, namely number of publications in the last year, publications per faculty, number of citations accumulated in the last year by papers published in the last 5 years, citations per faculty, total number of scientific documents published in the last 5 years, total documents per faculty, number of PhD students, proportion of PhD students among all students and the number of students per faculty.

A summary of national ranking systems covered in the study is presented in Table 2.

Overview of global ranking systems

Eight global ranking systems, namely ARWU, HEEACT, LEIDEN, SCImago, QS, THE, URAP and WEBOMETRICS were considered in this study. The 2012 editions of these global rankings were used for the comparative analysis presented in the next section. This section provides a brief overview of each system in terms of their coverage and ranking indicators.

The Academic Ranking of World Universities, ARWU (2012b), was first published in June 2003 by the Center for World-Class Universities and the Institute of Higher Education of Shanghai Jiao Tong University, China. The ARWU ranking has announced the top 500 universities of the world on an annual basis since 2003. ARWU selects universities which have Nobel Laureates, Fields Medalists, highly cited researchers or authors whose articles were published in Nature or Science. In addition, universities from all countries which have a significant number of articles indexed by Science Citation Index-Expanded (SCIE) and/or the Social Science Citation Index (SSCI) are also included. ARWU uses 6 main indicators, namely, alumni (total number of alumni who won a Nobel prize or a Fields medal, 10 %), awards (number of faculty with a Nobel prize or a Fields medal, 20 %), the number of faculty in the highly cited researchers list (20 %), number of papers published in Nature and Science journals (20 %), number of articles indexed in SCI-Expanded and SSCI (20 %), and an estimation of number of faculty members (10 %).

The Higher Education Evaluation and Accreditation Council of Taiwan (HEEACT) world university ranking, recently renamed as Performance Ranking of Scientific Papers for World Universities (National Taiwanese University 2012), aims to evaluate and rank universities in terms of their academic publication performance. HEEACT has published the ranking of top 500 world universities annually since 2007. The HEEACT ranking employs multiple indicators grouped under 3 categories: research productivity, research impact and research excellence. Indicators under research productivity include the number of articles published in the past 11 years (10 %) and the number of articles published in the current year (15 %). The research impact dimension includes number of citations in the past 11 years (15 %), number of citations in the past 2 years (10 %), and average number of citations in the past 11 years (10 %). Finally, the research excellence dimension consists of the h-index of the last 2 years (10 %), the number of highly cited papers in the past 11 years (15 %) and the number of articles of the current year in high impact journals (15 %).

The LEIDEN Ranking is published by The Centre for Science and Technology Studies (2012) at Leiden University. The LEIDEN system provides multiple ranking tables based on individual criteria grouped under two broad categories called impact and collaboration. Indicators listed under impact include mean citation score (MCS), mean normalized citation score (MNCS) and proportion of top 10 % publications (PP10 %). The collaboration dimension includes indicators such as proportion of inter-institutional collaborative publications, proportion of international collaborative publications, proportion of collaborative publications with industry, and mean geographical collaboration distance (MGCD). LEIDEN ranks the top 500 institutions in the world along each criterion separately. In this study the ranking based on the PP10 % indicator was considered for comparison purposes, since LEIDEN considers it the most stable and important impact indicator.

The SCImago Global Institutions Rankings (SIR) has been published by the SCImago Research Group (2012) since 2007. SCImago evaluates those universities with 100 or more articles published in the last year in journals indexed by the Scopus database. SCImago provides separate rankings for each of the following indicators: number of publications, output ratio produced in collaboration with foreign institutions, source normalized impact, ratio of publications in the most influential journals (based on SCImago Journal Rank), specialization index that measures thematic concentration and dispersion of an institution's scientific output, excellence rate (number of papers in top 10 % of most cited papers), scientific leadership (number of documents in which the institution is the main contributor) and excellence with leadership (number of documents in the excellence rate in which the institution is the main contributor). SCImago neither favors any single indicator nor provides a composite score combining all the indicators for ranking purposes.

The QS World University Rankings has been published by the Quacquarelli Symonds Company (2012) since 2004. The QS ranking covers about 800 universities and is based on 6 indicators, namely academic reputation (40 %), employer reputation (10 %), faculty student ratio (20 %), citations per faculty compiled from the Scopus database (20 %), proportion of international students (5 %) and proportion of international faculty (5 %). For the purpose of comparison, the top 500 list of the 2012 edition of the QS ranking was used in this study.

The THE World University Rankings published by Times Higher Education (2012) considers 13 performance indicators to rank universities based on bibliometric resources and reputation surveys. These indicators are grouped under 5 areas, namely teaching (30 %), research (30 %), field normalized citations accrued in the last 5 years (30 %), international outlook (7.5 %) and industry income (2.5 %). The teaching dimension covers ratings obtained from the reputation survey, staff to student ratio, number of doctorate degrees per faculty, proportion of postgraduate students to undergraduates and institutional income (scaled for size). The research dimension is based on research reputation score derived from the reputation survey, university income (scaled against faculty size and adjusted for purchasing-power parity) and research output per faculty (scaled for faculty size and normalized for subject). The industry income dimension includes research income earned from industry per faculty, whereas the international outlook dimension consists of the ratio of international to domestic students, the ratio of international to domestic staff, and the number of papers that have at least one international co-author.

URAP's (University Ranking by Academic Performance, 2012b) ranking of Top 2000 world universities has been published by the URAP lab at the Middle East Technical University since 2010. The ranking uses six academic performance indicators derived from bibliometric data obtained from the Web of Science database. The indicators include the number of articles published in the last year (21 %), the number of all scientific documents published in the last 5 years (10 %), the number of citations accrued in the last year for the documents published in the last 5 years (21 %), number of published papers that include at least one international co-author (15 %), journal impact total, which is derived by aggregating the impact factors of journals in which a university published articles in the past 5 years (18 %) and journal citation impact total, which aggregates the impact factors of journals in which the citing articles were published (15 %).

The WEBOMETRICS Ranking of World Universities developed by Cybermetrics Lab (2012) in Spain ranks universities based on their web presence and visibility. WEBOMETRICS is the most comprehensive ranking in terms of coverage as it includes roughly 20,000 higher education institutions. These institutions are ranked based on their web visibility (50 %) and activity (50 %). Visibility is measured by the number of links the institution's web domain receives from third party institutions. Activity is split into three equal subcategories, namely presence (the number of web pages), openness (number of rich files hosted) and excellence (number of papers in the top 10 % of each subject, supplied by SCImago). For the purpose of comparison, the top 500 list from the 2012 edition of the WEBOMETRICS ranking was used in this study.

Rank similarity measurement

In order to make a fair comparison among multiple ranking lists with different degrees of coverage, we adopted Aguillo et al.'s (2010) methodology for calculating the similarity between two rankings. Similar to Aguillo et al., we used Bar-Ilan et al.'s (2007) Inverse Rank (M) measure to compute the similarities among global and national rankings. We compared the top-k lists drawn from each global ranking filtered for each country to the top-k lists drawn from the corresponding national ranking. The k values ranged from 5 to 200, depending on the number of institutions represented in the global rankings from the target country. We computed rank similarity values for multiple k values to account for differences in coverage and overlap among the global rankings and the reference national ranking.
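The filtering step described above can be sketched as follows. This is a minimal illustration rather than the procedure actually used in the study: the function name `country_top_k` and the representation of a global ranking as a best-first list of (institution, country) pairs are assumptions made for the example.

```python
def country_top_k(global_ranking, country, k):
    """Return the top-k institutions of one country from a global ranking.

    global_ranking is assumed (hypothetically) to be a list of
    (institution, country) tuples ordered from best to worst.
    Institutions from other countries are dropped, and the relative
    order of the remaining institutions is preserved.
    """
    filtered = [name for name, origin in global_ranking if origin == country]
    # If the country has fewer than k institutions in the global list,
    # the slice simply returns all of them.
    return filtered[:k]
```

The resulting list can then be compared with the top-k list of the corresponding national ranking; when a country has few institutions in a global ranking, only small k values are meaningful.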

The M measure is an improved version of a rank similarity measure proposed by Fagin et al. (2003), which compares the top-k lists by assigning a rank of k + 1 to those items that are not ranked in one of the lists. Bar-Ilan et al. (2007) pointed out that this approach gives excessive weight to non-overlapping elements irrespective of the position in which the non-overlapping cases occur. Bar-Ilan's M measure uses reciprocals of ranks, which assigns more weight to matches or non-matches that occur at the top of the two lists than to those that occur at the bottom. The formula used to compute the M measure for the top-k elements drawn from two rankings is given as:

$$M^{(k)} = 1 - \frac{N^{(k)}}{\max N^{(k)}}$$

where

$$N^{(k)} = \sum_{i \in Z} \left| \frac{1}{\sigma_1(i)} - \frac{1}{\sigma_2(i)} \right| + \sum_{i \in S} \left| \frac{1}{\sigma_1(i)} - \frac{1}{k+1} \right| + \sum_{i \in T} \left| \frac{1}{\sigma_2(i)} - \frac{1}{k+1} \right|$$

and

$$\max N^{(k)} = 2 \sum_{i=1}^{k} \left( \frac{1}{i} - \frac{1}{k+1} \right)$$

σ1(i) and σ2(i) represent the rank of item i in the first and the second list respectively, k is the number of top elements obtained from both ranking lists, Z is the set of common elements among the two top-k lists, S is the set of elements unique to the first top-k list, and T is the set of elements unique to the second top-k list.
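As an illustration, the M measure can be sketched in a few lines of Python. The sketch assumes the formulation of Bar-Ilan et al. (2007) in which the summed absolute differences of reciprocal ranks (with rank k + 1 substituted for items missing from a list) are divided by their maximum possible value and subtracted from 1. The function name `m_measure` and the list-based input format are hypothetical, and ties are not handled here.

```python
def m_measure(list_a, list_b, k):
    """Inverse-rank similarity between the top-k items of two ranked lists.

    list_a and list_b are assumed to be lists of item names ordered
    from best to worst. Returns 1.0 for identical top-k lists and 0.0
    for completely disjoint top-k lists.
    """
    rank_a = {item: pos for pos, item in enumerate(list_a[:k], start=1)}
    rank_b = {item: pos for pos, item in enumerate(list_b[:k], start=1)}

    common = rank_a.keys() & rank_b.keys()   # Z: items in both top-k lists
    only_a = rank_a.keys() - rank_b.keys()   # S: items unique to the first list
    only_b = rank_b.keys() - rank_a.keys()   # T: items unique to the second list

    missing = 1.0 / (k + 1)  # reciprocal of the rank given to unranked items

    distance = sum(abs(1.0 / rank_a[i] - 1.0 / rank_b[i]) for i in common)
    distance += sum(abs(1.0 / rank_a[i] - missing) for i in only_a)
    distance += sum(abs(1.0 / rank_b[i] - missing) for i in only_b)

    # Largest possible distance: two full, completely disjoint top-k lists.
    max_distance = 2.0 * sum(1.0 / i - missing for i in range(1, k + 1))

    return 1.0 - distance / max_distance
```

For example, swapping the two items of a top-2 list gives a distance of 1 against a maximum of 5/3, so the similarity is 0.4. A disagreement of the same size lower in a longer list would reduce the score far less, which is the intended top-weighting.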

Some rankings such as QS do not individually rank all institutions below a certain threshold. For instance, in the QS ranking only institutions that are ranked in the top 400 are assigned individual ranks, whereas institutions in the range 401–600 are ranked in groups of 50 (e.g. 401–450, 451–500 etc.). Since the M-similarity measure can accommodate equal rank values, universities that are in the same ranking group are assigned the same ordinal rank in our rank similarity analysis.
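One way to assign shared ordinal ranks to banded institutions is sketched below. The text does not state which rank value a group receives, so the convention used here (every member of a band gets the ordinal position of the band's first member, often called competition ranking) is an assumption, as are the function name `ordinal_ranks_with_ties` and the input format.

```python
def ordinal_ranks_with_ties(entries):
    """Assign ordinal ranks, giving tied entries the same rank.

    entries is assumed (hypothetically) to be a list of
    (institution, position) tuples sorted by position, where banded
    institutions all carry the lower bound of their band
    (e.g. 401 for the band 401-450).
    Returns a dict mapping institution -> ordinal rank (1 = best).
    """
    ranks = {}
    previous_position = None
    current_rank = 0
    for index, (name, position) in enumerate(entries, start=1):
        if position != previous_position:
            # A new position starts a new rank equal to the running index,
            # so ranks skip ahead after a tied group (1, 2, 3, 3, 5).
            current_rank = index
            previous_position = position
        ranks[name] = current_rank
    return ranks
```

With a country-filtered list holding positions 120, 380, 401, 401 and 451, the function returns ranks 1, 2, 3, 3 and 5.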

Results

The results are presented in two sections. The first section is based on the analysis of the indicators used in the ranking systems, while the second section is based on the analysis of rank-similarities between national and global rankings.

Indicator analysis

The data consisted of the indicators employed in twelve national and eight global ranking systems. The twelve national ranking systems employed 190 indicators, whereas the eight global ranking systems employed 44 indicators. Twenty-four indicators were employed by both the national and the global ranking systems. Accordingly, the data consisted of a total of 210 indicators. Table 3 shows the number of indicators employed by each ranking system.

The indicator data reveal that the majority of the national ranking indicators (166 of 190 indicators) were employed only by the national ranking systems. In other words, those 166 indicators were not employed by the global ranking systems (Table 4). The global rankings, on the other hand, revealed a different picture: fewer than half of the global ranking indicators (20 of 44 indicators) were employed only by the global rankings. The remaining 24 indicators were employed by both global and national ranking systems.
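The counts reported above are related by simple inclusion-exclusion, which can be checked with a few lines of Python. The variable names are illustrative; the numbers are those given in the text.

```python
national_total = 190   # indicators used by the national ranking systems
global_total = 44      # indicators used by the global ranking systems
shared = 24            # indicators used by both groups

national_only = national_total - shared          # 166
global_only = global_total - shared              # 20
distinct = national_only + global_only + shared  # 210 distinct indicators

print(national_only, global_only, distinct)  # 166 20 210
```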

Tables 3 and 4 indicate that the national ranking systems exhibit different characteristics from the global ranking systems, in terms of the total and mean number of indicators, as well as the overlap between the indicators employed by the two types of ranking system. In particular, the national ranking systems rely on a larger number of indicators, both in total and in the mean number of indicators employed.

The frequencies of the indicators used in different ranking systems are presented in Table 5a, b.

An investigation of the frequently used indicators, listed in Table 5a, b, reveals that indicators used in the national ranking systems were possibly designed as a response to the needs of the stakeholders in the society. On the other hand, the global ranking systems were mostly based on internationally recognized indicators.

The descriptive statistics presented in the above tables suggest that national ranking systems do not necessarily comprise a sub-class of global ranking systems. In other words, the rankings produced by national ranking systems may reveal different results compared to the rankings produced by global ranking systems. This observation is supported by the rank-similarity analysis presented in the next section. However, there is one caveat that has to be addressed in the above analysis. The data, as described above, are composed of 210 indicators, which were kept intact in terms of the indicator names. Therefore, similar indicators might have been counted as different. To resolve this issue, we identified four dimensions and classified the indicators along those dimensions, as described below.

- Coverage of indicator
  - National: used only by the national ranking systems
  - Global: used only by the global ranking systems
  - National and global: used by both the national and the global ranking systems
- Size dependency
  - Size-independent indicators
  - Size-dependent indicators
  - Subjective indicators (based on opinion surveys)
- Input and output characteristics of the indicator
  - Output: research output (e.g., number of publications) and educational output (e.g. doctoral degrees awarded, employer satisfaction with graduates)
  - Process: educational, managerial and research processes (e.g. academic governance, institutional assessment on the curriculum)
  - Input: financial, educational and research resources (e.g. budget, research funds)
  - Institutional properties: institution specific capacities and capabilities (e.g. number of study programs, number of faculty/staff)
- Indicator subcategories
  - Research indicators
    - Academic publications and impact
    - Research capability and funding
    - Technology transfer
  - Education indicators
    - Student profile and services
    - Academic programs and accreditation
    - Alumni
    - Teaching quality assurance and assessment
    - Postgraduate student profile
  - Institutional facts and figures
    - Faculty profile and development
    - Educational facilities and resources
    - Managerial and organizational activities

After the identification of the classification categories, each indicator was assigned to a category within each dimension by using a card-sorting method (Nielsen 1995) followed by mutual agreement among the authors of the present study. We also calculated a weight metric for each indicator by summing up the percentage weights of that indicator across the different ranking systems (Table 6). The underlying motivation for calculating the weight metric was that it might be more informative about an indicator than its frequency of use. Accordingly, we calculated indicator weights for national ranking systems and global ranking systems separately (Tables 6, 7).
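The weight metric described above can be sketched as a sum of each indicator's percentage weights over the ranking systems that use it. The system names, indicator names and weights below are hypothetical placeholders, and the function name `total_indicator_weights` is an illustrative choice.

```python
from collections import defaultdict


def total_indicator_weights(systems):
    """Sum the percentage weight of every indicator across ranking systems.

    systems: dict mapping system name -> dict of indicator -> weight (%).
    """
    totals = defaultdict(float)
    for weights in systems.values():
        for indicator, pct in weights.items():
            totals[indicator] += pct
    return dict(totals)


# Hypothetical ranking systems and weights, for illustration only.
example_systems = {
    "System A": {"publications": 40.0, "student/staff ratio": 60.0},
    "System B": {"publications": 25.0, "reputation survey": 75.0},
}
indicator_totals = total_indicator_weights(example_systems)
print(indicator_totals["publications"])  # 65.0
```

Running the function separately on the national and the global systems would give the two sets of weights that the text reports in separate tables.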

The classification given in Tables 6 and 7 reveals that global ranking systems largely rely on indicators that are related to academic publications and impact (62.3 %), research capabilities and funding (9.8 %), educational facilities and resources (9.5 %), faculty quality (7.4 %) and student profile (4.6 %). On the other hand, national ranking systems tend to emphasize faculty and student profile (25.0 %) and teaching quality (13.5 %), whereas academic publications and impact (17.6 %) have a less central role. Moreover, none of the global rankings in our sample covered indicators such as technology transfer, program accreditation, and managerial/institutional features, whereas these indicators collectively account for 10.6 % in the national rankings.

A further analysis of the indicators according to these dimensions is presented below.

Size dependency

The results of the classification of the indicators into the three dependency categories (size-independent, size-dependent, and subjective) reveal that size-independent indicators have a higher weight (59.9 %) in the national ranking systems compared to both size-dependent indicators (23.2 %) and subjective indicators (16.9 %), as shown in Table 8. However, in the global ranking systems size-independent indicators have a slightly smaller weight (41.5 %) than size-dependent indicators (46.7 %). A chi-square test showed that the distribution of dependency indicator categories is significantly associated with whether the type of the ranking is national or global, χ²(2) = 10.8, p < 0.01. Therefore, an analysis in terms of the dependency dimension highlights the major role of size independence in the national ranking systems. On the other hand, global ranking systems employ both size-independent and size-dependent indicators in approximately equal weights.
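A chi-square test of association of this kind can be sketched in plain Python as follows. The source does not state whether the test was run on indicator counts or on weights, so the sketch assumes a contingency table of counts; the table used in the example is a hypothetical placeholder, not data from the study. For two degrees of freedom the chi-square survival function reduces to exp(−x/2), which allows a quick check of the reported statistic.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for a
    contingency table given as a list of rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def p_value_two_dof(stat):
    """Upper-tail p-value of a chi-square statistic with exactly 2 dof."""
    return math.exp(-stat / 2.0)


# Hypothetical counts (rows: national, global; columns: size-independent,
# size-dependent, subjective). Placeholder values for illustration only.
stat, dof = chi_square_statistic([[30, 10, 10], [10, 20, 10]])

# Check of the statistic reported in the text, chi-square(2) = 10.8.
print(p_value_two_dof(10.8))  # about 0.0045, consistent with p < 0.01
```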

Input and output characteristics of the indicators

The indicators were classified into four categories according to their input and output characteristics. The analysis reveals that output indicators carry the largest weight in the global ranking systems (72.5 %, Table 9). The output indicators also comprise the largest percentage in the national ranking systems, though to a lesser degree (39.1 %). Another difference between the national and the global ranking systems is that the national ranking systems place higher weight on the process indicators and input indicators, compared to the global ranking systems. A chi-square test showed that the distribution of input and output indicator categories is significantly associated with whether the type of the ranking is national or global, χ²(3) = 20.5, p < 0.001.

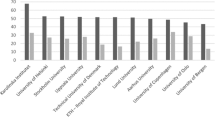

Indicator subcategories

The indicators were then classified into three major categories: research, education, and institutional facts and figures. This classification reveals that the national ranking systems place more emphasis on indicators related to education. On the other hand, the global ranking system indicators are largely research oriented (Fig. 1). A chi-square test showed that the distribution of these indicator subcategories is significantly associated with whether the type of the ranking is national or global, χ²(2) = 34.8, p < 0.0001.

Major features of the national and global ranking systems

Overview

Descriptive statistics for each of the twelve national ranking systems and the six global ranking systems are presented in Table 10. The table excludes LEIDEN and SCImago global rankings because no specific weights are assigned to each indicator in those two ranking systems. A closer look at Table 10 shows that except for the Turkey—URAP-TR ranking (which gives a lower weight to national-specific indicators) and the Brazil—RUF Folha’s University Ranking (which gives equal weight to national-specific indicators and the indicators that are common between national and global ranking systems), all national ranking systems place a higher emphasis on the indicators that are specific to national ranking systems. On the other hand, one of the six global ranking systems (WEBOMETRICS) employs mainly global-specific indicators.

As for size dependency, size-independent indicators are given higher weight in nine out of twelve national ranking systems (Chile, China, Kazakhstan, Lithuania, Macedonia, Malaysia, Pakistan, Poland, Turkey, the U.K., and the U.S.), whereas three out of six global ranking systems (QS, THE, and WEBOMETRICS) emphasize size-independent indicators.

As for the Input/Output characteristics, three of the six global ranking systems (ARWU, HEEACT, and URAP) employ only the indicators that exhibit output characteristics. The national ranking systems, however, employ a wider spectrum of the indicators in terms of the Input/Output characteristics.

Finally, two global ranking systems (HEEACT and URAP) employ only research indicators. The QS ranking system is the only global ranking system that places a higher weight on education indicators compared to other indicators in the major indicators subcategory. Among the national ranking systems, two are exceptional (UK − Complete University Guide and USA − U.S. News and Report) in the sense that they place a higher emphasis on research indicators. The Chile − El Mercurio ranking places a higher emphasis on the indicators that specify institutional facts and figures, and the Malaysia − Setera ranking places equal weight on research indicators and education indicators. The remaining nine national ranking systems place a higher emphasis on education indicators than both research indicators and the indicators that specify institutional facts and figures.

Rank-similarity assessment of national and global university rankings

In this section, the results of the national university rankings published in Brazil, Chile, China, Poland, Turkey, the USA and the UK are compared with global university rankings including ARWU, HEEACT, LEIDEN, QS, Times (THE), URAP and WEBOMETRICS by using rank similarity measures. Our aim is to investigate the reasons underlying the similarities and differences between national and global ranking systems.

Four national ranking systems were excluded from similarity analysis due to their inconsistencies with the global rankings in our sample. The national Malaysian ranking applies the same ranking category to large groups of universities which makes it impossible to compare it against global rankings. For instance, there are nine Malaysian universities which are included in URAP and QS rankings, but they are all rated as top institutions in the local ranking. A similar situation applies to the Lithuanian ranking, where only URAP (6) and QS (3) include institutions from this country. Moreover, only one global system (URAP) ranks universities from Pakistan and Macedonia. Thus, these four countries are excluded from the comparative analysis presented below. Finally, the SCImago global ranking was excluded from similarity analysis since SCImago ranks universities along individual indicators without combining them or favouring any single indicator over others.

Since most global rankings announce the top 500 to 2000 universities worldwide, great variability is observed in the number of institutions covered when the global rankings are filtered for a specific country. Since national rankings tend to offer a much larger coverage of local universities, making comparisons at the national level poses methodological challenges. Table 11 below summarizes the percentage of overlap between the global rankings and each national ranking investigated in this study.

In Table 11, 100 % represents the total number of universities ranked in the corresponding national ranking. In some cases, such as the USA, the local ranking may include a smaller number of universities than some of the global rankings. For instance, URAP ranks 400 US universities, whereas the local ranking by US News and Reports includes 275 universities. Only 69 of these universities are included in both lists. When the global rankings are compared in terms of their coverage of local universities, URAP stands out, as its coverage of local institutions ranges between 21 and 75 %. URAP is followed by WEBOMETRICS (0–55 %), QS (0–41 %), THE (0–40 %), ARWU (0–30 %), LEIDEN (0–30 %) and HEEACT (0–29 %). This variability of coverage is due to the variation in the number of institutions ranked by each system and the skewed distribution of high-ranking institutions among the countries.
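The coverage percentage described above can be sketched as the share of the national list that also appears in the country-filtered global list. The function name `coverage_percent` and the synthetic institution identifiers are illustrative; only the list sizes (275, 400 and an overlap of 69) come from the text.

```python
def coverage_percent(national, global_filtered):
    """Percentage of nationally ranked institutions that also appear in a
    global ranking filtered for the same country (100 % = national list)."""
    national = set(national)
    return 100.0 * len(national & set(global_filtered)) / len(national)


# Synthetic identifiers reproducing the US example: 275 nationally ranked
# universities, 400 in the global list, 69 present in both.
us_national = {f"national-{i}" for i in range(275)}
us_global = {f"national-{i}" for i in range(69)} | {
    f"global-only-{i}" for i in range(331)
}
print(round(coverage_percent(us_national, us_global), 1))  # 25.1
```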

The variability of coverage presents two challenges for making a fair comparison among global rankings in terms of their consistency with national rankings. The first issue is the low overlap among the lists, which is particularly evident in cases such as Brazil, Chile, Turkey, Lithuania, Pakistan and Poland, where only a few universities make it into the global rankings. The second issue is related to non-overlapping cases, where an institution ranked in one list may not be present in the other list and vice versa. Since local rankings tend to include a considerably higher number of institutions, it is difficult to directly employ standard rank correlation methods to compare global and national rankings, because such methods can only measure the similarity between the respective rankings of institutions common to both lists. As previously discussed in the methodology section, in order to make fair comparisons among multiple rankings with different degrees of coverage, we adopted Bar-Ilan et al.’s (2007) Inverse Rank (M) measure to compute the similarities between global and national rankings. For this purpose, top-k lists drawn from each global ranking for each country and top-k lists drawn from the national rankings are compared, where k ranged from 5 to 200 based on the degree of overlap between national and global rankings in our sample. In the following subsections, the results of our rank similarity analysis for each country are presented.

Brazil

Figure 2 below summarizes the rank similarity values (M, the Inverse Rank measure; see the Methodology section) obtained between Brazil’s national ranking and the global rankings. The evaluation compares each global ranking with Brazil’s national ranking for the top k = 10, 20, 30, 50 and 80 universities. The numbers of institutions shared between Brazil’s national ranking and the global rankings are 40 (URAP), 11 (WEBOMETRICS), 8 (LEIDEN), 6 (ARWU), 5 (QS), 5 (HEEACT) and 2 (THE), respectively. Figure 2 indicates that, when the similarity measures are calculated for the top 10 universities for each pair, five global rankings (URAP, WEBOMETRICS, ARWU, HEEACT and QS) exhibit a stronger similarity to Brazil’s Folha national ranking. In contrast, THE and LEIDEN have lower M values than the others. Since the number of overlapping institutions ranges between 2 and 11 for every global ranking except URAP, the M scores of most global ranking systems decrease as k increases. URAP continues to maintain a high similarity value as k is increased from 10 to 80. This shows that the URAP ranking has a high degree of similarity with Brazil’s Folha national ranking.

The high degree of similarity between the URAP and Folha rankings can be accounted for by the fact that both rankings emphasize research indicators as a measure of performance. Another finding is that URAP, ARWU and HEEACT have similar scores for the top ten universities, since these ranking systems emphasize research indicators. However, the scores diverge as the k value increases, where ARWU’s and HEEACT’s scores systematically decrease. This is because ARWU and HEEACT cover only six and five universities from Brazil respectively, and the M score decreases for each non-matching university, whereas URAP covers 40 of the 191 universities ranked by Folha and orders them consistently enough to maintain a high M score. The QS ranking also has a high degree of similarity, especially for small k values. Since the reputation survey constitutes an important part of both the QS and Folha rankings, the similarity between these two systems suggests that both research and reputation indicators provide similar rankings in the Brazilian context. Finally, WEBOMETRICS has a relatively high M similarity score with the Folha ranking. This may be due to the high correlation of web presence and the top 10 % measures with other academic performance measures in the context of Brazilian universities.

Chile

Figure 3 below depicts the M values obtained between each global ranking and Chile’s El Mercurio national ranking, across multiple k values. The numbers of institutions shared between Chile’s national ranking and the global rankings are 14 (URAP), 3 (QS), 2 (LEIDEN), 2 (ARWU), 2 (HEEACT) and 1 (WEBOMETRICS), respectively. No Chilean university appears in the THE ranking, and only one appears in WEBOMETRICS, so both rankings are excluded from the similarity analysis.

When the top five universities are compared, LEIDEN, ARWU and QS have the highest M values with respect to the national El Mercurio ranking. LEIDEN’s and ARWU’s M plots are identical since they include the same two Chilean universities in the same order. Finally, URAP’s similarity score stays around 0.60, whereas HEEACT’s similarity is the lowest.

Chile’s national ranking emphasizes indicators grouped under educational and institutional properties, whereas research output constitutes a negligible weight among other indicators. The QS ranking has similar weights for both educational and institutional properties. ARWU’s and LEIDEN’s good performance at smaller k values is due to their correct prediction of the top two institutions in Chile’s national ranking, but their M values decrease as the size of the compared lists increases. In the Chilean context, the top ranking universities seem to score high on both reputation and research performance indicators, which may explain why global rankings that emphasize academic performance and reputation measures have converging rank similarity values in Chile.

China

Figure 4 below summarizes the M values obtained between each global ranking and the Greater China Ranking when the top 10, 20, 30, 50, 80 and 100 universities are compared. The numbers of institutions shared between the Chinese national ranking and the global rankings are 64 (URAP), 38 (ARWU), 37 (LEIDEN), 28 (QS), 28 (HEEACT), 21 (THE) and 19 (WEBOMETRICS), respectively.

Figure 4 shows that overall the HEEACT ranking is the most similar to the Chinese national ranking. This is followed by the WEBOMETRICS, QS and THE rankings. URAP, ARWU and LEIDEN have lower scores, especially for the top 10 universities. Moreover, none of the rankings achieved a similarity score higher than 0.6. This finding suggests that the indicators specific to the Chinese case differentiate the national ranking from the global rankings.

Poland

Figure 5 below shows the M values obtained between each global ranking and Poland’s Perspektywy national ranking across multiple k values. The numbers of institutions shared between Poland’s national ranking and the global rankings are 32 (URAP), 5 (WEBOMETRICS), 3 (LEIDEN), 2 (QS), 2 (ARWU), 2 (HEEACT) and 2 (THE), respectively.

The URAP ranking has a perfect similarity score for the top 5, and it maintains a similarity value above 0.80 as the k value increases. HEEACT and WEBOMETRICS follow URAP with an initial similarity above 0.80, which eventually decreases due to the low coverage of Polish universities in these rankings. THE, LEIDEN and QS have smaller M values, which indicates a discrepancy in the way they rank the top two schools in Poland relative to the national ranking.

The Polish national ranking system is mainly based on national-only indicators (81 %) and emphasizes educational and institutional parameters (75 %) in contrast to research-based indicators (25 %). This indicator distribution does not match URAP, which is strictly based on size-dependent, research-based indicators. However, URAP scored the highest M values among all global rankings. This result seems to suggest that there is a strong correlation between educational/institutional indicators and size-dependent research output measures, especially in the top 5, in the Polish context.

Turkey

Figure 6 below summarizes the M values obtained between each global ranking and Turkey’s national ranking across multiple k values. The numbers of institutions shared between Turkey’s national ranking and the global rankings are 73 (URAP), 8 (QS), 5 (THE), 2 (LEIDEN), 2 (WEBOMETRICS) and 1 (ARWU), respectively. No Turkish university appears in the HEEACT ranking. Due to an inadequate number of data points, ARWU and HEEACT are excluded from further analysis.

As far as the top 10 is concerned, URAP and LEIDEN are tied with the highest M similarity scores, followed by the relatively lower M scores of THE, WEBOMETRICS and QS. QS’s top 10 in particular is very different from the national ranking, which produced a very low M value. As k is increased from 10 to 100, URAP’s M score gradually increases, whereas LEIDEN’s score declines because it ranks only 2 Turkish universities. The results indicate that the top 10 positions in particular differ between URAP and the Turkish national ranking. The M measures for URAP and the national ranking increase as the number of comparisons is increased, which suggests more consistency between the two lists at lower ranks. The Turkish national ranking includes several size-independent indicators that seem to favour different Turkish universities in the top 10 and 20 lists in contrast to URAP, which is strictly based on size-dependent ranking indicators.

UK

Figure 7 below summarizes the M values obtained between each global ranking and the UK national ranking when the top 10, 20, 30, 50, 80, 100 and 120 universities are compared. The numbers of institutions shared between the UK’s national ranking and the global rankings are 68 (URAP), 46 (QS), 46 (THE), 35 (ARWU), 35 (LEIDEN), 34 (HEEACT) and 32 (WEBOMETRICS), respectively.

The results indicate that LEIDEN, QS, ARWU and WEBOMETRICS have a higher degree of similarity with the UK national ranking, especially when the top 10 and 20 lists are compared. THE, URAP and HEEACT perform in a similar way initially with lower M values, but as the k values increase THE exhibits a higher degree of similarity than URAP and HEEACT. The UK national ranking heavily relies on national-only ranking indicators (89 %), with an emphasis on educational factors (50 %) followed by research (25 %) and institutional facts and figures (25 %). QS has the most similar profile along these dimensions among the global ranking systems included in the analysis, which seems to contribute to the higher degree of similarity obtained for the QS ranking, since reputation surveys play a key role in both QS and the UK national ranking. The LEIDEN ranking is based on the proportion of publications of an institution that belong to the top 10 % most frequently cited publications in a number of fields, which seems to correlate well with the national UK ranking. Moreover, ARWU relies on indicators that correlate with reputation, such as the presence of Nobel laureates and highly cited researchers at an institution. This may account for ARWU’s position as the third best predictor of the national ranking among global ranking systems.

USA

Figure 8 below summarizes the M values obtained between each global ranking and the US News national ranking when the top 10, 20, 30, 50, 80, 100, 150 and 200 universities are compared. The numbers of institutions shared between the USA’s national ranking and the global rankings are 129 (WEBOMETRICS), 82 (QS), 69 (URAP), 63 (ARWU), 60 (THE), 54 (LEIDEN) and 50 (HEEACT), respectively.

Since American universities are heavily represented in all global rankings, comparisons up to top 200 universities can be made in this case. Figure 8 indicates that ARWU is the most similar global ranking to the US national ranking. This is followed by QS, HEEACT, WEBOMETRICS and URAP. LEIDEN and THE have lower similarity values especially when the top 10 and 20 universities are compared. Reputation is an important parameter in the US News and Report Ranking, which correlates well with the presence of Nobel laureates and highly cited researchers at an institution. Since ARWU gives high weights to such ranking indicators, this seems to be a relevant factor for explaining the similarity observed between the two rankings. The second most similar ranking QS also heavily relies on reputation surveys.

The influence of ranking coverage on similarity analysis

URAP ranks the top 2000 universities in the world, which is more than twice the number of universities ranked in the second largest ranking analysed in the paper. A ranking with higher coverage may have an advantage over other rankings in the similarity analysis since it tends to have more overlap with national rankings, particularly for developing countries. In order to test whether the sample size introduces a bias in similarity measures, we considered only the top 500 universities from URAP and computed the M values with respect to each national ranking. Figure 9 below shows a scatterplot that summarizes the differences between the M values for each version of URAP. Table 13 in the “Appendix” shows the M measures and the frequency of overlap for both URAP versions with respect to each national ranking. The M scores obtained for both versions were strongly correlated, r = 0.79, p < 0.01. Figure 9 suggests that the M measures for the top 10 remain near or on the reference line, so they are not strongly influenced by the filtering process. Moreover, M measures are not influenced in the case of China and the US, whereas the deviation for the UK is negligible. The largest difference occurs for the developing countries, namely Brazil, Chile, Poland and Turkey. However, the difference is rather small when k equals 10 and 20 (i.e. when the top 10 and top 20 lists are compared). The differences at larger k values arise mainly because, when URAP is filtered to the top 500 universities, the degree of overlap decreases for countries such as Chile, Poland, Turkey and Brazil, since only a few universities from these countries are ranked in the top 500 (see Table 13 for the overlap and M statistics). Since the M measure gives more weight to matches at the top, this situation did not change the rank similarities for the top 10 and top 20 comparisons. Moreover, in the case of Poland and Turkey, URAP remained one of the top predictors despite the decrease in overlap. Therefore, the properties of the M statistic as a similarity measure and the strategy of making comparisons at multiple levels (e.g. top 10, 20, 30) seem to provide a fair comparison between global and national rankings, despite the differences among global rankings in terms of their coverage.
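The filtering step described above (restricting a global ranking to its top 500 and to one country before comparison) can be sketched as follows. The record layout, the function name `country_ranks` and the sample institutions are hypothetical. The sketch also assumes that institutions sharing a world rank (for example, a rank band) receive the same within-country rank and that the next institution takes its positional rank; the source does not specify the tie convention.

```python
def country_ranks(global_ranking, country, max_world_rank=None):
    """Within-country ranks for one country of a global ranking.

    global_ranking: iterable of (world_rank, institution, country) tuples.
    max_world_rank: optional cut-off such as 500 (None keeps every entry).
    Returns a dict mapping institution -> within-country rank.
    """
    rows = sorted(
        (world_rank, name)
        for world_rank, name, c in global_ranking
        if c == country
        and (max_world_rank is None or world_rank <= max_world_rank)
    )
    ranks = {}
    previous_world_rank = None
    current_rank = 0
    for position, (world_rank, name) in enumerate(rows, start=1):
        if world_rank != previous_world_rank:  # new rank only when not tied
            current_rank = position
            previous_world_rank = world_rank
        ranks[name] = current_rank
    return ranks


# Hypothetical entries, for illustration only.
sample = [
    (12, "Univ A", "X"),
    (430, "Univ B", "X"),
    (401, "Univ C", "X"),
    (401, "Univ D", "X"),
    (900, "Univ E", "X"),
    (50, "Univ F", "Y"),
]
full = country_ranks(sample, "X")
top500 = country_ranks(sample, "X", max_world_rank=500)
```

The two resulting dictionaries can then be compared against the same national ranking at several k values to see how much the cut-off changes the similarity scores.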

Fig. 9 Comparisons of rank similarity measures computed for the original and the filtered versions of the URAP ranking. The reference line y = x is provided to aid the comparison. Multiple points with the same color indicate M values for the top 10, 20, 30, etc. lists for the corresponding national ranking

Summary

The means and standard deviations of the M scores for each global ranking are given in Table 12 below. The variation of M values seems to be smaller for developed countries, which have a higher number of universities in global rankings, than for developing countries. In particular, the standard deviations are especially high for developing countries such as Brazil, Chile, Poland and Turkey. China, the UK and the US have less variability among average M scores.

Discussion

This section discusses the results reported in the previous section in response to the three main research questions stated in the introduction. In particular, we summarize the similarities and differences among national ranking systems as well as between national and global ranking systems in terms of the indicators they employ, and discuss to what extent global rankings can substitute national rankings.

Similarities and differences among national ranking systems

Our analysis of national rankings showed considerable variability across countries in terms of the total number of ranking indicators employed by each system. A national ranking system on average employs 22 different indicators. National rankings for UK, US, Macedonia and Malaysia employ more than 30 indicators, whereas Pakistan and Chile rankings employ 9 indicators, which is the minimum.

The indicators were categorized under three dimensions, including size dependency, input/output characteristics and major categories. In terms of size dependency, national rankings tend to favor size-independent indicators (Mean = 0.66, SD = 0.20). Brazil, Turkey, Pakistan and Lithuania tend to deviate from this pattern as they equally favor size-dependent criteria.

As far as input/output characteristics are concerned, national rankings tend to equally favor output (Mean = 0.36, SD = 0.21) and institutional properties (Mean = 0.35, SD = 0.15), followed by input (Mean = 0.19, SD = 0.11) and process (Mean = 0.10, SD = 0.10) indicators. Brazil, China and Turkey differ from other national rankings as they emphasize output measures more than the others. Poland, Lithuania and Kazakhstan put greater weight on institutional properties.

Finally, according to major indicator categories, national rankings tend to emphasize educational parameters (Mean = 0.53, SD = 0.24) and treat research (Mean = 0.27, SD = 0.21) and institutional facts and figures equally (Mean = 0.21, SD = 0.10). National rankings for Turkey and Brazil tend to deviate from this pattern as they put greater emphasis on research indicators. US, UK, Kazakhstan, Lithuania and Chile strongly emphasize educational parameters. China stands out among other rankings in terms of its weight on institutional facts and figures (Mean = 0.45).

To sum up, national ranking systems show greater similarity in terms of the weight they assign to size-independent and educational indicators. This is possibly because per capita measures (e.g. students per faculty, publications per faculty, etc.) are easier to collect at the national and institutional levels. The national ranking systems place more emphasis on educational indicators, as their target population is mainly local students and their families who seek guidance for selecting a university for higher education.

Similarities and differences between national and global ranking systems

As far as global ranking systems are concerned, size-dependent (Mean = 0.48, SD = 0.29) and size-independent (Mean = 0.44, SD = 0.33) measures seem to be equally emphasized. URAP and HEEACT differ from other rankings as they strongly emphasize size-dependent measures. QS and THE differ from the rest due to their emphasis on subjective indicators based on reputation surveys. As far as input/output characteristics are concerned, global rankings tend to emphasize output measures (Mean = 0.69, SD = 0.32) followed by institutional profiles (Mean = 0.27, SD = 0.28). The THE ranking stands out from the rest since it is the only global ranking including indicators reflecting input characteristics. Finally, according to major indicator categories, global ranking systems tend to emphasize research (Mean = 0.63, SD = 0.27), followed by education (Mean = 0.24, SD = 0.20) and institutional facts and figures (Mean = 0.13, SD = 0.15). Again HEEACT and URAP stand out as they strictly favor indicators that tap research performance. The high standard deviation values across all three categorization dimensions indicate that there is considerable variability among global rankings, which highlights the variety of approaches employed by different global ranking systems. Therefore, it is not surprising to see one university at a higher rank in one global ranking system compared to the others. For instance, filtering and sorting US universities in the global rankings reveals that Princeton University is ranked 3rd in LEIDEN, 5th in THE, 6th in QS and 6th in ARWU, whereas it is ranked 29th in HEEACT, 30th in WEBOMETRICS and 40th in URAP.

When national and global rankings are compared in terms of the types of indicators they employ, national rankings tend to rely on a larger number of indicators. Overall, 166 indicators are unique to national rankings, whereas 20 indicators are used by global rankings only, and 24 indicators are employed by both national and global systems. When different groupings of these indicators are compared, significant differences between national and global rankings are observed. In particular, national and global ranking systems differ significantly in terms of size dependency, input/output characteristics and indicator subcategory groupings. Therefore, these findings suggest that national ranking systems do not necessarily constitute a sub-class of global ranking systems.
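The indicator counts reported above can be turned into simple overlap ratios. The following is a minimal sketch, assuming that the three counts (166, 20 and 24) partition the full set of indicators; the variable names and the choice of the Jaccard ratio are illustrative and are not taken from the study.

```python
# Indicator counts reported in the text.
national_only = 166
global_only = 20
shared = 24

# Totals implied by the three counts (assumed to partition the indicator set).
national_total = national_only + shared
global_total = global_only + shared
union_total = national_only + global_only + shared

# Jaccard overlap: shared indicators relative to all distinct indicators.
jaccard_overlap = shared / union_total
# Share of each side's indicators that the other side also uses.
share_of_global_in_national = shared / global_total
share_of_national_in_global = shared / national_total

print(f"Jaccard overlap: {jaccard_overlap:.3f}")
print(f"Global indicators also used nationally: {share_of_global_in_national:.3f}")
print(f"National indicators also used globally: {share_of_national_in_global:.3f}")
```

Under this assumption, 20 of the 44 indicators used by global rankings (about 45%) do not appear in any national ranking, and most national indicators have no global counterpart, so neither indicator set contains the other.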

National and global rankings are also compared in terms of their rank similarities in an effort to observe to what extent global rankings can predict the results of national rankings. Although our analysis of indicator categories suggests important differences between national and global rankings, some of these indicators may be expected to correlate with each other and hence produce similar ranking outcomes. Our rank similarity analysis suggests that in some cases there is a high degree of similarity between national and global rankings (e.g. URAP and Poland, QS and UK) as measured by the M scores. However, the high degree of variability in similarity scores suggests that this is not a general pattern. In other words, no single global ranking consistently outperforms the others as a predictor of all national rankings.

This variability seems to be partly due to the variability in the number of universities covered in each global ranking. Most global rankings include the top 500-700 universities, among which US, UK and Chinese universities are more likely to be represented. Despite this difference in coverage, there are also cases with a high degree of similarity. For example, URAP achieved the highest M score for the Brazilian and Polish rankings, whereas QS and LEIDEN have the highest similarity scores for the UK national ranking. None of the global rankings has a high similarity score with respect to the Chinese ranking. The observed variation of M values seems to be smaller for developed countries (UK and US), which have a higher number of universities in global rankings, while developing countries, which have fewer universities in global rankings, show higher variation of M values. For example, average M scores between the US national ranking and global rankings are between 0.5 and 0.6, which suggests a moderate level of similarity. In other words, despite the initial variability of similarity for top 10 and 20 comparisons, the outcomes of the US national ranking and global rankings are on average almost equally similar to each other.
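To illustrate how a similarity score of this kind behaves, the sketch below implements an inverse-rank similarity between two top-k lists. It is not presented as the exact M formula used in this study; it assumes one common formulation in which each item is weighted by the reciprocal of its rank, an item missing from a list is treated as if it were ranked k + 1, and the total difference is normalized by its value for two disjoint lists. The function name and the university labels (U1, U2, ...) are hypothetical.

```python
def inverse_rank_similarity(list_a, list_b):
    """Return a similarity in [0, 1] between two top-k lists of equal length.

    Assumed formulation (not necessarily the study's exact M measure):
    items are weighted by 1/rank, an item absent from a list is treated
    as ranked k + 1, and the summed absolute weight difference is
    normalized by the value obtained for two completely disjoint lists.
    Each list is assumed to contain no duplicate items.
    """
    if not list_a or len(list_a) != len(list_b):
        raise ValueError("both lists must be non-empty and of equal length")

    k = len(list_a)
    rank_a = {item: pos for pos, item in enumerate(list_a, start=1)}
    rank_b = {item: pos for pos, item in enumerate(list_b, start=1)}
    missing_weight = 1.0 / (k + 1)

    distance = 0.0
    for item in rank_a.keys() | rank_b.keys():
        weight_a = 1.0 / rank_a[item] if item in rank_a else missing_weight
        weight_b = 1.0 / rank_b[item] if item in rank_b else missing_weight
        distance += abs(weight_a - weight_b)

    # Largest possible distance: the two lists share no items at all.
    max_distance = 2.0 * sum(1.0 / i - missing_weight for i in range(1, k + 1))
    return 1.0 - distance / max_distance


# Hypothetical top-5 lists: a national ranking and a global ranking
# filtered for the same country.
national_top5 = ["U1", "U2", "U3", "U4", "U5"]
global_top5 = ["U1", "U3", "U2", "U5", "U6"]
print(round(inverse_rank_similarity(national_top5, global_top5), 3))
```

Because the weights are reciprocal ranks, disagreements near the top of the lists reduce the score more than disagreements near the bottom, which is one reason top 10 and top 20 comparisons can vary more than comparisons over longer lists.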

Can global rankings substitute for national rankings?

Our indicator categorization and rank similarity analyses suggest a non-trivial picture, where in some rare cases global rankings such as URAP exhibit a high degree of similarity with national rankings such as those of Poland, Chile and Brazil, whereas in other cases the national rankings deviate from all global rankings, as in the case of China. Therefore, considering a rich set of indicators sensitive to local properties at the national level seems to be a necessity, particularly for developing countries that are under-represented in the top 500-700 lists of most global rankings. Ranking systems such as URAP partially address this need by considering a larger number of universities. However, focusing on research performance alone does not seem to address the need for ranking criteria emphasizing other important aspects such as the quality of education and institutional properties.

Conclusion

Overall, our findings indicate that national rankings tend to include a larger number of indicators that primarily focus on educational and institutional parameters, whereas global ranking systems tend to have fewer indicators mainly focusing on research performance. Rank similarity analysis between national and global rankings for each country suggests that, with the exception of a few instances, global rankings do not strongly predict the national rankings. In countries like Brazil, Chile and Poland, some of the global ranking systems succeeded in predicting the national ranking, which suggests that in those countries there is probably a stronger correlation between research performance indicators and the educational/institutional parameters that are typically given different weights by global and national systems. In general, it is difficult for global ranking systems to gather reliable per capita data at the national level, which may account for the strong emphasis on size-dependent, bibliometric indicators in global rankings. The proliferation of national rankings, especially in developing countries, will not only provide us with a better picture of the status of higher education in each country, but will also open the possibility of improving existing global ranking methodologies through comparative analysis and benchmarking.

Notes

There are many ranking systems published in the UK, predominantly by news media such as The Times, The Guardian, etc. The Complete University Guide, the only ranking not published by a news media organization, was selected for the present study.

References

Aguillo, I. F., Bar-Ilan, J., Levene, M., & Ortega, J. L. (2010). Comparing university rankings. Scientometrics, 85(1), 243–256.

ARWU. (2011). Greater China ranking. http://www.shanghairanking.com/Greater_China_Ranking/Greater_China_Ranking2011.html. Accessed 12 June 2013.

ARWU. (2012a). Macedonian higher education institutions ranking. http://www.shanghairanking.com/Macedonian_HEIs_Ranking. Accessed 12 June 2013.

ARWU. (2012b). Academic ranking of world universities. http://www.shanghairanking.com/. Accessed 12 June 2013.

Bar-Ilan, J., Levene, M., & Lin, A. (2007). Some measures for comparing citation databases. Journal of Informetrics, 1(1), 26–34.

Bastedo, M. N., & Bowman, N. A. (2011). College rankings as an interorganizational dependency: Establishing the foundation for strategic and institutional accounts. Research in Higher Education, 52(1), 3–23.

Bowden, R. (2000). Fantasy higher education: university and college league tables. Quality in Higher Education, 6(1), 41–60.

Bowman, N. A., & Bastedo, M. N. (2009). Getting on the front page: Organizational reputation, status signals, and the impact of US News and World Report on student decisions. Research in Higher Education, 50(5), 415–436.

Centre for Science and Technology Studies. (2012). The Leiden ranking. http://www.leidenranking.com/. Accessed 12 June 2013.

Cybermetrics Lab. (2012). Webometrics ranking of world universities. http://www.webometrics.info. Accessed 12 June 2013.

Dill, D. D., & Soo, M. (2005). Academic quality, league tables, and public policy: A cross-national analysis of university ranking systems. Higher Education, 49(4), 495–533.

El Mercurio. (2012). Ranking de Calidad en la Docencia de Pregrado. http://rankinguniversidades.emol.com/category/rankings/2012/. Accessed 12 June 2013.

Federkeil, G., van Vught, F. A., & Westerheijden, D. F. (2012). An evaluation and critique of current rankings. In F. A. van Vught & F. Ziegele (Eds.), Multidimensional ranking: The design and development of U-Multirank (pp. 39–70). New York: Springer.

Folha. (2012). Ranking Universitário Folha. http://ruf.folha.uol.com.br/2012/. Accessed 12 June 2013.

Griffith, A., & Rask, K. (2007). The influence of the US News and World Report collegiate rankings on the matriculation decision of high-ability students: 1995–2004. Economics of Education Review, 26(2), 244–255.

Hazelkorn, E. (2007). The impact of league tables and ranking system on higher education decision making. Higher Education Management and Policy, 19, 1–24.

Hazelkorn, E. (2008). Learning to live with league tables and ranking: The experience of institutional leaders. Higher Education Policy, 21, 193–215.

Higher Education Commission of Pakistan. (2010). Quality and research based ranking of Pakistani HEIs. http://www.hec.gov.pk/InsideHEC/Divisions/QALI/Others/RankingofUniversities/Pages/Introduction.aspx. Accessed 12 June 2013.

Independent Kazakhstan Quality Assurance Agency for Education. (2011). University rankings 2011 in Kazakhstan. http://iqaa.kz/rankings/. Accessed 12 June 2013.

Institute for Higher Education Policy (IHEP). (2007). College and university ranking systems: Global perspectives and American challenges. Washington, DC: IHEP.

Malaysian Qualifications Agency. (2011). The MQA rating system for higher education institutions in Malaysia for 2011 (SETARA ‘11). http://www.mqa.gov.my/portal2012/red/en/ratings_setara11.cfm. Accessed 12 June 2013.

National Taiwan University. (2012). Performance ranking of scientific papers for world universities (formerly known as the HEEACT ranking). http://nturanking.lis.ntu.edu.tw. Accessed 12 June 2013.

Nielsen, J. (1995). Card sorting to discover the users’ model of the information space. http://www.useit.com/papers/sun/cardsort.html. Accessed 10 December 2013.

Perspektywy Education Foundation. (2012). Perspektywy University ranking. http://www.perspektywy.org/. Accessed 12 June 2013.

Quacquarelli Symonds Company. (2012). The QS world university rankings. http://www.iu.qs.com/university-rankings/. Accessed 12 June 2013.

Saisana, M., d’Hombres, B., & Saltelli, A. (2011). Rickety numbers: Volatility of university rankings and policy implications. Research Policy, 40, 165–177.

Salmi, J., & Saroyan, A. (2007). League tables as policy instruments: Uses and misuses. Higher Education Management and Policy, 19, 1–39.

SCImago Research Group. (2012). SCImago global institutions rankings. http://www.scimagoir.com/. Accessed 12 June 2013.

Sponsler, B. A. (2009). The role and relevance of rankings in higher education policymaking. Issue Brief. Institute for Higher Education Policy.

The Complete University Guide. (2012). http://www.thecompleteuniversityguide.co.uk. Accessed 12 June 2013.

Times Higher Education. (2012). The world university rankings. http://www.timeshighereducation.co.uk/world-university-rankings/. Accessed 12 June 2013.

University Ranking by Academic Performance. (2012a). URAP ranking of Turkish universities. http://tr.urapcenter.org/. Accessed 12 June 2013.

University Ranking by Academic Performance. (2012b). URAP ranking of top 200 universities. http://www.urapcenter.org/. Accessed 12 June 2013.

U.S. News & World Report. (2012). America’s best colleges and universities. http://colleges.usnews.rankingsandreviews.com/. Accessed 12 June 2013.

Usher, A., & Savino, M. (2007). A global survey of university league tables. Higher Education in Europe, 32(1), 5–15.

Van Dyke, N. (2005). Twenty years of university report cards. Higher Education in Europe, 30(2), 103–125.

Van Leeuwen, T. N., Moed, H. F., Tijssen, R. J. W., Visser, M. S., & van Raan, A. F. J. (2001). Language biases in the coverage of the Science Citation Index and its consequences for international comparisons of national research performance. Scientometrics, 51(1), 335–346.

Van Raan, A. F. J. (2005). Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics, 62(1), 133–143.

Veidas Magazine. (2012). Lietuvos Universitetų Reitingas 2012. http://www.veidas.lt/lietuvos-universitetu-reitingas-2012. Accessed 12 June 2013.

Appendix

See Table 13.

Cite this article

Çakır, M.P., Acartürk, C., Alaşehir, O. et al. A comparative analysis of global and national university ranking systems. Scientometrics 103, 813–848 (2015). https://doi.org/10.1007/s11192-015-1586-6

DOI: https://doi.org/10.1007/s11192-015-1586-6