Abstract

There is a growing application of Artificial Intelligence (AI) in K-12 science classrooms. In K-12 education, students use AI technologies to acquire scientific knowledge, ranging from automated, personalized virtual scientific inquiry to generative AI tools such as ChatGPT, Sora, and Google Bard. These AI technologies have various strengths and limitations in facilitating students’ engagement in scientific activities. However, there is no framework for developing K-12 students’ epistemic considerations of the interaction between the disciplines of AI and science when they produce, revise, and critique scientific knowledge using AI technologies. To address this gap, we conducted a systematic review of studies that implemented AI technologies in science education. Employing the family resemblance approach as our analytical framework, we examined the epistemic insights into relationships between science and AI documented in the literature. Our analysis centered on five distinct categories: aims and values, methods, practices, knowledge, and social–institutional aspects. Notably, we found that only three studies mentioned epistemic insights concerning the interplay between scientific knowledge and AI knowledge. Building on these findings, we propose a unifying framework to guide future empirical studies, focusing on three key elements: (a) AI’s application in science, (b) the similarities, and (c) the differences in epistemological approaches between science and AI. We conclude by proposing a development trajectory for K-12 students’ learning of AI-science epistemic insights.

1 Introduction

Artificial Intelligence (AI) has proliferated in the past decade. Applications in various areas, including healthcare, education, social media, robotics, and entertainment, depend on AI technology (Su & Yang, 2022). For instance, GPT-4 and Google Bard have human-like capabilities such as reading images, writing program code, and solving mathematical problems. Owing to the prominence of AI in our society, educational researchers have argued that K-12 students need to develop AI literacy (Druga et al., 2019; Ng et al., 2021, 2022). Some scholars conceptualize AI literacy as a set of cognitive skills and affective attitudes (Ng et al., 2021). For example, Ng et al. (2021) define the components of AI literacy as knowing and understanding AI, using and applying AI, evaluating and creating AI, and AI ethics. The first three components follow the cognitive skill levels in Bloom’s taxonomy (Bloom et al., 1956), while the last concerns the values behind engaging with AI technologies. These components concern the mental functions and affective responses involved in dealing with AI technologies, in contrast to epistemic considerations of where knowledge generated by AI technologies comes from and how its truth can be justified. As literature on the incorporation of AI technologies in science education is emerging, there is a need to conceptualize learning outcomes regarding the epistemic aspects of such incorporation. Such a move can guide teachers’ curriculum planning and instruction in K-12 education.

Without a deeper understanding of the epistemic interactions between AI and science, students might defer to the authority of AI technologies in portraying scientific claims. AI algorithms can either create fake scientific claims or advance scientific research (Sun, 2023). On the one hand, an AI platform, Grover, can generate a news article that falsely claims that vaccines against measles are linked to autism (Robitzski, 2019). On the other hand, AI technologies such as ChatGPT can advance scientific research, for example, climate change research. ChatGPT can facilitate data analysis, communicate climate change information to a wider audience, support decision-making on climate change, and generate climate scenarios that inform policymaking (Biswas, 2023). However, as Biswas (2023) cautions, AI technologies like ChatGPT have several limitations, including a lack of contextual awareness of socio-scientific issues, an inability to understand the intricacies of scientific phenomena and their impacts, and inaccuracies inherited from the dataset used to train ChatGPT. If the next generation does not develop a comprehensive understanding of the strengths and limitations of AI in generating scientific knowledge, they might not be able to use these AI technologies wisely and strategically to learn about, solve, and reason through scientific problems.

More importantly, given the popularity of large pre-trained language models in AI technologies, students and members of the public might use these models for important decision-making, such as whether to be vaccinated against measles. When ChatGPT was released, a larger proportion of users expressed positive sentiments, while only a small proportion expressed concerns about the misuse of ChatGPT (Haque et al., 2022). Hence, we argue that students should develop a deep understanding of the interaction between AI technologies and scientific knowledge so that they can reason critically about scientific knowledge generated by AI language models and make informed decisions. When AI is incorporated in science classrooms, students might raise big questions (Billingsley et al., 2018) about the relationships between science and AI. Students might question the uncertainty of science and perceive that AI can answer all the questions that science cannot (W. J. Kim, 2022). Although the tension between science and AI is important for developing students’ curiosity (Billingsley et al., 2023a), the school curriculum agenda appears to have treated AI and science as a set of cognitive skills. Yet epistemic considerations are important for students to understand the nature, source, and justification of knowledge in AI and science (Mason, 2016). Epistemic insight refers to knowledge about knowledge, how different disciplines interact, and the similarities and differences between disciplines (Billingsley et al., 2012, 2018). In the context of our study, epistemic insight encompasses how the field of AI technologies interacts with the field of science, as well as the similarities and differences between the disciplines of AI and science.

As far as we know, there is no clear conceptualization or rubric that describes epistemic insights into the relationships between science and AI. Without such a conceptualization, it is difficult to move the school curriculum agenda from treating AI literacy in science as a set of cognitive skills to treating it as a set of epistemic insights. More importantly, it is difficult for science or technology education researchers to develop instruments to measure students’ epistemic insights into science and AI. Although emerging work examines the application of epistemic insights into relationships between AI and science in K-12 education (Billingsley et al., 2023a; K. Kim et al., 2023; W. J. Kim, 2022), we argue that more research is needed to consolidate the concepts of epistemic insights into the relationships between AI and science.

Previous systematic reviews have focused on AI in general (Tahiru, 2021), on other disciplines such as language education (Liang et al., 2021), or on other target groups such as higher education (Ouyang et al., 2022). Only one systematic review concerns the application of AI in STEM education (Xu & Ouyang, 2022). Xu and Ouyang (2022) revealed trends in instructional strategies, contexts, and instructors’ involvement in the application of AI in STEM education. However, their review did not address the ways of knowing that teachers and students require to use AI in the teaching and learning of science. Hence, this paper presents a systematic review of the educational literature on epistemic insights into the relationships between AI and science. Our premise is that these ideas about epistemic insights have not been explicitly addressed in the literature but are scattered across its different corners. To distill these ideas from the literature, we applied the categories of the family resemblance approach framework from Erduran and Dagher (2014) to holistically examine and categorize epistemic insights into the relationships between AI and science, making these epistemic insights explicit. Specifically, we systematically collected, screened, and reviewed literature on the interdisciplinary application of AI in science in educational settings from 2012 to 2023. Our work summarizes whether, what, and how the literature has addressed AI-science epistemic insights. For categories of epistemic insights that are lacking in the literature, we fill the research gap by critically reviewing literature from other fields, aiming to foreground a theoretical framework that characterizes AI-science epistemic insights. The following research questions guide the present study:

- RQ1. How many of the surveyed studies consider epistemology as an explicit targeted instructional outcome?

- RQ2. Using the family resemblance approach as an analytical framework, what categories of AI-science epistemic insights are addressed in the literature?

- RQ3. How does the literature conceptualize these categories of AI-science epistemic insights?

2 Literature Review

2.1 Learning Artificial Intelligence (AI) in K-12 Education

With the wider application of big data and artificial neural networks to problem-solving in various disciplines (Ozbay & Alatas, 2020; Yu et al., 2018), Artificial Intelligence (AI) has become a popular topic in the education literature (Tahiru, 2021; Zhai et al., 2021). AI is defined as machines that simulate human intelligence to perform tasks such as visual perception, decision-making, and translation between languages (Cambridge Dictionary, 2023; Su et al., 2022). With machine learning, manual calibration is no longer needed to sustain AI-based human–computer interactions, as machines learn from data to make predictions (Zhai et al., 2021). The general public encounters AI readily because many everyday applications, devices, and services use AI to provide rapid and immediate solutions (Zawacki-Richter et al., 2019). A recent general-purpose AI application is ChatGPT, which provides a human-like conversational interface for generating code and answering text-based inquiries (Lund & Wang, 2023). The extensive real-life applications of AI have led to its popularity in school curricula (Knox, 2020).

Apart from real-life applications of AI, various types of emerging AI technologies facilitate teaching and learning in schools: educational robots, intelligent tutoring systems, automation, and student behavior detection (Xu & Ouyang, 2022). Educational robots include social robots and programming robots: social robots, such as RoboTespian (Verner et al., 2020), interact with students verbally and physically, while programming robots engage students in programming languages (Atman Uslu et al., 2022). Intelligent tutoring systems provide customized feedback and instructions to students; for example, a virtual agent can teach physics concepts and provide appropriate scaffolds by detecting students’ ability (Myneni et al., 2013; cited in Xu & Ouyang, 2022). Moreover, automation provides immediate assessment of students’ responses and generates new tasks for students, such as automated games that facilitate students’ language learning (Higgins & Heilman, 2014). As an example of student behavior detection, Intelligent Science Station technology incorporating a mixed-reality AI system provided guided discovery in a predict-observe-explain sequence with self-explanation (Yannier et al., 2020). Beyond the applications identified by Xu and Ouyang (2022), AI can also be incorporated into university and further education. For example, like scientists, graduate students use large language processing models to analyze Covid-19 vaccine adverse events (Cheon et al., 2023). Another potential application is that graduate students can learn to combine a geographical information system with AI technologies to monitor groundwater for irrigation (Taşan, 2023).

Although AI technologies are widely applied to facilitate teaching and learning at all levels of education, no specific framework theorizes AI literacy in K-12 science education. Many previous studies focused on how researchers can assess students’ scientific understanding using AI technologies, rather than on directly utilizing AI technologies in the teaching and learning of scientific knowledge. For example, some studies concerned the use of AI to automatically assess students’ representations of scientific models (X. Zhai et al., 2022; Zhai, 2021a, 2021b). By contrast, students can apply AI in learning canonical science content knowledge (Deveci Topal et al., 2021), cultivating reasoning skills (Chin et al., 2013), and developing sophisticated mental models (White & Frederiksen, 1989). Despite the potential value of AI in the teaching and learning of science, AI literacy specific to the discipline of science has not been conceptualized. Several conceptualizations of generic AI literacy exist, a popular one being that of Ng et al. (2021):

- Knowing and understanding AI: A basic understanding of the principles and applications of AI is an important component of K-12 students’ AI literacy. AI can be applied to virtual personal assistants, natural language processing, image analytics, and deep learning (Ng et al., 2022). It has widespread applications in the fields of healthcare, business, social media, and autonomous vehicles.

- Using and applying AI: K-12 students construct their own algorithms and logic according to a set of principles to solve problems across contexts (Vazhayil et al., 2019). For example, students learn the principles of coding with micro:bit and write their own code to construct an automated smart home.

- Evaluating and creating AI: K-12 students critically evaluate the design of AI technologies in relation to the contexts of problem-solving (Han et al., 2018). For instance, students construct an automated grocery ordering system that detects the amount of food in the fridge (Hong et al., 2007). This reduces the time people spend checking the amount of food in the fridge and reordering from online grocery shops. Students evaluate each other’s AI grocery ordering systems, examining which one is more user-friendly and provides an optimal solution.

- AI ethics: K-12 education develops students’ attitude that AI should be applied ethically and appropriately in different contexts. Students should abide by data protection policies and take legal responsibility (Javadi et al., 2021). For example, some schools do not allow students to use ChatGPT to complete their homework (Thorp, 2023), even though ChatGPT could be a tool for curating extra-curricular knowledge.

The conceptualization by Ng et al. (2021) is a starting point for enriching our understanding of AI literacy. Not only are students required to understand fundamental skills and techniques in AI (Kandlhofer et al., 2016), but they should also be able to critically evaluate AI technologies (Han et al., 2018; How & Hung, 2019) and develop human-centered considerations when they work with AI (Druga et al., 2019; Gong et al., 2020). Nonetheless, this conceptualization remains generic and does not take into consideration the application of AI in science education. Without such a discipline-specific conceptualization, researchers cannot evaluate how educators help students develop AI literacy in science.

2.2 Epistemologies of Science and AI, AI-Science Epistemic Insights and AI-Science Epistemic Practices

AI literacy in science education arguably involves more than the components of AI literacy theorized by Ng et al. (2021), which are concerned merely with a set of cognitive, higher order thinking skills and ethics. Some science educators, such as Kelly and Licona (2018), argue for the importance of incorporating epistemology into disciplinary “practices.” Students propose, communicate, evaluate, and legitimize knowledge when they are aware of the nature of the discipline (Kelly & Licona, 2018). Specifically, when students evaluate scientific knowledge, they draw on a range of epistemic criteria about what counts as evidence and what counts as justification (Duschl, 2008). When AI literacy is promoted in science education settings, it is important to take epistemology into consideration, as students commonly reason about scientific knowledge by drawing on both cognitive skills and the epistemology of the interaction between AI and science. Their understanding of the nature of AI might be related to how they reason about and evaluate scientific information generated by AI technologies. For example, students commonly perceived AI-generated information as “accurate” (Qin et al., 2020) and “smart” (Demir & Güraksin, 2021); hence, they were more likely to trust information generated by AI technologies (Qin et al., 2020). They might not realize that knowledge generation in the fields of AI and science has limitations when they reason about scientific claims generated by AI technologies. To develop students’ epistemic understanding of AI and science for reasoning about AI-generated scientific information, a clear conceptualization of the interaction between science and AI is needed.

As argued by Billingsley et al. (2023a), students need to develop “epistemic insights” into AI in science education. Epistemic insight refers to knowledge about knowledge: views on the nature of knowledge within disciplines and on how disciplines interact (Billingsley, 2017). Compared with Lederman’s (2006) conceptualization of the nature of science, epistemic insight is a much broader conceptualization. It encompasses general epistemological beliefs, knowledge about specific disciplines, and how various disciplines interact and what makes each distinctive (Billingsley & Hardman, 2017; Konnemann et al., 2018).

Despite numerous research studies on AI literacy, more work is needed to theorize epistemic insight into the domain-general and domain-specific nature of AI and science, as well as the relationships between AI and science. Without such theoretical work, it is difficult to determine what understanding students need to attain to differentiate and draw relationships between science and AI when they engage with AI in science lessons. Some studies reported that students’ conceptions of AI are “uninformed” and “naïve” (K. Kim et al., 2023; Mertala et al., 2022). From students’ point of view, misuse of AI will “destroy mankind” and “may become the fate of humanity” (Mertala et al., 2022). Students perceive such consequences as far more destructive than those of misusing science, which they might associate with, for example, the loss of lives when science is used for military purposes (Bergmann & Zabel, 2018). As with science, students are also inclined to believe in the superiority of AI, assuming that AI is flawless and does not require human calibration (K. Kim et al., 2023). Although students show an understanding that scientific knowledge is tentative and subject to change (Khishfe & Lederman, 2006), their belief in AI might lead them to think that applying AI in science makes scientific knowledge absolute. As suggested by Billingsley et al. (2023a), developing students’ AI literacy in science requires a more explicit discussion of how AI improves or worsens scientific practices. Such discussion can be framed around big questions, such as the following: (a) How might AI facilitate observations and modeling in science? (b) What are the consequences of misusing AI in communicating scientific evidence? Asking these “Big Questions” (Shipman et al., 2002) in lessons reduces the compartmentalization of AI and scientific practices and promotes students’ epistemic curiosity (Billingsley & Fraser, 2018; Billingsley et al., 2023a).

In light of the above, it is important to consider AI-science epistemic insights and how K-12 students can apply them to generate, revise, and evaluate scientific knowledge. Past research has mainly articulated the ways of knowing and characteristics of individual disciplines, which are considered the epistemologies of the respective disciplines (e.g., the nature of science in Lederman (2006)) (Fig. 1). Recently, some scholars, such as Billingsley et al. (2023a), have argued that K-12 students need to consider epistemic insights into the relationships between the disciplines of AI and science. Such AI-science epistemic insights involve students’ understanding of the similarities and differences between the disciplines of AI and science, and of how AI technologies can be applied to the field of science. The purpose of this manuscript is to delineate such AI-science epistemic insights, with the aim of driving the incorporation of AI into K-12 science curriculum and instruction. Eventually, K-12 students will draw on such AI-science epistemic insights to engage in a range of AI-science epistemic practices. For example, K-12 students can develop an awareness that generative AI tools can inherit biases from their underlying large language models, so that they critically evaluate the sources of scientific claims generated by ChatGPT.

2.3 Using Family Resemblance Approach to Conceptualize Epistemic Insights in the Integration of AI in Science Education

Given the focus on the domain-general and domain-specific nature of epistemic insights, we draw on the family resemblance approach (FRA) framework from Erduran and Dagher (2014) to characterize how the literature describes the differences and relationships between AI and science in K-12 education. Their framework can potentially be applied in this study to draw distinctions and relationships between AI and science for several reasons. First, their framework has been empirically applied in science education to examine the power and limits of disciplines. For example, Erduran and Kaya (2018) applied the framework to teacher education and found that teachers became cognizant of the fact that the growth of scientific knowledge depends on the interaction between theory, law, and model.

Second, their framework offers categories that give a holistic account of the various ways in which AI and science interact, as well as the distinctions between them. As with science and religion (Billingsley et al., 2012), some parts of AI and science are complementary while others are not. Drawing on Wittgenstein’s (1958) notion of family resemblance, the framework from Erduran and Dagher (2014) comprises ways of knowing in the cognitive–epistemic system and the social–institutional system: the cognitive–epistemic system consists of aims and values, practices, methods, and knowledge, while the social–institutional system comprises the political, economic, and social dimensions of science (Erduran & Dagher, 2014). Applied to AI and science, the categories can be characterized as follows:

- Aims and values: the cognitive and epistemic objectives of science in relation to those of applying AI, for example, AI as an inclusive tool for equitable access to scientific knowledge for people with disabilities (Watters & Supalo, 2021);

- Practices: the set of epistemic and cognitive activities that connect science and AI, for example, AI technologies facilitating computational modeling of scientific phenomena (Goel & Joyner, 2015);

- Methods: the relationship between ways of inquiry in AI and in science, such as how AI bridges the human–science interface (Guo & Wang, 2020);

- Knowledge: the sources, forms, and statuses of knowledge acquired by AI to facilitate the production of scientific knowledge, such as AI possessing procedural and declarative knowledge for generating scientific knowledge (Gonzalez et al., 2017);

- Social–institutional dimensions: the political, economic, and social dimensions of the interaction between AI technology and science, for example, ethical rules stipulated by organizations to regulate the use of AI and science and prevent unintended consequences (Klemenčič et al., 2022).

We contend that without an analytical framework to anchor our systematic review of epistemic insights into AI in science education, it would be difficult to organize and synthesize the literature on the ways of knowing K-12 students need to acquire when they engage with AI in science. The FRA framework by Erduran and Dagher (2014) shares similarities with the framework of epistemic insights by Billingsley (2017); the former provides concrete categories for characterizing ways of knowing in disciplines, whereas the latter emphasizes interactions and relationships between disciplines (see Table 1 for a summary). Hence, the framework from Erduran and Dagher (2014) offers a solid and holistic account of how the literature discusses the ways of knowing that students need to achieve in K-12 education. The FRA framework accounts for similarities and differences in ways of knowing between the fields of science and AI technology. Specifically, the FRA framework offers five dimensions, namely aims and values, practices, methods, knowledge, and social–institutional dimensions, which provide an analytical lens for disentangling epistemic insights into the relationships between science and AI technology in our systematic literature review. Moreover, according to their framework, applying and evaluating AI fall under the category of practices, while the social–institutional category is coherent with AI ethics among the components of AI literacy (Ng et al., 2021). The categories can thus serve as a departure point for not only differentiating between AI and science but also examining their relationships as discussed in the literature covered by our systematic review.

The most important strength of this framework is that its categories can be applied across disciplines for comparison. Although the framework originally delineated the ways of knowing in science education, its theoretical and empirical applications have been evidenced in wider fields of study (see a systematic review in Author 1 (2022)). Park et al. (2020) applied the framework to analyze the aims and values, and the practices, of science, technology/engineering, and mathematics; Erduran and Cheung (2024) used it to examine the differences between STEM and the arts; Puttick and Cullinane (2021) applied it to account for ways of knowing in geography education; Chan et al. (2023) adapted it in the field of health geography to examine how scientific content in the media affects the public’s everyday mobility practices; and Cheung et al. (2023) applied it to delineate ways of knowing in science communication. As these wide-ranging applications show, the analytical framework from Erduran and Dagher (2014) can potentially disentangle the ways of knowing in science and AI as well as their relationships.

3 Methodology

To map the epistemic insights into the relationships between AI and science in science education, we conducted a systematic review covering 2012 to 2023, a period in which empirical studies on AI and science have proliferated (Xu & Ouyang, 2022). Our study is guided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) principles (Moher et al., 2009).

3.1 Database Search

To locate studies that discuss AI and science in science education, the following databases were selected: Web of Science, Scopus, and ERIC. Filters were applied to restrict results to research articles, book chapters, and peer-reviewed articles in the field of education and educational research published from January 2012 to March 2023. The search strategy was adapted to the specific requirements of each bibliographic database. In line with the research questions, three sets of keywords were used as search terms. First, keywords related to AI and specific AI applications were added (i.e., “AI” OR “artificial intelligence” OR “machine learning” OR “deep learning”). Second, keywords related to science and specific science subjects were added (i.e., “science” OR “Physics” OR “Chemistry” OR “Biology”). Third, the keyword “science education” was added.
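The three keyword groups above can be combined into a single Boolean search string. The sketch below assumes, because the text does not say so explicitly, that terms within a group are joined with OR and that the groups are joined with AND. The helper name `build_query` is hypothetical, and database-specific field tags (such as those used by Web of Science or Scopus) are deliberately left out, since each database has its own syntax.

```python
def build_query(groups):
    """Combine keyword groups into one Boolean search string.

    Assumption (not stated in the review): terms within a group are
    alternatives (OR), and every group must be matched (AND).
    Database-specific field tags are intentionally omitted.
    """
    clauses = []
    for terms in groups:
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Keyword groups as listed in the search strategy.
AI_TERMS = ["AI", "artificial intelligence", "machine learning", "deep learning"]
SCIENCE_TERMS = ["science", "Physics", "Chemistry", "Biology"]
EDUCATION_TERMS = ["science education"]

if __name__ == "__main__":
    print(build_query([AI_TERMS, SCIENCE_TERMS, EDUCATION_TERMS]))
```

Keeping each group as a separate list makes it easy to adapt the string to a particular database, for example by wrapping each clause in that database's own field tag.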

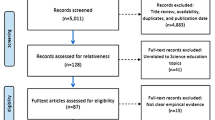

3.2 Searching Criteria and Screening Process

The screening process involved the following procedures: (1) removing the duplicated articles; (2) reading the titles and abstracts and removing the articles according to the inclusion and exclusion criteria; (3) reading the full texts and removing the articles according to the inclusion and exclusion criteria; (4) extracting data from the final filtered articles (see Fig. 2).

In the first round of searching, 627 articles were located through Web of Science, 547 through Scopus, and 131 through ERIC. After duplicates were removed, 1182 articles remained for the second round of screening. In reviewing titles and abstracts against the inclusion and exclusion criteria, 882 articles were excluded as “not related to science education,” 252 as “not related to AI technology,” and 2 as “not in English.” In reviewing the full texts of the remaining 106 articles, 9 articles were excluded because their full texts were inaccessible, 9 because they were published before 2012, and 21 because AI was not used in science education. Moreover, 52 were excluded because they considered AI only as a system for assessment in science education or as a generic teaching and learning tool. We did not include these studies because they had no specific implications for the interaction between ways of knowing in AI and in science. For example, Zhai et al. (2022) explored the use of machine learning to automatically assess students’ scientific models. This kind of study was excluded from our analysis because our review focused on how learners came to understand the interaction between AI and scientific knowledge in the teaching and learning of science. Hence, a total of 15 articles that met the criteria were identified for the systematic review.
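The counts at each screening stage should reconcile: the records left after a stage equal the records entering it minus all exclusions at that stage. A minimal sketch of this bookkeeping, using the identification-stage and full-text-stage figures reported above; the helper `remaining` is hypothetical and is not part of the PRISMA guidelines themselves.

```python
def remaining(start, exclusions):
    """Return how many records remain after subtracting every exclusion count.

    Raises ValueError if the exclusions exceed the number of records screened.
    """
    left = start - sum(exclusions.values())
    if left < 0:
        raise ValueError("exclusions exceed the number of records screened")
    return left


# Identification stage: records located in each database.
located = {"Web of Science": 627, "Scopus": 547, "ERIC": 131}
total_located = sum(located.values())        # 1305 records in total
duplicates_removed = total_located - 1182    # 123 duplicates removed

# Full-text stage: reasons for excluding articles among the 106 reviewed.
full_text_exclusions = {
    "full text not accessible": 9,
    "published before 2012": 9,
    "AI not used in science education": 21,
    "AI used only for assessment or as a generic tool": 52,
}
included = remaining(106, full_text_exclusions)  # 15 articles included
```

The same helper can be applied to any stage of a PRISMA flow diagram to confirm that the reported exclusions sum to the difference between stages.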

3.3 Analytical Framework and Procedure

Epistemic insight serves as an analytical framework; it refers to the interactions of knowledge between two disciplines (Billingsley & Hardman, 2017; Konnemann et al., 2018). In identifying the domain-general and domain-specific nature of science and AI, epistemic insights highlight what understanding students need to attain to differentiate and draw relationships between science and AI when they engage with AI in science lessons. Based on the nature of epistemic insights, we draw on the FRA framework (Erduran & Dagher, 2014) to characterize how the literature describes the differences and relationships between AI and science in K-12 education. The FRA framework has been empirically applied in science education to examine the power and limits of disciplines, and it offers a holistic account of the various ways in which AI and science interact. More importantly, the FRA categories can be applied across disciplines for comparison, allowing us to characterize epistemic insights into the relationships between AI and science and to address the ways of knowing that teachers and students require to use AI in science education.

To answer RQ1, we examined whether the epistemic aspects of disciplines were emphasized or downplayed in the eligible studies by analyzing the targeted learning outcomes of incorporating AI in science education in these studies. According to Xu and Ouyang (2022), the incorporation of AI technologies in STEM classrooms targets different types of learning outcomes: learning performance, pattern, and behavior, which include improvement in content knowledge and discourse between peers; higher order thinking, which refers to using AI technology to develop learners’ ability to evaluate, reason, and solve problems; and affective perception, which refers to using AI technology to develop students’ attitudes towards science and AI technology. An extra aspect, epistemology, was added to our analysis, as our objective was to see to what extent epistemic insights into the relationships between AI and science were emphasized in these studies.

To address RQs 2 and 3, we used the content analysis method (Zupic & Čater, 2015) to classify the 15 eligible articles related to AI and science in science education. Before analyzing the articles, we created a tentative coding scheme with definitions aligned with the categories in the FRA framework. We then critically examined all articles and identified statements related to epistemic insights into the relationships between AI and scientific knowledge. As the original definitions of the FRA categories are specific to the nature of science, we refined the definitions in the coding scheme (see Table 2) according to statements in the surveyed articles concerning the interaction between AI and scientific knowledge.

All four authors were involved in analyzing the 15 eligible articles. In the first phase, 20% of the articles were coded independently by all four coders to calculate coding reliability (Cheung & Tai, 2023); Krippendorff’s (2004) alpha among the four coders was 0.91 in this phase. In the second phase, the four members were divided into two sub-groups of two, with both members of a sub-group coding the same set of articles. If the two members of a sub-group disagreed on a coding interpretation, they discussed their interpretations with the members of the other sub-group in meetings until consensus was reached.
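To make the reliability statistic concrete, the following Python sketch shows one way Krippendorff’s alpha for nominal codes (such as FRA category labels) can be computed from a coincidence matrix, as alpha = 1 - D_o / D_e. It is an illustration only, not the procedure or software used in this review: the function name and the data layout (one list of codes per coded unit, with None where a coder assigned no code) are hypothetical, and the toy data are invented and do not reproduce the reported alpha of 0.91.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data (illustrative sketch).

    `units` is a hypothetical layout: one list per coded unit (e.g. an
    article or statement) holding the category each coder assigned,
    with None where a coder gave no code.
    """
    # Coincidence matrix: each ordered pair of values from different
    # coders within a unit adds 1 / (m_u - 1), m_u = values in that unit.
    coincidence = Counter()
    for codes in units:
        values = [c for c in codes if c is not None]
        m = len(values)
        if m < 2:
            continue  # a unit with fewer than two codes is not pairable
        for a, b in permutations(values, 2):
            coincidence[(a, b)] += 1.0 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n.
    marginals = Counter()
    for (a, _b), weight in coincidence.items():
        marginals[a] += weight
    n = sum(marginals.values())

    # Nominal difference function: 1 if categories differ, else 0.
    observed = sum(w for (a, b), w in coincidence.items() if a != b)
    expected = sum(
        marginals[a] * marginals[b]
        for a in marginals
        for b in marginals
        if a != b
    )
    if expected == 0:
        # Only one category was ever used, so alpha is undefined.
        return float("nan")
    # alpha = 1 - D_o / D_e = 1 - (n - 1) * observed / expected
    return 1.0 - (n - 1) * observed / expected


# Invented toy example: two coders, four units (not the review's data).
toy = [["A", "A"], ["A", "B"], ["B", "B"], ["B", "B"]]
print(round(krippendorff_alpha_nominal(toy), 3))  # 0.533
```

With four coders, each inner list would simply hold four codes; units coded by only one person are skipped because they contribute no pairs.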

4 Findings

The outcomes of our systematic review are presented in this section. First, we present a quantitative view of two trends: (a) the targeted learning outcomes of applying AI in science within the surveyed studies; (b) the number of studies addressing each category of epistemic insights into how science and AI technologies are related. Second, we qualitatively document the epistemic insights into the relationships between science and AI that were identified in the literature. Such a qualitative summary enables the construction of a theoretical framework and instruments for future studies on epistemic insights into the interaction between science and AI technologies.

4.1 RQ1. How Many of These Studies Consider Epistemology as an Explicit Targeted Instructional Outcome?

As shown in Fig. 3, epistemology is a targeted learning outcome in only a small proportion of the eligible studies (N = 3). This shows that only a few studies considered developing learners’ ways of knowing in AI and science as an important instructional outcome. Learning performance, pattern, and behavior is the most frequently targeted learning outcome (N = 5). Other targeted learning outcomes include higher order thinking (N = 3) and affective perception (N = 1).

4.2 RQ2. Using a Family Resemblance Approach as an Analytical Framework, What Categories of AI-Science Epistemic Insights Were Addressed in the Literature?

As justified in the literature review section, we used FRA categories from Erduran and Dagher (2014) to distill epistemic insights into relationships between science and AI in the eligible studies (Fig. 4). Epistemic insights are conceptualized as how two disciplines, in this case science and AI technologies, interact (Billingsley & Fraser, 2018). Epistemic insights were examined across five categories: aims and values, knowledge, methods, practices, and social–institutional aspects. We found that epistemic insights into how scientific and AI technological knowledge interact are addressed in the smallest number of studies (N = 3); the sources, certainty, and forms of AI knowledge regarding its application in science are downplayed in the literature. The most frequently addressed epistemic insights concern how the aims and values of science and AI interact (N = 7).

We also compared how studies published in science education sources and in non-science-education sources addressed epistemic insights into the interaction between AI and science. Science education sources refer to journals, conference papers, or book chapters with a specific readership of science educators. Six of the sources in which eligible studies appeared were classified as science education sources: Asia–Pacific Science Education, Physics Education, Science and Education, Journal of Science Education for Students with Disabilities, International Journal of Science Education, and Journal of Science Education and Technology. We found that science education sources and non-science-education sources articulated each category of AI-science epistemic insights to a similar extent.

4.3 RQ3. How Do They Conceptualize Categories of AI-Science Epistemic Insights in Literature?

In the last decade, the FRA has served as both a theoretical and an analytical framework for characterizing ways of knowing in various disciplines, including STEM (Park et al., 2020), engineering (Barak et al., 2022), STEAM (Erduran & Cheung, 2024), science communication (Cheung et al., 2023), and health communication (Chan et al., 2023). The FRA treats a discipline, such as “AI technologies,” as a family concept that shares some similarities with another discipline (Barak et al., 2022). While commonalities between the two disciplines are acknowledged, discipline-specific features are also taken into account (Irzik & Nola, 2011; Kaya & Erduran, 2016).

The analytical categories from FRA (Erduran & Dagher, 2014) were used to categorize epistemic insights into relationships between AI technologies and science within literature focused on science education issues. These categories include aims and values, practices, methods, knowledge, and the social–institutional dimension. By examining every part of the eligible studies in depth, we identified three types of epistemic insights: (a) application of AI technologies in science; (b) similarities in ways of knowing between science and AI technologies; (c) differences in ways of knowing between science and AI technologies. Table 3 shows the categorization of epistemic insights according to the FRA analytical categories.

Aims and values refer to how the cognitive and epistemic objectives of science are related to those of applying AI, for example, the aim that AI enhances the public’s scientific literacy. They include the following: AI and science aim at producing high-quality intellectual outcomes by mitigating and minimizing errors; AI demonstrates a different degree of creativity from scientists; and the application of AI in science requires interdisciplinary thinking. Methods refer to the relationship between ways of inquiry in AI and science, such as how data are collected and evidence is validated. They include the following: AI and science share similar methods of observation and classification; AI methods involve machine learning algorithms to construct explanations, while scientific methods use evidence to construct explanations; and AI is an interactive method for bridging the human–science interface. Practices refer to the set of epistemic and cognitive activities that connect science and AI; for example, AI can automatically categorize data and facilitate scientific investigations. This category includes the following: integration of AI facilitates the scientific practices of data collection, representation, and classification; AI provides guidance on the visualization of scientific phenomena; integration of AI in science promotes cooperation and communication, which helps learners gain knowledge of the machine learning mechanisms of AI; AI allows learners to investigate scientific phenomena and solve scientific problems in virtual laboratories; and AI facilitates computational modeling of scientific phenomena, which allows more variation than traditional modeling practices. Knowledge refers to how the sources, forms, and limitations of knowledge in AI and science are compared and related. For example, AI draws its knowledge from historical recordings and data sources, while the creation of scientific knowledge requires creativity and imagination.
The category of knowledge also includes the insight that the construction of scientific knowledge requires the weighting of scientific evidence, while in AI programming, representativeness becomes an important consideration for data to become evidence. Lastly, the social–institutional category refers to ways of knowing related to the ethical, social, economic, and political dimensions of science and AI. It includes the following: designers and investigators of AI and science are unbiased and not affected by political or economic factors; ethical rules (e.g., human morality) are needed to prevent unintended consequences of incorporating AI in science; and AI promotes the accessibility of science to people with disabilities. In the following sections, we document how the reviewed studies conceptualize each category of AI-science epistemic insights.

4.3.1 Aims and Values

Aims and values play an important role in K-12 education because they provide a foundation for learners to act (Barak et al., 2022). Seven studies address such epistemic insights into relationships between science and AI. According to Huang and Qiao (2022), the application of AI in science requires the human quality of interdisciplinary thinking, which in this context includes creativity, problem-solving, cooperativity, critical thinking, and algorithmic thinking (Korkmaz & Xuemei, 2019). For instance, applying AI technologies in science requires integrating algorithmic thinking and scientific knowledge to solve problems and handle fragmented data. One example activity mentioned by Huang and Qiao (2022) is the classification of organisms using machine learning models. Human creativity is required to apply machine learning models to identify organisms at risk of extinction, for example, by monitoring coral reef bleaching. However, AI technologies and scientists demonstrate different degrees of creativity (Billingsley et al., 2023a; Goel & Joyner, 2015). Scientific methods and observations are grounded in scientists’ creativity (Billingsley et al., 2023a). Machines such as AI can only supplement scientists’ creativity by analyzing quantities of data too large for humans to manage, as in the classification of merging galaxies and green pea galaxies (Billingsley et al., 2023a). AI therefore demonstrates human-like creativity to a lesser extent than human scientists do.

The commonality shared between AI technologies and science is that both fields aim to produce high-quality intellectual outcomes by minimizing and mitigating errors. However, science and AI have different epistemic endeavors: science aims at generating knowledge claims, while AI technologies aim at generating information for problem-solving and making predictions (W. J. Kim, 2022). W. J. Kim (2022) also argued that errors inevitably emerge in the processes of both AI and science and that both fields seek to address these errors. An example application of AI in science modeling is the Modeling & Inquiry Learning Application (MILA) (Goel & Joyner, 2015). In navigating MILA, students observed ecological phenomena and modeled the causal paths leading to their observations. MILA allowed students to apply conceptual models of ecological systems to create simulations and then assess the results of those simulations. Students thus practiced scientific modeling in the AI application and revised their models according to the errors they made. In another application, machine learning can be incorporated into augmented reality–based laboratories to diversify students’ representational thinking (Sung et al., 2021), although this diversification process is prone to various types of model training errors. Lee et al. (2023) also highlighted the role of errors in developing AI speaker systems to support hands-on science laboratories: errors make the validation process more rigorous, resulting in a more reliable and accurate AI speaker system when students request information on the quantities of chemicals (Lee et al., 2023).

4.3.2 Practices

Engaging students in practices requires them to develop an epistemic understanding of the disciplines, for example, of how experts in the field establish the credibility of knowledge claims (Osborne, 2014). Six of the 15 studies address such epistemic insights into relationships between science and AI in terms of practices. In the framework underpinning the Next Generation Science Standards (NRC, 2012), scientific practices consist of asking questions; planning and carrying out investigations; analyzing and interpreting data; developing and using models; using mathematics and computational thinking; constructing explanations; engaging in argument from evidence; and obtaining, evaluating, and communicating information. While this framework for K-12 science education focuses on a set of scientific practices, our systematic review documented how AI’s ways of knowing facilitated these practices. The studies in our review seldom mention the similarities and differences between AI and science in terms of practices.

AI can facilitate scientific practices, including data collection, representation, and classification (Billingsley et al., 2023a; W. J. Kim, 2022; Zhang et al., 2021). Zhang et al. (2021) documented an AI-based application, bio sketchbook, which facilitated individuals’ observations of the features of organisms. As argued by Erduran and Kaya (2018), visualization is one of the core scientific practices. Bio sketchbook comprises a webpage and a server with data processing models, namely a classification detection model and a sketch-generating model (Zhang et al., 2021). The sketch-generating model provides guidance and correction in drawing and coloring by classifying plants in real time and converting pictures into line drawings (Zhang et al., 2021). Apart from visualization, the integration of AI promotes cooperation and peer communication about the machine learning mechanisms behind AI applications. Machine learning algorithms can be classified as supervised, semi-supervised, unsupervised, and reinforcement learning (Ayodele, 2010). In using machine learning algorithms, learners need to negotiate ways to “teach” the algorithms to handle new data according to the designated problems.

Other potential applications of AI in scientific practices include supporting the identification of scientific problems in virtual laboratories (Billingsley et al., 2023a; Xiaoming Zhai, 2021a, 2021b) and facilitating computational modeling of scientific phenomena (Goel & Joyner, 2015). Goel and Joyner (2015) reported that students equipped with MILA-S could apply conceptual models to generate simulations to test their hypothesized models. Meanwhile, AI-assisted virtual laboratories provided a low-cost and safe way for students to investigate phenomena (Xiaoming Zhai, 2021a, 2021b).

A notable outcome of this systematic review is that the literature focused on the application of AI in scientific practices, whereas similarities and differences between AI practices and scientific practices were less often addressed. For example, practices in using AI technologies can include using algorithms, evaluating the strengths and weaknesses of algorithms, interpreting the precise meaning of algorithm outputs, and relating the outputs to individual scenarios (Aslam & Hoyle, 2022), which differ from the scientific practices described by the NRC (2012). In clinical science, despite the emergence of AI in determining the quantities and types of medicines that doctors administer, medication decisions still depend on human–human interaction between doctors and patients (Aslam & Hoyle, 2022). Lacking human qualities, AI technologies alone cannot generate solutions that match individual human circumstances; human subjective judgment is required to interpret AI outputs.

4.3.3 Methods and Methodological Rules

According to the Cambridge Dictionary (2023), methods are ways of doing something. In total, five studies address epistemic insights into relationships between science and AI in terms of their methods. AI is an interactive method for bridging the interface between science and humans (Gonzalez et al., 2017; Guo & Wang, 2020). Interactivity and the prerequisite of data input are methodological rules of AI, as AI requires human input to generate output, such as scientific knowledge. In the example given by Gonzalez et al. (2017), AI was incorporated into avatars at the Orlando (FL) Science Center, which interacted with visitors through a Turing test of human intelligence. Similarly, a botanical garden in China incorporated AI technologies, feeding visitors’ plant-related voice data into deep learning networks to improve its knowledge-based plant science education system (Guo & Wang, 2020). Interactivity and data input thus help disseminate scientific knowledge to the public.

What AI technologies and science have in common is that both use classification and observation as methods (Billingsley et al., 2023a). Scientific methods can be classified as manipulative hypothesis-testing, non-manipulative hypothesis-testing, manipulative non-hypothesis-testing, and non-manipulative non-hypothesis-testing (Brandon, 1994). AI programming often involves non-manipulative methods or does not always require a hypothesis, which resembles the non-manipulative and non-hypothesis-testing categories of scientific methods. However, science is a subject grounded in materiality (Chappell et al., 2019; Tang, 2022), which refers to the physical properties of a cultural artifact that shape how the object is used. Material inquiry involves argumentation about the properties or behavior of material objects through human interaction with those objects (Tang, 2022). In contrast, AI programming revolves around algorithm composition (Fernández & Vico, 2013), in which materiality plays a less important role.

AI methods involve machine learning algorithms to construct explanations, while scientific methods use evidence to construct explanations (W. J. Kim, 2022). Learning algorithms are important epistemic methods for AI to improve performance, and scientific methods are ways for scientists to find reliable answers (W. J. Kim, 2022). Although AI and science construct explanations by different methods, both depend on rules and data (van der Waa et al., 2021).

4.3.4 Knowledge

Only three studies address how the sources, forms, and nature of AI knowledge and scientific knowledge interact. Gonzalez et al. (2017) argued that AI holds procedural and declarative knowledge, refining the contexts of scientific inquiry according to participants’ responses. This contrasts with W. J. Kim’s (2022) argument that science involves epistemological knowledge. W. J. Kim (2022) also elaborated that the construction of scientific knowledge requires the weighting of scientific evidence, whereas AI does not. Taken together, these studies suggest that epistemic considerations are necessary for generating scientific knowledge but not AI knowledge. From another perspective, however, engaging AI in knowledge production requires epistemic consideration of programming software and algorithm choices. Software choices include Google Cloud Machine Learning Engine, Azure Machine Learning Studio, TensorFlow, H2O.ai, Cortana, IBM Watson, and Amazon Alexa. Billingsley et al. (2023a) argue that scientific knowledge requires the human application of creativity, while AI uses historical recordings and data sources to create knowledge.

One salient epistemic insight into the differences between science and AI that is absent from the literature is that indigenous knowledge plays a more important role in the development of scientific knowledge than in that of AI knowledge. Writing in Science, Mistry and Berardi (2016) argued that solving real-world problems should start with indigenous knowledge and then consult scientific knowledge, as both forms of knowledge are supported by systematic observation of a complex environment over a long period of time. For example, flood control and management in Nigeria drew on indigenous strategies such as planting early-maturing crops and relocating crops to higher ground (Obi et al., 2021). In contrast, some small local communities do not have access to AI technologies and are therefore unable to contribute their indigenous knowledge to the development of those technologies. Moreover, some indigenous peoples understand the concept of time and the natural universe through circular rather than linear logic (Fixico, 2013). Accordingly, the input-output logic of AI technologies might be unfamiliar to some indigenous learners, making these technologies more difficult for them to engage with; alternatively, their indigenous knowledge might lead to innovative uses of AI in generating scientific knowledge.

4.3.5 Social–Institutional Aspects

Our systematic review identified four studies addressing epistemic insights into relationships between science and AI in terms of social–institutional aspects. As AI becomes more prevalent in science, it is crucial to establish ethical rules to prevent unintended consequences. Ethics refers to a system of moral principles that define what is good for individuals and society (Kohlberg & Hersh, 1977). The importance of ethics in planning, conducting, and publishing research has been emphasized in the literature (Creswell, 2012; Guraya et al., 2014). Confidentiality and respect for people are two ethical principles that should be taken into account when collecting and holding personal data. Researchers have a moral responsibility to protect research participants from harm. It is important to inform research participants about who will hold the data, who will have access to the data, and the reason for holding the data. In the AI context, ethical principles should be established to ensure that the use of AI does not violate the rights and dignity of individuals. This includes protecting personal data and privacy and ensuring that AI does not perpetuate biases or discrimination. The use of AI should also be transparent and accountable (Wahde & Virgolin, 2022).

The use of technology to make science more accessible to people with disabilities, through digital accessibility and innovative solutions to overcome accessibility barriers, has been well documented in the literature (Kulkarni, 2019; Laabidi et al., 2014), and AI should be no exception. For instance, AI-powered e-learning environments can be designed to consider the needs of learners with disabilities in terms of content exploitation and delivery (Watters & Supalo, 2021). AI technology can also help readers tame the scientific literature by sorting through search results quickly, which may especially benefit readers with cognitive impairments (Perkel & Van Noorden, 2020).

Regarding the political and economic dimensions of AI, there is growing concern that AI systems could be biased or discriminatory, in contrast with the view of W. J. Kim (2022) that designers and investigators of AI and science are unbiased. For instance, algorithms used for recruitment may be designed to favor certain groups (Hofeditz et al., 2022). Several studies and articles explore the relationship between politics and AI, as well as the ethical considerations involved in developing and deploying AI systems (Ferrer et al., 2021; Hagendorff et al., 2022). Similarly, in science, economic or political interests have sometimes influenced research outcomes; for instance, the tobacco industry has been known to fund research that downplays the health risks associated with smoking (Tong et al., 2005). Although there is no conclusive evidence on the impartiality of designers and investigators of AI and science, it is important to acknowledge the potential impact of political and economic factors on research outcomes. Researchers and designers need to be aware of these biases and take measures to mitigate them.

5 Discussion and Conclusion

The results of our systematic review indicate that only a small number of studies (N = 15) mention ways of knowing when AI technologies are incorporated in science classrooms. Within this pool, only a small proportion of the studies (3 of 15, or 20%) consider epistemic insights into relationships between science and AI as a targeted instructional outcome. In the technology education literature, AI literacy is often conceptualized as a set of skills, attitudes, and ethics (Ng et al., 2021, 2022). Thus, despite the increasing popularity of AI applications in science classrooms, more research is required to conceptualize what is meant by epistemic insights into relationships between science and AI technologies. Developing students’ epistemic insights into both disciplines can promote their epistemic curiosity about why scientists do not rely solely on the ways of knowing of either science or AI (Billingsley et al., 2023a).

5.1 A Unifying Framework that Characterizes AI-Science Epistemic Insights

As far as we know, there is no unifying framework that delineates epistemic insights into relationships between science and AI. To contribute to the science education literature, we draw on our analysis of the literature to construct such a unifying framework (Fig. 5). Interestingly, although only a few studies consider epistemic insights as an instructional outcome, other parts of the literature, including literature reviews and discussion sections, describe the relationships between science and AI in terms of their ways of knowing. Using the FRA categories from Erduran and Dagher (2014) allowed us to distill these ideas and make them explicit. In our unifying framework, the term “relationships” can be understood from three perspectives: similarities in the ways of knowing between AI and science, differences in the ways of knowing between AI and science, and how the ways of knowing of AI are applied to those of science. These perspectives sit at the center of the framework, guiding the fluid movement (indicated by dotted lines) among the different types of ways of knowing in science classrooms, namely aims and values, practices, knowledge, methods, and social–institutional aspects. For each category, the framework summarizes how the surveyed literature documents epistemic insights into relationships between science and AI. As documented in Table 3, some FRA categories address all three perspectives, while others do not. For example, in aims and values, the literature mentions that AI in science requires interdisciplinary thinking (application); that AI and science have different degrees of creativity (differences); and that AI and science aim for high-quality intellectual outcomes by mitigating errors (similarities).
By contrast, for practices, the literature focused only on how AI is applied to scientific practices, without comparing and contrasting the practices involved in doing AI and doing science. We argue that much more research is needed to fill these gaps in the framework.

The nature, sources, and forms of knowledge in science and AI are underrepresented in the literature. In fact, there are both similarities and differences in the ways of knowing between AI and science. For example, we have argued that indigenous knowledge plays a more important role in shaping scientific knowledge than in shaping knowledge of AI technologies (Mistry & Berardi, 2016). Big Questions (Billingsley et al., 2018), such as “Does local cultural knowledge shape AI knowledge, scientific knowledge or both?” and “Is there any weight of evidence during the construction of scientific knowledge and that of AI knowledge?”, can stimulate students’ epistemic curiosity about the interaction between AI and science. Teaching and learning AI in science classrooms should include these Big Questions. Incorporating AI into science classrooms should not simply involve asking students to follow “cookbook” procedures; instead, it should arouse students’ interest in how and why these procedures contribute to the development of knowledge in AI or science. In particular, students need to pay attention to the similarities and differences between the methods used in AI and in science. We extended the findings of our systematic review by relating them to the degree of materiality involved in the methods of each discipline (Chappell et al., 2019; Tang, 2022): materiality is grounded in scientific methods to a greater extent than in AI methods.

Regarding informed beliefs about the social–institutional dimension, science can be influenced by political and economic factors, such as equity, power structures within research groups, and funding sources (Erduran & Dagher, 2014). We have reoriented the view of W. J. Kim (2022) that designers and investigators of AI and science are unbiased, arguing instead that both AI and science can be biased. For example, AI systems can be discriminatory during recruitment processes (Hofeditz et al., 2022), while the tobacco industry has funded scientific research that downplays the health risks of smoking (Tong et al., 2005). Thus, we have modified this viewpoint on epistemic insights into relationships between science and AI in terms of social–institutional aspects in our unifying framework (Fig. 5).

The unifying framework presented here has implications for future empirical research. The epistemic insights described in this framework can form part of a coding framework for characterizing students’ and teachers’ views on the interaction between science and AI. We envisage that future research might carry out interview and questionnaire studies that validate this emerging framework by removing components from and adding components to it. Beyond interview and questionnaire studies, future research could also examine the effectiveness of teaching interventions that explicitly address the unifying framework. Although some studies in our review explore conversations about epistemic insights into relationships between science and AI (Billingsley et al., 2023a; W. J. Kim, 2022), they did not quantitatively measure pre- and post-intervention changes in students’ epistemic insights. To our knowledge, no instrument currently measures students’ epistemic insights into relationships between science and AI. The content of Table 3 can also guide the development of a Likert-scale instrument that measures students’ ways of knowing about relationships between science and AI.

5.2 K-12 Development Trajectories for AI-Science Epistemic Insights

Based on the unifying framework synthesized from the systematic review, we propose a development trajectory for K-12 education (Fig. 6). This trajectory specifies how students come to appreciate the ways of knowing of AI and science and the interaction between these disciplines, as well as the learning outcomes of epistemic insights, organized by FRA categories, in upper primary, lower secondary, and upper secondary education.

In upper primary, students begin to be exposed to AI programming and robotics (Dai et al., 2024). Guided by the FRA framework, upper primary students start to recognize that science and AI concern different questions and to identify differences between the two disciplines. In lower secondary, students begin to appreciate that science and AI are not necessarily two separate disciplines. For example, seventh graders used the Orange program to develop models that predict future weather (Park et al., 2023). At this stage, students begin to discuss the similarities, differences, and relationships between science and AI using each FRA category (Park et al., 2023). In upper secondary, students develop more than a basic understanding of how AI and science interact: they begin to internalize the view that AI can be applied to a range of scientific practices, such as reasoning, investigating, and solving problems related to scientific phenomena. They engage in these practices by drawing on their understanding of the interaction between the AI and science disciplines, guided by the FRA categories.

5.3 Limitations

This systematic review has limitations that should be acknowledged. Its scope was restricted to science education studies, a choice made to echo the special issue call to “marry up conversations about how knowledge is and should be changing with discussions about what it means to be a scientist and the skills and insights that matter in a digital age” (Billingsley et al., 2023b, p. 1). Although epistemic insights are also discussed in the literature on other STEAM disciplines, such as engineering, our study takes a first step by mapping out epistemic insights into the relationships between science and AI technology as a starting point for theorization and investigation. We envisage that future theoretical and empirical studies will lay out epistemic insights into the relationships between AI and other STEM disciplines, and we hope the framework developed in this review can serve as a foundation for such work on AI-STEM epistemic insights.

6 Conclusion

The epistemic aspects of incorporating AI technologies into science teaching and learning have been largely absent from the science education literature. In this paper, we followed a systematic review procedure to identify literature concerning the interaction between the disciplines of AI and science. In particular, we applied the five FRA categories (aims and values, methods, knowledge, practices, and social–institutional aspects) to distill AI-science epistemic insights and to synthesize a framework from them. This framework could be applied to developing instruments that measure K-12 students’ understanding of the epistemic interaction between the AI and science disciplines, as well as to developing pedagogical frameworks that infuse AI technologies into K-12 science education. Based on this framework, we also proposed a learning trajectory describing the learning outcomes of AI-science epistemic insights at various stages of K-12 education. We anticipate that this theoretical contribution will drive future research, as well as teaching and learning in K-12 education, aimed at promoting students’ AI-science epistemic insights across the FRA categories.

References

Antonenko, P., & Abramowitz, B. (2023). In-service teachers’ (mis)conceptions of artificial intelligence in K-12 science education. Journal of Research on Technology in Education, 55(1), 64–78. https://doi.org/10.1080/15391523.2022.2119450

Aslam, T. M., & Hoyle, D. C. (2022). Translating the machine: Skills that human clinicians must develop in the era of artificial intelligence. Ophthalmology and Therapy, 11(1), 69–80.

Atman Uslu, N., Yavuz, G. Ö., & Koçak Usluel, Y. (2022). A systematic review study on educational robotics and robots. Interactive Learning Environments. https://doi.org/10.1080/10494820.2021.2023890

Ayodele, T. O. (2010). Types of machine learning algorithms. New Advances in Machine Learning, 3, 19–48.

Barak, M., Ginzburg, T., & Erduran, S. (2022). Nature of engineering. Science & Education. https://doi.org/10.1007/s11191-022-00402-7

Bergmann, A., & Zabel, J. (2018). “They implant this chip and control everyone.” ‘Misuse of science’ as a central frame in students’ discourse on neuroscientific research. Challenges in Biology Education Research, 170.

Billingsley, B. (2017). Teaching and learning about epistemic insight. School Science Review.

Billingsley, B., & Fraser, S. (2018). Towards an understanding of epistemic insight: The nature of science in real world contexts and a multidisciplinary arena [Editorial]. Research in Science Education, 48(6), 1107–1113. https://doi.org/10.1007/s11165-018-9776-x

Billingsley, B., & Hardman, M. (2017). Epistemic insight and the power and limitations of science in multidisciplinary arenas. School Science Review, 99(367), 99–367.

Billingsley, B., Taber, K., Riga, F., & Newdick, H. (2012). Secondary school students’ epistemic insight into the relationships between science and religion—A preliminary enquiry. Research in Science Education, 43(4), 1715–1732. https://doi.org/10.1007/s11165-012-9317-y

Billingsley, B., Nassaji, M., Fraser, S., & Lawson, F. (2018). A framework for teaching epistemic insight in schools. Research in Science Education, 48(6), 1115–1131. https://doi.org/10.1007/s11165-018-9788-6

Billingsley, B., Heyes, J. M., Lesworth, T., & Sarzi, M. (2023a). Can a robot be a scientist? Developing students’ epistemic insight through a lesson exploring the role of human creativity in astronomy. Physics Education, 58(1). https://doi.org/10.1088/1361-6552/ac9d19

Billingsley, B., Zeidler, D., & Grzes, M. (2023b). Call for Papers: Science & Education Special Issue: The Future of Knowledge: Conversations about Artificial Intelligence and Epistemic Insight. Science & Education.

Biswas, S. S. (2023). Potential use of Chat GPT in global warming. Annals of Biomedical Engineering, 51(6), 1126–1127.

Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook 1: Cognitive domain (pp. 201–207). New York: McKay.

Brandon, R. N. (1994). Theory and experiment in evolutionary biology. Synthese, 59–73.

Cambridge Dictionary (Ed.) (2023). Cambridge University.

Chan, H. Y., Cheung, K. K. C., & Erduran, S. (2023). Science communication in the media and human mobility during the COVID-19 pandemic: a time series and content analysis. Public Health, 218, 106–113.

Chappell, K., Hetherington, L., Keene, H. R., Wren, H., Alexopoulos, A., Ben-Horin, O., . . . Bogner, F. X. (2019). Dialogue and materiality/embodiment in science|arts creative pedagogy: Their role and manifestation. Thinking Skills and Creativity, 31, 296–322.

Cheon, S., Methiyothin, T., & Ahn, I. (2023). Analysis of COVID-19 vaccine adverse event using language model and unsupervised machine learning. PLoS ONE, 18(2), e0282119.

Cheung, K. K. C., & Tai, K. W. (2023). The use of intercoder reliability in qualitative interview data analysis in science education. Research in Science & Technological Education, 41(3), 1155–1175.

Cheung, K. K. C., Chan, H. Y., & Erduran, S. (2023). Communicating science in the COVID-19 news in the UK during Omicron waves: exploring representations of nature of science with epistemic network analysis. Humanities and Social Sciences Communications, 10(1), 1–14.

Chin, D. B., Dohmen, I. M., & Schwartz, D. L. (2013). Young children can learn scientific reasoning with teachable agents. IEEE Transactions on Learning Technologies, 6(3), 248–257. https://doi.org/10.1109/TLT.2013.24

Creswell, J. W. (2012). Educational research: Planning, conducting, and evaluating quantitative and qualitative research. Pearson Education.

Dai, Y., Lin, Z., Liu, A., Dai, D., & Wang, W. (2024). Effect of an analogy-based approach of artificial intelligence pedagogy in upper primary schools. Journal of Educational Computing Research, 61(8), 159–186.

Demir, K., & Güraksin, G. E. (2021). Determining middle school students’ perceptions of the concept of artificial intelligence: A metaphor analysis. Participatory Educational Research, 9(2), 297–312.

Deveci Topal, A., Dilek Eren, C., & Kolburan Geçer, A. (2021). Chatbot application in a 5th grade science course. Education and Information Technologies, 26(5), 6241–6265. https://doi.org/10.1007/s10639-021-10627-8

Druga, S., Vu, S. T., Likhith, E., & Qiu, T. (2019). Inclusive AI literacy for kids around the world. Paper presented at the Proceedings of FabLearn 2019.

Duschl, R. (2008). Science education in three-part harmony: Balancing conceptual, epistemic, and social learning goals. Review of Research in Education, 32(1), 268–291.

Erduran, S., & Cheung, K. K. C. (2024). A family resemblance approach to nature of STEAM. London Review of Education.

Erduran, S., & Dagher, Z. R. (2014). Reconceptualizing nature of science for science education. In Reconceptualizing the nature of science for science education: Scientific knowledge, practices and other family categories (pp. 1–18). Springer Netherlands.

Erduran, S., & Kaya, E. (2018). Drawing nature of science in pre-service science teacher education: Epistemic insight through visual representations. Research in Science Education, 48(6), 1133–1149. https://doi.org/10.1007/s11165-018-9773-0

Fernández, J. D., & Vico, F. (2013). AI methods in algorithmic composition: A comprehensive survey. Journal of Artificial Intelligence Research, 48, 513–582.

Ferrer, X., van Nuenen, T., Such, J. M., Coté, M., & Criado, N. (2021). Bias and discrimination in AI: A cross-disciplinary perspective. IEEE Technology and Society Magazine, 40(2), 72–80.

Fixico, D. (2013). The American Indian mind in a linear world: American Indian studies and traditional knowledge. Routledge.

Goel, A., & Joyner, D. (2015). Impact of a creativity support tool on student learning about scientific discovery processes. Paper presented at the Proceedings of the Sixth International Conference on Computational Creativity.

Gong, X. Y., Wei, D. R., Gong, X., Xiong, Y., Wang, T., & Mou, F. Z. (2020). Analysis on TCM clinical characteristics and syndromes of 80 patients with novel coronavirus pneumonia. Chin J Inf Tradit Chin Med, 27, 1–6. Retrieved from https://www.scopus.com/inward/record.uri?eid=2-s2.0-85090357026&partnerID=40&md5=32878738076f422d1d4f320f85046eda

Gonzalez, A. J., Hollister, J. R., DeMara, R. F., Leigh, J., Lanman, B., Lee, S.-Y., . . . Wong, J. (2017). AI in informal science education: Bringing Turing back to life to perform the Turing test. International Journal of Artificial Intelligence in Education, 27, 353–384.

Guo, L., & Wang, J. (2020). A framework for the design of plant science education system for China’s botanical gardens with artificial intelligence. Paper presented at the HCI International 2020 – Late Breaking Posters, Cham.

Guraya, S. Y., London, N., & Guraya, S. S. (2014). Ethics in medical research. Journal of Microscopy and Ultrastructure, 2(3), 121–126.

Hagendorff, T., Bossert, L. N., Tse, Y. F., & Singer, P. (2022). Speciesist bias in AI: How AI applications perpetuate discrimination and unfair outcomes against animals. AI and Ethics, 1–18.

Han, X., Hu, F., Xiong, G., Liu, X., Gong, X., Niu, X., . . . Wang, X. (2018, 30 Nov.-2 Dec. 2018). Design of AI + curriculum for primary and secondary schools in Qingdao. Paper presented at the 2018 Chinese Automation Congress (CAC).

Haque, M. U., Dharmadasa, I., Sworna, Z. T., Rajapakse, R. N., & Ahmad, H. (2022). “I think this is the most disruptive technology”: Exploring sentiments of ChatGPT early adopters using Twitter data. arXiv preprint arXiv:2212.05856.

Higgins, D., & Heilman, M. (2014). Managing what we can measure: Quantifying the susceptibility of automated scoring systems to gaming behavior. Educational Measurement: Issues and Practice, 33(3), 36–46.

Hofeditz, L., Clausen, S., Rieß, A., Mirbabaie, M., & Stieglitz, S. (2022). Applying XAI to an AI-based system for candidate management to mitigate bias and discrimination in hiring. Electronic Markets, 1–27.

Hong, K. S., Kim, H. J., & Lee, C. (2007, 6–8 Dec. 2007). Automated grocery ordering systems for smart home. Paper presented at the Future Generation Communication and Networking (FGCN 2007).

How, M.-L., & Hung, W. L. D. (2019). Educing AI-thinking in science, technology, engineering, arts, and mathematics (STEAM) education. Education Sciences, 9(3). https://doi.org/10.3390/educsci9030184

Huang, X., & Qiao, C. (2022). Enhancing computational thinking skills through artificial intelligence education at a STEAM high school. Science & Education, 1–21.

Irzik, G., & Nola, R. (2011). A family resemblance approach to the nature of science for science education. Science & Education, 20, 591–607.

Javadi, S. A., Norval, C., Cloete, R., & Singh, J. (2021). Monitoring AI services for misuse. Paper presented at the Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society.

Kandlhofer, M., Steinbauer, G., Hirschmugl-Gaisch, S., & Huber, P. (2016, 12–15 Oct. 2016). Artificial intelligence and computer science in education: From kindergarten to university. Paper presented at the 2016 IEEE Frontiers in Education Conference (FIE).

Kaya, E., & Erduran, S. (2016). From FRA to RFN, or how the family resemblance approach can be transformed for science curriculum analysis on nature of science. Science & Education, 25, 1115–1133.

Kelly, G. J., & Licona, P. (2018). Epistemic practices and science education. History, philosophy and science teaching: New perspectives, 139–165.

Khishfe, R., & Lederman, N. (2006). Teaching nature of science within a controversial topic: Integrated versus nonintegrated. Journal of Research in Science Teaching: The Official Journal of the National Association for Research in Science Teaching, 43(4), 395–418.

Kim, K., Kwon, K., Ottenbreit-Leftwich, A., Bae, H., & Glazewski, K. (2023). Exploring middle school students’ common naive conceptions of Artificial Intelligence concepts, and the evolution of these ideas. Education and Information Technologies. https://doi.org/10.1007/s10639-023-11600-3

Kim, W. J. (2022). AI-integrated science teaching through facilitating epistemic discourse in the classroom. Asia-Pacific Science Education, 8(1), 9–42. https://doi.org/10.1163/23641177-bja10041

Klemenčič, E., Flogie, A., & Repnik, R. (2022). Science education in Slovenia. In R. Huang, B. Xin, A. Tlili, F. Yang, X. Zhang, L. Zhu, & M. Jemni (Eds.), Science education in countries along the Belt & Road: Future insights and new requirements (pp. 471–485). Singapore: Springer Nature Singapore.

Knox, J. (2020). Artificial intelligence and education in China. Learning, Media and Technology, 45(3), 298–311. https://doi.org/10.1080/17439884.2020.1754236

Kohlberg, L., & Hersh, R. (1977). Moral development: A review of the theory. Theory Into Practice, 16(2), 53–59.

Konnemann, C., Höger, C., Asshoff, R., Hammann, M., & Rieß, W. (2018). A role for epistemic insight in attitude and belief change? Lessons from a cross-curricular course on evolution and creation. Research in Science Education, 48(6), 1187–1204. https://doi.org/10.1007/s11165-018-9783-y