Abstract

This study explores middle schoolers' common naive conceptions of AI and the evolution of these conceptions during an AI summer camp. Data were collected from 14 middle school students (12 boys and 2 girls) through video observations and learning artifacts. The findings revealed five naive conceptions about AI: (1) AI was the same as automation and robotics; (2) AI was a cure-all solution; (3) AI was created to be smart; (4) all data can be used by AI; and (5) AI had nothing to do with ethical considerations. The evolution of students’ conceptions of AI was captured throughout the summer camp. This study contributes to clarifying which naive conceptions of AI are common among young students and to identifying design considerations for K-12 AI curricula that address them effectively.

1 Introduction

Numerous stakeholders have recently emphasized the importance of integrating Artificial Intelligence (AI) education into K-12 contexts as an expansion of Computer Science (CS) education (Chai et al., 2021; Chiu et al., 2021; Touretzky et al., 2019). Early exposure to AI concepts and principles is critical for building young students' core competencies, providing a strong basis for their future careers, and helping them adapt to AI-based environments throughout society (Huang, 2021; Kong et al., 2022; Touretzky & Gardner-McCune, 2022; Yang, 2022). Perhaps most importantly, students who understand AI can participate more fully in society as educated citizens capable of understanding the meaning of AI technologies (Druga et al., 2019), using AI to solve problems (Kong & Abelson, 2022; Ng et al., 2021), and understanding the ethical implications of using AI (Ng et al., 2021; Zhang et al., 2022). To this end, several K-12 AI curricula have been introduced into classrooms using varied technologies such as robotics (Williams et al., 2021), AR/VR (Lai et al., 2021), AI conversational agents (Van Brummelen et al., 2021), tangible computing tools (Sabuncuoglu, 2020), and block-based programming tools like Scratch (Kwon et al., 2021), Machine Learning for Kids (Lane, 2021), and MIT App Inventor (Tang, 2019).

Nevertheless, several barriers to integrating AI curricula in K-12 settings must be addressed. In particular, scholars have suggested that the abstract features of AI make it difficult to teach in K-12 settings (Hayes & Kraemer, 2017; Lacerda Queiroz et al., 2021; Ottenbreit-Leftwich et al., 2022; Wang et al., 2016). To add to the confusion, AI is often used as an umbrella term that includes several sub-components such as Computer Vision (CV), Machine Learning (ML), Natural Language Processing (NLP), and AI ethics (Long & Magerko, 2020; Touretzky et al., 2019). Because these components are abstract concepts, students frequently struggle with the lack of clear examples and of the direct sensory terms associated with concrete concepts. Concrete concepts carry more sensorimotor information, whereas abstract concepts rely more on linguistically conveyed information (Schwanenflugel, 1991). As a result, students frequently struggle to relate abstract concepts to more real and familiar referents through anchoring (Glenberg et al., 2008; Nathan, 2021). It therefore often takes students more time to verify the meaning of abstract concepts and make decisions about them than it does for concrete ones (Borghi et al., 2017; Clark & Paivio, 1991; Schwanenflugel, 1991).

Given these features, teaching AI in K-12 brings additional challenges, as teachers, educators, and even scholars are still grappling with AI learning objectives, standards, and frameworks. In addition, many students arrive with conceptions of AI that have been informed by media and fiction (Cave & Dihal, 2019; Dipaola et al., 2022). Students are also likely to have formed ideas about AI through their daily exposure to AI-based devices such as Alexa and Siri, which could give rise to naive conceptions (Williams et al., 2021; Wong et al., 2020).

Naive conceptions or preconceptions among students are a challenge for K-12 education in all disciplines (e.g., Chiodini et al., 2021; Soeharto et al., 2019). The National Research Council (1997) suggested five types of existing student conceptions that need to be considered when designing and developing effective instruction: non-scientific beliefs, conceptual misunderstandings, preconceived notions, factual misconceptions, and vernacular misconceptions (Davis, 1997; Leaper et al., 2012). These naive conceptions are often persistent, resistant to change, and deeply rooted in some scientific concepts, making it difficult for students to reconceptualize these ideas and arrive at the correct concepts needed for better academic performance (Greiff et al., 2018; Morrison et al., 2019).

All things considered, the abstract features of AI concepts and the naive conceptions mentioned above may make it difficult for students to grasp AI concepts clearly. Therefore, it would be beneficial to explore the naive conceptions of AI among middle school students in order to design and develop more effective instruction that could use these conceptions to build better student understanding of AI (e.g., Lin & Van Brummelen, 2021; Tedre et al., 2021; Vartiainen et al., 2021). There have been few studies on K-12 students’ naive conceptions of AI and how those conceptions might change during the learning process (e.g., Mertala et al., 2022; Ottenbreit-Leftwich et al., 2022) or on teachers’ conceptions of teaching AI (e.g., Sanusi et al., 2022; Yau et al., 2022). This study, in contrast to previous studies, focused on observing students’ utterances and behaviors during the learning intervention. The researchers took the role of participant observers and captured the moments in which students described AI concepts while interacting with teachers and peers. Observing students' verbal and behavioral interactions with others could reveal more information and evidence about the AI preconceptions they held (Creswell & Gutterman, 2019). In addition, since most previous studies have focused on attitudes or non-cognitive learning gains (e.g., Dang & Liu, 2021; Gonzalez et al., 2022), this study sought to explore the naive conceptions of AI in middle school students and how these conceptions were transformed while engaging in AI-related learning activities. The specific research questions addressed in this study were as follows:

- RQ1: What were the common naive conceptions about AI among middle school students?

- RQ2: How did students’ naive conceptions about AI evolve while participating in an AI learning experience?

2 Literature review

2.1 The definition of naive conceptions and impact on students’ learning

Naive conceptions or preconceptions have been described from many perspectives: as naive beliefs (McCloskey et al., 1980), incorrect ideas (Fisher, 1985), preconceptions (Hashweh, 1988), models of reality among individuals (Champagne et al., 1983), alternative frameworks (Driver & Easley, 1978), and children's science (Gilbert et al., 1982). Most of these terms, however, refer to distorted ideas held by students that often conflict with consensus-based scientific concepts (Clement, 1993; Smith et al., 1994). Because naive conceptions are among the most salient factors in students’ performance during the learning process, many researchers have tried to categorize them and identify their causes with the intent of modifying them through learning interventions (e.g., Gurel et al., 2015; National Research Council, 1997; Treagust, 1988).

To address these challenges, the National Research Council (1997) identified five types of preconceptions in science education: (1) non-scientific beliefs, (2) preconceived notions, (3) conceptual misunderstandings, (4) vernacular misconceptions, and (5) factual misconceptions. Non-scientific beliefs are those that students acquire from non-scientific sources such as mythical or religious teachings. Preconceived notions are the most common type and derive from students' everyday experiences and the environments around them; because students lack a complete understanding of the scientific concepts, they may be confused about the phenomenon. Conceptual misunderstandings arise when students learn scientific information in a way that does not challenge their naive, non-scientific notions, which often causes accommodation, or a change in cognitive structures, due to the inconsistency between their mental structures and outside information. Incorrect mental models are thus constructed on the basis of those misunderstandings. Vernacular misconceptions derive from terms whose usage differs between daily life and scientific contexts. For example, students often have difficulty understanding the concepts of weight and mass because the terms overlap in everyday use. Factual misconceptions are notions buried in students’ belief systems that are formed at a young age and remain unchallenged until adulthood (Impey et al., 2012; Karpudewan et al., 2017; Samsudin et al., 2021).

Similar to the science education context, researchers have found that students exhibited numerous naive conceptions about CS and programming. Students often have naive conceptions about syntactic, conceptual, and strategic knowledge, due to the lack of clear understanding of strategies, programming environments, teachers’ instruction based on their knowledge, students’ erroneous mental models, and being unaccustomed to syntax and natural language (Kwon, 2017; Qian & Lehman, 2017).

In general, educators and instructional designers attempt to identify students’ naive conceptions, their types, and the causes that make them prevalent, in order to design more effective instruction. Naive conceptions can be altered through developmentally appropriate instruction. Scholars have already started to identify K-12 students’ naive conceptions of AI in this effort. These studies have found that students often describe AI as anthropomorphic technology and as being located everywhere (e.g., Kreinsen & Schulz, 2021; Ottenbreit-Leftwich et al., 2022; Mertala et al., 2022). However, the types and causes of naive conceptions of AI among middle school students are rarely identified. Therefore, it would be beneficial to explore and categorize students’ naive conceptions of AI, in order to design more effective AI instruction.

2.2 Instructional approaches to address naive conceptions through instruction

Several studies have employed varied instructional approaches to address students’ naive conceptions and promote their academic performance (e.g., Gurel et al., 2015; Kwon, 2017; Qian & Lehman, 2017). Misunderstood concepts are critical obstacles that prevent students from learning new ones. When students hold naive conceptions, they are hindered from developing correct scientific models, which impedes their subsequent learning (McDermott & Shaffer, 1992; National Research Council, 1997).

Identifying students’ common naive conceptions, providing students with forums and examples to confront those conceptions, and encouraging them to reconstruct their knowledge on the basis of correct scientific models are efficient ways to address naive conceptions in science education (National Research Council, 1997). Introductory laboratory practices or small group discussions with forums can also identify students’ naive conceptions effectively without causing embarrassment or frustration (Hake, 1992). Formative assessments and diagnostic tests are among the most widely used methods for identifying naive conceptions comprehensively (e.g., Gurel et al., 2015; Kaczmarczyk et al., 2010). These methods allow teachers to approach the weaknesses or challenges behind students’ naive conceptions systematically so that they can make evidence-based decisions when designing learning interventions.

Teachers can ask questions that challenge students’ naive conceptions, provide evidence to support students’ explanations of the phenomena, and revisit those concepts later to consolidate their understanding. Along with verbal and text descriptions, students' sketches of the phenomena can reveal their naive conceptions and make it much easier for teachers to help transform them, because some naive conceptions are too vague for students to express either verbally or in text (Benson et al., 1993; National Research Council, 1997). Drawings are also intuitively understandable for both teachers and students, which helps teachers challenge students’ naive conceptions based on evidence.

Reconstructing students’ knowledge within a correct framework is the final step in overcoming their naive conceptions. Drawing concept maps can be a useful technique for checking whether the new framework has settled well. Concept maps can demonstrate the relationships between concepts and enhance the comprehension of correct concepts through collaborative group work (Basili & Sanford, 1991). This can lead to discussion that deepens students' understanding of their conceptual framework and engages meta-cognition about their naive conceptions. Scientific inquiry gives students the opportunity to think about their own thinking in terms of correct conceptions and to trace how their naive conceptions change. Teachers can also design inquiry-based learning in which students observe and reason about phenomena to construct the correct conceptual framework (National Research Council, 2004).

In general, previous studies have explored students’ naive conceptions about AI and designed instruction accordingly (e.g., Ottenbreit-Leftwich et al., 2022). However, it is also critical to examine how age-appropriate instruction helps students' conceptions evolve at an early stage. Therefore, this study examines students’ naive conceptions of AI and how those conceptions evolve during AI instruction.

3 Methods

3.1 Research design

This study was designed as a case study to develop an in-depth understanding by analyzing a single case of an AI summer camp designed for 6th-8th graders (Creswell & Poth, 2016; Creswell & Gutterman, 2019). The main purpose of the case study was to identify the naive conceptions about AI and the evolution of those concepts by observing students during an AI summer camp. Thus, the case study provided a bounded context to examine students’ naive conceptions by scrutinizing the learning moments, developing case descriptions, and identifying themes based on contextual information.

3.2 Participants

The participants were recruited from public middle schools in the midwestern United States. The summer camp was promoted through social media, and a brief introduction of the program and the target group of participants was provided. A link to the registration site was provided so that those who were interested could register for the program. Consequently, a total of 14 students (twelve boys and two girls) volunteered to participate in the week-long summer camp to learn about AI concepts and principles. Most of them were interested in CS or STEM education. All had prior experience participating in other summer camps to learn programming with Scratch, a visual block-based programming language (https://scratch.mit.edu/), and/or participating in projects in science or STEM contexts.

3.3 Context

The AI summer camp curriculum, “AI4ME,” was implemented across five days and covered core AI concepts such as Computer Vision (CV), Machine Learning (ML), Natural Language Processing (NLP), and AI ethics. Most parts of the curriculum were designed to include hands-on activities with both unplugged and plugged elements. The unplugged activities were designed to promote student engagement and a conceptual understanding of AI without using computing devices. The plugged activities used AI-based tools like Machine Learning for Kids (https://machinelearningforkids.co.uk/) and Teachable Machine (https://teachablemachine.withgoogle.com/) to help students understand the essence of AI through hands-on activities. On the last day, students formed teams to complete a final project: designing an in-school application that integrated AI concepts and principles to solve problems in their schools. The teams were formed based on students' interests in specific proposed problems, and each team created a technical proposal for an AI-based application to solve its problem. The teams periodically presented their proposals to the other teams and teachers to gather feedback and finalize their plans.

3.4 Data collection and analysis

The data were collected from two sources: video observations and learning artifacts. The video observations were recordings of the summer camp experience, resulting in over 40 h of video data for each student (n = 14). The students' learning artifacts were also collected, such as AI-based artifacts, worksheets, and sticky notes for the AI-based in-school application. These data could reveal which naive conceptions of AI were prevalent among the middle school students and how those conceptions changed during the learning process.

To analyze the videos and learning artifacts, the researchers employed inductive thematic analysis (Braun & Clarke, 2006) with emergent thematic coding to organize coded data according to similarities in themes or concepts (Saldaña, 2016). The main purpose of the analysis was to identify students' naive conceptions of AI and their transformation during the summer camp. Thus, we used inductive thematic analysis to derive themes from the learning moments and experiences of students as they learned about AI concepts. The analysis followed five main procedures: (a) observing the data, (b) note-taking, (c) generating initial codes, (d) searching for themes, and (e) determining the themes. The process is described below.

First, two researchers in the team reviewed the videos and learning artifacts, such as worksheets and ML4Kids projects, to find evidence of students’ naive conceptions, focusing on students’ utterances and writing down the results. Second, the researchers jotted down initial ideas or descriptions to capture important scenes from the videos and learning artifacts. Third, the researchers listed potential codes based on the annotated data from the videos and learning artifacts. In this step, they used open coding to derive initial concepts from the data and shared them through Google Spreadsheets. Two researchers in the team then reviewed those codes and clarified their meaning with example quotes drawn from the students’ utterances and worksheets. After that, to reach coding saturation, axial coding was conducted to draw connections between codes and refine concepts (Braun & Clarke, 2006; Saldaña, 2016; Williams & Moser, 2019). Fourth, the researchers listed potential themes and grouped the codes that aligned with each theme based on the collated data. Two researchers in the team reviewed the potential themes and clarified their meaning through a process similar to that used in the initial coding. Selective coding was then employed to revise redundant codes and themes and maintain the consistency of the thematic structure (Braun & Clarke, 2006; Saldaña, 2016; Williams & Moser, 2019). When a meaning was not clear enough, three researchers discussed it until they reached a consensus (Harry et al., 2005). Lastly, the research team determined the themes based on congruence with the codes and produced a tentative theme report including the name of each theme, its codes, descriptions, and example quotes (Creswell & Gutterman, 2019; Saldaña, 2016).

To ensure the validity, reliability, and trustworthiness of the emergent codes and themes, several techniques were employed. For validity, the codes and themes were reviewed by two faculty members who were experts in designing and implementing AI education and CS education in K-12 contexts. We confirmed the reliability of the analysis by checking the second coders’ agreement with the initial data analysis and revising codes and themes accordingly. To improve trustworthiness, the research team conducted peer debriefing and brief coding sessions. After reviewing the collated data, codes, and themes, some of the codes and themes were modified based on the discussion (Lincoln & Guba, 1985; Saldaña, 2016). Additionally, intensive long-term involvement and the gathering of rich data supported validity and trustworthiness (Maxwell, 2013). For instance, the summer camp ran for five days and more than 40 h, which constituted intensive long-term involvement. That involvement was captured in videos and learning artifacts that were diverse and comprehensive enough to produce a full understanding of students' naive conceptions and their changes.
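The agreement check between coders can be illustrated with a small calculation. The sketch below computes Cohen's kappa for two coders who each assigned one code per excerpt. This is an assumption for illustration only: the study does not state which agreement statistic, if any, was calculated, and the code labels and sample data are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders (one label per item)."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected agreement if each coder assigned codes independently
    # at their own observed rates.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for eight video excerpts (not data from the study).
first_coder = ["automation", "cure-all", "smart", "data",
               "ethics", "automation", "data", "smart"]
second_coder = ["automation", "cure-all", "smart", "data",
                "ethics", "cure-all", "data", "smart"]

kappa = cohens_kappa(first_coder, second_coder)
print(f"kappa = {kappa:.3f}")
```

In this hypothetical sample the coders disagree on one of eight excerpts. Kappa is lower than the raw agreement of 7/8 because it discounts the agreement expected by chance.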

4 Findings

4.1 In-depth understanding of students’ naive conceptions of AI

Based on the analysis of the videos and learning artifacts, the researchers identified five main themes that revealed the middle school students’ common naive conceptions of AI: (1) AI was the same as automation and robotics, (2) AI was a cure-all solution, (3) AI was created to be smart, (4) all data can be used by AI, and (5) AI had nothing to do with ethical considerations. The students’ naive conceptions about AI were identified on the first day of the summer camp.

4.1.1 Theme 1: AI was the same as automation and robotics

Most students conflated AI and automation. They often indicated that something was AI because it was automated or involved automated processes with minimal or no human intervention. Automation, however, only follows a machine-driven process programmed by humans. It does not produce its own answers, such as the classifications or predictions that ML generates. AI, on the other hand, includes ML, which learns from data, identifies patterns in the dataset, and recommends the most efficient decisions based on the data.
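The distinction can be made concrete with a minimal sketch that contrasts a fixed, human-written rule (automation) with a model whose behavior is derived from labeled examples (ML). The toaster scenario, the feature names, and the nearest-centroid method are illustrative assumptions and were not part of the camp curriculum.

```python
from collections import Counter


def automated_toaster(seconds_elapsed, timer_seconds=120):
    """Automation: a fixed rule written by a programmer; nothing is learned."""
    return "pop" if seconds_elapsed >= timer_seconds else "keep heating"


def train_centroids(examples):
    """ML: derive one average point (centroid) per label from labeled data."""
    sums, counts = {}, Counter()
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Classify a new item by the closest learned centroid."""
    def squared_distance(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical training data: (browning level 0-10, smoke level 0-10) -> label
training = [((2, 0), "underdone"), ((3, 1), "underdone"),
            ((6, 1), "done"), ((7, 2), "done"),
            ((9, 7), "burnt"), ((10, 8), "burnt")]
model = train_centroids(training)

print(automated_toaster(130))   # the rule never changes, whatever the toast looks like
print(predict(model, (6, 2)))   # the answer depends on what the model learned from data
```

Changing the training examples changes what `predict` returns, whereas `automated_toaster` behaves the same until a programmer rewrites its rule.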

Students perceived machines with automation functions such as RFID, IoT, or industrial robots as AI. For instance, the first activity during the summer camp was a game called ‘AI or Not AI?’ Students were asked to consider whether everyday objects such as toasters or washing machines should be considered AI or not AI. Students were shown an everyday object and then asked to position themselves from 0 (Not AI) to 100 (AI). Once situated, teachers would ask students to share their reasoning for their assessment.

Throughout the activity, a number of students argued that many instances of automation were examples of AI, such as electronic toll collection on the highway using RFID technology, washing machines controlled by personal mobile phones using IoT, and industrial robots in factories. The following excerpt demonstrates how the students struggled to distinguish between automation and AI (see Table 1).

Similarly, most students treated any kind of robotics as the same concept as AI. However, most robots currently operate on the basis of automation, not AI. These naive conceptions were likely informed by students' everyday experiences and the environments around them. For example, when defining AI, Student 1 wrote that “AI is a robot or app that can do tasks and learn.” Student 7 described AI as “Robots used for different things, artificial intelligence.”

This may be due to the students’ exposure to images of robots, which originally related more to mechanisms of automation but are often presented as AI-based systems on YouTube or in movies (e.g., The Mitchells vs. the Machines, Wallace and Gromit: The Wrong Trousers, Real Steel). It could also be a conceptual misunderstanding, in that some of the students had constructed a defective mental model (Davis, 1997; Taylor & Kowalski, 2014) in which AI is equal to robots, and tended to describe AI with attributes of personality or human form (Emmert-Streib et al., 2020; Kreinsen & Schulz, 2021; Mertala et al., 2022; Ottenbreit-Leftwich et al., 2022; Szczuka et al., 2022). The following example showcases the students’ preconception that AI is equal to a robot. As one of their projects, students identified problems in their daily lives and tried to solve them by integrating AI concepts. One of the students thought that AI could be a feasible solution to his father’s problem. His father was a woodchopper who had developed back pain from the heavy workload. The student therefore planned to make an ‘AI woodchopper’ that could help lower his father’s workload. He simply thought of AI as a robot that could ‘magically’ take over the difficult part of the work, and he did not elaborate a solution using components of AI such as CV for detecting wood or ML for learning the location of wood from data (see Table 2).

4.1.2 Theme 2: AI was a cure-all solution

The majority of students thought of AI as a ‘cure-all’ solution that could create optimal answers to every problem. Regardless of the context, they often described AI as a ‘one-size-fits-all’ feature that can solve even unfamiliar problems ‘magically’ through its intelligence. Thus, students devised AI solutions to every problem in their daily lives, regardless of context, while writing a technical proposal at the beginning of the summer camp. This could be explained by students confusing AI with ‘Strong AI’ or ‘Conscious AI’ (Kaplan & Haenlein, 2019): they usually perceived AI as a machine that operates like human intelligence and can solve every aspect of a problem (Ali et al., 2021; DiPaola et al., 2022; Greenwald et al., 2021; Marx et al., 2022). It is therefore a vernacular misconception, deriving from the use of terms with different meanings in the contexts of daily life and academia (Davis, 1997; Samsudin et al., 2021).

In practice, AI technologies currently implemented in the field are closer to ‘Weak AI’ or ‘Narrow AI’, which refers to systems that can only operate within a range pre-defined by humans. Most AI has a specific domain and definite limitations, functioning only in specific contexts such as answering questions with NLP or recognizing people’s faces using CV. Despite these limitations, students did not think about the sub-components of AI such as CV, ML, or NLP when planning optimized solutions that consider the context of the problem.

For instance, one team created a technical proposal for an AI tutoring app that helps students with questions while they do homework. In the middle of the presentation, one of the students was asked by his peers how the team would deal with receiving too many questions from users of their AI tutoring app (see Table 3).

During the presentation, they devised a solution based on the AI concepts they had learned. However, they thought that AI could easily solve the problem they would face by using AI-powered spam robots, without thinking about the features of AI components. This indicated that they did not think about which components of AI could effectively tackle the problem and perceived AI as a cure-all solution. Therefore, a poor understanding of the sub-concepts of AI led students to perceive AI as ‘Strong AI’ or ‘Conscious AI’ rather than ‘Weak AI’ or ‘Narrow AI’, that is, as a cure-all solution that need not consider the context or the components of AI (Kaplan & Haenlein, 2019).

4.1.3 Theme 3: AI was created to be smart

Some of the students believed that AI is born smart, precise, and efficient, so it does not need to be trained with data. Students did not realize, or ignored, the importance of the proper amount and quality of data. They misunderstood AI as being already programmed to have intelligence rather than learning from data. To illustrate, the students wrote their definitions of AI at the beginning of the summer camp as follows: “AI is something that has an intelligence like a human”, “AI is a computer program that can think by itself.”, and “AI is the ability of a computer to think by itself with no human interaction at all.” Similarly, some of the students described the strengths of AI as “doesn’t need to learn most of the time.”, and “Fast and efficient”.

All of these learning artifacts written by students rest on the idea that AI is flawless and complete without human intervention or input from data. However, AI in its early stage is more like a newborn baby that needs to be trained step-by-step with the proper amount and quality of data (Pedro et al., 2019; Zawacki-Richter et al., 2019). For AI to reach intended goals such as predicting future results or classifying data into categories, learning or training from data is necessary, which students often fail to recognize. Additionally, AI is not always fast, effective, or precise in every problem context. The features of the task matter: if a task is numerical and repetitive, AI outperforms humans. On the other hand, tasks involving emotions, subtle nuance, or human interaction are believed to be performed much better by humans than by AI.

These thoughts could be explained as preconceived notions, in which students lack a clear understanding of the phenomenon (Davis, 1997; Soeharto et al., 2019) because of their exposure to AI through their daily experiences and media (Cave & Dihal, 2019; Dipaola et al., 2022; Kreinsen & Schulz, 2021; Mertala et al., 2022). For instance, AI depicted in movies often suggests optimal solutions to its users or masters when asked about the problems they face, such as Jarvis in the Iron Man movie franchise (Dipaola et al., 2022). The movies do not describe the necessity of data to train the AI system. Similarly, when students use YouTube or Netflix, the systems recommend videos or TV shows based on their interests. However, they are not aware of the mechanism of the AI recommender system: whenever they choose videos or TV shows, those data are sent to the system so that it can learn about users’ interests. This could also be a conceptual misunderstanding, in which students have created a flawed mental model of AI as knowing everything without any learning process. In general, exposure to AI with little description of data training, together with the construction of a flawed mental model, could mislead students into perceiving AI as flawless and precise.

4.1.4 Theme 4: All data can be used by AI

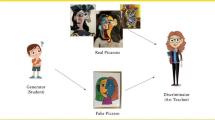

Related to Theme 3, most of the students were not aware of the importance of data quality. They often gave too little consideration to the quality of data and assumed that simply providing a lot of data to AI would produce the results they expected. For example, in the “Tic-tac-toe” activity, students formed teams and built their own tic-tac-toe machines with Machine Learning for Kids. They trained their teams’ machines through ML by playing the tic-tac-toe game to provide enough data for the AI. After training, the teams' machines competed against each other in a tournament (see Fig. 1). However, some of the teams just clicked the tic-tac-toe screen randomly to provide data to the machine, without considering strategic moves or how to win the game. They focused only on providing a certain amount of data and did not consider its quality. These patterns were also verified in students’ tic-tac-toe AI artifacts by analyzing the datasets in Machine Learning for Kids. As a result, a team that considered both the quality and quantity of data won the tournament, while teams that repeatedly played the game without a winning strategy did not obtain the results they expected.

Similar patterns occurred while students engaged in the “Census at School” activity. The activity mainly involves gathering data from colleagues, facilitators, and teachers with pen and paper, providing those data to AI for machine learning, and having the AI predict students’ future careers based on the survey results using Machine Learning for Kids. Some of the students made up survey answers randomly and put them into the AI. Others copied and pasted survey data to train the AI. These behaviors were consistently identified when researchers analyzed the students’ datasets in Machine Learning for Kids. Consequently, the AI models trained on poor-quality datasets delivered unintended results: they could not predict the students’ careers precisely based on their answers.
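As an illustration only, the two dataset problems described above (copied rows and made-up answers) can be checked with a few lines of Python. This is a minimal sketch, not the procedure the researchers used and not part of Machine Learning for Kids; the row layout (two survey answers followed by a career label), the example rows, and the function names `duplicate_fraction` and `conflicting_inputs` are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical survey rows: (likes_math, likes_art, career_label).
careful = [
    ("yes", "no", "engineer"),
    ("no", "yes", "designer"),
    ("yes", "yes", "architect"),
    ("no", "no", "writer"),
]
# One row pasted four times, as in the copy-and-paste case.
copied = [("yes", "no", "engineer")] * 4
# Randomly made-up answers: identical inputs carry different labels.
made_up = [
    ("yes", "no", "engineer"),
    ("yes", "no", "designer"),
    ("no", "yes", "designer"),
    ("no", "yes", "writer"),
]


def duplicate_fraction(rows):
    """Return the share of rows that exactly repeat an earlier row."""
    if not rows:
        return 0.0
    counts = Counter(rows)
    repeats = sum(count - 1 for count in counts.values())
    return repeats / len(rows)


def conflicting_inputs(rows):
    """Return the answer combinations that appear with more than one label."""
    labels_by_input = defaultdict(set)
    for *answers, label in rows:
        labels_by_input[tuple(answers)].add(label)
    return {inp for inp, labels in labels_by_input.items() if len(labels) > 1}


if __name__ == "__main__":
    for name, rows in [("careful", careful), ("copied", copied), ("made_up", made_up)]:
        print(name, duplicate_fraction(rows), sorted(conflicting_inputs(rows)))
```

A high duplicate fraction means the model sees little new information, and conflicting inputs give a supervised learner no consistent pattern to learn, which is one plausible reason such datasets yield poor predictions.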

This could be categorized as a preconceived notion (Davis, 1997; Karpudewan et al., 2017), since students were new to data literacy and to judging the validity of data as well as its quantity (Marques et al., 2020; Marx et al., 2022; Nagarajan et al., 2020; Szczuka et al., 2022; Vartiainen et al., 2021). Most quantitative data presented in the news or media are often accepted by most people without doubt. Likewise, students merely focused on how many answers were in the survey data instead of considering their validity or reliability, which are critical to data quality when viewed through the lens of “critical data literacy” or a “critical approach to data” (Raffaghelli & Stewart, 2020; Sanders, 2020). In addition, this could also become a factual misconception (Davis, 1997; Soeharto et al., 2019), because such beliefs sometimes remain unchallenged into adulthood: a number of people continue to rely on quantitative data blindly without thinking about its quality, for example in data-driven decision-making for accountability (Kippers et al., 2018; Mandinach & Schildkamp, 2021).

4.1.5 Theme5: AI had nothing to do with ethical considerations

Considerable evidence was observed during the learning interventions that a number of students were not familiar with the ethical aspects of AI. They often overlooked or forgot about ethical considerations while using AI. Typically, students linked data with money; some of them repeatedly mentioned selling their team’s collected data for profit, which raises privacy issues. The following excerpt illustrates the students’ lack of attention to ethical considerations with AI (see Table 4).

Relatedly, some students perceived AI as impartial and fair. During an activity in which students shared their thoughts about AI and described its strengths and weaknesses on the whiteboard, some of them described how AI could make decisions without any bias. Students described the features of AI as follows: “The data process is much faster than a human and there are almost no errors in it”, “AI doesn’t have feelings”, and “Emotionless is good because it would be able to save someone without feeling bad about others died in surgery context”. These comments suggested that most students believed in the impartiality and fairness of AI. They believed that, because AI is emotionless, it could make decisions without any prejudice or bias and rarely makes errors compared to humans.

The category of preconceived notions could explain this. Students frequently conflated a partial understanding of the scientific concepts with a clear comprehension of the phenomena (Davis, 1997; Soeharto et al., 2019). Since AI ethics has only recently been emphasized in educational contexts, most students are not familiar with ethical considerations like bias, privacy, transparency, and fairness (Borenstein & Howard, 2021; Hagendorff, 2020) when creating or using AI technologies. Therefore, students gave little thought to the privacy issues involved in collecting and using data from individuals. It is crucial to include ethical considerations because AI can violate privacy or introduce bias: AI is a socio-technical system (STS) involving complicated interactions between humans, machines, and environments (Badham et al., 2000; Coeckelbergh, 2020; Gunkel, 2012; Zhang et al., 2022). In this regard, AI is strongly influenced by the intentions of its stakeholders (Holmes et al., 2020). AI has the possibility to be biased, and users and programmers should be aware that AI does not always make impartial and fair decisions. Additionally, it could be a factual misconception (Davis, 1997; Impey et al., 2012), since the common belief that machines make more impartial decisions than humans has remained unchallenged (Ali et al., 2021; Szczuka et al., 2022; Zhang et al., 2022).

4.2 Transformation of naive conceptions toward AI

In general, five major naive conceptions and myths about AI were revealed through the analysis. The types of those naive conceptions and their descriptions were explicated using the framework suggested by the National Research Council (1997). Table 5 shows detailed information about the revealed naive conceptions of middle school students toward AI.

Through the analysis of student learning activities and outcomes, the researchers observed the transformation of students’ naive conceptions throughout the summer camp as follows. First, students realized that not every machine with an automated function is AI. While explaining whether everyday objects were AI-based technologies during the “AI or Not AI” activity, students started to recognize the importance of ML in distinguishing between automation and AI. Unlike automation, AI could think and make decisions on its own after training with a proper amount of data. Students distinguished mere automation or robotics from AI based on ML, treating the data used to train AI as an essential part. In the course of the intervention, teachers and facilitators asked questions to challenge students’ naive conceptions and had students show evidence of their thinking by requiring them to explain the phenomena. Similar strategies were used while learning about NLP. Teachers provided students with examples and non-examples of NLP in daily life to check their conceptual understanding of NLP and to identify and address their naive conceptions. The following excerpt shows that one student explained AI as “thinking by itself” rather than as operating automatically without human intervention (See Table 6).

Second, the naive conception that AI could render “cure-all”, optimal solutions changed. Since confusing AI with Strong AI is one of the main causes of this naive conception, students began to realize that current AI is more like Weak AI. Given the limitations of Weak AI, students understood that they needed to analyze the problem first and understand the functions of AI’s subcomponents in order to plan optimal solutions. As a result, students stopped thinking that AI could magically solve a problem or devise solutions regardless of the problem context. During the intervention, teachers and facilitators provided timely feedback when students created technical proposals that used AI technologies to design in-school applications. They kept moving among the teams and reminded them of the lessons from lectures and hands-on activities, such as the quality of the dataset, types of ML, and the varied sensors in CV. Thus, while planning the technical proposal for an in-school AI-based application, students working in teams consolidated their understanding of the meaning and functions of AI’s sub-components. For instance, one of the teams devised an AI mouse trap. They elaborated the artifact using sensors for image and sound recognition from CV to capture rats’ appearance and sounds. They also employed supervised learning from ML to train the machine to distinguish ‘rat’ from ‘no rat’ to avoid errors (See Fig. 2).

Third, they changed their naive conception that AI is created to be smart into an understanding that AI requires a comprehensive dataset for training. Along with that, they changed their preconception that all data can be used by AI as valid. While learning about ML concepts, students experienced several hands-on practices emphasizing training with data, such as fish classification with Teachable Machine, Census at School, and training the Tic-tac-toe machine with Machine Learning for Kids. By doing so, they realized that AI requires training with a good amount of quality data. Most of them experienced firsthand that data quality matters: when someone made up data, or copied and pasted data from others, the validity of the data suffered and produced poor results, such as losing the game or AI-based artifacts rendering unexpected results that did not meet the needs or goals of their team. To illustrate, by competing with other teams’ Tic-tac-toe machines, they understood that the more accurate the data given to the AI, the better the results and the smarter the AI becomes. The transformation of this preconception was observed through the “Writing my definition of AI” activity. One of the students changed his definition of AI to “AI when machines learn from trial and error, labels from humans, or finding similarities in data.” Similarly, some of the students described the weaknesses of AI as “They can’t build or learn by themselves.”, and “You need to have a good quality of data.” These showed the transformation of their naive conceptions into the understanding that AI needs ML with a dataset of sufficient quantity and quality to be smart.

Fourth, students who thought AI had nothing to do with ethical considerations changed their view and started to consider the sub-components of AI ethics during their learning engagement. Through the emphasis on the ethical issues of using AI during the lectures and hands-on activities, students became well aware that AI could deliver distorted results due to a partial or biased dataset. Thus, they started to consider components of AI ethics like privacy, transparency, safety, and fairness in their learning activities. Teachers and facilitators kept mentioning ethical considerations and showed them representative examples of ethical issues about AI. These strategies worked so well on the middle schoolers that AI ethics became part of their cognitive structures, as can be observed in their comments and learning artifacts. In particular, the aforementioned naive conceptions were strongly addressed when teams presented their technical proposals for in-school applications. Students in each team were frequently asked to respond to possible problems, such as “how to deal with the data collected by the users?”, “what if the users do not want to agree on their offering personal information to the developers?”, and “how to address the problems caused by biased data input from users?”. During the presentations, they also included a protection plan for user data and promised to use that data only for good purposes and within legal boundaries. Similarly, while students designed an AI-based application for recommending club activities, one of the teams described a process of training an AI model with supervised learning and showed awareness that wrong data could cause biased or unfair recommendations for users (See Fig. 3). Additionally, most of the students changed their descriptions of the features of AI to “Bias can affect the outcome of the program”, “Bias can affect machines”, and “There is a chance that there is an error in a decision”.

5 Discussion and implications

Early exposure to AI concepts is largely beneficial for students, both for understanding the mechanisms of AI in their daily lives (that is, AI literacy) and for preparing for future AI-related careers. Nonetheless, naive conceptions of AI in K-12 contexts need to be identified since they hinder young students’ clear understanding of AI concepts. Thus, it is worth exploring these naive conceptions and myths and tackling them through a comprehensively designed AI curriculum. In this study, the major research findings centered on what naive conceptions middle school students have toward AI and how those naive conceptions were transformed through the learning interventions.

The contribution of this study is twofold. First, it comprehensively revealed middle school students’ naive conceptions of AI concepts. By analyzing students’ actual interactions and the learning artifacts they created during the summer camp, the researchers could identify students’ naive conceptions of AI and track the transformation of their cognitive structures based on their performance. Since teaching and learning AI concepts has only recently been emphasized, several difficulties remain to be addressed, particularly for young students who struggle to grasp those concepts. Nonetheless, few studies have sought to identify students’ naive conceptions of AI, which are critical causes of these difficulties and need to be identified first. Therefore, the findings will advance our knowledge about middle schoolers’ common naive conceptions of AI so that teachers and educators can design their interventions on empirical foundations, particularly in K-12 settings. Some of the students’ naive conceptions were in line with previous studies, such as perceiving AI as robotics or AI as created to be smart (e.g., Kreinsen & Schulz, 2021; Mertala et al., 2022; Ottenbreit-Leftwich et al., 2022; Szczuka et al., 2022; Zhang et al., 2022). The approach was also consistent with previous studies emphasizing that naive conceptions should be identified first and then tackled by addressing their main causes. This could encourage students to reconstruct their knowledge structures from unscientific concepts to scientific ones (e.g., Gurel et al., 2015; Kaczmarczyk et al., 2010; Kwon, 2017; National Research Council, 1997; Qian & Lehman, 2017).

Second, the findings will help in investigating design considerations for AI curricula that focus on changing students’ naive conceptions. Teaching and learning strategies have been suggested to address naive conceptions in several disciplines (Benson et al., 1993; Ginat et al., 2013; National Research Council, 1997; Porter et al., 2013), and this study showed that those strategies could be extended to teaching and learning AI concepts for young students. Constantly asking questions to challenge students’ naive conceptions, providing collaborative hands-on experiences in creating learning artifacts, having students explain why they used particular AI concepts, and having them revise their work based on feedback from others worked well, so that their naive conceptions gradually changed into more scientific concepts. This provides a good example of teaching strategies for teachers and educators who design and implement AI curricula in K-12 classrooms. It is consistent with findings that students’ naive conceptions can be modified by learning interventions designed around age-appropriate hands-on practices, discussion, and student participation (e.g., Basili & Sanford, 1991; Hake, 1992; McCauley et al., 2008; Simon et al., 2010; Teague & Lister, 2014).

6 Limitations and future studies

Throughout the study, we identified five categories of AI preconceptions that middle school students had and the evolution of those conceptions during the summer camp. However, we used limited data from 14 middle school students. Because the limited number of participants captured only a small part of the naive conceptions about AI among middle schoolers, it would be beneficial to expand the contexts from early childhood to higher education to derive more meaningful and comprehensive findings. Moreover, because of the imbalanced gender ratio of participants, the results could not capture the in-depth group dynamics of collaboration among students during the learning engagement. With more female students participating, future studies could explore gender differences in the process of learning AI concepts as well as in naive conceptions. Furthermore, future studies could employ more diverse data collection methods, including not only videos and learning artifacts from students but also quantitative data like surveys or knowledge tests, to identify more generalizable findings.

Data availability statement

The datasets generated and/or analyzed during the current study are not publicly available because the data, such as videos and photos, contain teachers’ and students’ personally identifiable information that could compromise research participant privacy and consent, but they are available from the corresponding author on reasonable request.

References

Ali, S., DiPaola, D., Lee, I., Sindato, V., Kim, G., Blumofe, R., & Breazeal, C. (2021). Children as creators, thinkers, and citizens in an AI-driven future. Computers and Education: Artificial Intelligence, 2, 100040. https://doi.org/10.1016/j.caeai.2021.100040

Badham, R., Clegg, C., & Wall, T. (2000). Socio-technical theory. John Wiley.

Basili, P. A., & Sanford, J. P. (1991). Conceptual change strategies and cooperative group work in chemistry. Journal of Research in Science Teaching, 28(4), 293–304. https://doi.org/10.1002/tea.3660280403

Benson, D. L., Wittrock, M. C., & Baur, M. E. (1993). Students’ preconceptions of the nature of gases. Journal of Research in Science Teaching, 30(6), 587–597. https://doi.org/10.1002/tea.3660300607

Borenstein, J., & Howard, A. (2021). Emerging challenges in AI and the need for AI ethics education. AI and Ethics, 1, 61–65. https://doi.org/10.1007/s43681-020-00002-7

Borghi, A. M., Binkofski, F., Castelfranchi, C., Cimatti, F., Scorolli, C., & Tummolini, L. (2017). The challenge of abstract concepts. Psychological Bulletin, 143(3), 263–292. https://doi.org/10.1037/bul0000089

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706QP063OA

Van Brummelen, J., Heng, T., & Tabunshchyk, V. (2021). Teaching tech to talk: K-12 conversational artificial intelligence literacy curriculum and development tools. In M. Neumann, P. Virtue & M. Guerzhoy (Eds.), Proceedings of 2021 AAAI Symposium on Educational Advances in Artificial Intelligence. AAAI. https://doi.org/10.48550/arXiv.2009.05653

Cave, S., & Dihal, K. (2019). Hopes and fears for intelligent machines in fiction and reality. Nature Machine Intelligence, 1(2), 74–78. https://doi.org/10.1038/s42256-019-0020-9

Chai, C. S., Lin, P. Y., Jong, M. S. Y., Dai, Y., Chiu, T. K., & Qin, J. (2021). Perceptions of and behavioral intentions towards learning artificial intelligence in primary school students. Educational Technology & Society, 24(3), 89–101. https://www.jstor.org/stable/27032858

Champagne, A., Gunstone, R., & Klopfer, L. (1983). Naive knowledge and science learning. Research in Science and Technological Education, 1(2), 173–183. https://eric.ed.gov/?id=ED225852

Chiodini, L., Moreno Santos, I., Gallidabino, A., Tafliovich, A., Santos, A. L., & Hauswirth, M. (2021). A curated inventory of programming language misconceptions. In Proceedings of the 26th ACM Conference on Innovation and Technology in Computer Science Education V. 1 (pp. 380–386). Association for Computing Machinery. https://doi.org/10.1145/3430665.3456343

Chiu, T. K., Meng, H., Chai, C. S., King, I., Wong, S., & Yam, Y. (2021). Creation and evaluation of a pretertiary artificial intelligence (AI) curriculum. IEEE Transactions on Education, 65(1), 30–39. https://doi.org/10.1109/TE.2021.3085878

Clark, J. M., & Paivio, A. (1991). Dual coding theory and education. Educational Psychology Review, 3, 149–210. https://doi.org/10.1007/BF01320076

Clement, J. (1993). Using bridging analogies and anchoring intuitions to deal with students’ preconceptions in physics. Journal of Research in Science Teaching, 30(10), 1241–1257. https://doi.org/10.1002/tea.3660301007

Coeckelbergh, M. (2020). AI ethics. MIT Press.

Creswell, J. W., & Gutterman, C. N. (2019). Educational research: Planning, conducting and evaluating quantitative and qualitative research (6th ed.). Pearson.

Creswell, J. W., & Poth, C. N. (2016). Qualitative inquiry and research design: Choosing among five approaches (4th ed.). Sage Publications.

Dang, J., & Liu, L. (2021). Robots are friends as well as foes: Ambivalent attitudes toward mindful and mindless AI robots in the United States and China. Computers in Human Behavior, 115, 106612. https://doi.org/10.1016/j.chb.2020.106612

Davis, B. G. (1997). Misconceptions as barriers to understanding science. In National Research Council (Eds.), Science teaching reconsidered: A handbook. (pp. 27–32). National Academies Press.

Dipaola, D., Payne, B. H., & Breazeal, C. (2022). Preparing children to be conscientious consumers and designers of AI technologies. In S. C. Kong & H. Abelson (Eds.), Computational thinking education in K-12: Artificial intelligence literacy and physical computing (pp. 181–205). MIT Press.

Driver, R., & Easley, J. (1978). Pupils and paradigms: A review of literature related to concept development in adolescent science students. Studies in Science Education, 5(1), 61–84. https://doi.org/10.1080/03057267808559857

Druga, S., Vu, S. T., Likhith, E., & Qiu, T. (2019). Inclusive AI literacy for kids around the world. In P. Blikstein & N. Holbert (Eds.), Proceedings of FabLearn 2019 8th Annual Conference on Maker Education (pp. 104–111). The Association for Computing Machinery. https://doi.org/10.1145/3311890.3311904

Emmert-Streib, F., Yli-Harja, O., & Dehmer, M. (2020). Artificial intelligence: A clarification of misconceptions, myths, and desired status. Frontiers in Artificial Intelligence, 3, 524339. https://doi.org/10.3389/frai.2020.524339

Fisher, K. M. (1985). A misconception in biology: Amino acids and translation. Journal of Research in Science Teaching, 22(1), 53–62. https://doi.org/10.1002/tea.3660220105

Gilbert, J., Osborne, R., & Fensham, P. (1982). Children’s science and its consequences for teaching. Science Education, 66, 623–633. https://eric.ed.gov/?id=EJ266160

Ginat, D., Menashe, E., & Taya, A. (2013). Novice difficulties with interleaved pattern composition. In I. Diethelm & R. T. Mittermeier (Eds.) Proceedings of International Conference on Informatics in Schools: Situation, Evolution, and Perspectives (pp. 57–67). ISSEP. https://doi.org/10.1007/978-3-642-36617-8

Glenberg, A., de Vega, M., & Graesser, A. C. (2008). Framing the debate. In M. de Vega, A. Glenberg, & A. C. Graesser (Eds.), Symbols and embodiment: Debates on meaning and cognition (pp. 1–10). Oxford University Press.

Gonzalez, M. F., Liu, W., Shirase, L., Tomczak, D. L., Lobbe, C. E., Justenhoven, R., & Martin, N. R. (2022). Allying with AI? Reactions toward human-based, AI/ML-based, and augmented hiring processes. Computers in Human Behavior, 130, 107179. https://doi.org/10.1016/j.chb.2022.107179

Greenwald, E., Leitner, M., & Wang, N. (2021). Learning artificial intelligence: Insights into how youth encounter and build understanding of AI concepts. Proceedings of the AAAI Conference on Artificial Intelligence, 35(17), 15526–15533. https://doi.org/10.1609/aaai.v35i17.17828

Greiff, S., Molnár, G., Martin, R., Zimmermann, J., & Csapó, B. (2018). Students’ exploration strategies in computer-simulated complex problem environments: A latent class approach. Computers & Education, 126, 248–263. https://doi.org/10.1016/j.compedu.2018.07.013

Gunkel, D. J. (2012). The machine question: Critical perspectives on AI, robots, and ethics. MIT Press.

Gurel, D. K., Eryilmaz, A., & McDermott, L. C. (2015). A review and comparison of diagnostic instruments to identify students’ misconceptions in science. Eurasia Journal of Mathematics, Science and Technology Education, 11(5), 989–1008. https://doi.org/10.12973/eurasia.2015.1369a

Hagendorff, T. (2020). The ethics of AI ethics: An evaluation of guidelines. Minds and Machines, 30(1), 99–120. https://doi.org/10.1007/s11023-020-09517-8

Hake, R. R. (1992). Socratic pedagogy in the introductory physics laboratory. The Physics Teacher, 30, 546–552. https://doi.org/10.1119/1.2343637

Harry, B., Sturges, K. M., & Klingner, J. K. (2005). Mapping the process: An exemplar of process and challenge in grounded theory analysis. Educational Researcher, 34(2), 3–13. https://doi.org/10.3102/0013189X034002003

Hashweh, M. (1988). Descriptive studies of students’ conceptions in science. Journal of Research in Science Teaching, 25(2), 121–134. https://doi.org/10.1002/tea.3660250204

Hayes, J. C., & Kraemer, D. J. M. (2017). Grounded understanding of abstract concepts: The case of STEM learning. Cognitive Research: Principles and Implications, 2(1), 7. https://doi.org/10.1186/s41235-016-0046-z

Holmes, W., Bialik, M., & Fadel, C. (2020). Artificial intelligence in education. Center for Curriculum Redesign.

Huang, X. (2021). Aims for cultivating students’ key competencies based on artificial intelligence education in China. Education and Information Technologies, 26(5), 5127–5147. https://doi.org/10.1007/s10639-021-10530-2

Impey, C., Buxner, S., & Antonellis, J. (2012). Non-scientific beliefs among undergraduate students. Astronomy Education Review, 11(1), 1–12. https://doi.org/10.3847/AER2012016

Kaczmarczyk, L. C., Petrick, E. R., East, J. P., & Herman, G. L. (2010). Identifying student misconceptions of programming. In G. Lewandowski, S. Wolfman, T. J. Cortina, & E. L. Walker (Eds.), Proceedings of the 41st ACM technical symposium on Computer science education (pp. 107–111). Association for Computing Machinery. https://doi.org/10.1145/1734263.1734299

Kaplan, A., & Haenlein, M. (2019). Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Business Horizons, 62(1), 15–25. https://doi.org/10.1016/j.bushor.2018.08.004

Karpudewan, M., Zain, A. N. M., & Chandrasegaran, A. L. (Eds.). (2017). Overcoming students’ misconceptions in science. Springer.

Kippers, W. B., Poortman, C. L., Schildkamp, K., & Visscher, A. J. (2018). Data literacy: What do educators learn and struggle with during a data use intervention? Studies in Educational Evaluation, 56, 21–31. https://doi.org/10.1016/j.stueduc.2017.11.001

Kong, S. C., & Abelson, H. (Eds.). (2022). Computational thinking education in K-12: Artificial intelligence literacy and physical computing. MIT Press.

Kong, S. C., Cheung, W. M. Y., & Tsang, O. (2022). Evaluating an artificial intelligence literacy programme for empowering and developing concepts, literacy and ethical awareness in senior secondary students. Education and Information Technologies, 1–22. https://doi.org/10.1007/s10639-022-11408-7

Kreinsen, M., & Schulz, S. (2021). Students' conceptions of artificial intelligence. In M. Berges, A. Mühling, & M. Armoni (Eds.), Proceedings of the 16th Workshop in Primary and Secondary Computing Education (pp. 1–2). Association for Computing Machinery. https://doi.org/10.1145/3481312.3481328

Kwon, K. (2017). Novice programmer’s misconception of programming reflected on problem-solving plans. International Journal of Computer Science Education in Schools, 1(4), 1–12. https://doi.org/10.21585/ijcses.v1i4.19

Kwon, K., Jeon, M., Guo, M., Yan, G., Kim, J., Ottenbreit-Leftwich, A. T., & Brush, T. A. (2021). Computational thinking practices: Lessons learned from a problem-based curriculum in primary education. Journal of Research on Technology in Education, 1–18. https://doi.org/10.1080/15391523.2021.2014372

Lacerda Queiroz, R., Ferrentini Sampaio, F., Lima, C., & Machado Vieira Lima, P. (2021). AI from concrete to abstract. AI & SOCIETY, 36(3), 877–893. https://doi.org/10.1007/s00146-021-01151-x

Lai, Y. H., Chen, S. Y., Lai, C. F., Chang, Y. C., & Su, Y. S. (2021). Study on enhancing AIoT computational thinking skills by plot image-based VR. Interactive Learning Environments, 29(3), 482–495. https://doi.org/10.1080/10494820.2019.1580750

Lane, D. (2021). Machine learning for kids: A project-based introduction to artificial intelligence. No Starch Press.

Leaper, C., Farkas, T., & Brown, C. S. (2012). Adolescent girls’ experiences and gender-related beliefs in relation to their motivation in Math/Science and English. Journal of Youth and Adolescence, 41, 268–282. https://doi.org/10.1007/s10964-011-9693-z

Lin, P., & Van Brummelen, J. (2021). Engaging teachers to co-design integrated AI curriculum for K-12 classrooms. In Y. Kitamura, A. J. Quigley, K. Isbister, T. Igarashi, P. Bjorn, & S. Drunker (Eds.), Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1–12). Association for Computing Machinery. https://doi.org/10.1145/3411764.3445377

Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Sage Publications.

Long, D., & Magerko, B. (2020). What is AI literacy? Competencies and design considerations. In R. Bernhaupt, F. F. Muller, D. Verweij & J. Andres (Eds.), Proceedings of the 2020 CHI conference on human factors in computing systems (pp. 1–16). Association for Computing Machinery. https://doi.org/10.1145/3313831.3376727

Mandinach, E. B., & Schildkamp, K. (2021). Misconceptions about data-based decision making in education: An exploration of the literature. Studies in Educational Evaluation, 69, 100842. https://doi.org/10.1016/j.stueduc.2020.100842

Marques, L. S., Gresse von Wangenheim, C., & Hauck, J. C. (2020). Teaching machine learning in school: A systematic mapping of the state of the art. Informatics in Education, 19(2), 283–321. https://doi.org/10.15388/infedu.2020.14

Marx, E., Leonhardt, T., Baberowski, D., & Bergner, N. (2022). Using matchboxes to teach the basics of machine learning: An analysis of (possible) misconceptions. In Proceedings of the Second Teaching Machine Learning and Artificial Intelligence Workshop (pp. 25–29). PMLR. https://proceedings.mlr.press/v170/marx22a

Maxwell, J. A. (2013). Qualitative research design: An interactive approach. Sage Publications.

McCauley, R., Fitzgerald, S., Lewandowski, G., Murphy, L., Simon, B., Thomas, L., & Zander, C. (2008). Debugging: A review of the literature from an educational perspective. Computer Science Education, 18(2), 67–92. https://doi.org/10.1080/08993400802114581

McCloskey, M., Caramazza, A., & Green, B. (1980). Curvilinear motion in the absence of external forces: Naive beliefs about the motion of objects. Science, 210(4474), 1139–1141. https://doi.org/10.1126/science.210.4474.113

McDermott, L. C., & Shaffer, P. S. (1992). Research as a guide for curriculum development: An example from introductory electricity. Part I: Investigation of student understanding. American Journal of Physics, 60(11), 994–1003. https://doi.org/10.1119/1.17003

Mertala, P., Fagerlund, J., & Calderon, O. (2022). Finnish 5th and 6th-grade students' pre-instructional conceptions of artificial intelligence (AI) and their implications for AI literacy education. Computers and Education: Artificial Intelligence, 100095. https://doi.org/10.1016/j.caeai.2022.100095

Morrison, G. R., Ross, S. J., Morrison, J. R., & Kalman, H. K. (2019). Designing effective instruction. John Wiley & Sons.

Nagarajan, A., Minces, V., Anu, V., Gopalasamy, V., & Bhavani, R. R. (2020). There's data all around you: Improving data literacy in high schools through STEAM-based activities. In Proceedings of FabLearn Asia 2020 (pp. 17–20). The Association for Computing Machinery. https://par.nsf.gov/biblio/10166600

Nathan, M. J. (2021). Foundations of embodied learning: A paradigm for education. Routledge.

National Research Council. (1997). Science teaching reconsidered: A handbook. National Academies Press. https://doi.org/10.17226/5287

National Research Council. (2004). How students learn: History, mathematics, and science in the classroom. National Academies Press. https://doi.org/10.17226/10126

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., & Qiao, M. S. (2021). Conceptualizing AI literacy: An exploratory review. Computers and Education: Artificial Intelligence, 2, 100041. https://doi.org/10.1016/j.caeai.2021.100041

Ottenbreit-Leftwich, A., Glazewski, K., Jeon, M., Jantaraweragul, K., Hmelo-Silver, C. E., Scribner, A., Lee, S., Mott, B., & Lester, J. (2022). Lessons learned for AI education with elementary students and teachers. International Journal of Artificial Intelligence in Education, 1–23. https://doi.org/10.1007/s40593-022-00304-3

Pedro, F., Subosa, M., Rivas, A., & Valverde, P. (2019). Artificial intelligence in education: Challenges and opportunities for sustainable development. https://unesdoc.unesco.org/ark:/48223/pf0000366994

Porter, L., Bailey Lee, C., & Simon, B. (2013). Halving fail rates using peer instruction: A study of four computer science courses. In Proceedings of the 44th ACM technical symposium on Computer science education (pp. 177–182). Association for Computing Machinery. https://doi.org/10.1145/2445196.2445250

Qian, Y., & Lehman, J. (2017). Students’ misconceptions and other difficulties in introductory programming: A literature review. ACM Transactions on Computing Education (TOCE), 18(1), 1–24. https://doi.org/10.1145/3077618

Raffaghelli, J. E., & Stewart, B. (2020). Centering complexity in ‘educators’ data literacy to support future practices in faculty development: A systematic review of the literature. Teaching in Higher Education, 25(4), 435–455. https://doi.org/10.1080/13562517.2019.1696301

Sabuncuoglu, A. (2020). Designing a one-year curriculum to teach artificial intelligence for middle school. In Proceedings of the 2020 ACM Conference on Innovation and Technology in Computer Science Education (pp. 96–102). Association for Computing Machinery. https://doi.org/10.1145/3341525.3387364

Saldaña, J. (2016). The coding manual for qualitative researchers (3rd ed.). Sage Publications.

Samsudin, A., Afif, N. F., Nugraha, M. G., Suhandi, A., Fratiwi, N. J., Aminudin, A. H., Adimayuda, R., Linuwih, S., & Costu, B. (2021). Reconstructing students’ misconceptions on work and energy through the PDEODE* E tasks with think-pair-share. Journal of Turkish Science Education, 18(1), 118–144. https://doi.org/10.36681/tused.2021.56

Sander, I. (2020). What is critical big data literacy and how can it be implemented? Internet Policy Review, 9(2), 1–22. https://doi.org/10.14763/2020.2.1479

Sanusi, I. T., Oyelere, S. S., & Omidiora, J. O. (2022). Exploring teachers’ preconceptions of teaching machine learning in high school: A preliminary insight from Africa. Computers and Education Open, 3, 100072. https://doi.org/10.1016/j.caeo.2021.100072

Schwanenflugel, P. J. (1991). Why are abstract concepts hard to understand? In P. J. Schwanenflugel (Ed.), The psychology of word meanings (pp. 235–262). Psychology Press.

Simon, B., Kohanfars, M., Lee, J., Tamayo, K., & Cutts, Q. (2010). Experience report: Peer instruction in introductory computing. In Proceedings of the 41st ACM technical symposium on Computer science education (pp. 341–345). Association for Computing Machinery. https://doi.org/10.1145/1734263.1734381

Smith, J. P., III., DiSessa, A. A., & Roschelle, J. (1994). Misconceptions reconceived: A constructivist analysis of knowledge in transition. The Journal of the Learning Sciences, 3(2), 115–163. https://doi.org/10.1207/s15327809jls0302_1

Soeharto, S., Csapó, B., Sarimanah, E., Dewi, F. I., & Sabri, T. (2019). A review of students’ common misconceptions in science and their diagnostic assessment tools. Jurnal Pendidikan IPA Indonesia, 8(2), 247–266. https://doi.org/10.15294/jpii.v8i2.18649

Szczuka, J. M., Strathmann, C., Szymczyk, N., Mavrina, L., & Kramer, N. C. (2022). How do children acquire knowledge about voice assistants? A longitudinal field study on children’s knowledge about how voice assistants store and process data. International Journal of Child-Computer Interaction, 33, 100460. https://doi.org/10.1016/j.ijcci.2022.100460

Tang, D. (2019). Empowering novices to understand and use machine learning with personalized image classification models, intuitive analysis tools, and MIT App Inventor (Doctoral dissertation, Massachusetts Institute of Technology).

Taylor, A. K., & Kowalski, P. (2014). Student misconceptions: Where do they come from and what can we do? In V. A. Benassi, C. E. Overson, & C. M. Hakala (Eds.), Applying the science of learning in education: Infusing psychological science into the curriculum (pp. 259–273). Society for the Teaching of Psychology.

Teague, D., & Lister, R. (2014). Programming: Reading, writing, and reversing. In Proceedings of the 2014 conference on Innovation & technology in computer science education (pp. 285–290). Association for Computing Machinery. https://doi.org/10.1145/2591708.2591712

Tedre, M., Toivonen, T., Kahila, J., Vartiainen, H., Valtonen, T., Jormanainen, I., & Pears, A. (2021). Teaching machine learning in K–12 classroom: Pedagogical and technological trajectories for artificial intelligence education. IEEE Access, 9, 110558–110572. https://doi.org/10.1109/ACCESS.2021.3097962

Touretzky, D., & Gardner-McCune, C. (2022). Artificial intelligence thinking in K-12. In S. C. Kong & H. Abelson (Eds.), Computational thinking education in K-12: Artificial intelligence literacy and physical computing (pp. 153–180). MIT Press.

Touretzky, D., Gardner-McCune, C., Martin, F., & Seehorn, D. (2019). Envisioning AI for K-12: What should every child know about AI? Proceedings of the AAAI Conference on Artificial Intelligence, 33(1), 9795–9799. https://doi.org/10.1609/aaai.v33i01.33019795

Treagust, D. F. (1988). Development and use of diagnostic tests to evaluate students’ misconceptions in science. International Journal of Science Education, 10(2), 159–169. https://doi.org/10.1080/0950069880100204

Vartiainen, H., Toivonen, T., Jormanainen, I., Kahila, J., Tedre, M., & Valtonen, T. (2021). Machine learning for middle schoolers: Learning through data-driven design. International Journal of Child-Computer Interaction, 29, 100281. https://doi.org/10.1016/j.ijcci.2021.100281

Wang, D., Zhang, L., Xu, C., Hu, H., & Qi, Y. (2016). A tangible embedded programming system to convey event-handling concept. Proceedings of the TEI ’16: Tenth International Conference on Tangible, Embedded, and Embodied Interaction, Eindhoven, Netherlands. https://doi.org/10.1145/2839462.2839491

Williams, R., Kaputsos, S. P., & Breazeal, C. (2021). Teacher perspectives on how to train your robot: A middle school AI and ethics curriculum. Proceedings of the AAAI Conference on Artificial Intelligence, 35(17), 15678–15686. https://ojs.aaai.org/index.php/AAAI/article/view/17847

Williams, M., & Moser, T. (2019). The art of coding and thematic exploration in qualitative research. International Management Review, 15(1), 45–55.

Wong, G. K., Ma, X., Dillenbourg, P., & Huan, J. (2020). Broadening artificial intelligence education in K-12: Where to start? ACM Inroads, 11(1), 20–29. https://doi.org/10.1145/3381884

Yang, W. (2022). Artificial Intelligence education for young children: Why, what, and how in curriculum design and implementation. Computers and Education: Artificial Intelligence, 3, 100061. https://doi.org/10.1016/j.caeai.2022.100061

Yau, K. W., Chai, C. S., Chiu, T. K., Meng, H., King, I., & Yam, Y. (2022). A phenomenographic approach on teacher conceptions of teaching Artificial Intelligence (AI) in K-12 schools. Education and Information Technologies, 1–24. https://doi.org/10.1007/s10639-022-11161-x

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). A systematic review of research on artificial intelligence applications in higher education–where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27. https://doi.org/10.1186/s41239-019-0171-0

Zhang, H., Lee, I., Ali, S., DiPaola, D., Cheng, Y., & Breazeal, C. (2022). Integrating ethics and career futures with technical learning to promote AI literacy for middle school students: An exploratory study. International Journal of Artificial Intelligence in Education, 1–35. https://doi.org/10.1007/s40593-022-00293-3

Funding

This study was funded by the U.S. Department of Defense (National Center for the Advancement of STEM Education) under award number 076967-00003C.

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kim, K., Kwon, K., Ottenbreit-Leftwich, A. et al. Exploring middle school students’ common naive conceptions of Artificial Intelligence concepts, and the evolution of these ideas. Educ Inf Technol 28, 9827–9854 (2023). https://doi.org/10.1007/s10639-023-11600-3

DOI: https://doi.org/10.1007/s10639-023-11600-3