Abstract

The literature on the use of machine learning (ML) models for the estimation of real estate prices is increasing at a high rate. However, the black-box nature of the proposed models hinders their adoption by market players such as appraisers, assessors, mortgage lenders, fund managers, real estate agents or investors. Explaining the outputs of those ML models can thus boost their adoption by these domain-field experts. However, very few studies in the literature focus on exploiting the transparency of eXplainable Artificial Intelligence (XAI) approaches in this context. This paper fills this research gap and presents an experiment on the French real estate market using ML models coupled with Shapley values to explain the models. The used dataset contains 1,505,033 transactions (in 7 years) from nine major French cities. All the processing steps for preparing, building, and explaining the ML models are presented in a transparent way. At a global level, beyond the predictive capacity of the models, the results show the similarities and the differences between these nine real estate submarkets in terms of the most important predictors of property prices (e.g., living area, land area, location variables, number of dwellings in a condominium), trends over years, the differences between the markets of apartments and houses, and the impact of sales before completion. At the local level, the results show how one can easily interpret and evaluate the contribution of each feature value for any single prediction, thereby providing essential support for the understanding and adoption by domain-field experts. The results are discussed with respect to the existing literature in the real estate field, and many future research avenues are proposed.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Automated Valuation Models (AVM)

A real estate automated valuation model (AVM) is a statistically based computer program that uses real estate information, such as property characteristics (e.g., age, number of rooms), comparable sales, or price trends, to calculate an estimate of a property value. It is commonly used for real estate appraisal, property valuation or land valuation (e.g., Bogin & Shui, 2020; Hurley & Sweeney, 2022). Real estate is considered by most countries to be the largest asset class and plays a major role in social and economic systems. Providing fast and accurate estimations of property value is very important at a local or global level in a country’s social and economic system. The financial system of a country is directly impacted by real estate price fluctuations, as they are frequently used as collateral by central banks for mortgage lending (Gibilaro & Mattarocci, 2018; Pavlov & Wachter, 2011). Recently, the European Banking Authority revised guidelines on loan origination and monitoring, giving banks and financial institutions a real opportunity to start relying increasingly on AVM-based solutions for real estate valuations (European Banking Authority, 2020). New technologies such as AVMs allow finance and real estate professionals to obtain the full picture of the potential of each property within minutes and save them a significant amount of time (Charlier, 2022). At the individual or local level, most households consider buying a house to be one of the largest financial transactions of their lives (Pedersen et al., 2013). Real estate agents and brokers typically use AVMs to obtain a general sense of home-sale trends in a specific locale and may use them as a starting point in determining the asking price on a new listing. For a homebuyer, AVMs can be used to obtain a sense of prevailing prices for homes of different sizes in neighborhoods that interest him or her. Even if a homeowner does not plan on selling anytime soon, an AVM can be used to get a general sense of a property’s value and whether it has appreciated since it was bought. Additionally, a good estimation of a property value is considered fundamental for an investor willing to diversify the portfolio because of the alternatives among housing securities and other possible investments (D’Amato et al., 2019).

Methods for AVM

Hedonic regressions such as linear or multiple linear regressions were initially the most popular methods used for real estate AVM. These methods assume that there is a linear relationship between the price of a house and its characteristics, such as structural characteristics (e.g., size), neighborhood characteristics (e.g., proximity to amenities such as schools or public transportation) and locational characteristics (e.g., geographic position). The price of the house is set as the dependent variable to estimate, and each characteristic is used as an independent variable for the regression model. The main advantage of these methods is related to the ability to interpret the coefficient of each independent variable in the regression equation to provide the relative importance of this variable for price estimation. This ease of interpretation of hedonic regression outputs gives more confidence and trust in these models and thus has motivated their adoption by actors such as analysts, appraisers, assessors, mortgage lenders, fund managers and researchers. However, the quantity and the variety of variables necessary for housing price estimations are sufficiently complex to provide very accurate estimations with the linear relationship assumption behind hedonic regressions. This disadvantage has favored the emergence of nonlinear methods for AVM, such as data envelopment analysis or more sophisticated artificial intelligence (AI) techniques (e.g., fuzzy inference, fuzzy logic, genetic algorithms, machine learning). Machine learning (ML) techniques have become particularly popular in recent years, as they usually provide more accurate estimations than hedonic regressions. Methods such as artificial neural networks, support vector machines or ensemble tree-based algorithms (e.g., random forest, gradient boosting, adaptive boosting) commonly provide better real estate price estimations (Sing et al., 2022; Tchuente & Nyawa, 2022; Yoshida et al., 2022). Nevertheless, beyond their important predictive capacity, these methods are still considered black-box methods due to their complexity and the difficulty of intrinsically explaining their outputs. This lack of explainability of the outputs of ML techniques leads to a lack of confidence in their usage by many actors in the AVM context. Providing explainable outputs of ML techniques for AVM would naturally increase their adoption, as they are usually more accurate than traditional hedonic regression methods.

Explainable Artificial Intelligence (XAI) Techniques

Beyond AVM, the black-box nature of ML methods in AI is a wider issue that slows down the adoption of these methods in many other applicative fields (Arrieta et al., 2020; Bücker et al., 2022; Ferrettini et al., 2022; Tchuente et al., 2024). There are also an increasing number of legal constraints requiring the explicability of black box AI models. For example, in February 2019, the Polish government added an amendment to a banking law that gives a customer the right to receive an explanation in case of a negative credit decision. This is one of the direct consequences of implementing the General Data Protection Regulation (GDPR) in the European Union (EU). This means that a bank must be able to explain why a loan was not granted if the decision process was automatic using methods such as ML. In recent years, many research works have been interested in studying techniques for explaining the output of black box AI methods and ML methods in particular (Explainable Artificial Intelligence or XAI). Several directions are emerging in this context (Adadi & Berrada, 2018): model agnostic vs. model-specific techniques, local vs. global techniques, intrinsic vs. post hoc techniques, surrogate techniques, and visualization techniques. An XAI technique is model agnostic when it can explain the predictions of any ML method; otherwise, it is model specific (only explains the predictions of a specific method). An XAI technique is local when it explains a single prediction; it is also global when the entire model can be explained. An XAI technique is post hoc when the explanation is provided only after the model is built; it is intrinsic when explanations are constructed during the model building. An XAI technique can be a surrogate model when it constructs an interpretable model (e.g., linear model) to approximate the predictions of the complex black box model so that we can provide conclusions about the black box model by interpreting its surrogate. An XAI technique is also a visualization helping to explore the patterns inside the model (e.g., a neural unit in a deep neural network).

Research Question and Contributions

In general, XAI techniques are increasingly successfully used in many fields for explaining predictions of black-box ML methods (Chen et al., 2022a, b; Park & Yang, 2022; Senoner et al., 2022; Wang et al., 2022). Despite the large and increasing literature on ML-based AVM (Binoy et al., 2021; Cajias & Wins, 2021; Valier, 2020; Wang & Li, 2019), very few studies, and only very recently, are focusing on explanations of those models (e.g., Iban, 2022; Mayer et al., 2022; Rico-Juan & de La Paz, 2021). However, providing explanations for ML-based AVMs could significantly boost their adoption compared to hedonic regression models, and much more interest should be focused on explainable AI or ML-based AVMs (Cajias, 2020). Thus, our research question is formulated as follows:

How can eXplainable artificial intelligence (XAI) improve the interpretations of machine learning (ML)-based automated valuation models (AVMs)?

To answer this question, we conduct an experiment with a recent 7-year dataset consisting of 1,505,033 real estate transactions in nine major French cities. The process for extracting and preparing the dataset is presented in a transparent way. Based on the use of the random forest ML method (Breiman, 2001) and Shapley values of the SHAP library (Lundberg & Lee, 2017), we present the outputs of local and global explanations for the nine cities. The results show that one can easily and locally explain every single estimation of a property value with a clear quantification of the contribution of each input feature. The goal is to show how market players (e.g., appraisers, real estate agents, real estate brokers, fund managers, investors) can rely on such an approach for adopting powerful predictions of ML-based AVM. At a global level, the aggregation of Shapley values for computing feature importance, allows us to assess the major differences in the real estate global market among the nine studied cities. For instance, comparisons are performed in terms of the most important features, the correlations between those features, the differences between house and apartment markets, and the differences between sales of old dwellings versus sales before completion. These results also emphasize the importance of the spatial dependency and spatial heterogeneity of submarket methods in AVM (Anselin, 2013; Basu & Thibodeau, 1998; Bitter et al., 2007).

Thus, the contributions of this paper with respect to the literature can be expressed in four points: (i) we address the explainability issue of ML-based AVM, which is currently little studied in the literature; (ii) we experiment on the specific French real estate market which was never studied in this context; (iii) we train and explain the ML outputs of nine major cities to be able to explain the specificities and differences of multiple submarkets in the same country; and (iv) we present the overall analytic ML process with transparency by integrating simulatability (reproducible study), decomposability (explanations of models parameters), and algorithmic transparency (explanation of the learning algorithm) (Chakraborty et al., 2017; Murdoch et al., 2019).

The rest of this paper is structured as follows: Sect. “Automated Valuation Models (AVM)” presents some related works. Section “Methods for AVM” presents the data and the methods used in the experiment. Section “Explainable Artificial Intelligence (XAI) Techniques” presents the experimental settings. The results of the experiment are presented in Sect. “Research Question and Contributions”. Section “Related Works” presents the discussion, implications, limitations, and future research directions of this study. Finally, Sect. “Data and Methodology” provides the main conclusions of this paper.

Related Works

In general, the literature on the usage of machine learning techniques for predicting real estate prices is very wide (Binoy et al., 2021; Valier, 2020; Wang & Li, 2019). Almost all the studies in this context use a specific dataset to evaluate the predictive capacity of multiple ML techniques or to compare the performances between ML techniques and hedonic regressions. The most performant methods mainly depend on each study context, even if methods such as random forest or artificial neural networks commonly provide the best results. However, very few, and only very recent, studies have specifically focused on the interpretability of ML techniques for AVM. Interpretability strategies used in these studies can be divided into four categories: explainability based on sensitivity analysis, explainability based on visualizations, a mix of black box models with interpretable models, and the use of post hoc global and local explainable methods.

First, some studies rely on sensitivity analysis to evaluate the importance of global features of ML techniques for AVM (Dimopoulos & Bakas, 2019; Iban, 2022). Sensitivity analysis usually consists of examining the impact of each feature or group of features on the model’s predictions (Zhang, 2019). For instance, (Dimopoulos & Bakas, 2019) used a dataset containing 3,786 apartment transactions (with 9 independent variables) in Nicosia district (Cyprus) to investigate the capabilities of ML-based AVM to increase their transparency through a sensitivity analysis. Four models are compared in the study: 2 hedonic regressions (multilinear regression and higher order nonlinear regression) and 2 ML tree-based methods (random forest and gradient boosting). For each model, they applied a sensitivity analysis based on a modified version of the profile method (Gevrey et al., 2003). The results show similar patterns for all four methods used. However, certain differences were also depicted, which highlights the need for such analyses on the trained ML models. In another context in Turkey, (Iban, 2022) uses another sensitive analysis method that measures the importance of a feature by calculating the increase in the model’s prediction after permuting the feature: PFI (Permutation Feature Importance) (Breiman, 2001). The results show that the PFI method is a faster and more robust option for feature selection in this context. Similar evaluations are proposed by (Lorenz et al., 2023), but with a specific focus on explaining residential rent predictions in Frankfurt (Germany).

Second, some studies rely on explainable visualization techniques that use methods such as partial dependence plots (PDPs) (Lee, 2022; Lorenz et al., 2023), individual conditional expectation (ICE) (Mayer et al., 2022), or accumulated local effects (ALE) plots (Chen et al., 2022a, b; Lorenz et al., 2023). PDP and ALE are global model-agnostic methods, while ICE is a local model-agnostic method. In general, PDP allows visualizing the marginal effect of one or two features on the predicted outcome of a machine learning model (Friedman, 2001), while ALE describes how features influence the prediction on average (Apley & Zhu, 2020). Compared to PDP, ALEs are commonly faster with less bias. As a local model, ICE plots one line per instance, showing how the prediction changes when a feature changes. In their study, (Lee, 2022) uses PDP to show how machine learning black box models can be interpreted in the specific context of property tax assessment. The author trains a neural network with data from two cities in South Korea (Seoul and Jeollanam) containing 13 independent variables. This shows that two variables (site area and building area) of the 13 input variables produced noticeable patterns in the PDP analysis. The results allow a better understanding of the differences in the real estate market between both cities. In another context, (Chen et al., 2022a, b) use ALE to investigate whether user-generated images may be used for monitoring housing prices. They compare the differences between a hedonic model and two ML models (random forest and gradient boosting) using a large dataset of sold properties (226,332 properties) in London with 23 dependent features. Their findings show that random forest outperforms the other models in terms of predictive capacity, and the explicability provided by this model is similar to that of the hedonic model.

Third, studies relying on the mix of black box models with interpretable models usually combine ML methods with hedonic regressions. For instance, (Li et al., 2021) combine the extreme gradient boosting (XGBoost) ML model with a hedonic price model to analyze the comprehensive effects of influential factors on housing prices. Using data from 12,137 housing units in Shenzhen (China) containing 35 independent features, XGBoost is first used to identify the most important features that impact housing prices. Next, only these important features are used in the hedonic model, which is easily interpretable. The results showed that combining the two models can lead to good performance and increase understanding of the spatial variations in housing prices. Similarly, (Mayer et al., 2022) present a successful combination of advanced ML techniques (deep learning and gradient boosting) and STAR (Smooth Transition Autoregressive) models to both provide excellent predictive capacity and supervised dimension reduction for explaining circumstances where their covariate effects can be described in a transparent way. Using data from Miami (13,932 housing units) and Switzerland (67,000 housing units) with more than 77 independent variables, they compare and explain the predictions of these combined models with traditional hedonic regression models. They also rely on ICE plots for locally visualizing the most important features.

Finally, some other studies rely on post hoc local or global explanations by particularly using the SHAP (Shapley Additive exPlanations) framework (Chen et al., 2020; Iban, 2022; Rico-Juan & de La Paz, 2021). SHAP is a game theoretic approach used to explain the output of any machine learning model (Lundberg & Lee, 2017). SHAP is based on the game’s theoretically optimal Shapley values. The idea behind Shapley values is the assumption that each feature value of an instance is a “player” in a game where the payout is the prediction. Coming from coalitional game theory, the Shapley values method estimates for a single prediction of how to fairly distribute the “payout” among the input features. Shapley value feature importance can also be aggregated on multiple instance predictions to provide global features important for the whole model. For instance, (Chen et al., 2020) rely on SHAP global importance of features for exploring the impact of environmental elements (e.g., green view index, sky view index, building view index) on housing prices. They first use multisource data on 2,547 real estate transactions in Shangai with more than 20 independent features (location features, structure features, neighborhood features, urban environmental features) for training a linear regression model and three ensemble learning models (XGBoost, Random Forest, Gradient Boosting). Next, SHAP global explanations allow, for instance, to show that urban environmental characteristics account for 16% of housing prices in Shangai. Using another dataset consisting of 392,412 real estate transactions (with 52 independent features) from Alicante (Spain), (Rico-Juan & de La Paz, 2021) present a comparative study of explanations provided on two hedonic models and a selection of ML models (Nearest Neighbors, Decision Tree, Random Forest, Adaboost, XGBoost, CatBoost, Neural Network). SHAP and PDP are mainly used to explain the results of ML models. Their results show that a combination of techniques (hedonic models and ML models with explanations) would add information on the unobservable nonlinear relationships between housing prices and housing characteristics. (Iban, 2022) rather use 1,002 transactions (with 43 physical and spatial independent features) in Turkey (Yenis ehir district) and compare the outputs of a multiple regression analysis and three tree-based ML models (gradient boosting, XGBoost, LightGBM). First, PFI (Permutation Feature Importance) is used to keep only the most important features. Next, SHAP is used to provide local explanations. The results show the possibility of observing the value determinants that contribute to the price prediction of each real estate sample using tree-based models. Beyond these studies, which primarily rely on structured data, other approaches, such as the one by (Wan & Lindenthal, 2023), focus on identifying the most relevant areas in unstructured input data (images) for predicting real estate prices.

In summary, compared to the vast literature on ML-based AVMs, only very few and very recent studies have addressed the specific interpretability or explainability issues of these models. The results obtained in this research field are commonly specific to the location (e.g., city, country) where the experiments are performed (Tchuente & Nyawa, 2022; Valier, 2020). However, the previously presented studies in this section mostly rely on data from only one city in each country. This cannot be enough to study the regional specificities of the real estate market of a whole country (Guliker et al., 2022; Gupta et al., 2022). For example, this will not be enough to be able to explain the similarities and differences of multiple real estate submarkets in the same country (Bourassa et al., 2003, 2007; Guliker et al., 2022; McCluskey & Borst, 2011; Tchuente & Nyawa, 2022). Moreover, these studies do not always present their analytic process in a transparent way with simulatability (reproducible studies), decomposability (intuitive explanations of model parameters), and algorithmic transparency (explanation of the learning algorithms) (Chakraborty et al., 2017). Thus, the contributions of this paper with respect to the literature can be expressed in four points: (i) we address the explainability issue of ML-based AVM, which is currently very little studied in the literature; (ii) we experiment on the specific French real estate market, which has never been studied in this context; (iii) we train and explain the ML outputs of nine major cities to explain the specificities and differences of multiple submarkets in the same country; and iv) we present the overall analytic ML process with transparency by integrating simulatability, decomposability, and algorithmic transparency.

Data and Methodology

Data

The dataset used in this study (“Demands of land values”) has been released with an open license by the French government since April 2019. The raw dataset contains data about real estate transactions in French territories (metropolitan and French overseas departments), except two departments: Alsace-Moselle and Mayotte. These data are very reliable because they come directly from notarial acts and cadastral information. The dataset is updated every six months and contains all transactions for the past 5 years. The data were accessed two times: in the first semester of 2019 and in the first semester of 2022. Thus, the studied dataset includes transactions over 7 years (from 2015 to 2021). For the whole country, these transactions represent approximately 28 GB of data (stored in csv format). As indicated in the motivation of this study, we are focusing on the 10 largest cities in France (in terms of populations): Paris, Marseille, Lyon, Toulouse, Nice, Nantes, Montpellier, Strasbourg, Bordeaux and Lille (Fig. 1).

Due to political, economic, and geographic factors, the real estate markets are very different in each of these cities. As the city of Strasbourg is in the Alsace-Moselle department, transactions for this city are not provided in the dataset; therefore, our study focuses on the 9 other largest French cities, including a total of 1,505,033 transactions (in the 7 years) for those cities.

For each transaction in the dataset, 43 variables are available. However, a significant number of these variables refer to technical data about notarial acts and are not relevant for our study. The variables that could be related to real estate price estimations are listed and described in the following Table 1.

Detailed descriptive statistics (minimum, maximum, average, median, standard deviation, number of missing values, number of unique values) for these variables in the raw dataset are provided for each city in Table 2. This allows, for instance, to quickly identify variables with potential outliers that should be well managed in the preparation and cleaning process (see “data preparation” section) before using machine learning techniques (e.g., low prices, high prices, missing values).

Figure 2 shows how the transactions are distributed per city (A), per year (B), per quarter (C) and per year and quarter (D). By far, Paris has the greatest number of transactions (455,774), and Lille has the fewest (80,707 transactions). The trend per year shows an overall increase until 2019 but a slight decrease in 2020 and 2021 (this may be a consequence of the COVID-19 pandemic in 2020 and 2021). In general, the most important part of transactions is made in the last quarter of each year.

Figure 3 shows the total number of transactions per city and per year. We can observe, for instance, that despite the COVID-19 pandemic, there was a significant increase in the number of transactions in 2021 for some cities (e.g., Paris, Nice) but also a sharp decrease for other cities (e.g., Marseille, Toulouse, Montpellier).

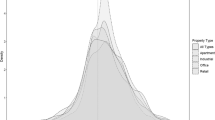

Figure 4 shows the distribution of the number of transactions per discrete variable (type of sale, type of residence, city).

Figure 4A shows that most of the transactions concern old dwellings (sale) and new dwellings before their completion (sale before completion). The other types of sales are marginal (adjudication, exchange, land to build, expropriation). Figure 4C shows that the previous repartition is slightly similar for all the studied cities, except Paris, where the difference between the number of old dwellings and the number of new dwellings before completion is even more accentuated. Very few new dwellings (sales before completion) are sold in Paris compared to other cities. As we are interested in real values of properties, we will only focus on old dwellings sales (sale) and new dwellings (before completion) in the predictive analysis. In Fig. 4B, we can observe that apartments and outbuildings are the most sold type of residence. Industrial locations and houses are less represented, but they are not marginal. Figure 4D shows that this last repartition of type of residence is similar in almost all the studied cities, except Paris, where the difference between the number of apartments and the number of houses is even more accentuated. In Paris, the real estate market is highly focused on apartments, and only very few houses are concerned, compared to other cities. Because we are only interested in residential real estate, we will only use the transactions for apartments and houses in the predictive analysis.

Figure 5 represents the box plots of prices per city (Fig. 5A) and per type of residence (apartments and houses) for each city. We can observe that Paris is by far the most expensive city, followed by Bordeaux, Lyon and Nice. The repartition per apartment and house shows that houses are generally more expensive, except in the case of Lille. The gap between apartments and houses is also more accentuated in Paris and Nice.

Methodology

Our methodology is first based on ML method evaluations (Nyawa et al., 2023; Rampini & Cecconi, 2021; Sing et al., 2022; Steurer et al., 2021). ML relies on a few input and output variable assumptions to make predictions based on evidence in the presence of uncertainty. When the goal is to perform a prediction task, there are four key steps commonly followed when creating a predictive ML model: (i) choosing and preparing a training dataset, (ii) selecting an algorithm (or ML method) to apply to the training dataset, (iii) training the algorithm to build the model, and (iv) using and improving the model for predictive tasks.

The chosen ML method usually depends on the size and type of data, the insights to be obtained from the data, and how those insights will be used. The best algorithm is most often obtained by trial and error. Figure 6 presents the methodology adopted for our experiment using ML algorithms. This methodology consists of five steps. The first four steps (data preparation, data splitting, training of ML models, evaluation of predictions of ML models) are common steps used for ML-based predictive analytics, but they strongly depend on each application context. The fifth step is specifically added to the context of this study to provide local and global explanations of real estate prices for each city. All these steps are successively described below.

Data Preparation

Preparing data before training any ML model is a crucial step to ensure suitable results. Explaining data preparation steps for building ML models is essential for transparency with simulatability or reproducible studies (Chakraborty et al., 2017). In practice, it has generally been found that data preparation accounts for approximately 80% of the total predictive modeling effort (Zhang et al., 2003) for many reasons. For instance, although real-world data are impure, high-performance mining systems require high-quality data, and accurate data yield high-quality patterns. Additionally, real-world data are usually incomplete (e.g., missing attribute values, missing certain attributes of interest, or only aggregate data are available), noisy (e.g., containing errors or outliers), and inconsistent (containing discrepancies in codes or names), and these types of data can disguise useful patterns. In our case, the steps for preparing the data are presented in Fig. 7. The successive steps are as follows: attribute selection, inconsistency removal, outlier removal, filling in missing values, geocoding from address, and one-hot encoding.

The attribute selection step consists of selecting only data from the 9 cities in all the raw datasets. As stated in the “Data” section, the raw dataset contains 43 variables for each transaction. In this step, we also select only the valuable variables (see Table 1) that are naturally related to the price of each transaction. Because we are only interested in residential real estate transactions, we also only keep the transactions with the sales and sales before completion sale types for apartments and residential houses. In the inconsistency removal step, we remove all transactions with missing or bad values for the following key attributes: postal code, price (since it is our target dependent variable), living area and number of rooms. In the outlier removal step, for each city, we remove transactions with price outliers (Fig. 5A) to keep only the most common real estate transactions that represent the majority of the population. The outlier price values are identified with a common box plot method, which consists of using the interquartile range, i.e., all values above the third quartile Q3 plus one-half the interquartile range. The step of filling in missing values consists of replacing the missing values of the land area variable with zero (essentially for apartments, meaning that they naturally have a land area of 0 when the value is missing). Because the location is a highly important predictor of real estate prices (Tchuente & Nyawa, 2022), the geocoding step adds the precise location (latitude and longitude) as new attributes for each transaction (Clapp, 2003; Ozhegov & Ozhegova, 2022). Elements of the address of each transaction (street number, repetition index, street type, postal code and city) are used to find the latitude and longitude of the dwelling using the French government geocoding APIFootnote 1 (Tchuente & Nyawa, 2022). Finally, for all other discrete attributes (sale type, residence type), we perform the one-hot encoding transformation to convert them into continuous and Boolean dummy variables with 0 or 1 for each of their values. For instance, we have two possible values (apartment or house) for the residence type, so this variable is replaced by two dummy variables, with each of them taking the value 0 or 1 for each transaction. Many machine learning algorithms require this transformation for the effective handling of discrete attributes.

Train Validation and Test Split of the Prepared Data

Once the data are prepared, the next methodology step consists of creating our training/validation and testing sets by dividing the original sample into two groups with proportions of 75% and 25% (training and testing sets, respectively). The training/validation set is used in step 3 for training ML algorithms using their parameters (hyperparameters) for choosing the best hyperparameter combination that provides the best predictions using the training set. The test set is used to evaluate the best-built model for each ML algorithm on entirely new data that were not used in the training step to prevent model overfitting on the training data.

ML Algorithm Training

ML algorithm training is the process of tuning model variables and parameters to predict the appropriate results more accurately. Training an ML algorithm is usually iterative and uses various optimization methods depending on the chosen model. These optimization methods do not require human intervention, which is part of the power of ML. The machine learns from the provided data with little to no specific direction from the user. It is very difficult to know a priori which ML algorithm will provide the best predictions for a given dataset. The common practice consists of experimentally evaluating several potential suitable algorithms in each context and choosing the one that provides the best predictive capacity. In a previous paper with a similar dataset (Tchuente & Nyawa, 2022), we evaluated eight popular machine learning algorithms (artificial neural networks, random forest, gradient boosting, AdaBoost, support vector regression, k-nearest neighbors, linear regression), and the random forest provides the best predictive capacity. Thus, we only used random forest in this experiment. Random forest is an ensemble learning method that contains several decision trees on various subsets of the given dataset and takes the average to improve the predictive accuracy (Breiman, 2001). It has the advantage of being more accurate than single decision trees for complex problems, reducing overfitting problems, and being more tolerant of missing values. However, it is less interpretable than single decision trees and can require higher computational cost both when training and using the model. A random forest regressor is trained here using 5-fold cross-validation, which allows us to avoid overfitting by first training and testing each algorithm with a set of hyperparameters using only the training set. It consists of the following three phases: (i) partitioning the training data into several subsets (5 subsets here), (ii) holding out a set at a time and training the model on the remaining set (4 sets here), and (iii) testing the model on the holdout set. The hyperparameters that provide the best predictions after this 5-fold cross-validation will be used for evaluation on the test set (step 4).

Comparative Evaluation of ML Algorithms

As stated in the previous section, the predictions of the best model on the test set are evaluated in this step. The most common metrics for evaluating ML models for regressions will be used here: Q1, MedAE, Q3, MAE, RMSE, MSLE, and R2.

-

Q1 defines the first quartile of the prediction error distribution (error values larger than 25% of all prediction errors).

-

MedAE represents the median error (error values larger than 50% of all prediction errors).

-

Q3 defines the third quartile of the prediction error distribution (error values larger than 75% of all prediction errors).

-

MAE measures the mean absolute error; for a set of \(\:\varvec{n}\) error terms \(\:\left\{{\varvec{e}}_{\varvec{i}},\:\varvec{i}=1,\dots\:,\varvec{n}\right\}\), the MAE is defined by the following:

-

RMSE: quantifies the root mean square error; for a set of \(\:\varvec{n}\) error terms \(\:\left\{{\varvec{e}}_{\varvec{i}},\:\varvec{i}=1,\dots\:,\varvec{n}\right\}\), the RMSE is defined by the following:

-

MSLE defines the mean squared logarithmic error; for a set of \(\:\varvec{n}\) prices \(\:\left\{{\varvec{y}}_{\varvec{i}},\:\varvec{i}=1,\dots\:,\varvec{n}\right\}\) and a set of \(\:\varvec{n}\) predicted price values \(\:\left\{{\widehat{\varvec{y}}}_{\varvec{i}},\:\varvec{i}=1,\dots\:,\varvec{n}\right\}\), the MSLE is defined by the following:

-

R2: computed for the regression model; it represents the proportion of the variance of the dependent variable (output) that is explained by the independent variables (inputs).

Post Hoc Local and Global Explainability Using Shapley Values

The evaluations of the previous step allow us to find the best price predictive model for each city. Even if this ML model has a good predictive capacity, it is commonly perceived as a black-box tool that slows down the real adoption by practitioners (Cajias, 2020; Cajias & Wins, 2021). Thus, this step allows us to overcome this black-box issue and provide explanations of single predictions (local explainability) or the global behavior of the model (global explainability). In recent years, several ML model explainability frameworks have been proposed (Adadi & Berrada, 2018; Arrieta et al., 2020). However, only a few of them, such as SHAP (using Shapley values), can provide both local and global explanations for any ML model (model agnostic). In the experiment in this paper, the selected random forest “black box” model for each city will be explained using SHAP. The process would be the same, even if another ML model was used for training data (e.g., a neural network). As almost all model agnostic explainable frameworks, SHAP is used after the model is built (post hoc method).

Shapley values were named in honor of Lloyd Shapley, who introduced the concept in 1951 and went on to win the Nobel Memorial Prize in Economic Sciences in 2012. Simply put, Shapley values are a method for showing the relative impact of each feature (or variable) we’re measuring on the eventual output of the machine learning model by comparing the relative effect of the inputs against the average. The technical explanation for the concept of SHAP is the computation Shapley values from coalitional game theory (Lundberg & Lee, 2017). Game theory is a theoretical framework for social interactions with competing actors. It is the study of optimum decision-making by independent and competing agents in a strategic context. A “game” is any scenario in which there are many decision-makers, each of whom seeks to maximize his or her outcomes. The optimal choice will be influenced by the decisions of others. The game determines the participants’ identities, preferences, and possible tactics as well as how these strategies influence the result. In the same context, cooperative game theory (a branch of game theory) posits that coalitions of players are the main units of decision-making and may compel cooperative conduct. As a result, cooperative games may be seen as a competition between a coalition of players rather than between individual players. Therefore, the goal is to develop a “formula” for measuring each player’s contribution to the game; this formula is the Shapley value. The scenario is as follows: a coalition of players collaborates to achieve a specific total benefit as a result of their collaboration. Given that certain players may make more contributions to the coalition than others and that various players may have varying levels of leverage or efficiency, what ultimate distribution of profit among players should result in any given game? In other words, we want to know how essential each participant is to the total collaboration and what kind of reward he or she can anticipate as a result. One potential solution to this issue is provided by the Shapley coefficient values. Therefore, within the machine learning context, the feature values of a data instance serve as coalition members. Shapley values will then tell us how to divide the “payout” among the features in a fair manner, which is the prediction. A player may be a single feature value, as in tabular data. A player may alternatively be defined as a set of feature values.

The Shapley value is defined as the marginal contribution of variable value to prediction across all conceivable “coalitions” or subsets of features. In other words, it is one approach to redistributing the overall profits among the players, given that they all cooperate. The amount that each “player” (or feature) receives after a game is defined as follows:

With:

-

\(\:x\): the observation input

-

\(\:\varphi\:i\left(x\right)\): Shapley value for feature i for input x for game/Model f.

-

\(\:F\): the set of all features

-

\(\:{f}_{S}\): the trained model on the subset of features S.

-

\(\:{f}_{S\cup\:\left\{i\right\}}\): the trained model on the subset of features S and {i}.

-

\(\:{x}_{S}\): the restricted input of x given the subset of features S.

-

\(\:{x}_{S\cup\:\left\{i\right\}}\): the restricted input of x given the subset of features S and {i}.

Shapley values have a number of desirable characteristics. Such values satisfy the following four properties: efficiency, symmetry, dummy, and linearity (Table 3). These aspects may be considered a definition of fair weight when taken together.

Results

Predictive Capacity of the ML Models per City

The Python scikit-learn library was used for training the random forest models. The cross-validation for each city was performed using four main hyperparameters:

-

The number of trees in the forest (n_estimators) with four values: 500, 1,000, 2,500, 3,000.

-

The maximum depth of the tree (max_depth) with four values: 8, 16, 32, 64.

-

The use of bootstrap sampling when building trees (bootstrap): True or False.

For all the cities, the best-performing models after cross-validations were obtained with 2,500 trees, a maximum depth of 32, and no bootstrap. The evaluation metrics of those models using the test set are presented in Table 4. Except for the R2 and MSLE metrics, all the other metrics represent values in euros. We can clearly observe that the predictive capacity of the model is quite different depending on the cities. The models are less accurate for cities such as Paris, Nice, Bordeaux, and Lyon (highest values of errors such as MedAE and lowest values of R2) and more accurate for cities such as Nantes, Lille, Toulouse, and Marseille (lowest values of errors such as MedAE and highest values of R2). However, beyond these predictive capacities, we also want to explain locally and globally these models using Shapley Values.

Local Explanations

The Python SHAP library was used to explain the previous machine-learning models for each city. As the computing Shapley values have exponential complexity based on the number of instances used for the computation, we randomly select a subset of 1,000 instances for computing Shapley values for each city in the experiment. It took an average of 24 h to compute these values for a city with a computer having 8 CPUs (Intel Xeon with 3.6 GHz each) and 131 Go of RAM. To ensure that these instances were representative of all the transactions used for testing each model, we verified that the sample and the whole transactions have approximately the same price summary statistics for each city (average and standard deviation).

Local explanations allow us to understand why the model has predicted a specific value given an instance. It is important to note that all local explanations provided by SHAP are relative to a baseline value: the average of the target variable on all the instances used for computing the Shapley values. In our case, this is the average price of the 1,000 instances for each city used.

Figure 8 shows an example of a local SHAP explanation of a predicted price (with the random forest model) of a real estate in Marseille. The two plots (force plot and bar plot) in this figure are complementary and provide a detailed view of the explanation. The force plot shows the baseline value (average real estate price of 184 900 € for Marseille in this case), the predicted value (263,125 € for the studied dwelling) and the marginal contribution of each feature (relative to the baseline) for reaching this prediction. Features that contribute to the increase in the estimated price are in red, and features that contribute to the decrease in the estimated price are in blue. The length of each band is proportional to the contribution of each feature. The bar plot shows a better view of those contributions with the exact Shapley value of each feature, ranked in descending order. For this example, compared to the baseline, the four most important features that contribute to the predicted price are the precise location (longitude with the value 5.365 contribute to + 37,562 €), the living area (with the value of 72 m2 contribute to + 27,658€), the land area (with the value of 0 meaning no land area, rather contribute to a decrease of -20,004 €), and the year of the sale (with the value 2020 contribute to + 19,824 €). Because Shapley Values follow the efficiency property, the sum of the baseline value with all the values of marginal contributions of each feature is equal to the predicted value. The symmetry property can also be easily illustrated here: the two features representing the type of sale (sale before completion or sale) have approximately the same contribution (approximately + 3,000€ for each) because they contribute equally to all possible coalitions; a transaction here can be a sale or a sale before completion. The same remark applies to the type of residence features (house or apartment).

Figure 9 shows another example of a local SHAP explanation for Marseille city, but with an estimated price (85,260€) lower than the baseline value (still 184,900 €). This lower estimation compared to the baseline is mostly explained by a living area of 41 m2 (contribution of -41,084 €), followed by the land area (no land area, contribution of -26,975 €), the precise location (latitude of 43.31, contribution of -12,919 €), etc. Only the global location (postal code with the value 13,004 €) and the residence type of this dwelling have a small positive contribution to the estimated price.

Thus, beyond the predictive performance of complex or black-box ML models (Hjort et al., 2022; Sing et al., 2022; Steurer et al., 2021; Valier, 2020), the two examples of explanations presented using Shapley values demonstrate how the predicted value can be explained in a manner that is understandable to users, whether they are experts in the field or not (e.g., appraisers, assessors, taxpayers, real estate agents, investors, homebuyers, homeowners)(Charlier, 2022; D’Amato et al., 2019; Gibilaro & Mattarocci, 2018; Lee, 2022; Lorenz et al., 2023; Pedersen et al., 2013). Consequently, these users have easy-to-understand elements that can help them gain more confidence in the predictions, or at least provide transparency about the generated prediction. Beyond enhancing confidence in the results, these elements could also help identify potential biases in the constructed model, thereby offering a means to improve the model itself in the future. Such explanations are constructed regardless of the complexity of the upstream ML model. In this case, a random forest model was used; however, the explanations would be presented in the same form, regardless of the underlying trained and adopted ML model (e.g., ANN, SVM, deep learning, etc.).

Global Explanations

Shapley values can be aggregated to provide global explanations of the ML model (Figs. 10 and 11). Figure 10 shows the global importance (mean of absolute Shapley values) of input variables for each city in descending order. This will allow us to analyze the main factors influencing the overall real estate market in each city, as well as to examine the similarities and differences between the cities. Although Fig. 10 presents the overall importance of the variables, the values shown are absolute values. They do not allow for an analysis of the direction of the relationship (positive or negative influence) of each variable on property prices. Figure 11 provides this additional information. This figure represents a scatter plot for each variable, where each point represents a feature value. The x position of a point represents the SHAP value (positive or negative influence on the price). The color of a point displays the original value of a feature: blue indicates a low feature value, and red indicates a high feature value. Thus, Figs. 10 and 11 allow us to analyze both the overall importance of the variables and the direction of the relationships between these variables and the prices.

We can observe many differences in the real estate markets in those cities. For instance, the living area is the most important variable in the prediction for almost all cities, except Bordeaux and Lille, where the land area is most important. We can also observe that for all cities, low values of living area and land area generally imply a decrease in price (Fig. 11), as one would naturally expect. More generally, cities where living areas are more important may indicate a high presence of densely populated zones. However, cities where land areas are more important may indicate a greater potential for development, as the presence of large plots of land can offer more opportunities for expansion, subdivision, or redevelopment. From another perspective, in urban markets, land is often at a premium due to high density and limited availability, making land area a significant factor. In suburban or rural markets, living area might take precedence due to the lower cost and higher availability of land. Moreover, some studies focus more specifically on the decomposition of property values into land values and building values (which among other factors depends on the living area of the buildings) (Pan et al., 2021).

Next, the location also plays a very important role in the price prediction for all cities at a low granularity level (latitude and longitude) or at a high granularity level (postal code). This is not surprising insofar as location has always been considered one of the most determining factors of property values (Basu & Thibodeau, 1998; Tchuente & Nyawa, 2022). However, there are some clear differences depending on cities. For example, the precise location (latitude and longitude) is more important than the global postal code for most of the cities (e.g., Lyon, Marseille, Nice, Bordeaux, Lille), except Toulouse, where the global postal code is more important. This thus indicates a greater fragmentation into geographical sub-markets (Kauko et al., 2002) for the city of Toulouse, where we can observe that some specific postal codes (with a high value) are less expensive than others.

The city of Toulouse (Fig. 10D) also stands out particularly for the strong influence of the number of lots (number of dwellings in a condominium) on real estate prices (second most important variable after the living area). More specifically, the fewer the number of lots in condominiums, the higher the prices tend to be (Fig. 11D). This trend is practically the same in other cities, except for Paris, where many condominiums with higher number of lots tend to have higher prices (Fig. 11A). The difference in the influence of the number of lots on prices between Paris and other cities (e.g., Toulouse) can be explained in several ways. For instance, larger condominiums with more lots can benefit from economies of scale, which might lower the cost per unit for maintenance and amenities. This can make individual units more attractive to buyers due to lower overall costs. In addition, larger condominiums often offer more and better amenities (e.g., swimming pools, gyms, security services) due to a higher number of contributing units. This can enhance the overall value of the property. However, condominiums with fewer lots may offer more exclusivity and privacy, potentially attracting a different segment of buyers who are willing to pay a premium for these features.

The year of the transaction is very important for some specific cities (in the top three of important variables): Paris, Lyon, Bordeaux, and Nantes (Fig. 10). This demonstrates that price fluctuation trends are more important for these cities. In Fig. 11, it can be clearly seen that the trend is upward for all these cities. This upward trend over the years is widespread, as it is also observed in all the other cities studied. In a global manner, while rising real estate prices can be beneficial for property owners and investors, they can have far-reaching negative consequences for affordability, social equity, financial stability, and broader economic health (Frayne et al., 2022).

The other variables such as the type of sales (apartment/house), the type of residence (sale/sale before completion), the quarter of the transaction, or the number of rooms are less important in the prediction (for all cities) compared to other variables. However, the relative importance of variables can sometimes be hidden due to correlations among input variables. This can pose interpretation challenges such as ambiguity (lack of clarity on which of the correlated features is actually driving the prediction) or redundancy (redundant information among the correlated features, making it difficult to distinguish their unique contributions) (Aas et al., 2021). Ideally, highly correlated variables should be managed (e.g., combined or removed) during the feature engineering stage before building ML models. However, since the assumption of independence between variables is rarely verified in real-world data, SHAP also allows for post-hoc analysis of potentially correlated variables to provide more clarity in the explanations. For instance, Fig. 12 shows a clustered version of Fig. 10, where most correlated input variables are clustered using hierarchical clustering. Additional insights can thus be derived from this figure. We can see, for example, that the number of rooms is highly correlated in the predictions with the living area. This can logically explain why the variable representing the number of rooms is less significant in the previous results, as its importance is strongly encapsulated in the variable representing the living area. For some specific cities such as Bordeaux and Lille (where the land area is the most important feature), we can observe that the land area is highly correlated with the number of lots. It can therefore be concluded that real estate prices in these two cities are strongly influenced by large condominiums spread over large land areas. Finally, the variables representing latitude, longitude, or postal code are generally clustered together, thus demonstrating the strong importance of location as identified previously.

Regarding the two discrete variables (type of sale and type of residence), we can observe specific patterns. For instance, sales before completion are more expensive than traditional sales in all cities. This can be attributed to several factors (e.g., Li & Chau, 2019):

-

Developer financing needs: developers often use pre-sales to secure financing for their projects or to transfer some of the market risks to buyers.

-

Buyer expectations: buyers may anticipate an increase in property value by the time the project is completed or may purchase for speculative reasons.

-

Customization opportunities: buyers often have the chance to customize design features or choose the best units in a development.

-

Market dynamics: scarcity of available units and extensive marketing campaigns can drive up prices.

-

Incentives: developers or governments may offer incentives to attract buyers.

-

Economic conditions: regulations that require a certain percentage of units to be sold before completion, or low interest rates.

For the type of residence, apartments are significantly more expensive than houses in some cities, e.g., Lille, Toulouse, and Bordeaux. This could be explained by the fact that these three cities are among those in France that attract the youngest people (e.g., students or young professionals), who generally prefer to live in apartments. Thus, this preference can create greater pressure on the apartment market (e.g., Tyvimaa & Kamruzzaman, 2019). For the other cities, the differences between apartments and houses markets are less pronounced.

To assess the robustness of the obtained results, we also calculated the relative importance of variables by city using the classical PFI (Permutation Feature Importance) method integrated with Random Forest (Breiman, 2001) (see Fig. 13). It can be observed that the order of importance of the variables and the specificities by city are almost the same compared to the Shapley values presented in Fig. 10. In the same vein, we also assessed the overall influence of each variable by city using the classical PDP (Partial Dependence Plots) method (Friedman, 2001). PDPs provide a complementary means of analyzing the relationship between each independent variable and the dependent variable through a trend curve. We also observed that the trends and directions of the relationships are the same as those seen with the Shapley values (Fig. 11), such as for the city of Toulouse (see Fig. 14). This therefore reinforces the results obtained here, although it should be noted that the Shapley values have the advantage of providing more precise and interpretable information (expressed in the units of the dependent variable) on the actual contributions of each independent variable.

Relative importance of variables per city using the Random Forest’s features importance method (Breiman, 2001)

Discussion

Contributions and Experimental Discussion

Our experiment allows us to evaluate local and global explanations of an automated valuation model based on a random forest ML model using Shapley values. The whole analytic process is presented in a transparent way by considering simulatability, decomposability, and algorithmic transparency (Chakraborty et al., 2017; Murdoch et al., 2019). The used dataset contains 7-year historical real estate transactions (1,505,033 transactions) from nine major French cities. Thus, we were able to compare the real estate markets for these nine cities using the models’ predictive capacities and an explainable artificial intelligence framework. For instance, the predictive capacities of models built in our experiment for each city show that they are less accurate for high-cost French cities (e.g., Paris, Nice, Bordeaux, Lyon) compared to medium-cost French cities (e.g., Nantes, Lille, Toulouse, and Marseille) (Tchuente & Nyawa, 2022). This result can be viewed as a difference in the spatial dependence and spatial heterogeneity between these medium-cost cities and high-cost cities (Anselin, 2013; Basu & Thibodeau, 1998; Bitter et al., 2007).

It is now well recognized that ML methods usually outperform other AVMs for the prediction or evaluation of property prices (Valier, 2020; Mayer et al., 2018; Pérez-Rave et al., 2019). However, the “black-box” nature of these models considerably hinders their use by professionals such as appraisers, assessors, mortgage lenders, fund managers, and even researchers. Explainable AI frameworks are designed to demystify the use and outputs of AI techniques (principally ML models) and can thus be highly valuable for the adoption of ML-based AVMs (Cajias, 2020; Cajias & Wins, 2021). However, very few studies have proposed and demonstrated the relevance of XAI for ML-based AVM models (e.g., Iban, 2022; Mayer et al., 2022; Rico-Juan & de La Paz, 2021). The main contribution of this paper is to fill this research gap using an experimental approach with both local and global explanations. We provide a particular use of XAI as a means to compare the behavior of multiple real estate submarkets in the same country (nine major cities in France).

Among current XAI frameworks, the use of Shapley values particularly allows both local and global explanations on any ML model with potentially several levels of granularity on input variables. We show in the experiment how one can provide an explanation of a single prediction, to understand why the model predicts the low or high price of a dwelling, and what are the exact contributions of each feature for that prediction. This approach can, for instance, provide more confidence for an appraiser or assessor who wants to clearly understand the estimated value of a property with any built ML model (Charlier, 2022; D’Amato et al., 2019; Gibilaro & Mattarocci, 2018; Lee, 2022; Lorenz et al., 2023; Pedersen et al., 2013).

Aggregating Shapley values allows us to also provide global explanations for each city by visualizing the most and least important features along with their global contributions. The findings can be summarized in the following points:

-

The most important variable is the living area, which is the most important variable for almost all cities, except Bordeaux and Lille, where land area is the most important. The living area is usually highly correlated with the number of rooms, while the land area is usually highly correlated with the number of lots (number of dwellings in a condominium).

-

Variables that are important only in the case of specific cities; for instance, Toulouse is the only city where the number of lots is among the most important variables and the global postal code is more important in the predictions than precise locations (latitude and longitude). More globally, for cities such as Toulouse, Lyon, Montpellier, Nantes, and Lille, we can observe that prices are more influenced by some specific postal codes. This denotes a strong geographical segmentation of the real estate market of those cities.

-

The perceptible price trends for some cities: for instance, the year of the transaction is among the most important variables for Paris, Lyon, Toulouse, and Nantes. This highlights a particular dynamic of the real estate market in those cities.

-

The differences between apartments and houses: Apartments are significantly more expensive than houses in some cities: Lille, Toulouse, and Bordeaux.

-

The differences between sales of old dwellings and sales before completion: the two most important variables (living area and land area) have a higher influence on old sales compared to sales before completion for all cities, even if there is a nuance in the case of Paris.

Most existing AVM studies focus on modeling real estate prices for an individual city; however, such models are often not interesting for mortgage lenders with assets spread out across a country (Guliker et al., 2022). In this case, for instance, using both local and global explanations of ML-based AVM would be of interest. Most existing AVM studies that seek to compare the differences in real estate markets among many geographic areas usually focus only on the differences in terms of the predictive capacity of ML models (e.g., Guliker et al., 2022; Rampini & Cecconi, 2021; Tchuente & Nyawa, 2021) or rely on hedonic models (e.g., Cordera et al., 2019; Sisman & Aydinoglu, 2022). Global explanations such as those provided in this study can be more relevant in this context, as they are first built from ML models (usually more accurate than hedonic models) with the advantage of providing explanations such as what could be inferred from hedonic models.

However, in the same way that there are metrics for measuring the accuracy of predictive capacities of ML models (e.g., MAE, RMSE, R2), it also seems fundamental to consider the evaluation of the quality of explanations provided by XAI frameworks like SHAP. Although this question remains relatively unexplored in the literature, some proposals are beginning to emerge in this regard, focusing on quantitative or qualitative evaluations of local and global explanations (Tchuente et al., 2024).

For local explanations, some alternative and equally popular XAI techniques such as LIME (Local Interpretable Model-agnostic Explanations) (Ribeiro et al., 2016), which rely on white-box models constructed around the instance to be explained, propose explanation evaluation metrics such as the “local fidelity”. The “local fidelity” assesses the trustworthiness of the explanations. It quantifies how well the simplified model approximates the behavior of the original model for the specific instance being explained. This helps users understand the reliability and accuracy of the interpretability provided by LIME. However, a technique like SHAP, which relies on Shapley values of features, does not inherently provide such local evaluation metrics. In general, it is not possible to directly compare local explanations between SHAP and LIME, for instance, since SHAP provides explanations based on an average prediction value, while LIME offers explanations in absolute terms. Although some recent approaches attempt to standardize the local explanations provided by various techniques (Amparore et al., 2021), they are not yet mature and are very specific to linear explanation models. Nowadays, the most common method for evaluating local explanations remains qualitative, through validation by domain experts who understand very well the subject and the features used in the models. Some few studies have already been proposed in this direction for explainable AVMs (Bauer et al., 2023; Holstein et al., 2023; Wan & Lindenthal, 2023).

For global explanations, quantitative evaluation commonly involves robustness tests, using alternative methods to verify that important variables (and their order) remain consistent regardless of the method used (e.g., global stability or global fidelity)(Agarwal et al., 2022). In our case, for example, we observed that the most important variables of the models for all cities remained stable when comparing the results from the PFI and PDP methods. From a qualitative evaluation perspective, it would involve obtaining explicit feedback from key stakeholders involved in the studied markets.

Research Implications

The literature on AVM using ML techniques is growing at a fast rate (Valier, 2020; Wang & Li, 2019). However, the adoption of ML-based AVMs by practitioners is hampered by the black-box nature of the most performant ML models. XAI methods can bring much clarity in this context while ensuring greater predictive capabilities. However, the associated literature for AVM is still very recent and scarce. Building an AVM is a highly experimental exercise that is specific to the application context (geographic location) through spatial dependence and spatial heterogeneity (Anselin, 2013). The most performant models and their characteristics also depend on each application context. In the same way, many studies need to be performed to explain those models for each application context.

For instance, a previous study (Tchuente & Nyawa, 2022) for the French real estate market was performed to compare the predictive capacity of several machine learning models. This paper particularly complements this approach by focusing on the interpretability of such models at the local and global levels for each city. The impacts of all variables on the model prediction can now be easily accessed. The approach used in this paper can also be compared to recent applications of XAI in AVM that rely on SHAP or even other XAI techniques such as sensitivity analysis (Dimopoulos & Bakas, 2019; Iban, 2022), visualizations with PDP or ALE (Chen et al., 2022a, b; Lee, 2022; Mayer et al., 2022), or combinations of ML models and hedonic regressions (Li et al., 2021; Mayer et al., 2022). In general, the particularity of this paper with respect to this literature can be expressed in two major points: (ii) we experiment on the specific French real estate market, which has never been studied in this context, and (ii) we train and explain the ML outputs of nine major cities to be able to clarify the specificities and differences of multiple submarkets, in line with submarket approaches in the AVM literature (Bourassa et al., 2003, 2007; Guliker et al., 2022; McCluskey & Borst, 2011).

Practical Implications

Real estate price fluctuations have direct impacts on the financial system due to banks’ central role as mortgage lenders and the frequent use of real estate as collateral (Koetter & Poghosyan, 2010). Using AI for automating real estate price estimations and XAI for explaining the estimations could improve the activities of real estate appraisers in this context (European Banking Authority, 2020). Without explanations, AI’s decisions can be easily contested, or end users may want to check if the decision is fair. For instance, end users have a legal “right to explanations” under the EU’s GDPR and the Equal Credit Opportunity Act in the US. The approach in this paper can also be relevant to property tax assessment (Lee, 2022). Tax assessment must be performed in an accurate and transparent manner to estimate the value of properties to calculate their property tax. The estimation must be accurate to avoid significant inequality in the taxpayers’ burden. However, it must also be explainable and transparent because most countries grant taxpayers the right to appeal the estimated price, and the tax administration is responsible for explaining estimations to taxpayers.

In general, the approach and the results of these studies can be of interest for all actors related to real estate markets (Cajias, 2020). For instance, real estate investors, markets analysts, fund managers, or real estate agencies could directly benefit from the global explanations provided for a better understanding of similarities and differences of the real estate markets in studied cities. They can also rely on local explanations to better understand the estimated price of a specific dwelling. This last advantage is also valid for a buyer, or a seller wishing to estimate and understand the price of a property he wishes to buy or sell, respectively.

Limitations and Future Research Directions

This study has some limitations that can be improved upon in future research. First, as with many other XAI frameworks, SHAP makes transparent the correlations picked up by predictive ML models. However, making correlations transparent does not make them causal (Chou et al., 2022). For instance, we found that the living area is the most important variable for price prediction for most cities, while land area is the most important variable for a few other cities. A correlation is highlighted here and provides good insight. However, explaining the causality of this correlation will need more domain expertise (Renigier-Biłozor et al., 2022) or will need further appropriate studies with other characteristics, such as sociocultural characteristics (Ibrahim et al., 2016).

Second, the results of this study are limited to the specific French context. However, beyond the cases studied in France, the aim of this paper is to transparently present the entire methodology and process for providing explanations for ML-based AVMs. The process presented here is therefore generic and can be replicated in various other cities and countries to provide context-specific results. In addition, explanations for ML predictions of other types of real estate, such as commercial real estate, should also be considered (e.g., Deppner et al., 2023; Francke & van de Minne, 2024).

Third, even if the predictive capacity of the built model and the provided explanations using Shapley values are valuable, the dataset used can be enriched to improve the precision of the model and analyze the impact of many other structural dwelling characteristics (e.g., presence of elevator, swimming pool) and their conditions (e.g., Oust et al., 2023), or environmental characteristics. For instance, the dataset can be linked to other publicly available datasets or social media data to include additional features such as neighborhood characteristics (e.g., accessibility to bus stations, subway stations, schools, hospitals), location characteristics (e.g., distance to the center of the city, distance to city employment center), and urban environmental characteristics (e.g., green view index, sky view index) (Chen et al., 2020; Li et al., 2021).

Fourth, rather than focusing on a single XAI framework, the dataset can be used to perform an experimental benchmark and compare the relevance of explanations provided by many other XAI approaches, such as surrogate techniques (Amparore et al., 2021; Ribeiro et al., 2016), visualization techniques (Chen et al., 2022a, b; Lee, 2022), or the mix of ML techniques with explainable hedonic techniques (Li et al., 2021; Mayer et al., 2022).

Fifth, it could also be relevant to study and explain the volatility issues of ML-based AVMs compared to hedonic models (Mayer et al., 2018).

Finally, beyond real estate AVM issues, some other interesting predictive analytic issues in real estate, such as the prediction of best locations for investments (Kumar et al., 2019), could be studied in light of XAI approaches.

Conclusion

In this paper, we proposed an approach with an experiment for explaining local and global predictions of a black-box ML-based AVM using Shapley values. Existing studies focusing on explaining ML models for AVM are still very sparse in the literature, whereas they are essential for the real adoption and use of ML models by real estate markets players (e.g., appraisers, real estate agents, real estate brokers, fund managers, investors). Our experiment relies on a real-life dataset containing 7-year historical real estate transactions for nine major French cities. We transparently present the different steps for extracting and preparing raw data before training, validating, and testing an ML random forest regressor. We show how one can easily explain and understand a single estimation of a property price using the built ML model and computed Shapley values. The global explanations with aggregated Shapley values allow the comparison of similarities and differences in the nine studied real estate markets (submarkets). The approach and the results are discussed with respect to the existing related studies in the literature and lead to several future research avenues that are highlighted.

Data availability

The dataset used in this study is publicly available at https://www.data.gouv.fr/fr/datasets/5c4ae55a634f4117716d5656/.

Notes

References

Aas, K., Jullum, M., & Løland, A. (2021). Explaining individual predictions when features are dependent: More accurate approximations to Shapley values. Artificial Intelligence, 298, 103502.

Adadi, A., & Berrada, M. (2018). Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). Ieee Access: Practical Innovations, Open Solutions, 6, 52138–52160.

Agarwal, C., Krishna, S., Saxena, E., Pawelczyk, M., Johnson, N., Puri, I., Zitnik, M., & Lakkaraju, H. (2022). Openxai: Towards a transparent evaluation of model explanations. Advances in Neural Information Processing Systems, 35, 15784–15799.

Amparore, E., Perotti, A., & Bajardi, P. (2021). To trust or not to trust an explanation: Using LEAF to evaluate local linear XAI methods. PeerJ Computer Science, 7, e479.

Anselin, L. (2013). Spatial econometrics: Methods and models (Vol. 4). Springer Science & Business Media.

Apley, D. W., & Zhu, J. (2020). Visualizing the effects of predictor variables in black box supervised learning models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 82(4), 1059–1086.

Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., García, S., Gil-López, S., Molina, D., & Benjamins, R. (2020). Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82–115.

Basu, S., & Thibodeau, T. G. (1998). Analysis of spatial autocorrelation in house prices. The Journal of Real Estate Finance and Economics, 17(1), 61–85.

Bauer, K., von Zahn, M., & Hinz, O. (2023). Expl (AI) ned: The impact of explainable artificial intelligence on users’ information processing. Information Systems Research, 34(4), 1582–1602.

Binoy, B. V., Naseer, M. A., Kumar, P. P. A., & Lazar, N. (2021). A bibliometric analysis of property valuation research. International Journal of Housing Markets and Analysis.

Bitter, C., Mulligan, G. F., & Dall’erba, S. (2007). Incorporating spatial variation in housing attribute prices: A comparison of geographically weighted regression and the spatial expansion method. Journal of Geographical Systems, 9(1), 7–27.

Bogin, A. N., & Shui, J. (2020). Appraisal Accuracy and Automated Valuation models in Rural Areas. The Journal of Real Estate Finance and Economics, 60(1), 40–52. https://doi.org/10.1007/s11146-019-09712-0

Bourassa, S. C., Hoesli, M., & Peng, V. S. (2003). Do housing submarkets really matter? Journal of Housing Economics, 12(1), 12–28.

Bourassa, S. C., Cantoni, E., & Hoesli, M. (2007). Spatial dependence, housing submarkets, and house price prediction. The Journal of Real Estate Finance and Economics, 35(2), 143–160.

Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32.

Bücker, M., Szepannek, G., Gosiewska, A., & Biecek, P. (2022). Transparency, auditability, and explainability of machine learning models in credit scoring. Journal of the Operational Research Society, 73(1), 70–90.