Abstract

Using contiguous relations we construct an infinite number of continued fraction expansions for ratios of generalized hypergeometric series \({}_3F_2(1)\). We establish exact error term estimates for their approximants and prove their rapid convergence. To do so, we develop a discrete version of Laplace’s method for hypergeometric series in addition to the use of ordinary (continuous) Laplace’s method for Euler’s hypergeometric integrals.


1 Introduction

In 1813, Gauss [14] introduced a general continued fraction that represents the ratio of two \({}_2F_1\) hypergeometric functions. It is of interest because it contains continued fraction expansions of several important elementary functions as well as some more transcendental ones. In 1901, Van Vleck [26] established a general result on its convergence. Gauss’s continued fraction is derived from a three-term contiguous relation for \({}_2F_1\). In 1956, using other contiguous relations, Frank [13] constructed several more (about eight) continued fractions of a similar sort and discussed their convergence. In 2005, Borwein et al. [7] obtained an explicit bound for the error term in certain special cases of Gauss’s continued fraction. In 2011, based on Gauss’s continued fraction and other means, Colman et al. [8] developed an efficient algorithm for the validated high-precision computation of certain \({}_2F_1\) functions.

The generalized hypergeometric series of unit argument \({}_3F_2(1)\) also admits three-term contiguous relations, among which the basic twelve relations were found by Wilson [27]; see also Bailey [5]. Thus it is feasible and interesting to discuss or utilize allied continued fractions for \({}_3F_2(1)\). For instance, Zhang [29] used contiguous relations for \({}_3F_2(1)\) to give new proofs of three of Ramanujan’s elegant continued fractions for products and quotients of gamma functions, namely, entries 34, 36, and 39 in Ramanujan’s second notebook [24, Chapter 12], or in its corrected version by Berndt, Lamphere, and Wilson [6]. In a similar vein, Denis and Singh [9] dealt with entries 25 and 33 of the same notebook.

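To give a further motivation for \({}_3F_2(1)\) continued fractions, we look at the special case in which one of the numerator parameters, say \(a_0\), is equal to one:

$$\begin{aligned} {}_3F_2\begin{pmatrix} 1, & a_1, & a_2 \\ & b_1, & b_2 \end{pmatrix} = \frac{\varGamma (b_1) \, \varGamma (b_2)}{\varGamma (a_1) \, \varGamma (a_2)} \sum _{k=0}^{\infty } \frac{\varGamma (a_1+k) \, \varGamma (a_2+k)}{\varGamma (b_1+k) \, \varGamma (b_2+k)}, \end{aligned}$$(1)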

where \(\varGamma ( a )\) is Euler’s gamma function. This series is well defined and non-terminating if

$$\begin{aligned} a_1, \; a_2, \; b_1, \; b_2 \not \in \mathbb {Z}_{\le 0}, \end{aligned}$$(2)

in which case the series is absolutely convergent if and only if

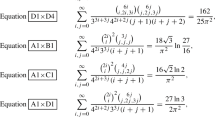

This class of infinite sums is interesting because it contains many special evaluations, some of which are presented in Table 1. It is therefore important to establish a general framework for the precise and efficient computation of the series (1). Naturally, our approach is based on three-term contiguous relations and the associated continued fractions. As an illustration of the more general theory developed in this article, we present a continued fraction expansion of the series (1) together with an exact error term estimate for its approximants, which exhibits exponentially fast convergence (see Theorem 1.1).
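As a baseline for comparison, the series (1) can be summed term by term. The sketch below (a hypothetical helper `series_one`, standard library only) builds each term from the previous one through the term ratio \((a_1+k)(a_2+k)/((b_1+k)(b_2+k))\) and checks the special case \(a_1 = a_2 = 1\), \(b_1 = b_2 = 2\), where (1) reduces to \(\sum _{k \ge 0} 1/(k+1)^2 = \pi ^2/6\). The truncation error decays only algebraically in the number of terms, which is what makes exponentially convergent expansions attractive.

```python
import math

def series_one(a1, a2, b1, b2, terms):
    """Partial sum of 3F2(1, a1, a2; b1, b2; 1) = sum_k (a1)_k (a2)_k / ((b1)_k (b2)_k).

    Each term is obtained from the previous one via the term ratio
    (a1 + k)(a2 + k) / ((b1 + k)(b2 + k)), so no large gamma values appear.
    """
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= (a1 + k) * (a2 + k) / ((b1 + k) * (b2 + k))
    return total

# Special case a1 = a2 = 1, b1 = b2 = 2: the k-th term is 1/(k+1)^2.
for n in (10**2, 10**3, 10**4, 10**5):
    err = math.pi ** 2 / 6 - series_one(1, 1, 2, 2, n)
    print(n, err)  # the error behaves roughly like 1/n
```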

To state Theorem 1.1, let \(\{q(n)\}_{n=0}^{\infty }\) and \(\{r(n)\}_{n=0}^{\infty }\) be infinite sequences defined by

where \(q_i(n)\) and \(r_i(n)\) are given by formulas in Table 2 and \(q_0(0) := 1\), \(r_0(0) := 1\), \(r_1(0) = -1\). The modulo 3 structure in (4) reflects a \(\mathbb {Z}_3\)-symmetry in the relevant contiguous relations (see Sect. 2.2). Under condition (2), all the q(n) and r(n) have non-vanishing denominators, while all the r(n) have non-vanishing numerators if and only if the parameters satisfy

Thus the (formal) infinite continued fraction

makes sense, provided that the conditions (2) and (5) are satisfied.

Theorem 1.1

If conditions (2), (3), and (5) are fulfilled then continued fraction (6) converges to series (1) exponentially fast and there exists an exact error term estimate for its approximants

as \(n \rightarrow +\infty \), where the constant \(C(a_1, a_2; b_1, b_2)\) is given by

Theorem 1.1 is merely a corollary of one specific instance of the infinitely many continued fractions with exact error estimates that we establish in Theorems 3.2 and 3.3 (see Example 9.1). To generate infinitely many continued fractions we need infinitely many contiguous relations, and hence a general theory, beyond the scope of Bailey [5] and Wilson [27], that governs all contiguous relations for \({}_3F_2(1)\). Our previous paper [10] develops such a theory, and the present article relies substantially on its main results.

2 Contiguous and recurrence relations

The hypergeometric series of unit argument \({}_3F_2(1)\) with all five parameters is defined by

With the notation \({\varvec{a}}= (a_0, a_1, a_2; a_3, a_4) = (a_0, a_1, a_2; b_1, b_2)\), this series is often denoted by \({}_3F_2({\varvec{a}})\). It is well defined and non-terminating as a formal sum if \({\varvec{a}}\) satisfies

in which case \({}_3F_2({\varvec{a}})\) is absolutely convergent if and only if

where \(s({\varvec{a}})\) is called the parametric excess for \({}_3F_2({\varvec{a}})\). We say that \({\varvec{a}}\) is balanced if \(s({\varvec{a}}) = 0\).

In order to discuss contiguous relations, however, we find it more convenient in many respects to replace \({}_3F_2({\varvec{a}})\) by the renormalized hypergeometric series defined by

This latter series is well defined and non-terminating as a formal sum, whenever

in which case series \({}_3f_2({\varvec{a}})\) is absolutely convergent if and only if (8) is satisfied. Note that

as long as both sides of Eq. (9) make sense.

2.1 Contiguous relations

It follows from [10, Theorem 1.1] that for any distinct integer vectors \(\varvec{k}\), \(\varvec{l}\in \mathbb {Z}^5\) different from \(\varvec{0}\) there exist unique rational functions \(u({\varvec{a}})\), \(v({\varvec{a}}) \in \mathbb {Q}({\varvec{a}})\) such that

An identity of the form (10) is called a contiguous relation for \({}_3f_2(1)\). An algorithm to calculate \(u({\varvec{a}})\) and \(v({\varvec{a}})\) explicitly is given in [10, Recipe 5.4]. According to it, one computes the connection matrix \(A({\varvec{a}}; \varvec{k})\) as in [10, Formula (30)] and defines \(r({\varvec{a}}; \varvec{k}) \in \mathbb {Q}({\varvec{a}})\) to be its (1, 2)-entry as in [10, Formula (33)]. One computes \(r({\varvec{a}}; \varvec{l})\) and \(r({\varvec{a}}; \varvec{l}-\varvec{k})\) in the same manner. If \(\varvec{k}\) and \(\varvec{l}\) are distinct, then \(r({\varvec{a}}; \varvec{l}-\varvec{k})\) is non-zero in \(\mathbb {Q}({\varvec{a}})\) and the coefficients in (10) are given by

as in [10, Proposition 5.3], where according to [10, Formula (32)] one has

In order to formulate our main results in Sect. 3.2, we need one more fact about the structure of \(r({\varvec{a}}; \varvec{k})\) which is not discussed in [10]. Given a vector \(\varvec{k}= (k_0,k_1,k_2; l_1, l_2) \in \mathbb {Z}^5\), let

where \(m_{\pm } := \max \{ \pm m, 0\}\). Note that \(\prod _{i=0}^2 \prod _{j=1}^2 (b_j-a_i; \, l_j-k_i) = \langle {\varvec{a}}; \varvec{k}\rangle _+/\langle {\varvec{a}}+ \varvec{k}; \varvec{k}\rangle _-\).
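The factorial-function identity just stated can be checked in exact rational arithmetic. The sketch below assumes that \((x; m) := \varGamma (x+m)/\varGamma (x)\) for every integer m (so that \((x; -m) = 1/(x-m; m)\)), takes \(\langle {\varvec{a}}; \varvec{k}\rangle _+\) in the product form spelled out in the proof of Lemma 2.1, and assumes that \(\langle {\varvec{a}}; \varvec{k}\rangle _-\) is the analogous product with exponents \((l_j-k_i)_-\). These readings of the definition, and the helper names `poch` and `bracket`, are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

def poch(x, m):
    """Factorial function (x; m) = Gamma(x + m) / Gamma(x) for an integer m."""
    result = Fraction(1)
    if m >= 0:
        for j in range(m):
            result *= x + j          # x (x+1) ... (x+m-1)
    else:
        for j in range(m, 0):
            result /= x + j          # 1 / ((x+m) (x+m+1) ... (x-1))
    return result

def bracket(a, k, sign):
    """Assumed <a; k>_{+/-} = prod_{i,j} (b_j - a_i; (l_j - k_i)_{+/-})."""
    out = Fraction(1)
    for i, j in product(range(3), range(2)):
        m = sign * (k[3 + j] - k[i])             # +(l_j - k_i) or -(l_j - k_i)
        out *= poch(a[3 + j] - a[i], max(m, 0))
    return out

# Generic rational parameters a = (a0, a1, a2; b1, b2), so no factor vanishes.
a = [Fraction(1, 3), Fraction(2, 7), Fraction(5, 11), Fraction(13, 5), Fraction(17, 4)]
k = [1, 0, 2, 2, 1]                               # balanced: 1 + 0 + 2 == 2 + 1
shifted = [x + y for x, y in zip(a, k)]           # a + k

lhs = Fraction(1)
for i, j in product(range(3), range(2)):
    lhs *= poch(a[3 + j] - a[i], k[3 + j] - k[i])
rhs = bracket(a, k, +1) / bracket(shifted, k, -1)
print(lhs == rhs)
```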

Lemma 2.1

For any non-zero vector \(\varvec{k}\in \mathbb {Z}_{\ge 0}^5\) with \(s(\varvec{k}) = 0\) there exists a non-zero polynomial \(\rho ({\varvec{a}}; \varvec{k}) \in \mathbb {Q}[{\varvec{a}}]\) such that the rational function \(r({\varvec{a}}; \varvec{k})\) can be written as

Proof

A non-zero polynomial \(p({\varvec{a}}) \in \mathbb {Q}[{\varvec{a}}]\) is said to be a denominator of a rational function \(r({\varvec{a}}) \in \mathbb {Q}({\varvec{a}})\) if the product \(p({\varvec{a}}) \, r({\varvec{a}})\) becomes a polynomial. A denominator of the least degree, which is unique up to constant multiples, is referred to as the reduced denominator. Any denominator is divisible by the reduced denominator in \(\mathbb {Q}[{\varvec{a}}]\). A denominator of a matrix with entries in \(\mathbb {Q}({\varvec{a}})\) is, by definition, a common denominator of those entries.

For \(i = 0, 1, 2\), \(\mu = 1, 2\), let \(\varvec{e}_{\mu }^i := (\delta _{0i}, \delta _{1i}, \delta _{2i}; \delta _{1\mu }, \delta _{2\mu })\), where \(\delta _{*\star }\) is Kronecker’s delta. A vector of this form is said to be basic. A product of contiguous matrices in [10, Table 2] yields

where \(\{i, j, k\} = \{0, 1, 2\}\). Any \(\varvec{k}= (k_0, k_1, k_2; l_1, l_2) \in \mathbb {Z}_{\ge 0}^5\) with \(s(\varvec{k}) = 0\) admits a decomposition \(\varvec{k}= \varvec{v}_l + \cdots + \varvec{v}_1\) with each \(\varvec{v}_i\) basic, so \(A({\varvec{a}}; \varvec{k})\) can be computed by the chain rule

Thus \(A({\varvec{a}}; \varvec{k})\) has a denominator each irreducible factor of which is of the form \(b_{\mu } - a_i + \hbox {an integer}\). A factor of this form is said to be of type \(b_{\mu }- a_i\) and the product of all factors of this type is referred to as the \(b_{\mu }-a_i\) component of the denominator.

Claim

For each \(i = 0,1,2\) and \(\mu = 1,2\) the matrix \(A({\varvec{a}}; \varvec{k})\) admits a denominator whose \(b_{\mu }- a_i\) component is exactly the factorial function \((b_{\mu }-a_i; (l_{\mu }-k_i)_+)\).

To show the claim we may assume \(i = 0\) and \(\mu = 1\) without loss of generality.

(1) If \(m_0 := k_0-l_1 \ge 0\), then take the decomposition \(\varvec{k}= l_1 \varvec{e}_1^0 + m_0 \varvec{e}_2^0 + k_1 \varvec{e}_2^1 + k_2 \varvec{e}_2^2\).

(2) If \(m_1 := l_1-k_0 > 0\), then take the decomposition \(\varvec{k}= k_{12} \varvec{e}_2^1 + k_{22} \varvec{e}_2^2 + k_0 \varvec{e}_1^0 + k_{11} \varvec{e}_1^1 + k_{21} \varvec{e}_1^2\), where \(k_{ij}\) are non-negative integers such that \(k_1 = k_{11}+k_{12}\), \(k_2 = k_{21}+k_{22}\), \(m_1 = k_{11}+k_{21}\) and \(l_2 = k_{12} + k_{22}\); such \(k_{ij}\) exist thanks to \(\varvec{k}\in \mathbb {Z}_{\ge 0}^5\) and \(s(\varvec{k}) = 0\).

We use the fact that \(A({\varvec{a}}; m \varvec{e}_{\mu }^i)\) has a denominator \((b_{\mu }-a_j; m)(b_{\mu }-a_k; m)\), where \(\{i, j, k\} = \{0, 1, 2\}\), which follows by induction on \(m \in \mathbb {Z}_{\ge 0}\). In case (1), the decomposition of \(\varvec{k}\) and the chain rule (14) imply that \(A({\varvec{a}}; \varvec{k})\) has a denominator without \(b_1-a_0\) component. In case (2), the decomposition of \(\varvec{k}\) leads to the product \(A({\varvec{a}}; \varvec{k}) = A_2({\varvec{a}}; \varvec{k}) A_1({\varvec{a}}; \varvec{k})\) with

Observe that \(A_1({\varvec{a}}; \varvec{k})\) has a denominator whose \(b_1-a_0\) component is \((b_1-a_0; k_{11}+k_{21}) = (b_1-a_0; m_1)\), while \(A_2({\varvec{a}}; \varvec{k})\) has a denominator without \(b_1-a_0\) component. So \(A({\varvec{a}}; \varvec{k})\) has a denominator whose \(b_1-a_0\) component is \((b_1-a_0; m_1)\). The claim is thus verified.
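The two decompositions used in cases (1) and (2) are explicit enough to compute. The sketch below encodes a basic vector \(\varvec{e}_{\mu }^i\) as a 5-tuple, returns the decomposition as a list of (multiplicity, i, μ) triples in the order written above, and recombines it. In case (2) it picks \(k_{11} = \min (k_1, m_1)\), \(k_{21} = m_1 - k_{11}\); this greedy choice is one admissible option and is not claimed to be the authors' choice. The function names are hypothetical.

```python
def basic(i, mu):
    """Basic vector e_mu^i: a 1 in slot a_i (i = 0, 1, 2) and a 1 in slot b_mu (mu = 1, 2)."""
    v = [0] * 5
    v[i] = 1
    v[2 + mu] = 1
    return v

def decompose(k):
    """Decompose a balanced k in Z_{>=0}^5 into multiples of basic vectors,
    following cases (1) and (2) of the Claim (i = 0, mu = 1)."""
    k0, k1, k2, l1, l2 = k
    assert min(k) >= 0 and k0 + k1 + k2 == l1 + l2
    if k0 - l1 >= 0:                              # case (1): m0 = k0 - l1 >= 0
        m0 = k0 - l1
        return [(l1, 0, 1), (m0, 0, 2), (k1, 1, 2), (k2, 2, 2)]
    m1 = l1 - k0                                  # case (2): m1 > 0
    k11 = min(k1, m1)                             # greedy, admissible choice of k_ij
    k21 = m1 - k11
    k12, k22 = k1 - k11, k2 - k21
    return [(k12, 1, 2), (k22, 2, 2), (k0, 0, 1), (k11, 1, 1), (k21, 2, 1)]

def recombine(terms):
    """Sum the multiples of basic vectors, checking that multiplicities are non-negative."""
    total = [0] * 5
    for mult, i, mu in terms:
        assert mult >= 0
        total = [t + mult * e for t, e in zip(total, basic(i, mu))]
    return total

for k in ([3, 1, 2, 2, 4], [1, 2, 3, 5, 1]):
    print(k, decompose(k), recombine(decompose(k)) == k)
```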

For each entry of \(A({\varvec{a}}; \varvec{k})\), the Claim implies that for \(i = 0,1,2\) and \(\mu = 1, 2\) the \(b_{\mu }-a_i\) component of its reduced denominator must divide the factorial \((b_{\mu }-a_i; \, (l_{\mu }-k_i)_+)\), so the reduced denominator itself must divide the product \(\langle {\varvec{a}}; \varvec{k}\rangle _+ = \prod _{i=0}^2 \prod _{\mu =1}^2 (b_{\mu }-a_i; \, (l_{\mu }-k_i)_+)\). Thus one can take \(\langle {\varvec{a}}; \varvec{k}\rangle _+\) as a denominator of \(A({\varvec{a}}; \varvec{k})\). The index of a rational function is the degree of its numerator minus that of its denominator. An induction on the length l of product (14) shows that the (i, j)-entry of \(A({\varvec{a}}; \varvec{k})\) has index at most \(i-j\). Another induction shows that the (1, 2)-entry is divisible by \(s({\varvec{a}})-1\). All these facts lead to expression (13) for \(r({\varvec{a}}; \varvec{k})\). \(\square \)

2.2 Symmetry and dichotomy

Let \(G = S_3 \times S_2\) be the group acting on \({\varvec{a}}= (a_0,a_1,a_2; b_1, b_2)\) by permuting \((a_0, a_1, a_2)\) and \((b_1, b_2)\) separately. It is obvious that \({}_3f_2({\varvec{a}})\) is invariant under this action, so that any element \(\tau \in G\) transforms the contiguous relation (10) into a second one

where \({}^{\tau }\!\varphi ({\varvec{a}}) := \varphi (\tau ^{-1}({\varvec{a}}))\) is the induced action of \(\tau \) on a function \(\varphi ({\varvec{a}})\).

Take an element \(\sigma \in G\) such that \(\sigma ^3\) is identity and set

Formula (15) with \(\tau = \sigma \) followed by a shift \({\varvec{a}}\mapsto {\varvec{a}}+ \varvec{k}\) yields

and similarly Formula (15) with \(\tau = \sigma ^2\) followed by another shift \({\varvec{a}}\mapsto {\varvec{a}}+ \varvec{l}\) gives

If \(\varvec{k}\) is non-zero, non-negative (\(\varvec{k}\in \mathbb {Z}_{\ge 0}^5\)), and balanced (\(s(\varvec{k}) = 0\)), then so are \(\varvec{l}-\varvec{k}= \sigma (\varvec{k})\) and \(\varvec{l}\) by definition (16); hence Lemma 2.1 applies not only to \(\varvec{k}\) but also to \(\sigma (\varvec{k})\) and \(\varvec{l}\). Substituting Formulas (12) and (13) for these vectors into Formula (11), we have

Definition 2.2

For any non-zero vector \(\varvec{k}\in \mathbb {Z}_{\ge 0}^5\) with \(s(\varvec{k}) = 0\), we consider two cases.

(1) The case is said to be of straight type when \(\sigma \) is the identity, \(\varvec{l}= 2 \varvec{k}\), and \(\varvec{p}= 3 \varvec{k}\).

(2) The case is said to be of twisted type when \(\sigma \) is a cyclic permutation of the upper parameters \((a_0, a_1, a_2)\) that acts trivially on the lower parameters \((b_1, b_2)\),

$$\begin{aligned} \varvec{k}= \begin{pmatrix} k_0, & k_1, & k_2 \\ & l_1, & l_2 \end{pmatrix}, \qquad \varvec{p}= \begin{pmatrix} p, & p, & p \\ & 3 l_1, & 3 l_2 \end{pmatrix}, \end{aligned}$$(20)

with \(p := k_0+k_1+k_2 = l_1 + l_2\), and if \(\sigma (a_0,a_1,a_2; b_1, b_2) = (a_{\lambda }, a_{\mu }, a_{\nu }; b_1, b_2)\), then

$$\begin{aligned} \varvec{l}= \begin{pmatrix} k_0 + k_{\lambda }, & k_1 + k_{\mu }, & k_2 + k_{\nu } \\ & 2 l_1, & 2 l_2 \end{pmatrix}, \end{aligned}$$(21)

where the index triple \((\lambda , \mu , \nu )\) is either (2, 0, 1) or (1, 2, 0).
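For concreteness, the vectors \(\varvec{l}\) and \(\varvec{p}\) of Definition 2.2 can be generated from a seed vector \(\varvec{k}\). The sketch assumes that definition (16) amounts to \(\varvec{l}= \varvec{k}+ \sigma (\varvec{k})\) and \(\varvec{p}= \varvec{l}+ \sigma ^2(\varvec{k})\); this assumption reproduces the straight case (\(\varvec{l}= 2\varvec{k}\), \(\varvec{p}= 3\varvec{k}\)) and Formulas (20) and (21). The triple `perm` \(= (\lambda , \mu , \nu )\) selects σ, and all function names are hypothetical.

```python
def apply_sigma(v, perm):
    """sigma(a0, a1, a2; b1, b2) = (a_lam, a_mu, a_nu; b1, b2) for perm = (lam, mu, nu)."""
    lam, mu, nu = perm
    return [v[lam], v[mu], v[nu], v[3], v[4]]

def vec_add(u, v):
    return [x + y for x, y in zip(u, v)]

def seed_to_shift(k, perm=(0, 1, 2)):
    """Return (l, p) from the seed k, ASSUMING l = k + sigma(k) and p = l + sigma^2(k).

    perm = (0, 1, 2) is the identity (straight type); (2, 0, 1) and (1, 2, 0)
    are the two cyclic permutations of the upper parameters (twisted type).
    """
    s1 = apply_sigma(k, perm)
    s2 = apply_sigma(s1, perm)
    l = vec_add(k, s1)
    p = vec_add(l, s2)
    return l, p

def excess(v):
    """Parametric excess s(v) = b1 + b2 - a0 - a1 - a2."""
    return v[3] + v[4] - v[0] - v[1] - v[2]

k = [1, 0, 2, 2, 1]                        # balanced seed vector
print(seed_to_shift(k))                    # straight type: l = 2k, p = 3k
print(seed_to_shift(k, (2, 0, 1)))         # twisted type: p = (3, 3, 3; 6, 3)
```

In the twisted case the upper entries of \(\varvec{p}\) all equal \(k_0+k_1+k_2\) because σ is a 3-cycle, so \(\varvec{k}+ \sigma (\varvec{k}) + \sigma ^2(\varvec{k})\) sums each upper entry over all three positions.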

This dichotomy arises only because we restrict our attention to symmetries \(\sigma \) with \(\sigma ^3 = 1\). Other symmetries in \(S_3 \times S_2\) would lead to other patterns of twists, and it is an interesting problem to treat some of these cases or to exhaust all possible ones.

2.3 Recurrence relations

In the situation of Definition 2.2, the shifts \({\varvec{a}}\mapsto {\varvec{a}}+ n \varvec{p}\), \(n \in \mathbb {Z}_{\ge 0}\), in the contiguous relation (10) and its companions (17) and (18) induce a system of recurrence relations

for \(n \in \mathbb {Z}_{\ge 0}\), where the sequences \(f_i(n)\), \(q_i(n)\) and \(r_i(n)\) are defined by

In view of the modulo 3 structure in (22), it is convenient to set

Then the system (22) is unified into a single three-term recurrence relation

If \(\varvec{k}\) is non-negative, \(\varvec{k}\in \mathbb {Z}_{\ge 0}^5\), then so are \(\varvec{l}\) and \(\varvec{p}\) by Formula (16); hence all f(n), \(n \in \mathbb {Z}_{\ge 0}\), are well defined under the single assumption (7). If moreover \(\varvec{k}\) is balanced, \(s(\varvec{k}) = 0\), then so are \(\varvec{l}\) and \(\varvec{p}\), again by Formula (16); hence all f(n), \(n \in \mathbb {Z}_{\ge 0}\), have the same parametric excess \(s({\varvec{a}})\), and they all converge under the single assumption (8). In what follows we refer to \(\varvec{k}\) as the seed vector and to \(\varvec{p}\) as the shift vector. The vector \(\varvec{k}\) is primary in the sense that \(\varvec{l}\) and \(\varvec{p}\) are derived from it by the rule (16), but \(\varvec{p}\) is equally important, because it is \(\varvec{p}\) rather than \(\varvec{k}\) that directly governs the asymptotic behavior of the sequence f(n).

2.4 Simultaneousness

In place of the series \({}_3f_2({\varvec{a}})\), we consider another series

Let \(\varvec{k}\), \(\varvec{l}\), and \(\varvec{p}\) be vectors as in (16) such that \(s(\varvec{k}) = 0\) and hence \(s(\varvec{l}) = s(\varvec{p}) = 0\). By assertion (3) of [10, Theorem 1.1], the contiguous relation (10) for \({}_3f_2({\varvec{a}})\) is also satisfied by \({}_3h_2({\varvec{a}}) := \exp ( \pi \sqrt{-1} \, s({\varvec{a}})) \, {}_3g_2({\varvec{a}})\). Since \(s({\varvec{a}}+ \varvec{k}) = s({\varvec{a}}+ \varvec{l}) = s({\varvec{a}})\) by \(s(\varvec{k}) = s(\varvec{l}) = 0\), the factor \(\exp ( \pi \sqrt{-1} \, s({\varvec{a}}))\) is common to all three terms of (10) and cancels, so (10) is satisfied by \({}_3g_2({\varvec{a}})\) itself. Let \(g_i(n)\) and g(n) be defined from \({}_3g_2({\varvec{a}})\) in the same manner as \(f_i(n)\) and f(n) are defined from \({}_3f_2({\varvec{a}})\) in Sect. 2.3, that is, let

Then the sequences f(n) in (23a) and g(n) in (26b) solve the same recurrence relation (24). With this observation we are now ready to consider continued fractions.

3 Continued fractions

First, we present a general principle for establishing an exact error estimate for the approximants of a continued fraction. Next, we announce the final goal of this article, Theorems 3.2 and 3.3, which will be reached by applying this principle after a rather lengthy asymptotic analysis.

3.1 A general error estimate

Let \(\{ q(n) \}_{n=0}^{\infty }\) and \(\{ r(n) \}_{n=1}^{\infty }\) be sequences of complex numbers such that r(n) is non-zero for every \(n \in \mathbb {N}:= \mathbb {Z}_{\ge 1}\). We consider a sequence of finite continued fractions

The convergence of (27) can be described in terms of the three-term recurrence relation

A non-trivial solution X(n) to Eq. (28) is said to be recessive if \(X(n)/Y(n) \rightarrow 0\) as \(n \rightarrow +\infty \) for any solution Y(n) not proportional to X(n). A recessive solution, if it exists, is unique up to non-zero constant multiples. Any non-recessive solution is said to be dominant.

Theorem 3.1

(Pincherle [23]) Sequence (27) is convergent if and only if the recurrence equation (28) has a recessive solution X(n), in which case (27) converges to the ratio X(0) / X(1).
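A classical illustration of Theorem 3.1, not taken from this article, uses Bessel functions of the first kind. The relation \(J_n(x) = \frac{2(n+1)}{x} J_{n+1}(x) - J_{n+2}(x)\) is a three-term recurrence whose solution \(J_n(x)\) is recessive. The sketch assumes that (28) has the form \(X(n) = q(n) X(n+1) + r(n+1) X(n+2)\) and that the approximants are \(q(0) + r(1)/(q(1) + \cdots + r(m)/q(m))\); the indexing of (27) and (28) in the article may differ. Under this assumption \(q(n) = 2(n+1)/x\), \(r(n) = -1\), and the approximants should converge to \(J_0(x)/J_1(x)\). The Bessel values come from the power series, so no special-function library is assumed.

```python
import math

def bessel_j(n, x, terms=40):
    """J_n(x) from its power series sum_k (-1)^k (x/2)^(2k+n) / (k! (k+n)!)."""
    return sum((-1) ** k * (x / 2) ** (2 * k + n)
               / (math.factorial(k) * math.factorial(k + n))
               for k in range(terms))

def approximant(q, r, m):
    """Finite continued fraction q(0) + r(1)/(q(1) + r(2)/(... + r(m)/q(m))),
    evaluated from the bottom up."""
    value = q(m)
    for j in range(m - 1, -1, -1):
        value = q(j) + r(j + 1) / value
    return value

x = 1.0

def q(n):
    return 2 * (n + 1) / x   # assumed form: X(n) = q(n) X(n+1) + r(n+1) X(n+2)

def r(n):
    return -1.0

target = bessel_j(0, x) / bessel_j(1, x)
for m in (2, 4, 8):
    print(m, abs(approximant(q, r, m) - target))
```

Evaluating the recurrence forward from \(J_0\) and \(J_1\) would instead be numerically unstable, since rounding errors excite the dominant solution \(Y_n\); the bottom-up evaluation of the continued fraction avoids this.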

We refer to Gil et al. [16], Jones and Thron [18], and Gautschi [15] for more accessible sources on Pincherle’s theorem. Let us make this theorem more quantitative. For any non-trivial solution x(n) to Eq. (28) and any positive integer \(m \in \mathbb {N}\) one has

Thus if x(n; m) is a non-trivial solution to (28) that vanishes at \(n = m+2\), then

One can express the solution x(n; m) in the form

where X(n) and Y(n) are recessive and dominant solutions to (28), respectively, so that \(R(m) \rightarrow 0\) as \(m \rightarrow +\infty \). Hence if X(0) is non-zero then so is x(0; m) for every \(m \gg 0\) and

where \(\omega (n) := X(n) \cdot Y(n+1) - X(n+1) \cdot Y(n)\) is the Casoratian of X(n) and Y(n), thus

In order to apply this general estimate to continued fractions for \({}_3f_2(1)\), we need a situation in which the sequences f(n) in (23a) and g(n) in (26b) are recessive and dominant solutions, respectively, of the recurrence relation (24). We give in Sect. 4 a sufficient condition for f(n) to be recessive, and we impose in Sect. 6 a further constraint that ensures the dominance of g(n). Under these conditions we derive asymptotic representations for f(n) and g(n) showing that they are indeed recessive and dominant, respectively. This asymptotic analysis serves not only to prove the qualitative assertion but also to obtain the precise asymptotic behavior of the ratio \(R(n) = f(n+2)/g(n+2)\). We also need to evaluate the initial value \(\omega (0)\) of the Casoratian of f(n) and g(n); this final task is carried out in Sect. 7.
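Why only \(\omega (0)\) needs separate evaluation can be seen from a one-line computation. Assuming (28) has the form \(X(n) = q(n) X(n+1) + r(n+1) X(n+2)\) (an assumption, since the display is not reproduced here), substituting this relation for both X and Y gives

$$\begin{aligned} \omega (n) &= \{q(n) X(n+1) + r(n+1) X(n+2)\} \, Y(n+1) - X(n+1) \, \{q(n) Y(n+1) + r(n+1) Y(n+2)\} \\ &= r(n+1) \, \{X(n+2) Y(n+1) - X(n+1) Y(n+2)\} = -r(n+1) \, \omega (n+1), \end{aligned}$$

so that \(\omega (n) = (-1)^n \, \omega (0) / \prod _{j=1}^{n} r(j)\) for every \(n \ge 1\): the Casoratian is determined by its initial value and the coefficients r(j).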

3.2 Main results on continued fractions

Let \(\{ q(n)\}_{n=0}^{\infty }\) and \(\{r(n)\}_{n=1}^{\infty }\) be sequences (23b) and (23c) derived from \(u({\varvec{a}})\) and \(v({\varvec{a}})\) as in Formula (19). Consider the continued fraction \(\mathbf {K}_{j=0}^{\infty } \, r(j)/q(j)\), where \(r(0) := 1\) by convention. It is said to be well defined if q(j) and r(j) take finite values with r(j) non-zero for every \(j \ge 0\).

Let \(\mathcal {S}(\mathbb {R})\) be the set of all real vectors \(\varvec{p}= (p_0, p_1, p_2; q_1, q_2) \in \mathbb {R}^5\) such that

Note that (30) in particular implies \(p_1, p_2 > 0\) and that \(\mathcal {S}(\mathbb {R})\) is a 4-dimensional polyhedral convex cone defined by a linear equation and a set of linear inequalities. It is the space to which the shift vector \(\varvec{p}\) in (16) should belong, or rather as an integer vector it should lie on

The following functions of \(\varvec{p}\in \mathcal {S}(\mathbb {R})\) play important roles in several places of this article:

where \(e_1 := p_0+p_1+p_2 = q_1+q_2\), \(e_2 := p_0 p_1 + p_1 p_2 + p_2 p_0 + q_1 q_2\), and \(e_3 := p_0 p_1 p_2\). We remark that \(\varDelta (\varvec{p})\) is the discriminant (up to a positive constant multiple) of the cubic equation

which plays an important role in Sect. 6.2. Moreover, for \(\varvec{k}= (k_0, k_1, k_2; l_1, l_2) \in \mathbb {Z}^5\) we put

We are now able to state the main results of this article; they are stated in terms of the seed vector \(\varvec{k}\), but a large part of their proofs will be given in terms of the shift vector \(\varvec{p}\). For continued fractions of straight type in Definition 2.2, we have the following theorem.

Theorem 3.2

(Straight Case) If \(\varvec{k}= (k_0, k_1, k_2; l_1, l_2) \in \mathcal {S}(\mathbb {Z})\) satisfies either

then \(|D(\varvec{k})| > 1\) and there exists an error estimate of continued fraction expansion

as \(n \rightarrow + \infty \), provided that \(\mathrm {Re}\, s({\varvec{a}})\) is positive, \({}_3f_2({\varvec{a}})\) is non-zero, and the continued fraction \(\mathbf {K}_{j=0}^{\infty } \, r(j)/q(j)\) is well defined, where \(D(\varvec{k})\) is defined in (32) with \(\varvec{p}\) replaced by \(\varvec{k}\), while

with \(\rho ({\varvec{a}}; \varvec{k}) \in \mathbb {Q}[{\varvec{a}}]\) being the polynomial in (13), explicitly computable from \(\varvec{k}\),

with \(s_2(\varvec{k}) := k_0 k_1 + k_1 k_2 + k_2 k_0 - l_1 l_2\) and \(\gamma ({\varvec{a}}; \varvec{k})\) defined by Formula (34).

A numerical inspection shows that about 43% of the vectors in \(\mathcal {S}(\mathbb {Z})\) satisfy condition (35) (see Remark 6.2). In the straight case with \(\varvec{k}\in \mathcal {S}(\mathbb {Z})\), Formulas (19) simplify to

We turn our attention to continued fractions of twisted type in Definition 2.2.

Theorem 3.3

(Twisted Case) If \(\varvec{k}= (k_0, k_1, k_2; l_1, l_2) \in \mathbb {Z}^5_{\ge 0}\) satisfies the condition

then there exists an error estimate of continued fraction expansion

as \(n \rightarrow +\infty \), provided that \(\mathrm {Re}\, s({\varvec{a}})\) is positive, \({}_3f_2({\varvec{a}})\) is non-zero, and the continued fraction \(\mathbf {K}_{j=0}^{\infty } \, r(j)/q(j)\) is well defined, where \(E(l_1, l_2)\) and \(c_{\mathrm {t}}({\varvec{a}}; \varvec{k})\) are given by

with \(\rho ({\varvec{a}}; \varvec{k}) \in \mathbb {Q}[{\varvec{a}}]\) being the polynomial in (13), explicitly computable from \(\varvec{k}\),

and \(\gamma ({\varvec{a}}; \varvec{k})\) being defined by Formula (34).

The proofs of Theorems 3.2 and 3.3 will be completed at the end of Sect. 7.

4 Continuous Laplace method

We shall find a class of directions \(\varvec{p}= (p_0, p_1, p_2; q_1, q_2) \in \mathbb {R}^5\) in which the sequence

behaves like \(n^{\alpha }\) as \(n \rightarrow +\infty \) for some \(\alpha \in \mathbb {R}\), where we assume \(s(\varvec{p}) = 0\) so that the parametric excesses for f(n) are independent of n, always equal to \(s({\varvec{a}})\). We remark that the current f(n) corresponds to the sequence \(f_0(n)\) in Sect. 2.3, not to f(n) in Formula (23a).

In terms of the series \({}_3f_2({\varvec{a}})\), Thomae’s transformation [1, Corollary 3.3.6] reads

To investigate the asymptotic behavior of f(n), we apply Thomae’s transformation to (43) to obtain

and then apply ordinary Laplace’s method to the Euler integral representation for (45c). Since this analysis is not limited to \({}_3f_2(1)\), we shall deal with more general \({}_{p+1}f_p(1)\) series.
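The classical form of Thomae’s relation for the unnormalized series, \({}_3F_2(a,b,c;d,e;1) = \frac{\varGamma (d)\varGamma (e)\varGamma (s)}{\varGamma (a)\varGamma (s+b)\varGamma (s+c)} \, {}_3F_2(d-a, e-a, s; s+b, s+c; 1)\) with \(s = d+e-a-b-c\), can be checked numerically. The sketch below is a check of this classical identity only, not of the renormalized version (44), whose normalization is not reproduced here. The parameters are chosen so that both sides have large parametric excess (the transformed series has excess a), which makes direct summation accurate. Function names are hypothetical.

```python
import math

def hyp3f2_one(a, b, c, d, e, terms=200_000):
    """Direct partial sum of 3F2(a, b, c; d, e; 1), built via the term ratio."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= (a + k) * (b + k) * (c + k) / ((d + k) * (e + k) * (k + 1))
    return total

def thomae_rhs(a, b, c, d, e):
    """Right-hand side of Thomae's relation, with s = d + e - a - b - c.
    All gamma arguments are assumed positive, so lgamma gives log Gamma."""
    s = d + e - a - b - c
    log_pref = (math.lgamma(d) + math.lgamma(e) + math.lgamma(s)
                - math.lgamma(a) - math.lgamma(s + b) - math.lgamma(s + c))
    return math.exp(log_pref) * hyp3f2_one(d - a, e - a, s, s + b, s + c)

params = (3.0, 0.3, 0.5, 5.0, 6.0)   # excess s = 7.2; transformed series has excess 3
print(hyp3f2_one(*params), thomae_rhs(*params))
```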

4.1 Euler integral representations

The renormalized generalized hypergeometric series \({}_{p+1}f_p(z)\) is defined by

where \({\varvec{a}}= (a_0, \dots , a_p; b_1, \dots , b_p) \in \mathbb {C}^{p+1} \times \mathbb {C}^p\) are parameters such that none of \(a_0, \dots , a_p\) is a negative integer or zero. Then (46) is absolutely convergent on the open unit disk \(|z| < 1\).

It is well known that if the parameters \({\varvec{a}}\) satisfy the condition

then the improper integral of Euler type

is absolutely convergent, and the series (46) admits an integral representation

where \(I = (0, \, 1)\) is the open unit interval, \(\varvec{t}= (t_1, \dots , t_p) \in I^p\) and \(d \varvec{t}= d t_1 \cdots d t_p\).

We are more interested in \({}_{p+1}f_p(1)\), that is, in the series (46) at unit argument \(z = 1\)

It is well known that series (49) is absolutely convergent if and only if

in which case we have \({}_{p+1}f_p( {\varvec{a}}; z ) \rightarrow {}_{p+1}f_p( {\varvec{a}})\) as \(z \rightarrow 1\) within the open unit disk \(|z| < 1\).

Lemma 4.1

If conditions (47) and (50) are satisfied, then the integral

is absolutely convergent and the series (49) admits an integral representation

Proof

If r denotes the distance of \(\varvec{t}\) from \(\varvec{1} := (1, \dots ,1)\) then one has

The absolute convergence of integral (51) off a neighborhood U of \(\varvec{1}\) is due to condition (47), while that on U follows from condition (50) and estimate (53). In view of

Formula (52) is derived from Formula (48) by Lebesgue’s dominated convergence theorem. \(\square \)

The series (49) is symmetric in \(a_0, a_1, \dots , a_p\), but the integral representation (52) is symmetric only in \(a_1, \dots , a_p\). This asymmetry is exploited in the next subsection.

4.2 Asymptotic analysis of Euler integrals

Observing that the 0-th numerator parameter of the sequence \(f_1(n)\) in (45c) is independent of n, we consider a sequence of the form

The associated Euler integrals have an almost product structure which allows a particularly simple treatment in applying Laplace’s approximation method.

Proposition 4.2

If \(\varvec{k}= (0, k_1, \dots , k_p; l_1, \dots , l_p) \in \mathbb {R}^{2 p+1}\) is a real vector such that

then \(E_p({\varvec{a}}+ n \, \varvec{k})\) admits an asymptotic representation as \(n \rightarrow +\infty \),

uniform for \({\varvec{a}}=(a_0, \dots , a_p; b_1, \dots , b_p)\) in any compact subset of \((\mathbb {C}\setminus \mathbb {Z}_{\le 0}) \times \mathbb {C}^p \times \mathbb {C}^p\), where

Proof

The proof is an application of the standard Laplace method to the integral (52), so only an outline of it is presented. Replacing \({\varvec{a}}\) with \({\varvec{a}}+ n \, \varvec{k}\) in definition (51), we have

where \(\Phi (\varvec{t})\), \(\phi (\varvec{t})\), and \(u(\varvec{t})\) are defined by

Observe that \(\phi (\varvec{t})\) attains a unique minimum at \(\varvec{t}_0 := (k_1/l_1, \dots , k_p/l_p)\) in the cube \(I^p\), since

The standard formula for Laplace’s approximation then leads to

where \(\mathrm {Hess}(\phi ; \varvec{t}_0)\) is the Hessian of \(\phi \) at \(\varvec{t}_0\) while \(\Phi _{\scriptstyle \mathrm {max}}\) and C are given by Formulas (56). \(\square \)
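A one-dimensional toy version of this computation (not the multivariate integral of the proof) makes the Laplace step concrete. For real \(0 < k < l\), the integral \(\int _0^1 t^{a-1+nk}(1-t)^{b-1+n(l-k)} \, dt\) equals the beta function \(B(a+nk, \, b+n(l-k))\), and each coordinate factor of the integrand in the proof has this shape. Here \(\phi (t) = -k \log t - (l-k) \log (1-t)\) is minimized at \(t_0 = k/l\) with \(\phi ''(t_0) = l^3/(k(l-k))\), and the leading Laplace term is \(e^{-n\phi (t_0)} u(t_0) \, (2\pi /(n \phi ''(t_0)))^{1/2}\) with \(u(t) = t^{a-1}(1-t)^{b-1}\). The sketch compares this with the exact value; the ratio should approach 1 at rate O(1/n).

```python
import math

def log_beta_exact(a, b, k, l, n):
    """log of int_0^1 t^(a-1+nk) (1-t)^(b-1+n(l-k)) dt = log B(a+nk, b+n(l-k))."""
    x, y = a + n * k, b + n * (l - k)
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)

def log_beta_laplace(a, b, k, l, n):
    """Leading-order Laplace approximation of the same integral (real a, b > 0, 0 < k < l)."""
    t0 = k / l                                            # unique minimizer of phi on (0, 1)
    phi = -k * math.log(t0) - (l - k) * math.log(1 - t0)
    phi2 = l ** 3 / (k * (l - k))                         # phi''(t0)
    log_u = (a - 1) * math.log(t0) + (b - 1) * math.log(1 - t0)
    return -n * phi + log_u + 0.5 * math.log(2 * math.pi / (n * phi2))

for n in (10, 100, 1000, 10000):
    ratio = math.exp(log_beta_exact(1.5, 2.5, 1.0, 3.0, n)
                     - log_beta_laplace(1.5, 2.5, 1.0, 3.0, n))
    print(n, ratio)   # tends to 1 with an O(1/n) deviation
```

Working with logarithms avoids the overflow and underflow that the factor \(e^{-n\phi (t_0)}\) would otherwise cause for large n.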

4.3 Recessive sequences

We return to the special case of \({}_3f_2(1)\) series and prove the following.

Theorem 4.3

If \(\varvec{p}= (p_0,p_1,p_2;q_1,q_2) \in \mathbb {R}^5\) is balanced, \(s(\varvec{p}) = 0\), and

then the sequence \(f(n) = {}_3f_2({\varvec{a}}+ n \varvec{p})\) in (43) admits an asymptotic representation

uniform in any compact subset of \(\mathrm {Re}\, s({\varvec{a}}) > 0\), where \(s_2(\varvec{p}) := p_0 p_1 + p_1p_2 + p_2 p_0 -q_1 q_2\).

Proof

By Formulas (45) and (52), the sequence (43) can be written \(f(n) = \psi _2(n) \, e_2(n)\) with

Conditions \(s(\varvec{p}) = 0\) and (57) imply that \(p_1\), \(p_2 > 0\) and \(q_j - p_i > 0\) for every \(j = 1\), 2 and \(i = 0\), j, so Stirling’s formula applied to (59a) yields an asymptotic representation

as \(n \rightarrow + \infty \), where A and B are given by

When \(p = 2\), \(k_1 = q_1-p_0\), \(k_2 = q_2-p_0\), \(l_1 = p_1\), \(l_2 = p_2\), condition (54) becomes (57), so Proposition 4.2 applies to the sequence (59b). In this situation, we have \(\Phi _{\scriptstyle \mathrm {max}}= A^{-1}\) in (56a) and \(C = B^{-1} \cdot \varGamma (s({\varvec{a}})) \cdot \{p_1p_2-(q_1-p_0)(q_2-p_0)\}^{-s({\varvec{\scriptstyle a}})}\) in (56b), where we have \(p_1 p_2 -(q_1-p_0)(q_2-p_0) = s_2(\varvec{p})\) from \(s(\varvec{p}) = 0\). Thus Formula (55) reads

Combining Formulas (60) and (61), we have the asymptotic representation (58). \(\square \)
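The identity \(p_1 p_2 - (q_1-p_0)(q_2-p_0) = s_2(\varvec{p})\) used in the proof is a short expansion. Using \(q_1 + q_2 = p_0 + p_1 + p_2\), which is the balancing condition \(s(\varvec{p}) = 0\), one finds

$$\begin{aligned} p_1 p_2 - (q_1-p_0)(q_2-p_0) &= p_1 p_2 - q_1 q_2 + p_0 (q_1+q_2) - p_0^2 \\ &= p_1 p_2 - q_1 q_2 + p_0 (p_0+p_1+p_2) - p_0^2 = p_0 p_1 + p_1 p_2 + p_2 p_0 - q_1 q_2 = s_2(\varvec{p}). \end{aligned}$$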

Thomae’s transformation (44) rewrites \({}_3f_2({\varvec{a}})\) so that the parametric excess \(s({\varvec{a}})\) appears as an upper parameter; the invariance \(s({\varvec{a}}) = s({\varvec{a}}+ n \varvec{p})\), \(n \in \mathbb {Z}_{\ge 0}\), for balanced \(\varvec{p}\) then facilitates the analysis leading to Theorem 4.3. Note that (44) is only one member of a group of 120 transformations for \({}_3F_2(1)\) (see [19, Theorem 3] for an impressive account). It would be interesting to know whether other transformations in this group could be used to cover some non-balanced cases.

Remark 4.4

We take this opportunity to review some existing results on the large-parameter asymptotics of \({}_2F_1\) and \({}_3F_2\). For the former, we refer to the classical book of Luke [20, Chap. 7] and to more recent articles by Temme [25], Paris [22], Farid Khwaja and Olde Daalhuis [11], Aoki and Tanda [2], and Iwasaki [17]; most of this work uses the traditional (continuous) version of Laplace’s method, while [2] employs exact WKB analysis. For the latter, there is little to cite; some results are mentioned in [20, Sect. 7.4], but most work has focused on the asymptotics of terminating series, such as the behavior as \(n \rightarrow \infty \) of the ‘extended Jacobi’ polynomials \({}_3F_2(-n, n+ \lambda , a_3; b_1, b_2; z)\), to which very different techniques apply, such as those based on generating series; see, e.g., Fields [12]. Temme [25] comments on the difficulty of obtaining large-parameter asymptotics of \({}_3F_2\) functions, even in the terminating cases. As an attempt to overcome this difficulty, we shall introduce a discrete version of Laplace’s method.

5 Discrete Laplace method

When a solution to a recurrence equation is given in terms of hypergeometric series, we want to know its asymptotic behavior and thereby check whether it is actually a dominant solution. To this end, regarding the series as a “discrete” integral, we develop a discrete Laplace method as an analogue of the usual (continuous) Laplace method for ordinary integrals. While Theorems 3.2 and 3.3 on continued fractions are the final goal of this article, the main result of this section, Theorem 5.2, together with the method leading to it, forms its methodological core.
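A standard toy example, which is not the method of this section but conveys its starting idea, treats a sum as a “discrete” integral. The summand of \(\sum _k \binom{n}{k}^2 = \binom{2n}{n}\) is sharply peaked at \(k = n/2\). With \(k = xn\), Stirling’s formula gives \(\binom{n}{k}^2 \approx e^{n\psi (x)}/(2\pi n x(1-x))\) with \(\psi (x) = -2x\log x - 2(1-x)\log (1-x)\). Replacing the sum by \(n \int dx\) and applying Laplace’s method at \(x_0 = 1/2\), where \(\psi ''(x_0) = -8\), yields \(4^n/\sqrt{\pi n}\). The sketch compares this heuristic with the exact sum; Theorem 5.2 deals with general gamma-product sums, including endpoint effects that this heuristic ignores.

```python
import math

def log_sum_exact(n):
    """log of sum_k C(n, k)^2, computed with exact integers (math.log accepts big ints)."""
    return math.log(sum(math.comb(n, k) ** 2 for k in range(n + 1)))

def log_sum_laplace(n):
    """Heuristic: sum ~ n * integral of exp(n psi(x)) / (2 pi n x (1 - x)) dx,
    evaluated by Laplace's method at the peak x0 = 1/2 of psi."""
    x0 = 0.5
    psi = -2 * x0 * math.log(x0) - 2 * (1 - x0) * math.log(1 - x0)   # = 2 log 2
    psi2 = -2 / (x0 * (1 - x0))                                       # psi''(x0) = -8
    log_amp = -math.log(2 * math.pi * n * x0 * (1 - x0))              # Stirling prefactor
    return math.log(n) + n * psi + log_amp + 0.5 * math.log(2 * math.pi / (n * -psi2))

for n in (10, 100, 1000):
    print(n, math.exp(log_sum_exact(n) - log_sum_laplace(n)))   # tends to 1
```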

5.1 Formulation

Let \(\varvec{\sigma }= (\sigma _i) \in \mathbb {R}^I\), \(\varvec{\lambda }= (\lambda _i) \in \mathbb {R}^I\), \(\varvec{\tau }= (\tau _j) \in \mathbb {R}^J\), \(\varvec{\mu }= (\mu _j) \in \mathbb {R}^J\) be real vectors indexed by finite sets I and J. Suppose that the pairs \((\varvec{\sigma }, \varvec{\tau })\) and \((\varvec{\lambda }, \varvec{\mu })\) are balanced in the sense that

Let \(\varvec{\alpha }(n) = (\alpha _i(n)) \in \mathbb {C}^I\) and \(\varvec{\beta }(n) = (\beta _j(n)) \in \mathbb {C}^J\) be sequences in \(n \in \mathbb {N}\) of complex numbers indexed by \(i \in I\) and \(j \in J\). Suppose that they are bounded, that is, for some constant \(R > 0\),

In practical applications, \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) will typically be independent of n; however, allowing such a moderate dependence upon n as in (63) is quite helpful in developing the theory.

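Given \(0 \le r_0 < r_1 \le + \infty \), we consider the sum of gamma products

\[ g(n) := \sum _{k = \lceil r_0 n \rceil }^{\lceil r_1 n \rceil - 1} G(k; n), \qquad G(k; n) := \frac{\prod _{i \in I} \varGamma (\sigma _i k + \lambda _i n + \alpha _i(n))}{\prod _{j \in J} \varGamma (\tau _j k + \mu _j n + \beta _j(n))}, \tag{64} \]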

where \(\lceil x \rceil := \min \{ m \in \mathbb {Z}\,:\, x \le m \}\) denotes the ceiling function. We remark that the reflection of the discrete variable \(k \mapsto \lceil r_0 n \rceil + \lceil r_1 n \rceil -1 -k\) in (64) induces an involution

where \(r(n) := (r_0+r_1) n +1 - \lceil r_0 n \rceil - \lceil r_1 n \rceil \) and the resulting data are indicated with a prime, while the reflection leaves \(r_0\) and \(r_1\) unchanged. Since \(-1 < r(n) \le 1\), if \(\alpha _i(n)\) and \(\beta _j(n)\) are bounded then so are \(\alpha _i'(n)\) and \(\beta _j'(n)\). This reflectional symmetry is helpful on several occasions. Moreover, for any integer \(s \le r_0\), the shift \(k \mapsto k + s n\) in (64) results in the translations

Taking \(s = \lfloor r_0 \rfloor \) we may assume \(0 \le r_0 < 1\), where \(\lfloor x \rfloor := \max \{ m \in \mathbb {Z}\,:\, m \le x \}\) is the floor function. This normalization is also sometimes convenient.
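The sum (64) and the reflection (65) are easy to test numerically. The following is a minimal sketch of our own, not part of the method: it assumes real data for which every gamma argument is positive, so that Python's `math.lgamma` can be used, and it evaluates g(n) in logarithmic form to avoid overflow. The data are a hypothetical example with upper factor \(\varGamma (k+n+1)\), lower factors \(\varGamma (k+1)^2\) and \(\varGamma (n-k+1)\), and \(r_0 = 0\), \(r_1 = 1\); they are balanced and admissible, and g(n) is then the central Delannoy number \(D(n) = \sum _{k=0}^{n} \binom{n}{k}\binom{n+k}{k}\) minus its last term \(\binom{2n}{n}\). The reflected data in `reflect` are derived directly from the substitution \(k \mapsto \lceil r_0 n \rceil + \lceil r_1 n \rceil - 1 - k\), namely \(\sigma ' = -\sigma \), \(\lambda ' = \lambda + (r_0 + r_1)\sigma \), \(\alpha '(n) = \alpha (n) - r(n)\sigma \), and similarly for \((\tau , \mu , \beta )\).

```python
import math

# Hypothetical example data: upper factor Gamma(k + n + 1),
# lower factors Gamma(k + 1)^2 and Gamma(-k + n + 1); r0 = 0, r1 = 1.
SIGMA, LAM, ALPHA = [1.0], [1.0], [1.0]
TAU, MU, BETA = [1.0, 1.0, -1.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]


def log_g(sigma, lam, alpha, tau, mu, beta, r0, r1, n):
    """log g(n), g(n) = sum_{ceil(r0 n) <= k < ceil(r1 n)} G(k; n).

    Real data only; every gamma argument is assumed to be positive.
    """
    logs = []
    for k in range(math.ceil(r0 * n), math.ceil(r1 * n)):
        up = sum(math.lgamma(s * k + l * n + a) for s, l, a in zip(sigma, lam, alpha))
        down = sum(math.lgamma(t * k + m * n + b) for t, m, b in zip(tau, mu, beta))
        logs.append(up - down)
    top = max(logs)  # log-sum-exp for numerical stability
    return top + math.log(sum(math.exp(v - top) for v in logs))


def reflect(slopes, consts, shifts, r0, r1, n):
    """Data after the substitution k -> ceil(r0 n) + ceil(r1 n) - 1 - k."""
    rn = (r0 + r1) * n + 1 - math.ceil(r0 * n) - math.ceil(r1 * n)
    return ([-s for s in slopes],
            [c + (r0 + r1) * s for s, c in zip(slopes, consts)],
            [a - rn * s for s, a in zip(slopes, shifts)])


def delannoy(n):
    """Central Delannoy number D(n) = sum_k binom(n, k) binom(n + k, k)."""
    return sum(math.comb(n, k) * math.comb(n + k, k) for k in range(n + 1))


n = 30
direct = log_g(SIGMA, LAM, ALPHA, TAU, MU, BETA, 0.0, 1.0, n)
reflected = log_g(*reflect(SIGMA, LAM, ALPHA, 0.0, 1.0, n),
                  *reflect(TAU, MU, BETA, 0.0, 1.0, n), 0.0, 1.0, n)
exact = math.log(delannoy(n) - math.comb(2 * n, n))
print(abs(direct - exact) < 1e-9, abs(reflected - exact) < 1e-9)  # prints: True True
```

The second comparison confirms that the reflected data produce the same sum, as the involution (65) should.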

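It is insightful to rewrite the gamma product G(k; n) as

\[ G(k; n) = H(k/n; n), \qquad H(x; n) := \frac{\prod _{i \in I} \varGamma (l_i(x)\, n + \alpha _i(n))}{\prod _{j \in J} \varGamma (m_j(x)\, n + \beta _j(n))}, \tag{67} \]

where \(l_i(x)\) and \(m_j(x)\) are affine functions defined by

\[ l_i(x) := \sigma _i x + \lambda _i, \qquad m_j(x) := \tau _j x + \mu _j. \]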

We remark that condition (62) is equivalent to the balancedness of affine functions

\[ \sum _{i \in I} l_i(x) = \sum _{j \in J} m_j(x) \qquad (x \in \mathbb {R}). \tag{68} \]

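The sum g(n) is said to be admissible if

\[ \sigma _i \ne 0, \quad l_i(r_0) \ge 0, \quad l_i(r_1) \ge 0 \qquad (i \in I), \tag{69a} \]
\[ \tau _j \ne 0, \quad m_j(r_0) \ge 0, \quad m_j(r_1) \ge 0 \qquad (j \in J), \tag{69b} \]

where, if \(r_1 = +\infty \), the conditions \(l_i( r_1 ) \ge 0\) and \(m_j( r_1 ) \ge 0\) are understood to mean \(\sigma _i > 0\) and \(\tau _j > 0\). Condition (69) says that \(l_i(x)\) and \(m_j(x)\) are non-constant affine functions taking non-negative values at both ends of the interval \([r_0, \, r_1]\), so they must be positive in its interior, that is,

\[ l_i(x) > 0, \quad m_j(x) > 0 \qquad (i \in I, \ j \in J, \ r_0 < x < r_1). \tag{70} \]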

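To work near the endpoints of the interval, we introduce four index subsets

\[ I_{\nu } := \{ i \in I \,:\, l_i(r_{\nu }) = 0 \}, \qquad J_{\nu } := \{ j \in J \,:\, m_j(r_{\nu }) = 0 \} \qquad (\nu = 0, 1), \tag{71} \]

with \(I_1 = J_1 := \emptyset \) when \(r_1 = +\infty \). Then there exists a constant \(c > 0\) such that

\[ l_i(x) \ge c \quad (i \in I \setminus (I_0 \cup I_1)), \qquad m_j(x) \ge c \quad (j \in J \setminus (J_0 \cup J_1)), \qquad r_0 \le x \le r_1. \tag{72} \]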

This “uniformly away from zero” property will be important in applying a version of Stirling’s formula which is given later in (90), especially when \(I_0 \cup I_1 \cup J_0 \cup J_1 = \emptyset \) (regular case).
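The index subsets and the constant c are mechanical to compute. The sketch below is an illustration under our own assumptions: a finite \(r_1\), balancedness checked up to a tolerance, and \(I_0\) (resp. \(I_1\)) taken to be the indices with \(l_i(r_0) = 0\) (resp. \(l_i(r_1) = 0\)), as the proof of Lemma 5.1 uses them, and similarly for \(J_0\) and \(J_1\). Since each \(l_i\) and \(m_j\) is affine, its minimum over \([r_0, \, r_1]\) is attained at an endpoint, which yields c directly.

```python
import math


def index_subsets(sigma, lam, tau, mu, r0, r1, tol=1e-12):
    """Check balancedness and admissibility (finite r1 only) and return
    (I0, I1, J0, J1): indices whose affine function vanishes at r0 resp. r1."""
    if abs(sum(sigma) - sum(tau)) > tol or abs(sum(lam) - sum(mu)) > tol:
        raise ValueError("data are not balanced")

    def classify(slopes, consts):
        at_r0, at_r1 = [], []
        for idx, (s, c) in enumerate(zip(slopes, consts)):
            left, right = s * r0 + c, s * r1 + c
            if s == 0 or left < -tol or right < -tol:
                raise ValueError(f"index {idx} violates admissibility")
            if abs(left) <= tol:
                at_r0.append(idx)
            if abs(right) <= tol:
                at_r1.append(idx)
        return at_r0, at_r1

    I0, I1 = classify(sigma, lam)
    J0, J1 = classify(tau, mu)
    return I0, I1, J0, J1


def positivity_constant(sigma, lam, tau, mu, r0, r1, tol=1e-12):
    """Best c with l_i, m_j >= c on [r0, r1] for indices outside the four
    subsets; an affine function attains its minimum at an endpoint."""
    mins = [min(s * r0 + c, s * r1 + c) for s, c in zip(sigma + tau, lam + mu)]
    positive = [v for v in mins if v > tol]
    return min(positive) if positive else math.inf


# Hypothetical example: upper Gamma(k + n + 1), lower Gamma(k + 1)^2 Gamma(n - k + 1).
SIGMA, LAM = [1.0], [1.0]
TAU, MU = [1.0, 1.0, -1.0], [0.0, 0.0, 1.0]
print(index_subsets(SIGMA, LAM, TAU, MU, 0.0, 1.0),
      positivity_constant(SIGMA, LAM, TAU, MU, 0.0, 1.0))  # ([], [], [0, 1], [2]) 1.0
```

For these data \(I_0 = I_1 = \emptyset \), so the sum is irregular only through its lower factors and condition (73) of Lemma 5.1 is vacuous.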

Lemma 5.1

We have \(\sigma _i > 0\) for \(i \in I_0\) while \(\sigma _i < 0\) for \(i \in I_1\), in particular \(I_0 \cap I_1 = \emptyset \). Similarly we have \(\tau _j > 0\) for \(j \in J_0\) while \(\tau _j < 0\) for \(j \in J_1\), in particular \(J_0 \cap J_1 = \emptyset \). If

then the sum g(n) is well defined, that is, every summand \(G(k; n) = H(k/n; n)\) in (64) takes a finite value for any \(n \ge (R+1)/c\) with R and c given in (63) and (72).

Proof

By condition (69a), if \(i \in I_0\) then \(0 \le l_i( r_1 ) = l_i( r_1 ) - l_i( r_0 ) = (r_1-r_0) \sigma _i\) with \(r_1-r_0 > 0\) and \(\sigma _i \ne 0\), which forces \(\sigma _i > 0\), while if \(i \in I_1\) then \(0 \le l_i( r_0 ) = l_i( r_0 ) - l_i( r_1 ) = (r_0-r_1) \sigma _i\) with \(r_0-r_1 < 0\) and \(\sigma _i \ne 0\), which forces \(\sigma _i < 0\). A similar argument using (69b) leads to the assertions for \(J_0\) and \(J_1\). The sum g(n) fails to make sense only when the argument of an upper gamma factor of a summand G(k; n) takes a negative integer value or zero, that is,

This cannot occur for \(i \in I \setminus (I_0 \cup I_1)\) and \(n \ge (R+1)/c\), since (63) and (72) imply that \(l_i(k/n) \, n + \mathrm {Re}\, \alpha _i(n) \ge c n -R \ge 1\) for any \(k \in \mathbb {Z}\) such that \(r_0 \le k/n \le r_1\). Observe that

where \(l := k - \lceil r_0 n \rceil \) ranges over \(0, 1, \dots , \lceil r_1 n \rceil - \lceil r_0 n \rceil -1\). This cannot be a negative integer or zero if condition (73) is satisfied for \(\nu = 0\). A similar argument can be made for \(\nu = 1\), since condition (73) for \(\nu =1\) is obtained from that for \(\nu =0\) by applying reflectional symmetry (65). Thus if (73) is satisfied then g(n) is well defined for \(n \ge (R+1)/c\). \(\square \)

To carry out the analysis, it is convenient to quantify condition (73) by writing

where \(\mathrm {dist}(z, Z)\) stands for the distance between a point z and a set Z in \(\mathbb {C}\); the cutoff at 1 merely ensures \(\delta _{\nu }(n) \le 1\), since the quantity matters only when \(0 < \delta _{\nu }(n) \ll 1\). Condition (74) or (73) is referred to as genericness of the data \(\varvec{\alpha }(n)\).
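Since (74) is not reproduced above, the following sketch assumes the natural reading \(\delta _0(n) = \min \{1, \min _{i \in I_0} \mathrm {dist}(\alpha _i^{(0)}(n), \mathbb {Z}_{\le 0})\}\), with \(\alpha _i^{(0)}(n) = \alpha _i(n) + \sigma _i (\lceil r_0 n \rceil - r_0 n)\) as identified in Sect. 5.5. The distance from z to the nonpositive integers is attained at the nonpositive integer closest to \(\mathrm {Re}\, z\), which makes it a one-line computation.

```python
import math


def dist_to_nonpositive_integers(z):
    """Distance from a real or complex z to {0, -1, -2, ...}.

    The nearest point is the nonpositive integer closest to Re z.
    """
    nearest = min(0, round(z.real))
    return abs(z - nearest)


def delta0(alpha, sigma, I0, r0, n):
    """Assumed form of delta_0(n): min(1, min_{i in I0} dist(alpha_i^(0)(n), Z_{<=0}))
    with alpha_i^(0)(n) = alpha_i(n) + sigma_i (ceil(r0 n) - r0 n)."""
    shift = math.ceil(r0 * n) - r0 * n
    dists = [dist_to_nonpositive_integers(alpha[i] + sigma[i] * shift) for i in I0]
    return min([1.0] + dists)


print(delta0([-3 + 1e-6], [1.0], [0], 0.0, 10))  # about 1e-6: close to a pole
print(delta0([-0.25], [1.0], [0], 0.25, 3))       # 0.0: a summand hits a pole
print(delta0([0.5 + 2j], [1.0], [0], 0.0, 10))    # 1.0: far from poles, cut off at 1
```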

5.2 Main results on discrete Laplace method

To state the main result of this section we introduce the following quantities:

where |I| and |J| are the cardinalities of I and J. We refer to \(\Phi (x)\) as the multiplicative phase function for the sum g(n) in (64).

Thanks to positivity (70) the function \(\Phi (x)\) is smooth and positive on \((r_0, \, r_1)\). If we employ the convention \(0^0 = 1\), which is natural in view of the limit \(x^x \rightarrow 1\) as \(x \rightarrow +0\), then \(\Phi (x)\) is continuous and positive at \(x = r_0\) as well as at \(x = r_1\) when \(r_1 < +\infty \), even if some of the \(l_i(x)\)’s or \(m_j(x)\)’s vanish at one or both endpoints. When \(r_1 = + \infty \), a short calculation using balancedness condition (62) shows that

where \(\varvec{\sigma }^{\varvec{{\scriptstyle \sigma }}} := \prod _{i \in I} \sigma _i^{\sigma _i}\), \(\varvec{\sigma }^{\varvec{{\scriptstyle \lambda }}} := \prod _{i \in I} \sigma _i^{\lambda _i}\), and so on; note that all of \(\sigma _i\) and \(\tau _j\) are positive due to the admissibility condition (69) for the \(r_1 = + \infty \) case. Thus it is natural to define

With this understanding we assume the continuity at infinity:

Then \(\Phi (x)\) is continuous on \([r_0, \, r_1]\) even when \(r_1 = + \infty \) and it makes sense to define

as a positive finite number. Therefore the function

is a real-valued, continuous function on \([r_0, \, r_1)\), smooth in \((r_0, \, r_1)\); if \(r_1 < + \infty \) then it is also continuous at \(x = r_1\); otherwise, \(\phi (x)\) is either continuous at \(x = + \infty \) or tends to \(+ \infty \) as \(x \rightarrow + \infty \). We refer to \(\phi (x)\) as the additive phase function for the sum g(n) in (64).
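For the hypothetical example introduced above, the phase functions can be evaluated as follows. We assume here that (75a) defines \(\Phi (x) = \prod _{i} l_i(x)^{l_i(x)} / \prod _{j} m_j(x)^{m_j(x)}\), the form consistent with the convention \(0^0 = 1\) and with the limiting behavior (76), and we take \(\phi (x) = -\log \Phi (x)\), in line with \(\Phi _{\scriptstyle \mathrm {max}} = e^{-\phi (x_0)}\) in Sect. 5.4.

```python
import math

# Hypothetical example data (upper Gamma(k + n + 1); lower Gamma(k + 1)^2 Gamma(n - k + 1)).
SIGMA, LAM = [1.0], [1.0]
TAU, MU = [1.0, 1.0, -1.0], [0.0, 0.0, 1.0]


def xlogx(y):
    """y log y with the convention 0 log 0 = 0, i.e. 0^0 = 1."""
    return 0.0 if y == 0 else y * math.log(y)


def log_Phi(x, sigma=SIGMA, lam=LAM, tau=TAU, mu=MU):
    """log of the assumed multiplicative phase function Phi(x)."""
    return (sum(xlogx(s * x + l) for s, l in zip(sigma, lam))
            - sum(xlogx(t * x + m) for t, m in zip(tau, mu)))


def phi(x, **data):
    """Additive phase function phi(x) = -log Phi(x)."""
    return -log_Phi(x, **data)


# Endpoint values stay finite and positive thanks to the 0^0 = 1 convention.
print(math.exp(log_Phi(0.0)), math.exp(log_Phi(1.0)))
print(math.exp(-phi(2 ** -0.5)), 3 + 2 * math.sqrt(2))
```

Here \(\Phi (0) = 1\), \(\Phi (1) = 4\), and the interior value at \(x = 1/\sqrt{2}\) agrees with \(3 + 2\sqrt{2}\), the exponential growth rate of the central Delannoy numbers.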

When \(r_1 = + \infty \) we must also address the (absolute) convergence of the infinite series (64). If the strict inequality \(\varvec{\sigma }^{\varvec{{\scriptstyle \sigma }}} < \varvec{\tau }^{\varvec{{\scriptstyle \tau }}}\) holds in (78), then it certainly converges. Otherwise, to guarantee its convergence, we suppose that there is a constant \(\sigma > 0\) such that for any \(n \in \mathbb {N}\),

where

Thanks to positivity (70), the function u(x; n) is also smooth and nowhere vanishing on \((r_0, \, r_1)\), but it may be singular at one or both ends of the interval when some of the \(l_i(x)\)’s or \(m_j(x)\)’s vanish there. To deal with this situation we say that g(n) is left-regular if \(I_0 \cup J_0 = \emptyset \); right-regular if \(I_1 \cup J_1 = \emptyset \); and regular if \(I_0 \cup J_0 \cup I_1 \cup J_1 = \emptyset \). If g(n) is left-regular resp. right-regular with \(r_1 < + \infty \), then u(x; n) is continuous at \(x = r_0\) resp. \(x = r_1\). When \(r_1 < + \infty \) the reflectional symmetry (65) interchanges left- and right-regularity. We remark that if \(r_1 = + \infty \) then right-regularity automatically follows from admissibility.

The maximum of \(\Phi (x)\), or equivalently the minimum of \(\phi (x)\), plays a leading role in our analysis, so it is important to examine the first and second derivatives of \(\phi (x)\). Differentiating (79) and taking balancedness condition (62) into account, we obtain

Denote by \(\mathrm {M{\scriptstyle ax}}\) the set of all maximum points of \(\Phi (x)\) on \([r_0, \, r_1]\). Suppose that \(\Phi (x)\) attains its maximum \(\Phi _{\scriptstyle \mathrm {max}}\) only within \((r_0, \, r_1)\), that is, \(r_0\), \(r_1 \not \in \mathrm {M{\scriptstyle ax}}\). Moreover suppose that every maximum point is non-degenerate to the effect that

which is referred to as properness of the maximum. By Formula (82a) any \(x \in \mathrm {M{\scriptstyle ax}}\) is a root of

which is called the characteristic equation for g(n), while \(\chi ( x )\) is referred to as the characteristic function for g(n). It is easy to see that Eq. (84) has only a finite number of roots, unless \(\chi (x) \equiv 0\), so \(\mathrm {M{\scriptstyle ax}}\) must be a finite set. Note that \(\phi '(x)\) and \(\chi (x)\) have the same sign.

Equation (84) can be used to determine the set \(\mathrm {M{\scriptstyle ax}}\) explicitly. In applications to hypergeometric series, one usually has \(\sigma _i\), \(\tau _j = \pm 1\) and \(\lambda _i\), \(\mu _j \in \mathbb {Z}\), so that (84) is equivalent to an algebraic equation with integer coefficients, and hence any \(x \in \mathrm {M{\scriptstyle ax}}\) must be an algebraic number. In this case with \(r_1 = +\infty \), since \(\varvec{\sigma }^{\varvec{{\scriptstyle \sigma }}} = \varvec{\tau }^{\varvec{{\scriptstyle \tau }}} = \varvec{\sigma }^{\varvec{{\scriptstyle \lambda }}} = \varvec{\tau }^{\varvec{{\scriptstyle \mu }}} = 1\), the continuity at infinity (78) is trivially satisfied with \(\Phi (+ \infty ) = 1\) in (77), so condition \(\mathrm {M{\scriptstyle ax}}\Subset (r_0, \, +\infty )\) in (83) implies \(\Phi _{\scriptstyle \mathrm {max}}> 1\).
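The characteristic equation can be solved numerically by tracking the sign of \(\phi '(x)\), which, as noted above, agrees with the sign of \(\chi (x)\). In the sketch below, \(\phi '\) and \(\phi ''\) are derived by differentiating \(-\log \Phi \) for the form of \(\Phi \) assumed in the previous sketch and using \(\sum _i \sigma _i = \sum _j \tau _j\); they should agree with (82), which is not reproduced here. For the hypothetical example, \(\phi '(x) = \log \{x^2/(1-x^2)\}\), so (84) reduces to the integer-coefficient equation \(2x^2 = 1\), in line with the remark above. The routine only lists interior local maxima of \(\Phi \); determining \(\mathrm {M{\scriptstyle ax}}\) in general would also require comparing the values of \(\Phi \) at these points and at the endpoints.

```python
import math

# Hypothetical example data (sigma, tau = +-1 and integer lambda, mu).
SIGMA, LAM = [1.0], [1.0]
TAU, MU = [1.0, 1.0, -1.0], [0.0, 0.0, 1.0]


def dphi(x):
    """phi'(x) = sum_j tau_j log m_j(x) - sum_i sigma_i log l_i(x)."""
    return (sum(t * math.log(t * x + m) for t, m in zip(TAU, MU))
            - sum(s * math.log(s * x + l) for s, l in zip(SIGMA, LAM)))


def d2phi(x):
    """phi''(x) = sum_j tau_j^2 / m_j(x) - sum_i sigma_i^2 / l_i(x)."""
    return (sum(t * t / (t * x + m) for t, m in zip(TAU, MU))
            - sum(s * s / (s * x + l) for s, l in zip(SIGMA, LAM)))


def interior_maxima(r0, r1, grid=1000, iters=100):
    """Local maxima of Phi in (r0, r1), finite r1: sign changes of phi'
    from - to + on a midpoint grid, refined by bisection."""
    xs = [r0 + (r1 - r0) * (k + 0.5) / grid for k in range(grid)]
    found = []
    for a, b in zip(xs, xs[1:]):
        if dphi(a) < 0 <= dphi(b):
            for _ in range(iters):
                mid = 0.5 * (a + b)
                a, b = (mid, b) if dphi(mid) < 0 else (a, mid)
            found.append(0.5 * (a + b))
    return found


maxima = interior_maxima(0.0, 1.0)
x0 = maxima[0]
print(maxima, 2 ** -0.5)             # single interior maximum at 1/sqrt(2)
print(d2phi(x0), 4 * math.sqrt(2))   # non-degenerate: phi''(x0) = 4 sqrt(2) > 0
```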

Theorem 5.2

If balancedness (62), boundedness (63), admissibility (69), genericness (74) and properness (83) are all satisfied, with continuity at infinity (78) and convergence (80) being added when \(r_1 = + \infty \), then the sum g(n) in (64) admits an asymptotic representation

where \(\gamma (n)\) is defined in Formula (81) while \(\Phi _{\scriptstyle \mathrm {max}}\) and C(n) are defined by

in terms of the notations in (75), whereas the error term \(\Omega (n)\) is estimated as

for some constants \(K > 0\), \(\lambda > 1\), and \(N \in \mathbb {N}\), where \(\delta _0(n)\) and \(\delta _1(n)\) are defined in (74). This estimate is valid uniformly for all \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) satisfying conditions (63) and (74) along with (80) when \(r_1 = +\infty \), in which case \(I_1 = \emptyset \) and so \(\delta _1(n) = 1\).
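Formulas (85) and (86) are not reproduced above. As a rough consistency check on the hypothetical example, the sketch below compares g(n) with the leading term that the standard Laplace heuristic gives when \(\sum _k H(k/n; n)\) is replaced by \(n \int H(x; n)\, dx\) and H by \(u(x; n)\, n^{\gamma (n)}\, \Phi (x)^n\), namely \(u(x_0; n) \sqrt{2\pi /\phi ''(x_0)}\; n^{\gamma (n)+1/2}\, \Phi _{\scriptstyle \mathrm {max}}^{\, n}\). The forms of u and \(\gamma \) used here (obtained from Stirling's formula, with \(\gamma = -1\) for these data) are our assumptions, not quotations of (75) and (81).

```python
import math

x0 = 2 ** -0.5                               # root of 2 x^2 = 1
log_Phi_max = math.log(3 + 2 * math.sqrt(2))
d2 = 4 * math.sqrt(2)                        # phi''(x0)


def log_u(x):
    """Assumed u(x) for the example: (2 pi)^{(|I|-|J|)/2} prod l^{alpha-1/2} / prod m^{beta-1/2}."""
    return (-math.log(2 * math.pi)
            + 0.5 * math.log(1 + x)
            - 0.5 * (math.log(x) + math.log(x) + math.log(1 - x)))


def log_leading(n, gamma=-1.0):
    """log of u(x0) sqrt(2 pi / phi''(x0)) n^(gamma + 1/2) Phi_max^n."""
    return (log_u(x0) + 0.5 * math.log(2 * math.pi / d2)
            + (gamma + 0.5) * math.log(n) + n * log_Phi_max)


def log_g_exact(n):
    """g(n) = sum_{0 <= k < n} binom(n, k) binom(n + k, k), computed exactly."""
    return math.log(sum(math.comb(n, k) * math.comb(n + k, k) for k in range(n)))


for n in (10, 50, 200):
    print(n, math.exp(log_g_exact(n) - log_leading(n)))
```

The printed ratios approach 1 at roughly the rate 1/n, which is what a relative error \(\Omega (n)\) of order \(n^{-1/2}\) or smaller would allow.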

Things are simpler when \(\mathrm {M{\scriptstyle ax}}\) consists of a single point \(x_0 \in (r_0, \, r_1)\), in which case the main idea for proving Theorem 5.2 is to divide the sum (64) into five components:

with each component being a partial sum of (64) defined by

where if \(r_1 = + \infty \) then the right-end component should be omitted. In order for the division (88) to make sense, the number \(\varepsilon \) must satisfy

The choice of \(\varepsilon \in (0, \, \varepsilon _0)\) will be specified in the course of the proof of Theorem 5.2.

We regard h(n) as the principal part of g(n) and the other four components as remainders. Thus estimating the top component h(n) is the central issue of this section, but the treatment of the two ends \(g_0(n)\) and \(g_1(n)\) is also far from trivial. For the sake of simplicity we deal only with the case \(|\mathrm {M{\scriptstyle ax}}| = 1\), but even when \(|\mathrm {M{\scriptstyle ax}}| \ge 2\) things are essentially the same and it will be clear how to modify the arguments. The reflectional symmetry (65) reduces the discussion at the right end or right side to the discussion of the left counterpart. The top and side sums are regular, so we begin by estimating regular sums in Sect. 5.3.
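The five-part division can be seen at work on the hypothetical example. In the sketch below (ours), the cut points are converted to summation indices with the ceiling function, as in the definitions of l and m in Sect. 5.4, and we assume that condition (89) on \(\varepsilon \) amounts to \(r_0 + \varepsilon < x_0 - \varepsilon \) and \(x_0 + \varepsilon < r_1 - \varepsilon \). Exact integer arithmetic makes the identity \(g(n) = g_0(n) + h_0(n) + h(n) + h_1(n) + g_1(n)\) checkable without rounding.

```python
import math


def G(k, n):
    """Hypothetical example summand binom(n, k) * binom(n + k, k)."""
    return math.comb(n, k) * math.comb(n + k, k)


def five_components(n, x0, eps, r0=0.0, r1=1.0):
    """Split g(n) = sum_{ceil(r0 n) <= k < ceil(r1 n)} G(k, n) at the cut
    points r0 + eps, x0 - eps, x0 + eps, r1 - eps (finite r1 only)."""
    # Assumed reading of condition (89): the five intervals are non-degenerate.
    if not (r0 + eps < x0 - eps and x0 + eps < r1 - eps):
        raise ValueError("eps is too large for the five-part division")
    cuts = [r0, r0 + eps, x0 - eps, x0 + eps, r1 - eps, r1]
    ks = [math.ceil(c * n) for c in cuts]
    names = ["g0", "h0", "h", "h1", "g1"]
    return {name: sum(G(k, n) for k in range(lo, hi))
            for name, lo, hi in zip(names, ks, ks[1:])}


x0, eps = 2 ** -0.5, 0.1
for n in (20, 100, 300):
    parts = five_components(n, x0, eps)
    total = sum(G(k, n) for k in range(n))
    assert sum(parts.values()) == total
    print(n, parts["h"] / total)   # share of the top component h(n)
```

As n grows, the share of the top component approaches 1, which is the behavior that the estimates of Sects. 5.3 to 5.5 quantify.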

In the present article, we are working in the balanced cases, that is, under condition (62); it is an interesting problem to extend our method so as to cover non-balanced cases.

In the sequel, we shall often utilize the following version of Stirling’s formula: For any positive number \(c > 0\) and any compact subset \(A \Subset \mathbb {C}\), we have

where Landau’s symbol O(1 / n) is uniform with respect to \((x, a) \in \mathbb {R}_{\ge c} \times A\).
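The display (90) is not reproduced above. The sketch below assumes the standard form \(\varGamma (xn + a) = \sqrt{2\pi }\,(xn)^{xn + a - 1/2} e^{-xn}\{1 + O(1/n)\}\) and checks numerically, for real a only (since `math.lgamma` is real-valued), that n times the logarithmic error stays bounded for x bounded away from zero.

```python
import math


def log_stirling(x, a, n):
    """log of sqrt(2 pi) (x n)^(x n + a - 1/2) exp(-x n)."""
    y = x * n
    return 0.5 * math.log(2 * math.pi) + (y + a - 0.5) * math.log(y) - y


def scaled_error(x, a, n):
    """n * (log Gamma(x n + a) - log of the Stirling approximation)."""
    return n * (math.lgamma(x * n + a) - log_stirling(x, a, n))


for n in (10, 100, 1000, 10000):
    print(n, scaled_error(0.5, 2.0, n))
```

The printed values approach \((a^2 - a + 1/6)/(2x) = 13/6\), the next term of the classical Stirling series for \(\log \varGamma \), so the O(1/n) rate cannot be improved in general.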

5.3 Regular sums and side components

In this subsection, we assume that g(n) in (64) satisfies balancedness (62), boundedness (63), and admissibility (69), along with continuity at infinity (78) and convergence (80) if \(r_1 = + \infty \), while properness (83) is not assumed and genericness (74) is irrelevant to regular sums.

Lemma 5.3

If the sum g(n) in (64) is regular then there exists an integer \(N_0 \in \mathbb {N}\) and a constant \(C_0 > 0\) such that H(x; n) in Formula (67) can be written

Proof

Since g(n) is regular, that is, \(I_0 \cup I_1 \cup J_0 \cup J_1 = \emptyset \), we have the uniform positivity (72) for all \(i \in I\), \(j \in J\), and \(x \in [r_0, \, r_1]\). This together with boundedness (63) allows us to apply Stirling’s formula (90) to all gamma factors \(\varGamma (l_i(x) n + \alpha _i(n))\) and \(\varGamma (m_j(x) n + \beta _j(n))\) of H(x; n) in (67). Taking definitions (75) and (81) into account, we apply Formula (90) to obtain

where the O(1 / n) term is uniform with respect to \(x \in [r_0, \, r_1]\) as well as to \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) satisfying condition (63). Then balancedness (68) yields the desired Formula (91). \(\square \)
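Lemma 5.3 can be checked on the hypothetical example. Since (75), (81) and (91) are not reproduced here, the sketch assumes the forms that come out of applying Stirling's formula to every gamma factor: \(\gamma (n) = \sum _i \alpha _i(n) - \sum _j \beta _j(n) - (|I| - |J|)/2\) and \(u(x; n) = (2\pi )^{(|I| - |J|)/2} \prod _i l_i(x)^{\alpha _i(n) - 1/2} / \prod _j m_j(x)^{\beta _j(n) - 1/2}\), the factors \(e^{-l_i(x) n}\) and \(e^{-m_j(x) n}\) cancelling by balancedness (68). The example is irregular at the endpoints, so x is kept in the interior.

```python
import math

# Hypothetical example data (r0 = 0, r1 = 1).
SIGMA, LAM, ALPHA = [1.0], [1.0], [1.0]
TAU, MU, BETA = [1.0, 1.0, -1.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]


def log_H(x, n):
    """log H(x; n) computed directly from the gamma factors."""
    up = sum(math.lgamma((s * x + l) * n + a) for s, l, a in zip(SIGMA, LAM, ALPHA))
    down = sum(math.lgamma((t * x + m) * n + b) for t, m, b in zip(TAU, MU, BETA))
    return up - down


def log_stirling_form(x, n):
    """log of u(x; n) n^gamma Phi(x)^n with the assumed u, gamma, Phi."""
    gamma = sum(ALPHA) - sum(BETA) - (len(SIGMA) - len(TAU)) / 2
    log_u = (len(SIGMA) - len(TAU)) / 2 * math.log(2 * math.pi)
    log_Phi = 0.0
    for s, l, a in zip(SIGMA, LAM, ALPHA):
        y = s * x + l
        log_u += (a - 0.5) * math.log(y)
        log_Phi += y * math.log(y)
    for t, m, b in zip(TAU, MU, BETA):
        y = t * x + m
        log_u -= (b - 0.5) * math.log(y)
        log_Phi -= y * math.log(y)
    return log_u + gamma * math.log(n) + n * log_Phi


for n in (100, 1000, 10000):
    print(n, n * (log_H(0.5, n) - log_stirling_form(0.5, n)))
```

For x = 1/2 the scaled error tends to \(-4/9\), the sum of the individual 1/n corrections of the four gamma factors, consistent with an O(1/n) remainder.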

Proposition 5.4

If the sum g(n) in Formula (64) is regular then it admits an estimate

for a constant \(C_1 > 0\) and an integer \(N_0 \in \mathbb {N}\) which is the same as in Lemma 5.3.

Proof

From representation (91), we have

First we consider the case \(r_1 < + \infty \). Since g(n) is regular and \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) are bounded by assumption (63), the definition (75b) implies that u(x; n) is bounded for \((x, n) \in [r_0, \, r_1] \times \mathbb {Z}_{\ge N_0}\). Replacing the constant \(C_0\) by a larger one if necessary, we have \(|H(x; n)| \le C_0 \cdot n^{\mathrm {Re}\, \gamma (n)} \cdot \Phi _{\scriptstyle \mathrm {max}}^{\, n}\) for any \(x \in [r_0, \, r_1]\) and \(n \ge N_0\). Thus by definitions (64) and (67), we have for any \(n \ge N_0\),

with the constant \(C_1 := C_0 (1+r_1-r_0)\).

We proceed to the case \(r_1 = + \infty \) and \(\varvec{\sigma }^{\varvec{{\scriptstyle \sigma }}} = \varvec{\tau }^{\varvec{{\scriptstyle \tau }}}\), in which condition (80) is imposed. Since g(n) is regular and \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) are bounded by (63), the definition (75b) implies that

uniformly for \(n \in \mathbb {N}\). By condition (80) there exists a constant \(C_2 > 0\) such that

In view of definitions (64) and (67), this estimate together with Formula (92) yields

for any integer \(n \ge N_0\), where \(C_1 := C_2 \, (1+C_0)\, (1+r_0)^{-\sigma }/\sigma \).

The proof ends with the case where \(r_1 = + \infty \) and \(\varvec{\sigma }^{\varvec{{\scriptstyle \sigma }}} < \varvec{\tau }^{\varvec{{\scriptstyle \tau }}}\). By Stirling’s formula (90) and asymptotic representation (76), there exists a constant \(C_3 > 0\) such that

with \(0< \rho := \varvec{\sigma }^{\varvec{{\scriptstyle \sigma }}}/\varvec{\tau }^{\varvec{{\scriptstyle \tau }}} < 1\). Take a number \(r_2 > r_0\) so large that \(d := C_3 \cdot \rho ^{r_2/2} < \Phi _{\scriptstyle \mathrm {max}}\) and let \(g(n) = g_1(n) + g_2(n)\) be the decomposition according to the division \([r_0, \,+\infty ) = [r_0, \, r_2) \cup [r_2, \, +\infty )\). Then an estimate for the \(r_1 < + \infty \) case applies to \(g_1(n)\), while one has \(|H(x; n)| \le C_3 \cdot d^n \cdot (x n)^c \cdot \rho ^{x n/2}\) for \(x \ge r_2\), where \(c := \sup _{n \ge N_0} \mathrm {Re}\, \gamma (n)\), and hence

for any \(n \ge N_0\). It is clear from \(0< d < \Phi _{\scriptstyle \mathrm {max}}\) that the proposition follows. \(\square \)

Proposition 5.4 can be used to estimate the side components \(h_0(n)\) and \(h_1(n)\) in (88).

Lemma 5.5

For any \(0< \varepsilon < \varepsilon _0\) there exist \(N_1^{\varepsilon } \in \mathbb {N}\) and \(C_1^{\varepsilon } > 0\) such that

where

\(\Phi _0^{\varepsilon } := \displaystyle \max _{r_0 + \varepsilon \le x \le x_0-\varepsilon } \Phi (x)\) and \(\Phi _1^{\varepsilon } := \displaystyle \max _{x_0 + \varepsilon \le x \le r_1-\varepsilon } \Phi (x)\).

Proof

We have only to apply Proposition 5.4 with \(r_0\) and \(r_1\) replaced by \(r_0 + \varepsilon \) and \(x_0-\varepsilon \) to deduce the estimate for \(h_0(n)\). In a similar manner, we apply the proposition this time with \(r_0\) and \(r_1\) replaced by \(x_0 + \varepsilon \) and \(r_1-\varepsilon \) to get the estimate for \(h_1(n)\). \(\square \)

5.4 Top component

We consider the top component h(n) in (88). Recall the setting in Sect. 5.2 that \(\mathrm {M{\scriptstyle ax}}= \{ x_0 \} \Subset (r_0, \, r_1)\), \(\Phi _{\scriptstyle \mathrm {max}}= \Phi ( x_0 ) = e^{- \phi ( x_0)}\), \(\phi '( x_0 ) = 0\), and \(\phi ''( x_0 ) > 0\). Since the sum h(n) is regular, Lemma 5.3 implies that H(x; n) can be written as in (91a) with estimate (91b) now being

The local study of H(x; n) near \(x = x_0 \) is best performed in terms of new variables

Taylor expansions around \(x = x_0 \) show that \(\phi ( x )\) and u(x; n) can be written

with \(a := \textstyle \frac{1}{2} \, \phi ''( x_0 ) > 0\) and some positive constants b, c, \(\varepsilon _1 > 0\). It is clear that a and b are independent of n. We can also take c and \(\varepsilon _1\) uniformly in n because \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) are bounded by assumption (63). If we put

then Formula (91a) yields \(H(x; n) = H_{\mathrm {a}}(x; n) + H_{\mathrm {b}}(x; n) + H_{\mathrm {c}}(x; n)\), which in turn gives

where \(l := \lceil (x_0-\varepsilon ) n \rceil \) and \(m := \lceil (x_0 + \varepsilon ) n \rceil \).

To estimate \(h_{\mathrm {a}}(n)\) we use some a priori estimates, which will be collected in Sect. 5.6.

Lemma 5.6

For any \(0< \varepsilon < \varepsilon _2 := \min \{ \varepsilon _0, \, \frac{\varepsilon _1}{2}, \, \frac{a}{4 b} \}\) and \(n \ge N_1(\varepsilon ) := \max \{ 2/\varepsilon , \, N_0(\varepsilon ) \}\),

where \(M_3(a, b)\) is defined in Lemma 5.17 and currently \(a := \frac{1}{2} \phi ''(x_0) > 0\).

Proof

Put \(\psi (z; a) := e^{- a \, z^2 + \delta (z)}\) with \(\delta (z) := - n \cdot \eta \left( n^{-1/2} z \right) \). Then (95a) and (94a) read

Consider the sequence \(\varDelta : \xi _k := (k - x_0 n)/\sqrt{n}\) \((k = l, \dots , m)\). From the definitions of l and m,

which together with \(0< \varepsilon < \varepsilon _2\) and \(n \ge N_1(\varepsilon )\) implies inclusion \([\xi _l, \, \xi _m] \subset [- \varepsilon _1 \sqrt{n}, \, \varepsilon _1 \sqrt{n}]\), so the estimate (97b) is available for all \(z \in [\xi _l, \, \xi _m]\). From Formula (97a), we have

where \(R(\psi ; \varDelta )\) is the left Riemann sum of \(\psi (z; a)\) for equipartition \(\varDelta \) of the interval \([\xi _l, \, \xi _m]\).

Let \(\varphi (z; a) := e^{-a z^2}\). Since \(|\xi _k - z| \le 1/\sqrt{n}\) for any \(z \in [\xi _k, \, \xi _{k+1}]\), Lemma 5.17 yields

where estimate (119) is used in the second inequality. By the partition of the Gaussian integral

and bounds \(\xi _l \le -\varepsilon \sqrt{n}/2\) and \(\xi _m \ge \varepsilon \sqrt{n}\), which follow from (98) and \(n \ge 2/\varepsilon \), we have

with \(M_5(a, b; \varepsilon ) := 2 M_3(a, b) + (5/a) \cdot (2 \varepsilon )^{-3/2}\), where the estimate

and \(\sqrt{n} \ge \sqrt{2/\varepsilon }\) are used in the second and third inequalities, respectively. Upon writing \(R(\psi ; \varDelta ) = \sqrt{\pi /a} \, \{ 1 + e_2(n) \cdot n^{-1/2} \}\), Formula (96) follows from (99) and (100). \(\square \)
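The Riemann-sum step in the proof of Lemma 5.6 can be illustrated with a concrete perturbation. The sketch takes \(a = \phi ''(x_0)/2 = 2\sqrt{2}\) and \(x_0 = 1/\sqrt{2}\) from the hypothetical example, replaces \(\delta (z)\) by the hypothetical choice \(-b z^3/\sqrt{n}\) (a cubic perturbation of the size a third-order Taylor remainder produces), and checks that \(e_2(n) = \sqrt{n}\,\{R(\psi ; \varDelta )/\sqrt{\pi /a} - 1\}\) stays bounded.

```python
import math


def riemann_sum(n, a, b, x0, eps):
    """Left Riemann sum R(psi; Delta) with xi_k = (k - x0 n)/sqrt(n),
    k = ceil((x0 - eps) n), ..., ceil((x0 + eps) n) - 1, step 1/sqrt(n),
    for the hypothetical psi(z) = exp(-a z^2 - b z^3 / sqrt(n))."""
    root = math.sqrt(n)
    lo, hi = math.ceil((x0 - eps) * n), math.ceil((x0 + eps) * n)
    total = 0.0
    for k in range(lo, hi):
        z = (k - x0 * n) / root
        total += math.exp(-a * z * z - b * z ** 3 / root)
    return total / root


a, b, x0, eps = 2 * math.sqrt(2), 1.0, 2 ** -0.5, 0.1   # eps <= a / (4 b)
for n in (100, 10_000, 1_000_000):
    e2 = math.sqrt(n) * (riemann_sum(n, a, b, x0, eps) / math.sqrt(math.pi / a) - 1)
    print(n, e2)
```

At n = 100 the truncation of the Gaussian tails near \(|z| = \varepsilon \sqrt{n} = 1\) dominates the error; for larger n the printed values of \(e_2(n)\) are much smaller.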

Lemma 5.7

For any \(0< \varepsilon < \varepsilon _2\) and \(n \ge N_1(\varepsilon )\), we have

where \(M_6(a) := 2 M_4(a/2) \sqrt{\pi /a} + 2/a\) with \(M_4(a)\) defined in Lemma 5.18 and \(a := \frac{1}{2} \phi ''(x_0) > 0\). For any \(0< \varepsilon < \varepsilon _0\) there exists a constant \(C_2(\varepsilon ) > 0\) such that

Proof

If \(| y | \le 2 \varepsilon _2\) \((\le \varepsilon _1)\) then estimate (94a) yields

which together with estimate (94b) and definition (95b) gives

where \(\varphi _1(z; a) := |z| \, e^{- a z^2}\). If \(0< \varepsilon < \varepsilon _2\) and \(n \ge N_1(\varepsilon )\) then \([\xi _l, \, \xi _m] \subset [- 2 \varepsilon _2 \sqrt{n}, \, 2 \varepsilon _2 \sqrt{n}]\) follows from (98), so estimate (103) is available for all \(z \in [\xi _l, \, \xi _m]\), yielding

where the Riemann sum \(R(\varphi _1; \varDelta ) := \sum _{k=l}^{m-1} \varphi _1(\xi _k; a/2) \cdot \frac{1}{\sqrt{n}}\) is estimated as

where the second inequality is obtained by Lemma 5.18. Now (101) follows readily.

Since \(\varvec{\alpha }(n)\) and \(\varvec{\beta }(n)\) are bounded by (63), there exists a constant \(C_1(\varepsilon ) > 0\) such that \(|u(x; n)| \le C_1(\varepsilon )\) for any \(n \in \mathbb {N}\) and \(x \in [x_0-\varepsilon , \, x_0+\varepsilon ]\), which together with (93) yields

Since \(m-l = \lceil (x_0 + \varepsilon ) n\rceil - \lceil (x_0 - \varepsilon ) n\rceil \le (2 \varepsilon + 1) n\), we have for any \(n \ge N_0(\varepsilon )\),

where \(C_2(\varepsilon ) := (2 \varepsilon + 1) \cdot C_0(\varepsilon ) \cdot C_1(\varepsilon )\). This establishes estimate (102). \(\square \)

Proposition 5.8

For any \(0< \varepsilon < \varepsilon _2\), there is a constant \(M(\varepsilon ) > 0\) such that

for any \(n \ge N_1(\varepsilon )\), where \(\varepsilon _2\) and \(N_1(\varepsilon )\) are given in Lemma 5.6.

Proof

This readily follows from \(h(n) = h_{\mathrm {a}}(n) + h_{\mathrm {b}}(n) + h_{\mathrm {c}}(n)\) and Lemmas 5.6 and 5.7. \(\square \)

5.5 Irregular sums and end components

We shall estimate the left-end component \(g_0(n)\) in (88). When \(r_1 < +\infty \) the estimate for the right-end component \(g_1(n)\) follows from the left-end counterpart by reflectional symmetry (65). If we make the translation \(k \mapsto l := k - \lceil r_0 n \rceil \) for convenience, we can write

where \({\bar{\alpha }}_i(n) :=\alpha _i(n) + \sigma _i (\lceil r_0 n \rceil - r_0 n) \) and \({\bar{\beta }}_j(n) := \beta _j(n) + \tau _j (\lceil r_0 n \rceil - r_0 n)\). Note that \({\bar{\alpha }}_i(n)\) here is the same as \(\alpha _i^{(0)}(n)\) in Formula (73). Put \(I_0^+ := I \setminus I_0\) and \(J_0^+ := J \setminus J_0\), where the index sets \(I_0\) and \(J_0\) are defined in (71). Then G(k; n) factors as

From Lemma 5.1 one has \(\sigma _i > 0\) for \(i \in I_0\) and \(\tau _j > 0\) for \(j \in J_0\), whereas condition (68) at \(x = r_0\) implies that \(( \bar{\lambda }_i )_{i \in I_0^+}\) and \(( \bar{\mu }_j )_{j \in J_0^+}\) are balanced to the effect that

However, since \((\sigma _i)_{i \in I_0}\) and \((\tau _j)_{j \in J_0}\), resp. \((\sigma _i)_{i \in I_0^+}\) and \((\tau _j)_{j \in J_0^+}\), may not be balanced, we put

where the relation \(\rho _0 = - \rho _0^+\) follows from the first condition of (62).

We begin by describing the asymptotic behavior of \(G_0(l; n)\) as \(l \rightarrow \infty \) in terms of

Note that \(\Phi _0\) is positive and \(u_0(n)\) is non-zero due to the positivity of \(\sigma _i\) and \(\tau _j\) for \(i \in I_0\) and \(j \in J_0\). We use the following general fact about the gamma function.

Lemma 5.9

For any \(z \in \mathbb {C}\setminus \mathbb {Z}_{\le 0}\) and any integer m such that \(m \ge 1+ |\mathrm {Re}\, z|\),

Proof

If \(\mathrm {Re}\, z > 0\) we have \(\mathrm {dist}(z, \, \mathbb {Z}_{\le 0}) = |z|\) and the result follows readily. If \(\mathrm {Re}\, z \le 0\) then \(\mathrm {Re}(z+m) \ge 1\) and so the sequence \(|z|, |z+1|, \cdots , |z+m-1|\) contains \(\mathrm {dist}(z, \mathbb {Z}_{\le 0})\) as its minimum with the next smallest \(\ge 1/2\) and all the rest \(\ge 1\), thus \(|(z; \, m)| = |z||z+1|\cdots |z+m-1| \ge \mathrm {dist}(z, \mathbb {Z}_{\le 0})/2\), hence \(|\varGamma (z)| = |\varGamma (z+m)/(z; \, m)| \le 2|\varGamma (z+m)|/\mathrm {dist}(z, \mathbb {Z}_{\le 0})\). \(\square \)
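The final inequality of this proof is easy to probe numerically for real z, where `math.gamma` applies. The sketch (ours) uses the smallest admissible integer \(m \ge 1 + |z|\) and a tiny relative tolerance to absorb rounding.

```python
import math


def lemma_5_9_holds(z, rel_tol=1e-12):
    """Check |Gamma(z)| <= 2 |Gamma(z + m)| / dist(z, Z_{<=0}) for real z
    that is not a nonpositive integer, with the smallest integer m >= 1 + |z|."""
    m = math.ceil(1 + abs(z))
    dist = abs(z - min(0, round(z)))
    return abs(math.gamma(z)) <= 2 * abs(math.gamma(z + m)) / dist * (1 + rel_tol)


samples = [-5.9, -3.5, -2.999, -0.01, 0.3, 2.7, 10.25]
print(all(lemma_5_9_holds(z) for z in samples))
```

The factor 2 cannot be dropped: for \(z = -1/2\) and \(m = 2\) both sides equal \(2\sqrt{\pi }\), since \(|(-1/2; \, 2)| = 1/4 = \mathrm {dist}(-1/2, \mathbb {Z}_{\le 0})/2\).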

Lemma 5.10

There exists a constant \(K_0 > 0\) such that

where for \(l = 0\) the convention \(l^{\rho _0 \, l} = 1\) is employed.

Proof

Note that \(G_0(l; n)\) in (105b) takes a finite value for every \(l \ge \kappa := \max _{i \in I_0} (R+1)/\sigma _i\) and \(n \in \mathbb {N}\), since (63) implies that \(\sigma _i l + \mathrm {Re}\, {\bar{\alpha }}_i(n) \ge \sigma _i l + \mathrm {Re}\, \alpha _i(n) \ge \sigma _i l - R \ge 1\) for \(i \in I_0\). By Stirling’s formula (90), we have \(G_0(l; n) = u_0(n) \cdot l^{\gamma _0(n) + \rho _0 \, l} \cdot \Phi _0^{l} \cdot \{1+ O(1/l) \}\) as \(l \rightarrow +\infty \) uniformly with respect to \(n \in \mathbb {N}\). Thus there exists a constant \(M_0 > 0\) such that

Take the smallest integer \(m \ge \max _{i \in I_0} \{ 1 + \sigma _i (\kappa +1) + R \}\) and put

Since \(1+ |\sigma _i l + \mathrm {Re}\, {\bar{\alpha }}_i(n)| \le 1 + \sigma _i l + |\mathrm {Re}\, {\bar{\alpha }}_i(n)| \le 1 + \sigma _i l + |\mathrm {Re}\, \alpha _i(n)| + \sigma _i \le m\) for any \(0 \le l < \kappa \), \(n \in \mathbb {N}\) and \(i \in I_0\), Lemma 5.9 implies that for any \(0 \le l < \kappa \) and \(n \in \mathbb {N}\),

In view of condition (74), there exists a constant \(M_0' > 0\) such that

Then by \(1 \le \delta _0(n)^{-1}\) the estimate (108) holds with the constant \(K_0 := \max \{ M_0, \, M_0' \}\).

\(\square \)

We proceed to investigate \(G_0^+(l; n)\) by writing

where \({\bar{l}}_i(x) := \sigma _i x + \bar{\lambda }_i\) and \({\bar{m}}_j(x) := \tau _j x + \bar{\mu }_j\), and then by putting

Note that \(\Phi _0^+(x)\) and \(u_0^+(x; n)\) are well-defined continuous functions on \([0, \, \varepsilon ]\) with \(\Phi _0^+(x)\) being positive while \(u_0^+(x; n)\) non-vanishing and uniformly bounded in \(n \in \mathbb {N}\).

Lemma 5.11

For any \(0< \varepsilon < \varepsilon _0\), there exist an integer \(N_0(\varepsilon ) \in \mathbb {N}\) and a constant \(K_0^+(\varepsilon ) > 0\) such that for any \(n \ge N_0(\varepsilon )\) and \(0 \le x \le \varepsilon \),

Proof

From the definitions of \(I_0^+\), \(J_0^+\), \({\bar{l}}_i(x)\), \({\bar{m}}_j(x)\), there is a constant \(c(\varepsilon ) > 0\) such that

By condition (63), \(H_0^+(x; n)\) takes a finite value for any \(x \in [0, \, \varepsilon ]\) and \(n \ge N_0(\varepsilon ) := (R+1)/c(\varepsilon )\) and Stirling’s formula (90) implies that \(H_0^+(x; n)\) admits an asymptotic formula

uniform in \(x \in [0, \, \varepsilon ]\), where one also uses the equality \(\sum _{i \in I_0^+} {\bar{l}}_i(x) - \sum _{j \in J_0^+} {\bar{m}}_j(x) = \rho _0^+ \, x\), which is due to balancedness condition (106) and definition (107). From this estimate and the boundedness of \(u_0^+(x; n)\) coming from (63), the assertion (110) follows readily. \(\square \)

Now we are able to give an estimate for the left-end component \(g_0(n)\) in terms of

Lemma 5.12

For any \(0< \varepsilon < \varepsilon _4 := \min \{ \varepsilon _0, \, \varepsilon _2, \, \varepsilon _3 \}\) with \(\varepsilon _0\), \(\varepsilon _2\), and \(\varepsilon _3\) defined in (89), Lemma 5.6 and (111a), respectively, there exist \(N_0(\varepsilon ) \in \mathbb {N}\) and \(K_0(\varepsilon ) > 0\) such that

Proof

It follows from Formulas (109), (110), and (107) that

Multiplying this estimate by inequality (108), we have from Formula (105a),

for any \(n \ge N_0(\varepsilon )\) and \(0 \le l := k - \lceil r_0 n \rceil < \varepsilon n\), where \(K(\varepsilon ) := K_0 \cdot K_0^+(\varepsilon )\) and

A bit of differential calculus shows the following:

(i) If either \(\rho _0 > 0\) or \(\rho _0 = 0\) with \(\Phi _0 \le 1\), then \(\varphi (t; n)\) is non-increasing in \(t \ge 0\) and hence \(\varphi (t; n) \le \varphi (0; n) = 1 = \Psi _0(\varepsilon )^n\) for any \(t \ge 0\).

(ii) If either \(\rho _0 < 0\) or \(\rho _0 = 0\) with \(\Phi _0 > 1\), then \(\frac{d}{d t} \varphi (t; n) \ge 0\) in \(0 \le t \le \varepsilon _3 \, n\) with equality only when \(t = \varepsilon _3 \, n\), so that \(\varphi (t; n) \le \varphi (\varepsilon n; n) = (\varepsilon ^{\rho _0} \Phi _0)^{\varepsilon n} = \Psi _0(\varepsilon )^n\) for any \(0 \le t \le \varepsilon n\) \((< \varepsilon _3 \, n)\), where \(\varepsilon _3\) and \(\Psi _0(\varepsilon )\) are defined in (111a) and (111b), respectively.

In either case \(0 < \varphi (t; n) \le \Psi _0(\varepsilon )^n\) for any \(0 \le t < \varepsilon n\) and thus (112) and (111c) lead to

for any \(n \ge N_0(\varepsilon )\) and \(0 \le l := k - \lceil r_0 n \rceil < \varepsilon n\). Since

summing up (113) over the integers \(0 \le l \le \lceil (r_0+\varepsilon ) n \rceil - \lceil r_0 n \rceil -1\) \((< \varepsilon n)\) yields

Since \(\gamma _0(n)\) is bounded by condition (63), we can take a constant \(K_0(\varepsilon ) \ge K(\varepsilon ) \cdot (2 + \varepsilon )^{|\mathrm {Re}\, \gamma _0(n)|+1}\) to establish the lemma. \(\square \)

Proposition 5.13

For any \(d > \Phi (r_0)\), there exists a positive constant \(\varepsilon _5 \le \varepsilon _4\) such that

for some \(M_0(d, \varepsilon ) > 0\) and \(N_0(\varepsilon ) \in \mathbb {N}\) independent of d, where \(\Phi (x)\) is defined in (75a) and \(\varepsilon _4\) is given in Lemma 5.12. When \(r_1 < + \infty \), a similar statement can be made for the right-end component \(g_1(n)\) in (88); for any \(d > \Phi (r_1)\) there exists a sufficiently small \(\varepsilon _6 > 0\) such that

Proof

We show the assertion for the left-end component \(g_0(n)\) only as the right-end counterpart follows by reflectional symmetry (65). Observe that \(\Psi _0(\varepsilon ) \rightarrow 1\), \(\Psi _0^+(\varepsilon ) \rightarrow \Phi (r_0)\), and so \(\varDelta _0(\varepsilon ) \rightarrow \Phi (r_0)\) as \(\varepsilon \rightarrow +0\). Thus given \(d > \Phi (r_0)\) there is a constant \(0< \varepsilon _5 < \varepsilon _4\) such that \(d > \varDelta _0(\varepsilon )\) for any \(0 < \varepsilon \le \varepsilon _5\). Then Lemma 5.12 enables us to take a constant \(M_0(d, \varepsilon )\) as in (114). \(\square \)

Proof of Theorem 5.2

As mentioned at the end of Sect. 5.2, only the singleton case \(\mathrm {M{\scriptstyle ax}}= \{ x_0 \}\) is treated for the sake of simplicity. We can take a number d so that \(\max \{\Phi (r_0), \, \Phi (r_1) \}< d < \Phi _{\scriptstyle \mathrm {max}}\), since \(\Phi (x)\) attains its maximum only at the interior point \(x_0 \in (r_0, \, r_1)\). For this d take the numbers \(\varepsilon _5\) and \(\varepsilon _6\) as in Proposition 5.13 and put \(\varepsilon := \min \{ \varepsilon _5, \, \varepsilon _6 \}\). For this \(\varepsilon \) consider the numbers \(\Phi _0^{\varepsilon }\) and \(\Phi _1^{\varepsilon }\) in Lemma 5.5, both of which are strictly smaller than \(\Phi _{\scriptstyle \mathrm {max}}\). Take a number \(d_0\) so that \(\max \{ d, \, \Phi _0^{\varepsilon }, \, \Phi _1^{\varepsilon } \}< d_0 < \Phi _{\scriptstyle \mathrm {max}}\) and put \(\lambda := \Phi _{\scriptstyle \mathrm {max}}/d_0 > 1\). Then the estimates in Propositions 5.8 and 5.13 and Lemma 5.5 are combined through Eq. (88) to yield

where C(n) is defined in (86) and \(\Omega (n)\) admits the estimate (87). \(\square \)

Even without assuming properness (83) we have the following convenient proposition.

Proposition 5.14

Suppose that the sum g(n) in (64) satisfies balancedness (62), boundedness (63), admissibility (69), and genericness (74) along with continuity at infinity (78) and convergence (80) when \(r_1 = + \infty \). For any \(d > \Phi _{\scriptstyle \mathrm {max}}\), there exist \(K > 0\) and \(N \in \mathbb {N}\) such that

Proof

Divide g(n) into three components, namely the sums over \([r_0, \, r_0+ \varepsilon ]\), \([r_0+\varepsilon , \, r_1-\varepsilon ]\), and \([r_1-\varepsilon , \, r_1]\). Take \(\varepsilon > 0\) sufficiently small, depending on how close d is to \(\Phi _{\scriptstyle \mathrm {max}}\). Apply Proposition 5.13 to the left and right components and Lemma 5.5 to the middle one. \(\square \)

5.6 A priori estimates

We present the a priori estimates used in Sect. 5.4. In what follows, we often use the inequality

Given a positive constant a, we consider the function \(\varphi (x; a) := e^{-a x^2}\).

Lemma 5.15

If x, \(y \in \mathbb {R}\), and \(| y-x | \le 1\), then

where \(M_1(a) := a \, \displaystyle \sup _{x \in \mathbb {R}} (2 |x| +1) e^{-\frac{a}{2} (x^2 - 4|x|-2)} < \infty \).

Proof

Put \(h := y-x\). It then follows from inequality (115) that

whenever \(|h| \le 1\). Dividing both sides by \(e^{a x^2}\) we have

which proves the lemma. \(\square \)
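The displayed inequality of Lemma 5.15 is not reproduced in this copy. Judging from the definition of \(M_1(a)\) and the proof, we assume it reads \(|\varphi (y; a) - \varphi (x; a)| \le M_1(a) \, |y-x| \, e^{-\frac{a}{2} x^2}\); this form would follow from the elementary bound \(|e^t - 1| \le |t| e^{|t|}\) (our guess at the role of (115)) together with the identity \(-a x^2 + a(2|x|+1) = -\frac{a}{2} x^2 - \frac{a}{2}(x^2 - 4|x| - 2)\). Under that assumption, the Python sketch below approximates \(M_1(a)\) on a grid and spot-checks the inequality at random points. It is a sanity check, not a proof, and the function names are hypothetical.

```python
import math
import random

def phi(x, a):
    """phi(x; a) = exp(-a x^2)."""
    return math.exp(-a * x * x)

def m1_grid(a, x_max=60.0, steps=120_000):
    """Grid approximation of M_1(a) = a * sup (2|x|+1) exp(-(a/2)(x^2 - 4|x| - 2)).

    The expression is even in x, so scanning x >= 0 suffices; x_max must lie
    well beyond the maximizer, which sits a little to the right of x = 2 for moderate a.
    """
    return max(
        a * (2 * x + 1) * math.exp(-0.5 * a * (x * x - 4 * x - 2))
        for x in (x_max * i / steps for i in range(steps + 1))
    )

def check_lemma_5_15(a, trials=20_000, seed=1):
    """Spot-check the ASSUMED bound |phi(y)-phi(x)| <= M_1(a)|y-x| exp(-a x^2/2) for |y-x| <= 1."""
    m1 = m1_grid(a)
    rng = random.Random(seed)
    for _ in range(trials):
        x = rng.uniform(-10.0, 10.0)
        h = rng.uniform(-1.0, 1.0)  # h = y - x
        lhs = abs(phi(x + h, a) - phi(x, a))
        rhs = m1 * abs(h) * math.exp(-0.5 * a * x * x)
        if lhs > rhs:
            return False
    return True

for a in (0.5, 1.0, 2.0):
    print(a, m1_grid(a), check_lemma_5_15(a))
```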

Let \(b >0\), \(m \ge 1\), \(0 < \varepsilon \le \frac{a}{4 b}\), and suppose that a function \(\delta (x)\) admits an estimate

Lemma 5.16

Under condition (117), the function \(\psi (x; a) := e^{- a x^2 + \delta (x)}\) satisfies

where \(M_2(a) := \displaystyle \sup _{x \in \mathbb {R}} |x|^3 \, e^{- \frac{a}{4} \, x^2} < \infty \).

Proof

For \(|x| \le \varepsilon m\), we have

where the last inequality is by the definition of \(M_2(a)\). \(\square \)
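For orientation, \(M_2(a)\) has a closed form. On \(x > 0\), the derivative of \(x^3 e^{-a x^2/4}\) is \(x^2 e^{-a x^2/4} (3 - a x^2/2)\), which vanishes at \(x^2 = 6/a\); hence \(M_2(a) = (6/a)^{3/2} e^{-3/2} = \bigl( 6/(e a) \bigr)^{3/2}\). The Python sketch below compares this value with a brute-force grid maximum; the function names are ours.

```python
import math

def m2_closed_form(a):
    """M_2(a) = sup |x|^3 exp(-a x^2/4) = (6/(e a))^(3/2), attained at x^2 = 6/a."""
    return (6.0 / (math.e * a)) ** 1.5

def m2_grid(a, x_max=50.0, steps=100_000):
    """Brute-force grid maximum over x >= 0 (the function is even in x)."""
    return max(
        x ** 3 * math.exp(-a * x * x / 4)
        for x in (x_max * i / steps for i in range(steps + 1))
    )

for a in (0.5, 1.0, 2.0):
    print(a, m2_closed_form(a), m2_grid(a))
```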

Lemma 5.17

Under condition (117), if \(|x| \le \varepsilon m\), \(|y| \le \varepsilon m\), and \(|y-x| \le 1/m\), then

where \(M_3(a, b) := M_1(a) + b M_2(a) + b M_1( a/2 ) M_2( a )\).

Proof

Putting \(y = x + h\) with \(|h| \le 1/m\), we have

where t.i. refers to the triangle inequality. \(\square \)

Lemma 5.18

If x, \(y \in \mathbb {R}\) and \(| y-x | \le 1\), then \(\varphi _1(x; a) := |x| \, e^{-a x^2}\) satisfies

where \(M_4(a) := 1+ M_1(a) \cdot \displaystyle \max _{x \in \mathbb {R}} (|x| +1) e^{-\frac{a}{4} x^2} < \infty \).

Proof

Putting \(y = x + h\) with \(|h| \le 1\), one has

Thus estimate (120) has been proved. \(\square \)

6 Dominant sequences

Recall that the hypergeometric series \({}_3g_2({\varvec{a}})\) is defined in (25) and the subset \(\mathcal {S}(\mathbb {Z}) \subset \mathbb {Z}^5\) is defined in (31). In what follows, we fix arbitrary numbers R, \(\sigma > 0\) and let

where \(|\!| \cdot |\!|\) is the standard norm on \(\mathbb {C}^5\). As an application of Sect. 5, we shall show the following.

Theorem 6.1

If \(\varvec{p}= (p_0, p_1, p_2; q_1, q_2) \in \mathcal {S}(\mathbb {Z})\) is any vector satisfying either

where \(\varDelta (\varvec{p})\) is the polynomial in (33), then \(|D(\varvec{p})| > 1\) and there exists an asymptotic formula

uniformly valid with respect to \({\varvec{a}}\in \mathbb {A}(R, \sigma )\), where \(D(\varvec{p})\) is defined in (32) and

with \(s_2(\varvec{p}) := p_0 p_1 + p_1 p_2 + p_2 p_0 - q_1 q_2\) as in Theorem 4.3.

Remark 6.2

Conditions (30) and (121) are invariant under multiplication of \(\varvec{p}\) by any positive scalar. This homogeneity allows one to restrict \(\mathcal {S}(\mathbb {R})\) to the slice \(\mathcal {S}_1(\mathbb {R}) := \mathcal {S}(\mathbb {R}) \cap \{ q_1 = 1\}\), which is a 3-dimensional solid tetrahedron. A numerical integration shows that the region of \(\mathcal {S}_1(\mathbb {R})\) defined by condition (121) occupies about 43 % of the volume of \(\mathcal {S}_1(\mathbb {R})\). In this sense, roughly 43 % of the vectors in \(\mathcal {S}(\mathbb {Z})\) satisfy condition (121).

By the definition of \({}_3g_2({\varvec{a}})\) one can write \(g(n) := {}_3g_2({\varvec{a}}+ n \varvec{p}) = \sum _{k=0}^{\infty } \varphi (k; n)\) with

We remark that the current g(n) corresponds to the sequence \(g_0(n)\) in (26a), not to g(n) in (26b). In general, a gamma factor \(\varGamma (\sigma k + \lambda n + \alpha )\) is said to be positive (resp. negative) on an interval of k if \(\sigma k + \lambda n\) is positive (resp. negative) whenever k lies in that interval. Since \(\varvec{p}\in \mathcal {S}(\mathbb {Z})\), all the lower gamma factors and one upper gamma factor of \(\varphi (k; n)\) are positive for \(k > 0\), while the remaining two upper factors change sign, one when k crosses \((q_1-p_0) n\) and the other when k crosses \((q_2-p_0) n\). Thus it is natural to make a decomposition \(g(n) = g_1(n) + g_2(n) + g_3(n)\) with

where \(g_2(n)\) is an empty sum (hence zero) if \(q_1 = q_2\), so we always assume \(q_1 < q_2\) when discussing \(g_2(n)\). It turns out that the first component \(g_1(n)\) dominates the other two, yielding the leading asymptotics of g(n). The proof of Theorem 6.1 is completed at the end of Sect. 6.3.

6.1 First component

For the first component \(g_1(n)\), applying Euler’s reflection formula for the gamma function to the two negative gamma factors in the numerator of \(\varphi (k; n)\), we have

where \(L_1 = q_1-p_0\), \(\sigma _1 = 1\), \(\lambda _1 = p_0\), \(\alpha _1 = a_0\), and

Under the assumption of Theorem 6.1, the sum \(G_1(n)\) satisfies all conditions in Theorem 5.2. Indeed, balancedness (62) follows from \(s(\varvec{p}) = 0\); boundedness (63) is trivial because \(\alpha _1\) and \(\beta _j\) are independent of n; admissibility (69) is fulfilled with \(r_0 = 0\) and \(r_1 = L_1\) due to condition (30); genericness (74) is trivial since \(I_0 \cup I_1 = \emptyset \) with \(J_0 = \{1\}\) and \(J_1 = \{4\}\) by inequalities in (30). To verify properness (83), notice that the characteristic equation (84) now reads

Thanks to \(s(\varvec{p}) = 0\), this equation reduces to a linear equation in x having the unique root

where \(s(\varvec{p}) = 0\) again leads to \(s_2(\varvec{p}) = p_1 p_2-(q_1-p_0)(q_2-p_0)\), which together with (30) yields \(s_2(\varvec{p}) -p_0(q_2-p_0) = (q_1-p_1)(q_1-p_2) > 0\) and hence \(s_2(\varvec{p})> p_0(q_2-p_0) > 0\), that is,

If \(\phi _1(x)\) is the additive phase function for \(G_1(n)\) then it follows from (82b) and (30) that

Thus in the interval \(0< x < L_1\), the function \(\phi _1(x)\) has only one local minimum, which is therefore its global minimum, at \(x = x_0\), and this minimum is non-degenerate. Hence properness (83) is satisfied with \(\mathrm {M{\scriptstyle ax}}= \{x_0 \}\), and Theorem 5.2 applies to the sum \(G_1(n)\).
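The two algebraic identities used for \(s_2(\varvec{p})\) above can be spot-checked in exact rational arithmetic. This Python sketch assumes, as is usual for balanced \({}_3F_2(1)\) series, that \(s(\varvec{p}) = q_1+q_2-p_0-p_1-p_2\); only the relation \(s(\varvec{p}) = 0\) enters, so the overall sign convention does not matter. It imposes \(s(\varvec{p}) = 0\) by solving for \(q_2\), then checks \(s_2(\varvec{p}) = p_1 p_2-(q_1-p_0)(q_2-p_0)\) and \(s_2(\varvec{p}) -p_0(q_2-p_0) = (q_1-p_1)(q_1-p_2)\). Random checks are not a proof; the function names are hypothetical.

```python
import random
from fractions import Fraction

def s(p):
    """ASSUMED balancedness quantity: s(p) = q1 + q2 - p0 - p1 - p2."""
    p0, p1, p2, q1, q2 = p
    return q1 + q2 - p0 - p1 - p2

def s2(p):
    """s_2(p) = p0 p1 + p1 p2 + p2 p0 - q1 q2, as in Theorem 4.3."""
    p0, p1, p2, q1, q2 = p
    return p0 * p1 + p1 * p2 + p2 * p0 - q1 * q2

def check_identities(trials=1000, seed=0):
    rng = random.Random(seed)
    for _ in range(trials):
        p0, p1, p2, q1 = (Fraction(rng.randint(-50, 50), rng.randint(1, 20)) for _ in range(4))
        q2 = p0 + p1 + p2 - q1  # impose s(p) = 0
        p = (p0, p1, p2, q1, q2)
        assert s(p) == 0
        assert s2(p) == p1 * p2 - (q1 - p0) * (q2 - p0)
        assert s2(p) - p0 * (q2 - p0) == (q1 - p1) * (q1 - p2)
    return True

print(check_identities())
```

Algebraically, the second identity follows from the first: \(s_2(\varvec{p}) - p_0(q_2-p_0) = p_1 p_2 - q_1 (q_2 - p_0)\), and substituting \(q_2 - p_0 = p_1 + p_2 - q_1\) gives \((q_1-p_1)(q_1-p_2)\).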

Lemma 6.3

For any \(\varvec{p}\in \mathcal {S}(\mathbb {Z})\), we have \(|D(\varvec{p})| > 1\) and an asymptotic representation

uniform with respect to \({\varvec{a}}\in \mathbb {A}(R, \sigma )\), where \(D(\varvec{p})\), \(t({\varvec{a}})\), and \(B({\varvec{a}}; \varvec{p})\) are as in (32) and (122).

Proof

Substituting \(x = x_0\) into Formulas (75) and using \(s(\varvec{p}) = 0\) repeatedly, one has

while \(\gamma _1 := \gamma (n)\) in definition (81) now reads \(\gamma _1 = - s({\varvec{a}}) - 1\). Since \(\delta _0(n) = \delta _1(n) = 1\) in (87) by \(I_0 \cup I_1 = \emptyset \), Formula (85) in Theorem 5.2 implies that

where Formula (86) allows one to calculate the constant \(C_1 := C(n)\) as

In view of the relation between \(G_1(n)\) and \(g_1(n)\), the above asymptotic formula for \(G_1(n)\) yields the one for \(g_1(n)\). Finally, \(|D(\varvec{p})| > 1\) follows from Lemma 6.4. \(\square \)

Lemma 6.4

Under condition (30), one has \(|D(\varvec{p}) | = (\Phi _1)_{\mathrm {max}}> \Phi _1(0) > 1\).

Proof

First, \(|D(\varvec{p})| = (\Phi _1)_{\mathrm {max}}\) is obvious from the definition (32) of \(D(\varvec{p})\) and the expression for \((\Phi _1)_{\mathrm {max}}\), while \((\Phi _1)_{\mathrm {max}} > \Phi _1(0)\) is also clear from \(\mathrm {M{\scriptstyle ax}}= \{ x_0 \}\). Regarding \(\varvec{p}= (p_0,p_1,p_2; q_1, q_2)\) as real variables subject to the linear relation \(s(\varvec{p}) = 0\) and ranging over the closure of the domain (30), we shall find the minimum of

For any fixed \((p_0, p_1, p_2)\), the constraint \(s(\varvec{p}) = 0\) lets one regard \(\Phi _1(0)\) as a function of the single variable \(q_1\) on the interval \(p_0 \le q_1 \le p_1 + p_2\). Differentiation with respect to \(q_1\) shows that \(\Phi _1(0)\) attains its minimum only at the endpoints \(q_1 = p_0\) and \(q_1 = p_1+p_2\), where its value is

So \(\Phi _1(0) > \Psi (p_0, p_1, p_2)\) for any \(p_0< q_1 < p_1+p_2\). With \(p_0 > 0\) fixed, we regard \(\Psi (p_0, p_1, p_2)\) as a function of \((p_1, p_2)\) on the closed simplex \(p_0 \le p_1 + p_2\), \(p_1 \le p_0\), \(p_2 \le p_0\). It has a unique critical value \(\Psi (p_0, 2 p_0/3, 2 p_0/3) = 3^{p_0} > 1\) in the interior of the simplex, while on its boundary one has \(\Psi (p_0, \alpha , p_0) = \Psi (p_0, p_0, \alpha )= \Psi (p_0, \alpha , p_0-\alpha ) = p_0^{p_0} \alpha ^{-\alpha } (p_0-\alpha )^{\alpha -p_0} \ge 1\) for any \(0 \le \alpha \le p_0\). Since the continuous function \(\Psi \) attains its minimum on the compact simplex either at an interior critical point or on the boundary, \(\Psi (p_0, p_1, p_2) \ge 1\) on the whole simplex. Therefore, we have \(\Phi _1(0) > \Psi (p_0, p_1, p_2) \ge 1\) under condition (30). \(\square \)
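The boundary inequality \(p_0^{p_0} \alpha ^{-\alpha } (p_0-\alpha )^{\alpha -p_0} \ge 1\) used in this proof is an entropy bound in disguise. Writing \(t := \alpha /p_0 \in [0, 1]\) (recall \(p_0 > 0\)) and using the convention \(0 \log 0 = 0\), one has

\[
\log \bigl( p_0^{p_0} \, \alpha ^{-\alpha } (p_0-\alpha )^{\alpha -p_0} \bigr)
= -\alpha \log \frac{\alpha }{p_0} - (p_0-\alpha ) \log \frac{p_0-\alpha }{p_0}
= p_0 \bigl( -t \log t - (1-t) \log (1-t) \bigr) \ge 0,
\]

because \(t \log t \le 0\) and \((1-t) \log (1-t) \le 0\) on \([0, 1]\). Equality holds only for \(\alpha = 0\) or \(\alpha = p_0\), that is, at the vertices of the simplex.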

6.2 Second component

Taking the shift \(k \mapsto k + (q_1-p_0) n\) in \(\varphi (k; n)\) (see (66)) and applying the reflection formula to the unique negative gamma factor in the numerator of \(\varphi (k+(q_1-p_0) n; n)\), one has

where \(L_2 = q_2-q_1 > 0\), \(\sigma _1 = \sigma _2 = 1\), \(\lambda _1 = q_1\), \(\lambda _2 = 0\), \(\alpha _1 = a_0\), \(\alpha _2 = a_0-b_1+1\), and

Rewriting \(k \mapsto 2 k\) or \(k \mapsto 2 k+1\) according as k is even or odd, we have a decomposition \(G_2(n) = G_{20}(n) - G_{21}(n) + H_2(n)\), where \(G_{2\nu }(n)\) is given by

while if \(L_2\) or n is even, then \(H_2(n) := 0\); otherwise, i.e., if both \(L_2\) and n are odd, then

Obviously, \(G_{20}(n)\) and \(G_{21}(n)\) have the same multiplicative phase function, which we denote by \(\Phi _2(x)\). Let \(\phi _2(x) := - \log \Phi _2(x)\) be the associated additive phase function. To make the second component \(g_2(n)\) weaker than the first one \(g_1(n)\), we require \(\phi _2'(x) \ge 0\), or equivalently \(\chi _2(x) \ge 0\), for every \(0 \le x \le L_2/2\), where \(\chi _2(x)\) is the common characteristic function (84) of the sums \(G_{20}(n)\) and \(G_{21}(n)\), given by

The non-negativity of \(\chi _2(x)\) in the interval \(0 \le x \le L_2/2\) is equivalent to

It is easy to see that \(G_{20}(n)\) and \(G_{21}(n)\) satisfy balancedness (62), boundedness (63), and admissibility (69) conditions, where \(r_0 = 0\), \(r_1 = L_2/2\), and \(I_0 = \{2\}\), \(I_1 = J_0 = \emptyset \), \(J_1 = \{4\}\), while genericness (74) for \(G_{2\nu }(n)\) becomes \(b_1-a_0 \not \in \mathbb {Z}_{\ge \nu +1}\) for \(\nu = 0, 1\).

Lemma 6.5

Under the assumption of Lemma 6.3, if \(\varvec{p}\) satisfies the additional condition (123), then there exist positive constants \(0< d_2 < |D(\varvec{p})|\), \(C_2 > 0\), and \(N_2 \in \mathbb {N}\) such that

Proof

Condition (123) implies that \(\Phi _2(x)\) is non-increasing on \(0 \le x \le L_2/2\) and strictly decreasing near \(x = 0\), since \(\chi (0; \varvec{p}) = (q_1-p_0)(q_1-p_1)(q_1-p_2) > 0\) by condition (30). Hence \(\Phi _2(x)\) attains its maximum only at the left endpoint \(x = 0\) of the interval, where it takes the value

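whereas \((\Phi _2)_{\mathrm {max}} = \Phi _1(L_1) < (\Phi _1)_{\mathrm {max}} = |D(\varvec{p})|\); here the strict inequality holds because \(\Phi _1(x)\) attains its maximum only at the interior point \(x_0\) (see Sect. 6.1), and the last equality is Lemma 6.4. Thus if \(d_2\) is any number such that \((\Phi _2)_{\mathrm {max}}< d_2 < |D(\varvec{p})|\), then Proposition 5.14 shows that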

for some \(K_2 > 0\) and \(N_2 \in \mathbb {N}\), where \(\delta (z) := \min \{ 1, \, \mathrm {dist}(z, \, \mathbb {N})\}\) for \(z \in \mathbb {C}\).

We have to take care of \(H_2(n)\) when \(L_2\) and n are both odd. Stirling’s formula (90) yields

as \(n \rightarrow +\infty ,\) where \(\gamma _2 := \alpha _1+\alpha _2-\beta _1-\beta _2-\beta _3-\beta _4+1\). Since \(\Phi _2(L_2/2)< (\Phi _2)_{\mathrm {max}} < d_2\), after enlarging \(K_2 > 0\) if necessary, one has \(|H_2(n)| \le K_2 \cdot d_2^n \le K_2 \cdot d_2^n/ \delta (b_1-a_0)\) for any \(n \ge N_2\), where the last inequality uses \(\delta (z) \le 1\).

Then from the relation between \(g_2(n)\) and \(G_2(n) = G_{20}(n)-G_{21}(n) + H_2(n)\) one has

Since \(M_2({\varvec{a}})\) is bounded for \({\varvec{a}}\in \mathbb {A}(R, \sigma )\), the lemma follows (here \(\sigma \) plays no role).

\(\square \)

Lemma 6.5 raises the question of when condition (123) is satisfied.

Lemma 6.6

For any \(\varvec{p}\in \mathcal {S}(\mathbb {R})\) condition (121) implies condition (123).

Proof

We use the following general fact. Let \(\chi (x)\) be a real cubic polynomial with positive leading coefficient, and let \(\varDelta \) be its discriminant. If \(\varDelta < 0\), then \(\chi (x)\) has only one real root, so if \(\chi (c_0) > 0\) for some \(c_0 \in \mathbb {R}\), then \(\chi (x) > 0\) for every \(x \ge c_0\). If \(\varDelta = 0\) and \(\chi (c_0) > 0\), then still \(\chi (x) \ge 0\) for every \(x \ge c_0\), and an equality \(\chi (c_1) = 0\) with \(c_1 > c_0\) is possible only if \(\chi (x)\) attains a local minimum at \(x = c_1\). In the present case, \(\chi (x; \varvec{p})\) has the discriminant \(\varDelta (\varvec{p})\) in Formula (33), and \(\chi (0; \varvec{p}) = (q_1-p_0)(q_1-p_1)(q_1-p_2) > 0\) by condition (30). Thus if \(\varDelta (\varvec{p}) \le 0\), then \(\chi (x; \varvec{p}) \ge 0\) for every \(x \ge 0\); this is precisely case (a) of condition (121).

We proceed to the case (b) in (121). The derivative of \(\chi (x; \varvec{p})\) in x is given by

Note that \(2 q_1 - q_2\), \(3 q_1-p_1-p_2\), and \(p_1+p_2-q_2\) are positive by condition (30). Since its leading coefficient is positive and its axis of symmetry \(x = -(2 q_1-q_2)/3\) is negative, the quadratic function \(\chi '(x; \varvec{p})\) is increasing in \(x \ge 0\), and hence

where the last inequality stems from (b) in condition (121). Thus \(\chi (x; \varvec{p}) \ge \chi (0; \varvec{p}) > 0\) for any \(x \ge 0\), so condition (123) is satisfied. \(\square \)
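The general fact about real cubics used at the start of this proof is easy to probe numerically. The Python sketch below (function names hypothetical) evaluates the standard discriminant \(\varDelta = 18abcd - 4b^3 d + b^2 c^2 - 4ac^3 - 27a^2 d^2\) of \(a x^3 + b x^2 + c x + d\) for three sample cubics that are positive at \(c_0 = 0\), and scans a grid on \([0, 10]\). With \(\varDelta < 0\) the minimum stays positive, with \(\varDelta = 0\) it touches zero at a double root (a local minimum), and with \(\varDelta > 0\) the cubic can become negative, so the hypothesis \(\varDelta \le 0\) in case (a) cannot simply be dropped. A grid scan is an illustration, not a proof.

```python
def cubic_discriminant(a, b, c, d):
    """Discriminant of a x^3 + b x^2 + c x + d."""
    return 18*a*b*c*d - 4*b**3*d + b**2*c**2 - 4*a*c**3 - 27*a**2*d**2

def cubic(coeffs, x):
    """Evaluate the cubic by Horner's rule."""
    a, b, c, d = coeffs
    return ((a*x + b)*x + c)*x + d

def min_on_interval(coeffs, c0=0.0, length=10.0, steps=20_000):
    """Grid minimum of the cubic on [c0, c0 + length]; a numerical probe, not a proof."""
    return min(cubic(coeffs, c0 + length*i/steps) for i in range(steps + 1))

examples = {
    "Delta < 0": (1, 0, 1, 1),    # x^3 + x + 1
    "Delta = 0": (1, -1, -1, 1),  # (x - 1)^2 (x + 1)
    "Delta > 0": (1, -2, -1, 2),  # (x - 1)(x - 2)(x + 1)
}
for name, coeffs in examples.items():
    print(name, cubic_discriminant(*coeffs), cubic(coeffs, 0.0), min_on_interval(coeffs))
```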

The converse of the implication in Lemma 6.6 is also true, so conditions (121) and (123) are in fact equivalent for any \(\varvec{p}\in \mathcal {S}(\mathbb {R})\); we omit the proof, as this fact is not needed in this article. In the situation of Lemma 6.5, we proceed to the third component.

6.3 Third component

For the third component \(g_3(n)\), taking the shift \(k \mapsto k+(q_2-p_0)n\) in \(\varphi (k; n)\), one has

where \(\sigma _1 = \sigma _2 = \sigma _3 = \tau _1 = \tau _2 = \tau _3 = 1\) and