Abstract

We consider gated polling systems with two special features: (i) retrials and (ii) glue or reservation periods. When a type-i customer arrives, or retries, during a glue period of the station i, it will be served in the following service period of that station. Customers arriving at station i in any other period join the orbit of that station and will retry after an exponentially distributed time. Such polling systems can be used to study the performance of certain switches in optical communication systems. When the glue periods are exponentially distributed, we obtain equations for the joint generating functions of the number of customers in each station. We also present an algorithm to obtain the moments of the number of customers in each station. When the glue periods are generally distributed, we consider the distribution of the total workload in the system, using it to derive a pseudo-conservation law which in turn is used to obtain accurate approximations of the individual mean waiting times. We also investigate the problem of choosing the lengths of the glue periods, under a constraint on the total glue period per cycle, so as to minimize a weighted sum of the mean waiting times.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

This paper is devoted to the performance analysis of a class of single-server queueing systems with multiple customer types. Our motivation is twofold: (i) to obtain insight into the performance of certain switches in optical communication systems and (ii) to obtain insight into the effect of having particular reservation periods, windows of opportunity during which a customer can make a reservation for service. Our class of queueing systems combines several features, viz. polling, retrials and the new feature of so-called glue periods or reservation periods. These will first be discussed separately, while their relation to optical switching will also be outlined.

Polling systems are queueing models in which a single server visits a finite number of, say, N stations in some prescribed order. Polling systems have been extensively studied in the literature. For example, various different service disciplines (rules which describe the server’s behavior while visiting a station) have been considered, both for models with and without switchover times between stations. We refer to Takagi [20, 21] and Vishnevskii and Semenova [22] for literature reviews and to Boon, van der Mei and Winands [5], Levy and Sidi [16] and Takagi [19] for overviews of the applicability of polling systems.

Switches in communication systems form an important application area of polling systems. Here, packets must be routed from the source to the destination, passing through a series of links and nodes. In copper-based transmission links, packets from various sources are time-multiplexed, and this may be modeled by a polling system. In recent years, optical networking has become very important, because optical fibers offer major advantages with respect to copper cables: huge bandwidth, ultralow losses and an extra dimension, viz. a choice of wavelengths.

Buffering of optical packets is not similar to buffering in conventional time-multiplexed systems, as photons cannot wait. Whenever there is a need to buffer photons, they are sent into a local fiber loop, thus providing a small delay to the photons without losing or displacing them. If, at the completion of a loop, a photon still needs to be buffered, it is again sent into the fiber delay loop. From a queueing theoretic perspective, this raises the need to add the feature of retrial queues to a polling system: instead of having a queueing system with one server and N ordinary queues (i.e., N stations), it has one server and N retrial queues (i.e., N orbits). Retrial queues have received much attention in the literature; see, for example the books by Falin and Templeton [11] and by Artalejo and Gomez-Corral [3]. Various multiclass retrial queues with multiple orbits were investigated in [4, 10, 15, 17]. However, these papers did not consider a polling model setting. Langaris [12,13,14] has pioneered the study of polling models with retrial customers. His assumptions are quite different from ours (in particular, he assumes exponential timers for station visits, which are renewed when a customer arrival occurs), and are not aimed at the performance modeling of optical switches.

A third important feature in the present paper is that of so-called glue or reservation periods. The glue period is activated just before the arrival of the server at a station. During a glue period, customers arriving at the station (either new arrivals or retrying customers) stick in the queue of that station and will be served during the following service period of that station, whereas during any other period, arriving customers at the station join the orbit of that station and will retry after an exponentially distributed time. One motivation for studying glue periods is the following. A sophisticated technology that one might try to add to the use of fiber delay loops in optical networking is varying the speed of light by changing the refractive index of the fiber loop, cf. [18]. Using a higher refractive index in a small part of the loop one can achieve ‘slow light’, which implies slowing down the packets. This feature is, in our model, incorporated as glue periods, where we slow down the packets arriving at the end of the fiber loop just before the server arrives, so that they do not have to retry but get served during the subsequent service period. Not restricting ourselves to optical networks, one can also interpret a glue period as a reservation period, i.e., a period in which customers can make a reservation at a station for service in the subsequent service period of that station. In our model, the reservation period immediately precedes the service period and could be seen as the last part of a switchover period.

The first attempt to study a polling model which combines retrials and glue periods is [8], which mainly focuses on the case of a single server and a single station, but also outlines how that analysis can be extended to the case of two stations. In [1], an N-station polling model with retrials and deterministic glue periods is considered, for the case of gated service at all stations. Under the gated service discipline, when the server visits a station, the server serves only the customers that were present at that station. The distribution of the steady-state joint station size (i.e., the number of customers in each station) was derived in [1], both at an arbitrary epoch and at the beginnings of the switchover, glue and service periods.

The main contributions of this paper are as follows. (i) When the glue periods are exponentially distributed, we obtain equations for the joint generating functions of the numbers of customers, both at embedded epochs and at arbitrary epochs, as well as a system of linear equations for the moments of the station sizes. Also, we present an algorithm to compute those moments. (ii) When the glue periods are generally distributed, we derive a pseudo-conservation law, i.e., an exact expression for a weighted sum of the mean waiting times at all stations, which leads to accurate approximations of the individual mean waiting times. (iii) We also investigate the problem of choosing the lengths of the glue periods, under a constraint on the total glue period per cycle, so as to minimize a weighted sum of the mean waiting times.

The rest of the paper is organized as follows. In Sect. 2, we describe the model. Section 3 contains a detailed analysis for the generating functions and the moments of the station sizes at different time epochs when the glue periods are exponentially distributed. We also present a numerical example, which in particular provides insight into the behavior of the polling system in the case of long glue periods. In Sect. 4, we derive a pseudo-conservation law for a system with generally distributed glue periods. Subsequently, we use this pseudo-conservation law for deriving an approximation for the mean waiting times at all stations. This approximation is used to minimize weighted sums of the mean waiting times by optimally choosing the lengths of the glue periods, given the total glue period per cycle. Finally, Sect. 5 lists some topics for further research.

2 The model

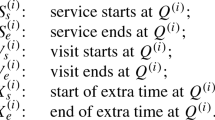

We consider a single-server cyclic polling system with retrials and glue periods. This model was first introduced in [8] for a single-station vacation model and a two-station model with switchover times. Further, this was extended in [1] to an N-station model with switchover times. In both papers, the model was studied for deterministic glue periods. We index the stations by i, \(i=1, \ldots , N\), in the order of server movement. For ease of presentation, all references to station indices greater than N or less than 1 are implicitly assumed to be modulo N. Customers arrive at station i according to a Poisson process with rate \(\lambda _i\), and they are called type-i customers, \(i=1,\ldots ,N\). The overall arrival rate is denoted by \(\lambda =\lambda _1+\cdots +\lambda _N\). The service times of customers at station i are independent and identically distributed (i.i.d.) random variables with a generic random variable \(B_i\), \(i=1,\ldots ,N\). Let \(\tilde{B}_i(s)=\mathbb {E}[\mathrm{e}^{-sB_i}]\) be the Laplace–Stieltjes transform (LST) of the service time distribution at station i. The switchover times from station i to station \(i+1\) are i.i.d. random variables with a generic random variable \(S_i\). Let \(\tilde{S}_i(s)=\mathbb {E}[\mathrm{e}^{-sS_i}]\) be the LST of the switchover time from station i to station \(i+1\), \(i=1, \ldots , N\). The interarrival times, the service times and the switchover times are assumed to be mutually independent. After the server switches to station i, a glue period occurs, which is followed by the service period of station i. After the service period, the server starts switching to the next station. See Fig. 1. The glue periods of station i are i.i.d. random variables with a generic random variable \(G_i\). Let \(\tilde{G}_i(s)=\mathbb {E}[\mathrm{e}^{-sG_i}]\) be the LST of the glue period distribution at station i.

Each station consists of an orbit and a queue. During a glue period, arriving customers (either new arrivals or retrying customers) at station i stick and wait in the queue of station i to receive service during the service period of station i, whereas during any other period, arriving customers at station i join the orbit of station i and will retry after a random amount of time. The inter-retrial time of each customer in the orbit of station i is exponentially distributed with mean \(\nu _i^{-1}\) and is independent of all other processes.

A single server cyclically moves from one station to another serving the glued customers at each of the stations. The service discipline at all stations is gated. During the service period of station i, the server serves all glued customers in the queue of station i, i.e., all type-i customers waiting at the end of the glue period (customers in the orbit and newly arriving customers during the course of the service period will not be served).

The utilization of the server at station i, \(\rho _i\), is defined by \(\rho _i = \lambda _i \mathbb {E}[B_i]\) and the total utilization of the server \(\rho \) is given by \(\rho =\sum _{i=1}^N \rho _i\). It can be routinely shown that a necessary and sufficient condition for the stability of this polling system is \(\rho < 1\). We therefore assume that \(\rho <1\). The mean cycle length, \(\mathbb {E}[C]\), is independent of the station involved (and the service discipline) and is given by

which can be derived as follows: Since the probability of the server being idle (in steady state) is \(1-\rho \), and this equals \(\frac{\sum ^N_{i=1} (\mathbb {E}[G_i]+\mathbb {E}[S_i])}{\mathbb {E}[C]}\) by the theory of regenerative processes, we have (2.1).

Let \((X_{1}^{(i)},X_{2}^{(i)},\ldots ,X_{N}^{(i)})\) denote the vector of numbers of customers of type 1 to type N in the system (hence in the orbit) at the start of a glue period of station i, \(i=1,\ldots ,N\), in the steady state. Further, let \((Y_{1}^{(i)},Y_{2}^{(i)},\ldots ,Y_{N}^{(i)})\) denote the vector of numbers of customers of type 1 to type N in the system at the start of a service period at station i, \(i=1,\ldots ,N\), in the steady state. We distinguish between those who are queueing (glued) and those who are in the orbit of station i: We write \(Y_{i}^{(i)} = Y_{i}^{(iq)} + Y_{i}^{(io)}\), \(i=1,\ldots ,N\), where q denotes in the queue and o denotes in the orbit. Finally, let \((Z_{1}^{(i)},Z_{2}^{(i)},\ldots ,Z_{N}^{(i)})\) denote the vector of numbers of customers of type 1 to type N in the system (hence in the orbit) at the start of a switchover from station i to station \(i+1\), \(i=1,\ldots ,N\), in the steady state.

3 The polling system with retrials and exponential glue periods

In [1], the authors calculated the generating functions and the mean values of the number of customers at different time epochs when the glue periods are deterministic. In this section, we assume that the glue periods are exponentially distributed with mean \(\mathbb {E}[G_i] =1/\gamma _i\), \(i= 1, \ldots , N\). We will derive a set of partial differential equations for the joint generating function of the station size (i.e., the number of customers in each station) and then obtain a system of linear equations for the first and the second moments of the station size. We also provide an iterative algorithm for solving the system of linear equations.

Observe that the generating function for the vector of numbers of arrivals at station 1 to station N during the service time of a type-i customer, \(B_i\), is \(\beta _i(\mathbf{z}):= \tilde{B}_i(\sum _{j=1}^{N}\lambda _j(1-z_j))\) for \(\mathbf{z} = (z_1,z_2, \ldots , z_N)\). Similarly, the generating function for the vector of numbers of arrivals at station 1 to station N during a switchover time from station i to station \(i+1\), \(S_i\), is \(\sigma _i(\mathbf{z}):=\tilde{S}_i(\sum _{j=1}^{N}\lambda _j(1-z_j))\).

3.1 Station size analysis at embedded time points

In this subsection, we study the steady-state joint distribution and the mean of the numbers of customers in the system at the start of a glue period, service period and switchover period. Let us define the following joint generating functions of the number of customers in each station at the start of a glue period, service period and switchover period:

for \(\mathbf{z} = (z_1,z_2, \ldots , z_N)\) with \(|z_i| \le 1\), \(i=1,\ldots ,N\), and \(|w| \le 1\).

Let \(M^o_i(t)\) represent the number of customers in the orbit of station i, \(i= 1, \cdots , N,\) and \(\Upsilon (t)\) the number of glued customers, at time t. Further, let \(\tau _j\) be the time at which an arbitrary glue period starts at station j, \(j= 1, \ldots , N\). Note that \(M_i^o(\tau _j)=X_i^{(j)}\). We define

Then, all the generating functions for the numbers of customers in the steady state described above can be expressed in terms of \(\phi _{i}(\mathbf{z},w)\), as shown below in Proposition 1.

Proposition 1

The generating functions \(\tilde{R}_{v}^{(i)}(\mathbf{z},w), \tilde{R}_{s}^{(i)}(\mathbf{z})\) and \(\tilde{R}_{g}^{(i)}(\mathbf{z})\) satisfy the following:

Proof

Equation (3.1) is obtained according to the law of total expectation, as follows:

To obtain (3.2), observe that the customers at the end of a service period are the customers in the orbit at the beginning of that visit plus the customers who arrive during the service times of the glued customers at the beginning of that visit. Hence,

Also, to obtain (3.3), observe that the customers at the end of a switchover from station \(i-1\) to station i are the customers in the orbit at the beginning of that switchover plus the customers who arrived during that switchover period. Hence,

from which, with (3.2), we get (3.3).\(\square \)

We have the following result for the generating functions \(\phi _i(\mathbf{z},w)\), \(i=1,\ldots ,N\).

Theorem 1

The generating functions \(\phi _i(\mathbf{z},w)\), \(i=1,\ldots ,N\), satisfy the following equation:

Proof

Note that

Thus, we have

Since \(\phi _i(\mathbf{z};w;0)= \mathbb {E}[z_1^{X_{1}^{(i)}} z_2^{X_{2}^{(i)}}\cdots z_N^{X_{N}^{(i)}}] = \tilde{R}_{g}^{(i)}(\mathbf{z})= \gamma _{i-1} \sigma _{i-1}(\mathbf{z}) \phi _{i-1}(\mathbf{z},\beta _{i-1}(\mathbf{z}))\) and \(\phi _i(\mathbf{z};w;\infty )=0\), integrating the above equation with respect to t from 0 to \(\infty \) yields

This completes the proof. \(\square \)

We now calculate the mean value of the station sizes at embedded time points using the differential equation (3.4). For an N-tuple \(\varvec{l}=(l_1, \ldots , l_N)\) of nonnegative integers, we define

and \(\mathbf{z}^{\varvec{l}}=z_1^{l_1}z_2^{l_2}\cdots z_N^{l_N}\). With this notation, we define the following scaled moment:

where \(\partial \mathbf{z}^{\varvec{l}}=\partial z_1^{l_1} \cdots \partial z_N^{l_N}\), and \({\varvec{1}}\) is the N-dimensional row vector with all its components equal to one. The first scaled moments of \(\phi _i(\mathbf{z},w)\), \(i=1,2,\ldots ,N,\) can be obtained from the following theorem.

Theorem 2

We have

-

(i)

\(\Phi _i^{({\varvec{0}},0)}=\frac{1}{\gamma _i}\), \(i=1,\ldots ,N\).

-

(ii)

\(\Phi _{i}^{({\varvec{1}}_j,0)}\) and \(\Phi _{i}^{({\varvec{0}},1)}\), \(0\le i,j \le N\), are given by the following recursion: for \(j=1,\ldots , N\),

$$\begin{aligned} \Phi _{j}^{({\varvec{0}},1)}= & {} \frac{\lambda _j}{\gamma _j}\mathbb {E}[C], \end{aligned}$$(3.5)$$\begin{aligned} \Phi _j^{({\varvec{1}}_j,0)}= & {} \frac{\lambda _j}{\nu _j}\left( \mathbb {E}[C]-\frac{1}{\gamma _j}\right) , \end{aligned}$$(3.6)$$\begin{aligned} \Phi _{i}^{({\varvec{1}}_j,0)}= & {} \frac{\gamma _{i+1}}{\gamma _{i}}\Phi _{i+1}^{({\varvec{1}}_j,0)}+ \frac{\lambda _j}{\gamma _{i}} \left( (\delta _{i,j-1}-\rho _{i})\mathbb {E}[C]-\frac{1}{\gamma _{i+1}}-\mathbb {E}[S_{i}]\right) ,\nonumber \\&\quad i=j-1,j-2,\ldots ,j-N+1, \end{aligned}$$(3.7)

where \({\varvec{0}}\) is the N-dimensional row vector with all its elements equal to zero, \({\varvec{1}}_j\) is the N-dimensional row vector whose jth element is one and all other elements are zero, and \(\delta _{ij}\) is the Kronecker delta. Note that if i is nonpositive in (3.7), then it is interpreted as \(i+N\).

Proof

Taking the partial derivative of Eq. (3.4) with respect to \(z_j\) and putting \(\mathbf{z}={\varvec{1}}-, w= 1-\), we have

Taking the partial derivative of Eq. (3.4) with respect to w and putting \(\mathbf{z}={\varvec{1}}-, w=1-\) yields

Summing (3.8) over \(i=1, \ldots ,N\), we have

Adding (3.9) and (3.10) and multiplying the resulting equation by \(\mathbb {E}[B_j]\) yields

and summing this over \(j=1,\ldots ,N\) gives

where we have used (2.1). Plugging (3.11) into (3.10) leads to

which is (3.6). Inserting this equation into (3.9) yields (3.5). When \(i=j\) in Equation (3.8), we have

On the other hand, when \(i\ne j\), i.e., \(i=j-1,j-2, \ldots ,j-N+1\), in Equation (3.8), we have

Finally, (3.7) follows from (3.12) and (3.13). \(\square \)

Next, we calculate \(\Phi _i^{({\varvec{l}},m)}\) for \(|\varvec{l}|+m \ge 2\). Equation (3.4) can be written as

From this we get

where \(\Gamma _{i,m}^{(\varvec{l})}=\frac{1}{{\varvec{l}}!}\frac{\partial ^{|{\varvec{l}}|}}{\partial \mathbf{z}^{\varvec{l}}} \left( (\beta _i(\mathbf{z})-1)^m\sigma _i(\mathbf{z})\right) \big |_{\mathbf{z}={\varvec{1}}-}\) and the inequality \({\varvec{l}}'\le {\varvec{l}}\) is interpreted componentwise. Therefore, from (3.14) we have the following proposition.

Proposition 2

For \(|\varvec{l}|+m \ge 2\),

For each \(k\ge 2\), (3.15) is a system of linear equations for \(\{\Phi _i^{({\varvec{l}},m)}: i=1,\ldots ,N, {\varvec{l}} \in {\mathbb {Z}}_+^N, m\in {\mathbb {Z}}_+, |{\varvec{l}}|+m=k\}\), where \(\mathbb {Z}_+\) denotes the set of nonnegative integers. The system of equations (3.15) with \( |{\varvec{l}}|+m=k\) can be written in a matrix form as

where X is a column vector with components \(\Phi _i^{({\varvec{l}},m)}\) (\(i=1,\ldots ,N, {\varvec{l}} \in {\mathbb {Z}}_+^N, m\in {\mathbb Z}_+, |{\varvec{l}}|+m=k\)), A is a nonnegative square matrix and b is a positive column vector. Since X is also a positive vector, (3.16) implies that the maximal eigenvalue of A is strictly less than 1. Therefore, (3.16) uniquely determines \(\Phi _i^{({\varvec{l}},m)}\) (\(i=1,\ldots ,N, {\varvec{l}} \in {\mathbb Z}_+^N, m\in {\mathbb Z}_+, |{\varvec{l}}|+m=k\)). Also, (3.15) uniquely determines \(\Phi _i^{({\varvec{l}},m)}\) (\(i=1,\ldots ,N, {\varvec{l}} \in {\mathbb Z}_+^N, m\in {\mathbb Z}_+, |{\varvec{l}}|+m\ge 2\)).

By solving the system of linear equations (3.15), we can obtain \(\Phi _i^{({\varvec{l}},m)}\) (\(i=1,\ldots ,N, {\varvec{l}} \in {\mathbb Z}_+^N, m\in {\mathbb Z}_+, |{\varvec{l}}|+m\ge 2\)). One can use Gaussian elimination to solve that system of equations. We will also use an iterative method, as shown below in Theorem 3. This theorem can be easily proved by the fact that A is a nonnegative square matrix with maximal eigenvalue less than 1 and b is a positive column vector in (3.16).

Theorem 3

For \(i, {\varvec{l}}, m,n\) with \(i=1,\ldots , N\), \(|\varvec{l}|+m=k\), \(n\in \mathbb {Z}_+\), define \(\Phi _i^{({\varvec{l}},m)}(n)\) as follows:

Then, we have that

-

(i)

\(\Phi _i^{({\varvec{l}},m)}(n)\) is nondecreasing in n.

-

(ii)

\(\lim _{n \rightarrow \infty }\Phi _i^{({\varvec{l}},m)}(n)=\Phi _i^{({\varvec{l}},m)}\).

3.2 Station size analysis at arbitrary time points

In the previous subsection, we found the generating functions of the number of customers at the beginnings of the glue period, service period and switchover period in terms of \(\phi _i(\mathbf{z},w)\). We now represent the generating function of the number of customers at arbitrary time points in terms of \(\phi _i(\mathbf{z},w)\), as shown below in Theorem 4. This will allow us to obtain the moments of the station size distribution at arbitrary time points.

Theorem 4

-

(a)

The joint generating function, \(R^{(i)}_{s}(\mathbf{z})\), of the number of customers in the orbit at an arbitrary time point in a switchover period from station i is given by

$$\begin{aligned} R^{(i)}_{s}({\mathbf{z}}) = \frac{\gamma _i}{\mathbb {E}[S_i]}\phi _i(\mathbf{z},\beta _i(\mathbf{z}))\frac{1-\sigma _i(\mathbf{z})}{\sum _{j=1}^N\lambda _j(1-z_{j})}. \end{aligned}$$(3.17) -

(b)

The joint generating function, \(R^{(i)}_{g}(\mathbf{z},w)\), of the number of customers in the queue and in the orbit at an arbitrary time point in a glue period of station i is given by

$$\begin{aligned} R^{(i)}_{g}(\mathbf{z},w) = \gamma _i \phi _i(\mathbf{z},w). \end{aligned}$$(3.18) -

(c)

The joint generating function, \(R^{(i)}_{v}(\mathbf{z},w)\), of the number of customers in the queue and in the orbit at an arbitrary time point in a service period of station i is given by

$$\begin{aligned} R^{(i)}_{v}(\mathbf{{z}},w)= & {} \frac{\gamma _i}{\rho _i\mathbb {E}[C]} \frac{\phi _i(\mathbf{z},w) -\phi _i(\mathbf{z},\beta _i(\mathbf{z}))}{ w - \beta _i(\mathbf{z})} \frac{1-\beta _i (\mathbf{z})}{\sum _{j=1}^N\lambda _j(1-z_{j})}. \end{aligned}$$(3.19)

Proof

(a) Notice that the number of customers in the orbit at an arbitrary time point in a switchover period from station i is the sum of two independent terms: the number of customers at the beginning of the switchover period and the number of customers who arrived during the elapsed switchover period. The generating function of the former is \(\tilde{R}_{s}^{(i)}(\mathbf{z})\), and the generating function of the latter is given by \(\frac{1-\sigma _i(\mathbf{z})}{\mathbb {E}[S_i]\left( \sum _{j=1}^N\lambda _j(1-z_{j})\right) }\). Thus,

from which and (3.2) we get (3.17).

(b) By the theory of Markov regenerative processes,

This equation yields (3.18).

(c) Notice that the number of customers in the system at an arbitrary time point in a service period consists of two parts: the number of customers in the system at the beginning of the service of the customer currently in service and the number of customers who arrived during the elapsed time of the current service. The generating function of the former is given by (see Remark 3 of [1] for a detailed proof)

and the generating function of the latter is given by

From (3.20) and (3.21), we have

Since \(\rho _i=\frac{\mathbb {E}[Y_i^{(iq)}]\mathbb {E}[B_i]}{\mathbb {E}[C]}\), (3.19) follows from the above equation. \(\square \)

We introduce the following scaled moments:

These moments satisfy the following theorem, which can be derived by using Equations (3.17), (3.18) and (3.19).

Theorem 5

We have

-

(i)

\(\Psi _{g,i}^{(\varvec{l},m)}=\gamma _i \Phi _i^{(\varvec{l},m)}\).

-

(ii)

\(\Psi _{v,i}^{(\varvec{l},m)}=\frac{\gamma _i}{\rho _i\mathbb {E}[C]} \sum _{{\varvec{l}}'\le {\varvec{l}}}\sum _{k=0}^{|{\varvec{l}}-{\varvec{l}}'|}\Phi _{i}^{({\varvec{l}}',m+k+1)} \eta _{i,k}^{({\varvec{l}}-{\varvec{l}}')}\),

where \(\eta _{i,m}^{(\varvec{l})}=\frac{1}{{\varvec{l}}!}\frac{\partial ^{|{\varvec{l}}|}}{\partial \mathbf{z}^{{\varvec{l}}}} \left( \frac{-(\beta _i (\mathbf{z})-1)^{m+1}}{\sum _{j=1}^N\lambda _j(1-z_{j})}\right) \Big |_{\mathbf{z}=\mathbf{1}-}\).

-

(iii)

\(\Psi _{s,i}^{(\varvec{l})}=\frac{\gamma _i}{\mathbb {E}[S_i]} \sum _{{\varvec{l}}'\le {\varvec{l}}}\Theta _i^{({\varvec{l}}')} \zeta _{i}^{({\varvec{l}}-{\varvec{l}}')}\), where \(\zeta _{i}^{(\varvec{l})}=\frac{1}{{\varvec{l}}!}\frac{\partial ^{|{\varvec{l}}|}}{\partial \mathbf{z}^{{\varvec{l}}}} \left( \frac{1-\sigma _i (\mathbf{z})}{\sum _{j=1}^N\lambda _j(1-z_{j})}\right) \Big |_{\mathbf{z}=\mathbf{1}-}\) and \(\Theta _{i}^{(\varvec{l})}=\frac{1}{{\varvec{l}}!}\frac{\partial ^{|{\varvec{l}}|}}{\partial \mathbf{z}^{{\varvec{l}}}} \phi _i(\mathbf{z},\beta _i(\mathbf{z}))\big |_{\mathbf{z}=\mathbf{1}-}\). Moreover, \(\Theta _{i}^{(\varvec{l})}\) is given by

$$\begin{aligned} \Theta _i^{(\varvec{l})} = \sum _{{\varvec{l}}'\le {\varvec{l}}}\sum _{k=0}^{|{\varvec{l}}-{\varvec{l}}'|}\Phi _{i}^{({\varvec{l}}',k)} \Delta _{i,k}^{({\varvec{l}}-{\varvec{l}}')}, \end{aligned}$$where \(\Delta _{i,m}^{(\varvec{l})}=\frac{1}{{\varvec{l}}!}\frac{\partial ^{|{\varvec{l}}|}}{\partial \mathbf{z}^{{\varvec{l}}}} (\beta _i(\mathbf{z})-1)^m\big |_{\mathbf{z}=\mathbf{1}-}\).

From now on, we obtain the first and second moments of the station sizes of each type of customers in steady state. Let \(M_i^{o}\) and \(\Upsilon \) be the steady-state random variables corresponding to \(M_i^{o}(t)\) and \(\Upsilon (t)\), respectively. That is, \(M_i^{o}\) is the number of customers in the orbit of station i in the steady state and \(\Upsilon \) is the number of glued customers in the steady state. Let \(M_i^{oq}\) be the number of customers in the orbit of station i plus the glued customers in the queue of station i in the steady state, and \(M_i\) be the number of customers in station i (including the customer in service at station i) in the steady state. Moreover, we define the following indicator random variables: for \(i=1, \ldots ,N\),

Then, we have that for \(i=1,\ldots ,N\),

Therefore, the mean station sizes, \(\mathbb {E}[M_i^o], \mathbb {E}[M_i^{oq}]\), and \(\mathbb {E}[M_i]\), \(i=1,\ldots ,N\), are given by

Now, in order to obtain the second moments of the station sizes, \(\mathbb {E}[M_i^{o}M_j^{o}], \mathbb {E}[M_i^{oq}M_j^{oq}]\), and \(\mathbb {E}[M_iM_j]\), \(i,j=1,\ldots ,N\), note that

Therefore, the second moments of the station sizes are given by

3.3 A numerical example

In this subsection, we present numerical results for the first and second moments of the number of customers in each station. The expression for the mean number of customers in each station is given by (3.24), together with (3.22) and (3.23). By using the formulas (3.25)–(3.27), we can obtain an expression for the variance of the number of customers in each station and an expression for the covariance of the numbers of customers in two different stations. Note that these moments are expressed in terms of \(\Phi _i^{(\varvec{l},m)}\), as shown in Theorem 5. Therefore, these moments can be obtained by using Theorems 2 and 3. In the following numerical example, we consider a single-server polling model with five stations (i.e., \(N=5\)).

Example 1. We assume that the arrival rate of type-i customers is \(\lambda _i=0.025\) for all i, \(i=1,\ldots ,5\). The service times of type-i customers are exponentially distributed with means \(\mathbb {E}[B_1]=1, \mathbb {E}[B_2]=2, \mathbb {E}[B_3]=4, \mathbb {E}[B_4]=8\) and \(\mathbb {E}[B_5]=16\), respectively. Hence, the total utilization of the server is \(\rho =\sum _{i=1}^5 \rho _i=0.775<1\). The switchover times from station i to station \(i+1\) are deterministic with \(\mathbb {E}[S_i]=1\) for all i, \(i=1,\ldots ,5\). The retrial rate of customers in the orbit of station i is \(\nu _i=1\) for all i, \(i=1,\ldots ,5\). The glue periods at station i are exponentially distributed with parameters \(\gamma _i\), \(i=1,\ldots ,5\). We assume that \(\gamma _i\) is the same for all i, i.e., \(\mathbb {E}[G_i]=\mathbb {E}[G]\) for all i, \(i=1,\ldots ,5\).

In Fig. 2, we plot the mean number of customers in station i, \(\mathbb {E}[M_i]\), \(i=1,\ldots ,5\), varying the mean glue period \(\mathbb {E}[G]\). In Fig. 3, we plot the squared coefficient of variation (SCV) for the number of customers in station i, \(\text{ SCV }[M_i]\), \(i=1,\ldots ,5\), varying \(\mathbb {E}[G]\). In Fig. 4, we plot the correlation coefficient of the numbers of customers in two different stations, \(\text{ Cor }[M_1,M_2]\), \(\text{ Cor }[M_1,M_3]\), \(\text{ Cor }[M_1,M_4]\) and \(\text{ Cor }[M_3,M_5]\), varying \(\mathbb {E}[G]\). In Figs. 2a, 3a and 4a, we vary \(\mathbb {E}[G]\) from 0 to 10 in order to better reveal the behavior of the system for small \(\mathbb {E}[G]\). In Figs. 2b, 3b and 4b, we vary \(\mathbb {E}[G]\) from 0 to 1000 in order to examine the behavior of the system for large \(\mathbb {E}[G]\).

We can draw the following conclusions from these plots:

-

If the glue period of a station is small, the chance for customers to retry in that glue period is low, and therefore, the mean number of customers in the station is large.

-

If the glue period of a station is large, customers face a long delay before getting served in the station, so the mean number of customers in the station is large.

-

There exists an optimal glue length at which each station has a minimum mean station size.

-

The figures suggest that, as \(\mathbb {E}[G] \rightarrow \infty \),

-

(i)

the mean numbers of customers grow linearly,

-

(ii)

the squared coefficient of variation tends to a limit,

-

(iii)

the correlation coefficients between the numbers of customers in different stations tend to some limit.

-

(i)

In [23], the author considers classical polling systems with a branching-type service discipline like exhaustive or gated service, and without glue periods, for the case that the switchover times become large. It is readily seen that our polling model starts to behave very similarly as such a polling model when the glue periods grow large; indeed, every type-i customer will now almost surely become glued during the first glue period of station i that it experiences during its stay in the system, and therefore, it will be served during the first service period of station i after its arrival to the system–just as in an ordinary gated polling system. However, we cannot immediately apply the asymptotic results of [23] when the switchover times become large, because it considers deterministic switchover times, while the focus is on the waiting time distribution.

4 The polling system with retrials and general glue periods

In [1] and in Sect. 3 of the present paper, we have presented the distribution and mean of the number of customers at different time epochs for a gated polling model with retrials and glue periods, where the glue periods are deterministic and exponentially distributed, respectively. In this section, we assume that the glue periods have general distributions. We first consider the distribution of the total workload in the system and present a workload decomposition. Next, we use this to obtain a pseudo-conservation law, i.e., an exact expression for a weighted sum of the mean waiting times. The pseudo-conservation law is subsequently used to obtain an approximation for the mean waiting times of all customer types. We present numerical results that indicate that the approximation is very accurate. Finally, we use this approximation to optimize a weighted sum of the mean waiting times, \(\sum _{i=1}^N c_i \mathbb {E}[W_i]\), where \(c_i\), \(i=1,\ldots ,N,\) are positive constants and \(\mathbb {E}[W_i]\) is the mean waiting time of a type-i customer until the start of its service, by choosing the glue period lengths, given the total glue period in a cycle.

4.1 Workload distribution and decomposition

Define V as the amount of work in the system in the steady state. Furthermore, let \(\tilde{B}(s) = \sum _{i=1}^N \lambda _i (1- \tilde{B}_i(s))\). The LST of the amount of work at an arbitrary time can be written as

where \(V^{(S)}_{i}\), \(V^{(G)}_{i}\) and \(V^{(D)}_{i}\) are the amount of work in the system during the switchover time from station i, the glue period of station i and the service period of station i, respectively.

Let \(V_i^{(X)}\), \(V_i^{(Y)}\) and \(V_i^{(Z)}\) be the work in the system at the start of the glue period of station i, the service period of station i and the switchover period from station i, respectively. We know that

Therefore,

Furthermore, using the last formula of the proof of Theorem 2 in Boxma et al. [7], but with our notation, we have

Substituting (4.2) and (4.3) in (4.1), we have

Define the idle time as the time the server is not serving customers (i.e., the sum of all the switchover and glue periods). Let \(V^{(Idle)}\) be the amount of work in the system at an arbitrary moment in the idle time. We have, by (4.2) and (2.1),

It is known that the LST of the amount of work at the steady state, \(V_{M/G/1}\), in the standard M / G / 1 queue where the arrival rate is \(\sum _{i=1}^N \lambda _i\) and the LST of the service time distribution is \(\sum ^N_{i=1} \frac{\lambda _i}{\sum ^N_{j=1}\lambda _j} \tilde{B}_i(s)\), is given by

From Eqs. (4.4), (4.5) and (4.6), we have

In Theorem 2.1 of [6], a workload decomposition property has been proved for a large class of single-server multiclass queueing systems with service interruptions (like switchover periods or breakdowns). It amounts to the statement that, under certain conditions, the steady-state workload is in distribution equal to the sum of two independent quantities: (i) the steady-state workload in the corresponding queueing model without those interruptions and (ii) the steady-state workload at an arbitrary interruption epoch. The gated polling model with glue periods and retrials of the present paper satisfies all the assumptions of Theorem 2.1 of [6], and therefore, in agreement with what we have seen above, the workload decomposition indeed holds.

4.2 Pseudo-conservation law

By the workload decomposition, it is shown in [6] that

where \(F_i\) is the work left in station i at the end of a service period of station i (and hence at the start of a switchover from station i). Other than \(\mathbb {E}[F_i]\), Eq. (4.7) is independent of the service discipline. Note that \( \mathbb {E}[F_i] = \mathbb {E}[Z_i^{(i)}] \mathbb {E}[B_i]\). To find \(\mathbb {E}[Z_i^{(i)}]\), we will derive a relation between \(\mathbb {E}[Z_i^{(i)}]\) and \(\mathbb {E}[Y_i^{(iq)}]\). \(\mathbb {E}[Y_i^{(iq)}]\) consists of the following three parts:

-

(i)

Mean number of type-i customers who were already present at the end of the previous visit to station i and who are glued during the glue period just before the current visit to station i.

-

(ii)

Mean number of type-i customers who have arrived during the time interval from the end of the previous visit to station i to the start of the glue period of station i just before the visit to station i, and who are glued during that glue period.

-

(iii)

Mean number of type-i customers who arrive during the glue period of station i just before the visit to station i.

Note that (i) equals \((1-\tilde{G}_i(\nu _i)) \mathbb {E}[Z_i^{(i)}]\) because the mean number of type-i customers who were present at the end of the previous visit to station i is \(\mathbb {E}[Z_i^{(i)}]\), and the probability that a customer who was present at the end of the previous visit to station i is glued during the glue period just before the current visit to station i is \(1-\tilde{G}_i(\nu _i)\). (ii) equals \((1-\tilde{G}_i(\nu _i)) \lambda _i \left( (1-\rho _i) \mathbb {E}[C] - \mathbb {E}[G_i]\right) \). Here, \(\lambda _i \left( (1-\rho _i) \mathbb {E}[C] - \mathbb {E}[G_i]\right) \) is the mean number of type-i customers who have arrived during the time interval from the end of the previous visit to station i to the start of the glue period of station i just before the visit to station i. Finally, (iii) equals \(\lambda _i \mathbb {E}[G_i]\). Therefore,

Since \(\rho _i=\frac{\mathbb {E}[Y_i^{(iq)}] \mathbb {E}[B_i]}{\mathbb {E}[C]}\), we have \(\mathbb {E}[Y_i^{(iq)}]=\lambda _i \mathbb {E}[C]\). Hence, by (4.8), we get

Therefore, \(\mathbb {E}[F_i]\) is given by

The first term on the right-hand side equals the mean amount of work for type-i customers who arrived at station i during a service period of station i. The second term is interpreted as follows: Since \(\lambda _i (\mathbb {E}[C]-\mathbb {E}[G_i])\) is the mean number of type-i customers who arrive during one cycle excluding the glue period of station i in that cycle, \((\tilde{G}_i(\nu ))^k \rho _i (\mathbb {E}[C]-\mathbb {E}[G_i])\) is the mean amount of work for type-i customers who arrive during the kth previous cycle excluding the glue period of station i in that cycle, and who are present at the end of the current service period of station i. Therefore, the second term, which is \(\sum ^\infty _{k=1}(\tilde{G}_i(\nu ))^k \rho _i (\mathbb {E}[C]-\mathbb {E}[G_i])\), is the mean amount of work for type-i customers who were present in the orbit of station i at the beginning of the service period of station i.

From Eqs. (4.7) and (4.9), together with (2.1), we obtain the following pseudo-conservation law:

4.3 Approximation of the mean waiting times

We now use the pseudo-conservation law to find an approximation for the mean waiting times of all customer types. Below we briefly sketch the idea behind the approximation. Everitt [9] has developed a method to approximate the mean waiting times in an ordinary gated polling system (without retrials and glue periods). The idea in this approximation is that an arriving customer first has to wait for the residual cycle time, until the server begins a new visit to its station. Subsequently, it has to wait for the service times of all customers of the same type, who arrived before it, in the elapsed cycle time. This leads to \(\mathbb {E}[W_j] = (1+ \rho _j) \mathbb {E}[R_{c_j}]\), where \(\mathbb {E}[R_{c_j}]\) is the mean of the residual time of a cycle starting with a visit to station j, which is the same as the mean of the elapsed time of a cycle starting with a visit to station j. Next, Everitt assumed that for all j, this mean residual cycle time is independent of j, i.e., \(\mathbb {E}[R_{c_j}] \approx \mathbb {E}[R_{c}]\), leading to the approximation \(\mathbb {E}[W_j] \approx (1+ \rho _j) \mathbb {E}[R_{c}]\) for the model without retrials and glue periods.

In this paper, we introduce a similar type of approximation, including one extra term, for the mean waiting times in the model with retrials and glue periods:

In the Appendix, we provide a detailed derivation of (4.11). The first term on the right-hand side of (4.11) is the same as the term in [9]. The second term on the right-hand side is added because not every customer who arrives in a particular cycle receives service in that cycle. The type-j customers arriving during any period other than the glue period of station j receive service in the following service period with probability \(1-\tilde{G_j}(\nu _j)\). Furthermore, the type-j customers arriving during any period other than a glue period of station j have to wait for a geometric number (with parameter \(1-\tilde{G_j}(\nu _j)\)) of cycles before receiving service. Since a type-j customer arrives during a period other than a glue period of station j with probability \(\frac{\mathbb {E}[C]-\mathbb {E}[G_j]}{\mathbb {E}[C]}\), the mean number of cycles until an arbitrary type-j customer receives its service is \(\frac{\mathbb {E}[C]-\mathbb {E}[G_j]}{\mathbb {E}[C]}\times \frac{\tilde{G}_j (\nu _j)}{1-\tilde{G}_j (\nu _j)}\). The second term is obtained by multiplying this mean number of cycles and the mean cycle time.

It should be noted that, in reality, the mean residual cycle times for station i and station j (\(j\ne i\)) are not equal. A key element of our approximation is to assume that they are equal. We can now use the pseudo-conservation law to determine the one unknown term \(\mathbb {E}[R_c]\): By substituting (4.11) in (4.10) and using (2.1), we get

Substitution of (4.12) into (4.11) yields an approximation for the mean waiting times of all customer types: For \(j=1,2,\dots ,N\),

We now consider various examples to compare the above approximation results with the exact analysis from [1] for deterministic glue periods and from Sect. 3 of the present paper for exponentially distributed glue periods. Further, we will compare the results of this approximation with the simulation results for the case that the glue periods follow a gamma distribution.

4.3.1 Deterministic glue periods

In the numerical example of Table 1, we consider a two-station polling system. The switchover times and service times are exponentially distributed. We keep the parameters of station 1 fixed, \(\lambda _1= 1, \mathbb {E}[B_1] =0.45, \mathbb {E}[S_1]=1, G_1=0.5, \nu _1 = 1\), and vary the parameters of station 2.

4.3.2 Exponential glue periods

In the numerical example of Table 2, we consider a three-station polling system. The switchover times are deterministic, and the service times are exponentially distributed. We keep the parameters of station 1 fixed, \(\lambda _1= 1, \mathbb {E}[B_1] =0.3, \mathbb {E}[G_1]=0.5\). Further, the switchover times and the exponential retrial rates of all three stations are fixed, \(S_1 =S_2 =S_3 =1\) and \(\nu _1=\nu _2=\nu _3 = 1\).

4.3.3 Gamma-distributed glue periods

In the above two examples, we can get the exact mean waiting times using the method in [1] and Sect. 3 of this paper. In the numerical examples of Table 3, we compare the approximate mean waiting times with simulation results, for a polling system where the lengths of the glue periods are gamma-distributed.

We consider a five-station polling system in which the glue periods, switchover times and service times are all gamma-distributed. We simulate such a system to find the mean waiting times. We also give a 95% confidence interval for the mean waiting times obtained using simulations. We have generated one million cycles, splitting this into ten periods of \(10^5\) cycles and using the results of these ten periods to obtain confidence intervals. Then, we compare the simulation results with the results obtained using the approximation formula. Here, k and \(\theta \) are, respectively, the shape and the scale parameters of the gamma distribution with probability density function \(\frac{1}{\Gamma (k) \theta ^k} x^{k-1} \mathrm{e}^{- \frac{x}{\theta }}\).

The values of the parameters are listed in Table 3a. Table 3b shows the mean waiting times by simulation, along with 95% lower and upper confidence bounds. Table 3c shows the approximate mean waiting times.

The numerical results suggest that the approximations of the mean waiting times are very accurate. In only two cases (the fourth case in Table 2 and case (iii) in Table 3), the error is in the order of 5%; in all other cases, we find errors that are typically less than 2%. This is probably due to the fact that the pseudo-conservation law is exact, while the mean residual cycle times \(\mathbb {E}[R_{c_j}]\) typically do not differ too much from queue to queue. We also would like to mention two facts, which emphasize that our approximation (4.13) is quite simplistic, but which also suggest a certain robustness of the polling system as well as our approximation.

(i) The approximate mean waiting times for the two totally symmetric stations are the same, independent of their order in the system, but this is not quite true in reality. Consider a five-station polling model with deterministic glue periods, and exponential service time and switchover time distributions. Let \(\varvec{\lambda }=(0.1,0.05,0.1,0.38,0.38)\), \(\mathbb {E}[B_1]=\mathbb {E}[B_3]=\mathbb {E}[B_4]=\mathbb {E}[B_5]=1\), \(\mathbb {E}[B_2]=0.2\), \(\mathbb {E}[S_i]=1\) for all i, \(i=1,\ldots ,5\), \(\mathbb {E}[G_1]=\mathbb {E}[G_2]=\mathbb {E}[G_3]=1\), \(\mathbb {E}[G_4]=\mathbb {E}[G_5]=5\), and \((\nu _1,\nu _2,\nu _3,\nu _4,\nu _5)=(2,1,2,10,10)\). We get the exact and the approximate mean waiting times as

We can see that even in a very asymmetric case, while the exact mean waiting times are not the same for the symmetric stations 1 and 3, they are still very close and the approximation is within 5% of them.

(ii) The approximate mean waiting time at one station is independent of the change in the retrial rates of the other stations, which is also not true in reality. Consider a two-station polling model with deterministic glue periods, and exponential service time and switchover time distributions. Let \(\lambda _1=\lambda _2=1,~\mathbb {E}[B_1]=\mathbb {E}[B_2]=0.45,~\mathbb {E}[S_1]=\mathbb {E}[S_2]=1,~\mathbb {E}[G_1]=\mathbb {E}[G_2]=0.5,\) and \(\nu _1=1\). For \(\nu _2= 1/1000\), we get the exact and the approximate mean waiting times as \((\mathbb {E}[W_1],\mathbb {E}[W_2])= (68.0292, 59014.9628)\) and \(\text{ Approx }(\mathbb {E}[W_1],\mathbb {E}[W_2])=(71.6074, 59011.3845)\). Further for \(\nu _2= 1000\), we get the exact and the approximate mean waiting times as \((\mathbb {E}[W_1],\mathbb {E}[W_2])= (73.4677,24.2730)\) and \(\text{ Approx }(\mathbb {E}[W_1],\mathbb {E}[W_2])=(71.6074,26.1333)\). We can see that in both cases, even though the exact mean waiting times are not the same for station 1, the approximation is still within 5% of them.

4.4 Optimal choice of the glue period distributions

In this subsection, we discuss an optimization problem for the choice of the distributions of the glue periods, \(G_i\), \(i=1,\ldots ,N\), to minimize the weighted sum of the mean waiting times \(\sum ^N_{i=1} c_i \mathbb {E}[W_i]\), subject to the constraint \(\sum ^N_{i=1} \mathbb {E}[G_i]=L\), where \(c_i\), \(i=1,\ldots ,N,\) and L are positive constants. Because we do not have explicit formula for the mean waiting time, it is difficult to exactly solve the constrained minimization problem. Instead of finding the exact solution of the constrained minimization problem, we will find the optimal choice of the distributions of \(G_i\), \(i=1,\ldots ,N\), to

where \(U_i\) is the approximation of \(\mathbb {E}[W_i]\) given by the right-hand side of (4.13). Note that under the constraint \(\sum ^N_{i=1} \mathbb {E}[G_i] =L\), the objective function of the minimization problem becomes as follows:

By Jensen’s inequality, it can be shown that if the nondeterministic glue period distributions with means \(g_i\) are changed to the degenerate (deterministic) ones with the same means \(g_i\), \(i=1,\dots ,N\), then the right-hand side of (4.14) becomes strictly smaller. Therefore, the above optimization problem becomes as follows:

where

Since \(U(g_1,\ldots ,g_N)\) is continuous on \(\mathcal{{D}} \equiv \{(g_1,\ldots ,g_N): g_1>0,\ldots ,g_N>0, g_1+\cdots +g_N=L\}\) and \(U(g_1,\ldots ,g_N)\rightarrow \infty \) as \(\min \{g_1,\ldots ,g_N\}\rightarrow 0+\), \(U(g_1,\ldots ,g_N)\) takes a minimum at a point in \(\mathcal{D}\). At a minimum point \((g_1,\ldots ,g_N)\), there exists a Lagrange multiplier \(\kappa \) satisfying

where

For each \(i=1,\ldots ,N\), the function \(f_i:(0,L) \rightarrow (-\infty ,f_i(L))\) is bijective, continuous and strictly increasing. Therefore, it has the inverse function \(h_i:(-\infty ,f_i(L)) \rightarrow (0,L)\), which is also continuous and strictly increasing. Therefore, Eq. (4.18) and the constraints (4.15) and (4.16) can be written as

Since \(\lim _{\kappa \rightarrow -\infty }\sum ^N_{j=1} h_j(\kappa )=0\), \(\lim _{\kappa \rightarrow (\min \{f_1(L),\ldots ,f_N(L)\})-} \sum ^N_{j=1} h_j(\kappa ) > L\) and \(\sum ^N_{j=1} h_j(\kappa )\) is strictly increasing in \(\kappa \), (4.19) has a unique solution, say \(\kappa ^*\). Therefore, from (4.20), the optimal solution \((g_1^*,\ldots ,g_N^*)\) is given by

We will now consider a few numerical examples to look at the dependency of different system characteristics and the respective optimal glue periods. In [2], a similar system was studied with a focus on optical switches, where the revenue of the system depended on distributing glue periods optimally to each station. In these examples, we will look at the problem of minimizing \(\sum _{i=1}^N c_i U_i\), that is, the weighted waiting cost of the system given that the sum of expected values of glue periods is fixed. Since the optimization problem showed that the system performs best when the glue periods are deterministic, we will only consider models with deterministic glue periods.

We consider a three-station model, and in each case vary one parameter to study how the system performs under certain changes. In all the cases, the sum of the lengths of deterministic glue periods is fixed, \(L= 3\), and the service times and the switchover times are exponentially distributed. The switchover times are symmetric and fixed for all three stations, i.e., \(\mathbb {E}[S_i] = 2\) for all \(i = 1,2,3\).

-

(i)

Case 1: In this case, we keep all system parameters symmetric except the arrival rate \(\lambda _i\) of each station. Let \(\nu _i=1\), \(\mathbb {E}[B_i]=1\) and \(c_i=1\) for all \(i=1,2,3\). In Table 4, we show the optimal values of \(g_1, g_2, g_3\) and \(\sum _{i=1}^N c_i U_i\) for different values of \(\lambda _i\).

-

(ii)

Case 2: In this case, we keep all system parameters symmetric except the mean service time \(\mathbb {E}[B_i]\) of each station. Let \(\lambda _i= 1\), \(\nu _i=1\), and \(c_i=1\) for all \(i=1,2,3\). In Table 5, we show the optimal values of \(g_1, g_2, g_3\) and \(\sum _{i=1}^N c_i U_i\) for different values of \(\mathbb {E}[B_i]\).

-

(iii)

Case 3: In this case, we keep all system parameters symmetric except the retrial rate \(\nu _i\) of each station. Let \(\lambda _i=0.25\), \(\mathbb {E}[B_i]=1\) and \(c_i=\rho _i\) for all \(i=1,2,3\). In Table 6, we show the optimal values of \(g_1, g_2, g_3\) and \(\sum _{i=1}^N c_i U_i\) for different values of \(\nu _i\). Note that in this case \(\sum _{i=1}^N c_i U_i = \sum _{i=1}^N \rho _i \mathbb {E}[W_i]\).

-

(iv)

Case 4: In this case, we keep all system parameters symmetric except the weight \(c_i\) of each station. Let \(\lambda _i=0.25\), \(\mathbb {E}[B_i]=1\) and \(\nu _i=1\) for all \(i=1,2,3\). In Table 7, we show the optimal values of \(g_1, g_2, g_3\) and \(\sum _{i=1}^N c_i U_i\) for different values of \(c_i\).

We can draw the following conclusions about the optimal allocation of the glue periods using the above method:

-

The optimal allocation depends on the arrival rate or the mean service time of a station only via the factor \(\rho \). This is due to the fact that the second term of (4.17) only involves \(\rho \), while the first term on the right-hand side of Eq. (4.17) is independent of \(g_i\). However, the exact optimal allocation might depend on the arrival rate or the mean service time of a station.

-

The higher the retrial rate, the shorter the length of the glue period assigned to the station.

-

The higher the weight allocated to a station, the longer the length of the glue period assigned to the station. This helps us in scenarios when a waiting cost is associated with the stations.

5 Conclusions and suggestions for further research

In this paper, we have studied a gated polling model with the special features of retrials and glue, or reservation, periods. For the case of exponentially distributed glue periods, we have presented an algorithm to obtain the moments of the number of customers in each station. We expect that this method can be adapted to handle phase-type distributed glue periods.

For generally distributed glue periods, we have obtained an expression for the steady-state distribution of the total workload in the system, and we have used it to derive a pseudo-conservation law for a weighted sum of the mean waiting times, which in turn led us to an accurate approximation of the individual mean waiting times. A topic for further research is to analyze the exact waiting time distribution, for exponentially distributed glue periods and for deterministic glue periods.

The introduction of the concept of a glue period was motivated by the wish to obtain insight into the performance of certain switches in optical communication systems. We have considered the optimal choice of the glue period lengths, under the constraint that the total glue period length per cycle is fixed. A topic for further study is the unconstrained counterpart of this optimization problem; a complication one then faces is that the objective function for the optimization can be nonconvex. In fact, it is possible that the Hessian of the objective function is not positive semi-definite even for the two-station system. However, it still seems to be intuitively natural that there will exist a unique solution for the optimization problem.

Not restricting ourselves to optical communications, one can also interpret a glue period as a reservation period–a window of opportunity for claiming service at the next visit of the server to a station. It would be interesting to study the reservation periods in more detail and in particular to consider the problem of choosing reservation periods in such a way that some objective function is optimized.

References

Abidini, M.A., Boxma, O.J., Resing, J.A.C.: Analysis and optimization of vacation and polling models with retrials. Perform. Eval. 98, 52–69 (2016)

Abidini, M.A., Boxma, O.J., Koonen A.M.J., Resing, J.A.C.: Revenue maximization in an optical router node—allocation of service windows. In: International Conference on Optical Network Design and Modelling (ONDM), Cartagena (2016)

Artalejo, J.R., Gómez-Corral, A.: Retrial Queueing Systems: A Computational Approach. Springer, Berlin (2008)

Avrachenkov, K., Morozov, E., Steyaert, B.: Sufficient stability conditions for multi-class constant retrial rate systems. Queueing Syst. 82, 149–171 (2016)

Boon, M.A.A., van der Mei, R.D., Winands, E.M.M.: Applications of polling systems. Surv. Oper. Res. Manag. Sci. 16, 67–82 (2011)

Boxma, O.J.: Workloads and waiting times in single-server systems with multiple customer classes. Queueing Syst. 5, 185–214 (1989)

Boxma, O.J., Kella, O., Kosiński, K.M.: Queue lengths and workloads in polling systems. Oper. Res. Lett. 39, 401–405 (2011)

Boxma, O.J., Resing, J.A.C.: Vacation and polling models with retrials. In: 11th European Workshop on Performance Engineering (EPEW 2014), Florence (2014)

Everitt, D.: Simple approximations for token rings. IEEE Trans. Commun. 34, 719–721 (1986)

Falin, G.I.: On a multiclass batch arrival retrial queue. Adv. Appl. Probab. 20, 483–487 (1988)

Falin, G.I., Templeton, J.G.C.: Retrial Queues. Chapman and Hall, London (1997)

Langaris, C.: A polling model with retrial customers. J. Oper. Res. Soc. Jpn. 40, 489–508 (1997)

Langaris, C.: Gated polling models with customers in orbit. Math. Comput. Model. 30, 171–187 (1999)

Langaris, C.: Markovian polling system with mixed service disciplines and retrial customers. Top 7, 305–322 (1999)

Langaris, C., Dimitriou, I.: A queueing system with \(n\)-phases of service and \((n-1)\)-types of retrial customers. Eur. J. Oper. Res. 205, 638–649 (2010)

Levy, H., Sidi, M.: Polling models: applications, modeling and optimization. IEEE Trans. Commun. 38, 1750–1760 (1990)

Moutzoukis, E., Langaris, C.: Non-preemptive priorities and vacations in a multiclass retrial queueing system. Stoch. Models 12, 455–472 (1996)

Okawachi, Y., Bigelow, M.S., Sharping, J.E., Zhu, Z., Schweinsberg, A., Gauthier, D.J., Boyd, R.W., Gaeta, A.L.: Tunable all-optical delays via Brillouin slow light in an optical fiber. Phys. Rev. Lett. 94, 153902 (2005). (4pages)

Takagi, H.: Application of polling models to computer networks. Comput. Netw. ISDN Syst. 22, 193–211 (1991)

Takagi, H.: Queueing analysis of polling models: progress in 1990–1994. In: Dshalalow, J.H. (ed.) Frontiers in Queueing: Models, Methods and Problems, pp. 119–146. CRC Press, Boca Raton (1997)

Takagi, H.: Analysis and application of polling models. In: Haring, G., Lindemann, C., Reiser, M. (eds.) Performance Evaluation: Origins and Directions. Lecture Notes in Computer Science, vol. 1769, pp. 423–442. Springer, Berlin (2000)

Vishnevskii, V.M., Semenova, O.V.: Mathematical methods to study the polling systems. Autom. Remote Control 67, 173–220 (2006)

Winands, E.M.M.: Branching-type polling systems with large setups. OR Spectr. 33, 77–97 (2011)

Acknowledgements

We are grateful to the reviewer and the associate editor for valuable comments and suggestions, which considerably improved this paper. The research is supported by the IAP program BESTCOM, funded by the Belgian government, and by the Gravity program NETWORKS, funded by the Dutch government. The authors gratefully acknowledge several discussions with Professor Ton Koonen (TU Eindhoven) about optical communications. B. Kim’s research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIP) (NRF-2017R1A2B4012676). J. Kim’s research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2017R1D1A1B03029542).

Author information

Authors and Affiliations

Corresponding author

Appendix: Approximation of the mean waiting times

Appendix: Approximation of the mean waiting times

Below we outline a method to approximate the mean waiting times of all customer types. The arrival of a type-i customer occurs either during a glue period of station i or during any other period. At the start of the service period, the customers which will be served in the current service period are fixed. The mean length of the service period is now the same irrespective of the order in which these customers are served. Without loss of generality, we will assume that the customers who arrive during a glue period of station i are served first and then customers who retry are served.

Let \(\bar{W}_i\) and \(\tilde{W}_i\) denote the waiting times of type-i customers who arrive during a glue period of station i and any other period, respectively. Further, \(G_{i_\mathrm{res}}\) denotes the residual time of a glue period of station i. Finally, \(C_{i_\mathrm{res}}\) denotes the residual time of a non-glue period of station i. A type-i customer arriving during a glue period of station i has to wait for the residual glue period. Further, it has to wait for all the customers who arrived before it during the glue period. Therefore,

A type-i customer arriving during a non-glue period of station i has to wait for the residual non-glue period and the glue period. Then, it either gets in the queue for service or it remains in the orbit. With probability \(\tilde{G}_i (\nu _i)\) it remains in the orbit and has to wait until the next visit to get served, and this repeats. Therefore, on average, it has to wait for \(\tilde{G}_i (\nu _i)/(1-\tilde{G}_i (\nu _i))\) cycles before it gets into the queue for service. When it gets in the queue, it has to wait for all the type-i customers who have arrived during the glue period to be served, and then the customers who arrived before it and who will be served in the current service period (on average this number is approximately equal to the number of customers who arrived during the residual non-glue period before the arrival of the tagged customer). Therefore,

The probability that a type-i customer arrives during a glue period of station i is \(\mathbb {E}[G_i]/\mathbb {E}[C]\), and the probability that it arrives during a non-glue period equals \(1-(\mathbb {E}[G_i]/ \mathbb {E}[C])\). Therefore,

Let \(R_{c_i}\) be the residual cycle time of the system with respect to station i. Then,

We assume that \(\mathbb {E}[R_{c_i}] =\mathbb {E}[R_{c}]\) for all \(i=1,\ldots ,N\). We thus obtain (4.11):

Rights and permissions

About this article

Cite this article

Abidini, M.A., Boxma, O., Kim, B. et al. Performance analysis of polling systems with retrials and glue periods. Queueing Syst 87, 293–324 (2017). https://doi.org/10.1007/s11134-017-9545-y

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11134-017-9545-y