Abstract

In this paper, we consider an M\({}^X\)/M/1/SET-VARI queue which has batch arrivals, variable service speed and setup time. Our model is motivated by power-aware servers in data centers where dynamic scaling techniques are used. The service speed of the server is proportional to the number of jobs in the system. The contribution of our paper is threefold. First, we obtain the necessary and sufficient condition for the stability of the system. Second, we derive an expression for the probability generating function of the number of jobs in the system. Third, our main contribution is the derivation of the Laplace–Stieltjes transform (LST) of the sojourn time distribution, which is obtained in series form involving infinite-dimensional matrices. In this model, since the service speed varies upon arrivals and departures of jobs, the sojourn time of a tagged job is affected by the batches that arrive after it. This makes the derivation of the LST of the sojourn time complex and challenging. In addition, we present some numerical examples to show the trade-off between the mean sojourn time (response time) and the energy consumption. Using the numerical inverse Laplace–Stieltjes transform, we also obtain the sojourn time distribution, which can be used for setting the service-level agreement in data centers.

1 Introduction

In this paper, we consider a single-server queue with batch Poisson arrivals, variable service speed and setup time. Our model is motivated by power-aware servers in data centers [9, 10, 17]. The CPU of a server is able to process at multiple speeds by using either frequency scaling [13] or dynamic voltage and frequency scaling (DVFS) techniques [11, 15]. In recent years, CPUs with variable speed have become popular because they can reduce energy consumption while maintaining acceptable response times for jobs. The server can automatically adjust its speed according to the workload in the system. By doing so, the power consumption is small at low workload and large at high workload.

In this paper, we assume that jobs arrive at the system in batches according to a Poisson process and that the arrival process is independent of the state of the system. The service requirement of each job in a batch is independently and identically distributed (i.i.d.) with an exponential distribution. The service speed of the server is instantaneously adapted according to the number of jobs in the system. In particular, the service rate of the server is proportional to the number of jobs in the system. Furthermore, the server is turned off immediately after becoming empty in order to save energy consumption. At the moment when a batch arrives at an empty system, the OFF server is turned on. However, some exponentially distributed setup time is needed in order to reactivate the OFF server. We call the above queueing model the M\({}^{X}\)/M/1/SET-VARI queue where SET and VARI stand for setup and variable service rate, respectively.

The contribution of this paper is threefold. First, we obtain the necessary and sufficient condition for the existence of the unique stationary queue length distribution, which we call the stability condition hereafter. We show that the stability condition of our model is that the logarithmic moment of the batch size is finite. Interestingly, the system can be stable even if the mean batch size is infinite. Second, we derive the probability generating function (PGF) of the number of jobs in the system. It should be noted that the number of jobs in the system of our model is identical to that of the M\({}^{X}\)/M/\(\infty \) queue with setup time, which to the best of our knowledge has not been investigated in the literature. Third, we derive the Laplace–Stieltjes transform (LST) of the sojourn time distribution, which is obtained in series form involving infinite-dimensional matrices. The derivation of the sojourn time distribution is challenging because the sojourn time of a tagged job depends not only on the state of the system upon arrival but also on the batches arriving after it. Therefore, the sojourn time distribution cannot be obtained directly from the PGF of the queue length distribution via the distributional Little’s law [8].

Our model extends the one proposed by Lu et al. [9] in which an M/M/1/SET-VARI queue was considered. In [9], the solution in terms of infinite series was presented for the stationary queue length distribution. From the queue length distribution, the mean response time is obtained via Little’s law and the mean power consumption is obtained. These metrics are used in [9] to find the energy-response trade-off. However, the sojourn time distribution was not considered in [9]. Baba [2] considered the M\({}^{X}\)/M/1 queue with setup time where the processing speed of the server is fixed. He derived the PGF of the number of jobs in the system and the LST of the sojourn time distribution. Adan and D’Auria [1] considered a single-server queueing system where jobs arrive according to a Poisson arrival stream, the service requirements of jobs follow the exponential distribution with mean 1 and the service rate of the server is controlled by a threshold. They derived the stationary distribution of the number of jobs in the system and the LST of the sojourn time distribution in explicit form. The sojourn time distribution of our model is derived using first-step analysis, which is also adopted by Adan and D’Auria [1]. The difference is that the underlying Markov chain in Adan and D’Auria [1] is homogeneous after a threshold, while our underlying Markov chain is spatially nonhomogeneous. As a result, the former admits an explicit expression, while our formulae involve inverse mappings of infinite matrices.

The remainder of this paper is organized as follows: In Sect. 2, we describe the M\({}^{X}\)/M/1/SET-VARI queue in detail. In Sect. 3, we derive the stability condition. In Sect. 4, we derive the PGF of the number of jobs in the system in an integral but computable form. In Sect. 5, we derive the LST of the sojourn time distribution. In Sect. 6, we present numerical experiments showing the energy-performance trade-off and the sojourn time distribution by numerically inverting the Laplace–Stieltjes transform. Finally, in Sect. 7, we present the conclusion of this paper and future work.

2 Model

In this section, we describe our queueing model, the M\({}^X\)/M/1/SET-VARI queue, in detail. The M\({}^X\)/M/1/SET-VARI queue has a single server operating under the FCFS (First Come First Served) service discipline and an infinite buffer space. Batches of jobs arrive at the system according to a Poisson process with rate \(\lambda \). The numbers of jobs in batches are i.i.d., where X is the batch size with distribution \(x_i={\mathsf {P}} (X=i)\) for \(i\in {\mathbb {N}}:=\{1,2,\dots \}\) and the PGF (Probability Generating Function) is denoted by \({\widehat{X}}(z):=\sum _{k\ge 1} x_kz^k\).

The special feature of our model is that the service speed of the server is proportional to the number of jobs in the system. In particular, the service rate is \(n\mu \), provided that the number of jobs in the system is n. This is equivalent to the following assumption: The service requirements of jobs are i.i.d. with exponential distribution with mean 1. The basic speed of the server when there is one job in the system is given by \(\mu \in (0,\infty )\). When there are n jobs in the system, the speed of the server is scaled up to \(n\mu \). Thus, when there are n jobs in the system, the residual sojourn time of the ongoing job follows the exponential distribution with mean \((n\mu )^{-1}\) due to the memoryless property of exponential distributions.

In order to save energy, the server is turned off immediately if the system becomes empty upon a service completion. Furthermore, when a batch arrives at the empty system, the server is turned on. However, the server needs some setup time before it can process jobs. Therefore, if a batch arrives at the empty system, it has to wait until the setup time finishes. We assume that the setup time follows the exponential distribution with mean \(\alpha ^{-1}\). During the setup time, the server cannot serve a job but consumes energy.

Let I(t) and N(t) denote the state of the server and the number of jobs in the system, respectively, at time t. When the server is off or in the setup process, \(I(t)=0\), and when the server is processing a job, \(I(t)=1\). In the current setting, the joint stochastic process \(\{Z(t):=(I(t),N(t)); t\ge 0\}\) is a continuous-time Markov chain with state space \({\mathscr {S}}=\{(0,j);\,j\in {\mathbb {Z}}_+\}\cup \{(1,j);\,j\in {\mathbb {N}}\}\), where \({\mathbb {Z}}_+:=\{0\}\cup {\mathbb {N}}\). We assume that \(x_1>0\) so that the Markov chain \(\{Z(t)\}\) is irreducible. Figure 1 shows the state transition diagram of this Markov chain for a special case where the maximum batch size is two.
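The transition structure just described can be explored numerically on a finite state space. The following is a minimal sketch, not the method used in this paper: it assumes the chain is truncated at a hypothetical level `n_max`, that batches which would overflow this level are dropped, and that the batch size distribution is passed as a dictionary `x`. The function name and its arguments are illustrative.

```python
import numpy as np

def stationary_distribution(lam, mu, alpha, x, n_max):
    """Stationary distribution of the chain truncated at n_max jobs.

    x maps batch size k to x_k. Returns (pi0, pi1), where pi0[j] approximates
    pi_{0,j} and pi1[j] approximates pi_{1,j} (pi1[0] is a zero placeholder).
    """
    size = 2 * n_max + 1

    def idx(i, j):
        # (0, j) -> j for j = 0..n_max; (1, j) -> n_max + j for j = 1..n_max
        return j if i == 0 else n_max + j

    Q = np.zeros((size, size))
    for i in (0, 1):
        for j in range(i, n_max + 1):
            a = idx(i, j)
            for k, xk in x.items():
                if j + k <= n_max:  # assumption: overflowing batches are dropped
                    Q[a, idx(i, j + k)] += lam * xk
            if i == 0 and j >= 1:
                Q[a, idx(1, j)] += alpha  # setup completes
            if i == 1:
                # service at rate j * mu; the server turns off when it empties
                target = idx(1, j - 1) if j >= 2 else idx(0, 0)
                Q[a, target] += j * mu
    Q -= np.diag(Q.sum(axis=1))

    # Solve pi Q = 0 with one balance equation replaced by normalization.
    A = np.vstack([Q.T[:-1], np.ones(size)])
    b = np.zeros(size)
    b[-1] = 1.0
    pi = np.linalg.solve(A, b)
    return pi[: n_max + 1], np.concatenate(([0.0], pi[n_max + 1:]))
```

For light-tailed batch sizes the truncation level can be modest, because the service rate grows linearly with the number of jobs and the stationary tail decays quickly.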

Remark 1

As mentioned in Sect. 1, the PGF of the number of jobs in the system of the M\({}^X\)/M/1/SET-VARI queue is identical to that of the M\({}^{X}\)/M/\(\infty \) queue with setup time. However, the sojourn time distributions of these two models may be different because the sojourn time distribution of a tagged job of the latter is determined upon its arrival, while that of the former is affected by future arrivals. Some researchers have studied the M\({}^X\)/M/\(\infty \) queue without setup time. For example, Shanbhag [14] derived moment generating functions of performance measures such as the number of jobs in the system and the sojourn time. Cong [3] derived the stability condition.

3 The stability condition

In this section, we derive the stability condition of our model. Because \(\{Z(t);t\ge 0\}\) is an irreducible and regular Markov chain, \(\{Z(t)\}\) is positive recurrent if and only if a unique stationary distribution exists.

It follows from Theorem 1 that the stability condition of the M\({}^X\)/M/1/SET-VARI queue is that the logarithmic moment of the batch size is finite. Cong [3] derived the same stability condition for the special case of the M\({}^X\)/M/\(\infty \) queue without setup time. The addition of the setup time does not change the stability of the system. This is intuitively clear because the effects of setup times disappear when the system is under heavy load (i.e., large number of jobs present).
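To illustrate that a finite logarithmic moment does not require a finite mean, consider the batch size distribution \(x_k=1/(k(k+1))\), \(k\in {\mathbb {N}}\), which is chosen here purely as an example and does not appear elsewhere in the paper. Its partial means grow like a harmonic sum, whereas the partial sums of \(\mathsf {E}[\log (X+1)]\) settle quickly. A small sketch (the function name is hypothetical):

```python
import numpy as np

def partial_moments(k_max):
    """Partial sums over k = 1..k_max for x_k = 1 / (k (k + 1)).

    Returns (total mass, partial mean, partial logarithmic moment).
    """
    k = np.arange(1, k_max + 1, dtype=float)
    x = 1.0 / (k * (k + 1.0))
    return x.sum(), (k * x).sum(), (np.log(k + 1.0) * x).sum()

for k_max in (10**3, 10**6):
    mass, mean, log_moment = partial_moments(k_max)
    print(k_max, mass, mean, log_moment)
```

The second component keeps increasing with `k_max` (it equals a harmonic number minus one), while the third changes only marginally, which is the behavior the stability condition relies on.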

It should be noted that the proof of Cong [3] is based on the transient solution, which is derived using the method of collective marks. Here, we prove the stability condition for a more general model than the one in Cong [3] using alternative methods.

Theorem 1

\(\{Z(t);t\ge 0\}\) has a unique stationary distribution if and only if

\[ \mathsf {E}[\log (X+1)]<\infty . \tag{3.1} \]

Proof

It should be noted that \(\{Z(t)\}\) is positive recurrent if and only if there exists an invariant measure \({\varvec{\xi }}:=(\xi _{i,j})_{(i,j)\in {\mathscr {S}}}\) of \(\{Z(t)\}\) such that \(\xi _{i,j}>0\) for \((i,j)\in {\mathscr {S}}\) and \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \) [12]. We define the generating functions \({\widehat{\xi }}_0(z)\) and \({\widehat{\xi }}_1(z)\) as follows:

The invariant measure \({\varvec{\xi }}\) satisfies the following balance equations:
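For reference, the transition structure of Sect. 2 (batch arrivals at rate \(\lambda x_k\), setup completion at rate \(\alpha \), and service completion at rate \(j\mu \) in state (1, j)) leads to global balance equations of the following form. This is a reconstruction from the model description, and the assignment of the labels (3.2)–(3.5) is inferred from how these equations are used in the proof.

\[
\begin{aligned}
\lambda \xi _{0,0} &= \mu \xi _{1,1}, && (3.2)\\
(\lambda +\alpha )\xi _{0,j} &= \lambda \sum _{k=1}^{j} x_k\,\xi _{0,j-k}, \quad j\in {\mathbb {N}}, && (3.3)\\
(\lambda +\mu )\xi _{1,1} &= \alpha \xi _{0,1} + 2\mu \xi _{1,2}, && (3.4)\\
(\lambda +j\mu )\xi _{1,j} &= \alpha \xi _{0,j} + \lambda \sum _{k=1}^{j-1} x_k\,\xi _{1,j-k} + (j+1)\mu \xi _{1,j+1}, \quad j\ge 2. && (3.5)
\end{aligned}
\]

Each left-hand side is the total rate out of a state and each right-hand side is the total rate into it. For instance, state (0, 0) is left only by an arrival and is entered only from (1, 1) by a service completion.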

Multiplying (3.3) by \(z^j\), taking the sum over \(j\in {\mathbb {N}}\), and rearranging the result, we obtain

Multiplying (3.4) by z and (3.5) by \(z^j\) and taking the sum over \(j\ge 2\) yields

Rearranging the above equation, we find that

where \(q(z):=(1-{\widehat{X}}(z))/(1-z)\). We define Q(z) as the primitive function of q(z) such that \(Q(0)=0\). The solution of (3.7) is given by

where H(z) is some function which will be determined later. Differentiating (3.8) and substituting the result into (3.7), we obtain

It follows from \({\widehat{\xi }}_1(0)=0\) and \(Q(0)=0\) that \(H(0)=0\). Therefore, we have

Substituting this equation into (3.8), we obtain

It should be noted that (3.6) and (3.9) are equivalent to the system of balance equations (3.2)–(3.5).
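As a consistency check on the steps above, the generating functions can be worked out directly from the transition rates of Sect. 2. The following is a sketch under the assumption that \({\widehat{\xi }}_0(z):=\sum _{j\ge 0}\xi _{0,j}z^j\) and \({\widehat{\xi }}_1(z):=\sum _{j\ge 1}\xi _{1,j}z^j\); the labels follow the references in the text.

\[
\begin{aligned}
{\widehat{\xi }}_0(z) &= \frac{(\lambda +\alpha )\,\xi _{0,0}}{\lambda +\alpha -\lambda {\widehat{X}}(z)}, && (3.6)\\
{\widehat{\xi }}_1'(z) &= \frac{\lambda }{\mu }\,q(z)\bigl({\widehat{\xi }}_0(z)+{\widehat{\xi }}_1(z)\bigr), && (3.7)\\
{\widehat{\xi }}_1(z) &= \frac{\lambda }{\mu }\,\mathrm{e}^{\frac{\lambda }{\mu }Q(z)}\int _0^z q(u)\,{\widehat{\xi }}_0(u)\,\mathrm{e}^{-\frac{\lambda }{\mu }Q(u)}\,{\mathrm{d}}u. && (3.9)
\end{aligned}
\]

Two quick checks support these forms: \({\widehat{\xi }}_1(0)=0\) holds in the last expression, and evaluating the differential equation at \(z=0\) gives \(\xi _{1,1}=(\lambda /\mu )\xi _{0,0}\), which is the balance between states (0, 0) and (1, 1).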

Assuming that \(\{Z(t)\}\) is positive recurrent, we will prove that (3.1) holds. Thus, there exists an invariant measure \({\varvec{\xi }}\) such that \(\xi _{i,j}>0\) for \((i,j)\in {\mathscr {S}}\) and \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \). Therefore, we have

From (3.9) and (3.10), we also have

Because of (3.11), \(\xi _{i,j}>0\) for \((i,j)\in {\mathscr {S}}\), and \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \), we can take the limit of (3.12) as \(z\uparrow 1\) to obtain

Using \(Q(z)=\sum _{j\ge 1}\mathsf {P}[X\ge j]z^j/j\), we can show the following inequality:

where \(\gamma \) is Euler’s constant [16]. The first inequality in (3.14) is due to

while the second inequality in (3.14) is because \(X \ge 1\). Therefore, it follows from (3.13), (3.14) and \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \) that \(\mathsf {E}[\log (X+1)]<\infty \).
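One way to see why \(Q(1)\) and the logarithmic moment are finite together is through harmonic numbers. The following short calculation is offered as a complementary sketch and is not necessarily the route taken via (3.14). Writing \(H_n:=\sum _{j=1}^{n}1/j\) and exchanging the order of summation (all terms are nonnegative),

\[
Q(1)=\sum _{j\ge 1}\frac{\mathsf {P}[X\ge j]}{j}
=\mathsf {E}\Bigl[\sum _{j=1}^{X}\frac{1}{j}\Bigr]
=\mathsf {E}[H_X],
\qquad
\log (n+1)\le H_n\le 1+\log n,\quad n\in {\mathbb {N}},
\]

so that \(\mathsf {E}[\log (X+1)]\le Q(1)\le 1+\mathsf {E}[\log X]\le 1+\mathsf {E}[\log (X+1)]\). Hence \(Q(1)<\infty \) if and only if \(\mathsf {E}[\log (X+1)]<\infty \).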

Now, assuming that \(\mathsf {E}[\log (X+1)]<\infty \), we will prove the existence of a positive invariant measure \({\varvec{\xi }}\) such that \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \). We select an arbitrary \(\xi _{0,0}>0\). First, we prove that \(\xi _{i,j}>0\) for any \((i,j)\in {\mathscr {S}}\). We can recursively prove that \(\xi _{0,j}>0\) for \(j\in {\mathbb {N}}\) by using (3.3), and it follows from (3.2) that \(\xi _{1,1}>0\). In addition, comparing the coefficients of \(z^j\) on both sides of (3.7), we have, for \(j\in {\mathbb {N}}\),

where we have used \(q(z)=\sum _{j\ge 0}\mathsf {P}[X>j]z^j\). Due to \(\xi _{0,0}>0\), we can also prove that \(\xi _{1,j}>0\) for \(j\ge 2\) by using the recursive formula (3.15). Thus, under the assumption that \(\xi _{0,0}>0\), it follows that \(\xi _{i,j}>0\) for any \((i,j)\in \mathscr {S}\).

Next, we prove that \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \). From (3.6), we have

where the second inequality is to cover the case \(\mathsf {P}[X< \infty ] <1\). From (3.9) and (3.16), we also have

Using \(Q(z)=\sum _{j\ge 1}\mathsf {P}[X\ge j]z^j/j\), we can show the following inequality:

where the inequality in (3.18) is due to

Taking the limit of (3.17) as \(z\uparrow 1\), it follows from (3.18) that

Note that

where the second equation holds because \(\xi _{i,j}>0\) for \((i,j)\in {\mathscr {S}}\). It follows from (3.19), (3.20), \(\xi _{0,0}>0\) and \(\mathsf {E}[\log (X+1)]<\infty \) that there exists an invariant measure \({\varvec{\xi }}\) such that \(\xi _{i,j}>0\) for \((i,j)\in {\mathscr {S}}\) and \(\sum _{(i,j)\in {\mathscr {S}}}\xi _{i,j}<\infty \). Therefore \(\{Z(t)\}\) is positive recurrent. \(\square \)

4 The number of jobs in the system

In this section, we consider the number of jobs in the system in steady state, i.e., assuming \(\mathsf {E}[\log (X+1)]<\infty \). From Theorem 1, there exists a unique stationary distribution \({\varvec{\pi }}=(\pi _{i,j})_{(i,j)\in {\mathscr {S}}}\), where \(\pi _{i,j}:=\lim _{t\rightarrow \infty }\mathsf {P}[\,I(t)=i, N(t)=j\,]\) for \((i,j)\in {\mathscr {S}}\). We define the number of jobs in the system in steady state as N, and its PGF as \({\widehat{\pi }}(z):=\sum _{j=0}^\infty \pi _{0,j}z^j+\sum _{j=1}^\infty \pi _{1,j}z^j\). The PGF \({\widehat{\pi }}(z)\) is given by Theorem 2.

Theorem 2

\({\widehat{\pi }}(z)\) is given as follows:

Proof

The stationary distribution \({\varvec{\pi }}\) is the positive invariant measure which satisfies the normalizing condition, i.e., \({\widehat{\pi }}(1)=1\). From (3.6) and (3.9), we have

By partial integration, we obtain

From (4.3) and \({\widehat{\pi }}(1)=1\), we obtain \(\pi _{0,0}\) as follows:

Substituting (4.4) into (4.3), we obtain Theorem 2. \(\square \)

Remark 2

As mentioned in Sect. 1, the number of jobs in the system in our model is identical to that in the M\({}^{X}\)/M/\(\infty \) queue with setup time. Simplifying equation (2.9) in Shanbhag [14] for the system without setup time, we know that the PGF of the number of customers for that system, denoted by \({\widehat{\pi }}^*(z)\), can be obtained as follows:

It is easy to see that (4.1) tends to (4.5) as \(\alpha \rightarrow \infty \).

We can easily obtain the average number of jobs in the system.

Corollary 1

Assuming that \(\mathsf {E}[X] < \infty \), \({\mathsf {E}}[N]\) is given as follows:

where \(\pi _{0,0}\) is given by (4.4).

Proof

Differentiating (4.1), we obtain

Taking the limit as \(z\uparrow 1\) in the above equation yields

where the third equality is due to L’Hospital’s rule. From the relation \(\mathsf {E}[N]={\widehat{\pi }}'(1)\), we obtain Corollary 1. \(\square \)
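The mean can also be cross-checked by a rate-conservation argument. This is a sketch under the assumption that the setup-phase part of the PGF has the form \(\sum _{j\ge 0}\pi _{0,j}z^j=(\lambda +\alpha )\pi _{0,0}/(\lambda +\alpha -\lambda {\widehat{X}}(z))\), as the transition rates of Sect. 2 suggest, and it is not necessarily the form in which Corollary 1 is stated. In steady state the job arrival rate \(\lambda \mathsf {E}[X]\) equals the job departure rate \(\mu \sum _{j\ge 1}j\pi _{1,j}\), and differentiating the setup-phase part at \(z=1\) gives \(\sum _{j\ge 1}j\pi _{0,j}\). Adding the two contributions,

\[
\mathsf {E}[N]=\sum _{j\ge 1}j\pi _{1,j}+\sum _{j\ge 1}j\pi _{0,j}
=\frac{\lambda \mathsf {E}[X]}{\mu }+\frac{\lambda (\lambda +\alpha )\mathsf {E}[X]}{\alpha ^{2}}\,\pi _{0,0}.
\]

The first term is the mean number of jobs in the M\({}^{X}\)/M/\(\infty \) queue without setup time, and the second term vanishes as \(\alpha \rightarrow \infty \).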

5 Sojourn time distribution

In this section, we derive the LST of the sojourn time distribution. Note that the LST of a distribution function F(t) is defined as \(F^*(s):=\int _{t\ge 0} \mathrm{e}^{-st}F({\mathrm{d}}t)\). We assume that \(\mathsf {E}[X]<\infty \) in this section for the existence of the equilibrium distribution.

In the M\({}^{X}\)/M/1/SET-VARI queue, the server changes the speed upon arrivals and departures of jobs. Therefore, the sojourn time distribution of a tagged job is affected by the batches that arrive after it. This makes the derivation of the sojourn time distribution complex and challenging. We first derive the conditional LST for the sojourn time distribution. Then, combining with the queue length distribution, we obtain the unconditional LST for the sojourn time distribution.

First, we consider the case where the server is processing a job when the tagged job arrives. Let \(S_1(n,m)\) denote the residual sojourn time of the tagged job, given that it is in the mth position and the system state is (1, n). Conditioning on the first-step transitions, we have, for \(m\in {\mathbb {N}}\) and \(n\ge m\),

where Y denotes the exponential random variable with mean 1, and \(S_1(n,0)=0\) for \(n \in {\mathbb {N}}\). Furthermore, let \(\psi _1(n,m,s)\) denote the LST of \(S_1(n,m)\). Taking the LST of both sides of (5.1), we obtain, for \(m\in {\mathbb {N}}\) and \(n\ge m\),

where \(\psi _1(n,0,s)=1\). We use the convention that \(\psi _1(n,m,s)=0\) for \(n<m\). Furthermore, we define the infinite column vector \({\varvec{\psi }}_1(m,s)\) as

where \({\varvec{a}}^{\top }\) denotes the transposed vector of \({\varvec{a}}\). Note that the nth element of \({\varvec{\psi }}_1(m,s)\) is \(\psi _1(n-1,m,s)\) for \(n\ge m+1\).

We define infinite matrices \({\varvec{\varLambda }}^{(1)}\) and \({\varvec{M}}\) as

Rearranging (5.2) by using these matrices, we obtain

From \(\psi _1(n,0,s)=1\) for any \(n\in {\mathbb {N}}\), we have

where \({\varvec{1}}\) is the infinite column vector whose elements are all equal to 1. Let \(\parallel {\varvec{z}}\parallel _2\) denote the Euclidean norm of the vector \({\varvec{z}}\). We prove that the operator norm of the infinite matrix \({\varvec{\varLambda }}^{(1)}\), \(\parallel {\varvec{\varLambda }}^{(1)}\parallel =\sup _{\parallel {\varvec{z}}\parallel _2=1}\parallel {\varvec{\varLambda }}^{(1)}{\varvec{z}}\parallel _2\), is strictly smaller than 1. Indeed, for all \({\varvec{z}}=(z_1,z_2,\dots )^{\top }\) such that \(\parallel {\varvec{z}}\parallel _2=1\), we have

where the second inequality holds because of Jensen’s inequality. Thus,

Because \(\parallel {\varvec{\varLambda }}^{(1)}\parallel \) is strictly smaller than 1, \(({\varvec{I}}-{\varvec{\varLambda }}^{(1)})\) has an inverse mapping, where \({\varvec{I}}\) is the infinite identity matrix [6, Section 29, Theorem 8]. Therefore, from (5.5), we obtain the following recurrence equations, for \(m\in {\mathbb {N}}\):

Solving this equation, we obtain
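The recursion over the position m can be explored numerically on a truncated state space. The sketch below assumes that the first-step equation has the form \(\psi _1(n,m,s)=\bigl[\lambda \sum _{k}x_k\psi _1(n+k,m,s)+n\mu \,\psi _1(n-1,m-1,s)\bigr]/(s+\lambda +n\mu )\), which is inferred from the description of the first-step transitions, and that arrivals taking the system beyond a hypothetical level `n_max` are ignored. The function name and array layout are illustrative and do not follow the indexing of \({\varvec{\psi }}_1(m,s)\).

```python
import numpy as np

def conditional_lst_busy(s, lam, mu, x, m_max, n_max):
    """Truncated approximation of psi_1(n, m, s), returned as psi[m, n].

    x maps batch size k to x_k. Requires m_max <= n_max.
    Entries with n < m stay zero, matching the convention in the text.
    """
    psi = np.zeros((m_max + 1, n_max + 1), dtype=complex)
    psi[0, :] = 1.0  # psi_1(n, 0, s) = 1
    for m in range(1, m_max + 1):
        ns = np.arange(m, n_max + 1)  # states with n >= m
        rate = s + lam + ns * mu
        # (I - Lambda) restricted to n >= m; arrivals move n to n + k
        A = np.eye(len(ns), dtype=complex)
        for a, n in enumerate(ns):
            for k, xk in x.items():
                if n + k <= n_max:
                    A[a, a + k] -= lam * xk / rate[a]
        # a service completion moves the tagged job from position m to m - 1
        rhs = ns * mu / rate * psi[m - 1, ns - 1]
        psi[m, ns] = np.linalg.solve(A, rhs)
    return psi

psi = conditional_lst_busy(1.0, 0.4, 1.0, {1: 0.5, 2: 0.5}, m_max=5, n_max=100)
print(psi[2, 3].real)
```

Because arrivals only increase n, the truncated matrix is upper triangular with unit diagonal, so each linear system is always solvable; the open question is only how the truncation error behaves.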

Next, we consider the case where the server is not processing a job when the tagged job arrives. Let \(S_0(n,m)\) denote the residual sojourn time of the tagged job, given that the tagged job is in the mth position and the system state is (0, n). Let \(\psi _0(n,m,s)\) denote the LST of \(S_0(n,m)\) for \(n\in {\mathbb {N}}\) and \(m\le n\). In addition, we define the infinite column vector \({\varvec{\psi }}_0(m,s)\) as

where \(\psi _0(n,0,s)=1\) for \(n\in {\mathbb {Z}}_+\), and we use the convention that \(\psi _0(n,m,s)=0\) for \(n<m\). As with the analysis for \({\varvec{\psi }}_1(m,s)\), i.e., (5.1)–(5.6), we obtain

where the infinite matrices \({\varvec{\varLambda }}^{(0)}\) and \({\varvec{A}}\) are defined as follows:

Note that \(({\varvec{I}}-{\varvec{\varLambda }}^{(0)})\) has an inverse mapping, which can be proved similarly to the analysis for \({\varvec{\varLambda }}^{(1)}\).

Next, we derive the unconditional LST of the sojourn time distribution. To this end, we define \(\tau (i,n,m)\) as the probability that the tagged job is located in the mth position and the state of the system becomes (i, n) immediately after its arrival. Let \(({{\mathscr {I}}},{\mathscr {L}}_p)\) denote the state of the system just before the tagged job arrives at the system. Let \({\mathscr {P}}\) denote the position at which the tagged job is located immediately after it enters the system. Let \({\widetilde{X}}\) denote the number of jobs in the batch to which the tagged job belongs. Under the assumption that \(\mathsf {E}[X]<\infty \), the distribution of \({\widetilde{X}}\) is the equilibrium distribution of X, given in [4], for \(k\in {\mathbb {N}}\),

In addition, from PASTA [18], we have, for \((i,n)\in {\mathscr {S}}\),

Let \({\mathscr {X}}(n,m):=\{k\,;\,x_k>0,\,n\le k\le m\}\). We obtain, for \(n\in {\mathbb {N}}\) and \(n\ge m\),

Similarly to the above, we obtain, for \(n\in {\mathbb {Z}}_+\) and \(n\ge m\),

Since we use the convention that \(\psi _i(n,m,s)=0\) for \(n<m\), the LST of the sojourn time distribution, denoted by \({\psi }(s)\), can be expressed as follows by using \(\psi _0(n,m,s)\) and \(\psi _1(n,m,s)\):

It is obvious that the infinite series included in \(\psi (s)\) converges. The reason is that \(\sum _{n=1}^\infty \sum _{m=1}^n\tau (0,n,m)+\sum _{n=2}^\infty \sum _{m=2}^n\tau (1,n,m)=1\) and \(0\le \psi _i(n,m,s)\le 1\) for \(i=0,1\), \(n\in {\mathbb {N}}\) and \(1\le m\le n\).

For a compact expression of (5.10), we define the infinite matrices \({\varvec{I}}_m\), for \(m\in {\mathbb {N}}\), and \({\varvec{B}}\) as

In addition, we define the infinite row vectors \({\varvec{\pi }}_0\) and \({\varvec{\pi }}_1\) as

Rearranging (5.10) by using these matrices and vectors, we obtain

From (5.6), (5.7) and (5.13), we obtain the LST of the sojourn time distribution as follows:

Theorem 3

The LST of the sojourn time distribution, \(\psi (s)\), is given as follows:

where \({\varvec{\varLambda }}^{(1)}\), \({\varvec{M}}\), \({\varvec{\varLambda }}^{(0)}\), \({\varvec{A}}\), \({\varvec{I}}_m\) and \({\varvec{B}}\) are given by (5.3), (5.4), (5.8), (5.9), (5.11) and (5.12), respectively.

Remark 3

The LST of the sojourn time distribution given in Theorem 3 is in series form involving infinite-dimensional matrices. Therefore, an approximation is necessary for numerical calculation. In Sect. 6.2, for numerical experiments, we present a method to approximate \(\psi (s)\). However, we have not yet been able to find a bound for the error. It is important future work to find an approximation method with guaranteed accuracy.

6 Numerical results

In this section, we present some numerical results for the M\({}^X\)/M/1/SET-VARI queue. We consider three types of distribution for X: the binomial distribution with parameters \(n\in {\mathbb {N}}\) and \(0<p<1\), denoted by \(\text {Binom}(n,p)\), the discrete uniform distribution with parameters \(a,b\in {\mathbb {N}}\) (\(a\le b\)), denoted by \(\text {Unif}\{a,b\}\), and the geometric distribution with parameter \(0< p<1\), denoted by \(\text {Geo}(p)\).

6.1 Energy consumption and response time trade-off

We consider the trade-off between the average energy consumption and the average sojourn time. In order to compare the variable speed CPU with the fixed speed CPU, we also consider the \(\text {M}^{X}/\text {M}/1/\text {SET-FIX}\) queue where the service speed is fixed, while other settings are kept the same as the M\({}^X\)/M/1/SET-VARI queue. We use the PGF of the number of jobs in the system derived for the \(\text {M}^{X}/\text {M}/1/\text {SET-FIX}\) queue in [2]. The assumptions regarding energy consumption per unit time for each state are given in Table 1.

Note that the constants \(K_{\text {service}}\) and \(K_{\text {set}}\) from Table 1 depend on the particular system. Let \(\mathsf {E}[W_{\text {v}}]\) and \(\mathsf {E}[W_{\text {f}}]\) denote the average sojourn time of the variable speed queue and that of the fixed speed queue, respectively. In addition, let \(\mathsf {E}[P_{\text {v}}]\) and \(\mathsf {E}[P_{\text {f}}]\) denote the average energy consumption of the variable speed queue and the fixed speed queue, respectively. \(\mathsf {E}[P_{\text {v}}]\) and \(\mathsf {E}[P_{\text {f}}]\) can be expressed as follows:

We will explore the relationship between the average sojourn time and the average power consumption. In addition, we will compare \(\mathsf {E}[W_{\text {v}}]\) with \(\mathsf {E}[W_{\text {f}}]\) under the condition that \(\mathsf {E}[P_{\text {v}}]=\mathsf {E}[P_{\text {f}}]\). In what follows, we assume that \(\alpha =0.1\) and \(\lambda \mathsf {E}[X]=1\). Note that \(\lambda \mathsf {E}[X]\) is the mean number of jobs arriving per unit time. In the numerical experiments, the value of \(\lambda \) and the distribution of X change while keeping \(\lambda \mathsf {E}[X] = 1\).

The procedure of the numerical experiments is as follows. First, fixing the value of \(\mu \in (0,3]\), we compute the average energy consumption per unit time of the M\({}^X\)/M/1/SET-VARI queue, denoted by \(A_{\text {v}}\), and the average sojourn time. Let \(\mu _{\text {f}}(A)\) denote the unique service rate which realizes the average energy consumption A in the fixed speed queue. Next, we compute \(\mu _{\text {f}}(A_{\text {v}})\) by

As a result, the energy consumption for both models is kept the same. Finally, we compute the average sojourn time of the M\({}^X\)/M/1/SET-FIX queue under the parameter \(\mu _{\text {f}}(A_{\text {v}})\). In this numerical experiment, we compute the average sojourn time from the average number of jobs in the system using Little’s formula [8].
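Written out, Little’s formula relates the mean sojourn time to the mean number of jobs through the job arrival rate \(\lambda \mathsf {E}[X]\). For the variable speed queue (the same relation applies to the fixed speed queue with its own mean queue length),

\[
\mathsf {E}[W_{\text {v}}]=\frac{\mathsf {E}[N]}{\lambda \mathsf {E}[X]},
\]

so that under the normalization \(\lambda \mathsf {E}[X]=1\) used in these experiments the mean sojourn time coincides numerically with the mean number of jobs in the system.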

In Fig. 2, we present the results when X is \(\text {Binom}(9,1/6)\) (and \(\lambda =0.4\)) and when X is \(\text {Binom}(9,1/3)\) (and \(\lambda =0.25\)). Jobs in the case of \(\text {Binom}(9,1/3)\) are more likely to arrive in larger batches than those in the case of \(\text {Binom}(9,1/6)\). In Fig. 3, we present the results when X is \(\text {Unif}\{1,4\}\) (and \(\lambda =0.4\)) and when X is \(\text {Unif}\{1,7\}\) (and \(\lambda =0.25\)). Similarly, jobs in the case of \(\text {Unif}\{1,7\}\) are likely to arrive in larger batches than those in the case of \(\text {Unif}\{1,4\}\).

In all the cases in Figs. 2 and 3, we observe the trade-off between the average sojourn time and the average power consumption, i.e., the average sojourn time is smaller when the average power consumption is larger. In addition, keeping the average number of arrivals per unit time (\(\lambda {\mathsf {E}}[X]\)) and the average power consumption the same, arriving in larger batches results in a smaller average sojourn time. This result is intuitively plausible, although we do not prove it here.

We now compare the variable speed queue with the fixed speed queue. Our numerical results show that for high-performance applications, in which delays must be kept small, having variable speed can result in both shorter delays and lower energy than having fixed speed, while the opposite is true for applications where energy usage is more important than delay performance.

These observations could be explained as follows: Energy consumption in the variable speed queue monotonically increases with the number of jobs in the system, while that in the fixed speed queue is constant whenever there are jobs in the system.

6.2 The sojourn time distribution

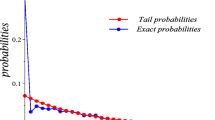

In Figs. 4 and 5, we present the probability densities of the sojourn time distributions computed by numerically inverting the Laplace–Stieltjes transform. In what follows, we assume that \(\alpha =0.1\) and \(\lambda {\mathsf {E}}[X]=1\). The LST of the sojourn time distribution is given in Theorem 3, but it is in series form involving infinite-dimensional matrices. Therefore, as mentioned in Remark 3, approximation is necessary for numerical calculation.

We present a procedure to compute the LST of the sojourn time distribution, \(\psi (s)\). First, we truncate the infinite vectors \({\varvec{\pi }}_0\) and \({\varvec{\pi }}_1\) to the vectors of their first \((N^*+1)\) elements, where the constant \(N^*\) is determined by

This is equivalent to disregarding the states with more than \(N^*\) jobs in the system whose probability is \(10^{-4}\). We compute \(\pi _{0,0}\) by (4.4) and \(\pi _{i,j}\), \(i=0,1\), \(j=1,\dots ,N^*\), by (3.3), (3.4) and (3.5). In addition, we truncate the infinite matrices appearing in \(\psi (s)\) to their \((N^* + 1) \times (N^* + 1)\) north-west corner matrices. We compute each element of the infinite matrices by (5.3), (5.4), (5.8), (5.9), (5.11) and (5.12). Let \(\psi ^*(s)\) denote the function computed by Theorem 3 using the truncated matrices and vectors. In our numerical experiments, we use the value of \(\psi ^*(s)\) as an approximation to the LST of the sojourn time distribution. It is important future work to estimate the error of this approximation. Our extensive numerical experiments show that the approximation is fairly accurate in the sense that the final results do not change much as \(N^{*}\) is increased.

Next, we present the procedure to compute the value of the sojourn time distribution for \(t\in [0,T]\) by numerically inverting the Laplace–Stieltjes transform [5] for fixed \(T > 0\). The function \(f^{(K)}(t)\) and the constant \(K^*(t)\) are defined as follows:

where \(i=\sqrt{-1}\) and \(\text {Re}(a+ib)=a\). In our numerical experiments, we use the value of \(f^{(K^*(t))}(t)\) as the sojourn time distribution. The constant h is the step size; we set \(h=1/100\).
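For readers who wish to experiment with the inversion step, the following is a generic Fourier-series (trapezoidal) inversion of a Laplace transform in the spirit of [5]. It is a sketch and not a reproduction of \(f^{(K)}(t)\) or \(K^*(t)\) above: the damping parameter `a`, the half-period `T` and the number of terms `K` are illustrative choices, and the function name is hypothetical. The approximation is meaningful for \(0<t<2T\).

```python
import numpy as np

def invert_laplace(F, t, T=10.0, a=0.5, K=5000):
    """Approximate f(t) from its Laplace transform F for 0 < t < 2T.

    Trapezoidal rule with step pi / T applied to the Bromwich integral
    along the line Re(s) = a. F must accept NumPy arrays of complex values.
    """
    omega = np.arange(1, K + 1) * np.pi / T
    Fk = F(a + 1j * omega)
    series = np.sum(Fk.real * np.cos(omega * t) - Fk.imag * np.sin(omega * t))
    return np.exp(a * t) / T * (0.5 * F(a + 0j).real + series)

# Example: F(s) = 1 / (s + 1)^2 is the transform of t * exp(-t).
value = invert_laplace(lambda s: 1.0 / (s + 1.0) ** 2, t=1.0)
print(value, np.exp(-1.0))
```

Applied to the LST of a distribution function, this procedure returns the density, because the LST of a distribution coincides with the Laplace transform of its density. Larger values of `a * T` reduce the aliasing error but amplify truncation and rounding errors through the factor `exp(a * t)`.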

In Figs. 4 and 5, we investigate the impact of the batch size distribution on the sojourn time distribution. Figure 4 presents the sojourn time distribution for \(\lambda =0.4\), \(\mathsf {E}[X]=2.5\) and \(\mu =0.1\), while Fig. 5 shows that for \(\lambda =0.25\), \(\mathsf {E}[X]=4\) and \(\mu =0.1\). Note that in both figures \(\lambda {\mathsf {E}}[X]=1\). We observe that the curves of \(\text {Binom}(9,1/6)\) and \(\text {Unif}\{1,4\}\) almost coincide. The values of the second, third and fourth moments are 7.5, 25.8 and 99.2 for \(\text {Binom}(9,1/6)\), and 7.5, 25.0 and 113.5 for \(\text {Unif}\{1,4\}\). On the other hand, the values of the second, third and fourth moments are 10.0, 58.8 and 480.0 for \(\text {Geo}(1/2.5)\). This suggests that high-order moments (roughly fourth or higher) have less influence on the sojourn time distribution.

Compared with Fig. 4, the curves for the binomial distribution, uniform distribution and geometric distribution are different in Fig. 5. The second moments are 18.0 for \(\text {Binom}(9,1/3)\), 20.0 for \(\text {Unif}\{1,7\}\) and 38.0 for Geo(1 / 2.5). This suggests that the second moment of the batch size has a significant impact on the sojourn time distribution.
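The low-order moments quoted for Fig. 4 can be recomputed directly from the probability mass functions. The sketch below rests on one assumption that is not stated explicitly in the text: since \(X\ge 1\) and \(\mathsf {E}[X]=2.5\), the binomial batch size is taken to be one plus a binomial variate with parameters (9, 1/6). The geometric distribution is taken on \(\{1,2,\dots \}\) and truncated at a hypothetical `k_max`. All function names are illustrative.

```python
from math import comb

def raw_moments(pmf, orders=(1, 2, 3)):
    """Raw moments E[X^r] of a pmf given as {value: probability}."""
    return [sum(k**r * p for k, p in pmf.items()) for r in orders]

def shifted_binomial(n, p):
    # assumption: X = 1 + B with B ~ Binomial(n, p), so that X >= 1
    return {1 + j: comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(n + 1)}

def discrete_uniform(a, b):
    return {k: 1.0 / (b - a + 1) for k in range(a, b + 1)}

def geometric(p, k_max=2000):
    # support {1, 2, ...}, truncated at k_max (the neglected tail is negligible)
    return {k: p * (1 - p) ** (k - 1) for k in range(1, k_max + 1)}

for name, pmf in [("shifted Binom(9, 1/6)", shifted_binomial(9, 1 / 6)),
                  ("Unif{1, 4}", discrete_uniform(1, 4)),
                  ("Geo(1/2.5)", geometric(0.4))]:
    print(name, raw_moments(pmf))
```

Under this reading, the three distributions share the mean 2.5, and the binomial and uniform cases also share the second moment 7.5, which is consistent with their sojourn time curves being close.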

7 Conclusion

In this paper, we have studied the M\({}^X\)/M/1/SET-VARI queue. We have derived the PGF of the number of jobs in the system in an integral form. Furthermore, we have derived the LST of the sojourn time distribution, which is obtained in series form involving infinite-dimensional matrices. Through numerical experiments, we have gained some insights into the sojourn time distribution and the average energy consumption. One remark is that the stationary queue length distribution and the sojourn time distribution of the finite buffer version can be obtained using almost the same procedure as for the infinite buffer model, so we have omitted that analysis here. Furthermore, the finite buffer model is easier in the sense that it is always stable and the sojourn time distribution does not involve infinite matrices. As future work, we plan to consider the model where the service rate is an arbitrary function of the number of jobs in the system. Models with general setup time and service time distributions may also be investigated elsewhere.

References

1. Adan, I., D’Auria, B.: Sojourn time in a single-server queue with threshold service rate control. SIAM J. Appl. Math. 76(1), 197–216 (2016)

2. Baba, Y.: The M\({}^X\)/M/1 queue with multiple working vacation. Am. J. Oper. Res. 2(2), 217–224 (2012)

3. Cong, T.D.: On the M\({}^X\)/G/\(\infty \) queue with heterogeneous customers in a batch. J. Appl. Probab. 31(1), 280–286 (1994)

4. Downton, F.: Waiting time in bulk service queues. J. R. Stat. Soc. 17(2), 256–261 (1955)

5. Durbin, F.: Numerical inversion of Laplace transforms: an efficient improvement to Dubner and Abate’s method. Comput. J. 17(4), 371–376 (1974)

6. Fomin, S.V., et al.: Elements of the Theory of Functions and Functional Analysis, vol. 1. Courier Corporation, North Chelmsford (1999)

7. Gandhi, A., Harchol-Balter, M., Adan, I.: Server farms with setup costs. Perform. Eval. 67(11), 1123–1138 (2010)

8. Keilson, J., Servi, L.D.: A distributional form of Little’s law. Oper. Res. Lett. 7(5), 223–227 (1988)

9. Lu, X., Aalto, S., Lassila, P.: Performance-energy trade-off in data centers: Impact of switching delay. In: Proceedings of 22nd IEEE ITC Specialist Seminar on Energy Efficient and Green Networking (SSEEGN), pp. 50–55 (2013)

10. Maccio, V.J., Down, D.G.: On optimal policies for energy-aware servers. Perform. Eval. 90, 36–52 (2015)

11. Mittal, S.: A survey of techniques for improving energy efficiency in embedded computing systems. Int. J. Comput. Aided Eng. Technol. 6, 440–459 (2014)

12. Norris, J.R.: Markov Chains. Cambridge University Press, Cambridge (1998)

13. Rohde, U.L.: Digital PLL Frequency Synthesizers: Theory and Design. Prentice-Hall, Englewood Cliffs, NJ (1983)

14. Shanbhag, D.N.: On infinite server queues with batch arrivals. J. Appl. Probab. 3(1), 274–279 (1966)

15. Sueur, E.L., Heiser, G.: Dynamic voltage and frequency scaling: the laws of diminishing returns. In: Proceedings of the 2010 International Conference on Power Aware Computing and Systems, pp. 1–8 (2010)

16. Whittaker, E.T., Watson, G.N.: A Course of Modern Analysis. Cambridge University Press, Cambridge (1996)

17. Wierman, A., Andrew, L., Tang, A.: Power-aware speed scaling in processor sharing systems: optimality and robustness. Perform. Eval. 69, 601–622 (2012)

18. Wolff, R.W.: Poisson arrivals see time averages. Oper. Res. 30, 223–231 (1982)

Acknowledgements

We would like to thank the guest editors and two anonymous referees for their constructive comments which significantly improved the presentation of the paper. The research of TP was partially supported by JSPS KAKENHI Grant Number 26730011.

Cite this article

Yajima, M., Phung-Duc, T. Batch arrival single-server queue with variable service speed and setup time. Queueing Syst 86, 241–260 (2017). https://doi.org/10.1007/s11134-017-9533-2

DOI: https://doi.org/10.1007/s11134-017-9533-2