Abstract

The quantum approximate optimization algorithm (QAOA) has been put forth as a method for near-term quantum computers to solve optimization problems. However, assessments of QAOA performance have mostly focused on small structured problem instances while performance on more general instances is less clear. Here, we numerically simulate QAOA pure state dynamics for every instance of MaxCut on non-isomorphic unweighted graphs with nine or fewer vertices with depth parameters \(p\le 3\). We find the approximation ratios and optimized circuit parameters concentrate across graphs of a given size and empirically show increases in concentration as graph size increases. The parameter concentration leads to two median-angle heuristics that overcome difficulties in QAOA parameter optimization and obtain mean approximation ratios within 3% and 0.2% of the optimal. We also analyze the probability to measure an optimal solution and find increasing variations between graphs as depth increases, in stark contrast to the approximation ratios which concentrate as depth increases. The resulting benchmark data set gives empirical bounds for on-going experimental realizations and lays groundwork for theoretical extensions to greater problem sizes and depths where QAOA may prove important for practically relevant problems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Noisy, intermediate-scale quantum (NISQ) computers may soon solve problems of practical importance with a quantum computational advantage [2, 3]. One promising approach uses the quantum approximate optimization algorithm (QAOA) [4,5,6,7,8,9,10,11,12,13,14,15,16,17,18] or its variants [19,20,21,22,23,24,25] to find approximate solutions to combinatorial optimization problems. By using alternating layers of a mixing operator and a cost operator, QAOA promises to prepare an approximation to the quantum state that maximizes the expectation value of the cost operator [4]. Moreover, the cost operator may represent an instance of unconstrained combinatorial optimization, which opens the application of QAOA to a wide variety of practical but challenging computational problems, including the well-known graph problem MaxCut.

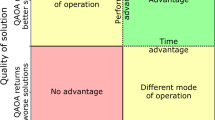

Alongside theoretical guarantees, the empirical performance of QAOA is an important open question for evaluating computational utility. The optimal number of alternating circuit layers as well as their tuning has been observed to vary with specific problem instances. Previous efforts examining MaxCut have tested performance in terms of the approximation ratio, which quantifies the average cost function value observed relative to the optimal value. These include studies on families of problem instances represented by 2-regular graphs [4,5,6], 3-regular graphs [7,8,9,10,11], small samples of random graphs [12, 13], and small samples of graphs with various fixed symmetries [14]. Notably, Crooks has shown that, for some instances, QAOA exceeds the worst-case performance bounds set by the Goemans–Williamson algorithm [13], which yields the best conventional lower bound on the approximation ratio for MaxCut. Theoretical arguments indicate QAOA will not exceed Goemans–Williamson for all instances [26, 27], however, as a heuristic method it need not exceed this bound for every instance to be useful in practice. Similarly, a classical algorithm has been developed which can outperform QAOA on MaxCut when the depth parameter \(p=1\) (to be defined shortly) [28], however, QAOA may still have practical utility as a heuristic when \(p>1\). This collection of results has encouraged further study of the more general circumstances under which QAOA may provide a practical improvement in performance.

An additional measure of QAOA performance is the resources required to prepare the optimized circuit layers. By design, the alternating layers of QAOA are tuned to prepare the approximate quantum state using parameterized gates optimized with respect to the observed cost value. However, the effort to identify these optimized circuits becomes intractable for large numbers of parameters. Previous studies have used optimization heuristics based on machine learning [13, 14] and various numerical optimization algorithms [7,8,9, 12, 23]. These results have been limited to small sets of graphs, often with simple regular structures, and an open question is whether there are general heuristics that yield good parameters quickly and consistently.

Here, we use numerical simulations of pure state dynamics to quantify performance bounds of QAOA solving an exhaustive set of MaxCut instances for \(n\le 9\) vertex graphs at depths \(p \le 3\). The exhaustive problem-instance set gives a thorough and systematic account of QAOA behavior on MaxCut for small graphs. We also evaluate the effectiveness of circuit optimization using the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm [29] by benchmarking against exact optimization software and brute force solutions for all graphs at \(p=1\) and for graphs with \(n \le 6\) vertices at \(p=2\). We confirm BFGS returns optimal angles for QAOA state preparation in all these cases. Ultimately, our simulation results reveal patterns in the optimized circuits that support heuristics for more efficient parameter selection.

Our characterizations of QAOA performance for solving MaxCut address the approximation ratio and the probability of obtaining the optimal result. While the former measure estimates the average cost function value returned by QAOA relative to the actual optimal value, the latter quantifies the probability to recover the optimal cut. We compile the results for each graph instance into a data set [1] that serves as a validated benchmark to support experimental testing of QAOA on NISQ devices as well as analyses of how specific graph structure features are correlated with performance, see Ref. [30].

The remainder of the presentation is organized as follows: we review QAOA in Sect. 2 and discuss our approach to variational circuit simulations in Sect. 3. We present results from these simulations in Sect. 4, including trends in the performance measures and gate parameters, before concluding with our key findings in Sect. 5.

2 Quantum approximate optimization algorithm

The quantum approximate optimization algorithm (QAOA) is a variational algorithm designed to find good approximate solutions to combinatorial optimization problems [4]. A problem instance is specified by a cost function C(z) with \(z = (z_{n-1}, z_{n-2}, \ldots , z_0)\) and \(z_j \in \{0,1\}\). QAOA recovers a candidate solution \(z^*\) and the quality of this candidate can be quantified by the normalized value of the cost function

Here,

is the globally optimal value with optimal solution

and \({\mathcal {R}}(z_\mathrm{max})=1\) while \({\mathcal {R}} < 1\) otherwise.

QAOA encodes the bitstring z as a quantum state with respect to the computational basis \(\vert z_j \rangle \in \{\vert 0 \rangle , \vert 1 \rangle \}\) such that \(\vert z \rangle = \vert z_{n-1},\ldots ,z_0\rangle \). The cost function C(z) is expressed as the operator \({\hat{C}}\) that is diagonal in the computational basis with matrix elements

The initial state of QAOA is taken as a uniform superposition of the computational basis states

that is transformed by a series of p unitary operations as

with variational angle parameters \(\varvec{\gamma } = (\gamma _1,\ldots ,\gamma _p)\) and \(\varvec{\beta } = (\beta _1,\ldots ,\beta _p)\). The first unitary operator

applies a C(z)-dependent phase to each of the computational basis states. The second unitary

applies coupling in the computational basis as

where \({\hat{X}}_j\) is the Pauli X operator on qubit j. The latter transitions between the \(\{\vert z \rangle \}\) depend on the C(z)-dependent phases from \({\hat{U}}({\hat{C}}, \gamma _q)\) and the angle parameters \((\varvec{\gamma }, \varvec{\beta })\). QAOA selects these parameters to maximize the value \(\langle {\hat{C}} \rangle \), as discussed in more detail in Sect. 2.1.

Measurement of the state \(\vert \psi _p(\varvec{\gamma }, \varvec{\beta })\rangle \) in the computational basis yields each \(z_j \in \{0,1\}\) and therefore a candidate solution \(z^*\). The probability to observe a specific z is given by the Born rule

Following measurement, the observed result z is used to calculate the cost C(z). Repeating the sequence of preparation and measurement approximates the distribution of z given by Eq. (10). The proposed solution \(z^*\) may then be selected as the argument that yields the largest cost function as defined by Eq. (1).

As shown by Farhi et al. [4], the probability that a measurement returns the optimal solution \(z_\mathrm{max}\) converges to unity as the QAOA depth p goes to infinity. At finite p there are theoretical arguments for worst-case performance [26, 27, 31], e.g., for certain bipartite graphs. Apart from worst-case performance bounds, less is known about the performance of QAOA at finite p, where studies suggest a modest p may suffice for achieving a quantum computational advantage [8, 13].

2.1 Gate parameter optimization

Finding optimal solutions, or good approximations, to Eq. (1) requires optimizing the angle parameters that tune each QAOA layer. A standard approach to optimizing these angles is to pick an initial set of values \((\varvec{\gamma }^{(0)},\varvec{\beta }^{(0)})\) and then prepare and measure many copies of the state \(\vert \psi _p(\varvec{\gamma }^{(0)},\varvec{\beta }^{(0)})\rangle \) to estimate the expectation value of the cost function operator as

Analytically, P(z) depends on \(\vert \psi _p(\varvec{\gamma }, \varvec{\beta })\rangle \) through Eq. (10).

When using an optimization algorithm, such as gradient descent or BFGS [29], new angles \((\varvec{\gamma }^{(1)},\varvec{\beta }^{(1)})\) are tested for increases in \( \langle {\hat{C}}\rangle \). After \((\varvec{\gamma }^{(1)},\varvec{\beta }^{(1)})\) have been selected, the process is repeated with the new angles: prepare and measure a set of states \(\vert \psi _p (\varvec{\gamma }^{(1)},\varvec{\beta }^{(1)})\rangle \), send the results \(\{z \}\) to a classical computer to calculate an estimate of \(\langle {\hat{C}}(\varvec{\gamma }^{(1)},\varvec{\beta }^{(1)})\rangle \), then pick new angles to try with the quantum computer. The optimization process is repeated until a set of angles is found to maximize \(\langle {\hat{C}} \rangle \). States with the optimized angles are repeatedly prepared and measured to sample z and identify a solution \(z^*\) that gives the best approximation.

Previous studies have found patterns in the optimized parameters that can potentially simplify gate-angle optimization. Zhou et al. [7] examined regular graphs and developed parameter optimization approaches for deep circuits using linear interpolation or discrete cosine and sine transformations of parameters from lower depths. Brandão et al. [10] have argued that parameters should be transferable between graphs with sufficiently similar structure, suggesting that approaches like that of Zhou et al. may be applicable within any families of structured graphs. Other studies suggest that parameter patterns apply more generally between varying types of graphs, as observed in small samples of random graphs [12, 13], or perhaps even between varying problems and QAOA-like algorithms, as in an approach to Max-k Vertex Cover using a variant formulation of QAOA [23]. Together these studies suggest that parameter patterns may be generic and important in simplifying optimization for QAOA. However, it is unclear if these patterns will extend over general graphs or if they are byproducts of the structures and small samples of graphs examined so far.

In addition to the angle parameters, a depth parameter p defines a given state preparation circuit and instance of QAOA. Higher depths theoretically give better performance [4], but they also require more computationally intensive optimizations. It is therefore efficient to use the smallest depth needed to obtain a desired quality of solution. Previous studies have characterized performance for 2-regular graphs at arbitrary depths [4, 5] and established performance bounds for 3-regular graphs at several low depths [11]. For general graphs, an analytical expression that characterizes performance has been derived for the lowest-possible depth \(p=1\) [5, 6] and the relationship between depth and performance under specific parameter schedules has been examined for graphs with varying symmetry [14]. Worst-case instances [26, 31] and complexity theory bounds [27] also place limitations on QAOA performance as a universal approach to optimization. However, much less is known about QAOA performance on general graphs at depths \(p> 1\) and which depths suffice for applications.

2.2 Characterizing QAOA performance

The quality of a solution \(z^*\) is characterized by the normalized cost function in Eq. (1). In QAOA, \(z^*\) depends on measurements that probabilistically sample the computational basis states following Eq. (10). Therefore, we characterize QAOA performance using the quantum approximation ratio

where \(\langle {\hat{C}}(\varvec{\gamma }, \varvec{\beta })\rangle \) is the cost as in Eq. (11). The quantity r estimates the approximation ratio with respect to measurements of \(\vert \psi _p(\varvec{\gamma }, \varvec{\beta })\rangle \). Previously, Farhi et al. have argued that measured bitstrings \(z^*\) yield \({\mathcal {R}}(z^*) \ge r\) with high probability. They used the variance \(\langle C(z)^2\rangle - \langle C(z) \rangle ^2\) to bound the mean cost value \(\langle C \rangle _\mathrm{meas}\) for a set of measurement samples to within one of the theoretical mean, \(\langle C \rangle -1 \le \langle C \rangle _\mathrm{meas} \le \langle C \rangle +1\). This implies that at least one measured sample \(z^*\) must have \(C(z^*) \ge \langle C \rangle -1\), hence \({\mathcal {R}}(z^*) \ge r-1/C_\mathrm{max} \approx r\), cf. Sec. III of Ref. [4]. The quantum r is thus related to the classical notion of an approximation ratio as a minimum bound on performance for the optimization algorithm.

A second way to characterize QAOA quantifies the probability to obtain an optimal solution \(z_\mathrm{max}\) from Eq. (3).To be clear, QAOA is an approximation algorithm and it is not expected to always return an optimal solution. The optimal solution probability is of interest because it gives the likelihood of obtaining the best possible outcome of using QAOA. In general, there can be multiple optimal solutions, which we denote as the set of k optimal solutions \(\{z_\mathrm{max}^i\}_{i \in [k]}\), and the probability of obtaining an optimal solution is

where \(P(z_\mathrm{max}^i)\) is the probability of \(z_\mathrm{max}^i\) from Eq. (10). Letting \(P_s\) be a desired threshold to recover the max cut, the minimal number of samples \(N_s\) to observe a cut that maximizes the cost function is given by

since the probability to observe no maximum cut in \(N_s\) samples is \(P' = (1-P(C_{max}))^{N_s}\) and \(P' = 1-P_s\).

2.3 MaxCut

MaxCut partitions a graph G such that the number of edges shared between the partitions is maximized. Let \(G=(V,E)\) with \(V = \{n-1,\ldots ,0\}\) the set of vertex labels and \(E = \{ \langle j,k\rangle : j,k \in V\}\) the set of unweighted edges. For each vertex \(j \in V\), a cut \(z = (z_{n-1},\ldots ,z_0)\) assigns a binary label \(z_j \in \{0,1\}\) that denotes the corresponding set. We express the cost function for MaxCut as a sum of pair-wise clauses

with

An individual clause \(C_{\langle j,k\rangle }(z)\) is maximized when two vertices j, k connected by an edge are assigned to opposing sets, and the purpose of MaxCut is to find a cut \(z_\mathrm{max}\) that maximizes Eq. (15). There are always at least two maximum cuts since the \(C_{\langle j,k\rangle }\) is invariant under \(0\leftrightarrow 1\) for all the bits \(z_j\) in any z.

From Eq. (4), the cost function is cast as a sum of operators for the edges

with edge operators

where \(\hat{\mathbb {1}}\) is the identity operator and \({\hat{Z}}_j\) is the Pauli Z operator on qubit j. The notation \({\hat{Z}}_j {\hat{Z}}_k\) uses an implicit tensor product with the identity operator on the Hilbert space of unlisted qubits.

3 Simulations of QAOA

We simulate QAOA to solve MaxCut of all connected non-isomorphic graphs with \(n \le 9\) vertices [32]. We only consider connected graphs since solutions for any disconnected graph can be constructed from separate solutions of the connected graph components. The number of graphs \(N_n\) grows rapidly with the number of vertices n, as shown in Table 1.

3.1 Global optimal solutions for \(p=1\)

We first optimize instances of QAOA for depth \(p=1\) using the expectation value of the cost function operator

Previously, Hadfield derived a general expression for \(\langle {\hat{C}}_{\langle j,k\rangle } \rangle \) at depth \(p=1\) as [6]

where d = deg\((j)-1\) and e = deg\((k)-1\) are one less than the degrees of the vertices j and k connected by the edge \(\langle j,k\rangle \) and f is the number of triangles containing \(\langle j, k\rangle \).

We maximize \(\langle {\hat{C}}(\gamma _1, \beta _1)\rangle \) using the numerical optimization software Couenne [33] [?], which optimizes problems of the form

Here, we specify the continuous variable \({\mathbf {x}} = (\gamma _1, \beta _1)\) and the integer variable \({\mathbf {y}}\) is not used. The function \(F({\mathbf {x}},{\mathbf {y}})\) then corresponds with Eq. (19) while the polynomial constraints \(p_i({\mathbf {x}})\) are not included since there are no constraints on the angles. Couenne simplifies this problem by reformulating it with auxiliary variables. Then, a convex relaxation of the reformulated problem is found and solved using branch and bound techniques. The process is repeated until an optimal solution is recovered [33] [?]. The results provide globally optimal instances of QAOA for \(p=1\).

3.2 Numerical searches for general p

For QAOA depth \(p > 1\), we use the Broyden–Fletcher–Goldfarb–Shanno (BFGS) optimization algorithm [29]. BFGS has been used previously in a wide variety of contexts including QAOA [7]. BFGS begins with an initial set of angles and then iteratively finds angles that converge to a local maximum of \(\langle {\hat{C}} (\varvec{\gamma }, \varvec{\beta }) \rangle \). Each iteration is determined by the numerical gradient of \(\langle {\hat{C}} \rangle \) and an approximate Hessian second-derivative matrix that is constructed from the gradient at successive steps. Using the approximate Hessian when calculating the steps gives faster convergence than first-order methods while also avoiding the computational expense of calculating the exact Hessian.

Convergence of multi-start BFGS optimization for the 853 graphs with \(n=7\) vertices and depths \(p=1,2,3\) in (a–c), respectively. Solid black lines show the mean approximation ratio r over the graphs and black dashed lines show ± one standard deviation. For \(p=1\) BFGS converges to the global optimal solutions from Couenne in red

Apart from our conventional optimization approach with BFGS, it is noteworthy that other quantum-specific optimization approaches [34,35,36] and theoretical results [37] have been topics of ongoing development in the literature. These methods purport to improve on aspects of the variational parameter optimization, and may therefore be of interest in future studies of QAOA. However, for the problems addressed here, BFGS suffices to obtain perfect agreement with exact results in all cases we test, as detailed below.

We test convergence of BFGS using random initial angles and monitoring the local maximum of \(\langle {\hat{C}} \rangle \). We repeat the initialization procedure a fixed number of times that varies with depth p. We use 50 random-angle seeds when \(p=1\), 100 random seeds when \(p=2\), and 500 seeds when \(p=3\). As shown in Fig. 1a, the average and standard deviation of the quantum approximation ratio at \(p=1\) quickly converges to the solution obtained using Couenne. We compared results from BFGS to results from Couenne for every graph with \(n \le 8\) vertices and found exact agreement out to the 6-digit precision of Couenne. We were unable to verify solutions at \(n=9\) due to numerical issues with Couenne, so instead we checked these results using brute force searches, thereby resolving the issue of being unable to verify with Couenne. Note these numerical issues are not with BFGS but with the Couenne approach for checking optimality of BFGS. We are not aware of any numerical issues with BFGS, or the results in this paper, which are computed with BFGS. As shown in Fig. 1b, c, we observe similar behavior in convergence for the cases of \(p=2\) and 3.

We extend our validation of BFGS for \(n=9\) with \(p=1\) and \(n \le 6\) at \(p=2\) using brute force search. We evaluate \(\langle {\hat{C}} \rangle \) for all \((\gamma _1,\gamma _2,\beta _1,\beta _2)\) on a grid with spacing \(\pi /100\) for each angle. We found both BFGS and brute force return values for the optimal \(\langle {\hat{C}} \rangle \) to within an additive factor of \(10^{-2}\). As BFGS consistently recovers larger maxima, we attribute these differences to coarse-graining in the brute force method. We conclude that BFGS is finding globally optimal results at \(p=2\) for these graphs.

To verify our results are consistent for \(n > 6\) with \(p=2\) and for all n with \(p=3\), we run BFGS calculations a second time using different sets of 100 or 500 random seeds. We observe increases in \(\langle {\hat{C}} \rangle \) after the second round of BFGS for less than 1% of graphs at each n and p. We conclude that BFGS typically finds globally optimal solutions at these higher n and p though it is unclear how deviations may grow beyond \(p=3\).

3.3 Symmetry analysis

The optimized angles recovered by BFGS exhibit a variety of symmetries, in which multiple angle solutions give the same optimized expectation value of the cost function. We simplify these results by systematically reporting a single assignment for the optimized angles to compare the distributions of angles recovered across different graph instances. Symmetry analysis proves essential for revealing patterns in the optimized angles discussed in Sects. 4.3–4.4. We summarize how we use the symmetries below with detailed descriptions of the symmetries deferred to “Appendix.”

Many of these observed symmetries are identified by extending the analysis of Zhou et al. for regular graphs [7], which we apply more broadly to graphs where each vertex has even degree or each vertex has odd degree. Specifically, the angles \(\beta _q\) are periodic over intervals of \(\pi /2\) and, without loss of generality, may always transform to the interval \(-\pi /4 \le \beta _q \le \pi /4\) for all q. We always set \(\beta _1 < 0\) following the “time-reversal” symmetry that follows from the dynamics in Eq. (6). There are no symmetries in \(\gamma _q\) for generic graphs, so generally these have \(-\pi \le \gamma _q \le \pi \) for all q. For graphs where every vertex has even degree or every vertex has odd degree, we use symmetries to always transform to angles in the half-intervals \(-\pi /2 \le \gamma _q \le \pi /2\). A small number of graphs have additional symmetries and for these we report angles with the most component \((\gamma _q,\beta _q)\) pairs inside the intervals

4 QAOA simulation results

We analyze QAOA performance on the MaxCut problem for graphs with various numbers of vertices n at various depths p using the two performance measures of Sect. 2.2. We also discuss patterns in the observed optimized angle distributions and we construct a search heuristic that uses these patterns to reduce the computational expense of parameter optimization.

4.1 Approximation ratio

Our first measure of QAOA performance on MaxCut is the approximation ratio r from Eq. (12). We present the distribution of r across graphs with various n and p. Figure 2 shows an example of the distribution of r for all 853 graphs with \(n=7\) vertices for depths \(p = 0,\ldots ,3\) in panels (a)–(d), respectively. Note that \(p=0\) corresponds to the initial state in Eq. (5). Trends for other n are similar and described further below. In Fig. 2, the width of the plotted bars is smaller than the histogram bin width \(w=0.02\) to clearly visualize the distributions. Each panel also overlays a Gaussian distribution \((w/\sqrt{2\pi \sigma ^2})\exp (-(r-{\bar{r}})^2/2\sigma ^2)\) using the mean \({\bar{r}}\) and standard deviation \(\sigma \) in Table 2 as calculated from the observed distributions of r.

Mean r across all graphs with various numbers of vertices n and depths p. (a–d) show \(p=0,\ldots ,3\), respectively. Error bars represent standard deviations of the r, the dashed red line represents the worst-case approximation ratio \(r_\mathrm{GW}\) of the Goemans–Williamson algorithm, and crosses show the smallest observed r

Figure 2a shows r for the initial states of Eq. (5). Every z is equally probable in these states, so r is equivalent to the approximation ratio obtained by averaging random cuts from the uniform distribution. This cuts half the edges in E on average, \(\langle {\hat{C}} \rangle = E/2\), while the maximum number of cuts is bounded by the total number of edges, \(C_\mathrm{max} \le E\), so \(r = \langle {\hat{C}}\rangle /C_\mathrm{max} \ge 1/2\).

Figure 2b–d shows similar distributions of the r for \(p=1,2,3\), respectively. These are well described by Gaussian distributions for each p and r is approaching one as p increases. QAOA instances also perform more similarly with increasing depth as indicated by the decrease in standard deviation of r.

We further quantify increases in r with p by calculating \({\bar{F}}_R(r)\) as the fraction of graphs with approximation ratio r that exceeds a threshold R. This is given by the complimentary cumulative distribution function

where \(N_n\) is the number of non-isomorphic connected graphs with n vertices, \(\sum _{G(n)}\) is the sum over these graphs, and \(\varTheta \) is the Heaviside step function. Table 2 presents \({\bar{F}}_R(r )\) for \(n=7\) with \(R = 0.7, 0.8,\) and 0.9. Almost all graphs exceed \(r \ge 0.7\) at \(p=1\) and more than half exceed \(r \ge 0.8\). At \(p=2\), all graphs have \(r > 0.8\) while most graphs have \(r > 0.9\) at \(p=3\). We note that the latter instances exceed the worst-case lower bound for the approximation ratio of \(r_{GW} = 0.87856\) set by the Goemans–Williamson algorithm [38], which represents the best universal lower bound from a conventional algorithm.

We analyzed the distribution of r for all \(n\le 9\). Similar trends are observed for all n: the mean r over the set of graphs increases as p increases and the distributions become narrower in terms of the standard deviation. The distributions of r over different graphs at the same n are well described by Gaussian distributions when \(n \ge 7\), while for \(n < 7\) the small numbers of graphs yield more irregular histogram distributions.

Figure 3 shows the mean and minimum of r as a function of n for \(p=0,\ldots ,3\) in panels (a)–(d), respectively. Averages for \(p=0\) are approximately 2/3 for all n while the standard deviation decreases with increasing n due to the number of graphs sampled increasing as \(N_n\). For \(p\ne 0\) in Fig. 3b–d, the smallest graphs reach an optimal \(r=1\) while larger graphs decay to steady values of \(r<1\) as n increases. There is not much variation in the mean or standard deviation of r between nearby n, especially at the largest n, so larger n might be expected to return similar r distributions. As p increases, at each n the mean r becomes larger and the standard deviations become smaller, which is consistent with the trends at \(n=7\) seen in Fig. 2. The mean r all exceed the Goemans–Williamson \(r_\mathrm{GW}\) by at least a standard deviation at \(p=3\). The minimum values of the r, however, do not exceed the Goemans–Williamson bound at large n for the p in the figure; the worst-case graphs for each n and p are shown in Supplemental Information. For a detailed analysis of QAOA performance and how it relates to graph structure, based on the data set developed here, we refer the reader to Ref. [30]. For all data considered here, see Ref. [1].

4.2 Probability of the maximum cut

We next assess the performance of QAOA in terms of the probability for obtaining the maximum cut, \(P(C_\mathrm{max})\). Figure 4 shows an example of the normalized distribution of the \(P(C_\mathrm{max})\) for the 853 graphs with \(n=7\) at varying depths p. Statistics describing these distributions are given in Table 3.

The distribution for \(p=0\) shown in Fig. 4a peaks around \(P(C_\mathrm{max}) \approx 0\) with a rapid decrease away from zero, as expected. An exponential fit for this histogram distribution yields \(\exp (-\kappa _0P(C_\mathrm{max}))\) with \(\kappa _0 = 29.1 \pm 0.9\). At \(p=0\), each possible cut is equally probable in the initial state and there are \(2^n\) possible cuts with at least two maximum cuts. This implies the bound

where the “0” subscript refers to the initial state. The minimum value \(P_0(C_\mathrm{max})=1/2^{n-1}\) gives the main contribution to the lowest bar in Fig. 4a while higher values are obtained for those graphs with additional symmetries, for example, in a complete graph where symmetry gives \(n'!/[(n'/2)!^2]\) maximum cuts, where \(n' = n\) for n even and \(n'=n+1\) for n odd. The peak around \(P_0(C_\mathrm{max})=1/2^{n-1}\) indicates that large degeneracies like this are uncommon in the total set of graphs.

Figure 4b shows \(P(C_\mathrm{max})\) when \(p = 1\). We fit this distribution with an exponential starting at the second histogram bar and similar to the \(p=0\) distribution. This yields a smaller decay constant \(\kappa _1 = 7.0 \pm 0.3\). Panels (c) and (d) show the distributions for \(p=2\) and 3, respectively. These distributions are better described by Gaussian distributions with means and standard deviations presented in Table 3. The means increase with p and the distributions widen indicating greater differences between graphs at larger p. The graphs pass thresholds \(P(C_\mathrm{max}) > R\) gradually, as seen by \({\bar{F}}_R(P(C_\mathrm{max}))\).

We contrast distributions of \(P(C_\mathrm{max})\) against distributions of the quantum approximation ratio r in Fig. 2 and Table 2. The mean of \(P(C_\mathrm{max})\) increases the least when going from \(p=0\) to \(p=1\), while by contrast the mean of r increases the most in going from \(p=0\) to \(p=1\). The exponential and Gaussian distributions are also significantly different at small p. At higher p, \(P(C_\mathrm{max})\) and r both follow Gaussian distributions but there are differences in how their widths change with p, with increasing differences between different graphs for \(P(C_\mathrm{max})\) and the opposite for r.

We explain why distributions of r have high averages and narrow widths when concurrently \(P(C_\mathrm{max})\) presents broad widths and relatively small averages. Consider the normalized eigenspectrum \({\hat{C}}/C_\mathrm{max}\) of energies \(C(z)/C_\mathrm{max}\). For each n, we made a histogram of \(C(z)/C_\mathrm{max}\) for each graph, then average these values to obtain an average spectrum for \(C(z)/C_\mathrm{max}\). Figure 5 shows the average spectrum of \(C(z)/C_\mathrm{max}\) at \(n=7\) vertices with each data point indicating the fraction of basis states in a histogram bin of width 1/12 averaged over all graphs with \(n=7\). The results look similar for other \(n \ge 6\) while the distributions for \(n \le 5\) are irregular due to small numbers of graphs. We show error bars in the figure denoting the first and third quartiles of the distributions of the fractions of basis states, calculated by listing the fractions for each graph in increasing order then taking the entries 1/4 and 3/4 of the way up the list, rounded to the nearest integer.

Most basis states give sub-optimal cuts \(C(z)/C_\mathrm{max} < 1\) in the average spectrum of Fig. 5, with the majority of states in the range \(2/3 \lesssim C(z)/C_\mathrm{max} \lesssim 0.95\) and with few optimal states at \(C(z)/C_\mathrm{max} = 1\). Thus, optimization of r in state preparation favors large total probabilities in many states with near-optimal \(C(z)/C_\mathrm{max}\) over the smaller probability of preparing truly optimal states. This gives relatively high and uniform r for different graphs but a much more variable \(P(C_\mathrm{max})\).

Probabilities for obtaining the maximum cut \(P(C_\mathrm{max})\), averaged over graphs at various n, with \(p=0,\ldots ,3\) in (a–d), respectively. Asymmetric error bars show the quartiles of the distributions of \(P(C_\mathrm{max})\), the dashed black line in (a) follows \(1/2^{n-1}\) from Eq. (24), crosses show minimum values, solid lines show the exponential fits of Eq. (25) to the indicated data, with parameters from Table 4

Figure 6 shows how the average of \(P(C_\mathrm{max})\) varies for the distribution of graphs at various n and p using asymmetric error bars expressed as quartiles. The mean of \(P(C_\mathrm{max})\) decreases exponentially with n as shown by the blue fit to the averages

with the fit parameters in Table 4. We fit to a subset of the data at each p to include only the largest n where decay is observed, and we show the n we fit at each p by the location of the fit curve.

At \(p=0\) the first quartiles follow the minimum of Eq. (24), shown by the dashed black line. This indicates many of the graphs saturate the minimum theoretical value of \(P_0(C_\mathrm{max})\). As n increases, the averages and quartiles of the \(P(C_\mathrm{max})\) distributions approach small values near the minimum of Eq. (24).

Figure 6b shows the averages and quartiles of the distributions of \(P(C_\mathrm{max})\) at \(p =1\). The average \(P(C_\mathrm{max})\) have increased relative to \(p=0\) and are again decreasing exponentially with n, but the rate of exponential decay \(k_p\) is about half of the rate at \(p=0\), see Table 4. The error bars denoting quartiles reduce for \(n=3\), as these graphs are approaching full optimization. For higher n the error bars increase to indicate there are larger deviations between different graphs at \(p=1\).

The same trends continue at higher p: the average \(P(C_\mathrm{max})\) increases with p, with decreasing exponential decay constants in Table 4. The distributions widen as p increases at large n, indicating \(P(C_\mathrm{max})\) is increasingly sensitive to graph structure. This is in contrast to the distributions of the r, which narrow as p increases, with more similar r for different graphs.

4.3 Optimized angle distributions

We next discuss the distribution of optimized angle parameters shown in Figs. 7, 8, and 9 for the 853 graphs at \(n=7\). These figures present two-dimensional histograms of the angle distributions, where the optimized (\(\gamma _j, \beta _j)\) have been organized into bins of size \(\pi /20\times \pi /20\) and counted. We use a logarithmic color scale to visualize the distributions and add a base of one counts in each bin so the logarithm does not diverge when there are zero counts.

Patterns have been observed previously in the optimized angles for solving simple families of graphs. Optimized angle patterns have been observed for 3-regular graphs [7], small samples of random graphs [12, 13], and even in a variant formulation of QAOA solving the Max-k Vertex Cover problem [23]. An argument has been given that angle patterns should be expected within families of graphs with systematic structure [10]. However, it is not clear if similar angle patterns hold for over the set of general graphs considered here.

4.3.1 Angle patterns at \(p=1\)

Figure 7 shows the distribution of optimized angles for all graphs with \(n=7\) vertices at depth \(p=1\) in Eq. (6). The overwhelming majority of the angles are focused in the bright spot near \(\gamma _1 \approx -\pi /6\), \(\beta _1 \approx -\pi /8\). This concentration is generic for all n studied: a very limited range of angles \((\gamma _1, \beta _1)\) are observed to optimize almost all graphs with \(p=1\).

We categorize the optimized angles using a partitioning of parameter space. Using \(\varGamma = [-\pi /2,0]\) and \({\mathrm {B}}= [-\pi /4,0]\) from Eq. (22), we say angle-pairs \((\gamma _j,\beta _j)\) inside \((\varGamma ,{\mathrm {B}})\) follow the angle patterns and say angle-pairs outside \((\varGamma ,{\mathrm {B}})\) deviate from the pattern. Categorizing by \((\varGamma ,{\mathrm {B}})\) quantifies how many graphs have angles that follow the observed patterns in Fig. 7 at \(p=1\) and also extends to categorizing graphs when \(p>1\).

Let \(D_{n,p}\) be the number of n-vertex graphs that deviate from the angle patterns at depth p. At \(p=1\), \(D_{n,p}\) is the number of graphs with \((\gamma _1,\beta _1) \not \in (\varGamma ,{\mathrm {B}})\). For general p, \(D_{n,p}\) is the number of graphs with \((\gamma _j,\beta _j) \not \in (\varGamma ,{\mathrm {B}})\) for any j. Let

be the fraction of n-vertex graphs that deviate from the patterns at depth p, where the \(N_n\) is the total number of graphs from Table 1. We calculate the \(D_{n,p}\) using a numerical error tolerance of \(\epsilon = 10^{-5}\), so that \(\gamma _j\) that are in \(\varGamma \) to within numerical error are counted as \(\gamma _j \in \varGamma \), and similarly for the \(\beta _j\).

Table 5 lists \(D_{n,p}\) and the fractions \({\mathcal {D}}_{n,p}\) of graphs that do not follow the angle patterns at \(p=1\) for \(n \le 9\). Every graph follows the pattern with \(n \le 6\), a small number of graphs deviate beginning with \(n=7\). The number of graphs that deviate increases with n, but they are a small fraction of the total number of graphs.

4.3.2 Angle patterns at \(p > 1\)

Figure 8 shows the distributions of optimized angles for all graphs with \(n=7\) vertices with depth \(p=2\). The observed \((\gamma _1, \beta _1)\) differ from the values at \(p=1\) because these angles are optimized together with \((\gamma _2,\beta _2)\) at \(p=2\). The majority of \((\gamma _1, \beta _1)\) are still concentrated near \(\gamma _1 \approx -\pi /6\), \(\beta _1 \approx -\pi /8\) as for \(p=1\), but the distribution is more dispersed within \((\varGamma ,{\mathrm {B}})\) in comparison with Fig. 7. There is a new cluster of angles at \(\gamma _1 \approx \pi /3\) with a spread over all the \(\beta _1\) (recall \(\beta _1 \le 0\) by the symmetry of Sect. 3.3), and a small cluster of graphs with angles \(\gamma _1 \approx -9\pi /10\), \(\beta _1 \approx -\pi /4\).

Figure 8b shows the distribution of the second layer of angles \((\gamma _2,\beta _2)\). The majority of angles follow a peaked distribution in the parameter space of \((\varGamma ,{\mathrm {B}})\). The distribution is shifted from the distribution at \(p=1\) to new angles \(\gamma _2 \approx -\pi /4\) and \(\beta _2 \approx -\pi /13\). There are also subsets of graphs with angles that form clusters around seemingly random parameter values.

We again categorize the optimized angles as agreeing with the pattern when they are inside the parameter space of \((\varGamma ,{\mathrm {B}})\) from Eq. (22) and deviating from the pattern when they are outside \((\varGamma ,{\mathrm {B}})\) to within numerical error. The total fraction of graphs that deviate from the pattern is \({\mathcal {D}}_{n,p}\) from Eq. (26). We further separate the \({\mathcal {D}}_{n,p}\) into components to understand which \((\gamma _j,\beta _j)\) deviate and their correlations at \(p=2,3\).

Let \({\mathcal {D}}^{(j)}_{n,p}\) denote the fraction of graphs that deviate only in the jth angle pair, \((\gamma _j,\beta _j) \not \in (\varGamma ,{\mathrm {B}})\) and \((\gamma _k,\beta _k) \in (\varGamma ,{\mathrm {B}})\) for all \(k \ne j\). Let \({\mathcal {D}}^{(jk)}_{n,p}\) denote the fraction of graphs where two angle pairs deviate, \((\gamma _j,\beta _j) \not \in (\varGamma ,{\mathrm {B}})\) and \((\gamma _k,\beta _k) \not \in (\varGamma ,{\mathrm {B}})\) but \((\gamma _l,\beta _l) \in (\varGamma ,{\mathrm {B}})\) for all \(l \not \in \{k,j\}\), and similarly let \({\mathcal {D}}^{(jkl)}_{n,p}\) denote the fraction of graphs where the jth, kth, and lth angle-pairs deviate from \((\varGamma ,{\mathrm {B}})\). The total fraction of graphs that deviate from the angle patterns is the sum of fractions that deviate in different sets of angle pairs, for example, at \(p=2\) the total fraction of graphs that deviate is the sum of fractions that deviate in one or both angle pairs, \({\mathcal {D}}_{n,p} = {\mathcal {D}}^{(1)}_{n,p} + {\mathcal {D}}^{(2)}_{n,p} + {\mathcal {D}}^{(12)}_{n,p}\).

Table 6 shows the fractions of graphs that deviate from the angle patterns at \(p=2\). The total fraction of graphs that deviate \({\mathcal {D}}_{n,p}\) typically decreases as n increases, however, the \({\mathcal {D}}_{n,p}\) is also increasing with p as seen by comparison with Table 5. The angles deviate most often in \((\gamma _2,\beta _2)\) or in both \((\gamma _1,\beta _1)\) and \((\gamma _2,\beta _2)\), with \({\mathcal {D}}^{(2)}_{n,p} \approx {\mathcal {D}}^{(12)}_{n,p}\), while deviations in only \((\gamma _1,\beta _1)\) are observed less often, with \({\mathcal {D}}^{(1)}_{n,p} < {\mathcal {D}}^{(2)}_{n,p}, {\mathcal {D}}^{(12)}_{n,p}\).

Figure 9 shows the optimized angle distributions at \(p=3\) and \(n=7\); Table 7 shows the fractions of graphs that deviate from \((\varGamma ,{\mathrm {B}})\). These continue the pattern: the majority of angles are concentrated in a single cluster in \((\varGamma ,{\mathrm {B}})\) at each layer, with additional small clusters of angles distributed unpredictably over the parameter space. The fractions of graphs in these clusters increases with p. Deviations from the angle patterns occur most often in the final layer of angles and in combinations connecting the final layer with earlier layers, with relatively large \( {\mathcal {D}}^{(3)}_{n,p} \approx {\mathcal {D}}^{(23)}_{n,p} \approx {\mathcal {D}}^{(123)}_{n,p}\). Typically, smaller fractions of graphs deviate in the earlier layers only, as in \({\mathcal {D}}^{(1)}_{n,p}, {\mathcal {D}}^{(2)}_{n,p}\), and \({\mathcal {D}}^{(12)}_{n,p},\) or in disjoint layers, as in \({\mathcal {D}}^{(13)}_{n,p}\). The total fractions of graphs that deviate from the patterns \({\mathcal {D}}_{n,p}\) decreases with n and increases with p.

The deviations in the clusters of angles away from \((\varGamma ,{\mathrm {B}})\) appears to be due to differences in graph structure. We have confirmed that optimal angles deviate from \((\varGamma ,{\mathrm {B}})\) for some graphs. While parameter optimization is limited by the sampling of random seeds with BFGS, these deviations are not attributed to limits on sampling. We expect the clusters contain graphs with similar structures, with more graphs in the clusters at higher p indicating a greater sensitivity to graph structure features. In Supplemental Information, we show an example of the cluster at \(\gamma _1 > 0\) for \(n=7\) and \(p=2\) from Fig. 8a. The graphs show similarities, such as an axis of reflection symmetry, which may be related to their deviations in the angles. Our line of reasoning, that similar graphs are optimized with similar angles, is consonant with the previous theoretical analysis of Brandão et al. [10] who analyzed angles for graphs drawn from similar distributions; see also Shaydulin et al. [14, 39] who have used graph symmetries in an approach for predicting QAOA angles. A new result brought out by our approach is that angles that optimize a graph at a given p are not necessarily close to the angles that optimize the same graph at \(p+1\). However, for most graphs at the n and p tested here, the angle variations are minimal and contained in \((\varGamma ,{\mathrm {B}})\). This motivates a heuristic approach to identifying optimized angles for most graphs, developed in the next section.

4.4 Median angles

We consider how patterns of optimized angles may identify good approximate angles for most graphs and greatly reduce the computational cost of searching for angles with BFGS. Similar uses of angle patterns have been considered for 3-regular graphs [7], families of structured graphs [10], and small samples of random graphs [13].

We define a set of angles that follows the angle patterns by first taking the median \(\gamma _1\) over all \(\gamma _1\) for graphs with \(n=7\) and \(p=3\), then define similar median \(\gamma _j\) and \(\beta _j\) for each j to obtain the set of angles shown in Table 8. We consider two approaches to QAOA that use these angles to avoid the computationally expensive random seeding of the standard BFGS approach. The first approach uses the median angles in the evolution of Eq. (6) without any optimization, results from this approach are denoted \(r_\mathrm{m}\) and \(P_\mathrm{m}(C_\mathrm{max})\). The second approach uses the median angles as seeds in a single BFGS optimization, results from this approach are denoted \(r_\mathrm{mB}\) and \(P_\mathrm{mB}(C_\mathrm{max})\). Figures 10 and 11 compare results from the median angle approaches at \(n=7\) and \(p=3\) to our previous results from BFGS optimization with hundreds of random seeds. The results are visualized using two-dimensional histograms on a logarithmic color scale.

Figure 10a compares the standard r from the full BFGS search to the \(r_\mathrm{m}\) from the median angles without any optimization. The results are concentrated near the diagonal, shown by the white dotted line, and the mean and standard deviation of the difference \(r - r_\mathrm{m}\) is small, as seen in Table 9. The median angles \(r_\mathrm{m}\) give a good approximation to the r from the full BFGS search.

Two-dimensional histograms comparing the approximation ratio from the full BFGS search to results using the median angles without optimization (a) and using the median angles as seeds in BFGS (b), for \(n=7\) and \(p=3\). Dashed white lines indicate the diagonals where \(r_\mathrm{m} = r\) and \(r_\mathrm{mB} = r\)

Figure 10b compares the standard r against the \(r_{\mathrm{mB}}\) from single BFGS optimizations using the median angles as seeds. The \(r_{\mathrm{mB}}\) are significantly improved in comparison with the \(r_\mathrm{m}\), with 87% of the results satisfying \(r_\mathrm{mB} = r\) up to an additive factor of \(10^{-6}\), with significantly reduced mean and standard deviation of the difference \(r_\mathrm{mB} - r\) in Table 9. The approximation ratio from the single runs of BFGS with the median angle seeds are typically identical or close to results from the BFGS search with random seeds and are calculated at a small fraction of the computational expense.

Figure 11 assesses the probability of obtaining the maximum cut in the median angle approaches. The \(P_\mathrm{m}(C_\mathrm{max})\) in Fig. 11a roughly follow the \(P(C_\mathrm{max})\) along the diagonal but with some considerable spread below the diagonal; note the difference in scale in comparison with the r in Fig. 10. There is also some spread above the diagonal, indicating the median angles give higher probabilities for the maximum cut for some graphs. The mean and standard deviation of the difference \(P_\mathrm{m}(C_\mathrm{max}) - P(C_\mathrm{max})\) is shown in Table 9; they are small but larger than the corresponding values for \(r-r_\mathrm{m}\). Overall, the median angles give probabilities for the maximum cut that are typically close to the full BFGS results, but the probabilities are more sensitive to graph structures and vary significantly for some graphs.

Histograms similar to Fig. 10 but showing the probability of obtaining a maximum cut

Figure 11b shows \(P_\mathrm{mB}(C_\mathrm{max})\), where the median angles have been used in a single BFGS optimization. The results are much closer to the diagonal than in Fig. 11a and the mean and standard deviation of the difference \(P(C_\mathrm{max}) - P_\mathrm{mB}(C_\mathrm{max})\) are smaller than the corresponding quantities with \(P_\mathrm{m}(C_\mathrm{max})\). In comparison with the \(r_\mathrm{mB}\), we again see the probability of obtaining the maximum cut is more sensitive to the choice of angles, with greater deviations for some graphs in the figures and a larger mean and standard deviation of the difference in Table 9. However, for most graphs the median angles work very well, with 87% of graphs obtaining identical probabilities for the maximum cut using the median angles as seeds in BFGS and using the much more computationally expensive search over random seeds.

5 Conclusions

We have presented results that identify empirical performance bounds on optimized instances of QAOA for MaxCut. Using numerical simulations, we investigated an exhaustive set of MaxCut instances for depths \(p \le 3\) on graphs with \(n\le 9\) vertices. We calculated optimal solutions using exact numerical search and brute force search at \(p=1\) and 2 and validated results from BFGS at depths \(p \le 3\). The catalog of graph instances, optimized angles, and simulated states are available online [1].

Our analysis used the approximation ratio r and the probability for obtaining a maximum cut \(P(C_\mathrm{max})\) as measures of QAOA performance. We observed that r becomes more similar across graph structures as p and n increase and the Gaussian distributions of r narrow. Most graphs at \(n \le 9\) exceed the worst-case Goemans–Williamson bound by \(p=3\) in these narrow distributions, indicating viability of modest-depth QAOA to outperform the lower GW bound in many (though not all) instances. A detailed analysis of correlations between graph features and QAOA performance, using this data set, has been presented in Ref. [30].

In contrast to the narrowing distributions of r, distributions of \(P(C_\mathrm{max})\) were found to broaden with increasing p. We attributed the difference to design of the cost function and its corresponding spectrum. A preponderance of nearly optimal states skews the optimization of r away from the much smaller set of truly optimal states. While this yields a large, uniform value for r, it leaves \(P(C_\mathrm{max})\) less constrained. One alternative is to focus optimization on state preparation to ensure the largest values of \(P(C_\mathrm{max})\), for example by choosing gate angles that optimize a nonlinear function such as \(\langle \exp (\alpha {\hat{C}})\rangle \) [24]. For \(p>0\), we observed an exponential decay in \(P(C_\mathrm{max})\) with respect to n. The rate constant, \(k_p\), was found to decrease as p increases, and this raises the question as to whether such exponential behavior can predict performance metrics at larger n and p.

The patterns observed in the optimized angles across this exhaustive set of instances mirror previous results for narrower cases [7, 10, 12, 13, 23]. Using these patterns as a search heuristic demonstrated high-quality results for most graphs and required a significantly smaller computational cost than BFGS search with random seeding. For larger n and p, this work suggests identifying median angles from optimizations on a small set of graphs and using these angles on varying graphs at the same n and p. If the angle distributions remain concentrated, then median angles from the small set of graphs should successfully transfer to the majority of other graphs. Future works could check on the validity of the heuristic at higher n and p by comparing against optimized results generated from other methods, such as BFGS or quantum natural gradient, or assess how graph features affect the success of angle transfer. Identifying the prevalence of angle patterns at larger n and p as well as the correlation with graph properties may enable new search heuristics, for example, for general QAOA-like algorithms, as suggested by the work of Cook et al. [23].

References

Lotshaw, P.C., Humble, T.S.: QAOA dataset (2021). https://code.ornl.gov/qci/qaoa-dataset-version1

Arute, F., Arya, K., Babbush, R., Bacon, D., Bardin, J.C., Barends, R., Biswas, R., Boixo, S., Brandao, F.G., Buell, D.A., et al.: Quantum supremacy using a programmable superconducting processor. Nature 574(7779), 505 (2019)

Zhong, H.S., Wang, H., Deng, Y.H., Chen, M.C., Peng, L.C., Luo, Y.H., Qin, J., Wu, D., Ding, X., Hu, Y., Hu, P., Yang, X.Y., Zhang, W.J., Li, H., Li, Y., Jiang, X., Gan, L., Yang, G., You, L., Wang, Z., Li, L., Liu, N.L., Lu, C.Y., Pan, J.W.: Quantum computational advantage using photons. Science (2020). https://doi.org/10.1126/science.abe8770

Farhi, E., Goldstone, J., Gutmann, S.: A quantum approximate optimization algorithm. arXiv preprint arXiv:1411.4028 (2014)

Wang, Z., Hadfield, S., Jiang, Z., Rieffel, E.G.: Quantum approximate optimization algorithm for MaxCut: a fermionic view. Phys. Rev. A 97(2), 022304 (2018)

Hadfield, S.: Quantum algorithms for scientific computing and approximate optimization. arXiv preprint arXiv:1805.03265. Eq. 5.10, p. 114 (2018)

Zhou, L., Wang, S.T., Choi, S., Pichler, H., Lukin, M.D.: Quantum approximate optimization algorithm: performance, mechanism, and implementation on near-term devices. Phys. Rev. X 10, 021067 (2020)

Guerreschi, G.G., Matsuura, A.Y.: QAOA for max-cut requires hundreds of qubits for quantum speed-up. Sci. Rep. 9, 1–7 (2019)

Medvidović, M., Carleo, G.: Classical variational simulation of the quantum approximate optimization algorithm. arXiv preprint arXiv:2009:01760v1 (2020)

Brandão, F.G.S.L., Broughton, M., Farhi, E., Gutmann, S., Neven, H.: For fixed control parameters the quantum approximate optimization algorithm’s objective function value concentrates for typical instances. arXiv preprint arXiv:1812.04170 (2018)

Wurtz, J., Love, P.: Bounds on MAXCUT QAOA performance for \(p > 1\). arXiv preprint arXiv:2010.11209 (2020)

Shaydulin, R., Alexeev, Y.: Evaluating quantum approximate optimization algorithm: a case study. In: 2019 Tenth International Green and Sustainable Computing Conference (IGSC), pp. 1–6 (2019). https://doi.org/10.1109/IGSC48788.2019.8957201

Crooks, G.E.: Performance of the quantum approximate optimization algorithm on the maximum cut problem. arXiv preprint arXiv:1811.08419 (2018)

Shaydulin, R., Hadfield, S., Hogg, T.,, Safro, I.: Ruslan Shaydulin and Stuart Hadfield and Tad Hogg and Ilya Safro. arXiv preprint arXiv:2012.04713 (2020)

Herrman, R., Ostrowski, J., Humble, T.S., Siopsis, G.: Lower bounds on circuit depth of the quantum approximate optimization algorithm. Quant. Inf. Process. 20(2), 1–17 (2021)

Akshay, V., Philathong, H., Morales, M.E.S., Biamonte, J.D.: Reachability deficits in quantum approximate optimization. Phys. Rev. Lett. 124, 090504 (2020)

Szegedy, M.: What do QAOA energies reveal about graphs? arXiv preprint arXiv:1912.12272v2 (2020)

Pagano, G., Bapat, A., Becker, P., Collins, K.S., De, A., Hess, P.W., Kaplan, H.B., Kyprianidis, A., Tan, W.L., Baldwin, C., Brady, L.T., Deshpande, A., Liu, F., Jordan, S., Gorshkov, A.V., Monroe, C.: Quantum approximate optimization of the long-range Ising model with a trapped-ion quantum simulator. Proc. Natl. Acad. Sci. 117(41), 25396 (2020). https://doi.org/10.1073/pnas.2006373117

Wang, Z., Rubin, N.C., Dominy, J.M., Rieffel, E.G.: \(XY\)-mixers: analytical and numerical results for the quantum alternating operator ansatz. Phys. Rev. A 101, 012320 (2020)

Zhu, L., Tang, H.L., Barron, G.S., Mayhall, N.J., Barnes, E., Economou, S.E.: An adaptive quantum approximate optimization algorithm for solving combinatorial problems on a quantum computer. arXiv preprint arXiv:2005.10258 (2020)

Jiang, Z., Rieffel, E.G., Wang, Z.: Near-optimal quantum circuit for Grover’s unstructured search using a transverse field. Phys. Rev. A 95, 062317 (2017)

Bärtschi, A., Eidenbenz, S.: Grover mixers for QAOA: shifting complexity from mixer design to state preparation. arXiv preprint arXiv:2006.00354v2 (2020)

Cook, J., Eidenbenz, S., Bärtschi, A.: The quantum alternating operator Ansatz on maximum \(k\)-vertex cover. arXiv preprint arXiv:1910.13483v2 (2020)

Li, L., Fan, M., Coram, M., Riley, P., Leichenauer, S.: Quantum optimization with a novel Gibbs objective function and ansatz architecture search. Phys. Rev. Res. 2, 023074 (2020)

Tate, R., Farhadi, M., Herold, C., Mohler, G., Gupta, S.: Bridging classical and quantum with SDP initialized warm-starts for QAOA. arXiv preprint arXiv:2010.14021 (2020)

Bravyi, S., Kliesch, A., Koenig, R., Tang, E.: Obstacles to variational quantum optimization from symmetry protection. Phys. Rev. Lett. 125, 260505 (2020)

Khot, S., Kindler, G., Mossel, E., O’Donnel, R.: Optimal inapproximability results for MAX-CUT and other 2-variable CSPs? Electronic Colloquium on Computational Complexity, Report No. 101 (2005)

Hastings, M.B.: Classical and quantum bounded depth approximation algorithms. arXiv:1905.07047 (2019)

Press, W.H., Flannery, B.P., Teukolsky, S.A.: Numerical recipes in Fortran 77: the art of scientific computing, 2nd edn. Cambridge University Press (1993). https://people.sc.fsu.edu/\(\sim \)inavon/5420a/DFP.pdf

Herrman, R., Treffert, L., Ostrowski, J., Lotshaw, P.C., Humble, T.S., Siopsis, G.: Impact of graph structures for QAOA on MaxCut. Quantum Inf. Process. 289:1–21 (2021)

Farhi, E., Gamarnik, D., Gutmann, S.: The quantum approximate optimization algorithm needs to see the whole graph: worst case examples. arXiv:2005.08747 (2020)

McKay, B.: Graphs. https://users.cecs.anu.edu.au/\(\sim \)bdm/data/graphs.html. Accessed 8 July (2020)

Belotti, P.: Couenne: a user’s manual. Technical report, Lehigh University, Technical report (2009)

Yamamoto, N.: On the natural gradient for variational quantum eigensolver. arXiv:1909.05074 (2019)

Wierichs, D., Gogolin, C., Kastoryano, M.: Avoiding local minima in variational quantum eigensolvers with the natural gradient optimizer. Phys. Rev. Res. 2, 43246 (2020)

Stokes, J., Izaac, J., Killoran, N., Carleo, G.: Quantum natural gradient. Quantum 4, 269 (2020)

Harrow, A.W., Napp, J.C.: Low-depth gradient measurements can improve convergence in variational hybrid quantum-classical algorithms. Phys. Rev. Lett. 126, 140502 (2021)

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. Assoc. Comput. Mach. 42(6), 1115 (1995)

Shaydulin, R., Wild, S.M.: Exploiting symmetry reduces the cost of training QAOA. IEEE Trans. Quantum Eng. 2, 1–9 (2021)

Belotti, P., Lee, J., Liberti, L., Margot, F., Wächter, A.: Branching and bounds tightening techniques for nonconvex MINLP. Optim. Methods Softw. 24(4-5), 597–634 (2009)

Acknowledgements

P. C. L. thanks Zak Webb and Yan Wang for discussing time-reversal symmetry. This work was supported by DARPA ONISQ program under award W911NF-20-2-0051. J. Ostrowski acknowledges the Air Force Office of Scientific Research award, AF-FA9550-19-1-0147. G. Siopsis acknowledges the Army Research Office award W911NF-19-1-0397. J. Ostrowski and G. Siopsis acknowledge the National Science Foundation award OMA-1937008. This research used resources of the Compute and Data Environment for Science (CADES) at the Oak Ridge National Laboratory, which is supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC05-00OR22725.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

None of the authors has a conflict of interest to declare that is relevant to the content of the paper.

Data and code

Data and code from this study are available online [1].

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

P. C. Lotshaw: This manuscript has been authored by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725 with the U.S. Department of Energy. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this manuscript, or allow others to do so, for United States Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-public-access-plan).

Angle symmetries

Angle symmetries

In this appendix, we discuss details of the angle symmetries from Sect. 3.3. Most of the symmetries have been described previously by Zhou et al. [7], although we note two of the symmetries they described for regular graphs apply more broadly to graphs where every vertex has even degree or every vertex has odd degree. Each symmetry relates different sets of angles \((\varvec{\gamma }, \varvec{\beta })\) and \((\varvec{\gamma }', \varvec{\beta }')\) that give the same approximation ratio in Eq. (11),

where

with \((\varvec{\gamma }', \varvec{\beta }')\) related to \((\varvec{\gamma }, \varvec{\beta })\) in different ways for the different symmetries. An example is periodic behavior of the \(\beta _q\) angles over intervals of \(\pi /2\), we use this to restrict every \(\beta _q\) component to the interval \(-\pi /4 \le \beta _q \le \pi /4\), as described by Zhou et al. There are a variety of additional symmetries we describe below, with derivations in subsequent subsections.

The first type of symmetry applies to graphs where every vertex has even degree. In this case the \(\gamma _q\) angles are periodic over intervals of \(\pi \) since all the eigenvalues of \({\hat{C}}\) are even, as shown in detail in the next subsection. Thus the symmetry of Eq. (27) holds for any pair of angles with \(\varvec{\beta } = \varvec{\beta }'\) and

where \(\varvec{\gamma }'\) differs from \(\varvec{\gamma }\) by a shift \(\gamma _q' = \gamma _q \pm \pi \) for any q. We use this symmetry to organize the angle distributions so \(|\gamma _q| \le \pi /2\) for all q for graphs where every vertex degree is even. We search for generic optimized angles in our implementation of the BFGS algorithm from Sect. 3.2, but if we find a \(|\gamma _q| > \pi /2\) then we add or subtract \(\pi \) to get a \(|\gamma _q'| \le \pi /2\).

The second type of symmetry applies to graphs where every vertex has odd degree. This gives a joint symmetry in both sets of angles in Eq. (27),

In Eq. (30), \(\varvec{\gamma }'\) differs from \(\varvec{\gamma }\) in the qth component, \(\gamma _q' = \gamma _q \pm \pi \) for any q, and \(\varvec{\beta }'\) differs from \(\varvec{\beta }\) in the sign of all subsequent components, \(\beta _r'= -\beta _r\) for all \(r \ge q\). The proof follows from Pauli operator commutation relations applied to the two unitary operators of Eqs. (7)–(8), as shown in detail later. In our calculations, we use this symmetry to organize the angles so \(|\gamma _q| \le \pi /2\) for all q for graphs where every vertex degree is odd, similar to how we organize the angles when all the vertex degrees are even.

The third analytic symmetry we use is the “time-reversal” symmetry [7]

The symmetry is related to the \({\hat{B}}\) and \({\hat{C}}\) operators in Eqs. (7)–(8) and the initial state \(\vert \psi _0\rangle \) of Eq. (5), which have real-valued matrix elements and coefficients in the computational basis. We give a proof at the end of “Appendix.” We use this symmetry to always transform to angles with \(\beta _1 \le 0\). The \(\beta _q\) for \(q > 1\) can be positive or negative.

We find additional symmetries in the optimized angles for a small subset of graphs. For these, we report the angles with the most component-pairs \((\gamma _i,\beta _i) \in (\varGamma , {\mathrm {B}})\) from Eq. (22). Using the angles with the most components in \((\varGamma , {\mathrm {B}})\) is designed to emphasize the angle patterns in Sect. 4.3. The number of graphs M(p) we used the \((\varGamma , {\mathrm {B}})\) symmetry on is shown for each n and p in Table 10. The symmetry was used most often when a graph became fully optimized. For example, we found two sets of angles for an \(n=3\) graph that optimized the cost function \(\langle {\hat{C}} \rangle = C_\mathrm{max}\); we saved the angles with the most components in \((\varGamma ,{\mathrm {B}})\). Asterisks in the table denote each graph which we used the rule on for that n and p was fully optimized. Note when graphs are optimized at some p we do not simulate them at depths \(p' > p\), so they do not carry over to columns for greater depths in the table. Overall, the rule is applied to a very limited subset of the graphs we study.

1.1 Symmetry when all vertices have even degree

When all the vertices have even degree, the angle components \(\gamma _q\) are periodic over intervals of \(\pi \), as discussed around Eq. (29). To demonstrate equivalence of \(\langle {\hat{C}} \rangle \) in Eq. (27) for the angles \(\varvec{\gamma }'\) from Eq. (29) and \(\varvec{\gamma }\) from Eq. (28), in the next paragraph we show \({\hat{C}}\) of Eq. (17) has only even eigenvalues when each vertex has even degree. Then \(C(z) = 2m_z\) for all z, where \(m_z\) is an integer. The periodicity over \(\pi \) follows since the matrix elements from the unitary operator \({\hat{U}}({\hat{C}},\gamma _q)\) of Eq. (7) are invariant under changes \(\gamma _q \rightarrow \gamma _q \pm \pi \), since

where the factor \(1 = \exp (\mp i2m_z\pi )\) connects the two expressions.

To show the eigenvalues C(z) are all even when all the vertex degrees are even, we begin by showing there exists a single bitstring \(z^{(0)}\) for which \(C(z^{(0)})\) is even. Then we show that if C(z) is even for any z and all the vertex degrees are even, then modifying any bit \(z_k\) in the bitstring z to get a new bitstring \(z'\) will give a \(C(z')\) that is also even. Together these imply that every bitstring has even C(z) when all the vertex degrees are even, since any bitstring can be made from a series of modifications to \(z^{(0)}\) and every modification gives an even C(z).

First consider the zero bitstring \(z^{(0)} = (0,0,\ldots ,0)\). From Eqs. (15)–(16), this has \(C(z^{(0)}) = 0\) which is even. Next consider an arbitrary bitstring \(z = (z_{n-1}, z_{n-2}, \ldots , z_k, \ldots , z_0)\) for which C(z) is even. Suppose we flip a bit \(z_k\) to make a new bitstring \(z' = (z_{n-1}, z_{n-2}, \ldots , z_k', \ldots , z_0)\) where \(z_k' \ne z_k\) but the \(z_{j \ne k}\) are the same. The change \(z_k \rightarrow z_k'\) will change the value of each \(C_{\langle j,k\rangle }\) from Eq. (16) that is associated with \(z_k\), so that if \(C_{\langle j,k\rangle }(z)\) = 1 then \(C_{\langle j,k\rangle }(z') = 0\) and vice-versa. The total number of edge terms \(C_{\langle j,k\rangle }\) can be separated into \(\kappa \) terms that increase in value and \(\delta \) terms that decrease in value when \(z \rightarrow z'\). The value of the cost function for \(z'\) can then be expressed as \(C(z') = C(z) + \kappa - \delta \). The number of edge components \(C_{\langle j,k\rangle }\) that depend on \(z_k\) is even when each vertex degree is even, so \(\delta + \kappa = 2m\) for some integer m. This implies that \(\kappa \) and \(\delta \) are either both even or both odd; either way, the difference \(\kappa -\delta \) is even. Since C(z) is even by assumption, \(C(z') = C(z) + \kappa - \delta \) is also even.

We have shown there is a bitstring \(z^{(0)}\) for which \(C(z^{(0)})\) is even and we have shown changing any such bitstring gives a new \(C(z')\) which is also even. This implies C(z) is even for all z for every graph where each vertex degree is even. By Eq. (32), the angle components \(\gamma _q\) are periodic over intervals of \(\pi \) for these graphs.

1.2 Symmetry when all vertices have odd degree

When all the vertices have odd degree, there is a symmetry Eq. (27) for angle-pairs \((\varvec{\gamma }, \varvec{\beta })\) and \((\varvec{\gamma }',\varvec{\beta }')\) from Eqs. (28) and (30), respectively. Only graphs with an even number of vertices can have this symmetry since it is impossible to have a graph with an odd number of vertices where every vertex has odd degree. We prove the symmetry holds in a simple case with \(p=1\), the extension to \(p>1\) uses similar reasoning. We begin by considering the relations between the unitary operators with \((\varvec{\gamma }, \varvec{\beta })\) and \((\varvec{\gamma }',\varvec{\beta }')\), then use the analysis to relate the time evolution and probabilities P(z) for states with the different angles.

Let \(\gamma _1' = \gamma _1 \pm \pi \). The unitary operator \({\hat{U}}({\hat{C}}, \gamma _1)\) from Eq. (7) is a product of unitary-operator components for each edge, a single component can be expressed as

Separate out a term \(\exp \left( \mp i (\pi /2){\hat{Z}}_j {\hat{Z}}_k\right) = \mp i {\hat{Z}}_j {\hat{Z}}_k\) to obtain

where \(e^{i\delta }\) is an overall phase.

Now consider the unitary operator \({\hat{U}}({\hat{C}}, \gamma _1)\) of Eq. (7). Using Eq. (34), we have

where \(e^{i\eta }\) is a phase factor. The term \(\prod _{j=0}^{n-1} {\hat{Z}}_j\) comes from the product of \({\hat{Z}}_j {\hat{Z}}_k \) for all the edges—each \({\hat{Z}}_j\) is raised to an odd power in the product since each vertex degree is odd and using \(({\hat{Z}}_j)^2 = \hat{\mathbb {1}}\) reduces this to \(\prod _{j=0}^{n-1} {\hat{Z}}_j \).

We will use Eq. (35) to simplify the time evolution of \(\vert \psi _p(\gamma _1,\beta _1)\rangle \) in Eq. (6). This includes the unitary operator \({\hat{U}}({\hat{B}}, \beta _1)\) from Eq. (8), which shows up in the product \({\hat{U}}({\hat{B}}, \beta _1) {\hat{U}}({\hat{C}}, \gamma _1)\). Our next goal will be to use commutation relations to move the \(\prod _{j=0}^{n-1} {\hat{Z}}_j\) to the left side of the product.

Express the \({\hat{U}}({\hat{B}},\beta _1)\) from Eq. (8) as

Now consider the product of the unitary operators

The Pauli operators anticommute so

We are now ready to show the angles \((\gamma _1, \beta _1)\) and \((\gamma _1',\beta _1')\) give equivalent \(\langle {\hat{C}} \rangle \) in Eq. (11), where \(\gamma _1' = \gamma _1 \pm \pi \) and \(\beta _1' = - \beta _1\). To demonstrate the equivalence we show the computational basis state probabilities P(z) are the same for both \((\gamma _1, \beta _1)\) and \((\gamma _1',\beta _1')\).

The probability amplitude for a basis state \(\vert z \rangle \) is

Using Eq. (38), this can be expressed as

Squaring the amplitudes, we obtain

The \(\left( \prod _{j=0}^{n-1} {{\hat{Z}}_j} \right) \) can be moved to the left since it commutes with \(\vert z \rangle \langle z \vert \), then using \(\left( \prod _{j=0}^{n-1} {{\hat{Z}}_j}^\dag \right) \left( \prod _{j=0}^{n-1} {{\hat{Z}}_j} \right) = \hat{\mathbb {1}}\) gives

where P(z) is the probability of \(\vert z \rangle \) from Eq. (10). The P(z) are the same for \((\gamma _1, \beta _1)\) and \((\gamma _1',\beta _1')\) so \(\langle {\hat{C}}(\gamma _1,\beta _1)\rangle = \langle {\hat{C}}(\gamma _1',\beta _1')\rangle \) in Eq. (11), which is the desired symmetry.

1.3 Time-reversal symmetry

We finally consider the “time-reversal” symmetry with the angles of Eqs. (28) and (31) in the symmetry relation Eq. (27) [7]. The symmetry is related to structures of the \({\hat{B}}\) and \({\hat{C}}\) operators, which have real-valued matrix elements in the computational basis in Eqs. (9) and (17), and the structure of the initial state \(\vert \psi _0\rangle \), which has real-valued coefficients in the computational basis in Eq. (5). To demonstrate the symmetry we calculate generic computational basis probabilities P(z) for states with both sets of angles and show they are equal, thus the \(\langle {\hat{C}} \rangle \) are equal following Eq. (11).

Consider the probability amplitude \(\langle z \vert \psi _p(\varvec{\gamma },\varvec{\beta })\rangle \) for a single basis state \(\vert z \rangle \). From Eqs. (5)–(8) this is

Taking the complex conjugate of Eq. (43) only changes the sign of the angle terms since the \({\hat{B}}\) and \({\hat{C}}\) matrices have real-valued matrix elements in the computational basis, thus

The Born probabilities P(z) from Eq. (10) are the same for both states since

so the \(\langle {\hat{C}} \rangle \) are the same in Eq. (11).

Rights and permissions

About this article

Cite this article

Lotshaw, P.C., Humble, T.S., Herrman, R. et al. Empirical performance bounds for quantum approximate optimization. Quantum Inf Process 20, 403 (2021). https://doi.org/10.1007/s11128-021-03342-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11128-021-03342-3