Abstract

The aim of the paper was to propose a bounded-error quantum polynomial-time algorithm for the max-bisection and the min-bisection problems. The max-bisection and the min-bisection problems are fundamental NP-hard problems. Given a graph with even number of vertices, the aim of the max-bisection problem is to divide the vertices into two subsets of the same size to maximize the number of edges between the two subsets, while the aim of the min-bisection problem is to minimize the number of edges between the two subsets. The proposed algorithm runs in \(O(m^2)\) for a graph with m edges and in the worst case runs in \(O(n^4)\) for a dense graph with n vertices. The proposed algorithm targets a general graph by representing both problems as Boolean constraint satisfaction problems where the set of satisfied constraints are simultaneously maximized/minimized using a novel iterative partial negation and partial measurement technique. The algorithm is shown to achieve an arbitrary high probability of success of \(1-\epsilon \) for small \(\epsilon >0\) using a polynomial space resources.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Given an undirected graph \(G = (V ,E)\) with a set V of even number of vertices and a set E of unweighted edges, two graph bisection problems will be considered in the paper: the max-bisection problem and the min-bisection problem. The goal of the max-bisection problem is to divide V into two subsets A and B of the same size so as to maximize the number of edges between A and B, while the goal of the min-bisection problem is to minimize the number of edges between A and B. In theory, both bisection problems are NP-hard for general graphs [9, 14].

These classical combinatorial optimization problems are special cases of graph partitioning [13]. The graph partitioning has many applications, for example divide-and-conquer algorithms [24], compiler optimization [21], VLSI circuit layout [5], load balancing [17], image processing [30], computer vision [22], distributed computing [25] and route planning [7]. In practice, there are many general-purpose heuristics for graph partitioning, e.g., [18, 31, 32] that handle particular graph classes, such as [6, 26, 32]. There are also many practical exact algorithms for graph bisection that use the branch-and-bound approach [8, 23]. These approaches make expensive usage of time and space to obtain lower bounds [1, 2, 8, 16].

On conventional computers, approximation algorithms have gained much attention to tackle the max-bisection and the min-bisection problems. The max-bisection problem, for example, has an approximation ratio of 0.7028 due to [10] which is known to be the best approximation ratio for a long time by introducing the RPR\(^2\) rounding technique into semidefinite programming (SDP) relaxation. In [12], a poly-time algorithm is proposed that given a graph admitting a bisection cutting, a fraction \(1-\varepsilon \) of edges finds a bisection cutting an \((1-g(\varepsilon ))\) fraction of edges where \(g(\varepsilon ) \rightarrow 0\) as \(\varepsilon \rightarrow 0\). A 0.85-approximation algorithm for the max-bisection is obtained in [28]. In [33], the SDP relaxation and the RPR\(^2\) technique of [10] have been used to obtain a performance curve as a function of the ratio of the optimal SDP value over the total weight through finer analysis under the assumption of convexity of the RPR\(^2\) function. For the min-bisection problem, the best-known approximation ratio is \(O(\log \,n)\) [27] with some limited graph classes have known polynomial-time solutions such as grids without holes [11] and graphs with bounded tree width [20].

The aim of the paper was to propose an algorithm that represents the two bisection problems as Boolean constraint satisfaction problems where the set of edges are represented as set of constraints. The algorithm prepares a superposition of all possible graph bisections using an amplitude amplification technique, then evaluates the set of constraints for all possible bisections simultaneously and then amplifies the amplitudes of the best bisections that achieve the maximum/minimum satisfaction to the set of constraints using a novel amplitude amplification technique that applies an iterative partial negation and partial measurement. The proposed algorithm targets a general graph where it runs in \(O(m^2)\) for a graph with m edges and in the worst case runs in \(O(n^4)\) for a dense graph with number edges close to \(m = {\textstyle {{n(n - 1)} \over 2}}\) with n vertices to achieve an arbitrary high probability of success of \(1-\epsilon \) for small \(\epsilon >0\) using a polynomial space resources.

The paper is organized as follows: Sect. 2 shows the data structure used to represent a graph bisection problem as a Boolean constraint satisfaction problem. Section 3 presents the proposed algorithm with analysis on time and space requirements. Section 4 concludes the paper.

2 Data structures and graph representation

Several optimization problems, such as the max-bisection and the min-bisection problems, can be formulated as Boolean constraint satisfaction problems [3, 4] where a feasible solution is a solution with as many variables set to 0 as variables set to 1, i.e., balanced assignment, as follows: For a graph G with n vertices and m edges, consider n Boolean variables \(v_0, \ldots , v_{n-1}\) and m constraints by associating with each edge \((a, b) \in E\) the constraint \(c_l=v_a\oplus v_b\), with \(l=0,1,\ldots ,m-1\), then the max-bisection is the problem that consists of finding a balanced assignment to maximize the number of constraints equal to logic-1 from the m constraints, while the min-bisection is the problem that consists of finding a balanced assignment to maximize the number of constraints equal to logic-0 from the m constraints, such that if a Boolean variable is set to 0, then the associated vertex belongs to the first partition, and if a Boolean variable is set to 1, then the associated vertex belongs to the second partition.

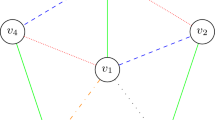

For example, consider the graph G shown in Fig. 1a. Let \(G = (V ,E)\), where,

Assume that each vertex \(a \in V\) is associated with a Boolean variable \(v_a\), then the set of vertices V can be represented as a vector X of Boolean variables as follows,

and if each edge \((a, b) \in E\) is associated with a constraint \(c_l=v_a\oplus v_b\), then the set of edges E can be represented as a vector Z of constraints as follows,

such that,

In general, a bisection \(G_P\) for the graph G can be represented as \(G_p = (x ,z(x))\) such that each vector \(x \in \{0,1\}^n \) of variable assignments is associated with a vector \(z(x)\in \{0,1\}^m\) of constraints evaluated as functions of the variable assignment x. In the max-bisection and the min-bisection problems, the vector x of variable assignments is restricted to be balanced so there are \(M = \left( {\begin{array}{ll} n \\ {{\textstyle {n \over 2}}} \\ \end{array}} \right) \) possible variable assignments among the \(N=2^n\) possible variable assignments, and the solution of the max-bisection problem is to find the variable assignment that is associated with a vector of constraints that contains the maximum number of 1’s, and the solution for the min-bisection problem is to find the variable assignment that is associated with a vector of constraints that contains the maximum number of 0’s. For example, for the graph G shown in Fig. 1a, a max-bisection for G is ((0, 1, 0, 1, 0, 1, 1, 0), (1, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1)) with 10 edges connecting the two partitions as shown in Fig. 1b, and a min-bisection for G is ((0, 0, 0, 0, 1, 1, 1, 1), (0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0)) with 3 edges connecting the two partitions as shown in Fig. 1c. It is important to notice that a variable assignment \(x= (0,1,0,1,0,1,1,0)\) is equivalent to \(\overline{x}= (1,0,1,0,1,0,0,1)\), where \(\overline{x}\) is the bit-wise negation of x.

3 The algorithm

Given a graph G with n vertices and m edges, the proposed algorithm is divided into three stages: The first stage prepares a superposition of all balanced assignments for the n variables. The second stage evaluates the m constraints associated with the m edges for every balanced assignment and stores the values of constraints in constraint vectors entangled with the corresponding balanced assignments in the superposition. The third stage amplifies the constraint vector with maximum (minimum) number of satisfied constraints using a partial negation and iterative measurement technique.

3.1 Balanced assignments preparation

To prepare a superposition of all balanced assignments of n qubits, the proposed algorithm can use any amplitude amplification technique, e.g., [15, 34, 35]. An extra step should be added after the amplitude amplification to create an entanglement between the matched items and an auxiliary qubit \(\left| {ax_1 } \right\rangle \), so that the correctness of the items in the superposition can be verified by applying measurement on \(\left| {ax_1 } \right\rangle \) without having to examine the superposition itself. So, if \(\left| {ax_1 } \right\rangle = \left| {1 } \right\rangle \) at the end of this stage, then the superposition contains the correct items, i.e., the balanced assignments, otherwise, repeat the preparation stage until \(\left| {ax_1 } \right\rangle = \left| {1 } \right\rangle \). This is useful for not having to proceed to the next stages until the preparation stage succeeds.

The preparation stage to have a superposition of all balanced assignments of n qubits will use the amplitude amplification technique shown in [35] since it achieves the highest known probability of success using fixed operators and it can be summarized as follows, prepare a superposition of \(2^n\) states by initializing n qubits to state \(\left| {0} \right\rangle \) and apply \(H^{\otimes n}\) on the n qubits

where H is the Hadamard gate and \(N=2^n\). Assume that the system \(\left| {\Psi _0 } \right\rangle \) is rewritten as follows,

where \(X_T\) is the set of all balanced assignments of n bits and \(X_F\) is the set of all unbalanced assignments. Let \(M=\left( {\begin{array}{ll} n \\ {{\textstyle {n \over 2}}} \\ \end{array}} \right) \) be the number of balanced assignments among the \(2^n\) possible assignments, \(\sin (\theta ) = \sqrt{{M / N}}\) and \(0 < \theta \le \pi /2\), then the system can be rewritten as follows,

where \(\left| {\psi _1 } \right\rangle = \left| {\tau } \right\rangle \) represents the balanced assignments subspace and \(\left| {\psi _0 } \right\rangle \) represents the unbalanced assignments subspace.

Let \(D=WR_0 \left( \phi \right) W^\dag R_\tau \left( \phi \right) , R_0 \left( \phi \right) = I - (1 - e^{i\phi } )\left| 0 \right\rangle \left\langle 0 \right| , R_\tau \left( \phi \right) = I - (1 - e^{i\phi } )\left| \tau \right\rangle \left\langle \tau \right| \), where \(W=H^{\otimes n}\) is the Walsh–Hadamard transform [19]. Iterate the operator D on \(\left| \Psi _0\right\rangle \) for q times to get,

such that,

where \(y=cos(\delta ), \cos \left( \delta \right) = 2\sin ^2 (\theta )\sin ^2 ({\textstyle {\phi \over 2}}) - 1, 0<\theta \le \pi /2\), and \(U_q\) is the Chebyshev polynomial of the second kind [29] defined as follows,

Setting \(\phi =6.02193\approx 1.9168\pi , M=\left( {\begin{array}{ll} n \\ {{\textstyle {n \over 2}}} \\ \end{array}} \right) , N=2^n\) and, \(q = \left\lfloor {{\textstyle {\phi \over {\sin (\theta )}}}} \right\rfloor \), then \(\left| {a_q } \right| ^2 \ge 0.9975\) [35]. The upper bound for the required number of iterations q to reach the maximum probability of success is,

and using Stirling’s approximation,

then, the upper bound for required number of iterations q to prepare the superposition of all balanced assignments is,

It is required to preserve the states in \(\left| {\psi _1 } \right\rangle \) for further processing in the next stage. This can be done by adding an auxiliary qubit \(\left| {ax_1 } \right\rangle \) initialized to state \(\left| {0} \right\rangle \) and have the states of the balanced assignments entangled with \(\left| {ax_1 } \right\rangle = \left| {1} \right\rangle \), so that, the correctness of the items in the superposition can be verified by applying measurement on \(\left| {ax_1 } \right\rangle \) without having to examine the superposition itself. So, if \(\left| {ax_1 } \right\rangle = \left| {1 } \right\rangle \), then the superposition contains the balanced assignments, otherwise, repeat the preparation stage until \(\left| {ax_1 } \right\rangle = \left| {1 } \right\rangle \). This is useful to be able to proceed to the next stage when the preparation stage succeeds. To prepare the entanglement, let

and apply a quantum Boolean operator \(U_f\) on \(\left| {\Psi _2 } \right\rangle \), where \(U_f\) is defined as follows,

and \(f:\left\{ {0,1} \right\} ^n \rightarrow \{ 0,1\}\) is an n inputs single output Boolean function that evaluates to true for any \(x \in X_T\) and evaluates to false for any \(x \in X_F\); then,

Apply measurement \(M_1\) on the auxiliary qubit \(\left| {ax_1 } \right\rangle \) as shown in Fig. 2. The probability of finding \(\left| {ax_1 } \right\rangle =\left| {1} \right\rangle \) is,

and the system will collapse to,

3.2 Evaluation of constraints

There are M states in the superposition \(\left| \Psi _3^{(M_1 = 1)}\right\rangle \), each state has an amplitude \({\textstyle {1 \over {\sqrt{M} }}}\), and then let \(\left| \Psi _4\right\rangle \) be the system after the balanced assignment preparation stage as follows,

where \(\left| ax_1\right\rangle \) is dropped from the system for simplicity and \(\alpha = {\textstyle {1 \over {\sqrt{M} }}}\). For a graph G with n vertices and m edges, every edge (a, b ) connecting vertcies \(a,b \in V\) is associated with a constraint \(c_l=v_a\oplus v_b\), where \(v_a\) and \(v_b\) are the corresponding qubits for vertices a and b in \(\left| \Psi _4\right\rangle \), respectively, such that \(0 \le l < m, 0 \le m \le {\textstyle {{n(n - 1)} \over 2}}, 0 \le a,b \le n-1\) and \(a \ne b\), where \({\textstyle {{n(n - 1)} \over 2}}\) is the maximum number of edges in a graph with n vertices.

To evaluate the m constraints associated with the edges, add m qubits initialized to state \(\left| 0\right\rangle \),

For every constraint \(c_l=v_a \oplus v_b\), apply two \(Cont\_\sigma _X\) gates, \(Cont\_\sigma _X(v_a,c_l)\) and \(Cont\_\sigma _X(v_b,c_l)\), so that \(\left| c_l\right\rangle =\left| v_a\oplus v_b\right\rangle \). The collection of all \(Cont\_\sigma _X\) gates applied to evaluate the m constraints is denoted \(C_v\) in Fig. 2, and then the system is transformed to,

where \(\sigma _X\) is the Pauli-X gate which is the quantum equivalent to the NOT gate. It can be seen as a rotation of the Bloch Sphere around the X-axis by \(\pi \) radians as follows,

and \(Cont\_U(v,c)\) gate is a controlled gate with control qubit \(\left| v\right\rangle \) and target qubit \(\left| c\right\rangle \) that applies a single qubit unitary operator U on \(\left| c\right\rangle \) only if \(\left| v\right\rangle =\left| 1\right\rangle \), so every qubit \(\left| c_l^k\right\rangle \) carries a value of the constraint \(c_l\) based on the values of \(v_a\) and \(v_b\) in the balanced assignment \(\left| x_k \right\rangle \), i.e., the values of \(v_a^k\) and \(v_b^k\), respectively. Let \(\left| z_k \right\rangle =\left| {c_0^k c_1^k \ldots c_{m-1}^k }\right\rangle \), then the system can be rewritten as follows,

where every \(\left| x_k \right\rangle \) is entangled with the corresponding \(\left| z_k \right\rangle \). The aim of the next stage is to find \(\left| z_k \right\rangle \) with the maximum number of \(\left| 1 \right\rangle \)’s for the max-bisection problem or to find \(\left| z_k \right\rangle \) with the minimum number of \(\left| 1 \right\rangle \)’s for the min-bisection problem.

3.3 Maximization of the satisfied constraints

Let \(\left| {\psi _c } \right\rangle \) be a superposition on M states as follows,

where each \(\left| {z_k } \right\rangle \) is an m-qubit state and let \(d_k=\left\langle {z_k } \right\rangle \) be the number of 1’s in state \(\left| {z_k } \right\rangle \) such that \(\left| {z_k } \right\rangle \ne \left| {0 } \right\rangle ^{\otimes m}\), i.e., \(d_k \ne 0\). This will be referred to as the 1-distance of \(\left| {z_k } \right\rangle \).

The max-bisection graph \(\left| {x_{\max }} \right\rangle \) is equivalent to find the state \(\left| {z_{\max }} \right\rangle \) with \(d_{\max }=\max \{d_k,\,0\le k \le M-1\}\) and the state \(\left| {z_{\min } } \right\rangle \) with \(d_{\min }=\min \{d_k,\,0\le k \le M-1\}\) is equivalent to the min-bisection graph \(\left| {x_{\min }} \right\rangle \). Finding the state \(\left| {z_{\min } } \right\rangle \) with the minimum number of 1’s is equivalent to finding the state with the maximum number of 0’s, so, to clear ambiguity, let \(d_{\max 1}=d_{\max }\) be the maximum number of 1’s and \(d_{\max 0}=d_{\min }\) be the maximum number of 0’s, where the number of 0’s in \(\left| {z_k } \right\rangle \) will be referred to as the 0-distance of \(\left| {z_k } \right\rangle \).

To find either \(\left| {z_{\max } } \right\rangle \) or \(\left| {z_{\min } } \right\rangle \), when \(\left| {\psi _c } \right\rangle \) is measured, add an auxiliary qubit \(\left| {ax_2 } \right\rangle \) initialized to state \(\left| 0 \right\rangle \) to the system \(\left| {\psi _c } \right\rangle \) as follows,

The main idea to find \(\left| {z_{\max } } \right\rangle \) is to apply partial negation on the state of \(\left| {ax_2 } \right\rangle \) entangled with \(\left| {z_k } \right\rangle \) based on the number of 1’s in \(\left| {z_k } \right\rangle \), i.e., more 1’s in \(\left| {z_k } \right\rangle \), gives more negation to the state of \(\left| {ax_2 } \right\rangle \) entangled with \(\left| {z_k } \right\rangle \). If the number of 1’s in \(\left| {z_k } \right\rangle \) is m, then the entangled state of \(\left| {ax_2 } \right\rangle \) will be fully negated. The mth partial negation operator is the mth root of \(\sigma _X\) and can be calculated using diagonalization as follows,

where \(t={\root m \of {{ - 1}}}\), and applying V for d times on a qubit is equivalent to the operator,

such that if \(d=m\), then \(V^m=\sigma _X\). To amplify the amplitude of the state \(\left| {z_{\max } } \right\rangle \), apply the operator MAX on \(\left| {\psi _m } \right\rangle \) as will be shown later, where MAX is an operator on \(m+1\) qubits register that applies V conditionally for m times on \(\left| ax_2 \right\rangle \) based on the number of 1’s in \(\left| {c_0 c_1 \ldots c_{m-1} } \right\rangle \) as follows (as shown in Fig. 3a),

so, if \(d_1\) is the number of \(c_l=1\) in \(\left| {c_0 c_1 \ldots c_{m-1} } \right\rangle \), then,

Amplifying the amplitude of the state \(\left| {z_{\min } } \right\rangle \) with the minimum number of 1’s is equivalent to amplifying the amplitude of the state with the maximum number of 0’s. To find \(\left| {z_{\min } } \right\rangle \), apply the operator MIN on \(\left| {\psi _m } \right\rangle \) as will be shown later, where MIN is an operator on \(m+1\) qubits register that applies V conditionally for m times on \(\left| ax_2 \right\rangle \) based on the number of 0’s in \(\left| {c_0 c_1 \ldots c_{m-1} } \right\rangle \) as follows (as shown in Fig. 3b),

where \(\overline{c_l}\) is a temporary negation of \(c_l\) before and after the application of \(Cont\_V(c_l ,ax_2)\) as shown in Fig. 3, so, if \(d_0\) is the number of \(c_l=0\) in \(\left| {c_0 c_1 \ldots c_{m-1} } \right\rangle \) then,

For the sake of simplicity and to avoid duplication, the operator Q will denote either the operator MAX or the operator MIN, d will denote either \(d_1\) or \(d_0, \left| {z_s } \right\rangle \) will denote either \(\left| {z_{\max } } \right\rangle \) or \(\left| {z_{\min } } \right\rangle \), and \(d_s\) will denote either \(d_{\max 1}\) or \(d_{\max 0}\), so,

and the probabilities of finding the auxiliary qubit \(\left| ax_2 \right\rangle \) in state \({\left| 0 \right\rangle }\) or \({\left| 1 \right\rangle }\) when measured is, respectively, as follows,

To find the state \({\left| z_s \right\rangle }\) in \(\left| {\psi _m } \right\rangle \), the proposed algorithm is as follows, as shown in Fig. 3:

-

1-

Let \(\left| {\psi _r } \right\rangle = \left| {\psi _m } \right\rangle \).

-

2-

Repeat the following steps for r times,

-

i-

Apply the operator Q on \(\left| {\psi _r } \right\rangle \).

-

ii-

Measure \(\left| ax_2 \right\rangle \), if \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \), then let the system post-measurement is \(\left| {\psi _r } \right\rangle \), apply \(\sigma _X\) on \(\left| ax_2 \right\rangle \) to reset to \(\left| 0 \right\rangle \) for the next iteration and then go to Step (i), otherwise restart the stage and go to Step (1).

-

i-

-

3-

Measure the first m qubits in \(\left| {\psi _r } \right\rangle \) to read \(\left| z_s \right\rangle \).

For simplicity and without loss of generality, assume that a single \(\left| z_s \right\rangle \) exists in \(\left| \psi _v \right\rangle \), although such states will exist in couples since each \(\left| z_s \right\rangle \) is entangled with a variable assignment \(\left| x_s \right\rangle \) and each \(\left| x_s \right\rangle \) is equivalent to \(\left| \overline{x_s} \right\rangle \); moreover, different variable assignments might give rise to constraint vectors with maximum distance, but such information is not known in advance.

Assuming that the algorithm finds \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) for r times in a row, then the probability of finding \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) after Step (2-i) in the first iteration, i.e., \(r=1\) is given by,

The probability of finding \(\left| \psi _r \right\rangle =\left| z_s \right\rangle \) after Step (2-i) in the first iteration, i.e., \(r=1\) is given by,

The probability of finding \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) after Step (2-i) in the rth iteration, i.e., \(r>1\) is given by,

The probability of finding \(\left| \psi _r \right\rangle =\left| z_s \right\rangle \) after Step (2-i) in the rth iteration, i.e., \(r>1\) is given by,

To get the highest probability of success for \(\hbox {Pr}{(\psi _{r} = z_s)}\), Step (2) should be repeated until, \(\left| \hbox {Pr}^{(r)}{(ax_2 = 1)} - \hbox {Pr}^{(r)}{(\psi _r = z_s)} \right| \le \epsilon \) for small \(\epsilon \ge 0\) as shown in Fig. 4. This happens when \(\sum \nolimits _{k = 0,k\ne s}^{M - 1} {\sin ^{2r} \left( {{\textstyle {{d_k \pi } \over {2m}}}} \right) } \le \epsilon \). Since the Sine function is a decreasing function then for sufficient large r,

where \(d_{ns}\) is the next maximum distance less than \(d_s\). The values of \(d_s\) and \(d_{ns}\) are unknown in advance, so let \(d_s=m\) be the number of edges, then in the worst case when \(d_s=m, d_{ns}=m-1\) and \(m=n(n-1)/2\), the required number of iterations r for \(\epsilon = 10^{ - \lambda }\) and \(\lambda >0\) can be calculated using the formula,

where \(0 \le m \le {\textstyle {{n(n - 1)} \over 2}}\). For a complete graph where \(m={\textstyle {{n(n - 1)} \over 2}}\), then the upper bound for the required number of iterations r is \(O\left( {n^4 } \right) \). Assuming that a single \(\left| z_s \right\rangle \) exists in the superposition will increase the required number of iterations, so it is important to notice here that the probability of success will not be over-cooked by increasing the required number of iteration r similar to the common amplitude amplification techniques.

The probability of success for a max-bisection instance of the graph shown in Fig. 1 with \(n=8\) and \(m=12\) where the probability of success of \(\left| ax_2\right\rangle \) is 0.6091 after the first iteration and with probability of success of 0.7939 after iterating the algorithm where the probability of success of \(\left| z_{\max }\right\rangle \), is amplified to reach the probability of success of \(\left| ax_2\right\rangle \)

3.4 Adjustments on the proposed algorithm

During the above discussion, two problems will arise during the implementation of the proposed algorithm. The first one is to finding \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) for r times in a row which a critical issue in the success of the proposed algorithm to terminate in polynomial time. The second problem is that the value of \(d_s\) is not known in advance, where the value of \(\hbox {Pr}^{(1)}{(ax_2 = 1)}\) shown in Eq. 35 plays an important role in the success of finding \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) in the next iterations, this value depends heavily on the density of 1’s, i.e., the ratio \({\textstyle {{d_s } \over m}}\).

Consider the case of a complete graph with even number of vertices, where the number of egdes \(m = {\textstyle {{n(n - 1)} \over 2}}\) and all \(\left| z_k \right\rangle \)’s are equivalent and each can be taken as \(\left| z_s \right\rangle \) then,

This case is an easy case where setting \(m=d_s\) in mth root of \(\sigma _X\) will lead to a probability of success of certainty after a single iteration. Assuming a blind approach where \(d_{s}\) is not known, then this case represents the worst ratio \({\textstyle {{d_s } \over m}}\) where the probability of success will be \(\approx 0.5\) for sufficient large graph. Iterating the algorithm will not lead to any increase in the probability of both \(\left| z_s \right\rangle \) and \(\left| ax_2 \right\rangle \).

In the following, adjustments on the proposed algorithm for the max-bisection and the min-bisection graph will be presented to overcome these problems, i.e., to be able to find \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) after the first iteration with the highest probability of success without a priori knowledge of \(d_s\).

3.4.1 Adjustment for the max-bisection problem

In an arbitrary graph, the density of 1’s will be \({\textstyle {{d_{\max 1} } \over m}}\). In the case of a complete graph, there are M states with 1-distance (\(d_k\)) equals to \({\textstyle {{n^2} \over 4}}\). This case represents the worst density of 1’s where the density will be \({\textstyle {{n^2 } \over {2n(n - 1)}}}\) slightly greater than 0.5 for arbitrary large n. Iterating the proposed algorithm will not amplify the amplitudes after arbitrary number of iterations. To overcome this problem, add \(\mu _{\max }\) temporary qubits initialized to state \(\left| 1 \right\rangle \) to the register \(\left| {c_0 c_1 ...c_{m - 1} } \right\rangle \) as follows,

so that the extended number of edges \(m_{ext}\) will be \(m_{ext}=m+\mu _{\max }\) and \(V=\root m_{ext} \of {{\sigma _X }}\) will be used instead of \(V=\root m \of {{\sigma _X }}\) in the MAX operator, then the density of 1’s will be \({\textstyle {{n^2 + 4\mu _{\max } } \over {2n(n - 1) + 4\mu _{\max } }}}\). To get a probability of success \(\hbox {Pr}_{\max }\) to find \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) after the first iteration,

then the required number of temporary qubits \(\mu _{\max }\) is calculated as follows,

where \(\omega = {\textstyle {2 \over \pi }}\sin ^{ - 1} \left( {\sqrt{{{{\hbox {Pr}_{\max } } \over {M\alpha ^2 }}}} } \right) \) and \(\hbox {Pr}_{\max } < {\textstyle {{M \alpha ^2 }}}\), with \(M\alpha ^2=1\) so let \(\hbox {Pr}_{\max } = \delta {\textstyle {{M \alpha ^2 } }}\) such that \(0<\delta <1\). For example, if \(\delta =0.9\), then \(\hbox {Pr} ^{(1)} \left( {ax_2 = 1} \right) \) will be at least 90 % as shown in Fig. 5. To conclude, the problem of low density of 1’s can be solved with a polynomial increase in the number of qubits to get the solution \(\left| z_{\max } \right\rangle \) in \(O\left( m_{ext}^2\right) =O\left( {n^4 } \right) \) iterations with arbitrary high probability \(\delta <1\) to terminate in poly-time, i.e., to read \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) for r times in a row.

The probability of success for a max-bisection instance of the graph shown in Fig. 1 with \(n=8, m=12, \mu _{\max }=31\) and \(\delta =0.9\), where the probability of success of \(\left| ax_2\right\rangle \) is 0.9305 after the first iteration and with probability of success of 0.9662 after iterating the algorithm where the probability of success of \(\left| z_{\max }\right\rangle \) is amplified to reach the probability of success of \(\left| ax_2\right\rangle \)

3.4.2 Adjustment for the min-bisection problem

Similar to the above approach, in an arbitrary graph, the density of 0’s will be \({\textstyle {{d_{\max 0} } \over m}}\). In the case of a complete graph, there are M states with 0-distance (\(d_k\)) equals to \({\textstyle {{n(n - 1)} \over 2}}-{\textstyle {{n^2} \over 4}}\). This case represents the worst density of 0’s where the density will be \({\textstyle {{n-2 } \over {2(n - 1)}}}\) slightly less than 0.5 for arbitrary large n. Iterating the proposed algorithm will not lead to any amplification after arbitrary number of iterations. To overcome this problem, add \(\mu _{\min }\) temporary qubits initialized to state \(\left| 0 \right\rangle \) to the register \(\left| {c_0 c_1 ...c_{m - 1} } \right\rangle \) as follows,

so that the extended number of edges \(m_{ext}\) will be \(m_{ext}=m+\mu _{\min }\) and \(V=\root m_{ext} \of {{\sigma _X }}\) will be used instead of \(V=\root m \of {{\sigma _X }}\) in the MIN operator, then the density of 0’s will be \({\textstyle {{n^2 -2n + 4\mu _{\min } } \over {2n(n - 1) + 4\mu _{\min } }}}\). To get a probability of success \(\hbox {Pr}_{\max }\) to find \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) after the first iteration,

then the required number of temporary qubits \(\mu _{\min }\) is calculated as follows,

where \(\omega = {\textstyle {2 \over \pi }}\sin ^{ - 1} \left( {\sqrt{{{{\hbox {Pr}_{\max } } \over {M\alpha ^2 }}}} } \right) \) and \(\hbox {Pr}_{\max } < {\textstyle {{M \alpha ^2 }}}\), with \(M\alpha ^2=1\) so let \(\hbox {Pr}_{\max } = \delta {\textstyle {{M \alpha ^2 } }}\) such that \(0<\delta <1\). For example, if \(\delta =0.9\), then \(\hbox {Pr} ^{(1)} \left( {ax_2 = 1} \right) \) will be at least 90 %. To conclude similar to the case of the max-bisection graph, the problem of low density of 0’s can be solved with a polynomial increase in the number of qubits, larger than the case of the max-bisection graph, to get the solution \(\left| z_{\min } \right\rangle \) in \(O\left( m_{ext}^2\right) =O\left( {n^4 } \right) \) iterations with arbitrary high probability \(\delta <1\) to terminate in poly-time, i.e., to read \(\left| ax_2 \right\rangle =\left| 1 \right\rangle \) for r times in a row.

4 Conclusion

Given an undirected graph G with even number of vertices n and m unweighted edges, the paper proposed a bounded-error quantum polynomial-time (BQP) algorithm to solve the max-bisection problem and the min-bisection problem, where a general graph is considered for both problems.

The proposed algorithm uses a representation of the two problems as a Boolean constraint satisfaction problem, where the set of edges of a graph are represented as a set of constraints. The algorithm is divided into three stages: The first stage prepares a superposition of all possible equally sized graph partitions in \(O\left( {\root 4 \of {n}} \right) \) using an amplitude amplification technique that runs in \(O\left( {\sqrt{{\textstyle {N \over M}}} } \right) \), for \(N=2^n\) and M is the number of possible graph partitions. The algorithm, in the second stage, evaluates the set of constraints for all possible graph partitions. In the third stage, the algorithm amplifies the amplitudes of the best graph bisection that achieves maximum/minimum satisfaction to the set of constraints using an amplitude amplification technique that applies an iterative partial negation where more negation is given to the set of constrains with more satisfied constrains and a partial measurement to amplify the set of constraints with more negation. The third stage runs in \(O(m^2)\) and in the worst case runs in \(O(n^4)\) for a dense graph. It is shown that the proposed algorithm achieves an arbitrary high probability of success of \(1-\epsilon \) for small \(\epsilon >0\) using a polynomial increase in the space resources by adding dummy constraints with predefined values to give more negation to the best graph bisection.

References

Armbruster, M.: Branch-and-Cut for a Semidefinite Relaxation of Large-Scale Minimum Bisection Problems. Ph.D. thesis, Technische Universitt Chemnitz (2007)

Armbruster, M., Fgenschuh, M., Helmberg, C., Martin, A.: A comparative study of linear and semidefinite branch-and-cut methods for solving the minimum graph bisection problem. In: Proceedings ofthe Conference on Integer Programming and Combinatorial Optimization (IPCO), LNCS, vol. 5035, pp. 112–124, (2008)

Bazgan, C., Karpinski, M.: On the complexity of global constraint satisfaction. In: Proceeding of 16th Annual International Symposium on Algorithms and Computation, vol. LNCS 3827, Springer, pp. 624–633 (2005)

Bazgan, C.: Paradigms of Combinatorial Optimization: Problems and New Approaches. In: Paschos, V.T. (ed) (Chapter 1: Optimal satisfiability), vol. 2, p. 25, Wiley-ISTE, (2010)

Bhatt, S.N., Leighton, F.T.: A framework for solving VLSI graph layout problems. J. Comput. Syst. Sci. 28(2), 300–343 (1984)

Delling, D., Goldberg, A.V., Razenshteyn, I., Werneck, R.F.: Graph partitioning with natural cuts. In: IEEE, Proceedings of the IEEE International Parallel and Distributed Processing Symposium (IPDPS), pp. 1135–1146 (2011)

Delling, D., Werneck, R.F.: Faster customization of road networks. In: Proceedings of the International Symposium on Experimental Algorithms (SEA). LNCS, vol. 7933, pp. 30–42. Springer, Berlin (2013)

Delling, D., Fleischman, D., Goldberg, A.V., Razenshteyn, I., Werneck, R.F.: An exact combinatorial algorithm for minimum graph bisection. Math. Program. (2014). doi:10.1007/s10107-014-0811-z

Josep Daza, J., Kamiskib, M.: MAX-CUT and MAX-BISECTION are NP-hard on unit disk graphs. Theor. Comput. Sci. 377(13), 271–276 (2007)

Feige, U., Langberg, M.: The RPR\(^2\) rounding technique for semidefinite programs. J. Algorithms 60, 1–23 (2006)

Feldmann, A.E., Widmayer, P.: An \(O(n^4)\) time algorithm to compute the bisection width of solid grid graphs. In: Proceedings of the European Symposium on Algorithms (ESA), LNCS, vol. 6942, pp. 143–154, Springer, (2011)

Guruswami, V., Makarychev, Y., Raghavendra, P., Steurer, D., Zhou, Y.: Finding almost-perfect graph bisections. In: Proceedings of ICS, pp. 321–337 (2011)

Garey, M.R., Johnson, D.S.: Computers and Intractability. A Guide to the Theory of NP-Completeness. W. H. Freeman and Company, London (1979)

Garey, M.R., Johnson, D.S., Stockmeyer, L.J.: Some simplified NP-complete graph problems. Theor. Comput. Sci. 1, 237–267 (1976)

Grover, L.K.: Quantum mechanics helps in searching for a needle in a haystack. Phys. Rev. Lett. 79, 325 (1997)

Hager, W.W., Phan, D.T., Zhang, H.: An exact algorithm for graph partitioning. Math. Program. 137, 531–556 (2013)

Hendrickson, B., Leland, R.: An improved spectral graph partitioning algorithm for mapping parallel computations. SIAM J. Sci. Comput. 16(2), 452–469 (1995)

Hein, M., Bhler, T.: An inverse power method for nonlinear eigen problems with applications in 1-spectral clustering and sparse PCA. In: Proceedings Advances in Neural Information Processing Systems (NIPS), pp. 847–855 (2010)

Høyer, P.: Arbitrary phases in quantum amplitude amplification. Phys. Rev. A 62, 52304 (2000)

Jansen, K., Karpinski, M., Lingas, A., Seidel, E.: Polynomial time approximation schemes for MAX-BISECTION on planar and geometric graphs. SIAM J. Comput. 35, 110–119 (2005)

Johnson, E., Mehrotra, A., Nemhauser, G.: Min-cut clustering. Math. Program. 62, 133–152 (1993)

Kwatra, V., Schdl, A., Essa, I., Turk, G., Bobick, A.: Graph cut textures: image and video synthesis using graph cuts. ACM Trans. Graph. 22, 277–286 (2003)

Land, A.H., Doig, A.G.: An automatic method of solving discrete programming problems. Econometrica 28(3), 497–520 (1960)

Lipton, R.J., Tarjan, R.: Applications of a planar separator theorem. SIAM J. Comput. 9, 615–627 (1980)

Malewicz, G., Austern, M.H., Bik, A.J., Dehnert, J.C., Horn, I., Leiser, N., Czajkowski, G.: Pregel, a system for large-scale graph processing. In: PODC, p. 6. ACM, (2009)

Meyerhenke, H., Monien, B., Sauerwald, T.: A new diffusion-based multilevel algorithm for computing graph partitions. J Parallel Distrib. Comput. 69(9), 750–761 (2009)

Rcke, H.: Optimal hierarchical decompositions for congestion minimization in networks. In: Proceedings of the ACM Symposium on Theory of Computing (STOC), pp. 255–263. ACM Press, New York (2008)

Raghavendra, P., Tan, N.: Approximating CSPs with global cardinality constraints using SDP hierarchies. In: Proceedings of SODA, pp. 373–387 (2012)

Rivlin, T.J.: Chebyshev Polynomials. Wiley, New York (1990)

Shi, J., Malik, J.: Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 22(8), 888–905 (2000)

Sanders, P., Schulz, C.: Distributed evolutionary graph partitioning. In: Proceedings of the Algorithm Engineering and Experiments (ALENEX), pp. 16–29. SIAM (2012)

Sanders, P., Schulz, C.: Think locally, act globally: highly balanced graph partitioning. In: Proceedings of the International Symposium on Experimental Algorithms (SEA). LNCS, vol. 7933, pp. 164–175. Springer, Berlin (2013)

Xu, Z., Du, D., Xu, D.: Improved approximation algorithms for the max-bisection and the disjoint 2-catalog segmentation problems. J. Comb. Optim. 27(2), 315–327 (2014)

Younes, A., Rowe, J., Miller, J.: Enhanced quantum searching via entanglement and partial diffusion. Phys. D. 237(8), 1074–1078 (2007)

Younes, A.: Towards more reliable fixed phase quantum search algorithm. Appl. Math. Inf. Sci. 7(1), 93–98 (2013)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Younes, A. A bounded-error quantum polynomial-time algorithm for two graph bisection problems. Quantum Inf Process 14, 3161–3177 (2015). https://doi.org/10.1007/s11128-015-1069-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11128-015-1069-y