Abstract

A procedure for estimating the number of mature apples in orchard images captured at night-time with artificial illumination was developed and its potential for estimating yield was investigated. The procedure was tested using four datasets totaling more than 800 images taken with cameras positioned at three heights. The procedure for detecting apples was based on the observation that the light distribution on apples follows a simple pattern in which the perceived light intensity decreases with the distance from a local maximum due to specular reflection. Accordingly, apple detection was achieved by detecting concentric circles (or parts of circles) in binary images obtained via threshold operations. For each dataset, after calibration of the procedure using 12 images, the estimates of the number of apples were within a few percent of the number of apples counted by visual inspection. Yield estimations were obtained via multi-linear models that used between two and six images per tree. The results obtained using all three cameras were only slightly better than those obtained using only two cameras. Using images from only one side of the tree did not worsen the results significantly. Overall, the yield estimated by the best models was within \(\pm\)10 % of the actual yield. However, the standard deviation of the yield estimation errors corresponded to ~26–37 % of the average tree yield, indicating that improvements are still needed in order to achieve accurate yield estimation at the single-tree level.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Estimation of the expected yield is still a major challenge in orchards. Such information would be valuable to growers and companies that provide harvesting and post-harvesting services as it would help them manage equipment and labor force more effectively. To date, yield estimation is based on visual inspection of a limited number of trees by the grower, which is both time consuming and prone to errors. At first glance, it may seem that this task could be easily automated using computer vision. However, the unstructured and cluttered environment of the tree canopy makes this task very challenging, especially when the fruits are featureless and with a color similar to that of the canopy. Jiménez et al. (2000), Kapach et al. (2012) and Gongal et al. (2015) compiled reviews on the general topic of fruit detection and location in tree images, although Kapach et al. (2012) focused on vision-based fruit location for harvesting robots, which imposes additional constraints on the system (such as computing efficiency and accurate location). These review papers also discussed the pros and cons of the various types of imaging system (e.g. color, depth/time-of-flight, hyperspectral, thermal imaging). Standard color cameras offer obvious advantages in terms of cost and ease of operation, and a large number of studies have focused on the use of such cameras (e.g. Pla et al. 1993; Zhao et al. 2005; Kurtulmus et al. 2011; Zhou et al. 2012; Silwal et al. 2014). However, the images captured with such cameras are very sensitive to the illumination conditions (e.g. Linker et al. 2012; Payne et al. 2013). Night-time imaging using artificial illumination minimizes this issue and may constitute an advantageous alternative (e.g. Sites and Delwiche 1988; Payne et al. 2014; Cohen et al. 2014; Linker and Kelman 2015). The recent studies of Cohen et al. (2014) and Linker and Kelman (2015), which focused on the detection of green mature apples in night-time images, reported encouraging results with a strong linear relationship between the number of objects identified as apples and the number of apples counted by visual inspection of the images. However, as mentioned by Wang et al. (2012), the performance of vision-based yield estimation depends on two factors: (1) how accurately apples are detected by the image processing procedure and (2) how accurately the number of apples visible in the tree image(s) correlates with yield. This second factor was not investigated in the studies of Cohen et al. (2014) and Linker and Kelman (2015). Vision-based estimation of green apple yields has been reported by Wang et al. (2012), who used night-time color images, and Stajnko et al. (2004), who used day-time thermal images. Both studies reported R2 values between the estimated and actual number of fruit per tree in the range 0.8–0.9. However, these studies were conducted with small trees characterized by low fruit loads and a sparse canopy: Although Wang et al. (2012) did not provide detailed results at the tree level, it can be inferred from the results that the average tree yield was ~35 apples; Stajnko et al. (2004) reported yields ranging from 50 to 120 apples per trees. While such “small trees/high planting density” orchards are increasingly popular in Europe and the US, Israeli growers still favor older and larger trees, mostly due to Jewish Laws which prevent consuming the harvest of the first 3 years after planting. As a result, Israeli mature orchards such as the one used in the present study have a much higher fruit load (target yield of ~300–350 fruit per tree). The trees are also much larger and the canopy is very dense (Fig. 1). The dense canopy makes the detection of fruit especially challenging and the significant depth of the canopy means that a lower percentage of the overall fruit is visible to the camera.

The objective of the present study was 2-fold. First, to develop and test a novel procedure for detecting and locating apples in night-time orchard images. Second, to determine whether tree yield could be inferred from such images. Special emphasis was attached to using calibration sets with as few images/trees as possible since the user needs to provide ground-truth information for these images/trees. The present work should be viewed as an extension of previous works of Linker and co-authors dealing with fruit location and yield estimation: Linker et al. (2012) suggested an approach based on color and texture analysis, which was initially applied to day-time images and later to night-time images (Cohen et al. 2014). Linker and Kelman (2015) used light distribution around local maxima to locate apple in night-time images. These two approaches were compared by Linker et al. (2015), who also reported for the first time preliminary results relative to yield estimation. Besides presenting a novel approach for locating apples, which is somewhat similar to the one described by Linker and Kelman (2015) but simpler to calibrate and implement, this paper investigates more thoroughly the possibility of using night-time images covering only part of a tree for estimating tree yield.

Materials and methods

Datasets

The work was conducted with four datasets of images captured in “Golden Delicious” orchards at the Matityahu Research Station in Northern Israel in 2013, 2014 and 2015. The imaging system and the 2013 dataset (156 images of 26 trees) have been previously described in Linker and Kelman (2015). The same imaging system was used to capture images on July 17, 2014, August 5, 2014, and August 13, 2015. In 2014, images were captured from both sides of the trees (258 images of 43 trees, same trees on both nights) while in 2015 images were captured only from one side of the trees (156 images of 52 trees). In all 3 years, each tree was picked individually at the end of the season to provide ground-truth yield data but, due to a technical mistake, the yield of three trees was not recorded properly in 2013. In all three datasets, the number of fruit visible in each image was determined by visual inspection.

Algorithm

Concept

The development of the image analysis procedure was based on the observation that since apples are basically spherical objects with uniform texture, in night-time images captured with artificial illumination, the light distribution on apples is expected to follow a simple pattern in which the perceived light intensity decreases with the distance from a local maximum due to specular reflection. Figure 2a–c shows that this is indeed the case for ideal “fully visible” apples. In particular, the binary image displayed in Fig. 2c (which was obtained by applying simple thresholds to Fig. 2b) shows that pixels with similar intensities form rings. Furthermore, Fig. 2d–f show that even when an apple is partially occluded, the light distribution on the region of the apple surface which is visible follows a similar circular pattern. In other words, if one considers a set of pixels which have a similar intensity, the “apple pixels” will form part of a ring. Figure 2c and f also shows that the rings corresponding to different intensities are (approximately) concentric. Accordingly, the procedure described below is based on identifying rings (not necessarily complete) and estimating their centers. Apples are detected where a sufficient number of concentric rings are found.

Two typical sub-images. Frames a and d show original sub-images (300 by 300 pixels and 500 by 500 pixels, respectively). Frames b and e show gray-level versions of frames a and d. Frames c and f show binary images obtained by applying the thresholding operation \(B\left( {i,j} \right) = \left( {\tau_{1} \le I\left( {i,j} \right) \le \tau_{1} + 10{ | }\tau_{2} \le I\left( {i,j} \right) \le \tau_{2} + 10} \right)\). In frame c the inner and outer rings on apples correspond to \(\tau_{1} =\) 170 and \(\tau_{2} =\) 200, respectively. In frame f the inner and outer rings on the apple correspond to \(\tau_{1} =\) 120 and \(\tau_{2} =\) 170, respectively. In both cases the results obtained with additional values of \(\tau\), which led to additional concentric circles on the apples, are not shown for clarity

Detailed description of the procedure

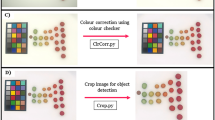

The flowchart of the procedure is presented in Fig. 3. The original color image was converted into a gray-scale image according to

where \(I\) denotes the gray level and \(R\), \(G\) and \(B\) denote the original values of the Red, Green and Blue channels, respectively.

Following this conversion, a binary image was obtained by simple thresholding operation, keeping only the pixels with intensity between \(\tau_{\hbox{min} }\) and \(\tau_{\hbox{min} } + 10\). The purpose of this operation was to extract all the pixels within a given intensity range, The Circle Hough Transform (CHT) (in its phase-coding formulation developed by Atherton and Kerbyson (1999)) was used to detect circles within a given radius range in this binary image. The CHT returns not only the estimated center and radius of each circle but also the so-called “accumulator value” associated with each circle. This accumulator value is a measure of the certainty of the circle detection. The results (centers, radii and accumulator values) were stored in a dynamic stack and the procedure was repeated after incrementing the threshold \(\tau\) until the maximum value \(\tau_{\hbox{max} }\) was reached. The stack containing all the detections was then scanned recursively and centers which were within \(D_{\hbox{max} }\) pixels of each other were united, i.e. considered to correspond to concentric circles. Finally, the score (mean accumulator value) associated with each set of concentric circles was calculated:

where \(A_{i,k}\) is the value of the accumulator of the kth circle included in the ith set of concentric circles and \(n_{i}\) is the number of circles in this set. The sets of concentric circles containing a sufficient number of circles and having a high enough score were considered to correspond to apple objects.

Calibration

The whole procedure required four kinds of parameters:

-

The minimum and maximum values (\(\tau_{\hbox{min} }\) and \(\tau_{\hbox{max} }\)) for the iterative thresholding operation

-

The search for circles using CHT required a radius range and a minimum value of the accumulator for circle detection.

-

Uniting circles required a value for \(D_{\hbox{max} }\), the maximum distance between centers for considering circles as concentric

-

Apple detection required a minimum value for the score of concentric circles. Rather than considering a single parameter, the required score was related to the number of concentric circles as follows:

where \(S_{i}\) is the score associated with the ith set of concentric circles, \(n_{i}\) is the number of concentric circles in this set and \(\theta \left( n \right)\) is the minimum score required.

The values of \(\tau_{\hbox{min} }\) and \(\tau_{\hbox{max} }\) can be readily set by inspecting the dynamic range of the images. For the datasets used in this study, such inspection led to selecting \(\tau_{\hbox{min} } = 100\) and \(\tau_{\hbox{max} } = 200\).

With respect to the CHT analysis, preliminary analysis with five images led to performing CHT with two radius ranges with different accumulator thresholds, and combining the results

-

Radius range 10–30 pixels, accumulator threshold \(\alpha_{1}\)

-

Radius range 31–60 pixels, accumulator threshold \(\alpha_{2}\)

In order to reduce the computation burden, the parameters \(\alpha_{1}\) and \(\alpha_{2}\) were restricted to values ranging from 0.02 to 0.20 at 0.03 increments (7 values), leading to 49 possible combinations of \(\left( {\alpha_{1} ,\alpha_{2} } \right)\). The range for the accumulator threshold was based on the accumulator values observed for typical situations, such as shown in Fig. 2. For instance, the accumulator values for the “apple” inner and outer rings shown in Figs. 2c and f were in the range 0.16–0.20 and 0.08–0.10, respectively.

Calibration of the parameters was performed using a genetic algorithm with the following parameters: Uniform cross-over function, cross-over fraction: 0.8, migration factor: 0.2, parent selection by stochastic universal sampling, mutation using Gaussian distribution, population size: 20, number of generations: 30. The procedure was calibrated separately for each dataset. In each case, 12 images were selected randomly and the genetic algorithm was used to determine the set of parameters which led to the highest coefficient of correlation (R2) between the number of detections and the actual number of apples.

Comparison with other approaches

The 2013 dataset has been previously used in the studies of Cohen et al. (2014) and Linker and Kelman (2015) who used color-and-texture analysis (described in Linker et al. 2012) and spatial light distribution, respectively. These two approaches were applied to the July 2014 dataset. Since the datasets of 2013 and July 2014 were similar in terms of apple size (apple radius approx. 60 pixels), the procedure used by Linker and Kelman (2015) was applied “as-is”, i.e. without recalibration. The procedure of Cohen et al. (2014) had to be recalibrated. This was achieved using the same 12 calibration images as above, in which close to 350 apples were labeled manually. A genetic algorithm was used to determine the set of parameters which led to the highest coefficient of correlation (R2) between the number of detections and the actual number of apples.

Yield estimation

Various regression models for estimating tree yield using the information extracted from the images were developed. As detailed in Linker and Kelman (2015), the imaging system captured three images at fixed heights. In 2013 and 2014, images were captured from both sides of the tree while in 2015 images were captured only from one side. Different yield models were investigated, based on different sets of images: Images from all heights (from one side only or both sides); bottom and middle images only (from one side only or both sides); middle and top images only (from one side only or both sides); and bottom and top images only (from one side only or both sides). The general form of the models based on images from both tree sides was

where \(B_{i,k}\), \(M_{i,k}\) and \(T_{i,k}\) are the number of detections in the bottom, middle and top image of the side \(i\) of the kth tree, respectively. Similarly, the general form of the models based on images of one side only was

In both cases, the coefficients corresponding to images not used in the model were set to 0. Altogether eight types of models were developed for the 2013 and 2014 datasets, and four types of models for the 2015 dataset. Due to the small size of the 2013 dataset, it was not possible to split this dataset into two distinct calibration and validation sub-sets. Therefore models were created using all the available data and the objective was merely to establish the feasibility of developing such models. For the 2014 and 2015 datasets, calibration of the models was performed using the images of 20 trees selected randomly and the remaining trees were used to validate the models. The sensitivity of the results to the choice of the calibration trees was investigated by repeating the procedure ten times using different calibration trees. This Monte-Carlo cross-validation approach was preferred over the more common k-fold cross-validation approach since k-fold cross-validation with k = 10 would have resulted in relatively large calibration sets (47 trees) while one of the objectives of this study was to rely on as few calibration trees as possible. In addition, the Monte-Carlo approach made it possible to use the same number of calibration trees for both years despite the different sizes of the datasets.

Results

Estimation of the number of apples in images

Preliminary analysis with eight of the calibration images (two from each dataset, selected randomly) indicated that most apples were associated with at least three concentric circles, which led to considering the following score threshold \(\theta \left( n \right)\):

The corresponding procedure required calibration of six parameters: \(\left( {D_{\hbox{max} } ,\varLambda ,\theta_{1} ,\theta_{2} ,\theta_{3} ,\theta_{4} } \right)\), where \(\varLambda\) denotes the identifier of the \(\left( {\alpha_{1} ,\alpha_{2} } \right)\) combination (integer variable). The results of the calibration are presented in Table 1. For all four datasets, a correlation coefficient higher than 0.90 was obtained between the number of detections and the actual number of apples according to the manual count. However, the relationship between the two varied between the datasets. Also, the fitted values of most of the parameters varied between dataset, with the noticeable exception of \(\alpha_{1}\) and \(\alpha_{2}\)(the accumulator thresholds of the CHT procedure). Increasing the size of \(\theta\)(i.e. considering different score thresholds for 4, 5, or more circles) improved the results only marginally (details not shown).

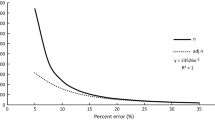

The results of the calibration are also presented graphically in the left frames of Fig. 4. For each dataset, the calibrated model was used to estimate the number of apples in all the images, leading to the results presented in the Fig. 4e–h and Table 2. Overall, the error between the actual and estimated number of apples in the images corresponded to less than 1 % of the actual number of apples for three of the datasets and 6 % for the last dataset. There was no consistent relationship between the type of image (i.e. camera position) and the estimation error. Although the standard deviations of the estimation errors appear to be much higher in 2014 and 2015, this is partly due to the fact that the number of apples per image was much lower in 2013: on average 43 apples per image in 2013, compared to 69, 75 and 77 apples per image in the dataset of July 2014, August 2014 and 2015, respectively.

Left frames: Relationship between the number of objects detected and the actual number of apples in the 12 calibration images of the datasets of 2013, July 2014, August 2014 and 2015, respectively. Right frames: Actual Versus estimated number of apples in each image of the datasets of 2013, July 2014, August 2014 and 2015, respectively. The symbols indicate the camera position

Although the main objective of this study was to obtain a high correlation between the number of objects detected and the actual number of apples, it was still of interest to estimate the accuracy of the procedure by considering the number of false positive and missed detections. These were evaluated for the calibration images and 12 additional images chosen randomly. The results are summarized in Table 3. There was a large difference between the results obtained for the 2013 dataset and the other datasets. For the 2013 dataset, the number of false positive was 1–2 % and the number of missed detections was around 10 % while, for the other datasets, the number of false positive was around 7 % and the number of missed detections was around 33 %. Since the same imaging system was used on all years and the images were captured at a similar developmental stage, these large differences appear to be related to the number of fruit visible in the images, which, as noted above, was much lower in 2013.

Yield estimation

As mentioned above, multi-linear models were calibrated for each dataset using images from two or three cameras, either from only one side or from both sides of the row. The results for the dataset of 2013 are presented in Table 4. The standard deviation of the yield estimation error ranged from 78 to 105 fruit/tree, which corresponds to ~20–27 % of the average tree yield (390 fruit/tree). The best results were obtained when using either all three cameras or only the middle and top cameras. Using images from only one side did not affect the results dramatically.

The results for the dataset of July 2014 are presented in Tables 5 and 6. As mentioned above, for each type of model, ten models were calibrated using different subs-sets of the data. The results in Tables 5 and 6 correspond only to the 23 trees not used to calibrate the respective model. Overall, as with the 2013 dataset, very similar results were obtained using images from only one side or both sides of the row (comparison between corresponding columns in Tables 5 and 6). The results obtained using all three cameras were only slightly better than those obtained using only either the bottom and top or the middle and top cameras. The relative error of yield estimation depended on the (random) choice of the trees used for calibration. However, the estimated yield was almost always within \(\pm\)10 % of the actual yield. The standard deviation of the yield estimation error of the models based on all three cameras ranged from 132 to 185 fruit/tree, which corresponds to ~26–37 % of the average tree yield (~500 fruit per tree). The estimation error at the tree level can be appreciated in Fig. 5 which shows the results of Model 3 based on all images from both sides of the row.

Actual yield Versus yield estimated using all images from both sides of the row (July 2014 dataset, Model 3 in Table 5)

The results for the dataset of August 2014 and 2015 are presented in Tables 7, 8, 9. As with the July 2014 dataset, using all three cameras or only the bottom and top cameras provided the best results, and using images from both sides did not improve the results appreciably for the August 2014 dataset. Overall, in terms of yield estimation, the results are slightly worse than those obtained for the July 2014 dataset. Still, the yield estimated by the large majority of models is within \(\pm\)10 % of the actual yield. In terms of yield estimation at the level of individual trees, for both datasets, the standard deviation of the yield estimation error ranged from ~26 to 35 % of the average tree yield (~640 fruit per tree in 2015).

Discussion

The performance of vision-based yield estimation must be judged based on two criteria: (1) how accurately apples are detected by the image processing algorithm and (2) how accurately the yield can be estimated using the number of objects detected in the images.

In terms of apple detection, the procedure is far from excellent, with a correct detection rate ranging from ~65 to 85 %. Furthermore, the number of missed apples appears to depend on the number of apples in the image. Nevertheless, within a given dataset, the detection rate was very consistent, so that it was possible to use a small number of images (12) to establish a relationship between the number of detected objects and the actual number of apples. For each dataset, such a relationship led to an estimate of the total number of fruit which was within a few percent of the number of fruit counted by visual inspection. A comparative investigation with the procedures described by Cohen et al. (2014) and Linker and Kelman (2015) was conducted with the datasets of 2013 and July 2014. Table 10 shows that all three approaches led to similar results. The advantage of the present approach is its simplicity and the fact that it requires calibration of only a small number of parameters. Also, by comparison to approaches that includes a supervised classifier (such as Cohen et al. 2014), the present method does not require time-consuming labeling of objects in the calibration images.

In terms of yield prediction, the results are encouraging but further improvements are required to reduce the estimation error at the single-tree level. Two factors contribute to the relatively large errors at the single-tree level: the simplicity of the models used and the significant overlap between neighboring trees. In terms of modeling, the multi-linear models used in this work are over-simplistic and more accurate models could probably be obtained using more flexible, non-linear models such as neural networks. However, such models would require a much larger dataset for calibration. In its present state, the method requires that the grower provides the actual yield of 20 trees, which does not seem excessive. The use of more flexible models (prone to overfitting) would require that the grower provides the actual yield for a much larger number of trees, which would not be realistic. However, the limited performance of the multi-linear models is also probably partly due to the significant overlap that exists between neighboring trees. While such overlap is virtually non-existent in images acquired in young orchards with small trees, such as used by Wang et al. (2012), in mature orchards such overlap is unavoidable and further work will be required to deal adequately with this issue. Finally, since the images captured by neighboring cameras overlap, performing registration of the images and analyzing a single composite image for each side of the tree would probably improve the results. Due to the three-dimensional architecture of the trees and the lack of clear “anchor points” for registration (corners, lines, etc.) image registration is far from trivial and was not attempted in the present study.

Conclusion

Yield estimation using color imaging remains a challenging task. The approach presented in this work is simple and requires ground truth data for only 20 trees. Although the actual yield estimation error depended on the choice of the calibration trees, in the present study, the total yield estimates were almost always within \(\pm\)10 % of the actual yield. However, the standard deviation of the yield estimation errors corresponded to more than 25 % of the average tree yield, indicating that improvements are still needed in order to achieve accurate yield estimation at the single-tree level. Furthermore, the relationship between the number of visible (or located) apples and tree yield was specific to each dataset, meaning that in practice the grower would be required to provide ground-truth data for the calibration trees. For practical applications, unless one were able to devise a one-fits-all relationship, one of the main challenges will be to develop models with enhanced performance while maintaining the calibration set reasonably small.

References

Atherton, T. J., & Kerbyson, D. J. (1999). Size invariant circle detection. Image and Vision Computing, 17, 795–803.

Cohen, O., Linker, R., Payne, A. & Walsh, K. (2014). Detection of fruit in canopy night-time images: Two case studies with apple and mango. In: Proceedings of the 12th International Conference on Precision Agriculture. ISPA, Monticello, IL, USA, Paper 1420. https://www.ispag.org/icpa/Proceedings

Gongal, A., Amatya, S., Karkee, M., Zhang, Q., & Lewis, K. (2015). Sensors and systems for fruit detection and localization: a review. Computers and Electronics in Agriculture, 116, 8–19.

Jiménez, A. R., Ceres, R., & Pons, J. L. (2000). A survey of computer vision methods for locating fruit on trees. Transactions of the ASAE, 43, 1911–1920.

Kapach, K., Barnea, E., Mairon, R., Edan, Y., & Ben Shahar, O. (2012). Computer vision for fruit harvesting robots—state of the art and challenges ahead. International Journal of Computer Vision and Robotics, 3, 4–34.

Kurtulmus, F., Lee, W. S., & Vardar, A. (2011). Green citrus detection using ‘eigenfruit’, color and circular gabor texture features under natural outdoor conditions. Computer and Electronics in Agriculture, 78, 140–149.

Linker, R., Cohen, O., & Naor, A. (2012). Determination of the number of green apples in RGB images recorded in orchards. Computers and Electronics in Agriculture, 81, 45–57.

Linker, R., & Kelman, E. (2015). Apple detection in nighttime tree images using the geometry of light patches around highlights. Computers and Electronics in Agriculture, 114, 154–162.

Linker, R., Kelman, E., & Cohen, O. (2015). Estimation of apple orchard yield using night time imaging. In Proceedings of the 10th European Conference on Precision Agriculture, 12–16 July 2015, Beit Dagan, Israel.

Payne, A., Walsh, K. B., Subedi, P., & Jarvis, D. (2013). Estimation of mango crop yield using image analysis–segmentation method. Computers and Electronics in Agriculture, 91, 57–64.

Payne, A., Walsh, K. B., Subedi, P., & Jarvis, D. (2014). Estimating mango crop yield using image analysis using fruit at ‘stone hardening’ stage and night time imaging. Computers and Electronics in Agriculture, 100, 160–167.

Pla, F., Juste, F., & Ferri, F. (1993). Feature extraction of spherical objects in image analysis: an application to robotic citrus harvesting. Computers and Electronics in Agriculture, 8, 57–72.

Silwal, A., Gongal, A., & Karkee, M. (2014). Apple identification in field environment with over-the- row machine vision system. Agriculture Engineering International: CIGR Journal, 6, 66–75.

Sites, A. & Delwiche, M.J. (1988). Computer vision to locate fruit on a tree. Paper No. 85-3039, ASAE, St Joseph, MI, USA.

Stajnko, D., Lakota, K., & Hocevar, M. (2004). Estimation of number and diameter of apple fruits in an orchard during the growing season by thermal imaging. Computers and Electronics in Agriculture, 42, 31–42.

Wang, Q., Nuske, S., Bergerman, M. & Singh, S. (2012). Automated crop yield estimation for apple orchards. In: Proceedings of the 13th International Symposium on Experimental Robotics. Quebec City, Canada.In: Desai, J.P., Dudek, G., Khatib, O., Kumar, V(Eds). pp: 745–758

Zhao, J., Tow, J. & Katupitiya, J. (2005). On-tree fruit recognition using texture properties and color data. In: IEEE/RSJ International Conference on Intelligent Robots and Systems, (pp. 263–268).

Zhou, R., Damerow, L., Sun, Y., & Blanke, M. M. (2012). Using colour features of cv. ‘Gala’ apple fruits in an orchard in image processing to predict yield. Precision Agriculture, 13, 568–580.

Acknowledgments

This work was supported by the Israel Plants Production & Marketing Board—Apple Division. The author wishes to thank the team at the Matityahu Research Station.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Linker, R. A procedure for estimating the number of green mature apples in night-time orchard images using light distribution and its application to yield estimation. Precision Agric 18, 59–75 (2017). https://doi.org/10.1007/s11119-016-9467-4

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11119-016-9467-4