Abstract

This paper proposes a line search technique to solve a special class of multi-objective optimization problems in which the objective functions are supposed to be convex but need not be differentiable. This is an iterative process to determine Pareto critical points. A suitable sub-problem is proposed at every iteration of the iterative process to determine the direction vector using the sub-differential of every objective function at that point. The proposed method is verified in numerical examples. This methodology does not bear any burden of selecting suitable parameters like the scalarization methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The formulation of a general unconstrained multi-objective optimization problem is

where \(\mathscr {F}(x)=(f_1(x),f_2(x),f_3(x),\ldots ,f_m(x))^T\), \(f_i: \mathbb {R}^n\rightarrow \mathbb {R},~\) \(i=1,2,3,\ldots ,m\), \(m\ge 2\). In an ideal situation, a single point minimizes all the objective functions. But, in real-life situations, at least one objective is compromised. Therefore, the solution of MP is termed as a compromising/Pareto optimal/efficient solution. The scalarization approaches [1,2,3] are the well-known techniques to solve MP, which transform MP into a single objective optimization problem using some parameters, provided by the decision maker. These methods often have difficulties in finding a well-diversified Pareto front. Heuristic methods [4,5,6,7,8,9] are widely used to solve MP, for which convergence property is not guaranteed. However, these methods usually provide approximate Pareto front.

In recent years, line search techniques for single objective cases have been extended to MP to avoid these difficulties. Some of the line search techniques for MP are the steepest descent methods [10, 11], projected gradient methods [12, 13], Newton method [14], quasi-Newton methods [15], q-Newton’s method [16], q-steepest descent method [17], Newton’s method for uncertain MP [18] and some known references for constrained MP are sequential quadratic programming techniques [19], sequential quadratically constrained quadratic programming techniques [20, 21] etc.. The general line search techniques for MP, which are developed in these reference papers, generate a sequence of points \(\{x^k\}\) as \(x^{k+1}=x^k+\alpha _k d^k\), which converges to a Pareto critical point under some assumptions. A suitable sub-problem is formulated at every iterate \(x^k\), whose solution is the direction vector \(d^k\), and the step length \(\alpha _k\) is determined using Armijo-like conditions. The BFGS update formula is modified to generate a sequence of positive definite matrices to ensure the descent property. All the above-stated methods are gradient-based algorithms and applicable only if all functions of MP are continuously differentiable.

In recent years, some line search techniques have been developed for nonsmooth MP, in which all the functions may not be differentiable. Recently the concept of subdifferential has been used by Tanabe et. al [22] to develop the proximal gradient method for a specific class of optimization problems, in which each objective function is composed of two parts. One part is a proper convex function that is lower semicontinuous but not necessarily differentiable. The other part is a continuously differentiable function. The subproblem at every iterating point takes care of the first-order information of the differentiable function. The proximal sub-gradient method is also extended by Bento et. al [23] to constrained MP, in which the subproblems are constructed using one element of the subdifferential set. Da Cruz Neto et al. [24] develop a subgradient method for a class of MP, in which all the objective functions are quasi-convex, locally Lipschitz functions, and not necessarily differentiable. These papers have studied many theoretical results related to the existence of solutions to MP using subdifferential concepts.

The present work aims to develop an iterative process for MP in which the gradient information of all the functions is unavailable. The basic concept of this method is a variation of the existing gradient-based methods cited above. First, the direction vector at every iterate is obtained as the solution of a subproblem using sub-differentials of the objective functions, which are not differentiable. The step-length selection process is provided to ensure the descent property of every objective function along this direction. Moreover, under some standard assumptions, the global convergence of this iterative process is justified.

The following is an outline for the paper. In Sect. 2, certain prerequisites pertaining to MP are described. Section 3 develops some theoretical results for converging the iterative process. In Sect. 4, the methodology is verified in some numerical examples.

2 Prerequisites

Throughout this manuscript, we use the following notations.

MP: Multi-objective programming

SP: Single objective programming

PF: Pareto front

PO: Pareto optimal

PC: Pareto critical

\(\Lambda _m=\{1,2,3,\ldots ,m\}\), \(\mathbb {R}_+^m:= \{a \in \mathbb {R}^ m| \hspace{.1cm} 0\le a_i ~\text {for all}~ i\in \Lambda _m \}\)

\(\mathbb {R}_{++}^m:= \{a \in \mathbb {R}^ m| \hspace{.1cm} 0<a_i~\text {for all}~ i\in \Lambda _m\}\).

For \(a^1,a^2 \in \mathbb {R}^m\), the vector inequalities are defined as:

\( a^2\ge a^1 \hspace{.2cm} \Leftrightarrow \hspace{.2cm} a^2-a^1\in \mathbb {R}_+^m \backslash \{0\}\) and \( a^2>a^1 \hspace{.2cm} \Leftrightarrow \hspace{.2cm} a^2-a^1\in \mathbb {R}_{++}^m\).

\(\mathscr {F}: \mathbb {R}^n\rightarrow \mathbb {R}^m\) is called a convex vector function if for each \(y,z \in \mathbb {R}^n, b \in [0, 1]\)

\(\mathscr {F}: \mathbb {R}^n\rightarrow \mathbb {R}^m\) is called a strict convex vector function if for each \(y, z \in \mathbb {R}^n, ~b \in (0, 1)\)

Subdifferential of a convex function \(f_i\) on \(\mathbb {R}^n\) at x is defined as

The column vector \(g_i:=g_i(x)\) is the subgradient of \(f_i\) at x. If \(f_i\) is differentiable then \(g_i=\nabla f_i(x)\).

Subdifferential of a convex vector function \(\mathscr {F}: \mathbb {R}^n\rightarrow \mathbb {R}^m\) at x is

Here \(L(\mathbb {R}^n, \mathbb {R}^m)\) represents the space of linear continuous mappings from \(\mathbb {R}^n\) into \(\mathbb {R}^m\), it is also known as the \((m \times n)\) real matrix space. If all \(f_i\) are differentiable at x then \(J\mathscr {F}(x)=\partial \mathscr {F}(x)\), \(J\mathscr {F}(x)\) represents the Jacobian of \(\mathscr {F}\) at point x. In fact the matrix \(A\in \partial \mathscr {F}(x)\) is \( ({g_1},{g_2},\ldots {g_m} )^T\).

Let every \(f_i\) be locally Lipschitz continuous at y. The Clarke generalized directional derivative of the function \(f_i\) at y along the direction of \(d \in \mathbb {R}^n\) is stated as follows.

The subsequent symbols are employed throughout this manuscript. \(d_x\) is the direction vector at x, \(d_{x^k}\) is the direction vector at \(x^k\), \(g_i:=g_i(x)\in \partial f_i(x), ~i \in \Lambda _m~\) and \(g_i^k:=g_i(x^k)\in \partial f_i(x^k),~ i \in \Lambda _m\).

3 Methodology

A point \(x^*\) is called the PO solution of MP if no other point exists x such that \(\mathscr {F}(x) \le \mathscr {F}(x^*)\) and \(\mathscr {F}(x) \ne \mathscr {F}(x^*)\), and \(x^*\) is said to be the weak PO solution of MP if no other point exists x for which \(\mathscr {F}(x) < \mathscr {F}(x^*)\). Suppose \(x^*\) is not a PO point of MP. Then \(\exists \) \(x\in \mathbb {R}^n\) such that \(\mathscr {F}(x)\le \mathscr {F}(x^*)\) and \(\mathscr {F}(x)\ne \mathscr {F}(x^*)\). Using the subdifferential’s definition of \(\mathscr {F}\) at \(x^*\),

For \(x=x^*+\alpha d\), where \(\alpha >0\) and the direction vector d at \(x^*\), the above inequality becomes \(A d\le 0\). Hence, if \(x^*\) is not a PO point then there exists \( d \in \mathbb {R}^n\) such that \(Ad \in -\mathbb {R}_{+}^m-\{0\} \text {for all}~ A \in \partial \mathscr {F}(x^*)\). A point \(x^*\) is a PO point if \(\exists \) \( d\in \mathbb {R}^n,~ Ad\notin -\mathbb {R}^m_{+}-\{0\} \) for some \(A\in \partial \mathscr {F}(x^*)\). Therefore if \(\text {for all}~ d\in \mathbb {R}^n,\hspace{.1cm} A d \notin -\mathbb {R}_{++}^m, \hspace{.1cm} \text{ for } \text{ some } \hspace{.1cm} A \in \partial \mathscr {F}(x^*)\) then \(x^*\) is a local weak PO point of MP. We say a point that satisfies this necessary condition is a PC point. Hence, the definition of the PC point defined in [25] is similar to the one that follows.

Definition 1

A point \(x^*\in \mathbb {R}^n\) is said to be a PC point of MP if \(\exists \) \(A\in \partial \mathscr {F}(x^*)\) so that \((Ad)_i\ge 0\) for at least one \(i \in \Lambda _m\) and \(\text {for all}~ d\in \mathbb {R}^n\), where \((Ad)_i\) denotes the \(i^{th}\) component of vector Ad.

Theorem 1

If \(\mathscr {F}\) is a convex vector function then the PC point of MP is a weak PO point. If \(\mathscr {F}\) is a strictly convex vector function then the PC point of MP is a PO point.

Proof

Suppose \(\mathscr {F}\) is a convex vector function and \(x^*\) is a PC point of MP. If possible, \(x^*\) is not a weak PO point. It is consequent that there exists \(y \in \mathbb {R}^n\), \(y\ne x^*\) such that \(f_i(y)<f_i(x^*)~ ~ \text {for all}~ i \in \Lambda _m\). Therefore

Hence \(\exists \) \(d~(=y-x^*)\) such that \(Ad<0~~ \text {for all}~ A\in \partial \mathscr {F}(x^*) \). This implies \(x^*\) is not a PC point, which follows from Definition 1. So \(x^*\) is a weak PO point.

Suppose \(\mathscr {F}\) is a strictly convex vector function and \(x^*\) is a PC point of MP. If possible, suppose \(x^*\) is not a PO point. Therefore, \(\exists \) a \(y \in \mathbb {R}^n\) such that \(f_i(y)\le f_i(x)\hspace{.1cm} \forall ~ i \in \Lambda _m\). Since all \(f_i\) are strictly convex functions, for every \(i\in \Lambda _m\),

Consequently, \( \text {for all}~ i\in \Lambda _m ~\text {and} ~ \text {for all}~ g_i\in \partial f_i(x^*),\)

This implies \(g_i^T(y-x^*)<0\) \(\forall i\in \Lambda _m\). Hence \(\exists \) \(d=y-x^*\) such that \(Ad<0~~\forall A \in \partial \mathscr {F}(x^*)\). This contradicts the idea that x is a PC point. So \(x^*\) must be a PO point. \(\square \)

For \(x \in \mathbb {R}^n\) define \(\mathscr {F}_x: \mathbb {R}^n \rightarrow \mathbb {R}\) as

and consider a subproblem at x as

A simple reformulation of the above subproblem is

Solution of \((P_x)\): \((P_x)\) is a convex quadratic semi-infinite programming problem characterized by linear inequality constraints. It is difficult to solve a general semi-infinite programming problem. However, with a known subdifferential set, some heuristic approaches can solve \((P_x)\) using the discretization process over the sudifferential set. The MATLAB command ’fseminf’ also takes care of the discretization process, as discussed in Subsection 3.1. This heuristic approach can be implemented if the subdifferential set is known in advance. To compute the subdifferential set, a separate methodology has to be developed for general nonsmooth functions, which is beside the context of the current work. The proposed methodology focuses only on the theoretical developments behind an iterative process based on a line search technique for MP using subdifferential set information.

Theorem 2

Let \((d_x,t_x)\) be the unique solution of the Subproblem \((P_x)\) and \(v_x\) be the optimal value.

-

1.

For any \(x\in \mathbb {R}^n,\) optimal value of \((P_x)\) is non positive and vanishes if x is a PC point of MP.

-

2.

If x is a PC point of MP then \((t_x,d_x)=(0,0)\) is the solution of \((P_x)\).

-

3.

If x is not a PC then \(v_x<0\) and \( \mathscr {F}_x(d_x) \le -(1 / 2)\Vert d_x\Vert ^2<0.\)

-

4.

The mappings \(x \rightarrow d_x\) and \(x\rightarrow v_x\) are continuous.

Proof

-

1.

For any \(x\in \mathbb {R}^n,\) suppose \(v_x\) is the optimal value of \((P_x)\), which is acquired at the optimal solution \((t_x,d_x)\). We have \( \mathscr {F}_x(d_x)+\frac{1}{2}\Vert d_x\Vert ^2\le \mathscr {F}_x(d)+\frac{1}{2}\Vert d\Vert ^2~\forall d\in \mathbb {R}^n \). At \(d=0,\) \(\mathscr {F}_x(d)+\frac{1}{2}\Vert d\Vert ^2=0\). Hence \( \mathscr {F}_x(d_x)+\frac{1}{2}\Vert d_x\Vert ^2\le 0\). That is, \(v_x\le 0\). Suppose x is a PC point for MP and \(v_x<0\). That is, \( \mathscr {F}_x(d_x)+\frac{1}{2}\Vert d_x\Vert ^2<0\) or \(\mathscr {F}_x(d_x)<0\). So \(Ad_x<0~ ~ \forall A\in \partial \mathscr {F}(x^*)\), which follows from the definition of \(\mathscr {F}_x(d_x)\). This contradicts that x is the PC point for which \(Ad\notin -\mathbb {R}^m_{++}\) for some \(A\in \partial \mathscr {F}(x)\) and \(\forall d\in \mathbb {R}^n\). Hence \(v_x=0\).

-

2.

From (1), \(v_x=0\) at the PC point x and \((t_x,d_x)\) is the solution of \((P_x)\), so \(t_x+\frac{1}{2}\Vert d_x\Vert ^2=0.\) That is,

$$\begin{aligned} \mathscr {F}_x(d_x)\le t_x\le t_x+\frac{1}{2}\Vert d_x\Vert ^2=0 \end{aligned}$$Since x is a PC point so \(\mathscr {F}_x(d_x)\ge 0\). Hence \(t_x=0, ~d_x=0.\)

-

3.

Consider x to be a non-PC point. It follows that there is \(d\in \mathbb {R}^n\) such that \(Ad<0\) \(\forall A\in \partial \mathscr {F}(x)\). That is, \(\mathscr {F}_x(d)<0\) for some \(d \in \mathbb {R}^n\). Since \(\mathscr {F}_x(d)\) is positive homogeneous of degree 1 we can consider \(\frac{\mathscr {F}_x(d)}{\Vert d\Vert ^2}\), denote \(\overline{\mathscr {F}_x(d)}=-\frac{\mathscr {F}_x(d)}{\Vert d\Vert ^2}\), and \(\bar{d}=\overline{\mathscr {F}_x(d)}.~d\). Hence

$$\begin{aligned} \begin{aligned} \mathscr {F}_x({\bar{d}})+\frac{1}{2}\Vert {\bar{d}}\Vert ^2&=\overline{\mathscr {F}_x(d)}.\mathscr {F}_x(d)+\frac{1}{2}(\overline{\mathscr {F}_x(d)})^2\Vert d\Vert ^2\\&= - \frac{(\mathscr {F}_x(d))^2}{\Vert d\Vert ^2}+\frac{1}{2}\Bigl (-\frac{\mathscr {F}_x(d)}{\Vert d\Vert ^2}\Bigl )^2 \Vert d\Vert ^2\\&= -\frac{1}{2}\frac{(\mathscr {F}_x(d))^2}{\Vert d\Vert ^2}\\&< 0. \end{aligned} \end{aligned}$$Hence \(v_x<0\). This implies \(\underset{d\in \mathbb {R}^n}{\min } \mathscr {F}_x(d)+\frac{1}{2}\Vert d\Vert ^2~<~0\). That is, \(\exists \) \(d\in \mathbb {R}^n\) such that \(\mathscr {F}_x(d)+\frac{1}{2}\Vert d\Vert ^2<0\). The result follows.

-

4.

For \(\epsilon >0\) and \(\bar{x} \in \mathbb {R}^n\), consider the set \( S(\bar{x}):=\left\{ d \in \mathbb {R}^n \mid \left\| d_{\bar{x}}-d\right\| =\epsilon \right\} . \) Let \(d_{\bar{x}}\) be the minimizer for the Subproblem (1) at \(\bar{x}\). The objective function of Subproblem (1) is strongly convex with a modulus of 1/2 since \(\mathscr {F}_x(\cdot )\) is a convex function. Therefore,

$$\begin{aligned} \mathscr {F}_{\bar{x}}(d)+(1/2)\Vert d\Vert ^2 \geqslant \mathscr {F}_{\bar{x}}\left( d_{\bar{x}}\right) +(1 / 2)\left\| d_{\bar{x}}\right\| ^2+(1 / 2) \epsilon ^2 \quad \forall d \in S(\bar{x}). \end{aligned}$$Since \(S(\bar{x})\) is a compact set and \(\mathscr {F}_x(d)\) is a continuous function, \(\exists \) \(\delta >0\) such that

$$\begin{aligned} \mathscr {F}_x(d)+(1 / 2)\Vert d\Vert ^2>\mathscr {F}_x\left( d_{\bar{x}}\right) +(1 / 2)\left\| d_{\bar{x}}\right\| ^2 ~~\forall d \in S, ~\text {whenever}~ \left\| x-\bar{x}\right\| \leqslant \delta . \end{aligned}$$Since \(\mathscr {F}_x(d)+(1 / 2)\Vert d\Vert ^2\) is a convex function and \(d_x\) is the minimizer of \(\mathscr {F}_x(d)+(1 / 2)\Vert d\Vert ^2\), therefore, from the above inequality we can conclude that \(d_x\) does not lie in the region \(\Vert d-d_{\bar{x}}\Vert >\epsilon \) for \(\Vert x-\bar{x}\Vert \le \delta \). Hence \(\Vert d_x-d_{\bar{x}}\Vert \le \epsilon \). This justifies that \(d_x\) is continuous. For the second part, it is sufficient to prove that \(v_x\) is continuous on every compact subset of \(\mathbb {R}^n\). Consider a function \(\gamma _x^i:U\rightarrow \mathbb {R}\) at every \(x\in \mathbb {R}^n\), where U is an arbitrary compact subset of \(\mathbb {R}^n\), \(i=1,2,3,\ldots ,m\), as

$$\begin{aligned} \gamma _x^i(z)=\underset{A\in \partial \mathscr {F}(z)}{\max }\{(Ad_x)_i\}+\frac{1}{2}\Vert d_x\Vert ^2 \end{aligned}$$and

$$\begin{aligned} \Gamma _x(z)=\max \{\gamma _x^1(z),\gamma _x^2(z),\ldots ,\gamma _x^m(z)\}, \end{aligned}$$where \(z\in U\). For every \(x\in \mathbb {R}^n,\) \(\gamma _x^i\) is a continuous function for each i and, hence \(\Gamma _x\) is a continuous function. Therefore for \(\epsilon >0\), \(\exists \) \(\delta >0\) such that for all \( y,z\in U\), \(\Vert y-z\Vert<\delta \implies |\Gamma _x(y)-\Gamma _x(z)|<\epsilon .\) From the Subproblem (1),

$$\begin{aligned} \begin{aligned} v_z= \mathscr {F}_z(d_z)+\frac{1}{2}\Vert d_z\Vert ^2&\le \mathscr {F}_z(d_y)+\frac{1}{2}\Vert d_y\Vert ^2,~~ \text {where}~d_y\ne d_z\\&= \underset{A\in \partial \mathscr {F}(z)}{\max }\{(Ad_y)_i~:~i=1,2,\ldots ,m\}+\frac{1}{2}\Vert d_y\Vert ^2\\&=\Gamma _y(z)\le \Gamma _y(y)+|\Gamma _y(z)-\Gamma _y(y)| \\&\le v_y+\epsilon , ~~\text {when}~\Vert y-z\Vert <\delta \end{aligned} \end{aligned}$$That is, \(v_z-v_y<\epsilon \) whenever \(\Vert y-z\Vert <\delta \). Similarly interchanging y and z in the above inequality, we get \(v_y-v_z<\epsilon \) whenever \(\Vert y-z\Vert \le \delta \). Hence \(|v_y-v_z|<\epsilon \) whenever \(\Vert y-z\Vert <\delta \). So \(v_x\) is continuous.

\(\square \)

The subsequent outcome is proved to compute the step length \(\alpha \) like Armijo type condition. This result justifies the descent property of every objective function.

Lemma 1

Suppose \(\mathscr {F}\) be a convex vector function and \(\beta \in (0,1)\). If x is not a PC point, then there exists a positive value \(\hat{\varvec{\alpha }} \) satisfies,

where \(\hat{1}\) is the vector of dimension \(n\times 1\) and all of its entries are equal to 1.

Proof

Given that x does not represent a PC point, the directional derivative for every objective function \(f_i\) at x along the direction \(d_x\) is

That is,

Hence \(\exists \) \(\hat{\varvec{\alpha }} > 0\) such that

holds for every \( i \in \Lambda _m\). Since \((t_x,d_x)\) is the solution of the subproblem \((P_x)\) at x, so \(f_i(x+\alpha d_x)-f_i(x)\le \alpha \beta t_x ~~ \forall \alpha \in (0, \hat{\varvec{\alpha }}) \) holds for each \( i \in \Lambda _m\). This implies \(\mathscr {F}(x+\alpha d_x)\le \mathscr {F}(x)+\alpha \beta t_x {\hat{1}} ~~\forall \alpha \in (0, \hat{\varvec{\alpha }}).\) \(\square \)

In the remaining part of the manuscript, we utilize the notations: \(d^k:=d_{x^k}\), \( t_k:=t_{x^k}\), \(A_k:=A\in \partial \mathscr {F}(x^k)\) and \(f_i^k:=f_i(x^k)\).

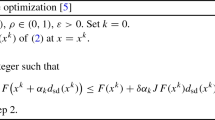

Theorem 3

(Convergence property) Suppose \(\mathscr {F}:\mathbb {R}^n\rightarrow \mathbb {R}^m\) be a convex vector function and \(\left\{ x^k\right\} \) be a sequence generated as \(x^{k+1}=x^k+\alpha _kd^k\) starting with the initial point \(x^0\), \(d^k\) is an approximate solution of the Subproblem (1) and \(\alpha _k\) corresponds to the first element of \(\left\{ \frac{1}{2}, \frac{1}{2^2},\frac{1}{2^3}, \ldots \right\} \) satisfying

for some \(\beta \in (0,1)\). Further, suppose \(M^0=\left\{ x \in \mathbb {R}^n: \mathscr {F}(x)\le \mathscr {F}\left( x^0\right) \right\} \) is convex and bounded, \(f_i(x)\) is bounded below for every \(i \in \Lambda _m\). Then every accumulation point of \(\left\{ x^k\right\} \) is a PC point of (MP).

Proof

If Inequality (2) holds then \(\exists \) at least one \(i \in \Lambda _m\) such that

Taking \(\omega \rightarrow \infty \), \(f_i'(x^k;d^k)\ge \beta t_k \). That is,

From the constraints of the Subproblem \((P_x)\) we have \((A_kd^k)_i\le t_k, \forall A_k\in \partial \mathscr {F}(x^k), ~\forall i\in \Lambda _m\). This implies \( {g_i^k}^Td^k\le t_k~~\forall g_i^k \in \partial f_i(x^k), \forall i \in \Lambda _m\). Hence

Using the above relation and (3),

Hence \( \underset{g_i^k \in \partial f_i(x^k)}{\max }\ {g_i^k}^Td^k\ge 0\) for at least one \(i\in \Lambda _m\). This contradicts \(A_k(d^k)<0\) \(\forall A_k\in \partial \mathscr {F}(x^k)\).

Hence \(\exists \) some \(\hat{\varvec{\alpha }}>0\) such that \( \hat{\varvec{\alpha }}\le \alpha _k\) holds for each k. Since \(x^k\) is not PC point so \(\underset{i\in \Lambda _m}{\max }(A_k(d^k))_i< 0\) and from Theorem 2-(3), \(t_k\le -\frac{1}{2}\Vert d^k\Vert ^2\). Substituting this Inequality in (2),

\(\left\{ f_i(x^k)\right\} \) is a monotonically decreasing sequence and bounded below. Let \(\{f_i(x^k)\}\) converges to some \(f_i^*\) as \(k \rightarrow \infty \). Taking \(k \rightarrow \infty \) in Inequality(4). We have,

Suppose \(\Vert d^j\Vert \ne 0\) for all j and \(\underset{j\in \mathbb {N}}{\inf } ~\Vert d^j\Vert =m\). Then

This is not possible since \(\beta \ne 0\) and \(m\ne 0\). So \(d^k \rightarrow 0\) as \(k \rightarrow \infty \). Hence \(t_k \rightarrow 0\) as \(k \rightarrow \infty \).

Since \(t_k={\max }\{(A_k d^k)_1,(A_k d^k)_2, (A_k d^k)_3,\ldots , (A_k d^k)_m~~\forall A_k \in \partial \mathscr {F}(x^k)\} \),

\(\exists \) \(g_i^k \in \partial f_i(x^k)\) such that \({g_i^k}^Td^k \rightarrow 0\) as \( k \rightarrow \infty .\) Since \(M^0\) is a bounded set, \(\left\{ x^k\right\} \) must have at least one accumulation point. Hence \(\exists \) a sub sequence \(\{x^{k_l}\}\) such that \(x^{k_l}\rightarrow x^*\) and \(\mathop {lim}\nolimits _{k_l \rightarrow \infty }d^{k_l}= 0\) and \(\mathop {lim}\nolimits _{k_l \rightarrow \infty }t^{k_l}=0\). That is, \(x^*\) is a PC point of MP. \(\square \)

From Theorem 2-(2), one can conclude that if at \(x^k\), \(t_k\ne 0, ~d^k\ne 0\) then \(x^k\) is not a PC point. In this situation, the next iterating point should be calculated. Note that since \(v_{x^k}\) is non-positive, from Theorem 2-(3), one can conclude that either \(v_{x^k}=0\) (or \(|v_{x^k}|=0\) ) or \(\mathscr {F}_x(d_x)= 0\). Then \(x^k\) is a PC point, and iterating process can stop here.

Observe that if the point \( x^{k}\) is not a PC point, then using the Theorem (2)-(3),

Hence \(T_k:=\{\alpha =1 / 2^j \mid j \in \mathbb {N}, \mathscr {F}(x^k+\alpha d^k)\le \mathscr {F}(x^k)+\beta \alpha t_k \hat{1}\} \ne \emptyset \). When \(\mathscr {F}\) is a scalar-valued differentiable function, the determination of the step length is reduced to an Armijo-like rule. The steps of this method are demonstrated through the following examples.

4 Numerical examples

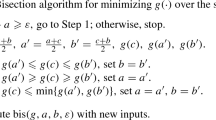

The theoretical results of the previous section can be summarized in the following steps.

-

Starting at any point \(x_0\), a sequence \(\{x^k\}\) is generated in the proposed methodology, which converges to a PC point. Convergence of the sequence is guaranteed in Theorem 3.

-

\((t_k, d^k)\) is the solution of the Subproblem \((P_x)\) and \(\alpha _k\) satisfies (2) at every iterating point \(x^k\), corresponding to the first element of \(\{\frac{1}{2},\frac{1}{2^2},\frac{1}{2^3},\ldots \}\) for some \(0<\beta <1\). Thus, the iterative process can be generated as \(x^{k+1}=x^k+\alpha _kd^k.\)

-

From Theorem 2, it is clear that if \(x^k\) is a PC point then the solution \((t_{x^k},d_{x^k})\) of the Subproblem \((P_x)\) at \(x^k\) satisfies \((t_{x^k},d_{x^k})=(0,0)\) or \(v_{x^k}=0\). The converse part of this result can be justified as follows. From Theorem 2(3), one can conclude that if \(v_{x^k}\ge 0\) or \(F_{x^k}(d_{x^k})\ge 0\) then \(x^k\) is a PC point. From Theorem 2(1), \(v_{x^k}\le 0\) for all \(x^k\). Hence \(v_{x^k}\)=0. This implies \((t_{x^k},d_{x^k})=(0,0)\). Therefore, \(x^k\) is a PC point if and only if \(v_{x^k}= 0\) or \((t_{x^k},d_{x^k})=(0,0)\). In a practical situation, an error term \(\epsilon \) can be considered so that \(|v_{x^k}|<\epsilon \).

Example 1

Consider an optimization problem: \( \underset{x\in \mathbb {R}}{\min } ~ \mathscr {F}(x)\), where \({\mathscr {F}}(x)=(f_1(x), f_2(x))^T\), \(f_1(x)=|x|\) and \(f_2(x)= |x|+|x+1|+|x+2|\). The objective functions are not differentiable everywhere. Hence this problem can not be solved by the existing line search techniques proposed in the papers, which are stated in the introduction section. From Fig. 1, it is clear that the PO set is \([-1,0]\). Using the proposed methodology, this can be verified as follows.

Set of solutions of Example 1

Consider \( x^0=-2\).

\(\mathscr {F}\) is not differentiable at \(x^0\) and \(\partial \mathscr {F}(x^0)=\biggl \{\begin{pmatrix} -1 \\ a \end{pmatrix}:~~ a\in [-3,-1]\biggr \}\). To compute the descent direction \(d^0\), consider

which is similar to

The subproblem is solved using the MATLAB command “optimproblem" after discretizing the value of a. The solution of this subproblem is found as \(d_{x^0}=1\) and \(t_{x^0}=-1\) and the optimal value is \(v(x^0)=-0.5\). Since \(v(x^0)<0\) so \(x^0\) is not a PC point, which can also be verified from Figure 1 as in the neighbourhood of \(x^0\), both \(f_1\) and \(f_2\) are decreasing. For \(\beta =1/2 \),

\(x^1=x^0+\alpha _1d^1= -1.5\) and \(\mathscr {F}(x^1)=\begin{pmatrix} 1.5\\ 2.5 \end{pmatrix}\). Here \(\mathscr {F}(x^1) < \mathscr {F}(x^0)\). Other points are computed in a similar manner. At \(x^1\), both the functions are differentiable. \(\partial \mathscr {F}(x^1)=\Big \{\begin{pmatrix} -1\\ {}-1 \end{pmatrix}\Big \}\). \((P_{x^1})\) is a convex quadratic problem which has unique solution \((t_1,d^1)=(-1,1)\), optimal value is \(v(x^1)=-0.5\) and \(\alpha _1=\frac{1}{2}\). Hence \(x^2=x^1+\alpha _1d^1=-1\).

At \(x^2\), \(\partial \mathscr {F}(x^1)=\Big \{\begin{pmatrix} -1\\ a \end{pmatrix}|~a\in [-1,1]\Big \}\) and \((P_{x^2})=\left\{ \begin{array}{l} \underset{t, d\in \mathbb {R}}{\min }~t+\frac{1}{2} \Vert d\Vert ^2 \\ s. t. -d \le t \\ \text {and}\,\, ad\le t, \forall a\in [-1,1] \end{array} \right. \).

Solution of this subproblem \((P_{x^2})\) is \((t_1,d^2)=(3.2195\times 10^{-4},~3.2195\times 10^{-4})\) and optimal value is \(v(x^2)\approx 3.2201\times 10^{-4}.\) Hence \(x^2\) is an approximate PC point. One can also verify this from Fig. 1.

The set of all PC points and efficient frontier can be determined by repeating the same process with different initial points and a suitable spreading technique. In Fig. 2a, the PF generated by this methodology is compared with the PF generated by NSGA-II. One may observe that both are approximately the same.

Example 2

Consider the problem.

\( \underset{x\in \mathbb {R}^2}{\min } ~ \mathscr {F}(x)= \underset{x\in \mathbb {R}^2}{\min }((x_1-1)^2+(x_2-1)^2, x_1^2+|x_2|)\) where \(f_1(x)=(x_1-1)^2+(x_2-1)^2\) and \(f_2(x)=x_1^2+|x_2|\).

The steps of the proposed methodology for this example are summarized in the following Table 1.

Using the proposed methodology, the solution to this problem is found as \(x^4=\begin{pmatrix} 0.5911\\ 0.64598 \end{pmatrix}\), starting with an initial point \(x^0=\begin{pmatrix} 1.5\\ 0 \end{pmatrix}\). In Fig. 2b, the PF generated by this methodology is compared with the PF generated by NSGA-II. One may observe that both are approximately the same.

Some more problems are solved in a similar way using the proposed methodology and summarized in Table 2. The objective functions of these problems are borrowed from [26,27,28].

All these problems are nonsmooth and convex. The proposed method is implemented in MATLAB. To find the solution to the subproblem at every iteration, first, we created the subproblem using the MATLAB command ’optimproblem’. This is a semi-infinite programming problem, which is solved using the discretization process and then solved using the MATLAB command ’solve’. We have observed that the solution of the subproblem using the MATLAB command ’fseminf’ provided the same solution set by solving the subproblem using the commands ’optimproblem’ and ’solve’ with discretization. The pre-specified constant \(\beta \) and the stopping parameter are taken \(\frac{1}{2}\) and \(10^{-3}\) respectively. In each case, we have verified that the PF is generated using the proposed methodology with the NSGA-II method.

5 Concluding remarks

A methodology for unconstrained MP, which uses the subdifferential information of the nonsmooth objective functions, is proposed in this paper. The iterative process develops a sequence, converging to the PC point under reasonable assumptions, preserving the descent property. The proposed methodology is applied in a set of numerical examples. However, getting information on the subdifferential set of all types of functions isn’t easy. Some computational processes should be developed to generate the subdifferential set to determine the descent direction explicitly. Generating a well-distributed Pareto front is also a challenge to this aspect. These are the possible future scope of the current work

Data Availability

No datasets were generated or analysed during the current study.

References

Tammer, C., Weidner, P.: Scalarization and Separation by Translation Invariant Functions. Springer, Cham (2020)

Miettinen, K., Mäkelä, M.: Interactive bundle-based method for nondifferentiable multiobjeective optimization: Nimbus. Optimization 34(3), 231–246 (1995)

Miettinen, K., Mäkelä, M.M.: Comparative evaluation of some interactive reference point-based methods for multi-objective optimisation. J. Oper. Res. Soc. 50(9), 949–959 (1999)

Laumanns, M., Thiele, L., Deb, K., Zitzler, E.: Combining convergence and diversity in evolutionary multiobjective optimization. Evol. Comput. 10(3), 263–282 (2002)

Mostaghim, S., Branke, J., Schmeck, H.: Multi-objective particle swarm optimization on computer grids. In: Proceedings of the 9th Annual Conference on Genetic and Evolutionary Computation, pp. 869–875 (2007)

Coello, C.A.C.: Evolutionary Algorithms for Solving Multi-objective Problems. Springer, New York (2007)

Deb, K.: Recent advances in evolutionary multi-criterion optimization (emo). In: Proceedings of the Genetic and Evolutionary Computation Conference Companion, pp. 702–735 (2017)

Deb, K., Jain, H.: An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part i: solving problems with box constraints. IEEE Trans. Evol. Comput. 18(4), 577–601 (2013)

Seada, H., Deb, K.: Non-dominated sorting based multi/many-objective optimization: Two decades of research and application. Multi-Objective Optimization: Evolutionary to Hybrid Framework, 1–24 (2018)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Methods Oper. Res. 51, 479–494 (2000)

Drummond, L.G., Svaiter, B.F.: A steepest descent method for vector optimization. J. Comput. Appl. Math. 175(2), 395–414 (2005)

Fukuda, E.H., Drummond, L.G.: On the convergence of the projected gradient method for vector optimization. Optimization 60(8–9), 1009–1021 (2011)

Drummond, L.G., Iusem, A.N.: A projected gradient method for vector optimization problems. Comput. Optim. Appl. 28, 5–29 (2004)

Fliege, J., Drummond, L.G., Svaiter, B.F.: Newton’s method for multiobjective optimization. SIAM J. Optim. 20(2), 602–626 (2009)

Qu, S., Goh, M., Chan, F.T.: Quasi-newton methods for solving multiobjective optimization. Oper. Res. Lett. 39(5), 397–399 (2011)

Mishra, S.K., Panda, G., Ansary, M.A.T., Ram, B.: On q-newton’s method for unconstrained multiobjective optimization problems. J. Appl. Math. Comput. 63, 391–410 (2020)

Lai, K.K., Mishra, S.K., Panda, G., Ansary, M.A.T., Ram, B.: On q-steepest descent method for unconstrained multiobjective optimization problems. AIMS Math. 5(6), 5521–5540 (2020)

Kumar, S., Ansary, M.A.T., Mahato, N.K., Ghosh, D., Shehu, Y.: Newton’s method for uncertain multiobjective optimization problems under finite uncertainty sets. J. Nonlinear Var. Anal. 7(5) (2023)

Fliege, J., Vaz, A.I.F.: A method for constrained multiobjective optimization based on sqp techniques. SIAM J. Optim. 26(4), 2091–2119 (2016)

Ansary, M.A.T., Panda, G.: A sequential quadratically constrained quadratic programming technique for a multi-objective optimization problem. Eng. Optim. 51(1), 22–41 (2019)

Ansary, M.A.T., Panda, G.: A globally convergent sqcqp method for multiobjective optimization problems. SIAM J. Optim. 31(1), 91–113 (2021)

Tanabe, H., Fukuda, E.H., Yamashita, N.: Proximal gradient methods for multiobjective optimization and their applications. Comput. Optim. Appl. 72, 339–361 (2019)

Bento, G.D.C., Cruz Neto, J.X., López, G., Soubeyran, A., Souza, J.: The proximal point method for locally lipschitz functions in multiobjective optimization with application to the compromise problem. SIAM J. Optim. 28(2), 1104–1120 (2018)

Da Cruz Neto, J.X., Da Silva, G., Ferreira, O.P., Lopes, J.O.: A subgradient method for multiobjective optimization. Comput. Optim. Appl. 54(3), 461–472 (2013)

Ansary, M.A.T.: A newton-type proximal gradient method for nonlinear multi-objective optimization problems. Optim. Methods Softw. 38, 570–590 (2023)

Lukšan, L., Vlcek, J.: Test problems for nonsmooth unconstrained and linearly constrained optimization. Technical report (2000)

Montonen, O., Karmitsa, N., Mäkelä, M.: Multiple subgradient descent bundle method for convex nonsmooth multiobjective optimization. Optimization 67(1), 139–158 (2018)

Gebken, B., Peitz, S.: An efficient descent method for locally lipschitz multiobjective optimization problems. J. Optim. Theory Appl. 188, 696–723 (2021)

Acknowledgements

The authors wish to extend their heartfelt appreciation to the anonymous referees for their insightful comments and valuable feedback, which improved the quality and clarity of this paper.

Author information

Authors and Affiliations

Contributions

Both the authors have equal contribution to this work.

Corresponding author

Ethics declarations

Conflict of interest

No potential conflict of interest have been declared by the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, D., Panda, G. A line search technique for a class of multi-objective optimization problems using subgradient. Positivity 28, 34 (2024). https://doi.org/10.1007/s11117-024-01051-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11117-024-01051-6

Keywords

- Line search technique

- Convex optimization

- Pareto optimal point

- Multi-objective optimization

- Sub-gradient method