Abstract

We consider weak sharp solutions for the generalized variational inequality problem, in which the underlying mapping is set-valued, and not necessarily monotone. We extend the concept of weak sharpness to this more general framework, and establish some of its characterizations. We establish connections between weak sharpness and (1) gap functions for variational inequalities, and (2) global error bound. When the solution set is weak sharp, we prove finite convergence of the sequence generated by an arbitrary algorithm, for the monotone set-valued case, as well as for the case in which the underlying set-valued map is either Lipschitz continuous in the set-valued sense, for infinite dimensional spaces, or inner-semicontinuous when the space is finite dimensional.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

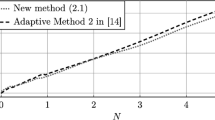

In 1998, Marcotte and Zhu [14] introduced the concept of weak sharp solutions of a point-to-point variational inequality. They derived the necessary and sufficient condition for a solution set to be weakly sharp, and also studied the finite convergence of iterative algorithms for solving variational inequalities whose solution set is weakly sharp. The finite convergence of an iterative algorithm means that the algorithm finds a solution in a finite number of iterates. Zhou and Wang [23] re-examined the unified treatment of finite termination of a class of iterative algorithms, and showed that some results given by Marcotte and Zhu [14] remain valid under more general assumptions. Wu and Wu [18] presented several equivalent (and sufficient) conditions for weak sharp solutions of a variational inequality in the setting of Hilbert spaces. They gave a finite convergence result for a class of algorithms for solving variational inequalities. Using the concept of a dual gap function, Zhang et al. [22] characterized the directional derivative and subdifferential of the dual gap function. Their analysis opens the way for a better understanding of the concepts of a global error bound, weak sharpness, and minimum principle sufficiency property for a variational inequality, where the operator is pseudo-monotone point-to-point. Xiu and Zhang [20] further studied finite convergence of a specific family of algorithms. They established the finite convergence of the proximal point algorithm when the solution set is weakly sharp.

If the objective function of a constrained optimization problem is neither convex nor differentiable, then the problem cannot be formulated as a point-to-point monotone variational inequality. In this case, generalized variational inequalities (i.e., with a non-monotone set-valued underlying mapping) are needed.

On the other hand, the literature addressing the case of weak sharp solutions for generalized variational inequalities (i.e., for set-valued variational inequalities) is very scarce, and, as far as we know, treats the maximally monotone case in finite dimensions, see, e.g., the recent work [19]. Under a weak-sharpness assumption, the latter paper shows finite termination for the set-valued maximally monotone case. To address this gap is the main aim of the present paper.

The paper is structured as follows: in the next section, we present the formulation of generalized variational inequality problem in which the underlying operator is a set-valued mapping. We also present some basic definitions and results from nonlinear and convex analysis, which will be used in the sequel. Section 3 deals with the notion of a gap function for generalized variational inequality problems. We investigate some properties of the gap functions and give some characterizations of the solution set of generalized variational inequality problems in terms of gap functions. In Sect. 4, on the lines of Marcotte and Zhu [14], we introduce the concept of weak sharp solution set for generalized variational inequalities. We provide some necessary and sufficient conditions in terms of a gap function for the solution set of the generalized variational inequality to be weak sharp. We give a necessary condition for a weak sharp solution set of a generalized variational inequality in terms of global error bound. In the last section, we prove finite termination of an iterative algorithm under weak sharpness of the solution set. We provide the finite termination result under two types of assumptions on the underlying set-valued map F: (1) when F is monotone, and (2) when F is continuous in the set-valued sense (see Definition 2).

The results of this paper extend and generalize corresponding results for variational inequalities studied in [14, 15, 23].

2 Formulations, preliminaries and basic definitions

Let H be a Hilbert space with inner product denoted by \(\langle \cdot ,\cdot \rangle \), and induced norm \(\Vert \cdot \Vert : H \rightarrow \mathbb {R}_+\), where \(\mathbb {R}_+\) is the set of nonnegative real numbers. Given C a nonempty closed convex subset of H and \(F : H \rightrightarrows H\) a set-valued mapping, the generalized variational inequality problem (GVIP) is stated as follows: find \(\bar{x} \in C\) and \(\bar{u} \in F(\bar{x})\) such that

An important particular case arises when \(F \equiv \partial f\), the subdifferential of a proper, lower semicontinuous and convex function \(f : H \rightarrow \mathbb {R}\cup \{ +\infty \}\), or the Clarke subdifferential [8] \(\partial f^{C}\) of a locally Lipschitz function \(f : H \rightarrow \mathbb {R}\cup \{ +\infty \}\). In these cases, the GVIP (1) provides a necessary and sufficient optimality condition for solving a convex/non-convex and non-smooth optimization problem. For further details on GVIP and their generalizations with applications, we refer to [1, 5, 10, 13, 16] and the references therein.

For a given nonempty subset C of H, we denote by \(\mathrm{int}C\), \(\mathrm{ri}C\), and \(\mathrm{cl}C\) the interior, the relative interior, and the closure of C, respectively. We denote by B(x, r) the open ball in H with center x and radius \(r>0\) and by B[x, r] the closed ball in H with center x and radius \(r>0\). The polar cone \(C^{\circ }\) of C is defined by

For a nonempty closed convex subset of \(\mathbb {R}^{n}\), the tangent cone to C at \(x \in C\) is defined by

By construction, \(T_{C}(x)\) is a nonempty, closed and convex cone. The normal cone to the set C at x is defined by

that is, \(N_{C}(x) := \left[ T_{C}(x) \right] ^{\circ }\). The distance from \(x\in H\) to C is given by

Let C be a nonempty closed convex subset of H. The projection of \(x \in H\) onto C is defined by

Let \(f : H \rightarrow \mathbb {R}\cup \{ +\infty \}\) be a proper, convex and lower semicontinuous function. The domain of f is denoted by \(\mathrm{dom}\,f\) and defined as

Fix \(d \in H\) and \(x\in \mathrm{dom}\,f\). Recall that the directional derivative of f at x in the direction d is defined by

Fix \(x\in \mathrm{dom}\,f\). The subdifferential of f at x is defined by

Let \(\varepsilon \ge 0\). The \(\varepsilon \) -subdifferential of f at x is defined by

Note that \(\partial _{\varepsilon } f(x) \supset \partial f(x) \) for all \(x \in \mathrm{dom}\,f\) and all \(\varepsilon \ge 0\).

Given a set-valued mapping \(F : H \rightrightarrows H\), its domain is denoted by D(F) and defined as

The graph of F is denoted by G(F) and defined as

Let \(C \subset H\) be nonempty. We denote by \(G_{C}(F)\) the graph of F restricted to C, namely,

A set-valued mapping \(F : H \rightrightarrows H\) is said to be

-

(a)

monotone if

$$\begin{aligned} \langle u-v, x-y \rangle \ge 0, \quad \mathrm{for\,all}\quad x, y \in H\quad \mathrm{and\,all}\quad u \in F(x), v \in F(y); \end{aligned}$$ -

(b)

pseudomonotone if for all \(x, y \in H\),

$$\begin{aligned} \langle u, y-x \rangle \ge 0 \quad \mathrm{for\,all}\quad u \in F(x) \quad \Rightarrow \quad \langle v, y-x \rangle \ge 0\quad \mathrm{for\,all}\quad v \in F(y). \end{aligned}$$ -

(c)

maximally monotone if it is monotone and the graph of F cannot be enlarged without destroying the monotonicity property. In other words, if \(\tilde{F}\) is monotone and \(G(F) \subset G(\tilde{F})\), then \(F \equiv \tilde{F}\);

-

(d)

paramonotone if it is monotone and whenever \(\langle u-v,x-y \rangle = 0\) with \(u \in F(x)\) and \(v \in F(y)\), then this implies that \(u \in F(y)\) and \(v \in F(x)\);

We will consider in our analysis maps which are continuous in the point-to-set sense. The following definitions and results collect all the tools we will need. We start by defining convergence of a sequence of sets.

Definition 1

([5, Definition 2.2.3 and Proposition 2.2.7]) Given a sequence \(\{ C^{k} \}\) of sets such that \(C^k\subset H\) for all k, we define the interior limit of the sequence \(\{C^k\}\) of sets as the set

Recall that in a Hilbert space, the strong topology is the metric topology induced by the norm.

Definition 2

([5, Definition 2.5.1(a) (b) and Definition 2.5.3 (i)]) Let \(F : H \rightrightarrows H\) and U be a subset of D(F) such that F is closed-valued on U. In the definitions below, we consider the convergence with respect to the strong (i.e., the norm) topology in H. We say that F is:

-

(a)

inner-semicontinuous at \(x\in D(F)\) if whenever a sequence \(\{x_n\}_{n\in \mathbb {N}}\) converges to x we have

$$\begin{aligned} F(x) \subset \mathop {\lim {\text {int}} }\limits _{n \rightarrow \infty } F({x_n}). \end{aligned}$$ -

(b)

Lipschitz continuous on U if there exists a Lipschitz constant \(\kappa >0\) such that for all \(x,\,x'\in U\) it holds that

$$\begin{aligned} F(x)\subset F(x') + \kappa \Vert x -x '\Vert B. \end{aligned}$$

The next result, which will be used for establishing finite convergence under no monotonicity assumptions, is an adaptation of [5, Proposition 2.5.29(i)] to our framework. Since the statement is slightly changed from that in [5], and for convenience of the reader, we include its proof here.

Proposition 1

Let \(F : H \rightrightarrows H\) be inner-semicontinuous (with respect to the strong topology) at \(\bar{x}\in \, \mathrm{int}\,{D(F)}\). Consider the following assumptions.

-

(i)

H is infinite dimensional and \(F(\bar{x})\) is compact (with respect to the strong topology in H).

-

(ii)

\(H=\mathbb {R}^n\) (i.e., H is finite dimensional) and \(F(\bar{x})\) is closed.

If either (i) or (ii) hold, then for every \(\rho >0\), \(\varepsilon >0\), there exists \(\delta >0\) such that

Proof

Assume that (i) holds. If the statement on neighborhoods were not true, we could find \(\rho _0\), \(\varepsilon _0>0\), and a sequence \(\{x_n\}\) converging to \(\bar{x}\) such that \(F(\bar{x})\cap \,B[0,\rho _0]\not \subset F(x_{n}) + B[0,\varepsilon _0]\). Define a sequence \(\{z_n\}\) such that \(z_n\in F(\bar{x})\cap \,B[0,\rho _0]\) and \(z_n\not \in F(x_n) +B[0,\varepsilon _0]\). By compactness of \(F(\bar{x})\), there exists a subsequence \(\{z_{n_k}\}\) of \(\{z_n\}\) converging to some \(\bar{z}\in F(\bar{x})\cap \,B[0,\rho _0]\). By inner-semicontinuity of F, we can find a sequence \(\{y_n\in F(x_n)\}\) also converging to \(\bar{z}\). Then \(\lim _k z_{n_k}-y_{n_k}=0\), so that for large enough k we have that \(z_{n_k}-y_{n_k}\in B[0,\varepsilon _0]\). Altogether, we conclude that \(z_{n_k}= y_{n_k} +[z_{n_k}-y_{n_k}]\in F(x_{n_k})+B[0,\varepsilon _0]\) for large enough k, but this contradicts the definition of \(\{z_n\}\). Therefore, the claim on neighborhoods must hold.

Assume now that (ii) holds. In this case, the proof follows the same steps, but with the difference that we use the compactness of the ball, which only holds in finite dimensions. Indeed, we have now that the set \(F(\bar{x})\cap \,B[0,\rho _0]\) is compact, because the ball is compact and \(F(\bar{x})\) is closed. Hence, as in the proof for assumption (i), there is subsequence \(\{z_{n_k}\}\) of \(\{z_n\}\) converging to some \(\bar{z}\in F(\bar{x})\cap \,B[0,\rho _0]\). The proof now follows exactly as for the previous assumption. \(\square \)

Remark 1

The Lipschitz continuity assumption rules out maximally monotone mappings which are not point-to-point. Hence this assumption only makes sense for non-monotone set-valued variational inequalities.

The following is a simple extension of results in the literature, and will be needed in the sequel. We provide here its simple proof for convenience of the reader.

Lemma 1

(See [15, Lemma 1] and [7, Lemma 3.1]) Let \(C\subset H\) be a closed convex set and \(x \in C\). Then, for every \(u\in H\), we have

-

(a)

\(\left\langle P_{T_{C}(x)} (-u), u \right\rangle = - \left\| P_{T_{C}(x)} (-u) \right\| ^{2}\);

-

(b)

\(\min \left\{ \langle v, u \rangle : v \in T_{C}(x), \; \Vert v \Vert \le 1 \right\} = - \left\| P_{T_{C}(x)} (-u) \right\| \).

Proof

We denote by \(\bar{u} := P_{T_{C}(x)} (-u) \in T_C(x)\). The properties of the projection imply that

Taking \(y = 0 \in T_C(x)\) and \(y = 2 \bar{u} \in T_C(x)\) in (2), we obtain sequentially that

which readily imply (a). Assume first that \(\bar{u}=0\). From (2) and (a), we have

So,

If \(\bar{u}=0\), then the above infimum is attained when \(v := \bar{u}\) and is equal to 0. Hence, (b) holds in this case. Assume now that \(\bar{u}\not =0\). The fact that \(\bar{u}=P_{T_{C}(x)} (-u)\) means that

Therefore, for all \(y \in T_C(x)\) such that \(\Vert y \Vert \le \Vert \bar{u} \Vert \), we have

Using (a) in this inequality and simplifying the resulting expression yields

where in the left-most inequality we used the fact that \(y\in T_C(x)\) is such that \(\Vert y\Vert \le \Vert \bar{u}\Vert \). Combine the left-most and right-most expressions in (3) to obtain

for all \(y\in T_C(x)\) such that \(\Vert y\Vert \le \Vert \bar{u}\Vert \). Since \(\bar{u}\not =0\), we can re-write this expression as

for all \(y\in T_C(x)\) such that \(\Vert y\Vert \le \Vert \bar{u}\Vert \). Since \(\Vert y\Vert \le \Vert \bar{u}\Vert \), \( \frac{y}{\Vert \bar{u}\Vert }\) represents any \(y'\in T_C(x)\) such that \(\Vert y'\Vert \le 1\). With this in mind, (4) reads

for all \(y'\in T_C(x)\) such that \(\Vert y'\Vert \le 1\). This proves that

To obtain the opposite inequality, take \(v:=\frac{\bar{u}}{\Vert \bar{u}\Vert }\in T_{C}(x)\), we have

where we used (a) in the right-most equality. This completes the proof of (b). \(\square \)

3 Gap functions and solution set for generalized variational inequalities

3.1 Characterization of solution set for generalized variational inequalities

The GVIP can be stated in terms of the graph of F as follows:

The set of solutions of GVIP is denoted by S, that is,

We will also consider the set \( \bar{S}\), the projection of S onto the first coordinate :

From (6), we have that \(\bar{x} \in \bar{S}\) if and only if there exists \(\bar{u} \in F(\bar{x})\) such that \(-\bar{u} \in N_{C}(\bar{x})\) or equivalently, \(P_{T_{C}(\bar{x})} [-\bar{u}] = 0\).

Now we give a characterization of the solution set \(\bar{S}\) of (1) under paramonotonicity.

Proposition 2

Let \(F : H \rightrightarrows H\) be paramonotone and \(\bar{x} \in \bar{S}\). Then, \(\bar{S} = \tilde{S}\), where

Proof

Let \(z \in \bar{S}\). Then, there exists \(w \in F(z)\) such that \(\langle w, \bar{x}-z \rangle \ge 0\). Since \(\bar{x} \in \bar{S}\), there exists \(\bar{u} \in F(\bar{x})\) such that \(\langle \bar{u}, z-\bar{x} \rangle \ge 0\). Combining the last two inequalities, we get

By monotonicity of F, we have

From (8) and (9), we get \(\langle w - \bar{u}, z-\bar{x} \rangle = 0\). The paramonotonicity of F implies that \(w \in F(\bar{x})\) and \(\bar{u} \in F(z)\). Hence, \(\bar{u} \in F(z)\cap F(\bar{x})\). Moreover,

and thus, \(\langle \bar{u}, z-\bar{x} \rangle = 0\). Hence, \(z \in \tilde{S}\), therefore, \(\bar{S} \subseteq \tilde{S}\).

Conversely, let \(z \in \tilde{S}\). Then, \(z \in C\) and there exists \(\bar{u} \in F(z)\cap F(\bar{x})\) such that

Since \(\bar{x}\in \bar{S}\), there exists \(\hat{u} \in F(\bar{x})\) such that

By monotonicity, and the inclusions \(\bar{u} \in F(z)\) and \(\hat{u}\in F(\bar{x})\), we have

Using (10) and (11) for \(y=z\) in the inequality above yields

so,

This fact and (10) imply that

and by paramonotonicity, we conclude that

For any \(y \in C\), by (11), we have

where we used (13) in the last equality. Combining the above expression with (14) implies that \(z \in \bar{S}\). Therefore, \(\tilde{S} \subseteq \bar{S}\) and the proof is complete. \(\square \)

Remark 2

Proposition 2 extends to the point-to-set framework Lemma 2 in [15]. Since the subdifferential of a proper convex lower semicontinuous function is point-to-set and paramonotone [12], Proposition 2 also extends and generalizes Lemma 1 in [23].

3.2 Gap function and solution set for generalized variational inequalities

Let C be a nonempty subset of H and \(F : H \rightrightarrows H\) be a set-valued mapping with a non-empty domain.

Definition 3

A function \(g : H \rightarrow \mathbb {R}\cup \{ +\infty \}\) is said to be a gap function for GVIP if and only if the following conditions hold:

-

(a)

\(g(x) \ge 0\) for all \(x \in C\);

-

(b)

\(g(\bar{x}) = 0\) if and only if \(\bar{x} \in \bar{S}\).

One of the main motivations to introduce the gap functions is that they allow to convert a GVIP into an optimization problem, so that standard methods can be used to solve GVIP.

Crouzeix [9] considered the Minty type generalized variational inequality problem (MGVIP): find \(\bar{x} \in C\) such that

The solution set of MGVIP is denoted by \(S^{*}\). It is clear that \(\bar{S} \subseteq S^{*}\) if F is pseudomonotone. It was proved by Crouzeix [9] that \(\bar{S} = S^{*}\) if C is a nonempty closed convex subset of H and F is pseudomonotone and upper semicontinuous with nonempty convex compact values.

Auslender [2] (see also [3, 9]) introduced the following gap function \(h_{F,C} : H \rightarrow \mathbb {R}\cup \{ +\infty \}\) for GVIP:

The function \(h_{F,C}\) is nonnegative, lower semicontinuous and convex. The latter two properties follow from the fact that it is a supremum of affine functions. From its definition, \(h_{F,C}\) is proper when \(C \cap D(F) \ne \emptyset \).

When F is maximally monotone, the minimizers of \(h_{F,C}\) are the solutions of GVIP. This fact, established in [4], is stated next.

Proposition 3

[3, 4] Assume that F is maximally monotone. Consider the following assumptions.

-

(i)

H is finite dimensional and \(\mathrm{ri}(D(F)) \cap {\mathrm{ri}}(C) \ne \emptyset \).

-

(ii)

H is infinite dimensional and \(\mathrm{int}(D(F)) \cap C \ne \emptyset \) or \(D(F) \cap \mathrm{int}(C) \ne \emptyset \).

Under assumptions (i) or (ii), we have:

-

(a)

\(h_{F,C}(x) \ge 0\) for all \(x \in D(F) \cap C\);

-

(b)

\(h_{F,C}(\bar{x}) = 0\) if and only if \(\bar{x} \in \bar{S}\).

For brevity, we write from now on h instead of \(h_{F,C}\) when referring to the gap function defined by (16).

Definition 4

For a fixed \(x \in C\), we define the following sets:

Remark 3

For h as in (16), we have that

Indeed, we clearly have

The opposite inclusion follows directly from the fact that

where we used the definition of h in the first inequality. Hence, under the assumptions of Proposition 3, for every \(\bar{x}\in \bar{S}\) we must have \(h(\bar{x})=0\). In this situation, (18) yields

Remark 4

Fix \(\bar{x}\in \bar{S}\) and assume that F is maximally monotone. If assumptions (i) or (ii) of Proposition 3 hold, we must have that \(h(\bar{x})=0\). This yields

Indeed, we may use \(z:=\bar{x}\) in the scalar product in the definition of \(\Lambda (\bar{x})\). As a direct consequence of the inclusion above, we have that

and

The aim of the next lemma is to find further relations between the sets \(\Lambda _2(\bar{x})\), \(\partial h(\bar{x})\) and \(F(\bar{x})\).

Lemma 2

Let F be a maximally monotone point-to-set mapping and \(C \subset H\) a closed and convex set such that assumptions (i) or (ii) in Proposition 3 hold. Let \(\bar{S} \ne \emptyset \) be as defined by (7) and fix \(\bar{x}\in \bar{S}\). Consider h as defined by (16). With the notation used in (17) and (19), the following properties hold.

-

(a)

For all \(x\in C\cap \mathrm{dom\,}h\) and all nonzero vector \(d \in H\), we have

$$\begin{aligned} h^{\prime }({x};d) \ge \displaystyle \sup _{w \in \Lambda _2 ({x})}\langle w, d \rangle . \end{aligned}$$Consequently, \(\Lambda _2 ({x}) \subseteq \partial h({x})\).

-

(b)

If F is paramonotone and \(\bar{x} \in \bar{S}\), then \(\Lambda _2 (\bar{x}) = F(\bar{x})\subset \partial h(\bar{x})\).

Proof

-

(a)

Given \(d \in H\), use the definition of h and \(\Lambda ({x})\), to write

$$\begin{aligned} h({x} + t d)= & {} \sup \left\{ \langle w, ({x} + t d) - y \rangle : (y,w) \in G_{C}(F) \right\} \\\ge & {} \sup \left\{ \langle w, {x} - y \rangle + t \langle w, d \rangle : (y,w) \in \Lambda ({x}) \right\} \\= & {} h(x)+ t \sup \left\{ \langle w, d \rangle : w\in \Lambda _2({x}) \right\} , \end{aligned}$$where we also used the fact that \(\Lambda ({x})\subset G_{C}(F)\) in the first inequality. The above expression yields,

$$\begin{aligned} \frac{h({x} + t d) - h({x})}{t} \ge \sup _{w \in \Lambda _2 ({x})} \langle w, d \rangle \ge \langle u,d \rangle , \quad \mathrm{for\,all}\quad u \in \Lambda _2 ({x}). \end{aligned}$$Thus, for all \(d\not =0\) we have that \(h^{\prime }({x};d) \ge \langle u, d \rangle \) for all \(u \in \Lambda _2 ({x})\). By [17, Proposition 4.1.6], we deduce that \(u \in \partial h({x})\), and hence, \(\Lambda _2 ({x}) \subseteq \partial h({x})\).

-

(b)

Let \(w \in \Lambda _2 (\bar{x})\). By (19), there exists \(y \in C \cap F^{-1}(w)\) such that \(\langle w, \bar{x} - y \rangle = 0\). Since \(\bar{x}\in \bar{S}\), there exists \(\bar{w} \in F(\bar{x})\) such that

$$\begin{aligned} \langle \bar{w}, y-\bar{x} \rangle \ge 0, \quad \mathrm{for\,all}\quad y \in C. \end{aligned}$$(21)By monotonicity of F, for all \(w \in F(y)\), we have

$$\begin{aligned} 0 \le \langle w-\bar{w}, y-\bar{x} \rangle = \langle w, y-\bar{x} \rangle + \langle \bar{w}, \bar{x}-y \rangle \le 0, \end{aligned}$$where we used (21) and the fact that \(\langle w, y-\bar{x} \rangle = 0\). Altogether, we have

$$\begin{aligned} \langle w-\bar{w}, y-\bar{x} \rangle = 0 \quad \mathrm{with }\quad w \in F(y) \quad \mathrm{and }\quad \bar{w} \in F(\bar{x}). \end{aligned}$$Since F is paramonotone, we have \(\bar{w} \in F(y)\) and \(w \in F(\bar{x})\). Hence, for all \(w \in \Lambda _2(\bar{x})\), we have \(w \in F(\bar{x})\). Therefore, \(\Lambda _2(\bar{x}) \subseteq F(\bar{x})\). The opposite inclusion follows from (20). The inclusion involving the subgradient of h follows directly from (a) for \(x=\bar{x}\).

\(\square \)

Remark 5

For \(\bar{x}\in \bar{S}\) and \(\varepsilon \ge 0\), we can define enlargements of the set \(\Lambda _2(\bar{x}) \) given in (19) as follows.

With straightforward modifications, the proof of Lemma 2 (a) can be adapted to show that \( \Lambda _2^{\varepsilon } ({x}) \subseteq \partial _{\varepsilon } h({x}).\)

4 Weak sharp solutions for generalized variational inequalities

In this section, we consider GVIP with solution set \(\bar{S}\). Unless specifically stated, F is a set-valued mapping which is not necessarily maximally monotone.

Definition 5

We say that \(\bar{S}\) is weak sharp if there exists \(\alpha > 0\) such that

where B denotes the unit ball with center at origin in H. In this situation, we say that \(\bar{S}\) is weak sharp with parameter \(\alpha \).

We will consider in our analysis the following alternative concept of weak sharp solution, introduced in [6, 24] in the context of error bounds.

Definition 6

Let g be a gap function for GVIP (as given in Definition 3) and assume that g is convex, proper and lower semicontinuous. We say that \(\bar{S}\) is weak sharp with respect to g if there exists \(\alpha > 0\) such that

In this situation, we say that \(\bar{S}\) is g -weak sharp with parameter \(\alpha \).

The following proposition gives the characterization of a weak sharp solution set, and will be used in the next section for establishing finite convergence.

Proposition 4

The solution set \(\bar{S}\) of GVIP is weak sharp with parameter \(\alpha \) if and only if for every \(\bar{x}\in \bar{S}\) there exists \(\bar{u} \in F(\bar{x})\) such that

for all \(v \in V(\bar{x}) = T_{C}(\bar{x}) \cap N_{\bar{S}}(\bar{x})\).

Proof

Assume that the solution set \(\bar{S}\) of GVIP is weak sharp. Then, for all \(x \in B\) and every \(\bar{x}\in \bar{S}\), we have

That is, there exist \(\bar{u} \in F(\bar{x})\) and \(z \in \left( V(\bar{x}) \right) ^{\circ }\) such that \(\alpha x -\bar{u} = z\in \left( V(\bar{x}) \right) ^{\circ }\). Hence, for all \(v \in V(\bar{x})\) with \(v \ne 0\), we have \(\langle \alpha x - \bar{u}, v \rangle \le 0\), equivalently, \(\alpha \langle x, v \rangle \le \langle \bar{u}, v \rangle \). Take \(x = \frac{v}{\Vert v \Vert }\), then \(\alpha \left\langle \frac{v}{\Vert v \Vert }, v \right\rangle \le \langle \bar{u}, v \rangle \). That is, there exists \(\bar{u} \in F(\bar{x})\) such that

Conversely, assume that (25) holds and let \(x \in B\). For \(v \in V(\bar{x})\) and \(\bar{u}\in F(\bar{x})\) as in (25), we can write

where we used (25) in the first inequality and the fact that \(x\in B\) in the second one. This implies that for all \(x\in B\) there exists \(\bar{u} \in F(\bar{x})\) such that

Equivalently, \(\alpha x \in F(\bar{x}) + \left( V(\bar{x}) \right) ^{\circ }\) for all \(x \in B\). Hence, (23) holds. \(\square \)

The next proposition gives the necessary condition for a weak sharp solution set of a GVIP in terms of global error bound.

Proposition 5

If the solution set \(\bar{S}\) of the GVIP is weak sharp with parameter \(\alpha \), then

Proof

Given a fixed \(x \in C\), set \(\bar{x} = P_{\bar{S}}(x)\). Then,

By Proposition 4, there exists \(\bar{u} \in F(\bar{x})\) such that

In particular, \(\langle \bar{u}, x-\bar{x} \rangle \ge \Vert x-\bar{x} \Vert \). The definition of h and the inequality above yield

Hence, (26) holds. \(\square \)

The following propositions give some nice properties in terms of directional derivatives or subdifferential of a function if the global error bound condition (26) is satisfied.

Proposition 6

Let \(\bar{x} \in \bar{S}\). If

then \(h^{\prime }(\bar{x};v) \ge \alpha \Vert v \Vert \) for all \(v \in V(\bar{x}) = T_{C}(\bar{x}) \cap N_{\bar{S}}(\bar{x})\).

Proof

Assume that (27) holds. If \(v \in V(\bar{x})\) with \(v = 0\), then trivially we are done. So, we assume that \(v \in V(\bar{x})\) but \(v \ne 0\). In this case,

On the other hand, the fact that \(v\in V(\bar{x})\) implies that, in particular, \(v\in N_{\bar{S}}(\bar{x})\), and hence

This means that

Since \(v \in T_{C}(\bar{x})\), we have

with \(\bar{x} + t_{k} v_{k} \in C\) for all k and \(t_{k} \downarrow 0\). Combining (31) and (28), we deduce that for all \(k \ge k_{0}\), \(\langle v_{k}, v \rangle > 0\), that is,

Equivalently, \((\bar{x} + t_{k} v_{k}) \not \in H^-_{v} \) for all \(k \ge k_{0}\). This implies that their projection onto the half-space \(H^-_{v} \) will belong to the boundary of \(H^-_{v} \), which is

Denote by \(\bar{z}_k:= P_{H_{v}}(\bar{x} + t_{k} v_{k})\) for all \(k \ge k_{0}\). Using also the inclusion in (30), we can write

By construction, the vector \((\bar{x} + t_{k} v_{k} - \bar{z}_k )\) is a positive multiple of v. Namely, there is a positive scalar \(\lambda _k\) such that

Let us compute \(\lambda _k\). Since \(\langle v,\bar{z}_k - \bar{x} \rangle = 0\), we have

which readily implies that \(\lambda _k = \displaystyle \frac{t_{k} \langle v_{k}, v \rangle }{\Vert v \Vert ^{2}}\). This gives

Therefore,

Then from (32) and (27), we have

Since \(h(\bar{x}) = 0\), we have

Taking limit for \(k\rightarrow \infty \) and recalling the fact that \(t_k\downarrow 0\), we obtain

as claimed. \(\square \)

Proposition 7

Let \(\bar{x} \in \bar{S}\) and assume that (27) holds. If \(\bar{x} \in {\mathrm{int}}(\mathrm{dom\,}h)\) and h is continuous at \(\bar{x}\), then there exists \(\bar{u} \in \partial h(\bar{x})\) such that \(\langle \bar{u}, v \rangle \ge \alpha \Vert v \Vert \) for all \(v \in V(\bar{x})\) and \(\alpha > 0\).

Proof

From (27), we have

Since \(\bar{x} \in {\mathrm{int}}(\mathrm{dom\,}h)\) and h is continuous at \(\bar{x}\), we can use [17, Proposition 4.1.6 (b)] to deduce that

That is, there exists \(\bar{u} \in \partial h(\bar{x})\) such that \( h^{\prime }(\bar{x},v) = \langle v, \bar{u} \rangle .\) From (33), we have \(\langle \bar{u}, v \rangle \ge \alpha \Vert v \Vert \). \(\square \)

Remark 6

In several papers, condition (26) is used as a definition of weak sharp solutions of variational inequalities or optimization problems, see, for example [6, 23, 24] and the references therein. In Propositions 6 and 7, we proved that if condition (26) is satisfied then we have some nice properties in terms of directional derivatives or subdifferential of a function. However, we could not establish the sufficient condition for a weak sharp solution set of GVIP in terms of global error bound. This remains an open problem, and the topic of our future research.

5 Finite convergence analysis

We say that an algorithm has finite convergence whenever all iterates belong to the solution set after a finite number of iterations. In previous sections, we extended the notion of weak sharpness to the framework of set-valued mappings. In the present section, we show that our definition of weak sharpness ensures finite convergence in this more general framework.

Moreover, at the end of this section, we observe that finite convergence can also be obtained for paramonotone problems under the relaxed assumption that the solution set is g-weak sharp.

The following theorem establishes a necessary condition for finite convergence. Its proof is standard, we present it here for completeness.

Theorem 1

Let \(F : H \rightrightarrows H\) be a set-valued mapping with nonempty domain. Let \(\{ x_{k} \} \subseteq C\cap D(F)\) be a sequence generated by an algorithm with finite termination. In other words, there exists \(k_{0} \in \mathbb {N}\) such that \(x_{k} \in \bar{S}\) for all \(k \ge k_{0}\). In this situation,

Proof

Assume that there exists \(k_{0} \in \mathbb {N}\) such that \(x_{k} \in \bar{S}\) for all \(k \ge k_{0}\). Then, by definition of \(\bar{S}\), there exists \(u_{k} \in F(x_{k})\) such that

that is, \(-u_{k} \in N_{C}(x_{k})\). Hence, \(P_{T_{C}(x_{k})} (- u_{k}) = 0\), and thus, there exists \(z^k = P_{T_{C}(x_{k})} (- u_{k})\subset P_{T_{C}(x_{k})} (-F(x^k))\) such that \(0 = \displaystyle \lim \nolimits _{k\rightarrow \infty }z^k\). Hence, (34) holds. \(\square \)

The following theorem establishes conditions under which (34) is also sufficient for a monotone mapping.

Theorem 2

Let \(F : H \rightrightarrows H\) be a monotone set-valued mapping with nonempty domain. Assume that \(\bar{S}\) is closed and convex, and weak sharp with parameter \(\alpha \). Let \(\{ x_{k} \} \subseteq C\cap D(F)\) be a sequence generated by an algorithm such that (34) holds. Then, there exists \(k_{0} \in \mathbb {N}\) such that \(x_{k} \in \bar{S}\) for all \(k \ge k_{0}\).

Proof

Assume that the conclusion is not true. Then, there exists a subsequence \(\{ x_{k_{j}} \}\) of \(\{ x_{k} \}\) such that \(x_{k_{j}} \notin \bar{S}\) for all \(j \in \mathbb {N}\). Without loss of generality, we may rename \(\{ x_{k_{j}} \}\) as \(\{ y_{j} \}\) for simplicity. Then, \(y_{j} \notin \bar{S}\) for all \( j \in \mathbb {N}\). Let \(z_{j} = P_{\bar{S}} (y_{j})\). Since \(y_{j} \notin \bar{S}\) and \(z_{j} \in \bar{S}\), we have \(\Vert z_{j} - y_{j} \Vert > 0\) for all j. By construction, we have that

By weak sharpness and Proposition 4 applied to \(\bar{x}=z_j\) and to \(v_j=y_{j} - z_{j} \in T_{C}(z_{j}) \cap N_{\bar{S}}(z_{j})\), there exists \(\hat{u}_j \in F({z_j})\) such that

which yields, for all j

By (34), for all k there exists \(u_j\in F(y^j)\) such that

By Lemma 1, monotonicity of F, and (35), we have

where we used monotonicity in the second inequality, and Lemma 1(b) in the last equality. The above expression contradicts (36). Therefore, we must have finite convergence. \(\square \)

By combining Theorems 1 and 2, we have the following result.

Theorem 3

Let \(F : H \rightrightarrows H\) be a monotone set-valued mapping with nonempty domain. Assume that \(\bar{S}\) is closed and convex, and weak sharp with parameter \(\alpha \). Let \(\{ x_{k} \} \subseteq C\cap D(F)\) be a sequence generated by an algorithm. Then, \(x_{k} \in \bar{S}\) for sufficiently large k if and only if (34) holds.

Remark 7

Theorem 3 can be seen as a generalization and refinement of Theorem 5.2 in [14] and Theorem 3.2 in [20].

Remark 8

The assumption of monotonicity in Theorem 3 is standard in the literature of weak-sharp minima (see, e.g., Theorem 2 in [14] and Theorem 4.2 in [19]). This assumption is used for proximal-like methods and its variants, such as those studied in [4]. The weak sharpness assumption has been mainly investigated for the point-to-point case, and its validity in this case is equivalent to an error bound condition for the gap function of the variational inequality (see, e.g., Theorem 3.1 in [11]). As for the point-to-set case, examples of weak sharpness of the solution set can be found in [19] for variational inequalities arising from nonsmooth constrained optimization problems.

To establish finite convergence without monotonicity, we need to strengthen condition (34) (see condition (ii) below). We also need F to have a continuity property. On the other hand, we do not assume that the sequence is bounded, which is a standard assumption used in the literature.

Theorem 4

Let \(F : H \rightrightarrows H\) be a set-valued mapping with nonempty domain. Assume that the solution set \(\bar{S}\) is closed and convex, and weak sharp with parameter \(\alpha \). Let \(\{ x_{k} \} \subseteq C\cap D(F)\) be a sequence generated by an algorithm that verifies the following properties.

-

(i)

\(\lim _k d(x^k, \bar{S})= 0\), and

-

(ii)

$$\begin{aligned} \{0\}= \mathop \mathrm{lim\,int}_{k\rightarrow \infty } \,P_{T_{C}(x_{k})} (-F(x^k)). \end{aligned}$$(38)

Consider the following two assumptions.

-

(A1) The map F is Lipschitz continuous on \(C\cap D(F)\).

-

(A2) The space H is finite dimensional, the set \(F(\bar{S})\) is bounded, and F is closed valued and inner-semicontinuous on \(\bar{S}\).

Under either of the assumptions (A1) or (A2), there exists \(k_{0} \in \mathbb {N}\) such that \(x_{k} \in \bar{S}\) for all \(k \ge k_{0}\).

Proof

Consider first assumption (A1). If the conclusion is not true, there exists a subsequence \(\{ x_{k_{j}} \}\) of \(\{ x_{k} \}\) such that \(x_{k_{j}} \notin \bar{S}\) for all \(j \in \mathbb {N}\). Without loss of generality, we denote this subsequence by \(\{ x_{j} \}\). As in the proof of the previous theorem, let \(z_j=P_{\bar{S}}(x_j)\). By assumption, we have that

By weak sharpness and Proposition 4 applied to \(\bar{x}=z_{j}\) and to \(v_{j}=x_{j} - z_{j} \in T_{C}(z_{j}) \cap N_{\bar{S}}(z_{j})\), there exists \(\hat{u}_{j}\in F({z_{j}})\) such that

Since F is Lipschitz continuous on \(C\cap D(F)\), there exists a constant \(\lambda >0\) such that

By (39), we can take \(j_0\) such that, for \(j\ge j_0\) we have that \(\lambda \,\Vert z_j-x_j\Vert <\alpha /2\). Hence, for \(j\ge j_0\), (41) yields

For \(j\ge j_0\), take \(\hat{u}_{j} \in F({z_{j}})\) as in (40). By (42), there exist \(\eta _{j}\in F(x_{j})\) and \(w_{j}\in B\) such that

On the other hand, (38) implies that for every sequence \(\theta _j\in P_{T_C(x_j)}(-F(x_j))\), we must have

Using this fact for \(\theta _j:=P_{T_C(x_j)}(-\eta _{j}) \in P_{T_C(x_j)}(-F(x_j))\), there exists \(j_1\ge j_0\) such that

Altogether, (40) and (43) yield, for \(j\ge j_1\)

where we used the fact that \(w_j\in B\) in the second inequality, Lemma 1 (b) in the last equality, and the assumption on \(j_1\) in the last inequality. The above expression entails a contradiction, which implies that the algorithm must have finite convergence as stated.

Consider now assumption (A2). The proof follows the same steps until equation (40). Since \(F(\bar{S})\) is bounded, there exists \(\rho >0\) such that \(\Vert \hat{u}_{j}\Vert \le \rho \). Take this \(\rho \), and set \(\varepsilon :=\alpha /2\) in Proposition 1 (ii) to find a \(\delta >0\) such that

Take \(j_0\) such that \(x_j\in B(z_j,\delta )\) whenever \(j\ge j_0\), so we deduce that

Since \(\hat{u}_{j}\in F(z_j)\cap B[0,\rho ]\) we have \( \hat{u}_{j}\in F(x_j)+(\alpha /2)B,\,\forall \,j\ge j_0. \) Hence, we are in the situation of (43). The rest of the proof follows the same steps. \(\square \)

Remark 9

Most algorithms for the variational inequality problem have some kind of monotonicity assumption on the underlying operator. In the absence of any monotonicity assumption, the usual setting is the one that has a point-to-point and continuous operator (in the classical sense), see the recent work [21]. For a point-to-point continuous map, all assumptions in (A2) of Theorem 4 hold, as long as \(F(\bar{S})\) is a bounded set and \(\bar{S}\) is weak sharp. The Lipschitz assumption in Theorem 4 (A1) can be relaxed by the uniform continuity assumption on F. More precisely, \(G : \mathbb {R}^{n} \rightrightarrows \mathbb {R}^{n}\) is uniformly continuous on a set \(W \subset \mathbb {R}^{n}\) if for every \(\varepsilon >0\), there exists a \(\delta >0\) such that for every pair of points \(x, x' \in W\) we have

The proof under this less restrictive assumption follows the same steps as those in Theorem 4 under assumption (A1).

Remark 10

When F is paramonotone, we have seen in Lemma 2 (b) that

In this situation, whenever \(\bar{S}\) is weak sharp with parameter \(\alpha \), then it is h-weak sharp with parameter \(\alpha \). This implies that, for a paramonotone F, h-weak sharpness is less restrictive than weak sharpness.

Remark 11

Under assumption (34) and h-weak sharpness of \(\bar{S}\), Zhou and Wang established in [24, Theorem 3.1] finite convergence to \(\bar{S}\). This implies that, when F is paramonotone, the assumption of weak-sharpness may be relaxed by h-weak sharpness, and finite convergence holds automatically under assumption (34). In fact, paramonotonicity is only used to show that h-weak sharpness is a less restrictive assumption than sharpness. Otherwise, the proof of the following theorem follows directly from [24, Theorem 3.1]. We state this result in our framework.

Theorem 5

Let F be paramonotone and assume \(\bar{S}\) is h-weak sharp. Let \(\{ x_{k} \} \subseteq C\cap D(F)\) be a sequence generated by an algorithm such that (34) holds. Then, there exists \(k_{0} \in \mathbb {N}\) such that \(x_{k} \in \bar{S}\) for all \(k \ge k_{0}\).

References

Ansari, Q.H., Lalitha, C.S., Mehta, M.: Generalized Convexity, Nonsmooth Variational Inequalities and Nonsmooth Optimization. Taylor & Francis Group, CRC Press, Boca Raton (2014)

Auslender, A.: Résolution numérique d’inégalités variationnelles. RAIRO R2, 67–72 (1973)

Burachik, R.: Generalized proximal point methods for the variational inequality problem. Ph.D. Thesis, Instituto de Matemática Pure e Aplicada, Rio de Janeiro, Brazil (1995)

Burachik, R., Iusem, A.: A generalized proximal point algorithm for the variational inequality problem in a Hilbert space. SIAM J. Optim. 8(1), 197–216 (1998)

Burachik, R.S., Iusem, A.: Set-Valued Mappings and Enlargements of Monotone Operators. Springer, New York (2008)

Burke, J.V., Ferris, M.C.: Weak sharp minima in mathematical programming. SIAM J. Control Optim. 31, 1340–1359 (1993)

Calamai, P.H., Moré, J.J.: Projected gradient methods for linearly constrained problems. Math. Program. 39, 93–116 (1987)

Clarke, F.H.: Optimization and Nonsmooth Analysis. Wiley, New York (1983)

Crouzeix, J.-P.: Pseudomonotone variational inequality problems: existence of solutions. Math. Progam. 78, 305–314 (1997)

Fang, S.C., Peterson, E.L.: Generalized variational inequalities. J. Optim. Theory Appl. 38, 363–383 (1982)

Hu, Y.H., Song, W.: Weak sharp solutions for variational inequalities in Banach spaces. J. Math. Anal. Appl. 374, 118–132 (2011)

Iusem, A.N.: On some properties of paramonotone operators. J. Convex Anal. 5, 269–278 (1998)

Konnov, I.V.: Combined Relaxation Methods for Variational Inequalities. Lecture Notes in Mathematical Economics. Springer, Berlin (2000)

Marcotte, P., Zhu, D.L.: Weak sharp solutions of variational inequalities. SIAM J. Optim. 9(1), 179–189 (1998)

Matsushita, S.-Y., Xu, L.: Finite convergence of the proximal point algorithm for variational inequality problems. Set-Valued Var. Anal. 21, 297–309 (2013)

Saigal, R.: Extension of the generalized complementarity problem. Math. Oper. Res. 1, 260–266 (1976)

Schirotzek, W.: Nonsmooth Analysis. Springer, Berlin (2007)

Wu, Z.L., Wu, S.Y.: Weak sharp solutions of variational inequalities in Hilbert spaces. SIAM J. Optim. 14(4), 1011–1027 (2004)

Xiong, J., Li, J.: Weak sharpness for set-valued variational inequalities and applications to finite termination of iterative algorithms. Optimization 65(8), 1585–1597 (2016)

Xiu, N., Zhang, J.: On finite convergence of proximal point algorithms for variational inequalities. J. Math. Anal. Appl. 312, 148–158 (2005)

Ye, M., He, Y.: A double projection method for solving variational inequalities without monotonicity. Comput. Optim. Appl. 60, 141–150 (2015)

Zhang, J., Wan, C., Xiu, N.: The dual gap function for variational inequalities. Appl. Math. Optim. 48, 129–148 (2003)

Zhou, J., Wang, C.: A note on finite termination of iterative algorithms in mathematical programming. Oper. Res. Lett. 36, 715–717 (2008)

Zhou, J., Wang, C.: New characterizations of weak sharp minima. Optim. Lett. 6, 1773–1785 (2012)

Acknowledgements

This research was partially done during the visit of second author to the School of Information Technology and Mathematical Sciences at University of South Australia, and King Fahd University of Petroleum and Minerals, Dhahran, Saudi Arabia. This research was funded by the National Plan for Science, Technology and Innovation (MAARIFAH)—King Abdulaziz City for Science and Technology—through the Science and Technology Unit at King Fahd University of Petroleum and Minerals (KFUPM)—the Kingdom of Saudi Arabia, award number 12-MAT3023-24.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Al-Homidan, S., Ansari, Q.H. & Burachik, R.S. Weak sharp solutions for generalized variational inequalities. Positivity 21, 1067–1088 (2017). https://doi.org/10.1007/s11117-016-0453-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11117-016-0453-x

Keywords

- Generalized variational inequalities

- Weak sharp solutions

- Paramonotone operators

- Gap function

- Inner-semicontinuous maps

- Lipschitz continuous set-valued maps

- Finite convergence