Abstract

Optical tracking sensor technology is widely used in motion analysis. However, recognizing the movements of badminton players remains challenging, and more accurate methods are needed to capture their dynamic characteristics. This study designs an optical tracking sensor system based on image feature extraction for badminton player motion recognition. A high-resolution camera collects image sequences of badminton matches. Key image features are then extracted from the sequences using image processing and computer vision techniques, and a machine learning algorithm classifies the extracted features to recognize the players' movements. The experimental results show that the system can effectively extract the movement features of badminton players and identify their movements accurately. Compared with traditional methods, the proposed system achieves higher precision and better real-time performance, and can meet the needs of practical applications.

1 Introduction

Image feature detection, description, and matching are key issues in computer optical imaging and image processing research. Because image features reflect the main information of an image and can be represented as high-dimensional vectors, they are easy for computers to process and calculate (Kato et al. 2004). Feature point detection is therefore essential in application systems that process large numbers of images, such as unmanned driving, airport runway detection, and face recognition (Wang et al. 2018). Classifying remote sensing images is another important task. As remote sensing data have developed, their range of applications has widened and their feature dimensions have grown (Cheng and Han 2016). Improving the processing efficiency and accuracy of remote sensing images serves major strategic needs such as surveying, navigation, military, and ecology (Li et al. 2020). This paper explains the principles and methods of remote sensing image classification, together with the conditions and scope of algorithm application, studies a PCA-SVM classification method that uses artificial intelligence to improve the accuracy of remote sensing image analysis, and discusses and validates data classification methods in several practical situations. At present, national sports have been widely promoted and popularized. Badminton is a small-ball sport. Because its equipment is simple and there is no physical contact between opponents, players can control the amount of exercise themselves and improve their fitness while having fun. Badminton is therefore very popular (Gao 2017).
This sport provides comprehensive exercise for the body, enhancing movement speed, strength, coordination, and reaction ability, all of which contribute to physical fitness. In today’s rapidly developing society, technological progress has liberated people from most physical work (Zheng 2019). However, as mental work increases and exercise frequency decreases, health warning signs are common (Manrique and Gonzalez-Badillo 2003). Strengthening sports participation and improving physical fitness has therefore become a concern for society as a whole. Playing badminton helps relieve anxiety, depression, and life pressure (Phomsoupha and Laffaye 2015), which makes it popular among fitness enthusiasts. With the development of artificial intelligence and robotics, more and more intelligent robots have entered people's lives. As badminton becomes more competitive, interaction between robots and humans has attracted more young people to the sport and added a high-tech appeal (Callow et al. 2001). Therefore, developing more efficient image feature extraction and artificial intelligence classification algorithms for badminton movement recognition is of great significance.

With the continuous development of science and technology, optical tracking sensors are increasingly used in motion analysis. By collecting and analyzing image information, an optical tracking sensor can track and recognize the position and attitude of moving objects in real time with high precision. In badminton, the dynamic characteristics of the players are very important for evaluating and improving training. Traditional badminton recognition methods often rely on manual judgment or wearable sensor devices, both of which have limitations. Manual judgment is easily affected by subjective factors and cannot achieve high-precision recognition. Sensor devices usually require the athlete to wear specific equipment, which increases operational complexity and still fails to meet real-time tracking and recognition requirements for subtle movements. An optical tracking sensor based on image feature extraction is therefore an effective way to address this problem. A high-resolution camera captures the image sequence of a badminton match, and image processing and computer vision techniques extract the key image features of the athletes, such as position and posture. Classifying these features with a machine learning algorithm enables accurate tracking and recognition of the players' movements. Optical tracking sensors thus open new possibilities for badminton motion recognition by capturing and analyzing players' dynamic characteristics accurately. They have broad application prospects in this area, helping to improve training and competition and to promote the development of badminton.

2 Related work

The concept of image feature points was first proposed by Moravec. However, because of its high time complexity and sensitivity to noise, this detection method was not widely adopted. The theory was subsequently studied further by other scholars, with the aim of improving both the quality of the feature points and the speed of feature point extraction. Previous studies found that most of the information in an image is concentrated in a few important areas, while the remaining parts are redundant. The region of interest (ROI) is the region with the richest and most complex image information (Chityala et al. 2004). Restricting processing to the ROI reduces the influence of other local regions on feature extraction and improves the efficiency of image processing. Classic methods for extracting an image ROI include the Stentiford model and the maximum between-class variance (Otsu) algorithm. Although both methods can locate regions of interest, the Stentiford model is sensitive to image noise and captures image details poorly (Eswaraiah and Sreenivasa 2015). The Otsu algorithm does not have these problems, but it assumes that the region of interest and the background are normally distributed with similar numbers of pixels, so it is not universally applicable. Deep learning is now widely applied in computer vision (Voulodimos et al. 2018). In medical imaging, for example, deep learning models have been used to identify conditions such as pathological tissue and oral diseases. In such scenarios, however, it is difficult to obtain sufficient samples even with the support of medical institutions, and deep learning is prone to overfitting on small samples, which reduces learning effectiveness.
On this basis, an image-based feature extraction method was proposed (Esteva et al. 2021). A review of human motion research published before 1997 identified three areas: motion analysis of all or part of the human body; tracking of the human body with one or more cameras; and recognition and analysis of human behavior and actions (Zhang et al. 2019). Research on motion before 2007 has been divided into two categories: model-based motion research, and model-free motion research, which focuses on automatic video understanding techniques that detect and segment parts of the human body and construct a human model to track it. One study built a statistical model of head features and used extended Kalman filtering to track human head posture in real time. Model-based approaches have also been used to combine the sensing signals of different sensors and to separate different signals during communication (Kong and Fu 2022). Other work uses convolutional networks to extract high-level semantic features from signals and takes the category with the highest confidence as the recognition result. Unlike a CNN, an LSTM can take a time series directly as input and extract its long-term dependencies (Xue et al. 2018). Lightweight LSTMs have been suggested for real-time action classification. A CNN-LSTM model has also been proposed, in which the CNN performs data sampling and local information extraction, providing the LSTM with richer features and reducing computational complexity (Wang et al. 2016).

3 Image feature extraction and artificial intelligence classification

3.1 Image feature extraction

In the design of badminton players’ motion recognition, the optical tracking sensor uses a high-resolution camera to capture the badminton match scene in real time, and analyzes it through image processing and computer vision technology. Firstly, the key image features of athletes, such as position and posture, are extracted from the image sequence by the method of image feature extraction. Then machine learning algorithm is used to classify and identify these features, so as to achieve accurate tracking and recognition of badminton players' sports behavior. Optical tracking sensors require high resolution and fast acquisition capabilities to ensure subtle movements and changes can be captured. The sensor needs to have good robustness and stability, and can adapt to different lighting conditions and scenes to ensure the accuracy and effect of tracking and recognition. Sensors also need to have adaptive algorithms that can flexibly respond to different athletes and sports scenarios.

When using neural networks for image recognition, the first step is to construct a training sample library to improve the accuracy of image classification and recognition; the second is to enhance the sensitivity of the neural network to image samples. On this basis, the learning rule of D. O. Hebb was selected to train the network on the established training samples. The representation of a training sample under this rule is shown in formula (1):

A three-dimensional motion vision image is obtained through Eq. (1). An S-type (sigmoid) function is selected as the neuron activation function during image recognition, and the training samples are output. The neural network is then trained with the back-propagation algorithm to improve its recognition accuracy. The output layer of the neural network is denoted Q; for a given input, the expected output value is \(b_q\) and the actual output value is \(O_q\). The mean square error \(E_Q\) is shown in formula (2):
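As a hedged illustration of the quantities named above, the sketch below computes a sigmoid activation and a squared-error loss between expected outputs \(b_q\) and actual outputs \(O_q\). The factor 1/2 and the sum over output units are an assumed, conventional form, not necessarily the one used in formula (2); the function names are hypothetical.

```python
import math


def sigmoid(z):
    """S-type (logistic) activation, mapping any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def squared_error(expected, actual):
    """Assumed loss form: E = 1/2 * sum over output units of (b_q - O_q)^2."""
    return 0.5 * sum((b - o) ** 2 for b, o in zip(expected, actual))


# Two output units with hypothetical pre-activation values.
outputs = [sigmoid(z) for z in (0.0, 2.0)]
error = squared_error([0.0, 1.0], outputs)
```

Back-propagation would then adjust the weights along the negative gradient of this error; that step is omitted here.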

The framework structure of three-dimensional visual images is shown in formula (3):

The expression of the target image in the video structure using formula (3) is shown in formula (4):

Define the phase consistency (PC) function in terms of the local Fourier components at each location, as shown in formula (5):

The value of the phase consistency measure lies between 0 and 1, where 1 indicates edge or straight-line features and 0 indicates no structure. This method is conceptually clear, but it suffers from low positioning accuracy and susceptibility to noise. The phase consistency algorithm has therefore been improved using Log-Gabor wavelets to extract local phase effectively. Let \({\text{M}}_{{{\text{n}},{\text{o}}}}^{{\text{e}}}\) denote the even-symmetric wavelet at scale n and orientation o, and \({\text{M}}_{{{\text{n}},{\text{o}}}}^{{\text{o}}}\) the odd-symmetric wavelet at the same scale and orientation.

The new phase stability measures are defined as follows in Eq. (7):

Assuming the correct number of recognition samples is R, the total number of test samples is T, and the accuracy of image classification recognition is p, the expression is shown in formula (8):
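The definitions of R, T, and p above imply the ratio p = R / T. A minimal sketch of that calculation follows; the function name is hypothetical and the sample counts are illustrative only.

```python
def recognition_accuracy(correct, total):
    """Accuracy p = R / T, where R is the number of correctly
    recognized samples and T is the total number of test samples."""
    if total <= 0:
        raise ValueError("total number of test samples must be positive")
    return correct / total


# Illustrative numbers, not results from the paper.
p = recognition_accuracy(45, 50)
```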

As can be seen from Fig. 1, the number of feature points of the improved A algorithm in this paper has improved by 195% and 93% respectively compared with the fast image registration algorithm of the improved B algorithm and the information entropy weighted HOG feature extraction algorithm. The reason is that this method maintains the characteristic of A method being able to generate high-quality multiple feature points, and improves B method using Harris method. Principal component analysis method is used to reduce the dimensionality of feature points, thereby obtaining more feature points.

According to Fig. 2, matches are evaluated by mapping the position coordinates of two feature points A and B into the same coordinate system. If the coordinates of the two points coincide, or the distance between them is less than a threshold, the pair is considered a successful match. The correct matching rate is the number of correctly matched feature points divided by the total number of matched feature points. The higher the matching rate of an algorithm, the fewer erroneous feature points it produces. Compared with the fast image registration method and the information entropy weighted HOG feature extraction method, the overall correct matching rate of the improved algorithm is higher by 4.8% and 3.1%, respectively. The improved algorithm therefore has the highest matching rate, which means it has the fewest erroneous points.
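The distance-threshold matching criterion can be sketched as follows. This is a minimal illustration under the assumption that the two point lists are already paired and expressed in the same coordinate system; the function name, the Euclidean distance, and the threshold value are assumptions, not details from the paper.

```python
import math


def correct_match_rate(points_a, points_b, threshold):
    """Fraction of paired feature points whose Euclidean distance
    is below the threshold (counted as successful matches)."""
    pairs = list(zip(points_a, points_b))
    if not pairs:
        raise ValueError("no feature point pairs to evaluate")
    correct = sum(1 for p, q in pairs if math.dist(p, q) < threshold)
    return correct / len(pairs)


# Three hypothetical pairs; the second pair is 10 pixels apart and fails.
rate = correct_match_rate(
    [(0, 0), (10, 10), (5, 5)],
    [(0, 1), (10, 20), (5, 5)],
    threshold=2.0,
)
```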

3.2 Implementation of artificial intelligence classification

The captured image is usually a color image, and the processing process takes a long time. Then, the color image is preprocessed according to the equation of encoding the color into a gray image, which converts the color image into a gray image as shown in formula (9):
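A common way to perform this conversion is a weighted sum of the red, green, and blue channels. The sketch below uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) as an assumption; the weights in formula (9) may differ. The helper names are hypothetical.

```python
def rgb_to_gray(r, g, b):
    """Weighted sum of the color channels using BT.601 luma weights
    (an assumed choice of coefficients)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def image_to_gray(image):
    """Convert an image given as rows of (r, g, b) tuples to rows of gray values."""
    return [[rgb_to_gray(*pixel) for pixel in row] for row in image]


# A tiny 1x2 image: one pure red pixel and one white pixel.
gray = image_to_gray([[(255, 0, 0), (255, 255, 255)]])
```

Working on a single gray channel instead of three color channels reduces the data volume for the later feature extraction steps.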

SVM is a binary classification model. Its basic idea is to find a separating hyperplane that divides the training samples into positive and negative classes; for linearly separable problems, this hyperplane separates the two classes of training samples. The classification problem is thus to determine the optimal separating hyperplane. The two classes lie on either side of the hyperplane defined by \({\text{g}}\left( {\text{x}} \right)\, = \,{\text{w}}^{{\text{T}}} {\text{x}}\, + \,{\text{b}}\), and the hyperplane should maximize the distance (margin) between the two classes (see Fig. 3).
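The linear decision rule can be illustrated with a short sketch. It assumes the weight vector w and bias b have already been obtained by training, and it uses the standard result that the margin between the two classes is 2 / ||w||; the numeric values are hypothetical.

```python
import math


def decision(w, x, b):
    """Linear decision function g(x) = w^T x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, x, b):
    """Assign +1 or -1 according to the side of the hyperplane g(x) = 0."""
    return 1 if decision(w, x, b) >= 0 else -1


def margin_width(w):
    """Distance between the two margin hyperplanes g(x) = +1 and g(x) = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


# Hypothetical trained parameters.
w, b = [3.0, 4.0], -5.0
label_far = classify(w, [3.0, 4.0], b)   # g = 20, positive side
label_origin = classify(w, [0.0, 0.0], b)  # g = -5, negative side
```

Maximizing `margin_width(w)` is equivalent to minimizing the norm of w, which is why SVM training is usually posed as a norm-minimization problem under classification constraints.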

The optimal classification hyperplane divides the data into positive and negative classes, as shown in formula (10):

Adding the correlation between a pixel and its neighboring pixels to the LBP encoding characterizes the image better and mitigates the feature extraction difficulties caused by changes in lighting intensity. The existing LBP algorithm cannot effectively characterize the texture of remote sensing images, because it tends to group heterogeneous patterns in the image into a single type. Moreover, if the neighborhood of heterogeneous patterns is too large, a large amount of texture information is lost, and heterogeneous patterns are easily affected by noise. A new algorithm based on a phase metric is proposed to address these shortcomings. Denoting the phase metric by \({\text{D}}_{{{\text{ROR}}}}^{{{\text{min}}}}\), the expression for \({\text{D}}_{{{\text{ROR}}}}^{{{\text{min}}}}\) is shown in (11):
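For reference, the baseline LBP encoding that the phase metric is meant to improve on can be sketched as follows. This is the basic 3x3 operator only, not the proposed variant; the neighbor ordering and the `>=` comparison are conventional choices assumed here.

```python
def lbp_code(patch):
    """Basic 8-neighbor LBP code for the center of a 3x3 gray patch.
    Each neighbor contributes one bit: 1 if it is >= the center value."""
    center = patch[1][1]
    # Neighbors visited clockwise, starting at the top-left corner.
    neighbors = [
        patch[0][0], patch[0][1], patch[0][2],
        patch[1][2], patch[2][2], patch[2][1],
        patch[2][0], patch[1][0],
    ]
    code = 0
    for bit, value in enumerate(neighbors):
        if value >= center:
            code |= 1 << bit
    return code


patch = [[9, 0, 0], [0, 5, 0], [0, 0, 0]]
brighter = [[v + 50 for v in row] for row in patch]  # uniform lighting change
```

Because the code depends only on comparisons with the center pixel, adding a constant brightness offset leaves it unchanged, which is the source of the robustness to lighting intensity mentioned above.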

For dual optimization, the optimal classification plane function for SVM is:

The feature expression for the P-dimension of remote sensing images is shown in (13):

Explanatory classification research was conducted using artificial intelligence technology, which combines the constantly changing positive features of complex data. In the HDCM model driven complex data classification process, the density of complex data is lower and the conversion efficiency is higher. In the intelligent extraction comprehensive environment, automated learning technology is used for complex data classification. Use target indication to promote the classification of complex datasets, and calculate the data classification recall rate based on the interpretation of classification requirements and data partitioning. The formula is shown in (14):
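Assuming the usual definition of recall as the number of true positives divided by the sum of true positives and false negatives, the calculation can be sketched as below. The function name and label encoding are hypothetical, and formula (14) may use a different but equivalent notation.

```python
def recall(true_labels, predicted_labels, positive=1):
    """Recall = TP / (TP + FN) for the chosen positive class."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("no positive samples in true_labels")
    return tp / (tp + fn)


# Three positive samples, of which two are recovered by the classifier.
r = recall([1, 1, 1, 0, 0], [1, 0, 1, 1, 0])
```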

For nonlinearly separable data, a nonlinear kernel function maps the samples into a high-dimensional space in which they can be separated linearly. The kernel effectively avoids explicit computation in the high-dimensional space. The optimal classification plane for a nonlinear SVM can be described as shown in Eq. (15):
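A kernel decision function has the general form f(x) = sum_i alpha_i y_i K(x_i, x) + b, where the sum runs over the support vectors. The sketch below uses an RBF kernel as an assumed example; the paper does not state which kernel was used, and the support vectors, multipliers, and gamma value are hypothetical.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def kernel_decision(x, support_vectors, labels, alphas, b, kernel=rbf_kernel):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b; the sign gives the class."""
    return sum(
        a * y * kernel(sv, x)
        for sv, y, a in zip(support_vectors, labels, alphas)
    ) + b


# Two hypothetical support vectors, one per class.
svs, ys, alphas = [[0.0, 0.0], [2.0, 2.0]], [1, -1], [1.0, 1.0]
f_near_positive = kernel_decision([0.0, 0.0], svs, ys, alphas, b=0.0)
f_near_negative = kernel_decision([2.0, 2.0], svs, ys, alphas, b=0.0)
```

Only kernel values between pairs of samples are evaluated; the high-dimensional feature vectors themselves are never constructed.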

Calculate the covariance matrix of the sample as shown in Eq. (16):
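The sample covariance matrix used in PCA can be computed as in the following sketch. It assumes the unbiased estimator with divisor n - 1; Eq. (16) may instead divide by n. The function name and the sample data are hypothetical.

```python
def covariance_matrix(samples):
    """Sample covariance matrix of rows in `samples` (one row per sample),
    using the unbiased divisor n - 1 (an assumed convention)."""
    n, d = len(samples), len(samples[0])
    if n < 2:
        raise ValueError("at least two samples are required")
    means = [sum(row[j] for row in samples) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in samples]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


# Three 2-D samples lying on the line y = 2x.
cov = covariance_matrix([[1.0, 2.0], [3.0, 6.0], [5.0, 10.0]])
```

PCA then keeps the eigenvectors of this matrix with the largest eigenvalues as the reduced feature basis.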

From the calculation in (16) above, the final test results are obtained and analyzed for the data classification scenario, as shown in Table 1.

4 Badminton Athlete Action Recognition System

4.1 Badminton player action collection and recognition

At present, the biggest problem faced by human motion recognition algorithms is that they are dominated by sliding-window methods, which perform well for periodic movements such as running and walking. For non-periodic movements such as those in badminton and basketball, however, they cannot locate specific action intervals, such as a badminton serve, and perform poorly. The main problems are as follows: (1) because the actions are not repetitive, the sliding window contains noise signals from outside the target action, which degrades the classifier's recognition performance; (2) previous recognition algorithms mostly relied on conventional statistical features, neglecting the variation of each signal dimension and the correlation between dimensions, which seriously affects recognition.

To address these issues, this work studies an image feature extraction method based on candidate interval generation. The flowchart is shown in Fig. 4. The method consists of a feature extraction network, a candidate interval generation network, and an interval recognition network. The feature extraction network extracts high-level semantic features from the inertial sensor input while preserving time-series information. Preserving temporal information allows the features to be aligned with preset boxes, so that confidence and offset parameters can be generated for each preset box and useful features are available for classifying the boxes later. To preserve temporal information, the extracted features must be translation-equivariant in time, meaning that a shift of the input produces a corresponding shift of the features. A convolutional neural network is used for this purpose: its large temporal receptive field and fine multidimensional features allow it to extract more information from the changes in each sensing signal over time and from the relationships between multidimensional sensing signals. The candidate interval generation network takes the high-level semantic information from the feature extraction network and calculates confidence and offset values for each preset box. A threshold on the confidence of the preset boxes then filters out the boxes most likely to contain the target behavior, and the offset parameters fine-tune those boxes to obtain high-quality candidate intervals.
Compared with the sliding window method, the candidate interval generation network spares the subsequent interval recognition network from classifying every preset box (a sliding window can be seen as a preset box): only the generated candidate intervals need to be classified, which avoids unnecessary overhead. The interval recognition network classifies all candidate intervals produced by the candidate interval generation network and outputs the final detection and recognition results. To avoid running the convolutional neural network repeatedly on every candidate interval, this paper proposes an interval recognition network that shares the extracted features. The feature extraction network first extracts features from the whole inertial sensing signal; the time correspondence between features and signal is then used to look up the features associated with each candidate interval, and these features are used for recognition. This approach substantially reduces computation and forward inference time. At present, most inertial sensing data for periodic activity recognition come from multiple inertial sensors worn at different positions on the body. Since most human actions involve coordinated movement of several body parts, collecting data from each part yields more complete features and captures the interaction between parts, which supports accurate recognition. In practice, however, the number of signal sources required makes such devices less portable and hard to popularize. This work therefore uses a single inertial sensor on the wrist, which makes recognition harder for the algorithm but is more user-friendly.
In selecting non-periodic activities, we focus on how common the activity is. A widely practiced activity not only facilitates data collection but also serves a wider range of users. Badminton was chosen because it is very popular. In this dataset, the serve and the swing are the target actions, as both are common and necessary in matches. The dataset was collected with a smartwatch sensor sampling at 50 Hz. Each participant wore the watch and played badminton for an average of 5 min. During play, the volunteers' movements were recorded by cameras, and the inertial sensing data were then used to automatically detect and mark the time interval of each serve and swing. The signal acquisition equipment and the collected signals and videos are shown in Fig. 5.
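The candidate interval generation step described in this subsection (thresholding preset boxes by confidence, then refining the survivors with offset parameters) can be sketched as follows. The additive center and length offsets, the 0.5 threshold, and all names are assumptions made for illustration; the paper does not specify the parameterization.

```python
def generate_candidates(preset_boxes, confidences, offsets, threshold=0.5):
    """Keep preset boxes whose confidence reaches the threshold and
    refine them with (center shift, length change) offsets.
    Returns (start, end, confidence) tuples sorted by confidence."""
    candidates = []
    for (start, end), conf, (d_center, d_length) in zip(
        preset_boxes, confidences, offsets
    ):
        if conf < threshold:
            continue  # box unlikely to contain a target action
        center = (start + end) / 2 + d_center
        length = (end - start) + d_length
        candidates.append((center - length / 2, center + length / 2, conf))
    candidates.sort(key=lambda c: c[2], reverse=True)
    return candidates


# Three preset boxes in sample indices, with hypothetical network outputs.
result = generate_candidates(
    preset_boxes=[(0, 100), (100, 200), (200, 300)],
    confidences=[0.9, 0.2, 0.6],
    offsets=[(10, -20), (0, 0), (-5, 10)],
)
```

Only the surviving intervals are passed to the interval recognition network, which is where the saving over classifying every sliding window comes from.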

4.2 Analysis of Action Recognition Results for Badminton Players

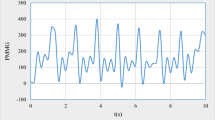

Each sensor recording spans a certain amount of time and contains many hitting actions. It is therefore necessary to segment each hitting action from the recording for later classification and recognition. A sliding-window segmentation method is proposed for this purpose, which uses a fixed-length window to segment the data and recognize racket strokes. The data are sampled at 200 Hz and a badminton hitting motion usually lasts no more than 0.5 s, so the window width is set to 100 samples, and the acceleration within each window is used to extract the hitting motion. As shown in Fig. 6, the horizontal axis represents time and the vertical axis represents the acceleration signal.
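A minimal sketch of this fixed-window segmentation is given below, using the 200 Hz sampling rate and 100-sample window stated above (0.5 s per window). The non-overlapping stride, the use of peak acceleration magnitude, and the threshold value are assumptions for illustration.

```python
import math

SAMPLE_RATE_HZ = 200
WINDOW = 100  # 100 samples at 200 Hz cover 0.5 s


def magnitude(sample):
    """Magnitude of a three-axis acceleration sample (ax, ay, az)."""
    return math.sqrt(sum(v * v for v in sample))


def detect_hit_windows(samples, threshold, window=WINDOW):
    """Return start indices of non-overlapping windows whose peak
    acceleration magnitude exceeds the threshold."""
    hits = []
    for start in range(0, len(samples) - window + 1, window):
        peak = max(magnitude(s) for s in samples[start:start + window])
        if peak > threshold:
            hits.append(start)
    return hits


# 1.5 s of quiet signal with one hypothetical stroke at sample 150.
samples = [(0.0, 0.0, 1.0)] * 300
samples[150] = (3.0, 4.0, 0.0)
hits = detect_hit_windows(samples, threshold=3.0)
```

Each returned index marks a 0.5 s segment that would be passed to the classifier.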

The badminton movement recognition model can not only recognize multiple movements but also analyze the same hitting movement performed by different athletes, making it possible to compare the technical differences between athletes at different levels. As shown in Fig. 7, professional players have the shortest hitting time, followed by amateur players, and beginners have the longest. Professional players can therefore hit the ball at the fastest speed.

4.3 Design and Implementation of a Badminton Athlete Action Recognition System

The entire software system is divided into registration and login modules, BLE data collection modules, information management modules, exercise training modules, exercise analysis modules, and social modules. The overall framework diagram is shown in Fig. 8, where each large module can be divided into several smaller modules, ultimately forming a complete badminton player action recognition system. In order to facilitate user use and program writing, in addition to the overall module part of the software, it is also necessary to design the software architecture and user interface.

To let users query their own sports data, sports data sharing has been implemented. The system also provides a login function that allows users to log in to their own account and store their data in the cloud. The login system implemented in this paper supports logging in and out and also supports login through third-party applications such as WeChat and QQ. Figure 9 shows the user login process. When a user opens the software, the system first determines whether the user is already logged in by checking whether a local record of the user's data exists. If the user is logged in, the software jumps directly to the main page. Otherwise, the user must log in with an account and password or through a third-party account, and the login function shown in the figure caches the user's cloud data in the local database. After a successful login, the user can carry out further activities such as saving sports data, modifying personal information, and publishing status updates. Clearing the local login account logs the user out.

The sports training module is the main function of the software. The badminton training system designed in this article identifies the swing techniques of badminton players in real time and computes statistics on a player's activity over a period of time, including the number of swings, energy consumption, maximum swing speed, and exercise time. The functions and implementation principles of the training system are detailed below. (1) Real-time identification of hitting movements. The data collector described above is installed on the racket to identify the player's six racket movements in real time. The implementation principle is as follows: the Android client collects raw motion sensor data in real time, and a sliding window captures the incoming data. The system then judges whether a swing is present in the sliding window. If so, the swing recognition algorithm discussed in this article first identifies the athlete's grip and then identifies the swing type under that grip. Because the recognition algorithm is much more time-consuming than an interface refresh, it runs in a separate worker thread to keep the UI thread smooth, and the recognition results are returned to the UI thread for interface updates. The real-time recognition interface covers the six swing techniques introduced above; each swing is recognized in real time and announced by sound. (2) Statistics on the badminton techniques used in each session.
To reflect the athlete's movement over a period of time more intuitively, the software implements a motion statistics function. It records the number of swings, time, calories, maximum swing speed, exercise goals, and completion status over a period of time. Daily action data are also uploaded to both the cloud and local databases, allowing users to browse their action history easily. Each user has a sports data table. While users exercise, the program regularly stores action data in the cloud database, and the user's exercise records can be retrieved through database queries.
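The threading arrangement described for real-time recognition (a worker thread runs the slow recognizer and hands results back to the interface thread) can be sketched with two queues. The paper's client is an Android application; this Python version only illustrates the pattern, and the `recognize` callable and function names are hypothetical stand-ins for the swing recognition algorithm.

```python
import queue
import threading


def recognition_worker(windows, results, recognize):
    """Consume sensor windows, run the slow recognizer, and publish results.
    A None item signals that no more windows will arrive."""
    while True:
        window = windows.get()
        if window is None:
            break
        results.put(recognize(window))


def run_pipeline(window_list, recognize):
    """Feed windows to a background worker and collect results on the caller
    side, which plays the role of the UI thread here."""
    windows, results = queue.Queue(), queue.Queue()
    worker = threading.Thread(
        target=recognition_worker, args=(windows, results, recognize), daemon=True
    )
    worker.start()
    for window in window_list:
        windows.put(window)
    windows.put(None)  # stop signal
    worker.join()
    collected = []
    while not results.empty():
        collected.append(results.get())
    return collected


# Stand-in recognizer: sums the window instead of classifying a swing.
labels = run_pipeline([[1, 2], [3]], recognize=sum)
```

In an interactive application the caller would poll the results queue between interface refreshes rather than waiting for the worker to finish.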

The motion analysis module mainly includes the following functions: (1) motion target setting. Users can set their own sports goals according to their own sports needs. After setting, the system will save the goals set by the user. (2) Comprehensive evaluation. The system will conduct a comprehensive evaluation of the user's various data, and through the comprehensive evaluation results, the system will automatically generate a comprehensive evaluation report. (3) Ball style analysis. By analyzing a large amount of data on athletes with different sports styles, it is possible to understand the physical characteristics and game performance of athletes with different sports styles, thereby helping coaches make corresponding training strategies. The design principle for configuring and completing sports goals: Save the user set sports goals in a local database, and then upload these data to the cloud. The system then calculates and analyzes different data based on different sports athletes. (4) Show completion of goals. When displaying the completion of the goal, the system will compare the user's set motion goal with the current motion situation, and calculate the completion percentage based on the comparison results.
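The goal completion display in item (4) reduces to comparing the current value with the stored goal. A minimal sketch follows; capping the result at 100% is an assumption, and the function name and numbers are hypothetical.

```python
def completion_percentage(current, goal):
    """Percentage of the user's goal reached so far, capped at 100."""
    if goal <= 0:
        raise ValueError("goal must be positive")
    return min(100.0, 100.0 * current / goal)


# Hypothetical goal of 200 swings with 150 completed.
progress = completion_percentage(150, 200)
```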

5 Conclusion

In today’s sports training and competition, skill evaluation and training require accurate and timely data support. Traditional evaluation methods rely on manual observation and subjective judgment and are therefore prone to subjectivity and error. It is thus particularly important to develop a system that can accurately and automatically identify and track the movements of athletes. To meet the needs of badminton players for motion recognition and skill evaluation, an optical tracking sensor is selected as the data acquisition tool, and image feature extraction and classification are applied to continuous image sequences to recognize badminton players’ movements accurately. This can provide objective data support for coaches and can also be used for real-time monitoring and teaching assistance to improve players' technical level. The experiment verifies that the system extracts badminton movement features from continuous image sequences with the image feature extraction algorithm, captures movement trajectory data accurately, and recognizes different movements accurately. The system has high recognition accuracy and stable performance under different lighting and environmental conditions. It is real-time and practical, which makes it suitable for real-time monitoring and teaching assistance. The optical tracking sensor based on image feature extraction therefore provides an effective tool and method for the technical evaluation, training, and teaching of badminton players, and has broad application prospects. Future research will further optimize the performance of the system and expand its application to other fields.

Data Availability

The data will be available upon request.

Change history

18 September 2024

This article has been retracted. Please see the Retraction Notice for more detail: https://doi.org/10.1007/s11082-024-07507-z

References

Callow, N., Hardy, L., Hall, C.: The effects of a motivational general-mastery imagery intervention on the sport confidence of high-level badminton players. Res. Q. Exerc. Sport 72(4), 389–400 (2001)

Cheng, G., Han, J.: A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 117, 11–28 (2016)

Chityala, R.N., Hoffmann, K.R., Bednarek, D.R., Rudin, S.: Region of interest (ROI) computed tomography. In: Medical Imaging 2004: Physics of Medical Imaging, vol. 5368, pp. 534–541. SPIE (2004)

Esteva, A., Chou, K., Yeung, S., Naik, N., Madani, A., Mottaghi, A., Liu, Y., Topol, E., Dean, J., Socher, R.: Deep learning-enabled medical computer vision. NPJ Digital Med. 4(1), 5–12 (2021)

Eswaraiah, R., Sreenivasa Reddy, E.: Robust medical image watermarking technique for accurate detection of tampers inside region of interest and recovering original region of interest. IET Image Proc. 9(8), 615–625 (2015)

Gao, R.Y.: A comparison between talent identification and development (TID) for badminton in China and the UK (2017)

Kato, S., Nakagawa, T., Ohkawa, M., Muramoto, K., Oyama, O., Watanabe, A., Nakashima, H., Nemoto, T., Sugitani, K.: A computer image processing system for quantification of zebrafish behavior. J. Neurosc. Methods 134(1), 1–7 (2004)

Kong, Y., Fu, Y.: Human action recognition and prediction: a survey. Int. J. Comput. Vision 130(5), 1366–1401 (2022)

Li, K., Wan, G., Cheng, G., Meng, L., Han, J.: Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 159, 296–307 (2020)

Manrique, D.C., Gonzalez-Badillo, J.J.: Analysis of the characteristics of competitive badminton. Br. J. Sports Med. 37(1), 62–66 (2003)

Phomsoupha, M., Laffaye, G.: The science of badminton: game characteristics, anthropometry, physiology, visual fitness and biomechanics. Sports Med. 45, 473–495 (2015)

Voulodimos, A., Doulamis, N., Doulamis, A., Protopapadakis, E.: Deep learning for computer vision: a brief review. Comput. Intell. Neurosc. 7, 163–167 (2018)

Wang, N., Gao, X., Tao, D., Yang, H., Li, X.: Facial feature point detection: a comprehensive survey. Neurocomputing 275, 50–65 (2018)

Wang, J., Yu, L.C., Lai, K.R., Zhang, X.: Dimensional sentiment analysis using a regional CNN-LSTM model. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pp. 225–230 (2016)

Xue, H., Huynh, D.Q., Reynolds, M.: SS-LSTM: a hierarchical LSTM model for pedestrian trajectory prediction. In: 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 1186–1194. IEEE (2018)

Zhang, H.B., Zhang, Y.X., Zhong, B., Lei, Q., Yang, L., Du, J.X., Chen, D.S.: A comprehensive survey of vision-based human action recognition methods. Sensors 19(5), 1005 (2019)

Zheng, J.: Research Progress and Trend of Functional Physical Training for Young Badminton Players. Southwest Petroleum University, China (2019)

Funding

This work was supported by the project "Research on Collaborative Sharing Mode of Innovative Technology Platform Based on the Integration of Industry, University, and Research: Taking the Project of Sichuan Electronic New Technology and New Materials Application Research Institute as an Example" (No. GZJG2022-047).

Author information

Contributions

Yongqiu Pu prepared the first version of the manuscript. Xing Gao and Weicen Lv performed the simulations. All authors contributed to the paper's analysis, discussion, writing, and revision.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Pu, Y., Gao, X. & Lv, W. RETRACTED ARTICLE: Design of optical tracking sensor based on image feature extraction for badminton athlete motion recognition. Opt Quant Electron 56, 608 (2024). https://doi.org/10.1007/s11082-024-06322-w

DOI: https://doi.org/10.1007/s11082-024-06322-w