Abstract

This note is concerned with inverse wave scattering in one- and two-dimensional domains. It seeks to recover an unknown function based on measurements collected at the boundary of the domain. For the one-dimensional problem, only one point of the domain is assumed to be accessible. For the two-dimensional domain, the outer boundary is assumed to be accessible. The note develops two iterative algorithms, in which an assumed initial guess for the unknown function is updated. The first method uses a set of sampling functions to formulate a moment problem for the correction to the assumed value. This method is applied to both one-dimensional and two-dimensional domains. For the two-dimensional Helmholtz equation, it relies on a new, effective filtering technique, which is another contribution of the present work. The second method uses a direct formulation to recover the correction term. This method is only developed for the one-dimensional case. For all cases presented here, the correction to the assumed value is obtained by solving an over-determined linear system through least-squares minimization. Tikhonov regularization is also used to stabilize the least-squares solution. A number of numerical examples are used to show the applicability of the methods and their robustness to noise.

1 Introduction

In this note, we introduce two new methods for inverse wave scattering in one- and two-dimensional domains. This problem appears naturally in various applications including acoustics (Colton et al. 2000), quantum mechanics (Lagaris and Evangelakis 2011; Sacks and Jaemin 2009), nondestructive testing of materials (Jamil et al. 2013), magnetic resonance imaging (Fessler 2010), and optics (Belai et al. 2008).

It is well known that this class of problems is highly ill-posed (Colton and Kress 1991), and various methods have been developed to overcome this difficulty. These methods include singular value decomposition (Capozzoli et al. 2017), direct sampling methods (Kang et al. 2020), the inexact Newton method (Desmal and Bağci 2015), the multiple forward method (Tadi et al. 2011), the meshless method (Jin and Zheng 2006), the level set method (Irishina et al. 2008), dual reciprocity (Marin et al. 2006), generalized inverse (Zhang et al. 2017), proper solution space (Hamad and Tadi 2019), and iterative methods which treat the integral formulation of the scattering problem (Barcelo et al. 2016; Novikov 2015). Although this problem has been approached by a number of researchers (Mueller and Siltanen 2012, and references therein), research on computational algorithms is still underway due to the complexity of the problem (Klibanov and Romanov 2015).

For this particular problem in one dimension, recent results include Bugarija et al. (2020), where the unknown function is assumed to be piecewise constant, Thanh and Klibanov (2020), where a globally convergent numerical method is presented, and Klibanov et al. (2018), where a one-dimensional model is used for land-mine detection. The purpose of this paper is to develop two numerical methods. One method is based on using a set of sampling functions to formulate a moment problem. We previously developed this method to solve a Cauchy problem that appears in the stable operation of a Tokamak (Tadi 2019). In this paper, we develop this method for one-dimensional and two-dimensional domains. For the one-dimensional domain, only the boundary of the domain is accessible for collecting measurements. For the two-dimensional domain, the external boundary of the domain is used to collect the data. When applying the sampling function method to a 2-D domain, the effect of noise in the data becomes crucial. In this paper, we also develop a new tailored filtering technique that is very effective in eliminating the noise in the data. This filtering technique has its roots in an inversion method based on proper solution space (Tadi 2017).

The second method uses a perturbation technique (see, e.g., Eshkuvatov 2018) to relate the unknown function directly to the measurements provided for the inversion. Using this method, it is also possible to include the effect of the nonlinear term that appears in the equation for the error field.

In Sect. 2, we present the method based on the use of sampling functions. In Sect. 3, we present the direct formulation. In Sect. 4, we present the sampling method for the 2-D Helmholtz equation. In Sect. 5, we present the new tailored filtering technique, and in Sect. 6, we choose a specific set of sampling functions and develop the direct method for the 2-D Helmholtz equation. In Sect. 7, we use a number of numerical results to study the applicability of the present methods.

Notation For the one dimensional problems, Sects. 2 and 3, the domain is a straight line \(\varOmega =[0,1]\) where the outer point at \(x=0\) is accessible. In order to impose non-reflecting boundary condition it is extended to \(\varOmega =[-1,2]\). For Sects. 4, 5, and 6, \(\varOmega ({\mathbf{x}})\) is a closed bounded 2-dimensional domain. Subscript \(()_x\) denotes differentiation with respect to x (in 1-D case). Subscript \(()_n\) denotes the outward normal derivative for 2-D domains. In Sects. 2 and 3, the field is a complex quantity and \({\mathfrak {R}}()\) and \({\mathfrak {I}}()\) denote the real and imaginary part of a complex quantity. We use the notation \(()|_{x=}\) to denote the value of the quantity at x. We use bold letters to denote vectors and matrices.

2 A moment problem

Consider the wave propagation in a one dimensional domain \(x\in \varOmega =[-1,2]\) given by

where, u(x) is the electric field, k is the wave number and the unknown function, p(x), represents the material property. The function p(x) is unknown for \(x\in [0,1]\), and is assumed to be equal to a nominal value (here, \(p(x)=1\)) for \(x\in [-1,0]\cup [1,2]\). The domain is excited by the source \(\delta (x-x_0)\) with \(x_0\in [-1,0)\). Non-reflecting boundary conditions are imposed at the boundaries, i.e.

where \(i=\sqrt{-1}\), and \(u(0)=g\) is the collected measurement. The inverse problem of interest is the evaluation of the unknown function p(x) for \(x\in [0,1]\) based on the measurement collected at \(x=0\) which is the accessible point of the domain.

One can start with an initial guess \(\hat{p}(x)\) and obtain a background field given by

The error field, \(e(x)=u(x)-{\hat{u}}(x)\), is then given by

The actual unknown is related to the assumed value according to \(p(x)={\hat{p}}(x)+q(x)\), and the above equation leads to

The error field is required to satisfy an additional condition given by

The under braced term in Eq. (5) is the nonlinear term involving the two unknowns, i.e., q(x) and e(x). This term is the product of two correction terms. In fact, \(q=0\) for \(x\in [-1,0]\cup [1,2]\), and is only nonzero (and unknown) for \(x\in [0,1]\). We first develop the formulation without this term. We can later on show that the contribution from this term can be included in the form of a series.

Consider a sampling function that is the solution of the differential equation given by

Multiplying Eq. (5) by \(\psi (x)\) and integrating the product over the region \(x\in [0,2]\) leads to

Integrating by parts twice and using Eqs. (7) and (5) leads to

The quantities on the right hand side, denoted by \(\gamma \) for simplicity, are known. If the measurements are collected for a range of frequencies, i.e., \(k_1,k_2,\ldots ,k_\ell \), then we can write the above equation for each measurement according to

The above equation is the classical moment problem for the unknown function q(x) (Ang et al. 2002). It is easy to note that the functions \(\psi _\ell \) form a linearly independent set.

Remark 1 For i and j, with \(i\ne j\), the functions \(\psi _i(x)\) and \(\psi _j(x)\) are linearly independent for \(x\in [0:2]\). To see that these functions are linearly independent one can consider two sampling functions \(\psi _i(x)\) and \(\psi _j(x)\) that satisfy Eq. (7). Multiplying the equation for \(\psi _i(x)\) by \(\psi _j(x)\), and the equation for \(\psi _j(x)\) by \(\psi _i(x)\), integrating by parts and subtracting them leads to

for \(\psi _i(0)=\psi _j(0)=0\). If \(\psi _i(x)=\kappa \psi _j(x)\) for a constant \(\kappa \), then \(k_i=k_j\) which is a contradiction.

The unknown function q(x) is only nonzero within \(x\in [0,1]\). Assuming an expansion of the form \(q(x)=\sum _{j=1}^Nc_j(x)\tau _j\)

placing the unknown values \(\tau _j\) in a vector \(\varvec{\tau }\), and the terms on the right hand side in a vector \(\varvec{\gamma }\) leads to a matrix equation given by

A least-squares solution of the above linear system can be obtained after introducing Tikhonov regularization according to

where, the parameter \(\beta \) is the weight for the Tikhonov regularization, and \(\varvec{\varGamma }\) is the matrix representing the first derivative operator. Once the above linear system is solved for \(\varvec{\tau }\), then the assumed value can be updated according to \({\hat{p}}(x) = {\hat{p}}(x)+q(x)\). We next formulate a different algorithm that can lead to a similar linear system for the correction term.
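The regularized least-squares step described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `tikhonov_least_squares` is hypothetical, \(\varvec{\varGamma }\) is taken to be a forward first-difference matrix acting on the vector of unknowns, and the complex system is stacked into real and imaginary parts so that the unknowns \(\varvec{\tau }\) stay real. The matrix and data in the usage lines are random stand-ins, not the moment matrix of this section.

```python
import numpy as np

def tikhonov_least_squares(A, gamma, beta):
    """Minimize ||A tau - gamma||^2 + beta ||Gamma tau||^2 for a real vector tau."""
    n = A.shape[1]
    # Assumed form of Gamma: forward first differences of the unknown vector.
    Gamma = (np.eye(n, k=1) - np.eye(n))[:-1]
    # Stack real and imaginary parts so that the unknowns stay real.
    A_stacked = np.vstack([A.real, A.imag])
    g_stacked = np.concatenate([gamma.real, gamma.imag])
    # Normal equations: (Re(A)^T Re(A) + Im(A)^T Im(A) + beta Gamma^T Gamma) tau = rhs
    normal_matrix = A_stacked.T @ A_stacked + beta * (Gamma.T @ Gamma)
    return np.linalg.solve(normal_matrix, A_stacked.T @ g_stacked)

# Usage with synthetic stand-in data (not the moment matrix of the paper).
rng = np.random.default_rng(0)
A = rng.standard_normal((30, 20)) + 1j * rng.standard_normal((30, 20))
tau_true = np.sin(np.linspace(0.0, np.pi, 20))
gamma = A @ tau_true
tau_rec = tikhonov_least_squares(A, gamma, beta=1e-10)
```

For a well-conditioned stand-in matrix a very small \(\beta \) suffices; for the rank-deficient matrices discussed in this note, \(\beta \) has to be chosen large enough to stabilize the solve.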

3 A direct formulation

Consider the error equation given in Eq. (5)

Instead of the above equation, an approximate solution can be obtained by considering a homotopy-perturbation approximation given by

where, \({\bar{p}}(x)={\hat{p}}(x)-1\), and for \({\mathcal {H}}=1\) we recover Eq. (5). Seeking a solution in the form of \(e=e^0+{\mathcal {H}}e^1+{\mathcal {H}}^2e^2+\ldots \), leads to

Using variation of parameters, the zeroth order solution is given by

and, the first order solution is given by

Higher order terms can also be computed in a similar way. Note that the zeroth order solution depends on q, linearly. The first order solution depends on q, quadratically, and so on.

Evaluating the zeroth-order solution, i.e. Eq. (18), at \(x=0\) leads to (see Note 1)

The second integral drops because \(q=0\) for \(x\in [-1,0]\). Assuming a similar expansion for the unknown q leads to

For the range of frequencies \(k_1,k_2,\ldots ,k_\ell \), the above equation leads to

for \(\ell =1,2,\ldots ,L\) and \(j=1,2,\ldots ,N\). Also, the right-hand-side quantities \(\hat{g}\) are stored in \(\hat{\varvec{\gamma }}\). Similar to Eq. (12), the coefficient matrix \(\mathbf{E}\) is rank deficient. A least-squares solution can be obtained after introducing Tikhonov regularization.

We can similarly include the higher-order terms. Taking the first two terms, i.e., \(e=e^0+e^1\) leads to

Replacing \(e^0\) in the above equation according to Eq. (18), leads to a quadratic system of equations for q, (or, \(\varvec{\tau }\)). However, it would be easier to set up an iteration. One can first use the zeroth order solution and, using Eq. (22), obtain \(e^0(x)\). Denoting this solution with \(\epsilon ^0\), the above equation leads to

Assuming a similar expansion for q(x) according to \(q(x)=\sum _{j=1}^Nc_j(x)\tau _j\) leads to a similar linear system given by,

where the entries in the matrix \(\mathbf{F}\) are given by

where Eq. (23) is used for a sequence of frequencies, \(k_1,k_2,\ldots ,k_\ell \). Similar to Eq. (13), a least-squares solution can be obtained after introducing Tikhonov regularization.

4 Moment method for 2-D Helmholtz equation

We next apply the method developed in Sect. 2 to a 2-D Helmholtz equation. For this problem we assume that all of the boundary is accessible for collecting measurements. Let \(\varOmega \subset R^2\) be a closed bounded set. Consider a 2-D Helmholtz equation given by

where Dirichlet and Neumann boundary conditions are given at the boundary of \(\varOmega \), denoted by \({\partial }\varOmega \).

Measurements in the form of normal derivative at the boundaries can be collected and provided for the purpose of inversion. One can assume an initial value for the unknown function, i.e., \(\hat{p}({\mathbf{x}})\) and, using the Dirichlet boundary conditions, obtain the background field satisfying the system

Subtracting the background field from Eq. (26), one can obtain the error field, \(e({\mathbf{x}})=u({\mathbf{x}})-\hat{u}({\mathbf{x}})\), given by

Since the background field satisfies the Dirichlet boundary condition, the boundary conditions for the error field are given by \(e=0,\forall {\mathbf{x}}\in {\partial }\varOmega \), and \(\nabla _ne=\nabla _nu-\nabla _n\hat{u}\), where \(\nabla _n\) denotes the normal derivative. A linearized version of the above equation is given by

We can now consider a sampling function that is the solution to the Helmholtz equation given by

where \(\varpi ({\mathbf{x}})\) is an arbitrary boundary condition. Using Green’s second identity leads to

where the right-hand side is known. Similar to Eq. (9), Eq. (33) constitutes a moment problem. Choosing a set of linearly independent functions for the boundary conditions \(\varpi _\ell \) leads to

where the kernels \({\hat{u}}({\mathbf{x}})\xi _\ell \) are linearly independent (see Note 2). Note that the underbraced term includes the data, which may often be noisy. Therefore, the right-hand side requires that we integrate functions that are contaminated with noise. In the next section we develop a method that can filter out the noise. Assuming a similar expansion for the correction term \(q({\mathbf{x}})\), according to \(q=\sum _{j=1}^N\zeta _j({\mathbf{x}})\tau _j\) leads to

As is expected the coefficient matrix is non-square and rank deficient. Similar to Eq. (13), after introducing Tikhonov regularization, we can obtain a stable least-square solution for \(\varvec{\tau }\). We next develop a method that can effectively filter out the noise in the data.

5 Tailored filtering

The moment problem in Eq. (34) for the 2-D Helmholtz equation requires the integration of the given data, which may be noisy. The data is given in the form of the normal derivative at the boundary in Eq. (27). Therefore, in the present iterative algorithm, the given data appear as the additional boundary condition for the error equation given in Eq. (30). We repeat this equation here for clarity

An additional condition for this equation is given by \(\nabla _ne=\underbrace{\nabla _nu}-\nabla _n\hat{u}\) for \({\mathbf{x}}\in {\partial }\varOmega \), where the underbraced term is the noisy data. To formulate a tailored filter for the above equation we note that both \(q({\mathbf{x}})=0\) and \(e({\mathbf{x}})=0\) for \({\mathbf{x}}\in {\partial }\varOmega \). We can write \(k^2q({\mathbf{x}}){\hat{u}}({\mathbf{x}})=\sum _{j=1}^N\chi _j({\mathbf{x}})\vartheta _j\), where \(\chi _j({\mathbf{x}})=0\) for \({\mathbf{x}}\in {\partial }\varOmega \) and the coefficients \(\vartheta _j\) are unknown. We can then conclude that the error field has an expansion given by \(e({\mathbf{x}})=\sum _{j=1}^N\varepsilon _j({\mathbf{x}})\epsilon _j\), where \(\epsilon _j\) are unknowns and the functions \(\varepsilon _j({\mathbf{x}})\) satisfy the Helmholtz equations given by (see Note 3)

Choosing a set of linearly independent functions \(\chi _j({\mathbf{x}})\) leads to a set of linearly independent solutions \(\varepsilon _j({\mathbf{x}})\). It is then reasonable to expect that the given boundary condition for the error field, \(\nabla _n e({\mathbf{x}})\), lives in the space generated by \(\nabla _n\varepsilon _j({\mathbf{x}})\), \(j=1,2,\ldots ,N\). In other words,

where the functions \(\varepsilon _j({\mathbf{x}})\) are computed, and \(\nabla _n e\) is the known noisy function. Multiplying the above equation by \(\varepsilon _i({\mathbf{x}})\) and computing the appropriate inner product leads to a linear system given by

Using singular-value-decomposition (Lay et al. 2016), the above linear system can be solved for the coefficients \(\varrho _j\). In the actual calculations, we can use the filtered data, \(\sum _{j=1}^N\nabla _n\varepsilon _j({\mathbf{x}})\varrho _j\) instead of the noisy data \(\nabla _n e\).
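The projection step described above can be illustrated with a short NumPy sketch. It is a minimal example under stated assumptions, not the implementation used for the results in this note: the function name `project_onto_span` is hypothetical, sine functions on a unit-length boundary segment stand in for the computed normal derivatives \(\nabla _n\varepsilon _j\), the inner product is approximated with the trapezoid rule, and the noise is additive Gaussian with standard deviation 0.03.

```python
import numpy as np

def project_onto_span(basis, data, s, rel_tol=1e-8):
    """Project sampled boundary data onto span{basis[j]} via a truncated SVD solve."""
    # Trapezoid quadrature weights on the boundary points s.
    w = np.zeros_like(s)
    ds = np.diff(s)
    w[:-1] += 0.5 * ds
    w[1:] += 0.5 * ds
    gram = (basis * w) @ basis.T   # G_ij = <b_i, b_j>, symmetric, possibly rank deficient
    rhs = (basis * w) @ data       # r_i  = <b_i, data>
    # Solve G rho = r with an SVD, discarding negligible singular values.
    U, sv, Vt = np.linalg.svd(gram)
    keep = sv > rel_tol * sv[0]
    rho = Vt[keep].T @ ((U[:, keep].T @ rhs) / sv[keep])
    return basis.T @ rho, rho

# Usage: sine functions stand in for the normal derivatives of the computed basis.
rng = np.random.default_rng(1)
s = np.linspace(0.0, 1.0, 201)
basis = np.sin(np.pi * np.outer(np.arange(1, 9), s))
clean = 0.7 * basis[0] - 0.3 * basis[2]
noisy = clean + 0.03 * rng.standard_normal(s.size)
filtered, rho = project_onto_span(basis, noisy, s)
err_noisy = np.linalg.norm(noisy - clean)
err_filtered = np.linalg.norm(filtered - clean)
```

Because the clean signal lies in the span of the basis, the projection removes the noise components outside that span, which is the mechanism the tailored filter relies on.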

6 A direct formulation using sampling functions

In this section we use a specific set of sampling functions to formulate a direct method that does not require integration of the data at the boundary. Consider a sampling function that is the solution to the Helmholtz equation given by

where \(\delta ({\mathbf{x}}-{\mathbf{x}}_0)\) is the delta function centered at \({\mathbf{x}}_0\). The above equation is the Green’s function for the variable wave number Helmholtz equation. We use an approximate method to obtain the solution which is presented in the appendix. Applying the Green’s second identity to the above equation and using Eq. (30) leads to

Using the property of the delta function and the boundary conditions, the above equation leads to the value of the error at \({\mathbf{x}}_{0}\) according to

Now, consider the part of the domain close to a boundary as depicted in Fig. 1. One can use a third-order one-sided approximation of the first derivative of the error at the boundary according to

where dx is the equal spacing between the points. Using Eq. (41), one can relate the unknown \(q({\mathbf{x}})\) to the gradient of the error at the boundary according to

The above equation relates the unknown correction term \(q({\mathbf{x}})\) to the known quantity which is the gradient of the error field at the boundary. Note that the error at the boundary is equal to zero, i.e., \(e(x_0)=0\). Evaluating the above equation for L locations (i.e., \(\ell =1,2,\ldots ,L\)) on the boundary, and assuming a similar expansion for the correction term \(q=\sum _{j=1}^N\zeta _j({\mathbf{x}})\tau _j\) leads to

and the gradients of the error \(\nabla _n e({\mathbf{x}})\), \({\mathbf{x}}\in {\partial }\varOmega \), at the boundaries are placed in the vector \(\varvec{\varkappa }\) on the right-hand side. Similar to Eq. (13), the above equation can now be solved through least-squares minimization after the introduction of Tikhonov regularization.
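For reference, a third-order one-sided approximation of a first derivative on equally spaced points can be sketched as follows. The stencil shown is the standard four-point formula, \(f'(x_0)\approx (-11f_0+18f_1-9f_2+2f_3)/(6\,dx)\); it is an assumption that this matches the form used in this section, and the sign has to be adapted to the direction of the outward normal. The function name is hypothetical.

```python
import numpy as np

def one_sided_first_derivative(f0, f1, f2, f3, dx):
    """Third-order one-sided estimate of f'(x0) from f at x0, x0+dx, x0+2dx, x0+3dx."""
    return (-11.0 * f0 + 18.0 * f1 - 9.0 * f2 + 2.0 * f3) / (6.0 * dx)

# Usage: the stencil is exact for cubic polynomials.
def cubic(x):
    return x**3 - 2.0 * x**2 + x   # derivative at x = 0 equals 1

dx = 0.1
pts = dx * np.arange(4)
slope = one_sided_first_derivative(*cubic(pts), dx)
```

When the value at the boundary point vanishes, as for the error field here, the first term of the stencil drops and the boundary gradient is expressed through interior values only.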

7 Numerical examples and implementations

So far, we have introduced two computational algorithms to study inverse wave scattering. We have presented two methods for one-dimensional inverse wave scattering. For the one-dimensional case, the measurements are collected at one point on the boundary, and the noise in the data is introduced using a random number generator. Since the data is a scalar value, one can collect a few measurements for the same frequency and average the values. We introduce 1% noise for the numerical results involving the one-dimensional case. For the 2-D problems, measurements are in the form of functions, and filtering out the noise is crucial. We introduce a new method to filter out the noise. We first consider the one-dimensional problem.
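The way noise is added to a scalar measurement and then reduced by averaging can be sketched as below. The noise model is an assumption made for illustration (multiplicative, uniformly distributed within the stated level); the note does not specify the distribution. The function name `add_relative_noise` and the measurement value are hypothetical.

```python
import numpy as np

def add_relative_noise(value, level, rng):
    """Perturb a measurement by a uniform relative error in [-level, level] (assumed model)."""
    value = np.asarray(value)
    return value * (1.0 + level * rng.uniform(-1.0, 1.0, size=value.shape))

# Usage: repeat a complex scalar measurement 50 times with 1% noise and average.
rng = np.random.default_rng(3)
g_exact = 0.3 + 0.2j                      # hypothetical measured value at x = 0
samples = add_relative_noise(np.full(50, g_exact), 0.01, rng)
g_averaged = samples.mean()
```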

We can divide the domain \(\varOmega =[-1,2]\) into equal intervals and use a second order finite difference approximation to solve various working equations. The point \(x=0\) is accessible, and is used to collect data. The excitation \(\delta (x-x_0)\) is placed at \(x_0=-0.5\). To excite the system, we can use \(L=20\) different values of frequencies, starting with \(k_1=1.2\), and with increments \(\varDelta k=0.14\).
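A forward solve of this kind can be sketched with a dense second-order finite-difference discretization. The displayed equations of Sect. 2 are not reproduced here, so the sketch assumes the form \(u_{xx}+k^2p(x)u=\delta (x-x_0)\) with the non-reflecting conditions \(u_x+iku=0\) at \(x=-1\) and \(u_x-iku=0\) at \(x=2\); the sign convention, the grid size, and the function name `solve_helmholtz_1d` are assumptions. For \(p=1\) the result is compared with the free-space solution \(e^{ik|x-x_0|}/(2ik)\).

```python
import numpy as np

def solve_helmholtz_1d(p, k, x, x0):
    """Solve u'' + k^2 p u = delta(x - x0) with assumed non-reflecting end conditions."""
    n = x.size
    h = x[1] - x[0]
    A = np.zeros((n, n), dtype=complex)
    b = np.zeros(n, dtype=complex)
    # Interior rows: second-order central difference plus k^2 p(x).
    idx = np.arange(1, n - 1)
    A[idx, idx - 1] = 1.0 / h**2
    A[idx, idx] = -2.0 / h**2 + k**2 * p[idx]
    A[idx, idx + 1] = 1.0 / h**2
    # Left end: u_x + i k u = 0, second-order one-sided difference.
    A[0, :3] = np.array([-3.0, 4.0, -1.0]) / (2.0 * h)
    A[0, 0] += 1j * k
    # Right end: u_x - i k u = 0.
    A[-1, -3:] = np.array([1.0, -4.0, 3.0]) / (2.0 * h)
    A[-1, -1] -= 1j * k
    # Discrete delta function at the grid point closest to x0.
    b[np.argmin(np.abs(x - x0))] = 1.0 / h
    return np.linalg.solve(A, b)

# Usage: homogeneous medium, source at x0 = -0.5, first frequency k = 1.2.
x = np.linspace(-1.0, 2.0, 601)
k = 1.2
u = solve_helmholtz_1d(np.ones_like(x), k, x, -0.5)
u_exact = np.exp(1j * k * np.abs(x + 0.5)) / (2j * k)
max_err = np.max(np.abs(u - u_exact))
g = u[np.argmin(np.abs(x))]   # value at the accessible point x = 0
```

The same routine, called with the current guess \({\hat{p}}(x)\), would give the background field at each frequency.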

7.1 Example 1

Assume that the interest is to recover the unknown function given by

The unknown function is equal to the background outside the region of interest, i.e., \(p(x)=1\) for \(x\in [-1,0]\cup [1,2]\) and unknown for \(x\in [0,1]\). One can start with a nominal value \({\hat{p}}(x)=1\), obtain the background fields, and obtain the errors \(e(0)=g_0\) for all frequencies. Boundary conditions for the sampling functions are given by \(\psi _\ell (-1)=(0.67k_\ell ,-(\ell /0.8))\), for \(\ell =1,2,\ldots ,L\). This choice is arbitrary. We just need to generate a set of sampling functions, \(\psi _\ell (x)\), that are linearly independent. Once the sampling functions are computed, the right-hand side in Eq. (10) can be computed. The correction to the assumed value is the unknown, and an appropriate finite dimensional approximation is given by \(q=\sum _{j=1}^N c_j(x)\tau _j\) where \(c_j(x)=\sin (j\pi x)\), for \(j=1,2,\ldots ,N\). Choosing \(N=20\), one can compute the coefficient matrix in the linear system in Eq. (12). As expected, this matrix is rank deficient. Figure 2 presents the normalized eigenvalues of the symmetric matrix \([\mathfrak {R}(\mathbf{A})^T\mathfrak {R}(\mathbf{A})+\mathfrak {I}(\mathbf{A})^T\mathfrak {I}(\mathbf{A})]\). We can also check the performance of the direct method in Sect. 3 by considering the rank of the coefficient matrix in Eq. (22). Figure 2 also presents the normalized eigenvalues of the symmetric matrix \([\mathfrak {R}(\mathbf{E})^T\mathfrak {R}(\mathbf{E})+\mathfrak {I}(\mathbf{E})^T\mathfrak {I}(\mathbf{E})]\).
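The rank check described above can be sketched as follows. The kernel \(e^{ik_\ell x}\) used to build the matrix is a hypothetical stand-in for the actual products of sampling functions and background fields, so the numbers it produces are illustrative only; the frequencies and the sine expansion follow the values quoted in this section, and the threshold \(10^{-10}\) is an arbitrary choice.

```python
import numpy as np

def normalized_eigenvalues(A):
    """Eigenvalues of Re(A)^T Re(A) + Im(A)^T Im(A), descending, scaled by the largest."""
    S = A.real.T @ A.real + A.imag.T @ A.imag
    eig = np.linalg.eigvalsh(S)[::-1]
    return eig / eig[0]

# Build a stand-in moment matrix A[l, j] = integral of kernel_l(x) * sin(j pi x) on [0, 1].
x = np.linspace(0.0, 1.0, 401)
w = np.full(x.size, x[1] - x[0])
w[0] *= 0.5
w[-1] *= 0.5                                            # trapezoid weights
ks = 1.2 + 0.14 * np.arange(20)                         # L = 20 frequencies
kernels = np.exp(1j * np.outer(ks, x))                  # hypothetical kernels
sines = np.sin(np.pi * np.outer(np.arange(1, 21), x))   # c_j(x), N = 20
A = (kernels * w) @ sines.T
eig = normalized_eigenvalues(A)
n_significant = int(np.sum(eig > 1e-10))
```

Even with twenty frequencies and twenty unknowns, only a fraction of the eigenvalues of such a matrix stay above the threshold, which is the behaviour that motivates the regularization.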

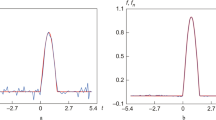

Combining the two linear systems, we can solve the resulting system through least-squares minimization after the introduction of Tikhonov regularization. Figure 3 shows the recovered function when \(\beta =0.5\times 10^{-4}\) with 1% noise. It also shows the recovered function when the first-order nonlinear term is included in the direct method, i.e., Eq. (24). Figure 4 presents the reduction in the error as a function of the number of iterations. The inclusion of the first-order nonlinear term improves the rate of the reduction in the error.

7.2 Example 2

We next consider the evaluation of an unknown function given by

Using both formulations and including the first-order term from the nonlinear contribution, Fig. 5 presents the recovered function and Fig. 6 shows the \(\beta =.6E-4\). This example involves three targets, and the combined methods can recover a close estimate of it. For both examples, the accuracy of the recovered function is better for the region that is closer to the accessible point of the domain.

7.3 Example 3

We next consider a 2-D domain \(\varOmega =[0,1]\times [0,1]\) and study the inverse evaluation of a wave function, given in Eq. (26). Assume that the unknown function is given by

The boundary can be exposed to an incoming wave given by

where \(k=3\) and \(\theta =\frac{\pi }{3.4}\). One can generate the data. For this example, we assume that data can be collected with no noise (Creedon et al. 2011). In this section, we study the reduction in the error which is the difference between the given data and the calculated value at a given iteration.

To generate the sampling functions, \(\xi _\ell ({\mathbf{x}})\), given in Eq. (31), one needs to provide a linearly independent set of functions for the boundary conditions \(\xi _\ell ({\mathbf{x}})=\varpi _\ell ({\mathbf{x}})\), for \({\mathbf{x}}\in {\partial }\varOmega \). We can use a combination of cubic B-splines and Bessel functions (Tadi 2009; Christensen 2010). For the Bessel functions, we can use

Note that \(J_n(0)=0\) for \(n\ge 1\), and the values of \(\lambda _j\) are obtained by imposing \(J_n(1)=0\). For \(n=0\), we can use the functions \((1-x)-J_0(x)\). Using combinations of cubic B-splines and Bessel functions we can generate the boundary conditions \(\varpi _\ell \) needed to generate the sampling functions \(\xi _\ell ({\mathbf{x}})\). In this example, we are using a set of 121 linearly independent functions, \(\varpi _\ell \) for \(\ell =1,2,\ldots ,121\). To approximate the correction term \(q({\mathbf{x}})\), we can again use sine functions according to

where \(\zeta _j({\mathbf{x}})=0\) \(\forall {\mathbf{x}}\in {\partial }\varOmega \), for \(j=1,2,\ldots ,N\), with \(N=M^2\). Note that the unknown wave number is considered to be known at the boundaries; therefore, we have \(q({\mathbf{x}})=0,\forall {\mathbf{x}}\in \partial \varOmega \). For this example, we are using \(M=14\), which leads to \(N=196\). We can now proceed and generate the linear system given in Eq. (35). As expected, the coefficient matrix is non-square and rank deficient. Figure 6 shows the normalized eigenvalues of the symmetric matrix \(\mathbf{D}^T\mathbf{D}\). We are normalizing the eigenvalues with respect to the highest eigenvalue.

For only one set of data, i.e., \(k_1=3\), there are about 20 significant eigenvalues out of 196. If we can provide additional measurements and augment the linear system in Eq. (35), the number of significant eigenvalues in this matrix can be increased. Providing data for 4 different frequencies, i.e., \(k_1=3\), \(k_2=4\), \(k_3=5\), \(k_4=6\), the linear system in Eq. (35) leads to

As we include the data for additional frequencies, the number of significant eigenvalues in this matrix increases. We next proceed to recover the unknown wave number. In order to obtain a stable inversion, we still need to introduce Tikhonov regularization, similar to Eq. (13). Figure 7 shows the actual unknown function and Fig. 8 shows the recovered function after 200 iterations. Figure 9 considers the cross-section along the line \(x+y=1\) and compares the actual function with the recovered function along this line.
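The effect of augmenting the linear system with additional frequencies can be illustrated with a small NumPy sketch. The per-frequency blocks below are random low-rank stand-ins, not the matrices \(\mathbf{D}\) of this example, and the function name `count_significant` and the threshold are hypothetical; the point is only that stacking blocks row-wise can raise the number of significant eigenvalues of \(\mathbf{D}^T\mathbf{D}\).

```python
import numpy as np

def count_significant(D, tol=1e-10):
    """Number of eigenvalues of D^T D above tol times the largest eigenvalue."""
    eig = np.linalg.eigvalsh(D.T @ D)   # ascending order
    return int(np.sum(eig > tol * eig[-1]))

# Hypothetical stand-ins: one rank-5 block with 40 unknowns per frequency.
rng = np.random.default_rng(2)
blocks = [rng.standard_normal((30, 5)) @ rng.standard_normal((5, 40)) for _ in range(4)]
n_single = count_significant(blocks[0])
n_stacked = count_significant(np.vstack(blocks))   # augmented system, four frequencies
```

In this synthetic setting each block contributes independent information, so the count grows from 5 to 20; for real data the gain depends on how different the fields at the added frequencies are.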

7.4 Example 4

We next consider a case where the data is noisy and use the direct method presented in Sect. 6. We need to apply the tailored filter presented in Sect. 5. Consider the problem of recovering a wave number given by

which is shown in Fig. 10. One can assume an initial guess, obtain background field and arrive at Eq. (30). The noisy data appears in the additional boundary condition given by \(\nabla _ne=\underbrace{\nabla _nu}-\nabla _n\hat{u}\) for \({\mathbf{x}}\in {\partial }\varOmega \). The proper space to project the noisy term, \(\nabla _ne\), can be obtained by first solving the set of Helmholtz equations given in Eq. (36) where \(\chi _j({\mathbf{x}})\), \(j=1,2,\ldots ,N\) is a set of linearly independent functions with \(\chi _j({\mathbf{x}})=0\) for \({\mathbf{x}}\in {\partial }\varOmega \). Using the same sine functions given in Eq. (50) with \(M=14\) leads to a set of linearly independent functions \(\varepsilon _j({\mathbf{x}})\), with \(j=1,2,\ldots ,156\). Therefore, the proper space to project the noisy term \(\nabla _ne({\mathbf{x}})\) is given by

Consider the lower part of the boundary where \(y=0\) and \(x\in [0,1]\). The noisy boundary condition is given by \(\frac{{\partial }e}{{\partial }y}\) at \(y=0\). We can project this function according to

Solving for the components \(\varrho _j\) leads to a linear system given by

where the coefficient matrix is symmetric and rank deficient. Singular value decomposition can be easily used to solve this system. Once we have the parameters \(\varrho _j\), then for the calculations we can use the filtered data according to Eq. (54).

Figure 11 shows the function \(\nabla _ne ({\mathbf{x}})\) for \(y=0\) and \(x\in [0:1]\), lower boundary, or \(\frac{{\partial }e(x,y)}{{\partial }y}|_{y=0}\) for \(x\in [0{:}1]\). The figure compares the uncorrupted data and the noisy data which has 3% noise added. It also presents the filtered data.

We next proceed to recover the unknown function using this filter. We can use a boundary condition similar to the one given in Eq. (46). We use nine sets of data with \(k_1=3\), \(k_2=3.4\), \(k_3=4\), \(k_4=5\), \(k_5=5.4\), \(k_6=5.8\), \(k_7=6\), \(k_8=6.3\), and \(k_9=6.5\). We use three angles for the incoming waves given by \(\theta _1=\frac{\pi }{3.4}\), \(\theta _2=\frac{\pi }{4.4}\), and \(\theta _3=\frac{\pi }{6.9}\) to generate 9 sets of linearly independent boundary conditions. Figures 12 and 13 show the recovered function after 300 iterations and Fig. 14 shows a cross-section of the function. It compares the recovered function with the actual value along the line \(x+y=1\).

7.5 Example 5

We next consider the recovering of an unknown function given by

which is shown in Fig. 15. Using the same number of collected data as in Example 4, Fig. 16 shows the recovered function after 300 iterations, and Fig. 17 compares the recovered function over the cross-section \(x=y\).

Overall, the results can be improved by using higher frequencies, which in general requires a finer mesh. We also need to investigate various sampling functions. The present method can also be naturally combined with our previous method based on proper solution space (Hamad and Tadi 2019), since both treat the linearized error field to obtain a correction term to an assumed initial guess for the unknown function. All of these issues will be addressed in our future work.

8 Conclusion

In this note we presented two methods for inverse wave scattering. We also presented a new method for filtering noisy data. For one dimensional problems the present method is able to recover a close estimate of the unknown function based on data collected at one point on the boundary. For two dimensional problems the presented method can recover a reasonable approximation of the unknown function with up to 3% noise. This method can be combined with our previous method. The accuracy of the recovered function can also be improved by introducing additional data and/or using finer computational mesh. All of these issues will be addressed in our future work.

Availability of codes

All computer codes used in this work will be provided upon request.

Notes

1. Note that, for nonzero \({\hat{u}}(x)\), the kernel functions \(e^{ik_1(\eta -2)}\) and \(e^{ik_2(\eta -2)}\) are linearly independent in [0 : 2] for \(k_1\ne k_2\).

2. The Helmholtz equation in Eq. (31) is well-posed for \(k^2\) away from the eigenvalues of the Laplace operator. Therefore, \(\xi _i({\mathbf{x}})\) and \(\xi _j({\mathbf{x}})\) are linearly independent for two linearly independent boundary conditions, i.e., \(\varpi _i({\mathbf{x}})\) and \(\varpi _j({\mathbf{x}})\), \({\mathbf{x}}\in {\partial }\varOmega \).

3. In actuality, the functions \(\varepsilon _j({\mathbf{x}})\) should satisfy the nonhomogeneous Helmholtz equation given by \(\varDelta \varepsilon _j+k^2p({\mathbf{x}})\varepsilon _j+\chi _j({\mathbf{x}})=0\). However, at this stage \(p({\mathbf{x}})\) is unknown, and we are using \({\hat{p}}({\mathbf{x}})\) instead. As the iteration proceeds, \({\hat{p}}({\mathbf{x}})\) converges to \(p({\mathbf{x}})\).

References

Ang DD, Gorenflo R, Le Khoi V, Trong DD (2002) Moment theory and some inverse problems in potential theory and heat conduction. Springer

Barcelo JA, Castro C, Reyes JM (2016) Numerical approximation of the potential in the two-dimensional inverse scattering problems. Inverse Problems 32:015006

Belai V, Frumin LL, Podivilov EV, Shapiro DA (2008) Inverse scattering for the one-dimensional Helmholtz equation: fast numerical method. Opt Lett 33(18):2101–2103

Bugarija S, Gibson PC, Hu G, Li P, Zhao Y (2020) Inverse scattering for the one-dimensional Helmholtz equation with piecewise constant wave speed. Inverse Problems 36(7):075008

Capozzoli A, Curcio C, Liseno A (2017) Singular value optimization in inverse electromagnetic scattering. IEEE Antennas Wirel Propag Lett 16:1094–1097

Christensen O (2010) Functions, spaces, and expansions. Springer, New York

Colton D, Kress R (1991) Inverse acoustic and electromagnetic scattering theory. Springer, New York

Colton D, Coyle J, Monk P (2000) Recent developments in inverse acoustic scattering theory. SIAM Rev 42(3):369–414

Creedon DL, Tobar ME, Ivanov EN, Hartnett JN (2011) High-resolution Flicker-noise-free frequency measurements of weak microwave signals. IEEE Trans Microw Theory Techn 59(6):1651–1657

Desmal A, Bağci H (2015) A preconditioned inexact Newton method for nonlinear sparse electromagnetic imaging. IEEE Geosci Rem Sens Lett 12(3):532–536

Eshkuvatov Z (2018) Homotopy perturbation method and Chebyshev polynomials for solving a class of singular and hypersingular integral equations. Numer Algebra Control Optim 8(3):347–360

Fessler JA (2010) Model-based image reconstruction for MRI. IEEE Signal Process Mag 27(4):81–89

Hamad A, Tadi M (2019) Inverse scattering based on proper solution space. J Theor Comput Acoust 27(3):1850033

Irishina N, Dorn O, Moscoso M (2008) A level set evolution strategy in microwave imaging for early breast cancer. Comput Math Appl 56:607–618

Jin B, Zheng Y (2006) A meshless method for some inverse problems associated with the Helmholtz equation. Comput Methods Appl Mech Eng 195:2270–2288

Jamil M, Hassan MK, Al-Mattarneh MA, Zain MFM (2013) Concrete dielectric properties investigation using microwave nondestructive techniques. Mater Struct 46(1):77–87

Kang S, Lambert M, Ahn CY, Ha T, Park W-K (2020) Single- and multi-frequency direct sampling methods in limited-aperture inverse scattering problem. IEEE Access 8:121637–121649

Klibanov MV, Kolesov A, Sullivan A, Nguyen LD (2018) A new version of the convexification method for a 1D coefficient inverse problem with experimental data. Inverse Problems 34(11):115014

Klibanov MV, Romanov VG (2015) Two reconstruction procedures for a 3D phaseless inverse scattering problem for the generalized Helmholtz equation. Inverse Problems 32:015005

Lagaris IE, Evangelakis GA (2011) One-dimensional inverse scattering problem in acoustics. Brazil J Phys 41:248–257

Lay D, Lay S, McDonald J (2016) Linear Algebra and its applications. Pearson, New York

Marin L, Elliott L, Heggs PJ, Ingham DB, Lesnic D, Wen X (2006) Dual reciprocity boundary element method solution of the Cauchy problem for Helmholtz-type equations with variable coefficients. J Sound Vib 297:89–105

Mueller JL, Siltanen S (2012) Linear and nonlinear inverse problems with practical applications. SIAM, Philadelphia

Novikov RG (2015) An iterative approach to non-overdetermined inverse scattering at fixed energy. Sbornik Math 206(1):120–134

Sacks P, Jaemin Shin (2009) Computational methods for some inverse scattering problems. Appl Math Comput 207:111–123

Tadi M (2017) On elliptic inverse heat conduction problems. ASME J Heat Transf 139(074504–1):4

Tadi M (2019) A direct method for a Cauchy problem with application to a Tokamak. Theor Appl Mech Lett 9(4):254–259

Tadi M, Nandakumaran AK, Sritharan SS (2011) An inverse problem for Helmholtz equation. Inverse Problems Sci Eng 19(6):839–854

Tadi M (2009) A computational method for an inverse problem in optical tomography. Discret Contin Dyn Syst-B 12(1):205–214

Thanh NT, Klibanov MV (2020) Solving a 1-D inverse medium scattering problem using a new multi-frequency globally strictly convex objective functional. J Inverse Ill-posed Problems 28(5):693–711

Zhang Z, Chen S, Xu Z, He Y, Li S (2017) Iterative regularization method in generalized inverse beamforming. J Sound Vib 396:108–121

Funding

There was no funding for this work.

Ethics declarations

Conflict of interest

There is no conflict of interest.

Appendix

To obtain an approximate solution for the Helmholtz equation in Eq. (39) we can rewrite it according to

where \(\bar{p}({\mathbf{x}})={\hat{p}}({\mathbf{x}})-1\). We can then consider a similar perturbation approximation and obtain the solution as a series given by \(\xi =\xi ^0+\xi ^1+\xi ^2+\xi ^3+\dots \) where

Note that \(\bar{p}({\mathbf{x}})\) is zero for most of the region, and the above series converges very fast. In all calculations presented here, we are including four terms in the series.
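The structure of such a perturbation series can be sketched in matrix form. The displayed equations of this appendix are not reproduced here, so the sketch assumes the splitting \((\mathbf{A}_0+\mathbf{B})\xi =\mathbf{b}\), where \(\mathbf{A}_0\) discretizes the constant-coefficient operator and \(\mathbf{B}=k^2\,\mathrm{diag}(\bar{p})\), with \(\mathbf{A}_0\xi ^0=\mathbf{b}\) and \(\mathbf{A}_0\xi ^{n+1}=-\mathbf{B}\xi ^n\). A one-dimensional Dirichlet problem is used as a stand-in for the 2-D equation, and all names and parameter values are hypothetical.

```python
import numpy as np

def perturbation_series(A0, B, b, n_terms=4):
    """Partial sum xi^0 + ... + xi^(n_terms-1) with A0 xi^(n+1) = -B xi^n (assumed form)."""
    term = np.linalg.solve(A0, b)       # zeroth-order term
    total = term.copy()
    for _ in range(n_terms - 1):
        term = np.linalg.solve(A0, -(B @ term))
        total += term
    return total

# Usage: 1-D stand-in on (0, 1) with homogeneous Dirichlet conditions.
n = 199
h = 1.0 / (n + 1)
x = np.linspace(h, 1.0 - h, n)
k = 2.0
lap = (np.eye(n, k=1) - 2.0 * np.eye(n) + np.eye(n, k=-1)) / h**2
A0 = lap + k**2 * np.eye(n)
p_bar = np.where(np.abs(x - 0.5) < 0.1, 0.3, 0.0)   # nonzero only in a small region
B = k**2 * np.diag(p_bar)
b = np.zeros(n)
b[np.argmin(np.abs(x - 0.3))] = -1.0 / h            # discrete point source
xi_series = perturbation_series(A0, B, b)
xi_direct = np.linalg.solve(A0 + B, b)
rel_err = np.linalg.norm(xi_series - xi_direct) / np.linalg.norm(xi_direct)
```

The series converges when the perturbation is small relative to the background operator, which is the situation described above where \(\bar{p}\) vanishes over most of the region.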

Tadi, M., Radenkovic, M. New computational methods for inverse wave scattering with a new filtering technique. Optim Eng 22, 2457–2479 (2021). https://doi.org/10.1007/s11081-021-09638-8