Abstract

We suggest a revised form of a classic measure function to be employed in the optimization model of the nonnegative matrix factorization problem. More exactly, using sparse matrix approximations, the revision term is embedded in the model to penalize ill-conditioning along the computational trajectory that yields the factorization elements. Then, as an extension of the Euclidean norm, we employ the ellipsoid norm to obtain adaptive formulas for the Dai–Liao parameter in a least-squares framework. In essence, the parametric choices are obtained by pushing the Dai–Liao direction toward the direction of a well-functioning three-term conjugate gradient algorithm. In our scheme, the well-known BFGS and DFP quasi–Newton updating formulas are used to characterize the positive definite matrix factor of the ellipsoid norm. To see to what extent our model revisions and algorithmic modifications are effective, we conduct classic computational tests and assess the outputs. The reported results support our analytical efforts.

1 Introduction

A cursory review of the literature confirms that high-dimensional models increasingly appear in data mining procedures in the current age of social networks, bioinformatics, digital communications, and quantum computing. This makes it important to diversify the strategies for managing the difficulties that must be overcome when working with complex, massive data sets.

A well-known plan for handling high-dimensional models centers on the compact representation of the input data sets [16]. In this regard, data reduction principally targets decreasing the size of the data sets while maintaining the important information, sometimes by data encoding procedures [20]. Meanwhile, when the data sets are given in matrix form, classic tools of linear algebra such as nonnegative matrix factorization (NMF) can be very helpful [10, 17]. As is known, a wide range of real-world data sets are inherently nonnegative, and so we should try to rule out the generation of negative entries while managing and processing such data. Nowadays, NMF is frequently applied in practical studies such as pattern recognition [11], recommendation systems [21] and face detection [29].

In a common framework, various NMF techniques take a matrix with nonnegative entries as the input and deliver two lower-dimensional matrices with nonnegative entries as the output [16], in such a way that multiplying the output matrices yields an accurate approximation of the input matrix. Keeping the consecutive intermediate approximations of the factorization elements well-conditioned may considerably enhance the computational stability [30] and, as a result, make it possible to obtain more appropriate output matrices as well.

Researchers have recently also pushed to devise memoryless versions of the classic algorithms as another way to handle high-dimensional optimization models. To contrive a memoryless technique for a general minimization model, we should carefully exploit the differential features of the cost function as well as the constraints. Meanwhile, the algorithmic steps should be simple to perform, neither time-consuming nor labor-intensive, while keeping the accuracy at an acceptable level and ensuring the convergence of the solution trajectory. These features can all be seen in the conjugate gradient (CG) algorithms, which have traditionally been formulated in vector form [28]. In particular, the Dai–Liao (DL) method is nowadays regarded as an efficient CG algorithm because it flexibly incorporates the conjugacy and the quasi–Newton aspects in general circumstances [8, 13].

Here, we plan to address possible model revisions as well as algorithmic modifications of some classic strategies for managing large-scale data sets. The study is organized as follows. In Section 2, we deal with a revised form of the classic measure function proposed by Dennis and Wolkowicz [14], to be embedded in the optimization model of the NMF problem in order to penalize ill-conditioned intermediate approximations of the factorization elements. Then, in Section 3, we focus on determining adaptive formulas for the DL parameter as the solutions of a least-squares model formulated based on the ellipsoid vector norm [28]. In Section 4, we carry out numerical tests to reflect the value of our theoretical efforts, on the CUTEr problems [18] as well as a set of randomly generated NMF cases. Finally, in Section 5, we summarize the results.

2 A revised model for the nonnegative matrix factorization problem

Dimensionality reduction methodologies are naturally understood as influential approaches for analyzing large data sets. As is known, high-dimensional data analysis is an integral part of the digital era due to recent developments in sensor technology. As mentioned in Section 1, NMF is one such technique that has caught researchers' imagination thanks to its interpretability, simplicity, flexibility and generality [11, 21, 24, 27, 29].

The popularity of NMF stems from its ability to extract hidden and important features from data: the data matrix is approximated by the product of two matrices, usually much smaller than the original data matrix. All the input and output matrices of NMF should (usually) be componentwise nonnegative. Mathematically speaking, for a given componentwise nonnegative matrix \(\textbf{A}\in \mathbb {R}^{m\times n}\) (or \(\textbf{A}\ge 0\) for short) and a positive integer \(r\ll \min \{m,n\}\), NMF entails finding componentwise nonnegative matrices \(\textbf{W}\in \mathbb {R}^{m\times r}\) and \(\textbf{Z}\in \mathbb {R}^{r\times n}\) (or \(\textbf{W}\ge 0\) and \(\textbf{Z}\ge 0\) for short), by solving the following minimization problem:

where \(\Vert .\Vert _F\) stands for the Frobenius norm. In an efficient approach to address (2.1), the alternating nonnegative least-squares (ANLS) technique targets the following two subproblems [22]:

for all \(k\in \mathbb {Z}^+=\mathbb {N}\bigcup \{0\}=\{0,1,2,\dots \}\).
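The alternating scheme can be illustrated by a minimal sketch. It assumes that the cost in (2.1) is the squared Frobenius norm of \(\textbf{A}-\textbf{W}\textbf{Z}\) (possibly up to a constant factor), and it replaces the exact solution of each subproblem by a single projected-gradient step; this is a simplification chosen for brevity, not the solver studied in this paper. All function names are hypothetical, and plain Python lists are used to keep the example dependency-free.

```python
# Minimal ANLS-style sketch with plain Python lists (no external libraries).
# Assumption: each subproblem is handled by ONE projected-gradient step,
# which stands in for an exact nonnegative least-squares solve.

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    Yt = list(zip(*Y))
    return [[sum(a * b for a, b in zip(row, col)) for col in Yt] for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def objective(A, W, Z):
    """Squared Frobenius norm of the residual A - WZ."""
    WZ = matmul(W, Z)
    return sum((a - p) ** 2 for ra, rp in zip(A, WZ) for a, p in zip(ra, rp))

def update_right_factor(A, W, Z):
    """One projected-gradient step on Z for min ||A - WZ||_F^2 with W fixed."""
    Wt = transpose(W)
    gram = matmul(Wt, W)  # r x r matrix W^T W
    # 2 * trace(W^T W) bounds the Lipschitz constant 2 * ||W^T W||_2 from above.
    lipschitz = 2.0 * sum(gram[i][i] for i in range(len(gram)))
    if lipschitz == 0.0:
        return Z
    WtA = matmul(Wt, A)
    grad = [[2.0 * (g - c) for g, c in zip(rg, rc)]
            for rg, rc in zip(matmul(gram, Z), WtA)]
    # Projection onto the nonnegative orthant: clip negative entries to zero.
    return [[max(z - gz / lipschitz, 0.0) for z, gz in zip(rz, rgz)]
            for rz, rgz in zip(Z, grad)]

def anls_sweep(A, W, Z):
    """Update Z with W fixed, then W with Z fixed (via the transposed problem)."""
    Z = update_right_factor(A, W, Z)
    # ||A - WZ||_F = ||A^T - Z^T W^T||_F, so W^T plays the role of the right factor.
    Wt = update_right_factor(transpose(A), transpose(Z), transpose(W))
    return transpose(Wt), Z

# Toy data, invented for illustration only.
A = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [1.0, 1.0, 1.0]]
W = [[1.0, 0.5], [0.5, 1.0], [1.0, 1.0]]
Z = [[1.0, 1.0, 1.0], [0.5, 0.5, 0.5]]
history = [objective(A, W, Z)]
for _ in range(50):
    W, Z = anls_sweep(A, W, Z)
    history.append(objective(A, W, Z))
print(history[0], history[-1])
```

Because the step length is the reciprocal of an upper bound on the Lipschitz constant of the gradient, each half-step cannot increase the cost, so the recorded history is nonincreasing while both factors stay nonnegative.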

As is known, in computational and analytical studies of matrix spaces, a great deal of attention is devoted to the matrix condition number, an influential factor that is directly connected with the collinearity between the rows or the columns of the matrix [30]. Experience reported in the literature shows that ill-conditioning may significantly deflect the solution process and yield misleading results. So, it is a classic matter of routine to devise a plan for controlling the condition number of the matrices that are iteratively generated in an algorithmic procedure.

A cursory glimpse of the NMF literature shows that little analytical or structural effort has been devoted to the well-conditioning of the NMF outputs. It should be noted that various modified NMF models mainly target the orthogonality or symmetrization of the decomposition elements [17], which is helpful in special applications of data mining such as sparse recovery and clustering. Such extensions of the classic NMF model have been devised by imposing extra constraints to push the solution path toward the desired outputs. As a result, the solution process of these models can be challenging to some extent, and sometimes the workload may become heavy.

To depict the effect of ill-conditioning on the NMF model, here we report the outputs of the MATLAB function ‘nnmf’ on the well-known Hilbert matrix. Defined by

\[\mathcal {H}_{ij}=\frac{1}{i+j-1},\qquad i,j=1,2,\dots ,n,\]

the Hilbert matrix \(\mathcal {H}\in \mathbb {R}^{n\times n}\) has been classically recognized as an ill-conditioned matrix, being also (symmetric) positive definite. By setting \(n=20\) and \(r=6\), and then investigating the NMF outputs on \(\mathcal {H}\) obtained by 10000 different implementations of the MATLAB function ‘nnmf’, we observed that for more than 46% of the implementations, at least three columns (and rows) of \(\textbf{W}\) (and \(\textbf{Z}\)) were equal to zero. That means for more than 46% of the implementations the outputs for \(r=4,5,6\) were quite the same. So, in such situations, the NMF cannot serve as a reliable tool in a recommender system for which filling the zero entries (empty positions) is of great importance. On the other hand, we observed that for at least 34% of the outputs the relative error was more than one. These observations could motivate us to deal with collinearity in the NMF model.
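The near-collinearity of the Hilbert matrix can be made tangible with exact arithmetic. The following sketch builds \(\mathcal {H}\) with rational entries and evaluates its determinant for a few small orders; the helper names are hypothetical. A tiny determinant is only a symptom of ill-conditioning, not a measure of it, but with entries between 0 and 1 the rapid collapse illustrates how close the columns are to linear dependence.

```python
from fractions import Fraction

def hilbert(n):
    """n x n Hilbert matrix with exact entries 1/(i+j-1) for 1-based i, j."""
    # With 0-based loop indices the denominator i+j-1 becomes i+j+1.
    return [[Fraction(1, i + j + 1) for j in range(n)] for i in range(n)]

def determinant(M):
    """Exact determinant by Gaussian elimination, swapping rows on zero pivots."""
    M = [row[:] for row in M]
    n, det = len(M), Fraction(1)
    for k in range(n):
        pivot = next((i for i in range(k, n) if M[i][k] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != k:
            M[k], M[pivot] = M[pivot], M[k]
            det = -det
        det *= M[k][k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n):
                M[i][j] -= factor * M[k][j]
    return det

for n in (2, 3, 4, 6):
    print(n, float(determinant(hilbert(n))))
```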

To combat the collinearity between the columns of \(\textbf{W}\) or the rows of \(\textbf{Z}\), and thereby promote computational stability in the NMF model, we plug the condition numbers of the \(r\times r\) matrices \(\mathcal {W}=\textbf{W}^T\textbf{W}\) and \(\mathcal {Z}=\textbf{Z}\textbf{Z}^T\) into the model (2.1). Note that sufficient (numerical) linear independence between the columns of \(\textbf{W}\) or the rows of \(\textbf{Z}\) makes the matrices \(\mathcal {W}\) and \(\mathcal {Z}\) acceptably well-conditioned and positive definite, whereas the mentioned collinearity pushes \(\mathcal {W}\) and \(\mathcal {Z}\) toward ill-conditioning and positive semidefiniteness. So, to guard against such troubling issues, the following revised version of the NMF model (2.1) can be proffered:

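where \(\lambda _1\ge 0\) and \(\lambda _2\ge 0\) are the penalty parameters [25, 26] and the maximum magnification (maxmag) and the minimum magnification (minmag) by an arbitrary matrix \(P\in \mathbb {R}^{m\times n}\) are respectively defined in Watkins [30] as

\[\text {maxmag}(P)=\max _{x\ne 0}\frac{\Vert Px\Vert }{\Vert x\Vert },\qquad \text {minmag}(P)=\min _{x\ne 0}\frac{\Vert Px\Vert }{\Vert x\Vert }.\]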

As seen, ill-conditioned choices for \(\textbf{W}\) and \(\textbf{Z}\) impose a meaningful penalty on the model. Meanwhile, although this seldom occurs in practice, \(\mathfrak {\hat{F}}(\textbf{W},\textbf{Z})\) is not well-defined when \(\textbf{W}\) or \(\textbf{Z}\) is rank deficient.

In the model (2.4), well-conditioning is promoted by directly embedding penalty terms in the cost function. Since this keeps the solution process away from the troubling consequences of imposing an extra set of constraints, finding approximate solutions of the model may be less challenging. However, we should not overlook the cost of computing the spectral condition number in the model, especially in large-scale cases. In general, carrying out calculations with high-dimensional dense matrices takes extra CPU time and may increase the numerical errors as well. So, developing sparse approximations of such matrices in data analysis has recently attracted special attention [25, 26].

Among the fundamental sparse structures for symmetric matrices are the diagonal and the (banded) symmetric tridiagonal matrices [7], as well as the symmetric rank-one or rank-two updates of the (scaled) identity matrix [28]. In essence, a cost-benefit analysis should be conducted to select a sparse matrix structure that suits the relevant application. The spectral condition number in the augmented model (2.4) is directly linked to the eigenvalues of the matrix, so, to avoid the considerable time needed for calculations with \(\mathcal {W}\) and \(\mathcal {Z}\), it may be preferable to use diagonal approximations of \(\mathcal {W}\) and \(\mathcal {Z}\) in the model (2.4) by

where

Notably, the above diagonal estimations are derived from

where \(\textbf{D}^+\) denotes the collection of all diagonal matrices with the nonnegative elements in \(\mathbb {R}^{r\times r}\).

As known, measure functions provide helpful tools to evaluate and analyze well-conditioning of the square matrices. They often target the distribution of the matrix eigenvalues [25]. Among them, as a fundamental study to analyze the scaling and sizing of the quasi–Newton updates, Dennis and Wolkowicz [14] proposed the following measure function:

for an arbitrary positive definite matrix \(\textbf{A}\in \mathbb {R}^{r\times r}\). As a factor to evaluate well-conditioning, \(\psi (\textbf{A})\) considers all the eigenvalues of \(\textbf{A}\), rather than only the extreme eigenvalues of the matrix, as the spectral condition number does [30]. So, by employing \(\psi (.)\) instead of \(\kappa (.)\) in (2.4), it is more likely to obtain NMF elements with well-distributed eigenvalues. However, the matrix function (2.5) brings some complexity due to its denominator.

Mathematical inequalities have been widely and purposefully employed by researchers to turn a dense or complicated formula into something manageable. The first aim, in accordance with the norm of the literature, is to raise the level of interpretability of the targeted formula or model. Here, for the sake of simplicity, which is crucial in high-dimensional data analysis, we draw on the first part of the mean inequality chain, which relates the harmonic, geometric, arithmetic, and quadratic means. To proceed, first note that \(\text {det}(\textbf{A})=\displaystyle \prod _{i=1}^{r}\zeta _i\), in which \(\{\zeta _i\}_{i=1}^r\) is the set of the eigenvalues of \(\textbf{A}\). Therefore, bearing the relation between the geometric and the harmonic means in mind, here in the sense of

and noting that the trace of a (square) matrix is equal to the sum of its eigenvalues, the following simple bound for \(\psi (\textbf{A})\) can be obtained:

This provides compelling motivation to employ \(\varphi (.)\) instead of \(\kappa (.)\) in (2.4), to possibly gain NMF elements with well-distributed eigenvalues. So, the modified model is given by

As inherited from the measure function (2.5), the penalty terms of the model (2.6) control the condition number by engaging all the diagonal elements of the relevant matrices, not only the extreme ones. Also, the emerging polynomial terms make the model easier to handle with respect to determining the gradient of the cost function.
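The relation between the measure function and its bound can be checked numerically on diagonal matrices. The sketch below assumes that \(\psi \) is the ratio of the arithmetic mean to the geometric mean of the eigenvalues, i.e. \((\text {trace}(\textbf{A})/r)/\text {det}(\textbf{A})^{1/r}\), and that \(\varphi \) is the ratio of the arithmetic mean to the harmonic mean, i.e. \(\text {trace}(\textbf{A})\,\text {trace}(\textbf{A}^{-1})/r^2\), as the geometric–harmonic mean step suggests. Both forms, the function names and the toy matrices are assumptions made for illustration.

```python
import math

def psi(diagonal):
    """Arithmetic mean over geometric mean of the positive diagonal entries."""
    r = len(diagonal)
    arithmetic = sum(diagonal) / r
    geometric = math.exp(sum(math.log(d) for d in diagonal) / r)
    return arithmetic / geometric

def phi(diagonal):
    """Arithmetic mean over harmonic mean: trace(D) * trace(D^{-1}) / r^2."""
    r = len(diagonal)
    return sum(diagonal) * sum(1.0 / d for d in diagonal) / r ** 2

def diagonal_of_gram(W):
    """Squared column norms of W, i.e. the diagonal of W^T W."""
    return [sum(x * x for x in col) for col in zip(*W)]

# Toy factors, invented for illustration: balanced versus badly scaled columns.
W_balanced = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_skewed = [[1.0, 0.01], [0.0, 0.01], [1.0, 0.0]]
for W in (W_balanced, W_skewed):
    d = diagonal_of_gram(W)
    print(d, psi(d), phi(d))
```

Both quantities equal one when all diagonal entries coincide and grow as the entries spread out, with \(\varphi \) never falling below \(\psi \); this is why \(\varphi \) can serve as a denominator-free surrogate in a penalty term.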

The major defect of the cost function of the model (2.6) is that it is not differentiable everywhere due to the extra penalty terms. Especially, if \(\textbf{W}\) has a zero column or \(\textbf{Z}\) has a zero row, then \(\mathfrak {\breve{F}}\) in (2.6) is not well-defined. Moreover, small magnitudes of the columns of \(\textbf{W}\) or the rows of \(\textbf{Z}\) are computationally troublesome. Backed by these arguments and in favor of simplicity, our revised ANLS (RANLS) method is founded upon the following modified version of the model (2.6):

with some constant \(\gamma >0\). As seen, \(\mathfrak {\tilde{F}}\) is well-defined and also, it is differentiable everywhere. Thus, the next revised versions of the least-squares models (2.2) and (2.3) should alternately be solved:

for all \(k\in \mathbb {Z}^+\).
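The effect of the constant \(\gamma \) can be sketched as follows. The code assumes that the penalty on \(\textbf{W}\) has the form \(\text {trace}(\textbf{D})\,\text {trace}((\textbf{D}+\gamma \textbf{I})^{-1})/r^2\), where \(\textbf{D}\) is the diagonal of \(\textbf{W}^T\textbf{W}\); the exact placement of \(\gamma \) in the model is an assumption here, and the function names are hypothetical. The gradient formula in the comments follows from the product rule applied to this assumed form.

```python
def column_sq_norms(W):
    """Squared Euclidean norms of the columns of W."""
    return [sum(x * x for x in col) for col in zip(*W)]

def penalty(W, gamma=0.0):
    """trace(D) * trace((D + gamma*I)^{-1}) / r^2 with D = diag of W^T W."""
    c = column_sq_norms(W)
    r = len(c)
    return sum(c) * sum(1.0 / (ci + gamma) for ci in c) / r ** 2

def penalty_gradient(W, gamma):
    """Gradient of the smoothed penalty with respect to the entries of W."""
    c = column_sq_norms(W)
    r = len(c)
    total = sum(c)
    inv_sum = sum(1.0 / (ci + gamma) for ci in c)
    # d/dW[i][j] = 2 * W[i][j] * (inv_sum - total / (c_j + gamma)^2) / r^2
    scale = [2.0 * (inv_sum - total / (cj + gamma) ** 2) / r ** 2 for cj in c]
    return [[w * s for w, s in zip(row, scale)] for row in W]

W_zero_col = [[1.0, 0.0], [2.0, 0.0]]  # second column is zero
try:
    penalty(W_zero_col)  # gamma = 0: division by zero
except ZeroDivisionError:
    print("penalty without gamma is undefined for a zero column")
print(penalty(W_zero_col, gamma=1e-10))  # finite, though very large
```

With \(\gamma >0\) the penalty stays finite and differentiable even when a column vanishes, while its large value still discourages such factors.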

3 Adaptive optimal choices for the Dai–Liao parameter based on the ellipsoid norm

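Among the fundamental techniques for solving the unconstrained minimization problem \(\displaystyle \min _{x\in \mathbb {R}^n} f(x)\), the CG methods are iteratively defined by

\[x_{k+1}=x_k+\alpha _k d_k,\qquad d_{k+1}=-g_{k+1}+\beta _k d_k,\qquad k\in \mathbb {Z}^+, \tag{3.1}\]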

starting from some \(x_0\in \mathbb {R}^n\) and \(d_0=-g_0\), in which \(g_k=\nabla f(x_k)\) and \(\beta _k\in \mathbb {R}\) is the CG parameter [3]. Also, the scalar \(\alpha _k>0\), called the step length, is customarily determined as the output of an approximate line search, popularly to meet the (strong) Wolfe conditions [28]. Here, we assume that the cost function f is smooth and its gradient is analytically available. Also, \(\Vert .\Vert \) signifies the \(\ell _2\) (Euclidean) norm and our analysis proceeds under the Wolfe conditions, for which \(s_k^Ty_k>0\), where \(s_k=x_{k+1}-x_k=\alpha _k d_k\).

In the initial years of the current century, the valuable study of Dai and Liao [13] brought considerable attention to the CG techniques in various directions [4]. Recently, Babaie–Kafaki [8] conducted an expository review on the DL method to provide a better understanding of the capabilities of the method from several standpoints. For the DL method, \(\beta _k\) in its original form is set to

\[\beta _k^{\text {DL}}=\frac{g_{k+1}^Ty_k}{d_k^Ty_k}-t\,\frac{g_{k+1}^Ts_k}{d_k^Ty_k}, \tag{3.2}\]

with \(y_k=g_{k+1}-g_k\), where the scalar \(t>0\) is called the DL parameter. It is valuable to note that if

then the DL directions satisfy the sufficient descent condition which is an important ingredient of the convergence [5].
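As a small illustration, the sketch below evaluates the DL parameter in its standard form, \(\beta _k=(g_{k+1}^Ty_k-t\,g_{k+1}^Ts_k)/d_k^Ty_k\), and the resulting search direction on a two-dimensional quadratic. The test function, the step length and the value \(t=1\) are arbitrary choices made for illustration, and the function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def dai_liao_beta(g_new, d, s, y, t):
    """beta = (g_{k+1}^T y_k - t * g_{k+1}^T s_k) / (d_k^T y_k)."""
    return (dot(g_new, y) - t * dot(g_new, s)) / dot(d, y)

def dai_liao_direction(g_new, d, s, y, t):
    """d_{k+1} = -g_{k+1} + beta * d_k."""
    beta = dai_liao_beta(g_new, d, s, y, t)
    return [-g + beta * di for g, di in zip(g_new, d)]

# One step on f(x) = 0.5 * (x1^2 + 10 * x2^2), starting from x0 = (1, 1).
grad = lambda x: [x[0], 10.0 * x[1]]
x0 = [1.0, 1.0]
g0 = grad(x0)
d0 = [-g for g in g0]
alpha = 0.05                       # fixed step length, chosen for illustration
s0 = [alpha * d for d in d0]
x1 = [a + b for a, b in zip(x0, s0)]
g1 = grad(x1)
y0 = [a - b for a, b in zip(g1, g0)]

t = 1.0
d1 = dai_liao_direction(g1, d0, s0, y0, t)
print("descent check g1^T d1 =", dot(g1, d1))
print("conjugacy residual    =", dot(d1, y0) + t * dot(g1, s0))
```

The second printed quantity vanishes up to rounding because the DL direction satisfies the conjugacy condition \(d_{k+1}^Ty_k=-t\,g_{k+1}^Ts_k\) by construction.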

Among the analytical attempts to seek an appropriate formula for t as a classic open problem [4, 8], Babaie–Kafaki and Ghanbari [9] offered a least-squares model, i.e.

by pushing the DL direction toward the direction of the three-term CG method proposed by Zhang, Zhou and Li (ZZL) [31]. As is known, the ZZL directions satisfy a strong form of the sufficient descent condition. Moreover, they use the vector of consecutive gradient differences \(y_k\) as an element of the search direction, besides the vectors \(g_{k+1}\) and \(d_k\), in the framework of a linear combination, unlike the DL directions, which are just linear combinations of \(g_{k+1}\) and \(d_k\). As a result of their plan, Babaie–Kafaki and Ghanbari [9] obtained the following formula for t:

Here, we draw on the ellipsoid vector norm to diversify the adaptive choices for the DL parameter. As an extended form of the \(\ell _2\) norm in the sense of

\[\Vert x\Vert _{\mathcal {M}}=\sqrt{x^T\mathcal {M}x},\qquad x\in \mathbb {R}^n,\]

where \(\mathcal {M}\in \mathbb {R}^{n\times n}\) is a (symmetric) positive definite matrix, the ellipsoid norm has been pivotally employed to analyze the convergence of the steepest descent and the quasi–Newton methods, and particularly, to devise the scaled trust region algorithms [28]. In our strategy, we plan to set several choices for \(\mathcal {M}\) using the quasi–Newton updating formulas [28].

Quasi–Newton methods have traditionally been devised to estimate the (inverse) Hessian in order to determine the search direction in iterative continuous optimization techniques. Mostly being positive definite, the given matrix approximations classically should satisfy the (standard) secant equation, i.e. \(\textbf{B}_{k+1}s_k=y_k\), where \(\textbf{B}_{k+1}\approx \nabla ^2f(x_{k+1})\) [3, 28]. The methods are flexible enough to effectively address large-scale models. For this aim, the memoryless versions of the well-known BFGS and DFP quasi–Newton updating formulas can be applied [6]; that is,

and

both are positive definite approximations of \(\nabla ^2f(x_{k+1})^{-1}\), for all \(k\in \mathbb {Z}^+\). Here, MLBFGS and MLDFP are respectively shortened forms of the ‘memoryless BFGS’ and the ‘memoryless DFP’.

Available algorithms continually need to be reformed to meet the need for diversity in the computational tools. There is a lot of evidence in the methodology literature that such efforts have evolved the algorithmic schemes over time. So, we should not neglect the effects of these evolutionary plans on the hybrid CG algorithms as well. With this fact at the forefront, here we consider the ellipsoid extension of the least-squares model (3.4) as follows:

which yields

So, \(t_k^{\text {ZZL}}\) given by (3.5) is the solution of (3.4) by setting \(\mathcal {M}\) as the identity matrix. Also, if we let \(\mathcal {M}=\textbf{B}_{k+1}\) given by a quasi–Newton update for the Hessian, then, because of the standard secant equation we have

which is an effective formula already suggested by Dai and Kou (DK) [12]. This salient fact underlines the effectiveness of the given extended least-squares model. The setting \(t=t_k^{\text {DK}}\) in the DL method ensures (3.3), which directly leads to the sufficient descent property. Moreover, if we let \(\mathcal {M}=\textbf{H}_{k+1}^{\text {MLBFGS}}\) or \(\mathcal {M}=\textbf{H}_{k+1}^{\text {MLDFP}}\), then we respectively obtain

or

From the Cauchy–Schwarz inequality, it can be seen that \(t_k^{\text {MLBFGS}}>0\) and \(t_k^{\text {MLDFP}}>0\). To gain the sufficient descent property in light of (3.3), here we propose the following restricted versions of (3.7) and (3.8):

and

As a result, global convergence of the DL method with the given formulas for t can be proved following the analysis of [2, 13].
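The ellipsoid-based choices can be explored numerically with a short sketch. It rests on two assumptions. First, since \(s_k=\alpha _kd_k\), the difference between the DL direction and a three-term direction of the form \(-g_{k+1}+(g_{k+1}^Ty_k/d_k^Ty_k)d_k-(g_{k+1}^Td_k/d_k^Ty_k)y_k\) is a multiple of \(y_k-ts_k\), so the least-squares model is taken to be the minimization of \(\Vert ts_k-y_k\Vert _{\mathcal {M}}^2\), whose solution is \(t=s_k^T\mathcal {M}y_k/s_k^T\mathcal {M}s_k\). Second, the memoryless updates are taken in their unscaled forms, obtained by replacing the previous approximation with the identity matrix. All function names are hypothetical, and the restricted versions of the formulas are not reproduced.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def ellipsoid_t(s, y, apply_M):
    """Minimizer of ||t*s - y||_M^2 over t, i.e. (s^T M y) / (s^T M s)."""
    Ms = apply_M(s)  # M is symmetric, so s^T M y = (M s)^T y
    return dot(Ms, y) / dot(Ms, s)

def identity(s, y):
    return lambda v: list(v)

def ml_bfgs_hessian(s, y):
    """v -> B v with B = I - s s^T / s^T s + y y^T / s^T y, so that B s = y."""
    ss, sy = dot(s, s), dot(s, y)
    return lambda v: [vi - dot(s, v) / ss * si + dot(y, v) / sy * yi
                      for vi, si, yi in zip(v, s, y)]

def ml_bfgs_inverse(s, y):
    """v -> H v for the unscaled memoryless BFGS inverse-Hessian approximation."""
    sy, yy = dot(s, y), dot(y, y)
    def apply(v):
        sv, yv = dot(s, v), dot(y, v)
        coef_s = (1.0 + yy / sy) * sv / sy - yv / sy
        coef_y = -sv / sy
        return [vi + coef_s * si + coef_y * yi for vi, si, yi in zip(v, s, y)]
    return apply

def ml_dfp_inverse(s, y):
    """v -> H v with H = I - y y^T / y^T y + s s^T / s^T y."""
    sy, yy = dot(s, y), dot(y, y)
    return lambda v: [vi - dot(y, v) / yy * yi + dot(s, v) / sy * si
                      for vi, si, yi in zip(v, s, y)]

# Toy vectors with s^T y > 0, invented for illustration.
s, y = [1.0, 2.0], [2.0, 3.0]
for name, factory in [("identity", identity), ("Hessian (secant)", ml_bfgs_hessian),
                      ("MLBFGS", ml_bfgs_inverse), ("MLDFP", ml_dfp_inverse)]:
    print(name, ellipsoid_t(s, y, factory(s, y)))
```

With the identity matrix the sketch returns \(s_k^Ty_k/\Vert s_k\Vert ^2\), and with any matrix satisfying the secant equation it returns \(\Vert y_k\Vert ^2/s_k^Ty_k\). For the two inverse-Hessian choices, \(\textbf{H}y_k=s_k\) gives a numerator equal to \(\Vert s_k\Vert ^2\), and positivity of the resulting values is in line with the Cauchy–Schwarz argument.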

4 Computational experiments

We offer here some computational confirmation of our theoretical analyses, starting with numerical tests on the CUTEr library [18] with \(n\ge 50\), comprising 96 problems. All the tests were performed in MATLAB version 7.14.0.739 (R2012a), installed on the CentOS 6.2 server Linux operating system, on a computer with an AMD FX–9800P RADEON R7, 12 COMPUTE CORES 4C+8G, 2.70 GHz CPU and 8 GB of RAM. The effectiveness of the parametric choices (3.6), (3.9), (3.10) and the Hager–Zhang (HZ) formula [19], i.e.

\[t_k^{\text {HZ}}=2\,\frac{\Vert y_k\Vert ^2}{s_k^Ty_k}, \tag{4.1}\]

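is appraised for the DL+ method with

\[\beta _k^{\text {DL}+}=\max \left\{ \frac{g_{k+1}^Ty_k}{d_k^Ty_k},0\right\} -t\,\frac{g_{k+1}^Ts_k}{d_k^Ty_k}, \tag{4.2}\]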

proposed for establishing convergence for general cost functions [13]. In our tests, DK+, DL–BFGS+, DL–DFP+ and HZ+ stand for the iterative method (3.1) with the CG parameter (4.2), in which t is respectively computed by (3.6), (3.7), (3.8) and (4.1). Since in rare iterations the DL+ method may fail to generate a descent direction, a restart (by the negative gradient vector) has also been employed, as suggested in Dai and Liao [13].
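The overall iteration can be sketched in a few lines. The code below is a simplified stand-in for the tested implementations: it uses the DL+ parameter in its standard form (the nonnegative truncation of the first term), a plain Armijo backtracking search instead of the approximate Wolfe conditions, a restart with the negative gradient whenever the new direction is not a descent direction, and the formulas \(\Vert y_k\Vert ^2/s_k^Ty_k\) and \(2\Vert y_k\Vert ^2/s_k^Ty_k\) for t. The function names, the quadratic test function and the line search constants are assumptions made for illustration.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def dl_plus_cg(f, grad, x, t_rule, tol=1e-6, max_iter=10000):
    """Nonlinear CG with the DL+ parameter, Armijo backtracking and restarts."""
    g = grad(x)
    d = [-gi for gi in g]
    iterations = 0
    for _ in range(max_iter):
        if dot(g, g) ** 0.5 < tol * (1.0 + abs(f(x))):
            break
        # Backtracking (Armijo) line search: a simplification of a Wolfe search.
        alpha, fx, slope = 1.0, f(x), dot(g, d)
        while f([xi + alpha * di for xi, di in zip(x, d)]) > fx + 1e-4 * alpha * slope:
            alpha *= 0.5
        s = [alpha * di for di in d]
        x = [xi + si for xi, si in zip(x, s)]
        g_new = grad(x)
        y = [a - b for a, b in zip(g_new, g)]
        dy = dot(d, y)
        if dy > 0.0:
            beta = max(dot(g_new, y) / dy, 0.0) - t_rule(s, y) * dot(g_new, s) / dy
            d = [-gn + beta * di for gn, di in zip(g_new, d)]
        if dy <= 0.0 or dot(g_new, d) >= 0.0:
            d = [-gn for gn in g_new]  # restart with the negative gradient
        g = g_new
        iterations += 1
    return x, iterations

# Convex quadratic test function, chosen for illustration.
f = lambda x: 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)
grad = lambda x: [x[0], 10.0 * x[1]]
t_dk = lambda s, y: dot(y, y) / dot(s, y)
t_hz = lambda s, y: 2.0 * dot(y, y) / dot(s, y)

x_dk, iters_dk = dl_plus_cg(f, grad, [1.0, 1.0], t_dk, max_iter=200)
x_hz, iters_hz = dl_plus_cg(f, grad, [1.0, 1.0], t_hz, max_iter=200)
print(iters_dk, f(x_dk))
print(iters_hz, f(x_hz))
```

Since every accepted step satisfies the Armijo condition along a descent direction, the cost decreases monotonically; the sketch makes no claim about matching the iteration counts of a Wolfe-based implementation.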

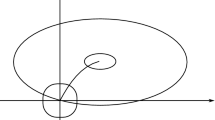

For the algorithms, we used the approximate Wolfe conditions of Hager and Zhang [19] with similar settings, and set the stopping criteria to \(k>10000\) or \(\Vert g_k\Vert <10^{-6}(1+|f_k|)\). Also, we set \(\eta =0.26\) in (3.9) and (3.10), to enhance the possibility of employing (3.7) and (3.8). To visually assess the algorithmic results, we applied the Dolan–Moré performance profile [15], with comparisons based on the TNFGE and CPUT metrics, which are acronyms for the ‘total number of function and gradient evaluations’ (as outlined in Hager and Zhang [19]) and the ‘CPU time’, respectively. Figure 1 presents the results, from which it can be seen that DL–BFGS+ and DL–DFP+ are generally preferable to DK+ and HZ+, especially with respect to TNFGE. Meanwhile, with respect to CPUT, at times DK+ and HZ+ are competitive with DL–BFGS+ and DL–DFP+. This observation is mainly related to the structure of the formulas (3.7) and (3.8), which is to some extent more complex than that of (3.6) and (4.1). Also, since DL–DFP+ is slightly preferable to DL–BFGS+, we can conclude that the setting (3.8) for the DL+ method is more effective than the setting (3.7).
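For readers unfamiliar with performance profiles, the following sketch shows how such a profile is computed: for each solver, it reports the fraction of problems on which the solver's cost is within a factor \(\tau \) of the best cost achieved by any solver. The function name, the solver labels and the cost values are invented for illustration and are unrelated to the reported experiments.

```python
def performance_profile(costs, taus):
    """costs: dict solver -> list of positive costs per problem, with
    float('inf') marking a failure. Returns dict solver -> list of rho(tau),
    the fraction of problems whose performance ratio is at most tau."""
    solvers = list(costs)
    n_problems = len(costs[solvers[0]])
    best = [min(costs[s][p] for s in solvers) for p in range(n_problems)]
    profile = {}
    for s in solvers:
        ratios = [costs[s][p] / best[p] for p in range(n_problems)]
        profile[s] = [sum(r <= tau for r in ratios) / n_problems for tau in taus]
    return profile

# Toy costs (e.g. evaluation counts) on four problems, invented for illustration.
inf = float("inf")
toy_costs = {"solver_A": [10.0, 20.0, 30.0, inf],
             "solver_B": [12.0, 10.0, 30.0, 50.0]}
profile = performance_profile(toy_costs, taus=[1.0, 1.5, 2.0])
for name, values in profile.items():
    print(name, values)
```

The value at \(\tau =1\) is the fraction of problems on which a solver is (jointly) the best, and the limit for large \(\tau \) is the fraction of problems it solves at all.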

To bring the validity of the given revised NMF model to light, in this part of our computational experiments we investigate the efficiency of DL–DFP+ for ANLS by solving the least-squares subproblems (2.2)–(2.3) of the minimization model (2.1), and for RANLS by solving the least-squares subproblems (2.8)–(2.9) of the minimization model (2.7). To handle the nonnegativity constraints in the subproblems, we followed the suggestion of Li et al. [22] and employed a proximal scheme in the sense of setting the negative entries of the iterative outputs equal to zero. For RANLS, we set \(\lambda _1=\lambda _2=1\) and \(\gamma =10^{-10}\) in (2.7), and for both ANLS and RANLS, we adopted the termination condition of Liu and Li [23] as well, which is

with \(\mathcal {F}=\mathfrak {F}\) and \(\mathcal {F}=\tilde{\mathfrak {F}}\), respectively, and \(\nu =10^{-2}\). By using the uniform distribution, the test matrices were generated randomly with various dimensions, together with the initial estimates of the NMF elements, as declared in Ahookhosh et al. [1]. Outputs have been outlined in Table 1, including the spectral condition number (Cond) and the relative error (RelErr), calculated by

To recapitulate the results, we can observe that RANLS and ANLS are approximately competitive with respect to accuracy, whereas from the condition number viewpoint, which is the main target of this study, RANLS is generally preferable to ANLS. Hence, RANLS can be considered successful in delivering well-conditioned NMF elements with satisfactory accuracy.

5 Conclusions

We have mainly concentrated on modifying a classic optimization model of the nonnegative matrix factorization problem, which frequently arises in a wide range of practical fields. Avoiding the possibility of ill-conditioning in the results of the decomposition motivated us to revise the model by embedding a measure of the condition numbers of the diagonalized forms of the output matrices. The embedded well-conditioning (penalty) term has been extracted from the Dennis–Wolkowicz measure function [14]. Then, based on an ellipsoid norm-oriented least-squares model, some optimal choices for the Dai–Liao parameter have been suggested. Driven by the great need for algorithmic tools with low memory consumption, the ellipsoid norms have been centered on the memoryless BFGS and DFP formulas. The method's influential parameter has been computed by pushing the Dai–Liao search direction toward that of a well-functioning three-term conjugate gradient algorithm. Then, to examine the performance of the Dai–Liao method when it is equipped with the given formulas as the parametric settings, some computational tests were performed on the CUTEr functions. The findings were evaluated using the well-known Dolan–Moré performance profile. The results demonstrated the positive impact of our suggestions for the Dai–Liao parameter. Furthermore, the quality of the given revised nonnegative matrix factorization model has been assessed in several random cases. The results showed that the revised model can produce better-conditioned factorization elements with reasonable relative errors. Thus, in practical terms, the computational experiments have supported our theoretical assertions.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

[1] Ahookhosh, M., Hien, L.T.K., Gillis, N., Patrinos, P.: Multi-block Bregman proximal alternating linearized minimization and its application to orthogonal nonnegative matrix factorization. Comput. Optim. Appl. 79(3), 681–715 (2021)

[2] Aminifard, Z., Babaie-Kafaki, S.: An optimal parameter choice for the Dai–Liao family of conjugate gradient methods by avoiding a direction of the maximum magnification by the search direction matrix. 4OR. 17, 317–330 (2019)

[3] Andrei, N.: Modern Numerical Nonlinear Optimization. Switzerland, Springer, Cham (2006)

[4] Andrei, N.: Open problems in conjugate gradient algorithms for unconstrained optimization. B. Malays. Math. Sci. So. 34(2), 319–330 (2011)

[5] Babaie-Kafaki, S.: On the sufficient descent condition of the Hager–Zhang conjugate gradient methods. 4OR. 12(3), 285–292 (2014)

[6] Babaie-Kafaki, S.: On optimality of the parameters of self-scaling memoryless quasi-Newton updating formulae. J. Optim. Theory Appl. 167(1), 91–101 (2015)

[7] Babaie-Kafaki, S.: A monotone preconditioned gradient method based on a banded tridiagonal inverse Hessian approximation. UPB Sci. Bull. Ser. A: Appl. Math. Phys. 80(1), 55–62 (2018)

[8] Babaie-Kafaki, S.: A survey on the Dai-Liao family of nonlinear conjugate gradient methods. RAIRO-Oper. Res. 57, 43–58 (2023)

[9] Babaie-Kafaki, S., Ghanbari, R.: Two optimal Dai-Liao conjugate gradient methods. Optimization 64(11), 2277–2287 (2015)

[10] Berry, M.W., Browne, M., Langville, A.N., Pauca, V.P., Plemmons, R.J.: Algorithms and applications for approximate nonnegative matrix factorization. Comput. Stat. Data Anal. 52(1), 155–173 (2007)

[11] Cho, Y.C., Choi, S.: Nonnegative features of spectro-temporal sounds for classification. Pattern Recognit. Lett. 26(9), 1327–1336 (2005)

[12] Dai, Y.H., Kou, C.X.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23(1), 296–320 (2013)

[13] Dai, Y.H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43(1), 87–101 (2001)

[14] Dennis, J.E., Wolkowicz, H.: Sizing and least-change secant methods. SIAM J. Numer. Anal. 30(5), 1291–1314 (1993)

[15] Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2, Ser. A), 201–213 (2002)

[16] Eldén, L.: Matrix Methods in Data Mining and Pattern Recognition. SIAM, Philadelphia (2007)

[17] Gan, J., Liu, T., Li, L., Zhang, J.: Nonnegative matrix factorization: a survey. Comput. J. 64(7), 1080–1092 (2021)

[18] Gould, N.I.M., Orban, D., Toint, Ph.L.: CUTEr: a constrained and unconstrained testing environment, revisited. ACM Trans. Math. Software 29(4), 373–394 (2003)

[19] Hager, W.W., Zhang, H.: Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Software 32(1), 113–137 (2006)

[20] Han, J., Kamber, M., Pei, J.: Data Mining: Concepts and Techniques, 3rd Edition. Morgan Kaufmann (2012)

[21] Koren, Y., Bell, R., Volinsky, C.: Matrix factorization techniques for recommender systems. Computer 42(8), 30–37 (2009)

[22] Li, X., Zhang, W., Dong, X.: A class of modified FR conjugate gradient method and applications to nonnegative matrix factorization. Comput. Math. Appl. 73, 270–276 (2017)

[23] Liu, H., Li, X.: Modified subspace Barzilai-Borwein gradient method for non-negative matrix factorization. Comput. Optim. Appl. 55(1), 173–196 (2013)

[24] Pompili, F., Gillis, N., Absil, P.A., Glineur, F.: Two algorithms for orthogonal nonnegative matrix factorization with application to clustering. Neurocomputing 141, 15–25 (2014)

[25] Roozbeh, M., Babaie-Kafaki, S., Aminifard, Z.: Two penalized mixed-integer nonlinear programming approaches to tackle multicollinearity and outliers effects in linear regression models. J. Ind. Manag. Optim. 17(6), 3475 (2021)

[26] Roozbeh, M., Babaie-Kafaki, S., Aminifard, Z.: Improved high-dimensional regression models with matrix approximations applied to the comparative case studies with support vector machines. Optim. Methods Softw. 37(5), 1912–1929 (2022)

[27] Shahnaz, F., Berry, M.W., Pauca, V.P., Plemmons, R.J.: Document clustering using nonnegative matrix factorization. Inf. Process. Manag. 42(2), 373–386 (2006)

[28] Sun, W., Yuan, Y.X.: Optimization Theory and Methods: Nonlinear Programming. Springer, New York (2006)

[29] Wang, Y., Jia, Y., Hu, C., Turk, M.: Nonnegative matrix factorization framework for face recognition. Int. J. Pattern Recognition Artif. Intell. 19(04), 495–511 (2005)

[30] Watkins, D.S.: Fundamentals of Matrix Computations. John Wiley and Sons, New York (2002)

[31] Zhang, L., Zhou, W., Li, D.H.: Some descent three-term conjugate gradient methods and their global convergence. Optim. Methods Softw. 22(4), 697–711 (2007)

Acknowledgements

The authors thank the anonymous reviewers for their valuable comments, which helped to improve the quality and organization of this work.

Funding

Open access funding provided by Libera Universitá di Bolzano within the CRUI-CARE Agreement. No funds, grants, or other support was received.

Author information

Contributions

All authors whose names appear on the submission: - made substantial contributions to the conception and design of the work, the acquisition, analysis, or interpretation of data; - drafted the work or revised it critically for important intellectual content; - approved the version to be published; and - agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Ethics declarations

Ethical Approval

The research does not involve human or animal participants. Also, the authors declare that they take on all the ethical responsibilities clarified by the journal.

Competing Interests

The authors have no competing interests to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Babaie-Kafaki, S., Dargahi, F. & Aminifard, Z. On solving a revised model of the nonnegative matrix factorization problem by the modified adaptive versions of the Dai–Liao method. Numer Algor (2024). https://doi.org/10.1007/s11075-024-01886-w

DOI: https://doi.org/10.1007/s11075-024-01886-w

Keywords

- Unconstrained optimization

- Nonnegative matrix factorization

- Conjugate gradient algorithm

- Quasi–Newton updating formula

- Ellipsoid norm