Abstract

In this paper, we propose a collocation scheme for efficiently solving the mixed time-fractional Black-Scholes (MTF-BS) model to obtain the option price. We first derive the mixed fractional Black-Scholes (MF-BS) partial differential equation (PDE) using the delta hedging strategy and the mixed fractional geometric Brownian motion (MFGBM) model. To simplify the problem, we transform the MTF-BS PDE into a form involving the modified Riemann-Liouville derivative. A collocation method is then applied to the transformed equation, in which the solution is represented as a series of fractional Jaiswal functions with unknown coefficients. Using operational matrices and collocation points, we reduce the problem to a linear system of equations and examine the convergence and stability of the scheme in Sobolev space. Finally, we present four examples that demonstrate the effectiveness and accuracy of the method.


1 Introduction

Option pricing is a financial concept that involves determining the value of a financial contract known as an option [1]. The pricing of options is crucial for investors and traders in the financial markets as it helps them to make informed decisions about buying, selling, or holding these instruments. By understanding the value of options, market participants can assess the potential risks and rewards associated with their investment strategies. The Black-Scholes (BS) model, developed in the 1970s, is one of the prominent methodologies used for option pricing [2]. This model takes into consideration factors such as the asset price, the strike price, the expiration time, the expected volatility, interest rates, and dividends. By incorporating these variables, the model generates an estimated value for the option.
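As a point of reference for the classical model just described, the sketch below evaluates the standard Black-Scholes prices of European call and put options. It is a textbook illustration, not part of the paper's mixed fractional model; the function names and the optional continuous dividend yield `q` are our own choices.

```python
import math


def norm_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, via math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def black_scholes(S: float, K: float, r: float, sigma: float, T: float,
                  q: float = 0.0) -> tuple:
    """Classical Black-Scholes prices (call, put) of European options.

    S: asset price, K: strike price, r: risk-free rate, sigma: volatility,
    T: time to expiration (years), q: continuous dividend yield.
    """
    sqrt_T = math.sqrt(T)
    d1 = (math.log(S / K) + (r - q + 0.5 * sigma**2) * T) / (sigma * sqrt_T)
    d2 = d1 - sigma * sqrt_T
    disc_S = S * math.exp(-q * T)   # dividend-discounted asset price
    disc_K = K * math.exp(-r * T)   # strike discounted at the risk-free rate
    call = disc_S * norm_cdf(d1) - disc_K * norm_cdf(d2)
    put = disc_K * norm_cdf(-d2) - disc_S * norm_cdf(-d1)
    return call, put


call, put = black_scholes(S=100.0, K=100.0, r=0.05, sigma=0.2, T=1.0)
print(f"call = {call:.4f}, put = {put:.4f}")  # call = 10.4506, put = 5.5735
```

The two prices satisfy put-call parity, call - put = S e^{-qT} - K e^{-rT}, which is a quick consistency check.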

Financial models play an important role in option pricing because they help determine the fair value or theoretical price of an option contract [3, 4]. These models often use stochastic processes to incorporate uncertainty and randomness into their calculations. In recent years, various stochastic processes have been used in financial models, one of the most important being fractional Brownian motion (fBm) [5, 6]. The fBm was first introduced by Kolmogorov in 1940, and it is widely employed in financial modeling because it captures long-range dependence, self-similarity, and the fractal nature of asset price movements over time [7]. Unlike standard Brownian motion, which has independent and identically distributed increments, fBm incorporates memory effects, making it a suitable tool for modeling financial data with persistent autocorrelation. The process is parameterized by the Hurst exponent H, which characterizes the level of dependence in the data; if \(H \in (\frac{1}{2}, 1)\), the process has long memory [8]. However, since fBm with \(H \ne \frac{1}{2}\) is not a semimartingale, the related financial models admit arbitrage opportunities [9,10,11]. Cheridito studied fBm models and showed how arbitrage can be removed from them [12]. Moreover, in 2001 he introduced the mixed fractional Brownian motion (mfBm), which combines the properties of fBm and standard Brownian motion, as [13]:

where \(\alpha \) and \(\beta \) are real constants. Cheridito showed that the mfBm with \(H \in (\frac{3}{4}, 1)\) is equivalent to a martingale, so mixed fractional models of the stock price do not admit arbitrage opportunities [13]. Zili later studied the process and presented some of its stochastic properties and characteristics [14]. Cai et al. [15], Zhang et al. [16], and Xiao et al. [17] applied the process in different financial models and reported that it is more efficient than standard Brownian motion and fBm.
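To make the mfBm concrete, the following sketch samples one path of \(M_t^{H}=\alpha B_t+\beta B_t^{H}\), assuming the standard form of [13]: B is a standard Brownian motion and \(B^H\) an independent fBm with Hurst exponent H. The fBm part is drawn exactly from its covariance \(\frac{1}{2}(s^{2H}+u^{2H}-|s-u|^{2H})\) through a Cholesky factorization, which costs \(O(n^3)\) and is only meant for small grids. The function name and signature are illustrative, not taken from the paper.

```python
import numpy as np


def mixed_fbm(n_steps: int, T: float, H: float, alpha: float, beta: float,
              seed: int = 0):
    """Sample M_t = alpha*B_t + beta*B_t^H on an equispaced grid of [0, T].

    Returns (t, M) with len(t) == len(M) == n_steps + 1 and M[0] == 0.
    """
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, T, n_steps + 1)
    dt = T / n_steps
    # Brownian part: cumulative sum of independent N(0, dt) increments.
    dB = rng.normal(0.0, np.sqrt(dt), n_steps)
    B = np.concatenate(([0.0], np.cumsum(dB)))
    # fBm part: Cov(B_s^H, B_u^H) = 0.5 * (s^{2H} + u^{2H} - |s - u|^{2H}).
    s, u = np.meshgrid(t[1:], t[1:], indexing="ij")
    cov = 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))
    L = np.linalg.cholesky(cov)          # exact but O(n^3) sampling
    BH = np.concatenate(([0.0], L @ rng.standard_normal(n_steps)))
    return t, alpha * B + beta * BH


t, M = mixed_fbm(n_steps=250, T=1.0, H=0.8, alpha=1.0, beta=1.0, seed=42)
```

For long grids, a circulant-embedding (Davies-Harte) sampler would be the usual faster alternative; the Cholesky route is chosen here only for transparency.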

1.1 The methodology for determining the option price PDE

Here, we derive the option price PDE under the MFGBM model. Let \(\left( \Omega , \mathcal {F}, \mathbb {P} \right) \) be a probability space, and let the dynamics of the asset price satisfy the following equation:

where \(\mu \) and \(\sigma \) are constants.
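Assuming the dynamics above take the usual MFGBM form \(dS_t=\mu S_t\,dt+\sigma S_t\,dM_t^{H}\), a path of S can be approximated pathwise by an explicit Euler scheme driven by any sampled path of \(M^{H}\) on a time grid. This is a hedged, first-order illustration; the function name is hypothetical.

```python
import numpy as np


def mfgbm_euler(S0: float, mu: float, sigma: float,
                t: np.ndarray, M: np.ndarray) -> np.ndarray:
    """Explicit Euler scheme for dS = mu*S dt + sigma*S dM along a given path M(t)."""
    S = np.empty(len(t))
    S[0] = S0
    for k in range(len(t) - 1):
        dt = t[k + 1] - t[k]
        dM = M[k + 1] - M[k]            # increment of the driving mfBm path
        S[k + 1] = S[k] * (1.0 + mu * dt + sigma * dM)
    return S
```

With a zero driving path the scheme reduces to deterministic growth, so \(S_T\approx S_0e^{\mu T}\), which is a quick sanity check.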

Lemma 1

Let \( \mathcal {V}(S, t)\) denote the value of the option on the underlying asset S at time t. Then \(\mathcal {V}(S, t)\) satisfies

where

Proof

Let Y denote a replicating portfolio that is long the call option \(\mathcal {V}\) and short \(\Delta \) shares of the underlying stock S. Then the portfolio value satisfies

Applying Proposition 2.9 and Theorem 2.10 of [18] to Y and \(\mathcal {V}\), we deduce that

To derive the PDE, we eliminate the stochastic part of (6) by setting \(\Delta =\frac{\partial \mathcal {V}}{\partial S}\), which is the delta-hedging assumption underlying the strategies of Leland and of Kabanov and Safarian [19, 20]. Therefore, we conclude that

where \(\nu _t=\Delta (t+dt) - \Delta (t)\) and \(k=k_0 n^{\xi - 1/2}\) is the transaction cost level, in which \(k_0 >0\) and \(\xi \in {[0,1/2]} \) are constants and n is the number of revisions. Also, we have

Since the random part of (7) has been removed, the hedge portfolio is riskless, so its expected return must equal the risk-free rate r. Thus, we deduce that

Additionally, one has

By (9) and (10), we deduce that

Let us consider

Finally, we obtain

1.2 Fractional model

Owing to their wide range of applications, fractional partial differential equations (FPDEs) have attracted the interest of scientists from a variety of disciplines, and many articles have investigated fractional Black-Scholes models. Zhang et al. studied a time-fractional option pricing model with asset-price-dependent variable order and proposed a fast numerical technique to solve it [21]. Soleymani and Zhu introduced a \((2 - \alpha )\)-order discretization of the Caputo fractional derivative on graded meshes in the time dimension to solve the time-fractional option pricing PDE [22]. Other methods for the numerical solution of the time-fractional option pricing PDE are given in [23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39]. Mesgarani et al. investigated the approximation of the solution u(x, t) of the time-fractional Black-Scholes model with a Caputo time derivative, subject to initial and boundary conditions [31]. Mesgarani et al. also introduced a fuzzy mathematical programming approach originally designed for problems in the framework of linear programming (LP) models [32]. Aghdam et al. combined orthogonal Gegenbauer polynomials with an approximation of the Caputo fractional derivative to solve the fractional Black-Scholes model [33]. In the sequel, we consider the following equation,

where \(\frac{\partial ^{\alpha } \mathcal {V}(S,\gamma )}{\partial \gamma ^{\alpha }}\) is the modified Riemann-Liouville derivative
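For reference, the modified Riemann-Liouville derivative is usually taken in Jumarie's sense, and we assume that is the definition meant here. For \(0<\alpha <1\) it reads as follows; for an absolutely continuous f it coincides with the Caputo derivative, which is consistent with the Caputo approximation used in Section 3. The second formula is the resulting power rule.

```latex
\frac{\partial^{\alpha} f(\gamma)}{\partial \gamma^{\alpha}}
  = \frac{1}{\Gamma(1-\alpha)}\,\frac{d}{d\gamma}
    \int_{0}^{\gamma} (\gamma-\tau)^{-\alpha}\bigl(f(\tau)-f(0)\bigr)\,d\tau
  = \frac{1}{\Gamma(1-\alpha)} \int_{0}^{\gamma} (\gamma-\tau)^{-\alpha} f'(\tau)\,d\tau,
\qquad
\frac{\partial^{\alpha} \gamma^{\mu}}{\partial \gamma^{\alpha}}
  = \frac{\Gamma(\mu+1)}{\Gamma(\mu+1-\alpha)}\,\gamma^{\mu-\alpha}\quad(\mu>0).
```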

For this problem, we consider the initial and boundary conditions as

Let \(S=e^x\), \(\gamma =T-t\), and \(\mathcal {J}(x,t)=\mathcal {V}(e^x,T-t)\). Then we have

where

We thus obtain
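The spatial part of this change of variables can be checked directly. With \(S=e^{x}\) and \(\mathcal {J}(x,\cdot )=\mathcal {V}(e^{x},\cdot )\), the chain rule gives the identities below. In particular, since \(S^2>0\), \(sign(\frac{\partial ^2 \mathcal {V}}{\partial S^2})=sign(\frac{\partial ^2 \mathcal {J}}{\partial x^2}-\frac{\partial \mathcal {J}}{\partial x})\). This is a worked check of the substitution only, not a restatement of the transformed equations.

```latex
\frac{\partial \mathcal{J}}{\partial x} = e^{x}\frac{\partial \mathcal{V}}{\partial S} = S\,\frac{\partial \mathcal{V}}{\partial S},
\qquad
\frac{\partial^{2} \mathcal{J}}{\partial x^{2}}
  = S\,\frac{\partial \mathcal{V}}{\partial S} + S^{2}\,\frac{\partial^{2} \mathcal{V}}{\partial S^{2}}
\;\Longrightarrow\;
S^{2}\,\frac{\partial^{2} \mathcal{V}}{\partial S^{2}}
  = \frac{\partial^{2} \mathcal{J}}{\partial x^{2}} - \frac{\partial \mathcal{J}}{\partial x}.
```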

Remark 1

We restrict the spatial domain to a finite interval [c, d] for numerical reasons and to assess our scheme with artificial exact solutions. Adding a source term \(\mathcal {F}(x, t)\) to problem (17)–(19), we obtain the following problem:

Remark 2

In real-world problems, the initial and boundary conditions are subject to some limitations. Since the stock price varies within a reasonable interval, we first obtain the stock price from the financial model, which gives its minimum and maximum values. We then use these values to derive the boundary conditions for the option price.
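Remark 2 can be illustrated with the standard far-field (Dirichlet) conditions for European options on a truncated price interval \([s_{\min },s_{\max }]\), written in terms of the time to maturity \(\tau \). These asymptotic conditions are a common choice that we assume for illustration; they are not necessarily identical to the paper's boundary conditions, and the function name is hypothetical.

```python
import math


def far_field_bc(kind: str, s_min: float, s_max: float,
                 K: float, r: float, tau: float) -> tuple:
    """Return (V(s_min, tau), V(s_max, tau)) for a European call or put.

    tau is the time to maturity. The values follow the usual asymptotics:
    V ~ 0 deep out of the money, and V ~ intrinsic value with a discounted
    strike deep in the money.
    """
    disc_K = K * math.exp(-r * tau)
    if kind == "call":
        return 0.0, max(s_max - disc_K, 0.0)
    if kind == "put":
        return max(disc_K - s_min, 0.0), 0.0
    raise ValueError("kind must be 'call' or 'put'")


# Parameters of Example 2; in the log variable the interval is [ln 0.1, ln 33.33].
lo, hi = far_field_bc("call", s_min=0.1, s_max=33.33, K=5.0, r=0.1, tau=1.0)
```

At \(\tau =0\) these values reduce to the option payoff at the endpoints, so they are compatible with the terminal condition.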

1.3 Spectral methods

Spectral methods solve a differential equation by representing its solution as a series of well-defined smooth functions. They have gained popularity and are now considered a valid alternative to finite difference and finite element methods for the numerical solution of partial differential equations. Spectral methods are global: the computation at any point uses data from the entire domain rather than only from nearby points, because all available function values contribute to the approximation. The main polynomial spectral approaches for solving partial differential equations are the Galerkin, tau, and collocation methods.

1.4 Contribution and structure of the paper

To the best of the authors' knowledge, the numerical solution of problem (12)–(15) has not been investigated before, and this study is the first attempt. We propose a polynomial collocation scheme based on Jaiswal polynomials to solve problem (20)–(22). To this end, the solution is expanded as a series of fractional Jaiswal functions with unknown coefficients. Substituting this expansion into the problem and collocating the resulting equations yields a linear system of equations; solving it gives the unknown coefficients and hence the numerical solution. The convergence of the scheme is studied in Sobolev space, and several examples demonstrate the effectiveness of the method.

The main contributions of the paper are as follows:

- Introducing a fast collocation method to solve the model numerically.
- Introducing the fractional form of the Jaiswal polynomials.
- Computing operational matrices of the fractional Jaiswal polynomials.
- Analyzing the error in Sobolev space.
- Providing test problems with both known (artificial) and unknown exact solutions.

The rest of the article is organized as follows. In Section 2, we introduce the Jaiswal polynomials and their fractional form and obtain the operational matrices of these polynomials. Section 3 constructs a numerical scheme for problem (20)–(22), and Section 4 analyzes the proposed scheme. Section 5 presents examples that demonstrate the performance of the approach. Finally, Section 6 concludes the article.

2 Jaiswal polynomials

In this section, we construct fractional Jaiswal functions from the Jaiswal polynomials, which were introduced by D.V. Jaiswal in 1974 [40]. The Jaiswal polynomials can be defined by the following explicit formula.

For every \(n\ge 0\), the following relation holds

Fractional Jaiswal functions are defined by applying the transformation \(y\rightarrow y^{h}\), \(h\in \mathbb {R}^{+}\), to (23) as follows.

Moreover, we have

We can represent a continuous function \(\mathcal {J}(x,t)\) by utilizing fractional Jaiswal functions, as demonstrated below.

where \(h_1,h_2\in \mathbb {R}^{+}\). We approximate \(\mathcal {J}(x,t)\) by truncating the series (27) as follows:

where

and

Furthermore, (28) can be expressed in an equivalent manner as provided below:

where \(\mathcal {Z}_{x}\) and \(\mathcal {Z}_{t}\) are as follows

and
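The truncated expansion and its matrix form can be evaluated on a grid with two matrix products. The sketch below assumes each basis function is stored through its monomial coefficients, \(\phi _n(y)=\sum _k C_{nk}\,y^{k}\), so that its fractional version is \(\phi _n(y^{h})\). The coefficient matrices `Cx` and `Ct` are placeholders to be filled from the explicit Jaiswal formula (23), which we do not reproduce; all names are hypothetical and indices are 0-based.

```python
import numpy as np


def fractional_basis(C: np.ndarray, y: np.ndarray, h: float) -> np.ndarray:
    """Rows phi_n(y**h), n = 0..N, where phi_n(y) = sum_k C[n, k] * y**k.

    y must be non-negative; the result has shape (N + 1, len(y)).
    """
    powers = np.power.outer(y, h * np.arange(C.shape[1]))  # y**(h*k)
    return C @ powers.T


def evaluate_expansion(B, Cx, Ct, x, t, h1, h2):
    """J(x_p, t_q) = sum_{i,j} B[i, j] phi_i(x_p**h1) phi_j(t_q**h2) on a grid."""
    Zx = fractional_basis(Cx, x, h1)   # shape (Nx + 1, len(x))
    Zt = fractional_basis(Ct, t, h2)   # shape (Nt + 1, len(t))
    return Zx.T @ B @ Zt               # shape (len(x), len(t))


# Placeholder basis: monomials (identity coefficients), so J(x, t) = x * t here.
B = np.array([[0.0, 0.0], [0.0, 1.0]])
J = evaluate_expansion(B, np.eye(2), np.eye(2),
                       np.array([0.5]), np.array([0.4]), 1.0, 1.0)
```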

3 Methodology

In this section, we develop a numerical technique based on operational matrices to approximate the solution of (20)–(22). Note that (4) introduces no genuine nonlinearity: \(sign(\frac{\partial ^2 \mathcal {V}}{\partial S^2})\) takes only the values \(\pm 1\), so after the approximate model is collocated, this term becomes a constant. We begin by approximating the Caputo fractional derivative.

Next, we calculate the expression \(^{C}_{0}D_{t}^{\alpha }\mathcal {T}_{\mathcal {N}_t}^{h_2}(t)\).

where

Substituting (31) into (30), we obtain

Next, we approximate the first and second derivatives of \(\mathcal {J}(x,t)\).

where

Likewise,

where

Similarly, (21) and (22) can also be estimated as

Therefore, using the points \(x_i=\frac{i+1}{\mathcal {N}_x}\) and \(t_j=\frac{j}{\mathcal {N}_t}\), we obtain

By solving (39) for the matrix \(\mathcal {M}\), we obtain the numerical solution of (20)–(22). Algorithm 1 outlines the basic steps required to implement the proposed approach.
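The overall idea of this section (expand in a fractional power basis, apply the power rule for the Caputo derivative, and collocate to obtain a linear system) can be seen in a one-dimensional toy problem \(^{C}_{0}D_{t}^{\alpha }u(t)=f(t)\), \(u(0)=u_0\), on (0, 1]. This is a hedged sketch, not the authors' two-dimensional scheme: it uses the plain basis \(t^{kh}\) in place of fractional Jaiswal functions, equispaced collocation points of our choosing, and a hypothetical function name.

```python
import math
import numpy as np


def solve_fractional_ivp(f, alpha: float, h: float, N: int, u0: float = 0.0):
    """Collocation for Caputo D^alpha u = f on (0, 1] with u(0) = u0, 0 < alpha < 1.

    Ansatz u(t) = sum_{k=0}^{N} c_k t**(k*h). Row 0 enforces u(0) = c_0 = u0;
    rows 1..N collocate the equation at t_i = i/N. Returns a callable u(t).
    """
    tc = np.arange(1, N + 1) / N                 # collocation points in (0, 1]
    A = np.zeros((N + 1, N + 1))
    rhs = np.empty(N + 1)
    A[0, 0] = 1.0                                # t**(k*h) vanishes at 0 for k >= 1
    rhs[0] = u0
    for k in range(1, N + 1):                    # k = 0: D^alpha of a constant is 0
        p = k * h
        A[1:, k] = math.gamma(p + 1) / math.gamma(p + 1 - alpha) * tc ** (p - alpha)
    rhs[1:] = f(tc)
    c = np.linalg.solve(A, rhs)
    return lambda t: np.power.outer(np.atleast_1d(t), h * np.arange(N + 1)) @ c


def f(t):
    # Right-hand side for the exact solution u(t) = t**0.5 + t with alpha = 0.5.
    return math.gamma(1.5) + t ** 0.5 / math.gamma(1.5)


u = solve_fractional_ivp(f, alpha=0.5, h=0.5, N=4)
tt = np.linspace(0.0, 1.0, 11)
max_err = np.max(np.abs(u(tt) - (tt ** 0.5 + tt)))
```

With \(h=\alpha \), the exact solution lies in the span of the basis, so the collocation solution reproduces it up to rounding error; this is the same mechanism behind the choice \(h_2=\alpha \) in the examples.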

Theorem 1

Let \(\mathcal {J}_{N_x}^{N_t}(x,t)=B^T\mathcal {A}_{N_xN_t}^{h_1h_2}(x,t)\) and \(\tilde{\mathcal {J}}_{N_x}^{N_t}(x,t)=\tilde{B}^T\mathcal {A}_{N_xN_t}^{h_1h_2}(x,t)\) be, respectively, the exact and numerical solutions of (35), in which

Then, we have

in which \(C_3\) is a constant.

Proof

By the Cauchy-Schwarz inequality, we obtain

This completes the theorem’s proof. \(\square \)
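For readers who want the intermediate step, the Cauchy-Schwarz inequality is applied pointwise to the difference of the two expansions in Theorem 1 and the result is then integrated over \(\Omega \). We assume the \(L^2(\Omega )\) norm here; under that assumption the integral factor plays the role of a constant such as \(C_3\).

```latex
\begin{aligned}
\bigl|\mathcal{J}_{N_x}^{N_t}(x,t)-\tilde{\mathcal{J}}_{N_x}^{N_t}(x,t)\bigr|
  &= \bigl|(B-\tilde{B})^{T}\mathcal{A}_{N_xN_t}^{h_1h_2}(x,t)\bigr|
   \le \|B-\tilde{B}\|_{2}\,\bigl\|\mathcal{A}_{N_xN_t}^{h_1h_2}(x,t)\bigr\|_{2},\\
\bigl\|\mathcal{J}_{N_x}^{N_t}-\tilde{\mathcal{J}}_{N_x}^{N_t}\bigr\|_{L^{2}(\Omega)}
  &\le \|B-\tilde{B}\|_{2}\Bigl(\int_{\Omega}\bigl\|\mathcal{A}_{N_xN_t}^{h_1h_2}(x,t)\bigr\|_{2}^{2}\,dx\,dt\Bigr)^{1/2}.
\end{aligned}
```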

4 Convergence analysis

Here, we examine the convergence of the proposed approach in Sobolev space. Our aim is to show that, as the number of fractional Jaiswal basis functions increases, the approximate solution converges to the exact solution. We first define the function space and then present several theorems that support this result. On \(\Omega =(c,d)\times (0,T)\), for \(n\ge 1\), the Sobolev norm is defined as [41]

where \(D_{i}^{(j)}\mathcal {J}\) denotes the jth derivative of \(\mathcal {J}\) with respect to the ith variable. The semi-norm \(|\mathcal {J}|_{H^{n;N}(\Omega )}\) is given by [41]

For brevity, we assume that \(h_1=h_2=h\) and \(N_x=N_t=N\).
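For concreteness, the norm and semi-norm referred to above are, in the form commonly quoted from [41] for a two-dimensional domain (our transcription, assuming the standard definitions there):

```latex
\|\mathcal{J}\|_{H^{n}(\Omega)}
  = \Bigl(\sum_{j=0}^{n}\sum_{i=1}^{2}\bigl\|D_{i}^{(j)}\mathcal{J}\bigr\|_{L^{2}(\Omega)}^{2}\Bigr)^{1/2},
\qquad
|\mathcal{J}|_{H^{n;N}(\Omega)}
  = \Bigl(\sum_{j=\min(n,N+1)}^{n}\sum_{i=1}^{2}\bigl\|D_{i}^{(j)}\mathcal{J}\bigr\|_{L^{2}(\Omega)}^{2}\Bigr)^{1/2}.
```

Only derivatives of order at least \(\min (n,N+1)\) enter the semi-norm.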

Theorem 2

[42] Let \(\mathcal {J}(x,t)\in H^{n}(\Omega )\) with \(n\ge 1\), and let \(\mathcal {P}_{N}^{h}\mathcal {J}(x,t)=\sum _{i=0}^{N}\sum _{j=0}^{N}b_{i+1,j+1} \mathcal {A}_{i+1}^{h}(x)\mathcal {A}_{j+1}^{h}(t)\) be the best approximation of \(\mathcal {J}\). Then,

and for \(1\le s\le n\),

where

in which \(\mathcal {C}\) is a positive constant independent of N.

Theorem 3

Assume \(\mathcal {J}(x,t)\in H^{n}(\Omega )\),\(n>0\). Then, for \(1\le s\le n\)

Proof

Using Young’s convolution inequality

we obtain

Using Theorem 2, we derive
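A typical way Young's convolution inequality enters such proofs, which we assume is the intended use here, is to bound the Caputo derivative of an error function e by its first time derivative. Writing the Caputo derivative as a convolution with the kernel \(t^{-\alpha }/\Gamma (1-\alpha )\) and taking exponents p = 1 and q = r = 2 (so that 1 + 1/r = 1/p + 1/q) gives

```latex
\begin{aligned}
{}^{C}_{0}D_{t}^{\alpha} e(t)
  &= \Bigl(\tfrac{t^{-\alpha}}{\Gamma(1-\alpha)} * \partial_t e\Bigr)(t)
   = \frac{1}{\Gamma(1-\alpha)}\int_{0}^{t}(t-\tau)^{-\alpha}\,\partial_\tau e(\tau)\,d\tau,\\
\bigl\|{}^{C}_{0}D_{t}^{\alpha} e\bigr\|_{L^{2}(0,T)}
  &\le \Bigl\|\tfrac{t^{-\alpha}}{\Gamma(1-\alpha)}\Bigr\|_{L^{1}(0,T)}\,\|\partial_t e\|_{L^{2}(0,T)}
   = \frac{T^{1-\alpha}}{\Gamma(2-\alpha)}\,\|\partial_t e\|_{L^{2}(0,T)} ,
\end{aligned}
```

where the last step uses \(\int _0^T t^{-\alpha }\,dt=T^{1-\alpha }/(1-\alpha )\) and \((1-\alpha )\Gamma (1-\alpha )=\Gamma (2-\alpha )\).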

Theorem 4

Suppose that the assumptions of the previous theorem hold. Then,

Proof

The proof follows directly from Theorem 3. In fact,

and

Theorem 5

Assume that \(|p(t)|\le m_1\), \(|q(t)|\le m_2\), and \(\mathcal {J}(x,t)\in H^n(\Omega )\) with \(n\ge 1\). Then, for \(1\le s\le n\), the following inequality holds

where \(\mathcal {X}(x,t)\) is the perturbation term.

Proof

The perturbation term satisfies the following equation

According to (20)

\(\square \)

The right-hand side of inequality (45) shows that the approximation error can be reduced by choosing the number of basis functions suitably. The parameters \(h_1\) and \(h_2\) must also be chosen properly to decrease the error of the method. However, there is no general rule for selecting these parameters, so a trial-and-error approach can be used. Typically, \(h_1\) and \(h_2\) can be taken as \(\frac{1}{a}\) and \(\frac{1}{b}\), provided that \(\mathcal {J}(x^{a},t^{b})\) is sufficiently smooth. This choice can speed up the convergence rate, as demonstrated in Theorem 4.1 of [44].
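The effect of choosing h can be seen in a small least-squares experiment: approximating \(u(t)=t^{\alpha }\), which is not smooth at t = 0, by the span of \(t^{kh}\), \(k=0,\dots ,N\). With h = 1 (ordinary polynomials) the error is limited by the singularity, whereas with \(h=\alpha \) the function lies in the span. This is an illustrative sketch with a plain power basis rather than fractional Jaiswal functions; the function name and grid are our own choices.

```python
import numpy as np


def power_fit_error(h: float, N: int = 5, alpha: float = 0.5) -> float:
    """Max grid error of the least-squares fit of t**alpha by span{t**(k*h)}, k = 0..N."""
    t = np.linspace(0.0, 1.0, 201)
    V = np.power.outer(t, h * np.arange(N + 1))   # V[i, k] = t_i**(k*h)
    u = t ** alpha
    c, *_ = np.linalg.lstsq(V, u, rcond=None)
    return float(np.max(np.abs(V @ c - u)))


err_poly = power_fit_error(h=1.0)   # ordinary polynomial basis
err_frac = power_fit_error(h=0.5)   # h = alpha: t**alpha is itself a basis function
```

Here \(\mathcal {J}(t^{b})\) with b = 2 is smooth, which matches the rule of thumb \(h=\frac{1}{b}\) stated above.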

5 Test problems

In this section, we present four examples with various types of solutions to demonstrate the applicability and computational performance of the proposed method. All computations are performed in MATLAB 2020. In all examples, the following error norm is used to obtain the numerical results.

To validate the theoretical results, we fix \(\mathcal {N}_x\) at a suitable value and increase the number of basis functions in the time direction. Specifically, let \(\mathcal {J}^{\mathcal {N}_t}(x,t)=\sum _{j=0}^{\infty }b_{\mathcal {N}_x+1,j+1}\mathcal {A}_{\mathcal {N}_x+1}^{h_1}(x)\mathcal {A}_{j+1}^{h_2}(t)\) and \(\hat{\mathcal {J}}^{\mathcal {N}_t}(x,t)=\sum _{j=0}^{\mathcal {N}_t}b_{\mathcal {N}_x+1,j+1}\mathcal {A}_{\mathcal {N}_x+1}^{h_1}(x)\mathcal {A}_{j+1}^{h_2}(t)\); we then estimate the following error norm

A bound similar to that in [45] can be derived for this error norm.

Although the convergence rate of the numerical approach was not derived in the previous section, it is computed experimentally in the numerical examples. The convergence rate is estimated as [43]
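A common form of the experimental convergence rate compares error norms for two successive numbers of basis functions. We assume this algebraic form below; the paper's own formula may differ, for instance by using a fixed ratio between successive values of \(\mathcal {N}_t\). The function name is ours.

```python
import math


def experimental_rates(errors, Ns):
    """Rates log(E_k / E_{k+1}) / log(N_{k+1} / N_k) for successive resolutions."""
    if len(errors) != len(Ns):
        raise ValueError("errors and Ns must have the same length")
    return [math.log(errors[k] / errors[k + 1]) / math.log(Ns[k + 1] / Ns[k])
            for k in range(len(errors) - 1)]


# Errors decaying like N**-3 give rates close to 3.
rates = experimental_rates([2.0 ** -3, 4.0 ** -3, 8.0 ** -3], [2, 4, 8])
```

For spectrally (exponentially) convergent cases, a rate of this algebraic form keeps growing with N, which is itself a useful diagnostic.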

Example 1

In the first example, we consider the following problem with exact solution \(\mathcal {J}(x,t)=x^4t^{\alpha }\) on the domain \(\Omega =[0,1]^2\)

where \(0<\beta <1\), \(r=0.5\), \(p(t)=t^2\), \(q(t)=t\) and

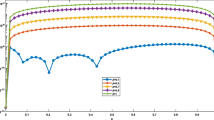

Table 1 lists the absolute errors at the sample points \((x_i,t_i)=(0,0),(\frac{1}{6},\frac{1}{6}),(\frac{1}{3},\frac{1}{3}),(\frac{1}{2},\frac{1}{2}),(\frac{2}{3},\frac{2}{3}),(\frac{5}{6},\frac{5}{6}),(1,1)\) for different values of \(\beta \), with \(\mathcal {N}_x=6\), \(\mathcal {N}_t=3\), \(h_1=1\), and \(h_2=\alpha \). The absolute errors are close to zero. Table 2 reports the error norms, convergence rates, and CPU times. It shows that when \(\mathcal {N}_t\) is fixed at an appropriate number of basis functions in the time direction and \(\mathcal {N}_x\) is increased, the results improve steadily, which validates our theoretical analysis. The CPU times also indicate that the procedure is fast and efficient. Figure 1 shows the error function and illustrates how suitable values of \(h_1\) and \(h_2\) reduce the absolute errors between the numerical and exact solutions. Figure 2 shows the behavior of the solutions at \(T=1\) for \(\alpha =0.2,0.4,0.8,0.9\), and Fig. 3 shows the surfaces of the exact and numerical solutions, which are almost identical.

Fig. 1 Surface of the error function for \(\alpha =0.5\) with \((\mathcal {N}_x,\mathcal {N}_t)=(4,4)\) (Example 1)

Fig. 2 Behavior of the solutions with \((\mathcal {N}_x,\mathcal {N}_t)=(6,3)\) and \((h_1,h_2)=(1,\alpha )\) when \(\alpha =0.2,0.5,0.8,\) and \(\alpha = 0.9\) (Example 1)

Fig. 3 Behavior of the solutions for \((\mathcal {N}_x,\mathcal {N}_t)=(6,3)\) and \((h_1,h_2)=(1,\alpha )\) when \(\alpha =0.5\) (Example 1)

Example 2

In this example, we consider the model as follows:

with terminal and boundary conditions

where

The parameter values are \(\sigma =0.4\), \(r=0.1\), \(K=5\), \(k=0.01\), \(H=0.8\), \(\beta =0.5\), \(\alpha =0.5\), \(dt=0.001\), \(s_{\min }=0.1\), \(s_{\max }=33.33\), and \(T=1\). Changes in the stock price and the time to maturity can have significant effects on the option value. A higher stock price makes the call option more likely to be profitable and therefore raises its value, whereas a lower stock price reduces the probability of profit and lowers the value. Likewise, a shorter time to maturity leaves the option less time to move in a favorable direction, lowering its value, while a longer time to maturity gives the underlying stock price more opportunity to move favorably and increases the option value. Moreover, as \(\alpha \longrightarrow 1\), the MTF-BS equation converges to the MF-BS equation. Because the exact solution is unknown, we follow the procedure of [30, 36] and estimate the error of the numerical scheme using the following relation

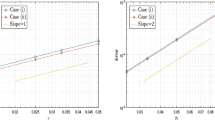

for a fixed value of \(\mathcal {N}_x^{*}\). Table 3 reports the error norms, convergence rates, and CPU times. It shows that, with \(\mathcal {N}_x\) chosen suitably, the numerical values of \(\mathcal {J}(x,t)\) within the domain converge as \(\mathcal {N}_t\) increases, and that the method produces accurate numerical solutions even with a small number of basis functions. The experimentally determined convergence rates agree well with the theoretical results. Figure 4 shows European call option prices for \(\alpha =0.5,0.6,0.7,0.8,0.9,1\), and Fig. 5 shows the surface of the numerical solution for \(\alpha =0.5\) with \((\mathcal {N}_x,\mathcal {N}_t)=(10,5)\). These graphs demonstrate the efficacy and usefulness of the numerical method.
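When no exact solution is available, the error can be estimated from successive numerical solutions. The sketch below assumes the relation used here is the maximum absolute difference between solutions obtained with consecutive values of \(\mathcal {N}_t\) at a fixed \(\mathcal {N}_x^{*}\), evaluated on a grid; `solve` is a hypothetical callable standing in for the collocation solver.

```python
import numpy as np


def consecutive_differences(solve, Nt_values, x, t):
    """max |J^{Nt_{k+1}} - J^{Nt_k}| over the x-by-t grid, for consecutive Nt values.

    solve(Nt) must return a function J(X, T) that accepts grid arrays
    (the spatial resolution N_x* is assumed to be fixed inside solve).
    """
    X, T = np.meshgrid(x, t, indexing="ij")
    values = [solve(Nt)(X, T) for Nt in Nt_values]
    return [float(np.max(np.abs(b - a))) for a, b in zip(values, values[1:])]


# Toy stand-in whose dependence on Nt is a constant shift of 2**-Nt.
def toy_solve(Nt):
    return lambda X, T: X * T + 2.0 ** (-Nt)


diffs = consecutive_differences(toy_solve, [1, 2, 3],
                                np.linspace(0, 1, 5), np.linspace(0, 1, 5))
```

Such differences only estimate the error; they can underestimate it if successive solutions stagnate away from the true solution.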

Fig. 4 European call option prices for \(\alpha =0.5,0.6,0.7,0.8,0.9,1\) (Example 2)

Fig. 5 Surface of the numerical solution for \(\alpha =0.5\) with \((\mathcal {N}_x,\mathcal {N}_t)=(10,5)\) (Example 2)

Example 3

In this example, we consider the model as follows:

with initial and boundary conditions

with \(0<\alpha <1\), \(\sigma =0.1\), \(r=0.01\), \(T=1\), \(K=50\), and \(Y=2\). Since an exact solution to this problem is not available, we estimate the error of the numerical scheme using the following relation

for a fixed value of \(\mathcal {N}_x^{*}\). Table 4 reports the error norms, convergence rates, and CPU times of the numerical scheme for \(\alpha =0.2,0.8\). The error of the collocation scheme decreases as \(\mathcal {N}_t\) increases, illustrating the accuracy of the proposed method, and it can be reduced further by choosing suitable parameters. Figure 6 shows the surface of European put option prices computed by the scheme for \(\alpha =0.5\), \((\mathcal {N}_x,\mathcal {N}_t)=(8,5)\), and \((h_1,h_2)=(1,\alpha )\). These results show that the proposed approach solves the problem effectively.

Fig. 6 Surface of the numerical solution for \(\alpha =0.5\) with \((\mathcal {N}_x,\mathcal {N}_t)=(8,5)\) and \((h_1,h_2)=(1,\alpha )\) (Example 3)

Example 4

In the last example, we consider the model as follows:

where

together with initial and boundary conditions

with \(0<\alpha <1\), \(\sigma =0.1\), \(r=0.01\), \(k=0\), \(H=0.8\), \(\beta =0.5\), \(dt=0.001\), \(T=1\), \(K=50\), and \(Y=2\).

We estimate the error of the numerical scheme by the following relation

for a fixed value of \(\mathcal {N}_x^{*}\). In this test problem, the volatility is not constant. To obtain the numerical results, we fix \(\mathcal {N}_x=4\). Table 5 presents the error norms, convergence rates, and CPU times for different values of \(\mathcal {N}_t\), with \(h_1=1\) and \(h_2=\alpha \), at time \(t=1\). The table shows that, with a proper number of basis functions, the error of the method tends to zero, so the approach solves the given problem with high accuracy. Figure 7 shows the put option values. Overall, these findings show that the mixed time-fractional Black-Scholes European option pricing model can be solved accurately and efficiently using the proposed collocation method.

Fig. 7 Surface of the numerical solution for \(\alpha =0.6\) with \((\mathcal {N}_x,\mathcal {N}_t)=(4,6)\) and \((h_1,h_2)=(1,\alpha )\) (Example 4)

6 Conclusion

In this paper, we obtained the option price under the MTF-BS model, in which the stock price follows the MFGBM model. The model incorporates the long-memory property, which is consistent with the behavior of real-world data. We considered the fractional form of the problem in the sense of the modified Riemann-Liouville derivative. Because the option price PDE contains the term \(sign(\frac{\partial ^2 \mathcal {V}}{\partial S^2})\), we treated the model numerically with a collocation method in which this term becomes constant after collocation. We used fractional Jaiswal functions as the basis and reduced the problem to a linear algebraic system of equations. The convergence of the method was discussed in the Sobolev space framework. An error bound was derived for the perturbation term, showing that the numerical solution tends to the exact solution when the number of basis functions is chosen properly. The choice of the parameters \(h_1\) and \(h_2\) to speed up convergence was also discussed. To demonstrate the effectiveness of the approach, we provided four test problems and computed the option price in different settings. For examples with unknown exact solutions, the norm of the difference between the numerical solutions for two consecutive values of \(\mathcal {N}_t\) was calculated. The method can also be applied to problems with non-smooth solutions.

Data availability

The datasets generated during the current study are available.

References

Haug, E.G.: The history of option pricing and hedging. In Vinzenz Bronzin’s Option Pricing Models: Exposition and Appraisal. Berlin, Heidelberg: Springer Berlin Heidelberg (2009)

Black, F., Scholes, M.S.: The pricing of options and corporate liabilities. J. Polit. Econ. 81(3), 637–654 (1973)

Benninga, S.: Financial modeling. MIT press (2014)

Rachev, S.T., Kim, Y.S., Bianchi, M.L., Fabozzi, F.J.: Financial models with Levy processes and volatility clustering. John Wiley & Sons (2011)

Gardiner, C.: Stochastic models. Springer, Berlin (2009)

Bass, R.F.: Stochastic processes. Cambridge University Press (2011)

Kolmogorov, A.N.: Wienersche Spiralen und einige andere interessante Kurven im Hilbertschen Raum. C. R. (Doklady) Acad. Sci. URSS (N.S.) 26, 115–118 (1940)

Rostek, S.: Option pricing in fractional Brownian markets. Springer (2009)

Rogers, L.C.G.: Arbitrage with fractional Brownian motion. Math Financ. 7, 95–105 (1997)

Shiryaev, A. N.: On arbitrage and replication for fractal models (1998)

Willinger, W., Taqqu, M.S., Teverovsky, V.: Stock market prices and long-range dependence. Finance Stoch. 3, 1–13 (1999)

Cheridito, P.: Arbitrage in fractional Brownian motion models. Finance Stoch. 7, 533–553 (2003)

Cheridito, P.: Mixed fractional Brownian motion. Bernoulli 7(6), 913–934 (2001)

Zili, M.: On the mixed fractional Brownian motion. Int. J. Stoch. Anal. (2006)

Cai, C., Cheng, X., Xiao, W., Wu, X.: Parameter identification for mixed fractional Brownian motions with the drift parameter. Phys. A: Stat. Mech. 536, 120942 (2019)

Zhang, P., Sun, Q., Xiao, W.L.: Parameter identification in mixed Brownian-fractional Brownian motions using Powell’s optimization algorithm. Econ. Model. 40, 314–319 (2014)

Xiao, W.L., Zhang, W.G., Zhang, X., Zhang, X.: Pricing model for equity warrants in a mixed fractional Brownian environment and its algorithm. Phys. A: Stat. Mech. 391, 6418–6431 (2012)

Najafi, A., Mehrdoust, F.: Conditional expectation strategy under the long memory Heston stochastic volatility model. Commun. Stat. Simul. Comput. 1-21 (2023)

Leland, H.E.: Option pricing and replication with transactions costs. J. Finance. 40, 1283–1301 (1985)

Kabanov, Y.M., Safarian, M.M.: On Leland’s strategy of option pricing with transactions costs. Finance Stoch. 1, 239–250 (1997)

Zhang, M., Jia, J., Hendy, A.S., Zaky, M.A., Zheng, X.: Fast numerical scheme for the time-fractional option pricing model with asset-price-dependent variable order. Appl. Numer. Math. (2023)

Soleymani, F., Zhu, S.: Error and stability estimates of a time-fractional option pricing model under fully spatial-temporal graded meshes. J. Comput. Appl. Math. 425, 115075 (2023)

Kazmi, K.: A second order numerical method for the time-fractional Black-Scholes European option pricing model. J. Comput. Appl. Math. 418, 114647 (2023)

Zhang, M., Jia, J., Zheng, X.: Numerical approximation and fast implementation to a generalized distributed-order time-fractional option pricing model. Chaos Solit. Fractals. 170, 113353 (2023)

An, X., Wang, Q., Liu, F., Anh, V.V., Turner, I.W.: Parameter estimation for time-fractional Black-Scholes equation with S&P 500 index option. Numer. Algorithms. 1-30 (2023)

Rahimkhani, P., Ordokhani, Y., Sabermahani, S.: Hahn hybrid functions for solving distributed order fractional Black-Scholes European option pricing problem arising in financial market. Math. Methods Appl. Sci. 46, 6558–6577 (2023)

Taghipour, M., Aminikhah, H.: A spectral collocation method based on fractional Pell functions for solving time-fractional Black-Scholes option pricing model. Chaos Solit. Fractals. 163, 112571 (2022)

Abdi, N., Aminikhah, H., Sheikhani, A.R.: High-order compact finite difference schemes for the time-fractional Black-Scholes model governing European options. Chaos Solit. Fractals. 162, 112423 (2022)

Roul, P.: Design and analysis of a high order computational technique for time-fractional Black-Scholes model describing option pricing. Math. Methods Appl. Sci. 45, 5592–5611 (2022)

Sarboland, M., Aminataei, A.: On the numerical solution of time fractional Black-Scholes equation. Int. J. Comput. Math. 99, 1736–1753 (2022)

Mesgarani, H., Bakhshandeh, M., Aghdam, Y.E., Gómez-Aguilar, J.F.: The convergence analysis of the numerical calculation to price the time-fractional Black-Scholes model. Comput. Econ. 62(4), 1845–1856 (2023)

Mesgarani, H., Aghdam, Y.E., Beiranvand, A., Gómez-Aguilar, J.F.: A novel approach to fuzzy based efficiency assessment of a financial system. Comput. Econ. 1-18 (2023)

Aghdam, Y.E., Mesgarani, H., Amin, A., Gómez-Aguilar, J.F.: An efficient numerical scheme to approach the time fractional Black-Scholes model using orthogonal Gegenbauer polynomials. Comput. Econ. 1-14 (2023)

Mohapatra, J., Santra, S., Ramos, H.: Analytical and numerical solution for the time fractional Black-Scholes model under jump-diffusion. Comput. Econ. 1-26 (2023)

Priyadarshana, S., Mohapatra, J., Pattanaik, S.R.: A second order fractional step hybrid numerical algorithm for time delayed singularly perturbed 2D convection-diffusion problems. Appl. Numer. Math. 189, 107–129 (2023)

Mohapatra, J., Priyadarshana, S., Raji Reddy, N.: Uniformly convergent computational method for singularly perturbed time delayed parabolic differential-difference equations. Eng. Comput. 40(3), 694–717 (2023)

Aghdam, Y.E., Mesgarani, H., Amin, A., Gómez-Aguilar, J.F.: An efficient numerical scheme to approach the time fractional Black-Scholes model using orthogonal Gegenbauer polynomials. Comput. Econ. 1-14 (2023)

Kaur, J., Natesan, S.: A novel numerical scheme for time-fractional Black-Scholes PDE governing European options in mathematical finance. Numer. Algorithms. 1-31 (2023)

Zhang, H., Liu, F., Turner, I., Yang, Q.: Numerical solution of the time fractional Black-Scholes model governing European options. Comput. Math. with Appl. 71, 1772–1783 (2016)

Jaiswal, D.V.: On polynomials related to Tchebichef polynomials of the second kind. Fibonacci Q. 12, 263–265 (1974)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral methods: fundamentals in single domains. Springer Science & Business Media (2007)

Rahimkhani, P., Ordokhani, Y.: Generalized fractional-order Bernoulli-Legendre functions: an effective tool for solving two-dimensional fractional optimal control problems. IMA J. Math. Control. Inf. 36, 185–212 (2019)

Zhao, T., Li, C., Li, D.: Efficient spectral collocation method for fractional differential equation with Caputo-Hadamard derivative. Fract. Calc. Appl. Anal. 26(6), 2903–2927 (2023)

Abo-Gabal, H., Zaky, M.A., Doha, E.H.: Fractional Romanovski-Jacobi tau method for time-fractional partial differential equations with nonsmooth solutions. Appl. Numer. Math. 182, 214–234 (2022)

Dehestani, H., Ordokhani, Y., Razzaghi, M.: An improved numerical technique for distributed-order time-fractional diffusion equations. Numer. Methods Partial Differ. Equ. 37(3), 2490–2510 (2021)

Author information


Contributions

Dr. Alazemi and Dr. Alsenafi conceived the main idea of the paper, solved the PDE, and wrote the manuscript; Dr. Najafi wrote the program code.


Ethics declarations

Ethical approval

The manuscript has not been submitted to more than one journal for simultaneous consideration. The manuscript is original and has not been published elsewhere in any form or language. The manuscript has not been divided into several parts to increase the quantity of submissions and submitted to various journals or to one journal over time. Results are presented clearly, honestly, and without fabrication, falsification, or inappropriate data manipulation (including image-based manipulation). No data, text, or theories by others are presented without references.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Alazemi, F., Alsenafi, A. & Najafi, A. A spectral approach using fractional Jaiswal functions to solve the mixed time-fractional Black-Scholes European option pricing model with error analysis. Numer Algor (2024). https://doi.org/10.1007/s11075-024-01797-w
