Abstract

This work develops the Milstein scheme for commutative stochastic differential equations with piecewise continuous arguments (SDEPCAs), which can be viewed as stochastic differential equations with time-dependent and piecewise continuous delay. As far as we know, although there have been several papers investigating the convergence and stability for different numerical methods on SDEPCAs, all of these methods are Euler-type methods and the convergence orders do not exceed 1/2. Accordingly, we first construct the Milstein scheme for SDEPCAs in this work and then show its convergence order can reach 1. Moreover, we prove that the Milstein method can preserve the stability of SDEPCAs. In the last section, we provide several numerical examples to verify the theoretical results.

1 Introduction

Differential equations with piecewise continuous arguments (EPCAs) are widely used in control theory and in some biomedical models ([1,2,3,4]). A typical EPCA is of the form

where the argument h(t) has intervals of constancy. A potential application of EPCAs is the stabilization of hybrid control systems with feedback delay [1]. In recent years, some scholars further developed the theory of stabilization for hybrid stochastic differential equations by feedback control based on discrete-time state observations ([5, 6]), and this theory is actually based on the stability of the hybrid stochastic differential equation with piecewise continuous arguments (SDEPCA)

Therefore, the properties of SDEPCAs have received increasing attention.

However, most SDEPCAs do not have explicit solutions; hence, it is extremely important to solve them by numerical methods. Moreover, in order to achieve the required accuracy in many real-world problems, the development of higher-order numerical methods is necessary. But to our knowledge, the numerical methods currently developed for globally Lipschitz continuous or highly nonlinear SDEPCAs are all Euler or Euler-type methods (such as the split-step theta method, the tamed Euler method, the truncated Euler method), and the convergence orders of all of these methods do not exceed one-half (see, e.g., [7,8,9,10,11,12]). Therefore, the main aim of this work is to construct a higher-order numerical scheme for SDEPCAs.

The Milstein scheme is a well-known numerical scheme for stochastic ordinary differential equations (SODEs) with a strong order of convergence one ([13,14,15,16,17,18,19]). Several scholars have further derived and analyzed the Milstein scheme for stochastic delay differential equations (SDDEs) [20,21,22,23,24,25,26,27,28,29]. However, most of these papers only consider the stochastic differential equations with constant delay [20,21,22,23,24,25,26,27,28], while an SDEPCA can be viewed as a stochastic differential equation with time-dependent delay, and the delay function is piecewise continuous and not differentiable. Therefore, it is worthwhile to construct the Milstein scheme for SDEPCAs.

In this work, we construct the Milstein scheme for SDEPCAs following the approach used by Kloeden et al. for SODEs [14] and SDDEs [29] and prove that the Milstein solution also converges strongly with order one to the exact solution of commutative SDEPCAs. It is worth mentioning that the Milstein scheme constructed in this paper contains only the derivatives of the coefficients f and \(g_j\) with respect to the first component, which is different from the schemes derived in the existing publications.

Moreover, whether the numerical method can preserve the stability of the exact solution is also an important criterion for the goodness of the numerical method [30,31,32,33]. Therefore, we also consider the stability of the Milstein method in this paper. The rest of this work is arranged as follows. Some basic lemmas and preliminaries are introduced in the second section. The Milstein scheme is developed, and its uniform boundedness in p-th moment is obtained in Sect. 3. Then, the strong convergence order of the Milstein method is proved in Sect. 4. The mean square exponential stability of the Milstein method is given in Sect. 5. Finally, several illustrative examples are given.

2 Notations and preliminaries

Throughout this paper, unless otherwise specified, we will use the following notations. \(\vert x\vert \) denotes the Euclidean vector norm, and \(\langle x,y\rangle \) denotes the inner product of vectors x, y. If A is a vector or matrix, its transpose is denoted by \(A^\text {T}\). If A is a matrix, its trace norm is denoted by \(\vert A\vert =\sqrt{{{\,\textrm{trace}\,}}(A^\text {T}A)}\). For two real numbers a and b, we will use \(a\vee b\) and \(a\wedge b\) for \(\max \left\{ a,b\right\} \) and \(\min \left\{ a,b\right\} \), respectively. \(\mathbb {N}:=\{0,1,2,\dots \}\). \([\cdot ]\) denotes the greatest-integer function.

Moreover, let \((\Omega ,\mathcal {F},\left\{ \mathcal {F}_t\right\} _{t\ge 0},\mathbb {P})\) be a complete probability space with a filtration \(\left\{ \mathcal {F}_t\right\} _{t\ge 0}\) satisfying the usual conditions (i.e., it is right continuous and \(\mathcal {F}_0\) contains all \(\mathbb {P}\)-null sets), and let \(\mathbb {E}\) denote the expectation corresponding to \(\mathbb {P}\). Denote by \(\mathcal {L}^p([0,T];\mathbb {R}^n)\) the family of all \(\mathbb {R}^n\)-valued, \(\mathcal {F}_t\)-adapted processes \(\left\{ f(t)\right\} _{0\le t\le T}\) such that \(\int _0^T\vert f(t)\vert ^p dt<\infty ,\) a.s. Denote by \(\mathcal {L}^p([0,\infty );\mathbb {R}^n)\) the family of process \(\left\{ f(t)\right\} _{t\ge 0}\) such that for every \(T>0\), \(\left\{ f(t)\right\} _{0\le t\le T}\in \mathcal {L}^p([0,T];\mathbb {R}^n).\)

Let \(B(t)=(B^1(t),\dots ,B^d(t))^\text {T}\) be a d-dimensional Brownian motion defined on the probability space \((\Omega ,\mathcal {F},\left\{ \mathcal {F}_t\right\} _{t\ge 0},\mathbb {P})\). We consider the following SDEPCA:

\[dx(t)=f(x(t),x([t]))\,dt+\sum _{j=1}^d g_j(x(t),x([t]))\,dB^j(t) \tag{1}\]

on \(t\ge 0\) with initial data \(x(0)=x_0\in \mathbb {R}^n\), where \(x(t)=(x_1(t),x_2(t),\dots ,x_n(t))^\text {T}\in \mathbb {R}^n\), \(f:\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}^n\), \(g_j:\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}^{n}\), \(j=1,2,\dots ,d\). The definition of the exact solution for (1) is as follows.

Definition 1

[34] An \(\mathbb {R}^n\)-valued stochastic process \(\left\{ x(t),t\ge 0\right\} \) is called a solution of (1) on \([0,\infty ),\) if it has the following properties:

-

\(\left\{ x(t),t\ge 0\right\} \) is continuous and \(\mathcal {F}_t\)-adapted;

-

\(\left\{ f(x(t),x([t]))\right\} \in \mathcal {L}^1([0,\infty );\mathbb {R}^n)\) and \(\left\{ g_j(x(t),x([t]))\right\} \in \mathcal {L}^2([0,\infty );\mathbb {R}^{n})\);

-

(1) is satisfied on each interval \([n,n+1)\subset [0,\infty )\) with integral end points almost surely.

A solution \(\left\{ x(t),t\ge 0\right\} \) is said to be unique if any other solution \(\left\{ \bar{x}(t),t\ge 0\right\} \) is indistinguishable from \(\left\{ x(t),t\ge 0\right\} \), that is,

\[\mathbb {P}\left\{ x(t)=\bar{x}(t)\ \text {for all}\ t\ge 0\right\} =1.\]

We assume that the coefficients of (1) satisfy the following conditions.

Assumption 2.1

Suppose f(x, y) and \(g_j(x,y)\) are continuously twice differentiable in \(x\in \mathbb {R}^n\) with derivatives bounded as follows: for constant \(M>0\)

holds for all \(x, y\in \mathbb {R}^n\), \(k,i=1,2,\dots n\), and \(j=1,2,\dots , d\), where

Remark 1

Under Assumption 2.1, for all \(x,y,\bar{x}\in \mathbb {R}^n\),

where \(\bar{M}=\sqrt{n}M\).

Proof

For any \(x,y,\bar{x}\in \mathbb {R}^n\), according to the mean value theorem of vector-valued function (see [35]), we have

where \(\theta \in (0,1)\), \(\frac{\partial f(x,y)}{\partial x}:=\left( \frac{\partial f_l(x,y)}{\partial x_k}\right) _{l,k},~l,k=1,2,\dots ,n\). In the same way, we can also get

The proof is completed. \(\square \)

Assumption 2.2

There exists a positive constant L such that

for all \(x, y,\bar{y}\in \mathbb {R}^n\).

Remark 2

Under Assumptions 2.1 and 2.2, there exists a constant \(\bar{L}>0\) such that f and \(g_j, j=1,\dots , d\) satisfy the following linear growth condition:

for all \(x,y\in \mathbb {R}^n\).

Proof

By (2) and (3), using the fundamental inequality \(\vert a+b\vert \le \vert a\vert +\vert b\vert \), one can obtain

Similarly, it can also be proved that

Let \(\bar{L}=\bar{M}+L+\vert f(0,0)\vert +\sum _{j=1}^d\vert g_j(0,0)\vert \); the proof is completed. \(\square \)
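
The triangle-inequality step in the proof above can be spelled out for f as follows. This is only a sketch: it assumes that (2) is the Lipschitz bound in the first argument from Remark 1, \(\vert f(x,y)-f(\bar{x},y)\vert \le \bar{M}\vert x-\bar{x}\vert \), and that (3) is the Lipschitz bound in the second argument from Assumption 2.2, \(\vert f(x,y)-f(x,\bar{y})\vert \le L\vert y-\bar{y}\vert \). The same chain applies to each \(g_j\).

\[
\begin{aligned}
\vert f(x,y)\vert &\le \vert f(x,y)-f(0,y)\vert +\vert f(0,y)-f(0,0)\vert +\vert f(0,0)\vert \\
&\le \bar{M}\vert x\vert +L\vert y\vert +\vert f(0,0)\vert \\
&\le \bar{L}\left( 1+\vert x\vert +\vert y\vert \right) ,
\end{aligned}
\]

where the last line uses that \(\bar{L}\) dominates each of \(\bar{M}\), L, and \(\vert f(0,0)\vert \).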

Based on Theorem 1 in [36], one can obtain the existence and uniqueness of the exact solution for (1) on each interval \([n,n+1)\), \(n\in \mathbb {N}\); existence and uniqueness on the whole time interval \([0,\infty )\) then follow from the continuity of the solution. For more details, see also Theorem 3.1 in [34]. Moreover, the proof of the following boundedness can be found in [37].

Lemma 2.3

Under Assumptions 2.1 and 2.2, there is a unique global solution x(t) to (1) on \(t\ge 0\) with initial data \(x(0)=x_0\). Moreover, for any \(p\ge 2\), there is a positive constant C such that

Lemma 2.4

[15, 38] Let \(Z_1,\dots ,Z_N:\Omega \rightarrow \mathbb {R}, N\in \mathbb {N}\) be \(\mathcal {F}/\mathcal {B}(\mathbb {R})\)-measurable mappings with \(\mathbb {E}\vert Z_n\vert ^p<\infty \) for all \(n=1,2,\dots ,N\) and with \(\mathbb {E}(Z_{n+1}\vert Z_1,\dots ,Z_n)=0\) for all \(n=1,2,\dots ,N-1\). Then,

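\[\Vert Z_1+\dots +Z_N\Vert _{L^p}\le C_p\left( \Vert Z_1\Vert _{L^p}^2+\dots +\Vert Z_N\Vert _{L^p}^2\right) ^{1/2}\]

for every \(p\in [2,\infty )\), where \(\Vert \cdot \Vert _{L^p}:=(\mathbb {E}\vert \cdot \vert ^p)^{1/p}\), \(C_p\) is a constant depending on p but independent of n.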

3 The Milstein scheme

Let us now define the Milstein scheme for (1). Let \(\Delta =1/m\) be a given step size with integer \(m\ge 1\), and let the grid points \(t_k\) be defined by \(t_k=k\Delta \) (\(k=0,1,\dots \)). For \(x,y\in \mathbb {R}^n, j,r=1,2,\dots , d\), define

In this work, we only consider SDEPCAs whose diffusion coefficients \(g_j\) satisfy the so-called commutativity condition \(L^{j}g_r(x,y)=L^{r}g_j(x,y), j\ne r\).
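
A minimal numerical sketch of how the commutativity condition might be checked for given diffusion coefficients. It assumes the usual definition \(L^{j}g_r(x,y)=\frac{\partial g_r(x,y)}{\partial x}g_j(x,y)\), with the derivative taken in the first argument only. The helper names `jacobian_x`, `L_op`, `is_commutative`, and `linear_noise`, as well as the linear test coefficients, are hypothetical and not taken from the paper.

```python
import numpy as np

def jacobian_x(g, x, y, eps=1e-6):
    """Central-difference Jacobian of g(., y) at x (first argument only)."""
    n = x.size
    J = np.empty((n, n))
    for k in range(n):
        e = np.zeros(n)
        e[k] = eps
        J[:, k] = (g(x + e, y) - g(x - e, y)) / (2.0 * eps)
    return J

def L_op(g_j, g_r, x, y):
    """Assumed form of L^j g_r(x, y): Jacobian of g_r in x applied to g_j(x, y)."""
    return jacobian_x(g_r, x, y) @ g_j(x, y)

def is_commutative(gs, x, y, tol=1e-6):
    """Check L^j g_r = L^r g_j at the single point (x, y) for all j != r."""
    d = len(gs)
    for j in range(d):
        for r in range(j + 1, d):
            lhs = L_op(gs[j], gs[r], x, y)
            rhs = L_op(gs[r], gs[j], x, y)
            if not np.allclose(lhs, rhs, atol=tol):
                return False
    return True

def linear_noise(A):
    """Hypothetical coefficient g(x, y) = A (x + y / 2)."""
    return lambda x, y: A @ (x + 0.5 * y)

x = np.array([1.0, 2.0])
y = np.array([0.5, -1.0])
# Diagonal matrices commute, so the condition holds at (x, y).
diag = [linear_noise(np.diag([1.0, 2.0])), linear_noise(np.diag([3.0, -1.0]))]
# These two matrices do not commute, so the condition fails at (x, y).
nilp = [linear_noise(np.array([[0.0, 1.0], [0.0, 0.0]])),
        linear_noise(np.array([[0.0, 0.0], [1.0, 0.0]]))]
print(is_commutative(diag, x, y), is_commutative(nilp, x, y))
```

The check is pointwise, so passing it at a few sample points is evidence for commutativity rather than a proof.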

Since for arbitrary \(k\in \mathbb {N}\), there always exist \(s\in \mathbb {N}\) and \(l=0,1,2,\dots ,m-1\) such that \(k=sm+l\), the discrete Milstein solution \(X_{sm+l}\approx x(t_{sm+l})\) is defined by

where \(X_0=x(0)=x_0\), \(\Delta B^j_{sm+l}=B^j(t_{sm+l+1})-B^j(t_{sm+l})\). Due to \(I_{rj}(k)+I_{jr}(k)=\Delta B^j_k\Delta B^r_k\) for \(r\ne j\), (5) can also be written as
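
For orientation, the quantities referred to above can be sketched in their standard form. This is a reconstruction under the usual Milstein conventions, assuming \(L^{j}g_r(x,y)=\frac{\partial g_r(x,y)}{\partial x}g_j(x,y)\) and \(I_{jr}(k)=\int _{t_k}^{t_{k+1}}\int _{t_k}^{u}dB^j(v)\,dB^r(u)\) with \(k=sm+l\); the numbered displays (5) and (6) of the paper remain the authoritative statement.

\[
\begin{aligned}
X_{k+1}&=X_k+f(X_k,X_{sm})\Delta +\sum _{j=1}^d g_j(X_k,X_{sm})\Delta B^j_k+\sum _{j,r=1}^d L^{j}g_r(X_k,X_{sm})I_{jr}(k)\\
&=X_k+f(X_k,X_{sm})\Delta +\sum _{j=1}^d g_j(X_k,X_{sm})\Delta B^j_k+\frac{1}{2}\sum _{j,r=1}^d L^{j}g_r(X_k,X_{sm})\Delta B^j_k\Delta B^r_k-\frac{1}{2}\sum _{j=1}^d L^{j}g_j(X_k,X_{sm})\Delta .
\end{aligned}
\]

The second line uses \(I_{jj}(k)=\frac{1}{2}\left( (\Delta B^j_k)^2-\Delta \right) \), the identity \(I_{rj}(k)+I_{jr}(k)=\Delta B^j_k\Delta B^r_k\) for \(r\ne j\), and the commutativity condition, so that no iterated integral with \(r\ne j\) has to be simulated.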

Let

The continuous version of scheme (5) is given by

where \(\Delta B^r(u)=B^r(u)-B^r([u/\Delta ]\Delta )\). It can be verified that \(X(t_{sm+l})=\bar{X}(t_{sm+l})=X_{sm+l}\).
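
As an illustration of the discrete scheme in the scalar case \(n=d=1\), the following sketch advances one path, assuming the standard Milstein correction \(\frac{1}{2}\frac{\partial g}{\partial x}g\,((\Delta B)^2-\Delta )\). The function name `milstein_sdepca` and the linear test coefficients are hypothetical and are not the equations of the examples in Sect. 6.

```python
import numpy as np

def milstein_sdepca(f, g, dg_dx, x0, T, m, rng):
    """Scalar Milstein path for dx = f(x(t), x([t])) dt + g(x(t), x([t])) dB.

    The step size is 1/m, and dg_dx is the derivative of g in its first argument.
    Returns the array (X_0, ..., X_{T*m}).
    """
    dt = 1.0 / m
    n_steps = int(round(T * m))
    X = np.empty(n_steps + 1)
    X[0] = x0
    for k in range(n_steps):
        s = k // m                      # k = s*m + l, so [t_k] = s
        x, y = X[k], X[s * m]           # current state and state at time [t_k]
        dB = rng.normal(0.0, np.sqrt(dt))
        gx = g(x, y)
        X[k + 1] = (x + f(x, y) * dt + gx * dB
                    + 0.5 * dg_dx(x, y) * gx * (dB ** 2 - dt))
    return X

# Hypothetical linear coefficients, chosen only to exercise the function.
rng = np.random.default_rng(0)
path = milstein_sdepca(lambda x, y: -2.0 * x + y,
                       lambda x, y: 0.5 * x + 0.25 * y,
                       lambda x, y: 0.5,
                       x0=1.0, T=2.0, m=64, rng=rng)
print(path[-1])
```

Only the derivative of g in its first argument enters, in line with the remark in the Introduction that the scheme uses derivatives with respect to the first component only.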

Throughout this paper, let C be a generic constant that varies from one place to another and may depend on p but is independent of \(\Delta \).

Theorem 3.1

Let Assumptions 2.1 and 2.2 hold. Then, for any \(\Delta \in (0,1]\) and \(p\ge 2\), the Milstein scheme (5) has the following property:

Proof

For any \(T>0, t_{sm+l+1}\in [0,T], s\in \mathbb {N}, l=0,1,\cdots , m-1\), according to (8), one has

By the inequality \((\sum _{i=1}^n\vert a_i\vert )^p\le n^{p-1}\sum _{i=1}^n\vert a_i\vert ^p, p\ge 1\), we have

According to Hölder’s inequality and the Burkholder-Davis-Gundy (B-D-G) inequality, we can deduce that

in the last inequality we use the fact that \(L^jg_r(X_{sm+i},X_{sm})\) is \(\mathcal {F}_{t_{sm+i}}\)-measurable, while \(\Delta B^r(u)=B^r(u)-B^r(t_{sm+i})\) is \(\mathcal {F}_{t_{sm+i}}\)-independent. Applying the B-D-G inequality again, together with (4), we can arrive at

According to Assumption 2.1 and (4), one can obtain

By the discrete Gronwall inequality, we have

hence

In particular, taking \(l=m-1\), it is easy to see that

Then,

Consequently, for any \(T>0, t_{sm+l}\in [0,T]\), one can deduce that

The proof is completed. \(\square \)

Lemma 3.2

Let Assumptions 2.1 and 2.2 hold. Then, for any \(T>0, \Delta \in (0,1]\) and \(p\ge 2\),

Proof

For any \(t\in [0,T]\), there are always \(s\in \mathbb {N}\) and \(l=0,1,\dots ,m-1\) such that \(t\in [t_{sm+l},t_{sm+l+1})\), by (7) and (8), one has

Similar to the process of (9)-(12), applying Hölder’s inequality, the B-D-G inequality, Assumption 2.1, (4), and Theorem 3.1, one can arrive at

Moreover, it is easy to see that

\(\square \)

4 Strong convergence rate of the Milstein scheme

In the following, we sometimes use the notation \((\Phi )_i\) to denote the i-th component of \(\Phi \in \mathbb {R}^n\). Let \(\varphi :\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) be twice differentiable with respect to the first component, then according to the Taylor formula,

for \(x,y,\bar{x}\in \mathbb {R}^n\), where

with \(\theta \in (0,1)\). Note that \(X([t])=\bar{X}([t])\) for all \(t\ge 0\), hence

with

Applying (7) and (8), let \(\kappa (t)=[t/\Delta ]\Delta \), one has

Define

which gives

Lemma 4.1

Let Assumptions 2.1 and 2.2 hold. Then, for any \(T>0, \Delta \in (0,1]\), and \(p\ge 2\),

for \(\varphi =f, g_j\), \(j=1,2,\dots ,d\).

Proof

Take \(\varphi =f\), for any \(t\in [0,T]\), using Hölder’s inequality, one has

By Assumption 2.1 and Lemma 3.2, one can obtain that

Moreover, recall that for any \(t\in [0,T]\), there always exist \(s\in \mathbb {N}\) and \(l=0,1,\dots ,m-1\) such that \(t\in [t_{sm+l},t_{sm+l+1})\), which gives \(\kappa (t)=t_{sm+l}\), hence

Using Assumption 2.1, Hölder’s inequality, (4) and Theorem 3.1, one can deduce that

Similarly, applying Assumption 2.1 and the B-D-G inequality, it yields

According to the definition of \(L^kg_r(X_{sm+l},X_{sm})\), using Assumptions 2.1, 2.2, and Theorem 3.1, we know that

Substituting (20) into (19), with the help of B-D-G inequality again, we can obtain that

Combining (16), (17), (18), and (21) yields

Repeating the process above, we can also prove

for all \(j=1,2,\dots ,d\). \(\square \)

Theorem 4.2

Let Assumptions 2.1 and 2.2 hold. Then, for any \(\Delta \in (0,1]\) and \(p>0\),

Proof

For any \(t\in [0,T]\) and \(p\ge 2\), according to (1) and (8), using Itô’s formula, we can arrive at

where

Then, for any \(T_1\in [0,T]\),

where

Applying Young’s inequality, (2) and (3), it is easy to get that

Similarly, we can also get

Next, we give an estimation for \(A_4\). According to (15), we have

Recall that \(L^{j}g_k(x,y)=L^{k}g_j(x,y)\), we have

Hence,

and then

Using the B-D-G inequality, fundamental inequality \(2ab\le a^2+b^2\), (2), and (3), one sees that

Applying the B-D-G inequality again, with the help of (26) and Lemma 4.1, it can be derived that

In the following, we give an estimation for \(A_2\). According to (15),

where

Using Hölder’s inequality and Lemma 4.1, one has

where

According to the Young inequality, it is easy to arrive at

Taking the difference between (1) and (8), one has

where \(\kappa (t)=[t/\Delta ]\Delta \), then

with

Let \(N=[T_1/\Delta ]\),

Set

it is easy to see that \(\mathbb {E}(Z_{sm+l+2}\vert Z_1,Z_2,\dots ,Z_{sm+l+1})=0\) for all \(sm+l+1=1,\dots ,N-1\); then, for \(p\ge 4\), by Lemma 2.4, we have

Applying Assumption 2.1, the fundamental inequality \((\sum _{i=1}^n a_i)^p\le n^{p-1}\sum _{i=1}^na_i^p\), (4) and Lemma 3.2, for any \(t\in [0,T_1]\),

Hence,

According to Hölder’s inequality, we can get that

Therefore, one can obtain

Using Hölder’s inequality and (32), together with (2)-(3), one can arrive at

Similarly,

It follows from Lemma 3.2 that

Using Hölder’s inequality and the B-D-G inequality again, with the help of (32), yields

By (26), (32) and Lemma 4.1, we have

Combining (31) and (33)–(37), it yields

Substituting this into (30), one has

Combining (22)–(24),(27)–(29), and (38), we have

Consequently, it can be deduced from the Gronwall inequality that

Furthermore, for any \(q\in (0,4)\), by Hölder’s inequality,

The proof is completed. \(\square \)
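
The final Hölder step of the proof above can be made explicit. As a sketch, write \(\xi \ge 0\) for the error quantity bounded in the case \(p=4\) (the symbol \(\xi \) is introduced here only for illustration) and suppose that \(\mathbb {E}\xi ^4\le C\Delta ^4\). Then, by Hölder's inequality with exponents \(4/q\) and \(4/(4-q)\),

\[
\mathbb {E}\xi ^q=\mathbb {E}\left[ (\xi ^4)^{q/4}\cdot 1\right] \le \left( \mathbb {E}\xi ^4\right) ^{q/4}\le C^{q/4}\Delta ^q,\qquad 0<q<4,
\]

so the order-one rate obtained for the fourth moment carries over to every lower moment.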

5 Stability analysis of the Milstein method

In this section, we investigate the exponential stability of the Milstein method for (1). Throughout this section, we shall assume that (1) has a unique global solution for any given initial data \(x_0\). Firstly, we suppose that \(f(0,0)=0\) and \(g_j(0,0)=0, j=1,\dots ,d\) and give the following two definitions of stability.

Definition 2

The SDEPCA (1) is said to be exponentially stable in mean square if there exist positive constants \(\lambda \) and \(H_1\) such that for any given initial value \(x_0\in \mathbb {R}^n\),

\[\mathbb {E}\vert x(t)\vert ^2\le H_1\vert x_0\vert ^2e^{-\lambda t},\quad \forall t\ge 0.\]

Definition 3

For a given step-size \(\Delta >0\), the Milstein method is said to be exponentially stable in mean square if there exist positive constants \(\gamma \) and \(H_2\) such that for any given initial value \(x_0\in \mathbb {R}^n\),

\[\mathbb {E}\vert X_k\vert ^2\le H_2\vert x_0\vert ^2e^{-\gamma k\Delta }\]

for all \(k\in \mathbb {N}\).

Remark 3

Under Assumptions 2.1, 2.2, and \(f(0,0)=g_j(0,0)=0\), similarly to Remark 2, it follows that

for all \(x, y\in \mathbb {R}^n\), where \(\tilde{L}=\bar{M}+L\), and \(\bar{M}\) and L are defined in Remark 1 and Assumption 2.2, respectively.

Let \(g=(g_1,g_2,\dots ,g_d)\), we assume the following condition holds to obtain the stability.

Assumption 5.1

Assume that there are positive constants \(\lambda _1>\lambda _2>0\) such that

By Theorem 4.1 in [7], we can obtain the exponential stability in mean square of (1).

Theorem 5.2

Let Assumption 5.1 hold. Then, (1) is exponentially stable in mean square, i.e.,

where \(\lambda =-\log r(1)\) and \(H_1=r(1)^{-1}\) with \(r(1)=\frac{\lambda _2}{\lambda _1}+(1-\frac{\lambda _2}{\lambda _1})e^{-2\lambda _1}\).

To obtain the stability of the Milstein method, we introduce the following lemmas (for details of the proofs, see [7]).

Lemma 5.3

Let \(z_{sm+l}\) be a sequence of numbers, \(s,m\in \mathbb {N}, l=0,1,\dots ,m-1\). If there are constants \(\alpha>\beta >0\) such that \(1-\alpha \Delta >0\) and

then

Lemma 5.4

Assume that \(\alpha ,\beta \) are two positive constants. If \(\alpha >\beta \), then for all \(t\ge 0\), we have

Let \(K=nd^2(d^2+2)M^2\tilde{L}^2+2\tilde{L}^2\), \(\alpha =2\lambda _1-K\Delta \), \(\beta =2\lambda _2+K\Delta \), \(\Gamma (m)=\frac{\beta }{\alpha }+\left( 1-\frac{\beta }{\alpha }\right) e^{-\alpha }\), where M and \(\tilde{L}\) are defined in Assumption 2.1 and Remark 3, respectively. Then, we obtain the exponential stability of the Milstein method.

Theorem 5.5

Let Assumptions 2.1, 2.2, and 5.1 hold. Then, for any step-size \(0<\Delta <\bar{\Delta }\wedge 1\), the Milstein scheme (5) is exponentially stable in mean square, i.e.,

for all \(k\in \mathbb {N}\), where \(H_2=\frac{1}{\Gamma (m)}\), \(\gamma =-\log \Gamma (m)\),

Moreover, \(\lim _{\Delta \rightarrow 0}\gamma =\lambda \), where \(\lambda \) is defined in Theorem 5.2.

Proof

For any \(s\in \mathbb {N}, l=0,1,\dots ,m-1\), according to (6), using Assumption 5.1,

where

Note that \(L^j g_r(X_{sm+l},X_{sm})\) is \(\mathcal {F}_{t_{sm+l}}\)-measurable, \(\Delta B_{sm+l}^j\) and \(\Delta B_{sm+l}^r\) are \(\mathcal {F}_{t_{sm+l}}\)-independent; moreover, \(\Delta B_{sm+l}^j\) and \(\Delta B_{sm+l}^r\) are independent, and using the fundamental inequality, we can arrive at

Recall the definition of \(L^j g_r(x,y)\), using Assumption 2.1 and (39), we have

Hence,

In addition, using the independence again, one has

Similarly,

Moreover, it is easy to see that

Substituting (41)–(44) into (40), using (39) and Assumption 5.1, one can obtain that

Since \(\Delta <\bar{\Delta }\), we have \(\alpha>\beta >0\) and \(1-\alpha \Delta >0\); hence, Lemma 5.3 yields

where \(\Gamma (l+1)=\left( \frac{\beta }{\alpha }+\left( 1-\frac{\beta }{\alpha }\right) e^{-\alpha (l+1)\Delta }\right) \). In particular, if \(l=m-1\), it follows

Therefore

According to Lemma 5.4, we know that \(\Gamma (l+1)\in (0,1)\) for all \(l=0,1,\dots ,m-1\). Hence,

Let \(H_2=\frac{1}{\Gamma (m)}>1\), \(\gamma =-\log \Gamma (m)>0\), we can get that

Furthermore,

The proof is completed. \(\square \)

6 Numerical examples

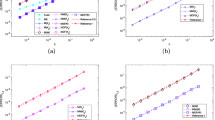

In this section, two numerical examples illustrate the convergence rate obtained in Sect. 4, and a third example illustrates the stability result of Sect. 5.

Example 1

In this example, we consider the scalar SDEPCA

on \(t\ge 0\) with the initial value \(x_0=1\), where B(t) is a scalar Brownian motion. We generate 3000 different Brownian paths. Let \(T=1\). Fig. 1 depicts the p-th moment errors \(\mathbb {E}\vert x(1)-X_{m}\vert ^p\) as a function of the step size \(\Delta \) in a log-log plot, where we use the numerical solutions produced by the Euler and Milstein methods with step sizes \(2^{-3},2^{-4},2^{-5},2^{-6}\), and \(2^{-7}\). The simulation using the Euler scheme with step size \(\Delta = 2^{-16}\) is regarded as the “true solution.” It can be seen from Fig. 1 that the convergence order of the Euler method is around \(\frac{1}{2}\), while the convergence order of the Milstein method is close to 1.
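
The kind of experiment described in this example can be sketched as follows. This is not the paper's computation: the linear coefficients `a, b, c, d` are hypothetical stand-ins for the equation of Example 1, the reference solution is a Milstein run at step \(2^{-11}\) instead of an Euler run at \(2^{-16}\) (to keep the sketch fast), 1000 paths are used, and the error measure is the root mean square error at \(T=1\). The observed order is estimated as the least-squares slope of log error against log step size.

```python
import numpy as np

def terminal_value(dW, x0, a, b, c, d, milstein):
    """Scheme on [0, 1] for dx = (a x(t) + b x([t])) dt + (c x(t) + d x([t])) dB.

    dW has shape (paths, m); the step size is 1/m and x([t]) = x0 on [0, 1).
    """
    paths, m = dW.shape
    dt = 1.0 / m
    X = np.full(paths, float(x0))
    for k in range(m):
        g = c * X + d * x0
        step = (a * X + b * x0) * dt + g * dW[:, k]
        if milstein:
            # Milstein correction: 0.5 * (dg/dx) * g * (dW^2 - dt), with dg/dx = c.
            step = step + 0.5 * c * g * (dW[:, k] ** 2 - dt)
        X = X + step
    return X

def estimate_order(step_sizes, errors):
    """Least-squares slope of log(error) against log(step size)."""
    slope, _ = np.polyfit(np.log(step_sizes), np.log(errors), 1)
    return slope

def strong_errors(exponents, ref_exp=11, paths=1000, seed=0,
                  x0=1.0, a=-1.0, b=0.5, c=1.0, d=0.5):
    """Root mean square errors at T = 1 against a fine-step reference."""
    rng = np.random.default_rng(seed)
    n_ref = 2 ** ref_exp
    dW_ref = rng.normal(0.0, np.sqrt(1.0 / n_ref), size=(paths, n_ref))
    x_ref = terminal_value(dW_ref, x0, a, b, c, d, milstein=True)
    out = {"euler": [], "milstein": []}
    for e in exponents:
        m = 2 ** e
        # Sum consecutive fine increments so all step sizes share the same paths.
        dW = dW_ref.reshape(paths, m, n_ref // m).sum(axis=2)
        for name, flag in (("euler", False), ("milstein", True)):
            x = terminal_value(dW, x0, a, b, c, d, milstein=flag)
            out[name].append(np.sqrt(np.mean((x_ref - x) ** 2)))
    return out

exponents = [3, 4, 5, 6, 7]
errs = strong_errors(exponents)
hs = [2.0 ** -e for e in exponents]
print("Euler order ~", estimate_order(hs, errs["euler"]))
print("Milstein order ~", estimate_order(hs, errs["milstein"]))
```

Driving every step size with the same underlying Brownian increments is what makes this a strong (pathwise) error comparison rather than a comparison of distributions.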

Example 2

In the following, we consider the 2-dim SDEPCA

on \(t\ge 0\) with the initial value \(x_0=(1,2)^\text {T}\). We use the numerical solution of the Euler method with step-size \(\Delta = 2^{-15}\) as the “exact solution,” and the step sizes for numerical solutions are taken to be \(2^{-4},2^{-5},2^{-7},2^{-8}\), and \(2^{-9}\). The convergence rates for Euler and Milstein methods are shown in Fig. 2.
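
For systems with \(n,d>1\), a commutative-noise Milstein step might be organised as in the sketch below. It assumes \(L^{j}g_r=\frac{\partial g_r}{\partial x}g_j\) and the product form of the scheme, in which only \(\Delta B^j\Delta B^r\) appears. The diagonal-noise coefficients are hypothetical and are not the system of Example 2; only the initial value \((1,2)^\text {T}\) is taken from the text.

```python
import numpy as np

def milstein_step(x, y, f, gs, jacs, dt, dB):
    """One Milstein step for commutative noise.

    gs[j](x, y) returns g_j as an (n,) array; jacs[j](x, y) returns the (n, n)
    Jacobian of g_j with respect to x. y plays the role of x([t]).
    """
    d = len(gs)
    G = [g(x, y) for g in gs]
    J = [jac(x, y) for jac in jacs]
    new = x + f(x, y) * dt
    for j in range(d):
        new = new + G[j] * dB[j] - 0.5 * (J[j] @ G[j]) * dt
        for r in range(d):
            # Assumed L^j g_r = (Jacobian of g_r) applied to g_j.
            new = new + 0.5 * (J[r] @ G[j]) * dB[j] * dB[r]
    return new

def milstein_path(x0, f, gs, jacs, T, m, rng):
    """Terminal value X(T) with step size 1/m."""
    dt = 1.0 / m
    x = np.asarray(x0, dtype=float)
    y = x.copy()
    for k in range(int(round(T * m))):
        if k % m == 0:
            y = x.copy()                # refresh x([t]) at integer times
        dB = rng.normal(0.0, np.sqrt(dt), size=len(gs))
        x = milstein_step(x, y, f, gs, jacs, dt, dB)
    return x

# Hypothetical diagonal noise: g_1 = (0.4 x_1, 0), g_2 = (0, 0.3 x_2).
sig = np.array([0.4, 0.3])
f = lambda x, y: -x + 0.5 * y
gs = [lambda x, y: np.array([sig[0] * x[0], 0.0]),
      lambda x, y: np.array([0.0, sig[1] * x[1]])]
jacs = [lambda x, y: np.diag([sig[0], 0.0]),
        lambda x, y: np.diag([0.0, sig[1]])]
final = milstein_path([1.0, 2.0], f, gs, jacs, T=1.0, m=32,
                      rng=np.random.default_rng(0))
print(final)
```

With diagonal noise the cross terms vanish, so each component reduces to a scalar Milstein update, which gives a simple consistency check for the implementation.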

Example 3

In this example, we consider the stability of the Milstein method for the following scalar SDEPCA

on \(t\ge 0\) with the initial value \(x_0=10\). It is easy to get that \(n=d=1, M=1, L=\frac{1}{4}\), hence \(\bar{M}=\sqrt{n}M=1\), \(\tilde{L}=\bar{M}+L=\frac{5}{4}\), and \(K=\frac{125}{16}\). On the other hand, we can obtain that \(\lambda _1=\frac{3}{4}\), \(\lambda _2=\frac{1}{8}\) by Assumption 5.1. Since \(\lambda _1^2=\frac{9}{16}<K\), according to Theorem 5.5, \(\bar{\Delta }=\frac{\lambda _1-\lambda _2}{K}=\frac{2}{25}\). Therefore, we choose three step sizes \(\Delta =2^{-4}, 2^{-5}\), and \(2^{-6}\) to show the stability of the Milstein method. The mean square stability of the numerical solutions can be observed from Fig. 3.
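
The constants quoted in this example can be re-derived with exact rational arithmetic, as in the sketch below. It uses the formulas for K, \(\alpha \), \(\beta \), \(\Gamma (m)\), and \(r(1)\) stated in Sect. 5, and assumes that the expression \(\bar{\Delta }=(\lambda _1-\lambda _2)/K\) quoted above is the applicable case of Theorem 5.5. The variable names are illustrative only.

```python
from fractions import Fraction
import math

n, d = 1, 1
M = Fraction(1)
L = Fraction(1, 4)
lam1, lam2 = Fraction(3, 4), Fraction(1, 8)

M_bar = M                      # sqrt(n) * M with n = 1
L_tilde = M_bar + L            # 5/4
K = n * d**2 * (d**2 + 2) * M**2 * L_tilde**2 + 2 * L_tilde**2
delta_bar = (lam1 - lam2) / K  # case lam1**2 < K, as quoted in the example

def decay_rate(delta):
    """gamma = -log Gamma(m) for the step size delta."""
    alpha = float(2 * lam1 - K * delta)
    beta = float(2 * lam2 + K * delta)
    gamma_m = beta / alpha + (1.0 - beta / alpha) * math.exp(-alpha)
    return -math.log(gamma_m)

# Decay rate of the exact solution from Theorem 5.2: lambda = -log r(1).
ratio = float(lam2 / lam1)
lam = -math.log(ratio + (1.0 - ratio) * math.exp(-2.0 * float(lam1)))

steps = [Fraction(1, 2**e) for e in (4, 5, 6)]
rates = [decay_rate(s) for s in steps]
print("K =", K, " delta_bar =", delta_bar)
for s, g in zip(steps, rates):
    print("Delta =", s, " gamma =", round(g, 4), " lambda =", round(lam, 4))
```

All three step sizes lie below \(\bar{\Delta }\), and the computed rates \(\gamma \) increase toward \(\lambda \) as \(\Delta \) decreases, consistent with the limit stated in Theorem 5.5.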

Fig. 3 The mean square stability of the Milstein solutions for (45)

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Wiener, J.: Generalized solutions of functional differential equations. World Scientific, Singapore (1993)

Ozturk, I., Bozkurt, F.: Stability analysis of a population model with piecewise constant arguments. Nonlinear Anal. Real World Appl. 12, 1532–1545 (2011)

Bozkurt, F., Yousef, A., Bilgil, H., Baleanu, D.: A mathematical model with piecewise constant arguments of colorectal cancer with chemo-immunotherapy. Chaos Solitons Fractals 168, 113207 (2023)

Li, X.: Existence and exponential stability of solutions for stochastic cellular neural networks with piecewise constant argument. J. Appl. Math. 2014, 145061 (2014)

Mao, X., Liu, W., Hu, L., Luo, Q., Lu, J.: Stabilization of hybrid stochastic differential equations by feedback control based on discrete-time state observations. Systems Control Lett. 73, 88–95 (2014)

You, S., Liu, W., Lu, J., Mao, X., Qiu, Q.: Stabilization of hybrid systems by feedback control based on discrete-time state observations. SIAM J. Control Optim. 53(2), 905–925 (2015)

Lu, Y., Song, M., Liu, M.: Convergence and stability of the split-step theta method for stochastic differential equations with piecewise continuous arguments. J. Comput. Appl. Math. 317, 55–71 (2017)

Geng, Y., Song, M., Lu, Y., Liu, M.: Convergence and stability of the truncated Euler-Maruyama method for stochastic differential equations with piecewise continuous arguments. Numer. Math. Theor. Meth. Appl. 14(1), 194–218 (2021)

Milošević, M.: The Euler-Maruyama approximation of solutions to stochastic differential equations with piecewise continuous arguments. J. Comput. Appl. Math. 298, 1–12 (2016)

Xie, Y., Zhang, C.: A class of stochastic one-parameter methods for nonlinear SFDEs with piecewise continuous arguments. Appl. Numer. Math. 135, 1–14 (2019)

Xie, Y., Zhang, C.: Compensated split-step balanced methods for nonlinear stiff SDEs with jump-diffusion and piecewise continuous arguments. Sci. China Math. 63, 2573–2594 (2020)

Zhang, Y., Song, M., Liu, M.: Strong convergence of the tamed Euler method for nonlinear hybrid stochastic differential equations with piecewise continuous arguments. J. Comput. Appl. Math. 429, 115197 (2023)

Milstein, G.: Approximate integration of stochastic differential equations. Theor. Probab. Appl. 19, 557–562 (1974)

Kloeden, P., Platen, E.: Numerical solution of stochastic differential equations. In: Applications of mathematics (New York), Springer-Verlag (1992)

Wang, X., Gan, S.: The tamed Milstein method for commutative stochastic differential equations with non-globally Lipschitz continuous coefficients. J. Difference Equ. Appl. 19(3), 466–490 (2013)

Li, X., Yin, G.: Explicit Milstein schemes with truncation for nonlinear stochastic differential equations: Convergence and its rate. J. Comput. Appl. Math. 374, 112771 (2020)

Kumar, C., Kumar, T.: On explicit tamed Milstein-type scheme for stochastic differential equation with Markovian switching. J. Comput. Appl. Math. 377, 112917 (2020)

Zong, X., Wu, F., Xu, G.: Convergence and stability of two classes of theta-Milstein schemes for stochastic differential equations. J. Comput. Appl. Math. 336, 8–29 (2018)

Zhan, W., Guo, Q., Cong, Y.: The truncated Milstein method for super-linear stochastic differential equations with Markovian switching. Discrete Contin. Dyn. Syst. Ser. B. 27(7), 3663–3682 (2022)

Hofmann, N., Müller-Gronbach, T.: A modified Milstein scheme for approximation of stochastic delay differential equations with constant time lag. J. Comput. Appl. Math. 197(1), 89–121 (2006)

Hu, Y., Mohammed, S.-E.A., Yan, F.: Discrete-time approximations of stochastic delay equations: the Milstein scheme. Ann. Probab. 32(1), 265–314 (2004)

Niu, Y., Burrage, K., Zhang, C.: A derivative-free explicit method with order \(1.0\) for solving stochastic delay differential equations. J. Comput. Appl. Math. 253, 51–65 (2013)

Wang, Z., Zhang, C.: An analysis of stability of Milstein method for stochastic differential equations with delay. Comput. Math. Appl. 51(9), 1445–1452 (2006)

Calzolari, A., Florchinger, P., Nappo, G.: Nonlinear filtering for stochastic systems with fixed delay: Approximation by a modified Milstein scheme. Comput. Math. Appl. 61(9), 2498–2509 (2011)

Zhang, W., Yin, X., Song, M., Liu, M.: Convergence rate of the truncated Milstein method of stochastic differential delay equations. Appl. Math. Comput. 357, 263–281 (2019)

Hu, R.: Almost sure exponential stability of the Milstein-type schemes for stochastic delay differential equations. Chaos Solitons Fractals 131, 109499 (2020)

Gao, S., Hu, J., He, J., Guo, Q.: The truncated \(\theta \)-Milstein method for nonautonomous and highly nonlinear stochastic differential delay equations. Appl. Numer. Math. 181, 234–254 (2022)

Farkhondeh Rouz, O., Ahmadian, D.: Analysis on mean-square and almost sure exponential stability of numerical method for stochastic differential equations with constant delay. Int. J. Appl. Math. Stat. 56(6), 86–98 (2017)

Kloeden, P., Shardlow, T.: The Milstein scheme for stochastic delay differential equations without using anticipative calculus. Stoch. Anal. Appl. 30(2), 181–202 (2012)

Higham, D.J., Mao, X., Szpruch, L.: Convergence, non-negativity and stability of a new Milstein scheme with applications to finance. Discrete Contin. Dyn. Syst. Ser. B. 18(8), 2083–2100 (2013)

Lamba, H., Mattingly, J.C., Stuart, A.M.: An adaptive Euler-Maruyama scheme for SDEs: convergence and stability. IMA Journal of Numerical Analysis. 27(3), 479–506 (2006)

Li, D., Quan, C., Tang, T.: Stability and convergence analysis for the implicit-explicit method to the Cahn-Hilliard equation. Math. Comp. 91, 785–809 (2022)

Liu, X., Deng, F., Liu, L., Luo, S., Zhao, X.: Mean-square stability of two classes of \(\theta \)-methods for neutral stochastic delay integro-differential equations. Appl. Math. Lett. 109, 106544 (2020)

Song, M., Zhang, L.: Numerical solutions of stochastic differential equations with piecewise continuous arguments under Khasminskii-type conditions. J. Appl. Math. 2012, 696849 (2012)

Zorich, V.A.: Mathematical Analysis I. 2nd Edition, Springer (2015)

Gyöngy, I., Krylov, N.V.: On stochastic equations with respect to semimartingales I. Stochastics 4(1), 1–21 (1980)

Lu, Y., Song, M., Liu, M.: Convergence rate and stability of the split-step theta method for stochastic differential equations with piecewise continuous arguments. Discrete Contin. Dyn. Syst. Ser. B. 24(2), 695–717 (2019)

Hutzenthaler, M., Jentzen, A.: Convergence of the stochastic Euler scheme for locally Lipschitz coefficients. Found. Comput. Math. 11, 657–706 (2011)

Acknowledgements

The authors would like to thank the Journal Editorial Office Assistant, Jude Estrera, for helping in the preparation of this manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant nos.12071101 and 11671113).

Contributions

Yuhang Zhang drafted the manuscript, and all the authors revised the manuscript together.

Ethics declarations

Ethical approval

Not applicable

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Zhang, Y., Song, M., Liu, M. et al. Convergence and stability of the Milstein scheme for stochastic differential equations with piecewise continuous arguments. Numer Algor 96, 417–448 (2024). https://doi.org/10.1007/s11075-023-01652-4

DOI: https://doi.org/10.1007/s11075-023-01652-4

Keywords

- Stochastic differential equations with piecewise continuous arguments

- Commutative noise

- The Milstein method

- Convergence order

- Exponential stability