Abstract

Matrix completion is usually formulated as a low-rank matrix approximation problem. Several methods have been proposed to solve this problem, e.g., truncated nuclear norm regularization (TNNR) which performs well in recovery accuracy and convergence speed, and hybrid truncated norm regularization (HTNR) method which has better stability compared to TNNR. In this paper, a modified hybrid truncated norm regularization method, named WHTNR, is proposed to accelerate the convergence of the HTNR method. The proposed WHTNR method can preferentially restore rows with fewer missing elements in the matrix by assigning appropriate weights to the first r singular values. The presented experiments show empirical evidence on significant improvements of the proposed method over the closest four methods, both in convergence speed or in accuracy, it is robust to the parameter truncate singular values r.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Matrix completion [1] aims to recover an unknown low-rank or approximately low-rank matrix from the observed missing matrix. It is widely used in computer vision [2,3,4], recommendation system [5,6,7], machine learning [8], etc. It is well-known that the vast majority of visual data is of low-rank or approximately low-rank structure [9]. Wright et al. [10] transferred the matrix completion problem into a rank minimization model while retaining the low-rank or approximately low-rank structure of the matrix. Specifically, given the incomplete data matrix M ∈ Rm×n, the general completion model is rewritten as follows

where rank(X) denotes the rank of the matrix X, and Ω is the set of locations in matrix X ∈ Rm×n, corresponding to the known entries.

Unfortunately, because the rank function is discontinuous and non-convex, solving the rank minimization model (1) is NP-hard [11], which greatly limits the practical application of this model. M. Fazel et al. [12] firstly suggest that the nuclear norm is a good substitute for the matrix rank function. It showed that the nuclear norm is the most compact convex lower bound of the rank function [12]. The rank of matrix X is equal to the number of its non-zero singular values, and the nuclear norm is the sum of singular values. The relationship between rank function and the nuclear norm is similar to the relationship between ℓ1 and ℓ0 norm.

Inexact augmented Lagrangian multiplier (IALM) method [13] and accelerated proximal gradient method [14], to name a few, have been developed in recent years to solve this model, however with unsatisfactory performance in practical applications. The main reason is that the nuclear norm is not the best substitute for the rank function when the matrix does not have a strict low-rank structure. In the rank function, all non-zero singular values should have an equal contribution. However, in the nuclear norm, the contributions of singular values are different according to their magnitudes. The singular value threshold (SVT) method [15] is widely used for solving the nuclear norm minimization model. But the SVT method needs to make a large number of iterations to converge. Hence, the nuclear norm may not be the best substitution of the rank function in the practical application.

To obtain a better approximation of the rank function, Zhang et al. [16] firstly proposed the truncated nuclear norm considered as an alternative to the rank function, and designed an effective two-step iteration algorithm. Subsequently, to solve the convex subproblem in the second step, three efficient iterative procedures were introduced by Hu et al. [17], called TNNR-APGL, TNNR-ADMM, and TNNR-ADMMAP. The TNNR method has significantly improved convergence accuracy. To improve the rate of convergence and robustness of the TNNR method, a truncated nuclear norm regularization method based on weighted residual error was proposed by Liu et al. [18], called the TNNR-WRE and ETNNR-WRE methods, respectively. Hereafter, the double weighted truncated nuclear norm regularization was proposed by Xue et al. [19], called DW-TNNR. Then, quaternion truncated nuclear norm for LRQMC was proposed by Yang et al. [20], called QTNN. However, as a variant of the nuclear norm, its stability problem still faces challenges. The stability of the matrix completion model aims to keep the output matrix almost unchanged when the elements of the observation matrix are replaced.

To improve the stability of the model, Cai et al. [15] proposed a model that combines the nuclear norm and the Frobenius norm, with which they control the low rank and stability of the model, respectively. The Frobenius norm is the arithmetic root of the sum of squares of its singular values and the truncated nuclear norm discards r large singular values. Although the Frobenius norm can improve stability, if it is directly integrated into the TNN model, it may not work well. Therefore, it may be inappropriate to directly combine the Frobenius norm with the truncated nuclear norm improving the model stability. To overcome the above shortcomings, Ye et al. [21] combined the truncated nuclear norm with truncated Frobenius norm, proposed a hybrid truncated norm regularization (HTNR) method, and improved the recovery accuracy and the model stability simultaneously. However the convergence speed of the HTNR method is not satisfying. The HTNR designs a two-step iterative scheme that needs to recover all the missing entries of an incomplete matrix in each step. As the variant method of truncated nuclear norm, the robustness of r is very important for HTN, therefore the choice of r will affect the convergence accuracy.

As a general cognition, the matrix completion task gets easier after recovering some rows with more observed entries. With this idea, we propose a weighted hybrid truncated norm regularization method (WHTNR), aiming to improve the convergence speed. At the same time, the WHTNR algorithm has better convergence accuracy and it is very robust to the parameter r.

In the WHTNR method, different weights are assigned according to the proportion of missing elements to the rows, so that the rows with fewer missing elements will restore firstly hoping to accelerate the matrix recovery process. The experimental results show that the WHTNR not only has a better convergence speed, but also has better accuracy compared with SVT, IALM, TNNR, HTNR, and TNNR-WRE methods (the closest four methods).

The rest of the paper is as follows. In Section 2, some closely related works are introduced. In Section 3, we introduce the proposed WHTNR model and discuss its convergence. In Section 4, experimental results on real images are presented.

2 Related work

Suppose the singular value decomposition (SVD) of the matrix X ∈ Rm×n is as follows

where \({U}=\left [{u}_{1}, {u}_{2},\ldots , {u}_{m}\right ]\in R^{m \times m}\) and \({V}=\left [{v}_{1}, {v}_{2}, \dots , {v}_{n}\right ]\in R^{n \times n}\) are orthogonal matrices, σi(X) is the i th singular value of X, and is assumpted to be sorted in descending order, σ1(X) ≥ σ2(X) ≥⋯ ≥ 0.

As mentioned in the introduction, it is intractable to directly solve the optimization model (1). Conventional approaches use an approximate method via convex relaxation. Specifically, the rank minimization model (1) could be rewritten by approximating the rank function with nuclear norm [12] as follows

where \(\|X\|_{*}={\sum }_{i=1}^{\min \limits \{m,n\}}\sigma _{i}(X)\) denotes the nuclear norm of the matrix X, PΩ denotes the orthogonal projection operator onto the span of matrices vanishing outside of Ω. For any matrix X, we have

The singular value thresholding (SVT) method for matrix completion proposed by Cai et al. [15] combines the nuclear norm and the Frobenius norm, with which they control the low rank and stability of the model, respectively. The SVT model [15] is as follows

where \( \|{X}\|_{F} = \sqrt {{\sum }_{i=1}^{{\min \limits } (m, n)} {\sigma _{i}^{2}}(X)}\) denotes the Frobenius norm of the matrix X.

Since the nuclear norm is the sum of singular values, a few largest singular values have an important contribution to the nuclear norm. To obtain a better approximation to the rank function, Zhang et al. proposed a truncated nuclear norm [16]. For any matrix X ∈ Rm×n, the truncated nuclear norm minimization model (TNN) is defined as follows

And the inequality holds [16] as follows

Thus, the optimization model (5) can be rewritten as follows:

However, it is inappropriate to directly combine the Frobenius norm with the TNN term to improve the stability of the model. Later, Ye et al. [21] proposed a hybrid truncated norm model instead of integrating the Frobenius norm into the TNN term. This method improves recovery accuracy and enhances stability. Experimental results demonstrate the effectiveness of the HTNR model. For any matrix X ∈ Rm×n, the truncated Frobenius norm (TFN) is defined as follows

So, the hybrid truncated norm minimization model (HTNR) is as follows

The main iterative steps of the HTNR algorithm are presented in Algorithm 1.

HTNR algorithm [21].

3 WHTNR method for matrix completion

In this section, we propose a weighted hybrid truncated norm regularization method (WHTNR) which is dedicated to improving the iteration speed of the HTNR model while ensuring recovery accuracy. Aiming to improve the matrix restoration speed, the rows with fewer missing elements will be recovered first.

3.1 The WHTNR method

In line with the trace inequality [22], if \(r=\min \limits \{m,n\}\), then we have

where AAT = BBT = I. Consequently, the truncated nuclear norm is rewritten as follows

On the basis of (11), the model (9) is presented as follows

In order to restore the rows with fewer missing elements in the matrix preferentially, Liu et al. [18] assigned different weights to each row of the residual matrix X − H. Inspired by this, the X terms of \(\text {Tr}\left ({A } {X} {B}^{T}\right )\) in the model (12) are weighted to restore the rows with few missing elements first. The optimization model (12) is changed as follows

where A = UT, B = VT, \({C}=\left (u_{1}, \ldots , u_{r}\right )^{T}\), \(D=\left (v_{1}, \ldots , v_{r}\right )^{T}\). U and V are the left and right orthogonal matrices in the SVD decomposition of X, respectively, and r is the number of subtracted singular values.

In addition, the weight matrix W is defined as follows

where λi > 0,1 ≤ wi ≤ wj, if Ni > Nj,(i,j = 1,…,m), and Ni is the number of observed entries in the ith row of X. Any matrix with rank r can be expressed as the sum of r matrices with rank 1, and singular values measure the weight of these matrices with rank 1 to the original matrix. In image processing, small singular values can be considered to be caused by missing elements. For the nuclear norm minimization model of the matrix completion algorithm, missing elements can have a large impact on the larger first few singular values of the matrix. The WHTNR algorithm, which is one of the variants of truncation of the nuclear norm, completely truncates the first r singular values, which may lead to poor robustness to the parameter r.

The optimization function of the model (13) is

The weight of each singular value σi(X) should be greater than 0. Based on the above analysis, for a given W, we have \(\text {Tr}\left ({u_{i}^{T}}\left (W-I\right ) u_{i}\right )>0\), let wi satisfy

That is, the rows with more missing elements are assigned smaller weights.

3.2 Solving WHTNR model

According to model (13), we can update Xk+ 1 by solving the next convex optimization model:

For solving the model (13), an auxiliary variable H ∈ Rm×n is added to relax the constraint, and the above model is equivalent to

To preferentially recover the rows wit h fewer missing elements in the matrix, the Lagrange function as follows

We solve the Lagrange function (19) by alternating direction iterative method. Firstly, fix Xk and Yk to update Hk+ 1,

Based on the constraints in model (18), the value of the recovery matrix is consistent with the observed value. We have

where

Secondly, fix Hk+ 1 and Yk to update Xk+ 1,

Then, combining (20) and (22), we obtain the following iterative scheme

where \(T_{k}=\left [\frac {2 \lambda }{\mu } \left (I-C^{\top } C\right )+I\right ]^{-1}\), \(Z_{k}=\frac {1}{\mu } \left (WA^{\top } B-C^{\top } D\right )\). Like (20),

Updating Yk+ 1 with fixed Hk+ 1 and Xk+ 1 is performed as follows

By (23), the update expression about Xk+ 1 does not contain Yk or Yk+ 1, so there is no need to calculate Yk+ 1 in the algorithm. The parameter μk is updated by

where μ0 > 0, ρ > 1 and \(k=1,2, \dots \).

In conclusion, the main steps of the WHTNR algorithm are described in Algorithm 2.

3.3 Convergence analysis

In this subsection, the convergence of the proposed method is discussed. For convenience, the optimal value of (18) is depicted as follows

the unaugmented lagrangian function (19) is also written by

In order to further illustrate the convergence of WHTNR algorithm, the following three lemmas are established, and the proofs are shown in the Appendices A, B and C.

Lemma 1

If \(\left ({X}_{*}, {H}_{*}, {Y}_{*}\right )\) is the optimal solution of the unaugmented Lagrangian function (27), then the inequality holds as follows

where Rk+ 1 = Xk+ 1 − Hk+ 1. In fact, Xk+ 1 = Hk+ 1, which implies that \(\left ({X}_{*}, {H}_{*}\right )\) is also the optimal solution to the model (18).

Lemma 2

If \(\left ({X}_{*}, {H}_{*}, {Y}_{*}\right )\) is the optimal solution of the unaugmented Lagrangian function (27), then Vk decreases in each iteration and satisfies the relationship as follows

where \(V_{k}=\frac {1}{\mu }\|{Y}_{k}-{Y}_{*}\|_{F}^{2}+\mu \|H_{k+1}-H_{k} \|_{F}^{2}\).

In terms of the above three lemmas, we have the following convergence theorem.

Theorem 1

If \(\left ({X}_{*}, {H}_{*}, {Y}_{*}\right )\) is the optimal solution of the unaugmented Lagrangian function (27), the iterative solution \(\left ({X}_{k}, {H}_{k}\right )\) converges to the optimal solution \(\left ({X}_{*}, {H}_{*}\right )\) if \(k \rightarrow \infty \). In other words, \(p_{k} \rightarrow p_{*}\) as \(k \rightarrow \infty \)

On the basis of Theorem 1, the convergence of the WHTNR method is explained by the approach of the objective function to the optimal value.

4 Experiments

In this section, extensive experiments are performed on real data to verify the proposed convergence accuracy, speed, and stability of the WHTNR algorithm. All experiments on Intel(R), Core(TM) i7- MATLAB R2016b running on CPU@2.90 GHz, 32GB main memory. The proposed algorithm WHTNR will be compared with five related algorithms, including (1) SVT [15]; (2) IALM [13]; (3) TNNR-admmap [17]; (4) TNNR-WRE [18]; (5) HTNR [21]; (6) WHTNR (The proposed method).

There are several parameters (weight matrix W, coefficients of the Frobenius norm λ, coefficients of lagrangian functions μ, and the number of truncated singular values r in WHTNR algorithm. W is given by the formula (33). In experiments, an optimal value of r was chosen between 1 and 20.

The maximum number of iterations for WHTNR is 100, and the maximum number of iterations for other comparison algorithms is 100. The stopping condition of the algorithm is

The stop error is set ε to 0.0001.

In these experiments, the peak signal to noise ratio (PSNR) is used as evaluation metrics, for which is defined as follows

where \(\text { Erec } =\left \|\left ({X}_{\text {rec}}-{M}\right )_{{\Omega }^{\mathrm {c}}}\right \|_{\mathrm {F}}\), \(\text {SE} =\text { Erec }_{\mathrm {R}}^{2}+\text {Erec}_{\mathrm {G}}^{2}+\text {Erec}_{\mathrm {B}}^{2}\), \(\text {MSE} =\frac {\text {SE}}{T}=\frac {\text {SE}}{T_{\mathrm {R}}+T_{\mathrm {G}}+T_{\mathrm {B}}}\), T is the number of missing elements, and {R,G,B} are the different channels of the real image.

In addition, the stability of the proposed method is characterized by the coefficient of variation of PSNR (CV) and the smaller the CV value, the more stable the result. Except that, we use the running time (CPU time(s)) value under the same conditions to evaluate the speed.

In this section, real images are used to verify the performance of the WHTNR algorithm on matrix computation. In practical applications, real-world images sometimes suffer from noise which causes missing of image information to a certain extent. Approximately, the WHTNR algorithm proposed above can effectively restore the damaged images when the image satisfies the low rank or near low rank. Therefore, in the experiments, the WHTNR method is evaluated below, and three types of pixel occlusion are considered, including (1) random mask; (2) text mask; and (3) block mask. The images used in this section are color images in JPEG format with a dimension of 300 × 300 as shown in Fig. 1.

4.1 The effect of W on WHTNR

If \(W=\text {diag}\left (w_{1}, \ldots , w_{m}\right )\), then wi is defined as follows

where 𝜃 > 1,m is the number of rows of the matrix to be restored and Ni is the number of observed entries in the ith row. According to the (33), we have wi ≤ wj if Ni ≥ Nj, that is to say, the row with more observed elements is assigned with a smaller value of weight, according to (33). Clearly, wi = 0 denotes that the i-th row has no element lost.

In Fig. 2(b)–(e) illustrate that rows with few missing elements are recovered first. For clarification, Fig. 3(a) depicts the weights applied in Fig. 2. Rows with fewer missing elements have lower weighted values, since they are commonly easier to be restored than the others. Likewise, the bigger blocks require larger values of weights to ensure accuracy. Therefore, the entire process is accelerated by the sequential recovery fashion.

The optimization process of the WHTNR performed on the image in Fig. 1(k) with the missing diamond block. (a) original image; (b) the incomplete image; (c) the 20th step; (d) the 30th step; (e) the recovered image

Weights corresponding to four missing blocks on the image in Fig. 1(a)

To test the robustness of the WHTNR algorithm when parameter 𝜃 varying, we set 𝜃 increasing from 0.1 to 3. For different miss rates, if 𝜃 is too large, the PSNR will become worse. This is because 𝜃 controls the weight range in the row dimension, which affect the recovery of WHTNR. So we choose that 𝜃 = 1.2 for our method and apply to subsequent experiments. It shows PSNR is relatively stable when 𝜃 < 2, which indicates that WHTNR performs well in robustness when 𝜃 < 2 (Fig. 4).

The effect of 𝜃 for W on WHTNR on the image in Fig. 1(a)

4.2 Convergence of WHTNR

Figure 5 shows the PSNR curve of the first 70 iterations under random occlusion with different missing rates for Fig. 1(a). It can be seen from Fig. 5 that the PSNR value gradually increases in the first 40 iterations, and becomes stable after 40 iterations, which verifies the convergence of the WHTNR algorithm experimentally.

The convergence analysis on WHTNR on the image in Fig. 1(a)

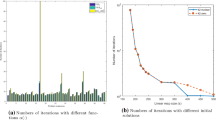

4.3 Robustness to r of WHTNR

We design an experiment on Fig. 1(a) to verify that the number of truncated singular values r is robust of WHTNR. In Fig. 6, a line graph of PSNR values under different r values is shown and compared with TNNR-admmap, TNNR-WRE, and HTNR methods. As can be seen from Fig. 6, when parameter r varying, WHTNR outperforms other methods in terms of robustness, because WHTNR does not completely truncate the first r singular values, but assigns weights (similar to ETNNR-WRE algorithm [18]), which can optimize the convergence accuracy based on the nuclear norm.

The comparison of robustness to r by SVT, IALM, TNNR-admmap, TNNR-WRE, HTNR and WHTNR on the image in Fig. 1(a)

4.3.1 Random mask

Random noise refers to missing information randomly distributed in the image, which is a relatively simple matrix computation problem. In the experiment, we mask 50%, 60%, 70% elements randomly in the 14 test images in Fig. 1 and then use SVT, IALM, TNNR-admmap, TNNR-WRE, HTNR and the WHTNR algorithm proposed in this paper for image restoration and compare their performance. By experience, we set the following settings for the parameters respectively, 𝜃 = 1.2, ρ = 1.15, λ = 0.001, β = 1.05 and μ = 0.0001. The WHTNR algorithm and the quantization results of the convergence accuracy of the other 5 algorithms are shown in Table 1. The original image, the noise image and the restored image of different methods are shown in Fig. 7. The CPU time for different methods is shown in Fig. 8. As can be seen from Table 1, WHTNR has the best quality of the restored image, which is due to that WHTNR does not completely truncate the first r singular values. As can be seen from Fig. 6, WHTNR is robust to parameter r. As can be seen from Table 4, WHTNR retains the advantages of the good stability of the HTNR algorithm without affecting the convergence accuracy. As can be seen from Fig. 7, WHTNR can basically restore the original appearance of the image compared with other algorithms, and its image details are clearer. It can be seen from Fig. 8 that the WHTNR algorithm greatly improves the convergence speed based on the HTNR algorithm. Therefore, WHTNR has certain advantages in terms of convergence accuracy, convergence speed, stability, and robustness of parameter r (Tables 2, 3 and 4).

Recovered results of the image in Fig. 1(a) with random mask (missing ratio = 0.5) by six methods: (a) the original image, (b) the observed image, (c) SVT, (d) IALM, (e) TNNR-admmap, (f)TNNR-WRE, (g) HTNR, (h) WHTNR

4.3.2 Text mask

Unlike random noise, text noise continuously distributed in part of the image. In this paper, a matrix computation algorithm is used to restore low-rank images contaminated by text noise, and text is regarded as the missing element in the matrix computation problem. In this section, Fig. 1(d) and (k) are arbitrarily selected as examples, the text noise is printed, and use SVT, IALM, TNNR-admmap, TNNR-WRE, HTNR and WHTNR to recover the image. The quantized results of its convergence accuracy are shown in Table 5. In Fig. 9 original image, noise image and restored image of different methods are shown. It can be seen from Table 5 that in terms of quantization results, the WHTNR algorithm significantly outperforms the SVT and IALM methods, and slightly better than the TNNR-admmap, TNNR-WRE, and HTNR algorithms. As can be seen from Fig. 9, the upper part of the SVT and IALM results still contains noise, while the edge of the restored image by WHTNR algorithm is clearer.

Recovered results of the image in Fig. 1(b) with text mask by six methods: (a) the original image, (b) the observed image, (c) SVT, (d) IALM, (e) TNNR-admmap, (f) TNNR-WRE, (g) HTNR, (h) WHTNR

4.3.3 Block mask

As mentioned earlier, text noise is continuously distributed in the image area, while block noise is continuously distributed in the larger area, which makes it more difficult to restore contaminated images with matrix padding. In this paper, a matrix computation algorithm is used to restore low-rank images contaminated by text noise, and text elements are regarded as missing elements in the matrix computation problem. Figure 1(e) and (f) are arbitrarily chosen in this section as examples, print block noise, and use SVT, IALM, TNNR-admmap, TNNR-WRE, HTNR and HTNR-wre to recover the image. The quantized results of its convergence accuracy are shown in Table 5. In Fig. 10 original image, noise image and restored image of different methods are shown. From Table 5, WHTNR algorithm has better recovery effect than other algorithms. As can be seen from Fig. 10, the WHTNR algorithm can basically restore the visual effect of the image even under the more difficult block noise.

Recovered results of the image in Fig. 1(d) with block mask by six methods: (a) the original image, (b) the observed image, (c) SVT, (d) IALM, (e) TNNR-admmap, (f) TNNR-WRE, (g) HTNR, (h) WHTNR

5 Conclusions

In this paper, we introduce a new low-rank matrix completion method called the weighted residual-based hybrid truncated norm method (WHTNR). The WHTNR model is used for matrix completion. By assigning appropriate weights to the first r singular values, the rows with fewer missing elements in the matrix can be restored preferentially. At the same time, we verify the convergence of the WHTNR algorithm both theoretically and experimentally. Quantization results and visual effects on real images, illustrating the advantages of WHTNR compared to other methods. Therefore, the WHTNR algorithm is based on the HTNR algorithm, and on the basis of ensuring its stability, compared with the closest 5 methods, it has higher convergence accuracy and convergence speed, and is more robust to the parameter truncate singular values r.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Ma, T.H., Lou, Y., Huang, T.Z.: Truncated l1 − 2 models for sparse recovery and rank minimization. SIAM J. Imaging Sci. 10 (3), 1346–1380 (2017)

Zhao, X.L., Wang, F., Huang, T.Z., et al.: Deblurring and sparse unmixing for hyperspectral images. IEEE Trans. Geosci. Remote Sens. 51(7), 4045–4058 (2013)

Zhao, X.L., Xu, W.H., Jiang, T.X., et al.: Deep plug-and-play prior for low-rank tensor completion. Neurocomputing 400, 137–149 (2020)

Zhao, X.L., Zhang, H., Jiang, T.X., et al.: Fast algorithm with theoretical guarantees for constrained low-tubal-rank tensor recovery in hyperspectral images denoising. Neurocomputing 413, 397–409 (2020)

Jannach, D., Resnick, P., Tuzhilin, A., et al.: Recommender systems—beyond matrix completion. Commun. ACM 59(11), 94–102 (2016)

Ramlatchan, A., Yang, M., Liu, Q., et al.: A survey of matrix completion methods for recommendation systems. Big Data Mining and Analytics 1(4), 308–323 (2018)

Wang, W., Chen, J., Wang, J., et al.: Geography-aware inductive matrix completion for personalized Point-of-Interest recommendation in smart cities. IEEE Internet Things J. 7(5), 4361–4370 (2019)

Candes, E.J., Plan, Y.: Matrix completion with noise. Proc. IEEE 98(6), 925–936 (2010)

Zou, C., Hu, Y., Cai, D., et al.: Salient object detection via fast iterative truncated nuclear norm recovery. In: International Conference on Intelligent Science and Big Data Engineering, pp 238–245. Springer, Berlin (2013)

Wright, J., Ganesh, A., Rao, S., et al.: Robust principal component analysis: Exact recovery of corrupted low-rank matrices via convex optimization. Advances in Neural Information Processing Systems 22 (2009)

Candès, E.J., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9(6), 717–772 (2009)

Fazel, M.: Matrix Rank Minimization with Applications. PhD thesis, Stanford University (2002)

Lin, Z., Chen, M., Ma, Y.: The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv:1009.5055(2010)

Toh, K.C., Yun, S.: An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pacific Journal of Optimization 6(3), 615–640 (2010)

Cai, J.F., Candès, E.J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20(4), 1956–1982 (2010)

Zhang, D., Hu, Y., Ye, J., et al.: Matrix completion by truncated nuclear norm regularization. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp 2192–2199. IEEE (2012)

Hu, Y., Zhang, D., Ye, J., et al.: Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans. Pattern Anal. Mach. Intell. 35(9), 2117–2130 (2013)

Liu, Q., Lai, Z., Zhou, Z., et al.: A truncated nuclear norm regularization method based on weighted residual error for matrix completion. IEEE Trans. Image Process. 25(1), 316–330 (2015)

Xue, S., Qiu, W., Liu, F., et al.: Double weighted truncated nuclear norm regularization for low-rank matrix completion. arXiv:1901.01711(2019)

Yang, L., Kou, K.I., Miao, J.: Weighted truncated nuclear norm regularization for low-rank quaternion matrix completion. J. Vis. Commun. Image Represent. 81, 103335 (2021)

Ye, H., Li, H., Cao, F., et al.: A hybrid truncated norm regularization method for matrix completion. IEEE Trans. Image Process. 28(10), 5171–5186 (2019)

Mirsky, L.: A trace inequality of John von Neumann. Monatshefte für mathematik 79(4), 303–306 (1975)

Tyrrell, R., Fellar, R: Convex analysis. Princeton University Press (1996)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Guanghui Cheng contributed equally to this work.

Appendices

Appendix A: Proof of Lemma 1

First, Lemma 1 (1) is discussed. On the basis of that \(\left ({X}_{*}, {H}_{*},{Y}_{*}\right )\) is the optimal solution to the Lagrange function (19). Hence, we have

Clearly, X∗ = H∗, then the left-hand side is p∗. And \(p_{k+1}=\text {Tr}\left (A WX_{k+1}B{ }^{T}\right )-\text {Tr}\left (C H_{k+1}D{}^{T}\right )+\lambda \left (\|{X}_{k+1}\|_{F}^{2}-\|{C} {X}_{k+1}\|_{F}^{2}\right )\), (34) is rewritten to \(p_{*}{\leq } p_{k+1}+ {\langle }\frac {\mu }{2}R_{k+1}+Y_{*},R_{k+1}{\rangle }\), the inequality (34) holds.

Thus, Lemma 1 (1) is proved. Then Lemma1(2) is discussed.

Clearly, Xk+ 1 minimizes L(X,Hk,Yk) by definition. Since L(X,Hk,Yk) is closed, convex and subdifferentiable on X. On the basis of the property of subdifferential [23], the optimality condition is obtained as follows

Since Yk+ 1 = Yk + μRk+ 1, we have Yk = Yk+ 1 − μRk+ 1, (35) is rewritten as follows

note that Xk+ 1 minimizes

In the same way, Hk+ 1 minimizes L(Xk+ 1,H,Yk) by definition. Since L(Xk+ 1,H,Yk) is closed, convex and subdifferentiable on H. On the basis of the property of subdifferential [23], the optimality condition is obtained as follows,

Since Yk+ 1 = Yk + μRk+ 1, we have Yk = Yk+ 1 − μRk+ 1, (39) is rewritten as follows

note that Hk+ 1 minimizes

Hence, for the optimal solution (X∗,H∗), we have

and

With (42), (43), and X∗ = H∗, we have

Thus, Lemma 1(2) is also proved.

Appendix B: Proof of Lemma 2

Add the two inequalities in Lemma 1, multiply both sides by 2, we have

the inequality (45) is rewritten as follows

Since Yk+ 1 = Yk + μRk+ 1, we have

Since \({Y}_{k+1}-{Y}_{k}=\left ({Y}_{k+1}-{Y}_{*}\right )-\left ({Y}_{k}-{Y}_{*}\right )\), we have

The inequality (47) is rewritten as follows

Since \({H}_{k}-{H}_{k+1}=\left ({H}_{k}-{H}_{*}\right )-\left ({H}_{k+1}-{H}_{*}\right )\), we have

The inequality (47) is rewritten as follows

In other words,

Hence, Vk decreases. Clearly, in order to prove (30), it only need to verify the − 2〈Rk+ 1,Hk − Hk+ 1〉≥ 0.

In fact, recalling that Hk+ 1 minimizes \(-\text {Tr}\left (C H D^{T}\right )-{\langle }Y_{k+1}, H{\rangle }\) and Hk minimizes \(-\text {Tr}\left (C H D^{T}\right )-{\langle }Y_{k}, H{\rangle }\), we have

and

Add the two inequalities (53) and (53), we have

With Yk+ 1 − Yk = μRk+ 1 and μ > 0, then the inequality (55) is rewritten to − 2μ〈Rk+ 1,Hk − Hk+ 1〉≥ 0

Thus, Lemma 2 is proved.

Appendix C: Proof of Theorem 1

In terms of Lemma 2, Vk decreases in each iteration and \(V_{k}-V_{k+1} \geq \mu \left (\left \|R_{k+1}\right \|_{F}^{2}+\|H_{k+1}-H_{k} \|_{F}^{2}\right )\), add all the terms on the both sides and rearrange it, we have

Notes that \(\mu \left (\left \|R_{k+1}\right \|_{F}^{2}+\|H_{k+1}-H_{k} \|_{F}^{2}\right )\rightarrow 0\)(\(k \rightarrow \infty \)). Hence, if \(k \rightarrow \infty \), then \(R_{k+1} \rightarrow 0\) and \(H_{k+1}-H_{k}\rightarrow 0\). In view of Theorem 1, we have

and

That is to say, \(p_{k} \rightarrow p_{*}\) as \(k \rightarrow \infty \).

Thus, Theorem 1 is proved.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wan, X., Cheng, G. Weighted hybrid truncated norm regularization method for low-rank matrix completion. Numer Algor 94, 619–641 (2023). https://doi.org/10.1007/s11075-023-01513-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-023-01513-0