Abstract

In this paper, we study the split feasibility problem with multiple output sets in Hilbert spaces. For solving the aforementioned problem, we propose two new self-adaptive relaxed CQ algorithms which involve computing of projections onto half-spaces instead of computing onto the closed convex sets, and it does not require calculating the operator norm. We establish a weak and a strong convergence theorems for the proposed algorithms. We apply the new results to solve some other problems. Finally, we present some numerical examples to show the efficiency and accuracy of our algorithm compared to some existing results. Our results extend and improve some existing methods in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let H1 and H2 be two real Hilbert spaces. Let C and Q be nonempty, closed, and convex subsets of H1 and H2, respectively. Let A : H1 → H2 be a nonzero bounded linear operator and let A∗ : H2 → H1 be its adjoint. The split feasibility problem (SFP) is formulated to find a point x∗∈ H1 satisfying

The SFP was first introduced in 1994 by Censor and Elfving [1] in finite-dimensional Hilbert spaces for modeling certain inverse problems and has received a great attention since then. This is because the SFP can be used to model several inverse problems arising from, for example, phase retrievals and in medical image reconstruction [1, 2], intensity-modulated radiation therapy (IMRT) [3,4,5], gene regulatory network inference [6], just to mention but few, for more details one can, see, e.g., [7,8,9,10,11,12,13,14] and the references therein. In the span of the last twenty five years, focusing on real world applications, several iterative methods for solving the SFP (1) have been introduced and analyzed. Among them, Byrne [2, 9] introduced the first applicable and most celebrated method called the well-known CQ-algorithm as follows: for x0 ∈ H1;

where PC and PQ are the metric projections onto C and Q, respectively, and the stepsize \(\tau _{n}\in \left (0, \frac {2}{\|A\|^{2}}\right )\) where ∥A∥2 is the spectral radius of the matrix A∗A.

The CQ algorithm proposed by Byrne [2, 9], requires the computation of metric projection onto the sets C and Q (in some cases, it is impossible or is too expensive to exactly compute the metric projection). In addition, the determination of the stepsize depends on the operator norm which computation (or at least estimate) is not easy task. In practical applications, the sets C and Q are usually the level sets of convex functions which are given by

where \(c:H_{1}\rightarrow \mathbb {R}\) and \(q:H_{2}\rightarrow \mathbb {R}\) are convex and subdifferentiable functions on H1 and H2, respectively, and that subdifferentials ∂c(x) and ∂q(y) of c and q, respectively, are bounded operators (i.e., bounded on bounded sets).

Later, in 2004, Yang [12] generalized the CQ method to the so-called relaxed CQ algorithm, needing computation of the metric projection onto (relaxed sets) half-spacesCn and Qn, where

where ξn ∈ ∂c(xn) and

where ηn ∈ ∂q(Axn). It is easy to see that \(C\subseteq C_{n}\) and \(Q\subseteq Q_{n}\) for all n ≥ 1. Moreover, it is known that projections onto half-spaces Cn and Qn have closed forms. In what follows, define

where Qn is given as in (5). fn is a convex and differentiable function with its gradient ∇fn defined by

More precisely, Yang [12] introduced the following relaxed CQ algorithm for solving the SFP (1) in a finite-dimensional Hilbert space: for x0 ∈ H1;

where \(\tau _{n}\in \left (0, \frac {2}{\|A\|^{2}}\right )\). Since \( P_{C_{n}}\) and \(P_{Q_{n}}\) are easily calculated, this method appears to be very practical. However, to compute the norm of A turns out to be complicated and costly. To overcome this difficulty, in 2012, López et al. [15] introduced a relaxed CQ algorithm for solving the SFP (1) with a new adaptive way of determining the stepsize sequence τn defined as follows:

where ρn ∈ (0,4),∀n ≥ 1 such that \(\liminf _{n\rightarrow \infty }\rho _{n}(4-\rho _{n})>0 \). It was proved that the sequence {xn} generated by (8) with τn defined by (9) converges weakly to a solution of the SFP (1). That is, their algorithm has only weak convergence in the framework of infinite-dimensional Hilbert spaces. But, in the infinite-dimensional spaces norm (strong) convergence is more desirable than the weak convergence for solving our problems. In this regard, many authors proposed algorithms that generate a sequence {xn}, converges strongly to a point in the solution set of the SFP (1), see, e.g., [15,16,17,18,19]. In particular, López et al. [15] proposed a Halpern's iterative scheme for solving the SFP (1) in the setting of infinite-dimensional Hilbert spaces as follows: for u,x0 ∈ H1;

where {αn}⊂ (0,1), and ∇fn(xn) and τn are given by (7) and (9), respectively. In 2013, He et al. [16] also introduced a new relaxed CQ algorithm for solving the SFP (1) such that strong convergence is guaranteed in infinite-dimensional Hilbert space. Their algorithm generates a sequence {xn} by the following manner: for u,x0 ∈ H1;

where Cn and τn are given as in (4) and (9), respectively, and {αn}⊂ (0,1) such that \(\lim _{n\rightarrow \infty } \alpha _{n}=0\) and \(\sum _{n=1}^{\infty }\alpha _{n}=+\infty \). Under some standard conditions, it was shown that the sequence {xn} generated by (10) and (11) converges strongly to p∗ = PΩ(u) ∈Ω = {p ∈ H1 : p ∈ C such that Ap ∈ Q} of the SFP (1). Both schemes (10) and (11) do not require any prior knowledge of the operator norm and compute the projections onto the half-spaces Cn and Qn (which have closed-form), and thus both are easily implementable.

Some generalizations of the SFP have also been studied by many authors. We mention, for instance, the multiple-sets SFP (MSSFP) [3, 20,21,22,23,24,25,26,27,28,29,30,31,32,33,34], the split common fixed point problem (SFPP) [35, 36], the split variational inequality problem (SVIP) [37], and the split common null point problem (SCNPP) [38,39,40,41,42].

Very recently, Reich et al. [43] considered and studied the following split feasibility problem with multiple output sets in real Hilbert spaces.

Let \(H, H_{i}, i=1, 2, \dots , N,\) be real Hilbert spaces and let \(A_{i}: H\to H_{i}, i=1, 2, \dots , N,\) be bounded linear operators. Let C and \(Q_{i}, i=1, 2, \dots , N,\) be nonempty, closed, and convex subsets of H and \(H_{i}, i=1, 2, \dots , N\), respectively. Given H,Hi, and Ai as above, the split feasibility problem with multiple output sets (SFPMOS, for short) is to find an element p∗ such that

That is p∗∈ C and Aip∗∈ Qi for each \(i=1, 2, \dots , N\).

In 2020, Reich et al. [43] introduced the following two methods for solving the SFPMOS (12).

For any given points, x0,y0 ∈ H, {xn}, and {yn} are sequences generated by

where f : C → C is a strict contraction mapping of H into itself with the contraction constant \(\theta \in [0, 1),~\lambda _{n}\subset (0, \infty )\) and {αn}⊂ (0,1). It was proved that if the sequence {λn} satisfies the condition:

for all n ≥ 1, then the sequence {xn} generated by (13) converges weakly to a solution point p∗∈Ω of the SFPMOS (12). Furthermore, if the sequence {αn} satisfies the conditions:

then the sequence {yn} generated by (14) converges strongly to a solution point p∗∈Ω of the SFPMOS (12), which is a unique solution of the variational inequality

An important observation here is that the iterative methods (Scheme (13) and Scheme (14)) introduced by Reich et al. [43] requires to compute the metric projections on to the sets C and Qi. Moreover, it needs to compute the operator norm. Due to this reason, the following question naturally arises. Question: Can we design two new iterative algorithms (a weakly convergent and strongly convergent methods, different from Scheme (13) and Scheme (14)) for solving the SFPMOS (12) which mainly involves a self-adaptive step-size and requires to compute the projections onto half-spaces so that the algorithm is easily implementable?.

We have a positive answer for the above question which is motivated by the iterative schemes (13) and (14) proposed by Reich et al. [43] for solving the SFPMOS (12), the Halpern’s-type iterative schemes (10) and (11) proposed by López et al. [15] and He et al. [16], respectively, to solve the SFP (1). In this paper, we propose two new self-adaptive relaxed CQ algorithms for solving the SFPMOS (12) in infinite-dimensional Hilbert spaces.

In the next section, we recall some necessary tools which are used in establishing our main results. In Section 3, we propose self-adaptive relaxed CQ algorithms for solving the SFPMOS (12), and we establish and analyze weak and strong convergence theorems for the proposed algorithms. In the same section, we also present some newly derived results for solving the SFP (1). In Section 4, we present the application of our methods to solve the generalized split feasibility problem (another generalization of the SFP). Finally, in the last section, we provide several numerical examples to illustrate the implementation of our algorithms compared to some existing results.

2 Preliminaries

In this section, we recall some definitions and basic results which are needed in the sequel. Let H be a real Hilbert space with the inner product 〈.,.〉, and induced norm ∥.∥. Let I stands for the identity operator on H. Let the symbols “\(\rightharpoonup \)” and “→”, denote the weak and strong convergence, respectively. For any sequence {xn}⊂ H, \(\omega _{w}(x_{n})=\{x\in H:\exists \{x_{n_{k}}\}\subset \{x_{n}\} \text { such that } x_{n_{k}}\rightharpoonup x\}\) denotes the weak w-limit set of {xn}.

Definition 1

([44]) Let C be a nonempty closed convex subset of H. Let \(T:C\rightarrow H\) be a given operator. Then, T is called

- (1):

-

Lipschitz continuous with constant λ > 0 on C if

$$ \|Tx-Ty\|\leq \lambda\|x-y\|,\forall x,y \in C; $$(15) - (2):

-

nonexpansive on C if

$$ \|Tx-Ty\|\leq\|x-y\|, \forall x,y \in C; $$(16) - (3):

-

firmly nonexpansive on C if

$$ \|Tx-Ty\|^{2}\leq \|x-y\|^{2}-||(I-T)x-(I-T)y\|^{2}, \forall x,y\in C , $$(17)which is equivalent to

$$ \|Tx-Ty\|^{2}\leq \langle Tx-Ty,x-y\rangle, \forall x,y\in C; $$(18) - (4):

-

averaged if there exists a number λ ∈ (0,1) and a nonexpansive operator \(F:C\rightarrow H\) such that

$$ T=\lambda F+(1-\lambda)I, \text{ where } I \text{ is the identity operator}. $$(19)In this case, we say that T is λ-averaged.

Definition 2

([44]) Let C ⊂ H be a nonempty, closed and convex set. For every element x ∈ H, there exists a unique nearest point in C, denoted by PC(x) such that

The operator PC (mapping \(P_{C} : H\rightarrow {C}\)) is called a metric projection of H onto C and it has the following well-known properties.

Lemma 1

([44, 45]) Let C ⊂ H be a nonempty, closed and convex set. Then, the following assertions hold for any x,y ∈ H and z ∈ C :

- (1):

-

〈x − PC(x),z − PC(x)〉≤ 0;

- (2):

-

∥PC(x) − PC(y)∥≤∥x − y∥;

- (3):

-

∥PC(x) − PC(y)∥2 ≤〈PC(x) − PC(y),x − y〉;

- (4):

-

∥PC(x) − z∥2 ≤∥x − z∥2 −∥x − PC(x)∥2.

We see from Lemma 1 that the metric projection mapping is firmly nonexpansive and nonexpansive. Moreover, it is not hard to show that I − PC is also firmly nonexpansive and nonexpansive.

Lemma 2

For all x,y ∈ H and for all \(\alpha \in \mathbb {R}\), we have

-

∥x + y∥2 ≤∥x∥2 + 2〈y, x + y〉;

-

∥x + y∥2 = ∥x∥2 + ∥y∥2 + 2〈x, y〉;

-

\(\langle x,y\rangle =\frac {1}{2}\|x\|^{2}+\frac {1}{2}\|y\|^{2}-\frac {1}{2}\|x-y\|^{2};\)

-

∥αx + (1 − α)y∥2 = α∥x∥2 + (1 − α)∥y∥2 − α(1 − α)∥x − y∥2.

Fejér-monotone sequences are very useful in the analysis of optimization iterative algorithms.

Definition 3

([44]) Let C be a nonempty subset of H and let {xn} be a sequence in H. Then, {xn} is Fejér monotone with respect to C if

It is easy to see that a Fejér monotone sequence {xn} is bounded and the limit \(\lim _{n\rightarrow \infty }\|x_{n}-z\|\) exists.

Lemma 3

(Demiclosedness principle of nonexpansive mappings [44]) Let C be a closed convex subset of H, T : C → C be a nonexpansive mapping with nonempty fixed point sets. If {xn} is a sequence in C converging weakly to x and {(I − T)xn} converges strongly to y, then (I − T)x = y. In particular, if y = 0, then x = Tx.

Lemma 4

([44, 46, 47]) Let C be a nonempty, closed, and convex subset of a real Hilbert space H and let {xn} be a sequence in H satisfying the properties:

- (1):

-

\(\lim _{n\rightarrow \infty }\|x_{n}-x^{*}\|\) exists for every x∗∈ C;

- (2):

-

ωw(xn) ⊂ C.

Then, there exists a point \(\hat {x}\in C\) such that {xn} converges weakly to \(\hat {x}\).

Definition 4

Let \(f: H\rightarrow \mathbb {R}\) be a function and λ ∈ [0,1]. Then,

- (1):

-

f is convex if

$$ f(\lambda x+(1-\lambda)y)\leq \lambda f(x)+(1-\lambda)f(y), \forall x,y\in H. $$ - (2):

-

A vector ξ ∈ H is a subgradient of f at a point x if

$$ f(y)\geq f(x)+\langle \xi, y-x\rangle,~\forall y\in H. $$ - (3):

-

The set of all subgradients of a convex function \(f:H\rightarrow \mathbb {R}\) at x ∈ H, denoted by ∂f(x), is called the subdifferential of f, and is defined by

$$ \partial f(x)=\{\xi\in H:f(y)\geq f(x)+\langle \xi,y-x \rangle, ~\text{for each } y\in H\}. $$ - (4):

-

If ∂f(x)≠∅, f is said to be subdifferentiable at x. If the function f is continuously differentiable then ∂f(x) = {∇f(x)}. The convex function is subdifferentiable everywhere [44].

- (5):

-

f is called weakly lower semicontinuous at x0 if for a sequence {xn} weakly converging to x0 one has

$$ f(x_{0})\leq \liminf_{n\rightarrow\infty}f(x_{n}). $$A function which is weakly lower semicontinuous at each point of H is called weakly lower semicontinuous on H.

Lemma 5

([48]) Let H1 and H2 be real Hilbert spaces and \(f:H_{1}\rightarrow \mathbb {R}\) is given by \(f(x)=\frac {1}{2}\|(I-P_{Q})Ax\|^{2}\) where Q is a nonempty, closed convex subset of H2 and \(A:H_{1}\rightarrow H_{2}\) be a bounded linear operator. Then, the following assertions hold:

- (1):

-

f is convex and differentiable;

- (2):

-

f is weakly lower semicontinuous on H1;

- (3):

-

∇f(x) = A∗(I − PQ)Ax, for x ∈ H1;

- (4):

-

∇f is ∥A∥2-Lipschitz, i.e., ∥∇f(x) −∇f(y)∥≤∥A∥2∥x − y∥,∀x,y ∈ H1.

Lemma 6

([49]) Let {Λn} be a sequence of real numbers that does not decrease at infinity. Also consider the sequence of integers \(\{\varphi (n)\}_{n\geq n_{0}}\) defined by

Then, \(\{\varphi (n)\}_{n\geq n_{0}}\) is a nondecreasing sequence verifying \(\lim _{n\rightarrow \infty }\varphi (n)=\infty \), and for all n ≥ n0, the following two estimates hold:

Lemma 7

([50]) Let {sn} be a sequence of nonnegative real numbers satisfying the following relation:

where {ϱn}, {μn} and {𝜃n} satisfying the conditions:

- (1):

-

{ϱn}⊂ [0,1], \({\sum }_{n=1}^{\infty }{\varrho }_{n}=\infty ;\)

- (2):

-

\(\limsup _{n\rightarrow \infty }\mu _{n}\leq 0;\)

- (3):

-

𝜃n ≥ 0, \({\sum }_{n=1}^{\infty }\theta _{n}<\infty \).

Then, \(\lim _{n\rightarrow \infty }s_{n}=0\).

3 The iterative algorithms for solving SFPMOS

In this section, we propose new self-adaptive relaxed iterative methods for solving the SFPMOS (12) in the infinite-dimensional Hilbert spaces, and we prove a weak and strong convergence theorems of the proposed methods.

The relaxed projection methods use metric projections onto half-spaces instead of projections onto the original closed convex sets. In what follows, we consider a general case of the SFPMOS (12), where the nonempty, closed and convex sets C and \(Q_{i}(i=1, 2, \dots , N)\) are given by level sets of convex functions defined as follows:

where, \(c:H\rightarrow \mathbb {R}\) and \(q_{i}:H_{i}\rightarrow \mathbb {R}\), \(i=1, 2, \dots , N\) are lower semicontinuous convex functions. We assume that both c and each qi are subdifferentiable on H and Hi, respectively, with subdifferential ∂c and ∂qi, respectively. Moreover, assume that for any x ∈ H a subgradient ξ ∈ ∂c(x) can be calculated, and for any y ∈ Hi and for each \(i\in \{1, 2, \dots , N\}\), a subgradient ηi ∈ ∂qi(y) can be calculated. Again, assume that both ∂c and \(\partial q_{i}(i=1, 2, \dots , N)\) are bounded operators (i.e., bounded on bounded sets). The subdifferentials ∂c and ∂qi are defined by

for all x ∈ C and

for all y ∈ Qi, \(i=1, 2, \dots , N\).

In this situation, the projections onto C and Qi are not easily implemented in general. To avoid this difficulty, we introduce a relaxed projection gradient methods, in which the projections onto the half-spaces are adopted in stead of the projections onto C and Qi. In particular for \(n\in \mathbb {N}\), we define the relaxed sets (half-spaces) Cn and \({Q^{n}_{i}}(i=1, 2, \dots , N)\) of C and Qi, respectively, at xn as follows:

where ξn ∈ ∂c(xn) is subgradient of c at xn and

where \({\eta ^{n}_{i}}\in \partial q_{i}(A_{i}x_{n})\). By the definition of the subgradient, it is easy to see that \(C\subseteq C_{n}\) and \(Q_{i}\subseteq {Q^{n}_{i}}\) (see [51]), and the metric projections onto Cn and \({Q_{i}^{n}}\) can be directly calculated (since the projections onto Cn and \({Q_{i}^{n}}\) have closed-form expressions), for example, for ξn ∈ ∂c(xn)

Now, we present the following easily implementable algorithms.

3.1 Weak convergence theorems

In this subsection, we propose a new self-adaptive relaxed iterative method for solving the SFPMOS (12) in the infinite-dimensional Hilbert spaces, and we prove a weak convergence theorem of the proposed method.

Theorem 1

Assume that the SFPMOS (12) is consistent (i.e., Ω≠∅). Suppose the sequences \(\{{\rho _{1}^{n}}\}\) and \(\{{\rho _{2}^{n}}\}\) in Algorithm 1 are in (0,1) such that \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), respectively. Then, the sequence {xn} generated by Algorithm 1 converges weakly to a solution p∗∈Ω of the SFPMOS (12).

Proof

For convenience, we set the following notations first (for \(i=1, 2, \dots , N\))

Consequently, the step-size τn given by (??) can be written as

where

Then, the iterative sequence {xn} in Algorithm 1 can be rewritten as follows:

Let p∗∈Ω (Ω is the solution set of the SFPMOS (12)). By (27), we have

Using Lemma 1 (1), we obtain the following two estimations.

Substituting (29) and (30) into (28) and since \(\|{\sum }_{i=1}^{N}\vartheta _{i}A^{*}_{i}f_{{Q_{i}^{n}}}^{n}\|\leq \bar {\tau }_{n}\), we obtain that

Since \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), we have from (31) that

Therefore, the sequence {xn} is Fejér-monotone with respect to Ω. As a consequence, \(\lim _{n\rightarrow \infty }\|x_{n}-p^{*}\|\) exists. That is, {xn} is bounded, and hence the sequence \(\{A_{i}x_{n}\}_{i=1}^{N}\) is also bounded.

Noticing that \({\rho _{1}^{n}}\in [a_{1}, b_{1}]\subset (0, 1)\), we can obtain from (31) that

Since {xn} is bounded and \(f_{C_{n}}^{n}\) is 1-Lipschitz continuous, there exists a real number R > 0 such that \(\|f_{C_{n}}^{n}\|^{2}\leq R\). Thus, we can obtain from (32) that

Hence, we obtain from (33)

Noticing that \({\rho _{2}^{n}}\in [a_{2}, b_{2}]\subset (0, 1)\), we can obtain from (31) that

Letting \(n\rightarrow \infty \) on both sides of (35), we have

Since the iterative sequence {xn} is bounded and by the Lipschitz continuity of \(f_{{Q_{i}^{n}}}^{n}\), the sequence \(\left \{\left \|{\sum }_{i=1}^{N}\vartheta _{i}A^{*}_{i}f_{{Q_{i}^{n}}}^{n}\right \|\right \}_{n=1}^{\infty }\) is bounded and so the sequence \(\{\bar {\tau }_{n}\}\) is bounded too. Therefore, we can get from (36) that

Next, we will prove that ωw(xn) ⊂Ω. For each \(i=1, 2, \dots , N\), since ∂qi is bounded on bounded sets, there exists a constant γ > 0 such that \(\|{\eta _{i}^{n}}\|\leq \gamma \), where \({\eta _{i}^{n}}\in \partial q_{i}(A_{i}x_{n})\). Then, for \(i=1, 2, \dots , N\), notice that \(P_{{Q_{i}^{n}}}(A_{i}x_{n})\in {Q_{i}^{n}}\), we have

By (37), we have for any \(i=1, 2, \dots , N,\) that

Let \(\hat {p}\in \omega _{w}(x_{n})\), there exists a subsequence \(\{x_{n_{m}}\}\subset \{x_{n}\}\) such that \(x_{n_{m}}\rightharpoonup \hat {p}\) as \(m\to \infty \). By the weak lower semicontinuity of the function qi and (39), we get

which means that \(A_{i}\hat {p}\in Q_{i}\) for \(i=1, 2, \dots , N\).

Since ∂c is bounded, there exists a constant δ > 0 such that ∥ξn∥≤ δ, where ξn ∈ ∂c(xn). Then, notice that \(P_{C_{n}}(x_{n})\in C_{n}\), we have

By (34), we have that

By the weak lower semicontinuity of the convex function c and (42), we obtain

Consequently, \(\hat {p}\in C\). Therefore, \(\hat {p}\in {\Omega }\).

Notice that for any p∗∈Ω, \(\lim _{n\rightarrow \infty }\|x_{n}-p^{*}\|\) exists and ωw(xn) ⊂Ω. Therefore, applying Lemma 4, we conclude that the iterative sequence {xn} converges weakly to a solution of the SFPMOS (12). This completes the proof. □

For N = 1, we note the following iterative method for solving the SFP (1).

As an immediate consequence of Theorem 1, we obtain the following corollary.

Corollary 1

Assume that the SFP (1) is consistent. Suppose the sequences \(\{{\rho _{1}^{n}}\}\) and \(\{{\rho _{2}^{n}}\}\) in Algorithm 2 are in (0,1) such that \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), respectively. Then, the sequence {xn} generated by Algorithm 2 converges weakly to a solution p∗∈Ω = {p ∈ H1 : p ∈ C such that Ap ∈ Q}.

3.2 Strong convergence theorem

In this subsection, we propose a new iterative method for solving the SFPMOS (12) in the infinite-dimensional Hilbert spaces, and we prove a strong convergence theorem of the proposed method.

Theorem 2

Assume that the SFPMOS (12) is consistent (i.e., Ω≠∅). Suppose the sequences \(\{{\rho _{1}^{n}}\}\), \(\{{\rho _{2}^{n}}\}\), and {αn} in Algorithm 3 are in (0,1) such that \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), and \(\lim _{n\rightarrow \infty }\alpha _{n}=0\) and \({\sum }_{n=0}^{\infty } \alpha _{n}=\infty \). Then, the sequence {xn} generated by Algorithm 3 converges strongly to the point p∗∈Ω, where p∗ = PΩu.

Proof

For simplicity, the same as we did in the proof of Theorem 1, we introduce some notations first.

where τn is the stepsize given in the Algorithm 3 and can be defined as

where

Then, the iterative sequence {xn} in Algorithm 3 can be rewritten as follows:

Let p∗∈Ω. Using Lemma 2 (1) and by (47), we have that

From (31), we have

Now, we prove the sequence {xn} is bounded. Indeed, using the assumptions imposed on \(\{{\rho _{1}^{n}}\}\), \(\{{\rho _{2}^{n}}\}\) and {αn}, we have from (50) that

Consequently,

This shows that the sequence {xn} is bounded, and {yn} and \(\{A_{i}x_{n}\}_{i=1}^{N}\) as well.

Next, with no loss of generality, we may assume that there exist σ1,σ2 > 0 such that \(2{\rho _{1}^{n}}\left (1-{\rho _{1}^{n}}\right )(1-\alpha _{n})\geq \sigma _{1}\) and \(2{\rho _{2}^{n}}\left (1-{\rho _{2}^{n}}\right )(1-\alpha _{n})\geq \sigma _{2}\) for all n.

Setting sn = ∥xn − p∗∥2, we get from (50) that

Now, we prove sn → 0 by distinguishing two cases. Case 1: Assume that {sn} is eventually decreasing. That is, there exists k ≥ 0 such that sn+ 1 < sn holds for all n ≥ k. In this case, {sn} must be convergent, and from (52) it follows that

where K > 0 is a constant such that 2∥u − p∗∥∥xn+ 1 − p∗∥≤ K for all \(n\in \mathbb {N}\). Since σ1,σ2 > 0, and αn → 0 as \(n\to \infty \), we have from (53) that

and

Next, we show that \(\left \{f_{{Q_{i}^{n}}}^{n}\right \}\to 0\). To do so, it suffices to verify that \(\left \{ \|{\sum }_{i=1}^{N}\vartheta _{i}A^{*}_{i}f_{{Q_{i}^{n}}}^{n}\|^{2}\right \}\) is bounded. Since p∗∈Ω, we note that \(A^{*}_{i}\left (I-P_{{Q_{i}^{n}}}\right )A_{i}p^{*}=0\). Hence, it follows from Lemma 5 that

and since {xn} is bounded, for all \(i=1, 2, \dots , N\), we have the sequence \(\left \{\left \|A_{i}^{*}\left (I-P_{{Q^{n}_{i}}}\right )A_{i}x_{n}\right \|\right \}_{n=1}^{\infty }\) is bounded. This implies that the sequence \(\left \{\left \|{\sum }_{i=1}^{N}\vartheta _{i}A^{*}_{i}f_{{Q_{i}^{n}}}^{n}\right \|\right \}_{n=1}^{\infty }\) is also bounded. Consequently, \(\{\bar {\tau }_{n}\}\) is bounded too. Therefore, we can get from (55) that

for i= 1, 2, …, N.

Next, we verify that ωw(xn) ⊂Ω. For each \(i=1, 2, \dots , N\), since ∂qi is bounded on bounded sets, there exists a constant δ > 0 such that \(\|{\eta _{i}^{n}}\|\leq \delta \) for all n ≥ 0, where \({\eta _{i}^{n}}\in \partial q_{i}(A_{i}x_{n})\). Then, from the fact that \(P_{{Q_{i}^{n}}}(A_{i}x_{n})\in {Q_{i}^{n}}\) and (23), it follows (for \(i=1, 2, \dots , N\)) that

Let \(\hat {p}\in \omega _{w}(x_{n})\), there exists a subsequence \(\{x_{n_{m}}\}\) of {xn} such that \(x_{n_{m}}\rightharpoonup \hat {p}\) as \(m\to \infty \). Then, the weak lower semicontinuity of qi and (58) imply that

It turns out that \(A_{i}\hat {p}\in Q_{i}\) for \(i=1, 2, \dots , N\). Next, we turn to prove that \(\hat {p}\in C\). Since ∂c is bounded, there exists a constant γ > 0 such that ∥ξn∥≤ γ for all n ≥ 0, where ξn ∈ ∂c(xn). Then, from that trivial fact that \(P_{C_{n}}(x_{n})\in C_{n}\) and (22), it follows that

The weak lower semicontinuity of c then implies that

Consequently, \(\hat {p}\in C\). Therefore, \(\hat {p}\in {\Omega }\). Hence, ωw(xn) ⊂Ω.

Moreover, we have the following estimation

since {xn} is bounded and \(\lim _{n\rightarrow \infty }\alpha _{n}=0\), we have that \(\lim _{n\rightarrow \infty }\alpha _{n}\|x_{n}-u\|=0\). Noting that \(\{\|A_{i}^{*}(I-P_{{Q^{n}_{i}}})A_{i}x_{n}\|\}_{n=1}^{\infty }\) is bounded, (57) together with the conditions \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), we have that

which implies that

Furthermore, due to Lemma 1 (1), we get

Taking into account of (52), we have

Applying Lemma 7 to (64), we obtain sn = ∥xn − p∗∥2 → 0.

Case 2: Assume that {sn} is not eventually decreasing. That is, we can find an integer n0 such that \(s_{n_{0}}\leq s_{n_{0}+1}\). Now we define

It is easy to see that Mn is nonempty and satisfies \(M_{n}\subseteq M_{n+1}\). Let

It is clear that \(\phi (n)\to \infty \) as \(n\to \infty \) (otherwise, {sn} is eventually decreasing). It is also clear that sϕ(n) ≤ sϕ(n)+ 1 for all n > n0. Moreover,

In fact, if ϕ(n) = n, then (67) is trivial: if ϕ(n) < n, from (66), there exists some \(j\in \mathbb {N}\) such that ϕ(n) + j = n, we deduce that

and (67) holds again. Since sϕ(n) < sϕ(n)+ 1 for all n > n0, it follows from (53) that

so that

and

Noting that \(\left \{\left \|A_{i}^{*}(I-P_{Q^{\phi (n)}_{i}})A_{i}x_{\phi (n)}\right \|\right \}_{n=1}^{\infty }\) is bounded, for \(i=1, 2, \dots , N\), we also have that

By the same argument to the proof in Case 1, we have ωw(xϕ(n)) ⊂Ω.

Furthermore, by the same argument to the proof in Case 1, from (62), we have that

Thus, one can deduce that

Since sϕ(n) ≤ sϕ(n)+ 1, it follows from (52) that

Hence, \(\lim _{n\rightarrow \infty }s_{\phi (n)}=0\), which together with (73)

which, together with (67), in turn implies that sn → 0, that is, xn → p∗. Therefore, the full iterative sequence {xn} converges strongly to the solution p∗ = PΩ(u) of SFPMOS (12). This completes the proof. □

Corollary 2

Assume that the SFPMOS (12) is consistent (i.e., Ω≠∅). Let x0 ∈ H be an arbitrary initial point, and set n = 0. Let {xn} be a sequence generated via the manner

s where the step-size τn is updated self-adaptively as

where for a constant β > 0

and Cn and \({Q_{i}^{n}}\) are the half-spaces given as in (22) and (23), respectively. Suppose the parameters \(\{{\rho _{1}^{n}}\}, \{{\rho _{2}^{n}}\}, \{\alpha _{n}\}\) are in (0,1) satisfying the conditions in Theorem 2, and \(\{\vartheta _{i}\}_{i=1}^{N}>0\). Then, the sequence {xn} converges strongly to the point p∗∈Ω, where p∗ = PΩ(x0).

Similarly as in Subsection 3.1, for N = 1, we again obtain the following strongly convergent result regarding the SFP (1).

As an immediate consequence of Theorem 2, we obtain the following corollary.

Corollary 3

Assume that the SFP (1) is consistent. Suppose the sequences \(\{{\rho _{1}^{n}}\}\), \(\{{\rho _{2}^{n}}\}\) and {αn} in Algorithm 4 are in (0,1) such that \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), and \(\lim _{n\rightarrow \infty }\alpha _{n}=0\) and \({\sum }_{n=0}^{\infty } \alpha _{n}=\infty \). Then, the sequence {xn} generated by Algorithm 4 converges strongly to the point p∗ = PΩ(u) ∈Ω = {p ∈ H1 : p ∈ C such that Ap ∈ Q}.

4 Application to the generalized split feasibility problem

In this section, we present an application of Theorems 1 and 2 for solving generalized split feasibility problem (another generalization of the SFP) in Hilbert spaces. We recall the generalized split feasibility problem first.

In 2020, Reich and Tuyen [52] first introduced and studied the following generalized split feasibility problem (GSFP).

Let \(H_{i}, i=1, 2, \dots , N,\) be real Hilbert spaces and \(C_{i},~i=1, 2, \dots , N,\) be closed and convex subsets of Hi, respectively. Let \(B_{i}: H_{i}\to H_{i+1},~i=1, 2, \dots , N-1,\) be bounded linear operators such that

Given Hi, Ci and Ai as above, the generalized SFP (GSFP)([52]) is to

That is \(p^{*}\in C_{1}, B_{1}p^{*}\in C_{2},\dots , B_{N-1}B_{N-2}{\dots } B_{1}p^{*}\in C_{N}\). In [52], Reich and Tuyen proved a strong convergence theorem for a modification of the CQ method which solves the GSFP (81). For more details on the GSFP (81), one can read the paper [52].

Remark 1

([43, Remark 1.1]) Letting H = H1,C = C1,Qi = Ci+ 1,1 ≤ i ≤ N − 1, \(A_{1}=B_{1}, A_{2}=B_{2}B_{1}, \dots ,\) and \(A_{N-1}=B_{N-1}B_{N-2}B_{N-3} {\dots } B_{2}B_{1}\), then the SFPMOS (12) becomes the GSFP (81).

From Theorem 1 and Remark 1, we note the following theorem for solving the GSFP (81).

Theorem 3

Let H = H1,C = C1,Qi = Ci+ 1,1 ≤ i ≤ N − 1, \(A_{1}=B_{1}, A_{2}=B_{2}B_{1}, \dots ,\) and \(A_{N-1}=B_{N-1}B_{N-2}B_{N-3} {\dots } B_{2}B_{1}\). Assume that the GSFP (81) is consistent (i.e., S≠∅). Let x0 ∈ C1 be an arbitrary initial point and set n = 0. Let {xn} be the sequence generated by

where \({C^{n}_{1}}\) and \(C^{n}_{i+1}\) are half-spaces of C1 and Ci+ 1 (at the nth iterate ), respectively,

where for a constant β > 0

and the sequences \(\{{\rho _{1}^{n}}\}\), \(\{{\rho _{2}^{n}}\}\subset (0, 1)\) such that \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\) and \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), and the parameter \(\{\vartheta _{i}\}_{i=1}^{N}>0\). Then, the sequence {xn} generated by the iterative scheme (82) converges weakly to a solution p∗∈ S.

Again, using Theorem 2 and Remark 1, we note the following result to solve the GSFP (81).

Theorem 4

Let H = H1,C = C1,Qi = Ci+ 1,1 ≤ i ≤ N − 1, \(A_{1}=B_{1}, A_{2}=B_{2}B_{1}, \dots ,\) and \(A_{N-1}=B_{N-1}B_{N-2}B_{N-3} {\dots } B_{2}B_{1}\). Assume that the GSFP (81) is consistent (i.e., S≠∅). Let u ∈ C1 be a fixed point and x0 ∈ C1 is an arbitrary initial point, and set n = 0. Let {xn} be the sequence generated by

where \({C^{n}_{1}}\) and \(C^{n}_{i+1}\) are half-spaces of C1 and Ci+ 1, respectively,

where for a constant β > 0 and

the sequences \(\{{\rho _{1}^{n}}\}\), \(\{{\rho _{2}^{n}}\},\{\alpha _{n}\}\subset (0, 1)\) such that \(0<a_{1}\leq {\rho _{1}^{n}}\leq b_{1}<1\), \(0<a_{2}\leq {\rho _{2}^{n}}\leq b_{2}<1\), \(\lim _{n\rightarrow \infty }\alpha _{n}=0\) and \({\sum }_{n=0}^{\infty } \alpha _{n}=\infty \), and the parameter \(\{\vartheta _{i}\}_{i=1}^{N}>0\). Then, the sequence {xn} generated by the iterative scheme (83) converges strongly to the solution p∗∈ S, where p∗ = PS(u).

5 Numerical results

In this section, we present some numerical examples to illustrate the implementation and efficiency of our proposed methods compared to some existing results by solving some problems. The numerical results are completed on a standard FUJITSUNOTEBOOK laptop with 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80GHz 2.80 GHz with memory 16GB. The code is implemented in MATLAB R2022a. In our numerical experiments, Iter. (n) stands for the number of iterations and CPU(s) for the Elapsed time-run in seconds.

Example 1

([43]) Consider \(H=\mathbb {R}^{10}\), \(H_{1}=\mathbb {R}^{20}\), \(H_{2}=\mathbb {R}^{30}\) and \(H_{3}=\mathbb {R}^{40}\). Find a point \(p^{*}\in \mathbb {R}^{10}\) such that

where the sets C and Qi, and the linear bounded operators Ai are defined by

where \(\textbf {c}\in \mathbb {R}^{10}\), \(\textbf {c}_{1}\in \mathbb {R}^{20}\), \(\textbf {c}_{2}\in \mathbb {R}^{30}\), \(\textbf {c}_{3}\in \mathbb {R}^{40}\), \(\textbf {r}, \textbf {r}_{1}, \textbf {r}_{2}, \textbf {r}_{3}\in \mathbb {R}\), and \(A_{1}:\mathbb {R}^{10}\to \mathbb {R}^{20}\), \(A_{2}:\mathbb {R}^{10}\to \mathbb {R}^{30}\), \(A_{3}:\mathbb {R}^{10}\to \mathbb {R}^{40}\). In this case, for any \(x\in \mathbb {R}^{10}\) we have c(x) = ∥x −c∥2 −r2 and \(q_{i}(A_{i}x)=\|A_{i}x-\textbf {c}_{i}\|^{2}-\textbf {r}^{2}_{i}\) for i = 1,2,3. According to (22) and (23), the half-spaces Cn and \({Q^{n}_{i}}\) (i = 1,2,3), respectively of the sets C and Qi are determined at a point xn and Aixn, respectively as follows:

Then, the metric projections onto the half-spaces Cn and \({Q^{n}_{i}}\) (i = 1,2,3), can be easily calculated. The elements of the representing matrices Ai are randomly generated in the closed interval [− 5,5], the coordinates of the centers c,c1,c2,c3 are randomly generated in the closed interval [− 1,1], and the radii r,r1,r2,r3 are randomly generated in the closed intervals [10,20], [20,40], [30,60] and [40,80], respectively. For simplicity, denote \(e_{1}=(1, 1, \dots , 1)^{T}\in \mathbb {R}^{10}\).

In this example, we examine the convergence of the sequence {xn} which is defined by Algorithms 1 and 3 by solving problem (84) compared to the recently introduced iterative methods for solving the SFPMOS (12) given by Scheme (13), Scheme (14), and with the following viscosity approximation an optimization approach method proposed by Reich et al. [53] for solving the SFPMOS (12). For any given point x0 ∈ H, {xn} is a sequence generated by the iterative method

where f : C → C is a strict contraction mapping of H into itself with the contraction constant 𝜃 ∈ [0,1), {αn}⊂ (0,1), \(I(x_{n}) = \left \{i : \left \|A_{i}x_{n}-P_{Q_{i}}A_{i}x_{n}\right \|\right .\) \(\left .=\max \limits _{i=1, 2, \dots , N}\left \|A_{i}x_{n}-P_{Q_{i}}A_{i}x_{n}\right \|\right \}\), γi,n ≥ 0 for all i ∈ I(xn) with \({\sum }_{i\in I(x_{n})}\gamma _{i,n}=1\), and for \(\{\rho _{n}\}\subset [\bar {a}, \bar {a}]\subset (0, 2)\) \(\{\lambda _{n}\}\subset [0, \infty )\) such that

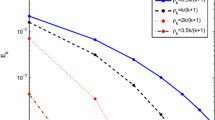

For comparison purpose, we consider the values of the parameters appeared in the methods as follows. For Algorithms 1 and 3, we take β = 0.05, \({\rho ^{n}_{1}}=\frac {1}{10^{4}n+1}={\rho ^{n}_{2}}\) and \(\vartheta _{i}=\frac {i}{12}, i=1,2,3\). For Algorithm 3, Scheme (14), and Scheme (87) \(\alpha _{n}=\frac {1}{n+1}\). For Scheme (13) and Scheme (14), we take λn = 0.00005. For Scheme (14) and Scheme (87), we take f(x) = 0.975x. Moreover, for Scheme (87), we take \(\gamma _{1,n}=\frac {1}{6}\), \(\gamma _{2,n}=\frac {1}{3}\), \(\gamma _{3,n}=\frac {1}{2}\) and \(\rho _{n}=\frac {1}{10^{4}n+1}\). Using En = ∥xn+ 1 − xn∥2 < 10− 8 as stopping criteria, for different choices of the fixed point u and the initial point x0, the results of numerical experiments are reported in Table 1 and Fig. 1.

It can be observed from Table 1 and Fig. 1 that for each choices of (u,x0), our proposed methods Algorithms 1 and 3 have better performance interms of the iteration numbers (Iter. (n)) and comparatively the CPU-run time in seconds (CPU(s)) than of the compared methods. More precisely, Algorithms 1 and 3 have less number of iterations and take small CPU-time to run than of the iterative methods given by Scheme (13), Scheme (14), and Scheme (87).

Example 2

Let H1 = H2 = L2([0,2π]) with the inner product 〈.〉 defined by

and with the norm ∥.∥ defined by

Furthermore, we consider the following half-spaces

In addition, we consider a linear continuous operator A : L2([0,2π]) → L2([0,2π]), where (Ax)(t) = x(t). Then, (A∗x)(t) = x(t) and ∥A∥ = 1. That is, A is an identity operator. The metric projection onto C and Q have an explicit formula [54]. We can also write the projections onto C and the projections onto Q as follows:

Now, we solve the following problem

In this example, we examine the numerical behaviour of our proposed method: Algorithm 4 and compare it with the strongly convergent iterative algorithms given by Scheme (10) and Scheme (11) by solving problem (89). For comparison purpose, we take the following data: For Algorithm 4, we take, β = 0.05, \({\rho _{1}^{n}}={\rho _{2}^{n}}=\frac {n}{n+1}\) and \(\alpha _{n}=\frac {1}{n+1}\). For Schemes (10) and (11), we take \(\rho _{n}=\frac {n}{n+1}\) and \(\alpha _{n}=\frac {1}{n+1}\).

Now, using En = ∥xn+ 1 − xn∥ < 10− 4 as stopping criteria for all methods, for different choices of the fixed point u and the initial point x0, the outcomes of the numerical experiments of the compared methods are reported in Table 2 and Fig. 2.

It can be observed from Table 2 and Fig. 2 that for each choices of u and x0, Algorithm 4 is faster in terms of less number of iterations (Iter. (n)) and CPU-run time in seconds (CPU(s)) than the compared algorithms.

Example 3

The problem of computing sparse solutions (i.e., solutions where only a very small number of entries are nonzero) to linear inverse problems arises in a large number of application areas, for instance, in image restoration [55], channel equalization [56], echo cancellation [57], and stock market analysis [58]. The linear inverse problem consists of computing sparse solutions of a vector that has been digitized and has been degraded by an additive noise. Without loss of generality, for a vector x ∈ H1 and an observed vector y ∈ H2, a model including an additive noise can be written as

where A is a bounded linear operator between the two Hilbert spaces H1 and H2 and η ∈ H2 denotes the additive noise.

Suppose that H1 = H2 = L2([0,1]) with norm \(\|x\|:=\left ({{\int \limits }_{0}{\!}^{1}}|x(t)|^{2}dt\right )^{\frac {1}{2}}\) and inner product \(\langle x, y\rangle :={{\int \limits }_{0}{\!}^{1}}x(t)y(t)dt\), x,y ∈ L2([0,1]). Define the Volterra integral operator \(A:L^{2}([0,1])\rightarrow L^{2}([0,1])\) by

Then, A is bounded linear monotone and \(\|A\|=\frac {2}{\pi }\) (see [59, Problem 188, p100; Solution 188, p300]). Using Algorithm 4, we develop an iterative algorithm to recover the solution of the linear equation Ax = y − η. Furthermore, we compare the performance of our proposed Algorithm 4 and Scheme (10).

We are interested in solutions x∗∈{x ∈ C : Ax ∈ Q}, where C is the cone of functions x(t) that are negative for t ∈ [0,0.25] and positive for t ∈ [0.25,1] and Q = [a(t),b(t)] := {y(t) : a(t) ≤ y(t) ≤ b(t),0 ≤ t ≤ 1} is a box delimited by the functions a(t) and b(t). The metric projection PQ can be computed by formula:

For some problems, the solution is almost sparse. To ensure the existence of the solution of the consider problem, K-sparse vector x∗(t) is generated randomly in C. Taking y(t) = Ax∗(t) and a(t) = y(t) − 0.01, b(t) = y(t) + 0.01, we have Q = [a(t),b(t)]. We take K = 30, K = 55 and K = 70 (see Fig. 3). The problem of interest is to find x ∈ C such that Ax ∈ Q.

We compare the behavior of Algorithm 4 and Scheme (10) for the same initial point \(x_{0}=e^{4t^{2}}\) and same fixed point u = t2. Set \({\rho _{1}^{n}}=\frac {2n}{3n+1}={\rho _{2}^{n}}\) and \(\alpha _{n}=\frac {1}{n}\) in Algorithm 4 and \(\rho _{n}=\frac {2n}{3n+1}\) and \(\alpha _{n}=\frac {1}{n}\) in Scheme (10). In the implementation, we take En < ε = 10− 4 as the stopping criterion, where

In Table 3, we present our numerical results with different K-sparse (K = 30, 55, 70). Table 3 shows the number of iterations and the time of execution in seconds (CPU(s)) of Algorithm 4 and Scheme (10). In Fig. 4, we report the behavior of Algorithm 4 and Scheme (10) for K = 30, 55, 70. Furthermore, Fig. 4 presents error value versus the iteration numbers. It can be seen that Algorithm 4 is significantly faster than Scheme (10). This shows the effectiveness of our proposed algorithms.

Number of iterations and error estimate for Algorithm 4 and Scheme (10)

References

Censor, Y., Elfving, T.: A multiprojection algorithm using bregman projections in a product space. Numer. Algorithms 8(2), 221–239 (1994)

Byrne, C.: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18(2), 441 (2002)

Censor, Y., Elfving, T., Kopf, N., Bortfeld, T.: The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 21(6), 2071 (2005)

Censor, Y., Bortfeld, T., Martin, B., Trofimov, A.: A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 51(10), 2353 (2006)

Censor, Y., Segal, A.: Iterative projection methods in biomedical inverse problems. Mathematical methods in biomedical imaging and intensity-modulated radiation therapy (IMRT) 10, 65–96 (2008)

Wang, J., Hu, Y., Li, C., Yao, J.-C.: Linear convergence of CQ algorithms and applications in gene regulatory network inference. Inverse Probl. 33 (5), 055017 (2017)

Ansari, Q. H., Rehan, A.: Split feasibility and fixed point problems. In: Nonlinear Analysis, Springer, pp. 281–322 (2014)

Xu, H.-K.: Iterative methods for the split feasibility problem in infinite-dimensional hilbert spaces. Inverse Probl. 26(10), 105018 (2010)

Byrne, C.: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20(1), 103 (2003)

Takahashi, W.: The split feasibility problem and the shrinking projection method in banach spaces. J. Nonlinear Convex Anal. 16(7), 1449–1459 (2015)

Xu, H.-K.: A variable krasnosel’skii–mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 22(6), 2021 (2006)

Yang, Q.: The relaxed CQ algorithm solving the split feasibility problem. Inverse Probl. 20(4), 1261 (2004)

Gibali, A., Mai, D. T., et al.: A new relaxed CQ algorithm for solving split feasibility problems in hilbert spaces and its applications. J. Ind. Manag. Optim. 15(2), 963 (2019)

Sahu, D.R., Cho, Y.J., Dong, Q.L., Kashyap, M.R., Li, X.H.: Inertial relaxed CQ algorithms for solving a split feasibility problem in hilbert spaces. Numer. Algorithms 1–21 (2020)

López, G., Martín-Márquez, V., Wang, F., Xu, H.-K.: Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 28(8), 085004 (2012)

He, S., Zhao, Z.: Strong convergence of a relaxed CQ algorithm for the split feasibility problem. J. Inequal. Appl. 2013(1), 197 (2013)

Yao, Y., Postolache, M., Liou, Y.-C.: Strong convergence of a self-adaptive method for the split feasibility problem. Fixed Point Theory and Applications 2013(1), 201 (2013)

Dang, Y., Sun, J., Xu, H.: Inertial accelerated algorithms for solving a split feasibility problem. Journal of Industrial and Management Optimization 13(3), 1383–1394 (2017)

Gibali, A., Liu, L.-W., Tang, Y.-C.: Note on the modified relaxation CQ algorithm for the split feasibility problem. Optim. Lett. 12(4), 817–830 (2018)

Zhang, W., Han, D., Li, Z.: A self-adaptive projection method for solving the multiple-sets split feasibility problem. Inverse Probl. 25(11), 115001 (2009)

Zhao, J., Yang, Q.: A simple projection method for solving the multiple-sets split feasibility problem. Inverse Probl. Sci. Eng. 21(3), 537–546 (2013)

López, G., Martin, V., Xu, H.K., et al.: Iterative algorithms for the multiple-sets split feasibility problem. Biomed. Math.: Promising Directions in Imag, Therapy Planning Inverse Problems 243–279 (2009)

Dang, Y.-, Yao, J., Gao, Y.: Relaxed two points projection method for solving the multiple-sets split equality problem. Numer. Algorithms 78 (1), 263–275 (2018)

Iyiola, O.S., Shehu, Y.: A cyclic iterative method for solving multiple sets split feasibility problems in banach spaces. Quaest. Math. 39(7), 959–975 (2016)

Shehu, Y.: Strong convergence theorem for multiple sets split feasibility problems in banach spaces. Numer. Funct. Anal. Optim. 37(8), 1021–1036 (2016)

Suantai, S., Pholasa, N., Cholamjiak, P.: Relaxed CQ algorithms involving the inertial technique for multiple-sets split feasibility problems. Revista de la Real Academia de Ciencias Exactas, Físicas y Naturales. Serie A. Matemáticas 113(2), 1081–1099 (2019)

Wang, J., Hu, Y., Yu, C. K. W., Zhuang, X.: A family of projection gradient methods for solving the multiple-sets split feasibility problem. J. Optim. Theory Appl. 183(2), 520–534 (2019)

Mewomo, O.T., Ogbuisi, F.U.: Convergence analysis of an iterative method for solving multiple-set split feasibility problems in certain banach spaces. Quaest. Math. 41(1), 129–148 (2018)

Buong, N., Hoai, P. T. T., Binh, K. T.: Iterative regularization methods for the multiple-sets split feasibility problem in hilbert spaces. Acta Appl. Math. 165(1), 183–197 (2020)

Yao, Y., Postolache, M., Zhu, Z.: Gradient methods with selection technique for the multiple-sets split feasibility problem. Optimization (2019)

Wang, X.: Alternating proximal penalization algorithm for the modified multiple-sets split feasibility problems. J. Inequal. Appl. 2018(1), 1–8 (2018)

Taddele, G. H., Kumam, P., Gebrie, A. G., Sitthithakerngkiet, K.: Half-space relaxation projection method for solving multiple-set split feasibility problem. Math. Comput. Appl. 25(3), 47 (2020)

Taddele, G. H., Kumam, P., Gebrie, A. G.: An inertial extrapolation method for multiple-set split feasibility problem. J. Inequal. Appl. 2020 (1), 1–22 (2020)

Sunthrayuth, P., Tuyen, T. M.: A generalized self-adaptive algorithm for the split feasibility problem in banach spaces. Bull. Iran. Math. Soc. 1–25 (2021)

Censor, Y., Segal, A.: The split common fixed point problem for directed operators. J. Convex Anal 16(2), 587–600 (2009)

Moudafi, A.: The split common fixed-point problem for demicontractive mappings. Inverse Probl. 26(5), 055007 (2010)

Censor, Y., Gibali, A., Reich, S.: Algorithms for the split variational inequality problem. Numer. Algorithms 59(2), 301–323 (2012)

Byrne, C., Censor, Y., Gibali, A., Reich, S.: The split common null point problem. J. Nonlinear Convex Anal 13(4), 759–775 (2012)

Tuyen, T. M., Ha, N. S., Thuy, N. T. T.: A shrinking projection method for solving the split common null point problem in banach spaces. Numer. Algorithms 81(3), 813–832 (2019)

Reich, S., Tuyen, T. M.: Two projection algorithms for solving the split common fixed point problem. J. Optim. Theory Appl. 186(1), 148–168 (2020)

Reich, S., Tuyen, T. M.: Two new self-adaptive algorithms for solving the split common null point problem with multiple output sets in hilbert spaces. J. Fixed Point Theory Appl. 23(2), 1–19 (2021)

Reich, S., Tuyen, T. M.: Projection algorithms for solving the split feasibility problem with multiple output sets. J. Optim. Theory Appl. 190(3), 861–878 (2021)

Reich, S., Truong, M. T., Mai, T. N. H.: The split feasibility problem with multiple output sets in hilbert spaces. Optim. Lett 1–19 (2020)

Bauschke, H. H., Combettes, P. L., et al.: Convex analysis and monotone operator theory in hilbert spaces, vol. 408. Springer, Berlin (2011)

Goebel, K., Simeon, R.: Uniform convexity, hyperbolic geometry, and nonexpansive mappings. Dekker (1984)

Opial, Z.: Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 73(4), 591–597 (1967)

Rockafellar, R. T.: Monotone operators and the proximal point algorithm. SIAM journal on control and optimization 14(5), 877–898 (1976)

Cholamjiak, P., Suantai, S., et al.: A new CQ algorithm for solving split feasibility problems in hilbert spaces. Bull. Malays. Math. Sci. Soc. 42 (5), 2517–2534 (2019)

Maingé, P.-E.: Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Analysis 16(7-8), 899–912 (2008)

Xu, H.-K.: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66(1), 240–256 (2002)

Fukushima, M.: A relaxed projection method for variational inequalities. Math. Program. 35(1), 58–70 (1986)

Reich, S., Tuyen, T. M.: Iterative methods for solving the generalized split common null point problem in hilbert spaces. Optimization 69(5), 1013–1038 (2020)

Reich, S., Tuyen, T. M., Ha, M. T. N.: An optimization approach to solving the split feasibility problem in hilbert spaces. J. Glob. Optim 1–16 (2020)

Cegielski, A.: Iterative methods for fixed point problems in hilbert spaces, vol. 2057. Springer, Berlin (2012)

Jeffs, B. D., Gunsay, M.: Restoration of blurred star field images by maximally sparse optimization. IEEE Trans. Image Process. 2(2), 202–211 (1993)

Fevrier, I. J., Gelfand, S. B., Fitz, M. P.: Reduced complexity decision feedback equalization for multipath channels with large delay spreads. IEEE Trans. Commun. 47(6), 927–937 (1999)

Duttweiler, D. L.: Proportionate normalized least-mean-squares adaptation in echo cancelers. IEEE Transactions on speech and audio processing 8(5), 508–518 (2000)

Ramsey, J. B., Zhang, Z.: The application of wave form dictionaries to stock market index data. In: Predictability of complex dynamical systems, Springer, pp. 189–205 (1996)

Halmos, P. R.: A hilbert space problem book. Springer-Verlag, New York (1982)

Acknowledgements

The authors acknowledge the financial support provided by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), KMUTT. Guash Haile Taddele is supported by the Petchra Pra Jom Klao Ph.D. Research Scholarship from King Mongkut’s University of Technology Thonburi (Grant No.37/2561). Moreover, we would like to thank the Editor and Reviewers for taking the time and effort necessary to review the manuscript. We sincerely appreciate all valuable comments and suggestions, which helped us to improve the quality of the manuscript.

The authors acknowledge the financial support provided by “Mid-Career Research Grant” (N41A640089).

Funding

This research was funded by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), Faculty of Science, KMUTT. The first author was supported by the Petchra Pra Jom Klao Ph.D. Research Scholarship from King Mongkut’s University of Technology Thonburi with Grant No. 37/2561.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Author contribution

All authors contributed equally to the manuscript and read and approved the final manuscript.

Availability of data and material

Not applicable.

Code availability

Not available.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Taddele, G.H., Kumam, P., Sunthrayuth, P. et al. Self-adaptive algorithms for solving split feasibility problem with multiple output sets. Numer Algor 92, 1335–1366 (2023). https://doi.org/10.1007/s11075-022-01343-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01343-6

Keywords

- Split feasibility problem

- Split feasibility problem with multiple output sets

- Hilbert space

- Relaxed CQ algorithm

- Self-adaptive technique