Abstract

This paper is concerned with a linearized second-order finite difference scheme for solving the nonlinear time-fractional Schrödinger equation in d (d = 1,2,3) dimensions. Under a weak assumption on the nonlinearity, the optimal error estimate of the numerical solution is established without any restriction on the grid ratio. Besides the standard energy method, the key tools for analysis include the mathematical induction method, several inverse Sobolev inequalities, and a discrete fractional Gronwall-type inequality. The convergence rate of the proposed scheme is \(O(\tau^{2} + h^{2})\) with time step τ and mesh size h. Numerical experiments are carried out to confirm the theoretical analysis.

1 Introduction

In this paper, we consider the d-dimensional (d = 1,2,3) nonlinear time-fractional Schrödinger (NTFS) equation

with initial condition

and boundary condition

where \(i = \sqrt {-1}\) is the imaginary unit, t is the time variable, x is the spatial coordinate in \(\mathbb {R}^{d}\), \({\Omega } \subset \mathbb {R}^{d}\) is a bounded computational domain, u = u(x,t) is the unknown complex-valued wave function, \(f \in C^{1}(\mathbb {R}^{+})\) is a given real-valued function, \(u_{0}\) is a given complex-valued function, and \({}^{\mathcal {C}}_{0}\mathcal {D}_{t}^{\alpha }\) with α ∈ (0,1) represents the Caputo fractional derivative defined by

Here Γ(⋅) denotes the standard gamma function \( {\Gamma }(z)={\int \limits }_{0}^{\infty }t^{z-1}e^{-t} d t \). The NTFS equation is a widely used model for many natural phenomena in physics [19, 38, 44, 46]. As α tends to 1, the NTFS equation reduces to the well-known nonlinear Schrödinger (NLS) equation. For numerical methods for solving the NLS equation, we refer to [3, 5,6,7, 12, 16, 17, 26, 27, 31, 39, 40, 42, 43, 45, 47, 50, 52] and references therein.

In recent years, extensive numerical studies have been carried out in the literature for solving fractional partial differential equations. These numerical methods can be roughly divided into two types: indirect ones and direct ones. The indirect methods [10, 11, 36, 37, 54] reformulate the time-fractional differential equations as integro-differential equations, while the direct methods [1, 4, 8, 9, 13, 15, 18, 20,21,22,23,24,25, 30, 32, 34, 35, 48, 49, 51] approximate the fractional derivative directly by some numerical scheme. From the perspective of practical implementation, the direct methods are much easier to implement than the indirect methods. Most of the direct methods use the finite difference method or the finite element method in the spatial direction, which differ little from the numerical schemes for integro-differential equations.

In [33, 41], an L1 approximation to the Caputo fractional derivative was presented, whose truncation error is only \(O(\tau^{2-\alpha})\). To improve the accuracy, an L1-2 formula [14] and an L2-1σ formula [2] were proposed to approximate the Caputo fractional derivative with truncation error \(O(\tau^{3-\alpha})\). In fact, for the integer-order NLS equation (α = 1), the L1-2 formula reduces to the well-known second-order backward differentiation formula, and the L2-1σ formula reduces to the well-known Crank-Nicolson formula.
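To make the first-order-type accuracy of the L1 approximation concrete, the following minimal sketch applies the standard uniform-mesh L1 formula to \(u(t) = t^{2}\), whose Caputo derivative is known in closed form, \(2t^{2-\alpha}/\Gamma(3-\alpha)\). The function names `caputo_l1` and `l1_error` are hypothetical and chosen only for this illustration; the test function and mesh sizes are assumptions, not data from the paper.

```python
import math
import numpy as np


def caputo_l1(u_vals, tau, alpha):
    """L1 approximation of the Caputo derivative at t_n = n*tau.

    u_vals holds u(t_0), ..., u(t_n) on a uniform mesh with step tau.
    """
    n = len(u_vals) - 1
    k = np.arange(n, dtype=float)
    # L1 weights b_k = (k+1)^(1-alpha) - k^(1-alpha)
    b = (k + 1.0) ** (1.0 - alpha) - k ** (1.0 - alpha)
    du = np.diff(u_vals)  # du[j] = u_{j+1} - u_j
    # weight b_k multiplies u_{n-k} - u_{n-k-1} = du[n-k-1]
    return float(np.sum(b * du[::-1])) / (tau ** alpha * math.gamma(2.0 - alpha))


def l1_error(N, alpha, T=1.0):
    """Error of the L1 formula at t = T for the test function u(t) = t^2."""
    tau = T / N
    t = tau * np.arange(N + 1)
    exact = 2.0 * T ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    return abs(caputo_l1(t ** 2, tau, alpha) - exact)


if __name__ == "__main__":
    alpha = 0.5
    e_coarse, e_fine = l1_error(64, alpha), l1_error(128, alpha)
    # the observed order is expected to be close to 2 - alpha
    print(e_coarse, e_fine, math.log2(e_coarse / e_fine))
```

Halving τ should reduce the error by a factor of roughly \(2^{2-\alpha}\), which is the loss of accuracy that motivates the higher-order formulas discussed above.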

Though extensive numerical studies for time-fractional partial differential equations have been carried out in the literature, few numerical methods have been proposed for the multi-dimensional nonlinear time-fractional Schrödinger equation. In [25], Li, Wang and Zhang proposed a linearized L1-Galerkin finite element method to solve the nonlinear time-fractional Schrödinger equation in multiple dimensions. By a temporal-spatial error-splitting method and a fractional Gronwall-type inequality, they obtained the optimal error estimate of the numerical scheme without any grid ratio condition, and the convergence order was proved to be \(O(\tau^{2-\alpha} + h^{k})\) with time step τ and mesh size h. In [48], to improve the temporal accuracy, Wang et al. adopted the L2-1σ method to approximate the Caputo time-fractional derivative and utilized the Galerkin finite element method in space to derive two numerical methods with second-order accuracy in time; they then established the optimal error estimates by a method similar to that in [25]. However, as far as we know, there are few analyses of finite difference schemes for the NTFS equation. Hence, in this work we derive an accurate finite difference scheme for the NTFS equation and establish its optimal error estimate. In summary, the main contributions of this paper are threefold:

(1) A linearized L2-1σ finite difference scheme with second-order accuracy in time is proposed for the NTFS equation (1.1);

(2) The optimal error estimate of the proposed scheme is established without any restriction on the grid ratio. Meanwhile, a new analysis of an iterative procedure for obtaining the numerical solution at the first time level is given;

(3) A novel and concise analysis method is introduced to establish the optimal error estimate by combining the standard energy method, the mathematical induction method, inverse Sobolev inequalities, and a fractional Gronwall-type inequality. In fact, by using this new analysis method, one can avoid estimating the semi-\(H^{1}\) norm or the semi-\(H^{2}\) norm of the “error” function, which not only makes the analysis more concise than the existing ones in the literature but also requires only \(f \in C^{1}(\mathbb {R}^{+})\) instead of the \(f \in C^{3}(\mathbb {R}^{+})\) required in existing works.

The rest of this paper is organized as follows. In Section 2, a linearized fully discrete numerical scheme is proposed and the main convergence result is stated. In Section 3, a time-fractional Gronwall-type inequality for L2-1σ approximation is introduced and the L2 error estimate of the numerical solution is established without any restriction on the grid ratio. In Section 4, several numerical examples are provided to verify our theoretical results. Finally, conclusions and future perspectives are drawn in Section 5.

2 An accurate finite difference scheme and main result

For simplicity, we here only consider the NTFS equation in two dimensions with computational domain Ω = (a,b) × (c,d). The extension to the three-dimensional case is straightforward with minor modifications. The initial-boundary value problem of the two-dimensional NTFS equation reads

For a positive integer N, choose the time step τ = T/N and denote the time levels \(t_{n} = n\tau\), n = 0,1,2,⋯ ,N, where \(0<T<T_{\max}\) with \(T_{\max}\) the maximal existence time of the solution. Choose mesh sizes \(h_{1} = (b-a)/J\), \(h_{2} = (d-c)/K\) with two positive integers J and K; let \(h = \max\{h_{1},h_{2}\}\) and \(\tilde {h} = \min\{h_{1},h_{2}\}\) satisfy \(h \leq C_{0}\tilde {h}\) with \(C_{0}\) a positive constant; and denote grid points \((x_{j},y_{k}) = (a + jh_{1}, c + kh_{2})\) for j = 0,1,2,⋯ ,J, k = 0,1,2,⋯ ,K.
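The discretization parameters just introduced can be set up as in the following sketch. The helper name `make_grid` and the dictionary layout are hypothetical and serve only as an illustration; the mesh-ratio quantity \(h/\tilde{h}\) returned here is the smallest admissible value of the constant \(C_{0}\).

```python
import numpy as np


def make_grid(a, b, c, d, J, K, T, N):
    """Uniform space-time grid on (a, b) x (c, d) x [0, T] (illustrative helper)."""
    tau = T / N
    h1, h2 = (b - a) / J, (d - c) / K
    t = tau * np.arange(N + 1)      # t_n = n * tau
    x = a + h1 * np.arange(J + 1)   # x_j = a + j * h1
    y = c + h2 * np.arange(K + 1)   # y_k = c + k * h2
    X, Y = np.meshgrid(x, y, indexing="ij")  # X[j, k] = x_j, Y[j, k] = y_k
    return {
        "tau": tau, "h1": h1, "h2": h2,
        "h": max(h1, h2), "h_tilde": min(h1, h2),
        "t": t, "x": x, "y": y, "X": X, "Y": Y,
    }


if __name__ == "__main__":
    g = make_grid(-1.0, 1.0, 0.0, 1.0, J=8, K=8, T=1.0, N=4)
    print(g["h"] / g["h_tilde"])  # smallest admissible C_0 for this mesh
```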

Introduce three index sets

and three corresponding grid sets

From the notations of above index sets and corresponding grid sets, we can easily see that

For simplicity, we define a space of grid functions defined on \(\overline {\Omega }_{h}\) as

We denote by \(U_{j,k}^{n}\) and \(u_{j,k}^{n}\) the numerical approximation and the exact value of u at the point \((x_{j},y_{k},t_{n})\), respectively. For a grid function \(\omega^{n} \in X_{h}\), we introduce the L2-1σ formula given in [2] to approximate the Caputo derivative, i.e.,

where \(\mu = \tau^{\alpha}\Gamma(2-\alpha)\), \(\sigma = 1-\alpha/2\), and

with

Remark 2.1

Because the L2-1σ formula uses a linear interpolation on the last temporal layer, which differs from the quadratic interpolation used on the other temporal layers, \(C_{l}^{\sigma }\) depends on n; that is, the coefficients \(C_{l}^{\sigma }\) are different at every time level.

For a function \(v \in C^{3}([0,T])\), the local truncation error between \(D_{\sigma }^{\alpha } v(t_{n})\) and \({}^{\mathcal {C}}_{0} D_{t}^{\alpha } v(t_{n+\sigma })\) satisfies [2]

where \(t_{n+\sigma} = (n+\sigma)\tau\).
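As an illustration of the L2-1σ formula, the sketch below builds its coefficients on a uniform mesh and applies them to \(u(t) = t^{2}\). The closed-form coefficients used here are an assumption: they are obtained by integrating the kernel against the interpolants described in Remark 2.1 (quadratic on the earlier layers, linear on the last one), following the construction in [2], and the array `c` is meant to play the role of \(C_{l}^{\sigma}\). The function names are hypothetical.

```python
import math
import numpy as np


def l21sigma_coeffs(n, alpha):
    """Coefficients c_0, ..., c_n of the L2-1sigma formula at level n (uniform mesh)."""
    sigma = 1.0 - alpha / 2.0
    l = np.arange(1, n + 1, dtype=float)
    a = np.empty(n + 1)
    a[0] = sigma ** (1.0 - alpha)
    a[1:] = (l + sigma) ** (1.0 - alpha) - (l - 1.0 + sigma) ** (1.0 - alpha)
    b = np.zeros(n + 1)  # b[0] is unused
    b[1:] = (((l + sigma) ** (2.0 - alpha) - (l - 1.0 + sigma) ** (2.0 - alpha))
             / (2.0 - alpha)
             - 0.5 * ((l + sigma) ** (1.0 - alpha) + (l - 1.0 + sigma) ** (1.0 - alpha)))
    c = a.copy()  # for n = 0 the single coefficient is a_0
    if n >= 1:
        c[0] = a[0] + b[1]
        c[1:n] = a[1:n] + b[2:n + 1] - b[1:n]
        c[n] = a[n] - b[n]
    return c


def caputo_l21sigma(u_vals, tau, alpha):
    """Approximate the Caputo derivative at t_{n+sigma} from u(t_0), ..., u(t_{n+1})."""
    n = len(u_vals) - 2
    c = l21sigma_coeffs(n, alpha)
    du = np.diff(u_vals)  # du[j] = u_{j+1} - u_j
    # c_l multiplies u_{n-l+1} - u_{n-l} = du[n-l]
    return float(np.sum(c * du[::-1])) / (tau ** alpha * math.gamma(2.0 - alpha))


if __name__ == "__main__":
    alpha, tau, n = 0.4, 0.1, 7
    t = tau * np.arange(n + 2)
    t_sigma = (n + 1.0 - alpha / 2.0) * tau
    exact = 2.0 * t_sigma ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    print(abs(caputo_l21sigma(t ** 2, tau, alpha) - exact))
```

With \(\sigma = 1-\alpha/2\) the formula reproduces the Caputo derivative of a quadratic exactly (up to rounding), which is the reason for this particular choice of the evaluation point \(t_{n+\sigma}\); for smoother non-polynomial functions the error is governed by the truncation estimate quoted above.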

As usual, for any grid function ωn ∈ Xh, we introduce the following finite difference quotient operators/notations,

For any grid functions w,v ∈ Xh, we define discrete inner products and discrete norms over Xh as

where \(\overline {v}\) is the complex conjugate of v, \(|w|_{1}\) and \(|w|_{2}\) are semi-norms of the grid function \(w \in X_{h}\), and \(\|w\|, \|w\|_{1}, \|w\|_{2}\) are norms of the grid function \(w \in X_{h}\).

Throughout the paper, we denote C as a generic positive constant which depends on the regularity of the exact solutions and the given data but is independent of the time step τ and the grid size h.

2.1 Finite difference scheme

We now propose the following finite difference scheme for solving the problem (2.1)–(2.3):

Due to the local extrapolation used in approximating the nonlinear term, the above scheme is not self-starting. In order to start it, we introduce the following iterative algorithm to obtain \(U^{1}=U^{1,m_{\alpha }}\in {X_{h}}\):

where \(m_{\alpha }:=\left [\frac {1}{\alpha } + \frac {1}{2}\right ]\) and \(W^{1,s} = (1-\sigma)U^{0} + \sigma U^{1,s}\) for \(s = 0,1,2,\cdots ,m_{\alpha}\).

Remark 2.2

For the integer-order NLS equation (α = 1), the above iterative algorithm reduces to a two-level linearized implicit scheme in which the linear terms are approximated implicitly and the nonlinear terms explicitly.

2.2 Main result

In this paper, we assume that the exact solution satisfies

with positive number ε arbitrarily small.

Remark 2.3

In order to deal with the singularity of the time-fractional derivative (1.4) at time t = 0, we use a particular two-level scheme (i.e., the scheme (2.8)–(2.10)) to compute the numerical solution at the first time level. As can be seen from the analysis of the local truncation error and the convergence rate of the two-level scheme, we only need to require that the exact solution u on the initial time interval [0,τ] satisfies \({\int \limits }_{0}^{\tau } \|u_{t}(\cdot , \cdot , \theta \tau )\| d\theta \leq C\).

We now state our main theoretical result in the following theorem.

Theorem 2.1

Suppose that the system (2.1)–(2.3) has a unique solution u = u(x,y,t) satisfying (2.11), then the scheme (2.5)–(2.10) has a unique solution Un ∈ Xh for n = 0,1,2,⋯ ,N satisfying

where un ∈ Xh with \(u_{j,k}^{n}=u(x_{j},y_{k},t_{n})\).

3 Error analysis

In this section, we aim to prove the optimal error estimate given in Theorem 2.1. At first, let us introduce several lemmas which will be frequently used in our analysis.

Lemma 3.1

[28, 29, 48] Suppose that the nonnegative sequences {ωn,gn|n = 0,1,2,⋯} satisfy and

where λ1 > 0, λ2 > 0, λ3 > 0 are given constants independent of τ. Then there exists a positive constant τ∗ such that, when 0 < τ ≤ τ∗, we have

Here, \( E_{\alpha }(z) = {\sum }_{k=0}^{\infty } \frac {z^{k}}{\Gamma (1+k\alpha )} \) is the Mittag-Leffler function, and \( \lambda = 6 \lambda _{1} + \frac {C_{0}^{\sigma } \lambda _{2}}{C_{0}^{\sigma } - C_{1}^{\sigma }} + \frac {C_{0}^{\sigma } \lambda _{3}}{C_{1}^{\sigma } - C_{2}^{\sigma }} \).

The time-fractional Gronwall inequality given in Lemma 3.1 plays a crucial role in our analysis, and we give a different, direct proof of the inequality in the Appendix.
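The Mittag-Leffler function appearing in Lemma 3.1 can be evaluated for moderate nonnegative arguments directly from its series definition. The following is a minimal sketch under that restriction; the function name `mittag_leffler`, the tolerance, and the stopping rule are assumptions made for illustration, and more robust algorithms are needed for large or negative arguments.

```python
import math


def mittag_leffler(z, alpha, tol=1e-15, max_terms=5000):
    """Truncated series E_alpha(z) = sum_k z^k / Gamma(1 + k*alpha) for z >= 0."""
    if z < 0:
        raise ValueError("this sketch only handles z >= 0")
    if z == 0:
        return 1.0
    log_z = math.log(z)
    peak = z ** (1.0 / alpha)  # terms start to decay once k*alpha exceeds roughly this
    total = 0.0
    for k in range(max_terms):
        # work with logarithms so that Gamma(1 + k*alpha) does not overflow
        term = math.exp(k * log_z - math.lgamma(1.0 + k * alpha))
        total += term
        if k * alpha > peak + 1.0 and term < tol * total:
            break
    return total


if __name__ == "__main__":
    print(mittag_leffler(1.0, 1.0), math.e)                      # E_1(z) = exp(z)
    print(mittag_leffler(1.0, 0.5), math.e * math.erfc(-1.0))    # E_{1/2}(z) = exp(z^2) erfc(-z)
```

The two printed pairs compare the series with the classical closed forms \(E_{1}(z) = e^{z}\) and \(E_{1/2}(z) = e^{z^{2}}\operatorname{erfc}(-z)\).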

Remark 3.1

Under some particular conditions, the time-fractional Gronwall inequality given in Lemma 3.1 can be viewed as a special case of the one given in [29], where nonuniform time steps are allowed and the proof is based on the discrete convolution method. This also means that our proposed scheme can be generalized to the nonuniform time-stepping case, and the convergence results can be proved similarly by using our analysis method together with the time-fractional Gronwall inequality given in [29].

Lemma 3.2

[53] For any grid function v ∈ Xh, there is

Lemma 3.3

For any grid function v ∈ Xh, there is

where C0 is the constant used to limit the mesh ratio in both directions of space.

Proof

From the definition of the maximum norm and the discrete L2 norm, we have

where \(h\leq {C_{0}} {\tilde {h}}\) was used. This immediately gives (3.5). □
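The inverse estimate of Lemma 3.3 can be checked numerically. The sketch below assumes the usual definitions \(\|v\|^{2} = h_{1}h_{2}\sum_{j,k}|v_{j,k}|^{2}\) and \(\|v\|_{\infty} = \max_{j,k}|v_{j,k}|\), and assumes that (3.5) has the form \(\|v\|_{\infty} \leq C_{0}h^{-1}\|v\|\), which is what the argument in the proof yields in two dimensions; the function names and the random test data are hypothetical.

```python
import numpy as np


def discrete_l2_norm(v, h1, h2):
    """Discrete L2 norm sqrt(h1*h2*sum |v_{j,k}|^2) of a grid function."""
    return float(np.sqrt(h1 * h2 * np.sum(np.abs(v) ** 2)))


def max_norm(v):
    """Discrete maximum norm max |v_{j,k}|."""
    return float(np.max(np.abs(v)))


def inverse_bound(v, h1, h2):
    """Right-hand side C0 * h^{-1} * ||v|| with the smallest admissible C0 = h / h_tilde."""
    h, h_tilde = max(h1, h2), min(h1, h2)
    c0 = h / h_tilde
    return c0 / h * discrete_l2_norm(v, h1, h2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h1, h2 = 0.1, 0.05
    # complex-valued interior grid function (synthetic data for illustration only)
    v = rng.standard_normal((9, 19)) + 1j * rng.standard_normal((9, 19))
    print(max_norm(v), inverse_bound(v, h1, h2))
```

The bound follows because \(h_{1}h_{2} \geq \tilde{h}^{2} \geq (h/C_{0})^{2}\), so a single grid value can never exceed \(C_{0}h^{-1}\) times the discrete \(L^{2}\) norm.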

Lemma 3.4 (Lemma 3 in [48])

For any grid function ωn ∈ Xh, there are

where Im(ν) and Re(ν) denote the imaginary part and the real part of ν, respectively.

Lemma 3.5

For any grid function ωn ∈ Xh, there is

Proof

Combining \( C_{0}^{\sigma } \geq C_{1}^{\sigma } \geq {\cdots } \ge C_{N-1}^{\sigma } \geq 0 \) with

gives

Then, by using Cauchy-Schwarz inequality, we have

It follows that

This completes the proof of Lemma 3.5. □

3.1 Local truncation error

We define the local truncation errors \(P^{n+\sigma }\in {X_{h}}, {P}^{\sigma ,s}\in {X_{h}}\) of the scheme (2.5)–(2.10) as follows,

Noticing the initial-boundary value problem (2.1)–(2.3), one can see that

Under assumption (2.11), one can use the standard Taylor’s expansion to obtain that

Substituting the above equations into (3.11)–(3.12) gives

Lemma 3.6

Under assumption (2.11), we have the following estimates of the local truncation errors:

3.2 Proof of the main result

This subsection aims to give the proof of Theorem 2.1. For simplicity of notations, we define the “error” functions e1,s ∈ Xh, en ∈ Xh for n = 0,1,⋯ ,N as

Then we obtain the following “error” equations by subtracting (2.5)–(2.10) from (3.9)–(3.10),

where

Lemma 3.7

Under assumption (2.11), we have the following estimates of the “error” functions e1,s,

Proof

Here we use mathematical induction to prove this lemma in three steps.

Step 1. When s = 0, we obtain from (3.15) and (2.10) that

which together with (2.11) gives

Step 2. When s = 1, we have

where ξ is some number between \(|{u}_{j,k}^{0}|^{2}\) and \(|{u}_{j,k}^{\sigma }|^{2}\). This together with \(f \in C^{1}(\mathbb {R}^{+})\) gives

For s = 1 in (3.17), computing the inner product of (3.17) with e1,1, and taking the imaginary part, we arrive at

where (3.14) and (3.23) were used. In order to estimate \(|e^{1,1}|_{2}\), we rewrite (3.17) with s = 1 into

then taking the discrete L2 norm of both sides of (3.27), we have

where (3.14), (3.23) and (3.25) were used. Therefore, (3.20) and (3.21) hold for s = 1.

Step 3. With mathematical induction method, we suppose that (3.20) holds for s ≤ m − 1 with 2 ≤ m ≤ mα, i.e.,

this together with Lemma 3.2 gives

Hence, for sufficiently small τ and h, we have

Noting \(f\in C^{1}(\mathbb {R}^{+})\) and using differential mean value theorem, we obtain

where ξ is some number between \(|(1-\sigma ){u}_{j,k}^{0}+\sigma {u}_{j,k}^{1}|^{2}\) and \(|(1-\sigma ){u}_{j,k}^{0}+\sigma {U}_{j,k}^{1,m-1}|^{2}\). Combining (3.32) together with assumption (2.11) and (3.31) gives

Next, we will show that (3.20) and (3.21) hold for s = m. To do this, for s = m in (3.17), by computing the inner product of (3.17) with e1,m and taking the imaginary part of the result, we have

which implies

where (3.14), (3.29) and (3.33) were used. In order to estimate \(\|{e}^{1,m}\|_{\infty }\), we rewrite (3.17) with s = m into the following form:

then taking the discrete L2 norm of both sides of (3.36), we have

where (3.14), (3.29), (3.33) and (3.35) were used. This completes the proof of Lemma 3.7. □

Lemma 3.8

Suppose that the system (2.1)–(2.3) has a unique solution u satisfying (2.11), then the scheme (2.6) has a unique solution \(U_{j,k}^{1}\), and there exists \(\tau _{1}^{*} > 0\) such that when \( {0<}\tau \leq \tau _{1}^{*}\), there is

Proof

Taking s = mα in Lemma 3.7 and using Lemma 3.2 immediately give (3.38). □

We now turn back to the proof of Theorem 2.1.

Proof

From Lemma 3.8, we know that Theorem 2.1 holds for n = 1. By using the mathematical induction method, we assume that (2.12) holds for n ≤ l with l ≤ N − 1, i.e.,

Direct calculation gives that

where ξ is some number between \(|\hat {u}_{j,k}^{l+\sigma }|^{2}\) and \(|\hat {U}_{j,k}^{l+\sigma }|^{2}\) and then it satisfies |ξ|≤ C. This together with (3.39) gives

Next, we prove that (2.12) holds for n = l + 1. Let n = l in (3.16); then, by taking the inner product of (3.16) with \(e_{j,k}^{l+\sigma }\) and taking the imaginary part of the result, we arrive at

where Lemmas 3.4 and 3.6 were used. This together with (3.7) gives

By using Lemma 3.1, there exists a positive constant τ∗ such that when τ < τ∗, there is

In order to get the bound of \(||U^{l+1}||_{\infty }\), we rewrite (3.16) with n = l into the following form,

then taking the discrete L2 norm of both sides of (3.45), we have

where Lemma 3.5 was used. Noting that \(\sigma =1-\frac {\alpha }{2}>\frac {1}{2}>1-\sigma =\frac {\alpha }{2}\) with 0 < α < 1, then by using Minkowski inequality, we obtain

this together with (3.46) gives

Summing (3.48) up for l from 1 to m, then replacing m by l, we obtain

where Lemma 3.8 was used. This together with Lemma 3.2 gives

On the other hand, by using Lemma 3.3, we obtain

where (3.44) was used. Combining (3.50) with (3.51) gives

Hence, for both the case h ≤ τ and the case τ ≤ h, we always have

Therefore, (2.12) holds for n = l + 1. This completes the proof of Theorem 2.1. □

Remark 3.2

The numerical method can be generalized to solve the initial-boundary value problem of the time-fractional Gross-Pitaevskii equation (TFGPE) in d dimensions. For simplicity, we here still take the two-dimensional TFGPE as an example, i.e.,

where V = V (x,y) is a given real-valued function and β is a given real constant. The extension of the linearized second-order finite difference scheme to solve the initial-boundary value problem (3.54)–(3.56) reads

where Vj,k = V (xj,yk) for \((j,k)\in \mathcal {T}_{h}^{0}\). To start the scheme (3.57)–(3.59), we compute \(U^{1}=U^{1,m_{\alpha }}\in {X_{h}}\) by the following two-level scheme

where \(m_{\alpha }:=\left [\frac {1}{\alpha } + \frac {1}{2}\right ]\) and \(W^{1,s} = (1-\sigma)U^{0} + \sigma U^{1,s}\) for \(s = 0,1,2,\cdots ,m_{\alpha}\).

4 Numerical experiments

In this section, we present several numerical experiments to show the performance of the proposed scheme for solving the NTFS equation.

Example 4.1

Consider the following 1D cubic NTFS equation

with initial condition

and boundary condition

where a = − 10,b = 10,T = 1.

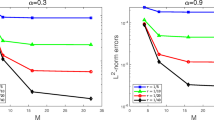

To test the convergence order of the proposed scheme, we choose a sufficiently fine time step τ and mesh size h (here we choose \(h = 10^{-3}\), \(\tau = 10^{-3}\)) to get a numerically “exact” solution. The \(L^{2}\)-errors at time T = 1 and the convergence rates of the proposed scheme in computing Example 4.1 with different α are listed in Tables 1 and 2. One can observe from the two tables that the proposed scheme has an accuracy of \(O(\tau^{2} + h^{2})\). This verifies the convergence results given in Theorem 2.1.
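Convergence rates of the kind reported in such tables are commonly computed from errors on successively refined meshes as \(\log(e_{\text{coarse}}/e_{\text{fine}})/\log(r)\), where r is the refinement ratio. The helper below is a minimal sketch of that computation; the name `observed_orders` is hypothetical, and the error values in the usage example are synthetic numbers chosen to decay exactly at second order, not results from the paper.

```python
import math


def observed_orders(errors, ratio=2.0):
    """Observed convergence orders from errors on meshes refined by a fixed ratio."""
    return [
        math.log(e_coarse / e_fine) / math.log(ratio)
        for e_coarse, e_fine in zip(errors, errors[1:])
    ]


if __name__ == "__main__":
    # synthetic errors that decay like h^2 when h is halved (illustration only)
    synthetic_errors = [1.0e-2, 2.5e-3, 6.25e-4]
    print(observed_orders(synthetic_errors))
```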

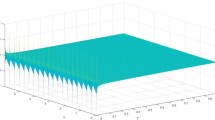

In order to test the influence of α on the evolution of the total mass and energy of the NTFS equation, we plot the total mass and energy of the 1D NTFS equation computed by the proposed scheme in Fig. 1, and plot the approximation of |u| in Fig. 2. From Figs. 1 and 2, we can see that the integer-order nonlinear Schrödinger equation is dispersive but the NTFS equation is dissipative, and the smaller the parameter α is, the faster the mass and energy dissipate.

Fig. 1 Evolution of the mass and energy of the 1D NTFS (4.1) with different α

Fig. 2 Evolution of |u| of the 1D NTFS (4.1) with different α

Example 4.2

Consider the following non-homogeneous 2D NTFS equation with different nonlinearities

where the function g, the initial and boundary conditions are determined by the exact solution

The nonlinear term f(s) is selected for three cases:

In Example 4.2, we test the convergence order of the proposed scheme in computing the 2D NTFS equation. In order to reduce the computational cost and memory, we choose h1 = h2 = τ and investigate the temporal convergence and spatial convergence together by refining τ and h simultaneously. The L2-errors at time T = 1 and convergence rates with different α’s are listed in Tables 3, 4 and 5. One can observe again that the accuracy of the proposed scheme is of O(τ2 + h2), which verifies again the error estimate results given in Theorem 2.1.

5 Conclusion

In this paper, we proposed a linearized finite difference scheme to solve the NTFS equation in d (d = 1,2,3) dimensions, and introduced a novel and concise analysis method to establish the optimal error estimate of the numerical solution. Under a weaker requirement on the coefficient function f than in the literature, we proved that the proposed scheme is unconditionally convergent with \(L^{2}\) convergence order \(O(h^{2} + \tau^{2})\). Our analysis method can be adopted to relax the requirement on the coefficient function f for the Galerkin FEMs given in [25, 48]. Numerical results for the NTFS equation with several different types of nonlinear terms were presented to illustrate our theoretical results. Furthermore, if the exact solution is smooth enough, one can use a high-order accurate method to improve the spatial accuracy; e.g., one can adopt the compact finite difference method or the pseudo-spectral method to discretize the spatial derivatives. Applying the analysis method used in this paper, one can obtain the optimal error estimate of the high-order accurate scheme without any restriction on the grid ratio. In our future work, we will discuss a self-starting nonlinear finite difference scheme for solving the NTFS equation, and introduce a concise analysis method to establish the optimal error estimate without any restriction on the grid ratio.

References

Acedo, S.B.Y.: An explicit finite difference method and a new von Neumann-type stability analysis for fractional diffusion equations. SIAM J. Numer. Anal. 42(5), 1862–1874 (2005)

Alikhanov, A.A.: A new difference scheme for the time fractional diffusion equation. J. Comput. Phys. 280, 424–438 (2015)

Antoine, X., Bao, W., Besse, C.: Computational methods for the dynamics of the nonlinear Schrödinger/Gross-Pitaevskii equations. Comput. Phys. Comm. 184, 2621–2633 (2013)

Antoine, X., Tang, Q., Zhang, J.: On the numerical solution and dynamical laws of nonlinear fractional Schrödinger/Gross-Pitaevskii equations. Int. J. Comput. Math. 95, 1423–1443 (2018)

Bao, W., Cai, Y.: Mathematical theory and numerical methods for Bose-Einstein condensation. Kinet. Relat. Mod. 6, 1–135 (2013)

Bao, W., Carles, R., Su, C., Tang, Q.: Error estimates of a regularized finite difference method for the logarithmic Schrödinger equation. SIAM J. Numer. Anal. 57, 657–680 (2019)

Bao, W., Carles, R., Su, C., Tang, Q.: Regularized numerical methods for the logarithmic Schrödinger equation. Numer. Math. 143, 461–487 (2019)

Bhrawy, A.H., Doha, E.H., Ezz-Eldien, S.S., Van Gorder, R.A.: A new Jacobi spectral collocation method for solving 1 + 1 fractional Schrödinger equations and fractional coupled Schrödinger systems. Eur. Phys. J. Plus 129, 260 (2014)

Bhrawy, A.H., Abdelkawy, M.A.: A fully spectral collocation approximation for multidimensional fractional Schrödinger equations. J. Comput. Phys. 294, 462–483 (2015)

Cao, J., Xu, C.: A high order schema for the numerical solution of the fractional ordinary differential equations. J. Comput. Phys. 586, 93–103 (2013)

Cao, W., Zhang, Z., Karniadakis, G.E.: Time-splitting schemes for fractional differential equations I: smooth solutions. SIAM J. Sci. Comput. 37(4), A1752–A1776 (2015)

Chang, Q., Jia, E., Sun, W.: Difference schemes for solving the generalized nonlinear Schrödinger equation. J. Comput. Phys. 148, 397–415 (1999)

Chen, X., Di, Y., Duan, J., Li, D.: Linearized compact ADI schemes for nonlinear time-fractional Schrödinger equations. Appl. Math. Lett. 84, 160–167 (2018)

Gao, G., Sun, Z., Zhang, H.: A new fractional numerical differentiation formula to approximate the Caputo fractional derivative and its applications. J. Comput. Phys. 259, 33–50 (2014)

Gao, G., Sun, Z.: A compact finite difference scheme for the fractional sub-diffusion equations. J. Comput. Phys. 230(3), 586–595 (2011)

Henning, P., Peterseim, D.: Crank-Nicolson Galerkin approximation to nonlinear Schrödinger equation with rough potentials. Math. Mod. Meth. Appl. S 27(11), 2147–2184 (2017)

Henning, P., Wärnegård, J.: A note on optimal H1-error estimates for Crank-Nicolson approximations to the nonlinear Schrödinger equation. BIT. https://doi.org/10.1007/s10543-020-00814-3

Hicdurmaz, B., Ashyralyev, A.: A stable numerical method for multidimensional time fractional Schrödinger equations. Comput. Math. Appl. 72, 1703–1713 (2016)

Iomin, A.: Fractional-time Schrödinger equation: fractional dynamics on a comb. Chaos Soliton. Fract. 44(4-5), 348–352 (2011)

Jin, B., Lazarov, R., Zhou, Z.: Two schemes for fractional diffusion and diffusion-wave equations with nonsmooth data. SIAM J. Sci. Comput. 38(1), A146–A170 (2014)

Jin, B., Li, B., Zhou, Z.: Numerical analysis of nonlinear subdiffusion equations. SIAM J. Numer. Anal. 56(1), 1–23 (2017)

Jin, B., Li, B., Zhou, Z.: Discrete maximal regularity of time-stepping schemes for fractional evolution equations. Numer. Math. 138, 101–131 (2018)

Langlands, T.A.M., Henry, B.I.: The accuracy and stability of an implicit solution method for the fractional diffusion equation. J. Comput. Phys. 205(2), 719–736 (2005)

Li, D., Liao, H., Sun, W., Wang, J., Zhang, J.: Analysis of L1-Galerkin FEMs for time-fractional nonlinear parabolic problems. Commun. Comput. Phys. 24, 86–103 (2018)

Li, D., Wang, J., Zhang, J.: Unconditionally convergent L1-Galerkin FEMs for nonlinear time-fractional Schrödinger equations. SIAM J. Sci. Comput. 39(6), A3067–A3088 (2017)

Li, X., Cai, Y., Wang, P.: Operator-compensation methods with mass and energy conservation for solving the Gross-Pitaevskii equation. Appl. Numer. Math. 151, 337–353 (2020)

Li, X., Zhu, J., Zhang, R., Cao, S.: A combined discontinuous Galerkin method for the dipolar Bose-Einstein condensation. J. Comput. Phys. 275, 363–376 (2014)

Liao, H., McLean, W., Zhang, J.: A discrete Grönwall inequality with applications to numerical schemes for subdiffusion problems. SIAM J. Numer. Anal. 57(1), 218–237 (2019)

Liao, H., McLean, W., Zhang, J.: A second-order scheme with nonuniform time steps for a linear reaction-subdiffusion problem. Commun. Comput. Phys. 30(2), 567–601 (2021)

Liao, H., Tang, T., Zhou, T.: A second-order and nonuniform time-stepping maximum-principle preserving scheme for time-fractional Allen-Cahn equations. J. Comput. Phys. 414, 109473 (2020)

Lubich, C.: On splitting methods for Schrödinger-Poisson and cubic nonlinear Schrödinger equations. Math. Comp. 77, 2141–2153 (2008)

Ji, B., Liao, H., Gong, Y., Zhang, L.: Adaptive second-order Crank–Nicolson time-stepping schemes for time-fractional molecular beam epitaxial growth models. SIAM J. Sci. Comput. 42(3), B738–B760 (2020)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225, 1533–1552 (2007)

Mohebbi, A., Abbaszadeh, M., Dehghan, M.: The use of a meshless technique based on collocation and radial basis functions for solving the time fractional nonlinear Schrödinger equation arising in quantum mechanics. Eng. Anal. Bound Elem. 37(2), 475–485 (2013)

Mustapha, K., Almutaw, J.: A finite difference method for an anomalous sub-diffusion equation, theory and applications. Numer. Algorithms 61(4), 525–543 (2012)

Mustapha, K.: Time-stepping discontinuous Galerkin methods for fractional diffusion problems. Numer. Math. 130(3), 497–516 (2015)

Mustapha, K., McLean, W.: Superconvergence of a discontinuous Galerkin method for fractional diffusion and wave equations. SIAM J. Numer. Anal. 51(1), 491–515 (2013)

Naber, M.: Time fractional Schrödinger equation. J. Math. Phys. 45, 3339–3352 (2004)

Ohannes, K., Charalambos, M.: A space-time finite element method for the nonlinear Schrödinger equation: the continuous Galerkin method. SIAM J. Numer. Anal. 36, 1779–1807 (1999)

Sanz-Serna, J.M.: Methods for the numerical solution of the nonlinear Schrödinger equation. Math. Comput. 43, 21–27 (1984)

Sun, Z., Wu, X.: A fully discrete difference scheme for a diffusion-wave system. Appl. Numer. Math. 56(2), 193–209 (2006)

Thalhammer, M.: High-order exponential operator splitting methods for time-dependent Schrödinger equations. SIAM J. Numer. Anal. 46, 2022–2038 (2008)

Thalhammer, M., Caliari, M., Neuhauser, C.: High-order time-splitting Hermite and Fourier spectral methods. J. Comput. Phys. 228, 822–832 (2009)

Tofighi, A.: Probability structure of time fractional Schrödinger equation. Acta. Physica Polonica Series A. 116(2), 114–119 (2009)

Wang, J.: A new error analysis of Crank-Nicolson Galerkin FEMs for a generalized nonlinear Schrödinger equation. J. Sci. Comput. 60, 390–407 (2014)

Wang, S., Xu, M.: Generalized fractional Schrödinger equation with space-time fractional derivatives. J. Math. Phys. 48(4), 81 (2007)

Wang, T., Wang, J., Guo, B.: Two completely explicit and unconditionally convergent Fourier pseudo-spectral methods for solving the nonlinear Schrödinger equation. J. Comput. Phys. 404, 109116 (2020)

Wang, Y., Wang, G., Bu, L., Mei, L.: Two second-order and linear numerical schemes for the multi-dimensional nonlinear time-fractional Schrödinger equation. Numer. Algorithms. https://doi.org/10.1007/s11075-020-01044-y

Wei, L., He, Y., Zhang, X.: Analysis of an implicit fully discrete local discontinuous Galerkin method for the time-fractional Schrödinger equation. Finite Elem. Anal. Des. 59, 28–34 (2012)

Xu, Y., Shu, C.-W.: Local discontinuous Galerkin methods for nonlinear Schrödinger equations. J. Comput. Phys. 205, 72–77 (2005)

Yang, Y., Wang, J., Zhang, S., Tohidi, E.: Convergence analysis of space-time Jacobi spectral collocation method for solving time-fractional Schrödinger equations. Appl. Math. Comput. 387, 124489 (2019)

Zhao, X.: Numerical integrators for continuous disordered nonlinear Schrödinger equation. J. Sci. Comput. 89, 40 (2021)

Zhou, Y.: Application of Discrete Functional Analysis to the Finite Difference Methods. International Academic Publishers, Beijing (1990)

Zhuang, P., Liu, F., Anh, V., Turner, I.: Stability and convergence of an implicit numerical method for the non-linear fractional reaction-subdiffusion process. IMA J. Appl. Math. 74(5), 645–667 (2009)

Funding

This work is supported by the National Natural Science Foundation of China (Grant No. 11571181) and the Natural Science Foundation of Jiangsu Province (Grant No. BK20171454).

Ethics declarations

The authors declare no competing interests.

Additional information

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Appendix. Proof of the time-fractional Gronwall inequality given in Lemma 3.1

In this appendix, we present two useful lemmas which are main tools used for proving Lemma 3.1.

Lemma A.1

Let {pn} be a sequence defined by

Then it holds that

Proof

(i) Since \( C_{0}^{\sigma } \geq C_{1}^{\sigma } \geq {\cdots } \geq C_{j}^{\sigma } \ge 0 \) for j ≥ 0, it is easy to verify by induction from (A.1) that \(0 \leq p_{n} \leq 1/C_{0}^{\sigma } \ (n \geq 1) \). Moreover, we have

This implies \( {\Phi }_{n} = {\Phi }_{0} = p_{0} C_{0}^{\sigma } = 1 \) for n ≥ 1. Substituting j = l + k − 1, we further find

The equality (A.2) is proved.

(ii) To prove (A.3) and (A.4), we introduce an auxiliary function \(q(t) = t^{m\alpha}/\Gamma(1+m\alpha)\) for m ≥ 1. Then for j ≥ 1, we have

Let Q(t) be a quadratic interpolation of q(t) using the points (s − 1,q(s − 1)), (s,q(s)), (s + 1,q(s + 1)) for 1 ≤ s ≤ j, and a linear interpolation of q(t) using the points (j,q(j)), (j + 1,q(j + 1)). We define the approximation error by

where

Combining (A.7) and (A.8) yields

Noting that \( q^{\prime \prime \prime }(t) \geq 0 \) for m = 1, we have \( {R_{k}^{j}} \leq 0 \) and

so we have

Multiplying (A.13) by \(\Gamma(2-\alpha)p_{n-j}\) and summing over j from 0 to n, we have

where the equality (A.2) was used.

(iii) Considering (A.11), we have

We multiply (A.15) by \(\Gamma(2-\alpha)p_{n-j+1}\) and sum the resulting inequality over j from 1 to n to obtain

If 1 ≤ m ≤ 1/α, \( q^{\prime \prime \prime }(t) \geq 0 \), then \( {R_{k}^{j}} \leq 0 \) and \( R_{\sigma }^{j} \le 0 \), so (A.4) follows immediately from the above estimate. If m ≥ 1/α, by (A.8), we have

and

so that

Because of

and

one can immediately get (A.4), and the proof of Lemma A.1 is completed. □

Lemma A.2

Let \( \vec {e} = (1,1,\cdots ,1)^{T} \in R^{n+1} \) and

Then, it holds that

Proof

The proof is similar to that of Lemma 3.3 in [24], and we here omit it for brevity. □

We now turn back to the proof of Lemma 3.1.

Proof of Lemma 3.1

By the definition of \( D_{\sigma }^{\alpha } \), we get

Multiplying inequality (A.26) by \(p_{n-j}\) and summing over j from 1 to n, we have

By using the result (A.2) in Lemma A.1, we obtain

It follows that

Because of

and

we get

It follows that

when τ ≤ τ∗. By using the result (A.3) in Lemma A.1, we obtain

It follows that

where

and it is easy to get that Ψn ≥Ψk for n ≥ k ≥ 1. Let V = (ωn+ 1,ωn,⋯ ,ω1)T, then (A.33) can be written in a vector form by

where

By (A.1), we have

therefore,

which shows that

where J is defined in (A.22) with \( \lambda = 6 \lambda _{1} + \frac {C_{0}^{\sigma } \lambda _{2}}{C_{0}^{\sigma } - C_{1}^{\sigma }} + \frac {C_{0}^{\sigma } \lambda _{3}}{C_{1}^{\sigma } - C_{2}^{\sigma }} \). As a result, we see that

This together with Lemma A.2 completes the proof. □

Cite this article

Liu, J., Wang, T. & Zhang, T. A second-order finite difference scheme for the multi-dimensional nonlinear time-fractional Schrödinger equation. Numer Algor 92, 1153–1182 (2023). https://doi.org/10.1007/s11075-022-01335-6

DOI: https://doi.org/10.1007/s11075-022-01335-6

Keywords

- Nonlinear time-fractional Schrödinger equation

- Finite difference method

- Unconditional convergence

- Optimal error estimate