Abstract

In this paper, we study a proximal method for the minimization problem arising from l0-regularization for nonlinear inverse problems. First of all, we prove the existence of solutions and give an optimality condition for solutions to the minimization problem. Then, we propose and prove the convergence of the proximal method for this minimization problem, which is controlled by step size conditions. Finally, we illustrate performance of the proximal method by applying it to a numerical example.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, H is denoted to be a real separable Hilbert space, 〈⋅,⋅〉 and ∥⋅∥ are respectively the scalar product and the norm in H. We assume that {φi}i∈Λ \(({\Lambda } \subset \mathbb {N})\) is an orthonormal basis of the Hilbert space H. For each u ∈ H, we denote u = (ui)i∈Λ, where ui = 〈u,φi〉 is the i th component of u with respect to the basis {φi}i∈Λ. Note that all results from this paper are true not only for an orthonormal basis but also for a frame in the real separable Hilbert space H. However, for simplicity, we assume that {φi}i∈Λ \(({\Lambda } \subset \mathbb {N})\) is an orthonormal basis of the Hilbert space H. We study the proximal method for the minimization problem

where \(F:H \rightarrow \mathbb {R}\) is a Fréchet differentiable functional and \({\Phi }:H \rightarrow \bar {\mathbb {R}}:= \mathbb {R}\cup {\{+\infty \}}\) is defined by

with

Problem (1) appears in l0-regularization of inverse problems, i.e., we want to find a solution u of the operator equation

where \(K: H\rightarrow H\) is a Fréchet differentiable operator (linear or nonlinear), y is the exact data, which is unknown and we only obtain a noisy data yδ of y with

Further, we assume that problem (3) is ill-posed, which the solutions to the problem do not depend continuously on the data [5]. Therefore, the real problem is finding an approximation of the solution given the operator K and a noisy data yδ. Applying l0-regularization for this problem the regularized approximate solutions to (3)–(4) are seen to be minimizers of the minimization problem

where \(F(\cdot ;y^{\delta }):H \rightarrow \mathbb {R}\) measuring the difference between K(u) and yδ is a Fréchet differentiable functional. Note that problem (5) is an example of problem (1).

The advantage of l0-regularization is preserving the edges between homogeneous regions and nonhomogeneous. Thus, l0-regularization is used in many applications such as signal and image analysis, wavelet frame, compressive sensing, dictionary building, machine learning and actuator location problems. For linear inverse problems, Blumensath and Davies in [2, 3] have proposed an iterative thresholding scheme and proven the local convergence of this scheme. Needell and Tropp have presented the compressive sampling matching pursuit (CoSaMP). Foucart [7] has also improved CoSaMP by introducing hard thresholding pursuit based on a combination of iterative hard thresholding and compressive sampling matching pursuit algorithm. The other method is suggested by Lu et al. in [9]. Nikolova and E. Soubies have studied in detail about global solutions, see [13, 14]. Recently, Wachsmuth has studied the hard-iterative thresholding to optimal control problems with L0(Ω) control cost in space L2(Ω) in [15]. But for nonlinear inverse problems, there are few algorithms proposed to solve problem (1) in a real separable Hilbert space.

In this paper, we will propose a proximal method for problem (5) in a real separable Hilbert space based on the ideas of the proximal method in [8, 11]. Note that since the functional Φ is nonconvex, problem (1) is different from the minimization problem arising from l1-regularization in [8, 11]. Therefore, the results and the methods of proof for the convergence of the proximal method in our paper are different from those in [8, 11]. Furthermore, the iterative thresholding [2] or the iterative hard thresholding [3] are special cases of our proposed method since they are only applied to linear inverse problem, i.e., to problem (5) with F(u;yδ) = ∥Ku − yδ∥2 and K is a linear operator. Thus, the results in this paper are extensions of those in [2, 3] to nonlinear inverse problems.

The main results in our paper are

-

The existence and the optimality condition for solutions to problem (1).

-

The convergence of the proximal method for problem (1) and the practical choice for step sizes.

The paper is organized as follows: In Section 2 we give explicit formula for the unique minimizer of the quadratic approximation of the cost functional. In Section 3, we study the existence and the optimality condition for solutions to problem (1). Section 4 is devoted to prove the convergence of the proximal method. A method of choosing step sizes is given in Section 5. Finally, we illustrate the performance of the proximal method by applying it to an identification problem in elliptic equations in Section 6.

2 First-order condition for global minimizers for quadratic approximation of the cost functional

The proximal methods developed in [2,3,4, 11] are based on quadratic approximate functionals and the proximal operators. In order to present the proximal method as well as the first-order optimality condition of problem (1), we need to introduce the proximal operator for l0-regularization and the quadratic approximate functionals.

The proximal operator (also known as the hard-thresholding operator ) is defined by

where

Note that \(\mathbb {P}: H \rightrightarrows H\) is a multi-valued mapping.

For each fixed s > 0, the so called quadratic approximate functional of Θ(v) at a given point u is defined as follows:

Using the proximal operator, we can show that the minimization problem, \(\min \limits _{v\in H } {\Theta }_{s}(v,u)\), has at least one solution. This result is given in the following theorem.

Theorem 2.1

Let F ∈ C1(H). Then, for any u ∈ H fixed, the problem

has at least one solution and u+ is a solution if and only if

Proof

By simple calculation, which ignores constant term in u, the minimization problem, \(\min \limits _{v\in H } {\Theta }_{s}(v,u)\), is equivalent to

This problem can be rewritten as

Therefore, the minimum of the problem, \(\min \limits _{v\in H } {\Theta }_{s}(v,u)\), can be calculated by minimizing with respect to vi individually. For each i we distinguish two cases, vi = 0 and vi≠ 0. In the first case, by the definition of |.|0, we have

In the second case, we have

where the equality sign is obtained when \(v_{i}=u_{i}-\frac {1}{s}(\nabla F(u))_{i}\).

Comparing the values for both cases, the minimum of component i of problem (9) is

that is equal to

Note that the first value is obtained when vi = 0, the second one is obtained when vi = 0 or \(v_{i}= u_{i}-\frac {1}{s}(\nabla F(u))_{i}\), and the last value is obtained when \(v_{i}= u_{i}-\frac {1}{s}(\nabla F(u))_{i}\not =0\), i.e., the minimum of component i is attained at

Therefore, the problem, \(\min \limits _{v\in H } {\Theta }_{s}(v,u)\), has a solution

or

□

3 Existence of optimal solutions and optimality conditions

Before presenting the results of the existence of optimal solutions and optimality conditions to problem (1), we recall some basic notions which are not often used. The other basic notions such as convexity, Lipschitz continuity, Lipschitz Fréchet differentiability are well-known in calculus or convex analysis, so we do not recall them here.

Definition 3.1

Let a functional \(G: H \rightarrow \mathbb {R}\).

-

G is called bounded from below if there exists a constant C such that G(u) ≥ C for all u ∈ H.

-

G is called coercive if \(G(u) \rightarrow \infty \) as \(\|u\|\rightarrow \infty \).

-

G is called lower semi-continuous if for every u ∈ H and every sequence \(\{u^{k}\}, u^{k}\rightarrow u\) as \(k\rightarrow \infty \), we have

$$G(u)\leq \liminf_{k\rightarrow\infty} G(u^{k}).$$

In our paper, the functionals, F and Φ, in problem (1) have some properties of G. In fact, F is assumed to be bounded from below, coercive and lower semi-continuous (see Assumption 1) and Φ is lower semi-continuous (see Lemma 3.1). In order to prove the existence of solutions and give the optimality conditions to problem (1) as well as the convergence of the proximal method, we need the following assumption.

Assumption 1

Assume that

-

F is coercive, bounded from below and lower semi-continuous.

-

F is Lipschitz Fréchet differentiable with the Lipschitz constant L, i.e.,

$$\| \nabla F(u)-\nabla F(v)\|\leq L\|u-v\|, \forall u,v \in H .$$

Note that In Assumption 1, Condition a) is used to make sure the existence of a solution to problem (1), and Condition b) is used to obtain the optimality condition of solutions to problem (1) as well as the convergence of the proximal method for this problem. In fact, by Condition b) we have for \(s\geqslant L\)

This is the key inequality used to prove the main results of the paper.

Lemma 3.1

The functional Φ defined by (2) is non-negative, nonconvex, and lower semi-continuous.

Proof

The first two properties are clear. The last property is followed by the lower semi-continuity of |x|0. □

Note that functional Φ in l0-regularization (given by (2)) is nonconvex and not coercive. Thus, problem (1) is nonconvex as well. To make sure the existence of a solution to problem (1) we need some properties of the functional F. Such sufficient conditions for F are given in the following lemma.

Lemma 3.2

Assume that F satisfies Condition a) of Assumption 1. Then, problem (1) has at least a solution (global minimizer).

Proof

The proof is standard and it is left to the readers. □

Theorem 3.1

Assume that F satisfies Condition b) of Assumption 1. Then, u∗ is a solution (global minimizer) of problem (1) if and only if

for any fixed s∗≥ L.

Proof

Since u∗ is a global minimizer of Θ, we have Θ(u∗) ≤Θ(u∗ + h) for any h ∈ H. For s∗≥ L, we have

Therefore, \({\Theta }_{s^{*}}(u^{*}+h,u^{*})\geq {\Theta }_{s^{*}}(u^{*},u^{*})\) for any h ∈ H. It means that u∗ is the global minimizer of \({\Theta }_{s^{*}}(\cdot ,u^{*})\). Then, the proof deduces from Theorem 2.1. □

Definition 3.2

Any point u∗ satisfying (10) with s∗≥ L is called a stationary point of Θ.

Remark 3.1

The condition s∗≥ L is necessary to obtain the last inequality in the proof of Theorem 3.1. For s∗ < L we could not prove such a result. This is different from l1-regularization, which we can prove a similar result for any s∗ > 0, see, e.g., in [1, 10]. Furthermore, Condition (10) is only true for global minimizers of problem (1). We could not prove such a result for local minimizers. This is also the other difference when it is compared with l1-regularization, see, e.g., [10].

4 Proximal method

The main idea of the proximal method is based on the quadratic approximation method. In fact, at the k th iteration, uk+ 1 is determined by solving the quadratic approximation of Θ at uk, i.e.,

and then we need to propose some conditions for sk > 0 such that {uk} (with a subsequence) converges to a stationary point of the original problem. Here, the functional Θs(v,u) is defined by (8).

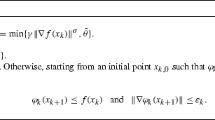

By Theorem 2.1, the sequence \(\{u^{k+1} \in \text {argmin}_{v\in H } {\Theta }_{s^{k}}(v,u^{k})\}\) is later given by

Now we prove the convergence of this sequence. We separate the result into several following lemmas and theorems.

Lemma 4.1

Let Assumption 1 hold and {uk} is a sequence defined by (11), where the sequence {sk} satisfies sk ≥ L + 𝜖 with 𝜖 > 0 for all \(k\in \mathbb {N}\). Then, the sequence {Θ(uk)} is monotonically decreasing, the sequence {uk} is bounded and \(\lim _{k\rightarrow \infty } \|u^{k+1}-u^{k}\|=0\).

Proof

Since F is Lipschitz differentiable with Lipschitz constant L. Therefore, for \(s\geqslant L\) we have

Hence, with \(s\geqslant L\) we obtain

Consequently, we have (since sk > L)

In addition, since uk+ 1 is the solution to \({\Theta }_{s^{k}}(\cdot ,u^{k})\) (see (11)), we have

Thus, the sequence {Θ(uk)} is monotonically decreasing.

The boundedness of {uk} is a consequence of the decreasing of {Θ(uk)}, the boundedness from below and the coercivity of Θ.

For each k, since L is the Lipschitz constant of ∇F and sk > L, we have

This implies that

or

Summing the above inequalities over k = 0,1,...,m yields

One infers that the series \({\sum }_{k=0}^{\infty }\|u^{k+1}-u^{k}\|^{2}\) converges. Therefore, \(\lim _{k\rightarrow \infty }\|u^{k+1}-u^{k}\|=0\). □

Theorem 4.1 (Convergence)

Let F satisfy Assumption 1 and {uk} is a sequence defined by (11), where \(s^{k}\in [L+\epsilon ,\overline {s}], \forall k\), 𝜖 > 0 and \(\overline {s}\geq L+\epsilon \). Then, there exists a subsequence \(\{u^{k_{j}}\}\) of {uk} weakly converging to some u∗∈ H as \(j\rightarrow \infty \) and u∗ is a stationary point of Θ.

Proof

By Lemma 4.1, the sequence {Θ(uk)} is decreasing and the sequence {uk} is bounded. Since ∇F is Lipschitz continuous, the sequence \(\{\nabla F(u^{k})\}_{k}\) is bounded as well. Therefore, there exists a subsequence \(\{u^{k_{j}}\}\) of {uk} such that \(\{u^{k_{j}}\}\) converges weakly to some u∗∈ H, \(\{\nabla F(u^{k_{j}})\}\) converges weakly to ∇F(u∗) ∈ H, and \(\{s^{k_{j}}\}\) converges to \(s^{*}\in [L+\epsilon ,\overline {s}]\) as \(j\rightarrow \infty \). By Lemma 4.1 \(\{u^{k_{j}+1}\}\) also converges weakly to u∗. We define

and

We consider three cases:

Case 1 (for each i ∈Γ0): Since \(\{u^{k_{j}}-\frac {1}{s^{k_{j}}}\nabla F(u^{k_{j}})\}\) converges to \(u^{*}-\frac {1}{s^{*}}\nabla F(u^{*})\) and \(\{s^{k_{j}}\}\) converges to s∗, there exists \(j^{*}\in \mathbb {N}\) such that for all j ≥ j∗ we have \(i{\in {\Gamma }^{j}_{0}}\). Therefore, if j ≥ j∗, then

Let \(j\rightarrow \infty \), we have

This implies that

Case 2 (for each i ∈Γ1): Since \(\{u^{k_{j}}-\frac {1}{s^{k_{j}}}\nabla F(u^{k_{j}})\}\) converges to \(u^{*}-\frac {1}{s^{*}}\nabla F(u^{*})\) and \(\{s^{k_{j}}\}\) converges to s∗, there exists \(j^{*}\in \mathbb {N}\) such that for all j ≥ j∗ we have \(i{\in {\Gamma }^{j}_{1}}\). Therefore, if j ≥ j∗, then

Let \(j\rightarrow \infty \), we have

This implies that

Case 3 (for each i ∈Γ): We have

Let \(j\rightarrow \infty \), we have

Thus

Combining three cases, we have

□

5 Step size choice and realization of the proximal method

From Lemma 4.1 the condition for the convergence of proximal method is that parameters sk must satisfy sk ≥ L + 𝜖 for some fixed 𝜖 > 0. In practice, there are two following cases:

-

(i)

In the first case, the Lipschitz constant L is known.

-

(ii)

In the second one, the Lipschitz constant L is unknown or very hard to find.

To make sure the convergence of the proximal method: in the first case we can choice sk = L + 𝜖 with 𝜖 > 0 for all k. However, the bigger the Lipschitz constant is, the smaller of step size is. As a result, the algorithm converges slower. Thus, we do not want to choose sk too large for all k. To overcome this situation and for the second case, we propose a practical way to select step size as follow: we can choose s0 that is not too large and choose the parameter sk that satisfies the condition \({\Theta }(u^{k+1})\leq {\Theta }_{s^{k}}(u^{k+1},u^{k})\) by the back-tracking rule. By this method, the sequence {Θ(uk)} is monotonically decreasing (see the proof of Lemma 4.1). With this choice rule, there are two cases: In the first case we have sk ≤ L for all k and thus the conditions in Lemma 4.1 are not satisfied, i.e., the proximal method may not converge. In the second case there exists a k∗ such that \(s^{k^{*}}>L, \forall k\ge k^{*}\), i.e., the proximal method converges. In practice, by the backtracking rule the first case rarely happen, especially when the number of iterations is large since the sequence sk is increasing. Thus, the above method of choosing step sizes is practical.

Since the proximal operator is a multi-valued operator, there are many sequences {uk} generated by the proximal method. In practice we only need one of them. Thus, we use one of two modified proximal operators as follows:

where

Combining the choice of step sizes and the modified proximal operator, the iteration (8), is presented in Algorithm 1. We will illustrate Algorithm 1 with different modified proximal operators in Section 6.

6 Application to an inverse source problem

For a numerical example we consider the inverse problem of estimating the source y ∈ L2(Ω) in the elliptic boundary problem

from a noisy data uδ of the exact solution.

We assume that Ω is a Lipschitz domain and \(a\in \mathcal {A}\), where

Then, for each \(y\in L^{2}({\Omega }),a\in \mathcal {A}\) and u0 ∈ H1/2(∂Ω) problem (14) has a unique weak solution u ∈ H1, see, e.g., [6].

Let y∗ be the parameter that needs to recover and u∗ be the solution to (14) with fixed parameters u0,a and y∗. Let \(K:L^{2}({\Omega })\rightarrow H^{1}({\Omega }), y\mapsto u\), the solution operator of (14) with fixed a and u0. The inverse problem is equivalent to solving the equation

where only a noisy approximation uδ of u∗ is available with

Using least square approach together with l0-regularization, the approximation to y∗ is the solution to the following problem

where

α > 0 is a regularization parameter and yi = 〈y,φi〉 with \(\{\varphi _{i}\}_{i=1}^{\infty }\) is an orthonormal basis of L2(Ω).

The wellposedness of problem (16), the convergence and convergence rates of its solutions to solutions of operator (15) are still open (by our best knowledge), but it can be obtained by standard approaches in theory of regularization for inverse problems. For examples, Wang et al. have proved some such results relating to l0-regularization for general operator equations in [16, 17]. Here, as an example we try to solve problem (16) by our proximal method. The numerical results will show the wellposedness of problem (16) and the convergence of its solutions to operator (15). Note that the proximal method can be applied for problem (16) since F satisfies Assumption 1, where the Lipschitz differentiability of F is given in the following lemma.

Lemma 6.1

The functional \(F:L^{2}({\Omega })\rightarrow \mathbb {R}\) is Fréchet differentiable at any point y ∈ L2(Ω), Fréchet derivative \(F^{\prime }\) is Lipschitz continuous and \(F^{\prime }(y)=p\), where p is the weak solution to the following problem

where u = K(y).

Proof

We first recall the following well-known results:

-

(a)

\({H^{1}_{0}}({\Omega })\) is embedding compactly into L2(Ω). This implies that there exists a constant c > 0 such that

$$ \|v\|_{L^{2}({\Omega})}\leq c\|v\|_{{H^{1}_{0}}({\Omega})}, \forall v\in {H^{1}_{0}}({\Omega}). $$(19) -

(b)

In \({H^{1}_{0}}({\Omega })\) the norm \({\left \vert \kern -0.25ex\left \vert \kern -0.25ex\left \vert v \right \vert \kern -0.25ex\right \vert \kern -0.25ex\right \vert }:=\sqrt {{\int \limits }_{\Omega } a \nabla v. \nabla v dx}\) is equivalent to \(\|v\|_{{H^{1}_{0}}({\Omega })}\), i.e., there exist constants c1,c2 > 0 such that

$$ c_{1}\|v\|_{{H^{1}_{0}}({\Omega})}\leq {\left\vert\kern-0.25ex\left\vert\kern-0.25ex\left\vert v \right\vert\kern-0.25ex\right\vert\kern-0.25ex\right\vert}\leq c_{2}\|v\|_{{H^{1}_{0}}({\Omega})}, \forall v\in {H^{1}_{0}}({\Omega}). $$(20)

Using above results, we now prove the Fréchet differentiability of F at y ∈ L2(Ω). Indeed, for δy ∈ L2(Ω) we have

where u = K(y),u1 = K(y + δy). Furthermore, by the formula of weak solutions we have

Subtracting both sides of equations (22) and (23) we have

Inserting v = u1 − u and v = p into (24) we obtain

By the formula of weak solution to (18) we have

Inserting \(v=u^{1}-u\in {H^{1}_{0}}({\Omega })\) into (27) we have

From (21), (25), (26) and (28) we have

since \(\|u^{1}-u\|_{L^{2}({\Omega })}\rightarrow 0\) as \(\delta y\rightarrow 0\) (due to the continuity of the operator K). The inequality (29) implies that F is Fréchet differentiable and \(F^{\prime }(y)=p\), where p is the weak solution to (18).

To finish the proof, we need to prove the Lipschitz continuity of \(F^{\prime }\). Indeed, let \(p_{1}=F^{\prime }(y_{1})\) and \(p_{2}=F^{\prime }(y_{2})\). Then, \(p_{1}, p_{2}\in {H^{1}_{0}}({\Omega })\) and they respectively satisfy

and

where u1 = K(y1) and u2 = K(y2). Subtracting both sides of (30) and (31) we have

Inserting v = p1 − p2 into (32) we obtain

Similarly, subtracting both sides of the formula of the weak solutions u1 and u2 we have

Inserting v = u1 − u2 into (32) we obtain

From (19), (20) and (33) we have

Similarly, from (19), (20) and (35) we have

Finally, from (36) and (36) we deduce

This implies that \(F^{\prime }\) is Lipschitz continuous with Lipschitz constant \(\frac {c^{4}}{{c_{1}^{4}}}\). □

7 Numerical solution

In this section we illustrate the performance of the proximal method for the inverse source problem in previous section. We assume that Ω = [− 1,1]2 and

We discretize problems (14) and (16) by the finite element method (FEM). The domain Ω is divided into triangles and define the following finite element spaces:

where \(\mathcal {P}_{1}(K)\) denotes the set of linear polynomials in K, N and \(\mathcal {T}\) are respectively the number of nodes and the set of triangles of the mesh. Let {φk}k= 1,…,N is the basis consisting of piecewise linear finite elements in discretized space VN. Then, the discretization of problem (14) is: find u ∈ VN such that

where P is the interpolation in FEM.

Similarly, the discretization of problem (16) is

where

with \(y_{i}=\langle y,\varphi _{i} \rangle _{L^{2}({\Omega })}\).

To obtain u∗ and uδ, we solve (39) on a mesh with N = 887 nodes and 1680 triangles. The solution to (39) and all the parameters a,u0 and y are represented by piecewise linear finite elements. Problem (40) is solved by the proximal method, Algorithm 5.1.

We measure the convergence of the computed minimizers to the true parameter y∗ by the mean square error sequence

and

with s∗ = 100.

7.1 Numerical experiments with δ = 0

We first discuss results without noise, i.e., uδ = u∗. Let Prox.Al.1 and Prox.Al.2 be Algorithm 5.1 with l = 1 and l = 2 respectively. In these algorithms we set q = 0.5, \([\underline {s},\overline {s}]:=[5 \cdot 10^{-4},5 \cdot 10^{4}]\) and α = 10− 6.

Convergence and convergence rate

In Fig. 1 we see that the sequences \(\{\|D(y^{k})\|_{L^{2}({\Omega })}\}\) generated by Prox.Al.1 and Prox.Al.2 are decreasing to zero, i.e., the sequences {yk} converge to stationary points of Θ(⋅). The behavior of MSE(yk) indicate that the sequences {yk} in two algorithms converge to the unknown parameter y∗. We also see that the objective functionals {Θ(yk)} decrease monotonically in two algorithms. These observations are suitable with the theory results in Lemma 4.1. The computational times in two algorithms are similar. Figure 3 shows that after the first iteration, the step sizes \(\frac {1}{s^{n}}\) do not change. Thus, we believe that they are smaller than \(\frac {1}{L}\), the Lipschitz constant of \(F^{\prime }\).

Note that we do not have any result about convergence rate of the proximal algorithm in this paper. Since problem (9) is nonconvex and nondifferentiable, such a result is very hard to prove. However, in [12] for the convex problem of the same form of (9), the convergence rate is proven to be \(O(\frac {1}{n})\), where n is the number of iterations of the proximal method. This implies that the convergence rate of the proximal algorithm for problem (9) do not better than \(O(\frac {1}{n})\). Figure 1 shows this statement is true since the sequences Θ(yn) in two algorithms are decreasing slower than Θ(y0)/n.

Quality of recovered solutions

Figure 2 illustrates y∗ and yn with n = 500 in two algorithms. The parameter y∗ has been reconstructed very well. The mean square errors in two algorithms are the same. Note that y∗ is always greater than zero (there are many components close to zero), but two reconstructions, yn with n = 500 in two algorithms have many zero components, which are shown in Fig. 3.

7.2 Numerical experiments with noisy data

We now consider noisy data. To obtain uδ ∈ L2(Ω), we compute \(u^{\delta }=u^{*}+\epsilon \frac {R}{\|R\|_{L^{2}({\Omega })}}\), where R is computed with the MATLAB routine randn(size(y)) with setting randn(′state′,0). Here, we choose 𝜖 = 10− 2. Figure 4 illustrates the graph of uδ − u∗. It shows that the data is noisy.

With noisy data we also use the same setting in Prox.Al.1 and Prox.Al.2, i.e., all parameters are the same as those in the case of no noise. From Fig. 5 we have the similar observations on convergence and convergence rate as well as computational time. However, for MSE(yn) they are decreasing in the first number of iterations, but they are increasing after that. This is always happened in regularization of inverse problems. With noisy data we need to study a rule of stopping the algorithms. Such a rule is out of the aim of this paper. Here, we choose yk such that MSE(yk) is the smallest in the sequences of MSE(yn) generated by two algorithms. These approximate solutions are illustrated in Fig. 6.

7.3 Numerical experiments with a nonsmooth source functional

We close this section by considering an inverse source problem with a nonsmooth source functional . Here, we use the same setting as two previous subsections, but replace the source functional by another nonsmooth functional, i.e., we assume that Ω = [− 1,1]2 and

We generate noisy data as previous subsection with 𝜖 = 10− 2. For Prox.Al.1 and Prox.Al.2, we set q = 0.5, \([\underline {s},\overline {s}]:=[5 \cdot 10^{-4},5 \cdot 10^{4}]\) and α = 10− 6. In Fig. 7, the sequences {∥D(yn)∥} show that the sequences {yn} converge to stationary points of Θ, the sequences {yn} also approach to the exact parameter y∗ but the sequences MSE(yn) have higher values than those in the smooth case (see Fig. 5). Figure 8 shows the sparsity of recovered solutions yn and the behaviors of the stepsizes \(\frac {1}{s_{n}}\), which are suitable with theoretical analysis. It is harder to recover a nonsmooth parameter than a smooth parameter. The qualities of reconstructions are worse in this situation (see Fig. 9).

From three situations, we see that Prox.Al.1 and Prox.Al.2 converge to stationary points of Θ and the sequences of the objective functional are decreasing monotonically. Prox.Al.1 is faster than Prox.Al.2, but they have the same order of convergence rates. Furthermore, the computational time for each iteration seems to be constant in both algorithms. Finally, at the same noise level the reconstructions for smooth unknown parameters are better than those for nonsmooth unknown parameters.

8 Conclusion and future work

We have considered the minimization problem

where \(F:H \rightarrow \mathbb {R}\) is Fréchet differentiable, \(F^{\prime }\) is Lipschitz continuous and Φ(u) = ∥u∥0.

Under Assumption 1 and the condition on step sizes sk, Theorem 4.1 has shown that the iteration

converges (under a subsequence) to a stationary point u∗, i.e., u∗ satisfies

for some s∗≥ L.

Note that the proximal method falls in class of gradient type methods. Thus, it converges quite slow and has linear convergence rate. Therefore, we will study some better methods in the next step, e.g., some algorithms fall into class of Newton method. To make this point clearer, we note that instead of solving the above minimization problem, we can concentrate on solving its first-order optimal condition equation:

for some fixed s ≥ L. Therefore, we can extend Newton method or quasi-Newton method to solve the last equation. It is valuable to show that the last equation is not continuous. As a result, it is most difficult part of extending Newton and quasi-Newton method to deal with this situation. We will propose our ideas to overcome this issue in future.

There are some other issues which are needed to study such as the rules of choosing regularization parameter with respect to noise level, the rules of stopping the algorithms in the case of noisy data and the algorithms for the constrained minimization problem

where \(F: D\subset H \rightarrow \mathbb {R}\) is Fréchet differentiable, \(F^{\prime }\) is Lipschitz continuous and Φ(u) = ∥u∥0.

References

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

Blumensath, T., Davies, M.E.: Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 14(5–6), 629–654 (2008)

Blumensath, T., Yaghoobi, M., Davies, ME: l0 regularisation. In: Iterative hard thresholding IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, vol. 3, pp. III–877. IEEE (2007)

Daubechies, I., Defrise, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57(11), 1413–1457 (2004)

Engl, H.W., Hanke, M., Neubauer, A.: Regularization of Inverse Problems. Kluwer Academic publishers (1996)

Evans, L.C.: Partial differential equations. Graduate Studies in Mathematics, 19(2) (1998)

Foucart, S.: Hard thresholding pursuit: An algorithm for compressive sensing. SIAM J. Numer. Anal. 49(6), 2543–2563 (2011)

Lorenz, D.A., Maass, P., Muoi, P.Q.: Gradient descent for Tikhonov functionals with sparsity constraints: Theory and numerical comparison of step size rules. Electron. Trans. Numer. Anal. 39, 437–463 (2012)

Lu, Z., Zhang, Y.: Sparse approximation via penalty decomposition methods. SIAM J. Optim. 23(4), 2448–2478 (2013)

Quy Muoi, P, Nho Hào, D., Maass, P, Pidcock, M: Semismooth Newton and quasi-newton methods in weighted l1-regularization. J. Inverse Ill-Posed Problems 21(5), 665–693 (2013)

Quy Muoi, P., Nho Hào, D, Maass, P., Pidcock, M.: Descent gradient methods for nonsmooth minimization problems in ill-posed problems. J. Comput. Appl. Math. 298, 105–122 (2016)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140(1), 125–161 (2013)

Nikolova, M.: Description of the minimizers of least squares regularized with ℓ0-norm. Uniqueness of the global minimizer. SIAM J. Imaging Sci. 6(2), 904–937 (2013)

Soubies, E., Blanc-Féraud, L, Aubert, G.: A continuous exact ℓ0 penalty (cel0) for least squares regularized problem. SIAM J. Imaging Sci. 8(3), 1607–1639 (2015)

Wachsmuth, D.: Iterative hard-thresholding applied to optimal control problems with l0(ω) control cost. SIAM J. Control. Optim. 57(2), 854–879 (2019)

Wang, W., Lu, S, Hofmann, B., Cheng, J.: Tikhonov regularization with l0-term complementing a convex penalty: l1-convergence under sparsity constraints. J. Inverse Ill-posed Problems 27(4), 575–590 (2019)

Wang, W, Lu, S, Mao, H, Cheng, J: Multi-parameter Tikhonov regularization with the l0 sparsity constraint. Inverse Problems 29(6), 065018 (2013)

Acknowledgements

The authors would like to thank all three implicit referees for their valuable comments. We have learned a lots from their comments and revised the paper to obtain the final version, which much be better than the original one.

Funding

This research was supported by Science and Technology Development Fund - Ministry of Training and Education under project B2021-DNA-15.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Muoi, P.Q., Hiep, D.X. Proximal algorithm for minimization problems in l0-regularization for nonlinear inverse problems. Numer Algor 91, 367–388 (2022). https://doi.org/10.1007/s11075-022-01266-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01266-2