Abstract

We propose a variant of PMHSS iteration method for solving and preconditioning a class of complex symmetric indefinite linear systems. The unconditional convergence theory of this iteration method is proved, and the choice of quasi-optimal parameter is also discussed. The explicit expressions for the eigenvalues and eigenvectors of the corresponding preconditioned matrix are derived. In addition, theoretical analyses show that all eigenvalues are linearly distributed in the unit circle under suitable conditions. Numerical experiments are reported to illustrate the effectiveness and robustness of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the iteration solution of the following complex linear system:

where \({\mathscr{A}}= W+i T\in \mathbb {C}^{n\times n}\) is a sparse complex matrix with matrices \(W,T\in \mathbb {R}^{n\times n}\) being both symmetric, \(b\in \mathbb {C}^{n}\) is a given vector and \(x\in \mathbb {C}^{n}\) is an unknown vector. Here \(i=\sqrt {-1}\) is the imaginary unit. Linear systems of the form (1) arise in many problems in scientific computing and engineering applications, such as optimal control problems for PDEs with various kinds of state stationary or time dependent equations, e.g., Poisson, convection diffusion, Stokes [2], wave propagation and structural dynamics. More details on this class of questions are given in references [1, 7, 17].

Based on the Hermitian and skew-Hermitian parts of the coefficient matrix \({\mathscr{A}}=H+S\) with \(H=\frac {1}{2}({\mathscr{A}}+{\mathscr{A}}^{*})=W\) and \(S=\frac {1}{2}({\mathscr{A}}-{\mathscr{A}}^{*})=iT\), Bai et al. [11] constructed the Hermitian and skew-Hermitian splitting (HSS) iteration method for non-Hermitian positive definite linear systems. This iteration method is algorithmically described in the following.

Method 1.1

(The HSS iteration method) Let \(x_{0}\in \mathbb {C}^{n}\) be an arbitrary initial guess. For k = 0,1,2,⋅⋅⋅, until the sequence of iterates \(\{x_{k}\}_{k=0}^{\infty }\subset \mathbb {C}^{n}\) converges, compute the next iterate xk+ 1 according to the following procedure:

where α is a given positive constant and \(I\in \mathbb {R}^{n\times n}\) is the identity matrix.

When \(W\in \mathbb {R}^{n\times n}\) is a symmetric positive definite (SPD) matrix, we know that the HSS iteration method is convergent for any positive constant α. Corollary 2.3 in [11] shows that the quasi-optimal parameter α∗ is given by the extreme eigenvalues of the matrix W and the convergence rate of the HSS iteration method is depended on the spectral condition number of the SPD matrix W. For more details about the HSS iteration method and its variants, we refer to [6, 10, 12].

A potential difficulty with the HSS iteration approach (2) is the need to solve the shifted skew-Hermitian sub-system of linear equations with coefficient matrix αI + iT at each iteration step, which is as difficult as that of the original problem in some cases [7]. In order to avoid solving the complex sub-system, Bai et al. in [8] presented the preconditioned modified HSS (PMHSS) iteration method by making use of the special structure of the coefficient matrix \({\mathscr{A}}\) in (1). The PMHSS method is algorithmically described as follows.

Method 1.2

(The PMHSS iteration method) Let \(x_{0}\in \mathbb {C}^{n}\) be an arbitrary initial guess. For k = 0,1,2,⋅⋅⋅, until the sequence of iterates \(\{x_{k}\}_{k=0}^{\infty }\subset \mathbb {C}^{n}\) converges, compute the next iterate xk+ 1 according to the following procedure:

where α is a given positive constant and \(V\in \mathbb {R}^{n\times n}\) is a prescribed SPD matrix.

Theoretical analysis in [8] has indicated that the PMHSS iteration converges to the unique solution of the complex linear system (1) for any initial guess when the matrices W,T are symmetric positive semi-definite (SPSD) with at least one of them being SPD. Its asymptotic convergence rate is bounded by

where Sp(V− 1W) and Sp(V− 1T) denote the spectrums of the matrices V− 1W and V− 1T, respectively. In particular, the PMHSS-preconditioned GMRES method shows meshsize-independent and parameter-insensitive convergence behavior for some test problems, see also [9, 15, 16, 22].

However, when the matrix W is symmetric indefinite and T is SPD, the linear system (1) is often referred to as the complex symmetric indefinite linear system [21, 22, 24, 26]. Then αV + W in (3) may be singular or indefinite, and the effectiveness of the PMHSS method is seriously affected [25]. It is worth pointing out that the same situation occurs in the modified HSS [7] and double-step scale splitting [27] iteration methods.

Actually, in earlier times, Bai exquisitely designed the skew-normal splitting (SNS) and skew scaling splitting (SSS) methods in [5] by using Hermitian positive semi-definite matrix − (iT)2 = (iT)∗(iT). Theoretical analyses in [5] show that the SNS and SSS methods converge unconditionally to the unique solution of complex symmetric indefinite linear systems of the form (1). Although the SNS and SSS methods are convergent unconditionally, the algorithm implementation of both methods involves complex arithmetics which greatly reduce the computational efficiency at each inner iteration.

Let x = y + iz and b = b1 + ib2, where \(y, z, b_{1}, b_{2}\in \mathbb {R}^{n}\), in order to avoid the complex arithmetic, the complex symmetric indefinite linear system (1) can be rewritten into the block 2 × 2 equation [1, 3, 4, 9, 25]:

The block 2 × 2 linear equation (4) can be solved in real arithmetics by some preconditioned Krylov subspace iteration methods (such as GMRES [19]). Recently, based on the relaxed splitting preconditioning technique [14] for generalized saddle point problems [13, 18], some block structure preconditioners have been created. For example, a variant of HSS (VHSS) preconditioner is constructed in [20], which has the form

Zhang and Dai [24] made slight changes to the VHSS preconditioner and presented a new block (NB) splitting preconditioner:

they showed that \({\mathscr{P}}_{\text {NB}}\) is a better approximation to the block 2 × 2 matrix \(\widehat {{\mathscr{A}}}\) in (4) than the VHSS preconditioner \({\mathscr{P}}_{\text {VHSS}}\) in (5).

The forms of \({\mathscr{P}}_{\text {VHSS}}\) and \({\mathscr{P}}_{\text {NB}}\) imply that they are both good approximations to the coefficient matrix \(\widehat {{\mathscr{A}}}\). However, when these preconditioners are applied to accelerate Krylov subspace methods, a linear sub-system with real coefficient matrix αT + W2 must be solved at each inner iteration. As the spatial dimension increases, these preconditioners may be invalid or computationally inefficient since the matrix αT + W2 becomes dense.

Therefore, in this paper, we strive to construct an iteration method that is unconditionally convergent for solving the complex symmetric indefinite linear system (1) with W is symmetric indefinite and T is SPD. Moreover, the algorithm implementation of the iteration method and the corresponding preconditioner only involves the solutions of sparse SPD linear sub-systems.

The remainder of this paper is organized as follows. In Section 2, a variant of PMHSS (VPMHSS) iteration method which can be regarded as a generalization of PMHSS method is introduced in detail, and the unconditional convergence theory and quasi-optimal parameter expression are also established. In addition, the spectral properties of the corresponding preconditioned matrix are discussed under suitable conditions in Section 3. Numerical results are given in Section 4 to demonstrate the efficiency of the VPMHSS iteration method and the corresponding preconditioner. Some concluding results are drawn in Section 5.

2 The VPMHSS iteration method

By using the positive definiteness property of the matrix T and introducing a positive factor 𝜖, the complex linear system (1) is equivalent to

where M = 𝜖T + W and δ = 1 + i𝜖. Here 𝜖 is an appropriate positive number such that M is a SPD matrix, see [26]. Referred to the first half-step iteration format of the PMHSS method in (3), it is easy to rewrite the linear (6) to

where α is a given positive constant and \(V\in \mathbb {R}^{n\times n}\) is a SPD matrix.

Similarly, compared with the second half-step iteration format of the PMHSS method (3), we need to convert the complex matrix δT in (6) into a SPD matrix |δ|T at first, where \(|\delta |=\sqrt {1+\epsilon ^{2}}\) is the module of δ. Then we can reconstruct

where δ∗ = 1 − i𝜖 represents the conjugate of δ.

Combining (7) and (8), the VPMHSS iteration method is given immediately.

Method 2.1

(The VPMHSS iteration method) Let \(x_{0}\in \mathbb {C}^{n}\) be an arbitrary initial guess. For k = 0,1,2,⋅⋅⋅, until the sequence of iterates \(\{x_{k}\}_{k=0}^{\infty }\subset \mathbb {C}^{n}\) converges, compute the next iterate xk+ 1 according to the following procedure:

where α is a given positive constant, δ = 1 + i𝜖 and \(V\in \mathbb {R}^{n\times n}\) is a prescribed SPD matrix.

Remark 2.1

Note that when 𝜖 = 0, i.e., the matrix W itself is a SPD matrix, Method 2.1 reduces to Method 1.2. Therefore, the VPMHSS method can be considered as a generalization of the PMHSS method for solving the complex symmetric indefinite linear system (1).

Since the matrices αV + M and αV + |δ|T are both sparse SPD matrices, the two linear sub-systems involved in each step of (9) can also be solved effectively by using a Cholesky factorization or inexactly by some conjugate gradient or multigrid scheme [8, 9, 11, 27].

After straightforward derivations, the VPMHSS iteration scheme (9) can be reformulated into the standard linear stationary iteration form

where

and

In addition, if we introduce the matrices F(V,α) and G(V,α) with

and

then it holds that L(V,α) = F(V,α)− 1G(V,α) and \({\mathscr{A}}=F(V, \alpha )-G(V, \alpha )\).

Concerning the convergence of the stationary VPMHSS iteration method, we have the following theorem.

Theorem 2.1

Let \({\mathscr{A}}=M+i\delta T\) with \(M, T\in \mathbb {R}^{n\times n}\) being both SPD matrices, δ = 1 + i𝜖 with 𝜖 > 0, and let α be a positive constant. Then the spectral radius ρ(L(V,α)) of the VPMHSS iteration matrix L(V,α) satisfies ρ(L(V,α)) ≤ σ(α), where

Therefore, it holds that

i.e., the VPMHSS iteration method converges to the unique solution of the complex symmtric indefinite linear systems (1) and (6) for any initial guess.

Proof 1

By direct computations we have

As γj > 0, j = 1,2,⋯ ,n and 𝜖 > 0, it then follows that

Therefore, the VPMHSS iteration method (9) converges to the unique solution of the complex systems (1) and (6). □

Remark 2.2

The VPMHSS is, in spirit, analogous to the PMHSS method discussed in [8] for solving the complex symmetric semi-definite linear systems. However, the unconditional convergence property of the VPMHSS method is still applicable for the complex symmetric indefinite equations (1), and the convergence rate depends on the relax parameter α and the positive factor 𝜖.

Regarding the quasi-optimal parameter αopt of the VPMHSS method (9), we refer to literature [7, 8] and draw the following conclusion immediately.

Corollary 2.1

Let the conditions of Theorem 2.1 be satisfied, and \(\gamma _{\min \limits }\) and \(\gamma _{\max \limits }\) be the smallest and the largest eigenvalues of the matrix V− 1M, respectively. Then the quasi-optimal parameter αopt that minimizes σ(α) is \(\alpha _{\text {opt}}=\sqrt {\gamma _{\min \limits } \gamma _{\max \limits }}\). The corresponding optimal convergence factor is bounded by

where κ2(⋅) represent the spectral condition number of the corresponding matrix.

It is easy to see that the convergence rates of the VPMHSS iteration method are bounded by σ(αopt), which only depends on the spectrum of the matrix V− 1M. Evidently, the smaller the condition number of the matrix V− 1M is, the faster the asymptotic convergence rate of the VPMHSS iteration method will be.

Now, according to the result of (10), we give the value of the quasi-optimal parameter αopt for minimizing the upper bound σ(α) when V = M.

Corollary 2.2

Let V = M, then the quasi-optimal parameter αopt is 1, and the corresponding spectral radius ρ(L(1)) is less than \(\sqrt {\frac {1}{2}+\frac {\epsilon }{2\sqrt {1+\epsilon ^{2}}}}\).

In particular, when V = M, we have

and the VPMHSS iteration scheme is induced by the matrix splitting \({\mathscr{A}}=F(\alpha )-G(\alpha )\) with

and

The splitting matrix F(α) can be used as a preconditioner matrix for the complex symmetric indefinite matrix \({\mathscr{A}}\) in (1). Note that the multiplicative factor \(\frac {(\alpha +1)|\delta |}{\alpha (|\delta |-i\delta ^{*})}\) has no effect on the preconditioning system and therefore it can be dropped. Algorithm implementation of the VPMHSS preconditioner F(α) within Krylov subspace acceleration only involves solution of the sub-linear system with real SPD matrix αM + |δ|T.

3 Spectral properties of the preconditioned matrix

The spectral properties of the corresponding preconditioned matrix \(F(\alpha )^{-1}{\mathscr{A}}\) are established in the following theorem.

Theorem 3.1

Let the conditions of Theorem 2.1 be satisfied. Then the eigenvalues of the preconditioned matrix \(F(\alpha )^{-1}{\mathscr{A}}\) are given by

and the corresponding eigenvectors are given by

with μj being the eigenvalues and qj being the corresponding orthogonal eigenvectors of the SPD matrix \(\widehat {T}=M^{-\frac {1}{2}}TM^{-\frac {1}{2}}\), respectively. Therefore, it holds that \(F(\alpha )^{-1}{\mathscr{A}}=\textbf {X}{\Lambda }\textbf {X}^{-1}\), where X = (x1,x2,⋯ ,xn) and \({\Lambda }=\text {diag}\left (\lambda ^{(\alpha )}_{1}, \lambda ^{(\alpha )}_{2}, \cdots , \lambda ^{(\alpha )}_{n}\right )\) with \(\kappa _{2}(\textbf {X})=\sqrt {\kappa _{2}(M)}\).

Proof 2

The proof is quite similar to that of Theorem 3.2 in [8], and is omitted. □

Theorem 3.1 indicates that the matrix \(F(\alpha )^{-1}{\mathscr{A}}\) is diagonalizable, with the matrix X, formed by its eigenvectors, satisfying \(\kappa _{2}(\textbf {X})=\sqrt {\kappa _{2}(M)}\). Hence, when employed to solve the complex symmetric indefinite linear systems (1) and (6), the preconditioned Krylov subspace iteration methods (such as GMRES [19]) can be expected to converge rapidly, at least when \(\sqrt {\kappa _{2}(M)}\) is not too large.

Finally, under the selection of quasi-optimal parameter, a more refined eigenvalue distributions of \(F(\alpha )^{-1}{\mathscr{A}}\) is given.

Corollary 3.1

When α = 1, the real part \(Re\left (\lambda ^{(1)}_{j}\right )\) of the eigenvalues \(\lambda ^{(1)}_{j}\) in (11) is

and the imaginary part \(Im\left (\lambda ^{(1)}_{j}\right )\) of the eigenvalues \(\lambda ^{(1)}_{j}\) is

Therefore, the eigenvalues of the preconditioned matrix \(F(1)^{-1}{\mathscr{A}}\) are linearly distributed and only related to the positive factor 𝜖.

Corollary 3.2

If α = 1, then Corollary 2.2 leads to \(\rho (L(1))\leq \sqrt {\frac {1}{2}+\frac {\epsilon }{2\sqrt {1+\epsilon ^{2}}}}\). This shows that when

is used to precondition the matrix \({\mathscr{A}}\), the eigenvalues of the preconditioned matrix \(F(1)^{-1}{\mathscr{A}}\) are well-clustered in the circle with radius \(\sqrt {\frac {1}{2}+\frac {\epsilon }{2\sqrt {1+\epsilon ^{2}}}}\) and centered at (1, 0).

4 Numerical experiments

In this section, we use two examples to assess the feasibility and effectiveness of the VPMHSS method when it is used either as a solver or as a preconditioner for solving the system of linear equations (1) and (6). We also compare the VPMHSS with the PMHSS both as iterative solvers and as preconditioners for the GMRES method. In addition, numerical results of GMRES in conjunction with the VHSS and NB preconditioners for solving the real system (4) are also presented.

The initial guess is chosen to be the zero vector and the iteration is terminated if the relative residual error satisfies \(\frac {\|r^{(k)}\|_{2}}{\|r^{(0)}\|_{2}}<10^{-6}\). IT denotes the number of iteration, CPU denotes the computing time (in seconds) required to solve a problem. All codes are running in MATLAB on an Intel(R) Core(TM) i7-4790 CPU @3.60GHz, 8G memory and Win 7 operating system, with machine precision 10− 16.

In the implementation, the relax parameters α in different methods are selected by the following ways.

-

For the VPMHSS and PMHSS methods, we use both the experimentally optimal parameters (denoted by \(\alpha _{\exp }\)) and the fix parameter α = 1. The involved preconditioning matrices for the two methods are chosen as V = M and V = T, respectively. Moreover, the positive factor 𝜖 in (6) is \(\sqrt {n}\|W\|_{\infty }/\|T\|_{F}\), see [26].

-

For the VHSS preconditioner [20]:

$$\alpha_{\text{VHSS}}=\sqrt{\lambda_{\max} \lambda_{\min}},$$where \(\lambda _{\max \limits }\) and \(\lambda _{\min \limits }\) are the maximum and the minimum eigenvalues of the SPD matrix T, respectively. In this section, it should be noted that we use the power method and the inverse power method to calculate the \(\lambda _{\max \limits }\) and \(\lambda _{\min \limits }\), respectively, but the calculation time is not shown in Tables 2 and 4.

-

For the NB preconditioner [24]:

$$\alpha_{\text{NB}}=\left( \frac{\text{tr}(TW^{2}T)}{n}\right)^{\frac{1}{4}},$$where tr(⋅) denotes the trace of a matrix.

Example 4.1

(See [4, 8, 20, 26]) The complex symmetric indefinite linear system (1) is of the following form:

where G is the inertia matrix, CV and CH are the viscous and the hysteretic damping matrices, respectively, and K is the stiffness matrix. See [7] for more information.

In this example, we take G = I, CV = 0.05G, CH = μK. The matrix \(K\in \mathbb {R}^{n\times n}\) possesses the tensor-product form K = I ⊗ Vm + Vm ⊗ I with \(V_{m}=h^{-2}\cdot \text {tridiag}(-1,2,-1)\in \mathbb {R}^{m\times m}\). So K is a n × n block-tridiagonal matrix with n = m2. In addition, we set ω = 2π, μ = 5, and the right-hand side vector \(b=(1+i){\mathscr{A}}\textbf {1}\) with 1 being the vector of all entries equal to 1. As before, we normalize the system (12) by multiplying both sides by h2. Then we conclude that the SPD matrix T = ωCV + CH, and the symmetric indefinite matrix W = −ω2G + K. The numerical results of the tested methods for Example 4.1 are listed in Tables 1 and 2.

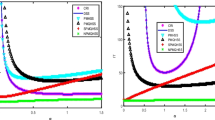

In Table 1, we display the numerical results of the PMHSS and VPMHSS iteration methods. From Table 1, we see that the ITs for VPMHSS method remain constant with problem size, while that of PMHSS method do not. Moreover, VPMHSS considerably outperforms PMHSS both in terms of IT and CPU times, except for m = 512. Additionally, pictures of IT versus α for PMHSS and VPMHSS iteration methods with m = 128 and m = 256 are plotted in Fig. 1. It should be noted that if the number of iteration steps of the PMHSS method is more than 100, it is calculated and plotted as 100. Compare with the iteration counts of PMHSS, VPMHSS is more stable with respect to the iteration parameter α, see Fig. 1.

In Table 2, we report results for the VHSS-, NB-, PMHSS- and VPMHSS-preconditioned GMRES methods. From these results we observe that when used as preconditioners, VPMHSS and PMHSS perform much better than VHSS and NB in IT steps and CPU times. The ITs for PMHSS and VPMHSS almost keep consistent with problem size and relax parameters. Moreover, when the iteration parameter α is fixed to be 1, the ITs and CPU for the VPMHSS iteration method and the VPMHSS-preconditioned GMRES method are almost identical to those obtained with experimentally found optimal parameters \(\alpha _{\exp }\) in Tables 1 and 2.

To further show the efficiency of the VPMHSS preconditioner, eigenvalue distributions (64 × 64 grids) of the four preconditioned matrices are plotted in Fig. 2. From Fig. 2, we can see that all eigenvalues of the four preconditioned matrices are located in a circle at 1 with radius strictly less than 1. In particular, we noticed that the eigenvalues of VPMHSS-preconditioned matrix are linearly distributed, which confirm the theoretical results in Corollary 3.1, and the eigenvalues are more clustered.

Example 4.2

(A distributed optimal control for time-periodic hyperbolic equations) The second complex symmetric indefinite linear system (1) is of the form

where \(K,~T\in \mathbb {R}^{(m-1)^{2}\times (m-1)^{2}}\) are the stiffness and mass matrices, respectively. ν > 0 is a regularization parameter and ω > 0 is a frequency parameter. See [23] for more information.

Note that we have \(W=\sqrt {\nu }(K-\omega T)\) is a symmetric indefinite matrix. We vary the parameters (ν,ω) = (10− 4s,10s) with s = {1,2}. The numerical results of the tested methods for Example 4.2 are depicted in Tables 3 and 4.

As the numerical results reported in Table 3, the VPMHSS iteration method outperforms the PMHSS method for s = 2 both in ITs and CPU times, while for s = 1 and large values of m (m = 256, 512), the VPMHSS iteration method can not compete with PMHSS method. In addition, pictures of IT versus α for PMHSS and VPMHSS methods are plotted in Fig. 3.

From Table 4, we observe that the proposed VPMHSS-preconditioned GMRES method can keep steady in IT steps when the mesh grids increase. We also find that the PMHSS and VPMHSS methods outperform VHSS and NB methods with respect to both IT steps and CPU times. Moreover, the NB preconditioned GMRES method has a wide range of iteration steps and takes more time. Although the VHSS preconditioned GMRES method has a good performance in the iteration steps, the determination of its optimal parameters is difficult and time-consuming.

Eigenvalue distributions (64 × 64 grids) of the four preconditioned matrices are plotted in Figs. 4 and 5 for different variables s. It is evident that the VPMHSS-preconditioned matrix \(F(1)^{-1}{\mathscr{A}}\) is of a well-clustered spectrum around 1 away from zero.

5 Conclusions

In this paper, motivated by the ideas of [8] and [26], we present a variant of PMHSS iteration method (9), which can be regarded as a generalization of the PMHSS method for solving a class of complex symmetric indefinite linear systems (1) and (6). The algorithm implementation of the VPMHSS iteration method and the corresponding preconditioner avoids complex matrix and dense matrix operations, and only involves the solutions of sparse SPD linear sub-systems. Then the unconditionally convergence properties of the VPMHSS method are confirmed. At the same time, the simple optimal iteration parameter and the corresponding optimal convergence factor of our method are also derived. Finally, numerical experiments are given to show the effectiveness and robustness of the proposed method.

Data availability

The datasets generated during and analyzed during the current study are available from the corresponding author on reasonable request.

References

Axelsson, O.: Optimality properties of a square block matrix preconditioner with applications. Comput. Math. Appl. 80, 286–294 (2020)

Axelsson, O., Farouq, S., Neytcheva, M.: Comparison of preconditioned Krylov subspace iteration methods for PDE-constrained optimization problems. Numer. Algoritm. 73, 631–663 (2016)

Axelsson, O., Neytcheva, M., Ahmad, B.: A comparison of iterative methods to solve complex valued linear algebraic systems. Numer. Algoritm. 66, 811–841 (2014)

Axelsson, O., Salkuyeh, D.K.: A new version of a preconditioning method for certain two-by-two block matrices with square blocks. BIT Numer. Math. 59, 321–342 (2019)

Bai, Z.-Z.: Several splittings for non-Hermitian linear systems. Sci. China Ser. A: Math. 51, 1339–1348 (2008)

Bai, Z.-Z.: Quasi-HSS iteration methods for non-Hermitian positive definite linear systems of strong skew-Hermitian parts. Numer. Linear Algebra Appl. 25, e2116:1–19 (2018)

Bai, Z.-Z., Benzi, M., Chen, F.: Modified HSS iteration methods for a class of complex symmetric linear systems. Computing 87, 93–111 (2010)

Bai, Z.-Z., Benzi, M., Chen, F.: On preconditioned MHSS iteration methods for complex symmetric linear systems. Numer. Algoritm. 56, 297–317 (2011)

Bai, Z.-Z., Benzi, M., Chen, F., Wang, Z.-Q.: Preconditioned MHSS iteration methods for a class of block two-by-two linear systems with applications to distributed control problems. IMA J. Numer. Anal. 33, 343–369 (2013)

Bai, Z.-Z., Golub, G.H., Lu, L.-Z., Yin, J.-F.: Block triangular and skew-Hermitian splitting methods for positive-definite linear systems. SIAM J. Sci. Comput. 26, 844–863 (2005)

Bai, Z.-Z., Golub, G.H., Ng, M.K.: Hermitian and skew-Hermitian splitting methods for non-Hermitian positive definite linear systems. SIAM J. Matrix Anal. Appl. 24, 603–626 (2003)

Bai, Z.-Z., Golub, G.H., Ng, M.K.: On successive-overrelaxation acceleration of the Hermitian and skew-Hermitian splitting iterations. Numer. Linear Algebra Appl. 14, 319–335 (2007)

Benzi, M., Golub, G.H., Liesen, J.: Numerical solution of saddle point problems. Acta Numer. 14, 1–137 (2005)

Benzi, M., Ng, M.K., Niu, Q., Wang, Z.: A relaxed dimensional factorization preconditioner for the incompressible Navier-Stokes equations. J. Comput. Phys. 230, 6185–6202 (2011)

Cao, S.-M., Wang, Z.-Q.: PMHSS Iteration method and preconditioners for Stokes control PDE-constrained optimization problems. Numer. Algoritm. 87, 365–380 (2021)

Cao, Y., Ren, R.-Z.: Two variants of the PMHSS iteration method for a class of complex symmetric indefinite linear systems. Appl. Math. Comput. 264, 61–71 (2015)

Howle, V.E., Vavasis, S.A.: An iterative method for solving complex-symmetric systems arising in electrical power modeling. SIAM J. Matrix Anal. Appl. 26, 1150–1178 (2005)

Notay, Y.: Convergence of some iterative methods for symmetric saddle point linear systems. SIAM J. Matrix Anal. Appl. 40, 122–146 (2019)

Saad, Y., Schultz, M.H.: GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 7, 856–869 (1986)

Shen, Q.-Q., Shi, Q.: A variant of the HSS preconditioner for complex symmetric indefinite linear systems. Comput. Math. Appl. 75, 850–863 (2018)

Wu, S.-L., Li, C.-X.: Modified complex-symmetric and skew-Hermitian splitting iteration method for a class of complex-symmetric indefinite linear systems. Numer. Algoritm. 76, 93–107 (2017)

Xu, W.-W.: A generalization of preconditioned MHSS iteration method for complex symmetric indefinite linear systems. Appl. Math. Comput. 219, 10510–10517 (2013)

Zeng, M.-L.: A circulant-matrix-based new accelerated GSOR preconditioned method for block two-by-two linear systems from image restoration problems. Appl. Numer. Math. 164, 245–257 (2021)

Zhang, J.-H., Dai, H.: A new block preconditioner for complex symmetric indefinite linear systems. Numer. Algoritm. 74, 889–903 (2017)

Zhang, J.-L., Fan, H.-T., Gu, C.-Q.: An improved block splitting preconditioner for complex symmetric indefinite linear systems. Numer. Algoritm. 77, 451–478 (2018)

Zheng, Z., Chen, J., Chen, Y.-F.: A fully structured preconditioner for a class of complex symmetric indefinite linear systems. BIT Numer. Math. https://doi.org/10.1007/s10543-021-00887-8 (2021)

Zheng, Z., Huang, F.-L., Peng, Y.-C.: Double-step scale splitting iteration method for a class of complex symmetric linear systems. Appl. Math. Lett. 73, 91–97 (2017)

Acknowledgements

The authors are very much indebted to Dr. Rui-Xia Li for her proofreading. They are also grateful to the anonymous referees for their valuable comments and suggestions which improved the quality of this paper.

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 11901505, 11901324, 11771193), the Natural Science Foundation of Fujian Province (No. 2020J01906), the Key Scientific Research Project for Colleges and Universities of Henan Province (No. 19A110006) and Nanhu Scholar Program for Young Scholars of XYNU.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zheng, Z., Zeng, ML. & Zhang, GF. A variant of PMHSS iteration method for a class of complex symmetric indefinite linear systems. Numer Algor 91, 283–300 (2022). https://doi.org/10.1007/s11075-022-01262-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01262-6