Abstract

We report a new kind of waveform relaxation (WR) method for general semi-linear fractional sub-diffusion equations, and analyze the upper bound for the iteration errors. It indicates that the WR method is convergent superlinearly, and the convergence rate is dependent on the order of the time-fractional derivative and the length of the time interval. In order to accelerate the convergence, we present the windowing WR method. Then, we elaborate the parallelism based on the discrete windowing WR method, and present the corresponding fast evaluation formula. Numerical experiments are carried out to verify the effectiveness of the theoretic work.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent years, fractional differential equations have attracted more and more researchers’ attention, since they have inherent advantages to describe the various processes and phenomenon with memory and hereditary properties [1, 2]. Fractional sub-diffusion equation is one of the most typical equations and it is often used to model the viscoelastic anomalous diffusion in disordered media [3], nanoprecipitate growth in solid solutions [4], diffusion process in magnetic resonance imaging [5], and so on. Because of the nonlocal properties, the sub-diffusion equations are more complicated than the classical integer-order differential equations, and it is usually difficult to obtain the analytic solutions of the sub-diffusion equations. Various numerical schemes for such equations are emerging [6,7,8].

The waveform relaxation (WR) method, as an iterative method for time-dependent equations, produces a series of functions with respect to the time variable, to approximate the solution of the equations. The WR method is firstly proposed for the simulation of large circuits [9], and it is able to decouple a complicated large system into a series of weakly coupled small sub-systems, which can be solved simultaneously. It has been proved in [10, 11] that the WR method has superlinear convergence for general nonlinear ordinary differential equations (ODEs) on bounded domain, and is of linear convergence for linear ODEs on unbounded domain. The WR method has been widely used to solve differential algebra equations (DAEs) [12, 13], integral-differential-algebraic equations (IDAEs) [14], and delay-differential equations (DDEs) [15]. To apply the WR method to partial differential equations (PDEs), one popular method is to decompose spatial domain into several sub-domains, and solve a set of systems on sub-domains, which is also called Schwarz WR method [16,17,18]. Moreover, we have also proposed a kind of WR method for reaction diffusion equations [19], in which we preserve the diffusion term and relax the nonlinear reaction term. This kind of WR method converges quickly, and can also be implemented in parallel.

The WR method is also an alternative method for fractional differential equations. In ref. [20], the WR method is used to solve linear and nonlinear time-fractional ODEs, and proper convergent splitting is constructed. Then, the WR method is extended to handle fractional DDEs [21] and DAEs [22]. In ref. [23], a multigrid WR method is proposed for the time-fractional heat equation, and a fast computing technique is also supplied, based on the Toeplitz-like structure of the coefficient matrix. Furthermore, Schwarz WR methods for fractional PDEs are also reported recently [24, 25]. In this paper, we apply the WR method to a kind of fractional sub-diffusion equation with a nonlinear reaction term at the PDE level, which produces a series of linear time-fractional PDEs. The WR method has the same advantages as that for integer-order PDEs in [19], but the convergence is totally different, which is dependent on the order of the fractional derivative, see Theorem 2.1 for details. In order to accelerate the convergence, a windowing technique is combined, and the corresponding discrete windowing WR method is discussed.

The outline of the paper is as follows. In Section 2, we propose the new WR method for a kind of semi-linear fractional sub-diffusion equations, and analyze the convergence. Combing the windowing technique, we introduce the windowing WR method in Section 3. In Section 4, the discrete windowing WR method is constructed, and the convergence and the parallelism are presented, then the fast evaluation formula for the windowing WR method is provided. In Section 5, two numerical experiments are given to verify the effectiveness of the theoretic results. Finally, conclusions are attached in the last section.

2 Waveform relaxation method and convergence

We consider the following fractional-order initial value problem satisfying homogeneous boundary conditions

where the positive constant K is the transport-related coefficient, f(x, t, u(x, t)) is a given nonlinear source/sink term, φ(x) is a prescribed initial value, and \({{~}_{0}^{C}\!\mathcal {D}_{t}^{\alpha }} u(x,t)\) is the Caputo fractional derivative of order α (0 < α < 1) defined by [1]

where Γ(⋅) denotes the Gamma function. It can be seen that system (1) involves a nonlinear fractional sub-diffusion equation.

2.1 Construction of the WR method

A typical iterative scheme of the WR method for system (1) is

for k = 0,1,…, where the splitting function F(x, t, u(x, t), v(x, t)) determines the type of WR algorithm, and it always satisfies F(x, t, u(x, t), u(x, t)) = f(x, t, u(x, t)). In this paper, we choose F(x, t, u(k+ 1)(x, t), u(k)(x, t)) = f(x, t, u(k)(x, t)) for simplicity, i.e., the iterative scheme can be written as

for k = 0,1,…. The initial guess of the iterations is often chosen as u(0)(x, t) ≡ φ(x), for 0 ≤ t ≤ T. The special WR algorithm (3) is also called Picard relaxation algorithm. We can see that WR algorithm (3) leads to a series of linear systems.

For any fixed iteration index k, by the separation of variables, the solution of system (3) has the form

where the coefficients \(a_{n}^{(k)}\), for n = 1,2,…, are to be determined. We further suppose

with

Substituting expressions (4) and (5) into system (3), we have

Comparing the coefficients of the basis functions \(\{ \sin \limits \frac {n \pi x}{L} \}_{n=1}^{\infty }\), we can obtain

According to Theorem 5.15 of ref. [26], we get the solution of (6)

where Eα,β(z) is the Mittag-Leffler function, defined by [26]

and

Then, the solution of system (3) has the form

Denote

we can verify that the function \(\sum \limits _{n=1}^{\infty }a_{n}(t)\sin \limits \frac {n\pi x}{L}\) satisfies system (1).

2.2 Convergence analysis

In this part, we present the convergence of the iterative sequence by WR algorithm (3). Before that, we still need a preliminary definition and lemmata as follows.

For \(\varphi (t)\in C([0,T],\mathbb {R})\), we define an operator

where λ is s positive constant. Then, we have

Lemma 2.1

For any positive integer k,

where \((\mathcal {A}^{k} \varphi )(t) = (\mathcal {A}(\mathcal {A}^{k-1} \varphi ))(t)\).

Proof

We prove the result by induction. For k = 1, we have

Suppose that inequality (9) holds for any fixed index k, then

where B(⋅,⋅) denotes the Beta function. The conclusion results. □

Lemma 2.2

For any t ∈ [0, T], α ∈ (0,1), and λ > 0, the series

converges.

Proof

Since function Eα, α(−λn2tα) is completely monotonous with respect to n [27], for any λ, t > 0, the series \({\sum }_{n=1}^{\infty }E_{\alpha ,\alpha } (-\lambda n^{2} t^{\alpha })\) has the same convergence as the integration \({\int \limits }_{1}^{\infty } E_{\alpha ,\alpha } (-\lambda t^{\alpha } x^{2})dx\).

To evaluate the integration, we consider \({{\int \limits }_{1}^{y}} E_{\alpha ,\alpha } (-\lambda t^{\alpha } x^{2})dx\) for y ≥ 1, then we have

For any y ≥ 1, Eα(−λtαy) converges, and then \({\int \limits }_{1}^{\infty } E_{\alpha ,\alpha } (-\lambda t^{\alpha } x^{2})dx\) converges. The conclusion results. □

The convergence of the WR algorithm (3) can be found in the following theorem.

Theorem 2.1

If the nonlinear function f(x, t, u) has a continuous first-order derivative with respect to u, then the iterative sequence of functions {u(k)(x, t)} for k = 1,2,…, generated by the WR algorithm (3) converges to the true solution u(x, t) of system (1), and satisfies

where \(\bar {M}\) is a positive constant.

Proof

We define ε(k)(x, t) = u(k)(x, t) − u(x, t), 0 ≤ x ≤ L, 0 ≤ t ≤ T, which satisfies

By the separation of variables, the solution of system (11) is

where \(u_{\ast }^{(k)} \in [\min \limits (u^{(k)}(x,t),u(x,t)), \max \limits (u^{(k)}(x,t),u(x,t))]\).

We notice that for any 0 ≤ s ≤ t ≤ T,

and the function \(E_{\alpha ,\alpha } \left [-K\left (\frac {n \pi }{L}\right )^{2} (t-s)^{\alpha } \right ]\) is bounded and monotonous with respect to n [27]. According to Abel’s test for uniform convergence, the series of functions

converges uniformly for x ∈ [0, l] and s ∈ [0, t]. Denote

then we have

For any fixed t ∈ (0, T], we suppose that there is a s0 ∈ (0, t), such that

and denote \(\varepsilon _{0}=\frac {1}{\Gamma (\alpha )}\min \limits _{s_{0}\leq s \leq t}\max \limits _{0\leq x \leq L}|f^{\prime }(\xi ,s,u_{\ast }^{(i)}(x,s))\varepsilon ^{(i)}(x,s)|\). From (12) we know that,

so there exist a constant δ1 ∈ (0, t), such that for any s ∈ (t − δ1, t),

and there exist a constant δ2 ∈ (0, t), such that for any s ∈ (t − δ2, t),

Let \(s_{1}=\max \limits \{t-\delta _{1},t-\delta _{2},s_{0}\}\). For any s ∈ (s1, t), we have

Therefore,

and

where M is a uniform upper bound of \(|f^{\prime }(x,t,u(x,t))|\), for 0 ≤ x ≤ L and 0 ≤ t ≤ T.

For s ∈ [0, s1], we consider the following series of functions,

For any x, ξ ∈ [0, L], we have

Together with Lemma 2.2, series of functions (14) is convergent uniformly for x, ξ ∈ [0, L], and 0 ≤ s ≤ t ≤ s1. We denote by M1 an upper bound of the series of functions (14), then

and

We choose \(\bar {M}=\max \limits \{\frac {2M}{\Gamma (\alpha )}, LM_{1}M\}\). By the inequalities (13) and (15), we obtain

and

According to Lemma 2.1, we can obtain

which leads to

This completes the proof. □

Remark 1

For the convergence rate shown in estimation (10), we denote \(v_{k} = \frac {(\bar {M} {\Gamma }(\alpha ) T^{\alpha })^{k}}{\Gamma (k\alpha +1)}\). Then, we compute

Based on Stirling’s formula of the Gamma function, we get \(r\sim \mathcal {O}(k^{-\alpha })\), which means that WR algorithm (3) is convergent superlinearly to the true solution of system (1).

3 Windowing WR method

We can see from Theorem 2.1 that the upper bound of the WR iteration is related to the length of the time interval. Therefore, the windowing technique is a proper way to reduce the iteration error [28]. In this section, we combine the windowing technique with the WR method introduced in the last section to establish an improved WR method, say the windowing WR method.

3.1 Construction of the windowing WR method

For the fractional sub-diffusion equation (1), we first divide the time interval [0, T] into N windows, with

We denote the i-th window by Ωi = [Ti, Ti+ 1], with the length Hi = Ti+ 1 − Ti, for i = 0,1,…, N − 1, and the maximum length of the windows is \(H=\max \limits _{0\leq i \leq N-1}\{H_{i}\}\).

We denote by \(u_{i}^{(k)}(x,t)\) the k-th waveform on the i-th window Ωi, for k = 0,1,…, ki, where ki is the maximum number of WR iterations on Ωi. Then, the windowing WR method for system (1) on Ωi is given by

for k = 0,1,…, ki − 1, and i = 0,1,…, N − 1. We define \(u_{-1}^{(k_{-1})}(x,0)=\varphi (x)\) and choose \(u_{i}^{(0)}(x,t)\equiv u_{i-1}^{(k_{i-1})}(x,T_{i})\) as the initial guess on Ωi. We can observe that, when solving the waveforms \(u_{i}^{(k)}\) on Ωi, the approximations of the solution on the previous windows are already known.

For t ∈ [Ti, Ti+ 1] and any fixed k, we denote

Similar to the method shown in sub-section 2.1, we have the solution of system (16) with the form

3.2 Convergence of the windowing WR method

Before giving the convergence of the windowing WR method (16), we first investigate an operator and its property.

For \(\varphi (t)\in C([0,T],\mathbb {R})\), we define an operator

where B0 and λ are positive constants. Then, we have

Lemma 3.1

For any positive integer k, we have

where \((\mathcal {B}^{k} \varphi )(t) = (\mathcal {B}(\mathcal {B}^{k-1} \varphi ))(t)\).

Proof

We prove the result by induction. For k = 1, we have

Suppose that inequality (18) holds for any fixed index k, then

where we have used the equality

By the properties of the Beta function B(α, jα + 1), we further have

The conclusion results. □

Then, the convergence of the windowing WR method for system (1) can be found in the following theorem.

Theorem 3.1

If the nonlinear function f(x, t, u) has a continuous first-order derivative with respect to u, then the iteration error between the approximation \(\{u_{i}^{(k_{i})}(x,t), i=0,1,\ldots , N-1\}\), generated by the windowing WR method (16), and the true solution {ui(x, t), i = 0,1,…, N − 1} of system (1) restricted in Ωi, can be bounded by

where

with M, \(\bar {M}\) positive constants, for i = 0,1,…, N − 1.

Proof

We define \(\varepsilon _{i}^{(k)}(x,t)=u_{i}^{(k)}(x,t)-u_{i}(x,t)\), 0 ≤ x ≤ L, Ti ≤ t ≤ Ti+ 1, which satisfies

The solution of system (20) has the form

where

Similar to Theorem 2.1, there exist constants Mi, for i = 0,1,…, N − 1, such that

We might as well suppose Mi ≥ 1, for i = 0,1,…, N − 1.

Using integration by parts and the property \(\varepsilon _{j}^{(k_{j})}(x,T_{j+1}) = \varepsilon _{j+1}^{(k_{j+1})}(x,T_{j+1})\), we have

where \(u_{i,\ast }^{(k)} \in \left [\min \limits (u_{i}^{(k)}(x,t),u_{i}(x,t)), \max \limits (u_{i}^{(k)}(x,t),u_{i}(x,t))\right ]\). Then,

where M is an upper bound of \(|f^{\prime }(x,t,u_{i,\ast }^{(k)}(x,t))|\). Taking inequality (23) into inequality (22), we get

where \(\bar {M}_{i}=\frac {M_{i}}{\Gamma (1-\alpha )}B(\alpha ,1-\alpha )=\frac {M_{i}}{\Gamma (1-\alpha )}\frac {\pi }{\sin \limits (\pi \alpha )}\), and \(C_{i}=M_{i}+\bar {M}_{i}\). After ki iterations of WR on [Ti, Ti+ 1], we have by Lemma 3.1 that

for t ∈ [Ti, Ti+ 1]. We notice that

For simplicity, we further denote

Then, we have

Since Θi ≥ 1, and Υi(t) ≥ 1, we can deduce from inequality (24) that the iteration errors accumulate along the windows line, i.e.,

which leads to

and further we have

By recurrence, we get

The conclusion results. □

4 Discrete windowing WR method

In fact, the standard WR method introduced in Section 2 is a special case of the windowing WR method. In this section, we only consider the discrete version of the windowing WR method.

4.1 Construction of the discrete windowing WR method

For simplicity, we suppose that all the time windows have the same lengths, i.e., Hi = H, for i = 0,1,…, N − 1. Let N0 and Ns be two positive integers. We partition the spatial domain [0, L] by a uniform mesh, xl = lh, for l = 0,1,…, Ns, with \(h=\frac {L}{N_{s}}\), and further partition the time window Ωi by a uniform mesh ti, n = Ti + nτ, for n = 0,1,…, N0, with \(\tau =\frac {H}{N_{0}}\), which satisfy \(t_{i,N_{0}}=T_{i+1}\).

At any mesh point (xl, ti, n), the windowing WR method (16) is

for l = 1,2,…, Ns − 1, i = 0,1,…, N − 1, and n = 0,1,…, N0. We denote by \(\left [u_{i}^{(k)}\right ]_{l}^{p}\) the approximation of \(u_{i}^{(k)}(x_{l},t_{i,p})\), and \([u_{i}]_{l}^{p}\) the approximation of ui(xl, ti, p). We approximate the term \(\frac {\partial ^{2}}{\partial x^{2}}u_{i}^{(k+1)}(x_{l},t_{i,n})\) in the right-hand side of (25) by the classical finite difference operation \({\delta _{x}^{2}} \left [u_{i}^{(k+1)}\right ]_{l}^{n}\), and adopt the idea of L1 scheme [29] to approximate the terms in the left-hand side of (25). Then, we have

where

For each term in the summation in the left side of (25), we have

The resulting numerical scheme for (25) can be written as

Similarly, the L1 scheme for the original equation can be written as

4.2 Convergence of the discrete windowing WR method

Let \(\left [\varepsilon _{i}^{(k)}\right ]_{l}^{n} = \left [u_{i}^{(k)}\right ]_{l}^{n} - [u_{i}]_{l}^{n}\), for l = 1,2,…, Ns − 1, i = 0,1,…, N − 1, n = 0,1,…, N0 and k = 0,1,…, ki, which satisfies

We denote the vectors

together with the fact \(\left [\boldsymbol {\varepsilon }_{j}^{(k_{j})}\right ]^{N_{0}} = \left [\boldsymbol {\varepsilon }_{j+1}^{(k_{j+1})}\right ]^{0}\), for j = 0,1,…, i − 1, and \(\left [\boldsymbol {\varepsilon }_{0}^{(k_{0})}\right ]^{0} = \boldsymbol {0}\), we have

where

and

We further define the vector

Then, equality (29) can be rewritten in the following matrix form,

where Is and I0 are identity matrixes with scales Ns − 1 and N0, respectively, A is a N0 × N0 lower triangular Toeplitz matrix with

Gij is a N0 × N0 Toeplitz matrix with

and

We denote \(r={\Gamma }(2-\alpha )\frac {K\tau ^{\alpha }}{h^{2}}\) and D = A ⊗Is − r(I0 ⊗B), the error (30) can be written as

where Jf is the Jacobian matrix of \(\boldsymbol {\bar {f}}\), which is bounded by a positive M0. Then, we have

By induction on the index k, it leads to

where

We can choose τ small enough, such that 𝜃 < 1, and ψij > 0.

Define the real numbers ηij, for i = 0,1,…, N − 1, and j = 0,1,…, i − 1 by

We have the following theorem.

Theorem 4.1

If the nonlinear function f(x, t, u) has a continuous first-order derivative with respect to u, then the iteration error \(\boldsymbol {\bar {\varepsilon }}_{i}^{(k_{i})}\) of the windowing WR method (26) on the i-th time window Ωi can be bounded by

where kj is the maximum number of WR iterations on Ωj.

Proof

We prove the result by induction on the index i.

For i = 0, on the first window, we obtain that by (32)

Assume that inequality (35) holds for i = 1,2,…, n − 1, then we have

By exchanging the summation order, we can obtain

It means that the result also holds for i = n, and the theorem is proved. □

It can be seen that {ηij} are defined by recurrence, and it is not intuitive to bound \(\| \boldsymbol {\bar {\varepsilon }}_{i}^{(k_{i})} \|_{\infty }\). Therefore, we present the following corollary.

Corollary 4.1

The iteration error \(\boldsymbol {\bar {\varepsilon }}_{i}^{(k_{i})}\) can be further bounded by

where \(k_{min}=\min \limits _{0\leq j \leq i-1}\{k_{j}\}\), and 𝜃 is defined in (33) with 𝜃 < 1.

Proof

From estimation (35), we have

Denote \(W_{i} = {\sum }_{j=0}^{i} \eta _{ij}\), then we have W0 = 1, and for i = 1,2,…, N − 1,

Since Gij is a Toeplitz matrix, and

We have

By the induction on the index i, we get

Together with inequality (37), we can obtain the result (36). □

Remark 2

The quantity \(\|\boldsymbol {D}^{-1}\|_{\infty }\) in estimation (36) is usually a moderate number. In fact, a number of numerical tests are carried out to support this observation. Therefore, we can take a proper WR iteration number ki on each time window to control the iteration errors.

4.3 Parallelism of the discrete windowing WR method

The discrete windowing WR method (26) can be written in the following form,

For any fixed n = 1,2,…, N0, in order to compute \(\left [\boldsymbol {u}_{i}^{(k+1)}\right ]^{n}\), we need \(\left [\boldsymbol {u}_{i}^{(k+1)}\right ]^{p}\) on the present waveform for p = 1,2,…, n − 1, and \(\left [\boldsymbol {u}_{i}^{(k)}\right ]^{n}\), which is the approximation at t = ti + nτ on the last waveform, as well as \(\left [\boldsymbol {u}_{j}^{(k_{j})}\right ]^{p}\), for p = 1,2,…, N0 and j = 0,1,…, i − 1, which are the approximations of the waveforms on all the previous time windows. It is unnecessary to wait for all the approximations on the k-th waveform before computing the approximations on the (k + 1)-th waveform.

For simplicity, we assume that the WR methods on all the time windows have the same number of iterations, say k0. The parallelism of the discrete windowing WR method (39) is shown in Fig. 1, where “dt” denotes the computational cost at each time step. In fact, for the computation of the time-fractional PDE, the computational costs at later time steps are usually bigger than that at former time steps, since more matrix-vector multiplications are involved in later time steps, though “dt” is used uniformly for clarity.

The parallelism of the discrete windowing WR scheme (39)

Assume that k0 processors are available, and each processor is in charge of the computation of a waveform. We can see from Fig. 1 that k0 processors are running almost simultaneously on each time window, such that the theoretical total running time is N(N0 + k0)dt, rather than Nk0N0dt in serial manner.

The steps of the parallel procedure are shown in the following algorithm,

4.4 Efficient implementation of the discrete windowing WR method

We can see from (26) that the discrete windowing WR method is based on the idea of L1 scheme, which is computationally expensive on long time interval. In this sub-section, we employ the sum-of-exponentials (SOE) method [30, 31] to further reduce the memory and the computational cost. The fast evaluation formula based on SOE method for the Caputo fractional derivative in (25) can be expressed as

At the initial point, we have

and at the boundaries of any two time windows, we have

It means that the discrete windowing WR method is implemented on one time window after another, and the WR iterations are performed ki times on the i-th window.

In fact, the fast evaluation formula based on SOE method transforms the discrete windowing WR method as a nonlocal numerical integrator to be a local evaluation, and the computational cost at each time point looks more balanced. Suppose that we have enough processors and denote by \(\tilde {\tau }\) the computational cost at each time point, then the theoretical computational cost of the fast windowing WR method is \(N(N_{0}+k_{0}-1)\tilde {\tau }\).

For the choice of the parameters, we have two considerations. First, k0 should be as small as possible to avoid too much computational cost. Second, if the time window is too short, the number of time steps on each window, N0, will be very small, then the degree of parallelism will be reduced. In particular, if only one time step is contained in each window, i.e., N0 = 1, the WR method will deteriorate to the classical semi-implicit scheme, which cannot be implemented in parallel at all. In order to balance the two aspects, we can take moderate values for N0, such that N0 is much larger than k0. Therefore, the theoretical computational cost with enough processors seems slightly more than the cost of a general numerical scheme for a linear problem.

5 Numerical experiments

In this section, we use two examples to verify the effectiveness of the windowing WR methods, and the methods are coded using Matlab R2017b on a Lenovo desktop with Intel(R) Core(TM) i7-7700 CPU @ 3.60GHz.

Example 5.1

We first consider the following sub-diffusion equation in one spatial dimension,

To discretize the x and t variables, we use a uniform space-time mesh with time step \(\tau =\frac {1}{2048}\) and spatial grid size \(h=\frac {1}{256}\). The time interval [0,2] is divided into N time windows uniformly, and each window includes \(\frac {4096}{N}\) time steps. For simplicity, we perform the same number of WR iterations on all time windows, i.e., ki = k0, for i = 0,1,…, N − 1.

The discrete windowing WR method for (41) can be written as

for i = 0,1,…, N − 1, l = 1,2,…,255, \(n=1,2,\ldots ,\frac {4096}{N}\), k = 0,1,…, k0 − 1, and the reference solution can be obtained by the scheme

for l = 1,2,…,256, and n = 1,2,…,4096. We notice from scheme (42) that the nonlinear term is approximated using the values at \(t=t_{iN_{0}+n-1}\), in order to match with scheme (43). In fact, if we employ a fully implicit scheme in the discrete windowing WR method for (41), the resulting numerical scheme, i.e., the nonlinear term in (42) is replaced by \(\left [u_{i}^{(k)}\right ]_{l}^{n} + \left (\left [u_{i}^{(k)}\right ]_{l}^{n}\right )^{2}\), will still be a linear equation, since the values are from the last waveform, while the corresponding reference equation is a nonlinear equation.

The iteration error of the windowing WR method is measured by

The iteration errors for various values of k0 and N are shown in Tables 1, 2, and 3, for α = 0.2, 0.5, 0.8, respectively. In fact, the windowing WR method with only one time window, i.e., N = 1, is indeed the standard WR method. We can see from Tables 1, 2, and 3 that the windowing technique can reduce the number of iterations effectively.

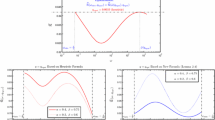

To show the convergence of WR clearly, we plot the relationship between the iteration error and the iteration number k0 with various time windows in the first three figures of Fig. 2, for α = 0.2, α = 0.5, and α = 0.8, respectively. Furthermore, We also compare the convergence behaviors of WR with 64 time windows for various values of α in the last figure in Fig. 2. We can see that the convergence rates of the windowing WR method are related to the values of α, and such an observation fits very well with the theoretical results introduced in Sections 2–3.

We also test the efficiency of the fast evaluation formula of the WR method. We fix NN0 = 4096, the observed errors, and the consumed CPU time for the discrete windowing WR method, and its fast evaluation version with tolerance 𝜖= 1.0e-10 is shown in Table 4. We can see that the fast windowing WR method has almost the same errors as the windowing WR method which is based on L1 scheme, while the fast version costs much less CPU time. For example, when N = 16, α = 0.2, and k0 = 9, the two methods have the same observed error 2.83e-04, but the consumed CPU time of the fast windowing WR method has reduced from 63 to 7 s.

Based on the fast evaluation formula of the WR method, we also investigate the relationship between the number of windows N and the number of iterations k0. We fix the number of time steps NN0 = 4800 on the whole time interval, and denote k0 as the minimum uniform number of WR iterations on each time window, such that the iteration error below 2.0e-10 and the relationships between N and k0 for different values of α can be found in Table 5, which are also shown in Fig. 3. It can be observed that, with more time windows, less WR iterations are needed for a desired accuracy.

Example 5.2

We then consider the following sub-diffusion equation in two spatial dimensions,

where Ω = [0,1] × [0,1].

We still use a uniform space-time mesh with time step \(\tau =\frac {1}{256}\) and spatial grid size \(h_{x}=h_{y}=\frac {1}{64}\). N uniform time windows are employed, and each of them has \(\frac {512}{N}\) time steps. The discrete windowing WR method for (44) can be written as

for i = 0,1,…, N − 1, l, q = 1,2,…,63, \(n=1,2,\ldots ,\frac {512}{N}\), k = 0,1,…, k0 − 1, and the reference solution can be obtained by the scheme

for l, q = 1,2,…,63, and n = 1,2,…,512. The iteration error of the windowing WR method is measured by

The iteration error for various values of k0 and N are shown in Tables 6, 7, and 8, for α = 0.2, 0.5, 0.8, respectively. We can also see that the windowing technique can accelerate the convergence of the WR method.

We plot the relationship between the iteration error and the iteration number k0 with various time windows for α = 0.2, α = 0.5, and α = 0.8, as well as the convergence behaviors of WR with 8 time windows for various values of α, in Fig. 4. The same observation can be found as that in Example 5.1.

We also use the fast evaluation formula of the WR method for Example 5.2. We fix NN0 = 4096, and the observed errors and the consumed CPU times for the discrete windowing WR method and its fast evaluation version with different values of N and tolerance 𝜖= 1.0e-10 are shown in Table 9. We can also see that the two versions with the same parameters have the same accuracy, while the fast windowing WR method needs much less running time.

Moreover, we fix the number of time steps NN0 = 2400 on [0, T], and denote k0 as the minimum uniform number of WR iterations on each time window, such that the iteration error below 2.0e-10 and the relationships between N and k0 for different values of α are shown in Table 10 and Fig. 5, from which the same observation as that in Example 5.1 can be found.

6 Conclusions

A new WR method for semi-linear fractional sub-diffusion equations has been presented, and the error estimation has been given. Then, the windowing WR method and its discrete version have also been presented. We can see from the numerical experiments that the (windowing) WR method is convergent superlinearly, and the convergence rate will be slow with the decrease of the order of the time-fractional derivative. Furthermore, we have also combined the fast evaluation formula based on SOE approximation with the windowing WR method, and verified the efficiency of the fast windowing WR method by numerical tests.

Moveover, the WR method used in this paper is one of the simplest WR methods. Proposing some other effective WR methods to further improve the convergence rate will be considered in the future.

References

Podlubny, I.: Fractional Differential Equations. New York: Academic Press (1999)

Sun, H., Zhang, Y., Baleanu, D., Chen, W., Chen, Y.: A new collection of real world applications of fractional calculus in science and engineering. Commun Nonlinear Sci Numer Simulat 64, 213–231 (2018)

Metler, R, Jeon, JH, Cherstvy, AG, Barkai, E.: Anomalous diffusion models and their properties: non-stationarity, non-ergodicity, and ageing at the centenary of single particle tracking. Phys Chem Chem Phys 16, 24128–24164 (2014)

Svetukhin, V.V., Sibatov, R.T.: Kinetics of subdiffusive growth of new phase particles in supersaturated solid solutions. J Exp Theor Phys 120, 678–686 (2015)

Bennett, K.M., Schmaidna, K.M., Bennett, R., Rowe, D.B., Lu, H., Hyde, J.S.: Characterization of continuously distributed cortical water diffusion rates with a stretched-exponential model. Magn Reson Med 50, 727–734 (2003)

Alikhanov, A.A.: A new difference scheme for the time fractional diffusion equation. J Comput Phys 280, 424–438 (2015)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J Comput Phys 225, 1533–1552 (2007)

Sun, Z., Wu, X.: A fully discrete difference scheme for a diffusion-wave system. Appl Numer Math 56, 193–209 (2006)

Lelarasmee, E., Ruehli, A.E., Sangiovanni-Vincentelli, A.L.: The waveform relaxation method for time-domain analysis of large scale integrated circuits. IEEE T Comput Aid D 1, 131–145 (1982)

Vandewalle, S.: Parallel multigrid waveform relaxation for parabolic problems. Stuttgart: B. G. Teubner (1993)

Miekkala, U., Nevanlinna, O.: Convergence of dynamic iteration methods for initial value problems. SIAM J Sci Stat Comput 8, 459–482 (1987)

Jiang, Y.L.: On time-domain simulation of lossless transmission lines with nonlinear terminations. SIAM J Numer Anal 42, 1018–1031 (2004)

Jiang, Y.L., Wing, O.: On monotone waveform relaxation for systems of nonlinear differential-algebraic equations. SIAM J Numer Anal 38, 170–185 (2000)

Jiang, Y.L., Chen, R.M.M.: Multisplitting waveform relaxation for systems of linear integral-differential-algebraic equations in circuit simulation. J Circuit Syst Comp 10, 205–218 (2000)

Bartoszewski, Z., Kwapisz, M.: On error estimates for waveform relaxation methods for delay-differential equations. SIAM J Numer Anal 38, 639–659 (2000)

Gander, M.J.: A waveform relaxation algorithm with overlapping splitting for reaction diffusion equations. Numer Linear Algebr 6, 125–145 (1999)

Gander, M.J.: Optimized schwarz methods. SIAM J Numer Anal 44, 699–731 (2006)

Gander, M.J., Zhao, H.K.: Overlapping Schwarz waveform relaxation for the heat equation in n-dimensions. BIT 42, 779–795 (2002)

Liu, J., Jiang, Y.L.: Waveform relaxation for reaction diffusion equations. J Comput Appl Math 235, 5040–5055 (2011)

Jiang, Y.L., Ding, X.L.: On waveform relaxation methods for fractional differential equations with the Caputo derivatives. J Comput Appl Math 238, 51–67 (2013)

Ding, X.L., Jiang, Y.L.: Waveform relaxation method for fractional functional differential equations. Fract Calc Appl Anal 16, 573–594 (2013)

Ding, X.L., Jiang, Y.L.: Waveform relaxation method for fractional differential-algebraic equations. Fract Calc Appl Anal 17, 585–604 (2014)

Gaspar, F.J., Rodrigo, C.: Multigrid waveform relaxation for the time-fractional heat equation. SIAM J Sci Comput 39, A1201–A1224 (2017)

Wu, S.L.: Optimized overlapping Schwarz waveform relaxation for a class of time-fractional diffusion problems. J Sci Comput 72, 842–862 (2017)

Wu, S.L., Huang, C.M.: Asymptotic results of Schwarz waveform relaxation algorithm for time-fractional cable equations. Commun Comput Phys 25, 390–415 (2019)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and applications of fractional differential equations. North-holland mathematics studies, 204. Amsterdam: Elsevier Science B. V. (2006)

Schneider, W.R.: Completely monotone generalized Mittag-Leffler functions. Expo Math 14, 3–16 (1996)

Jiang, Y.L.: On Windowing waveform relaxation of initial value problems. Acta Math Appl Sin-E 22, 543–556 (2006)

Gao, G., Sun, Z., Zhang, H.: A new fractional numerical differentiation formula to approximate the Caputo fractional derivative and its applications. J Comput Phys 259, 33–50 (2014)

Jiang, S., Zhang, J., Zhang, Q., Zhang, Z.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations. Commun Comput Phys 21, 650–678 (2017)

Liao, H., Yan, Y., Zhang, J.: Unconditional convergence of a fast two-level linearized algorithm for semilinear subdiffusion equations. J Sci Comput 80, 1–25 (2019)

Acknowledgments

The authors would like to thank the anonymous referees for their helpful comments and valuable suggestions to improve the paper significantly.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 11401589, 11871393, and 11871400), the Fundamental Research Funds for the Central Universities (Nos. 18CX02049A and 17CX02066), and the International Science and Technology Cooperation Program of Shaanxi Research and Development Plan (2019KWZ-08).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Liu, J., Jiang, YL., Wang, XL. et al. Waveform relaxation for fractional sub-diffusion equations. Numer Algor 87, 1445–1478 (2021). https://doi.org/10.1007/s11075-020-01014-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-01014-4

Keywords

- Waveform relaxation

- Fractional sub-diffusion equations

- Superlinear convergence

- Windowing technique

- Parallelism