Abstract

We show that a direct numerical computation of the coefficients of any method based on the exponential fitting is possible. This makes unnecessary the knowledge of long sets of analytical expressions for the coefficients, as usually presented in the literature. Consequently, the task of any potential user for writing his/her own code becomes much simpler. The approach is illustrated on the case of the Numerov method for the Schrödinger equation, on a version for which the analytic expressions of coefficients are not known.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Exponential fitting (ef for short) is a mathematical procedure for generating numerical methods for various operations (solution of differential or integral equations, numerical differentiation, quadrature, least-square approximation, interpolation, etc.) on functions with a pronounced oscillatory or hyperbolic variation. The typical condition for generating the coefficients of such methods is that the method must be exact for a set of functions including exponential functions.

The literature is very vast, see, e.g., [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17] and references therein, but in the large majority of papers the efforts were directed on the analytic determination of the coefficients. However, the resulting expressions are often so long and cumbersome that their use becomes a difficult task for any potential reader who wants to apply such methods. Attempts of reducing the number of the expressions do also exist, e.g., in [18], but for a potential user the problem continues to remain quite complicated.

As a matter of fact, the need for analytic expressions was important especially in the early stages of the field when properties of the new ef versions, for example the stability properties of solvers of differential equations, were better seen on analytic expressions. At present, however, the main interest consists in applications such that by now only the numerical determination of the coefficients is sufficient.

In this paper, we consider the problem from this perspective. The need of analytic expressions, which in many papers fill pages and pages of formulas, becomes redundant. Only the generating system of equations has to be mentioned, and everything after that is done numerically by using a dedicated subroutine.

We illustrate this on the case of the Numerov method for the Schrödinger equation.

2 Numerov method

This is to solve the Schrödinger equation

where V (x), called the potential, is a given function, and E, the energy, is a constant. Two problems are usually considered: (i) initial value problem, with initial values y(a) and \(y^{\prime }(a)\), with unique solution for any E, and (ii) eigenvalue problem where conditions at the two ends are given, \( \alpha _{1}y(a)+{\upbeta }_{1} y^{\prime }(a)=0\) and \( \alpha _{2}y(b)+{\upbeta }_{2} y^{\prime }(b)=0\). Here the weights have to satisfy the conditions |αi| + |βi|≠ 0,i = 1,2. The eigenvalues and their eigenfunctions have to be computed in this case.

Let us introduce an equidistant partition of [a,b] with the step h: xi = a + ih,i = 0,1,2,...,imax; Of course, \( x_{i_{max}}=b\). The Numerov method links the numerical values of y at three consecutive points,

where fi = V (xi) − E. It allows computing yi+ 1 in terms of yi− 1 and yi (forwards propagation) or yi− 1 in terms of yi and yi+ 1 (backwards propagation).

In the frame of the exponential fitting, four different versions of this method (schemes), denoted Sm,m = 0,1,2,3, are available, see [1, 19]. In each, the coefficients a1,b0, and b1 are known in analytic form but there is one restriction. While in principle each scheme depends on three parameters, Z1,Z2,Z3, of the form S(Z1,Z2,Z3), in the existing publications, the maximal number of parameters that can be different is only two: one is 0 and the other, Z, allowed to be not vanishing. Specifically, the existing schemes are: S0 = S(0,0,0) (classical), S1 = S(0,0,Z), S2 = S(0,Z,Z), and S3 = S(Z,Z,Z).

A scheme where all three parameters Z1,Z2 and Z3 are different can be also derived in analytic form but an accurate numerical procedure to compute its coefficients is available.

The theory behind this procedure is presented in [20]. In that paper, the following system of linear algebraic equations is examined:

written compactly as Fv = B, where Fkn = Fn(Zk) and Bk = B(Zk). We see that a set of N + 1 functions, Fn(Z),n = 1,2,⋯ ,N and B(Z), and of N arguments Zk,k = 1,2,⋯ ,N, are involved. Each column n of matrix F contains one and the the same function Fn and each row k has one and the same argument Zk. The latter holds true also for the column vector B.

The numerical problem with this system is that any standard numerical method to solve it meets difficulties when some arguments are close together. For example, the first two equations coincide if Z1 = Z2 and then the determinant of the system vanishes. To solve this type of difficulty, authors of [20] develop a regularization procedure for the system. The numerical solution of the resulting regularized system is free of difficulties such that its results are uniformly accurate irrespective of how close or separated are the arguments. A code is also available. This is Fortran subroutine REGSOLV (double precision arithmetics) in the CD attached to book [1].

The approach developed in [20] and the associate REGSOLV are rather general, and many existing investigations on ef methods lead to the need of solving systems of this form. For the Numerov method, the system for computing the three coefficients consists of three equations:

see [20]. For the set η− 1(Z),η0(Z),η1(Z),⋯ see the ??.

To build up this system, we associate to the Numerov algorithm (2) the functional

and ask that this is identically vanishing if y(x) is any of the six functions

where μk may be either real or purely imaginary. We get

for each k. By expressing the expression in the curly brackets in terms of η− 1,

system (4) results. We have preferred to write the system in terms of η functions because, on one hand, REGSOLV works for real arguments and functions and therefore, if μkh were used as arguments, a system in complex arithmetics would result and, on the other hand, this subroutine asks for the expressions of the derivatives of the involved Fn and B, and these are very short if written in terms of the η functions, see again the ??.

By solving this system with REGSOLV, the computed coefficients are highly accurate. Their errors are quite often within the round-off limits. Thus, for example, if we put here Z1 = Z2 = Z3 = 0, we reobtain the numerical values of the coefficients of the classical S0. In the following, the scheme S(Z1,Z2,Z3) with the coefficients computed numerically will be denoted \(S^{*}_{3}\).

The expressions of the leading term of local truncation error, denoted lte, are known for versions Sm,m = 0,1,2,3. As for the new \(S^{*}_{3}\), we follow the general theory, see, e.g., [1, 19].

The point is that the functions in (6) are six linear independent solutions of the sixth order differential equation Dy(x) = 0, where

This means that, if the coefficients are those resulting from solving the generating system (4), then functional \({\mathcal L}\) of (5) can be written as a product of the form

where the factor A must be determined. To do this, we consider the lowest power function for which \({\mathcal L}\) is nonvanishing. This is y(x) = 1 in our case. Equation (5) gives \({\mathcal L} [h,a_{1},b_{0},b_{1}] 1=2+a_{1}\) and, since \(D 1= -{\mu _{1}^{2}}{\mu _{2}^{2}}{\mu _{3}^{2}}\), we obtain

such that finally the lte of \(S^{*}_{3}\) at xi is

The numerical computation of the middle factor may meet difficulties for small |Zk| because this factor is of the form 0/0 if Zk = 0. A direct procedure for determining approximate but reliable values is as follows. To each Zk, we associate

and solve (4) for this set. If \(a^{\prime }_{1}\), \(b^{\prime }_{1}\), and \(b^{\prime }_{2}\) are the resulting coefficients then the expression \((2+a^{\prime }_{1})/Z^{\prime }_{1}Z^{\prime }_{2}Z^{\prime }_{3}\) produces values which are exact in at least three figures for any Z1,Z2,Z3.

To conclude, the new \(S^{*}_{3}\) remains of the same order (four) as any other scheme of this method but a gain in accuracy is expected from the last factor in the lte provided Zk parameters are advantageously selected.

Remark 1

An extension of REGSOLV is also presented in book [1]. This is subroutine REGSOLV2 which solves a system consisting of two blocks:

where functions \({F^{i}_{n}} ,B^{i}, i=1,2\) and their derivatives have to be furnished by the user. This opens the possibility of solving a substantially larger class of problems related to the ef approach. In particular, for the Numerov method, we may address the problem of generating the most general set of coefficients consistent with the set (6). Specifically, the functional to be addressed is now

instead of (5). On asking that this is identically vanishing for the pair e±μx, we get

where

The set of two equations E±z = 0 is now expressed in terms of the eta functions of argument Z = z2. The following equivalent pair results:

All together, the five coefficients consistent with the set of six functions (6) satisfy the system of six linear equations G±(Zk) = 0,k = 1,2,3, but, to determine them numerically, one of these equations must be disregarded. For example, if we choose disregarding G−(Z3) = 0, the following system of five equations and of form (13) remains to be solved:

As expected, if a2 = 1 and b0 = b2, as tacitly assumed in (4), system (15) reduces to (4). By solving (15) with REGSOLV2, the values furnished by REGSOLV for (4) and the additional relations a2 = 1 and b0 = b2 are reproduced with an absolute error which is typically within the round-off limits. As an extra check, we can examine if the disregarded equation G−(Z3) = 0 is also satisfied for the computed coefficients, to find out that this is indeed confirmed within the same accuracy.

3 A numerical illustration

We take the case of the eigenvalue problem for the Schrödinger equation (1) with the Coffey-Evans potential

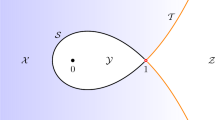

with β = 25, see Fig. 1. The domain is [a = 0,b = π/2] and the boundary conditions are y(0) = y(π/2) = 0. As a matter of fact, in the literature related to this problem, e.g., [22, 23], the domain is [−π/2,π/2], and various values for parameter β are considered. However, the domain and the value of β used by us are sufficient for this illustration.

Coffey-Evans potential function (16) with β = 25 on interval [0,π/2] and the three piecewise average values of it on each sector

We compare three schemes: S0,S3, and the new \(S^{*}_{3}\). The domain is divided in two sectors, [0,π/4] and [π/4,π/2], and the eigenvalues are located by shooting. A set of test values E ∈ [50,400] is considered and for each E the equation is propagated forwards with the starting values y0 = 0,y1 = h up to π/4 + h and backwards, with \(y_{i_{max}}=0, y_{i_{max}-1}=h\) for start, up to π/4. The values obtained at π/4 and π/4 + h from the two directions, denoted \({y^{f}_{1}}(E),{y^{f}_{2}}(E)\) and \({y^{b}_{1}}(E),{y^{b}_{2}}(E)\), respectively, are used to form the mismatch function

The eigenvalues are located as the zeros of this Δ(E).

Computation of the coefficients:

-

S0: This is the classical scheme. Its coefficients are a1 = − 2, b0 = 1/12, and b1 = 5/6.

-

S3: Let \({\bar V}^{f}\) and \({\bar V}^{b}\) be the average values of V (x) over the two sectors. Arguments \(Z^{f}= {(\bar V}^{f}-E)h^{2}\)/ \(Z^{b}= {(\bar V}^{b}-E)h^{2}\) are used in the forwards/backwards propagations with this S3. The coefficients are computed by the known analytic expressions, see [1, 2, 19].

-

\(S_{3}^{*} \): Each of the two sectors is divided into three equal subsectors, and let \({\bar V}^{f}_{k}\) / \({\bar V}^{b}_{k}\), (k = 1,2,3), be the corresponding average values (horizontal segments in Fig. 1). The three arguments Z1,Z2,Z3 are now \({Z^{f}_{k}}= ({\bar V}^{f}_{k}-E)h^{2}\) / \({Z^{b}_{k}}= ({\bar V}^{b}_{k}-E)h^{2}\) (k = 1,2,3), and the coefficients are computed by REGSOLV.

Notice that the computational effort is the same for S3 and \(S^{*}_{3}\): for each E they need only two computations of the coefficients, one for each direction.

In Table 1, we give the absolute errors in eigenvalues from the three schemes and different values of h. The reference eigenvalues, denoted Eexact, were computed by \(S^{*}_{3}\) at h = π/1024.

We see that, as expected, all schemes are of the same order, four. Indeed the errors typically decrease by a factor of around 16 when h is halved. What is also significant is that S3 does not improve the accuracy if compared with the classical S0. For contrast, the new \(S^{*}_{3}\) produces systematically better results than the other two schemes. The accuracy gain with \(S^{*}_{3}\) is of between one and two decimal figures if compared with the best placed from S0 and S3.

Our results suggest that, more general, the use of ef-based versions with one and the same value for the parameters (that is, with Z1 = Z2 = Z3) does not automatically lead to an improvement in accuracy for potentials with a pronounced variation. This holds true only for potentials that are reasonably well approximated by a step function, as is the case of the Woods-Saxon potential which is frequently used in the literature. Otherwise, using three different Zk represents a good idea.

These remarks do not restrict to the Numerov method. For any other method based on exponential fitting, the choice of sets with different values for the parameters, instead of a single one, will add more flexibility and therefore more chances for accuracy gain. The total number of parameters may vary from one method to another. Anyway, in any such situation, the use of the numerical solvers REGSOLV or REGSOLV2 for the coefficients will continue to play a central role.

Also worth adding is that the way how the values of the parameters should be advantageously selected remains open at this stage, especially for second-order differential equations of general form \(y^{\prime \prime }=f(x,y)\).

4 Conclusions

We have considered the Numerov method to introduce the new scheme \(S^{*}_{3}\) which is more flexible than the existing ones and includes them as particular cases. The important feature is that now the coefficients are computed numerically, not by analytical expressions, as before, and the numerical values obtained in this way are practically as accurate as those from analytic expressions. Fortran 95 subroutine REGSOLV was used for this purpose. Subroutine REGSOLV2 can be also used according to the case. The major advantage is that now the knowledge of the analytic expressions of the coefficients and their series expansions, which usually consists in sets of lengthy formulas, is no more needed. Only the generating system, as are (4) or (15) for the Numerov method, has to be formulated and communicated to the potential readers.

References

Ixaru, L.Gr., Vanden Berghe, G.: Exponential Fitting. Kluwer Academic Publishers, Dordrecht/Boston/London (2004)

Paternoster, B.: Present state-of-the-art in exponential fitting. A contribution dedicated to Liviu Ixaru on his 70-th anniversary. Comput. Phys. Commun. 183, 2499–2512 (2012)

Calvo, M., Franco, J.M., Montijano, J.I., Randez, L.: Explicit Runge-Kutta methods for initial value problems with oscillating solutions. J. Comput. Appl. Math. 76, 195–212 (1996)

Dai, Y., Wang, Z., Wu, D.: A four-step trigonometric fitted P-stable Obrechkoff method for periodic initial-value problems. J. Comput. Appl. Math. 187, 192–201 (2006)

Franco, J.M.: Exponentially fitted symplectic integrators of RKN type for solving oscillatory problems. Comput. Phys. Commun. 177, 479–492 (2007)

Kim, K.J., Cools, R.: Extended exponentially fitted interpolation formulas for oscillatory functions. Appl. Math. Comput. 224, 178–195 (2013). https://doi.org/10.1016/j.amc.2013.08.039

Conte, D., Paternoster, B.: Modified Gauss–Laguerre exponential fitting based formulae. J. Sci. Comput. 69, 227–243 (2016). https://springerd.bibliotecabuap.elogim.com/journal/10915

Cardone, A., D’Ambrosio, R., Paternoster, B.: Exponentially fitted IMEX methods for advection–diffusion problems. J. Comput. Appl. Math. 316, 100–108 (2017)

Ngwane, F.F., Jator, S.N.: A trigonometrically fitted block method for solving oscillatory second-order initial value problems and hamiltonian systems. International Journal of Differential Equations Article ID 9293530. https://doi.org/10.1155/2017/9293530 (2017)

D’Ambrosio, R., Moccaldi, M., Paternoster, B.: Adapted numerical methods for advection-reaction-diffusion problems generating periodic wavefronts. Comput. Math. Appl. 74, 1029–1042 (2017)

Cardone, A., D’Ambrosio, R., Paternoster, B.: High order exponentially fitted methods for Volterra integral equations with periodic solution. Appl. Num. Math. 114, 18–29 (2017). http://www.sciencedirect.com/science/journal/01689274

Medvedev, M.A., Simos, T.E., Tsitouras, C.: Fitted modifications of Runge-Kutta pairs of orders 6(5). Math Meth. Appl. Sci. 41, 6184–6194 (2018). https://doi.org/10.1002/mma.5128

Franco, J.M., Randez, L.: A class of explicit high-order exponentially-fitted two-step methods for solving oscillatory IVPs. J. Comput. Appl. Math. 342, 210–224 (2018)

Fang, Y., Yang, Y., You, X.: Fitted two-step hybrid methods for the resonant state of the Schrodinger equation. Int. J. Mod. Phys. C 29Art. No 1850055 (2018)

Zahra, W.K., Nasr, M.A., Van Daele, M.: Exponentially fitted methods for solving time fractional nonlinear reaction-diffusion equation. Appl. Math. Comput. 358, 468–490 (2019)

Xu, M., Simos, T.E.: A multistage two-step fraught in phase scheme for problems in mathematical chemistry. J. Math. Chem. 57, 1710–1731 (2019). https://doi.org/10.1007/s10910-019-01033-0

Zhao, Z., Luo, J., Lin, C.-L., Simos, T.E.: Full in phase finite difference algorithm for differential equations in quantum chemistry. J. Math. Chem. 58, 1197–1218 (2020). https://doi.org/10.1007/s10910-020-01125-2

Ixaru, L.Gr.: Exponential and trigonometrical fittings: user-friendly expressions for the coefficients, vol. 82 (2019)

Ixaru, L.Gr.: Operations on oscillatory functions. Comput. Phys. Commun. 105, 1–19 (1997)

Ixaru, L.Gr., De Meyer, H., Vanden Berghe, G., Van Daele, M.: A regularization procedure for \({\sum }_{i=1}^{n} f_{i}(z_{j})x_{i}=g(z_{j}); (j = 1, 2,\cdots , n)\). J. of Linear Algebra 3, 81–90 (1996)

Ixaru, L.Gr.: Numerical Methods for Differential Equations and Applications. Reidel, Dordrecht-Boston-Lancaster (1984)

Child, M.S., Chambers, A.V.: Persistent accidental degeneracies for the Coffey-Evans potential. J. Phys. Chem. 92, 3122–3124 (1988)

Marletta, M.: Certification of algorithm 700 numerical tests of the SLEIGN software for Sturm-Liouville problems. ACM Trans. Math. Softw. 17, 481–490 (1991)

Conte, D., Esposito, E., Paternoster, B., Ixaru, L.Gr.: Some new uses of the ηm(z) functions. Comput. Phys. Commun. 181, 128–137 (2010)

Acknowledgments

This research was supported in the frame of contract PN 18090101/ 2018 with the Romanian Ministry of Research and Innovation.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Eta functions η− 1(Z),η0(Z),η1(Z),... are defined as follows [1, 19, 21]:

The first two are

In some papers, function η− 1(Z) is denoted ξ(Z).

Functions ηm(Z) with m > 0 are generated by recurrence

if Z≠ 0, and by following values at Z = 0 :

Some useful properties are:

Series expansion:

Asymptotic behavior at large |Z| :

Differentiation properties:

The latter is important in subroutine REGSOLV for solving (3) because there the expressions of the derivatives of functions Fn(Z),n = 1,2,⋯ ,N and B(Z) are also requested.

The expressions needed in system (4) for these functions and their derivatives are:

Codes for the computation of the eta functions are available: subroutines GEBASE and GEBASEV (fortran 95, double precision arithmetic) in the compact disk attached to book [1]; see also formConv (mathematica) in [24].

For solving (4), we have used derivatives up to mmax = 30 with eta functions computed by GEBASEV.

Rights and permissions

About this article

Cite this article

Ixaru, L.G. Numerical computation of the coefficients in exponential fitting. Numer Algor 87, 1097–1106 (2021). https://doi.org/10.1007/s11075-020-01000-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-01000-w