Abstract

The aim of this work is to analyze the mean-square convergence rates of numerical schemes for random ordinary differential equations (RODEs). First, a relation between the global and local mean-square convergence order of one-step explicit approximations is established. Then, the global mean-square convergence rates are investigated for RODE-Taylor schemes for general RODEs, Affine-RODE-Taylor schemes for RODEs with affine noise, and Itô-Taylor schemes for RODEs with Itô noise, respectively. The theoretical convergence results are demonstrated through numerical experiments.



1 Introduction

Random ordinary differential equations (RODEs) are ordinary differential equations (ODEs) that include a stochastic process in their vector field. More precisely, let \((\varOmega , \mathcal {F}, \mathbb {P})\) be a probability space and let \(\eta : [0, T] \times \varOmega \to \mathbb {R}^{m}\) be an \(\mathbb {R}^{m}\)-valued stochastic process with Hölder continuous sample paths. A RODE in \(\mathbb {R}^{d}\),

$$ \frac{\mathrm{d} y}{\mathrm{d} t} = f(y, \eta_{t}), \qquad y \in \mathbb{R}^{d}, $$(1)

is essentially a nonautonomous ODE

$$ \frac{\mathrm{d} y}{\mathrm{d} t} = f_{\omega}(t, y) := f(y, \eta_{t}(\omega)) $$(2)

for almost all realizations ω ∈ Ω. Therefore, RODEs can be analyzed pathwise with deterministic calculus, but they require further treatment beyond classical ODE theory. Although the rules of deterministic calculus apply pathwise to RODEs for a fixed sample path, the vector field function in (2) is not smooth in its temporal variable. In fact, it is at most Hölder continuous in time when the driving stochastic process ηt is Hölder continuous, and it thus lacks the smoothness needed to justify the usual Taylor expansions and the error analysis of traditional numerical methods for ODEs. Such methods can still be used (see, e.g., [5, 11]), but they attain at best a low, fractional convergence order.

Similar to stochastic ordinary differential equations (SODEs), higher order numerical schemes for RODEs can be developed using Taylor-like expansions derived by iterated applications of the proper chain rule in the integral form (see e.g., [1,2,3,4, 8,9,10,11,12]). The mean-square convergence of numerical solutions of ODEs with a random initial value is discussed in [7]. The mean-square order for a class of backward stochastic differential equations was investigated in [6]. The pathwise convergence of numerical schemes for RODEs has received much attention (see, e.g., [3, 9, 10, 12]), as the numerical calculations of the approximating random variables are carried out path by path. However, there is no existing result on the mean-square convergence of numerical schemes for RODEs. In fact, the pathwise convergence rate does not imply the same mean-square convergence rate in general.

The goal of this work is to investigate the mean-square convergence rates of numerical schemes for various types of RODEs with different structures and noise. The mean-square order of convergence, also referred to as the strong order, is an important convergence index for numerical schemes of stochastic systems. A key criterion to estimate the mean-square convergence order of numerical approximations for SODEs was presented in [14, p. 12], where a fundamental convergence theorem was established for the mean-square convergence order of a method based on properties of its one-step approximation. Here, we follow a similar idea to that used in [14, p. 12] and establish a general mean-square convergence theorem for numerical solutions of the RODE (1). In particular, we develop the relation between the local mean-square convergence order and the global mean-square convergence order of a general one-step approximation for the RODE (1) (see Theorem 1 below).

The paper is organized as follows. First, a generic theorem on the mean-square convergence order of one-step approximations for RODEs is presented in Section 2. The theorem is then applied to establish the global mean-square convergence order of various numerical schemes for RODEs. In particular, the global mean-square convergence orders of RODE-Taylor schemes for general RODEs are discussed in Section 3, those of affine-RODE-Taylor schemes for RODEs with affine noise in Section 4, and those of Itô-Taylor schemes for RODEs with Itô noise in Section 5. Numerical experiments are carried out in Section 6 to demonstrate the theoretical convergence results, and some closing remarks are provided in Section 7.

2 The mean-square convergence of a generic numerical approximation for RODEs

When the vector field function f of the RODE (1) is continuous in both of its variables and the sample paths of the noise process ηt are also continuous, the vector field function fω in the corresponding nonautonomous ODE (2) is continuous in both of its variables for each fixed ω ∈Ω. Therefore, classical existence and uniqueness theorems for ODEs can be applied pathwise.

Throughout this paper, it is assumed that:

-

(A1) The vector field function \(f: \mathbb {R}^{d} \times \mathbb {R}^{m} \to \mathbb {R}^{d}\) is at least continuously differentiable in both of its variables and satisfies a one-sided Lipschitz condition, i.e., there exists a constant \(L \in \mathbb {R} \) such that:

$$ \langle f(x, w) - f(y, w), x - y \rangle \leq L |x - y|^{2}, \qquad \forall x, y \in \mathbb{R}^{d}, \quad w \in \mathbb{R}^{m}; $$

-

(A2) There exist constants \(a, b \in \mathbb {R}\) and \(p \in \mathbb {N}\) such that:

$$ |f(y, w)|^{2} \leq a |w|^{p}+b |y|^{2}, \qquad \forall y \in \mathbb{R}^{d}, \quad w \in \mathbb{R}^{m}; $$

-

(A3) The noise process ηt is Hölder continuous with finite p-th moments, i.e., there exists Mη = Mη(T) > 0 such that \(\mathbb {E}[|\eta _{t}|^{p}] < {M_{\eta }}\) for all t ∈ [0, T].

Here and in the rest of the paper, |⋅| denotes the Euclidean norm of a vector or a matrix and 〈⋅,⋅〉 denotes the dot product on \(\mathbb {R}^{d}\).

Given any initial condition \(y(t_{0}) = y_{0} \in {\mathcal{L}}^{2}(\varOmega )\), Assumption (A1) ensures the existence of a unique solution y(t;t0, y0) of (1) for all future time t ≥ t0 (see, e.g., [4, 16]). More generally, for any s ≥ t0 and \(\xi \in \mathbb {R}^{d}\), let y(t;s, ξ) be the solution for (1) satisfying y(s) = ξ.

We consider a general numerical method to approximate the exact solution y(t;t0, y0) of (1). To start with, we assume a uniform partition on [t0, T] with partition size \(h = \frac {T- t_{0}}{N}\), and denote:

$$ t_{n} = t_{0} + n h, \qquad n = 0, 1, \cdots, N. $$

Let \(\varPhi^{(h)}\) be a one-step numerical scheme with step size h and, for n = 0, 1, ⋯, N − 1, let yn+1 be the one-step approximation of y(tn+1;t0, y0):

$$ y_{n+1} = \varPhi^{(h)}(t_{n}, y_{n}), \qquad n = 0, 1, \cdots, N-1. $$(3)

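Denote by \(\mathbb {E}\) the expectation with respect to the probability measure \(\mathbb {P}\). Then, the local mean-square error (MSE) for the approximation (3) reads

$$ \mathcal{E}_{n+1}^{L} = \left(\mathbb{E}\left|y(t_{n+1}; t_{n}, y_{n}) - y_{n+1}\right|^{2}\right)^{1/2}, $$

and the global MSE for the approximation (3) reads

$$ \mathcal{E}_{n+1}^{G} = \left(\mathbb{E}\left|y(t_{n+1}; t_{0}, y_{0}) - y_{n+1}\right|^{2}\right)^{1/2}. $$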

The main goal of this section is to establish the relation between \(\mathcal {E}_{n+1}^{G}\) and \(\mathcal {E}_{n+1}^{L}\). In particular, we show that \(\mathcal {E}_{n+1}^{G}\) is one order (of the step size h) lower than \(\mathcal {E}_{n+1}^{L}\), presented in the following theorem.

Theorem 1

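Assume that Assumptions (A1)–(A3) hold, and let h ∈ (0,1]. If, for some C > 0 and γ > 0, the local MSE satisfies the estimate

$$ \mathcal{E}_{n+1}^{L} \leq C h^{\gamma + 1}, \qquad n = 0, 1, \cdots, N-1, $$(4)

then, for sufficiently small h, there exists a positive constant K (independent of h) such that the global MSE satisfies the estimate

$$ \mathcal{E}_{n+1}^{G} \leq K h^{\gamma}, \qquad n = 0, 1, \cdots, N-1. $$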

Remark 1

Theorem 1 states that the global mean-square convergence rate of any one-step approximation for RODEs is one order less than the local mean-square convergence rate. This result is consistent with the pathwise convergence rate for RODEs [9]. The local MSE condition (4) is parallel to those used in Milstein’s convergence theorem for SODEs [14, p. 12] and our result generalizes the deterministic case (ODEs) to the pathwise random case (RODEs).
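In practice, statements such as Theorem 1 are checked by computing root-mean-square errors for a sequence of step sizes and reading off the order as the slope of a log-log least-squares fit. The Python/NumPy sketch below shows that bookkeeping. The helper names `rms_error` and `empirical_order` are illustrative, and the synthetic data merely mimic local errors of order γ + 1 and global errors of order γ.

```python
import numpy as np

def rms_error(exact, approx):
    """Monte Carlo estimate of (E|exact - approx|^2)^{1/2}; rows are independent samples."""
    diff = np.asarray(exact, dtype=float) - np.asarray(approx, dtype=float)
    diff = diff.reshape(diff.shape[0], -1)          # flatten the state dimensions per sample
    return float(np.sqrt(np.mean(np.sum(diff**2, axis=1))))

def empirical_order(step_sizes, errors):
    """Slope of log(error) against log(h); approximates gamma if error ~ K * h**gamma."""
    log_h = np.log(np.asarray(step_sizes, dtype=float))
    log_e = np.log(np.asarray(errors, dtype=float))
    slope, _intercept = np.polyfit(log_h, log_e, 1)
    return float(slope)

# Synthetic illustration: local errors ~ h^(gamma+1), global errors ~ h^gamma.
hs = np.array([2.0**-k for k in range(3, 8)])
gamma = 0.75
local = 0.3 * hs**(gamma + 1)
global_ = 1.7 * hs**gamma
print(empirical_order(hs, local), empirical_order(hs, global_))
```

With real simulations, the fitted slope is only an estimate: Monte Carlo noise and pre-asymptotic effects at large h can bias it, so a range of small step sizes is advisable.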

The proof of Theorem 1 is based on a sequence of lemmas stated below. In particular, Lemma 1 provides a sequence of mean-square estimates of the true solution y(tn+ 1;tn, ξ) given y(tn;t0, y0) = ξ, Lemma 2 presents a discrete Gronwall-type inequality for iterated difference inequalities, and Lemma 3 provides a mean-square upper bound for the numerical solution yn. In the sequel, K denotes a generic constant that may depend on a, b, L, and Mη, but not on h, and that may change from line to line.

Lemma 1

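For any ξ ∈ L²(Ω), ζ ∈ L²(Ω) and h < 1, there exists K = K(a, b, Mη) > 0 such that the following inequalities hold for every n = 0, 1, ⋯, N − 1:

(i) \(\mathbb{E}|y(t_{n+1}; t_{n}, \xi)|^{2} \leq K (1 + \mathbb{E}|\xi|^{2})\);

(ii) \(\mathbb{E}|y(t_{n+1}; t_{n}, \xi) - \xi|^{2} \leq K (1 + \mathbb{E}|\xi|^{2}) h^{2}\);

(iii) \(\left|\mathbb{E}\langle y(t_{n+1}; t_{n}, \xi), y(t_{n+1}; t_{n}, \xi) - \xi \rangle\right| \leq K (1 + \mathbb{E}|\xi|^{2}) h\);

(iv) \(\mathbb{E}|y(t_{n+1}; t_{n}, \xi) - y(t_{n+1}; t_{n}, \zeta)|^{2} \leq e^{2Lh}\, \mathbb{E}|\xi - \zeta|^{2}\).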

Proof

-

(i)

First, note that solutions to (1) satisfy the integral equation:

$$ y(t) = y(t_{n}) + {\int}_{t_{n}}^{t} f(y(s), \eta_{s})\mathrm{d} s $$

on every subinterval [tn, tn+1]. Thus,

$$y(t_{n+1}; t_n, \xi) = \xi + {\int}_{t_n}^{t_{n+1}} f(y(s; t_n, \xi), \eta_s)\mathrm{d} s. $$(5)

Writing f(y(s;tn, ξ), ηs) as f(y(s), ηs) for short, taking the expected squared norm of both sides of (5), and using the inequality \(|a + b|^{2} \leq 2|a|^{2} + 2|b|^{2}\), we have:

$$ \mathbb{E}|y(t_{n+1}; t_{n}, \xi)|^{2} \leq \displaystyle 2\mathbb{E}|\xi|^{2}+2\mathbb{E}\left|{\int}_{t_{n}}^{t_{n+1}} f(y(s), \eta_{s})\mathrm{d} s\right|^{2}. $$Then using the Cauchy-Schwarz inequality and assumptions (A2)–(A3), we obtain:

$$ \begin{array}{@{}rcl@{}} \mathbb{E}|y(t_{n+1}; t_{n}, \xi)|^{2} &\leq& 2\mathbb{E}|\xi|^{2} + 2h{\int}_{t_{n}}^{t_{n+1}} \mathbb{E}|f(y(s), \eta_{s})|^{2} \mathrm{d} s\\ &\leq& 2 \mathbb{E}|\xi|^{2} + 2 a M_\eta h^{2} + 2 b h {\int}_{t_{n}}^{t_{n+1}} \mathbb{E}|y(s)|^{2} \mathrm{d} s. \end{array} $$Applying Gronwall’s inequality to the above inequality gives:

$$ \mathbb{E}|y(t_{n+1}; t_n, \xi)|^2 \leq 2 e^{2 b h^2} \left(a M_\eta h^2 + \mathbb{E}|\xi|^2 \right) \leq 2 e^{2b} \max\{a M_\eta, 1\} \cdot (1 + \mathbb{E}|\xi|^2). $$(6)

-

(ii)

Using the (5), the Cauchy-Schwarz inequality and Assumptions (A2)–(A3), we have:

$$ \begin{array}{@{}rcl@{}} \mathbb{E}|y(t_{n+1}; t_{n}, \xi) - \xi|^{2} &\leq& h{\int}_{t_{n}}^{t_{n+1}}\mathbb{E}|f(y(s), \eta_{s})|^{2} \mathrm{d} s \\ &\leq& a M_\eta h^{2} + 2 b h {\int}^{t_{n+1}}_{t_{n}} \left(\mathbb{E}|y(s) - \xi|^{2} + \mathbb{E}|\xi|^{2}\right) \mathrm{d} s \\ & =& (a M_\eta + 2b\, \mathbb{E}|\xi|^{2}) h^{2} + 2bh {\int}_{t_{n}}^{t_{n+1}} \mathbb{E}|y(s) - \xi|^{2} \mathrm{d} s, \end{array} $$where we used \(|y(s)|^{2} \leq 2|y(s) - \xi|^{2} + 2|\xi|^{2}\). Applying Gronwall's lemma to the above inequality gives:

$$ \mathbb{E}|y(t_{n+1}; t_{n}, \xi) - \xi|^{2} \leq (a M_\eta + 2b\, \mathbb{E}|\xi|^{2}) h^{2} e^{2 b h^{2}} \leq \max\{a M_\eta, 2b\} e^{2b} \cdot (1 + \mathbb{E}|\xi|^{2}) h^{2}. $$(7)

-

(iii)

Using Jensen's inequality, Hölder's inequality, and inequalities (6) and (7), we obtain:

$$ \begin{array}{@{}rcl@{}} \left|\mathbb{E} \langle y(t_{n+1}; t_{n}, \xi), y(t_{n+1}; t_{n}, \xi)-\xi \rangle \right| &\leq& \mathbb{E} |\langle y(t_{n+1}; t_{n}, \xi), y(t_{n+1}; t_{n}, \xi)-\xi \rangle | \\ &\leq& \left(\mathbb{E}|y(t_{n+1}; t_{n}, \xi)|^{2}\right)^{1/2} \cdot \left(\mathbb{E}|y(t_{n+1}; t_{n}, \xi)-\xi|^{2}\right)^{1/2}\\ &\leq& \sqrt{2 \max\{a M_\eta, 1\} \max\{a M_\eta, 2b\}}\; e^{2b} \cdot (1+\mathbb{E}|\xi|^{2})h. \end{array} $$

-

(iv)

For any ξ, ζ ∈ L2(Ω), let y(t;tn, ξ) and y(t;tn, ζ) be the two solutions to (1) with initial conditions y(tn) = ξ and y(tn) = ζ, respectively. Then, it follows directly from (1) that:

$$ \frac{\mathrm{d}}{\mathrm{d} t} \left(y(t; t_{n}, \xi) - y(t; t_{n}, \zeta) \right) = f(y(t; t_{n}, \xi), \eta_{t}) -f(y(t; t_{n}, \zeta), \eta_{t}). $$Taking the dot product of the above equation with y(t;tn, ξ) − y(t;tn, ζ) gives:

$$ \frac{\mathrm{d} }{\mathrm{d} t}\left|y(t; t_{n}, \xi) - y(t; t_{n}, \zeta)\right|^{2} = 2 \langle f(y(t; t_{n}, \xi), \eta_{t}) -f(y(t; t_{n}, \zeta), \eta_{t}), y(t; t_{n}, \xi) - y(t; t_{n}, \zeta)\rangle $$Then, by Assumption (A1),

$$\frac{\mathrm{d} }{\mathrm{d} t}\left|y(t; t_n, \xi) - y(t; t_n, \zeta)\right|^2 \leq 2 L \left|y(t; t_n, \xi) - y(t; t_n, \zeta)\right|^2. $$(8)

Applying Gronwall's inequality to (8) on [tn, tn+1], we obtain:

$$\left|y(t_{n+1}; t_{n}, \xi) - y(t_{n+1}; t_{n}, \zeta)\right|^{2} \leq e^{2L h} \left|\xi - \zeta \right|^{2},$$which implies immediately

$$\mathbb{E} \left|y(t_{n+1}; t_{n}, \xi) - y(t_{n+1}; t_{n}, \zeta)\right|^{2} \leq e^{2L h} \mathbb{E} \left|\xi - \zeta \right|^{2}.$$The proof is complete.

□

Lemma 2

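[15, p. 7] Suppose that, for an arbitrary \(N \in \mathbb {N}\) and \(h = (T - t_{0})/N\), we have:

$$ u_{n+1} \leq (1 + \nu h)\, u_{n} + \delta h^{r}, \qquad n = 0, 1, \cdots, N-1, $$

where ν > 0, δ ≥ 0, r ≥ 1, and un ≥ 0 for all n = 0, 1, ⋯, N. Then

$$ u_{n} \leq e^{\nu (T - t_{0})} u_{0} + \frac{\delta}{\nu}\left(e^{\nu (T - t_{0})} - 1\right) h^{r-1}, \qquad n = 0, 1, \cdots, N. $$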
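The mechanism behind Lemma 2 can be checked numerically. The sketch below assumes the standard form of this discrete Gronwall lemma: if u_{n+1} ≤ (1 + νh)u_n + δh^r for n = 0, …, N − 1 with h = T/N (here T stands for the interval length), then u_n ≤ e^{νT}u_0 + (δ/ν)(e^{νT} − 1)h^{r−1}. It iterates the worst case (equality) and compares it with that bound; the function names are illustrative. The loss of one power of h in the bound is what turns local error estimates into global ones.

```python
import math

def iterate_worst_case(u0, nu, delta, r, T, N):
    """Iterate u_{n+1} = (1 + nu*h) * u_n + delta * h**r with equality (the worst case)."""
    h = T / N
    u = [u0]
    for _ in range(N):
        u.append((1.0 + nu * h) * u[-1] + delta * h**r)
    return u

def gronwall_bound(u0, nu, delta, r, T, N):
    """Discrete Gronwall bound e^{nu T} u0 + (delta/nu)(e^{nu T} - 1) h^{r-1}."""
    h = T / N
    growth = math.exp(nu * T)
    return growth * u0 + (delta / nu) * (growth - 1.0) * h ** (r - 1)

for N in (10, 100, 1000):
    u = iterate_worst_case(u0=0.0, nu=2.0, delta=1.0, r=3, T=1.0, N=N)
    # the worst-case iterate stays below the bound, and both decay like h^(r-1) = h^2
    print(N, max(u), gronwall_bound(0.0, 2.0, 1.0, 3, 1.0, N))
```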

Lemma 3

Let assumptions (A1)–(A3) and the local mean-square error estimate (4) hold. Then, there exists K = K(a, b, L, Mη, T) > 0 such that:

Proof

First notice that \(\mathbb {E}|y_{n}|^{2} < \infty \) for every n = 1, 2, ⋯, N, provided \(\mathbb {E}|y_{0}|^{2} < \infty \). Indeed, if \(\mathbb {E}|y_{k}|^{2} < \infty \) for every k = 0, ⋯, n, then by the local MSE estimate (4) and Lemma 1-(i):

It then follows from induction that \(\mathbb {E}|y_{n}|^{2} < \infty \) for all n = 0,⋯ , N.

It is straightforward to check that the assertion (9) holds for n = 0. To estimate \(\mathbb {E}|y_{n}|^{2}\) for n ≥ 1, we split yn as:

For simplicity, here we write y(tn;tn− 1, yn− 1) = y(tn) when there is no confusion. Then, taking the dot product of (10) with yn gives:

We next estimate the expectation of the terms on the right-hand side of the above equation.

By the local MSE estimation (4), one has:

From Lemma 1-(ii), we have that:

The Hölder inequality and the local MSE estimation (4) lead to:

By Lemma 1-(iii), we have that:

Finally, using the Hölder inequality and the estimations (12) and (13) above, we obtain:

Collecting estimations (11)–(14) and inserting into the expectation of (10), we have that:

in which K is independent of h. Taking into account Lemma 2, we obtain:

where K depends on T, a, b, Mη, but is independent of h. The proof is complete. □

We are now ready to prove Theorem 1 as follows.

Proof of Theorem 1

First, we split the difference between yn+ 1 = yn+ 1(tn, yn) and y(tn+ 1;t0, y0) as:

Noticing that y(tn+ 1;t0, y0) = y(tn+ 1;tn, y(tn;t0, y0)), (15) then becomes:

Taking the dot product of the above equation with yn+ 1 − y(tn+ 1;t0, y0) and then taking the expectation of the resulting relation gives:

By the local MSE assumption (4) and Lemma 3:

By Lemma 1-(iv),

Inserting inequalities (17)–(18) into (16), we obtain:

For sufficiently small h, there exists a positive constant ν > 0 such that

we then obtain

It then follows directly from Lemma 2 that

which implies that

where K is a constant depending on t0, T, L, and the distribution of ηt, but not on h. The proof is complete. □

2.1 Applications

As an introductory example, we first consider the explicit Euler scheme for the RODE (1):

$$ y_{n+1} = y_{n} + h f(y_{n}, \eta_{t_{n}}), \qquad n = 0, 1, \cdots, N-1, $$(19)

where ηt is assumed to be a fractional Brownian motion (fBm).
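To make the Euler scheme (19) and the error quantities of Theorem 1 concrete, here is a small Monte Carlo sketch in Python/NumPy. Its assumptions are not from the paper: the fBm is sampled exactly on the grid through a Cholesky factor of its covariance, the vector field is the illustrative choice f(y, w) = −y + sin w (globally Lipschitz, as in Theorem 2 below), and the exact solution is replaced by the Euler solution on a much finer grid driven by the same noise paths. The printed slope is an empirical estimate only.

```python
import numpy as np

def fbm_paths(H, T, n_steps, n_paths, rng):
    """Sample fBm on t_k = k*T/n_steps, k = 0..n_steps, via a Cholesky factor.

    Uses the standard covariance R(s, t) = 0.5 * (s^{2H} + t^{2H} - |t - s|^{2H}).
    """
    t = np.linspace(T / n_steps, T, n_steps)          # t = 0 excluded (zero variance)
    s_grid, t_grid = np.meshgrid(t, t, indexing="ij")
    cov = 0.5 * (s_grid ** (2 * H) + t_grid ** (2 * H)
                 - np.abs(t_grid - s_grid) ** (2 * H))
    chol = np.linalg.cholesky(cov)                    # cov = chol @ chol.T
    z = rng.standard_normal((n_paths, n_steps))
    paths = z @ chol.T                                # each row has covariance cov
    return np.hstack([np.zeros((n_paths, 1)), paths])  # prepend eta_0 = 0

def euler_rode(f, y0, eta, h):
    """Explicit Euler y_{n+1} = y_n + h f(y_n, eta_{t_n}); rows of eta are independent paths."""
    y = np.full(eta.shape[0], y0, dtype=float)
    for n in range(eta.shape[1] - 1):
        y = y + h * f(y, eta[:, n])
    return y

def vector_field(y, w):
    """Illustrative choice (not from the paper): f(y, w) = -y + sin(w)."""
    return -y + np.sin(w)

rng = np.random.default_rng(0)
H, T, y0, n_fine = 0.75, 1.0, 1.0, 2**9
eta_fine = fbm_paths(H, T, n_fine, n_paths=500, rng=rng)
y_ref = euler_rode(vector_field, y0, eta_fine, T / n_fine)  # fine-grid proxy for the exact solution

steps, errors = [], []
for k in range(2, 6):                                 # h = 1/4, ..., 1/32
    n = 2**k
    eta_coarse = eta_fine[:, :: n_fine // n]          # the same paths, sampled on the coarse grid
    y_n = euler_rode(vector_field, y0, eta_coarse, T / n)
    steps.append(T / n)
    errors.append(np.sqrt(np.mean((y_n - y_ref) ** 2)))  # Monte Carlo RMS error at t = T
order = np.polyfit(np.log(steps), np.log(errors), 1)[0]
print("empirical mean-square order:", round(order, 2))
```

Driving the coarse and fine solutions with the same fBm samples is essential; with independent noise paths the measured difference would not shrink with h. Theorem 2 only guarantees order H; as Remark 3 notes, particular equations and noise processes may show a faster empirical rate.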

Theorem 2

Let Assumptions (A2)–(A3) hold and, in addition, replace Assumption (A1) by

-

(A1\(^{\prime }\)) the function f is globally Lipschitz in both of its variables.

Then, the mean-square convergence order of the Euler scheme (19) for (1) is H, where H is the Hurst parameter of the fBm ηt.

Proof

We first estimate the local mean-square error of the scheme (19). Using the integral representation of the solution (5), we have:

Then by Cauchy-Schwarz inequality and Fubini’s Theorem, we have:

Write y(s;tn, yn) as y(s) in short when there is no confusion. To estimate the expectation inside the integral in the above inequality, write:

Then, by Hölder’s inequality and (20):

Due to Assumption (A1\(^{\prime }\)), there exists a positive constant Mf such that:

Following (22), we have:

Furthermore, by the interpolation approximation \(y_{s} = y_{n} + (s - t_{n}) f(y_{n}, \eta _{t_{n}})\) and Assumptions (A2) and (A3) we obtain:

where \(\mathcal {E}_{s}^{L} = \left (\mathbb {E} |y(s; t_{n}, y_{n}) - y_{n}|^{2}\right )^{1/2}\) for s ∈ (tn, tn+ 1), and K is independent of h. Then, it follows from (23) that:

Collecting estimates (24)–(26) and inserting them into (21) gives:

Since ηt is a fractional Brownian motion with Hurst parameter H ∈ (0,1], we have \(\mathbb {E} |\eta _{s} - \eta _{t_{n}}|^{2} = K |s - t_{n}|^{2H}\), and thus:

Also, because H ≤ 1, the inequality (27) implies:

and by Gronwall’s inequality again, we obtain:

Theorem 1 then implies that \(\mathcal {E}_{n+1}^{G} \sim \mathcal {O}(h^{\gamma })\) where γ = H. □
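The increment identity used in the proof above follows directly from the covariance of fBm. Assuming the standard normalization \(\mathbb{E}[\eta_{s}\eta_{t}] = R(s,t) := \frac{1}{2}\left(s^{2H} + t^{2H} - |t-s|^{2H}\right)\), for which the constant K equals 1, one has

$$ \mathbb{E}|\eta_{s} - \eta_{t}|^{2} = R(s,s) + R(t,t) - 2R(s,t) = s^{2H} + t^{2H} - \left(s^{2H} + t^{2H} - |t-s|^{2H}\right) = |t-s|^{2H}. $$

For a scaled fBm \(c\,\eta_{t}\), the same computation gives \(K = c^{2}\).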

Remark 2

Assumption (A1\(^{\prime }\)) is imposed for convenience of exposition and may be stronger than needed. In fact, our numerical experiments (see, e.g., Example 15) indicate that the conclusion of the theorem remains valid under conditions weaker than (A1\(^{\prime }\)).

Remark 3

The convergence order established in Theorem 2 is a worst-case rate that holds for the whole class of equations. Better convergence can be obtained for special equations, such as linear RODEs, or for RODEs driven by special noise processes. For example, when ηt is a Brownian motion, the Euler scheme (19) can achieve a convergence order of 1, or even higher for special functions f. This does not contradict the result proved in Theorem 2.

In the following sections, we apply Theorem 1 to estimate the global mean-square convergence rate for various schemes for a class of general RODEs, and for the special classes of Affine-RODEs with affine noise and Itô-RODEs with Itô diffusion noise.

3 RODE-Taylor schemes for general RODEs

In this section, we first introduce the general framework of RODE-Taylor schemes for the RODE (1), based on RODE-Taylor approximations, then investigate the order of mean-square convergence for RODE-Taylor schemes. Here, we adopt the same set of notations as those in [9] and [10] with slight modifications. For the reader’s convenience, we summarize the necessary preliminaries below.

3.1 Preliminaries

For any nonempty set A and any \(l, k \in \mathbb {N}\), denote by \(A^{l \times k}\) the set of all l × k matrices with entries in A. In particular, for i ≥ 1, denote by \(\mathbb {N}_{0}^{m \times i}\) the set of all m × i matrices with nonnegative integer entries. In addition, write \(\mathbb {N}_{0}^{m \times 0} = {\mathbb {N}_{0}^{0}}: = \{\emptyset \}\). Consider an element \(\mathfrak {a}=(\mathfrak {a}_{1}, \cdots , \mathfrak {a}_{i}) \in {\mathbb {N}_{0}^{m\times i}}\) with \(\mathfrak {a}_{j}={{(\mathfrak {a}_{j, 1}, \cdots , \mathfrak {a}_{j, m})^{\top }}} \in {{\mathbb {N}_{0}^{m}}}\) for j = 1, ⋯, i, represented as:

For such an \(\mathfrak {a} \in \mathbb {N}_{0}^{m \times i}\), define \(\iota (\mathfrak {a}): = i\).

Denote the set of all the above matrix-valued multi-indices by \(\mathfrak {A}_{m}:=\cup _{i=0}^{\infty } {\mathbb {N}_{0}^{m\times i}}\). Then, for any \(\mathfrak {a} \in \mathfrak {A}_{m} \backslash \{\emptyset \}\) with \(\iota (\mathfrak {a}) = i \geq 1\), we define:

In particular, for \(\mathfrak {a} = \emptyset \), define:

Given such a matrix valued index \(\mathfrak {a}\) with \(\iota (\mathfrak {a}) = i \geq 1\) and an m-dimensional stochastic process \(\eta _{t} = ({\eta _{t}^{1}}, \cdots , {\eta _{t}^{m}})\) with mutually independent component processes, define:

and the iterated integrals:

Then, \({I}_{t, s}^{\mathfrak {a}}\) is a random variable for every \(t, s \in \mathbb {R}\).

To derive an integral equation expansion of the solutions to the RODE (1), we introduce the vector spaces over \(\mathbb {R}\) of all smooth functions from \(\mathbb {R}^{d} \times \mathbb {R}^{m\times i}\) to \(\mathbb {R}^{d}\):

Given a matrix multi-index \(\mathfrak {a} \in \mathfrak {A}_{m}\) with \(\iota (\mathfrak {a}) = i \geq 1\) and a function \(\phi \in {\mathcal{H}}_{i}\) of the element \((y, w) \in \mathbb {R}^{d} \times \mathbb {R}^{m\times i}\) with the components:

define the linear differential operators \(\mathfrak {D}_{i}: {\mathcal{H}}_{i} \to {\mathcal{H}}_{i+1}\) by

and the \(\mathfrak {a}\)-derivative of ϕ with respect to w as

Let \({\mathcal{H}}: = \bigcup _{i = 0}^{\infty } {\mathcal{H}}_{i}\). Then the differential operator \(\mathfrak {D}_{i}\) can be generalized to \(\mathfrak {D}: {\mathcal{H}} \to {\mathcal{H}}\) by \(\mathfrak {D} \phi : = \mathfrak {D}_{i} \phi \) if \( \phi \in {\mathcal{H}}_{i}\) for some \(i \in \mathbb {N}_{0}\). In addition, define the iterated differential operator by:

For each \(p \in \mathbb {N}\) and t0 ≤ t ≤ s ≤ T, the integral equation expansion of the solution to the RODE (1) reads [9, 10]:

where \(\chi : \mathbb {R}^{d} \to \mathbb {R}^{d}\) is the identity function defined by

3.2 γ-RODE-Taylor schemes

Assume that the driving stochastic process \(\eta _{t} = ({\eta }_{t}^{1}, \cdots , {\eta }_{t}^{m})\) in the RODE (1) has Hölder continuous sample paths. More specifically, assume that:

-

(A4) For each j = 1, ⋯, m, there exist \(\mathfrak {b}_{j} \in (0, 1]\) and constants Mq > 0 such that the driving stochastic process \({\eta _{t}^{j}}\) satisfies:

$$\mathbb{E} \left({\eta}_{t}^{j} - {\eta}_{s}^{j}\right)^{2 q} \leq M_{q} \left|t - s \right|^{2 q \mathfrak{b}_{j}}, \qquad q = 1, 2, {\cdots} .$$

Remark 4

Due to the Kolmogorov Continuity Theorem, Assumption (A4) implies that \({{\eta }_{t}^{j}}\) has β-Hölder continuous paths for \(\beta \in (0, \mathfrak {b}_{j})\). In particular, \(\mathfrak {b}_{j} = \frac {1}{2}\) when \({\eta _{t}^{j}}\) is a Brownian motion and \(\mathfrak {b}_{j} = H\) if \({\eta }_{t}^{j}\) is a fractional Brownian motion with Hurst parameter H.
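For Brownian motion, Assumption (A4) can be verified explicitly: since \(W_{t} - W_{s} \sim \mathcal{N}(0, |t-s|)\), its even moments are \(\mathbb{E}(W_{t} - W_{s})^{2q} = (2q-1)!!\,|t-s|^{q}\), so (A4) holds with \(\mathfrak{b}_{j} = \frac{1}{2}\) and \(M_{q} = (2q-1)!!\). The Python sketch below compares this formula with a Monte Carlo estimate; the function names are illustrative.

```python
import numpy as np

def double_factorial_odd(q):
    """(2q-1)!! = 1 * 3 * 5 * ... * (2q-1); equals 1 for q = 0."""
    result = 1
    for k in range(1, 2 * q, 2):
        result *= k
    return result

def empirical_moment(delta_t, q, n_samples, rng):
    """Monte Carlo estimate of E[(W_{t+dt} - W_t)^{2q}] for a standard Brownian motion."""
    increments = rng.normal(0.0, np.sqrt(delta_t), size=n_samples)
    return float(np.mean(increments ** (2 * q)))

rng = np.random.default_rng(1)
dt = 0.01
for q in (1, 2, 3):
    exact = double_factorial_odd(q) * dt**q     # M_q |t - s|^{2 q b} with b = 1/2
    approx = empirical_moment(dt, q, 400_000, rng)
    print(q, exact, approx, approx / exact)
```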

Define \(\mathfrak {b} := {{(\mathfrak {b}_{1}, \ldots , \mathfrak {b}_{m})^{\top }}} \in (0, 1]^{m}\). Then, for any \(\mathfrak {a}\in \mathfrak {A}_{m}\) with \(\iota (\mathfrak {a})\geq 1\), we have:

and

In particular, for \(\mathfrak {a} = \emptyset \), define \( {\|\emptyset ^{\top }} \mathfrak {b}\|_{1}: = 0\).

Consider specific subsets of matrix multi-indices of the form:

Use the abbreviation:

where \(\partial ^{\mathfrak {a}}\) and \(\mathfrak {D}^{\iota (\mathfrak {a})}\) are defined according to (29) and (30), respectively.
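The index sets used in the examples below can be generated mechanically. The Python sketch assumes, consistently with the sets written out explicitly in Example 7, that a γ-RODE-Taylor scheme uses all \(\mathfrak{a} \in \mathfrak{A}_{m}\) with \(\iota(\mathfrak{a}) + \|\mathfrak{a}^{\top}\mathfrak{b}\|_{1} < \gamma + 1\). A multi-index is stored as a tuple of columns, each column a tuple of m nonnegative integers; the function names are hypothetical. Exact rational arithmetic (`fractions.Fraction`) avoids rounding errors at the strict inequality.

```python
from fractions import Fraction
from itertools import product

def columns(b, budget):
    """All columns c in N_0^m with noise weight sum_j c_j b_j < budget, with that weight."""
    ranges = [range(int(budget / bj) + 1) for bj in b]   # superset, filtered below
    for col in product(*ranges):
        weight = sum(cj * bj for cj, bj in zip(col, b))
        if weight < budget:
            yield col, weight

def rode_taylor_index_set(gamma, b):
    """All multi-indices a (tuples of columns) with iota(a) + ||a^T b||_1 < gamma + 1."""
    b = [Fraction(bj) for bj in b]
    limit = Fraction(gamma) + 1
    result = [()]                                # the empty index, iota = 0
    frontier = [((), Fraction(0))]               # (index, iota + noise weight so far)
    while frontier:
        next_frontier = []
        for index, cost in frontier:
            budget = limit - cost - 1            # each new column costs 1 plus its weight
            if budget <= 0:
                continue
            for col, weight in columns(b, budget):
                new_index = index + (col,)
                result.append(new_index)
                next_frontier.append((new_index, cost + 1 + weight))
        frontier = next_frontier
    return result

def as_scalar(indices):
    """For m = 1, write each index as a tuple of integers, e.g. ((1,), (0,)) -> (1, 0)."""
    return {tuple(col[0] for col in a) for a in indices}

print(sorted(as_scalar(rode_taylor_index_set(2, [Fraction(3, 4)]))))
```

For m = 1 and \(H = \frac{3}{4}\), γ = 2, this enumeration returns the seven indices listed in Example 7; the same function accepts vectors \(\mathfrak{b}\) with several components, as in Example 8.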

Approximating \(\mathfrak {D}^{j} \chi : \mathbb {R}^{d} \times \mathbb {R}^{m \times i} \to \mathbb {R}^{d}\) in the integral equation expansion (31) by a Taylor expansion in its first m × i variables, the RODE-Taylor schemes for the RODE (1) can be constructed to be:

where \(I^{\mathfrak {a}}_{t_{n}, t_{n+1}}\) is defined by (28), \(f_{\mathfrak {a}}\) is defined by (32), and \({\eta }_{t_{n}}^{\times \iota (\mathfrak {a})}\) is the \(m \times \iota (\mathfrak {a})\) matrix:

3.3 Convergence analysis

In this subsection, we analyze the mean-square convergence rate of the general RODE-Taylor scheme (33). Here, it is assumed that all components of the driving stochastic process \(\eta _{t} = ({\eta }_{t}^{1}, \cdots , {\eta }_{t}^{m})\) are mutually independent.

Theorem 3

Assume that assumptions (A1)–(A4) hold. In addition, assume that:

-

(A5) f is infinitely often continuously differentiable in its variables and all partial derivatives of f are bounded on [t0, T].

Then, given any γ > 0, the global mean-square error of the RODE-Taylor scheme (33) satisfies:

where K is a constant depending on t0, T, γ, \(\mathfrak {b}\), Mη, but independent of h.

Proof

The proof follows that of Theorem 5.1 in [10], but it is carried out in the mean-square sense, which requires different details. For completeness, we provide all necessary details below.

Let \(r = \left \lceil \gamma \right \rceil \) be the smallest integer that is larger than or equal to γ, and write y(tn+ 1;tn, yn) as y(tn+ 1) when there is no confusion. Then, the integral expansion (31) gives:

Given ω ∈ Ω, define the mapping \(G: [0, 1] \to \mathbb {R}^{d}\) by:

Let \( \underline {\mathfrak {b}}: = \min \limits \{\mathfrak {b}_{1}, \cdots , \mathfrak {b}_{m}\}\), and set \(\kappa _{i}: = \left \lceil \frac {\gamma - i + 1}{\underline {\mathfrak {b}}}\right \rceil - 1\). Then, applying a Taylor expansion of order κi to the function G gives:

It follows directly from the above equality that:

Inserting (35) into (34) results in

where

Now, notice that since \(|\mathfrak {a}| \underline {\mathfrak {b}} \leq \|\mathfrak {a}^{\top } \mathfrak {b}\|_{1}\), we have:

and thus (36) can be rewritten as:

with

Therefore, taking the dot product of (37) with itself then taking the expectation of the resulting relation gives:

We next estimate \(\mathbb {E}|R_{1}|^{2}\), \(\mathbb {E}|R_{2}|^{2}\) and \(\mathbb {E}|R_{3}|^{2}\). Again, we denote by K a generic constant that may change from line to line.

First, since all partial derivatives of f are bounded, there exists K > 0 such that:

Second, due to Assumption (A4) and the independence among \({{\eta }_{t}^{j}}\), for every \(k = 1, \cdots , \iota (\mathfrak {a})\) and sk ∈ [tn, tn+ 1], we have:

and therefore:

For \(\mathfrak {a} \in \mathfrak {A}_{m}\) and \(i = \iota (\mathfrak {a}) \geq 1\), define the random variable:

where

Then, there exists K > 0 such that:

It then follows immediately from (40) that:

Following similar calculations, we can obtain:

Collecting (39), (41), and (42) into (38) and putting \(i = \iota (\mathfrak {a})\) results in:

Noticing that \(\iota (\mathfrak {a}) + \|{{\mathfrak {a}^{\top }}} \mathfrak {b}\|_{1} \geq \gamma + 1\) for \(\mathfrak {a} \in \mathfrak {A}_{m} \backslash \mathfrak {A}^{\gamma }_{m}\) and assuming that h ≤ 1 we then obtain:

where K depends on γ, the Hölder property of η, and the smoothness of f, but is independent of h.

The desired global mean-square error estimate then follows from the Lipschitz estimate for \(\varPhi _{\gamma }^{(h)}\) (see Lemma 10.4 [10]) and Theorem 1. □
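As a worked instance of the truncation degrees in the proof above (computed here for illustration only), take m = 1 with Brownian noise, so that \(\underline{\mathfrak{b}} = \frac{1}{2}\), and γ = 2. Then r = 2 and

$$ \kappa_{1} = \left\lceil \frac{2 - 1 + 1}{1/2} \right\rceil - 1 = 3, \qquad \kappa_{2} = \left\lceil \frac{2 - 2 + 1}{1/2} \right\rceil - 1 = 1. $$

In general, \(\kappa_{i}\) is the largest integer k with \(i + k\,\underline{\mathfrak{b}} < \gamma + 1\). In this example it coincides with the largest total noise degree \(\|\mathfrak{a}\|_{1}\) among indices with \(\iota(\mathfrak{a}) = i\) and \(\iota(\mathfrak{a}) + \frac{1}{2}\|\mathfrak{a}\|_{1} < 3\): the one-column indices are (0), (1), (2), (3), and the two-column indices satisfy \(\|\mathfrak{a}\|_{1} \leq 1\).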

3.4 Applications

Here, we present some explicit RODE-Taylor schemes for scalar RODEs (d = 1) with Brownian motion or fractional Brownian motion.

Example 5

Scalar RODEs with scalar Brownian motions.

We have m = 1, \(\mathfrak {b} = (\frac {1}{2})\) and

The multi-index set for a γ-RODE-Taylor scheme is:

In particular, consider \(\gamma = \frac {1}{2}, 1, \frac {3}{2}, 2\) with:

The corresponding RODE-Taylor schemes are:

where \({I}_{t_{n}, t_{n+1}}^{\mathfrak {a}}\) are defined by (28), and f and all partial derivatives of f are evaluated at \((y_{n}, \eta _{t_{n}})\).

The above schemes (43)–(46) have global mean-square convergence rates of 1, 1, \(\frac {3}{2}\), and 2, respectively (see Examples 14-(i) and 15-(i) in Section 6).

Remark 6

The Euler scheme (43) achieves a convergence order of 1 instead of \(\frac {1}{2}\) because of the special structure of Brownian motion as the driving noise. This will be further explained in Example 12 and Remark 13 in Section 5. Note that this does not contradict the result in Theorem 3; see Remark 3.

Example 7

Scalar RODEs with scalar fractional Brownian motion with Hurst parameter H.

We have m = 1, \(\mathfrak {b} = (H)\) and

The multi-index set for a γ-RODE-Taylor scheme is:

-

1.

For \(H \in \left (\frac {1}{2}, 1 \right )\), we consider the particular cases γ = H, 1, 2H with

$${\mathfrak{A}_{1}^{H}} = \left\{\emptyset, (0)\right\}, \quad {\mathfrak{A}_{1}^{1}} = \left\{\emptyset, (0), (1) \right\}, \quad \mathfrak{A}_{1}^{2H} = \left\{\emptyset, (0), (1), (0,0) \right\}.$$

The corresponding γ-RODE-Taylor schemes read:

$$ \begin{array}{@{}rcl@{}} y_{n+1} &=& \varPhi_{H}^{(h)} = y_{n} + h f, \end{array} $$(47)

$$ \begin{array}{@{}rcl@{}} y_{n+1} &=& \varPhi_{1}^{(h)} = y_{n} + h f + f_{\eta} {\int}_{t_{n}}^{t_{n+1}} {\varDelta} \eta_{t_{n}, s} \mathrm{d} s, \end{array} $$(48)

$$ \begin{array}{@{}rcl@{}} y_{n+1} &=& \varPhi_{2H}^{(h)} = y_{n} + h f + f_{\eta} {\int}_{t_{n}}^{t_{n+1}} {\varDelta} \eta_{t_{n}, s} \mathrm{d} s + f_{y} f \frac{h^{2}}{2}, \end{array} $$(49)

with global mean-square convergence rates of H, 1, and 2H, respectively (see Examples 14-(ii)/(iii) and 15-(ii)/(iii) in Section 6).

To achieve a convergence rate of 2 as the scheme (46) does, the index set has to be determined for the specific value of H. For example, with \(H=\frac {3}{4}\):

$${\mathfrak{A}_{1}^{2}} = \left\{ \mathfrak{a} \in \mathfrak{A}_{1} : \iota(\mathfrak{a}) + \frac{3}{4} \|\mathfrak{a}\|_{1} < 3 \right\} = \left\{ \emptyset, (0), (1), (2), (0,0), (1,0), (0,1) \right\}$$

and the corresponding 2-RODE-Taylor scheme reads:

$$ y_{n+1} = \varPhi_{2}^{(h)} = y_{n} + h f + \sum\limits_{i=1}^{2} \frac{1}{i!} \partial_{\eta}^{i} f {I}_{t_{n}, t_{n+1}}^{(i)} + f_{y} f \frac{h^{2}}{2} + f_{\eta,y} f {I}_{t_{n}, t_{n+1}}^{(1,0)} + f_{y} f_{\eta} {I}_{t_{n}, t_{n+1}}^{(0,1)}.$$(50)

-

2.

For \(H \in \left (0, \frac {1}{2} \right )\), we consider the particular cases γ = H, 2H with:

$${\mathfrak{A}_{1}^{H}} = \left\{\emptyset, (0)\right\}, \quad \mathfrak{A}_{1}^{2H} = \left\{\emptyset, (0), (1), (0,0) \right\}.$$

The corresponding γ-RODE-Taylor schemes are the same as (47) and (49), with global mean-square convergence rates of H and 2H, respectively (see Examples 14-(ii) and 15-(ii) in Section 6).

To achieve a convergence rate of 1 or 2 as above, the index sets again have to be determined for the specific value of H. For example, for \(H = \frac {1}{3}\):

$$ \begin{array}{@{}rcl@{}} {\mathfrak{A}_{1}^{1}} &=& \left\{ \mathfrak{a} \in \mathfrak{A}_{1} : \iota(\mathfrak{a}) + \frac{1}{3} \|\mathfrak{a}\|_{1} < 2 \right\} = \left\{ \emptyset, (0), (1), (2) \right\}, \\ {\mathfrak{A}_{1}^{2}} &=& \left\{ \mathfrak{a} \in \mathfrak{A}_{1} : \iota(\mathfrak{a}) + \frac{1}{3} \|\mathfrak{a}\|_{1} < 3 \right\} = \left\{ \begin{array}{ll}\emptyset, (0), (1), (2), (3), (4), (5), (0,0), \\ (0,1), (0,2), (1,0), (1,1), (2,0) \end{array} \right\}, \end{array} $$

and the corresponding 1- and 2-RODE-Taylor schemes read:

$$ \begin{array}{@{}rcl@{}} y_{n+1} = \varPhi_{1}^{(h)} &=& y_{n} + h f + \sum\limits_{i=1}^{2} \frac{1}{i!} \partial_{\eta}^{i} f {I}_{t_{n}, t_{n+1}}^{(i)}, \end{array} $$(51)

$$ \begin{array}{@{}rcl@{}} y_{n+1} = \varPhi_{2}^{(h)} &=& y_{n} + h f + \sum\limits_{i=1}^{5} \frac{1}{i!} \partial_{\eta}^{i} f I_{t_{n}, t_{n+1}}^{(i)} + f_{y} f \frac{h^{2}}{2} + f_{y} f_{\eta} {I}_{t_{n},t_{n+1}}^{(0,1)} + f_{\eta, y} f {I}_{t_{n},t_{n+1}}^{(1,0)} \\ && + f_{\eta, y}f_{\eta} I_{t_{n},t_{n+1}}^{(1,1)} + \frac{1}{2} f_{y} f_{\eta, \eta}I_{t_{n},t_{n+1}}^{(0,2)} + \frac{1}{2} f_{\eta,\eta,y} f {I}_{t_{n},t_{n+1}}^{(2,0)}. \end{array} $$(52)

The schemes (51) and (52) have mean-square convergence orders of 1 and 2, respectively (see Examples 14-(iii) and 15-(iii) in Section 6).
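Compared with the Euler scheme, the only new ingredients of schemes such as (48) and (51) are the single-column iterated integrals. The Python sketch below assumes that, for a single-column index \(\mathfrak{a} = (i)\), \(I^{(i)}_{t_{n},t_{n+1}} = \int_{t_{n}}^{t_{n+1}} (\eta_{s} - \eta_{t_{n}})^{i}\,\mathrm{d}s\), which is consistent with the term \(f_{\eta}\int_{t_{n}}^{t_{n+1}} \varDelta\eta_{t_{n},s}\,\mathrm{d}s\) in (48). It approximates these integrals by the trapezoidal rule on a finer sampling of the noise path and performs one step of the form (51) for the illustrative choice f(y, w) = −y + sin w. The function names are hypothetical.

```python
import numpy as np

def iterated_integral_single(eta_sub, h, i):
    """Trapezoidal approximation of I^{(i)} = int_{t_n}^{t_{n+1}} (eta_s - eta_{t_n})^i ds.

    eta_sub holds eta sampled on a uniform sub-grid of [t_n, t_{n+1}], endpoints included.
    """
    incr = (eta_sub - eta_sub[0]) ** i
    ds = h / (len(eta_sub) - 1)
    return float(ds * (incr.sum() - 0.5 * (incr[0] + incr[-1])))

def step_phi1_one_third(y, eta_sub, h):
    """One step of the form (51) for the illustrative f(y, w) = -y + sin(w)."""
    w = eta_sub[0]                 # all coefficients are evaluated at (y_n, eta_{t_n})
    f = -y + np.sin(w)
    d1f = np.cos(w)                # partial_eta f
    d2f = -np.sin(w)               # partial_eta^2 f
    return (y + h * f
            + d1f * iterated_integral_single(eta_sub, h, 1)
            + 0.5 * d2f * iterated_integral_single(eta_sub, h, 2))
```

The sub-grid values must come from the same realization of η that drives the rest of the computation; the quadrature error of the trapezoidal rule should be kept well below the target local error.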

Example 8

Notice that when m > 1, Assumption (A4) allows different components of ηt to have different Hölder properties. In this example, we consider RODEs with a 2-dimensional stochastic process \(\eta _{t} = ({\eta _{t}^{1}}, {\eta _{t}^{2}})^{\top }\) where \({\eta _{t}^{1}}\) is a Brownian motion and \({\eta _{t}^{2}}\) is a fractional Brownian motion with Hurst parameter \(H = \frac {3}{4}\), i.e., \(\mathfrak {b} = (\frac {1}{2}, \frac {3}{4})\). Then, we have:

and the multi-index set for a γ-RODE-Taylor scheme is:

In particular, we consider \(\gamma = \frac {1}{2}, 1\), \(\frac {3}{2}\) with

Hence, the corresponding \(\frac {1}{2}\)-, 1-, and 1.5-RODE-Taylor schemes are:

The global mean-square convergence orders for (53), (54), and (55) are \(\frac {1}{2}\), 1, and \(\frac {3}{2}\), respectively (see Example 16 in Section 6).

4 Affine-RODE-Taylor schemes for RODEs with affine noise

In this section, we consider a special type of RODEs with affine noise [3, 9], formulated as:

where \(y \in \mathbb {R}^{d}\) and the noise process \(\eta _{t} = ({\eta }_{t}^{1}, \cdots , {\eta }_{t}^{m})\) takes values in \(\mathbb {R}^{m}\). Here, the sample paths of ηt are assumed to be continuous, and the coefficients g and σj, j = 1, ⋯, m, are assumed to be sufficiently smooth \(\mathbb {R}^{d}\)-valued functions satisfying appropriate conditions to ensure the existence and uniqueness of solutions to (56) on the whole time interval [t0, T].

When g(t, y) ≡ g(y) and σj(t, y) ≡ σj(y), the RODE-Taylor approximations introduced in Section 3 can be applied to the vector field \(f(y, \eta _{t}): = g(y)+{\sum }_{j=1}^{m} \sigma _{j}(y) {\eta }_{t}^{j}\) to obtain RODE-Taylor schemes of various mean-square convergence orders. Here, we introduce the affine-RODE-Taylor framework due to Asai and Kloeden [3] that deals with nonautonomous affine RODEs (56) and may achieve a different rate of convergence compared with RODE-Taylor schemes when g and σj do not depend on t. For readers’ convenience, the hierarchical sets and vector-valued multi-index notations used in [3, 13] are summarized below.

4.1 Preliminaries

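Let \(\mathcal {A}_{m}\) be the set of all vectors of finite length l with components valued in the set {0, 1, ⋯, m}:

$$ \mathcal {A}_{m} = \left\{ \alpha = (\alpha _{1}, \cdots , \alpha _{l}) : \alpha _{i} \in \{0, 1, \cdots , m\},\ i = 1, \cdots , l,\ l \in \mathbb {N} \right\} \cup \{\emptyset \}, $$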

where l = ℓ(α) is the length of the vector α. In particular, ℓ(∅) = 0. For any \(\alpha = (\alpha _{1}, \cdots , \alpha _{l}) \in \mathcal {A}_{m}\) with ℓ(α) = l ≥ 1, denote by -α and α- the multi-indices in \(\mathcal {A}_{m}\) obtained by deleting the first and last components of α, respectively, i.e.:

$$ -\alpha = (\alpha _{2}, \cdots , \alpha _{l}), \qquad \alpha {-} = (\alpha _{1}, \cdots , \alpha _{l-1}), $$

with -α = α- = ∅ when l = 1.

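Given \(\gamma \in \mathbb {N}\), let \(\mathcal {A}_{m}^{\gamma }\) be the hierarchical set of vector-valued multi-indices given by:

$$ \mathcal {A}_{m}^{\gamma } = \left\{ \alpha \in \mathcal {A}_{m} : \ell (\alpha ) \leq \gamma \right\}. $$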

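In addition, define the remainder set \(\mathcal {R}(\mathcal {A}_{m}^{\gamma })\) by:

$$ \mathcal {R}(\mathcal {A}_{m}^{\gamma }) = \left\{ \alpha \in \mathcal {A}_{m} \backslash \mathcal {A}_{m}^{\gamma } : -\alpha \in \mathcal {A}_{m}^{\gamma } \right\}. $$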

Then, \(\mathcal {R}(\mathcal {A}_{m}^{\gamma })\) consists of all multi-indices outside \(\mathcal {A}_{m}^{\gamma }\) that are obtained by prepending one component to an element of \(\mathcal {A}_{m}^{\gamma }\). Obviously, \(\mathcal {R}(\mathcal {A}_{m}^{\gamma }) = \mathcal {A}_{m}^{\gamma +1} \backslash \mathcal {A}_{m}^{\gamma }\).
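Because \(\mathcal {A}_{m}^{\gamma }\) and its remainder set are finite, they are easy to enumerate. The Python sketch below assumes \(\mathcal {A}_{m}^{\gamma } = \{\alpha \in \mathcal {A}_{m} : \ell (\alpha ) \leq \gamma \}\), which matches the identity \(\mathcal {R}(\mathcal {A}_{m}^{\gamma }) = \mathcal {A}_{m}^{\gamma +1} \backslash \mathcal {A}_{m}^{\gamma }\) stated above. Multi-indices are tuples, the empty tuple plays the role of ∅, and the function names are illustrative only.

```python
from itertools import product


def hierarchical_set(m, gamma):
    """A_m^gamma, assumed to be all multi-indices over {0, ..., m} of length <= gamma."""
    indices = set()
    for length in range(gamma + 1):
        indices.update(product(range(m + 1), repeat=length))
    return indices


def remainder_set(m, gamma):
    """R(A_m^gamma): indices outside A_m^gamma whose tail -alpha (first entry deleted) lies in it.

    Any such index has length <= gamma + 1, so searching A_m^{gamma+1} suffices.
    """
    hier = hierarchical_set(m, gamma)
    candidates = hierarchical_set(m, gamma + 1) - hier
    return {alpha for alpha in candidates if alpha[1:] in hier}


print(sorted(hierarchical_set(1, 1)))  # [(), (0,), (1,)]
print(len(hierarchical_set(2, 2)))     # 13 = 1 + 3 + 9
print(remainder_set(1, 1) == hierarchical_set(1, 2) - hierarchical_set(1, 1))  # True
```

The counts agree with \(|\mathcal {A}_{m}^{\gamma }| = \sum _{l=0}^{\gamma } (m+1)^{l}\), e.g., 13 multi-indices for the 2-affine-RODE-Taylor scheme with m = 2 in Example 10.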

For any \(\alpha \in \mathcal {A}_{m}\) with ℓ(α) = l and an integrable function \(\phi : [t_{0}, T] \to \mathbb {R}\), define the multiple integral \(J[\phi (\cdot )]_{t, s}^{\alpha }\) as:

In particular, when ϕ ≡ 1, we abbreviate \(J[1]_{t, s}^{\alpha }\) as \(J_{t, s}^{\alpha }\).
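On a sampled path, the integrals \(J_{t, s}^{\alpha }\) can be approximated by nested quadrature. The sketch below assumes the usual recursive convention \(J_{t, s}^{\emptyset } = 1\) and \(J_{t, s}^{\alpha } = \int _{t}^{s} \eta _{\tau }^{\alpha _{l}} J_{t, \tau }^{\alpha -} \, d\tau \) with \(\eta ^{0} \equiv 1\), so that α1 is the innermost integration. The trapezoidal rule, the helper names, and the smooth check path \(\eta _{t}^{1} = t\) are our choices for illustration.

```python
def cumulative_trapezoid(values, dt):
    """Running trapezoidal integral: result[k] approximates the integral from grid point 0 to k."""
    result = [0.0]
    for k in range(1, len(values)):
        result.append(result[-1] + 0.5 * dt * (values[k - 1] + values[k]))
    return result


def multiple_integral(alpha, paths, dt, n_points):
    """Approximate J^alpha_{t,s} on a uniform grid of n_points values covering [t, s].

    alpha : tuple over {0, ..., m}; alpha[0] is integrated first (innermost).
    paths : paths[j - 1] holds the sampled values of eta^j on the grid.
    """
    inner = [1.0] * n_points  # J^{empty} = 1 at every grid point
    for j in alpha:
        weight = [1.0] * n_points if j == 0 else paths[j - 1]  # eta^0 = 1
        inner = cumulative_trapezoid([w * v for w, v in zip(weight, inner)], dt)
    return inner[-1]


# Smooth check path eta^1(tau) = tau on [0, 1] (hypothetical, for testing only).
n_points = 1001
dt = 1.0 / (n_points - 1)
paths = [[k * dt for k in range(n_points)]]
print(multiple_integral((0, 0), paths, dt, n_points))  # approximately 1/2
print(multiple_integral((0, 1), paths, dt, n_points))  # approximately 1/3
print(multiple_integral((1, 0), paths, dt, n_points))  # approximately 1/6
```

For a rough path such as Brownian motion or fBm, the same routine applies unchanged, but the grid must be much finer than the scheme's step h for the quadrature error to be negligible.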

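Denote by ϕk the k-th component of an \(\mathbb {R}^{d}\)-valued function ϕ. For \(y = (y^{1}, \cdots , y^{d}) \in \mathbb {R}^{d}\) and the \(\mathbb {R}^{d}\)-valued functions g and σj in (56), define the partial differential operators \(\mathcal {D}^{0}\) and \(\mathcal {D}^{j}\) by:

$$ \mathcal {D}^{0} = \frac {\partial }{\partial t} + \sum _{k=1}^{d} g^{k} \frac {\partial }{\partial y^{k}}, \qquad \mathcal {D}^{j} = \sum _{k=1}^{d} \sigma _{j}^{k} \frac {\partial }{\partial y^{k}}, \quad j = 1, \cdots , m. $$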

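Given a vector-valued multi-index \(\alpha \in \mathcal {A}_{m}\) and a sufficiently smooth function \(\varphi : [t_{0}, T] \times \mathbb {R}^{d} \to \mathbb {R}\), the coefficient function φα is defined recursively by:

$$ \varphi _{\alpha } = \begin{cases} \varphi , & \ell (\alpha ) = 0, \\ \mathcal {D}^{\alpha _{1}} \varphi _{-\alpha }, & \ell (\alpha ) \geq 1. \end{cases} $$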

Then, the \(\mathcal {A}_{m}^{\gamma }\)-RODE-Taylor expansion of φ(t, y(t)) for a solution y(t) of the RODE (56) reads:

In particular, let φ = χ, the identity function on \(\mathbb {R}^{d}\) with χk(y) = yk for k = 1, ⋯, d. Then, given y(tn) = yn, the solution of the RODE (56) can be written componentwise as:

where J is defined as in (58) and \(\mathcal {D}^{\alpha } = \mathcal {D}^{\alpha _{1}} \mathcal {D}^{\alpha _{2}} \cdots \mathcal {D}^{\alpha _{l}}\) with \(\mathcal {D}^{j}\) defined as in (59) for j = 0, 1, ⋯, m.

Affine-RODE-Taylor schemes are constructed systematically by applying the affine-RODE-Taylor expansion (60) on each subinterval [tn, tn+1] and deleting the remainder term on its right-hand side. More specifically, the γ-affine-RODE-Taylor scheme is given componentwise by:

4.2 Convergence analysis

In this subsection, we analyze the mean-square convergence rate of the γ-affine-RODE-Taylor scheme. The analysis follows Theorem 2 in [3], but is carried out in the mean-square sense and requires different estimates. Here, the stochastic processes \({\eta }_{t}^{1}\), ⋯, \({\eta }_{t}^{m}\) are assumed to be mutually independent and to satisfy:

- (A6) There exists C > 0 such that

$$ \underset{t \in [t_{0}, T]}\sup \mathbb{E}\left|{\eta}_{t}^{j}\right|^{2} \leq C, \qquad j = 1, \cdots, m.$$

Theorem 4

Let Assumptions (A1)–(A3) and (A6) hold, and assume that all derivatives of g, σ1, ⋯, σm and all the multiple integrals of stochastic processes appearing in (60) exist. Then, for any \(\gamma \in \mathbb {N}\), the γ-affine-RODE-Taylor scheme (61) has a global mean-square convergence order of γ.

Proof

The local mean-square error for the k-th component of the γ-affine-RODE-Taylor scheme (61) is given by:

and satisfies

First, note that under standard assumptions, the RODE (56) has a unique solution with continuous paths on the finite interval [t0, T]. Thus, for any ω ∈ Ω, there exists R = R(T, ω) such that |y(t, ω)| ≤ R(T, ω) for all t ∈ [t0, T]. Moreover, there exists \(C = C(\mathcal {D}^{\alpha } \chi , t_{0}, T) > 0\) such that \(\left | \mathcal {D}^{\alpha } \chi ^{k}(t, y(t)) \right | \leq C\) for all t ∈ [t0, T] (see [3]). Therefore:

where l = ℓ(α). Then, by Hölder’s inequality:

Taking the expectation of the above inequality and using Assumption (A6) together with the independence of the processes \({\eta _{t}^{j}}\), we obtain:

Since \(\gamma \in \mathbb {N}\), ℓ(α) = l ≥ γ + 1 for all \(\alpha \in \mathcal {R}(\mathcal {A}_{m}^{\gamma })\). Therefore, assuming h ≤ 1, we immediately obtain:

which implies that \(\left (\mathcal {E}_{n+1}^{L,k} \right )^{2} \leq K h^{2 (\gamma +1)}\). It then follows immediately that:

and by Theorem 1 the γ-affine-RODE-Taylor scheme (61) has a global mean-square convergence order of γ. □

Next, we present some explicit γ-affine-RODE-Taylor schemes for scalar RODEs with affine noise. For comparison purposes, we consider autonomous examples with g(t, y) = g(y) and σj(t, y) = σj(y) for j = 1, ⋯, m. Then:

Example 9

Consider the RODE (56) with d = m = 1. The hierarchical sets \(\mathcal {A}_{1}^{\gamma }\) for γ = 1,2,3 are, respectively:

Then, the corresponding 1-, 2-, and 3-affine-RODE-Taylor schemes read:

where g, σ, and their derivatives are evaluated at (tn, yn), and the integrals \({J}_{n}^{\alpha } = J[1]^{\alpha }_{t_{n}, t_{n+1}}\). The global mean-square convergence orders of (62), (63), and (64) are 1, 2, and 3, respectively (see Example 15 in Section 6).
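For d = m = 1 and autonomous g and σ, and assuming \(\mathcal {D}^{0} = \partial _{t} + g\,\partial _{y}\) and \(\mathcal {D}^{1} = \sigma \,\partial _{y}\), the coefficient functions of χ(y) = y are \(\chi _{(0)} = g\), \(\chi _{(1)} = \sigma \), \(\chi _{(0,0)} = g g'\), \(\chi _{(0,1)} = g \sigma '\), \(\chi _{(1,0)} = \sigma g'\), and \(\chi _{(1,1)} = \sigma \sigma '\). The Python sketch below assembles the 1- and 2-affine-RODE-Taylor steps from these terms; it is our own reading, not a transcription of (62)–(63). To obtain closed-form integrals \(J_{n}^{\alpha }\), it uses the hypothetical deterministic path \(\eta _{t} = \sin t\) and the test problem dy/dt = -y + sin t, y(0) = 1, whose exact solution is \(y(t) = \frac {3}{2}e^{-t} + \frac {1}{2}(\sin t - \cos t)\). Halving h should reduce the error at T = 1 by factors of about 2 and 4, respectively.

```python
import math


def affine_taylor_step(y, t, h, g, dg, sigma, dsigma, order):
    """One step of the 1- or 2-affine-RODE-Taylor scheme for the scalar autonomous RODE
    dy/dt = g(y) + sigma(y) * eta_t, with the hypothetical test path eta_t = sin t."""
    s = t + h
    j1 = math.cos(t) - math.cos(s)                           # J^(1)   = int_t^s eta_tau dtau
    y_next = y + g(y) * h + sigma(y) * j1                    # order 1: (), (0,), (1,)
    if order == 2:
        j00 = 0.5 * h * h                                    # J^(0,0)
        j01 = -h * math.cos(s) + math.sin(s) - math.sin(t)   # J^(0,1) = int_t^s eta_tau (tau - t) dtau
        j10 = h * math.cos(t) - (math.sin(s) - math.sin(t))  # J^(1,0) = int_t^s int_t^tau eta_r dr dtau
        j11 = 0.5 * j1 * j1                                  # J^(1,1) = (J^(1))^2 / 2
        y_next += (g(y) * dg(y) * j00 + g(y) * dsigma(y) * j01
                   + sigma(y) * dg(y) * j10 + sigma(y) * dsigma(y) * j11)
    return y_next


# Hypothetical test problem: g(y) = -y, sigma(y) = 1.
def g(y):
    return -y


def dg(y):
    return -1.0


def sigma(y):
    return 1.0


def dsigma(y):
    return 0.0


def solve(n_steps, order, t_end=1.0, y0=1.0):
    h = t_end / n_steps
    y = y0
    for k in range(n_steps):
        y = affine_taylor_step(y, k * h, h, g, dg, sigma, dsigma, order)
    return y


EXACT = 1.5 * math.exp(-1.0) + 0.5 * (math.sin(1.0) - math.cos(1.0))


def observed_order(order, n_steps=256):
    """log2 of the error ratio at T = 1 when h is halved."""
    e_coarse = abs(solve(n_steps, order) - EXACT)
    e_fine = abs(solve(2 * n_steps, order) - EXACT)
    return math.log2(e_coarse / e_fine)


print(observed_order(1), observed_order(2))  # approximately 1 and 2
```

Because this path is smooth, the check only confirms the algebra of the expansion; the mean-square orders for rough noise are those established in Theorem 4.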

Example 10

Consider the RODE (56) with d = 1 and m = 2. The hierarchical sets \(\mathcal {A}_{2}^{\gamma }\) for γ = 1, 2 are, respectively:

Then, the corresponding 1- and 2-affine-RODE-Taylor schemes read:

where g, σ1, σ2, and their derivatives are evaluated at (tn, yn), and the integrals \(J^{\alpha }_{n} = J[1]^{\alpha }_{t_{n}, t_{n+1}}\). The global mean-square convergence orders of (66) and (67) are 1 and 2, respectively (see Example 16 in Section 6).

5 Itô-RODE-Taylor schemes for RODEs with Itô diffusion processes

In this section, we investigate the mean-square convergence of numerical schemes for RODEs driven by an Itô diffusion process, i.e., the solution of an Itô SODE. In particular, consider a RODE on \(\mathbb {R}^{d_{1}}\):

where ηt is the solution of an Itô SODE in \(\mathbb {R}^{d_{2}}\):

with m independent scalar Wiener processes \({W}_{t}^{1}\), ⋯, \({W}_{t}^{m}\).

Throughout this section, we again impose a standard assumption:

- (A7) All the coefficients μ, ρ1, ⋯, ρm and the vector field f are infinitely often continuously differentiable in their variables, and all their partial derivatives are bounded on [t0, T].

It is straightforward to verify that under Assumption (A7), the solution \(\eta _{t} = ({\eta }_{t}^{1}, \cdots , {\eta }_{t}^{d_{2}})\) of the SDE (70) satisfies:

Therefore, Assumption (A4) is satisfied with \(\mathfrak {b}_{j} = \frac {1}{2}\) for all j = 1,⋯ , d2 and all the RODE-Taylor schemes introduced in Section 3 can be applied here with \(\mathfrak {b} = (\frac {1}{2}, \cdots , \frac {1}{2})\) and attain their orders as shown in Section 3.

On the other hand, the system (69)–(70) can also be simulated via stochastic ordinary differential equations (see [1]). For comparison purposes, we also present the convergence analysis of numerical schemes for the RODE (69) via SODEs. More precisely, for \(y(t) \in \mathbb {R}^{d_{1}}\) in (69) and \(\eta _{t} \in \mathbb {R}^{d_{2}}\) in (70), let Y(t) = (y(t), ηt)⊤. Then, (69) and (70) can be formulated as an SODE in \(\mathbb {R}^{d}\) with d = d1 + d2:

with

5.1 Preliminaries

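Let \(\mathcal {A}_{m}\) be defined as in (57) and define the hierarchical set of multi-indices:

$$ {\varLambda }_{\gamma } = \left\{ \alpha \in \mathcal {A}_{m} : \ell (\alpha ) + \mathfrak {n}(\alpha ) \leq 2\gamma \ \text { or } \ \ell (\alpha ) = \mathfrak {n}(\alpha ) = \gamma + \tfrac {1}{2} \right\}, $$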

where ℓ(α) is the length of α and \(\mathfrak {n}(\alpha )\) is the number of zero components of α.

Given a multi-index α = (α1,⋯ , αl) with ℓ(α) = l ≥ 1, define the multiple integrals:

In addition, for any smooth enough scalar-valued function ϕ of t and Y, define the differential operators:

where the superscript k denotes the k-th component of a vector-valued function. Moreover, \({\mathcal{L}}^{\alpha }: = {\mathcal{L}}^{\alpha _{1}} {\cdots } {\mathcal{L}}^{\alpha _{l}}\) for α = (α1,⋯ , αl).

The γ-Itô-Taylor scheme for the SDE (71) reads:

and has a mean-square convergence order of γ [13]. In particular, the y-component of the γ-Itô-Taylor scheme gives the γ-Itô-RODE-Taylor scheme:

where \(\mathcal {I}^{\alpha }_{n}\) is defined as in (72) and

Remark 11

The smaller set \({\varLambda }_{\gamma }^{0}\) suffices because the coefficient functions corresponding to \({\varLambda }_{\gamma } \backslash {\varLambda }_{\gamma }^{0}\) vanish: the diffusion coefficients of the y-component of the system (71) are zero (see [1]).
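The sets in Example 12 can be generated mechanically. The sketch below assumes the standard strong-order hierarchical set of [13], \({\varLambda }_{\gamma } = \{\alpha : \ell (\alpha ) + \mathfrak {n}(\alpha ) \leq 2\gamma \text { or } \ell (\alpha ) = \mathfrak {n}(\alpha ) = \gamma + \frac {1}{2}\}\), and takes \({\varLambda }_{\gamma }^{0}\) to be the indices that are empty or end in 0. This is our reading of Remark 11: in \({\mathcal{L}}^{\alpha }\chi ^{k} = {\mathcal{L}}^{\alpha _{1}} \cdots {\mathcal{L}}^{\alpha _{l}}\chi ^{k}\) the operator \({\mathcal{L}}^{\alpha _{l}}\) acts first, and for αl ≥ 1 it returns the diffusion coefficient of a y-component, which is zero. The function names are illustrative.

```python
from fractions import Fraction
from itertools import product


def ito_hierarchical_set(gamma, m=1):
    """Lambda_gamma over {0, ..., m}, assuming the Kloeden-Platen strong-order definition."""
    gamma = Fraction(gamma)
    result = set()
    for length in range(int(2 * gamma) + 1):  # no index in Lambda_gamma is longer than 2 * gamma
        for alpha in product(range(m + 1), repeat=length):
            zeros = alpha.count(0)  # n(alpha), the number of zero components
            if length + zeros <= 2 * gamma or length == zeros == gamma + Fraction(1, 2):
                result.add(alpha)
    return result


def rode_component_set(gamma, m=1):
    """Lambda^0_gamma: keep the empty index and the indices whose last component is 0."""
    return {a for a in ito_hierarchical_set(gamma, m) if not a or a[-1] == 0}


for order in (Fraction(1, 2), 1, Fraction(3, 2), 2):
    print(order, sorted(rode_component_set(order), key=len))
```

Exact fractions are used so that the half-integer condition ℓ(α) = 𝔫(α) = γ + 1/2 is tested without floating-point rounding.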

5.2 Applications

Some explicit Itô-RODE-Taylor schemes for scalar RODEs (d = 1) with scalar Itô diffusion processes are provided below.

Example 12

For \(\gamma = \frac {1}{2}\), \({\varLambda }_{\frac {1}{2}} = \{\emptyset , (0), (1)\}\) and \({\varLambda }_{\frac {1}{2}}^{0} = \{\emptyset , (0)\}\). Thus the corresponding \(\frac {1}{2}\)-Itô-RODE-Taylor scheme reads:

which is the Euler scheme.

For γ = 1, Λ1 = {∅,(0),(1),(1,1)} and \({{\varLambda }_{1}^{0}} = \{\emptyset , (0)\}\). The corresponding 1-Itô-RODE-Taylor scheme \(y_{n+1} = {\varTheta }_{1}^{(h)} (t_{n}, y_{n})\) coincides with the Euler scheme (73). Thus, the Euler scheme (73) has a mean-square convergence order of 1 (see Examples 14-(i) and 17 in Section 6).

Remark 13

The Euler scheme (73) can be derived both from the \(\frac {1}{2}\)-RODE-Taylor scheme \(\varPhi _{\frac {1}{2}}^{(h)}\) in (43) and from the \(\frac {1}{2}\)-Itô-RODE-Taylor scheme \({\varTheta }_{\frac {1}{2}}^{(h)}\). Since it also coincides with the 1-Itô-RODE-Taylor scheme, it attains a mean-square convergence order of 1 instead of \(\frac {1}{2}\).

For \(\gamma = \frac {3}{2}\), \({\varLambda }_{\frac {3}{2}}= \{\emptyset , (0), (1), (0, 0), (0, 1), (1, 0), (1,1), (1, 1, 1) \} \) and \({\varLambda }_{\frac {3}{2}}^{0} = \{\emptyset , (0), (0, 0), (1, 0)\}\).

The corresponding \(\frac {3}{2}\)-Itô-RODE-Taylor scheme reads:

where f and all its partial derivatives are evaluated at \((y_{n}, \eta _{t_{n}})\), and ρ and μ are evaluated at \(\eta _{t_{n}}\). The Itô-RODE-Taylor scheme (74) has a global mean-square convergence order of \(\frac {3}{2}\) (see Example 17 in Section 6).

For γ = 2, \({\varLambda }_{2} = {\varLambda }_{\frac {3}{2}} \cup \{ (0, 1, 1), (1, 0, 1), (1, 1, 0), (1, 1, 1, 1) \} \) and \({{\varLambda }_{2}^{0}} = \left \{ \emptyset , (0), (0, 0), (1, 0), (1, 1, 0) \right \}\). The corresponding 2-Itô-RODE-Taylor scheme reads:

where f and all its partial derivatives are evaluated at \((y_{n}, \eta _{t_{n}})\), and ρ, \(\rho ^{\prime }\), and μ are evaluated at \(\eta _{t_{n}}\). The Itô-RODE-Taylor scheme (75) has a global mean-square convergence order of 2 (see Example 17 in Section 6).

6 Numerical experiments

In this section, we conduct numerical experiments to demonstrate the mean-square orders of convergence for different schemes presented in Sections 3, 4, and 5. In particular, we simulate:

- (I) A scalar RODE whose scalar noise process is a Brownian motion or an fBm;

- (II) A scalar RODE with scalar affine noise driven by either a Brownian motion or an fBm;

- (III) A scalar RODE with 2-dimensional affine noise consisting of one Brownian motion and one fBm;

- (IV) A scalar RODE with scalar Itô diffusion noise.
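The experiments below need sample paths of fBm, and the generator used in the paper is not described in this section. One simple exact method, shown here as a sketch under that caveat, draws \(B^{H}\) on a uniform grid from the Cholesky factor of its covariance \(\mathbb {E}[B^{H}_{t} B^{H}_{s}] = \frac {1}{2}(t^{2H} + s^{2H} - |t - s|^{2H})\). Its O(N^3) cost limits it to moderate grid sizes, and H = 1/2 recovers Brownian motion. The function names and the seed are hypothetical.

```python
import math
import random


def fbm_covariance(times, hurst):
    """Covariance matrix of fBm with Hurst parameter `hurst` at the given positive times."""
    two_h = 2.0 * hurst
    return [[0.5 * (t ** two_h + s ** two_h - abs(t - s) ** two_h) for s in times]
            for t in times]


def cholesky(a):
    """Lower-triangular L with L L^T = a, for a symmetric positive definite matrix a."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - acc)
            else:
                low[i][j] = (a[i][j] - acc) / low[j][j]
    return low


def sample_fbm(n_steps, hurst, t_end=1.0, rng=random):
    """fBm on t_k = k * t_end / n_steps, k = 0, ..., n_steps, with B^H_0 = 0."""
    times = [k * t_end / n_steps for k in range(1, n_steps + 1)]
    low = cholesky(fbm_covariance(times, hurst))
    z = [rng.gauss(0.0, 1.0) for _ in times]  # independent standard normals
    return [0.0] + [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(n_steps)]


rng = random.Random(2021)
path = sample_fbm(64, hurst=0.75, rng=rng)
eta = [math.sin(b) for b in path]  # eta_t = sin(B^{3/4}_t), as in Example 15 (ii)
```

For long paths, circulant-embedding methods are much cheaper; Cholesky is used here only because it is short and exact on the grid.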

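Let M be the sample size of the Monte Carlo simulation. For i = 1, ⋯, M, denote by \({y}_{n}^{(i)}\) the numerical approximation to the true solution \(y^{(i)}(t_{n}; t_{0}, y_{0})\) at the time instant tn. The mean-square error \(\mathcal {E}_{n+1}^{G}\) is estimated by:

$$ \mathcal {E}_{n+1}^{G} \approx \left( \frac {1}{M} \sum _{i=1}^{M} \left| {y}_{n+1}^{(i)} - y^{(i)}(t_{n+1}; t_{0}, y_{0}) \right|^{2} \right)^{1/2} $$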

to verify the mean-square convergence orders of the various numerical schemes. In all the numerical experiments below, the sample size is M = 1000, and the “exact” solutions are computed numerically with high precision (the integral is evaluated as a Riemann sum with 20,000 subintervals).
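The procedure can be reproduced on a small scale. The Python sketch below is a hypothetical, scaled-down version: the test equation dy/dt = -y + W_t (not one of (76)–(79)), M = 200 samples instead of 1000, and a reference solution obtained from the trapezoidal rule on \(2^{11}\) subintervals applied to \(y(T) = e^{-T} y_{0} + \int _{0}^{T} e^{-(T-s)} W_{s} \, ds\) are all our choices. It estimates \(\mathcal {E}^{G}\) at T as the root-mean-square error over the sampled paths for the Euler scheme \(y_{n+1} = y_{n} + h f(y_{n}, \eta _{t_{n}})\) with \(h = 2^{-3}, \cdots , 2^{-7}\), and fits a least-squares line to \(\log \mathcal {E}^{G}\) versus \(\log h\). By Remark 13, a slope close to 1 is expected for Brownian noise.

```python
import math
import random


def brownian_path(n_fine, t_end, rng):
    """Brownian motion on a uniform grid with n_fine subintervals, W_0 = 0."""
    dt = t_end / n_fine
    w = [0.0]
    for _ in range(n_fine):
        w.append(w[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return w


def reference_solution(w, y0, t_end):
    """y(T) = e^{-T} y0 + int_0^T e^{-(T-s)} W_s ds, with the integral by the trapezoidal rule."""
    n_fine = len(w) - 1
    dt = t_end / n_fine
    vals = [math.exp(-(t_end - k * dt)) * w[k] for k in range(n_fine + 1)]
    integral = dt * (sum(vals) - 0.5 * (vals[0] + vals[-1]))
    return math.exp(-t_end) * y0 + integral


def euler(w, n_steps, y0, t_end):
    """Euler scheme y_{n+1} = y_n + h * f(y_n, W_{t_n}) for the hypothetical f(y, eta) = -y + eta."""
    stride = (len(w) - 1) // n_steps  # coarse grid points are a subset of the fine grid
    h = t_end / n_steps
    y = y0
    for n in range(n_steps):
        y += h * (-y + w[n * stride])
    return y


def least_squares_slope(xs, ys):
    x_mean, y_mean = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den


def estimate_order(n_samples=200, n_fine=2 ** 11, t_end=1.0, y0=1.0, seed=1):
    rng = random.Random(seed)
    step_counts = [2 ** i for i in range(3, 8)]  # h = 2^-3, ..., 2^-7
    sq_err = [0.0] * len(step_counts)
    for _ in range(n_samples):
        w = brownian_path(n_fine, t_end, rng)
        exact = reference_solution(w, y0, t_end)
        for i, n_steps in enumerate(step_counts):
            sq_err[i] += (euler(w, n_steps, y0, t_end) - exact) ** 2
    rms = [math.sqrt(e / n_samples) for e in sq_err]  # Monte Carlo estimate of E^G at T
    hs = [t_end / n for n in step_counts]
    return least_squares_slope([math.log(h) for h in hs], [math.log(e) for e in rms])


order = estimate_order()
print(order)  # expected to be close to 1
```

All step sizes reuse the same Brownian paths, which keeps the fitted slope stable even with a modest sample size.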

Example 14

Consider the scalar RODE:

For each realization \(\eta ^{(i)}\), (76) has the explicit solution:

The initial value is y0 = 1 and the final time is T = 1.

-

(i)

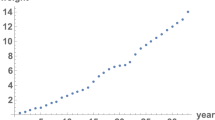

Assume that ηt is a Wiener process. The Euler scheme (43) of order 1, the RODE-Taylor schemes (44) of order 1, (45) of order \(\frac {3}{2}\) and (46) of order 2 are applied to simulate the RODE (76). The global mean-square errors \(\mathcal {E}_{n+1}^{G}\) versus the time steps h = 2−i, i = 3, 4, 5, 6, 7, 8 are plotted in Fig. 1. The two dashed lines are reference lines with slopes 1 and 2, respectively.

- (ii) Assume that ηt is an fBm with Hurst parameter \(\frac {3}{4}\). The RODE-Taylor schemes (47) of order \(\frac {3}{4}\), (48) of order 1, (49) of order \(\frac {3}{2}\), and (50) of order 2 are applied to simulate the RODE (76). The global mean-square errors \(\mathcal {E}^{G}_{n+1}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, are plotted in Fig. 2. The two dashed lines are reference lines with slopes 0.75 and 2, respectively.

- (iii) Assume that ηt is an fBm with Hurst parameter \(\frac {1}{3}\). The RODE-Taylor schemes (51) of order 1 and (52) of order 2 are applied to simulate the RODE (76). The global mean-square errors \(\mathcal {E}_{n+1}^{G}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, are plotted in Fig. 3. The two dashed lines are reference lines with slopes 1 and 2, respectively.

Fig. 1: RODE-Taylor schemes for (76) with Wiener process

Fig. 2: RODE-Taylor schemes for (76) with fBm with Hurst parameter \(\frac {3}{4}\)

Fig. 3: RODE-Taylor schemes for (76) with fBm with Hurst parameter \(\frac {1}{3}\)

Example 15

Consider the scalar RODE with affine noise:

For each realization \(\eta ^{(i)}\), (77) has the explicit solution:

The initial value is y0 = 1 and the final time is T = 1.

- (i) Assume that \(\eta _{t} = \sin W_{t}\), where Wt is a Wiener process. The Euler scheme (43) of order 1, the RODE-Taylor schemes (44) of order 1, (45) of order \(\frac {3}{2}\), and (46) of order 2, and the affine-RODE-Taylor schemes (62) of order 1, (63) of order 2, and (64) of order 3 are applied to simulate (77). The global mean-square errors \(\mathcal {E}_{n+1}^{G}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, using the RODE-Taylor schemes and the affine-RODE-Taylor schemes are plotted in Figs. 4 and 5, respectively. The dashed reference lines in Fig. 4 have slopes 1 and 2, respectively, and those in Fig. 5 have slopes 1 and 3, respectively.

- (ii) Assume that \(\eta _{t} = \sin B^{\frac {3}{4}}(t)\), where \(B^{\frac {3}{4}}(t)\) is an fBm with Hurst parameter \(\frac {3}{4}\). The RODE-Taylor schemes (47) of order \(\frac {3}{4}\), (48) of order 1, (49) of order \(\frac {3}{2}\), and (50) of order 2, and the affine-RODE-Taylor schemes (62) of order 1, (63) of order 2, and (64) of order 3 are applied to simulate (77). The global mean-square errors \(\mathcal {E}_{n+1}^{G}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, using the RODE-Taylor schemes and the affine-RODE-Taylor schemes are plotted in Figs. 6 and 7, respectively. The dashed reference lines in Fig. 6 have slopes 0.75 and 2, respectively, and those in Fig. 7 have slopes 1 and 3, respectively.

- (iii) Assume that \(\eta _{t} = \sin B^{\frac {1}{3}}(t)\), where \(B^{\frac {1}{3}}(t)\) is an fBm with Hurst parameter \(\frac {1}{3}\). The RODE-Taylor schemes (51) of order 1 and (52) of order 2, and the affine-RODE-Taylor schemes (62) of order 1, (63) of order 2, and (64) of order 3 are applied to simulate (77). The global mean-square errors \(\mathcal {E}_{n+1}^{G}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, using the RODE-Taylor schemes and the affine-RODE-Taylor schemes are plotted in Figs. 8 and 9, respectively. The dashed reference lines in Fig. 8 have slopes 1 and 2, respectively, and those in Fig. 9 have slopes 1 and 3, respectively.

Fig. 4: RODE-Taylor schemes for (77) with Wiener process

Fig. 5: Affine-RODE-Taylor schemes for (77) with Wiener process

Fig. 6: RODE-Taylor schemes for (77) with fBm with Hurst parameter \(\frac {3}{4}\)

Fig. 7: Affine-RODE-Taylor schemes for (77) with fBm with Hurst parameter \(\frac {3}{4}\)

Fig. 8: RODE-Taylor schemes for (77) with fBm with Hurst parameter \(\frac {1}{3}\)

Fig. 9: Affine-RODE-Taylor schemes for (77) with fBm with Hurst parameter \(\frac {1}{3}\)

Example 16

Consider the scalar RODE with 2-dimensional affine noise process consisting of one Brownian motion and one fBm:

For each realization \(\eta ^{(i)} = ({\eta }_{t}^{1(i)}, {\eta }_{t}^{2(i)})\), (78) has the explicit solution:

The initial value is y0 = 1 and the final time is T = 1.

Assume that \({\eta ^{1}_{t}} = \sin W_{t}\), where Wt is a Brownian motion, and \({\eta ^{2}_{t}} = \sin B_{t}^{\frac {3}{4}}\), where \(B_{t}^{\frac {3}{4}}\) is an fBm with Hurst parameter \(\frac {3}{4}\). The RODE-Taylor schemes (53) of order \(\frac {1}{2}\), (54) of order 1, and (55) of order \(\frac {3}{2}\), and the affine-RODE-Taylor schemes (66) of order 1 and (67) of order 2 are applied to simulate (78).

The global mean-square errors \(\mathcal {E}_{n+1}^{G}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, using the RODE-Taylor schemes and the affine-RODE-Taylor schemes are plotted in Figs. 10 and 11, respectively. The dashed reference lines in Figs. 10 and 11 have slopes 1 and 2, respectively.

Fig. 10: RODE-Taylor schemes for (78) with Wiener process and fBm

Fig. 11: Affine-RODE-Taylor schemes for (78) with Wiener process and fBm

Example 17

Consider the scalar RODE with scalar Itô diffusion noise:

For each realization \({W}_{t}^{(i)}\), the solution of (80) is:

and the explicit solution to (79) is then:

The initial values are y0 = 1 and η0 = 1, the final time is T = 1, and the parameter is a = 1.

The Itô-RODE-Taylor schemes (74) of order \(\frac {3}{2}\) and (75) of order 2 are applied to simulate solutions of (79)–(80). The global mean-square errors \(\mathcal {E}^{G}_{n+1}\) versus the time steps \(h = 2^{-i}\), i = 3, 4, 5, 6, 7, 8, using the Itô-RODE-Taylor schemes are plotted in Fig. 12, where the dashed reference lines have slopes 1 and 2, respectively.

7 Closing remarks

The pathwise convergence rates of RODE-Taylor schemes were studied by Jentzen and Kloeden in [10], and those of affine-RODE-Taylor schemes by Asai and Kloeden in [3]. In this work, we first establish a general theorem relating the local and global mean-square convergence rates for RODEs. We then investigate the global mean-square convergence rates of the RODE-Taylor, affine-RODE-Taylor, and Itô-RODE-Taylor schemes. For the same scheme, the mean-square convergence rate appears to be ε higher than the pathwise convergence rate (see, e.g., [9, 12]); that is, the arbitrarily small loss ε > 0 in the pathwise order does not occur in the mean-square sense.

References

Asai, Y., Kloeden, P.E.: Numerical schemes for random ODEs via stochastic differential equations. Commun. Appl. Anal. 17, 511–528 (2013)

Asai, Y., Kloeden, P.E.: Multi-step methods for random ODEs driven by Itô diffusions. J. Comput. Appl. Math. 294, 210–224 (2016)

Asai, Y., Kloeden, P.E.: Numerical schemes for random ODEs with affine noise. Numer. Algorithms 72, 155–171 (2016)

Asai, Y., Herrmann, E., Kloeden, P.E.: Stable integration of stiff random ordinary differential equations. Stoch. Anal. Appl. 31, 293–313 (2013)

Bunke, H.: Gewöhnliche Differentialgleichungen mit zufälligen Parametern. Akademie-Verlag, Berlin (1972)

Chen, C., Hong, J.: Mean-square convergence of numerical approximations for a class of backward stochastic differential equations. Discrete Contin. Dyn. Syst. Ser. B 8, 2051–2067 (2013)

Cortés, J.C., Jódar, L., Villafuerte, L.: Numerical solution of random differential equations: a mean square approach. Math. Comput. Model. 45, 757–765 (2007)

Grüne, L., Kloeden, P.E.: Pathwise approximation of random ordinary differential equations. BIT 41, 711–721 (2001)

Han, X., Kloeden, P.E.: Random Ordinary Differential Equations and Their Numerical Solution. Springer Nature, Singapore (2017)

Jentzen, A., Kloeden, P.E.: Pathwise Taylor schemes for random ordinary differential equations. BIT Numer. Math. 49, 113–140 (2009)

Jentzen, A., Kloeden, P.E.: Taylor Approximations of Stochastic Partial Differential Equations. SIAM, Philadelphia (2011)

Kloeden, P.E., Jentzen, A.: Pathwise convergent higher order numerical schemes for random ordinary differential equations. Proc. R. Soc. A 463, 2929–2944 (2007)

Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Heidelberg (1992)

Milstein, G.N.: Numerical Integration of Stochastic Differential Equations. Kluwer Academic Publishers Group, Dordrecht (1995)

Milstein, G.N., Tretyakov, M.V.: Stochastic Numerics for Mathematical Physics. Springer, New York (2004)

Stuart, A.M., Humphries, A.R.: Dynamical Systems and Numerical Analysis. Cambridge University Press, Cambridge (1996)

Acknowledgments

The authors would like to express their sincere gratitude to Prof. Dr. Arnulf Jentzen for his insightful comments. The authors would also like to thank the anonymous referees for their insightful comments, which have led to the much improved quality of this work.

Funding

Wang is partially supported by the National Natural Science Foundation of China (11101184, 11271151), Scientific and Technological Development Plan of Jilin Province (20200201251JC) and China Scholarship Council (201506175081), Cao is partially supported by the National Science Foundation (DMS1620150), Han is partially supported by Simons Foundation (Collaboration Grants for Mathematicians 429717), and Kloeden is partially supported by the National Natural Science Foundation of China (11571125).


About this article

Cite this article

Wang, P., Cao, Y., Han, X. et al. Mean-square convergence of numerical methods for random ordinary differential equations. Numer Algor 87, 299–333 (2021). https://doi.org/10.1007/s11075-020-00967-w


DOI: https://doi.org/10.1007/s11075-020-00967-w

Keywords

- Random ordinary differential equations

- Mean-square convergence

- Integral Taylor expansion

- Affine noise

- Brownian motions

- Fractional Brownian motion