Abstract

In this work, a bilinear residual network method is proposed to solve nonlinear evolution equations. In a deep neural network, the activation function in the final layer cannot interact with the neurons inside the network, whereas a residual network can transfer the input layer to the activation function in the final layer and thus realize interaction within the network. This does not increase the complexity of the model and gives more interactive results. The steps for obtaining exact analytical solutions through the residual network are given. Rogue wave solutions of the Caudrey–Dodd–Gibbon–Kotera–Sawada-like equation are obtained by the bilinear residual network method, and characteristic plots and a dynamic analysis of these rogue waves are given.


1 Introduction

In deep learning, increasing the number of network layers is generally accompanied by higher consumption of computing resources, a tendency to overfit, and the vanishing-gradient problem. He et al. [1] found that as the number of layers increases, the network degrades. When the network degrades, a shallow network can achieve a better training effect than a deep one. If the features of the lower layers are transmitted to the higher layers, the result should be at least no worse than that of the shallow network. Residual neural networks were proposed on this basis: via “shortcut connections,” the underlying features are transmitted to the deep layers. Most importantly, this process adds no extra parameters or model complexity. Zhang et al. [2] proposed the bilinear neural network method (BNNM). Zhang et al. then used BNNM to obtain exact analytical solutions of the (3+1)-dimensional Jimbo–Miwa equation [3], generalized lump solutions of the Caudrey–Dodd–Gibbon–Kotera–Sawada-like (CDGKS-like) equation [4], and interaction solutions of the p-gBKP equation [5, 6]. Building on the bilinear method, BNNM brought the neural network model into the field of analytical solutions of partial differential equations for the first time. Among bilinear methods, the Hirota bilinear operator method is the best known. Hirota first proposed the D operator [7]:
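For reference, the standard definition of Hirota's bilinear operator acting on a pair of functions f and g is restated below (our restatement of the textbook form in [7]), followed by two elementary expansions that are used repeatedly in bilinear calculations:

$$D_x^{m}D_t^{n}\,(f\cdot g)=\left(\frac{\partial}{\partial x}-\frac{\partial}{\partial x'}\right)^{m}\left(\frac{\partial}{\partial t}-\frac{\partial}{\partial t'}\right)^{n} f(x,t)\,g(x',t')\Big|_{x'=x,\,t'=t},$$

$$D_x^{2}\,f\cdot f=2\left(f f_{xx}-f_x^{2}\right),\qquad D_xD_t\,f\cdot f=2\left(f f_{xt}-f_x f_t\right).$$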

Many physical phenomena have been studied via the bilinear method: soliton solutions [8,9,10,11,12,13], localized waves [14,15,16,17], rogue wave solutions [18,19,20,21], lump solutions [22], solitary waves [23], lump-type solutions [24,25,26,27], breather solutions [28], interactions [29,30,31,32], and M-lump solutions [33]. Based on the theory of the Hirota bilinear method, Ma [34] proposed a generalized bilinear method with the following generalized bilinear operator:

where \(\alpha _{p}^s=(-1)^{r_{p}(s)}\), with \(s \equiv r_{p}(s) \bmod p\) and \(0\le r_p(s)<p\), and where, in general, \( \alpha _{p}^i \alpha _{p}^j \ne \alpha _{p}^{i+j}\) for \(i, j\ge 0,\,p\ge 2\).
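To see how the sign factor changes the operator, the sketch below expands the single-variable case \(D_{p,x}^m f\cdot f=\sum_{i=0}^{m}\binom{m}{i}\alpha_p^{i}\,\partial_x^{m-i}f\,\partial_x^{i}f\) (a standard special case restated here, not copied from the paper) and collects the coefficients of products of x-derivatives. For p = 2 it reproduces Hirota's \(D_x^4 f\cdot f=2(ff_{xxxx}-4f_xf_{xxx}+3f_{xx}^2)\); for p = 3 it gives \(D_{3,x}^3 f\cdot f=2ff_{xxx}\) and \(D_{3,x}^4 f\cdot f=6f_{xx}^2\). The function names are illustrative.

```python
from math import comb
from typing import Dict, Tuple


def alpha(p: int, s: int) -> int:
    """Sign factor alpha_p^s = (-1)^{r_p(s)}, where r_p(s) = s mod p."""
    return -1 if (s % p) % 2 else 1


def bilinear_coefficients(p: int, m: int) -> Dict[Tuple[int, int], int]:
    """Expand D_{p,x}^m f.f = sum_i C(m, i) alpha_p^i f^{(m-i)} f^{(i)}.

    Each key (j, k) with j <= k stands for the product f^{(j)} f^{(k)} of
    x-derivatives; mirror terms are merged and zero coefficients dropped.
    """
    terms: Dict[Tuple[int, int], int] = {}
    for i in range(m + 1):
        key = (min(i, m - i), max(i, m - i))
        terms[key] = terms.get(key, 0) + comb(m, i) * alpha(p, i)
    return {key: c for key, c in terms.items() if c != 0}


if __name__ == "__main__":
    print(bilinear_coefficients(2, 4))   # {(0, 4): 2, (1, 3): -8, (2, 2): 6}
    print(bilinear_coefficients(3, 3))   # {(0, 3): 2}
    print(bilinear_coefficients(3, 4))   # {(2, 2): 6}
```

For every even p, s mod p has the same parity as s, so \(\alpha_p^s=(-1)^s\) and the operator coincides with Hirota's; only odd p ≥ 3 yields new operators, and p = 3 already breaks the product rule, since \(\alpha_3^1\alpha_3^2=-1\) while \(\alpha_3^3=1\).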

The generalized bilinear method is illustrated below using the following (2+1)-dimensional CDGKS equation:

where \(\partial ^{-1}\) is the integral operator. Konopelchenko et al. first proposed Eq. (2) in 1984 [35]. Fang et al. [36] obtained lump-type solutions, fusion and fission phenomena, and rogue waves of Eq. (2). Manafian et al. [37] obtained interaction solutions and N-lump localized waves of the variable-coefficient CDGKS equation using the Hirota bilinear method. Geng et al. [38] obtained Riemann theta function solutions of the CDGSK hierarchy via the characteristic polynomial. Cheng et al. [39] studied the interaction behavior of the (2+1)-dimensional CDGKS equation. Tang et al. [40] obtained lump solutions of the CDGKS equation via a direct method.

Generally, by using the following bilinear transformation,

the bilinear form of Eq. (2) is obtained as follows:

When \(p=2\), the bilinear operator D reduces to the Hirota bilinear operator, so we get

When \(p=3\), the bilinear operator D follows Ma's generalized bilinear operator, and the generalized bilinear equation is obtained as follows:

When \(p=5\), the bilinear operator D follows Ma's generalized bilinear operator, and the generalized bilinear equation is obtained as follows:

By using the following bilinear transformation,

the following CDGKS-like equation can be derived from the generalized bilinear equation (7):

where \(v_x=u_y, w_x=u_{yy}\).

The rest of this work is organized as follows. Section 2 introduces the residual network and proposes a new method, the bilinear residual network method (BRNM), for solving exact analytical solutions of nonlinear evolution equations (NLEEs). Sections 3 and 4 give applications of BRNM: rogue wave solutions of Eq. (9) are obtained via the “2-2” and “2-3” residual network models, and characteristic plots and a dynamic analysis of these rogue waves are given. Section 5 concludes the paper.

2 BRNM

2.1 Residual block and residual network

To realize internal interaction in the neural network without increasing its complexity, we use “shortcut connections” to form a residual network, which is built by stacking many residual blocks. A residual block can be written as

where \(F(\cdot )\) is the activation function, \(\overrightarrow{x}\) represents the input vector, and \(\overrightarrow{\xi _{i}}\) represents the neurons of the i-th layer,

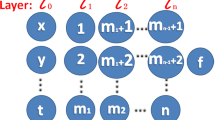

where w represents the weight vector. The residual block with “shortcut connections” is illustrated in Fig. 1. Since “shortcut connections” add neither extra parameters nor computational complexity, the whole neural network model can produce more interactive results without increasing the number of parameters or the complexity.
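A minimal numerical sketch of one residual block follows. Because the block formula is not reproduced here, it assumes that \(\xi\) is an affine map (weights w, thresholds b) of the previous layer's activated output and that \(\overrightarrow{x}\) and \(\overrightarrow{\xi}\) have the same length so they can be added; the names `affine` and `residual_block` are hypothetical.

```python
from typing import Callable, List, Sequence


def affine(weights: Sequence[Sequence[float]], bias: Sequence[float],
           v: Sequence[float]) -> List[float]:
    """Return W v + b, with W given row by row."""
    return [sum(wi * vi for wi, vi in zip(row, v)) + b
            for row, b in zip(weights, bias)]


def residual_block(x: Sequence[float],
                   w1: Sequence[Sequence[float]], b1: Sequence[float],
                   w2: Sequence[Sequence[float]], b2: Sequence[float],
                   act: Callable[[float], float]) -> List[float]:
    """Two affine layers with an identity shortcut, returning act(xi + x).

    xi = W2 act(W1 x + b1) + b2 is the pre-activation of the second layer.
    The shortcut adds the block input x to xi before the final activation,
    so x and xi must have the same length.
    """
    hidden = [act(z) for z in affine(w1, b1, x)]
    xi = affine(w2, b2, hidden)
    # The shortcut reuses x directly: no extra weights are introduced.
    return [act(a + s) for a, s in zip(xi, x)]


if __name__ == "__main__":
    identity = lambda z: z
    eye = [[1.0, 0.0], [0.0, 1.0]]
    double = [[2.0, 0.0], [0.0, 2.0]]
    print(residual_block([1.0, -3.0], eye, [0.0, 0.0], double, [0.0, 0.0], identity))
    # [3.0, -9.0]: xi = 2x, plus the shortcut x
```

The parameter count is that of the two affine layers alone, which is the sense in which the shortcut adds no parameters.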

Taking the output of the residual network built by stacking these residual blocks as the test function f, we finally obtain the following expression:

where \(F(\cdot )\) is the activation function, \(\overrightarrow{x}\) represents the input vector, and \(\overrightarrow{\xi _{n}}\) represents the neurons of the n-th layer. The residual network with “shortcut connections” is illustrated in Fig. 2. The residual network shares not only the input vector \(\overrightarrow{x}\) of the input layer, but also the vector \(F(\overrightarrow{\xi _{n}}+\overrightarrow{x})\) carried by the cross-layer connection. In addition, we define a generalized residual network, in which the “shortcut connections” can span one layer, two layers, or even 3, 4, \(\dots \), i layers, as
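The generalized shortcut span can be sketched as follows, under the same assumptions as a plain residual block (square affine layers, identity shortcut added before the activation). The parameter `span` plays the role of the number of layers a shortcut crosses; the function and parameter names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Layer = Tuple[Sequence[Sequence[float]], Sequence[float]]   # (W, b), W square


def affine(weights: Sequence[Sequence[float]], bias: Sequence[float],
           v: Sequence[float]) -> List[float]:
    """Return W v + b, with W given row by row."""
    return [sum(wi * vi for wi, vi in zip(row, v)) + b
            for row, b in zip(weights, bias)]


def generalized_resnet(x: Sequence[float], layers: Sequence[Layer],
                       act: Callable[[float], float], span: int = 2) -> List[float]:
    """Stack layers so that a shortcut spans every `span` layers.

    At the end of each group of `span` layers, the group's input is added to
    the pre-activation before `act`; span=1 puts a shortcut around every
    layer, span=2 gives the usual two-layer residual block. Trailing layers
    that do not complete a group get no shortcut.
    """
    if span < 1:
        raise ValueError("span must be at least 1")
    h = list(x)
    group_input = list(x)
    for i, (w, b) in enumerate(layers, start=1):
        z = affine(w, b, h)
        if i % span == 0:
            z = [a + s for a, s in zip(z, group_input)]
        h = [act(v) for v in z]
        if i % span == 0:
            group_input = h   # the next group starts from this output
    return h


if __name__ == "__main__":
    eye = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
    for span in (1, 2, 4):
        print(span, generalized_resnet([1.0, 2.0], [eye] * 4, lambda z: z, span))
    # 1 [16.0, 32.0], then 2 [4.0, 8.0], then 4 [2.0, 4.0]
```

With identity layers each shortcut simply doubles the signal, so the printed values count how many shortcuts a given span places in a four-layer stack.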

2.2 Bilinear residual network method

By using “shortcut connections,” the residual network can enrich the diversity of solutions without increasing the model parameters or complexity. How, then, can the residual network be used to obtain the original function of a nonlinear evolution equation? Next, we give the specific steps for solving exact analytical solutions with the bilinear residual network.

- Step 1: Using the Hirota bilinear method (or the generalized bilinear method), derive the bilinear equation of the given nonlinear evolution equation. For a system of nonlinear evolution equations, the equations can be treated separately and combined in the calculation of Step 5.

- Step 2: The bilinear equation obtained in Step 1 is an equation in the test function f:

  $$\begin{aligned} B(f, f_{x}, f_{y}, f_{t}, f_{xy}, f_{ty}, f_{xt}, f_{xyt}, \dots)=0. \end{aligned}$$(14)

  Substituting the test function f constructed by the residual network, Eq. (12), into Eq. (14) yields a nonlinear overdetermined algebraic equation in \(x, y, t, xy, xt, ty, xyt, F(x,y,t,\dots),\dots\):

  $$\begin{aligned} A(x, y, t, xy, xt, ty, xyt, F(x,y,t,\dots),\dots) =0. \end{aligned}$$(15)

- Step 3: Extracting the coefficients of \(x, y, t, xy, xt, ty, xyt, F(x,y,t,\dots),\dots\) from Eq. (15) gives a system of nonlinear algebraic equations in the weights w and thresholds b.

- Step 4: Solve this algebraic system for the weights w and thresholds b by symbolic computation to obtain its coefficient solutions.

- Step 5: Substituting these coefficient solutions into the test function f, Eq. (12), gives exact analytical solutions of the bilinear equation (14).

- Step 6: Obtain the analytical solutions u of the NLEEs via the Hirota (or generalized) bilinear transformation.
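As a hedged illustration of Steps 2 to 5, the sketch below swaps in a much simpler, well-known example instead of the CDGKS-like equation: the bilinear KdV equation \((D_xD_t+D_x^4)f\cdot f=0\) with a one-neuron test function \(f=1+\exp(kx+\omega t)\), i.e. a single exp activation whose weights are k and \(\omega\). Since \(D_x^mD_t^n e^{\theta_a}\cdot e^{\theta_b}=(k_a-k_b)^m(\omega_a-\omega_b)^n e^{\theta_a+\theta_b}\), collecting the coefficient of each exponential leaves the single condition \(2(k\omega+k^4)=0\), whose solution is \(\omega=-k^3\). Exact rational arithmetic stands in for the Maple computation, and all names are illustrative.

```python
from collections import defaultdict
from fractions import Fraction
from typing import Dict, Tuple

Exponent = Tuple[Fraction, Fraction]   # (k, omega) in exp(k x + omega t)


def kdv_bilinear(f: Dict[Exponent, Fraction]) -> Dict[Exponent, Fraction]:
    """Steps 2-3: substitute f into (D_x D_t + D_x^4) f.f and collect coefficients.

    f maps (k, omega) to c for f = sum c * exp(k x + omega t); the result maps
    each combined exponent to its coefficient, with zero coefficients dropped.
    """
    out: Dict[Exponent, Fraction] = defaultdict(Fraction)
    for (ka, wa), ca in f.items():
        for (kb, wb), cb in f.items():
            dk, dw = ka - kb, wa - wb
            # D_x^m D_t^n e^{th_a}.e^{th_b} = dk^m dw^n e^{th_a + th_b}
            out[(ka + kb, wa + wb)] += ca * cb * (dk * dw + dk ** 4)
    return {e: c for e, c in out.items() if c != 0}


def one_neuron(k: Fraction, omega: Fraction) -> Dict[Exponent, Fraction]:
    """Test function f = 1 + exp(k x + omega t): one neuron with exp activation."""
    return {(Fraction(0), Fraction(0)): Fraction(1), (k, omega): Fraction(1)}


def solve_omega(k: Fraction) -> Fraction:
    """Step 4: the coefficient of exp(k x + omega t) is linear in omega (k != 0)."""
    def coeff(omega: Fraction) -> Fraction:
        return kdv_bilinear(one_neuron(k, omega)).get((k, omega), Fraction(0))
    c0, c1 = coeff(Fraction(0)), coeff(Fraction(1))
    return -c0 / (c1 - c0)


k = Fraction(2)
omega = solve_omega(k)
print(omega)                               # -8, i.e. omega = -k**3
print(kdv_bilinear(one_neuron(k, omega)))  # {}: every collected coefficient vanishes (Step 5)
```

Step 6 would then apply \(u=2(\ln f)_{xx}\), which turns this f into the one-soliton solution of \(u_t+6uu_x+u_{xxx}=0\).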

Unlike approximate numerical methods, the bilinear residual network method proposed in this paper solves for exact analytical solutions of the nonlinear model. The similarities between the two approaches can be seen in Figs. 3 and 4: both algorithms use a neural network model to fit the original function of the partial differential equation. As shown in Fig. 3, the physics-informed neural network (PINN) method substitutes data points to obtain an optimization problem in the weights W, and solves it for the optimal weight parameters W. In contrast, the bilinear residual network method extracts the coefficients of the independent variables \(x, \dots , t\) to obtain a system of equations containing the weight parameters W (Fig. 4); solving this system gives the exact constraint relationships among the weights W. Data-driven methods usually require discrete data points, so the information in the original equation cannot be fully utilized. The bilinear residual network method requires no discrete data points, so exact analytical solutions of the original equation can be obtained.

3 Rogue wave solutions and the “2-2” residual network

The “2-2” ResNet with generalized activation functions can be expressed as follows:

Setting \(F_1(\xi _1)=\xi _1, F_{2}(\xi _2)=\exp (\xi _2), F_{3}(\cdot )=(\cdot )^2, F_{4}(\cdot )=(\cdot )^2\), we obtain

Substituting the test function (17), constructed by the “2-2” ResNet model (Fig. 5), into the generalized bilinear equation (7) and collecting the coefficients of each term, we obtain a system of nonlinear algebraic equations. Solving it by symbolic computation with Maple gives the following solution of this algebraic system:

Fig. 5 “2-2” residual network model of Eq. (16), with \(F_1(\xi _1)=\xi _1, F_{2}(\xi _2)=\exp (\xi _2), F_{3}(\cdot )=(\cdot )^2, F_{4}(\cdot )=(\cdot )^2\)

Fig. 6 Characteristic diagrams of the rogue waves for Eq. (19) with \(w_{2,3}=1, w_{1,4}=1, w_{X, 1}=1, w_{y, 1}=2\)

Substituting the solution above into the test function (17), the analytical solution of the original equation (9) is obtained via the generalized bilinear transformation (8):

Fig. 7 “2-3” residual network model of Eq. (20), with \(F_1(\xi _1)=\xi _1, F_{2}(\xi _2)=\exp (\xi _2), F_{3}(\cdot )=(\cdot )^2, F_{4}(\cdot )=(\cdot )^2, F_{5}(\cdot )=(\cdot )^2\)

Fig. 8 Dynamic evolution 3-D plots of the rogue waves for Eq. (23) at \(t=-0.7, -0.4, 0, 0.35, 0.6\)

The dynamical shapes of the rogue wave solutions are shown in Fig. 6. Two dark solitons and a series of periodic waves appear in Fig. 6a, c. However, the density plot in Fig. 6b shows that the two solitons in Fig. 6a are in fact a series of periodic waves with small energy. Figure 6d shows the curve plots along x, and Fig. 6e the curve plots along y.
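For readers who want to experiment with plots like those in Fig. 6, the sketch below evaluates one possible “2-2” test function with the activations used in this section, \(F_1(\xi_1)=\xi_1\), \(F_2(\xi_2)=\exp(\xi_2)\) and \(F_3=F_4=(\cdot)^2\). Equation (16) is not reproduced here, so the wiring is an assumption: two first-layer neurons fed by x, y and t, two second-layer neurons receiving the first-layer outputs plus a weighted copy of the inputs as the shortcut, and a final constant. Keys such as (2, 3) or ("y", 1) mirror caption labels like \(w_{2,3}\) and \(w_{y,1}\); the function name `f_2_2` is hypothetical.

```python
import math
from typing import Dict, Tuple, Union

Node = Union[str, int]   # "x", "y", "t" for inputs, 1..4 for neurons


def f_2_2(x: float, y: float, t: float,
          W: Dict[Tuple[Node, int], float], b: Dict[int, float]) -> float:
    """Hypothetical "2-2" test function with F1 = id, F2 = exp, F3 = F4 = square.

    W[(source, target)] holds weights and b[n] thresholds, with b[0] a final
    constant; missing entries count as 0. The input shortcut into neurons
    3 and 4 is an assumed wiring, not taken from the paper.
    """
    w = lambda s, d: W.get((s, d), 0.0)
    inputs = {"x": x, "y": y, "t": t}

    def linear(d: int) -> float:
        # Weighted inputs plus threshold for neuron d.
        return sum(w(s, d) * v for s, v in inputs.items()) + b.get(d, 0.0)

    out1 = linear(1)              # F1(xi_1) = xi_1
    out2 = math.exp(linear(2))    # F2(xi_2) = exp(xi_2)
    # Second layer: first-layer outputs plus the shortcut carried by linear(d).
    xi3 = w(1, 3) * out1 + w(2, 3) * out2 + linear(3)
    xi4 = w(1, 4) * out1 + w(2, 4) * out2 + linear(4)
    return xi3 ** 2 + xi4 ** 2 + b.get(0, 0.0)   # F3 = F4 = square


if __name__ == "__main__":
    weights = {("x", 1): 1.0, (1, 3): 1.0, ("t", 2): 1.0, (2, 4): 1.0}
    print(f_2_2(1.0, 0.0, 0.0, weights, {0: 1.0}))
```

The exact weights and thresholds come from solving the algebraic system of Eq. (18); the sketch only shows how such a solution would be evaluated on a grid.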

Fig. 9 Contour plot, density plot and curve plots of the rogue waves for Eq. (23)

4 Rogue wave solutions and the “2-3” residual network

The “2-3” ResNet with generalized activation functions can be expressed as follows:

Setting \(F_1(\xi _1)=\xi _1, F_{2}(\xi _2)=\exp (\xi _2), F_{3}(\cdot )=(\cdot )^2, F_{4}(\cdot )=(\cdot )^2, F_{5}(\cdot )=(\cdot )^2\), we obtain

Substituting the test function (21), constructed by the “2-3” ResNet model (Fig. 7), into the generalized bilinear equation (7) and collecting the coefficients of each term, we obtain a system of nonlinear algebraic equations. Solving it by symbolic computation with Maple gives the following solution of this algebraic system:

Substituting the solution above into the test function (21), the analytical solution of the original equation (9) is obtained via the generalized bilinear transformation (8):

The evolution plots of Eq. (23) at different times are shown in Fig. 8, from which we find that the rogue waves move in the negative X direction as time goes on. As time approaches 0, the two waves slowly merge at one point, and then they slowly spread out again. The contour plot, density plot and curve plots of the rogue waves for Eq. (23) are shown in Fig. 9, from which we find that these rogue waves are composed of two columns of periodic waves.

5 Conclusions

In this work, the bilinear residual network method has been proposed for the first time to obtain exact analytical solutions of NLEEs. Using “shortcut connections,” the shallow-layer parameters are transferred to the deep layers, which increases the interaction within the network without adding parameters or model complexity, so richer interaction solutions can be found. The specific steps of the bilinear residual network method have been given, and the method has been applied to one example, the CDGKS-like equation. Rogue wave solutions have been obtained, and the dynamic characteristics of these rogue waves have been analyzed. In the future, we will try to solve other NLEEs, and even systems of nonlinear partial differential equations, using BRNM. Readers can consult and contribute to our source code (Notes 1 and 2) for implementation details.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Notes

1. (International community) https://github.com/Runfa-Zhang/CDGKS-like.

2. (Chinese community) https://gitee.com/zhangrunfa/CDGKS-like.

References

[1] He, K.M., Zhang, X.Y., Ren, S.Q., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778 (2016). https://doi.org/10.1109/CVPR.2016.90

[2] Zhang, R.F., Bilige, S.D.: Bilinear neural network method to obtain the exact analytical solutions of nonlinear partial differential equations and its application to p-gBKP equation. Nonlinear Dyn. 95, 3041–3048 (2019)

[3] Zhang, R.F., Li, M.C., Yin, H.M.: Rogue wave solutions and the bright and dark solitons of the (3+1)-dimensional Jimbo–Miwa equation. Nonlinear Dyn. 103, 1071–1079 (2021)

[4] Zhang, R.F., Li, M.C., Albishari, M., Zheng, F.C., Lan, Z.Z.: Generalized lump solutions, classical lump solutions and rogue waves of the (2+1)-dimensional Caudrey–Dodd–Gibbon–Kotera–Sawada-like equation. Appl. Math. Comput. 403, 126201 (2021)

[5] Zhang, R.F., Bilige, S.D., Liu, J.G., Li, M.C.: Bright-dark solitons and interaction phenomenon for p-gBKP equation by using bilinear neural network method. Phys. Scr. 96, 025224 (2020)

[6] Zhang, R.F., Bilige, S.D., Temuer, C.: Fractal solitons, arbitrary function solutions, exact periodic wave and breathers for a nonlinear partial differential equation by using bilinear neural network method. J. Syst. Sci. Complex. 34, 122–139 (2021)

[7] Hirota, R.: The Direct Method in Soliton Theory, vol. 155. Cambridge University Press, Cambridge (2004)

[8] Wazwaz, A.M., Kaur, L.: Complex simplified Hirota’s forms and Lie symmetry analysis for multiple real and complex soliton solutions of the modified KdV-Sine-Gordon equation. Nonlinear Dyn. 95, 2209–2215 (2019)

[9] Lan, Z.Z.: Multi-soliton solutions for a (2+1)-dimensional variable-coefficient nonlinear Schrödinger equation. Appl. Math. Lett. 86, 243–248 (2018)

[10] Wazwaz, A.M., Kaur, L.: New integrable Boussinesq equations of distinct dimensions with diverse variety of soliton solutions. Nonlinear Dyn. 97, 83–94 (2019)

[11] Osman, M.S.: One-soliton shaping and inelastic collision between double solitons in the fifth-order variable-coefficient Sawada–Kotera equation. Nonlinear Dyn. 96, 1491–1496 (2019)

[12] Osman, M.S., Wazwaz, A.M.: An efficient algorithm to construct multi-soliton rational solutions of the (2+1)-dimensional KdV equation with variable coefficients. Appl. Math. Comput. 321, 282–289 (2018)

[13] Wazwaz, A.M.: Two new integrable fourth-order nonlinear equations: multiple soliton solutions and multiple complex soliton solutions. Nonlinear Dyn. 94, 2655–2663 (2018)

[14] Wang, C.J., Fang, H.: General high-order localized waves to the Bogoyavlenskii–Kadomtsev–Petviashvili equation. Nonlinear Dyn. 100(1), 583–599 (2020)

[15] Wang, C.J., Fang, H., Tang, X.: State transition of lump-type waves for the (2+1)-dimensional generalized KdV equation. Nonlinear Dyn. 95(4), 2943–2961 (2019)

[16] Wang, C.J., Dai, Z., Liu, C.: Interaction between kink solitary wave and rogue wave for (2+1)-dimensional Burgers equation. Mediterr. J. Math. 13(3), 1087–1098 (2016)

[17] Wang, C.J.: Spatiotemporal deformation of lump solution to (2+1)-dimensional KdV equation. Nonlinear Dyn. 84(2), 697–702 (2016)

[18] Lan, Z.Z., Su, J.J.: Solitary and rogue waves with controllable backgrounds for the non-autonomous generalized AB system. Nonlinear Dyn. 96, 2535–2546 (2019)

[19] Yin, H.M., Tian, B., Zhao, X.C., Zhang, C.R., Hu, C.C.: Breather-like solitons, rogue waves, quasi-periodic/chaotic states for the surface elevation of water waves. Nonlinear Dyn. 97, 21–31 (2019)

[20] Lan, Z.Z.: Rogue wave solutions for a higher-order nonlinear Schrödinger equation in an optical fiber. Appl. Math. Lett. 107, 106382 (2020)

[21] Yin, H.M., Tian, B., Zhang, C.R., Du, X.X., Zhao, X.C.: Optical breathers and rogue waves via the modulation instability for a higher-order generalized nonlinear Schrödinger equation in an optical fiber transmission system. Nonlinear Dyn. 97, 843–852 (2019)

[22] Ma, W.X.: Bilinear equations, Bell polynomials and linear superposition principle. J. Phys. Conf. Ser. 411, 012021 (2013)

[23] Ghanbari, B., Mustafa, I., Rada, L.: Solitary wave solutions to the Tzitzeica type equations obtained by a new efficient approach. J. Appl. Anal. Comput. 9, 568–589 (2019)

[24] Gai, L.T., Ma, W.X., Li, M.C.: Lump-type solution and breather lump-kink interaction phenomena to a (3+1)-dimensional GBK equation based on trilinear form. Nonlinear Dyn. 100, 2715–2727 (2020)

[25] Gai, L.T., Ma, W.X., Li, M.C.: Lump-type solutions, rogue wave type solutions and periodic lump-stripe interaction phenomena to a (3+1)-dimensional generalized breaking soliton equation. Phys. Lett. A 384, 126178 (2020)

[26] Liu, J.G.: Lump-type solutions and interaction solutions for the (2+1)-dimensional generalized fifth-order KdV equation. Appl. Math. Lett. 86, 36–41 (2018)

[27] Liu, J.G., Zhu, W.H.: Various exact analytical solutions of a variable-coefficient Kadomtsev–Petviashvili equation. Nonlinear Dyn. 100, 2739–2751 (2020)

[28] Liu, W., Wazwaz, A.M., Zhang, X.X.: High-order breathers, lumps, and semirational solutions to the (2+1)-dimensional Hirota–Satsuma–Ito equation. Phys. Scr. 94, 075203 (2019)

[29] Ma, W.X., Yong, X.L., Zhang, H.Q.: Diversity of interaction solutions to the (2+1)-dimensional Ito equation. Comput. Math. Appl. 75, 289–295 (2018)

[30] Liu, J.G.: Lump-type solutions and interaction solutions for the (2+1)-dimensional asymmetrical Nizhnik–Novikov–Veselov equation. Eur. Phys. J. Plus 134, 56 (2019)

[31] Hua, Y.F., Guo, B.L., Ma, W.X., Lü, X.: Interaction behavior associated with a generalized (2+1)-dimensional Hirota bilinear equation for nonlinear waves. Appl. Math. Modell. 74, 184–198 (2019)

[32] Ma, W.X.: Lump and interaction solutions to linear (4+1)-dimensional PDEs. Acta Math. Sci. 39B(2), 498–508 (2019)

[33] Zhao, Z.L., He, L.C.: M-lump, high-order breather solutions and interaction dynamics of a generalized (2+1)-dimensional nonlinear wave equation. Nonlinear Dyn. 100, 2753–2765 (2020)

[34] Ma, W.X.: Generalized bilinear differential equations. Stud. Nonlinear Sci. 2(4), 140–144 (2011)

[35] Konopelchenko, B., Dubrovsky, V.: Some new integrable nonlinear evolution equations in 2+1 dimensions. Phys. Lett. A 102(1), 15–17 (1984)

[36] Fang, T., Gao, C.N., Wang, H., Wang, Y.H.: Lump-type solution, rogue wave, fusion and fission phenomena for the (2+1)-dimensional Caudrey–Dodd–Gibbon–Kotera–Sawada equation. Mod. Phys. Lett. B 33, 1950198 (2019)

[37] Manafian, J., Lakestani, M.: N-lump and interaction solutions of localized waves to the (2+1)-dimensional variable-coefficient Caudrey–Dodd–Gibbon–Kotera–Sawada equation. J. Geom. Phys. 150, 103598 (2020)

[38] Geng, X.G., He, G.L., Wu, L.H.: Riemann theta function solutions of the Caudrey–Dodd–Gibbon–Sawada–Kotera hierarchy. J. Geom. Phys. 140, 85–103 (2019)

[39] Cheng, X.P., Yang, Y.Q., Ren, B., Wang, J.Y.: Interaction behavior between solitons and (2+1)-dimensional CDGKS waves. Wave Motion 86, 150–161 (2019)

[40] Tang, Y., Tao, S., Guan, Q.: Lump solitons and the interaction phenomena of them for two classes of nonlinear evolution equations. Comput. Math. Appl. 72(9), 2334–2342 (2016)

Acknowledgements

This work is supported by the National Natural Science Foundation of China under grant Nos. 12061054 and 61877007, and by the Fundamental Research Funds for the Central Universities under No. DUT20GJ205.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest concerning the publication of this manuscript.



About this article

Cite this article

Zhang, RF., Li, MC. Bilinear residual network method for solving the exactly explicit solutions of nonlinear evolution equations. Nonlinear Dyn 108, 521–531 (2022). https://doi.org/10.1007/s11071-022-07207-x
