Abstract

Mathematical models with time delay are often more realistic because the dynamics depend on both current and past states. What remains unclear is how time delay and sudden environmental changes influence the dynamic behavior of such systems. This paper analyzes the long-time behavior of a stochastic Nicholson’s blowflies model with distributed delay and degenerate diffusion. Markov semigroup theory is used to prove that there exists a unique stationary distribution. Moreover, under a certain condition, the expression of the probability density function around the unique positive equilibrium of the deterministic model is given. The results show that sufficiently weak white noise guarantees the existence of a unique stationary distribution, while a sufficiently large mortality rate causes the extinction of Nicholson’s blowflies. Some numerical examples are given to illustrate the effect of the white noise.

1 Introduction

In recent decades, using biological models to study the survival of populations has been an important method and an active research topic. In particular, many mathematicians and biologists have focused on the qualitative analysis of population models. Nicholson’s blowflies model belongs to this class of biological systems, and its equation agrees well with experimental data [1]. It is therefore of practical interest to study the dynamic behavior of Nicholson’s blowflies model.

To the best of our knowledge, since Gurney et al. [1] presented Nicholson’s blowflies model, which explains Nicholson’s blowflies data more accurately, the model and its modifications have been widely studied [1,2,3,4,5]. For example, Zhu et al. [2] discussed the global existence of positive solutions for a stochastic Nicholson’s blowflies model with regime switching and obtained path estimates. Xiong [3] investigated the global exponential stability of positive pseudo-almost periodic solutions. Next, we explain why it is meaningful to build a Nicholson’s blowflies model with distributed delay and white noise.

On the one hand, when modeling ecosystem dynamics, it is desirable to consider models that depend not only on the current state but also on past states. The models in [1,2,3,4,5] cannot fully capture this dependence, while time delay describes it better [6,7,8,9]. Nevertheless, it is difficult to analyze the long-time behavior of discrete time-delay equations. Hence, we use distributed delay in place of discrete delay. For instance, Wang et al. [6] studied the asymptotic stability of the positive equilibrium for a Lotka–Volterra predator–prey system of population allelopathy with distributed delay and proved the existence of Hopf bifurcations. Al-Omari et al. [8] analyzed global stability in a population competition system with distributed delay and harvesting. The difference between this paper and the above articles is that we study the long-term behavior of Nicholson’s blowflies model.

On the other hand, the environment is rarely constant in real life. Because of environmental factors (such as weather and food supply), it is reasonable and practical to investigate biological systems with environmental noise. Many scholars have analyzed time-delay models with random noise [10,11,12]. For example, Hu et al. [10] proved that there is a global almost surely positive solution and obtained asymptotic path estimates of the solution for a stochastic Lotka–Volterra system with unbounded distributed delay. Liu et al. [11] discussed the long-time behavior of a stochastic logistic model with distributed delay. Even so, as far as we know, very few studies have considered the combined influence of distributed delay and environmental noise on Nicholson’s blowflies model.

The originality of this paper is as follows: (i) White noise and distributed delay are both taken into account in Nicholson’s blowflies model. (ii) Extinction and asymptotic stability for a stochastic Nicholson’s blowflies model with distributed delay are established. (iii) The expression of the probability density function around the positive equilibrium point of the deterministic system is obtained. In addition, the questions addressed in this paper are as follows: (i) What are the conditions for the existence of a probability density function around the positive equilibrium point of the deterministic system? (ii) How does the white noise affect the growth of Nicholson’s blowflies?

To answer the above questions, the paper is organized as follows. Section 2 formulates the stochastic Nicholson’s blowflies model with distributed delay and presents some preliminaries. In Sect. 3, we present the main results for the strong kernel case, which include the existence and uniqueness of a globally positive solution, extinction of Nicholson’s blowflies, asymptotic stability and the probability density function of Nicholson’s blowflies model. Section 4 gives some results for the weak kernel. In Sect. 5, we carry out simulations to confirm our results. Section 6 concludes the paper.

2 The model formulation and some preliminaries

We first formulate a stochastic Nicholson’s blowflies model with distributed delay in Sect. 2.1.

2.1 The model formulation

A classic Nicholson’s blowflies model was presented by Gurney et al. [1] as follows:

with initial conditions \(X(s)=\phi (s)\), for \(s\in [-\tau ,0]\), \(\phi \in C([-\tau ,0],[0,+\infty ))\), \(\phi (0)>0\). All parameters in model (1) are assumed to be positive and are listed in Table 1.

Note that Nicholson’s blowflies model (1) is a discrete delay equation, and the long-time behavior of a discrete delay equation is not easy to analyze. To better describe the dynamic behavior of model (1), we introduce a continuous (distributed) time delay into model (1) as follows:

where the kernel \(f:[0,\infty )\rightarrow [0,\infty )\) is a function of time. Following Macdonald [13], the delay kernel is taken to obey a Gamma distribution, that is,

with \(\alpha >0\) and \(n\ge 0\). As is well known, \(n=1\) gives the strong kernel and \(n=0\) gives the weak kernel, i.e.,

Define

Then, by the linear chain technique, system (2) is equivalent to the following system:

If \(\delta <p\), then there exists a unique positive equilibrium point:

Model (3) is unavoidably affected by external environmental factors owing to the randomness of real life. We assume that the per capita daily adult mortality rate \(\delta \) is subject to white noise. Thus, \(\delta \) is replaced by \(\delta -\sigma \dot{B}(t)\), where B(t) is a one-dimensional standard Brownian motion and \(\sigma ^{2}\) is the white noise intensity. The deterministic Nicholson’s blowflies model with distributed delay is then transformed into the following stochastic model:

Having formulated the stochastic model with distributed delay, we present the required definitions and lemmas in Sect. 2.2.
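To make the linear chain technique concrete, the following sketch works it out for the strong kernel. It assumes that model (2) has the form \(X'(t)=-\delta X(t)+p\int _{-\infty }^{t}f(t-s)X(s)e^{-aX(s)}\mathrm{d}s\), that the strong kernel is the usual Gamma kernel \(f(t)=\alpha ^{2}te^{-\alpha t}\), and that the auxiliary variables Y and Z are the convolutions written below; these forms are assumptions chosen to be consistent with the equilibrium \(E^{*}\) and the coefficients \(a_{ij}\) used later, not a quotation of the original displays.

$$\begin{aligned} Y(t)&=\int _{-\infty }^{t}\alpha ^{2}(t-s)e^{-\alpha (t-s)}X(s)e^{-aX(s)}\mathrm{d}s,\quad Z(t)=\int _{-\infty }^{t}\alpha e^{-\alpha (t-s)}X(s)e^{-aX(s)}\mathrm{d}s,\\ \frac{\mathrm{d}X}{\mathrm{d}t}&=-\delta X+pY,\quad \frac{\mathrm{d}Y}{\mathrm{d}t}=\alpha (Z-Y),\quad \frac{\mathrm{d}Z}{\mathrm{d}t}=\alpha \left( Xe^{-aX}-Z\right) . \end{aligned}$$

Setting the right-hand sides to zero gives \(e^{-aX^{*}}=\frac{\delta }{p}\), so that \(X^{*}=\frac{1}{a}\ln \frac{p}{\delta }\) and \(Y^{*}=Z^{*}=\frac{\delta }{p}X^{*}\), which is positive exactly when \(\delta <p\). Under the same assumptions, replacing \(\delta \) by \(\delta -\sigma \dot{B}(t)\) changes only the first equation, to \(\mathrm{d}X=(-\delta X+pY)\mathrm{d}t+\sigma X\mathrm{d}B(t)\); the noise does not enter the equations for Y and Z, which is why the diffusion is degenerate.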

2.2 Some preliminaries

Let \((\Omega , \mathcal {F}, \{\mathcal {F}_{t}\}_{t\ge 0}, \mathbb {P})\) be a complete probability space with a natural filtration \(\{\mathcal {F}_{t}\}_{t\ge 0}\) satisfying the usual conditions (i.e., it is increasing and right continuous while \(\mathcal {F}_{0}\) contains all \(\mathbb {P}\)-null sets), and let B(t) be defined on this complete filtered probability space. Define

Consider the n-dimensional SDE:

with initial value \(\hat{X}(0) = \hat{X}_{0} \in \mathbb {R}^{n}\). Let \(C^{2,1}(\mathbb {R}^{n} \times [t_{0},\infty ); \mathbb {R}_{+})\) be the family of all nonnegative real-valued functions \(V(\hat{X}, t)\) defined on \(\mathbb {R}^{n} \times [t_{0},\infty )\) that are twice continuously differentiable in \(\hat{X}\) and once in t. The differential operator L of Eq. (5) is defined as

If L acts on a function \(V\in C^{2,1}(\mathbb {R}^{n} \times [t_{0},\infty ); \mathbb {R}_{+})\), then

where \(V_{t}=\frac{\partial V}{\partial t}\), \(V_{\hat{X}}=(\frac{\partial V}{\partial \hat{X}_{1}},\frac{\partial V}{\partial \hat{X}_{2}},\ldots ,\frac{\partial V}{\partial \hat{X}_{n}})\) and \(V_{\hat{X}\hat{X}}=(\frac{\partial ^{2}V}{\partial \hat{X}_{i}\partial \hat{X}_{j}})_{n\times n}\).
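As a worked illustration of the operator L, suppose that Eq. (5) has the standard Itô form \(\mathrm{d}\hat{X}=f\mathrm{d}t+g\mathrm{d}B(t)\), so that \(LV=V_{t}+V_{\hat{X}}f+\frac{1}{2}\mathrm{trace}[g^{T}V_{\hat{X}\hat{X}}g]\), and that the first component of a system satisfies \(\mathrm{d}X=(-\delta X+pY)\mathrm{d}t+\sigma X\mathrm{d}B(t)\) while Y carries no diffusion term. Both forms are assumptions made for this example. For the time-independent function \(V=\ln X\), one would then obtain:

$$\begin{aligned} L\ln X=\frac{1}{X}(-\delta X+pY)-\frac{1}{2X^{2}}\sigma ^{2}X^{2}=-\delta +\frac{pY}{X}-\frac{\sigma ^{2}}{2}. \end{aligned}$$

The Itô correction \(-\frac{\sigma ^{2}}{2}\) is the reason why quantities of the type \(\delta +\frac{\sigma ^{2}}{2}\) typically appear in threshold conditions for stochastic models of this kind.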

Lemma 2.1

[2] If \(F(x)=xe^{-ax}\), \(\forall a>0, x>0\), then \(F(x)\le \frac{1}{ae}\).
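The bound in Lemma 2.1 follows from a one-line calculus argument, sketched here for completeness:

$$\begin{aligned} F'(x)=(1-ax)e^{-ax},\quad F'(x)=0\iff x=\frac{1}{a},\quad F\left( \frac{1}{a}\right) =\frac{1}{a}e^{-1}=\frac{1}{ae}. \end{aligned}$$

Since \(F'(x)>0\) on \((0,\frac{1}{a})\) and \(F'(x)<0\) on \((\frac{1}{a},\infty )\), the value \(\frac{1}{ae}\) is the global maximum of F on \((0,\infty )\). For example, with \(a=2\) the bound is \(\frac{1}{2e}\approx 0.184\).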

2.2.1 Markov semigroup

Next, some fundamental definitions and results with regard to Markov semigroup are proposed.

Let \(\mathbb {X}=\mathbb {R}_{+}^{3}\), let \(\Sigma \) be the \(\sigma \)-algebra of Borel subsets of \(\mathbb {X}\), and let m be the Lebesgue measure on the space \((\mathbb {X}, \Sigma )\). Let \(\mathbb {D}\) denote the set of all densities, i.e.,

where \(\Vert \cdot \Vert \) is the norm of the space \(L^{1}=L^{1}(\mathbb {X}, \Sigma , m)\). A linear mapping \(P:L^{1}\rightarrow L^{1}\) is called a Markov operator if \(P(\mathbb {D})\subset \mathbb {D}\) [14].

Definition 2.2

[15] If there is a measurable function \(k: \mathbb {X}\times \mathbb {X}\rightarrow [0, \infty )\) satisfying

then

P is called an integral Markov operator and the function k a kernel.

A family of Markov operators \(\{P(t)\}_{t\ge 0}\) is called a Markov semigroup if it satisfies the following three conditions:

(1) \(P(0)=Id\) (Id is the identity operator);

(2) \(P(t+s)=P(t)P(s)\) for every \(s, t\ge 0\);

(3) for every \(g\in L^1\), the function \(t\mapsto P(t)g\) is continuous with respect to the \(L^1\)-norm.

Definition 2.3

[15] A Markov semigroup \(\{P(t)\}_{t\ge 0}\) is called asymptotically stable if there is an invariant density \(g_{*}\) satisfying

Definition 2.4

[15] A Markov semigroup \(\{P(t)\}_{t\ge 0}\) is known as sweeping concerning a set \(A \in \Sigma \) if

Lemma 2.5

[15] Assume that \(\{P(t)\}_{t\ge 0}\) is an integral Markov semigroup with a continuous kernel \(k(t, \mathbf {x}, \mathbf {y})\) for \(t>0\) satisfying \(\int _{\mathbb {X}}k(t, \mathbf {x}, \mathbf {y})m(\mathrm{d}\mathbf {x})=1\) for all \(\mathbf {y}\in \mathbb {X}\). If

then the Markov semigroup is asymptotically stable or is sweeping with respect to compact sets.

If a Markov semigroup \(\{P(t)\}_{t\ge 0}\) is asymptotically stable or is sweeping with respect to a sufficiently large family of sets, then this property is known as the Foguel alternative.

2.2.2 Fokker–Planck equation

Denote the transition probability function by \(\mathscr {P}(t, x, y,z, A)\), for any \(A\in \Sigma \) and the diffusion process (X(t), Y(t), Z(t)), i.e.,

where (X(t), Y(t), Z(t)) is a solution of (4) with the initial condition \((X(0), Y(0), Z(0))=(x, y, z)\). If, for \(t>0\), the distribution of (X(t), Y(t), Z(t)) is absolutely continuous with respect to the Lebesgue measure, then there is a density U(t, x, y, z) of (X(t), Y(t), Z(t)) which satisfies the following Fokker–Planck equation [16]:

where

A Markov semigroup related to (6) is now briefly described. Let \(P(t)V(x, y, z)=U(t, x, y, z)\), \( \forall ~V\in \mathbb {D}\). Since the operator P(t) is a contraction on \(\mathbb {D}\), it can be extended to a contraction on \(L^1\). The operators \(\{P(t)\}_{t\ge 0}\) then form a Markov semigroup. The infinitesimal generator of \(\{P(t)\}_{t\ge 0}\) is denoted by \(\mathscr {A}\), i.e.,

The adjoint operator of \(\mathscr {A}\) is:

3 Main results in the strong kernel case

We first discuss the existence and uniqueness of a globally positive solution of (4), and then investigate the long-term behavior of model (4).

3.1 Existence and uniqueness of globally positive solution

Theorem 3.1

For any initial value \((X(0), Y(0), Z(0))\in \mathbb {R}_{+}^{3}\), there exists a unique solution of the system (4) with the strong kernel, which will remain in \(\mathbb {R}_{+}^{3}\) with probability one.

Proof

The proof is similar to that in [2, 17, 18]. Here we omit it. \(\square \)

Next, we show that the population X(t) of the stochastic system (4) becomes extinct under a suitable condition.

3.2 Extinction

Theorem 3.2

Let (X(t), Y(t), Z(t)) be a solution of the stochastic system (4) with the strong kernel, for any given initial value \((X(0), Y(0), Z(0))\in \mathbb {R}_{+}^{3}\). If \(p<\delta \), then

That is to say, the population X(t) of the stochastic system (4) becomes extinct with probability one.

Proof

Let

where

Define a \(C^{2}\)-function \(V_{1}(t)\): \(\mathbb {R}_{+}^{3}\rightarrow \mathbb {R}_{+}\)

where \(c_{1}=\frac{\omega _{1}}{\delta }\), \(c_{2}=\frac{\omega _{2}}{\alpha }\) and \(c_{3}=\frac{\omega _{3}}{\alpha }\). Applying Itô’s formula [19] to system (4) leads to

where

Substituting (9) into (8) yields

Integrating from 0 to t and dividing both sides by t, we obtain

where \(M(t)=\int _0^t \frac{c_{1}\sigma X(s)}{V_{1}(s)}\mathrm{d}B(s)\) is a local martingale with \(M(0) = 0\). Moreover, the strong law of large numbers [19] yields

Since \(p<\delta \), \(\lambda =\root 3 \of {\frac{p}{\delta }}<1\), substituting (12) into (11) and then taking the superior limit, we obtain

therefore,

which means that

In other words, the population X(t) becomes extinct with probability one. \(\square \)

Next, we discuss the existence of a unique stable stationary distribution.

3.3 Asymptotic stability

Theorem 3.3

Let (X(t), Y(t), Z(t)) be a solution of the stochastic system (4) with the strong kernel. The distribution of (X(t), Y(t), Z(t)) has a density U(t, x, y, z), \(\forall t > 0\). If \(p>\delta +\frac{\sigma ^{2}}{2}\), then there is a unique density \(U^{*}(x, y, z)\) satisfying

The proof of Theorem 3.3 consists of the following steps:

(1) Using the Hörmander condition [14], we show that the transition function of the process \((X(t), Y(t), Z(t))\) is absolutely continuous.

(2) Using support theorems [20], we prove that the kernel function is positive on \(\mathbb {R}_{+}^{3}\).

(3) By Lemma 2.5, we demonstrate that the Markov semigroup is asymptotically stable or is sweeping with respect to compact sets.

(4) We exclude sweeping by constructing a Khasminskiĭ function.

To carry out the above steps, we give the following Lemmas 3.4–3.7.

Lemma 3.4

For every \((x_{0}, y_{0}, z_{0}) \in \mathbb {X}\) and \(t > 0\), the transition probability function \(\mathscr {P}(t, x_{0}, y_{0}, z_{0}, A)\) has a continuous density \(k(t, x, y, z; x_{0}, y_{0}, z_{0})\) with respect to the Lebesgue measure.

Proof

If \(\mathbf {a}(x)\) and \(\mathbf {b}(x)\) are vector fields on \(\mathbb {R}_{+}^{3}\), then the Lie bracket \([\mathbf {a},\mathbf {b}]\) is the vector field given by

Let

Then Lie bracket \([\mathbf {a},\mathbf {b}]\) is given by

and

where

we have

Thus, for each \((X, Y, Z)\in \mathbb {R}_{+}^{3}\) with \(X\ne \frac{1}{a}\), the vectors \(\mathbf {b},~\mathbf {a}_1~\text {and}~\mathbf {a}_2\) are linearly independent and hence span the space \(\mathbb {R}^{3}\). By the Hörmander Theorem [21], the transition probability function \(\mathscr {P}(t, x_0, y_0, z_0, A)\) has a continuous density \(k(t, x, y, z; x_0, y_0, z_0)\), and \(k\in C^\infty ((0, \infty )\times \mathbb {R}_{+}^{3}\times \mathbb {R}_{+}^{3})\). \(\square \)
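The bracket computation behind this lemma can be sketched as follows. The sketch assumes that the Stratonovich form of system (4) has the drift \(\mathbf {a}\) and the diffusion field \(\mathbf {b}\) written below, and it uses the convention \([\mathbf {a},\mathbf {b}]=(D\mathbf {b})\mathbf {a}-(D\mathbf {a})\mathbf {b}\) with D the Jacobian; both the fields and the choice \(\mathbf {a}_{1}=[\mathbf {a},\mathbf {b}]\), \(\mathbf {a}_{2}=[\mathbf {a},\mathbf {a}_{1}]\) are assumptions chosen to be consistent with the condition \(X\ne \frac{1}{a}\). Entries marked \(*\) are not needed for the determinant.

$$\begin{aligned} \mathbf {a}&=\begin{pmatrix} -(\delta +\frac{\sigma ^{2}}{2})X+pY\\ \alpha (Z-Y)\\ \alpha (Xe^{-aX}-Z) \end{pmatrix},\quad \mathbf {b}=\begin{pmatrix} \sigma X\\ 0\\ 0 \end{pmatrix},\\ \mathbf {a}_{1}&=[\mathbf {a},\mathbf {b}]=\begin{pmatrix} \sigma pY\\ 0\\ -\alpha \sigma X(1-aX)e^{-aX} \end{pmatrix},\quad \mathbf {a}_{2}=[\mathbf {a},\mathbf {a}_{1}]=\begin{pmatrix} *\\ \alpha ^{2}\sigma X(1-aX)e^{-aX}\\ * \end{pmatrix},\\ \det (\mathbf {b},\mathbf {a}_{1},\mathbf {a}_{2})&=\alpha ^{3}\sigma ^{3}X^{3}(1-aX)^{2}e^{-2aX}. \end{aligned}$$

Under these assumptions the determinant vanishes on \(\mathbb {R}_{+}^{3}\) only where \(X=\frac{1}{a}\), which matches the exceptional set in the statement above. With the opposite sign convention for the bracket, only the sign of the determinant changes.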

Lemma 3.5

For every \((x_{1}, y_{1}, z_{1})\in \mathbb {R}_{+}^{3}\) and \((x_{2}, y_{2}, z_{2})\in \mathbb {R}_{+}^{3}\), there exists \(T > 0\) such that \(k(T, x_{2}, y_{2}, z_{2}; x_{1}, y_{1}, z_{1})>0\).

Proof

The positivity of k can be verified by a method based on support theorems [20]. To apply the support theorems, we first write the Itô system (4) in its Stratonovich form:

Next, we fix a point \((x_0, y_0, z_0)\in \mathbb {X}\) and a function \(\phi \in L^2([0, T]; \mathbb {R})\), and consider the following integral system:

Let \(\mathbf {N}=(x, y, z)^T\), \(\mathbf {N}_0=(x_0, y_0, z_0)^T\), and let \(D_{\mathbf {N}_0; \phi }\) denote the Fréchet derivative of the function \(h{\mapsto }\mathbf {N}_{\phi +h}(T): L^2([0, T]; \mathbb {R})\rightarrow \mathbb {X}\). If \(D_{\mathbf {N}_0; \phi }\) has full rank for some \(\phi \in L^2([0, T]; \mathbb {R})\), then \(k(T, x, y, z; x_0, y_0, z_0)>0\) for \(\mathbf {N}=\mathbf {N}_\phi (T)\). Let

where \(\mathbf {f'}\) and \(\mathbf {g'}\) are the Jacobians of

respectively. Let \(Q(t, t_0)~(0\le t_0\le t\le T)\) be a matrix function satisfying \(Q(t_0, t_0)=Id\) and \(\frac{\partial Q(t, t_0)}{\partial t}=\Psi (t)Q(t, t_0)\); then

Step 1: We show that the rank of \(D_{\mathbf {N}_0; \phi }\) is 3. Let \(\varepsilon \in (0, T)\) and \(h(t)=\frac{\chi _{[T-\varepsilon , T]}(t)}{x_\phi (t)}~(t\in [0, T])\), where \(\chi \) is the indicator function of \([T-\varepsilon , T]\). By Taylor expansion,

Therefore,

where \(\mathbf {v}=(-\sigma ,0,0)^{T}\), then compute

and

where \(H=-(\delta +\frac{\sigma ^{2}}{2})+\alpha \phi \) and \(K=e^{-ax}(1-ax)\), thus

where \(X\ne \frac{1}{a}\), so \(\mathbf {v}\), \(\Psi (T)\mathbf {v}\) and \(\Psi ^2(T)\mathbf {v}\) are linearly independent. Therefore, the rank of \(D_{\mathbf {N}_0; \phi }\) is 3.
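The linear independence claimed in Step 1 can be checked numerically. The sketch below assumes that \(\Psi (T)\) has the structure \(\begin{pmatrix} H &amp; p &amp; 0\\ 0 &amp; -\alpha &amp; \alpha \\ \alpha K &amp; 0 &amp; -\alpha \end{pmatrix}\) with \(K=e^{-ax}(1-ax)\), which is an assumption inferred from the definitions of H and K above; H is treated as a free number because it drops out of the determinant. The helper names are hypothetical.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a vector of length 3."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def det3_columns(c0, c1, c2):
    """Determinant of the 3x3 matrix whose columns are c0, c1, c2."""
    return (c0[0] * (c1[1] * c2[2] - c2[1] * c1[2])
            - c1[0] * (c0[1] * c2[2] - c2[1] * c0[2])
            + c2[0] * (c0[1] * c1[2] - c1[1] * c0[2]))


def rank_determinant(x, a, alpha, sigma, p, H):
    """det[v, Psi v, Psi^2 v] for the assumed Psi; nonzero means rank 3."""
    K = math.exp(-a * x) * (1.0 - a * x)
    psi = [[H, p, 0.0],
           [0.0, -alpha, alpha],
           [alpha * K, 0.0, -alpha]]
    v = [-sigma, 0.0, 0.0]
    psi_v = mat_vec(psi, v)
    psi2_v = mat_vec(psi, psi_v)
    return det3_columns(v, psi_v, psi2_v)
```

For this assumed matrix the determinant works out to \(\sigma ^{3}\alpha ^{3}K^{2}\), so it is nonzero exactly when \(x\ne \frac{1}{a}\), in line with the condition stated in Step 1.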

Step 2: We claim that for any two points \(\mathbf {X}_0\in \mathbb {R}_{+}^{3}\) and \(\mathbf {X}\in \mathbb {R}_{+}^{3}\), there exist a control function \(\phi \) and \(T>0\) such that \(\mathbf {X}_\phi (0)=\mathbf {X}_0\) and \(\mathbf {X}_\phi (T)=\mathbf {X}\). To this end, system (14) can be replaced by the following differential equations:

To construct the function \(\phi \), first of all, we find a positive constant T and a \(C^{3}\)-function \(Y_{\phi }\): \([0,T] \rightarrow (0,+\infty )\) such that

and the following boundary value conditions are met:

Next, we split the construction of the function \(Y_\phi \) into three subintervals \([0, \varepsilon _{1}]\), \([\varepsilon _{1}, T-\varepsilon _{2}]\) and \([T-\varepsilon _{2}, T]\), where \(0<\varepsilon _{1},\varepsilon _{2}<\frac{T}{2}\) will be determined later. We choose the \(C^3\)-function \(Y_\phi \) on \([0, \varepsilon _{1}]\) as follows:

where the coefficients \(C_{1},~C_{2}~\text {and}~y_{1}\) are given in (17), then the coefficient A satisfies

thus,

When \(t=0\), \(Y_{\phi }(0)=y_{1},~Y'_{\phi }(0)=C_{1},~Y''_{\phi }(0)=C_{2}\) from (18), namely, the conditions (17) hold. Next, we intend to prove (16). Due to

then there is a sufficiently small \(\varepsilon _{1}\in (0,\frac{C_{1}+\alpha y_{1}}{|C_{2}|})\) such that for \(t\in (0, \varepsilon _{1})\),

According to (19), the first inequality of (16) is satisfied. And the second inequality of (16) also holds since

It can be discussed in two cases.

\(\mathbf {Case~1.}\) If \(C_{2}+\alpha C_{1}+\alpha C_{2}\varepsilon _{1}\le 0\), i.e., \(A \ge 0\), then

\(\mathbf {Case~2.}\) If \(C_{2}+\alpha C_{1}+\alpha C_{2}\varepsilon _{1}>0\), then

Thus, \(Y_\phi (t)\) satisfies (16) and (17) for \(t\in [0,\varepsilon _{1}]\). By the same argument for \(t\in [T-\varepsilon _{2}, T]\), we also find a \(C^3\)-function \(Y_\phi (t)\): \([T-\varepsilon _{2}, T]\rightarrow (0, \infty )\) such that

and \(Y_\phi (t)\) satisfies inequalities (16) for \(t\in [T-\varepsilon _{2}, T]\). We choose T sufficiently large and extend the function \(Y_\phi (t): [0, \varepsilon _{1}]\cup [T-\varepsilon _{2}, T] \rightarrow (0,\infty )\) to a \(C^3\)-function \(Y_\phi (t)\) defined on [0, T] such that (15)–(17) hold.

Then, from the second and the third equations of (15), two \(C^1\)-functions \(Z_\phi (t)\) and \(X_\phi (t)\) can be found that satisfy (16) and (17), respectively. Finally, a continuous control function \(\phi \) can be determined from the first equation of (15). This completes the proof. \(\square \)

Lemma 3.6

The Markov semigroup \(\{P(t)\}_{t\ge 0}\) is asymptotically stable or is sweeping concerning compact sets.

Proof

Lemma 3.4 shows that \(\{P(t)\}_{t\ge 0}\) is an integral Markov semigroup with a continuous density k(t, x, y, z) for \(t>0\). By Lemma 3.5, we know that for every \(g\in \mathbb {D}\),

due to \(k(t, x, y, z)>0\) and \(P(t)g=\int _{\mathbb {R}_{+}^{3}}k(t, \mathbf {x})g(\mathbf {x})m(\mathrm{d}\mathbf {x})\), where \(\mathbf {x}=(x,y,z)\). Therefore, according to Lemma 2.5, the Markov semigroup \(\{P(t)\}_{t\ge 0}\) is asymptotically stable or is sweeping concerning compact sets. \(\square \)

Lemma 3.7

If \(p>\delta +\frac{\sigma ^{2}}{2}\), then the Markov semigroup \(\{P(t)\}_{t\ge 0}\) is asymptotically stable.

Proof

Lemma 3.6 implies that the semigroup \(\{P(t)\}_{t\ge 0}\) satisfies the Foguel alternative. In order to exclude sweeping, we construct a nonnegative \(C^2\)-function V and a closed set \(\Gamma \subset \mathbb {R}_{+}^{3}\) such that

where the \(C^2\)-function V is known as Khasminskiĭ function [22].

Define a nonnegative \(C^2\)-function V by

where M and \(0<\theta <1\) will be determined later. First, by Lemma 2.1, we compute

And

where

and \(\delta -\frac{\theta }{2}\sigma ^{2}>0\), i.e., \(0<\theta <\frac{2\delta }{\sigma ^{2}}\).

Combining (20)–(22), we obtain

where \(\mu =p-\delta -\frac{\sigma ^{2}}{2}>0\), M is a positive constant satisfying \(-M\mu +2\alpha +B\le -2\).

Define a bounded closed set \(\Gamma \)

where \(0<\epsilon _{1},~\epsilon _{2},~\epsilon _{3}<1\) are sufficiently small real numbers. In the set \(\mathbb {R}_{+}^{3}\setminus \Gamma \), \(\epsilon _{1},~\epsilon _{2}~\text {and}~\epsilon _{3}\) satisfy the following conditions:

where

For the convenience of the following discussion, we will divide \(\Gamma ^{c}=\mathbb {R}_{+}^{3}\setminus \Gamma \) into six domains:

Clearly, \(\Gamma ^{c}=\Gamma ^{1}\cup \Gamma ^{2}\cup \Gamma ^{3}\cup \Gamma ^{4}\cup \Gamma ^{5}\cup \Gamma ^{6}\). Next, we prove that \(\mathscr {A}^*V\le -1\) for any \((X, Y, Z) \in \Gamma ^{c}\), which is equivalent to proving \(\mathscr {A}^*V\le -1\) in each of the above six domains. We discuss the six cases in turn.

\(\mathbf {Case~1.}\) If \((X,Y,Z)\in \Gamma ^{1}\), then

\(\mathbf {Case~2.}\) If \((X,Y,Z)\in \Gamma ^{2}\), then

\(\mathbf {Case~3.}\) If \((X,Y,Z)\in \Gamma ^{3}\), then

\(\mathbf {Case~4.}\) If \((X,Y,Z)\in \Gamma ^{4}\), then

\(\mathbf {Case~5.}\) If \((X,Y,Z)\in \Gamma ^{5}\), then

\(\mathbf {Case~6.}\) If \((X,Y,Z)\in \Gamma ^{6}\), then

To summarize, there exists a closed set \(\Gamma \subset \mathbb {R}_{+}^{3}\) such that

According to [22], the existence of a Khasminskiĭ function shows that the semigroup \(\{P(t)\}_{t\ge 0}\) is not sweeping from the set \(\Gamma \). By Lemma 2.5, the semigroup \(\{P(t)\}_{t\ge 0}\) is therefore asymptotically stable. \(\square \)

3.4 Probability density function

In this part, we will give the probability density function of quasi-stationary distribution near the positive equilibrium point of the model (4) with the strong kernel.

First, let \(u=X-X^{*},~v=Y-Y^{*}\) and \(w=Z-Z^{*}\), we obtain

The system (26) can be approximately expressed as:

where \(a_{11}=\delta ,~a_{12}=p,~a_{21}=\alpha ,~a_{31}=\alpha (1-aX^{*})e^{-aX^{*}}=\frac{\alpha \delta }{p}(1+\ln \frac{\delta }{p})\) and \(a_{33}=\alpha \).

Now we give the existence theorem and specific form of the probability density function of the linear system (27) of (4).

Theorem 3.8

If

then the distribution of the solution (u, v, w) of system (27) has a density function \(\Phi (u, v, w)\) which takes the following form

and corresponding to the marginal probability density function

where \(\Sigma \) denotes the covariance matrix of (u, v, w), \(\Sigma ^{-1}=\frac{2}{\sigma ^{2}\alpha ^{2}(1-aX^{*})^{2}e^{-2aX^{*}}}(d_{ij} )_{3\times 3}\), \(d_{ij} (i, j = 1, 2, 3)\) and \(\sigma _{1}\) are defined as below.

Proof

Let \(y=w-v\), by (27), then

Let \(x=a_{31}u-(a_{21}+a_{33})y-a_{33}v\), we have

where \(A_{11}=a_{11}+a_{21}+a_{33}=\delta +2\alpha >0\), \(A_{12}=a_{11}a_{21}+a_{11}a_{33}+a_{21}a_{33}=2\delta \alpha +\alpha ^{2}>0\), \(A_{13}=a_{11}a_{33}-a_{12}a_{31}=-\delta \alpha \ln \frac{\delta }{p}>0\). Let U(x, y, v) be the density function of (x, y, v), which can be approximated by the following Fokker–Planck equation:

i.e.,

where \(\theta _{12}=\theta _{21}=\theta _{23}=\theta _{32}=0\). From the above equation, we get,

on account of

Obviously, \(\theta _{ij}=\theta _{ji}\) and the matrix \(\theta = (\theta _{ij} )_{3\times 3}~(i, j = 1, 2, 3)\) is positive definite owing to \(\theta _{11}\theta _{22}\theta _{33}-\theta _{13}^{2}\theta _{22}=\theta _{22}^{2}\frac{A_{13}}{a_{21}}>0\). The joint density function of (x, y, v) is as follows:

where C is a positive constant satisfying \(\iiint _{\mathbb {R}_{+}^{3}}U(x, y, v)\mathrm{d}x\mathrm{d}y\mathrm{d}v= 1\) and

Thus, the Gaussian density function corresponding to (u, v, w) is as follows:

where \(C'\) is a positive constant satisfying \(\iiint _{\mathbb {R}_{+}^{3}}\Phi (u, v, w)\mathrm{d}u\mathrm{d}v\mathrm{d}w= 1\).

where \(\Sigma \) denotes the covariance matrix of (u, v, w). The matrix \(\Sigma ^{-1}\) is positive definite, so \(\Sigma \) is also a positive definite matrix. Therefore, \(d_{ij} = d_{ji}~(i, j = 1, 2, 3)\). Direct calculation gives

Then we have

The marginal probability density function is expressed by

To find \(\sigma _{1}^{2}\), we have

Since \(\sigma _{1}^{2}\) is the element in the first row and the first column of the covariance matrix, direct calculation gives

\(\square \)

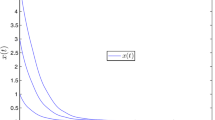

Fig. 1 The path of X(t) for the stochastic Nicholson’s blowflies model (4) (blue line) and its corresponding deterministic model (red line) with initial values \((X(0), Y(0), Z(0)) = (0.05, 0.01, 0.01)\) under different noise intensities \(\sigma =0.10\), \(\sigma =0.13\), \(\sigma =0.16\) and \(\sigma =0.19\). (Color figure online)

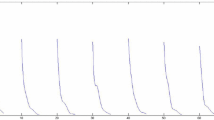

Fig. 2 The panels on the left-hand side show the total number of Nicholson’s blowflies X(t) obtained from Eq. (4) (blue line) and from the corresponding deterministic model (red line), with initial conditions \((X(0), Y(0), Z(0)) = (0.05, 0.01, 0.01)\) under different noise intensities \(\sigma =0.1\) (a), \(\sigma =0.2\) (c) and \(\sigma =0.3\) (e). The panels on the right-hand side present histograms of the probability density function of X(100) for three different values of \(\sigma :~\sigma =0.1\) (b), \(\sigma =0.2\) (d) and \(\sigma =0.3\) (f). (Color figure online)

4 Main results in the weak kernel case

When \(n=0\), f(t) is the weak kernel, i.e., \(f(t)=\alpha e^{-\alpha t}.\)

Define

Taking environmental fluctuations into account, white noise is again added to (2); system (2) with the weak kernel and environmental noise then becomes:

Using methods similar to those of Sect. 3, we obtain the following conclusions directly:

Theorem 4.1

There exists a unique solution of the system (29) with the weak kernel, for any initial value \((X(0), Y(0))\in \mathbb {R}_{+}^{2}\), which will remain in \(\mathbb {R}_{+}^{2}\) with probability one.

Theorem 4.2

Let (X(t), Y(t)) be a solution of the stochastic system (29) with the weak kernel, for any given initial value \((X(0), Y(0))\in \mathbb {R}_{+}^{2}\). If \(p<\delta \), then

Theorem 4.3

Let (X(t), Y(t)) be a solution of the stochastic system (29) with the weak kernel. The distribution of (X(t), Y(t)) has a density U(t, x, y), \(\forall t > 0\). If \(p>\delta +\frac{\sigma ^{2}}{2}\), then there is a unique density \(U^{*}(x, y)\) satisfying

5 Numerical simulations

Using Milstein’s method [23], the discretized equation of model (4) is as follows:

where \(\xi _k~(k=1,2,\ldots )\) are independent Gaussian random variables N(0, 1). Next, we give two numerical examples to support our results.
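The discretization can be sketched in a few lines of Python (used here instead of MATLAB purely for illustration). The sketch assumes that model (4) with the strong kernel reads \(\mathrm{d}X=(-\delta X+pY)\mathrm{d}t+\sigma X\mathrm{d}B(t)\), \(\mathrm{d}Y=\alpha (Z-Y)\mathrm{d}t\), \(\mathrm{d}Z=\alpha (Xe^{-aX}-Z)\mathrm{d}t\); the function name `simulate_blowflies`, the step size and the seed are hypothetical choices, not taken from the paper.

```python
import math
import random


def simulate_blowflies(delta, p, a, alpha, sigma, init,
                       T=100.0, dt=0.01, seed=0):
    """Simulate the assumed form of model (4) on [0, T].

    X gets a Milstein step (drift, noise, and the 0.5*sigma^2*X*(xi^2-1)*dt
    correction); Y and Z carry no noise, so a plain Euler step is used.
    Returns a list of (X, Y, Z) tuples, one per grid point.
    """
    rng = random.Random(seed)
    steps = int(round(T / dt))
    sqrt_dt = math.sqrt(dt)
    X, Y, Z = init
    path = [(X, Y, Z)]
    for _ in range(steps):
        xi = rng.gauss(0.0, 1.0)  # standard normal increment
        X_next = (X + (-delta * X + p * Y) * dt
                  + sigma * X * sqrt_dt * xi
                  + 0.5 * sigma ** 2 * X * (xi * xi - 1.0) * dt)
        Y_next = Y + alpha * (Z - Y) * dt
        Z_next = Z + alpha * (X * math.exp(-a * X) - Z) * dt
        X, Y, Z = X_next, Y_next, Z_next
        path.append((X, Y, Z))
    return path
```

Calling `simulate_blowflies(0.3, 0.1, 2.0, 0.4, 0.1, (0.05, 0.01, 0.01))` gives one sample path for an extinction-type parameter set, and setting `sigma=0.0` recovers an Euler scheme for the deterministic system. Because the sign of the noise term does not affect the law of the solution, the sketch is insensitive to whether the diffusion coefficient is written as \(\sigma X\) or \(-\sigma X\).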

5.1 Extinction

Using the software MATLAB, we choose the parameters \(\delta =0.3\), \(p=0.1\), \(a=2\) and the strong kernel coefficient \(\alpha =0.4\). For the deterministic system (3) and the stochastic model (4), we find that the Nicholson’s blowflies population X(t) eventually dies out, which is consistent with the result of Theorem 3.2 (see Fig. 1). Comparing the four panels (a), (b), (c) and (d) in Fig. 1, it can be observed that the extinction of X(t) is accelerated as the noise intensity \(\sigma \) increases.

5.2 Asymptotic stability

To test the existence of a stationary distribution, we choose \(\delta =0.3\), \(p=0.7\), \(a=2\) and \(\alpha =0.4\). For the deterministic system (3), the positive equilibrium \(E^{*}=(0.4236,0.1816,0.1816)\) is locally asymptotically stable (see the left-hand side of Fig. 2). We find that there exists a stationary distribution and that a higher \(\sigma \) generates larger fluctuations of X(t). The distribution of X(t) becomes positively skewed as the noise increases, as shown in Fig. 2.
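The parameter choice above can be checked with a short script. It assumes the equilibrium formula \(X^{*}=\frac{1}{a}\ln \frac{p}{\delta }\), \(Y^{*}=Z^{*}=\frac{\delta }{p}X^{*}\), which reproduces the stated \(E^{*}\) but is a reconstruction rather than a quoted formula; the two condition checks use the inequalities of Theorems 3.3 and 3.8 as summarized in the Conclusion. All function names are hypothetical.

```python
import math


def positive_equilibrium(delta, p, a):
    """Positive equilibrium (X*, Y*, Z*) under the assumed formula."""
    if not 0.0 < delta < p:
        raise ValueError("a positive equilibrium requires 0 < delta < p")
    x_star = math.log(p / delta) / a
    y_star = delta * x_star / p
    return x_star, y_star, y_star


def stationary_condition(delta, p, sigma):
    """Condition of Theorem 3.3: p > delta + sigma^2 / 2."""
    return p > delta + 0.5 * sigma ** 2


def density_condition(delta, p, alpha):
    """Condition of Theorem 3.8 as stated in the Conclusion."""
    lhs = (delta * alpha ** 2 * (5.0 + math.log(delta / p))
           + alpha * (2.0 * alpha ** 2 + delta ** 2))
    return delta < p and lhs > 0.0
```

With \(\delta =0.3\), \(p=0.7\), \(a=2\) and \(\alpha =0.4\), `positive_equilibrium` returns values that round to the \(E^{*}\) quoted above, and both conditions hold for \(\sigma =0.1\), 0.2 and 0.3, the noise levels used in Fig. 2.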

6 Conclusion

In this paper, we formulate a stochastic Nicholson’s blowflies model with distributed delay by using the linear chain technique. The main objective is to discuss the long-time behavior of Nicholson’s blowflies model. First, the existence and uniqueness of a globally positive solution for the stochastic Nicholson’s blowflies model are obtained. Then, sufficient conditions for the stochastic extinction of Eq. (4) are established. Because the diffusion matrix is degenerate, the uniform ellipticity condition fails. Therefore, Markov semigroup theory is used to derive the existence of a unique stationary distribution of model (4). Finally, particular attention is given to the expression of the density function around the positive equilibrium of the deterministic system. Since the corresponding Fokker–Planck equation is three-dimensional, the previous method is not applicable, and this paper fills this gap. To answer the questions raised in the introduction, we state the main results as follows:

(i) Theorem 3.2 gives the extinction result: if \(p<\delta \), then

$$\begin{aligned}&\lim _{t\rightarrow \infty }X(t)=0,~\lim _{t\rightarrow \infty }Y(t)=0~\text{ and }~\\&\lim _{t\rightarrow \infty }Z(t)=0~a.s. \end{aligned}$$

That is to say, the population X(t) of the stochastic system (4) becomes extinct with probability one.

(ii) According to Theorem 3.3, the condition for the existence of a stationary distribution is \(p>\delta +\frac{\sigma ^{2}}{2}\).

(iii) By Theorem 3.8, the conditions for the existence of the probability density function are

$$\begin{aligned} \delta <p~\text {and}~\delta \alpha ^{2}(5+\ln \frac{\delta }{p})+\alpha (2\alpha ^{2}+\delta ^{2})>0. \end{aligned}$$

Many interesting questions deserve further discussion. For example, a more complex model with Markovian switching could be proposed in order to study the positive recurrence of the system. We leave this for future work.

References

Gurney, W., Blythe, S., Nisbet, R.: Nicholson’s blowflies revisited. Nature 287(5777), 17–21 (1980)

Zhu, Y., Wang, K., Ren, Y., et al.: Stochastic Nicholson’s blowflies delay differential equation with regime switching. Appl. Math. Lett. 94, 187–195 (2019)

Xiong, W.: New results on positive pseudo-almost periodic solutions for a delayed Nicholson’s blowflies model. Nonlinear Dyn. 85(1), 563–571 (2016)

Yao, Z.: Almost periodic solution of Nicholson’s blowflies model with linear harvesting term and impulsive effects. Int. J. Biomath. 08(03), 1–18 (2015)

Long, Z.: Exponential convergence of a non-autonomous Nicholson’s blowflies model with an oscillating death rate. Electron. J. Qual. Theory Differ. Equ. 41, 1–7 (2016)

Wang, X., Liu, H., Xu, C.: Hopf bifurcations in a predator-prey system of population allelopathy with a discrete delay and a distributed delay. Nonlinear Dyn. 69(4), 2155–2167 (2012)

Ruan, S.: Delay Differential Equations and Applications in Single Species Dynamics. Springer, Berlin (2006)

Al-Omari, J., Al-Omari, S.: Global stability in a structured population competition model with distributed maturation delay and harvesting. Nonlinear Anal. Real World Appl. 12(3), 1485–1499 (2011)

Liu, Q., Jiang, D., Hayat, T., et al.: Stationary distribution and extinction of a stochastic HIV-1 infection model with distributed delay and logistic growth. J. Nonlinear Sci. (2019). https://doi.org/10.1007/s00332-019-09576-x

Hu, Y., Wu, F.: Stochastic Lotka–Volterra system with unbounded distributed delay. Discrete Contin. Dyn. Syst. Ser. B 14(1), 275–288 (2010)

Liu, Q., Jiang, D., Hayat, T., et al.: Long-time behavior of a stochastic logistic equation with distributed delay and nonlinear perturbation. Physica A 508, 289–304 (2018)

Liu, M., Wang, K., Hong, Q.: Stability of a stochastic logistic model with distributed delay. Math. Comput. Modell. 57, 1112–1121 (2013)

Macdonald, N.: Time Lags in Biological Models. Lecture Notes in Biomathematics. Springer, Heidelberg (1978)

Rudnicki, R., Pichór, K., Tyran-Kamińska, M.: Markov Semigroups and Their Applications. Dynamics of Dissipation. Springer, Heidelberg (2002)

Rudnicki, R., Pichór, K.: Influence of stochastic perturbation on prey–predator systems. Math. Biosci. 206(1), 108–119 (2007)

Rudnicki, R.: Asymptotic Properties of the Fokker–Planck Equation, vol. 457, pp. 517–521. Springer, Berlin (1995)

Sun, X., Zuo, W., Jiang, D., et al.: Unique stationary distribution and ergodicity of a stochastic logistic model with distributed delay. Physica A 512, 864–881 (2018)

Bao, K., Rong, L., Zhang, Q.: Analysis of a stochastic SIRS model with interval parameters. Discrete Contin. Dyn. Syst. B 24(9), 4827–4849 (2019)

Mao, X.: Stochastic Differential Equations and Applications, 2nd edn. Horwood Publishing, Sawston (1997)

Pichór, K., Rudnicki, R.: Stability of Markov semigroups and applications to parabolic systems. J. Math. Anal. Appl. 215, 56–74 (1997)

Ben Arous, G., Léandre, R.: Décroissance exponentielle du noyau de la chaleur sur la diagonale (II). Probab. Theory Relat. Fields 90, 377–402 (1991)

Rudnicki, R., Pichór, K., Tyran-Kamińska, M.: Markov semigroups and their applications. Lect. Notes Phys. 597, 215–238 (2002)

Higham, D.: An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev. 43(3), 525–546 (2001)

Acknowledgements

The research is supported by the Natural Science Foundation of China (No. 11871473), Shandong Provincial Natural Science Foundation (Nos. ZR2019MA010, ZR2019MA006) and the Fundamental Research Funds for the Central Universities (No. 19CX02055A).

Contributions

DJ designed the research and methodology. XM wrote the original draft. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Mu, X., Jiang, D., Hayat, T. et al. Dynamical behavior of a stochastic Nicholson’s blowflies model with distributed delay and degenerate diffusion. Nonlinear Dyn 103, 2081–2096 (2021). https://doi.org/10.1007/s11071-020-05944-5

DOI: https://doi.org/10.1007/s11071-020-05944-5