Abstract

We discuss an integral equation approach that enables fast computation of the response of nonlinear multi-degree-of-freedom mechanical systems under periodic and quasi-periodic external excitation. The kernel of this integral equation is a Green’s function that we compute explicitly for general mechanical systems. We derive conditions under which the integral equation can be solved by a simple and fast Picard iteration, even for non-smooth mechanical systems. The convergence of this iteration cannot be guaranteed for near-resonant forcing, for which we employ a Newton–Raphson iteration instead, obtaining robust convergence. We further show that this integral equation approach can be combined with standard continuation schemes to achieve an additional, significant performance increase over common approaches to computing steady-state response.

1 Introduction

Multi-degree-of-freedom nonlinear mechanical systems generally approach a steady-state response under periodic or quasi-periodic forcing. Determining this response is often the most important objective in analyzing nonlinear vibrations in engineering practice.

Despite the broad availability of effective numerical packages and powerful computers, identifying the steady-state response simply by numerically integrating the equations of motion is often a poor choice. First, modern engineering structures tend to be very lightly damped, resulting in exceedingly long integration times before the steady state is reached. Second, structural vibration problems are often available as finite-element models for which repeated evaluations of the defining functions are costly. These evaluations are inherently not parallelizable; increasing the number of processors used in the simulation therefore increases cross-communication times, which slow down already slowly converging runs even further. As a result, even with today’s advances in computing, it may take days to reach a good approximation to a steady-state response in complex structural vibration problems (cf. Avery et al. [1]).

To achieve feasible computation times for steady-state response in high-dimensional systems, reduced-order models (ROM) are often used to obtain a low-dimensional variant of the mechanical system. Various nonlinear normal mode (NNM) concepts have been used to describe such small-amplitude, nonlinear oscillations. Among these, the classic NNM definition of Rosenberg [2] targets periodic orbits in a two-dimensional subcenter manifold [3] in the undamped limit of the oscillatory system. By contrast, Shaw and Pierre [4] define NNMs as invariant manifolds tangent to modal subspaces at an equilibrium point (cf. Avramov and Mikhlin [5] for a review), which allows application to dissipative systems. Haller and Ponsioen [6] distinguish these two notions for dissipative systems under possible periodic/quasi-periodic forcing, by defining an NNM as a near-equilibrium trajectory with finitely many frequencies, and introducing a spectral submanifold (SSM) as the smoothest invariant manifold tangent to a spectral subbundle along such an NNM.

Alternatively, ROMs obtained using heuristic projection-based techniques are also used to approximate steady-state response of high-dimensional systems. These include sub-structuring methods such as the Craig–Bampton method [7] (cf. Theodosiou et al. [8]), proper orthogonal decomposition [9] (cf. Kerschen et al. [10]), reduction using natural modes (cf. Amabili [11], Touzé et al. [12]) and the modal-derivative method of Idelsohn and Cardona [13] (cf. Sombroek et al. [14], Jain et al. [15]). A common feature of these methods is their local nature: they seek to approximate nonlinear steady-state response in the vicinity of an equilibrium. Thus, high-amplitude oscillations are generally missed by these approaches. The methods reviewed here are fundamentally heuristic, as the relationship between the full system and its simplified approximation is generally unknown and has to be tested in each application. Though we focus on finite-dimensional dynamical systems in this work, the same limitations are shared by many truncation/approximation methods when applied to infinite-dimensional systems as well (cf. Malookani and van Horssen [16] for the case of Galerkin truncation applied to string vibrations).

On the analytic side, perturbation techniques relying on a small parameter have been widely used to approximate the steady-state response of nonlinear systems. Nayfeh et al. [17, 18] give a formal multiple-scales expansion applied to a system with small damping, small nonlinearities and small forcing. Their results are detailed amplitude equations to be worked out on a case-by-case basis. Mitropolskii and Van Dao [19] apply the method of averaging (cf. Bogoliubov and Mitropolsky [20] or, more recently, Sanders and Verhulst [21]) after a transformation to amplitude-phase coordinates in the case of small damping, nonlinearities and forcing. They consider single as well as multi-harmonic forcing of multi-degree-of-freedom systems and obtain the solution in terms of a multi-frequency Fourier expansion. Their formulas become involved even for a single oscillator; thus, condensed formulas or algorithms are unavailable for general systems. As conceded by Mitropolskii and Van Dao [19], the series expansion is formal, as no attention is given to the actual existence of a periodic response. Existence is indeed a subtle question in this context, since the envisioned periodic orbits would perturb from a non-hyperbolic fixed point.

Vakakis [22] relaxes the small nonlinearity assumption and describes a perturbation approach for obtaining the periodic response of a single-degree-of-freedom Duffing oscillator subject to small forcing and small damping. A formal series expansion is performed around a conservative limit, where periodic solutions are explicitly known (elliptic Duffing oscillator). This approach only works for perturbations of integrable nonlinear systems.

Formally applicable without any small parameter assumption is the harmonic balance method. Introduced first by Kryloff and Bogoliuboff [23] for single-harmonic approximation of the forced response, the method has been gradually extended to include higher harmonics and quasi-periodic orbits (cf. Chua and Ushida [24] and Lau and Cheung [25]). In the harmonic balance procedure, the assumed steady-state solution is expanded in a Fourier series which, upon substitution, turns the original differential equations into a set of nonlinear algebraic equations for the unknown Fourier coefficients after truncation to finitely many harmonics. The error arising from this truncation, however, is not well understood. For the periodic case, Leipholz [26] and Bobylev et al. [27] show that the solution of the harmonic balance converges to the actual solution of the system if the periodic orbit exists and the number of harmonics considered tends to infinity. Explicit error bounds are only available as functions of the (a priori unknown) periodic orbit (cf. Bobylev et al. [27], Urabe [28], Stokes [29] and García-Saldaña and Gasull [30]). The quantities involved, however, generally require numerical integration to obtain. For quasi-periodic forcing, such error bounds remain unknown to the best of our knowledge.

The shooting method (cf. Keller [31], Peeters et al. [32] and Sracic and Allen [33]) is also broadly used to compute periodic orbits of nonlinear systems. In this procedure, the periodicity of the sought orbit is used to formulate a two-point boundary value problem, whose solutions are initial conditions on the periodic orbit. Starting from an initial guess, one corrects the initial conditions iteratively until the boundary value problem is solved up to a required precision. The iterated correction of the initial conditions, however, requires repeated numerical integration of the equation of variations along the current estimate of the periodic orbit, as well as numerical integration of the full system. Although the shooting method has moderate memory requirements relative to those of harmonic balance due to its smaller Jacobian, this advantage is useful only for very high-dimensional systems with memory constraints. In practice, shooting is limited by the capabilities of the time integrator used and can be unsuitable for solutions with large Floquet multipliers, as observed by Seydel [34]. Furthermore, the shooting method is only applicable to periodic steady-state solutions, not to quasi-periodic ones.

The shooting method uses a time-march-type integration, i.e., the solution at each time step is computed sequentially from the previous one. In contrast, collocation approaches solve for the solution at all time steps in the orbit simultaneously. Collocation schemes mitigate all the drawbacks of the shooting method but can be computationally expensive for large systems, since all unknowns need to be solved for together over the full orbit. Popular software packages, such as AUTO [35], MATCONT [36] and the po toolbox of coco [37], also use collocation schemes to continue periodic solutions of dynamical systems. Renson et al. [38] provide a thorough review of the commonly used methods for computation of periodic orbits in multi-degree-of-freedom mechanical systems.

Constructing particular solutions using integral equations is textbook material in physics or vibration courses for impulsive forcing (the system is at rest at the initial time, prior to which the forcing is zero). Solving this problem with a classic Duhamel integral will produce a particular solution that approaches the steady-state response asymptotically. This approach, therefore, suffers from the slow convergence we have already discussed for direct numerical integration.

In this paper, assuming either periodicity or quasi-periodicity for the external forcing, we derive an integral equation whose zeros are the steady-state responses of the mechanical system. Along with a phase condition to ensure uniqueness, the same integral equation can also be used to obtain the (quasi-) periodic response in conservative, autonomous mechanical systems.

While certain elements of the integral equations approach outlined here for periodic forcing have been already discussed outside the structural vibrations literature, our treatment of quasi-periodic forcing appears to be completely new. We do not set any conceptual bounds on the number of independent frequencies allowed in such a forcing, which enables one to apply the results to more complex excitations mimicking stochastic forcing.

First, we derive a Picard iteration approach with explicit convergence criteria to solve the integral equations for the steady-state response iteratively (Sect. 3.1). This fast iteration approach is particularly appealing for high-dimensional systems, since it does not require the construction and inversion of Jacobian matrices, and for non-smooth systems, as it does not rely on derivatives. At the same time, this Picard iteration will not converge near external resonances. Applying a Newton–Raphson scheme to the integral equation, however, we can achieve convergence of the iteration even for near-resonant forcing (Sect. 3.2). We additionally employ numerical continuation schemes to obtain forced response and backbone curves of nonlinear mechanical systems (Appendix J.1). Finally, we illustrate the performance gain from our newly proposed approach on several multi-degree-of-freedom mechanical examples (Sect. 4), using a MATLAB®-based implementation.

2 Setup

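We consider a general nonlinear mechanical system of the form

\[ \mathbf{M}\ddot{\mathbf{x}}+\mathbf{C}\dot{\mathbf{x}}+\mathbf{K}\mathbf{x}+\mathbf{S}(\mathbf{x},\dot{\mathbf{x}})=\mathbf{f}(t), \qquad (1) \]
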
where \({\mathbf {x}}(t)\in {\mathbb {R}}^{n}\) is the vector of generalized displacements; \({\mathbf {M}},{\mathbf {C}},{\mathbf {K}}\in {\mathbb {R}}^{n\times n}\) are the symmetric mass, damping and stiffness matrices; \({\mathbf {S}}\) is a nonlinear, Lipschitz continuous function such that \({\mathbf {S}}=\mathcal {O}\left( \left| {\mathbf {x}}\right| ^{2},\left| {\mathbf {x}}\right| \left| \dot{{\mathbf {x}}}\right| ,\left| \dot{{\mathbf {x}}}\right| ^{2}\right) \); and \({\mathbf {f}}\) is a time-dependent, multi-frequency external forcing. Specifically, we assume that \({\mathbf {f}}(t)\) is quasi-periodic with a rationally incommensurate frequency basis \(\varvec{\Omega }\in {\mathbb {R}}^{k},\,k\ge 1\), which means that

\[ \mathbf{f}(t)=\tilde{\mathbf{f}}(\varvec{\Omega}t), \qquad (2) \]

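for some continuous function \(\tilde{{\mathbf {f}}}:{\mathbb {T}}^{k}\rightarrow {\mathbb {R}}^{n}\), defined on a \(k\)-dimensional torus \({\mathbb {T}}^{k}\). For \(k=1\), \({\mathbf {f}}\) is periodic in t with period \(T=2\pi /\varvec{\Omega }\), while for \(k>1\), \({\mathbf {f}}\) describes a strictly quasi-periodic forcing. System (1) can be equivalently expressed in the first-order form

\[ \mathbf{B}\dot{\mathbf{z}}=\mathbf{A}\mathbf{z}+\mathbf{F}(t)-\mathbf{R}(\mathbf{z}), \qquad (3) \]

with

\[ \mathbf{z}=\begin{bmatrix}\mathbf{x}\\ \dot{\mathbf{x}}\end{bmatrix},\quad \mathbf{A}=\begin{bmatrix}-\mathbf{K} & \mathbf{0}\\ \mathbf{0} & \mathbf{M}\end{bmatrix},\quad \mathbf{B}=\begin{bmatrix}\mathbf{C} & \mathbf{M}\\ \mathbf{M} & \mathbf{0}\end{bmatrix},\quad \mathbf{F}(t)=\begin{bmatrix}\mathbf{f}(t)\\ \mathbf{0}\end{bmatrix},\quad \mathbf{R}(\mathbf{z})=\begin{bmatrix}\mathbf{S}(\mathbf{x},\dot{\mathbf{x}})\\ \mathbf{0}\end{bmatrix}. \]
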
The first-order form in (3) ensures that the coefficient matrices \({\mathbf {A}}\) and \({\mathbf {B}}\) are symmetric if the matrices \({\mathbf {M}},{\mathbf {C}}\) and \({\mathbf {K}}\) are symmetric, as is usually the case in structural dynamics applications (cf. Gérardin and Rixen [39]). We assume that the linear system

\[ \mathbf{B}\dot{\mathbf{z}}=\mathbf{A}\mathbf{z}+\mathbf{F}(t) \qquad (4) \]

can be diagonalized using the eigenvectors of the generalized eigenvalue problem

\[ \left(\mathbf{A}-\lambda_{j}\mathbf{B}\right)\mathbf{v}_{j}=\mathbf{0},\qquad j=1,\ldots,2n, \qquad (5) \]

via the linear transformation \({\mathbf {z}}={\mathbf {V}}{\mathbf {w}}\), where \({\mathbf {w}}\in {\mathbb {C}}^{2n}\) represents the modal variables and \({\mathbf {V}}=\left[ {\mathbf {v}}_{1},\ldots ,{\mathbf {v}}_{2n}\right] \in {\mathbb {C}}^{2n\times 2n}\) is the modal transformation matrix containing the eigenvectors. The diagonalized linear version of (4), with forcing, is given by

\[ \dot{\mathbf{w}}=\varvec{\Lambda}\mathbf{w}+\varvec{\psi}(t),\qquad \varvec{\psi}(t)=\left[\psi_{1}(t),\ldots,\psi_{2n}(t)\right]^{\top}, \qquad (6) \]

where \({\varvec{\Lambda }}={\mathrm {diag}}\left( \lambda _{1},\ldots ,\lambda _{2n}\right) \) and \(\psi _{j}(t)=\frac{\tilde{{\mathbf {v}}}_{j}{\mathbf {F}}(t)}{\tilde{{\mathbf {v}}}_{j}{\mathbf {B}}{\mathbf {v}}_{j}}\), where \(\tilde{{\mathbf {v}}}_{j}\) denotes the jth left eigenvector of (5), i.e., the row vector satisfying \(\tilde{{\mathbf {v}}}_{j}{\mathbf {A}}=\lambda _{j}\tilde{{\mathbf {v}}}_{j}{\mathbf {B}}\). Furthermore, if the matrices \({\mathbf {A}}\) and \({\mathbf {B}}\) are symmetric, then \(\tilde{{\mathbf {v}}}_{j}={\mathbf {v}}_{j}^{\top }\).
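As a consistency check of the first-order form and the generalized eigenvalue problem, the minimal sketch below treats a single degree of freedom (n = 1). It assumes the symmetric block matrices \(\mathbf{A}=\begin{bmatrix}-\mathbf{K}&\mathbf{0}\\\mathbf{0}&\mathbf{M}\end{bmatrix}\) and \(\mathbf{B}=\begin{bmatrix}\mathbf{C}&\mathbf{M}\\\mathbf{M}&\mathbf{0}\end{bmatrix}\) acting on \(\mathbf{z}=(\mathbf{x},\dot{\mathbf{x}})\), and verifies that the roots of \(\det(\mathbf{A}-\lambda\mathbf{B})=0\) are the roots of \(m\lambda^{2}+c\lambda+k=0\). The helper names and parameter values are hypothetical; plain Python keeps the example self-contained.

```python
import cmath


def first_order_pencil(m, c, k):
    """(A, B) for n = 1 in the assumed symmetric first-order form, z = (x, xdot)."""
    A = [[-k, 0.0], [0.0, m]]
    B = [[c, m], [m, 0.0]]
    return A, B


def generalized_eigenvalues_2x2(A, B):
    """Both roots lam of det(A - lam*B) = 0 for 2x2 matrices (a quadratic in lam)."""
    a2 = B[0][0] * B[1][1] - B[0][1] * B[1][0]
    a1 = -(A[0][0] * B[1][1] + A[1][1] * B[0][0] - A[0][1] * B[1][0] - A[1][0] * B[0][1])
    a0 = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = cmath.sqrt(a1 * a1 - 4.0 * a2 * a0)
    return (-a1 + disc) / (2.0 * a2), (-a1 - disc) / (2.0 * a2)


m, c, k = 2.0, 0.3, 8.0
A, B = first_order_pencil(m, c, k)
lam1, lam2 = generalized_eigenvalues_2x2(A, B)
# Each pencil eigenvalue should be a root of the second-order characteristic polynomial.
print(abs(m * lam1**2 + c * lam1 + k), abs(m * lam2**2 + c * lam2 + k))
```

Since \(\det(\mathbf{A}-\lambda\mathbf{B})=-m(m\lambda^{2}+c\lambda+k)\) for these blocks, the symmetric first-order form does not alter the spectrum of the second-order system.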

Remark 1

We have assumed autonomous nonlinearities \({\mathbf {S}},{\mathbf {R}}\) in Eqs. (1) and (3) since this is relevant for structural dynamics systems, but the following treatment also allows for time dependence in \({\mathbf {S}}\) or \({\mathbf {R}}\). Specifically, all the following results hold for nonlinearities with explicit time dependence as long as the time dependence is quasi-periodic (cf. Eq. (2)) with the same frequency basis \(\varvec{\Omega }\) as that of the external forcing \({\mathbf {f}}(t)\).

2.1 Periodically forced system

We first review a classic result for periodic solutions in periodically forced linear systems (cf. Burd [40]).

Lemma 1

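If the forcing \({\mathbf {F}}(t)\) is T-periodic, i.e., \({\mathbf {F}}(t+T)={\mathbf {F}}(t),\,t\in {\mathbb {R}},\) and the non-resonance conditions

\[ \lambda_{j}\neq\frac{2\pi i\ell}{T},\qquad \ell\in\mathbb{Z}, \qquad (7) \]

are satisfied for all eigenvalues \(\lambda _{1},\dots ,\lambda _{2n}\) defined in (5), then there exists a unique T-periodic response to (4), given by

\[ \mathbf{z}(t)=\mathbf{V}\int_{0}^{T}\mathbf{G}(t-s,T)\,\varvec{\psi}(s)\,\mathrm{d}s, \qquad (8) \]
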
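where \({\mathbf {G}}(t,T)\) is the diagonal matrix of periodic Green’s functions for the modal displacement variables, defined as

\[ \mathbf{G}(t,T)=\mathrm{diag}\left(G_{1}(t,T),\ldots,G_{2n}(t,T)\right),\qquad G_{j}(t,T)=e^{\lambda_{j}t}\left(\frac{e^{\lambda_{j}T}}{1-e^{\lambda_{j}T}}+h(t)\right), \qquad (9) \]

with the Heaviside function h(t) given by

\[ h(t)=\begin{cases}1, & t\ge 0,\\ 0, & t<0.\end{cases} \]
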
Proof

We reproduce the proof for completeness in “Appendix A”. \(\square \)
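Lemma 1 can be checked numerically for a single mode. The sketch below assumes the scalar modal equation \(\dot{w}_{j}=\lambda_{j}w_{j}+\psi_{j}(t)\) and the variation-of-constants form \(G_{j}(t,T)=e^{\lambda_{j}t}\left(e^{\lambda_{j}T}/(1-e^{\lambda_{j}T})+h(t)\right)\) of the periodic Green’s function. It verifies that \(G_{j}\) takes the same value at t and t − T for 0 < t < T, and that \(\int_{0}^{T}G_{j}(t-s,T)\psi_{j}(s)\,\mathrm{d}s\) reproduces the exact periodic response \(e^{i\Omega t}/(i\Omega-\lambda_{j})\) to a single harmonic \(\psi_{j}(t)=e^{i\Omega t}\). Because \(G_{j}(t-s,T)\) jumps at s = t, the integral is split there before the trapezoidal rule is applied. All names and numbers are illustrative.

```python
import cmath
import math


def heaviside(t):
    """h(t) = 1 for t >= 0 and 0 for t < 0."""
    return 1.0 if t >= 0 else 0.0


def green_periodic(t, T, lam):
    """Assumed periodic Green's function of dw/dt = lam*w + psi(t), for -T < t < T."""
    q = cmath.exp(lam * T)
    return cmath.exp(lam * t) * (q / (1.0 - q) + heaviside(t))


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule on [a, b]; returns 0 for an empty interval."""
    if b <= a:
        return 0.0
    step = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * step)
    return total * step


def periodic_response(t, T, lam, psi, n=2000):
    """w(t) = int_0^T G(t - s, T) psi(s) ds for 0 <= t <= T.

    The h-term of G only acts for s <= t, so the integral is split at s = t,
    which keeps both integrands smooth for the trapezoidal rule.
    """
    q = cmath.exp(lam * T)

    def integrand(s):
        return cmath.exp(lam * (t - s)) * psi(s)

    return q / (1.0 - q) * trapezoid(integrand, 0.0, T, n) + trapezoid(integrand, 0.0, t, n)


lam, Omega = -0.1 + 2.0j, 1.3
T = 2.0 * math.pi / Omega


def psi(s):
    return cmath.exp(1j * Omega * s)


w_num = periodic_response(0.7, T, lam, psi)
w_exact = cmath.exp(1j * Omega * 0.7) / (1j * Omega - lam)
print(abs(w_num - w_exact))
```

The same values at t = 0 and t = T confirm that the convolution with this kernel is automatically T-periodic, which is why no initial condition or transient appears in (8).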

Remark 2

The uniform-in-time sup norm of the Green’s function (9) can be bounded by the constant \(\varGamma (T)\) defined as

We detail this estimate in “Appendix F”.

The Green’s functions defined in (9) turn out to play a key role in describing periodic solutions of the full, nonlinear system as well. We recall this in the following result (see, e.g., Bobylev et al. [27]).

Theorem 1

(i) If \({\mathbf {z}}(t)\) is a \(T-\)periodic solution of the nonlinear system (3), then \({\mathbf {z}}(t)\) must satisfy the integral equation

(ii) Furthermore, any continuous, \(T-\)periodic solution \({\mathbf {z}}(t)\) of (11) is a \(T-\)periodic solution of the nonlinear system (3).

Proof

We reproduce the proof for completeness in “Appendix B”. The term \( {\mathbf {V}}^{-1}\left[ {\mathbf {F}}(t)-{\mathbf {R}}({\mathbf {z}}(t))\right] \) is treated as a periodic forcing term in (6) for a T-periodic \( {\mathbf {z}}(t) \) and Lemma 1 is used to prove (i). Statement (ii) is then a direct consequence of the Leibniz rule. \(\square \)

2.2 Quasi-periodically forced systems

The above classic results on periodic steady-state solutions extend to quasi-periodic steady-state solutions under quasi-periodic forcing. This observation does not appear to be available in the literature, which prompts us to provide full detail.

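Let the forcing \({\mathbf {F}}(t)\) be quasi-periodic with frequency basis \(\varvec{\Omega }\in {\mathbb {R}}^{k}\,(k>1)\), i.e.,

\[ \mathbf{F}(t)=\sum_{\varvec{\kappa}\in\mathbb{Z}^{k}}\mathbf{F}_{\varvec{\kappa}}\,e^{i\left\langle \varvec{\kappa},\varvec{\Omega}\right\rangle t}, \qquad (12) \]

where each member of this k-parameter summation represents a time-periodic forcing with frequency \(\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle \), i.e., forcing with period

\[ T_{\varvec{\kappa}}=\frac{2\pi}{\left\langle \varvec{\kappa},\varvec{\Omega}\right\rangle}. \]
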
Here, \(T_{{\mathbf {0}}}=\infty \) formally corresponds to the period of the mean \({\mathbf {F}}_{\varvec{0}}\) of \({\mathbf {F}}(t)\).

Lemma 2

If the forcing is quasi-periodic, as given by (12), then under the non-resonance conditions

there exists a unique quasi-periodic steady-state response to (4) with the same frequency basis \(\varvec{\Omega }\). This steady-state response is given by

Furthermore, \( {\mathbf {z}}(t)\) is quasi-periodic with Fourier expansion

where \({\mathbf {H}}(T_{\varvec{\kappa }})\) is the diagonal matrix of the amplification factors, defined as

Proof

The proof is a consequence of the linearity of (4) along with Lemma 1, followed by the explicit evaluation of the integrals in (14). We give the details in “Appendix C”. \(\square \)
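For a single mode, Lemma 2 amounts to scaling each harmonic of the forcing by an amplification factor. Assuming the modal equation \(\dot{w}_{j}=\lambda_{j}w_{j}+\psi_{j}(t)\) with \(\psi_{j}(t)=\sum_{\varvec{\kappa}}\psi_{j,\varvec{\kappa}}e^{i\langle\varvec{\kappa},\varvec{\Omega}\rangle t}\), substituting one harmonic gives the factor \(1/(i\langle\varvec{\kappa},\varvec{\Omega}\rangle-\lambda_{j})\); this is our own elementary derivation, not a transcription of (16). The sketch sums the scaled harmonics for a hypothetical two-frequency forcing and checks, with a central finite difference, that the result satisfies the modal equation.

```python
import cmath
import math


def inner(kappa, Omega):
    """<kappa, Omega> for an integer vector kappa and a frequency basis Omega."""
    return sum(k * om for k, om in zip(kappa, Omega))


def forcing(t, Omega, coeffs):
    """psi(t) = sum over kappa of psi_kappa * exp(i <kappa, Omega> t)."""
    return sum(c * cmath.exp(1j * inner(kappa, Omega) * t) for kappa, c in coeffs.items())


def quasi_periodic_response(t, lam, Omega, coeffs):
    """Steady state of dw/dt = lam*w + psi(t).

    Each harmonic is scaled by 1/(i<kappa, Omega> - lam), which stays finite
    as long as lam never equals i<kappa, Omega> (the non-resonance assumption).
    """
    total = 0j
    for kappa, c in coeffs.items():
        freq = inner(kappa, Omega)
        total += c * cmath.exp(1j * freq * t) / (1j * freq - lam)
    return total


Omega = (1.0, math.sqrt(2.0))  # rationally incommensurate basis, k = 2
coeffs = {(0, 0): 0.2, (1, 0): 1.0, (0, 1): 0.5, (1, -1): 0.1}
lam = -0.05 + 1.2j
t, h = 3.7, 1e-5
dw = (quasi_periodic_response(t + h, lam, Omega, coeffs)
      - quasi_periodic_response(t - h, lam, Omega, coeffs)) / (2 * h)
residual = dw - lam * quasi_periodic_response(t, lam, Omega, coeffs) - forcing(t, Omega, coeffs)
print(abs(residual))
```

The mean term \(\varvec{\kappa}=\mathbf{0}\) simply yields \(-\psi_{j,\mathbf{0}}/\lambda_{j}\), in line with the formal period \(T_{\mathbf{0}}=\infty\).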

Remark 3

The maximum of \(H_{j}(T_{\varvec{\kappa }})\) can be bounded by the constant \(h_\mathrm{max}\), defined as

We note that the constant \(h_\mathrm{max}\) increases as the real part of the eigenvalue closest to the imaginary axis tends to zero (i.e., with decreasing damping).

In analogy with Theorem 1, we present here an integral formulation for steady-state solutions of the nonlinear system (3) under quasi-periodic forcing.

Theorem 2

(i) If \({\mathbf {z}}(t)\) is a quasi-periodic solution of the nonlinear system (3) with frequency basis \(\varvec{\Omega }\), then the nonlinear function \({\mathbf {R}}({\mathbf {z}}(t))\) is also quasi-periodic with the same frequency basis \(\varvec{\Omega }\) and \({\mathbf {z}}(t)\) must satisfy the integral equation:

(ii) Furthermore, any continuous, quasi-periodic solution \({\mathbf {z}}(t)\) of (18), with frequency basis \(\varvec{\Omega }\), is a quasi-periodic solution of the nonlinear system (3).

Proof

The proof is analogous to that for the periodic case (cf. Theorem 1). Again, the term \({{\mathbf {F}}(t)-{\mathbf {R}}({\mathbf {z}}(t))}\) is treated as a quasi-periodic forcing term. \(\square \)

Remark 4

With the Fourier expansion \( {\mathbf {z}}(t) = \sum _{\varvec{\kappa }\in {\mathbb {Z}}^k} {\mathbf {z}}_{\varvec{\kappa }} e^{i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle t} \), Eq. (18) can be equivalently written as

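where \( {\mathbf {R}}_{\varvec{\kappa }}\{{\mathbf {z}}\} \) are the Fourier coefficients of the quasi-periodic function \( {\mathbf {R}}({\mathbf {z}}(t)) \), defined as

\[ \mathbf{R}_{\varvec{\kappa}}\{\mathbf{z}\}=\lim_{\tau\to\infty}\frac{1}{\tau}\int_{0}^{\tau}\mathbf{R}(\mathbf{z}(t))\,e^{-i\left\langle \varvec{\kappa},\varvec{\Omega}\right\rangle t}\,\mathrm{d}t. \qquad (20) \]

If we express the quasi-periodic solution using toroidal coordinates \( \varvec{\theta } \in {\mathbb {T}}^{k}\) such that \( {{\mathbf {z}}(t) = {\mathbf {u}}(\varvec{\Omega }t)} \), where \( {{\mathbf {u}}:{\mathbb {T}}^k\rightarrow {\mathbb {R}}^{2n}} \) is the torus function, then we can express the Fourier coefficients as

\[ \mathbf{R}_{\varvec{\kappa}}\{\mathbf{z}\}=\frac{1}{(2\pi)^{k}}\int_{\mathbb{T}^{k}}\mathbf{R}(\mathbf{u}(\varvec{\theta}))\,e^{-i\left\langle \varvec{\kappa},\varvec{\theta}\right\rangle}\,\mathrm{d}\varvec{\theta}. \qquad (21) \]
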
This helps avoid the infinite limit in the integral (20), which can pose numerical difficulties (cf. Schilder et al. [41], Mondelo González [42]). For this reason, our supplementary MATLAB® code uses torus coordinates to formulate quasi-periodic oscillations.
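The torus form (21) lends itself to a simple quadrature: for smooth torus functions the uniform-grid (rectangle) rule on \(\mathbb{T}^{k}\) converges rapidly, and it is exact for trigonometric polynomials of sufficiently low degree. The sketch below illustrates this for k = 2 with a hypothetical torus function \(u(\varvec{\theta})=\cos\theta_{1}+\tfrac{1}{2}\cos\theta_{2}\) and the quadratic nonlinearity \(R(u)=u^{2}\). It is not taken from the supplementary MATLAB® code, whose implementation may differ.

```python
import cmath
import itertools
import math


def torus_fourier_coefficient(func, kappa, M=32):
    """Approximate (2*pi)^(-k) * int_{T^k} func(theta) exp(-i <kappa, theta>) dtheta.

    A uniform M^k grid is used; this rectangle rule is exact for trigonometric
    polynomials when all components of kappa and of func's harmonics are below M/2.
    """
    k = len(kappa)
    grid = [2.0 * math.pi * m / M for m in range(M)]
    total = 0j
    for theta in itertools.product(grid, repeat=k):
        phase = sum(kj * th for kj, th in zip(kappa, theta))
        total += func(theta) * cmath.exp(-1j * phase)
    return total / M**k


def u(theta):
    """Hypothetical scalar torus function on T^2."""
    return math.cos(theta[0]) + 0.5 * math.cos(theta[1])


def R_of_u(theta):
    """Quadratic nonlinearity evaluated along the torus function."""
    return u(theta) ** 2


c11 = torus_fourier_coefficient(R_of_u, (1, 1), M=16)
c00 = torus_fourier_coefficient(R_of_u, (0, 0), M=16)
print(c11, c00)  # approximately 0.25 and 0.625 (up to round-off)
```

The expected values follow by expanding \(u^{2}\): the mixed term \(\cos\theta_{1}\cos\theta_{2}\) contributes \(1/4\) to the \((1,1)\) harmonic, and the mean is \(1/2+1/8\).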

The present integral equation formulation (11), (18) assumes knowledge of the eigenvectors and eigenvalues of the linearized system. These are usually computed numerically and may pose a computational challenge for very high-dimensional systems. Nonetheless, this computation needs to be performed only once for the full system, not repeatedly for each forcing frequency. We therefore expect the diagonalization at the linear level to account for only a fraction of the total computational cost of computing forced response and backbone curves. The special case of proportional damping and purely position-dependent nonlinearities further alleviates these computational challenges by halving the dimensionality, as we discuss in the following section.

2.3 Special case: structural damping and purely geometric nonlinearities

The results in Sects. 2.1 and 2.2 apply to general first-order systems of the form (3). The special case of second-order mechanical systems with proportional damping and purely geometric nonlinearities, however, is of significant interest to structural dynamicists (cf. Gérardin and Rixen [39]). These general results can be simplified for such systems, resulting in integral equations with half the dimensionality of Eqs. (11) and (18), as we discuss in this section.

We assume that the damping matrix \({\mathbf {C}}\) satisfies the proportional damping hypothesis, i.e., that it can be expressed as a linear combination of \({\mathbf {M}}\) and \({\mathbf {K}}\). We also assume that the nonlinearities depend on the positions only, i.e., we can simply write \({\mathbf {S}}({\mathbf {x}})\). The equations of motion are, therefore, given by

\[ \mathbf{M}\ddot{\mathbf{x}}+\mathbf{C}\dot{\mathbf{x}}+\mathbf{K}\mathbf{x}+\mathbf{S}(\mathbf{x})=\mathbf{f}(t). \qquad (22) \]

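Then, the real eigenvectors \({\mathbf {u}}_{j}\) of the undamped eigenvalue problem satisfy

\[ \left(\mathbf{K}-\omega_{0,j}^{2}\mathbf{M}\right)\mathbf{u}_{j}=\mathbf{0},\qquad j=1,\ldots,n, \qquad (23) \]
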
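where \(\omega _{0,j}\) is the eigenfrequency of the undamped vibration mode \({\mathbf {u}}_{j}\in {\mathbb {R}}^{n}\). These eigenvectors (or modes) can be used to diagonalize the linear part of (22) using the linear transformation \({{\mathbf {x}}={\mathbf {U}}{\mathbf {y}}}\), where \({{\mathbf {y}}\in {\mathbb {R}}^{n}}\) represents the modal variables and \({\mathbf {U}}=\left[ {\mathbf {u}}_{1},\ldots ,{\mathbf {u}}_{n}\right] \in {\mathbb {R}}^{n\times n}\) is the modal transformation matrix containing the vibration modes. Thus, the decoupled system of equations for the linear system,

\[ \mathbf{M}\ddot{\mathbf{x}}+\mathbf{C}\dot{\mathbf{x}}+\mathbf{K}\mathbf{x}=\mathbf{f}(t), \qquad (24) \]

is given by

\[ \mathbf{U}^{\top}\mathbf{M}\mathbf{U}\ddot{\mathbf{y}}+\mathbf{U}^{\top}\mathbf{C}\mathbf{U}\dot{\mathbf{y}}+\mathbf{U}^{\top}\mathbf{K}\mathbf{U}\mathbf{y}=\mathbf{U}^{\top}\mathbf{f}(t). \qquad (25) \]
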
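Specifically, the jth equation of (25), governing the modal coordinate \(y_{j}\), is customarily written in the vibrations literature as

\[ \ddot{y}_{j}+2\zeta_{j}\omega_{0,j}\dot{y}_{j}+\omega_{0,j}^{2}y_{j}=\varphi_{j}(t),\qquad j=1,\ldots,n, \qquad (26) \]
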
where \(\omega _{0,j}=\sqrt{\frac{{\mathbf {u}}_{j}^{\top }{\mathbf {K}}{\mathbf {u}}_{j}}{{\mathbf {u}}_{j}^{\top }{\mathbf {M}}{\mathbf {u}}_{j}}}\) are the undamped natural frequencies; \(\zeta _{j}=\frac{1}{2\omega _{0,j}}\left( \frac{{\mathbf {u}}_{j}^{\top }{\mathbf {C}}{\mathbf {u}}_{j}}{{\mathbf {u}}_{j}^{\top }{\mathbf {M}}{\mathbf {u}}_{j}}\right) \) are the modal damping coefficients; and \(\varphi _{j}(t)=\frac{{\mathbf {u}}_{j}^{\top }{\mathbf {f}}(t)}{{\mathbf {u}}_{j}^{\top }{\mathbf {M}}{\mathbf {u}}_{j}}\) are the modal participation factors. The eigenvalues of the corresponding full system in phase space can be arranged as follows:

\[ \lambda_{2j-1,2j}=-\zeta_{j}\omega_{0,j}\pm\omega_{0,j}\sqrt{\zeta_{j}^{2}-1},\qquad j=1,\ldots,n. \qquad (27) \]

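The modal quantities above are Rayleigh-type quotients and can be evaluated directly. The sketch below computes \(\omega_{0,j}\), \(\zeta_{j}\), \(\varphi_{j}\) (at one time instant) and the eigenvalue pair \(-\zeta_{j}\omega_{0,j}\pm\omega_{0,j}\sqrt{\zeta_{j}^{2}-1}\) for a hypothetical two-degree-of-freedom system with proportional damping \(\mathbf{C}=0.1\mathbf{M}+0.01\mathbf{K}\), whose undamped modes \((1,1)\) and \((1,-1)\) are known in closed form. Plain Python lists stand in for matrices; names and numbers are illustrative.

```python
import cmath
import math


def quad_form(a, A, b):
    """a^T A b for a square matrix A stored as a list of rows."""
    return sum(a[i] * A[i][j] * b[j] for i in range(len(a)) for j in range(len(b)))


def modal_quantities(u, M, C, K, f):
    """omega_0, zeta, modal forcing phi and the eigenvalue pair of the mode u."""
    m = quad_form(u, M, u)
    omega0 = math.sqrt(quad_form(u, K, u) / m)
    zeta = quad_form(u, C, u) / m / (2.0 * omega0)
    phi = sum(ui * fi for ui, fi in zip(u, f)) / m
    root = omega0 * cmath.sqrt(zeta**2 - 1.0)  # imaginary for underdamped modes
    return omega0, zeta, phi, (-zeta * omega0 + root, -zeta * omega0 - root)


# Hypothetical two-degree-of-freedom example with C = 0.1 M + 0.01 K.
M = [[1.0, 0.0], [0.0, 1.0]]
K = [[2.0, -1.0], [-1.0, 2.0]]
C = [[0.1 * M[i][j] + 0.01 * K[i][j] for j in range(2)] for i in range(2)]
f = [1.0, 0.0]  # forcing vector at a single time instant
omega0, zeta, phi, lams = modal_quantities([1.0, 1.0], M, C, K, f)
print(omega0, zeta, phi)  # approximately 1.0, 0.055, 0.5
```

Both eigenvalues have negative real part whenever \(\zeta_{j}>0\), which is the property used in Remark 7.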
With the constants

we can restate Lemma 1 specifically for linear systems with proportional damping as follows.

Lemma 3

For T-periodic forcing \({\mathbf {f}}(t)\), i.e., \({\mathbf {f}}(t+T)={\mathbf {f}}(t),\,t\in {\mathbb {R}},\,T>0\) and under the non-resonance conditions (7), there exists a unique T-periodic response for system (24), given by

\[ \mathbf{x}(t)=\mathbf{U}\int_{0}^{T}\mathbf{L}(t-s,T)\,\varvec{\varphi}(s)\,\mathrm{d}s, \qquad (29) \]

where \({\mathbf {L}}(t,T)\) is the diagonal Green’s function matrix for the modal displacement variables defined as

and \(\varvec{\varphi }(s)=[\varphi _{1}(s),\dots ,\varphi _{n}(s)]^{\top }\) is the forcing vector in modal coordinates.

Proof

See “Appendix D”. \(\square \)

The periodic Green’s function \(L_{j}(t,T)\) for a single-degree-of-freedom, underdamped harmonic oscillator has already been derived in the controls literature (see, e.g., Kovaleva [43], p. 19, formula (1.40), or Babitsky [44], p. 90). These authors also note a simplification when the periodic forcing function has odd symmetry with respect to half the period (e.g., sinusoidal forcing), in which case the integral can be taken over just half the period with a different Green’s function. Kovaleva [43] also lists the Green’s function for an undamped multi-degree-of-freedom system in transfer-function notation. In summary, formula (30) does not seem to appear in the vibrations literature, but the earlier controls literature contains simpler forms of it (single-degree-of-freedom modal form with damping, or multi-dimensional form without damping in modal coordinates), albeit for the underdamped case only.

Kovaleva [43] also observes for undamped multi-degree-of-freedom systems that an integral equation with this Green’s function can be written out for nonlinear systems, and then refers to Rosenwasser [45] for existence conditions and approximate solution methods. Chapter 4.2 of Babitsky and Krupenin [46] also discusses this material in the context of the response of linear discontinuous systems, citing Rosenwasser [45] for a similar formulation. We formalize and generalize these discussions as a theorem here:

Theorem 3

(i) If \({\mathbf {x}}(t)\) is a T-periodic solution of the nonlinear system (22), then \({\mathbf {x}}(t)\) must satisfy the integral equation

with \({\mathbf {L}}\) defined in (30).

(ii) Furthermore, any continuous, T-periodic solution \({\mathbf {x}}(t)\) of (31) is a T-periodic solution of the nonlinear system (22).

Proof

This result is just a special case of Theorem 1, with the specific form of the Green’s function listed in (30). \(\square \)

Remark 5

Once a solution to (31) is obtained for the position variables \({\mathbf {x}}\) (cf. Sect. 3 for solution methods), the corresponding velocity \(\dot{{\mathbf {x}}}\) can be recovered as

where \({\mathbf {J}}(t-s,T)={\mathrm {diag}}\left( J_{1}(t-s,T),\ldots ,J_{n}(t-s,T)\right) \in {\mathbb {R}}^{n\times n}\) is the diagonal Green’s matrix whose diagonal elements are given by

as shown in “Appendix D”.

Finally, the following result extends the integral equation formulation of Theorem 3 to quasi-periodic forcing.

Theorem 4

(i) If \({\mathbf {x}}(t)\) is a quasi-periodic solution of the nonlinear system (22) with frequency basis \(\varvec{\Omega }\), and the nonlinear function \({\mathbf {S}}({\mathbf {x}}(t))\) is also quasi-periodic with the same frequency basis \(\varvec{\Omega }\), then \({\mathbf {x}}(t)\) must satisfy the integral equation:

(ii) Furthermore, any continuous quasi-periodic solution \({\mathbf {x}}(t)\) to (33), with frequency basis \(\varvec{\Omega }\), is a quasi-periodic solution of the nonlinear system (1).

Proof

This theorem is just a special case of Theorem 2.

\(\square \)

In analogy with Remark 4, we make the following remark for geometric nonlinearities and structural damping.

Remark 6

With the Fourier expansion \( {\mathbf {x}}(t) = \sum _{\varvec{\kappa }\in {\mathbb {Z}}^k} {\mathbf {x}}_{\varvec{\kappa }} e^{i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle t} \), Eq. (33) can be equivalently written as the system

where

is the diagonal matrix of the amplification factors, which are explicitly given by

as derived in “Appendix I”.
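Substituting a single harmonic \(\varphi_{j}e^{i\omega t}\) into the modal equation \(\ddot{y}_{j}+2\zeta_{j}\omega_{0,j}\dot{y}_{j}+\omega_{0,j}^{2}y_{j}=\varphi_{j}(t)\) gives the steady-state factor, denoted Q here, \(Q=1/(\omega_{0,j}^{2}-\omega^{2}+2i\zeta_{j}\omega_{0,j}\omega)\), with \(\omega=\langle\varvec{\kappa},\varvec{\Omega}\rangle\) for each harmonic. We assume this is the form of the amplification factors in Remark 6; the sketch is derived here, not copied from “Appendix I”. It assembles the quasi-periodic modal response harmonic by harmonic, checks it against the modal equation with finite differences, and confirms that \(|Q|\) peaks near \(\omega_{0,j}\sqrt{1-2\zeta_{j}^{2}}\). Parameter values are hypothetical.

```python
import cmath
import math


def amplification_factor(omega, omega0, zeta):
    """Y/phi for y'' + 2 zeta omega0 y' + omega0^2 y = phi * exp(i omega t)."""
    return 1.0 / complex(omega0**2 - omega**2, 2.0 * zeta * omega0 * omega)


def harmonic_frequency(kappa, Omega):
    return sum(k * om for k, om in zip(kappa, Omega))


def modal_response(t, omega0, zeta, Omega, coeffs):
    """y(t) = sum over kappa of Q(<kappa, Omega>) phi_kappa exp(i <kappa, Omega> t)."""
    total = 0j
    for kappa, c in coeffs.items():
        w = harmonic_frequency(kappa, Omega)
        total += amplification_factor(w, omega0, zeta) * c * cmath.exp(1j * w * t)
    return total


def modal_forcing(t, Omega, coeffs):
    return sum(c * cmath.exp(1j * harmonic_frequency(kappa, Omega) * t)
               for kappa, c in coeffs.items())


omega0, zeta = 2.0, 0.05
Omega = (1.0, math.sqrt(3.0))
coeffs = {(1, 0): 1.0, (0, 1): 0.4, (2, -1): 0.1}
t, h = 1.3, 1e-4


def y(s):
    return modal_response(s, omega0, zeta, Omega, coeffs)


# Finite-difference residual of the modal equation at time t.
residual = ((y(t + h) - 2 * y(t) + y(t - h)) / h**2
            + 2 * zeta * omega0 * (y(t + h) - y(t - h)) / (2 * h)
            + omega0**2 * y(t) - modal_forcing(t, Omega, coeffs))

# Location of the resonance peak of |Q| on a fine frequency grid.
grid = [1.5 + 1e-4 * i for i in range(10001)]
peak = max(grid, key=lambda w: abs(amplification_factor(w, omega0, zeta)))
print(abs(residual), peak, omega0 * math.sqrt(1 - 2 * zeta**2))
```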

Remark 7

The non-resonance conditions (7) and (13) are generically satisfied by dissipative systems as described in this section since none of the eigenvalues (27) are purely imaginary.

2.4 The unforced conservative case

In contrast to dissipative systems, which have isolated (quasi-) periodic solutions in response to (quasi-) periodic forcing, unforced conservative systems will generally exhibit families of periodic or quasi-periodic orbits (cf. Kelley [47] or Arnold [48]). The calculation of (quasi-) periodic orbits in an autonomous system such as

\[ \mathbf{B}\dot{\mathbf{z}}=\mathbf{A}\mathbf{z}-\mathbf{R}(\mathbf{z}) \qquad (36) \]

differs from that in the forced case for two main reasons:

1. The frequencies of such (quasi-) periodic oscillations are intrinsic to the system. This means that the time period T, or the base frequency vector \(\varvec{\Omega }\), of the response is a priori unknown.

2. Any given (quasi-) periodic solution \({\mathbf {z}}(t)\) to the autonomous system (36) is part of a family of (quasi-) periodic solutions, obtained from one another by an arbitrary phase shift \(\theta \in {\mathbb {R}}\).

Nonetheless, Theorems 1–4 still hold for system (36) with the external forcing function set to zero. Special care needs to be taken, however, in the numerical implementation of these results for unforced mechanical systems, as we shall discuss in “Appendix J.1.1”.

3 Iterative solution of the integral equations

We would like to solve integral equations of the form (cf. Theorems 1 and 3)

to obtain periodic solutions, or integral equations of the form (cf. Theorems 2 and 4)

to obtain quasi-periodic solutions of system (3). In the following, we propose iterative methods to solve these equations. We first discuss a Picard iteration and then a Newton–Raphson scheme.

3.1 Picard iteration

Picard [49] proposed an iteration scheme to show local existence of solutions to ordinary differential equations; the scheme is also used as a practical iteration method to approximate solutions of boundary value problems in numerical analysis (cf. Bailey et al. [50]). We derive explicit conditions for the convergence of the Picard iteration when applied to Eqs. (37) and (38).

3.1.1 Periodic response

We define the right-hand side of the integral equation (11) as the mapping \(\varvec{\mathcal {G}}_{P}\) acting on the phase space vector \({\mathbf {z}}\), i.e.,

Clearly, a fixed point of the mapping \({\varvec{\mathcal {G}}}_{P}\) in (39) corresponds to a periodic steady-state response of system (1) by Theorem 1. Starting with an initial guess \({\mathbf {z}}_{0}(t)\) for the periodic orbit, the Picard iteration applied to the mapping (39) is given by

\[ \mathbf{z}_{\ell+1}=\varvec{\mathcal{G}}_{P}(\mathbf{z}_{\ell}),\qquad \ell=0,1,2,\ldots \qquad (40) \]

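To derive a convergence criterion for the Picard iteration, we define the sup norm \({\left\| \cdot \right\| _{0}=\max _{t\in [0,T]}\left| \cdot \right| }\) and consider a \(\delta \)-ball of continuous, T-periodic functions centered at \({\mathbf {z}}_{0}\):

\[ C_{\delta}^{\mathbf{z}_{0}}[0,T]=\left\{ \mathbf{z}\in C^{0}([0,T]):\ \mathbf{z}(0)=\mathbf{z}(T),\ \left\| \mathbf{z}-\mathbf{z}_{0}\right\|_{0}\le\delta\right\}. \qquad (41) \]
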
We further define the first iterate under the map \( \varvec{\mathcal {G}}_{P} \) as

and denote by \(L_{\delta }^{{\mathbf {z}}_{0}}\) a uniform-in-time Lipschitz constant for the nonlinearity \({\mathbf {R}}({\mathbf {z}})\) with respect to its argument \({\mathbf {z}}\) within \(C_{\delta }^{{\mathbf {z}}_{0}}[0,T]\). With this notation, we obtain the following theorem on the convergence of a Picard iteration performed on (37).

Theorem 5

If the conditions

hold for some real number \(a\ge 1\), then the mapping \(\varvec{\mathcal {G}}_{P}\) defined in Eq. (39) has a unique fixed point in the space (41), and this fixed point can be found via the successive approximation

Proof

The proof relies on the Banach fixed point theorem. We establish that the mapping (39) is well defined on the space (41). Subsequently, we prove that under conditions (43), (44), the mapping (39) is a contraction. We detail all this in “Appendix G”. \(\square \)

Remark 8

If the nonlinearity \({\mathbf {R}}({\mathbf {z}})\) is not only Lipschitz but also of class \(C^{1}\) with respect to \({\mathbf {z}}\), then condition (43) can be more specifically written as

Remark 9

In the case of geometric (purely position-dependent) nonlinearities and proportional damping (cf. Sect. 2.3), we can avoid iterating in the \(2n\)-dimensional phase space by defining the iteration as

The existence of the steady-state solution and the convergence of the iteration (47) can be proven analogously.

Babitsky [44] derives, via transfer functions, an iteration similar to (45) but without an explicit convergence proof. He observes that the iteration is sensitive to the choice of the initial guess \({\mathbf {z}}_{0}\). We can confirm this directly by examining condition (44): the norm of the initial error \(\left\| \varvec{\mathcal {E}}\right\| _{0}\) is small for a good initial guess, and therefore the \(\delta \)-ball in which condition (43) on the Lipschitz constant needs to be satisfied can be chosen small.

When no a priori information about the expected steady-state response is available, we can select \({\mathbf {z}}_{0}(t)\equiv {\mathbf {0}}\). Then, the term \(\varvec{\mathcal {E}}(0,t)\) is equal to the forced response of the linear system [cf. Eq. (42)]. In this case, the Lipschitz constant needs to be calculated for a \(\delta \)-ball centered at the origin.

The constant \(\varGamma (T)\) [cf. Eq. (10)] affects the convergence of the iteration (45). Three factors make the right-hand side of (43) larger and are therefore beneficial to the convergence of the iteration: larger damping (i.e., smaller \(e^{\mathrm{Re}(\lambda _{j})T}\)), a larger distance of the forcing frequency \(2\pi /T\) from the natural frequencies (i.e., larger \(|1-e^{\lambda _{j}T}|\)), and higher forcing frequencies (i.e., smaller \(T\)). Likewise, a good initial guess (i.e., smaller \(\left\| \varvec{\mathcal {E}}\right\| _{0}\)) and weaker nonlinearities (i.e., smaller \(\left| DS_{j}({\mathbf {x}})\right| \)) both make the left-hand side of (43) smaller and are similarly beneficial. In structural vibrations, higher frequencies, smaller forcing amplitudes, and forcing frequencies well separated from the natural frequencies of the system are realistic and favor convergence. At the same time, low damping is also typical of such systems and hinders convergence.
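The role of resonance and damping can be made concrete with a per-mode quantity, derived here for illustration only; it is not the constant \(\varGamma (T)\) of Eq. (10), which is not reproduced here. Take a single mode with eigenvalue \(\lambda \), \(\mathrm{Re}\,\lambda <0\). Its \(T\)-periodic Green's function is \(g(\tau )=e^{\lambda \tau }/(1-e^{\lambda T})\) on \([0,T)\), extended periodically. It satisfies \(\Vert y\Vert _{0}\le \gamma \Vert \varphi \Vert _{0}\) for \(y(t)=\int _0^T g(t-s)\varphi (s)\,ds\), with

\[\gamma =\int _0^T |g(\tau )|\,d\tau =\frac{1-e^{\mathrm{Re}(\lambda )T}}{|\mathrm{Re}\,\lambda |\,|1-e^{\lambda T}|}.\]

At resonance, \(\gamma \) grows to \(1/(\zeta \omega _0)\). The sketch below evaluates this gain; the function names are hypothetical.

```python
import numpy as np


def modal_gain(lam, Omega):
    """Sup-norm gain gamma of the T-periodic Green's-function convolution for one mode.

    gamma = int_0^T |g(tau)| dtau with g(tau) = exp(lam*tau) / (1 - exp(lam*T)) on [0, T).
    Requires Re(lam) < 0 (a damped mode).
    """
    T = 2.0 * np.pi / Omega
    a = lam.real
    return (1.0 - np.exp(a * T)) / (-a * abs(1.0 - np.exp(lam * T)))


def oscillator_eigenvalue(omega0, zeta):
    """Eigenvalue -zeta*omega0 + i*omega0*sqrt(1 - zeta^2) of an underdamped mode."""
    return complex(-zeta * omega0, omega0 * np.sqrt(1.0 - zeta**2))


lam = oscillator_eigenvalue(1.0, 0.01)
for Omega in (0.5, 0.9, lam.imag, 1.1, 2.0):
    print(f"Omega = {Omega:.4f}: gamma = {modal_gain(lam, Omega):8.2f}")
```

Doubling the damping halves the resonant gain, while detuning the forcing frequency reduces the gain by orders of magnitude. Both effects are consistent with the qualitative discussion above.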

An advantage of the Picard iteration discussed here is that it converges monotonically. Hence, an upper estimate for the error after a finite number of iterations is readily available from the sup norm of the difference between the last two iterates. This can be exploited in numerical schemes to stop the iteration once the required precision is reached.
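The stopping rule can be made precise with the a posteriori estimate of the Banach fixed point theorem. If the map is a contraction with constant \(q<1\) in the sup norm, then \(\Vert {\mathbf z}_{l}-{\mathbf z}^{*}\Vert _{0}\le \frac{q}{1-q}\Vert {\mathbf z}_{l}-{\mathbf z}_{l-1}\Vert _{0}\). For \(q\le 1/2\), the factor is at most one, so the last update itself bounds the error. The minimal sketch below assumes that \(q\) is known; in practice, it would have to be estimated. The toy map is hypothetical.

```python
import numpy as np


def picard_with_error_bound(G, z0, q, tol=1e-12, max_iter=1000):
    """Iterate z <- G(z) for a sup-norm contraction with constant q < 1.

    Stops once the a posteriori bound q/(1-q) * ||z_l - z_{l-1}||_0 drops below tol
    and returns the last iterate together with that error bound.
    """
    z = np.asarray(z0, dtype=float)
    for it in range(1, max_iter + 1):
        z_new = G(z)
        bound = q / (1.0 - q) * np.max(np.abs(z_new - z))
        z = z_new
        if bound < tol:
            return z, bound, it
    raise RuntimeError("no convergence within max_iter iterations")


# Toy contraction: each component of 0.5*cos(z) + b has Lipschitz constant 0.5.
b = np.array([0.1, -0.3])


def G(z):
    return 0.5 * np.cos(z) + b


z, bound, n_iter = picard_with_error_bound(G, np.zeros(2), q=0.5, tol=1e-6)
```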

3.1.2 Quasi-periodic response

We now consider the existence of a quasi-periodic solution under a Picard iteration of Eq. (18), a question that appears to have been entirely absent from the literature. We rewrite the right-hand side of the integral equation (18) as the mapping

where we have made use of the Fourier expansion defined in Remark 4.

We consider a space of quasi-periodic functions with frequency basis \(\varvec{\Omega }\). As in the periodic case (cf. Sect. 3.1.1), we restrict the iteration to a \(\delta \)-ball \(C_{\delta }^{{\mathbf {z}}_{0}}\left( \varvec{\Omega }\right) \) of radius \(\delta \) centered at the initial guess \({\mathbf {z}}_{0}\), i.e.,

where \(\left\| \cdot \right\| _{0}=\max _{\varvec{\theta }\in {\mathbb {T}}^k}\left| \cdot \right| \) denotes the supremum norm over the torus \( {\mathbb {T}}^k \). We then have the following theorem.

Theorem 6

If the conditions

hold for some real number \(a\ge 1\), then the mapping \(\varvec{\mathcal {G}}_{Q}\) defined in Eq. (48) has a unique fixed point in the space (49) and this fixed point can be found via the successive approximation

Proof

The proof is analogous to that of Theorem 5. We first establish that the mapping (48) is well defined on the space (49). In “Appendix H”, we then show that the mapping (48) is a contraction under conditions (50) and (51). \(\square \)

Remark 10

In the case of geometric (position-dependent) nonlinearities and proportional damping, we can halve the dimension of the iteration (52) using (34). This results in the following equivalent Picard iteration:

The existence of the steady-state solution and the convergence of the iteration (53) can be proven analogously.

As in the periodic case, the convergence of the iteration (52) depends on the quality of the initial guess and on the constant \(h_\mathrm{max}\) [cf. Eq. (17)], the maximum amplification factor. Low damping results in a higher amplification factor and therefore affects the convergence negatively, mirroring the criterion derived in the periodic case.
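The sketch below implements the quasi-periodic Picard iteration in the position-only form of Remark 10. It uses a hypothetical single-degree-of-freedom Duffing oscillator forced at two incommensurate frequencies. The response is represented on a uniform grid on the 2-torus, and the harmonic \(\varvec{\kappa }\) is multiplied by the amplification factor \(1/(k-m\langle \varvec{\kappa },\varvec{\Omega }\rangle ^2+ic\langle \varvec{\kappa },\varvec{\Omega }\rangle )\). The largest of these factors on the grid serves as a discretized stand-in for \(h_\mathrm{max}\); it is not Eq. (17) itself. The names and parameters are our own.

```python
import numpy as np


def picard_quasiperiodic(Omegas, forcing, S, m=1.0, c=0.02, k=1.0,
                         N=32, tol=1e-10, max_iter=500):
    """Position-only Picard iteration for m q'' + c q' + k q + S(q) = f(theta).

    theta = (Omega_1 t, Omega_2 t) lives on the 2-torus, sampled on an N x N grid.
    Returns the grid, q(theta1, theta2), the iteration count and the largest
    amplification factor on the grid.
    """
    th = 2.0 * np.pi * np.arange(N) / N
    TH1, TH2 = np.meshgrid(th, th, indexing="ij")
    kap = np.fft.fftfreq(N, d=1.0 / N)
    K1, K2 = np.meshgrid(kap, kap, indexing="ij")
    w = K1 * Omegas[0] + K2 * Omegas[1]           # <kappa, Omega> per harmonic
    H = 1.0 / (k - m * w**2 + 1j * c * w)         # amplification factors
    F = forcing(TH1, TH2)
    q = np.zeros_like(F)
    for it in range(1, max_iter + 1):
        q_new = np.fft.ifft2(H * np.fft.fft2(F - S(q))).real
        step = np.max(np.abs(q_new - q))          # sup norm over the torus grid
        q = q_new
        if step < tol:
            return TH1, TH2, q, it, np.max(np.abs(H))
    raise RuntimeError("quasi-periodic Picard iteration did not converge")


Omegas = (0.7, 0.7 * np.sqrt(5.0))                # incommensurate frequency pair


def forcing(th1, th2):
    return 0.01 * np.cos(th1) + 0.01 * np.cos(th2)


TH1, TH2, q, n_iter, h_max = picard_quasiperiodic(Omegas, forcing, lambda q: 0.5 * q**3)
# The physical response is q(Omega_1 t, Omega_2 t); its sup over the torus bounds it.
print(n_iter, h_max, np.max(np.abs(q)))
```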

3.1.3 Unforced conservative case

In the unforced conservative case, \({\mathbf {z}}(t)\equiv {\mathbf {0}}\) is the trivial fixed point of the maps \(\varvec{\mathcal {G}}_{P}\) and \(\varvec{\mathcal {G}}_{Q}\). Thus, by Theorems 5 and 6, a Picard iteration started from an initial guess near the origin converges to this trivial fixed point. In practice, the simple Picard approach is highly sensitive to the choice of the initial guess when non-trivial solutions of unforced conservative systems are sought. In such cases, more advanced iterative schemes equipped with continuation algorithms, such as the ones we describe next, are preferable.

3.2 Newton–Raphson iteration

So far, we have described a fast iteration process and given bounds on its expected region of convergence. We concluded that if the iteration converges, it leads to the unique (quasi-) periodic solution of the system (1). As discussed previously (cf. Sect. 3.1), our convergence criteria for the Picard iteration will not be satisfied for near-resonant forcing and low damping. However, even if the Picard iteration fails to converge, one or more periodic orbits may still exist.

A common alternative to the contraction mapping approach proposed above is the Newton–Raphson scheme (cf., e.g., Kelley [51]). An advantage of this iteration is its quadratic convergence if the initial guess is close enough to the actual solution of the problem, which also makes it appealing in a continuation setting. We first derive the Newton–Raphson scheme for periodically forced systems and then for quasi-periodically forced systems.

3.2.1 Periodic case

To set up a Newton–Raphson iteration, we reformulate the fixed point problem (37) with the help of a functional \(\varvec{\mathcal {F}}_{P}\) whose zeros need to be determined:

Starting with an initial solution guess \({\mathbf {z}}_{0}\), we formulate the iteration for the zero of the functional \(\varvec{\mathcal {F}}_{P}\) as

where the second equation in (55) can be written using the Gateaux derivative of \( \varvec{\mathcal {F}}_{P} \) as

Equation (56) is a linear integral equation in \(\varvec{\mu }_{l}\), where \({\mathbf {z}}_{l}\) is the known approximation to the solution of (54) at the \(l\)th iteration step.
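The following minimal sketch shows the Newton–Raphson iteration (55)–(56) after discretization. It assumes a hypothetical single-degree-of-freedom Duffing oscillator in position-only form. Sampling \(q\) at \(N\) points turns the Green's-function convolution into a dense \(N\times N\) matrix \({\mathbf L}\). The functional becomes \({\mathbf q}-{\mathbf L}({\mathbf f}-{\mathbf S}({\mathbf q}))\), its Gateaux derivative becomes \({\mathbf I}+{\mathbf L}\,\mathrm{diag}(S'({\mathbf q}))\), and each correction step is a dense linear solve. The forcing is placed at the linear resonance, where a Lipschitz-based contraction estimate for the Picard iteration is no longer satisfied. The function names are our own.

```python
import numpy as np


def green_matrix(Omega, N, m=1.0, c=0.02, k=1.0):
    """Dense matrix of the periodic Green's-function convolution on N samples (1 DOF)."""
    w = np.fft.fftfreq(N, d=1.0 / N) * Omega
    H = 1.0 / (k - m * w**2 + 1j * c * w)
    return np.fft.ifft(H[:, None] * np.fft.fft(np.eye(N), axis=0), axis=0).real


def newton_periodic(Omega, f, S, dS, N=64, tol=1e-10, max_iter=30):
    """Newton-Raphson iteration for F_P(q) = q - L (f - S(q)) = 0."""
    t = np.arange(N) * (2.0 * np.pi / Omega) / N
    L = green_matrix(Omega, N)
    fs = f(t)
    q = np.zeros(N)                               # initial guess q0 = 0
    for it in range(max_iter):
        Fq = q - L @ (fs - S(q))                  # functional F_P at the current iterate
        if np.max(np.abs(Fq)) < tol:
            return t, q, it
        J = np.eye(N) + L @ np.diag(dS(q))        # discretized Gateaux derivative
        q = q + np.linalg.solve(J, -Fq)           # correction step mu_l
    raise RuntimeError("Newton-Raphson iteration did not converge")


Omega, kap = 1.0, 0.5                             # forcing at the linear resonance
t, q, n_steps = newton_periodic(
    Omega,
    lambda t: 0.003 * np.cos(Omega * t),
    lambda q: kap * q**3,
    lambda q: 3.0 * kap * q**2,
)
print(n_steps, np.max(np.abs(q)))
```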

3.2.2 Quasi-periodic case

In the quasi-periodic case, the steady-state solution of system (1) is given by the zeros of the functional

Analogous to the periodic case, the Newton–Raphson scheme seeks to find a zero of \({\varvec{\mathcal {F}}}_{Q}\) via the iteration:

To obtain the correction step \({\varvec{\nu }}_{l}\), the linear system of equations

needs to be solved for \({\varvec{\nu }}_{l}\). The Fourier coefficients \(\{D{\mathbf {R}}({\mathbf {z}}_{l}){\varvec{\nu }}_{l}\}_{\varvec{\kappa }}\) in (59) are then given by the formula

Remark 11

As noted in the Introduction, the results in Sects. 3.1.2 and 3.2.2 hold without any restriction on the number of independent frequencies allowed in the forcing function \( {\mathbf {f}}(t) \). This enables us to compute the steady-state response for arbitrarily complicated forcing functions, as long as they are well approximated by a finite Fourier expansion. Thus, random-like forcing can also be treated with the methods proposed here.
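The Fourier coefficients \(\{D{\mathbf R}({\mathbf z}_{l})\varvec{\nu }_{l}\}_{\varvec{\kappa }}\) needed in (59) come from a pointwise product on the torus. Their coefficients are therefore the discrete (circular) convolution of the coefficients of \(D{\mathbf R}({\mathbf z}_{l})\) and \(\varvec{\nu }_{l}\). A common way to evaluate them, sketched below as an assumption rather than as the method of the supplementary code, is pseudo-spectral: transform both factors to a torus grid, multiply, and transform back. The normalization \(c_{\varvec{\kappa }}=\) grid mean of \(u\,e^{-i\langle \varvec{\kappa },\varvec{\theta }\rangle }\) and the function names are our choices.

```python
import numpy as np


def torus_coefficients(u):
    """Fourier coefficients c_kappa = mean(u * exp(-i <kappa, theta>)) on a torus grid."""
    return np.fft.fftn(u) / u.size


def torus_samples(c):
    """Inverse of torus_coefficients: values of sum_kappa c_kappa exp(i <kappa, theta>)."""
    return np.fft.ifftn(c) * c.size


def product_coefficients(dR_samples, nu_hat):
    """Coefficients of DR(z_l) * nu_l from grid samples of DR(z_l) and coefficients of nu_l."""
    return torus_coefficients(dR_samples * torus_samples(nu_hat))


N = 8
th = 2.0 * np.pi * np.arange(N) / N
TH1, TH2 = np.meshgrid(th, th, indexing="ij")
dR = np.cos(TH1)                                  # e.g. S'(q_l) sampled on the torus
nu_hat = torus_coefficients(np.cos(TH2))          # coefficients of the correction nu_l
prod_hat = product_coefficients(dR, nu_hat)
# Coefficient of exp(i(theta1 + theta2)); equals 1/4 for cos(theta1)*cos(theta2).
print(np.round(prod_hat[1, 1].real, 12))
```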

3.3 Discussion of the iteration techniques and numerical solution

The Newton–Raphson iteration offers an alternative to the Picard iteration, especially when the system is forced near resonance and the convergence of the Picard iteration therefore cannot be guaranteed. At the same time, the Newton–Raphson iteration is computationally more expensive than the Picard iteration for two reasons. First, the evaluation of the Gateaux derivatives (56) and (59) can be expensive, especially if the Jacobian of the nonlinearity is not directly available. Second, the correction step \(\varvec{\mu }_{l}\) or \(\varvec{\nu }_{l}\) at each iteration involves the inversion of the corresponding linear operator in Eq. (56) or (59), which is costly for large systems.

Regarding the first issue, the tangent stiffness is often available in finite-element codes for structural vibration. When it is not, quasi-Newton schemes from the literature offer a way around this issue. They either provide a cost-effective but approximate Jacobian (e.g., Broyden’s method [52]) or avoid computing the Jacobian altogether (cf. Kelley [51]).
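As an illustration of the quasi-Newton route, the sketch below applies Broyden's "good" rank-one update [52] to the discretized functional \({\mathbf q}-{\mathbf L}({\mathbf f}-{\mathbf S}({\mathbf q}))\) of a hypothetical single-degree-of-freedom Duffing oscillator. With the initial Jacobian approximation \({\mathbf B}_0={\mathbf I}\), the first step coincides with a Picard step, and no derivative of \({\mathbf S}\) is ever evaluated. This is a generic textbook variant, not necessarily the scheme used in the supplementary code.

```python
import numpy as np


def broyden(F, x0, B0, tol=1e-10, max_iter=100):
    """Broyden's 'good' method: x <- x + s with B s = -F(x), then a rank-one update of B."""
    x = np.array(x0, dtype=float)
    B = np.array(B0, dtype=float)
    Fx = F(x)
    for it in range(max_iter):
        if np.max(np.abs(Fx)) < tol:
            return x, it
        s = np.linalg.solve(B, -Fx)
        x_new = x + s
        F_new = F(x_new)
        y = F_new - Fx
        B += np.outer(y - B @ s, s) / (s @ s)     # enforce the secant condition B_new s = y
        x, Fx = x_new, F_new
    raise RuntimeError("Broyden iteration did not converge")


# Discretized periodic problem q = L (f - S(q)) away from resonance (hypothetical data).
N, Omega, m, c, k, kap = 64, 2.0, 1.0, 0.02, 1.0, 0.5
w = np.fft.fftfreq(N, d=1.0 / N) * Omega
H = 1.0 / (k - m * w**2 + 1j * c * w)
L = np.fft.ifft(H[:, None] * np.fft.fft(np.eye(N), axis=0), axis=0).real
t = np.arange(N) * (2.0 * np.pi / Omega) / N
fs = 0.5 * np.cos(Omega * t)


def F_P(q):
    return q - L @ (fs - kap * q**3)


q, n_steps = broyden(F_P, np.zeros(N), np.eye(N))
```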

For the second issue, one can solve the linear system (56) or (59) iteratively, which avoids operator inversion when the system size is very large. A practical strategy for obtaining forced response curves of high-dimensional systems is to use the Picard iteration away from resonant forcing and switch to the Newton–Raphson approach when the Picard iteration fails. The Newton–Raphson approach has a better rate of convergence (quadratic) than the Picard approach (linear). Even so, the computational complexity of a single Picard iteration is an order of magnitude lower than that of a Newton–Raphson step, because of the additional cost of Jacobian evaluation and inversion in the correction step. In our experience with high-dimensional systems, whenever the Picard iteration converges, it is significantly faster than the Newton–Raphson iteration in terms of CPU time, even though it needs significantly more iterations to converge.
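The switching strategy described above can be sketched for a hypothetical single-degree-of-freedom Duffing oscillator. The solver first runs the cheap Picard iteration and abandons it as soon as the sup norm of the update grows, a heuristic divergence test of our own choosing. It then falls back to Newton–Raphson, restarted from the zero initial guess. The function names and the forcing values are hypothetical.

```python
import numpy as np


def green_matrix(Omega, N, m=1.0, c=0.02, k=1.0):
    """Dense matrix of the periodic Green's-function convolution on N samples (1 DOF)."""
    w = np.fft.fftfreq(N, d=1.0 / N) * Omega
    H = 1.0 / (k - m * w**2 + 1j * c * w)
    return np.fft.ifft(H[:, None] * np.fft.fft(np.eye(N), axis=0), axis=0).real


def solve_hybrid(Omega, amp, kap=0.5, N=64, tol=1e-10, picard_iter=200, newton_iter=50):
    """Steady state of q'' + 0.02 q' + q + kap q^3 = amp cos(Omega t).

    Returns the samples of q on one period and the name of the method that succeeded.
    """
    t = np.arange(N) * (2.0 * np.pi / Omega) / N
    L = green_matrix(Omega, N)
    fs = amp * np.cos(Omega * t)

    def S(q):
        return kap * q**3

    def dS(q):
        return 3.0 * kap * q**2

    # 1) Picard iteration; stop as soon as the update grows (heuristic divergence test).
    q, prev_step = np.zeros(N), np.inf
    for _ in range(picard_iter):
        q_new = L @ (fs - S(q))
        step = np.max(np.abs(q_new - q))
        if not np.isfinite(step) or step > prev_step:
            break
        q, prev_step = q_new, step
        if step < tol:
            return q, "picard"

    # 2) Newton-Raphson fallback, restarted from the zero initial guess.
    q = np.zeros(N)
    for _ in range(newton_iter):
        Fq = q - L @ (fs - S(q))
        if np.max(np.abs(Fq)) < tol:
            return q, "newton"
        J = np.eye(N) + L @ np.diag(dS(q))
        q = q + np.linalg.solve(J, -Fq)
    raise RuntimeError("neither Picard nor Newton-Raphson converged")


for Omega, amp in ((2.0, 0.05), (1.0, 0.01)):
    q, method = solve_hybrid(Omega, amp)
    print(f"Omega = {Omega}, amplitude = {amp}: {method}, max|q| = {np.max(np.abs(q)):.4f}")
```

Away from resonance, the Picard branch returns the solution. At resonance with the larger forcing, its update grows immediately and the Newton–Raphson fallback takes over.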

Both the Picard and the Newton–Raphson iterations are efficient solution techniques for a given forcing function. However, to obtain the steady-state response as a function of forcing amplitudes and frequencies, numerical continuation (cf. Dankowicz and Schilder [37], Doedel et al. [35], Dhooge et al. [36]) is required. We discuss numerical continuation in the context of the proposed integral equations approach in “Appendix J.1”.
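The pseudo-arc-length idea can be illustrated on a hypothetical scalar problem, \(H(x,\lambda )=x^3-x-\lambda =0\). Its solution branch has two folds, where natural continuation in \(\lambda \) breaks down. Each step predicts along the unit tangent and then corrects with Newton's method on the system bordered by the arc-length condition \(\varvec{\tau }\cdot ({\mathbf u}-{\mathbf u}_\mathrm{prev})=\Delta s\). In the setting of this paper, \(x\) would be replaced by the discretized (quasi-) periodic response and \(\lambda \) by a forcing parameter. That substitution is not shown here, and this sketch is not the implementation of “Appendix J.1”.

```python
import numpy as np


def H(u):
    """Hypothetical one-parameter problem H(x, lam) = x^3 - x - lam with two folds."""
    x, lam = u
    return np.array([x**3 - x - lam])


def dH(u):
    x, _ = u
    return np.array([[3.0 * x**2 - 1.0, -1.0]])


def unit_tangent(u, previous):
    """Unit vector spanning the null space of dH(u), oriented like `previous`."""
    a, b = dH(u)[0]
    tau = np.array([-b, a]) / np.hypot(a, b)
    return tau if tau @ previous >= 0 else -tau


def pseudo_arclength(u0, ds=0.05, steps=200, tol=1e-12):
    u = np.array(u0, dtype=float)
    tau = unit_tangent(u, np.array([0.0, 1.0]))   # start towards increasing lam
    path = [u.copy()]
    for _ in range(steps):
        v = u + ds * tau                          # predictor along the tangent
        for _ in range(20):                       # Newton corrector on the bordered system
            G = np.array([H(v)[0], tau @ (v - u) - ds])
            if np.max(np.abs(G)) < tol:
                break
            J = np.vstack([dH(v), tau])
            v = v - np.linalg.solve(J, G)
        tau = unit_tangent(v, tau)
        u = v
        path.append(u.copy())
    return np.array(path)


path = pseudo_arclength([-1.5, -1.875])
```

The bordered Jacobian stays nonsingular at the folds, where \(\partial H/\partial x=0\). This is what allows the branch to be followed through them.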

In the case of multiple coexisting equilibrium positions in the unforced-damped system, the persistence of these solutions as k-dimensional tori under small (quasi-) periodic forcing can be deduced from the general results of Haro and de la Llave [53]. Depending on the specific application, a selection of these solutions can be numerically continued with the described techniques. To explore bifurcation phenomena, such as the merging of these solutions, advanced continuation techniques, such as those of Dankowicz and Schilder [37], Doedel et al. [35] and Dhooge et al. [36], are needed.

Furthermore, note that \({\mathbf {z}}(t)\) in Eqs. (37) and (38) is a continuous function of time that cannot generally be obtained in closed form and, therefore, must be numerically approximated (cf. Kress [54], Zemyan [55], Atkinson [56]). We discuss the numerical solution procedure for such integral equations in “Appendix J”.

In the supplementary MATLAB® code, we have implemented a simple yet powerful collocation-based approach for the numerical approximation of the periodic solution and a Galerkin projection onto a Fourier basis for the quasi-periodic case. We have also implemented the Picard and the Newton–Raphson approaches for the iterative solution of the integral equations, as discussed above. During numerical continuation, we combine both techniques as described above: we use the fast Picard approach away from resonance and switch to the Newton–Raphson approach when the Picard approach fails. In our experience, this combination is very effective for obtaining forced response curves and surfaces in both the periodic and the quasi-periodic case.

4 Numerical examples

To illustrate the power of our integral-equation-based approach in locating the steady-state response, we consider two numerical examples. The first one is a two-degree-of-freedom system with geometric nonlinearity. We apply our algorithms under periodic and quasi-periodic forcing, as well as to the autonomous system with no external forcing. We also treat a case of non-smooth nonlinearities. For periodic forcing, we compare the computational cost with that of the algorithm implemented in the \(\texttt {po}\) toolbox of the state-of-the-art continuation software \(\textsc {coco}\) [37]. Subsequently, we perform similar computations for a higher-dimensional mechanical system.

4.1 2-DOF example

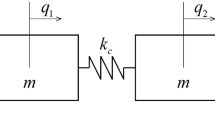

We consider a two-degree-of-freedom oscillator shown in Fig. 1. The nonlinearity \({\mathbf {S}}\) is confined to the first spring and depends on the displacement of the first mass only. The equations of motion are

This system is a generalization of the two-degree-of-freedom oscillator studied by Szalai et al. [57], which is a slight modification of the classic example of Shaw and Pierre [4]. Since the damping matrix \({\mathbf {C}}\) is proportional to the stiffness matrix \({\mathbf {K}}\), we can employ the Green’s function approach described in Sect. 2.3. The eigenfrequencies and modal damping of the linearized system at \(q_{1}=q_{2}=0\) are given by

With those constants, we can calculate the constants \(\alpha _{j}\) and \(\omega _{j}\) [cf. Eq. (28)] for the Green’s function \(L_{j}\) in Eq. (30). We will consider three different versions of system (60): a smooth nonlinearity with periodic and, subsequently, quasi-periodic forcing; a smooth nonlinearity without forcing; and a non-smooth nonlinearity with periodic forcing.
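Equation (60) and the resulting constants are not reproduced in this excerpt. Purely for illustration, the sketch below makes two assumptions. First, it assumes the classic symmetric layout \({\mathbf M}=m{\mathbf I}\), \({\mathbf K}=k\left[ {\begin{smallmatrix}2&-1\\ -1&2\end{smallmatrix}}\right] \), with proportional damping \({\mathbf C}=(c/k){\mathbf K}\). Second, it assumes that the constants of Eq. (28) are the modal decay rate \(\alpha _{j}=\zeta _{j}\omega _{0,j}\) and the damped frequency \(\omega _{j}=\omega _{0,j}\sqrt{1-\zeta _{j}^2}\). Both assumptions should be checked against Eqs. (28) and (60).

```python
import numpy as np


def modal_constants(m=1.0, k=1.0, c=0.02):
    """Natural frequencies, damping ratios, decay rates and damped frequencies of the
    assumed 2-DOF system M = m I, K = k [[2, -1], [-1, 2]], C = (c/k) K."""
    K = k * np.array([[2.0, -1.0], [-1.0, 2.0]])
    w0 = np.sqrt(np.linalg.eigvalsh(K) / m)       # undamped eigenfrequencies
    zeta = (c / k) * w0 / 2.0                     # from 2 zeta_j w0_j = (c/k) w0_j^2
    alpha = zeta * w0                             # assumed decay rates alpha_j
    omega_d = w0 * np.sqrt(1.0 - zeta**2)         # assumed damped frequencies omega_j
    return w0, zeta, alpha, omega_d


w0, zeta, alpha, omega_d = modal_constants()
```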

4.1.1 Periodic forcing

First, we consider system (60) with harmonic forcing of the form

and a smooth nonlinearity of the form

which is the same nonlinearity considered by Szalai et al. [57]. The integral-equation-based steady-state response curves are shown in Fig. 2 for a full frequency sweep and for different forcing amplitudes. As expected, our Picard iteration scheme (blue) converges quickly for all frequencies in the case of low forcing amplitudes. For higher forcing amplitudes, the method no longer converges in a growing neighborhood of the resonance. To improve the results close to the resonance, we employ the Newton–Raphson scheme of Sect. 3.2. We see that the latter iteration captures the periodic response even for larger amplitudes near resonances until a fold arises in the response curve. We need more sophisticated continuation algorithms to capture the response around such folds.

Performance comparison between the integral equations and the \(\texttt {po}\) toolbox of \(\textsc {coco}\)

As shown in Table 1, for low enough amplitudes, the integral equation approach proposed in the present paper is substantially faster than the \(\texttt {po}\) toolbox for continuation of periodic orbits of the MATLAB®-based continuation package \(\textsc {coco}\) [37]. However, as the frequency response develops complicated folds at higher amplitudes (cf. Fig. 3), our simple implementation of the pseudo-arc-length continuation requires a much higher number of continuation steps to converge (cf. the third column in Table 1). Since \(\textsc {coco}\) can perform continuation on general problems with advanced algorithms, we have implemented our integral equation approach in \(\textsc {coco}\) to overcome this limitation. As shown in Table 1, the integral equation approach, combined with \(\textsc {coco}\)’s built-in continuation scheme, is much more efficient for high-amplitude loading than any other method we have considered.

a Response curve for Example 1 with the nonlinearity (62) and the forcing (63), and the non-dimensional parameters \(m=1\), \(k=1\) and \(c=0.02\); b number of iterations needed to construct Fig. 4a. Red curves bound the a priori guaranteed region of convergence for the Picard iteration. The white region is the domain where this iteration fails and we employ the Newton–Raphson scheme. (Color figure online)

The integral-equation-based continuation was performed with \(n_{t}=50\) time steps to discretize the solution in the time domain. By contrast, the \(\texttt {po}\) toolbox in \(\textsc {coco}\) performs collocation-based continuation of periodic orbits and can adapt the time-step discretization to optimize performance. In principle, it is possible to build an integral-equation-based toolbox in \(\textsc {coco}\) that would allow for the adaptive selection of the discretization steps. This is expected to further increase the performance of the integral equation approach when coupled with \(\textsc {coco}\) for continuation.

4.1.2 Quasi-periodic forcing

Unlike the shooting technique reviewed earlier, our approach can also be applied to quasi-periodically forced systems (cf. Theorems 2 and 4). Therefore, we can also choose a quasi-periodic forcing of the form

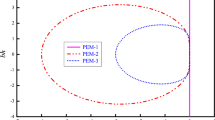

in Example 2, with the nonlinearity still given by Eq. (62). Choosing the first forcing frequency \(\varOmega _{1}\) close to the first eigenfrequency \(\omega _{1}\) and the second forcing frequency \(\varOmega _{2}\) close to \(\omega _{2}\), we obtain the results depicted in Fig. 4a. We show the maximal displacement as a function of the two forcing frequencies, which are always selected to be incommensurate; otherwise, the forcing would not be quasi-periodic. We nevertheless connect the resulting set of discrete points with a surface in Fig. 4a for better visibility.

To carry out the quasi-periodic Picard iteration (53), the infinite summation in the formula has to be truncated. We chose to truncate the Fourier expansion once its relative error was below \(10^{-3}\). If the iteration (53) did not converge, we switched to the Newton–Raphson scheme described in Sect. 3.2.2. In that case, we kept only the first three harmonics in the Fourier basis.
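The text does not specify how the relative truncation error is measured. One plausible reading, sketched here as an assumption, measures it in the \(L^2\) sense. Under that reading, one samples the current quasi-periodic iterate on a torus grid and computes its Fourier coefficients. One then keeps the smallest square set of harmonics whose discarded energy, by Parseval's identity, stays below the tolerance. The function name is hypothetical.

```python
import numpy as np


def truncation_order(u, rel_tol=1e-3):
    """Smallest harmonic order K such that discarding every harmonic with
    max(|kappa_1|, |kappa_2|) > K changes u by at most rel_tol in relative L2 norm.

    u holds samples of a quasi-periodic response on a uniform 2-torus grid; by
    Parseval's identity the error follows from the discarded Fourier coefficients.
    """
    n1, n2 = u.shape
    c = np.fft.fft2(u) / u.size
    k1 = np.abs(np.fft.fftfreq(n1, d=1.0 / n1))
    k2 = np.abs(np.fft.fftfreq(n2, d=1.0 / n2))
    order = np.maximum(k1[:, None], k2[None, :])  # harmonic order of each coefficient
    energy = np.abs(c) ** 2
    total = energy.sum()
    for K in range(int(order.max()) + 1):
        if np.sqrt(energy[order > K].sum() / total) <= rel_tol:
            return K
    return int(order.max())


th = 2.0 * np.pi * np.arange(16) / 16
TH1, TH2 = np.meshgrid(th, th, indexing="ij")
u = np.cos(TH1) + 1e-2 * np.cos(2 * TH2) + 1e-5 * np.cos(3 * TH1)
print(truncation_order(u), truncation_order(u, rel_tol=0.05))
```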

Figure 4b shows the number of iterations needed to converge to a solution with this procedure. Especially away from the resonances, a low number of iterations suffices for convergence to an accurate result. Figure 4b also shows conditions (50) and (51), which guarantee the convergence of the iteration to the steady-state solution of system (1). Outside the two red curves, both (50) and (51) are satisfied and, accordingly, the iteration is guaranteed to converge. Since these conditions are only sufficient, convergence is not excluded inside the red curves, and indeed the iteration also converges for many frequency pairs within them. The number of iterations required increases gradually, and within the white region bounded by green lines, the Picard iteration fails. In such cases, we employ the Newton–Raphson scheme (cf. Sect. 3.2.2).

4.1.3 Non-smooth nonlinearity

As noted earlier, our iteration schemes also apply to non-smooth systems as long as the nonlinearity is Lipschitz. We select a nonlinearity of the form

which represents a hardening (\(\alpha >0\)) or softening (\(\alpha <0\)) spring with play \(\beta >0\). The spring coefficient is given by \(\tan ^{-1}(\alpha )\), as depicted in Fig. 5a.
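Equation (64) is not reproduced in this excerpt. As a hypothetical stand-in with the same qualitative features, the sketch below uses a spring that is force-free inside the play \(|q_1|\le \beta \) and has slope \(\alpha \) outside it. This spring is Lipschitz with constant \(|\alpha |\) but is not differentiable at \(q_1=\pm \beta \). For the Newton–Raphson step, we therefore use a one-sided (generalized) derivative, which is a choice of ours.

```python
import numpy as np


def play_spring(q, alpha, beta):
    """Force of a spring with play beta > 0 and slope alpha outside the gap (hypothetical)."""
    return alpha * (q - np.clip(q, -beta, beta))


def play_spring_slope(q, alpha, beta):
    """Generalized derivative: alpha outside the play, 0 inside (0 chosen at |q| = beta)."""
    return np.where(np.abs(q) > beta, alpha, 0.0)


q = np.linspace(-3.0, 3.0, 13)
print(play_spring(q, alpha=0.5, beta=1.0))
```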

If we apply the forcing

to system (60) with the nonlinearity (64), our iteration techniques yield the response curve depicted in Fig. 5b. The Picard iteration approach (47) converges for moderate amplitudes, also in the nonlinear regime (\(|q_{1}|>\beta \)). When the Picard iteration fails at higher amplitudes, we employ the Newton–Raphson iteration. These results closely match the amplitudes obtained by numerical integration, as seen in Fig. 5b.

4.2 Nonlinear oscillator chain

To illustrate the applicability of our results to higher-dimensional systems and more complex nonlinearities, we consider a modification of the oscillator chain studied by Breunung and Haller [58]. As shown in Fig. 6, the oscillator chain consists of n masses with linear and cubic nonlinear springs coupling every pair of adjacent masses. Thus, the nonlinear function \({\mathbf {S}}\) is given as:

The frequency response curve obtained with the iteration described in Sect. 3.2 for harmonic forcing is shown in Fig. 7 for 20 degrees of freedom. For comparison, we also include the frequency response obtained with the \(\texttt {po}\)-toolbox of \(\textsc {coco}\) [37] with default settings. The integral equation approach gives the same solution as the \(\texttt {po}\)-toolbox of \(\textsc {coco}\), but the difference in run times is stunning. The \(\texttt {po}\)-toolbox of \(\textsc {coco}\) takes about 12 min and 59 s to generate this frequency response curve, whereas the integral equation approach with a naive sequential continuation strategy takes 13 s to generate the same curve. This underlines the power of the proposed approaches for complex mechanical vibrations.
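The expression for \({\mathbf S}\) in this example is not reproduced in this excerpt. The sketch below assumes, hypothetically, that only the cubic spring parts enter \({\mathbf S}\), with the same coefficient \(\kappa \) for every spring and both chain ends attached to fixed walls. It returns \({\mathbf S}\) together with its tridiagonal Jacobian, i.e., the tangent stiffness needed by the Newton–Raphson step. A central finite-difference check of the Jacobian is included in the usage lines.

```python
import numpy as np


def chain_forces(q, kappa):
    """Cubic spring forces S(q) of a chain fixed to walls at both ends (hypothetical)."""
    e = np.diff(np.concatenate(([0.0], q, [0.0])))  # elongations of the n + 1 springs
    s = kappa * e**3                                # spring forces
    return s[:-1] - s[1:]                           # left spring minus right spring


def chain_jacobian(q, kappa):
    """Tridiagonal Jacobian DS(q) of chain_forces."""
    e = np.diff(np.concatenate(([0.0], q, [0.0])))
    g = 3.0 * kappa * e**2                          # tangent stiffness of each spring
    return np.diag(g[:-1] + g[1:]) - np.diag(g[1:-1], 1) - np.diag(g[1:-1], -1)


rng = np.random.default_rng(0)
q = 0.1 * rng.standard_normal(20)
J = chain_jacobian(q, kappa=1.0)
h = 1e-6
J_fd = np.column_stack([
    (chain_forces(q + h * e, 1.0) - chain_forces(q - h * e, 1.0)) / (2 * h)
    for e in np.eye(20)
])
print(np.max(np.abs(J - J_fd)))
```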

5 Conclusion

We have presented an integral equation approach for the fast computation of the steady-state response of nonlinear dynamical systems under external (quasi-) periodic forcing. Starting with a forced linear system, we derive integral equations that must be satisfied by the steady-state solutions of the full nonlinear system. The kernel of the integral equation is a Green’s function, which we calculate explicitly for general mechanical systems. Due to these explicit formulae, the convolution with the Green’s function can be performed with minimal effort, thereby making the solution of the equivalent integral equation significantly faster than full time integration of the dynamical system. We also show the applicability of the same equations to compute periodic orbits of unforced, conservative systems.

We employ a combination of the Picard and the Newton–Raphson iterations to solve the integral equations for the steady-state response. Since the Picard iteration requires only the repeated application of a nonlinear map (and no direct solution via operator inversion), it is especially appealing for high-dimensional systems. Furthermore, the nonlinearity only needs to be Lipschitz continuous; therefore, our approach also applies to non-smooth systems, as we demonstrated numerically in Sect. 4.1.3. We establish a rigorous a priori estimate for the convergence of the Picard iteration. From this estimate, we conclude that the convergence of the Picard iteration becomes problematic for high amplitudes and for forcing frequencies near resonance with an eigenfrequency of the linearized system. This can also be observed numerically in Sect. 4.1.1, where the Picard iteration fails close to resonance.

To capture the steady-state response for a full frequency sweep (including high amplitudes and resonant frequencies), we deploy the Newton–Raphson iteration once the Picard iteration fails near resonance. The Newton–Raphson formulation can be computationally intensive, as it requires a high-dimensional operator inversion. This could make this type of iteration infeasible for exceedingly high-dimensional systems. However, this problem can be mitigated by the modifications of the Newton–Raphson method discussed in Sect. 3.3.

We have further demonstrated that advanced numerical continuation is required to compute the (quasi-) periodic response when folds appear in solution branches. To this end, we formulated one such continuation scheme, namely the pseudo-arc-length scheme, in our integral equations setting to capture the response around such folds. We also demonstrated that the integral equations approach can be coupled with existing state-of-the-art continuation packages to obtain better performance (cf. Sect. 4.1.1).

Compared to well-established shooting-based techniques, our integral equation approach also calculates quasi-periodic responses of dynamical systems and avoids numerical time integration, which can be computationally expensive for high-dimensional or stiff systems. In the case of purely geometric (position-dependent) nonlinearities, we can halve the dimension of the corresponding integral iteration by iterating on the position vector only. In our numerical examples, our integral equation approach equipped with numerical continuation significantly outperforms available continuation packages. Unlike the broadly used harmonic balance procedure (cf. Chua and Ushida [24] and Lau and Cheung [25]), our approach also gives a computable and rigorous existence criterion for the (quasi-) periodic response of the system.

Along with this work, we provide a MATLAB® code with a user-friendly implementation of the developed iterative schemes. This code implements the cheap and fast Picard iteration, as well as the robust Newton–Raphson iteration, along with sequential/pseudo-arc-length continuation. We have further tested our approach in combination with the MATLAB®-based continuation package \(\textsc {coco}\) [37] and obtained an improvement in performance. One could, therefore, further add an integral-equation-based toolbox to \(\textsc {coco}\) with adaptive time steps in the discretization to obtain better efficiency.

Notes

Available at https://www.georgehaller.com.

References

Avery, P., Farhat, C., Reese, G.: Fast frequency sweep computations using a multi-point Padé-based reconstruction method and an efficient iterative solver. Int. J. Numer. Methods Eng. 69, 2848–2875 (2007). https://doi.org/10.1002/nme.1879

Rosenberg, R.M.: The normal modes of nonlinear \(n\)-degree-of-freedom systems. J. Appl. Mech. 30, 7–14 (1962)

Kelley, A.: On the Liapounov subcenter manifold. J. Math. Anal. Appl. 18, 472–478 (1967). https://doi.org/10.1016/0022-247X(67)90039-X

Shaw, S., Pierre, C.: Normal modes for non-linear vibratory systems. J. Sound Vib. 164, 85–124 (1993)

Avramov, K.V., Mikhlin, Y.V.: Nonlinear normal modes for vibrating mechanical systems. Review of theoretical developments. ASME Appl. Mech. Rev. 65, 060802 (2010)

Haller, G., Ponsioen, S.: Nonlinear normal modes and spectral submanifolds: existence, uniqueness and use in model reduction. Nonlinear Dyn. 86, 1493–1534 (2016). https://doi.org/10.1007/s11071-016-2974-z

Craig, R., Bampton, M.: Coupling of substructures for dynamic analysis. AIAA J. 6(7), 1313–1319 (1968). https://doi.org/10.2514/3.4741

Theodosiou, C., Sikelis, K., Natsiavas, S.: Periodic steady state response of large scale mechanical models with local nonlinearities. Int. J. Solids Struct. 46, 3565–3576 (2009). https://doi.org/10.1016/j.ijsolstr.2009.06.007

Kosambi, D.: Statistics in function space. J. Indian Math. Soc. 7, 76–78 (1943)

Kerschen, G., Golinval, J., Vakakis, A.F., Bergman, L.A.: The method of proper orthogonal decomposition for dynamical characterization and order reduction of mechanical systems: an overview. Nonlinear Dyn. 41, 147–169 (2005). https://doi.org/10.1007/s11071-005-2803-2

Amabili, M.: Reduced-order models for nonlinear vibrations, based on natural modes: the case of the circular cylindrical shell. Philos. Trans. R. Soc. A 371, 20120474 (2013). https://doi.org/10.1098/rsta.2012.0474

Touzé, C., Vidrascu, M., Chapelle, D.: Direct finite element computation of non-linear modal coupling coefficients for reduced-order shell models. Comput. Mech. 54, 567–580 (2014). https://doi.org/10.1007/s00466-014-1006-4

Idelsohn, S.R., Cardona, A.: A reduction method for nonlinear structural dynamic analysis. Comput. Methods Appl. Mech. Eng. 49(3), 253–279 (1985). https://doi.org/10.1016/0045-7825(85)90125-2

Sombroek, C.S.M., Tiso, P., Renson, L., Kerschen, G.: Numerical computation of nonlinear normal modes in a modal derivatives subspace. Comput. Struct. 195, 34–46 (2018). https://doi.org/10.1016/j.compstruc.2017.08.016

Jain, S., Tiso, P., Rixen, D.J., Rutzmoser, J.B.: A quadratic manifold for model order reduction of nonlinear structural dynamics. Comput. Struct. 188, 80–94 (2017). https://doi.org/10.1016/j.compstruc.2017.04.005

Malookani, R.A., van Horssen, W.T.: On resonances and the applicability of Galerkin’s truncation method for an axially moving string with time-varying velocity. J. Sound Vib. 344, 1–17 (2015). https://doi.org/10.1016/J.JSV.2015.01.051

Nayfeh, A.H.: Perturbation Methods. Wiley, Hoboken (2004)

Nayfeh, A.H., Mook, D.T., Sridhar, S.: Nonlinear analysis of the forced response of structural elements. J. Acoust. Soc. Am. 55(2), 281–291 (1974)

Mitropolskii, Yu.A., Van Dao, N.: Applied Asymptotic Methods in Nonlinear Oscillations. Springer, New York (1997)

Bogoliubov, N., Mitropolsky, Y.: Asymptotic Methods in the Theory of Nonlinear Oscillations. Gordon and Breach Science Publication, New York (1961)

Sanders, J.A., Verhulst, F.: Averaging Methods in Nonlinear Dynamical Systems. Springer, New York (1985)

Vakakis, A., Cetinkaya, C.: Analytic evaluation of periodic responses of a forced nonlinear oscillator. Nonlinear Dyn. 7, 37–51 (1995)

Kryloff, N., Bogoliuboff, N.: Introduction to Non-linear Mechanics. Princeton University Press, Princeton (1949)

Chua, L., Ushida, A.: Algorithms for computing almost periodic steady-state response of nonlinear systems to multiple input frequencies. IEEE Trans. Circuits Syst. 28(10), 953–971 (1981)

Lau, S., Cheung, Y.: Incremental harmonic balance method with multiple time scales for aperiodic vibration of nonlinear systems. J. Appl. Mech. 50, 871–876 (1983)

Leipholz, H.: Direct Variational Methods and Eigenvalue Problems in Engineering. Mechanics of Elastic Stability, vol. 5. Noordhoff, Leyden (1977)

Bobylev, N.A., Burman, Y.N., Korovin, S.K.: Approximation Procedures in Nonlinear Oscillation Theory. Walter de Gruyter, Berlin (1994)

Urabe, M.: Galerkin’s procedure for nonlinear periodic systems. Arch. Ration. Mech. Anal. 20(2), 120–152 (1965)

Stokes, A.: On the approximation of nonlinear oscillations. J. Diff. Equ. 12, 535–558 (1972)

García-Saldaña, J.D., Gasull, A.: A theoretical basis for the harmonic balance method. J. Differ. Equ. 254, 67–80 (2013)

Keller, H.B.: Numerical Methods for Two-point Boundary-value Problems. Blaisdell, Waltham (1968)

Peeters, M., Viguié, R., Sérandour, G., Kerschen, G., Golinval, J.C.: Nonlinear normal modes, Part II: toward a practical computation using numerical continuation techniques. Mech. Syst. Signal. Process. 23, 195–216 (2009)

Sracic, M., Allen, M.: Numerical continuation of periodic orbits for harmonically forced nonlinear systems. In: Civil Engineering Topics, Volume 4: Proceedings of the 29th IMAC, pp. 51–69 (2011)

Seydel, R.: Practical Bifurcation and Stability Analysis. Springer, New York (2010). ISBN 978-1-4419-1739-3

Doedel, E., Oldeman, B.: AUTO-07P: Continuation and Bifurcation Software for Ordinary Differential Equations. http://indy.cs.concordia.ca/auto/

Dhooge, A., Govaerts, W., Kuznetsov, Y.: MATCONT: a MATLAB package for numerical bifurcation analysis of ODEs. ACM Trans. Math. Softw. 29(2), 141–164 (2003)

Dankowicz, H., Schilder, F.: Recipes for Continuation, SIAM (2013). ISBN 978-1-611972-56-6. https://doi.org/10.1137/1.9781611972573

Renson, L., Kerschen, G., Cochelin, B.: Numerical computation of nonlinear normal modes in mechanical engineering. J. Sound Vib. 364, 177–206 (2016). https://doi.org/10.1016/j.jsv.2015.09.033

Géradin, M., Rixen, D.J.: Mechanical Vibrations: Theory and Application to Structural Dynamics, 3rd edn. Wiley, Chichester (2015). ISBN 978-1-118-90020-8

Burd, V.M.: Method of Averaging for Differential Equations on an Infinite Interval: Theory and Applications. Chapman and Hall, London (2007). Chapter 2

Schilder, F., Vogt, W., Schreiber, S., Osinga, H.M.: Fourier methods for quasi-periodic oscillations. Int. J. Numer. Methods Eng. 67, 629–671 (2006). https://doi.org/10.1002/nme.1632

Mondelo González, J.M.: Contribution to the study of Fourier methods for quasi-periodic functions and the vicinity of the collinear libration points. Ph.D. Thesis, University of Barcelona (2001). http://hdl.handle.net/2445/42084

Kovaleva, A.: Optimal Control of Mechanical Oscillations. Springer, New York (1999)

Babitsky, V.I.: Theory of Vibro-Impact Systems and Applications. Springer, Berlin (1998)

Rosenwasser, E.N.: Oscillations of Non-linear Systems: Method of Integral Equations. Nauka, Moscow (1969). (in Russian)

Babitsky, V.I., Krupenin, V.L.: Vibration of Strongly Nonlinear Discontinuous Systems. Springer, Berlin (2012)

Kelley, A.F.: Analytic two-dimensional subcenter manifolds for systems with an integral. Pacific J. of Math. 29, 335–350 (1969)

Arnold, V.I.: Mathematical Methods of Classical Mechanics. Springer, New York (1989)

Picard, E.: Traité d’analyse, vol. 2. Gauthier-Villars, Paris (1891)

Bailey, P., Shampine, L., Waltman, P. (Eds.): Nonlinear Two Point Boundary Value Problems. Mathematics in Science and Engineering, vol. 44 (1968)

Kelley, C.T.: Solving Nonlinear Equations with Newton’s Method. SIAM, Philadelphia (2003). ISBN 978-0-89871-546-0. https://doi.org/10.1137/1.9780898718898

Broyden, C.G.: A class of methods for solving nonlinear simultaneous equations. Math. Comput. 19(92), 577–593 (1965). https://doi.org/10.1090/S0025-5718-1965-0198670-6

Haro, A., de la Llave, R.: A parameterization method for the computation of invariant tori and their whiskers in quasi-periodic maps: rigorous results. J. Differ. Equ. 228(2), 530–579 (2006). https://doi.org/10.1016/j.jde.2005.10.005

Kress, R.: Linear Integral Equations, 3rd edn. Springer, New York (2014). Chapter 13. ISBN 978-1-4612-6817-8

Zemyan, S.M.: The Classical Theory of Integral Equations: A Concise Treatment. Birkhäuser, Basel (2012). ISBN 978-0-8176-8348-1. https://doi.org/10.1007/978-0-8176-8349-8

Atkinson, K.: The Numerical Solution of Integral Equations of the Second Kind (Cambridge Monographs on Applied and Computational Mathematics). Cambridge University Press, Cambridge (1997). https://doi.org/10.1017/CBO9780511626340

Szalai, R., Ehrhardt, D., Haller, G.: Nonlinear model identification and spectral submanifolds for multi-degree-of-freedom mechanical vibrations. Proc. R. Soc. A 473, 20160759 (2017)

Breunung, T., Haller, G.: Explicit backbone curves from spectral submanifolds of forced-damped nonlinear mechanical systems. Proc. R. Soc. A 474, 20180083 (2018)

Muñoz-Almaraz, F.J., Freire, E., Galán-Vioque, J., Doedel, E., Vanderbauwhede, A.: Continuation of periodic orbits in conservative and Hamiltonian systems. Physica D 181(1), 1–38 (2003). https://doi.org/10.1016/S0167-2789(03)00097-6

Galán-Vioque, J., Vanderbauwhede, A.: Continuation of periodic orbits in symmetric Hamiltonian systems. In: Krauskopf, B., Osinga, H.M., Galán-Vioque, J. (eds.) Numerical Continuation Methods for Dynamical Systems. Springer, Dordrecht (2007). https://doi.org/10.1007/978-1-4020-6356-5_9

Acknowledgements

We are thankful to Harry Dankowicz and Mingwu Li for clarifications and help with the continuation package \(\textsc {coco}\) [37]. We also acknowledge helpful discussions with Mark Mignolet and Dane Quinn.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Proof of Lemma 1

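The general solution of (6) is given by the classic variation of constants formula

\[{\mathbf {w}}(t)=e^{\varvec{\Lambda }t}{\mathbf {w}}(0)+\int _{0}^{t}e^{\varvec{\Lambda }(t-s)}{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s. \tag{65}\]

A T-periodic solution \({\mathbf {w}}_{0}(t)\) of (6) must satisfy (65) together with \({\mathbf {w}}_{0}(T)={\mathbf {w}}_{0}(0)\), resulting in

\[\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] {\mathbf {w}}_{0}(0)=\int _{0}^{T}e^{\varvec{\Lambda }(T-s)}{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s. \tag{66}\]

Since the matrix \(\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] \) is invertible due to the non-resonance condition (7), we can solve Eq. (66) for a unique initial condition \( {\mathbf {w}}_0(0) \) as (cf. Burd [40], Chapter 2)

\[{\mathbf {w}}_{0}(0)=\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] ^{-1}\int _{0}^{T}e^{\varvec{\Lambda }(T-s)}{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s. \tag{67}\]

Substituting the unique initial condition from (67) into the general solution (65) yields an explicit expression for the unique T-periodic solution \({\mathbf {w}}_{0}(t)\) of system (6):

\[{\mathbf {w}}_{0}(t)=\int _{0}^{T}e^{\varvec{\Lambda }(t-s)}e^{\varvec{\Lambda }T}\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] ^{-1}{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s+\int _{0}^{t}e^{\varvec{\Lambda }(t-s)}{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s=\int _{0}^{T}{\mathbf {G}}(t-s,T)\,{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s,\]

where \({\mathbf {G}}(t,T)\) is a diagonal matrix with the entries given by (9). Using the linear modal transformation \({\mathbf {z}}=\mathbf {Vw}\), we find the unique T-periodic solution to system (4) in the form

\[{\mathbf {z}}(t)={\mathbf {V}}\int _{0}^{T}{\mathbf {G}}(t-s,T)\,{\mathbf {V}}^{-1}{\mathbf {F}}(s)\,\mathrm{d}s.\]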
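As a numerical sanity check of this construction, the sketch below discretizes the convolution with one diagonal entry of the periodic Green's function and compares it with the exact periodic response to harmonic forcing. It assumes a single eigenvalue \(\lambda\) with \(\mathrm{Re}\,\lambda<0\) and the scalar kernel \(G(\tau,T)=e^{\lambda\tau}\left[e^{\lambda T}/(1-e^{\lambda T})+h(\tau)\right]\), with \(h\) the Heaviside step, which is what the variation-of-constants argument produces. Because the kernel jumps at \(s=t\), the trapezoidal rule is applied separately on \([0,t]\) and \([t,T]\). The helper names and parameter values are illustrative only.

```python
import numpy as np


def trapezoid_weights(n, h):
    """Composite trapezoidal weights on n equispaced nodes (a single node gets weight 0)."""
    w = np.full(n, h)
    w[0] -= 0.5 * h
    w[-1] -= 0.5 * h
    return w


def green_matrix(lam, T, N):
    """Matrix K such that (K @ phi)[i] approximates int_0^T G(t_i - s, T) phi(s) ds.

    Assumed kernel: G(tau, T) = exp(lam*tau) * (exp(lam*T) / (1 - exp(lam*T)) + heaviside(tau)),
    with t_i = s_i = i*T/N; phi is T-periodic and sampled at the N grid points.
    """
    h = T / N
    q = np.exp(lam * T)
    c_before = 1.0 / (1.0 - q)  # branch s < t (tau > 0): q/(1-q) + 1
    c_after = q / (1.0 - q)     # branch s > t (tau < 0)
    K = np.zeros((N, N + 1), dtype=complex)
    for i in range(N):
        left = np.arange(i + 1)      # nodes s_0..s_i, integrated with the s < t branch
        right = np.arange(i, N + 1)  # nodes s_i..s_N, integrated with the s > t branch
        K[i, left] += trapezoid_weights(left.size, h) * c_before * np.exp(lam * (i - left) * h)
        K[i, right] += trapezoid_weights(right.size, h) * c_after * np.exp(lam * (i - right) * h)
    K[:, 0] += K[:, N]  # node s_N = T coincides with s_0 for periodic phi
    return K[:, :N]


# Test case: w' = lam*w + exp(i*omega*t) has the periodic solution exp(i*omega*t)/(i*omega - lam).
lam, omega, N = -0.2 + 1.5j, 1.0, 400
T = 2 * np.pi / omega
t = T * np.arange(N) / N
w_green = green_matrix(lam, T, N) @ np.exp(1j * omega * t)
w_exact = np.exp(1j * omega * t) / (1j * omega - lam)
max_error = np.max(np.abs(w_green - w_exact))
print(f"max error on the grid: {max_error:.1e}")
```

The error decreases quadratically with the grid spacing, as expected for a trapezoidal rule applied to each smooth piece of the kernel.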

Proof of Theorem 1

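If \({\mathbf {z}}(t)\) is a T-periodic solution of (3), then it satisfies the linear inhomogeneous differential equation

\[\dot{{\mathbf {z}}}={\mathbf {V}}\varvec{\Lambda }{\mathbf {V}}^{-1}{\mathbf {z}}+\left[ {\mathbf {F}}(t)-{\mathbf {R}}({\mathbf {z}}(t))\right] ,\]

where we view \({\mathbf {F}}(t)-{\mathbf {R}}({\mathbf {z}}(t))\) as a \(T-\)periodic forcing term. Thus, according to Lemma 1, we have

\[{\mathbf {z}}(t)={\mathbf {V}}\int _{0}^{T}{\mathbf {G}}(t-s,T)\,{\mathbf {V}}^{-1}\left[ {\mathbf {F}}(s)-{\mathbf {R}}({\mathbf {z}}(s))\right] \mathrm{d}s, \tag{68}\]

as claimed in statement (i).

Now, let \({\mathbf {z}}(t)\) be a continuous, \(T-\)periodic solution to (11). After introducing the notation \(\varvec{\chi }(t)={\mathbf {V}}^{-1}\left[ {\mathbf {F}}(t)-{\mathbf {R}}({\mathbf {z}}(t))\right] \), we have

\[{\mathbf {z}}(t)={\mathbf {V}}\int _{0}^{t}e^{\varvec{\Lambda }(t-s)}\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] ^{-1}\varvec{\chi }(s)\,\mathrm{d}s+{\mathbf {V}}\int _{t}^{T}e^{\varvec{\Lambda }(t-s)}e^{\varvec{\Lambda }T}\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] ^{-1}\varvec{\chi }(s)\,\mathrm{d}s, \tag{69}\]

where (69) is a direct consequence of (68), since the diagonal entries of \({\mathbf {G}}(t-s,T)\) take different forms for \(s<t\) and \(s>t\). By the continuity of \({\mathbf {z}}(t)\), \(\varvec{\chi }(t)\) is also at least \(C^{0}\) (\({\mathbf {F}}\) is at least \(C^{0}\) and \({\mathbf {R}}\) is Lipschitz). Thus, for any \(t\in [0,T]\), the right-hand side of (69) can be differentiated with respect to t according to the Leibniz rule, to obtain

\[\dot{{\mathbf {z}}}(t)={\mathbf {V}}\left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] \left[ {\mathbf {I}}-e^{\varvec{\Lambda }T}\right] ^{-1}\varvec{\chi }(t)+{\mathbf {V}}\varvec{\Lambda }{\mathbf {V}}^{-1}{\mathbf {z}}(t)={\mathbf {V}}\varvec{\chi }(t)+{\mathbf {V}}\varvec{\Lambda }{\mathbf {V}}^{-1}{\mathbf {z}}(t),\]

which implies

\[\dot{{\mathbf {z}}}(t)={\mathbf {V}}\varvec{\Lambda }{\mathbf {V}}^{-1}{\mathbf {z}}(t)+{\mathbf {F}}(t)-{\mathbf {R}}({\mathbf {z}}(t)),\]

i.e., \({\mathbf {z}}(t)\) solves (3), as claimed in statement (ii).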

Proof of Lemma 2

By the linearity of (4), one can verify that the sum of the periodic solutions given by Lemma 1 for the individual periodic forcing summands in (12) is the unique bounded solution of (4). For a forcing written as a Fourier series, we can carry out the integration appearing in Lemma 1 explicitly for each summand of this bounded solution in the diagonalized coordinates \({\mathbf {z}}={\mathbf {V}}{\mathbf {w}}\). With the notation \(\varvec{\psi _{\varvec{\kappa }}}={\mathbf {V}}^{-1}{\mathbf {F}}_{\varvec{\kappa }}\), we then obtain for the jth degree of freedom:

\[w_{j}(t)=\sum _{\varvec{\kappa }}\frac{\psi _{\varvec{\kappa },j}}{i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle -\lambda _{j}}\,e^{i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle t}.\]
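For a forcing given by a finite Fourier sum, this bounded solution requires no quadrature: in diagonalized coordinates, each forcing harmonic is divided by \(i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle -\lambda _{j}\). The minimal sketch below assumes an illustrative single-degree-of-freedom oscillator written as \(\dot{\mathbf z}=\mathbf A\mathbf z+\mathbf F(t)\) with \(\mathbf A=\mathbf V\varvec\Lambda\mathbf V^{-1}\) and two incommensurate forcing frequencies. It assembles the response and substitutes it back into the differential equation.

```python
import numpy as np

# Illustrative oscillator x'' + 0.1 x' + 2 x = cos(Omega_1 t) + 0.5 sin(Omega_2 t), z = (x, x').
A = np.array([[0.0, 1.0], [-2.0, -0.1]])
Omega = np.array([1.0, np.sqrt(2.0) + 0.3])  # frequency base vector (incommensurate)

# Forcing F(t) = sum_kappa F_kappa exp(i <kappa, Omega> t); keys are integer vectors kappa.
F_kappa = {
    (1, 0): np.array([0.0, 0.5]),
    (-1, 0): np.array([0.0, 0.5]),
    (0, 1): np.array([0.0, -0.25j]),
    (0, -1): np.array([0.0, 0.25j]),
}

lam, V = np.linalg.eig(A)  # A = V diag(lam) V^{-1}
V_inv = np.linalg.inv(V)


def response(t, derivative=False):
    """z(t) = V sum_kappa diag(1/(i<kappa,Omega> - lam)) V^{-1} F_kappa e^{i<kappa,Omega> t}, or its derivative."""
    z = np.zeros(2, dtype=complex)
    for kappa, Fk in F_kappa.items():
        freq = np.dot(kappa, Omega)
        psi = V_inv @ Fk  # modal forcing coefficients
        factor = 1j * freq if derivative else 1.0
        z += V @ (psi / (1j * freq - lam)) * factor * np.exp(1j * freq * t)
    return z


def forcing(t):
    return sum(Fk * np.exp(1j * np.dot(kappa, Omega) * t) for kappa, Fk in F_kappa.items())


times = np.linspace(0.0, 50.0, 7)
residual = max(np.max(np.abs(response(t, True) - A @ response(t) - forcing(t))) for t in times)
imag_part = max(np.max(np.abs(response(t).imag)) for t in times)
print(f"ODE residual: {residual:.1e}, max imaginary part: {imag_part:.1e}")
```

Since \(\mathbf V\,\mathrm{diag}\left(1/(i\omega-\lambda_j)\right)\mathbf V^{-1}=(i\omega\mathbf I-\mathbf A)^{-1}\), the residual vanishes up to round-off. Conjugate-symmetric forcing coefficients yield a real response.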

Explicit Green’s function for mechanical systems: Proof of Lemma 3

The first-order ODE formulation for (26) is given by

By the classic variation of constants formula for first-order systems of ordinary differential equations, the general solution of (70) is of the form

with \({\mathbf {N}}(t)=e^{{\mathbf {A}}_{j}t}\) denoting the fundamental matrix solution for the jth mode with \({\mathbf {N}}(0)={\mathbf {I}}\). Thus, for the homogeneous (unforced) version of (26), the explicit solution can be obtained as

Since \({\mathbf {F}}(t)\) is uniformly bounded for all times and all \({\mathbf {A}}_{j}\) matrices are hyperbolic (\(\zeta _{j}>0\) for \(j=1,\ldots ,n\)), a unique uniformly bounded solution exists for the 2n-dimensional system of linear ordinary differential equations (ODEs) (70) (see, e.g., Burd [40]). The initial condition \(\left( y_{j}(0),\dot{y}_{j}(0)\right) \) for the unique T-periodic solution of (71) is obtained by imposing periodicity, i.e., \(\left( y_{j}(0),\dot{y}_{j}(0)\right) =\left( y_{j}(T),\dot{y}_{j}(T)\right) \) for \(j=1,\dots ,n\), and is given by

The unique periodic response \(\left( y_{j}(t),\dot{y}_{j}(t)\right) \) is then obtained by substituting the initial condition (73) into Duhamel’s integral formula (71) as

With the notation introduced in (28), i.e.,

the specific expressions for the fundamental matrix solution of (70) in the underdamped, critically damped and overdamped cases are given by

Furthermore, we have

Thus, we can explicitly compute the particular periodic solution given in (74) using (75) as

with the diagonal elements of the Green’s function matrices \({\mathbf {L}},{\mathbf {J}}\) defined in (30) and (32), i.e.,

Finally, the linear periodic response \({\mathbf {x}}_{P}(t)\) in the original system coordinates is obtained by the linear transformation \({\mathbf {x}}_{P}(t)={\mathbf {U}}{\mathbf {y}}(t)\).
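The steps of this proof (fundamental matrix, periodicity condition for the initial state, Duhamel's integral) can also be carried out numerically. A matrix exponential then covers the underdamped, critically damped and overdamped modes without any case distinction. The sketch below assumes that the modal equation (26) has the standard form \(\ddot y_j+2\zeta_j\omega_j\dot y_j+\omega_j^2y_j=\cos\Omega t\). This form, the function name and the parameter values are assumptions made for illustration. The sketch compares the result with the classical harmonic solution \(\mathrm{Re}\left[e^{i\Omega t}/(\omega_j^2-\Omega^2+2i\zeta_j\omega_j\Omega)\right]\) for one representative of each damping case.

```python
import numpy as np
from scipy.integrate import quad_vec
from scipy.linalg import expm


def periodic_modal_response(omega_j, zeta_j, Omega, t_eval):
    """y(t) of the T-periodic solution of y'' + 2 zeta_j omega_j y' + omega_j^2 y = cos(Omega t)."""
    T = 2 * np.pi / Omega
    A_j = np.array([[0.0, 1.0], [-omega_j**2, -2.0 * zeta_j * omega_j]])
    b = np.array([0.0, 1.0])

    def N(t):
        return expm(A_j * t)  # fundamental matrix, N(0) = I

    def forced_part(t):
        """int_0^t N(t - s) b cos(Omega s) ds (vector-valued adaptive quadrature)."""
        value, _ = quad_vec(lambda s: N(t - s) @ b * np.cos(Omega * s), 0.0, t)
        return value

    # Periodicity x(0) = x(T) with x(T) = N(T) x(0) + forced_part(T) fixes the initial state.
    x0 = np.linalg.solve(np.eye(2) - N(T), forced_part(T))
    # Duhamel's integral at the requested times; component 0 is the displacement y.
    return np.array([(N(t) @ x0 + forced_part(t))[0] for t in t_eval])


Omega, omega_j = 1.3, 1.0
t_eval = np.array([0.3, 1.1, 2.5, 4.0])
errors = {}
for zeta_j in (0.05, 1.0, 2.0):  # underdamped, critically damped, overdamped
    y_num = periodic_modal_response(omega_j, zeta_j, Omega, t_eval)
    amplitude = 1.0 / (omega_j**2 - Omega**2 + 2j * zeta_j * omega_j * Omega)
    y_exact = np.real(amplitude * np.exp(1j * Omega * t_eval))
    errors[zeta_j] = float(np.max(np.abs(y_num - y_exact)))
print(errors)
```

The closed-form fundamental matrices of the proof avoid the cost of matrix exponentials and quadrature, which matters when many modes and continuation steps are involved. The numerical version above is only meant as a cross-check.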

Derivative of Green’s function with respect to T

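The derivative of the first-order periodic Green’s function \( {\mathbf {G}} \) given in (9) with respect to the time period T is simply given by

\[\frac{\partial G_{j}(t,T)}{\partial T}=\frac{\lambda _{j}\,e^{\lambda _{j}(t+T)}}{\left( 1-e^{\lambda _{j}T}\right) ^{2}},\]

since only the prefactor \(e^{\lambda _{j}T}/\left( 1-e^{\lambda _{j}T}\right) \) in (9) depends on T.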
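Assuming the scalar kernel \(G_j(\tau,T)=e^{\lambda_j\tau}\left[e^{\lambda_jT}/(1-e^{\lambda_jT})+h(\tau)\right]\) with \(h\) the Heaviside step, only the bracketed prefactor depends on \(T\), so that \(\partial_TG_j(\tau,T)=\lambda_je^{\lambda_j(\tau+T)}/(1-e^{\lambda_jT})^2\) for \(\tau\ne0\). Closed-form derivatives like this are easy to get wrong by a sign or a power. A central-difference check such as the following is a cheap safeguard before they enter a continuation Jacobian. The values of \(\lambda_j\), \(T\) and \(\tau\) are arbitrary and illustrative.

```python
import numpy as np


def G(tau, T, lam):
    """Scalar periodic Green's function exp(lam*tau) * (exp(lam*T)/(1 - exp(lam*T)) + heaviside(tau))."""
    q = np.exp(lam * T)
    return np.exp(lam * tau) * (q / (1.0 - q) + np.heaviside(tau, 0.5))


def dG_dT(tau, T, lam):
    """Analytic derivative of G with respect to the period T (tau held fixed, tau != 0)."""
    q = np.exp(lam * T)
    return lam * np.exp(lam * tau) * q / (1.0 - q) ** 2


lam, T, eps = -0.05 + 2.0j, 3.0, 1e-6
tau = np.array([-2.5, -0.7, 0.4, 2.9])  # stay away from the jump at tau = 0
finite_difference = (G(tau, T + eps, lam) - G(tau, T - eps, lam)) / (2 * eps)
exact = dG_dT(tau, T, lam)
rel_error = np.max(np.abs(finite_difference - exact) / np.abs(exact))
print(f"max relative error: {rel_error:.1e}")
```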

We also provide the derivative of the Green’s function \({\mathbf {L}}\) with respect to T to ease the computation of the Jacobian of the zero function during numerical continuation. This derivative is obtained by differentiating (30) with respect to T, for which we use a symbolic toolbox:

Proof of Remark 2

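We derive an estimate for the sup norm of the integral of the operator norm of the Green’s function, i.e., for \(\int _{0}^{T}\left\| G_{j}(t-s,T)\right\| \,\mathrm{d}s\), with \(G_{j}\) defined in equation (9). For \(t>s\), we start by noting that, since \(\mathrm{Re}(\lambda _{j})<0\),

\[\left\| G_{j}(t-s,T)\right\| =\frac{e^{\mathrm{Re}(\lambda _{j})(t-s)}}{\left| 1-e^{\lambda _{j}T}\right| }\le \frac{1}{\left| 1-e^{\lambda _{j}T}\right| }.\]

For the case \(T>s>t\), we obtain

\[\left\| G_{j}(t-s,T)\right\| =\frac{e^{\mathrm{Re}(\lambda _{j})(T+t-s)}}{\left| 1-e^{\lambda _{j}T}\right| }\le \frac{1}{\left| 1-e^{\lambda _{j}T}\right| }.\]

The upper bounds on the Green’s function in the two intervals are equal, and we therefore obtain

\[\sup _{t\in [0,T]}\int _{0}^{T}\left\| G_{j}(t-s,T)\right\| \,\mathrm{d}s\le \frac{T}{\left| 1-e^{\lambda _{j}T}\right| }.\]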
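The estimate is easy to probe numerically. The sketch below assumes the scalar kernel \(G_j(\tau,T)=e^{\lambda_j\tau}\left[e^{\lambda_jT}/(1-e^{\lambda_jT})+h(\tau)\right]\) with \(\mathrm{Re}\,\lambda_j<0\); the eigenvalue and the period are illustrative. It evaluates \(\int_0^T|G_j(t-s,T)|\,\mathrm ds\) by adaptive quadrature on a grid of \(t\) values, splitting the integral at the jump \(s=t\), and compares the maximum with \(T/|1-e^{\lambda_jT}|\).

```python
import numpy as np
from scipy.integrate import quad


def abs_green(tau, T, lam):
    """|G(tau, T)| for G(tau, T) = exp(lam*tau) * (exp(lam*T)/(1 - exp(lam*T)) + heaviside(tau))."""
    q = np.exp(lam * T)
    step = 1.0 if tau > 0 else 0.0
    return abs(np.exp(lam * tau) * (q / (1.0 - q) + step))


def green_l1_norm(t, T, lam):
    """int_0^T |G(t - s, T)| ds, split at s = t where the kernel jumps."""

    def integrate(a, b):
        if b <= a:
            return 0.0
        value, _ = quad(lambda s: abs_green(t - s, T, lam), a, b)
        return value

    return integrate(0.0, t) + integrate(t, T)


lam, T = -0.1 + 1.7j, 2 * np.pi / 1.2
sup_integral = max(green_l1_norm(t, T, lam) for t in np.linspace(0.0, T, 41))
bound = T / abs(1.0 - np.exp(lam * T))
# Closed form: for this kernel the integral does not depend on t.
exact = (1.0 - np.exp(lam.real * T)) / (-lam.real * abs(1.0 - np.exp(lam * T)))
print(f"sup of integral: {sup_integral:.6f}, exact: {exact:.6f}, bound: {bound:.6f}")
```

For this kernel, the integral is in fact independent of \(t\). It equals \((1-e^{\mathrm{Re}(\lambda_j)T})/(-\mathrm{Re}(\lambda_j)\,|1-e^{\lambda_jT}|)\), which tends to the bound \(T/|1-e^{\lambda_jT}|\) as \(\mathrm{Re}\,\lambda_j\to0^-\). The estimate is therefore sharp in the lightly damped limit.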

Proof of Theorem 5

In the following, we derive conditions under which the mapping \( {\varvec{\mathcal {H}}} \) defined in equation (37) is a contraction mapping. We rewrite (37) as

where \(\varUpsilon _{P}\) is a linear map representing the convolution operation with the Green’s function. Specifically, we define the space of n-dimensional, T-periodic functions as

Under the non-resonance condition (7), the linear map

is well defined, i.e., \(\varUpsilon _{P}\) maps T-periodic functions into T-periodic functions. Indeed, for any \({\mathbf {p}}\in {\mathcal {P}}_{2n}\), let \({\mathbf {q}}=\varUpsilon _{P}{\mathbf {p}}\). We have

i.e., \({\mathbf {q}}\in {\mathcal {P}}_{2n}\).

Since \(\varUpsilon _{P}\) maps T-periodic functions into T-periodic functions, the mapping \({\varvec{\mathcal {H}}}\) is well defined on the space \(C_{\delta }^{{\mathbf {z}}_{0}}[0,T]\) of periodic functions introduced in (41). Therefore, by the Banach fixed point theorem, the integral equation (37) has a unique solution if the mapping \({\varvec{\mathcal {H}}}\) is a contraction of the complete metric space \(C_{\delta }^{{\mathbf {z}}_{0}}[0,T]\) into itself for an appropriate choice of the radius \(\delta >0\) and the initial guess \({\mathbf {z}}_{0}\).

To find a condition under which this holds, we first note that for \(\left\| {\mathbf {z}}-{\mathbf {z}}_{0}\right\| _{0}\le \delta ,\) Eq. (37) gives

where \(L_{\delta }^{{\mathbf {z}}_{0}}\) denotes a uniform-in-time Lipschitz constant for the function \({\mathbf {S}}({\mathbf {z}})\) with respect to its argument \({\mathbf {z}}\) within the ball \(\left| {\mathbf {z}}-{\mathbf {z}}_{0}\right| \le \delta ,\) and \(\varGamma (T)\) is the constant defined in (10). The initial error term \(\varvec{\mathcal {E}}(t)\) is defined in Eq. (42). Taking the sup norm of both sides, we obtain \(\left\| {{\varvec{\mathcal {H}}}}({\mathbf {z}})-{\mathbf {z}}_{0}\right\| _{0}\le \delta \), i.e., \({\varvec{\mathcal {H}}}\) maps \(C_{\delta }^{{\mathbf {z}}_{0}}[0,T]\) into itself, whenever condition (44) holds.

Similarly, for two functions \({\mathbf {z}},\tilde{{\mathbf {z}}}\in C_{\delta }^{{\mathbf {z}}_{0}}[0,T],\) Eq. (37) gives the estimate

Taking the sup norm of both sides then shows that \(\varvec{\mathcal {H}}\) is a contraction mapping on \(C_{\delta }^{{\mathbf {z}}_{0}}[0,T]\) if

holds for some real number \(a\ge 1\). Solving equation (79) for \(L_{\delta }^{{\mathbf {z}}_{0}}\), we obtain condition (43).
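The contraction property is what makes the plain Picard iteration \(\mathbf z_{k+1}=\varvec{\mathcal H}(\mathbf z_k)\) converge. The minimal sketch below assumes a hypothetical, weakly nonlinear Duffing oscillator \(\ddot x+c\dot x+kx+\gamma x^3=f\cos\Omega t\) written in first-order form with \(\mathbf z=(x,\dot x)\) and nonlinearity \(\mathbf R(\mathbf z)=(0,\gamma x^3)\). It uses a zero initial guess and forcing well away from resonance, with all values chosen so that the contraction conditions plausibly hold. Each step convolves \(\mathbf V^{-1}[\mathbf F-\mathbf R(\mathbf z_k)]\) with the diagonal Green's function on a uniform grid, using a trapezoidal rule split at the kernel jump, and maps the result back with \(\mathbf V\). The converged iterate is then checked against the differential equation with periodic central differences.

```python
import numpy as np


def trapezoid_weights(n, h):
    """Composite trapezoidal weights on n equispaced nodes (a single node gets weight 0)."""
    w = np.full(n, h)
    w[0] -= 0.5 * h
    w[-1] -= 0.5 * h
    return w


def green_matrix(lam, T, N):
    """Discretized convolution with G(tau, T) = e^{lam tau} (e^{lam T}/(1 - e^{lam T}) + heaviside(tau))."""
    h, q = T / N, np.exp(lam * T)
    K = np.zeros((N, N + 1), dtype=complex)
    for i in range(N):
        left, right = np.arange(i + 1), np.arange(i, N + 1)  # split at the jump s = t_i
        K[i, left] += trapezoid_weights(left.size, h) * np.exp(lam * (i - left) * h) / (1 - q)
        K[i, right] += trapezoid_weights(right.size, h) * np.exp(lam * (i - right) * h) * q / (1 - q)
    K[:, 0] += K[:, N]  # node s = T coincides with s = 0 for periodic integrands
    return K[:, :N]


# Hypothetical Duffing oscillator x'' + c x' + k x + gamma x^3 = f cos(Omega t), z = (x, x').
c, k, gamma, f, Omega = 0.1, 1.0, 0.5, 0.2, 2.0
T, N = 2 * np.pi / Omega, 256
h = T / N
t = h * np.arange(N)
A = np.array([[0.0, 1.0], [-k, -c]])
lam, V = np.linalg.eig(A)  # A = V diag(lam) V^{-1}
V_inv = np.linalg.inv(V)
K = [green_matrix(lam_j, T, N) for lam_j in lam]  # one convolution matrix per mode
F = np.vstack([np.zeros(N), f * np.cos(Omega * t)])  # forcing samples, shape (2, N)


def R(z):
    """Nonlinear force vector (0, gamma x^3) evaluated on the grid."""
    return np.vstack([np.zeros(N), gamma * z[0] ** 3])


def H(z):
    """Integral-equation map z -> V (G conv V^{-1} (F - R(z))), evaluated on the grid."""
    chi = V_inv @ (F - R(z))
    w = np.vstack([K[j] @ chi[j] for j in range(len(lam))])
    return np.real(V @ w)  # the imaginary part is round-off for a real system


z = np.zeros((2, N))  # initial guess z_0 = 0
for iteration in range(1, 201):
    z_new = H(z)
    increment = np.max(np.abs(z_new - z))
    z = z_new
    if increment < 1e-12:
        break

# Periodic central differences of z versus the vector field A z + F - R(z).
z_dot = (np.roll(z, -1, axis=1) - np.roll(z, 1, axis=1)) / (2 * h)
residual = np.max(np.abs(z_dot - (A @ z + F - R(z))))
print(f"{iteration} Picard iterations, last increment {increment:.1e}, ODE residual {residual:.1e}")
```

Moving \(\Omega\) toward the linear resonance \(\sqrt{k}\), or increasing \(\gamma\) or \(f\), raises the effective Lipschitz constant of the right-hand side. Convergence of the iteration is then no longer guaranteed, which is the near-resonant situation in which a Newton–Raphson iteration is employed instead.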

Proof of Theorem 6

We show here that the mapping \(\varvec{\mathcal {H}}\) defined in the quasi-periodic case (cf. equation (48)) is a contraction on the space (49) if the conditions (50) and (51) hold. The convergence estimate for the iteration (52) is then similar in spirit to the periodic case (cf. “Appendix G”).

We rewrite (38) as

where \(\varUpsilon _{Q}\) is a linear map representing the convolution operation with the Green’s function. Similarly to the periodic case, we define the space of n-dimensional quasi-periodic functions with frequency base vector \(\varvec{\Omega }\) as

Furthermore, we note that under the non-resonance condition (7), the linear map

is well defined, i.e., \(\varUpsilon _{Q}\) maps any quasi-periodic function \({\mathbf {q}}\) with frequency base vector \(\varvec{\Omega }\) to a quasi-periodic function with the same frequency base vector \(\varvec{\Omega }\). This is a direct consequence of the linearity of \(\varUpsilon _{Q}\) and the definition of \(\varUpsilon _{P}\) in “Appendix G”.

Since the mapping (48) is well defined on the space \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\) defined in (49), the Banach fixed point theorem implies that the integral equation (48) has a unique solution if the mapping \(\varvec{\mathcal {H}}\) is a contraction of the complete metric space \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\) into itself for an appropriate choice of the radius \(\delta >0.\) As in the periodic case, we first seek conditions under which the space \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\) is mapped into itself. To this end, we take the sup norm of the mapping (48) applied to an element of \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\) and obtain

where we have used that the Fourier series of the nonlinearity, \(\sum _{\varvec{\kappa }}{\mathbf {R}}_{\varvec{\kappa }}\{{\mathbf {z}}\}e^{i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle t}\), converges to the function \({\mathbf {R}}({\mathbf {z}},t)\); this holds due to the Lipschitz continuity of the nonlinearity and the forcing. We conclude that \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\) is mapped into itself if condition (51) holds.

Similarly, for two functions \({\mathbf {z}},\tilde{{\mathbf {z}}}\) in \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\), we obtain

Therefore, the iteration (48) is a contraction on the space \(C_{\delta }^{{\mathbf {z}}_{0}}(\varvec{\Omega })\), if the condition

holds, which we reformulate in (50).
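In the quasi-periodic case, the same iteration is conveniently performed on Fourier coefficients, where convolution with the Green's function becomes division by \(i\left\langle \varvec{\kappa },\varvec{\Omega }\right\rangle -\lambda\). The minimal sketch below uses a hypothetical scalar example \(\dot z=\lambda z-\varepsilon z^3+\cos\theta_1+\cos\theta_2\) with \(\theta_i=\Omega_it\). It represents the response as a function on a uniformly discretized 2-torus and evaluates the nonlinearity pointwise on that grid. This pseudo-spectral discretization is an illustrative choice, not necessarily the one used in the paper.

```python
import numpy as np

# Hypothetical scalar example: z' = lam*z - eps*z**3 + cos(theta_1) + cos(theta_2), theta_i = Omega_i t.
lam, eps = -1.0, 0.1
Omega = np.array([1.0, np.sqrt(2.0)])  # frequency base vector (incommensurate)
M = 64  # grid points per torus angle

theta = 2 * np.pi * np.arange(M) / M
th1, th2 = np.meshgrid(theta, theta, indexing="ij")
forcing = np.cos(th1) + np.cos(th2)

# Integer wave numbers kappa = (k1, k2) in FFT ordering and frequencies <kappa, Omega>.
kappa = np.fft.fftfreq(M, d=1.0 / M)
K1, K2 = np.meshgrid(kappa, kappa, indexing="ij")
freq = K1 * Omega[0] + K2 * Omega[1]
multiplier = 1.0 / (1j * freq - lam)  # Green's function convolution in Fourier space


def H(z):
    """One Picard step: Fourier coefficients of F - R(z) divided by (i<kappa, Omega> - lam)."""
    rhs_hat = np.fft.fft2(forcing - eps * z**3)
    return np.real(np.fft.ifft2(multiplier * rhs_hat))


z = np.zeros((M, M))  # initial guess z_0 = 0 on the torus grid
for iteration in range(1, 201):
    z_new = H(z)
    increment = np.max(np.abs(z_new - z))
    z = z_new
    if increment < 1e-12:
        break

# Check the invariance equation Omega_1 dz/dtheta_1 + Omega_2 dz/dtheta_2 = lam z - eps z^3 + F.
z_dot = np.real(np.fft.ifft2(1j * freq * np.fft.fft2(z)))
residual = np.max(np.abs(z_dot - (lam * z - eps * z**3 + forcing)))
print(f"{iteration} iterations, last increment {increment:.1e}, residual {residual:.1e}")
```

The multiplier satisfies \(|1/(i\omega-\lambda)|\le 1/|\lambda|\), so the map is a contraction whenever \(3\varepsilon\max|z|^2<|\lambda|\) along the iterates. This mirrors the role of conditions (50) and (51).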

Explicit expressions for Fourier coefficients in Remark 6

To obtain the amplification factors given in (35), we carry out the integration explicitly. To this end, we diagonalize the system with the matrix of undamped mode shapes \({\mathbf {U}}\) (i.e., we let \({\mathbf {x}}={\mathbf {U}}{\mathbf {y}}\)) and introduce the notation \(\varvec{\psi _{\varvec{\kappa }}}={\mathbf {U}}^{\top }{\mathbf {f}}_{\varvec{\kappa }}\). Assuming an underdamped configuration (\(\zeta _{j}<1\)), we obtain for the jth degree of freedom

For the critically damped configuration (\(\zeta _{j}=1\)), we obtain

Finally, for the overdamped configuration (\(\zeta _{j}>1\)), we obtain
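Whatever the damping case, the amplification factor of a single forcing harmonic \(e^{i\omega t}\) must agree with the product of the two first-order factors \(1/(i\omega-\lambda_{j,1})\) and \(1/(i\omega-\lambda_{j,2})\), where \(\lambda_{j,1}\) and \(\lambda_{j,2}\) are the roots of \(\lambda^2+2\zeta_j\omega_j\lambda+\omega_j^2=0\). The sketch below checks this for one underdamped, one critically damped and one overdamped mode. It assumes the standard modal form \(\ddot y_j+2\zeta_j\omega_j\dot y_j+\omega_j^2y_j=e^{i\omega t}\) and is a consistency check under that assumption, not a reproduction of the case-specific expressions.

```python
import numpy as np


def amplification_direct(omega_j, zeta_j, omega):
    """Steady-state amplitude of y'' + 2 zeta_j omega_j y' + omega_j^2 y = exp(i omega t)."""
    return 1.0 / (omega_j**2 - omega**2 + 2j * zeta_j * omega_j * omega)


def amplification_from_eigenvalues(omega_j, zeta_j, omega):
    """Same amplitude as a product of first-order factors 1/(i omega - lambda)."""
    lam1, lam2 = np.roots([1.0, 2.0 * zeta_j * omega_j, omega_j**2])
    return 1.0 / ((1j * omega - lam1) * (1j * omega - lam2))


omega_j, omegas = 2.0, np.linspace(0.1, 5.0, 50)
max_diff = {}
for zeta_j in (0.02, 1.0, 3.0):  # underdamped, critically damped, overdamped
    direct = amplification_direct(omega_j, zeta_j, omegas)
    via_eigenvalues = amplification_from_eigenvalues(omega_j, zeta_j, omegas)
    max_diff[zeta_j] = float(np.max(np.abs(direct - via_eigenvalues)))
print(max_diff)
```

The agreement follows from \((i\omega-\lambda_{j,1})(i\omega-\lambda_{j,2})=\omega_j^2-\omega^2+2i\zeta_j\omega_j\omega\). The three damping cases differ only in whether the roots are complex, repeated or real.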

Numerical solution procedure

The numerical approximation of the solution \( {\mathbf {z}}(t) \) to integral equations such as (37) and (38) is performed via the finite sum