Abstract

We prove the existence of the classical Hopf bifurcation and of the zero-Hopf bifurcation in the Hindmarsh–Rose system. To this end, we make a suitable change of parameters that simplifies the computations.


1 Introduction

In recent years there has been considerable interest in the three-dimensional Hindmarsh–Rose polynomial ordinary differential system [1]. It arises as a reduction of the conductance-based Hodgkin–Huxley model for neural spiking (see [2] for more details). This differential system can be written as

\[ {\dot{x}}=y-x^3+bx^2+I-z,\qquad {\dot{y}}=1-5x^2-y,\qquad {\dot{z}}=\mu \big (s(x-x_0)-z\big ), \tag{1} \]

where \(b,\ I,\ \mu ,\ s,\ x_0\) are parameters and the dot denotes the derivative with respect to the time t.

The interest in system (1) comes mainly from two reasons. First, it is simple: it is a differential system in \({\mathbb {R}}^3\) with a polynomial nonlinearity and only five parameters. Second, it captures the three main dynamical behaviors exhibited by real neurons: quiescence, tonic spiking and bursting. Many papers in the literature investigate the dynamics of system (1) (see, for instance, [3–13]).

The study of periodic orbits is one of the main objectives of the qualitative theory of differential equations. Periodic orbits are usually studied numerically, because their analytical study is in general very difficult. Here we study analytically the periodic orbits of the three-dimensional Hindmarsh–Rose system (1) that bifurcate from a zero-Hopf equilibrium, using averaging theory, and from a classical Hopf equilibrium, using the first Lyapunov coefficient.

None of the papers mentioned above studies the occurrence of a Hopf or a zero-Hopf bifurcation in this differential system, except [10], where the authors studied the Hopf bifurcation numerically.

In the present paper, we consider a special choice of parameters that facilitates the study of both the classical Hopf bifurcation and the zero-Hopf bifurcation. A classical Hopf bifurcation in \({\mathbb {R}}^3\) takes place at an equilibrium point whose linearization has eigenvalues \(\pm \omega i\) and \(\delta \ne 0\), while for a zero-Hopf bifurcation the eigenvalues are \(\pm \omega i\) and 0. An equilibrium point with eigenvalues \(\pm \omega i\) and 0 will be called a zero-Hopf equilibrium.

The zero-Hopf bifurcation of system (1) is studied in Theorem 2 (see Sect. 4), and the local stability or instability of the periodic solution born in this bifurcation is described at the end of Sect. 4. The proof of Theorem 2 uses averaging theory, and the same method can be applied to study the zero-Hopf bifurcation in other differential systems.

We study the classical Hopf bifurcation of system (1) in Theorem 4 (see Sect. 6). For proofs of related results in other systems, see [14] for a zero-Hopf bifurcation in a hyperchaotic Lorenz system and [15] for the Hopf bifurcation in the Shimizu–Morioka system.

2 Preliminaries

The first step of our analysis is to change the parameters s and \(x_0\) to new parameters c and d in the following way

Now we change the parameters b and I to new parameters \(\beta \) and \(\rho \) in order to obtain a simpler expression for one of the equilibria and to simplify the computations in the proofs of Theorems 2 and 4. We consider the following change of parameters:

The next lemma shows that, under generic hypotheses, the change of parameters (3) can be performed.

Lemma 1

Let \(H:{\mathbb {R}}^7\rightarrow {\mathbb {R}}^2\) be a function given by

Let \(({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}})\in {\mathbb {R}}^5\) satisfy \({\overline{\mu }}\ne 0\), \({\overline{d}}+{\overline{\mu }}(1+{\overline{I}})\ne 0\) and \(R({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}})\ne 0\), where the function \(R(b,I,c,\mu ,d)\) is given by

Then, there exists \(({\overline{\rho }},{\overline{\beta }})\in {\mathbb {R}}^2\) such that \(H({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}},\) \({\overline{\rho }},{\overline{\beta }})=(0,0)\). Moreover, in a small neighborhood of this point, there exist smooth functions \(\rho (b,I,c,\mu ,d)\) and \(\beta (b,I,c,\mu ,d)\) such that

Proof

First, we solve the equation \(H_1({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}},\rho ,\beta )=0\) for \(\beta \), obtaining

This is possible because \({\overline{\mu }}\ne 0\). Next, we substitute this expression for \(\beta \) into the equation \(H_2=0\) and obtain an equation \(h_1({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}},\rho )=0\), where

which is a cubic equation in the variable \(\rho \). Since every cubic equation with real coefficients has at least one real root, this equation has a real solution \({\overline{\rho }}\). The hypothesis \({\overline{d}}+{\overline{\mu }}(1+{\overline{I}})\ne 0\) implies that \({\overline{\rho }}\ne 0\). Substituting \({\overline{\rho }}\) into (5), we get \({\overline{\beta }}\). The second part of the lemma follows from the Implicit Function Theorem. The determinant of the Jacobian matrix \(\displaystyle \frac{\partial (H_1,H_2)}{\partial (\rho ,\beta )}\), after the substitution (5), is

To finish the proof, it is enough to show that \(h_2({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}},{\overline{\rho }})\ne 0\). The resultant of \(h_1\) and \(h_2\) with respect to the variable \(\rho \) is given by (4). Since the resultant of two polynomials vanishes whenever they have a common root, the hypothesis \(R({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}})\ne 0\) implies that \(h_1\) and \(h_2\) have no common root in \(\rho \); in particular, \(h_2({\overline{b}},{\overline{I}},{\overline{c}},{\overline{\mu }},{\overline{d}},{\overline{\rho }})\ne 0\). \(\square \)
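The last step of the proof uses the standard fact that the resultant of two polynomials (the determinant of their Sylvester matrix) vanishes whenever they have a common root. Since \(h_1\) and \(h_2\) are not reproduced in this text, the sketch below illustrates the fact on toy polynomials in \(\rho \); the helper names are ours, and the determinant is computed exactly with Python's `fractions` module.

```python
from fractions import Fraction


def sylvester_matrix(f, g):
    """Sylvester matrix of f and g, given as coefficient lists (highest degree first)."""
    m, n = len(f) - 1, len(g) - 1  # degrees of f and g
    size = m + n
    rows = [[0] * i + list(f) + [0] * (size - m - 1 - i) for i in range(n)]
    rows += [[0] * i + list(g) + [0] * (size - n - 1 - i) for i in range(m)]
    return rows


def determinant(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in matrix]
    size = len(a)
    det = Fraction(1)
    for col in range(size):
        pivot = next((r for r in range(col, size) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for c in range(col, size):
                a[r][c] -= factor * a[col][c]
    return det


def resultant(f, g):
    return determinant(sylvester_matrix(f, g))


f = [1, 0, -2, 1]               # rho^3 - 2 rho + 1 = (rho - 1)(rho^2 + rho - 1)
print(resultant(f, [1, -1]))    # 0: rho - 1 divides f, so there is a common root
print(resultant(f, [1, -2]))    # -5: rho - 2 shares no root with f
```

A nonzero resultant, like the hypothesis \(R\ne 0\) of Lemma 1, rules out a common root.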

Then, it is easy to verify that

is an equilibrium of system (1).

Now we choose the parameters \(\mu \), \(\beta \) and s so that, when \(\varepsilon =0\), the eigenvalues of the linear part of system (1) at the equilibrium point \(p_0\) are \(\delta \), \(\omega i\) and \(-\omega i\). This is a candidate scenario for a Hopf bifurcation. We obtain
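One way to impose this spectrum, sketched here as an illustration rather than as the authors' procedure, is through the characteristic polynomial. For a \(3\times 3\) matrix A one has \(\det (\lambda \,\text{Id}-A)=\lambda ^3-T\lambda ^2+M\lambda -D\), with T the trace, M the sum of the principal \(2\times 2\) minors and D the determinant, and this polynomial equals \((\lambda -\delta )(\lambda ^2+\omega ^2)\) exactly when \(M=\omega ^2>0\), \(T=\delta \) and \(D=TM\). The hypothetical helpers below check these conditions with exact rational arithmetic and separate the classical Hopf case \(\delta \ne 0\) from the zero-Hopf case \(\delta =0\).

```python
from fractions import Fraction


def char_poly_invariants(a):
    """Return (T, M, D) with det(lambda*Id - a) = lambda^3 - T lambda^2 + M lambda - D."""
    a = [[Fraction(x) for x in row] for row in a]
    trace = a[0][0] + a[1][1] + a[2][2]
    minors = (a[0][0] * a[1][1] - a[0][1] * a[1][0]
              + a[0][0] * a[2][2] - a[0][2] * a[2][0]
              + a[1][1] * a[2][2] - a[1][2] * a[2][1])
    det = (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
           - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
           + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    return trace, minors, det


def hopf_type(a):
    """'hopf' for eigenvalues {delta != 0, +-omega i}, 'zero-hopf' for {0, +-omega i}."""
    t, m, d = char_poly_invariants(a)
    if m > 0 and d == t * m:  # characteristic polynomial = (lambda - t)(lambda^2 + m)
        return "zero-hopf" if t == 0 else "hopf"
    return "other"


print(hopf_type([[0, -2, 0], [2, 0, 0], [0, 0, -1]]))  # hopf: eigenvalues -1, +-2i
print(hopf_type([[0, -2, 0], [2, 0, 0], [0, 0, 0]]))   # zero-hopf: eigenvalues 0, +-2i
print(hopf_type([[1, 0, 0], [0, 2, 0], [0, 0, 3]]))    # other: eigenvalues 1, 2, 3
```

Exact fractions are used so that the equality tests \(D=TM\) and \(T=0\) are not spoiled by rounding.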

where

with

In the next step, we translate the equilibrium point \(p_0\) to the origin of coordinates by the change of variables

and we obtain

where

We remark that the differential system (10) in the variables \(({\overline{x}},{\overline{y}},{\overline{z}})\) will be used for proving our main results on the zero-Hopf bifurcation (Theorem 2) and on the classical Hopf bifurcation (Theorem 4).

3 Averaging theory of first order

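We consider the initial value problems

\[ \dot{\mathbf {x}}=\varepsilon F_1(t,{\mathbf {x}})+\varepsilon ^2F_2(t,{\mathbf {x}},\varepsilon ),\qquad {\mathbf {x}}(0)={\mathbf {x}}_0, \tag{11} \]

and

\[ \dot{\mathbf {y}}=\varepsilon \, g({\mathbf {y}}),\qquad {\mathbf {y}}(0)={\mathbf {x}}_0, \tag{12} \]

with \({\mathbf {x}}\), \({\mathbf {y}}\) and \({\mathbf {x}}_0\) in some open set \({\varOmega }\subset {{\mathbb {R}}^n}\), \(t\in [0,\infty )\) and \(\varepsilon \in (0,\varepsilon _0]\). We assume that \(F_1\) and \(F_2\) are periodic of period T in the variable t, and we set

\[ g({\mathbf {y}})=\frac{1}{T}\int _0^T F_1(s,{\mathbf {y}})\,ds. \]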

Theorem 1

Assume that \(F_1\), \(D_{\mathbf {x}}F_1\), \(D_{\mathbf {xx}}F_1\) and \(D_{\mathbf {x}}F_2\) are continuous and bounded by a constant independent of \(\varepsilon \) in \([0,\infty )\times {\varOmega } \times (0,\varepsilon _0]\), and that \({\mathbf {y}}(t)\in {\varOmega }\) for \(t\in [0,1/\varepsilon ]\). Then the following statements hold:

(a) For \(t\in [0,1/\varepsilon ]\), we have \({\mathbf {x}}(t)- {\mathbf {y}}(t)= O(\varepsilon )\) as \(\varepsilon \rightarrow 0\).

(b) If \(p \ne 0\) is a singular point of system (12) and \(\det D_{\mathbf {y}}g(p) \ne 0\), then system (11) has a periodic solution \(\phi (t,\varepsilon )\) of period T which is close to p and satisfies \(\phi (0,\varepsilon )-p=O(\varepsilon )\) as \(\varepsilon \rightarrow 0\).

(c) The stability of the periodic solution \(\phi (t, \varepsilon )\) is given by the stability of the singular point p.

We have used the notation \(D_{\mathbf {x}}g\) for all the first derivatives of g, and \(D_\mathbf {xx}g\) for all the second derivatives of g.
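Statement (b) can be tried out numerically on a toy problem. The sketch below does not use system (1): it takes the van der Pol oscillator \(\ddot{x}+x=\varepsilon (1-x^2)\dot{x}\), writes \(x=r\cos \theta \), \(\dot{x}=-r\sin \theta \) and uses \(\theta \) as the independent variable, so that \(dr/d\theta =\varepsilon F_1(\theta ,r)+O(\varepsilon ^2)\) with \(F_1(\theta ,r)=r\sin ^2\theta \,(1-r^2\cos ^2\theta )\) and \(T=2\pi \). It computes \(g(r)\) with the trapezoidal rule (very accurate for smooth periodic integrands), locates its positive zero by bisection and estimates \(g'\) there by a central difference. By hand, \(g(r)=r/2-r^3/8\), so \(r_0=2\) and \(g'(2)=-1\ne 0\).

```python
import math


def F1(theta, r):
    """First-order term of dr/dtheta for van der Pol (x = r cos theta, x' = -r sin theta)."""
    return r * math.sin(theta) ** 2 * (1.0 - (r * math.cos(theta)) ** 2)


def averaged(r, n=256):
    """g(r) = (1/2pi) * integral of F1 over [0, 2pi] by the trapezoidal rule.

    For a periodic integrand this is just the mean of equally spaced samples.
    """
    step = 2.0 * math.pi / n
    return sum(F1(k * step, r) for k in range(n)) / n


def bisection_root(f, lo, hi, tol=1e-12):
    """Root of f in [lo, hi], assuming f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


r0 = bisection_root(averaged, 1.0, 3.0)                  # singular point of y' = eps g(y)
h = 1e-6
slope = (averaged(r0 + h) - averaged(r0 - h)) / (2 * h)  # estimate of g'(r0)
print(r0, slope)                                         # close to 2 and -1
```

Since \(g'(r_0)<0\), statements (b) and (c) give a stable periodic orbit whose amplitude tends to 2 as \(\varepsilon \rightarrow 0\).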

4 Zero-Hopf bifurcation of system (1)

In this section, we analyze the case \(\delta =0\), which is the setting of a zero-Hopf bifurcation. We obtain the periodic orbits bifurcating from the zero-Hopf equilibrium \(p_0\) by means of first-order averaging theory (see Sect. 3).

In the next theorem, we study the zero-Hopf bifurcation in the Hindmarsh–Rose system (1).

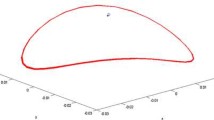

The periodic orbit of Theorem 2 bifurcating from a zero-Hopf bifurcation for the parameters \(b=-403/20, I=-2499/2000, \mu =3, s=5, x_0=0,\varepsilon =1/10\). The parallelepiped which appears in the figure containing the periodic orbit has the following dimensions in the variables \((x,y,z)\in [-0.3,0]\times [0.8,0.95]\times [-2,0.5]\)
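As a hedged numerical check of the setting of Fig. 1, the sketch below assumes that system (1) has the standard Hindmarsh–Rose form \(\dot x=y-x^3+bx^2+I-z\), \(\dot y=1-5x^2-y\), \(\dot z=\mu (s(x-x_0)-z)\), and uses the parameter values listed in the caption. With exact rational arithmetic it verifies that \((x,y,z)=(-1/10,19/20,-1/2)\), a point we computed ourselves rather than one taken from (6), is an equilibrium, and then evaluates the Jacobian there. Its eigenvalues are 0 and \(\pm i\), that is, a zero-Hopf spectrum with \(\omega =1\).

```python
from fractions import Fraction as Fr

import numpy as np

# Parameter values from the caption of Fig. 1 (exact rationals)
b, I, mu, s, x0 = Fr(-403, 20), Fr(-2499, 2000), Fr(3), Fr(5), Fr(0)


def hindmarsh_rose(x, y, z):
    """Right-hand side of the ASSUMED standard Hindmarsh-Rose form of system (1)."""
    return (y - x**3 + b * x**2 + I - z,
            1 - 5 * x**2 - y,
            mu * (s * (x - x0) - z))


def jacobian(x, y, z):
    """Jacobian matrix of hindmarsh_rose at (x, y, z)."""
    return [[-3 * x**2 + 2 * b * x, 1, -1],
            [-10 * x, -1, 0],
            [mu * s, 0, -mu]]


point = (Fr(-1, 10), Fr(19, 20), Fr(-1, 2))          # candidate equilibrium (our computation)
print(all(v == 0 for v in hindmarsh_rose(*point)))   # True: it is an exact equilibrium
A = np.array([[float(v) for v in row] for row in jacobian(*point)])
print(A)                      # the matrix [[4, 1, -1], [1, -1, 0], [15, 0, -3]]
print(np.linalg.eigvals(A))   # approximately 0 and +-1j, in some order
```

By hand, the characteristic polynomial of this matrix is \(\lambda ^3+\lambda =\lambda (\lambda ^2+1)\), which confirms the numerical eigenvalues.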

Theorem 2

The equilibrium point \(p_0\) of the Hindmarsh–Rose system (1) given in (6) exhibits a zero-Hopf bifurcation for the choice of parameters given in (2), (3) and (7), when \(\varepsilon =\delta =0\), provided that \((C_2B_1B_0-C_1B_1^2)C_0>0\) and \(B_0 D {\varDelta } \ne 0\) (see the proof below for the definitions of \(B_0\), \(B_1\), \(C_0\), \(C_1\), \(C_2\), \({\varDelta }\) and D). Then, for \(\varepsilon \ne 0\) sufficiently small, the bifurcating periodic solution has the form

where \(\overline{r_0}\) and \(\overline{w_0}\) are given in (20) (see Fig. 1).

We want to study the periodic solutions that are born at the origin. So we perform the rescaling of variables \(({\overline{x}},{\overline{y}},{\overline{z}})=(\varepsilon {\overline{u}},\varepsilon {\overline{v}},\varepsilon {\overline{w}})\). Then system (10) becomes

where \(\widetilde{a_j}=a_j \left| _{\delta =0}\right. \) for \(j=1,2,\ldots ,5\).

Now we put the linear part of system (14) into its real Jordan normal form by the change of variables

where the matrix M is

So, we obtain

where the polynomials \(P_i(u,v,w)=b_{i1}u+b_{i2}v+b_{i3}w+b_{i4}u^2+b_{i5}uv+ b_{i6}uw+b_{i7}v^2+b_{i8}vw+b_{i9}w^2\) for \(i=1,2,3\) are

where
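The matrix M of (15) is not reproduced in this text, but for a concrete matrix a real Jordan basis can be built from eigenvectors. If \(q=q_r+i\,q_i\) satisfies \(Aq=\omega i\,q\) with \(\omega >0\), then \(Aq_r=-\omega q_i\) and \(Aq_i=\omega q_r\); taking n in the kernel of A and M with columns \(q_i\), \(q_r\), n gives \(M^{-1}AM\) with rows \((0,-\omega ,0)\), \((\omega ,0,0)\), \((0,0,0)\). The sketch applies this to the matrix with rows \((4,1,-1)\), \((1,-1,0)\), \((15,0,-3)\), which is our own computation of the Jacobian of the standard Hindmarsh–Rose form at \((-1/10,19/20,-1/2)\) for the parameter values of Fig. 1. This M is only an illustration, not the paper's matrix M.

```python
import numpy as np

# Jacobian of the (assumed standard) Hindmarsh-Rose system at (-1/10, 19/20, -1/2)
# for the parameter values of Fig. 1; its eigenvalues are 0 and +-i.
A = np.array([[4.0, 1.0, -1.0],
              [1.0, -1.0, 0.0],
              [15.0, 0.0, -3.0]])

evals, evecs = np.linalg.eig(A)
k = int(np.argmax(evals.imag))   # index of the eigenvalue omega*i with omega > 0
omega = evals[k].imag
q = evecs[:, k]                  # q = q_r + i q_i, so A q_r = -omega q_i, A q_i = omega q_r
_, _, vh = np.linalg.svd(A)
kernel = vh[-1]                  # right singular vector of the zero singular value

M = np.column_stack([q.imag, q.real, kernel])
J = np.linalg.solve(M, A @ M)    # M^{-1} A M
print(omega)                     # close to 1
print(np.round(J, 10))           # close to [[0, -1, 0], [1, 0, 0], [0, 0, 0]]
```

Any complex multiple of q works equally well, so the arbitrary phase chosen by `numpy.linalg.eig` does not matter.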

We write system (16) in cylindrical coordinates \(u=r\cos \theta \), \(v=r\sin \theta \), \(w=w\), and obtain

where \(P_i\) stands for \(P_i(r\cos \theta ,r\sin \theta ,w)\), \(i=1,2,3\).

We now take \(\theta \) as the new independent variable, which is possible for \(\varepsilon \) sufficiently small because \({\dot{\theta }}\) does not vanish in the region of interest. System (17) becomes

We are now ready to apply first-order averaging theory (Sect. 3) to system (18). Following (12), we compute the integrals
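The integrals (19) are not reproduced in this text. As a sketch of what first-order averaging does here, assume (our assumption, not a formula from the paper) that system (16) has the form \(\dot u=-\omega v+\varepsilon P_1\), \(\dot v=\omega u+\varepsilon P_2\), \(\dot w=\varepsilon P_3\), up to \(O(\varepsilon ^2)\). Then \(dr/d\theta =\frac{\varepsilon }{\omega }(\cos \theta \,P_1+\sin \theta \,P_2)+O(\varepsilon ^2)\) and \(dw/d\theta =\frac{\varepsilon }{\omega }P_3+O(\varepsilon ^2)\). Every term in which \(\cos \theta \) or \(\sin \theta \) appears with an odd exponent averages to zero over \([0,2\pi ]\), and with the coefficients \(b_{ij}\) of the polynomials \(P_i\) what remains is \(g_1=\frac{r}{2\omega }\big (b_{11}+b_{22}+(b_{16}+b_{28})w\big )\) and \(g_2=\frac{1}{\omega }\big (b_{33}w+\frac{1}{2}(b_{34}+b_{37})r^2+b_{39}w^2\big )\). The code compares this closed form with direct quadrature for random coefficients.

```python
import math
import random


def P(bi, u, v, w):
    """P_i(u, v, w) with coefficients bi = (b_i1, ..., b_i9) in the monomial order of (16)."""
    monomials = (u, v, w, u * u, u * v, u * w, v * v, v * w, w * w)
    return sum(c * m for c, m in zip(bi, monomials))


def averaged_by_quadrature(b, r, w, omega, n=64):
    """(g1, g2): mean over theta of (cos P1 + sin P2, P3) / omega.

    ASSUMPTION: (16) reads u' = -omega v + eps P1, v' = omega u + eps P2, w' = eps P3.
    """
    g1 = g2 = 0.0
    for k in range(n):
        th = 2.0 * math.pi * k / n
        u, v = r * math.cos(th), r * math.sin(th)
        g1 += (math.cos(th) * P(b[0], u, v, w) + math.sin(th) * P(b[1], u, v, w)) / omega
        g2 += P(b[2], u, v, w) / omega
    return g1 / n, g2 / n


def averaged_closed_form(b, r, w, omega):
    """Same (g1, g2) in closed form; b[i][j] stands for b_{i+1, j+1}."""
    g1 = r * (b[0][0] + b[1][1] + (b[0][5] + b[1][7]) * w) / (2.0 * omega)
    g2 = (b[2][2] * w + (b[2][3] + b[2][6]) * r * r / 2.0 + b[2][8] * w * w) / omega
    return g1, g2


random.seed(1)
b = [[random.uniform(-1, 1) for _ in range(9)] for _ in range(3)]
print(averaged_by_quadrature(b, 0.7, -0.3, 1.5))
print(averaged_closed_form(b, 0.7, -0.3, 1.5))   # agrees up to rounding error
```

If the rotation in (16) has the opposite orientation, both averaged functions simply change sign.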

Performing the calculations, we obtain

where \({\varDelta }\) is given in (8) and

Consider

If \(\dfrac{C_2B_1B_0-C_1B_1^2}{C_0}>0\) and \(C_0 B_0\ne 0\), then \((\overline{r_0},\overline{w_0})\) is a singular point of the system \(({\dot{r}},{\dot{w}})=(g_1(r,w),g_2(r,w))\). From (19), we get

So, according to statement (b) of Theorem 1, this singular point (when it exists) provides a periodic solution \((r(\theta ,\varepsilon ), w(\theta ,\varepsilon ))\) of the differential system (18) such that \((r(0,\varepsilon ), w(0,\varepsilon ))\) tends to \((\overline{r_0},\overline{w_0})\) as \(\varepsilon \) tends to 0.

Undoing the changes of variables and using statement (a) of Theorem 1, we have
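Statement (b) of Theorem 1 also requires the Jacobian determinant of \((g_1,g_2)\) at the singular point to be nonzero. Since (19) and (20) are not reproduced in this text, the sketch below works with a hypothetical model of averaged functions, \(g_1=r(m_0+m_1w)\) and \(g_2=n_0r^2+n_1w+n_2w^2\); constant positive factors such as \(1/\omega \) change neither the zeros nor the sign of the determinant. The coefficient names are ours and are not the \(B_i\), \(C_i\) of Theorem 2. A zero with \(r>0\) requires \(w_0=-m_0/m_1\) and \(r_0^2=-(n_1w_0+n_2w_0^2)/n_0>0\), and there the determinant equals \(-2m_1n_0r_0^2\).

```python
import math


def singular_point(m0, m1, n0, n1, n2):
    """Zero (r0, w0) with r0 > 0 of the hypothetical model
    g1 = r (m0 + m1 w), g2 = n0 r^2 + n1 w + n2 w^2; None if there is no such zero."""
    if m1 == 0 or n0 == 0:
        return None
    w0 = -m0 / m1                                  # g1 = 0 with r != 0
    r0_squared = -(n1 * w0 + n2 * w0 ** 2) / n0    # then g2 = 0
    if r0_squared <= 0:
        return None
    return math.sqrt(r0_squared), w0


def jacobian(point, m0, m1, n0, n1, n2):
    """Jacobian matrix of (g1, g2) with respect to (r, w)."""
    r, w = point
    return [[m0 + m1 * w, m1 * r],
            [2 * n0 * r, n1 + 2 * n2 * w]]


coeffs = (2.0, 2.0, 1.0, 5.0, 1.0)           # illustrative values only
pt = singular_point(*coeffs)
J = jacobian(pt, *coeffs)
det = J[0][0] * J[1][1] - J[0][1] * J[1][0]   # must be nonzero for Theorem 1(b)
print(pt, det)                                # (2.0, -1.0) -16.0
```

Here the determinant is \(-16\ne 0\), so statement (b) applies; its negative sign also indicates a saddle-type periodic orbit.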

Then, in cylindrical coordinates \((r,\theta ,w)\), the periodic solution is

and in the variables (u, v, w) the periodic solution becomes

Undoing the linear change of variables (15), which transforms system (14) into system (16), we obtain the periodic solution

of system (14).

Finally, using that \(({\overline{x}},{\overline{y}},{\overline{z}})=(\varepsilon {\overline{u}},\varepsilon {\overline{v}},\varepsilon {\overline{w}})\) and (9), we get the periodic solution given in (13).

Note that the periodic solution (13) is born in a zero-Hopf bifurcation at the zero-Hopf equilibrium \(p_0\), because it tends to \(p_0\) as \(\varepsilon \rightarrow 0\). Moreover, its linear stability can be determined: by statement (c) of Theorem 1, it is given by the eigenvalues of the Jacobian matrix of the map \((g_1,g_2)\) evaluated at \((\overline{r_0},\overline{w_0})\). If both eigenvalues have negative real part, the periodic orbit is an attractor. If both eigenvalues have positive real part, it is a repeller. If both eigenvalues are purely imaginary, the periodic solution is linearly stable. Finally, if one eigenvalue has negative real part and the other has positive real part, the periodic solution has a stable and an unstable invariant manifold, each formed by a cylinder.
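For a \(2\times 2\) Jacobian, the four cases above can be read off from its trace and determinant: a negative determinant means real eigenvalues of opposite signs, while a positive determinant means that both eigenvalues have real parts with the sign of the trace (and equal \(\pm i\sqrt{\det }\) when the trace is zero). A small sketch of this classification, with hypothetical names:

```python
def classify_periodic_orbit(jac):
    """Linear stability of the periodic orbit from the 2x2 Jacobian of (g1, g2) at the singular point."""
    (a, b), (c, d) = jac
    trace, det = a + d, a * d - b * c
    if det == 0:
        raise ValueError("degenerate case: statement (b) of Theorem 1 needs det != 0")
    if det < 0:
        # real eigenvalues of opposite signs
        return "saddle: one stable and one unstable invariant manifold"
    if trace < 0:
        return "attractor"
    if trace > 0:
        return "repeller"
    # det > 0 and trace == 0: eigenvalues +-i*sqrt(det)
    return "linearly stable: purely imaginary eigenvalues"


print(classify_periodic_orbit([[0.0, 4.0], [4.0, 3.0]]))     # saddle: ...
print(classify_periodic_orbit([[-1.0, 2.0], [-2.0, -1.0]]))  # attractor
```

The exact test `trace == 0` is only meaningful for exact data; with floating-point Jacobians a tolerance would be needed.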

This completes the proof of Theorem 2 and consequently the study of the zero-Hopf bifurcation for the Hindmarsh–Rose differential system at the mentioned equilibrium point. Now, it remains to study the classical Hopf bifurcation.

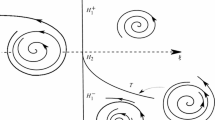

5 Classical Hopf bifurcation

Assume that a system \({\dot{x}}=F(x)\) has an equilibrium point \(p_0\). If its linearization at \(p_0\) has a pair of complex conjugate purely imaginary eigenvalues and the other eigenvalues have nonzero real part, then we are in the setting of a classical Hopf bifurcation, and we can expect a small-amplitude limit cycle to branch from the equilibrium point \(p_0\). Its stability is determined by the first Lyapunov coefficient \(\ell _1(p_0)\) of the system near \(p_0\). When \(\ell _1(p_0)<0\), the point \(p_0\) is a weak focus of the system restricted to the center manifold of \(p_0\), and the limit cycle that emerges from \(p_0\) is stable; in this case, we say that the Hopf bifurcation is supercritical. When \(\ell _1(p_0)>0\), the point \(p_0\) is also a weak focus of the system restricted to the center manifold of \(p_0\), but the limit cycle that is born from \(p_0\) is unstable; in this case, we say that the Hopf bifurcation is subcritical.

Here we use the following result presented on page 180 of the book [17] for computing \(\ell _1(p_0)\).

Theorem 3

Let \({\dot{x}}=F(x)\) be a differential system having \(p_0\) as an equilibrium point. Consider the third-order Taylor approximation of F around \(p_0\) given by \(F(x)=Ax +\dfrac{1}{2!}B(x,x)+\dfrac{1}{3!}C(x,x,x)+{\mathcal {O}}(|x|^4)\). Assume that A has a pair of purely imaginary eigenvalues \(\pm \omega i\), and that these are the only eigenvalues with real part equal to zero. Let q be the eigenvector of A corresponding to the eigenvalue \(\omega i\), normalized so that \({\overline{q}}\cdot q=1\), where \({\overline{q}}\) is the conjugate vector of q. Let p be the adjoint eigenvector such that \(A^Tp=-\omega i p\) and \({\overline{p}}\cdot q=1\). If \(\text{ Id }\) denotes the identity matrix, then

\[ \ell _1(p_0)=\frac{1}{2\omega }\,\text{Re}\Big [{\overline{p}}\cdot C(q,q,{\overline{q}})-2\,{\overline{p}}\cdot B\big (q,A^{-1}B(q,{\overline{q}})\big )+{\overline{p}}\cdot B\big ({\overline{q}},(2\omega i\,\text{ Id }-A)^{-1}B(q,q)\big )\Big ]. \]
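Theorem 3 turns into a few lines of numerical linear algebra. The sketch below is our own NumPy implementation of Kuznetsov's expression \(\ell _1=\frac{1}{2\omega }\text{Re}\big [{\overline{p}}\cdot C(q,q,{\overline{q}})-2\,{\overline{p}}\cdot B(q,A^{-1}B(q,{\overline{q}}))+{\overline{p}}\cdot B({\overline{q}},(2\omega i\,\text{Id}-A)^{-1}B(q,q))\big ]\), with q and p normalized as in Theorem 3. The caller supplies A and the forms B and C as Python functions. A must be invertible, which holds in the classical Hopf setting because the third eigenvalue is \(\delta \ne 0\). The check uses the toy system \(\dot x=-y-x(x^2+y^2)\), \(\dot y=x-y(x^2+y^2)\), \(\dot z=-z\) (not system (21)), for which the formula gives \(\ell _1=-2\), negative as expected since \(\dot r=-r^3\) in polar coordinates.

```python
import numpy as np


def first_lyapunov_coefficient(A, B, C):
    """l1 at an equilibrium whose Jacobian A has eigenvalues +-i*omega (others off the axis).

    B(u, v) and C(u, v, w) are the symmetric bilinear and trilinear forms of
    F(x) = A x + B(x, x)/2 + C(x, x, x)/6 + O(|x|^4).
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    evals, evecs = np.linalg.eig(A)
    k = int(np.argmax(evals.imag))             # eigenvalue i*omega with omega > 0
    omega = evals[k].imag
    q = evecs[:, k]
    q = q / np.sqrt(np.vdot(q, q).real)        # conj(q) . q = 1
    evals_t, evecs_t = np.linalg.eig(A.T)
    j = int(np.argmin(np.abs(evals_t + 1j * omega)))
    p = evecs_t[:, j]                          # A^T p = -i*omega p
    p = p / np.conj(np.vdot(p, q))             # conj(p) . q = 1
    qb = np.conj(q)
    identity = np.eye(n)
    t1 = np.vdot(p, C(q, q, qb))
    t2 = np.vdot(p, B(q, np.linalg.solve(A, B(q, qb))))
    t3 = np.vdot(p, B(qb, np.linalg.solve(2j * omega * identity - A, B(q, q))))
    return float((t1 - 2 * t2 + t3).real / (2 * omega))


# Toy system: x' = -y - x(x^2+y^2), y' = x - y(x^2+y^2), z' = -z
A = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, -1.0]]


def B_zero(u, v):
    return np.zeros(3, dtype=complex)


def C_cubic(u, v, w):
    # symmetric trilinear form with C(x, x, x) = 6 * (-x1 (x1^2+x2^2), -x2 (x1^2+x2^2), 0)
    c1 = -(6 * u[0] * v[0] * w[0] + 2 * (u[0] * v[1] * w[1] + u[1] * v[0] * w[1] + u[1] * v[1] * w[0]))
    c2 = -(6 * u[1] * v[1] * w[1] + 2 * (u[1] * v[0] * w[0] + u[0] * v[1] * w[0] + u[0] * v[0] * w[1]))
    return np.array([c1, c2, 0.0], dtype=complex)


print(first_lyapunov_coefficient(A, B_zero, C_cubic))   # close to -2.0
```

The result does not depend on the arbitrary phase that `numpy.linalg.eig` gives to q, because each term of the formula is invariant under \(q\mapsto e^{i\varphi }q\), \(p\mapsto e^{i\varphi }p\).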

6 Classical Hopf bifurcation of system (1)

In what follows, we study the classical Hopf bifurcation using a result from the book of Kuznetsov [17] (see Sect. 5).

Theorem 4

The equilibrium point \(p_0\) of the Hindmarsh–Rose system (1) given in (6) exhibits a classical Hopf bifurcation for the choice of the parameters given in (2), (3) and (7), when \(\varepsilon =0\), \(\delta \ne 0\) and

where \(R_1\) and \(R_2\), given at the end of Sect. 6, are polynomials in the variables \(\rho \), \(\delta \) and \(\omega ^2.\) Moreover, if \(\ell _1(p_0)<0\), the Hopf bifurcation is supercritical, and if \(\ell _1(p_0)>0\), it is subcritical.

We recall that these classical Hopf bifurcations have been studied numerically by Storace, Linaro and de Lange in [10].

First of all, we consider system (10), with \(\varepsilon =0\), given by

The matrix of the linear part of (21) is

and its eigenvalues are \(\delta \), \(\omega i\) and \(-\omega i\). To prove that a Hopf bifurcation occurs at the equilibrium point \(p_0\), it remains to show that the first Lyapunov coefficient \(\ell _1(p_0)\) is nonzero. According to Theorem 3, to compute \(\ell _1(p_0)\) we need the matrix A, the bilinear and trilinear forms B and C associated with the second- and third-order terms of system (21), the inverse of the matrix A, and the inverse of the matrix \(2\omega i Id-A\), where Id is the \(3\times 3\) identity matrix.

In what follows, the letters u, v and w denote vectors of \({\mathbb {R}}^3\); they are unrelated to the real variables u, v and w used in the proof of Theorem 2. The bilinear form B evaluated at two vectors \(u=(u_1,u_2,u_3)\) and \(v=(v_1,v_2,v_3)\) is given by

And the trilinear form C evaluated at three vectors \(u=(u_1,u_2,u_3)\), \(v=(v_1,v_2,v_3)\) and \(w=(w_1,w_2,w_3)\) is given by
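When only the vector field is at hand, B and C can be recovered from the homogeneous quadratic and cubic parts \(F_2\) and \(F_3\) of its Taylor expansion at the equilibrium by polarization: \(B(u,v)=F_2(u+v)-F_2(u)-F_2(v)\), because \(F_2(x)=B(x,x)/2\), and \(C(u,v,w)=F_3(u+v+w)-F_3(u+v)-F_3(u+w)-F_3(v+w)+F_3(u)+F_3(v)+F_3(w)\), because \(F_3(x)=C(x,x,x)/6\). Since \(F_2\) and \(F_3\) are polynomials, these identities also hold for complex vectors such as q and \({\overline{q}}\). The helper names are ours, and the \(F_2\), \(F_3\) below are a toy example, not the terms of system (21).

```python
import numpy as np


def bilinear_from_quadratic(F2):
    """Return B with B(u, v) = F2(u + v) - F2(u) - F2(v), valid when F2(x) = B(x, x) / 2."""
    return lambda u, v: F2(u + v) - F2(u) - F2(v)


def trilinear_from_cubic(F3):
    """Return C by polarization, valid when F3(x) = C(x, x, x) / 6."""
    return lambda u, v, w: (F3(u + v + w) - F3(u + v) - F3(u + w) - F3(v + w)
                            + F3(u) + F3(v) + F3(w))


# Toy homogeneous parts (hypothetical, not the terms of system (21))
def F2(x):
    return np.array([x[0] * x[1], x[2] ** 2, 0.0 * x[0]])


def F3(x):
    return np.array([x[0] ** 3, x[0] * x[1] * x[2], 0.0 * x[0]])


B = bilinear_from_quadratic(F2)
C = trilinear_from_cubic(F3)
u = np.array([1.0, 2.0, 3.0])
v = np.array([1j, -1.0, 0.5])                # complex vectors are allowed, as needed for q
print(B(u, v))                               # values (u1 v2 + u2 v1, 2 u3 v3, 0) = (-1+2j, 3, 0)
print(np.allclose(C(u, u, u), 6 * F3(u)))    # True
```

The resulting functions can be passed directly to a routine that evaluates the formula of Theorem 3.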

Let q be the eigenvector of A corresponding to the eigenvalue \(\omega i\), normalized so that \({\overline{q}}\cdot q=1\), where \({\overline{q}}\) is the conjugate vector of q. The expression of q is given by

where

Let p be the adjoint eigenvector such that \(A^Tp=-\omega i p\) and \({\overline{p}}\cdot q=1\). The expression of p is given by

where

So, from Theorem 3, the first Lyapunov coefficient is

where \(R_1=\sum _{i=0}^{8}r_i\rho ^i,\) \(r_i=r_i(\delta ,\omega ^2)\) with

and \(R_2=R_2(\rho ,\delta ,\omega ^2)\) is

Here, \(W_1=1+\omega ^2\) and \(\delta _1=1+\delta \).

Now, the proof of Theorem 4 follows directly from Theorem 3.

7 Conclusion

Choosing special parameters, we have studied the zero-Hopf bifurcation (see Theorem 2) and also the classical Hopf bifurcation (see Theorem 4) of the three-dimensional Hindmarsh–Rose polynomial ordinary differential system (1). The proof of Theorem 2 is done using the averaging theory, while the proof of Theorem 4 uses a result of Kuznetsov.

In general, the technique described here for proving the zero-Hopf bifurcation by means of averaging theory can be applied to other differential systems to study their zero-Hopf bifurcations.

References

[1] Hindmarsh, J.L., Rose, R.M.: A model of neuronal bursting using three coupled first order differential equations. Proc. R. Soc. Lond. B Biol. Sci. 221, 87–102 (1984)

[2] Hodgkin, A.L., Huxley, A.F.: A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. Lond. 117, 500–544 (1952)

[3] Belykh, V.N., Belykh, I.V., Colding-Jorgensen, M., Mosekilde, E.: Homoclinic bifurcations leading to the emergence of bursting oscillations in cell models. Eur. Phys. J. E: Soft Matter Biol. Phys. 3, 205–219 (2000)

[4] González-Miranda, J.M.: Observation of a continuous interior crisis in the Hindmarsh–Rose neuron model. Chaos 13, 845–852 (2003)

[5] González-Miranda, J.M.: Complex bifurcation structures in the Hindmarsh–Rose neuron model. Int. J. Bifurc. Chaos 17, 3071–3083 (2007)

[6] Innocenti, G., Genesio, R.: On the dynamics of chaotic spiking–bursting transition in the Hindmarsh–Rose neuron. Chaos 19, 023124 (2009)

[7] Innocenti, G., Morelli, A., Genesio, R., Torcini, A.: Dynamical phases of the Hindmarsh–Rose neuronal model: studies of the transition from bursting to spiking chaos. Chaos 17, 043128 (2007)

[8] Linaro, D., Champneys, A., Desroches, M., Storace, M.: Codimension-two homoclinic bifurcations underlying spike adding in the Hindmarsh–Rose burster. SIAM J. Appl. Dyn. Syst. 11, 939–962 (2012)

[9] Shilnikov, A., Kolomiets, M.: Methods of the qualitative theory for the Hindmarsh–Rose model: a case study. A tutorial. Int. J. Bifurc. Chaos 18, 2141–2168 (2008)

[10] Storace, M., Linaro, D., de Lange, E.: The Hindmarsh–Rose neuron model: bifurcation analysis and piecewise-linear approximations. Chaos 18, 033128 (2008)

[11] Terman, D.: Chaotic spikes arising from a model of bursting in excitable membranes. SIAM J. Appl. Math. 51, 1418–1450 (1991)

[12] Terman, D.: The transition from bursting to continuous spiking in excitable membrane models. J. Nonlinear Sci. 2, 135–182 (1992)

[13] Wang, X.J.: Genesis of bursting oscillations in the Hindmarsh–Rose model and homoclinicity to a chaotic saddle. Phys. D 62, 263–274 (1993)

[14] Cid-Montiel, L., Llibre, J., Stoica, J.C.: Zero–Hopf bifurcation in a hyperchaotic Lorenz system. Nonlinear Dyn. 75(3), 561–566 (2014)

[15] Llibre, J., Pessoa, C.: The Hopf bifurcation in the Shimizu–Morioka system. Nonlinear Dyn. 79, 2197–2205 (2015)

[16] Verhulst, F.: Nonlinear Differential Equations and Dynamical Systems. Universitext. Springer, New York (1996)

[17] Kuznetsov, Y.A.: Elements of Applied Bifurcation Theory, Applied Mathematical Sciences, vol. 112. Springer, New York (2004)

Acknowledgments

We thank the reviewers for their comments and suggestions, which helped us to improve the presentation of this article. The first author is partially supported by FAPESP Grant 2013/2454-1, CAPES Grant 88881.068462/2014-01 and the EU Marie-Curie IRSES Brazilian-European partnership in Dynamical Systems (FP7-PEOPLE-2012-IRSES 318999 BREUDS). The second author is partially supported by MINECO Grant MTM2013-40998-P, AGAUR Grant Number 2014SGR568, the Grants FP7-PEOPLE-2012-IRSES 318999 and 316338, and CAPES Grant Number 88881.030454/2013-01 from the program CSF–PVE. The third author is partially supported by FAPEG, by the CNPq Grant Numbers 475623/2013-4 and 306615/2012-6, and by the CAPES Grant Numbers PROCAD 88881.068462/2014-01 and CSF/PVE-88881.030454/2013-01.


Cite this article

Buzzi, C., Llibre, J. & Medrado, J. Hopf and zero-Hopf bifurcations in the Hindmarsh–Rose system. Nonlinear Dyn 83, 1549–1556 (2016). https://doi.org/10.1007/s11071-015-2429-y
