Abstract

This study develops a novel approach for identifying buildings that were damaged in the aftermath of the Kahramanmaraş earthquakes on February 6, 2023, which were among the most devastating in the history of Türkiye. The approach involves using two pre-event and one post-event Sentinel-1 and Sentinel-2 images to detect changes in the varying-sized and shaped buildings following the earthquakes. The approach is based on the hypothesis that the radiometric characteristics of building pixels should change after an earthquake, and these changes can be detected by analysing the spectral distance between the building pixel vectors before and after the earthquake. The proposed approach examines the changes in building pixel vectors on pre-event and post-event Sentinel-2 MultiSpectral Instrument images. It also incorporates the backscattering features of Sentinel-1 Synthetic Aperture Radar images, as well as the variance image, a feature that is derived from a Grey-Level Co-occurrence Matrix, and the Normalized Difference Built-up Index image, which were derived from the optical data. The approach was tested on three sites, two of which were in Kahramanmaraş and the third in Hatay city. The results showed that the proposed method was able to accurately identify damaged and undamaged buildings with an overall accuracy of 75%, 84.4%, and 73.8% in test sites 1, 2, and 3, respectively. These findings demonstrate the potential of the proposed approach to effectively identify damaged buildings in post-disaster situations.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

On February 6, 2023, Türkiye was struck by two major earthquakes, resulting in significant damage and loss of life. The first earthquake, with a magnitude of M 7.8, hit the city of Kahramanmaraş at around 4:17 a.m. local time. The epicentre was located at a depth of about 17.9 kms. The second earthquake, with a magnitude of M 7.5, occurred just nine hours later, at 13:24 p.m. local time, with its epicentre located at a depth of 10 kms (https://earthquake.usgs.gov/storymap/index-turkey2023.html). According to the Disaster and Emergency Management Authority of Türkiye (AFAD), the death toll from the earthquakes has exceeded 50,000, and over 100,000 people have been injured (https://www.bbc.com/turkce/articles/c51kdv8d15jo). The consequences of the earthquakes have affected around 14 million people in eleven cities. The February 6 earthquakes are exceptional in terms of both their type and the occurrence of two large and severe earthquakes at short intervals. Türkiye is located in an area of high seismic activity, and earthquakes are not uncommon in the country. However, the February 6 earthquakes were particularly devastating due to their proximity to populated areas and the relatively shallow depth at which they occurred.

Taking quick action after an earthquake is crucial for a number of reasons. Firstly, it can help protect the people from potential harm. After an earthquake, there may be hazards such as aftershocks, gas leaks, fires, and structural damage that pose a risk to people's safety. Taking swift action can help prevent injuries and fatalities. Additionally, quick action can allow authorities to assess the extent of the damage and coordinate relief efforts. The faster they can determine the areas most affected, the sooner they can mobilize rescue and recovery efforts, and provide aid to those who need it the most. It is also essential to act quickly to secure the buildings and prevent further damage or collapse. Overall, taking quick action after an earthquake can help save lives, minimize the impact of the disaster, and facilitate the recovery process.

1.1 Literature review

Following an earthquake, it is crucial to assess the damage to buildings and infrastructure to determine the extent of the impact and identify areas that require immediate attention. Traditional methods of damage assessment, such as visual inspection and field surveys, can be time-consuming, expensive, and often hazardous. Moreover, they might not offer a thorough analysis of the destruction, particularly in large-scale disasters. Remote sensing techniques have emerged as a powerful tool for detecting and analysing changes in buildings caused by earthquakes. These techniques use various sensors and imaging platforms to collect data from a distance, enabling rapid and comprehensive assessment of earthquake damage. Remote sensing techniques can furnish information on the extent and severity of the destruction, as well as the location of critical infrastructure, such as hospitals and water supply systems, that may have been affected. Several methods have been developed to identify structural damage using data obtained from remote sensing techniques. These methods can be categorized into two main classes (Dong and Shan 2013): (1) methods that identify damage using pre- and post-event data, and (2) methods that rely solely on post-event data.

1.1.1 Methods based on pre- and post-event data

Automated change detection is challenging when comparing pre- and post-earthquake images due to differing imaging parameters. Hence, visual interpretation of images of pre- and post-event data is widely used for building damage detection. Several studies found visual interpretation to be effective (Saito et al. 2004; Saito and Spence 2005; Adams et al. 2005; Yamazaki et al. 2005), with pre-event imagery being particularly useful for detecting lower damage grades (Yamazaki et al. 2005). However, visual interpretation is not suited for quick damage assessment over a large region because it takes time, calls for qualified operators, and creates a lot of labour (Dong and Shan 2013). The developments in the damage identification techniques have caused a lack of encounter in the literature with visual interpretation-based strategies in recent years.

There are also some methods that reveal the damage by comparing the pre- and post-event data through mathematical operations such as ratioing and subtraction. One common approach is to co-register and subtract pre- and post-event images to produce a residual image that highlights changes (Ishii et al. 2002; Oommen et al. 2012). The difficulty of this technique is finding the best threshold used to identify the extent of damage. Other methods focused on comparing brightness values (Yusuf et al. 2001), colour difference (Ishii et al. 2002), and image structure features (Zhang et al. 2003) between pre- and post-event images. Sakamoto et al. (2004) introduced a non-linear mapping strategy that mapped the post-event image onto the pre-event image in a non-linear fashion. The degree of damage to the buildings was estimated by evaluating the distribution of the image matching scores. Kohiyama and Yamazaki (2005) modelled the fluctuation of digital numbers on a pixel-by-pixel basis to evaluate deviation values and detect surface changes. Trinder and Salah (2012) used image differencing as part of four change detection techniques that used Light Detection and Ranging (LiDAR) data and aerial images to reveal the damage in buildings. Turker and Cetinkaya (2005) and Menderes et al. (2015) compared Digital Elevation Models (DEMs) and Normalized Digital Surface Models (nDSMs) produced from pre- and post-earthquake aerial images to detect collapsed buildings caused by earthquakes, respectively. Methods that utilize mathematical operations, such as image differencing, require precise spatial co-registration, which can occasionally pose challenges (Chen and Hutchinson 2010). Chen and Hutchinson (2011) also indicated that conventional change detection methods such as image differencing are not effective when used with very high-resolution satellite images due to the large amount of detailed spatial information present and the potential for noise variations if the images were captured at different times.

A method to detect building damage is by comparing the classification results obtained from pre- and post-event data separately (Al-Khudhairy et al. 2005; Wu et al. 2021). This technique has the advantage of minimizing the impact of variations in radiometric characteristics between the two datasets. However, the accuracy of the outcomes is entirely reliant on the initial classification results (Al-Khudhairy et al. 2005; Dong and Shan 2013; Wu et al. 2021). On the other hand, these methods may not be effective in urban environments where the features are heterogeneous and characterized better by spatial properties like texture, rather than spectral reflectance (Zhang 1999). Moreover, these methods cannot differentiate easily between objects with high spectral overlap, like building roofs and pavements made of the same material (Al-Khudhairy et al. 2005). These restrictions can be circumvented by object-oriented segmentation (Gusella et al. 2005; Yamazaki et al. 2008) and classification techniques, which consider the spatial relationships between pixels when deciding which class is best for each individual pixel. These methods generate a thematic map that maintains spectral and spatial coherence by utilizing information about neighbouring pixels. However, the spatial resolution of the sensor and the size of the features can affect the degree to which adjacent pixels are correlated. Object-oriented approaches, on the other hand, operate on the principle that semantic information is conveyed not by individual pixels, but by significant image objects and their interrelationships (Al-Khudhairy et al. 2005). Khodaverdizahraee et al. (2020) proposed a method that identifies image-objects in the pre- and post-earthquake images and aligns them through segmentation intersection. Then, the method calculates various textural and spectral properties of the images and uses their differences as input features for a classification algorithm. Al-Khudhairy et al. (2005) indicated that, although object-based image segmentation techniques are flexible and user-friendly, they necessitate expert image analysis knowledge and a significant investment of time and effort to determine the optimal settings and methods for segmentation and classification. Alizadeh et al. (2022) generated a change map using a post-classification approach that combines optical and Synthetic Aperture Radar (SAR) images through decision fusion. Some approaches demonstrated the effectiveness of texture information extracted from pre- and post-event data to reveal the damage in buildings aftermath of an earthquake (Chesnel et al. 2008; Klonus et al. 2012; Khodaverdizahraee et al. 2020).

When speaking of the techniques used for the identification of earthquake-induced changes in buildings, it is impossible to talk without mentioning Deep Learning (DL)-based techniques, which have continued to rapidly develop in recent years. One of the main advantages of using DL techniques is their ability to automatically extract complex features from data, enabling accurate identification of even subtle changes that may be difficult for traditional methods to detect. Additionally, DL models can be trained on large datasets, making them more robust and capable of generalizing to new, unseen data. In recent years, the increasing usage of Convolutional Neural Networks (CNNs) for various recognition tasks has resulted in the widespread adoption of these methods for detecting building damage (Nex et al. 2019). Duarte et al. (2019), Ji et al. (2019), Ghaffarian et al. (2019), Xu et al. (2019), Bhangale et al. (2019) and Kalantar et al. (2020) used CNNs to reveal the change in buildings aftermath of an earthquake through the use of pre- and post-event images. One major drawback of using DL-based techniques is the need for large amounts of data to train these models effectively, which may be hard to obtain in the event of an earthquake, given the issues described above (Zhu et al. 2017; Takhtkeshha et al. 2023). Hence, some approaches were developed to tackle a reduced number of training data (Li et al. 2018). Therefore, it is beneficial to have the capability to include images of varying types and sizes in the training model (Kerle et al. 2019).

1.1.2 Methods based on post-event data

Approaches utilizing both pre- and post-event data can achieve superior accuracy compared to methods that rely solely on post-event data (Dong and Shan 2013; Duarte et al. 2019). Nevertheless, multi-temporal techniques have a significant drawback in that several cities, particularly those in developing countries, lack pre-event reference data (Li et al. 2011). Even if pre-event data are accessible, reliable and precise outcomes may be difficult to obtain due to substantial spectral variations between pre- and post-event data (Kaya et al. 2011; Dong and Shan 2013).

The practice of visually interpreting post-earthquake optical data to identify damaged buildings was common years ago. Ogawa and Yamazaki (2000) achieved over 70% accuracy in building damage identification using single and stereoscopic aerial photographs for the Kobe earthquake. Yamazaki et al. (2004) used only post-event QuickBird imagery for the Boumerdes earthquake and discovered that pre-event images were essential for detecting minor damage grades in visual analysis. Recent advances in damage identification techniques have resulted in a decreased prevalence of visual interpretation-based strategies in the literature.

An interesting study was conducted by Brunner et al. (2010). They estimated the 3D parameters of buildings from pre-event optical imagery, predicted what undamaged buildings would look like in post-event SAR image, and compared this to the actual post-event SAR image to determine if the building was damaged. In order to reveal the earthquake-induced changes in buildings, Sumer and Turker (2005) used gradient orientation analysis, Turker and Sumer (2008) utilized image segmentation techniques, Ye et al. (2014) used fuzzy reasoning techniques, Gong et al. (2016) applied Machine Learning (ML) algorithms, Janalipour and Mohammadzadeh (2019) applied the k-means clustering algorithm on LiDAR data, Nie et al. (2021) used the optimization of polarimetric contrast enhancement matching algorithm on SAR data.

By using texture features, the identification of subtle changes in the texture pattern caused by building damage can be achieved, which makes them a powerful tool for building damage detection and has led to their wide use in recent years. Dell'Acqua et al. (2011), Ye et al. (2014), Gong et al. (2016), Janalipour and Mohammadzadeh (2019) and Alataş and Taşkın (2019) used texture features to detect the earthquake-induced changes in buildings from post-event data. One of the main challenges with using texture features is their sensitivity to image noise, which can affect the accuracy of the extracted features. Additionally, the effectiveness of texture features in building damage detection may reduce in regions with homogeneous textures, where the texture pattern of buildings does not change significantly after an earthquake. Furthermore, the selection of appropriate texture feature parameters, such as the size of the analysis window and the number of orientations, can be challenging, and the optimal values may vary depending on the type of data and the specific application. Despite these limitations, texture features remain a valuable tool in building damage detection and are often used in conjunction with other techniques to improve the accuracy of the results.

A thorough literature review revealed that many recent studies have employed DL techniques to identify the changes in buildings from only post-event data. In order to reveal the changes in buildings with only post-event data; Mohammadi et al. (2019) used a 3D fully convolutional network model on photogrammetric point clouds; Li et al. (2019) used a convolution auto-encoder; Ji et al. (2018, 2020) and Miura et al. (2020) used CNNs; Abdi et al. (2021) used a pre-trained Residual Neural Network (RNN); Koukouraki et al. (2021) employed the few-shot learning, which is a ML approach for cases where the amount of labelled data is limited; Abdi et al. (2022) used deep transfer learning; and Zhan et al. (2022) used a mask region-based CNN (Mask R-CNN) model. The study conducted by Duarte et al. (2019) is noteworthy. The study involved the comparison of the performance of three CNN architectures utilizing pre- and post-event images and another CNN architecture utilizing only post-event images. The results showed that the CNN architectures utilizing pre- and post-event data were more resilient. The ‘black-box’ nature of DL models makes it challenging to comprehend what exactly DL has learned during training. Additionally, the computational resources required for training and deploying DL models can be significant, which may limit their feasibility in resource-limited situations.

The literature review shows that the majority of the previous studies used high-resolution images to detect the earthquake-induced changes in buildings. However, obtaining high-resolution commercial images may not always be possible due to financial restrictions, especially when the earthquake hits a large-extent area. The acquisition of high-resolution images may not be possible even if sufficient budget is available. Atmospheric conditions may not allow high-resolution images to be taken immediately after an earthquake for rapid response. The literature review also shows that many previous studies have been conducted using data produced from stereo aerial images captured by Unmanned Aerial Systems (UASs). Obtaining and processing UAS images could be a time-consuming process, especially in the case of earthquakes that have an impact over a wide area. This, of course, eliminates the possibility of emergency response.

A significant amount of current studies have used conventional ML methods and DL techniques to reveal the changes in the buildings after earthquakes. While conventional ML techniques have shown promise in identifying earthquake-induced changes in buildings, there are also several disadvantages that must be considered. One major challenge is the parameterization of conventional ML algorithms. Inaccurate parameters may lead to misleading results. Additionally, the accuracy of the results obtained from conventional ML techniques may be limited by the quality of the input data, such as the resolution and quality of the images used for analysis. This can lead to false positives or false negatives in identifying damage, which could have serious consequences in emergency response situations. When it comes to the use of DL techniques for damage detection, they have shown great potential in identifying earthquake-induced changes in buildings after earthquakes. However, there are some notable disadvantages that need to be taken into account. One significant challenge is the requirement for large volumes of high-quality labelled data to train the models effectively. In some cases, obtaining such data may be difficult or impossible. Furthermore, DL models are often complex and can be difficult to interpret, leading to potential issues with model transparency and explainability. This can make it challenging to identify and address potential issues with the models and can limit their effectiveness in practice. Additionally, the accuracy of the models may be affected by the variability in building designs and construction materials, which can lead to inaccurate assessment of damage in some cases. Another challenge associated with using DL techniques is the significant computational power and expertise required to train and implement the models. This can be a significant barrier for some organizations and communities, particularly those with limited resources or technical expertise.

Considering all these, an effective methodology is needed to quickly identify the changes in buildings following earthquakes that affect large areas, such as the Kahramanmaraş earthquakes that occurred on February 6th, 2023. The objective of this study is to introduce an automated approach that relies on the use of Sentinel-1 SAR and Sentinel-2 MultiSpectral Instrument (MSI) images captured before and after the Kahramanmaraş earthquakes to detect changes in buildings. Using Sentinel-2 MSI images will provide a high spectral resolving power, which is also a significant advantage over the previous studies that utilized multispectral or UAS images with less spectral resolution. By the combined use of data from these satellites, the drawbacks of poor atmospheric conditions and time of day that impact data collection can be overcome. In addition, using data from these satellites will not only enable work in large areas but also eliminate budget requirements. Compared to many previous studies that identified the damage on pixel basis, the proposed approach focuses on the identification of the changes in building scale through the use of satellite images with medium spatial resolution. Another merit of the proposed approach is that it takes only a couple of seconds to identify the damaged and undamaged buildings, even in large-extent areas. One major benefit of the proposed approach, in contrast to ML- or DL-based techniques, is that it does not necessitate prior knowledge of the working domain, hence, eliminating the need for training procedure. To the best of our knowledge, this study will be the first to study the effects of these catastrophic earthquakes.

2 Material and methods

2.1 Test sites and data used

The capacity of the proposed approach was tested in three test sites, two of which were in Kahramanmaraş and one was in Hatay. Both cities are located in the Mediterranean Region of Türkiye. The reason for selecting the test sites from these cities is that they experienced the most severe destruction in the earthquake. Test sites 1 and 2, which are located in the city centre of Kahramanmaraş, cover an area of approximately 9 ha and 48 ha, respectively. Test site 3, which is located in Antakya, the centre province of the city of Hatay, covers an area of approximately 80 ha. Site 3 was selected as being larger than the others to test the performance of the proposed method on a greater number of buildings. Another reason for selecting these test sites was the availability of robust reference data for these areas. Test sites can be seen in Fig. 1.

The proposed approach uses two Sentinel-1 and two Sentinel-2 images taken before the event and one Sentinel-1 and Sentinel-2 image taken after. The Sentinel-1 and Sentinel-2 satellites revisit a location every 10 days, whereas the combined constellation leads to a revisit time of approximately six days. The short revisit time of these satellites can provide timely updates on the displacement of buildings and the ground surface caused by the earthquake. Hence, the combined use of data from these satellites makes it possible to obtain more frequent and regular observations of earthquake-affected areas, leading to better monitoring of the damages caused by the earthquake. This advantage of the Sentinel-1 and Sentinel-2 satellites played a significant role in their use in the proposed methodology. In this study, images from the date closest to the earthquake were attempted to be used in order to obtain the most accurate results. All satellite images were obtained from Google Earth Engine (GEE) cloud computing platform.

The current study used pre-processed Sentinel-2 MSI (Level 2A, Bottom-Of-Atmosphere surface reflectance product) images with collection id ‘COPERNICUS/S2_SR’. The images were filtered so that the cloudy pixel percentage would be less than 10% to get a clear look over the test sites. The Sentinel-2 MSI data comprises 13 spectral bands having spatial resolutions between 10 and 60 m. However, experiments showed that the Sentinel-2 MSI bands 2, 3, 4, 8 and 8A provided the most robust results in the identification of the earthquake-induced changes in buildings, which is why these spectral bands were used within the suggested approach. The current study also used the Sentinel-1 SAR imagery data with collection id ‘COPERNICUS/S1_GRD’. This collection provides Ground Range Detected (GRD) scenes, which were pre-processed by the Sentinel-1 Toolbox. The pre-processing stage comprised the removal of thermal noise, radiometric calibration, and terrain correction (https://developers.google.com/earth-engine/datasets/catalog/COPERNICUS_S1_GRD#description). The data collection was filtered to obtain images at Interferometric Wide Swath (IW) mode with dual polarization (i.e., VV and VH) in ascending orbit. As a final step, the pre- and post-event Sentinel-1 SAR images underwent a noise reduction process using a refined Lee filter (Lee et al. 2008). The characteristics of the satellite data used in the current study are summarized in Table 1.

The OpenStreetMap (OSM) open-source platform provided the building data used as a reference for obtaining the building footprints in the test locations. Due to the numerous inaccuracies in the building data obtained from the OSM, the building data downloaded in shapefile (.shp) format for all test sites were manually corrected using both the high-resolution GEE base images and 50 cm free-to-use Planet images acquired before and after the earthquake. The Planet images used, which can also be seen in Fig. 1, were downloaded from https://github.com/kalkan/Turkey-Earthquake-2023-GeoData/. After manual corrections, very high-resolution pre- and post-event images were used to identify the damaged and undamaged buildings after the event. Observations showed that 45 buildings out of 96 were damaged in site 1, while 95 buildings out of 263 were damaged in site 2, and 587 buildings out of 907 were damaged in site 3. The corrected building data were labelled considering these observations. Figure 2 shows the reference pre/post-event Planet images and pre/post-event Sentinel-2 MSI images used.

Reference pre/post-event Planet images and pre/post-event Sentinel-2 MSI images. a, b and c Reference pre-event Planet images for sites 1, 2 and 3, respectively; d, e and f Reference post-event Planet images for sites 1, 2 and 3, respectively; g, h and i Pre-event Sentinel-2 MSI images (acquisition date: 01/25/2023) for sites 1, 2 and 3, respectively; j, k and l Post-event Sentinel-2 MSI images for sites 1, 2 and 3, respectively

2.2 Proposed methodology

2.2.1 Generation of texture features

The method proposed in this study was developed in MATLAB environment. The very first step of the suggested approach is to produce the texture features for the pre- and post-event images of the test sites. To derive the texture features more robustly, Principal Component Analysis (PCA) is applied to the Sentinel-2 MSI images of the test sites and the variance Grey-Level Co-occurrence Matrix (GLCM) feature, one of the most discriminative texture features derived from the GLCM, is produced from the first PC obtained. The GLCM variance texture feature (\(V\)) is calculated as (Haralick et al. 1973);

where \(p\) denotes \(\left(i,j\right)\)th element in a normalized GLCM.

2.2.2 Generation of normalized difference built-up index (NDBI) images

The next step of the suggested approach is to calculate the NDBI images from the pre- and post-event images of the test sites. The NDBI is calculated as (Zha et al. 2003);

where \(\mathrm{SWIR}\) and \(\mathrm{NIR}\) represent the short-wave infrared (i.e., band 11) and near-infrared (i.e., band 8) bands of the Sentinel-2 MSI images, respectively.

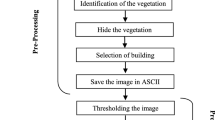

2.2.3 Estimation of the damaged and undamaged buildings

The suggested approach is based on the fact that the radiometric characteristics of the pixels corresponding to buildings should change after the earthquake. For each pre- and post-event image, the pixels corresponding to each building are determined by establishing a relationship between all of the raster data (including the Sentinel-1 SAR, Sentinel-2 MSI, GLCM variance and NDBI images produced) and building data obtained from OSM. This approach allows for the specification of the pixels that fall in the boundary of each building polygon vector. The suggested approach determines if a building is damaged during the earthquake considering four criteria; spectral distance, VV-VH correlation, GLCM variance correlation and NDBI correlation.

-

Spectral distance: A pixel vector is formed for each group of pixels within a building polygon. Each pixel vector consists of three elements, the first two come from the pre-event images and the last one from the post-event images. The elements of each vector are calculated by averaging the grey values of the corresponding building pixels in the Sentinel-2 MSI bands (i.e., B2, B3, B4, B8 and B8A). Then, for each group of pixels within a building polygon, the spectral distance is computed between the pixel vectors. Let \({a}_{1}\), \({a}_{2}\) (i.e., pre-event grey values) and \(b\) (i.e., post-event grey value) be the elements of a pixel vector derived for a building polygon, the spectral distance (\(s\)) is calculated as;

for \(i=1:n\) (\(n=2\) in our case),

-

VV-VH correlation: A Correlation Coefficient (i.e., \({\mathrm{CC}}_{\mathrm{pre}}^{\mathrm{VV}-\mathrm{VH}}\)) is calculated between the building pixel groups of two pre-event Sentinel-1 SAR VV-VH images. A CC (i.e., \({\mathrm{CC}}_{\mathrm{post}}^{\mathrm{VV}-\mathrm{VH}}\)) is also computed between the building pixel group of a pre-event and the post-event Sentinel-1 SAR VV-VH image. The general CC formula is;where \(\mathrm{cov}\) refers to covariance, whereas the \({\sigma }_{x}\) and \({\sigma }_{y}\) denote the standard deviations of \(x\) and \(y\), respectively.

$$\mathrm{CC}=\frac{\mathrm{cov}\left(x,y\right)}{{\sigma }_{x}{\sigma }_{y}}$$(4)

-

GLCM Variance correlation: A CC (i.e., \({\mathrm{CC}}_{\mathrm{pre}}^{\mathrm{GLCM}}\)) is calculated between the building pixel groups of two pre-event GLCM variance images. A CC (i.e., \({\mathrm{CC}}_{\mathrm{post}}^{\mathrm{GLCM}}\)) is also computed between the building pixel group of a pre-event and the post-event GLCM variance image.

-

NDBI correlation: A CC (i.e., \({\mathrm{CC}}_{\mathrm{pre}}^{\mathrm{NDBI}}\)) is calculated between the building pixel groups of two pre-event NDBI images. A CC (i.e., \({\mathrm{CC}}_{\mathrm{post}}^{\mathrm{NDBI}}\)) is also computed between the building pixel group of a pre-event and the post-event NDBI image.

The suggested approach determines whether a group of pixels belongs to a damaged building by using a threshold, which is calculated using the Otsu algorithm (Otsu 1979), one of the most widely-used thresholding algorithms. The algorithm determines the optimal threshold value that separates the vectors into different classes based on their component values. This is achieved by calculating the threshold that maximizes the between-class variance of the vector components. Once the threshold value is determined, the vectors are separated into two clusters based on their component values relative to the threshold value. The original version of the Otsu technique offers satisfactory results in cases where the data used is in Gaussian distribution (Kittler and Illingworth 1985; Kurita et al. 1992; Cao et al. 2021). However, if the data has a high variance, then the threshold value provided by Otsu algorithm is likely to be greater than it should be. In such instances, the improved Otsu technique suggested by Yuan et al. (2015) can be utilized. Hence, the suggested technique examines the distribution of the spectral distance values computed to determine whether to use the original or improved version of the Otsu algorithm for each test site. Since the spectral distances calculated for sites 1 and 2 follow a normal distribution, the original version of the Otsu algorithm is used for these sites. The improved version of the Otsu algorithm is used for site 3, as the spectral distance values calculated for this site do not conform to a normal distribution. The MATLAB script is written to automatically select the appropriate version of the Otsu algorithm based on the distribution of the spectral distance values.

-

The original version of the Otsu algorithm estimates the threshold (\(t\)) as (Otsu 1979; Yuan et al. 2015);

Let the spectral distance data (\(s\)) be divided into two classes as \(c1\) and \(c2\). In this case, the spectral distance values from the minimum (\({s}_{\mathrm{min}}\)) to \(t\) are gathered in \(c1\). Similarly, the spectral distance values that fall between the minimum spectral distance value greater than \(t\) (\({s}_{k}\)) and the maximum (\({s}_{\mathrm{max}}\)) are stored in \(c2\). Let \({n}_{i}\) be the number that shows the frequency of a spectral distance value \(i\) in \(s\). The probability of occurrence (\({p}_{i}\)) of the spectral distance values (\({n}_{i}\)) is calculated as;

where \(n\) is the total number of buildings in the test site. The \({P}_{0}\left(t\right)\) and \({P}_{1}\left(t\right)\) cumulative probabilities for the classes \(c1\) and \(c2\) are calculated as;

The mean value for each class is computed as;

The between-class variance (\({\sigma }_{b}^{2}\)) for the threshold \(t\) is computed as;

The final threshold (\(T\)) value is determined as;

-

The improved version of the Otsu algorithm estimates the threshold (\(t\)) as (Yuan et al. 2015);where \(\omega\) is a weighting factor that ranges between 0 and 1. Yuan et al. (2015) recommended setting \(\omega\) to \({P}_{0}\left(t\right)\).

$${\sigma }_{b}^{2}\left(t\right)={\omega P}_{0}\left(t\right){\left({\mu }_{0}\left(t\right)\right)}^{2}+{P}_{1}\left(t\right){\left({\mu }_{1}\left(t\right)\right)}^{2}$$(12)

Several previous studies have enhanced the performance of the Otsu algorithm through the use of metaheuristic optimization algorithms (Hamdaoui et al. 2015; Pruthi and Gupta 2016; Shahabi et al. 2020). Hence, the proposed method optimizes the threshold value obtained by the Otsu algorithm using the Jaya (Rao 2016), one of the most recent powerful metaheuristic optimization algorithms. The Jaya algorithm, which derives its name from the Sanskrit word for ‘victory’, operates on the premise that a potential solution to a problem iteratively progresses towards the optimal solution while steering clear of inferior solutions. The Jaya algorithm does not require any algorithm-specific parameters and also can converge very fast, which is why it was preferred in this study. Suppose we have an objective function, \(f(x)\), that needs to be optimized (Eqs. (10) or (12)), where \(x\) is a set of decision variables (i.e., spectral distance values). Let \(i\) denote the current iteration number, \(m\) denote the total number of decision variables (i.e., \(m=1\)), and \(l\) denote the population size (i.e., the number of potential solutions, \(k=\mathrm{1,2},...,l\)). Additionally, let \(b\) and \(w\) represent the best and worst fitness values obtained from the best and worst solutions among all the candidate solutions, respectively. During the \(i\)th iteration, the value of the \(j\)th variable for the \(k\)th solution (\({X}_{j,k,i}\)) is updated as (Rao 2016);

where \({X^{\prime}}_{j,k,i}\) stands for the updated value of \({X}_{j,k,i}\). \({X}_{j,b,i}\) and \({X}_{j,w,i}\) represent the \(j\)th variable of the best and worst candidate solutions, respectively. Additionally, \({r}_{1,j,i}\) and \({r}_{2,j,i}\) denote two random numbers generated between 0 and 1 for the variable \(j\) during the \(i\)th iteration. If the \({X^{\prime}}_{j,k,i}\) yields a better solution than \({X}_{j,k,i}\), it will be retained and passed on to the next iteration.

As a final step, the suggested approach decides whether a group of pixels belongs to a damaged building if;

-

The spectral distance (\(s\)) value of a building is greater than \(T\),

-

And, \({\mathrm{CC}}_{\mathrm{post}}^{\mathrm{VV}-\mathrm{VH}}<{\mathrm{CC}}_{\mathrm{pre}}^{\mathrm{VV}-\mathrm{VH}}\), or \({\mathrm{CC}}_{\mathrm{post}}^{\mathrm{GLCM}}<{\mathrm{CC}}_{\mathrm{pre}}^{\mathrm{GLCM}}\), or \({\mathrm{CC}}_{\mathrm{post}}^{\mathrm{NDBI}}<{\mathrm{CC}}_{\mathrm{pre}}^{\mathrm{NDBI}}\).

The flowchart of the proposed method is shown in Fig. 3.

3 Results and discussion

This section presents the results of the suggested approach qualitatively and quantitatively. Figures 4, 5 and 6 show the detected and reference buildings for comparison.

The quantitative evaluation results obtained from the proposed approach are given in Table 2. As seen in the table, the proposed approach was able to identify 37, 76, and 557 buildings that were damaged due to the earthquake in sites 1, 2, and 3, respectively. In addition, the method also identified 59, 187, and 350 buildings that were undamaged in the same sites. The Omission Error (OE), which indicates the percentage of undamaged buildings that were mistakenly determined as damaged, was calculated to be 15.7%, 6.5%, and 32.5% for sites 1, 2, and 3, respectively. The Commission Error (CE), which represents the percentage of damaged buildings that were mistakenly identified as undamaged, was found to be 35.5%, 31.5%, and 22.8% for the respective sites. The Total Error (TE), which shows the overall percentage of inaccurate identifications, was determined to be 25%, 15.6%, and 26.2% for sites 1, 2, and 3, respectively. The Overall Accuracy (OA), which indicates the overall percentage of correct identifications, was found to be 75%, 84.4%, and 73.8% for the respective sites.

The OEs were found to be relatively low for sites 1 and 2 but were higher for site 3. The difficulty in identifying the damaged buildings in site 3 can be explained by two factors: the density of the buildings and the presence of numerous buildings with different sizes and shapes. The CEs were also found to be lower for site 3, indicating that the method performed better in identifying damaged buildings in site 3 than in the other two sites. The OA of the proposed method was found to be highest in site 2, indicating that the method performed the best in identifying damaged buildings in this site. The OA was found to be lowest in site 3, indicating that the method had the most difficulty in identifying damaged buildings in this site.

The results given in Table 2 demonstrate the potential of the proposed approach. This is a significant finding as it can help in quickly assessing the extent of damage caused by an earthquake, allowing for prompt response and recovery efforts. However, the method also had a high CE, which means that a significant number of damaged buildings were identified as undamaged. This can be attributed to several factors, such as the threshold value obtained by the metaheuristics-based Otsu algorithm, limitations of the satellite images and the complexity of identifying subtle changes in the building structures.

The suggested approach centres on utilizing the dissimilarity in spectral distance between the pre-event and post-event pixel vectors to detect changes in buildings caused by earthquakes. Such an approach leads to several advantages when identifying earthquake-induced changes in buildings. Firstly, it enables the detection of subtle changes in the building pixels, which may not be visible to the naked eye or visible in conventional change detection methods. This is particularly important when detecting small or partial damages that may go unnoticed by other methods. Secondly, it is a quantitative approach that provides an objective and consistent measure of change, which can be used to monitor changes over time and across different spatial scales. Thirdly, this method is flexible and can be applied to different types of satellite imagery, including multispectral, hyperspectral, and radar data, allowing for more comprehensive monitoring and analysis of earthquake-induced changes. Additionally, this method does not require prior knowledge or information about the buildings or the damage, making it suitable for large-scale monitoring and analysis.

The combined use of Sentinel-1 SAR and Sentinel-2 MSI data was found to be partially advantageous in the identification of earthquake-induced changes in buildings. Sentinel-1 SAR images can provide information on the texture changes on the ground surface caused by the earthquake. On the other hand, Sentinel-2 MSI images can provide medium-resolution details of the buildings before and after the earthquake. By combining these two types of data, it was possible to detect and map the changes in the buildings caused by the earthquake with promising accuracy. This can be particularly useful in identifying areas that require immediate attention and in prioritizing rescue and recovery efforts.

The suggested approach utilizes the NDBI images and GLCM variance texture feature to identify changes in buildings induced by earthquakes, resulting in several benefits. The use of these data was found to be useful in identifying subtle changes in the images. Of the GLCM texture features, the variance feature provided the best overall accuracy, which is why it was incorporated in the proposed approach. Another advantage of using the GLCM variance texture feature is its non-destructive nature. The texture feature can be applied to existing images of buildings without causing any damage or alteration to the structure. This means that the analysis can be performed without disturbing the integrity of the buildings in the test sites, which is essential for the preservation of the structure.

To comprehensively examine the contributions of the data types used (i.e., VV, VH, GLCM, and NDBI) to the proposed methodology in the test sites, the histograms of the CCs computed between pairs of pre-event and post-event images for each data type were analysed in building scale. The resulting CC distributions are presented in Fig. 7.

The figure clearly demonstrates that the CCs changed significantly after the earthquake specifically for the GLCM and NDBI image pairs. This indicates that these particular datasets made the most significant contributions to the proposed methodology. Conversely, the CCs for the VV and VH datasets did not show significant changes after the event, indicating that the utilization of Sentinel-1 SAR data had less impact on the performance of the proposed methodology. The use of logical OR operator in the proposed methodology was found to be useful in finding the damaged buildings with at least one of the features used.

4 Conclusion

This study suggested a novel methodology to detect earthquake-induced changes in buildings after the catastrophic February 6 Kahramanmaraş earthquakes, which are among the most severe disasters in the history of Türkiye. The method is based on the use of pre- and post-event Sentinel-1 SAR and Sentinel-2 MSI images. The capacity of the suggested approach was tested on three test sites, which suffered the most extensive damage. The evaluations showed that the suggested method was found to be effective in identifying damaged buildings, achieving an OA of 75%, 84.4%, and 73.8% in the sites 1, 2, and 3, respectively.

One significant benefit of the suggested approach is its ability to identify changes at building scale on images with medium spatial resolution, which sets it apart from several other techniques that detect changes at pixel level. Identifying earthquake-induced changes on pixel basis could make it difficult to determine which buildings require immediate attention for rescue efforts. The suggested method also has the advantage of rapid identification of damaged and undamaged buildings as it focuses solely on image pixels corresponding to building footprints. This differs from other methods that require analysis of all pixels in the images, which can be time-consuming. The proposed technique can detect changes in a few seconds, which is a significant benefit. It also uses a straightforward strategy and does not require any prior knowledge about the study area. This is a significant advantage over many DL-based methods that require large amounts of training data, which can be difficult to acquire in most cases. Another important advantage of the proposed approach is that it uses free satellite images. Free-to-use satellite images make it easier for researchers, emergency responders, and government officials to access the data they need to make informed decisions without incurring significant costs. Free satellite images, on the other hand, often have a higher temporal resolution, meaning they are available more frequently than commercial images. This enables researchers to track changes over time and monitor the progress of recovery efforts. Commercial satellite images can be costly, have restrictive licensing agreements, and may not provide the same level of coverage and temporal resolution as free satellite images. The proposed method offers another crucial benefit, which is the utilization of both Sentinel-1 SAR and Sentinel-2 MSI data to find the damaged buildings following an earthquake. This allows for the exploitation of the regular revisit time of the Sentinel-1 and Sentinel-2 satellites, which is highly advantageous for techniques that rely on at least one reference data collected before the earthquake—similar to the suggested method. The success of the suggested approach could be hindered by adverse atmospheric and radiometric influences. However, in our research, we overcame this obstacle by utilizing images that were atmospherically and radiometrically corrected. Additionally, the effectiveness of the proposed methodology is affected by the pre-processing procedures applied to Sentinel-1 SAR data. To fully optimize the suggested method, it is also essential to co-register the utilized raster data and building footprint data. The advantages and disadvantages of the proposed method are summarized in Table 3.

The suggested method requires up-to-date building footprints as vector data, which may seem like a disadvantage at first glance. However, considering the importance of up-to-date footprint data for land objects in applications like this, it can be concluded that footprint should be provided by relevant institutions not only for emergency management, but also for other applications regarding land management. On the other hand, although the proposed approach provided promising results, further optimization is needed for more complex landscapes. The relatively high OEs and CEs suggest that the method should be further improved to reduce false-positive and false-negative rates. Future work will focus on optimizing the proposed method to increase its accuracy and effectiveness.

References

Abdi G, Esfandiari M, Jabari S (2021) Building damage detection in post-event high-resolution imagery using deep transfer learning. In: 2021 IEEE international geoscience and remote sensing symposium, pp 531–534. https://doi.org/10.1109/IGARSS47720.2021.9553720

Abdi G, Esfandiari M, Jabari S (2022) A deep transfer learning-based damage assessment on post-event very high-resolution orthophotos. Geomatica 75(4):237–250. https://doi.org/10.1139/geomat-2021-0014

Adams BJ, Mansouri B, Huyck CK (2005) Streamlining post-earthquake data collection and damage assessment for the 2003 Bam, Iran, earthquake using VIEWS™ (visualizing impacts of earthquakes with satellites). Earthq Spectra 21(S1):213–218

Alataş EO, Taşkın G (2019) Attribute profiles in earthquake damage identification from very high resolution post event image. In: 2019 IEEE international geoscience and remote sensing symposium, pp 9299–9302. https://doi.org/10.1109/IGARSS.2019.8899308

Alizadeh N, Beirami BA, Mokhtarzade M (2022) Damage detection after the earthquake using sentinel-1 and 2 images and machine learning algorithms (case study: Sarpol-e Zahab earthquake). In: 2022 12th international conference on computer and knowledge engineering (ICCKE), pp 343–347. https://doi.org/10.1109/ICCKE57176.2022.9960127

Al-Khudhairy DHA, Caravaggi I, Giada S (2005) Structural damage assessments from Ikonos data using change detection, object-oriented segmentation, and classification techniques. Photogramm Eng Remote Sens 71(7):825–837. https://doi.org/10.14358/PERS.71.7.825

Bhangale U, Durbha S, Potnis A, Shinde R (2019) Rapid earthquake damage detection using deep learning from VHR remote sensing images. In: IGARSS 2019 ieee international geoscience and remote sensing symposium, pp 2654–2657. https://doi.org/10.1109/IGARSS.2019.8898147

Brunner D, Lemoine G, Bruzzone L (2010) Earthquake damage assessment of buildings using VHR optical and SAR imagery. IEEE Trans Geosci Remote Sens 48(5):2403–2420. https://doi.org/10.1109/TGRS.2009.2038274

Cao Q, Qingge L, Yang P (2021) Performance analysis of Otsu-based thresholding algorithms: a comparative study. J Sens 2021:1–14. https://doi.org/10.1155/2021/4896853

Chen Z, Hutchinson TC (2010) Image-based framework for concrete surface crack monitoring and quantification. Adv Civ Eng 2010:1–18. https://doi.org/10.1155/2010/215295

Chen Z, Hutchinson TC (2011) Structural damage detection using bi-temporal optical satellite images. Int J Remote Sens 32(17):4973–4997. https://doi.org/10.1080/01431161.2010.494632

Chesnel AL, Binet R, Wald L (2008) Urban damage assessment using multimodal QuickBird images and ancillary data: the Bam and the Boumerdes earthquakes. In: 6th international workshop on remote sensing for disaster management applications

Dell'Acqua F, Gamba P, Polli DA (2011) Earthquake damage assessment from post-event VHR radar data: from Sichuan, 2008 to Haiti, 2010. In: 2011 joint urban remote sensing event. pp 201–204. https://doi.org/10.1109/JURSE.2011.5764755.

Dong L, Shan J (2013) A comprehensive review of earthquake-induced building damage detection with remote sensing techniques. ISPRS J Photogramm Remote Sens 84:85–99. https://doi.org/10.1016/j.isprsjprs.2013.06.011

Duarte D, Nex F, Kerle N, Vosselman G (2019) Damage detection on building façades using multi-temporal aerial oblique imagery. ISPRS Ann Photogramm Remote Sens Spat Inf Sci 4:29–36

Ghaffarian S, Kerle N, Pasolli E, Jokar Arsanjani J (2019) Post-disaster building database updating using automated deep learning: an integration of pre-disaster OpenStreetMap and multi-temporal satellite data. Remote Sens 11(20):2427. https://doi.org/10.3390/rs11202427

Gong L, Wang C, Wu F, Zhang J, Zhang H, Li Q (2016) Earthquake-induced building damage detection with post-event sub-meter VHR TerraSAR-X staring spotlight imagery. Remote Sens 8(11):887. https://doi.org/10.3390/rs8110887

Gusella L, Adams BJ, Bitelli GC, Huyck K, Mognol A (2005) Object-oriented image understanding and post-earthquake damage assessment for the 2003 Bam, Iran, earthquake. Earthq Spectra 21(S1):S225–S238

Hamdaoui F, Sakly A, Mtibaa A(2015) An efficient multi level thresholding method for image segmentation based on the hybridization of modified PSO and Otsu’s method. In: Computational intelligence applications in modeling and control, pp 343–367. https://doi.org/10.1007/978-3-319-11017-2_14

Haralick R, Shanmugan K, Dinstein I (1973) Textural features for image classification. IEEE Trans Syst Man Cybern Syst 3(6):610–621. https://doi.org/10.1109/TSMC.1973.4309314

Ishii M, Goto T, Sugiyama T, Saji H, Abe K (2002) Detection of earthquake damaged areas from aerial photographs by using color and edge information. In: 5th Asian conference on computer vision

Janalipour M, Mohammadzadeh A (2019) A novel and automatic framework for producing building damage map using post-event LiDAR data. Int J Disaster Risk Reduct 39:101238. https://doi.org/10.1016/j.ijdrr.2019.101238

Ji M, Liu L, Buchroithner M (2018) Identifying collapsed buildings using post-earthquake satellite imagery and convolutional neural networks: a case study of the 2010 Haiti earthquake. Remote Sens 10(11):1689. https://doi.org/10.3390/rs10111689

Ji M, Liu L, Du R, Buchroithner MF (2019) A comparative study of texture and convolutional neural network features for detecting collapsed buildings after earthquakes using pre- and post-event satellite imagery. Remote Sens 11:1202. https://doi.org/10.3390/rs11101202

Ji M, Liu L, Zhang R, Buchroithner MF (2020) Discrimination of earthquake-induced building destruction from space using a pretrained CNN model. Appl Sci 10:602. https://doi.org/10.3390/app10020602

Kalantar B, Ueda N, Al-Najjar HA, Halin AA (2020) Assessment of convolutional neural network architectures for earthquake-induced building damage detection based on pre-and post-event orthophoto images. Remote Sens 12(21):3529. https://doi.org/10.3390/rs12213529

Kaya GT, Musaoğlu N, Ersoy OK (2011) Damage assessment of 2010 Haiti earthquake with post-earthquake satellite image by support vector selection and adaptation. Photogramm Eng Remote Sens 77(10):1025–1035

Kerle N, Nex F, Gerke M, Duarte D, Vetrivel A (2019) UAV-based structural damage mapping: a review. ISPRS Int J Geo-Inf 9(1):14. https://doi.org/10.3390/ijgi9010014

Khodaverdizahraee N, Rastiveis H, Jouybari A (2020) Segment-by-segment comparison technique for earthquake-induced building damage map generation using satellite imagery. Int J Disaster Risk Reduct 46:101505. https://doi.org/10.1016/j.ijdrr.2020.101505

Kittler J, Illingworth J (1985) On threshold selection using clustering criteria. IEEE Trans Syst Man Cybern Syst 5:652–655. https://doi.org/10.1109/TSMC.1985.6313443

Klonus S, Tomowski D, Ehlers M, Reinartz P, Michel U (2012) Combined edge segment texture analysis for the detection of damaged buildings in crisis areas. IEEE J Sel Top Appl Earth Obs Remote Sens 5(4):1118–1128. https://doi.org/10.1109/JSTARS.2012.2205559

Kohiyama M, Yamazaki F (2005) Damage detection for 2003 Bam, Iran earthquake using Terra-ASTER satellite imagery. Earthq Spectra 21(S1):267–274. https://doi.org/10.1193/1.2098947

Koukouraki E, Vanneschi L, Painho M (2021) Few-shot learning for post-earthquake urban damage detection. Remote Sens 14(1):40. https://doi.org/10.3390/rs14010040

Kurita T, Otsu N, Abdelmalek N (1992) Maximum likelihood thresholding based on population mixture models. Pattern Recognit 25(10):1231–1240. https://doi.org/10.1016/0031-3203(92)90024-D

Lee JS, Wen JH, Ainsworth TL, Chen KS, Chen AJ (2008) Improved sigma filter for speckle filtering of SAR imagery. IEEE Trans Geosci Remote Sens 47:202–213. https://doi.org/10.1109/TGRS.2008.2002881

Li X, Yang W, Ao T, Li H, Chen W (2011) An improved approach of information extraction for earthquake-damaged buildings using high-resolution imagery. J Earthq Tsunami 5(04):389–399. https://doi.org/10.1142/S1793431111001157

Li Y, Ye S, Bartoli I (2018) Semisupervised classification of hurricane damage from postevent aerial imagery using deep learning. J Appl Remote Sens 12:1–13. https://doi.org/10.1117/1.JRS.12.045008

Li Y, Hu W, Dong H, Zhang X (2019) Building damage detection from post-event aerial imagery using single shot multibox detector. Appl Sci 9(6):1128. https://doi.org/10.3390/app9061128

Menderes A, Erener A, Sarp G (2015) Automatic detection of damaged buildings after earthquake hazard by using remote sensing and information technologies. Procedia Earth Planet Sci 15:257–262. https://doi.org/10.1016/j.proeps.2015.08.063

Miura H, Aridome T, Matsuoka M (2020) Deep learning-based identification of collapsed, non-collapsed and blue tarp-covered buildings from post-disaster aerial images. Remote Sens 12(12):1924. https://doi.org/10.3390/rs12121924

Mohammadi ME, Watson DP, Wood RL (2019) Deep learning-based damage detection from aerial SfM point clouds. Drones 3(3):68. https://doi.org/10.3390/drones3030068

Nex F, Duarte D, Tonolo FG, Kerle N (2019) Structural building damage detection with deep learning: assessment of a state-of-the-art CNN in operational conditions. Remote Sens 11(23):2765. https://doi.org/10.3390/rs11232765

Nie Y, Zeng Q, Zhang H, Wang Q (2021) Building damage detection based on OPCE matching algorithm using a single post-event PolSAR data. Remote Sens 13(6):1146. https://doi.org/10.3390/rs13061146

Ogawa N, Yamazaki F (2000) Photo-interpretation of building damage due to earthquakes using aerial photographs. In: 12th world conference on earthquake engineering, no 1906

Oommen T, Rebbapragada U, Cerminaro D (2012) Earthquake damage assessment using objective image segmentation: a case study of 2010 Haiti earthquake. In: GeoCongress 2012: state of the art and practice in geotechnical engineering, pp 3069–3078. https://doi.org/10.1061/9780784412121.314

Otsu N (1979) A thresholding selection method from gray-level histograms. IEEE Trans Syst Man Cybern SMC 9(1):62–66. https://doi.org/10.1109/TSMC.1979.4310076

Pruthi J, Gupta G (2016) Image segmentation using genetic algorithm and OTSU. In: 5th international conference on soft computing for problem solving: SocProS 2015, pp 473–480

Rao RV (2016) Jaya: a simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int J Ind Eng Comput 7(1):19–34. https://doi.org/10.5267/j.ijiec.2015.8.004

Saito K, Spence RJ (2005) Visual damage assessment using high-resolution satellite images following the 2003 Bam, Iran, earthquake. Earthq Spectra 21(S1):309–318. https://doi.org/10.1193/1.2101107

Saito K, Spence RJ, Going C, Markus M (2004) Using high-resolution satellite images for post-earthquake building damage assessment: a study following the 26 January 2001 Gujarat earthquake. Earthq Spectra 20(1):145–170. https://doi.org/10.1193/1.1650865

Sakamoto M, Takasago Y, Uto K, Kakumoto S, Kosugi Y, Doihara T (2004) Automatic detection of damaged area of Iran earthquake by high-resolution satellite imagery. In: IEEE international geoscience and remote sensing symposium, pp 1418–1421. https://doi.org/10.1109/IGARSS.2004.1368685

Shahabi F, Poorahangaryan F, Edalatpanah SA, Beheshti H (2020) A multilevel image thresholding approach based on crow search algorithm and Otsu method. Int J Comput Intell Appl 19(02):2050015. https://doi.org/10.1142/S1469026820500157

Sumer E, Turker M (2005) Building damage detection from post-earthquake aerial imagery using building grey-value and gradient orientation analyses. In: 2nd international conference on recent advances in space technologies, pp 577–582. https://doi.org/10.1109/RAST.2005.1512634

Sumer E, Turker M (2008) Building-based damage detection due to earthquake using the watershed segmentation of the post-event aerial images. Int J Remote Sens 29(11):3073–3089. https://doi.org/10.1080/01431160701442096

Takhtkeshha N, Mohammadzadeh A, Salehi B (2023) A rapid self-supervised deep-learning-based method for post-earthquake damage detection using UAV data (case study: Sarpol-e Zahab, Iran). Remote Sens 15(1):123. https://doi.org/10.3390/rs15010123

Trinder JC, Salah M (2012) Aerial images and LiDAR data fusion for disaster change detection. ISPRS Ann Photogramm Remote Sens Spat Inf Sci 1:227–232. https://doi.org/10.5194/isprsannals-I-4-227-2012

Turker M, Cetinkaya B (2005) Automatic detection of earthquake-damaged buildings using DEMs created from pre-and post-earthquake stereo aerial photographs. Int J Remote Sens 26(4):823–832. https://doi.org/10.1080/01431160512331316810

Wu C, Zhang F, Xia J, Xu Y, Li G, Xie J, Du Z, Liu R (2021) Building damage detection using U-Net with attention mechanism from pre-and post-disaster remote sensing datasets. Remote Sens 13(5):905. https://doi.org/10.3390/rs13050905

Xu JZ, Lu W, Li Z, Khaitan P, Zaytseva V (2019) Building damage detection in satellite imagery using convolutional neural networks. arXiv preprint arXiv:1910.06444. https://doi.org/10.48550/arXiv.1910.06444

Yamazaki F, Kouchi KI, Matsuoka M, Kohiyama M, Muraoka N (2004) Damage detection from high-resolution satellite images for the 2003 Boumerdes, Algeria earthquake. In: 13th world conference on earthquake engineering, international association for earthquake engineering

Yamazaki F, Yano Y, Matsuoka M (2005) Visual damage interpretation of buildings in Bam city using QuickBird images following the 2003 Bam, Iran earthquake. Earthq Spectra 21(S1):329–336. https://doi.org/10.1193/1.2101807

Yamazaki F, Suzuki D, Maruyama Y (2008) Detection of damages due to earthquakes using digital aerial images. In: 6th international workshop on remote sensing for disaster applications

Ye X, Qin Q, Liu M, Wang J, Wang J (2014) Building damage detection from post-quake remote sensing image based on fuzzy reasoning. In: 2014 IEEE geoscience and remote sensing symposium, pp 529–532. https://doi.org/10.1109/IGARSS.2014.6946476

Yuan XC, Wu LS, Peng Q (2015) An improved Otsu method using the weighted object variance for defect detection. Appl Surf Sci 349:472–484. https://doi.org/10.1016/j.apsusc.2015.05.033

Yusuf Y, Matsuoka M, Yamazaki F (2001) Damage assessment after 2001 Gujarat earthquake using Landsat-7 satellite images. J Indian Soc Remote Sens 29(1):233–239. https://doi.org/10.1007/BF02989909

Zha Y, Gao J, Ni S (2003) Use of normalized difference built-up index in automatically mapping urban areas from TM imagery. Int J Remote Sens 24(3):583–594. https://doi.org/10.1080/01431160304987

Zhan Y, Liu W, Maruyama Y (2022) Damaged building extraction using modified Mask R-CNN model using post-event aerial images of the 2016 Kumamoto earthquake. Remote Sens 14(4):1002. https://doi.org/10.3390/rs14041002

Zhang Y (1999) Optimisation of building detection in satellite images by combining multi-spectral classification and texture filtering. J Photogramm Remote Sens 50:50–60. https://doi.org/10.1016/S0924-2716(98)00027-6

Zhang JF, Xie LL, Tao XX (2003) Change detection of remote sensing image for earthquake damaged buildings and its application in seismic disaster assessment. In: 2003 IEEE international geoscience and remote sensing symposium, pp 2436–2438

Zhu XX, Tuia D, Mou L, Xia GS, Zhang L, Xu F, Fraundorfer F (2017) Deep learning in remote sensing: a comprehensive review and list of resources. IEEE Geosci Remote Sens Mag 5(4):8–36. https://doi.org/10.1109/MGRS.2017.2762307

Acknowledgements

The Sentinel-1/2 images used in this work were obtained from the GEE. The use of Sentinel data in the GEE is governed by the Copernicus Sentinel Data Terms and Conditions. We would also like to express our sincere gratitude to Planet Labs for providing the Planet data used in the current study. Finally, we would like to thank the reviewers for their constructive comments and suggestions.

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Contributions

CSY, VY and KT developed the idea. CSY and VY wrote the scripts for the proposed methodology, analysed the data and prepared the manuscript. KT and NSOA contributed by reviewing and editing.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Consent for publication

The authors declare that the manuscript is original work and has not been published before and is not submitted for publication elsewhere.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Serifoglu Yilmaz, C., Yilmaz, V., Tansey, K. et al. Automated detection of damaged buildings in post-disaster scenarios: a case study of Kahramanmaraş (Türkiye) earthquakes on February 6, 2023. Nat Hazards 119, 1247–1271 (2023). https://doi.org/10.1007/s11069-023-06154-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11069-023-06154-z