Abstract

Most losses from earthquakes are associated with fully collapsed buildings. So, determining the seismic risk of buildings is essential for building occupants in active earthquake zones. Unfortunately, current methods used to estimate the risk state of large building stocks are insufficient for reliable, fast, and accurate decision-making. In addition, the risk classifications of buildings after major natural disasters depend entirely on the experience of the technical team of engineers. Therefore, the decision on risk distributions of building stocks before and after hazards requires more sustainable and accurate methods that include other means of technological advancement. In this study, the building characteristics dominating the seismic risk outcome were determined using a database of 543 masonry buildings. Later, for the first time in the literature, a new, fast and accurate seismic evaluation method is proposed. The proposed method is thoroughly associated with detailed evaluation results of structures with the help of machine learning algorithms. This study utilized an approach in which six machine learning algorithms work together (i.e., Logistic Regression, Decision Tree, Random Forest, K-Mean Clustering, Support Vector Machine, and Ensemble Learning Method). As a result of the analysis of these algorithms, the correct prediction rates for the learning database (i.e., 434 buildings) and the test database (i.e., 109 buildings) of the proposed method were determined as approximately 96.67% and 95%, respectively. Lastly, machine learning algorithms trained by structures with known after seismic risk results are developed. The proposed method managed to classify risk states with the accuracy of 84.6%.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The risk of heavy damage or failure of buildings during a seismic event is a significant concern for communities, as the affected area is generally large. For this reason, determining the earthquake risk of structures has become an essential issue among structural engineers (FEMA356). Most losses from earthquakes are associated with the local or total collapse of buildings. In this context, continuous assessment and monitoring of buildings’ seismic safety and vulnerability are challenging, especially when extensive area assessments are required. In addition, examining structural cracks or determining the wall type in a masonry building damaged due to earthquake events is a very dangerous task for site engineers. For this reason, it has become possible to determine the seismic risk of buildings by observations that can be made from outside the building. But, the construction industry is one of the slowest professions to adapt to new technologies, and this situation needs to change. In this study, a contemporary method was proposed to accurately predict the seismic performance of buildings with the help of machine learning algorithms eliminating the need for any technical personnel to enter the building.

The methodologies designed to determine the seismic risk of individual buildings are well matured. However, they necessitate the use of complex analysis tools along with detailed inputs like material testing, plan drawings, quality of material, etc. (FEMA2456, Eurocode 6, TEC2018, GABHR 2019; Dejong 2009; Aldemir et al. 2013; Penna et al. 2014; Beyer et al. 2014; Penna 2015 and Ahmad and Ali 2017). These detailed seismic assessment techniques become dysfunctional if the number of concerned buildings reaches thousands or more. This is mainly because the inputs and procedures take significant time, manpower of experienced engineers, and computational power. Therefore, these methods could not be employed when the seismic risk of the large building stock is aimed to be determined. Consequently, the current state-of-the-art on detailed seismic assessment of structures is limited by human and infrastructure resources. Therefore, Sozen (2014) stated that the seismic risk assessment of building inventories could only be accomplished by changing the strategy from seeking safety to filtering out vulnerable buildings from the large building stock (i.e., low-pass filtering). Thus, a versatile and accurate method should be formulated to enable decisions to be made using inexpensively acquired building data and the evaluation process to be implemented quickly (Sozen 2014).

Although several researchers have tried to generate simple methods to assess the seismic risk of reinforced concrete (RC) structures (Yakut 2004; Yucemen and Ozcebe 2004; Askan and Yucemen 2010; Maziliguney et al. 2012; Al-Nimry et al. 2015; Perrone et al. 2015; Kumar et al. 2017; FEMA P154; Coskun et al. 2020; Harirchian et al. 2020a; b); the literature shows a limited number of efforts to propose rapid screening (or filtering) methods applicable to unreinforced masonry (URM) building stocks (GABHR 2019; D’Ayala 2013; Shah et al. 2016; Achs and Adam 2012; Grünthal 1998; Achs 2011; Rajarathnam and Santhakumar 2015; Aldemir et al. 2020). D’Ayala (2013) attempted to correlate damage states with fragility curves to determine the seismic vulnerability of masonry structures. However, this method requires the fragility curve for the location of the building, which reduces the applicability of this process, as fragility curves are scarce in number. Shah et al. (2016) used a building classification with respect to the masonry material used, the state of the building, construction quality, building shape irregularity, and the level of earthquake-resistant design. They used the European Macroseismic Scale (Grünthal 1998) and applied defined vulnerability classes (A–F) to determine the risk level of masonry structures. However, the outcome of this method still lacked correlation with actual performance. In another approach, Achs and Adams (2012) proposed a rapid visual screening method that used penalty scores for structural parameters, including seismic hazard, regularity in the plan, regularity in elevation, horizontal stiffness, local failure, secondary structures, soil condition, foundation, and state of preservation. The penalty scores were derived from comprehensive preliminary in situ inspections and measurements of Viennese brick masonry buildings (Achs 2011). Rajarathnam and Santhakumar (2015) used aerial photographs on a geographic information system (GIS) platform to accelerate rapid visual screening. However, none of these methods is based on a large database of masonry structures with detailed seismic assessment results. In other words, all previous methods lack a correlation between rapid screening scores and detailed analysis results. Aldemir et al. (2020) proposed a new rapid visual screening method applicable to masonry structures. They aimed to increase the accuracy of the seismic risk estimation by correlating the rapid visual screening scores with the detailed seismic risk analysis results. To this end, they generated a linear relationship between the risk and the considered parameters. They concluded that this approach resulted in a promising method with some accuracy problems in predicting the test database. The complex relationships between the seismic risk estimations and the selected parameters could be resolved by implementing machine learning algorithms. Similarly, researchers have recently given some effort to incorporate the machine learning algorithms 1- to find a better relationship between the observed damage and seismic events (Mangalathu et al. 2020 and Zhang et al. 2018); 2- to propose methods to predict the fragility curves (Kiani et al. 2019; Ruggieri et al. 2021); 3- to estimate the seismic risk (Zhang et al. 2019; Harirchian et al. 2020a; b). However, none of these studies proposed a versatile seismic risk filtering method for masonry structures incorporating machine learning algorithms. In addition, some recent studies tried to propose contemporary strategies to efficiently evaluate the seismic performance of structures (Javidan and Kim 2022a; b).

Several studies in the literature have succeeded in creating damage maps using unmanned aerial vehicles for post-earthquake damage assessment (Wang et al. 2021; Cooner et al. 2016; Li et al. 2018; Xu et al. 2019; Xu et al. 2018; Sublime and Kalinicheva 2019; Kerle et al. 2020; Stepinac et al. 2020; etc.). These studies have also evolved into studies that include post-earthquake permanent displacement estimations in order to derive new algorithms to accelerate data processing time (Li et al. 2011) and to classify post-earthquake damages (Wang et al. 2020). Finally, studies are carried out to determine the physical properties of buildings and their structural deficiencies, such as soft floors, using unmanned aerial vehicle photographs (Yu et al. 2020). However, none of these studies was designed to be applied before disasters in order to facilitate pre-hazard applications (i.e., to increase preparedness). In addition, machine learning methods have been developed for the estimation of structural systems using photographic data. Geiß et al. (2015) showed in their research that structural systems (masonry, confined masonry, reinforced concrete frame, steel frame, etc.) could be predicted with a high success rate by machine learning methods. In their methods, random forest and support vector machine algorithms are used.

Therefore, this study focused on developing a simple, rapid visual screening method to predict the damage level of masonry buildings using machine learning algorithms. The parameters required for the procedure were aimed to be collectible without the need for the entrance of technical personnel into the risky building. The parameters that could be determined externally are specified as follows:

• Number of Stories (NS)

• Floor system type (FT)

• Visual damage (VD)

• Wall material type (WT)

• Typical story height (TY)

• Vertical irregularity (VI)

• Typical plan area (TA)

• Earthquake zone (EZ)

It should be stated that the age of the structure is not included as an independent parameter in this proposed network. However, the selected parameters managed to correlate the existing physical and mechanical properties with the risk state. Although the age of the building is an important parameter, the visual damage parameter has a significant correlation with the age of the building. Therefore, the proposed network has high accuracy in estimating the risk state. In other words, this study aimed to develop a calculation network based on the properties of any structures from external observations. This network was trained with the known detailed seismic risk analysis results. To this end, machine learning algorithms were trained with a large stock of buildings whose seismic risk analysis results were available (i.e., 543 different real buildings with seismic risk assessment analyses). Then, the seismic risk analysis estimation performance of this algorithm was tested with untrained buildings (i.e., 109 different real buildings with seismic risk assessment analyses). The formed machine learning algorithm estimates the risk and damage level of the analyzed structure during a possible earthquake event.

2 Definition of the URM building database

The database used within the scope of this study was obtained from the Risky Buildings Department of the Ministry of Environment and Urbanization. The earthquake risk analyses of the buildings in the database were determined by the detailed seismic risk analysis calculation method included in the provisions of the Urban Transformation Law No. 6306 (GABHR 2012) or Turkish Earthquake Code (TEC 2007). In this context, the plan drawings, material strengths, etc., of all buildings were available, along with all the physical properties. Therefore, the necessary technical analysis has been done on these structures to train machine learning algorithms. The selected parameters are presented in Table 1. Before using this raw database, data engineering was performed to filter out unnecessary or misleading information by deleting null values, categorizing the selected parameters, etc. In addition, the distribution of parameters is given in Fig. 1. It is known that masonry structures are commonly constructed to have less than four stories in Turkey. However, in some regions of Turkey, the seismicity is low, promoting the use of masonry structures up to 8 stories. In addition, the number of these exceptional cases is low. Thus, the selected database is intentionally formed to have a limited number of masonry buildings with more than four stories (only %25 of the entire database).

The detailed seismic assessment analysis of buildings was performed as per GABHR (2012). In the numerical models, all piers were simulated using 2-node 3-D frame elements, whereas all slabs were modeled with 4-node thin shell elements. In the numerical models, the modulus of elasticity was calculated using the expression (i.e., 200 fm) given in GABHR (2012). In each story, a rigid diaphragm was defined, provided that a reinforced concrete slab existed. After that, a response spectrum analysis was performed under the effect of a reduced design spectrum (i.e., R = 2). The analysis was performed for two orthogonal directions separately. During the response spectrum analysis, 95% of mass participation was satisfied in each orthogonal direction. In the detailed seismic assessment analysis, the performance limits for slab elements were not calculated. The performance of each pier was determined as Minimum Damage (MD) provided that the pier had enough capacity to resist the reduced design spectrum and gravity demands. On the contrary, the performance of each pier was classified as Collapse Damage (CD) if the pier did not have enough capacity to resist the reduced design spectrum and gravity demands. The capacity of each pier was estimated by considering all the failure modes given in TEC (2018) (i.e., diagonal tension and base sliding). Also, the axial load demands were compared with the axial load capacities of each pier. In these calculations, a correction was made depending on the slenderness ratio. The correction factor was taken as 1 for slenderness ratios less than 6, 0.8 for slenderness ratios between 6 and 10, 0.7 for slenderness ratios between 10 and 15, and 0.5 for slenderness ratios greater than 15. If the pier was found to have less axial load capacity than the demand, it would be classified as Collapse Damage (CD). The performance of each masonry building was claimed to be satisfying the life safety performance level provided that less than 50% of the total base shear at the first story is resisted by masonry piers with a Collapse Damage (CD) performance level.

3 Correlation of parameters with detailed seismic assessment analysis results

Detailed seismic assessment analysis of buildings is critical in determining the behavior of the building during seismic events. For this reason, detailed seismic assessment analysis is generally required for the safety check of all buildings over 20 years old and located in close proximity to earthquake zones. On the contrary, rapid screening methods should be used in countries where the filtering of risky buildings is aimed to be performed to take action about retrofitting operations. Therefore, this filtering operation is vital as structures at risk of collapse should be strengthened immediately, or new earthquake-resistant structures should be built instead of these structures. However, current rapid screening methods in the literature have a minimal correlation with the detailed assessment analysis results as none of the methods was calibrated with the detailed analysis results. Thus, it is difficult to depend on the risk estimations of the rapid screening methods while taking actions at the seismic risk mitigation level. Consequently, in this study, it was aimed to form a network to correlate the seismic risk with the properties of buildings. For this reason, it was important to determine the most influential variables to be used in the detection of risky or non-risky structures in correlation with the seismic risk results from the detailed analysis. This operation could yield to dissociate the unnecessary parameters from the network and reduce the bias. The correlation of the variables in the available data set with the class (i.e., the risk state from the detailed analysis, RS) is shown below (Table 2 and Fig. 2). In addition, the relationships between parameters, i.e., visual damage, vertical irregularity, number of stories and typical plan area, and the detailed seismic assessment analysis results are shown in Fig. 3. In Fig. 3, it is apparent that the risk state probability has a robust correlation with the increasing values of story number, vertical irregularity, and visual damage. In addition, total floor area has some limited correlation with the risk state determined from detailed analysis.

In Table 2, the correlation coefficients were calculated using “grouping and mean value determination processes” proposed by McKinney (2011). Grouping and mean value determination processes are used to group the subject data according to some identifiers, to examine the effect of input parameters on the output, to combine these data, or to transform data. This process is called learning through groups (McKinney 2011). In this method, only one independent variable is selected in each case (i.e., vertical irregularities), and the dependent variable is always the state of risk (i.e., 0 or 1). Then, the correlation coefficient for each subcategory in each independent variable is calculated by dividing the number of risky structures with subcategory i to the total number of risky structures. In Table 2, values above 0.5 contribute to the risk of the building, while values below 0.5 contribute to the non-risk of the building. For example, if there is vertical irregularity (VI = 1), the RS value will increase to 0.899, indicating that the building contributes to its risky nature. If there is no vertical irregularity (VI = 0), the RS value will decrease by 0.678, contributing less to the building’s risk. Since vertical irregularity in structures is an undesirable situation as it adversely affects load transfer, this obtained result could be claimed to be reasonable. When the heat map is examined (Fig. 2), the parameters with the highest correlation that affect the detailed seismic assessment analysis result are vertical irregularity (VI), visual damage (VD), and the number of floors (NF). The correlation of these parameters is also technically rational. Because the likelihood that the structure becomes risky for seismic disturbances will increase if the structure is damaged. Likewise, as the number of floors increases, the horizontal drift demands of the building will increase inherently, which increases the seismic risk. Besides, the presence of structural cracks and vertical irregularities in the structure (i.e., VD = 1, VI = 1) causes the risk state (RS) to converge to 1. In contrast, the absence of structural damages (VD = 0, VI = 0) causes the RS to converge to 0.

In order to further investigate the selected parameters from different perspectives, another graph representing the relation between buildings with vertical irregularities and visual damage is plotted (Fig. 4a). From Fig. 4a, it could be inferred that vertical irregularity (VI = 1) is observed more in risky and structurally undamaged structures (i.e., RS = 1 and VD = 0) than in non-risky and structurally undamaged structures (RS = 0 and VD = 0). This leads to the conclusion that vertical irregularity and visual damage correlate well with each other. Figure 4b shows the distributions of the risk states of buildings, the number of stories, and visual damage parameters and their interrelationships. The structures with no structural damage and no risk (i.e., VD = 0 and RS = 0) in the data set are usually one story. Therefore, it is understood that the probability of single-story masonry buildings being non-risky is high, and this situation may change toward risky as the number of floors increases. Higher lateral displacement in high-rise buildings may be the reason for this situation.

4 Machine learning algorithms

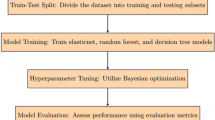

Machine learning (ML) is concerned with the ability of data-driven models to learn information about a system from directly observed data without predetermining the mechanical relationships. ML algorithms can adaptively improve their performance with each new data sample, update their differentiable weights according to the new data and discover relationships in complex heterogeneous and high-dimensional data (Shaikhina et al. 2019). In this study, instead of sticking to a single method, it was preferred to use multiple machine learning methods (i.e., ensemble learning) in order to achieve the highest success percentage. As part of ensemble learning, different supervised machine learning algorithms were utilized since supervised machine learning algorithms rely on labeled input data to learn a function that produces an appropriate output when given new unlabeled data (Fig. 5a). Logistic regression (Peng et al. 2002); decision tree classifier (Kotsiantis et al. 2007); random forest classifier (Shaikhina et al. 2019); support vector machine (SVM) classifier (Widodo and Yang 2007); and K-neighbors classifier (Imandoust and Bolandraftar 2013) are used to predict building damage levels. To this end, the dataset was divided into training and test datasets first. Then, the statistical measures, like the correlation of parameters, are determined along with the feature engineering operations, i.e., categorical variable definitions. Then, the ensemble learning algorithms were codified to perform the necessary learning operations. Finally, the performance of the ML network on the estimation of the risk state was checked with the test database (Fig. 5b).

5 Performance metrics

The seismic risk distribution of a large building stock was aimed to be accurately estimated using the aforementioned machine learning algorithms. In all networks, the risk state from the machine learning algorithm was taken as risky (non-risky) if the risk score became 1 (0). In this part, the performances of the used algorithms will be evaluated not only by the success percentage but also by the metrics like true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). These metrics were transformed into precision, recall, and the combined measure (i.e., Fmeasure) given in Eqs. (1–3) (Saito and Rehmsmeier 2015).

The utilized data set consisted of 543 buildings and was analyzed using multiple machine learning algorithms. All the calculations were made in the Jupyter environment (i.e., formally known as IPython). Initially, the dataset was divided into 434 trains and 49 tests with the “Train Test Split” function from the “Sklearn model selection” library. Next, another dataset with additional 60 buildings excluded from the initial stage was formed for the second test stage. In other words, the total training dataset (i.e., the training dataset plus validation dataset) was equal to 483 buildings, whereas the test dataset comprised 60 buildings. In addition, K-fold cross-validation was applied at the validation stage, which prevents the trained data set from being overfitted by the algorithm. As explained before, logistic regression, decision tree classifier, random forest classifier, support vector machine (SVM) classifier, and K-neighbors classifier were all used in this study. The accuracy of each method is presented in Table 3. In addition, the confusion matrices are summarized in Table 4.

It should be noted that it is essential to choose the correct hyperparameters of the logistic regression to increase the percentage of accuracy. There were many hyperparameters for each model, so an excellent way to identify the best set of hyperparameters was to try different combinations and compare the results. The penalty value of l2 is chosen because l2 provides a better prediction when the output variable is a function of all input properties. The coefficient value, on the other hand, was chosen as one of the values providing the highest percentage of success in the graph in Fig. 6a. In the decision tree algorithm, there are many parameters that affect the success percentage. Of these, one of the values “maximum depth”, providing the highest percentage of success, was selected from Fig. 6b. In the random forest algorithm, there are many parameters that affect the success percentage. Of these, “minimum samples split” was chosen as one of the values that provide the high success percentage in the graph in Fig. 6c. It should be noted that this algorithm has many parameters that affect the results. While applying the KNN algorithm in this study, the “number of data points: k” parameter is one of the most important parameters in increasing the success percentage. Therefore, it was clear from Fig. 6d that the worst neighbor values for the dataset were k < 2, 3 < k < 4, and k > 13. In this case, these values should be avoided in choosing the k value. It has been observed that all values do not change the percentage of success in the penalty parameter selection of the Support Vector Machine Classifier algorithm (Fig. 6e). In this study, the optimum values required to increase the success percentage of each algorithm were determined by the grid search method.

Finally, the number of possible outcomes of the variable RS that lead to the most accurate estimations was investigated. To this end, the Euclidian distances were plotted against the data points (i.e., dendrogram). The least number of possible outcomes could be determined by counting the least number of intersecting points with any possible horizontal lines drawn on the dendrogram. For the risk state variable, this number equaled two (Fig. 7). Therefore, this cross-check also verified the validity of the selected possible outcomes of the RS variable (i.e., risky or non-risky). In other words, the formed machine learning network could only distinguish between the risky and non-risky buildings. It could not classify the buildings according to their possible damage rates like minor damage, moderate damage, or collapse.

In Ensemble Learning, voting is one of the simplest ways of combining the predictions from multiple machine learning algorithms. In this study, a single machine learning algorithm was not used to estimate the seismic analysis result. By using more than one machine learning algorithm, the majority of their predictions are based on votes. For this method, “hard” or “soft” voting can be done. Here, the algorithms give the seismic analysis result with two options: 1–0 (hard) or percentage ratio estimates (soft). In the proposed methodology, it was decided to use hard voting for the data set. Components of the ensemble learning, performance results, and schematic representations are shown in Figs. 8 and 9. In addition, the success rate of this machine learning algorithm is shown in Table 5. In Fig. 9, the mean accuracy is obtained by taking the mean accuracy across k-folds for each algorithm.

6 Performance of the proposed method with damage observations after dinar EQ and elazig-kovancilar EQ in Turkey

In this part, the risk status estimations of the buildings whose actual damages after real earthquakes in Turkey were compared. For this purpose, the Dinar earthquake (ML = 6.1) and the Elazig-Kovancilar earthquake (Mw = 6.1) were utilized. During the Dinar EQ, it was reported that 2,043 buildings were completely destroyed, and approximately 4,500 buildings were severely damaged (EERI 1995). It was also reported that 2,549 buildings collapsed, and approximately 50 buildings were severely damaged (Akkar et al. 2011). The pseudo-spectral accelerations in the constant acceleration region for the Dinar EQ and the Elazig-Kovancilar EQ were reported as 0.90 g and 0.82 g, respectively. To test the prediction performance of the machine learning algorithms proposed in this study, 13 buildings (5 buildings from the Elazig-Kovancilar EQ and eight buildings from the Dinar EQ) were used. Details on risk estimates are presented in Tables 6,7. From Tables 6,7, it could be concluded that the seismic risk status of 11 out of 13 buildings was correctly estimated by the proposed method. In summary, the machine learning algorithm gave correct results by estimating six undamaged structures as non-risky and five damaged structures as risky. But, it failed to classify two damaged structures as non-risky (i.e., Building 1 in Table 6 and Building 3 in Table 8). With these results, the proposed ML algorithms correctly estimated the damage status of 13 buildings and achieved 84.6% accuracy.

7 Discussion of results

Rapid screening methods in the literature classify the earthquake risk of large building stocks by penalizing the existence of some physical deficiencies of structures. The decision is made depending on these penalty scores, which have no intended or calculated correlation to detailed seismic risk analysis. Therefore, in this proposed approach, new insight was brought to the rapid screening method. To this end, a large database is utilized to correlate the seismic risk analysis results to the rapid screening scores. Therefore, unlike the literature-available rapid screening methods, the proposed method is useful for generating a risk distribution map of large building stocks having a significant correlation with the detailed seismic risk analysis results. Consequently, the proposed machine learning network could be employed while generating seismic risk mitigation systems. The confidence in the proposed machine learning network is enhanced by examining the performance of the proposed network with both post-earthquake reconnaissance and numerical seismic analysis results.

The performance of the proposed network is improved by implementing ensemble learning depending on the hard voting scheme. This manipulation significantly enhanced both the estimation success rate of the training and test database. In addition, the risk state estimation after EQs is aimed to be accurately predicted by the proposed method. To this end, the proposed network’s performance was investigated by comparing its risk state estimations with damage states of buildings after real earthquakes. Thirteen buildings damaged during the Dinar EQ (ML = 6.1) and the Elazig-Kovancilar EQ (Mw = 6.1) were utilized. The risk estimation performance of the proposed network was found to be as large as 84.6%. This observation also increased the confidence in the proposed network.

In Table 5, it is apparent that the false-negative ratio of the proposed model is 3.33%, whereas false-positive ratio is 0% for the test database. However, the observation for the real EQ application of the proposed method is different. The model estimated two damaged structures as non-risky, which could be a drawback in the practical application of this model. Therefore, the false-positive ratio of the proposed model should be improved in order to have a more dependable model.

8 Conclusion

It is essential to determine the most vulnerable buildings and take precautions before major earthquakes hit in order to eliminate the loss of lives. However, the available detailed procedures require too much human power and resources to be possibly applied in large stocks of buildings. In addition, current rapid visual screening methods used to estimate the risk state of large building stocks do not result in reliable and accurate filtering. Therefore, in this study, it was aimed to bring a new perspective to the building seismic risk filtration. To this end, a calculation network based on the properties of any structures from external observations was collected. This network was trained with the known detailed seismic risk analysis results. Then, the seismic risk analysis estimation performance of this algorithm was tested with untrained buildings.

In this study, instead of depending on the estimation of a single method, it was preferred to use ensemble learning in order to achieve the highest success rate. As part of ensemble learning, different supervised machine learning algorithms were utilized. Logistic regression, decision tree classifier, random forest classifier, support vector machine classifier, and K-neighbors classifier are used to predict building damage levels. Then, hard voting was utilized as the outcome of the proposed method was designed to be composed of risky and non-risky buildings (i.e., 1–0). The successful percentage estimations of the proposed ensemble learning for the training and test database were 96.7% and 95.9%, respectively.

The proposed network’s performance was also investigated by comparing real EQ damages. In summary, the proposed ML algorithm gave correct results by classifying six undamaged structures as non-risky and five damaged structures as risky. However, the method failed to estimate the risk state of two damaged structures by defining them as non-risky (i.e., Building 1 in Table 6 and Building 3 in Table 8). Therefore, the proposed ML algorithm achieved 84.6% accuracy. This methodology could serve better, provided that mobile applications or web-based software are designed to enable data entry in the field.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Achs G, Adam C (2012) Rapid seismic evaluation of historic brick-masonry buildings in vienna (austria) based on visual screening. Bull Earthq Eng 10:1833–1856. https://doi.org/10.1007/s10518-012-9376-5

Achs, G (2011) Seismic hazard of historic residential buildings: evaluation, classification and experimental investigations. Ph.D. thesis (in German), Vienna University of Technology

Ahmad N, Ali Q (2017) Displacement-based seismic assessment of masonry buildings for global and local failure mechanisms. Cogent Eng 4:1414576. https://doi.org/10.1080/23311916.2017.1414576

Akkar S, Aldemir A, Askan A, Bakır S, Canbay E, Demirel IO, Erberik MA, Guulerce Z, Gülkan P, Kalkan E, Prakash S, Sandıkkaya MA, Sevilgen V, Ugurhan B, Yenier E (2011) 8 March 2010 elazıg-kovancılar (turkey) earthquake: observations on ground motions and building damage. Seismol Res Lett 82(1):42–58

Aldemir A, Erberik MA, Demirel IO, Sucuoglu H (2013) Seismic performance assessment of unreinforced masonry buildings with a hybrid modeling approach. Earthq Spectra 29(1):33–57

Aldemir A, Guvenir E, Sahmaran M (2020) Rapid screening method for the determination of regional risk distribution of masonry structures. Struct Saf 85:101959

Al-Nimry H, Resheidat M, Qeran S (2015) Rapid assessment for seismic vulnerability of low and medium rise infilled rc frame buildings. Earthq Eng Eng Vib 14:275–293. https://doi.org/10.1007/s11803-015-0023-4

Askan A, Yucemen MS (2010) Probabilistic methods for the estimation of potential seismic damage: application to reinforced concrete buildings in turkey. Struct Saf 32:262–271. https://doi.org/10.1016/j.strusafe.2010.04.001

Beyer K, Petry S, Tondelli M, Paparo A. Towards displacement-based seismic design of modern unreinforced masonry structures. InPerspectives on European Earthquake Engineering and Seismology 2014 (401-428) Springer Cham

Cooner AJ, Shao Y, Campbell JB (2016) Detection of urban damage using remote sensing and machine learning algorithms: revisiting the 2010 Haiti earthquake. Remote Sensing 8:868

Coskun O, Aldemir A, Sahmaran M (2020) Rapid screening method for the determination of seismic vulnerability assessment of RC building stocks. Bull Earthq Eng 18:1401–1416

D’Ayala D. Assessing the seismic vulnerability of masonry buildings. In:Handbook of seismic risk analysis and management of civil infrastructure systems 2013 (pp 334-365) Woodhead publishing.

Dejong, MJ (2009) Seismic assessment strategies for masonry structures. Ph.D. Thesis, Massachusetts Institute of Technology, Boston, USA.

EERI (1995) 1 Ekim 1995 Dinar earthquake engineering report (in Turkish), METU Press.

European committee for standardization (CEN) (2003) Eurocode 6: Design of masonry structures. prEN 1996–1, Brussels, Belgium

Federal Emergency Management Agency (FEMA P154) (2015) Rapid visual screening of buildings for potential seismic hazards: A handbook. Washington, D.C, USA.

Federal Emergency Management Agency (FEMA356) (2000) Prestandard and commentary for the seismic rehabilitation of buildings. Washington, D.C, USA.

GABHR (2019) Guidelines for the assessment of buildings under high risk. Ministry of Environment and Urbanization, Ankara, Turkey.

GABHR (2012) Guidelines for the assessment of buildings under high risk, Ministry of Environment and Urbanization, Government of Republic of Turkey (in Turkish).

Geiß C, Pelizari PA, Marconcini M, Sengara W, Edwards M, Lakes T, Taubenböck H (2015) Estimation of seismic building structural types using multi-sensor remote sensing and machine learning techniques. ISPRS J Photogramm Remote Sens 104:175–188

Grünthal, G., ed. (1998) European macroseismic scale 1998. Cahiers du Centre Européen du Géodymamique et de Séismologie 15 Luxembourg: Centre Européen de Géodynamique et de Séismologie, 99 pps

Harirchian E, Kumari V, Jadhav K, Das RR, Rasulzade S, Lahmer T (2020) A machine learning framework for assessing seismic hazard safety of reinforced concrete buildings. Appl Sci 10(20):7153

Harirchian E, Lahmer T, Kumari V, Jadhav K (2020) Application of support vector machine modeling for the rapid seismic hazard safety evaluation of existing buildings. Energies 13(13):3340

Imandoust SB, Bolandraftar M (2013) Application of k-nearest neighbor (knn) approach for predicting economic events: Theoretical background. Int J Eng Res Appl 3(5):605–610

Javidan MM, Kim J (2022) Fuzzy-based method for efficient seismic performance evaluation of structures with uncertainty. Comput Aided Civil Infrastruct Eng 37(6):781–802

Javidan MM, Kim J (2022b) An integrated system for simplified seismic performance evaluation and life-cycle cost analysis. J Build Eng 45(1):103655

Kerle N, Nex F, Gerke M, Duarte D, Vetrivel A (2020) UAV-based structural damage mapping: a review. Int J Geo Inform 9(1):14

Kiani J, Camp C, Pezeshk S (2019) On the application of machine learning techniques to derive seismic fragility curves. Comput Struct 218(1):108–122

Kotsiantis SB, Zaharakis I, Pintelas P (2007) Supervised machine learning: a review of classification techniques. Emerg Artif Intell Appl Comput Eng 160:3–24

Kumar SA, Rajaram C, Mishra S, Kumar RP, Karnath A (2017) Rapid visual screening of different housing typologies in Himachal Pradesh, India. Nat Hazards 85:1851–1875. https://doi.org/10.1007/s11069-016-2668-3

Li C, Zhang G, Lei T, Gong A (2011) Quick image-processing method of UAV without control points data in earthquake disaster area. Trans Nonfer Metal Soc China 21:523–528

Li LL, Liu XG, Chen QH, Yang S (2018) Building damage assessment from polsar data using texture parameters of statistical model. Comput Geosci 113:115–126

Mangalathu S, Sun H, Nweke CC, Yi Z, Burton HV (2020) Classifying earthquake damage to buildings using machine learning. Earthq Spectra 36(1):183–208

Mazılıgüney L, Yakut A, Kadaş K, Kalem İ. Evaluation of Preliminary Assessment Procedures for Reinforced Concrete School Buildings in Turkey. InTenth International Congress on Advances in Civil Engineering (ACE2012) 2012 (17-19)

Peng CYJ, Lee KL, Ingersoll GM (2002) An introduction to logistic regression analysis and reporting. J Educ Res 96(1):3–14

Penna A (2015) Seismic assessment of existing and strengthened stone-masonry buildings: critical issues and possible strategies. Bull Earthq Eng 13:1051–1071. https://doi.org/10.1007/s10518-014-9659-0

Penna A, Lagomarsino S, Galasco A (2014) A nonlinear macroelement model for the seismic analysis of masonry buildings. Earthq Eng Struct Dynam 43(2):159–179

Perrone D, Aiello MA, Pecce M, Rossi F (2015) Rapid visual screening for seismic evaluation of rc hospital buildings. Structures 3:57–70. https://doi.org/10.1016/j.istruc.2015.03.002

Rajarathnam S, Santhakumar AR (2015) Assessment of seismic building vulnerability based on rapid visual screening technique aided by aerial photographs on a gis platform. Nat Hazards 78:779–802. https://doi.org/10.1007/s11069-014-1382-2

Ruggieri S, Cardellicchio A, Leggieri V, Uva G (2021) Machine-learning based vulnerability analysis of existing buildings. Autom Constr 132(1):103936

Saito T, Rehmsmeier M (2015) The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 10(3):e0118432

Shah MF, Ahmed A, Kegyes-B OK. (2016) A case study using rapid visual screening method to determine the vulnerability of buildings in two districts of Jeddah, Saudi Arabia. In: Proceedings of the 15th international symposium on new technologies for urban safety of mega cities in Asia, Tacloban, Philippines (pp 7-9)

Shaikhina T, Lowe D, Daga S, Briggs D, Higgins R, Khovanova N (2019) Decision tree and random forest models for outcome prediction in antibody incompatible kidney transplantation. Biomed Signal Process Control 52:456–462

Sozen MA (2014) Surrealism in facing the earthquake risk. Springer, Seismic Evaluation and Rehabilitation of Structures

Stepinac M, Gašparović M (2020) A review of emerging technologies for an assessment of safety and seismic vulnerability and damage detection of existing masonry structures. Appl Sci 10:5060

Sublime J, Kalinicheva E (2019) Automatic post-disaster damage mapping using deep-learning techniques for change detection: case study of the Tohoku tsunami. Remote Sens 11:1123

TEC (2007) Turkish Earthquake Code Specification for the Buildings to be Constructed in Disaster Areas. Ministry of Public Works and Settlement, Ankara, Turkey

TEC (2018) Turkish Earthquake Code Specification for structures to be built in disaster areas, Ministry of Environment and Urbanization, Ankara, Turkey.

Wang C, Yu Q, Law KH, McKenna F, Yu SX, Taciroglu E, Zsarnoczay A, Elhaddad W, Cetiner B (2021) Machine learning-based regional scale intelligent modeling of building information for natural hazard risk management. Autom Constr 122(1):103474

Wang X, Wittich CE, Hutchinson TC, Bock Y, Goldberg D, Lo E, Kuester F. (2020) Methodology and validation of UAV-based video analysis approach for tracking earthquake-induced building displacements. J Comput Civil Eng 34 (6)

Widodo A, Yang BS (2007) Support vector machine in machine condition monitoring and fault diagnosis. Mech Syst Signal Process 21(6):2560–2574

Xu ZH, Wu LX, Zhang ZX (2018) Use of active learning for earthquake damage mapping from uav photogrammetric point clouds. Int J Remote Sens 39:5568–5595

Xu, JZ, Lu, W, Li, Z, Khaitan, P, Zaytseva, V (2019) Building damage detection in satellite imagery using convolutional neural networks. 33rd Conference on Neural Information Processing Systems, Vancouver, Canada

Yakut A (2004) Preliminary seismic performance assessment procedure for existing rc buildings. Eng Struct 26:1447–1461. https://doi.org/10.1016/j.engstruct.2004.05.011

Yu Q, Wang C, McKenna F, Yu SX, Taciroglu E, Cetiner B, Law KH (2020) Rapid visual screening of soft-story buildings from street view images using deep learning classification. Earthq Eng Eng Vib 19(4):827–838

Yucemen MS, Ozcebe G, Pay AC (2004) Prediction of potential damage due to severe earthquakes. Struct Saf 26:349–366. https://doi.org/10.1016/j.strusafe.2003.09.002

Zhang Y, Burton HV, Sun H, Shokrabadi M (2018) A machine learning framework for assessing post-earthquake structural safety. Struct Saf 72(1):1–16

Zhang Z, Hsu TY, Wei HH, Chen JH (2019) Development of a data-mining technique for regional-scale evaluation of building seismic vulnerability. Appl Sci 9(7):1502

Acknowledgements

The authors thank the Ministry of Environment and Urbanization for its kind support during the database collection. This publication is a part of doctoral dissertation work by the first author in the Academic Program of Civil Engineering, Institute of Science, Hacettepe University.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study’s conception and design. Material preparation, data collection, and analysis were performed by Onur Coskun. The first draft of the manuscript was written by Alper Aldemir and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Coskun, O., Aldemir, A. Machine learning network suitable for accurate rapid seismic risk estimation of masonry building stocks. Nat Hazards 115, 261–287 (2023). https://doi.org/10.1007/s11069-022-05553-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11069-022-05553-y