Abstract

Statistical and dynamic downscaling approaches are commonly used to downscale large-scale climatic variables from global circulation model (GCM) and regional circulation model (RCM) outputs to local precipitation. The performance of these two approaches may differ for daily precipitation projections when applied in the same region. This study examines that difference based on the estimation of extreme precipitation. Daily precipitation series are generated from the GCM HadCM3, CGCM3/T47 and RCM HadCM3 models for both historical hindcasts and future projections covering the period from 1971 to 2070. The Waikato catchment of New Zealand is selected as a case study. Deterministic and probabilistic performances of the GCM and RCM simulations are evaluated using the root-mean-square-error (RMSE), the percent bias (PBIAS) and the equitable threat score (ETS). The values of RMSE, PBIAS and ETS are 2.89, −2.16, 0.171 and 8.72, −4.01, 0.442 for mean areal and at-site daily precipitation estimations, respectively. The study results reveal that the use of frequency analysis of partial duration series (FA/PDS) is very effective in evaluating the accuracy of downscaled daily precipitation series. Both the statistical and the dynamic downscaling perform well for simulating daily precipitation at station level for a return period equal to or less than 100 years. However, the latter outperforms the former for daily precipitation simulation at catchment level.


1 Introduction

Climate model simulation of precipitation has improved considerably over time, but its reliability is still limited. This is mainly due to uncertainties associated with the Global Circulation Model (GCM) outputs, errors arising from the methods or techniques used to transform GCM outputs to local precipitation, site location and future projection period (Dubrovsky 2009; Khan et al. 2006; Pielke 2012; Xu and Yang 2012). Numerous GCMs have been developed by different national centres, and ensembles of GCMs such as CMIP3 and CMIP5 are commonly used (IPCC 2007, 2013; Taylor et al. 2012). In principle, all GCMs are designed to simulate the dynamics and the processes of atmosphere, land surface and ocean. However, each model has its own way of representing these processes, their interactions and their parameterization. The performance of each model is dependent on the numerical methods used to solve the model equations as well as the resolution at which the model is run (Randall et al. 2007). Additionally, the model simulates geophysical processes of global change at a large scale even though these processes in fact occur at much smaller scales. This gives rise to GCM uncertainties (Chun 2010; Dubrovsky 2009; Im et al. 2010; Jeong et al. 2012; Mujumdar and Ghosh 2008; Wilby et al. 1999). In general, the GCM provides information on global climate at coarse spatial resolutions, ranging from 250 to 600 km. Thus, the GCM outputs are primarily applicable to studies dealing with climate change at large scales, e.g. regional, continental or global scale.

In practice, it is essential to study the impacts of climate change at smaller scales, e.g. within a catchment, in which more detailed information is required for extreme event analysis and risk assessment. In this regard, the downscaling concept is applied in order to fill the gap between the large-scale climate change response and the local-scale response (Comnalicer et al. 2010; Coulibaly and Dibike 2004; Prudhomme et al. 2002). Approaches to downscaling can be classified as either dynamical or statistical. Both approaches use GCM outputs to obtain local weather and climate variables. However, in dynamical downscaling (DD), the GCM outputs are used to drive a nested regional climate model (RCM) at higher resolution. In the statistical downscaling (SD), the GCM outputs are downscaled to smaller regions or even at the point scale (Chun 2010; Ferrero et al. 2009; Li and Qi 2010; Mearns et al. 2003; Wilby et al. 1999; Willems and Vrac 2011).

In principle, SD is based on the development of robust direct statistical relationships between large-scale climatic variables (predictors) and the local ones (predictands), utilizing historic data (Timbal et al. 2008). Predictors include circulation variables, temperature-related variables and moisture-related variables, while predictands can be precipitation at a station location or over the study area. The relationships are normally assumed to be time-invariant (Sunyer et al. 2012). This assumption is the major source of uncertainty because some of the controlling variables are not taken into account when the climate changes (Chen et al. 2011). Other sources of uncertainty in SD stem from the uncertainty in the GCMs themselves (Davani et al. 2012; Mujumdar and Ghosh 2008; Sunyer et al. 2012; Wilby et al. 2004). However, SD is still preferable in practice because methods exist that partially compensate for its disadvantages. For example, the standardization of the predictor variables by means of the standard deviation in the downscaling process could prevent the future downscaled scenarios from inheriting systematic biases from the GCM outputs. A sufficient length of observed data for both model calibration and validation allows the statistical characteristics of the downscaled scenarios to be determined satisfactorily (Wilby et al. 2002). In addition, SD requires less computational effort and offers the opportunity to test climate change scenarios over many decades or even centuries (Charles et al. 1999; Chen et al. 2012; Mearns et al. 2003; Wang et al. 2012; Wilby et al. 2004). Moreover, SD allows GCM downscaling experts to use various ensemble GCM results to improve simulation accuracy (Pham et al. 2013; Wilby et al. 1999, 2002).

Regional climate models (RCMs) are regarded as an alternative to SD at catchment scale, a scale that is considered sufficient for simulating local climate over an area of interest within a GCM (Giorgi and Means 1991; Rummukainen 2010). Dynamic downscaling (DD) with the use of RCMs is based on the same physical-dynamic description of climate processes that is used in GCMs (Baguis et al. 2010; Ishak et al. 2010). This approach uses numerical meteorological modelling to simulate how global patterns affect local weather conditions over a limited area. For the use of RCMs, it is essential to bear in mind that the domain and the grid size are the most important parameters driving the downscaling process (Castro-Diez 2002; Denis 2002; Xu 2001 in Xue et al. 2007). Using RCMs, data at high resolution can be generated from the outputs of a large number of GCMs with DD (Zhang 2016a, b). Recent advances in DD have resulted in spatial resolution improving from 100 km to about 20 km, with current exploitation of resolutions of 10 km or less (Rummukainen 2010). However, in practice, DD requires a lot of computational time. In theory, DD suffers from inherent biases in both the GCMs and the RCMs. There are two major limitations of DD, namely “effects of systematic errors” provided by GCMs and “lack of two-way interaction” between GCMs and RCMs (Mearns et al. 2003). In order to cope with these limitations, a bias correction method is an option which is able to reduce some uncertainties and increase the prediction accuracy (Pielke 2012; Yoon et al. 2012). In addition, more advanced techniques employed in RCMs could create more errors. For example, dynamic downscaling performs better with an RCM originally derived from GCMs rather than from GCMs coupled with prescribed radiative forcing (Pielke 2012). This technical advancement would lead to difficulty in applying the RCMs because the GCMs and RCMs themselves do not automatically provide reliable forecasts (Landsea and Knaff 2010). It is recommended that the combination of RCM output and observed data sets could be useful for the validation of RCMs as well as for the reduction of explicit errors associated with the models (Mearns et al. 2003).

The physical, temporal and the spatial characteristics of precipitation are complex, and therefore, the model simulation and projection of local precipitation are a major challenge. SD is constrained in its ability to simulate spatial distribution of precipitation (Hashmi et al. 2011; Pham et al. 2013), and especially when it is used in the context of complex terrain (Gutmann et al. 2012). DD results are sensitive to topography, convective system, landuse and soil conditions (Xue et al. 2007). However, a common limitation of both SD and DD is that not all extreme events are captured (von Storch 1995; Sunyer et al. 2012). This is because changes in extreme precipitations are very sensitive to small changes in climate, environment and model resolution (Fowler and Kilsby 2003; Pattanaika and Rajeevanb 2010).

Extreme precipitation is considered as the most sensitive indicator of typical local weather and climate change (Francis and Hengeveld 1998; Chiew et al. 2010; Furcolo et al. 1995; Mason et al. 1999; Im et al. 2010; Lavers et al. 2010; Pal and Tabbaa 2009). There are a number of studies which assess the reliability of model precipitation simulation. Methods used include extreme indices and extreme probabilities. The use of these indices in some studies is not able to capture all characteristics of large-scale and local-scale precipitation limiting their statistical agreement for both current and future climate states (Liu 2012; Hu et al. 2013; Huang et al. 2012; Schmidli and Frei 2005; Yang et al. 2012; Wang et al. 2012).

Extreme value theory (EVT) is an effective approach for probabilistically predicting extreme local precipitation (Friederichs 2010). To date, the generalised extreme value (GEV) distribution of annual maximum series (AMS) from GCM outputs (downscaled GEV/AMS model) has commonly been used for frequency analysis of extreme precipitation (Friederichs 2010; Fowler et al. 2010; García-Cueto and Santillán-Soto 2012; Huntingford et al. 2003; Park and Jung 2002; Yang et al. 2010). The GEV/AMS model is expected to carry uncertainty in future projections of extreme events under a dramatically changing climate (Cavazos 1998; Furcolo et al. 1995; Lambert et al. 1994). This is because it would not capture all extreme events, in particular those whose quantitative relationship with climate drivers is not explicitly described (Chun 2010; Li and Qi 2010). An alternative approach to frequency analysis of extreme events is the use of the partial duration series (PDS), which can be described by the Generalised Pareto (GP) distribution. This approach is expected to give the best prediction of extreme events because it overcomes some of the weaknesses of the AMS model (Hosking and Wallis 1997; Katz 2010; Norbiato et al. 2007; Zhang 2010; Zin et al. 2010). Compared with AMS, PDS is a better model because it considers a wider range of events and captures more information about extreme phenomena (Norbiato et al. 2007). Moreover, PDS performs best for extreme events at low (T < 10 years) and high (T = 100 years) return periods (Hosking and Wallis 1997; Zvi 2009). However, the use of PDS has not been fully examined in the context of climate change studies, particularly when used with downscaled variables from GCMs and RCMs.

This study aims to simulate and validate future downscaled precipitation based on the analysis of extreme events from the daily time series. The series are generated from different models, namely CGCM3, GCM HadCM3 and RCM HadCM3, developed by Canadian and British scientists (DAI 2012; IPCC 2007). This study compares, for the first time, the GCM and RCM downscaled daily precipitation using a regional frequency analysis of partial duration series of precipitation. Also, the downscaled precipitation time series from two GCMs and an RCM are compared with observations. The Waikato catchment located in New Zealand is selected as a case study.

1.1 Study region and previous related studies

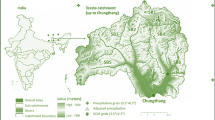

The Waikato catchment of New Zealand was selected for this study because of its importance to the New Zealand economy. Moreover, the main commercial and industrial activities in the catchment, such as hydropower generation and farming, are heavily dependent on precipitation. However, both the spatial and the temporal variability of precipitation over the catchment region are complex (Pham et al. 2013, 2014a,b). This variability could be amplified by future changes in other climatic variables (Pham et al. 2013).

In a previous study by Pham et al. (2013), the daily precipitation time series was downscaled at 14 Waikato meteorological stations from 26 daily observed large-scale climatic variables (see Supplementary A-1). The time series were originally developed from the coupled global climate model, version 3 (CGCM3), the Hadley Centre coupled model, version 3 (HadCM3), and re-analysis. The results of the former model are obtained from the Canadian Centre for Climate Modelling and Analysis (CCCMA, http://www.cccma.ec.gc.ca/) while the results of the latter model are obtained from the UK Met Office Hadley Centre (MetOffice, http://www.metoffice.gov.uk/). The 26 corresponding variables can be obtained from the National Centre of Environmental Prediction (NCEP, http://www.ncep.noaa.gov/). These GCM and NCEP outputs are provided at different spatial resolutions. The NCEP data, at 2.5° × 2.5° spatial resolution, were regridded to the horizontal grid resolution of the CGCM3. Data from CGCM3 and HadCM3 are in grid form at 3.75° × 3.75° and 2.8° × 2.8°, respectively (see Fig. 3); these are the finest spatial resolutions of the models.

Pham et al. (2013) used a statistical downscaling model (SDSM) developed by Wilby et al. (2002) to produce a synthetic precipitation time series of 30-year length at 14 stations. In this study, the mean areal precipitation was computed for the whole Waikato catchment from data at 14 stations. The synthetic point and the mean areal precipitation time series were compared with observed precipitation series over the same period, 1961–2000. Pham et al. (2013) found that the SDSM parameters are more sensitive to large-scale predictors from HadCM3 outputs than to the corresponding outputs from CGCM3. They also found that SDSM gives better results for regional precipitation than for at-site precipitation for both cases.

2 Data sets and methods

This section presents the details of the different types of data as well as the methods and techniques used to localise the global climate to the catchment precipitation. A method of predicting extreme precipitation events is also described in detail.

2.1 Data sets

2.1.1 Precipitation station data

Daily precipitation data observed at 14 stations over a 30-year period, from 1960 to 1990, were selected in the vicinity of the study region. The selection of the stations was based on data availability, continuity and consistency. Furthermore, these stations are well spread across the catchment, which allows adequate estimation of its areal rainfall. The information about the station locations and the available data is presented in Figs. 1 and 2.

2.1.2 Statistically downscaled precipitation data

The downscaled precipitation data from both CGCM3 and GCM HadCM3 were selected for this study. These data sets were developed in the previous study by Pham et al. (2013). The following downscaling process was used:

First, the most relevant predictors from 26 large-scale GCMs variables that directly control the local predictand variable (i.e. precipitation) (IPCC 2007; Pham et al. 2013) were selected. This selection was undertaken by applying correlation and cross-correlation tests. The former was used to select the most relevant predictors, and the latter was used to find the time lag(s) at which the predictor and predictand series showed the strongest correlation.

Second, the most reliable statistical relationship between predictor and predictand variables was developed using the SDSM model. It runs with two main parameters, namely the variance inflation factor (φ) and the bias correction (Bc) (Wilby et al. 2002). The observed precipitation data were split into two periods, 1961–1980 and 1981–1990, for calibration and validation, respectively. Model calibration and validation were implemented on a station-by-station basis. The precipitation was synthesized from 20 ensembles. With predictors established for the predictand variables defined for each station, statistical regression equations were developed and then used for the downscaling experiment. The optimal sets of φ and Bc for each station were obtained using a trial-and-error procedure. The SDSM calibration was executed with different sets of predictors for different stations. For model validation, the model parameters were also tested by seasons and months together. Model performance was evaluated based on four different statistics, namely the percentage difference (SE), the coefficient of determination (R²), the root-mean-square-error (RMSE) and the percent bias (PBIAS).

This downscaling process was used to obtain precipitation at the station location. Subsequently, the mean areal precipitation was averaged up from station precipitation using the Thiessen polygon method. By doing this, the precipitation simulation could be made comparable with both point and gridded data from CGCM3/GCM HadCM3 and RCM HadCM3 models, respectively.
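Two steps of this process lend themselves to a short illustration. The following Python sketch is not the SDSM implementation; it only shows, under stated assumptions, how a lagged correlation screen and a Thiessen-weighted areal mean could be computed. The function names, the `max_lag` parameter, the station labels and the polygon areas are hypothetical and do not come from the study.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences.

    Constant series (zero variance) are not handled in this sketch.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def best_lag(predictor, predictand, max_lag=3):
    """Return (lag, r) for the lag in 0..max_lag with the largest |r|.

    A lag of k pairs the predictor on day t with the predictand on day t + k.
    max_lag is an illustrative choice, not a value from the study.
    """
    results = []
    for lag in range(max_lag + 1):
        x = predictor[: len(predictor) - lag]
        y = predictand[lag:]
        results.append((lag, pearson(x, y)))
    return max(results, key=lambda item: abs(item[1]))


def thiessen_mean(station_precip, polygon_area):
    """Areal mean precipitation weighted by Thiessen polygon area.

    Both arguments are dicts keyed by a (hypothetical) station label.
    """
    total_area = sum(polygon_area.values())
    weighted = sum(station_precip[s] * polygon_area[s] for s in polygon_area)
    return weighted / total_area


# Made-up example: the predictand repeats the predictor one day later.
predictor = [1, 3, 2, 5, 4, 6, 8, 7, 9, 10]
predictand = [0, 1, 3, 2, 5, 4, 6, 8, 7, 9]
lag, r = best_lag(predictor, predictand)

# Made-up station values (mm) and polygon areas (km^2).
areal = thiessen_mean({"A": 10.0, "B": 0.0, "C": 5.0},
                      {"A": 100.0, "B": 300.0, "C": 600.0})
```

In this toy case the screen picks a lag of one day, and the areal mean is dominated by the station with the largest polygon, which is the intended behaviour of area weighting.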

2.1.3 Dynamically downscaled precipitation data

Bias-corrected precipitation data generated from RCM HadCM3 (RCM hereinafter) at 0.05° × 0.05° spatial resolution are used. These data are obtained from the National Institute of Water and Atmospheric Research (NIWA). In principle, the precipitation variable from the RCM, which runs at 28 km resolution, has been bias-corrected and further downscaled to 5 km resolution (Ackerley et al. 2012 and pers. comm. with Mullan et al. 2001). An example of dynamically downscaled RCM precipitation on 31 December 1971 is presented in Fig. 3.

Future GCM and RCM data from only one emissions scenario, IPCC SRES A2, are used. This A2 scenario developed by the Intergovernmental Panel on Climate Change (IPCC) represents a high emission scenario as one of the most significant causes of climate change (IPCC 2007). Furthermore, A2 scenario is a common one used by the National Institute of Water and Atmospheric Research (NIWA) to study the climate change effects and impacts assessment for New Zealand local governments (Ministry of Environment 2008).

2.2 Methods

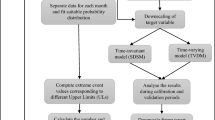

This section presents the step-by-step procedure which is used in this study to obtain the projection of extreme precipitation. This would be beneficial to climate change studies in which the accuracy of downscaled daily precipitation is assessed and validated. First, the precipitation time series from GCMs and RCM are generated for both present (1961–2010) and future (2011–2090) periods. Second, these future precipitation time series are compared to the observed present series. The third but the most important step is to validate the precipitation time series generated from GCMs and RCM using a frequency analysis of partial duration series. Finally, a projection of daily and extreme precipitation is carried out to investigate future changes.

2.2.1 Downscaled precipitation data processing

In this step, data generated from GCMs and RCM are extracted and then used to develop precipitation time series at the station level and for the whole study area.

Similar to the previous study by Pham et al. (2013), in this study, the 30-year time series of precipitation is extracted from the RCM results. The precipitation time series during the periods 1971–2010 and 2011–2070 represent the present and the future climate, respectively. These overlapping periods were selected since each model has different present and future time slices. The HDFView and the Unidata NetCDF packages are used to extract the precipitation at the station locations and to integrate the results over the study period. The two packages were originally developed by the National Center for Supercomputing Applications (NCSA) and by the North American Regional Climate Change Assessment Programme (NARCCAP), respectively.

The comparison of downscaled precipitation time series for the present and projected future climate indicates changes in precipitation over decades. The comparison is carried out for the precipitation time series at the site level as well as for the mean areal precipitation time series across the study catchment.

2.2.2 Evaluation of point and areal precipitation data

The grid cell precipitation time series from the RCM is assumed to be a good representation of the precipitation at a gauging site if the site is located within a single grid cell. If the gauging site is located along the boundary of two or more grid cells, the site precipitation time series is obtained as the average of the precipitation time series of these grid cells. To obtain the mean areal precipitation, the precipitation is averaged over the 360 grid cells that cover the Waikato catchment (Fig. 1). The RCM precipitation is directly compared to the observed time series at the gauging station. The assumption made here is that the observation at a rainfall gauging station is considered as ground truth for a 5 km × 5 km pixel area corresponding to the 0.05° × 0.05° spatial resolution of the RCM.
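The averaging described above, whether across the grid cells that share a boundary with a gauging site or across all grid cells covering the catchment, amounts to an element-wise mean of daily series. A minimal Python sketch, with a hypothetical function name and made-up values, could look as follows:

```python
def mean_series(series_list):
    """Element-wise mean of equally long daily precipitation series (mm).

    series_list holds one list per grid cell; zip() pairs up the values
    of all cells for the same day.
    """
    n_cells = len(series_list)
    return [sum(day_values) / n_cells for day_values in zip(*series_list)]


# Made-up example: a site on the boundary between two grid cells.
cell_1 = [0.0, 2.0, 4.0]
cell_2 = [2.0, 2.0, 0.0]
site = mean_series([cell_1, cell_2])
```

The same function applied to the series of all catchment grid cells would give an unweighted areal mean, which is reasonable here only because the cells are assumed to have equal area.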

For simplicity in evaluating the GCM and RCM simulations, the 20-year GCM and RCM downscaled precipitation time series from 1971 to 1990 are compared. In particular, two statistical coefficients, namely the root-mean-square-error (RMSE) and the percent bias (PBIAS) of the daily time series, are calculated to assess the similarity between the observed and the RCM simulated outputs. The RMSE is commonly used to measure the fit of the simulated to the observed data. The value of RMSE ranges from 0 to +∞, with zero indicating a perfect fit. The PBIAS measures the average tendency of the simulated data to be larger or smaller than the observed data. The optimal value of PBIAS is 0%; positive values indicate model underestimation of the observed values, and negative values indicate overestimation. The RMSE and PBIAS are expressed as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(X_i^{\mathrm{obs}} - X_i^{\mathrm{sim}}\right)^2}$$

$$\mathrm{PBIAS} = \frac{\sum_{i=1}^{n}\left(X_i^{\mathrm{obs}} - X_i^{\mathrm{sim}}\right)}{\sum_{i=1}^{n} X_i^{\mathrm{obs}}} \times 100$$

where: $X_i^{\mathrm{obs}}$ and $X_i^{\mathrm{sim}}$ are the observed and simulated values at time i (mm), and n is the number of values in the series.
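As a worked illustration, the two coefficients can be computed in a few lines of Python. This is a minimal sketch that assumes the common sign convention stated in the text (positive PBIAS means the model underestimates the observations); the function names and the sample values are hypothetical.

```python
import math


def rmse(obs, sim):
    """Root-mean-square-error between observed and simulated series (mm)."""
    n = len(obs)
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(obs, sim)) / n)


def pbias(obs, sim):
    """Percent bias (%): positive = underestimation, negative = overestimation.

    Assumes the observed series does not sum to zero.
    """
    return 100.0 * sum(o - s for o, s in zip(obs, sim)) / sum(obs)


# Made-up daily values (mm): the simulation is 2 mm too wet on two days.
observed = [0.0, 10.0, 20.0, 10.0]
simulated = [0.0, 12.0, 22.0, 10.0]

error = rmse(observed, simulated)   # sqrt(8 / 4) = sqrt(2) mm
bias = pbias(observed, simulated)   # 100 * (-4) / 40 = -10 %
```

Here the negative PBIAS flags a simulation that is wetter than observed, matching the interpretation of negative values as model overestimation.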

Furthermore, an upscaling (UP) method is also used to verify the probabilistic performance of the GCM and RCM model simulations. This method is based on the equitable threat score (ETS), which discriminates between an event and a non-event in association with the temporal and spatial model resolutions (Ebert 2008; JWGV 2009). Basically, a simulation has poor resolution if, for a given observed event, it predicts equal probabilities for both event and non-event. On the other hand, a simulation has maximum resolution if, for a given observation of an event (or non-event), it predicts a 100% probability of the event (or non-event) occurring.

The ETS can be expressed as follows:

$$\mathrm{ETS} = \frac{a - a_r}{a + b + c - a_r}, \qquad a_r = \frac{(a + b)(a + c)}{a + b + c + d}$$

where: a is the number of correct simulations of occurrences; $a_r$ is the number of correct simulations of occurrences by chance; b is the number of incorrect simulations of occurrences; c is the number of incorrect simulations of non-occurrences; and d is the number of correct simulations of non-occurrences. The ETS ranges from −1/3 to 1. The closer the ETS value is to 1, the better the model performance. In this study, both the GCMs and the RCM are initialised and run for the same duration as the observations for the present scenario.
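The score can be illustrated with a small Python sketch. It assumes the standard contingency-table form of the ETS, in which the chance term is a_r = (a + b)(a + c)/(a + b + c + d), and it defines an event as daily precipitation at or above a threshold. The 1 mm threshold, the function names and the sample values are assumptions made for illustration only.

```python
def contingency_counts(obs, sim, threshold=1.0):
    """Return (a, b, c, d) for two daily series (mm).

    An event is a value >= threshold; the 1 mm default is an assumption.
    a: event simulated and observed      b: event simulated, not observed
    c: event observed, not simulated     d: no event in either series
    """
    a = b = c = d = 0
    for o, s in zip(obs, sim):
        obs_event, sim_event = o >= threshold, s >= threshold
        if sim_event and obs_event:
            a += 1
        elif sim_event:
            b += 1
        elif obs_event:
            c += 1
        else:
            d += 1
    return a, b, c, d


def equitable_threat_score(a, b, c, d):
    """ETS from contingency counts; NaN when the score is undefined."""
    n = a + b + c + d
    if n == 0:
        return float("nan")
    a_r = (a + b) * (a + c) / n      # correct occurrences expected by chance
    denom = a + b + c - a_r
    if denom == 0:
        return float("nan")
    return (a - a_r) / denom


# Made-up ten-day example.
observed = [5.0, 2.0, 0.0, 0.0, 3.0, 0.0, 0.0, 0.0, 0.0, 0.0]
simulated = [4.0, 0.0, 1.5, 0.0, 6.0, 0.0, 0.2, 0.0, 0.0, 0.0]
counts = contingency_counts(observed, simulated)
ets = equitable_threat_score(*counts)
```

With two correct occurrences, one false occurrence and one missed occurrence out of ten days, the chance term is 0.9 and the score is (2 − 0.9)/(4 − 0.9), roughly 0.35.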

More importantly, quantiles are computed for different return periods from the statistically and dynamically downscaled precipitation series. The resulting growth curves are compared to the regional growth curve developed for the whole North Island of New Zealand by Pham et al. (2014b). This dimensionless frequency curve was obtained by normalizing the original frequency curve by the corresponding mean value. A reliable regionalization procedure of the regional frequency analysis for partial duration rainfall series (FA/PDS) was employed in this regard. A PDS can include as many of the highest events (peaks) as possible, which are carried through the stages of reconstruction, gap filling, quality control and homogenization within a homogeneous area (Moreno et al. 2010).

2.2.3 Probabilistic model for projecting future extreme precipitation

Studies have demonstrated that the FA/PDS can improve the accuracy of extreme simulation from observation. In particular, the PDS can consider more extreme events, in which the highest peak in any year can be neglected if its magnitude is less than that of peaks occurring in other years (Madsen et al. 1997; Norbiato et al. 2007; Hosking and Wallis 1997). By doing this, reliable results can be obtained with a short period of record. Basically, the use of PDS requires the specification of the average number of peaks per year, which is used to define an extreme event. This is because the determination of the occurrence and magnitude of extreme precipitation events is more reliable when using a certain threshold value of precipitation or an average number of peaks per year (λ), since the value of λ controls both the occurrence and the magnitude of extreme events. Therefore, the best PDS performance effectively minimizes the quantile estimation uncertainty. More details on PDS properties can be obtained from Pham et al. (2014a).

Pham et al. (2014a) have shown that extracting an average of five peaks per year from the original daily precipitation series generates the most reliable series (PDS5) for predicting extreme precipitation over the North Island region of New Zealand, including the Waikato catchment. In addition, the PDS5 series was also confirmed to be valid for regional frequency analysis of precipitation over the catchment (Pham et al. 2014b).

In this study, the PDS5 series is extracted from the downscaled time series for both present and future climate. The obtained PDS5 was confirmed to be random and free from significant trend using lag-1 autocorrelation and Mann–Kendall trend tests. These tests deal with the randomness and stationarity of successive peaks selected in the PDS5. From the precipitation time series for the present climate, the PDS data are generated and then used in the frequency analysis. Likewise, the PDS5 series extracted from the downscaled precipitation time series for the 2070s is used for future analysis. It is worth noting that the 14 selected stations located within the catchment were confirmed to be statistically homogeneous by Pham et al. (2014b).
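The extraction and the two checks can be sketched in Python as follows. This is an illustration under assumptions rather than the procedure of Pham et al. (2014a): the text does not state how independent peaks are separated, so the `min_gap_days` rule is hypothetical, the Mann–Kendall statistic is written without a correction for ties, and all function names and sample values are made up.

```python
import math


def extract_pds(daily, n_years, peaks_per_year=5, min_gap_days=2):
    """Largest peaks_per_year * n_years daily values, in time order.

    A candidate is skipped if it lies within min_gap_days of an already
    selected (larger) peak; this declustering rule is an assumption.
    """
    target = peaks_per_year * n_years
    order = sorted(range(len(daily)), key=lambda i: daily[i], reverse=True)
    chosen = []
    for i in order:
        if all(abs(i - j) >= min_gap_days for j in chosen):
            chosen.append(i)
            if len(chosen) == target:
                break
    return [daily[i] for i in sorted(chosen)]


def lag1_autocorrelation(x):
    """Lag-1 serial correlation coefficient of a series."""
    n = len(x)
    mean = sum(x) / n
    num = sum((x[i] - mean) * (x[i + 1] - mean) for i in range(n - 1))
    den = sum((v - mean) ** 2 for v in x)
    return num / den


def mann_kendall_z(x):
    """Mann-Kendall standardised statistic Z (no tie correction).

    |Z| > 1.96 suggests a trend at the 5% significance level.
    """
    n = len(x)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = x[j] - x[i]
            s += (diff > 0) - (diff < 0)
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        return (s - 1) / math.sqrt(var_s)
    if s < 0:
        return (s + 1) / math.sqrt(var_s)
    return 0.0


# Made-up one-"year" series of ten days, three peaks requested.
daily = [0, 5, 1, 9, 8, 0, 3, 7, 2, 6]
peaks = extract_pds(daily, n_years=1, peaks_per_year=3)
```

In this toy series the value 8 is dropped because it falls on the day after the larger peak of 9, so the selected peaks are 9, 7 and 6.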

The extreme precipitation magnitude for a given return period is computed using the Generalised Pareto (GP) frequency distribution (Hosking and Wallis 1997) for the PDS5 series.
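For illustration, the T-year quantile of a PDS whose peaks follow a GP distribution can be written in closed form. The sketch below assumes the Hosking and Wallis parameterisation, with location ξ, scale α and shape κ, and an average of λ peaks per year, so that the exceedance probability of the T-year event among the peaks is 1/(λT). The function name and the parameter values are hypothetical, and parameter estimation (for example by L-moments) is not shown.

```python
import math


def gp_quantile(T, lam, xi, alpha, kappa):
    """T-year quantile for a PDS with GP-distributed peaks.

    x_T = xi + (alpha / kappa) * (1 - (lam * T) ** (-kappa))   for kappa != 0
    x_T = xi + alpha * ln(lam * T)                              for kappa == 0
    lam is the mean number of peaks per year (5 for a PDS5 series).
    """
    if abs(kappa) < 1e-12:
        return xi + alpha * math.log(lam * T)
    return xi + (alpha / kappa) * (1.0 - (lam * T) ** (-kappa))


# Made-up parameters (mm): location 20, scale 10, exponential tail.
x10 = gp_quantile(T=10, lam=5, xi=20.0, alpha=10.0, kappa=0.0)
x100 = gp_quantile(T=100, lam=5, xi=20.0, alpha=10.0, kappa=0.0)
```

Dividing such quantiles by the mean of the series gives a dimensionless growth curve of the kind compared in this study; a negative κ in this parameterisation produces a heavier upper tail and therefore larger quantiles at long return periods.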

3 Results and discussions

As explained in the method section, any comparison between the statistically and the dynamically downscaled precipitation from the GCM and the RCM, respectively, is based on a 20-year time series basis starting from 1971 to 1990. In this section, downscaled precipitation and the projected extreme precipitation time series are compared to the observed time series for 2011–2070 period.

3.1 Daily precipitation time series generated from GCMs and RCM

In this study, the comparison is made between the dynamically downscaled precipitation and the observed precipitation measured at the station level. The results of the validation of both the deterministic and the probabilistic performance of the RCM are shown in Table 1, which lists the RMSE, PBIAS and ETS values. Examination of the table shows that the RMSE values range between 9.88 mm and 17.31 mm, suggesting that the simulation does not fit the observation very well, since closer agreement between the observation and the simulation implies a smaller RMSE value. For the PBIAS coefficient, negative values are obtained at the majority of stations. This indicates that the RCM is overestimating the observation. Model underestimation can be found at stations 1732, 2103, 2184, 2214 and 2250, which have positive values of PBIAS. This can also be seen in Figure A1. Further inspection of the table also shows that the value of ETS computed for all stations is negative, varying between −0.157 and −0.035, which indicates a poor simulation of precipitation occurrences. This is due to the relatively low temporal and spatial resolutions of climate models. In general, there is no significant difference in simulating daily precipitation magnitude and occurrence at station locations.

For the mean areal precipitation simulation, the evaluation is implemented for both statistically downscaled and dynamically downscaled precipitation against the observations. The accuracy of mean areal precipitation simulation differs from model to model. Table 2 presents the information on how each model fits the observation. This has been tested for the three different models used in this study. Inspection of the table reveals that all models overestimate the mean areal precipitation, with negative values of PBIAS (also shown in Figure A2). This model overestimation may be caused by bias or errors inherent in the large grid size of the CGCM3 output compared to the smaller grid size of the HadCM3 output. The RMSE values also indicate a close fit of the precipitation from GCM HadCM3 to the observation, with the lowest RMSE value being 2.89 mm. The weakest fit is found for the CGCM3. There is a moderate fit between observed and simulated precipitation for the RCM HadCM3. For the simulation of precipitation occurrences, both the GCM HadCM3 and RCM HadCM3 models give the higher values of ETS. The values of ETS computed for these two models are 0.171 and 0.442, respectively. For the CGCM3 model, the ETS value is 0.006, close to 0, which means that the model has difficulty simulating the occurrence of daily precipitation.

3.2 Extreme precipitation estimation

The accuracy of mean areal precipitation simulation is also evaluated (see Fig. 4 and Table 3). Examination of the figure demonstrates that the extreme precipitation events are not captured when using SDSM. This may be due to the fact that the extreme event process is highly random and nonlinear, and the SDSM may not be able to predict its amounts and occurrences perfectly at local scales using a statistical relationship between large-scale predictors and a local predictand. For both GCMs, the maximum value is 15 mm, while the RCM captures a maximum of 130 mm. Investigation of Table 3 also reveals that the extreme precipitation that occurred at stations is quite high in magnitude. For example, an extreme event recorded at station 2214 on 15 November 1978 was 220.8 mm. This again highlights the importance of the regional forcings used in the RCMs, which distinguishes their performance from that of the SDSM.

The regional frequency curve of daily precipitation for the North Island of New Zealand is used as the standard for assessing the accuracy of extreme precipitation from the GCMs and RCM. A PDS5 series is generated for each of the corresponding GCM and RCM mean areal precipitation time series. The precipitation quantiles (XT) are computed for different return periods (T) for the three models (two GCMs and the RCM). Also, the regional growth curve obtained from observation by Pham et al. (2014b) is shown. The results are presented in Table 4 and Fig. 5.

Examination of the table and the figure reveals that all three curves are close to the regional curve for T less than or equal to 100 years. However, the quantile values computed for the two GCMs are lower than those of the regional curve, while the RCM quantile values are higher than those of the regional curve. For T equal to 1000 years, the quantile value from the CGCM3 is significantly different from that of GCM HadCM3, but much lower than the regional quantile. However, the value of the RCM quantile is about two times higher than the regional quantile. This may be due to the fact that the 20-year observations used to develop the frequency curves are too short and there may be localized effects caused by topography.

3.3 Projection of future extreme precipitation

The mean areal daily precipitation from RCM HadCM3 is selected for studying the variability of extreme precipitation in the future. The model is run for the A2 emission scenario over the period 2011–2070 in 30-year time slices, defined as the A2a and A2b scenarios for the periods 2011–2040 and 2041–2070, respectively.

Offline statistics of the daily precipitation time series for the observations and the future projections indicate a rise in annual mean daily precipitation of 1.2% and 2.1% for the A2a and A2b scenarios, respectively. The 30-year daily precipitation projections for the A2a and A2b scenarios are also comparable in terms of the frequency analysis of extreme precipitation. As can be seen from Fig. 6, the future extreme event magnitudes for the two scenarios agree for return periods less than or equal to 100 years. This is in line with the use of the 20-year data set and the selection of an average of five peaks per year from that data set.

Despite the projected rise in global mean temperature, the change in extreme precipitation in the catchment by 2040 and 2070 is insignificant. This is because climate change is not limited to temperature, especially for this catchment, whose climatic characteristics vary considerably. Exposed areas can be very windy, whereas low-elevation inland parts of the catchment are more sheltered. Mountain ranges cause rainfall anomalies that are directly related to elevation (Chappell 2013). Moreover, downscaled precipitation data at relatively high resolution may not fully represent the surrounding environment of the selected station.

4 Conclusions

In this study, the simulation of daily precipitation at station locations and of the areal mean precipitation over the Waikato catchment was examined using both deterministic and probabilistic measures for the period 1971 to 1990. These measures include the RMSE and PBIAS coefficients for assessing the accuracy of precipitation magnitudes, and the ETS for assessing precipitation occurrence. The results show that the magnitude of daily precipitation is relatively well simulated at the station level for all GCMs and the RCM, with the RMSE computed at individual stations varying between 9 and 14 mm. However, the occurrence of daily precipitation is poorly simulated, because the ETS value ranges from − 0.157 to − 0.035. At the catchment scale, the mean areal precipitation computed from the GCMs and the RCM differs significantly. The areal precipitation magnitudes are best simulated by GCM HadCM3, and the occurrences of areal precipitation are best simulated by RCM HadCM3. The CGCM3 precipitation simulation performs poorly in terms of both magnitude and occurrence. This may be caused by the coarse resolution of the GCMs.
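The three measures named above can be sketched in a few lines. This is an illustrative sketch under stated assumptions and not the authors' implementation: the sign convention for PBIAS (simulated minus observed, so that negative values indicate underestimation) and the 1.0 mm wet-day threshold used for the ETS contingency table are assumptions, as are the function names and the sample data.

```python
import math

def rmse(obs, sim):
    """Root-mean-square error, in the units of the data (mm)."""
    return math.sqrt(sum((s - o) ** 2 for o, s in zip(obs, sim)) / len(obs))

def pbias(obs, sim):
    """Percent bias; negative values mean underestimation (assumed sign)."""
    return 100.0 * sum(s - o for o, s in zip(obs, sim)) / sum(obs)

def ets(obs, sim, wet_threshold=1.0):
    """Equitable threat score for wet-day occurrence.

    wet_threshold (mm) is an assumed value, not taken from the study.
    """
    hits = misses = false_alarms = 0
    for o, s in zip(obs, sim):
        obs_wet, sim_wet = o >= wet_threshold, s >= wet_threshold
        if obs_wet and sim_wet:
            hits += 1
        elif obs_wet:
            misses += 1
        elif sim_wet:
            false_alarms += 1
    # Hits expected by chance given the observed and simulated wet-day counts
    hits_random = (hits + misses) * (hits + false_alarms) / len(obs)
    denom = hits + misses + false_alarms - hits_random
    return (hits - hits_random) / denom if denom else float("nan")

# Hypothetical four-day sample (mm), for illustration only
obs = [0.0, 5.0, 0.0, 10.0]
sim = [0.0, 4.0, 2.0, 0.0]
print(rmse(obs, sim), pbias(obs, sim), ets(obs, sim))
```

An ETS of 1 indicates perfect occurrence simulation, 0 indicates no skill beyond chance, and negative values, such as those reported at the stations, indicate performance worse than chance.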

The preliminary investigation of extreme precipitation using the GP/PDS5 model also reveals that the dynamically downscaled precipitation time series contains more extreme events than the statistically downscaled series. This difference between the two time series may be caused by the GCMs and the RCM themselves, coupled with the use of the Thiessen polygon method to obtain the GCM mean areal precipitation. The study also found that extreme precipitation events from the GCMs and the RCM are very close in magnitude to those obtained from observations for return periods less than or equal to 100 years. For return periods greater than 100 years, the magnitude of extreme events from the GCMs and the RCM differs significantly from that of the observations. The frequency curves developed from the 20-year observation series may not fully represent the precipitation variability of the whole past century, although they appear adequate for return periods up to 100 years. Local effects could be another constraint on the modelled results.

The findings of this study are expected to assist in the planning and design of water works subject to extreme events, with or without consideration of climate change impacts.

References

Ackerley D, Dean S, Sood A (2012) Regional climate modelling in New Zealand: comparison to gridded and satellite observations. Weather Clim 32(1):3–22

Baguis P, Roulin E, Willems P, Ntegeka V (2010) Climate change scenarios for precipitation and potential evapotranspiration over central Belgium. Theor Appl Climatol 99:273–286

Castro-Diez Y, Pozo-Vazquez DP, Rodrigo FS, Esteban-Parra MJ (2002) NAO and winter temperature variability in southern Europe. Geophys Res Lett 29(8):1

Cavazos T (1998) Large-scale circulation anomalies conducive to extreme precipitation events and derivation of daily rainfall in northeastern Mexico and southeastern Texas. J Clim 12

Chappell PR (2013) The climate and weather of Waikato, vol 61. NIWA Science and Technology Series, 40 pp

Charles SP, Bates BC, Whetton PH, Hughes JP (1999) Validation of downscaling models for changed climate conditions: case study of southwestern Australia. Clim Res 12:1–14

Chen H, Xu CY, Guo S (2012) Comparison and evaluation of multiple GCMs, statistical downscaling and hydrological models in the study of climate change impacts on runoff. J Hydrol 434–435:36–45

Chen J, Brissette FP, Leconte R (2011) Uncertainty of downscaling method in quantifying the impact of climate change on hydrology. J Hydrol 401:190–202

Chiew FHS, Kirono DGC, Kent DM, Frost AJ, Charles SP, Timbal B et al (2010) Comparison of runoff modelled using rainfall from different downscaling methods for historical and future climates. J Hydrol 387:10–23

Chun KP (2010) Statistical downscaling of climate model outputs for hydrological extremes. Ph.D. Thesis. Imperial College London, London, UK

Combalicer EA, Cruz RVO, Lee S, Im S (2010) Assessing climate change impacts on water balance in the Mount Makiling forest, Philippines. J Earth Syst Sci 119(3):256–283

Coulibaly P, Dibike YB (2004) Downscaling global climate model outputs for flood frequency analysis in the Saguenay river system. Project No. S02-15-01. McMaster University, Hamilton, ON

DAI (2012) Sets of predictor variable derived from CGCM3.1 T47 and NCEP/NCAR reanalysis. Montreal, QC, Canada, 18 pp

Davani HT, Nasseri M, Zahraie B (2012) Improved statistical downscaling of daily precipitation using SDSM platform and data-mining methods. Int J Climatol. https://doi.org/10.1002/joc.3611

Denis B, Laprise R, Caya D et al (2002) Downscaling ability of one-way nested regional climate models: the Big-Brother Experiment. Clim Dyn 18:627–646

Dravitzki SM (2009) Precipitation in the Waikato River Catchment. Ph.D. Thesis. The School of Geography, Environment and Earth Sciences. Victoria University of Wellington, Wellington, New Zealand

Dubrovsky M (2009) Multi-GCM climate projections for Europe: GCMs performance and uncertainties. Paper presented at the EMA Meeting. Toulouse, France

Ebert EE (2008) Neighborhood verification: a strategy for rewarding close forecasts. Weather Forecast 24:1498–1510

Ferrero AF, Saenz J, Berastegi GI, Fernandez J (2009) Evaluation of statistical downscaling in short range precipitation forecasting. Atmos Res 94:448–461

Fowler HJ, Cooley D, Sain SR, Thurston M (2010) Detecting change in UK extreme precipitation using results from the climateprediction.net BBC climate change experiment. Extremes 13:241–267

Fowler HJ, Kilsby CG (2003) Implications of changes in seasonal and annual extreme rainfall. Geophys Res Lett 30(13):1720

Francis D, Hengeveld H (1998) Extreme weather and climate change. Environmenting report. Authority of the Minister of the Environment

Friederichs P (2010) Statistical downscaling of extreme precipitation events using extreme value theory. Extremes 13:109–132

Furcolo P, Villani P, Rossi F (1995) Statistical analysis of the spatial variability of very extreme rainfall in the Mediterranean area. Paper presented at the US-Italia research workshop on the Hydrometeorology, Impacts, and Management of Extreme Floods. Perugia, Italia

García-Cueto OR, Santillán-Soto N (2012) Modeling extreme climate events: two case studies in Mexico. In: Druyan L (ed) Climate models. IntechOpen, Rijeka. https://doi.org/10.5772/31634

Giorgi F, Mearns LO (1991) Regional climate change. Rev Geophys 29(2):191–216

Gutmann ED, Rasmussen RM et al (2012) A Comparison of statistical and dynamical downscaling of winter precipitation over complex terrain. J Clim 25:262–281

Hashmi MZ, Shamseldin AY, Melville BW (2011) Comparison of SDSM and LARS-WG for simulation and downscaling of extreme precipitation events in a watershed. Stoch Environ Res Risk Assess 25:475–484

Hashmi MZ, Shamseldin AY, Melville BW (2012) Watershed scale climate change projections for use in hydrologic studies: exploring new dimensions. Ph.D. Thesis. The University of Auckland, Auckland, New Zealand

Hosking JRM, Wallis JR (1997) Regional frequency analysis: an approach based on L-moments. Cambridge University Press, Cambridge

Hu Y, Maskey S, Uhlenbrook S (2013) Downscaling daily precipitation over the Yellow River source region in China: a comparison of three statistical downscaling methods. Theor Appl Climatol 112:447–460

Huang J, Zhang J, Zhang Z, Sun S, Yao J (2012) Simulation of extreme precipitation indices in the Yangtze River basin by using statistical downscaling method (SDSM). Theor Appl Climatol 108:325–343

Huntingford C, Jones RG, Prudhomme C, Lamb R, Gash JHC, Jones DA (2003) Regional climate-model predictions of extreme rainfall for a changing climate. Q J R Meteorol Soc 129:1607–1621

Im IS, Jung W, Chang H, Bae DH, Kwon WT (2010) Hydroclimatological response to dynamically downscaled climate change simulations for Korean basins. Clim Change 100:485–508

Ishak AM, Bray M, Remesan R, Han D (2010) Estimating reference evapotranspiration using numerical weather modelling. Hydrol Process 24(24):3490–3509

IPCC (2007) Climate change 2007: synthesis report by the three working group contributions to the AR4. Geneva, Switzerland

IPCC (2013) Climate change 2013: the physical science basis. In: Stocker TF, Qin D, Plattner G-K, Tignor M, Allen SK, Boschung J, Nauels A, Xia Y, Bex V, Midgley PM (eds) Contribution of working group I to the fifth assessment report of the intergovernmental panel on climate change. Cambridge University Press, Cambridge

Jeong DI, St-Hilaire A, Ouarda TBMJ, Gachon P (2012) CGCM3 predictors used for daily temperature and precipitation downscaling in Southern Québec, Canada. Theor Appl Climatol 107:389–406

JWGV (2009) WWRP/WGNE joint working group on forecast verification research. http://www.cawcr.gov.au/projects/verification/. Retrieved on 25 July 2014

Katz RW (2010) Statistics of extremes in climate change. Clim Change 100:71–76

Khan MS, Coulibaly P, Dibike YB (2006) Uncertainty analysis of statistical downscaling methods. J Hydrol 319:357–382

Lambert JH, Matalas NC, Ling CW, Haimes YY (1994) Selection of probability distributions in characterizing risk of extreme events. Risk Anal 14(5):731–742

Landsea CW, Knaff JA (2000) How much skill was there in forecasting the very strong 1997–98 El Niño? Bull Am Meteorol Soc 81(9):2107–2119

Lavers D, Prudhomme C, Hannah DM (2010) Large-scale climate, precipitation and British river flows: identifying hydroclimatological connections and dynamics. J Hydrol 395:242–255

Li K, Qi J (2010) Statistical downscaling: a powerful tool for estimating global change impacts on regional scale. Paper presented at the conference on environmental science and information application technology. Wuhan, China

Madsen H, Pearson GP, Rosbjerg D (1997) Comparison of annual maximum series and partial duration series methods for modeling extreme hydrologic events: 2. Regional modeling. Water Resour Res 33(4):759–769

Mason SJ, Waylen P, Mimmack GM, Rajaratnam B, Harrison JM (1999) Changes in extreme rainfall events in South Africa. Clim Change 41:249–257

Mearns LO, Giorgi F, Whetton P, Pabon D, Hulme M, Lal M (2003) Guidelines for use of climate scenarios developed from regional climate model experiments by DDC of IPCC TGICA Final Version—10/30/03

Ministry of Environment (2008) Climate change effects and impacts assessment: A Guidance Manual for Local Government in New Zealand. 2nd edn. Retrieved from Wellington

Moreno JIL, Vicente-Serrano SM, Martínez MA, Beguería S, Kenawy A (2010) Trends in daily precipitation on the north eastern Iberian Peninsula, 1955–2006. Int J Climatol 30:1026–1041

Mujumdar PP, Ghosh S (2008) Modeling GCM and scenario uncertainty using a possibilistic approach: Application to the Mahanadi River, India. Water Resour Res 44:1–15

Mullan AB, Wratt DS, Renwick JA (2001) Transient model scenarios of climate changes for New Zealand. Weather Clim 21:3–34

Norbiato D, Borga M, Sangati M, Zanon F (2007) Regional frequency analysis of extreme precipitation in the eastern Italian Alps and the August 29, 2003—flash flood. J Hydrol 345:149–166

Pal I, Tabbaa AA (2009) Trends in seasonal precipitation extremes – an indicator of ‘climate change’ in Kerala, India. J Hydrol 367:62–69

Park JS, Jung HS (2002) Modelling Korean extreme rainfall using a Kappa distribution and maximum likelihood estimate. Theor Appl Climatol 72:55–64

Pattanaik DR, Rajeevan M (2010) Variability of extreme rainfall events over India during southwest monsoon season. Meteorol Appl 17:88–104

Pielke RA (2012) Regional climate downscaling: what’s the point? Eos 93(5):52–53

Pham HX, Shamseldin AY, Melville BW (2013) Statistical downscaling of daily precipitation using UKSDSM model. Paper presented at the Thai-Japan International Academic Conference. Osaka, Japan

Pham HX, Shamseldin AY, Melville BW (2014a) Statistical properties of partial duration series. J Hydrol Eng 19(4):807–815

Pham HX, Shamseldin AY, Melville BW (2014b) Statistical properties of partial duration series and its implication in frequency analysis. J Hydrol Eng 19(7):1471–1480

Prudhomme C, Reynard N, Crooks S (2002) Downscaling of global climate models for flood frequency analysis: where are we now? Hydrol Process 16:1137–1150

Randall DA, Wood RA et al (2007) Chapter 8 Climate models and their evaluation. In: Solomon S, Qin D, Manning M (eds) IPCC fourth assessment report: climate change 2007, working group I (AR4 WGI). Cambridge University Press, Cambridge

Rummukainen M (2010) State-of-the-art with regional climate models. Adv Rev WIRES Clim Change 1:82–96

Schmidli J, Frei C (2005) Trends of heavy precipitation and wet and dry spells in Switzerland during the 20th century. Int J Climatol 25:753–771

Sunyer MA, Madsen H, Ang PH (2012) A comparison of different regional climate models and statistical downscaling methods for extreme rainfall estimation under climate change. Atmos Res 103:119–128

Taylor KE, Stouffer RJ, Meehl GA (2012) An overview of CMIP and the experiment design. Bull Am Meteorol Soc 93(4):485–498

Timbal B, Li ZL, Fernandez E (2008) The Bureau of Meteorology Statistical Downscaling Model Graphical User Interface: user manual and software documentation. Australian Government Bureau of Meteorology, Melbourne

Von Storch H (1995) Statistical analysis in climate research. Part III—fitting statistic models. Cambridge University Press, Cambridge, pp 143–192

Wang X, Yang T, Shao Q, Acharya K, Wang W, Yu Z (2012) Statistical downscaling of extremes of precipitation and temperature and construction of their future scenarios in an elevated and cold zone. Stoch Environ Res Risk Assess 26:405–418

Wilby R, Dawson C, Barrow E (2002) SDSM—a decision support tool for the assessment of regional climate change impacts. Environ Model Softw 17(2):145–157

Wilby RL, Charles SP et al (2004) Guidelines for use of climate scenarios developed from statistical downscaling methods by the Task Group on Data and Scenario Support for Impacts and Climate Analysis (TGICA)

Wilby RL, Hay LE, Leavesley GH (1999) A comparison of downscaled and raw GCM output: implications for climate change scenarios in the San Juan River basin, Colorado. J Hydrol 225:67–91

Willems P, Vrac M (2011) Statistical precipitation downscaling for small-scale hydrological impact investigations of climate change. J Hydrol 402:193–205

Xu Z, Yang Z-L (2012) An improved dynamical downscaling method with GCM bias corrections and its validation with 30 years of climate simulations. J Clim 25:6271–6286

Xue Y, Vasic R et al (2007) Assessment of dynamic downscaling of the continental U.S. regional climate using the Eta/SSiB Regional climate model. J Clim 20:4172–4193

Yang T, Li H, Wang W, Xu C-Y, Yi Z (2012) Statistical downscaling of extreme daily precipitation, evaporation, and temperature and construction of future scenarios. Hydrol Process 26:3510–3523

Yang T, Shao Q, Hao Z-C, Chen X, Zhang Z, Xu C-Y et al (2010) Regional frequency analysis and spatio-temporal pattern characterization of rainfall extremes in the Pearl River Basin, China. J Hydrol 380:386–405

Yoon J-H, Leung LR, Correia J (2012) Comparison of dynamically and statistically downscaled seasonal climate forecasts for the cold season over the United States. J Geophys Res 117:1–17

Zhang C (2016a) Dynamic downscaling of the climate for the Hawaiian islands. Part I: present day. J Clim 29(8):3027–3048

Zhang C (2016b) Dynamic downscaling of the climate for the Hawaiian islands. Part II: projection for the late Twenty-first century. J Clim 29(23):8333–8354

Zhang J (2010) Improving on estimation for the generalized Pareto distribution. Technometrics 52(3):335–339

Zin WZW, Jamaludin S, Deni SA, Jemain AA (2010) Recent changes in extreme rainfall events in Peninsular Malaysia: 1971–2005. Theor Appl Climatol 99:303–314

Zvi AB (2009) Rainfall intensity–duration–frequency relationships derived from large partial duration series. J Hydrol 367:104–114

Acknowledgements

The authors would like to acknowledge the Data Access Integration (DAI, see http://quebec.ccsn.ca/DAI/), the Canadian Climate Impacts and Scenarios project (CICS, http://www.cics.uvic.ca/scenarios/), the National Institute of Weather and Atmospheric Research (NIWA, http://www.niwa.co.nz/climate/) for providing the data and to the National Centre for Supercomputing Application (NCSA) and the North American Regional Climate Change Assessment Programme (NARCCAP) for technical support. The authors would also like to thank the anonymous reviewers for their valued comments and constructive suggestions.

Cite this article

Pham, H.X., Shamseldin, A.Y. & Melville, B.W. Projection of future extreme precipitation: a robust assessment of downscaled daily precipitation. Nat Hazards 107, 311–329 (2021). https://doi.org/10.1007/s11069-021-04584-1

DOI: https://doi.org/10.1007/s11069-021-04584-1