Abstract

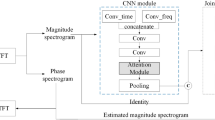

This paper proposes a CNN-based structure for the time-frequency localization of information in Persian speech recognition. Research has shown that the spectrotemporal plasticity of the receptive fields of some neurons in the mammalian primary auditory cortex and midbrain provides localization capabilities that improve recognition performance. Over the past few years, much work has been done to localize time-frequency information in ASR systems using the spatial or temporal invariance properties of methods such as HMMs, TDNNs, CNNs, and LSTM-RNNs. However, most of these models have a large number of parameters and are challenging to train. To address this, we present a structure called the Time-Frequency Convolutional Maxout Neural Network (TFCMNN), in which parallel time-domain and frequency-domain 1D-CMNNs are applied simultaneously and independently to the spectrogram; their outputs are then concatenated and fed jointly to a fully connected maxout network for classification. To improve the performance of this structure, we use recently developed methods and models such as dropout, maxout, and weight normalization. Two sets of experiments were designed and carried out on the FARSDAT dataset to compare the performance of this model with that of conventional 1D-CMNN models. According to the experimental results, the average recognition score of the TFCMNN models is about 1.6% higher than that of the conventional 1D-CMNN models. In addition, the average training time of the TFCMNN models is about 17 h shorter than that of the conventional models. Therefore, consistent with findings reported in other sources, time-frequency localization in ASR systems increases system accuracy and speeds up the training process.
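The data flow described in the abstract can be illustrated with a small forward-pass sketch. It is a toy illustration under stated assumptions, not the authors' implementation: the layer sizes, the single convolutional layer per branch, the function names (`maxout_conv1d`, `maxout_dense`, `tfcmnn_forward`, `init_params`), and the random weights are all hypothetical. Pooling, dropout, weight normalization, biases, and training are omitted.

```python
import math
import random


def rand_weights(rng, *shape):
    """Nested lists of small random weights with the given shape."""
    if len(shape) == 1:
        return [rng.uniform(-0.1, 0.1) for _ in range(shape[0])]
    return [rand_weights(rng, *shape[1:]) for _ in range(shape[0])]


def maxout_conv1d(frames, pieces):
    """Slide k linear filters along `frames`; keep the max response per position.

    frames: list of positions, each a feature vector (list of numbers).
    pieces: k filters, each a list of `width` weight vectors.
    """
    width = len(pieces[0])
    out = []
    for start in range(len(frames) - width + 1):
        window = frames[start:start + width]
        responses = [
            sum(w * v
                for wvec, fvec in zip(piece, window)
                for w, v in zip(wvec, fvec))
            for piece in pieces
        ]
        out.append(max(responses))  # maxout: max over the k linear pieces
    return out


def maxout_dense(x, units):
    """Fully connected maxout layer; each unit holds k weight vectors."""
    return [max(sum(w * v for w, v in zip(wvec, x)) for wvec in unit)
            for unit in units]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def init_params(rng, n_freq, n_time, width=3, k=2, n_maps=2,
                hidden=4, n_classes=5):
    """Hypothetical toy sizes, chosen only to keep the example small."""
    joint = n_maps * ((n_time - width + 1) + (n_freq - width + 1))
    return {
        "time": rand_weights(rng, n_maps, k, width, n_freq),
        "freq": rand_weights(rng, n_maps, k, width, n_time),
        "dense": rand_weights(rng, hidden, k, joint),
        "out": rand_weights(rng, n_classes, hidden),
    }


def tfcmnn_forward(spec, params):
    """spec[f][t]: rows are frequency bands, columns are time frames."""
    time_frames = [list(col) for col in zip(*spec)]  # positions along time
    freq_bands = [list(row) for row in spec]         # positions along frequency
    # The two branches see the same input and do not share weights.
    t_feat = [a for m in params["time"] for a in maxout_conv1d(time_frames, m)]
    f_feat = [a for m in params["freq"] for a in maxout_conv1d(freq_bands, m)]
    joint = t_feat + f_feat                          # concatenate branch outputs
    hidden = maxout_dense(joint, params["dense"])
    logits = [sum(w * h for w, h in zip(wvec, hidden))
              for wvec in params["out"]]
    return softmax(logits)


rng = random.Random(0)
spec = rand_weights(rng, 8, 6)  # toy 8-band x 6-frame "spectrogram"
probs = tfcmnn_forward(spec, init_params(rng, n_freq=8, n_time=6))
```

In this sketch `probs` is a list of class posteriors, one per hypothetical output class. The time branch slides its filters along the frame axis while spanning all frequency bands, and the frequency branch does the reverse, so each branch localizes information along one axis before the joint maxout layer combines them.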


Acknowledgements

The authors thank Ms. Soraya Rahimi, Ms. Fatemeh Maghsoud Lou, and Mr. Arya Aftab for their valuable contributions to implementing and analyzing the performance of CNNs, maxout networks, and the dropout training method. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.



About this article

Cite this article

Dehghani, A., Seyyedsalehi, S.A. Time-Frequency Localization Using Deep Convolutional Maxout Neural Network in Persian Speech Recognition. Neural Process Lett 55, 3205–3224 (2023). https://doi.org/10.1007/s11063-022-11006-1


DOI: https://doi.org/10.1007/s11063-022-11006-1