Abstract

In this paper, a novel adaptive sampled-data observer design based on radial basis function neural networks (RBFNNs) is studied for a class of nonlinear systems with unknown Prandtl–Ishlinskii hysteresis and unknown unmatched disturbances. To begin with, we investigate a sampled-data nonlinear system and present sufficient conditions under which it is uniformly ultimately bounded (UUB). Then, an adaptive sampled-data observer is designed to estimate the unknown states of the nonlinear system, with the unknown hysteresis and the unknown disturbances approximated by RBFNNs. We also give the learning laws for the RBFNN weights and prove that the estimation errors of the states and the weights are UUB, based on the obtained sufficient conditions and a specially constructed Lyapunov–Krasovskii functional. Finally, the effectiveness of the proposed design method is verified by numerical simulations.


1 Introduction

Because hysteresis is an important nonlinear phenomenon that exists widely in practical systems, the control of nonlinear systems with hysteresis has been a challenging and worthwhile research topic [1]. Effects such as inaccuracies, oscillations and instability caused by the non-differentiability of hysteresis may gradually deteriorate system performance [2, 3]. Recently, numerous adaptive control schemes have been developed for uncertain nonlinear systems with unknown backlash-like hysteresis. In [4, 5], an adaptive state feedback control and an adaptive fuzzy output feedback control, respectively, were designed for a class of uncertain nonlinear systems preceded by unknown backlash-like hysteresis. Note that the controllers designed in [4,5,6] are based on backlash-like hysteresis; other hysteresis patterns remain to be analyzed. The authors of [7] developed an adaptive neural output feedback control scheme for nonlinear systems with unknown Prandtl–Ishlinskii (PI) hysteresis. Additionally, there exist other control and tracking methods for systems with this type of hysteresis, such as adaptive robust output feedback control and robust adaptive backstepping control [8,9,10,11].

In many cases, the states of a control system are unmeasurable, whereas the system outputs are measurable only at sampling instants. In particular, for a networked control system (NCS), the outputs are usually acquired by data acquisition at sampling instants. Therefore, the design of sampled-data observers is more significant and challenging than that of traditional observers. For a continuous linear system, an observer can be designed on the basis of its exact discretization model. However, for a continuous nonlinear system, it is usually difficult to obtain an exact discretization model, so this design method cannot be extended to continuous nonlinear systems. Recently, researchers have devoted considerable attention to sampled-data observer design for nonlinear systems and have developed three categories of design methods: design based on approximate discretization models [12, 13], continuous design followed by discretization [14, 15], and continuous-discrete design [16,17,18,19,20,21]. Inspired by Chen and Ge [7], we extend sampled-data observer design to nonlinear systems with unknown hysteresis by the third method, because with this method the sampled data can be used directly to update the observer without discretizing the nonlinear system.

In practical engineering applications, many systems contain nonlinearities and uncertainties. In addition, unmatched time-varying disturbances are unavoidable and can make the whole system unstable [22,23,24]. To this end, RBFNNs and fuzzy logic systems, which possess superior approximation and adaptation capabilities, have been employed to compensate for the uncertainties and the unmatched time-varying disturbances [25,26,27,28].

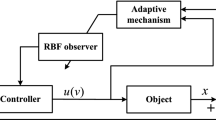

Although research on the control and application of hysteresis has been carried out in recent years, few of the results in the above references are combined with sampled-data observers. In this paper, we consider sampled-data observer design for a class of nonlinear systems with unknown hysteresis and unknown unmatched disturbances based on RBFNNs. The main contributions of this paper are summarized as follows. (1) We investigate a sampled-data nonlinear system and present sufficient conditions under which the considered system is UUB. (2) Continuous observers are designed for a class of nonlinear systems with unknown hysteresis and unknown disturbances, which are approximated by RBFNNs. The sampled measurements are used to update the observer whenever they are available. (3) By constructing a Lyapunov–Krasovskii functional, sufficient conditions are derived to guarantee that the observation errors are UUB. Compared with [7], the hysteresis and the disturbances are handled differently, the restriction on the constant control gain parameter is relaxed, and the problem of parameter selection is solved.

The rest of this paper is organized as follows. In Sect. 2, the problem statement, some assumptions, and the control objective are described. Section 3 describes the design procedure of the adaptive sampled-data observer by using RBFNNs. In Sect. 4, an example is used to illustrate the validity of the proposed design methods. Some conclusions are given in Sect. 5.

2 Problem Formulations and Preliminaries

In this paper, our purpose is to design an adaptive sampled-data observer for the following system

where \(x(t) = [x_1(t), x_2(t), \ldots, x_n(t)]^T \in R^n\) is the state vector of the system, with \(\bar{x}_i(t) = [x_1(t), x_2(t), \ldots, x_i(t)]^T \in R^i\) \((i = 1,2,\ldots,n)\), \(u(t) \in R\) is the input, and the system output \(y(t) \in R\) is sampled at the time instants \(t_k\), where \(\{t_k\}\) is a strictly increasing sequence satisfying \(\lim_{k \rightarrow \infty} t_k = \infty\) and \(T = t_{k+1} - t_k\) is the sampling period. The functions \(f_i(\bar{x}_i(t))\) \((i = 1, 2, \ldots, n)\) are known smooth nonlinear functions, \(d_i(\bar{x}_i(t),t) \in R\) \((i = 1, 2, \ldots, n)\) denote unknown time-varying unmatched disturbances, \(b \in R\) \((b \ne 0)\) represents an unknown but bounded constant control gain, and \(\omega(u(t)) \in R\) represents an unknown PI hysteresis, whose model is given by [29]

where r is a threshold, p(r) is a given density function and satisfies \(p(r) > 0\) and \(\int _0^\infty r p(r)dr < \infty \), \({p_0} = \int _0^{r_1} p (r)dr\) is a constant and depends on the density function, and \(r_1\) denotes the upper limit of the integration. Let \(f_r: R \rightarrow R\) be defined by

The play operator \({F_r}[u]({t})\) is given by

with \({t_i} < t \le {t_{i + 1}}\) and \(0 \le i \le N - 1\), where \(0 = {t_0}< {t_1}< \cdots < {t_N} = {t_E}\) is a partition of \([0, {t_E}]\) such that the function u(t) is monotone on \((t_i, t_{i+1}]\), and \(C[0,{t_E}]\) is a set of bounded continuous functions on \([0,{t_E}]\). For any input \(u(t) \in C[0,{t_E}]\), the play operator is Lipschitz continuous [29].
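The play operator and the PI model described above can be illustrated numerically. The following Python sketch works under stated assumptions: it uses the commonly cited forms \(f_r(v,w) = \max(v-r, \min(v+r, w))\) and \(\omega(u)(t) = p_0u(t) - \int_0^{r_1} p(r)F_r[u](t)\,dr\), which are assumed here rather than taken from the displays of this paper. The names `play_step`, `pi_hysteresis` and `example_density`, the uniform threshold grid, the rectangle-rule quadrature, and the sinusoidal test input are all choices made for the example; the density and the upper limit 100 are the ones used in the simulation section.

```python
import numpy as np

def play_step(u_now, w_prev, r):
    """One update of the play operator: f_r(u, w) = max(u - r, min(u + r, w))."""
    return np.maximum(u_now - r, np.minimum(u_now + r, w_prev))

def pi_hysteresis(u, r_grid, density):
    """Discretised PI output p0*u - sum_j p(r_j) F_{r_j}[u] dr for a sampled input u."""
    dr = r_grid[1] - r_grid[0]
    p = density(r_grid)
    p0 = np.sum(p) * dr                      # p0 = integral of p(r) over [0, r1]
    w = np.zeros_like(r_grid)                # play states start from w = 0
    out = np.empty_like(u)
    for k, uk in enumerate(u):
        w = play_step(uk, w, r_grid)         # one play operator per threshold r_j
        out[k] = p0 * uk - np.sum(p * w) * dr
    return out

def example_density(r):
    """Density p(r) = 0.8 exp(-0.067 (r - 1)^2)."""
    return 0.8 * np.exp(-0.067 * (r - 1.0) ** 2)

t = np.linspace(0.0, 10.0, 1001)
u = 2.0 * np.sin(t)                          # illustrative input, not from the paper
r = np.linspace(0.0, 100.0, 2001)            # thresholds up to the integration limit
omega = pi_hysteresis(u, r, example_density)
```

Each threshold carries its own play state, so the output at a given input value depends on the input history, which is the memory effect that makes the hysteresis non-differentiable and hard to invert.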

The system (1) can also be expressed as

where \(\delta (u(t)) = (b{p_0} - {b_0})u(t)\) and \({b_0}\) is a design parameter.

We make the following assumptions to facilitate analysis.

Assumption 1

([6]) There exist constants \({l_{i1}}\)\((i = 1,2, \ldots , n)\) such that the following inequalities

hold.

Assumption 2

For the nonlinear system (3), the input \(u(t) \in C[0,{t_E}]\), thus, there exists an unknown positive constant \({\sigma _0}\) such that \(\left| { \delta (u(t))} \right| \le {\sigma _0}\).

Remark 1

Although the parameter b is unknown, we can select the design parameter \({b_0}\) to approach \(b{p_0}\). In other words, the design parameter \({b_0}\) should be selected appropriately to achieve better state estimation performance.

The following lemmas are needed, which can be found in [7, 30].

Lemma 2.1

([30]) Let \(M \in {R^{n \times n}}\) and \(\gamma \) denote a positive definite matrix and a positive real number, respectively. The vector function \(\varphi (t) \) is defined on the interval \([0,\gamma ]\) and is integrable. Then, we have
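For reference, the inequality usually stated under these hypotheses is Jensen's integral inequality. The block below gives that standard form; it is assumed, not confirmed, to be the statement intended by the lemma.

```latex
\gamma \int_0^{\gamma} \varphi^T(s)\, M\, \varphi(s)\, ds
\;\ge\;
\left( \int_0^{\gamma} \varphi(s)\, ds \right)^{T} M
\left( \int_0^{\gamma} \varphi(s)\, ds \right)
```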

Lemma 2.2

([7]) Let f(Z) be a continuous function on a compact set \(\Omega \). Then f(Z) can be approximated by an RBFNN, that is,

where \(Z = [z_1, z_2, \ldots, z_m]^T \in \Omega \subset R^m\) and \(\hat{W} \in R^q\) are the input vector and the weight vector of the RBFNN, respectively, \(S(Z) = [S_1(Z), S_2(Z), \ldots, S_q(Z)]^T \in R^q\) is the basis function vector, and \(\varsigma > 0\) is the approximation error. The optimal weight \(W^*\) of the RBFNN is given by

By using the optimal weight, we have

where \({\varsigma ^*}\) is the optimal approximation error, and \(\bar{\varsigma }> 0\) is the upper bound of the approximation error.
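The approximation property in Lemma 2.2 can be illustrated with a small numerical experiment. The sketch below assumes Gaussian basis functions, nine evenly spaced centres, a width of 0.7, and a scalar target \(0.1\sin(z)\) on \([-2, 2]\); none of these choices come from the paper. The least-squares weights play the role of \(W^*\) on the sampled points only, and `max_err` is an empirical stand-in for the bound \(\bar{\varsigma }\).

```python
import numpy as np

def gaussian_basis(Z, centers, width):
    """S(Z): one Gaussian bump per centre; Z has shape (N, m), centers (q, m)."""
    d2 = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * width ** 2))           # shape (N, q)

# Sample points of the compact set Omega = [-2, 2] (assumed for illustration).
Z = np.linspace(-2.0, 2.0, 200).reshape(-1, 1)
f = 0.1 * np.sin(Z[:, 0])                              # illustrative target function

centers = np.linspace(-2.0, 2.0, 9).reshape(-1, 1)    # q = 9 nodes (assumed)
S = gaussian_basis(Z, centers, width=0.7)

# Least-squares fit: the weights minimising the error over the sampled points.
W, *_ = np.linalg.lstsq(S, f, rcond=None)
max_err = np.max(np.abs(S @ W - f))                    # largest residual on the grid
```

In the observer itself the weights are not fitted offline in this way; they are adapted online by the learning laws, and the fit here only shows that a weight vector with small residual exists for a smooth target.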

Lemma 2.3

([31]) There exist real numbers \(c_1\), \(c_2\) and real-valued function \(\Delta (x,y)>0\) such that the following inequality holds:

For a sampled-data nonlinear system, we give the definition of UUB and sufficient conditions of UUB.

Definition 1

For the following sampled-data nonlinear system

where \(x(t)\in R^n\) is the state of the system, and \(g(\cdot )\) is a continuous function with \(g(0)=0\). Denote the solution to (5) with respect to the initial conditions \(x_0\) as x(t). If there exists a constant \(b_1>0\) and a constant \(T'(x_0, b_1)\) such that

then, the system (5) is UUB.

Lemma 2.4

For the nonlinear sampled-data system (5), if there exists a Lyapunov function V(x(t)) defined on the interval \([{t_0},\infty )\) such that

and

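hold, where \({\alpha _1}\), \({\beta _1}\) and C are three positive real numbers, then the system (5) is UUB. Moreover, we have \({\lim _{t \rightarrow \infty }}V(x(t)) \le \frac{2- {\alpha _2}}{{1- {\alpha _2}}}\frac{C}{{{\alpha _1}}}\), where \({\alpha _2} = (1 - \frac{{{\beta _1}}}{{{\alpha _1}}}){e^{ - {\alpha _1}T}} + \frac{{{\beta _1}}}{{{\alpha _1}}}\).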

Proof

Multiplying both sides of the inequality (6) by \({e^{{\alpha _1}t}}\), we have

Then,

Letting \(t=t_{k+1}\), we obtain

where \({\alpha _2} = (1 - \frac{{{\beta _1}}}{{{\alpha _1}}}){e^{ - {\alpha _1}T}} + \frac{{{\beta _1}}}{{{\alpha _1}}}\). Since \(\alpha _1>\beta _1\), we have \(\alpha _2<1\). From (8), it follows that

Substituting (9) into (7) results in

Thus, \({\lim _{t \rightarrow \infty }}V(x(t)) \le \frac{2- {\alpha _2}}{{1 - {\alpha _2}}}\frac{C}{{{\alpha _1}}}\), and the sampled-data nonlinear system (5) is UUB. \(\square \)
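The bound in Lemma 2.4 can be checked on a scalar comparison system. The sketch below assumes that condition (6) has the form \(\dot{V}(t) \le -\alpha_1 V(t) + \beta_1 V(t_k) + C\) on \([t_k, t_{k+1})\) with \(\alpha_1 > \beta_1\), which is consistent with the definition of \(\alpha_2\) in the proof, and it iterates the equality case exactly at the sampling instants. The function name and the numerical values are illustrative only.

```python
import math

def sampled_comparison(alpha1, beta1, C, T, v0, steps):
    """Iterate the equality case of dV/dt = -alpha1*V + beta1*V(t_k) + C at t_k."""
    decay = math.exp(-alpha1 * T)
    alpha2 = (1.0 - beta1 / alpha1) * decay + beta1 / alpha1
    v = v0
    for _ in range(steps):
        # exact solution over one sampling interval with V(t_k) held constant
        v = alpha2 * v + (C / alpha1) * (1.0 - decay)
    bound = (2.0 - alpha2) / (1.0 - alpha2) * C / alpha1
    return v, alpha2, bound

v_end, alpha2, bound = sampled_comparison(
    alpha1=2.0, beta1=1.0, C=1.0, T=0.1, v0=50.0, steps=2000
)
```

For these values the sampled sequence settles at \(C/(\alpha_1 - \beta_1) = 1\), well inside the bound of the lemma, which suggests that the bound is conservative but valid for this case.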

3 Adaptive Sampled-Data Observer Design

Since the play operator \({F_r}[u](t)\) is continuous and the density function is integrable, it is concluded that the PI model is continuous. In order to design the adaptive sampled-data observer, we use RBFNNs to approximate the unknown time-varying unmatched disturbances \({\nu _i}{d_i }({{\bar{x}}_{i}}(t),t)\)\((i = 1,2, \ldots , n)\) and unknown function \({\nu _0}b{d_0}(u(t))\).

According to Lemma 2.2, we have

where \({\nu _i} > 0\) (\(i=0,1,\ldots ,n\)) are some design parameters.

Then, substituting (10) and (11) into the system (3), we have

where \({\varsigma _i ^*}\) (\(i=0,1,\ldots ,n\)) are the optimal approximation errors, and \(\varphi _i ({{ \bar{x}}_i}(t))\) \((i = 1,2, \ldots , n)\) and S(u(t)) are basis function vectors, which are selected such that the following conditions

hold for some positive real numbers \({l_{i2}}\)\((i = 1,2, \ldots , n)\).

Let \({D_i} = \frac{1}{{{\nu _i}}}{\varsigma _i}^* \), \((i = 1,2, \ldots , n - 1)\) and \({D_n} = \delta (u(t)) + \frac{1}{{{\nu _n}}}{\varsigma _n}^*+{\frac{1}{{{\nu _0 }}}{\varsigma _0 }^* } \). Then, the system (12) can be expressed as

From Assumption 2 and the definitions of \({D_i}\) and \({D_n}\), we obtain \(\left| D_i \right| \le \sigma_{i1}\) with \(\sigma_{i1} > 0\) \((i = 1,2, \ldots , n)\).

Now, we present the following dynamical system to estimate the unknown states of the nonlinear sampled-data system (14).

where \({e_1}({t_k})={x_1}({t_k})-{{\hat{x}}_1}({t_k})\), and \({\hat{x}_i}(t)\), \({\hat{\bar{x}}_i}(t)\), \(\hat{W}\), \(\hat{\theta }_i\) \((i = 1,2, \ldots , n)\) are the estimates of \({x_i}(t)\), \({\bar{x}_i}(t)\), \(W^*\), \(\theta ^*_i\), respectively, and \(\Gamma \ge 1 \) and \({k_i}>0\) \((i=1, 2, \ldots , n)\) are the design parameters.
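To make the idea of a continuous observer driven by sampled output errors concrete, the sketch below simulates a deliberately simplified second-order case. It is not the observer (15): the plant \(\dot{x}_1 = x_2\), \(\dot{x}_2 = -3\sin (x_1) + u\) is a hypothetical stand-in without hysteresis, disturbances, or RBFNN terms, the correction gains \(\Gamma ^i k_i\) follow the usual high-gain pattern and are assumed, and forward-Euler integration is used only for brevity. The numerical values of \(\Gamma \), \(k_i\), T and the initial conditions are borrowed from the simulation section.

```python
import numpy as np

def simulate(Gamma=2.0, k=(1.5, 1.5), T=0.1, dt=1e-3, t_end=20.0):
    """Return the norm of the estimation error along a forward-Euler simulation."""
    hold = int(round(T / dt))                 # Euler steps per sampling interval
    x = np.array([-1.0, 1.0])                 # plant state; x1 is measured at t_k only
    xh = np.array([1.0, 3.0])                 # observer state
    e1_held = 0.0
    errs = []
    for step in range(int(round(t_end / dt))):
        t = step * dt
        if step % hold == 0:                  # sampling instant t_k: refresh e1(t_k)
            e1_held = x[0] - xh[0]
        u = np.cos(2.0 * t)                   # illustrative input
        dx = np.array([x[1], -3.0 * np.sin(x[0]) + u])
        # The observer runs continuously but is corrected with the held error only.
        dxh = np.array([
            xh[1] + Gamma * k[0] * e1_held,
            -3.0 * np.sin(xh[0]) + u + Gamma ** 2 * k[1] * e1_held,
        ])
        x, xh = x + dt * dx, xh + dt * dxh
        errs.append(np.linalg.norm(x - xh))
    return np.array(errs)

errs = simulate()
```

Between sampling instants the correction term is constant, so no discretization of the nonlinear plant model is needed; only the measurement enters in sampled form.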

From (14)–(15), the estimation error can be obtained.

where \({e_i}(t) = {x_i}(t) - {\hat{x}_i}(t)\), \({\tilde{f}_i} = {f_i}({\bar{x}_i}(t)) - {f_i}({\hat{\bar{x}}_i}(t))\), \({\tilde{\varphi }_i} = {\varphi }_i({\bar{x}_i}(t)) - {\varphi }_i({\hat{\bar{x}}_i}(t))\), \(\tilde{W} = {W^*} - \hat{W}\), \({{\tilde{\theta }}_i} = {\theta _i}^* - {{\hat{\theta }}_i}\). Consider the following coordinate transformation

After transformation, the system (16) can be rewritten as

We can also obtain the following compact form of the system (17).

where \(\vartheta = [\vartheta_1(t), \vartheta_2(t), \ldots, \vartheta_n(t)]^T\), \(\hat{k} = (k_1, k_2, \ldots, k_n)^T\), \(\tilde{K} = \hat{k}(\vartheta_1(t) - \vartheta_1(t_k))\), \(\tilde{F} = [\frac{\tilde{f}_1}{\Gamma^0}, \frac{\tilde{f}_2}{\Gamma^1}, \ldots, \frac{\tilde{f}_n}{\Gamma^{n-1}}]^T\),

\(\tilde{W}_\lambda = \left[ \begin{array}{c} 0\\ \vdots \\ 0\\ \frac{1}{\nu_0 \Gamma^{n-1}} \tilde{W}^T S(u(t)) \end{array} \right] \in R^{n \times 1}\), \(A = \left[ \begin{array}{cccc} -k_1 & 1 & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots \\ -k_{n-1} & 0 & \cdots & 1\\ -k_n & 0 & \cdots & 0 \end{array} \right]\), \(\tilde{D} = [\frac{D_1}{\Gamma^0}, \frac{D_2}{\Gamma^1}, \ldots, \frac{D_n}{\Gamma^{n-1}}]^T\), \(\tilde{\Phi} = [\frac{1}{\nu_1 \Gamma^0}\theta_1^{*T}\tilde{\varphi}_1, \frac{1}{\nu_2 \Gamma^1}\theta_2^{*T}\tilde{\varphi}_2, \ldots, \frac{1}{\nu_n \Gamma^{n-1}}\theta_n^{*T}\tilde{\varphi}_n]^T\), \(\tilde{D}_\lambda = [\frac{1}{\nu_1 \Gamma^0}\tilde{\theta}_1^T \varphi_1(\hat{\bar{x}}_1(t)), \frac{1}{\nu_2 \Gamma^1}\tilde{\theta}_2^T \varphi_2(\hat{\bar{x}}_2(t)), \ldots, \frac{1}{\nu_n \Gamma^{n-1}}\tilde{\theta}_n^T \varphi_n(\hat{\bar{x}}_n(t))]^T\), and the gains \(k_i > 0\) \((i = 1,2, \ldots, n)\) are chosen such that the polynomial \(H(s) = s^n + k_1 s^{n-1} + \cdots + k_{n-1}s + k_n\) is Hurwitz. Thus, there exists a symmetric positive definite matrix P \((P = P^T > 0)\) such that the following matrix inequality holds,
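A matrix P of this kind can be computed numerically. The sketch below assumes the common choice of solving the Lyapunov equation \(A^TP + PA = -I\) for a Hurwitz A, which is one way to satisfy a matrix inequality of this type; the exact form of (19) is not assumed. The helper names `gain_matrix` and `solve_lyapunov` are hypothetical, and a P obtained from a matrix inequality solver may differ from the one computed here.

```python
import numpy as np

def gain_matrix(k):
    """A with first column -k and ones on the superdiagonal."""
    n = len(k)
    A = np.zeros((n, n))
    A[:, 0] = -np.asarray(k, dtype=float)
    A[:-1, 1:] += np.eye(n - 1)
    return A

def solve_lyapunov(A, Q):
    """Solve A^T P + P A = -Q through the Kronecker (vectorised) form."""
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A.T) + np.kron(A.T, I)
    P = np.linalg.solve(K, -Q.flatten()).reshape(n, n)
    return 0.5 * (P + P.T)                    # symmetrise against round-off

A = gain_matrix([1.5, 1.5])                   # H(s) = s^2 + 1.5 s + 1.5
is_hurwitz = bool(np.all(np.linalg.eigvals(A).real < 0))
P = solve_lyapunov(A, np.eye(2))
```

The characteristic polynomial of A is exactly H(s), so checking the eigenvalues of A is equivalent to checking that H(s) is Hurwitz.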

The adaptive laws of the weights \({\hat{W}}\) and \({\hat{\theta }_i}\) are designed as follows,

where \({\Lambda _0} = {\Lambda _0}^T > 0\), \({\Lambda _i} = {\Lambda _i}^T > 0\) are some constant diagonal design matrices, and \({\ell _0} > 0\), \({\ell _i} > 0\), \({\chi _0}>0\), \({\chi _i}>0\) are some parameters to be designed.

Next, we give the definition of adaptive sampled-data observer of the nonlinear system (1).

Definition 2

For the nonlinear system (1), design the system (15), and the adaptive laws of the weights (20) and (21), if there exist two positive real numbers \(\delta _0\) and \(T_1>0\), such that

then, the system (15) with the adaptive laws (20) and (21) is called an adaptive sampled-data observer of the system (1).

Theorem 1

Consider the system (1) with conditions (4) and (13). If \({k_i} > 0 (i = 1,2, \ldots , n)\) are selected such that the condition (19) holds, and the sampling period T, the parameters \(\phi \), \(\Delta _1\), \(\Delta _2\), \({\ell _0}\), \({\ell _i}\) satisfy the following conditions

and

and

then, the state observation error system (18) is UUB, or, equivalently, the system (15) with the adaptive laws (20) and (21) is an adaptive sampled-data observer of the system (1), where \(L_0=24\Gamma {{\bar{p}}_1}n\bar{k}\), \(L_1=\frac{{{\Delta _2}}}{2}{\upsilon _0}^2{{\bar{\chi }}^2}+{\frac{{{\Delta _2}}}{2}}{\eta _0}^2{{\bar{\chi }}^2}\), \({\upsilon _0} = \left\| {{S}(u(t))} \right\| \), \({\eta _i} = \left\| {\varphi _i({\hat{\bar{x}}_i}(t))} \right\| \), \({\eta _0} = \max ({\eta _i})\), \(\underline{\nu }= \min ({\nu _0}, \nu _i)\), \(\bar{\chi }= \max ({\chi _0}, \chi _i)\), \({\bar{p}_1} = {\lambda _{\max }}({P^T}P)\), \({\bar{p}_2} = {\lambda _{\max }}(P)\), \({\bar{p}_3} = {\lambda _{\min }}(P)\), \(\bar{k} = \max ({k_i}^2)\), \(\bar{l} =\max \left( {\sqrt{\sum \limits _{i = 1}^n {i{l_{i1}}^2} }, \sqrt{\sum \limits _{i = 1}^n {i{l_{i2}}^2} }} \right) \).

Proof

Consider the following Lyapunov–Krasovskii functional

where \({V_1}(t) = {\vartheta ^T}P\vartheta \), \({V_2}(t) =\frac{1}{2} {{{\tilde{W}}}^T{\Lambda _0}^{ - 1}{{\tilde{W}}}}\), \({V_3}(t)=\frac{1}{2}\sum \limits _{i = 1}^n {\tilde{\theta }_i^{T}} {\Lambda _i}^{ - 1}{\tilde{\theta }_i}\), \({V_4}(t) = \int _{t - T}^t {\int _\tau ^t {\left[ {{\vartheta _1}{{(s)}^2} + {\vartheta _2}{{(s)}^2}} \right] } } ds\,d\tau \), \(t \in [{t_{k_0}},\infty )\), and \( {k_0} = \min \{ k:T < {t_k}\} \).

Then, along the trajectory of the system (18), the derivative of \({V_1}(t)\) is given as follows:

Based on Assumption 1 and Lemma 2.3, the following inequalities hold.

where \(\varpi_i = \left\| \theta_i^{*T} \right\|\), \(\varpi_0 = \max(\varpi_i)\).

According to Lemma 2.1, we can obtain

where \({l_1} = \max \left( {{l_{11}}, {l_{12}}} \right) \), \(\Gamma \ge \sqrt{\left( {1 + \frac{{{\varpi _0}^2}}{{{\nu _1}^2}}} \right) {l_1}^2 } \).

It follows from (32) and (33) that,

From (26)–(31), and (34), we have

where \(\Gamma \ge 8(2\bar{l}\sqrt{{{\bar{p}}_1}}(1 + \frac{{{\varpi _0}}}{{{\nu _1}}})+4{{\bar{p}}_1}+ \frac{{{\Delta _1}{\upsilon _0}^2{{\bar{p}}_1}}}{{{{\underline{\nu }} ^2}}}+\frac{{{\Delta _1}{\eta _0}^2{{\bar{p}}_1}}}{{{\underline{\nu }}^2}})\).

In order to deal with \({\tilde{W}}\) and \({\tilde{\theta }_i}\), the derivatives of \({V_2}(t)\) and \({V_3}(t)\) are given as follows.

Substituting (20)–(21) into (36)–(37) results in

where \({e_1}({t_k}) = {\vartheta _1}({t_k})\). Then, according to \({\tilde{W}} = {W}^* - {\hat{W}}\), \({{\tilde{\theta }}_i} = {\theta _i}^* - {{\hat{\theta }}_i}\) and Lemma 2.3, we have

Considering (33), (41), and (42), we have

where \(\Gamma \ge 8{L_1}\).

Note that when \(t \in [{t_k},{t_{k + 1}})\), we have \(t-T < {t_k}\). Thus, from (35) and (44), it follows that

Further, the derivative of \(V_4(t)\) is given by

Next, for \(n \ge 2\), we have

Substituting (45) and (47) into (25), we have

Note that

Then, from (48) and (49), we can obtain

Since the sampling period T, and the parameters \(\phi \), \(\Delta _1\), \(\Delta _2\), \({\ell _0}\), \({\ell _i}\) satisfy the conditions (22)–(24), then,

where \({C_1} =\frac{1}{2}\sum \limits _{i = 1}^n {{{\sigma _{i1}}^2}} + {\frac{{{\ell _0}}}{2}{{\left\| {{W}^*} \right\| }^2}}+\sum \limits _{i = 1}^n {\frac{{{\ell _i}}}{2}{{\left\| {{\theta _i}^*} \right\| }^2}}\). In order to ensure the error system is UUB, the corresponding high-gain design parameter \(\Gamma \) should be chosen such that

Note that \({\phi _1}=(1 - \frac{1}{2}){e^{ - \phi T}} + \frac{1}{2} < 1\). Then, from the differential inequality (51) and Lemma 2.4, we have

Thus, we obtain that the system (18) is UUB, i.e., \({\lim _{t \rightarrow \infty }}V(t) \le \frac{{{(2- \phi _1)C_1}}}{{(1 - {\phi _1})\phi }}\). On the one hand, \( \mathop {\lim }\limits _{t \rightarrow \infty } {V_1}(t) \le \mathop {\lim }\limits _{t \rightarrow \infty } V(t) \le \frac{{{(2- \phi _1)C_1}}}{{(1 - {\phi _1})\phi }}\), on the other hand, \({V_1}(t) \ge {{\bar{p}}_3}{\vartheta ^T}\vartheta \ge \frac{{{{\bar{p}}_3}{e^T}e}}{{{\Gamma ^{2(n - 1)}}}}\). Thus, we have
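Combining the two bounds just stated, the conclusion can be written out explicitly. The block below is a reconstruction from the inequalities in this paragraph, using only the symbols defined above, and is offered as a sketch of the final estimate, not as the paper's own display.

```latex
\lim_{t \to \infty} \| e(t) \|^{2}
\;\le\; \frac{\Gamma^{2(n-1)}}{\bar{p}_3} \lim_{t \to \infty} V_1(t)
\;\le\; \frac{\Gamma^{2(n-1)} (2 - \phi_1)\, C_1}{\bar{p}_3\, (1 - \phi_1)\, \phi}
```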

This completes the proof. \(\square \)

Remark 2

The design method can be extended to nonlinear systems with other hysteresis inputs and is not necessarily limited to the system described by (1). On the one hand, \(C_1\) in (51) is determined by the parameters \(\sigma _{i1}\), \({\ell _0}\), \({\ell _i}\). Since the values of these parameters can be adjusted, a small value of \(C_1\) can be guaranteed. On the other hand, we can properly select the design parameters \(\Gamma \), \({k_i}\), \({l_{i1}}\), \({l_{i2}}\), \({\upsilon _0}\), \({\eta _i}\), \({\nu _0}\), \({\nu _i}\), \({\ell _0}\), \({\ell _i}\), \({ \chi _0 }\), \({ \chi _i }\), \({\Delta _1}\) and \({\Delta _2}\). Then, based on these parameters, the sampling period T and \(\phi \) can be found such that the error system converges to a relatively small neighborhood of the origin.

Remark 3

In [7], the estimated state \(\hat{x}(t)\) is introduced into the RBFNNs to approximate the hysteresis and the uncertainties. Therefore, the nonlinear system considered there contains not only the unknown state x(t) but also the estimated state \(\hat{x}(t)\). In this paper, by contrast, we use only the system state x(t) to approximate the hysteresis and the disturbances, so the nonlinear system (12) contains x(t) but not \(\hat{x}(t)\). Moreover, compared with [7], we relax the restriction on the constant control gain parameter b by using the approximation formulation (10), and solve the problem of parameter selection by introducing the high-gain parameter \(\Gamma \).

4 Simulation Example

In this section, a simulation example is used to demonstrate the effectiveness of the proposed scheme.

Example 1

Consider the nonlinear system with unknown hysteresis and unknown unmatched disturbance

where \(f(\bar{x}(t)) =-3 \sin ({x_1}(t))\) and \(d({{\bar{x}}}(t),t)=0.1\sin (x_1)e^{-0.1x_2}\). The PI hysteresis output \(\omega (u(t))\) is determined by (2), and the density function is chosen as \(p(r) = 0.8{e^{ - 0.067{{(r - 1)}^2}}}\). We choose \(R = 100\) as the upper limit of integration.

Considering (15), the adaptive sampled-data observer is constructed as

and

where \({e_1}({t_k})=x_1({t_k}) - {{\hat{x}}_1}({t_k})\), and the update laws of the weights are given by (20) and (21). In the following simulation, we choose \(\Gamma =2\), \({k_1}={k_2}=1.5\), \(u(t)=(12\cos (3t)-4)/(1 + 6t)+\cos (2t)\), \({b_0}=1\), \(\nu _0=0.8\), \(\nu _1=1.5\), \({\Lambda ^1 _{0}}={\Lambda ^2 _{0}}={\Lambda _{l}}=0.004\), \({\ell ^1 _{0}}={\ell ^2 _{0}}={\ell _{l}}=0.05\), \({\chi ^1 _{0}}=60\), \({\chi ^2 _{0}}=55\), \({\chi _{l}}=(0.5,40,0.5,60,0.5)\) and \({\Delta _1}={\Delta _2}=100\). The initial conditions are \(({x_1}(0),{x_2}(0))= (-1,1)\), \(({\hat{x}_1}(0),{\hat{x}_2}(0))=(1,3)\), \(({{\hat{W}}^1_{0}}(0), {{\hat{W}}^2_{0}}(0))=(0.05, 0.05)\) and \({{\hat{\theta }}_l}(0)=(0,0.01,0,-0.05,0)\). By simple computation, we have \(P=[0.8482, -0.5093; -0.5093, 1.0689]\), \(\lambda _{\max }(P)=1.4797\) and \(\lambda _{\min }(P)=0.4374\). The sampling period is \(T=0.1\) s. Figures 1 and 2 illustrate the trajectories of \(\left\| {\hat{W}} \right\| \) and \(\left\| {\hat{\theta }} \right\| \), respectively. In Figs. 3 and 4, the estimation results for the two unmeasurable states are presented, respectively. The trajectories of the state estimation errors and of the Lyapunov function V(t) are depicted in Figs. 5 and 6, respectively.
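The reported eigenvalues of P can be checked directly. The short Python snippet below recomputes them from the matrix quoted above and, as an additional sanity check that assumes A is the \(2 \times 2\) gain matrix built from \(k_1 = k_2 = 1.5\), tests whether \(A^TP + PA\) is negative definite. The variable names are chosen for this example.

```python
import numpy as np

# Matrix P as quoted in the simulation settings.
P = np.array([[0.8482, -0.5093],
              [-0.5093, 1.0689]])
lam_min, lam_max = np.linalg.eigvalsh(P)      # eigvalsh returns ascending order

# Gain matrix for n = 2 with k1 = k2 = 1.5 (assumed structure of A).
A = np.array([[-1.5, 1.0],
              [-1.5, 0.0]])
lyap = A.T @ P + P @ A
lyap_max_eig = np.linalg.eigvalsh(lyap).max() # negative if A^T P + P A < 0
```

Rounded to four decimals, the computed eigenvalues agree with the values 1.4797 and 0.4374 reported in the text.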

5 Conclusion

In this paper, a novel adaptive sampled-data observer design based on RBFNNs was proposed for nonlinear systems with unknown PI hysteresis and unknown unmatched disturbances. First, RBFNNs were used to approximate the unknown time-varying unmatched disturbances and the unknown hysteresis of the systems. Then, a sampled-data observer was constructed to estimate the unmeasured states, and the learning laws for the RBFNN weights were given. Based on a Lyapunov–Krasovskii functional and the corresponding sufficient conditions, we demonstrated that the observer errors are UUB. Finally, the effectiveness of the design scheme was verified by an illustrative simulation example. In the future, the developed adaptive sampled-data observer design method will be extended to MIMO nonlinear systems with hysteresis and multiple uncertainties.

References

Su C, Stepanenko Y, Svoboda J, Leung TP (2002) Robust adaptive control of a class of nonlinear systems with unknown backlash-like hysteresis. IEEE Trans Autom Control 45(12):2427–2432

Su C, Wang Q, Chen X, Rakheja S (2006) Adaptive variable structure control of a class of nonlinear systems with unknown Prandtl–Ishlinskii hysteresis. IEEE Trans Autom Control 50(12):2069–2074

Tao G, Kokotovic PV (1995) Adaptive control of plants with unknown hysteresis. IEEE Trans Autom Control 40(2):200–212

Zhou J, Wen C, Zhang Y (2004) Adaptive backstepping control of a class of uncertain nonlinear systems with unknown backlash-like hysteresis. IEEE Trans Autom Control 49(10):1751–1759

Wu H, Liu Z, Zhang Y, Chen CL (2019) Adaptive fuzzy output feedback quantized control for uncertain nonlinear hysteretic systems using a new feedback-based quantizer. IEEE Trans Fuzzy Syst 27(9):1738–1752

Li Y, Tong S, Li T (2012) Adaptive fuzzy output feedback control of uncertain nonlinear systems with unknown backlash-like hysteresis. Inf Sci 198:130–146

Chen M, Ge S (2015) Adaptive neural output feedback control of uncertain nonlinear systems with unknown hysteresis using disturbance observer. IEEE Trans Ind Electron 62(12):7706–7716

Zhang X, Li Z, Su C, Lin Y, Fu Y (2016) Implementable adaptive inverse control of hysteretic systems via output feedback with application to piezoelectric positioning stages. IEEE Trans Ind Electron 62(9):5733–5743

Wang Q, Su C (2006) Robust adaptive control of a class of nonlinear systems including actuator hysteresis with Prandtl–Ishlinskii presentations. Automatica 42(5):859–867

Wang H, Shen H, Xie X, Hayat T, Alsaadi FE (2018) Robust adaptive neural control for pure-feedback stochastic nonlinear systems with Prandtl–Ishlinskii hysteresis. Neurocomputing 314:169–176

Liu S, Su C, Li Z (2014) Robust adaptive inverse control of a class of nonlinear systems with Prandtl–Ishlinskii hysteresis model. IEEE Trans Autom Control 59(8):2170–2175

Arcak M, Nešić D (2004) A framework for nonlinear sampled-data observer design via approximate discrete-time models and emulation. Automatica 40:1931–1938

Sayyaddelshad S, Gustafsson T (2015) \(H_{\infty }\) observer design for uncertain nonlinear discrete-time systems with time-delay: LMI optimization approach. Int J Robust Nonlinear Control 25(10):1514–1527

Postoyan R, Nešić D (2012) On emulated nonlinear reduced-order observers for networked control systems. Automatica 48(4):645–652

Ahrens J, Tan X, Khalil H (2009) Multirate sampled-data output feedback control with application to smart material actuated systems. IEEE Trans Autom Control 54(11):2518–2529

Dinh TN, Andrieu V, Nadri M, Serres U (2015) Continuous-discrete time observer design for Lipschitz systems with sampled measurements. IEEE Trans Autom Control 60(3):787–792

Yu G, Shen Y (2019) Event-triggered distributed optimisation for multi-agent systems with transmission delay. IET Control Theory Appl 13(14):2188–2196

Shen Y, Zhang D, Xia X (2017) Continuous observer design for a class of multi-output nonlinear systems with multi-rate sampled and delayed output measurements. Automatica 75:127–132

Zhang D, Shen Y (2017) Continuous sampled-data observer design for nonlinear systems with time delay larger or smaller than the sampling period. IEEE Trans Autom Control 62(11):5822–5829

Shen Y, Zhang D, Xia X (2016) Continuous output feedback stabilization for nonlinear systems based on sampled and delayed output measurements. Int J Robust Nonlinear Control 26(14):3075–3087

Mu C, Wang K (2019) Aperiodic adaptive control for neural-network-based nonzero-sum differential games: a novel event-triggering strategy. ISA Trans 92:1–13

Mu C, Zhang Y (2020) Learning-based robust tracking control of quadrotor with time-varying and coupling uncertainties. IEEE Trans Neural Netw Learn Syst 31(1):259–273

Fazel Zarandi MH, Soltanzadeh S, Mohammadzadeh A, Castillo O (2019) Designing a general type-2 fuzzy expert system for diagnosis of depression. Appl Soft Comput J 80:329–341

Homeira S, Eghbal G (2019) Developing an online general type-2 fuzzy classifier using evolving type-1 rules. Int J Approx Reason 113:336–353

Lei X, Lu P (2014) The adaptive radial basis function neural network for small rotary-wing unmanned aircraft. IEEE Trans Ind Electron 61(9):4808–4815

Mohammadzadeh A, Ghaemi S (2017) Optimal synchronization of fractional-order chaotic systems subject to unknown fractional order, input nonlinearities and uncertain dynamic using type-2 fuzzy CMAC. Nonlinear Dyn 88:2993–3002

Mohammadzadeh A, Sehraneh G, Okyay K, Sohrab K (2019) Robust predictive synchronization of uncertain fractional-order time-delayed chaotic systems. Soft Comput 23:6883–6898

Mohammadzadeh A, Ghaemi S (2018) Robust synchronization of uncertain fractional-order chaotic systems with time-varying delay. Nonlinear Dyn 93(4):1809–1821

Brokate M, Sprekels J (1996) Hysteresis and phase transitions. Springer, New York

Liu Y, Wang Z, Liu X (2006) On global exponential stability of generalized stochastic neural networks with mixed time-delays. Neurocomputing 70(1–3):314–326

He W, Guo J, Xiang Z (2019) Disturbance-observer-based sampled-data adaptive output feedback control for a class of uncertain nonlinear systems. Int J Syst Sci 50(9):1771–1783

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61374028).


Cite this article

Li, P., Shen, Y. Adaptive Sampled-Data Observer Design for a Class of Nonlinear Systems with Unknown Hysteresis. Neural Process Lett 52, 561–579 (2020). https://doi.org/10.1007/s11063-020-10275-y


DOI: https://doi.org/10.1007/s11063-020-10275-y