Abstract

In this paper, the inner–outer synchronization problem of dynamical networks on time scales is studied. This kind of network synchronization means that two dynamical networks achieve inner and outer synchronization simultaneously. By designing suitable distributed pinning impulsive controllers, the inner–outer synchronization target is realized. Based on the Lyapunov function method and the mathematical induction approach, two sufficient criteria are given for inner–outer synchronization of two networks with identical and non-identical topologies. Due to the structure of time scales, the derived results can be applied to the inner–outer synchronization problems of continuous/discrete networks and networks on hybrid time domains. A numerical simulation example is given to illustrate the effectiveness of the derived results.

1 Introduction

It is well known that many practical systems in fields such as science, nature and engineering can be described by models of complex networks [1,2,3,4,5,6,7,8,9]. As an interesting and important collective behavior of complex dynamical networks, synchronization inside a network, which is also called “inner synchronization”, has attracted considerable attention over the past years [10,11,12,13,14,15,16]. Another kind of synchronization, named “outer synchronization” between two networks, was first proposed in [17]. Outer synchronization between two networks does occur in practice, for example in prey-predator communities [17] and AIDS [18]. Since then, a great number of results on outer synchronization between two networks have been obtained [19,20,21,22]. Unfortunately, outer synchronization between two networks does not ensure that each network achieves inner synchronization, and vice versa. Moreover, the inner and outer synchronization problems of two networks are usually studied separately. A natural question is therefore whether inner and outer synchronization of two dynamical networks can be achieved simultaneously. To address this question, this paper proposes a kind of network synchronization called inner–outer synchronization.

Almost all existing results concern continuous or discrete networks, and the two cases are usually studied separately. However, different nodes in some networks can communicate with each other on an arbitrary time domain, so it is meaningful and necessary to study both continuous and discrete networks under a uniform framework. In 1988, the time-scale theory was introduced by Stefan Hilger to unify the theory of difference equations and differential equations. To date, complex dynamical networks on time scales have received continued attention [23,24,25,26,27,28,29,30]. However, to the best of our knowledge, the existing results only concern inner synchronization of networks on time scales. Numerous network synchronization problems on time scales therefore remain open, such as achieving outer synchronization on time scales, and achieving inner and outer synchronization between two networks on time scales simultaneously.

Recently, inner or outer network synchronization problems have been investigated with different control schemes, such as adaptive distributed control [31, 32], distributed impulsive control [33,34,35,36,37], pinning control [38,39,40], pinning impulsive control [30, 41], etc. In [41], the authors proposed a new control strategy, named pinning impulsive control, to solve the stabilization problem of nonlinear time-varying time-delay dynamical networks. In [30], a different pinning impulsive control strategy was proposed to solve the synchronization problem of linear dynamical networks on time scales, in which the number of nodes to be controlled can vary from one impulsive instant to the next. By designing an adaptive pinning impulsive controller, the outer synchronization problem between drive and response networks was investigated in [19].

Motivated by the aforementioned discussions, by designing proper distributed pinning impulsive controllers, this paper investigates the inner–outer synchronization problems of two dynamical networks with identical and non-identical topologies, which have never been studied before. The main contributions can be summarized as follows:

- (i) Different from the results in [23,24,25,26,27,28, 30], this paper is the first to investigate the inner–outer synchronization problem of dynamical networks on time scales, in which inner synchronization and outer synchronization are achieved simultaneously. Moreover, with the proposed control strategies, inner synchronization and outer synchronization can also be realized separately.

- (ii) Compared with the pinning impulsive control strategy in [30], the controllers designed here allow each distributed controller to use information from its neighboring nodes, which makes them more feasible to implement in some practical applications. In addition, the designed control schemes require only a subset of the nodes to be controlled at each impulsive instant, hence they can further reduce the control cost.

- (iii) The derived results are general, since they can also be applied to the inner–outer synchronization problems of continuous/discrete networks and networks on hybrid time domains.

This paper is organized as follows: In Sect. 2, we recall some preliminary knowledge. In Sect. 3, the inner–outer synchronization problem of two dynamical networks on time scales is formulated. By designing suitable distributed pinning impulsive controllers, two inner–outer synchronization criteria for two dynamical networks with identical and non-identical topologies on time scales are given in Sect. 4. In Sect. 5, we give an illustrative simulation example, which is followed by a brief conclusion in Sect. 6.

Notations. In the sequel, the following notations will be used: \(\mathbf {R}_{+}\), \(\mathbf {Z}\), \(\mathbf {N}\), \(\mathbf {N_{+}}\) represent the set of all non-negative real numbers, the set of all integer numbers, the set of all natural numbers and the set of all positive integer numbers, respectively; \(\mathbf {R}^{n}\) denotes the \(n\)-dimensional Euclidean space with the Euclidean norm \(\parallel \cdot \parallel \); \(\sharp M\) denotes the number of elements of a finite set M; \(\overline{M}\) denotes the complementary set of the set M; \(\mathbf {0}\) represents the null vector with proper dimension; the Kronecker product of matrices \(X\in \mathbf {R}^{m\times n}\) and \(Y\in \mathbf {R}^{p\times q}\) is denoted as \(X\otimes Y\in \mathbf {R}^{mp\times nq}\); \(\exp (z)\) represents the usual exponential function \(e^{z}\). A matrix A satisfying \(A>0\) means that it is positive definite.

2 Preliminaries

A time scale \(\mathbf {T}\) is an arbitrary nonempty closed subset of the set of real numbers \(\mathbf {R}\). When \(t>\inf \mathbf {T}\), the backward jump operator \(\rho :\mathbf {T}\rightarrow \mathbf {T}\) is defined as \(\rho (t)=\sup \{s\in \mathbf {T}:s<t\}\); when \(t<\sup \mathbf {T}\), the forward jump operator \(\sigma :\mathbf {T}\rightarrow \mathbf {T}\) is defined as \(\sigma (t)=\inf \{s\in \mathbf {T}:s>t\}\). If \(\rho (t)<t\), t is said to be left-scattered; if \(\rho (t)=t\), t is said to be left-dense; if \(\sigma (t)>t\), t is said to be right-scattered; if \(\sigma (t)=t\), t is said to be right-dense. The graininess function \(\mu :\mathbf {T}\rightarrow \mathbf {R_{+}}\) is defined by \(\mu (t):=\sigma (t)-t\). Let \(g:\mathbf {T}\rightarrow \mathbf {R}\). If there exists a number \(\delta \) such that, for any \(\varepsilon >0\), there is a neighborhood \(U_{\mathbf {T}}(=U\cap \mathbf {T})\) of t with \(\mid g(\sigma (t))-g(s)-\delta [\sigma (t)-s]\mid \le \varepsilon \mid \sigma (t)-s\mid \) for all \(s\in U_{\mathbf {T}}\), then g is said to be \(\varDelta \)-differentiable at t, and \(\delta \) is called the \(\varDelta \)-derivative of g at t, denoted by \(g^{\varDelta }(t)\).

Definition 1

[42] A function \(g:\mathbf {T}\rightarrow \mathbf {R}\) is called rd-continuous provided it is continuous at right-dense points and its left-sided limits exist (finite) at left-dense points. The set of all rd-continuous functions \(g:\mathbf {T}\rightarrow \mathbf {R}\) is denoted as \(C_{rd}(\mathbf {T},\mathbf {R})\).

Definition 2

[42] A function \(q:\mathbf {T}\rightarrow \mathbf {R}\) is called regressive, if for all \(t\in \mathbf {T}^{\kappa }, 1+\mu (t)q(t)\ne 0\), where the set \(\mathbf {T}^{\kappa }\) is defined by the formula: \(\mathbf {T}^{\kappa }=\mathbf {T}\setminus (\rho (\sup \mathbf {T}),\sup \mathbf {T}]\) if \(\sup \mathbf {T}<\infty \) and left-scattered, and \(\mathbf {T}^{\kappa }=\mathbf {T}\) if \(\sup \mathbf {T}=\infty \). \(\mathcal {R}=\mathcal {R} (\mathbf {T})=\mathcal {R}(\mathbf {T},\mathbf {R})\) denotes the set of all regressive and rd-continuous functions. A function \(q:\mathbf {T}\rightarrow \mathbf {R}\) is called positive regressive, if for all \(t\in \mathbf {T}^{\kappa },1+\mu (t)q(t)>0\). \(\mathcal {R^{+}}=\mathcal {R^{+}}(\mathbf {T}) =\mathcal {R^{+}}(\mathbf {T},\mathbf {R})\) denotes the set of all positive regressive and rd-continuous functions.

Definition 3

[42] If \(q\in \mathcal {R}\), then the exponential function is defined as \(e_{q}(t,s)=\exp (\displaystyle {\int _{s}^{t}\xi _{\mu (\tau )}(q(\tau ))\varDelta \tau })\), where \(s,t\in \mathbf {T}\), \(\displaystyle {\xi _\mu (p) =\frac{1}{\mu } \log (1+\mu p)}\) if \(\mu \ne 0\), \(\xi _\mu (p)=p\) if \(\mu =0\).

Lemma 1

[42] Let \(g\in C_{rd}(\mathbf {T},\mathbf {R})\) and \(q\in \mathcal {R^{+}}\). Then, for all \(t\in \mathbf {T}\), \(z^{\varDelta }(t)\le q(t)z(t)+g(t)\) implies that \(z(t)\le z(t_{0})e_{q}(t,t_{0}) +\displaystyle {\int ^{t}_{t_{0}}}e_{q}(t,\sigma (s))g(s)\varDelta s\).

Lemma 2

[43] Let \(U=(a_{ij})_{N\times N}\), \(M\in \mathbf {R}^{n\times n}\), \(x=(x_{1}^{T},\ldots ,x_{N}^{T})^{T}\), \(y=(y_{1}^{T},\ldots ,y_{N}^{T})^{T}\), where \(x_{i}=(x_{i1},\ldots ,x_{in})^{T}\in \mathbf {R}^{n}\) and \(y_{i}=(y_{i1},\ldots ,y_{in})^{T}\in \mathbf {R}^{n}.\) If \(U=U^{T}\), and each row sum of U is zero, then \(x^{T}(U\otimes M)y=-\sum _{1\le i<j\le N}a_{ij}(x_{i}-x_{j})^{T}M(y_{i}-y_{j}).\)

3 Problem Statement

Consider the following two dynamical networks:

where \(i=1,2,\ldots ,N\); \(t\in {\mathbf {T}}\), \(\mathbf {T}\) is a time scale with \(\sup \mathbf {T}=\infty \) and \(\mu (t)\le \mu \), where \(\mu (t)\) is the graininess function, and \(\mu \ge 0\) is a constant; \(x_{i}(t)=(x_{i1}(t),\ldots ,x_{in}(t))^{T} \in \mathbf {R}^{n}\), \(y_{i}(t)=(y_{i1}(t),\ldots ,y_{in}(t))^{T}\in \mathbf {R}^{n}\) are the state vectors; matrices A, \(B\in \mathbf {R}^{n\times n}\); \(u_{i}\) and \(v_{i}\) are the control inputs; \(\varGamma =diag\{\alpha _{1}, \ldots ,\alpha _{n}\}>0\) is the matrix describing the inner-coupling between the subsystems at time t; \(C=(c_{ij})_{N\times N}\), \(D=(d_{ij})_{N\times N}\) are the coupling configuration matrices with zero-sum rows, and are defined as follows: if there is a connection from node j to node \(i\) (\(i\ne j\)), then \(c_{ij}>0\), \(d_{ij}>0\); otherwise \(c_{ij}=0\), \(d_{ij}=0\); \(\alpha >0\), \(\beta >0\) are the coupling strengths.

Remark 1

According to the structure of time scales, different types of networks can be formulated. For example, when \(\mathbf {T}=\mathbf {R}\) or \(\mathbf {Z}\), networks (1) and (2) can be transformed into the following continuous or discrete forms:

Besides continuous and discrete networks, networks (1) and (2) can also describe other forms, such as systems on nonuniform discrete time domains and systems on mixed continuous/discrete time domains.

Let \(e_{i}(t)=y_{i}(t)-x_{i}(t)\). The existing results mainly focus on two cases: (i) inner synchronization of a single dynamical network; (ii) outer synchronization between two dynamical networks, without considering inner synchronization of each network. Our goal is to design proper distributed pinning impulsive controllers that ensure networks (1) and (2) achieve inner–outer synchronization. The definition of inner–outer synchronization for networks (1) and (2) is given as follows.

Definition 4

Networks (1) and (2) are said to be inner–outer synchronized if there exist suitable controllers, such that for all \(i,j=1,2,\ldots ,N\), \(\displaystyle {\lim _{t\rightarrow \infty }}\parallel x_{i}(t)-x_{j}(t)\parallel =0\), \(\displaystyle {\lim _{t\rightarrow \infty }}\parallel y_{i}(t)-y_{j}(t)\parallel =0\), and \(\displaystyle {\lim _{t\rightarrow \infty }}\parallel e_{i}(t)\parallel =0\).

Remark 2

Since \(y_{i}(t)-y_{j}(t)=e_{i}(t)-e_{j}(t)+x_{i}(t)-x_{j}(t)\), inner synchronization of network (2) follows once network (1) is inner synchronized and the errors \(e_{i}(t)\) converge to zero. Therefore, the main objective is to design proper controllers that guarantee inner synchronization of network (1) and outer synchronization between networks (1) and (2). Similarly, it is also feasible to design suitable controllers that ensure inner synchronization of network (2) and outer synchronization between networks (1) and (2). These two strategies are analogous, so we only consider the first case in this paper.
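The three quantities in Definition 4 and the triangle-inequality argument behind Remark 2 can be checked numerically. The following is a minimal sketch, not part of the original analysis: the helper names `norm`, `diff` and `sync_errors` are hypothetical, and the data are the initial values listed in Sect. 5.

```python
import math

def norm(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(c * c for c in v))

def diff(a, b):
    """Componentwise difference a - b."""
    return [p - q for p, q in zip(a, b)]

def sync_errors(xs, ys):
    """Return (max ||x_i - x_j||, max ||y_i - y_j||, max ||e_i||) with e_i = y_i - x_i."""
    n = len(xs)
    inner_x = max(norm(diff(xs[i], xs[j])) for i in range(n) for j in range(n))
    inner_y = max(norm(diff(ys[i], ys[j])) for i in range(n) for j in range(n))
    outer = max(norm(diff(ys[i], xs[i])) for i in range(n))
    return inner_x, inner_y, outer

# Initial values x_1(0..5) and y_1(0..5) from the example in Sect. 5.
xs = [[0.1, 0.2], [-0.1, -0.1], [0.1, -0.1], [0.1, -0.1], [0.1, 0.1]]
ys = [[0.1, -0.1], [-0.1, -0.1], [0.1, -0.1], [0.1, 0.1], [0.1, -0.1]]

inner_x, inner_y, outer = sync_errors(xs, ys)
# ||y_i - y_j|| <= ||e_i|| + ||e_j|| + ||x_i - x_j||, so inner_y <= inner_x + 2 * outer.
bound_holds = inner_y <= inner_x + 2 * outer
```

Driving `inner_x` and `outer` to zero therefore forces `inner_y` to zero as well, which is why only the first strategy needs to be analyzed.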

To ensure that networks (1) and (2) can achieve inner–outer synchronization, the distributed pinning impulsive controllers are designed as follows:

where \(k\in \mathbf {N}\), the constants \(q_{1,k}\) and \(q_{2,k}\) are the impulsive control gains to be determined, and \(\delta (\cdot )\) is the Dirac delta function; the impulsive instant sequence \(\{t_{k}\}\) satisfies \(\{t_{k}\}\subset {\mathbf {T}}\), \(0=t_{0}<t_{1}<\cdots<t_{k}<t_{k+1}<\cdots \), and \(\displaystyle {\lim _{k\rightarrow \infty }}t_{k}=\infty \); the index set \(\mathcal {D}_{k}\) is defined as follows: if the nodes \(x_{i}\) and \(y_{i}\) are chosen to be controlled at \(t_{k}\), then \(i\in \mathcal {D}_{k}\), and \(\sharp \mathcal {D}_{k}=l_{k}\), where \(l_{k}\le N\).

Remark 3

In the distributed pinning impulsive control schemes (3) and (4), each node is allowed to utilize the information from its neighboring nodes, and only \(l_{k}\) nodes need to be controlled at each impulsive instant, so the designed controllers (3) and (4) are practically more feasible to implement than those control strategies in [30, 35, 36].

By the controller (3), network (1) can be transformed into the following form:

and by the controllers (3) and (4), the error network is formulated as follows:

where \(\varDelta x_{i}(t_{k})=x_{i}(t_{k}^{+})-x_{i}(t_{k}^{-})\), \(\varDelta e_{i}(t_{k})=e_{i}(t_{k}^{+})-e_{i}(t_{k}^{-})\). In this paper, we assume that for all \(k\in \mathbf {N}\), \(x_{i}(t_{k}^{-})=x_{i}(t_{k})\), \(y_{i}(t_{k}^{-})=y_{i}(t_{k})\), which clearly yields \(e_{i}(t_{k}^{-})=e_{i}(t_{k})\).

Let \(x=(x^{T}_{1},\ldots ,x^{T}_{N})^{T}\), \(e=(e^{T}_{1}, \ldots ,e^{T}_{N})^{T}\) and \(\vartheta =(\upsilon _{ij})_{Nn\times Nn}\) be a diagonal matrix with \(\upsilon _{ii}\) equaling 1 if \(i\in \mathcal {D}_{k}\) and \(\upsilon _{ii}\) equaling 0 otherwise. Then, \(x=x_{\mathcal {D}_{k}}+x_{\overline{{\mathcal {D}}}_{k}}\), \(e=e_{\mathcal {D}_{k}}+e_{\overline{{\mathcal {D}}}_{k}}\), where \(x_{\mathcal {D}_{k}}=\vartheta x\), \(e_{\mathcal {D}_{k}}=\vartheta e\). By this decomposition, systems (5) and (6) can be rewritten as the following forms.

where \(\varPhi _{1}=I_{N}\otimes A+\alpha (C\otimes \varGamma )\), \(\varPhi _{2}=I_{N}\otimes B+\beta (D\otimes \varGamma )\), \(\varPhi _{3}=\vartheta (I_{Nn}-C\otimes I_{n})\) and \(\varPhi _{4}=\vartheta (I_{Nn}-D\otimes I_{n})\).
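The decomposition \(x=x_{\mathcal {D}_{k}}+x_{\overline{{\mathcal {D}}}_{k}}\) can be illustrated with a short sketch. It assumes that \(\vartheta \) acts as a block-diagonal selector, with an \(n\times n\) identity block for every pinned node and a zero block otherwise; this reading of the \(Nn\times Nn\) matrix is an assumption, and the function names are hypothetical.

```python
def pinning_diagonal(pinned, N, n):
    """Diagonal of the Nn x Nn selector: n ones per pinned node, n zeros otherwise."""
    diag = []
    for i in range(1, N + 1):  # nodes are numbered 1..N as in the paper
        diag.extend([1 if i in pinned else 0] * n)
    return diag

def split_state(x, pinned, N, n):
    """Split the stacked state x into its pinned and unpinned parts."""
    d = pinning_diagonal(pinned, N, n)
    x_pinned = [di * xi for di, xi in zip(d, x)]
    x_free = [(1 - di) * xi for di, xi in zip(d, x)]
    return x_pinned, x_free

# Illustration with N = 5 nodes of dimension n = 2 and nodes 1, 3, 5 pinned.
x = [0.1, 0.2, -0.1, -0.1, 0.1, -0.1, 0.1, -0.1, 0.1, 0.1]
x_pinned, x_free = split_state(x, {1, 3, 5}, N=5, n=2)
```

Every entry of `x` appears in exactly one of the two parts, so `x_pinned` and `x_free` sum back to `x`.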

To simplify the presentation, in the sequel we let \(H^{\star }=H^{T}H\) and \(H^{*}=H^{T}+H\) for any matrix H.

4 Main Results

In this section, using the controllers (3) and (4), we first study the inner–outer synchronization problem of networks (1) and (2) with non-identical topologies (i.e., \(C\ne D\)). A sufficient condition is given as follows:

Theorem 1

Networks (1) and (2) can achieve inner–outer synchronization by the controllers (3) and (4), if there exist three positive constants \(\theta \), \(\gamma \), \(\varepsilon \), two sets of positive constants \(\{\eta _{k}\}\) and \(\{b_{k}\}\), \(k\in \mathbf {N}\), such that the following inequalities hold:

where \(\varPhi _{i}(i=1,\ldots ,4)\) are given in (8), \(\varOmega =U\otimes I_{n}\), and

Proof

Construct the Lyapunov function \(V(t)=V_{1}(t)+V_{2}(t)\), where \(V_{1}(t)=x^{T}(t)\varOmega x(t)\), \(V_{2}(t)=e^{T}(t)e(t)\). When \(t\ne t_{k}\), by calculating the \(\varDelta \)-derivative of \(V_{i}(t)~(i=1,2)\) along the trajectories of systems (7) and (8), we have from Theorem 1.20 in [42] that

According to the fact that \(x^{T}y+y^{T}x\le \theta x^{T}x+\frac{1}{\theta }y^{T}y\), where \(\theta >0\), and by (9)-(10), we can get

From (7), (8), and (11), (12) we can get

By Lemma 1, (14) and (15), we can get that for all \(t\in (t_{k},t_{k+1})_{\mathbf {T}}\),

Since \(\varepsilon >0\), we have

Thus,

For \(t=t_{k+1}\), if \(t_{k+1}\) is left-dense, then

If \(t_{k+1}\) is left-scattered, then

Thus, for all \(t\in (t_{k},t_{k+1}]_{\mathbf {T}}\),

Let \(\gamma _{0}=\exp (\gamma t_{1})\sup _{t \in [0,t_{1}]_{\mathbf {T}}}V(t)\). Then, for all \(t\in [0,t_{1}]_{\mathbf {T}}\), we have \(V(t)\le \gamma _{0}\exp (-\gamma t)\).

For \(t\in (t_{1},t_{2}]_{\mathbf {T}}\), by (13) and (16) we can get

Next, by the mathematical induction approach, we will prove that for all \(t\in (t_{k},t_{k+1}]_{\mathbf {T}}\), \(k\in \mathbf {N_{+}}\),

Suppose for all \(t\in (t_{k},t_{k+1}]_{\mathbf {T}}\) and \(k\le m\) (\(m\in \mathbf {N_{+}}\)), (17) holds. For \(k=m+1\) and \(t\in (t_{m+1},t_{m+2}]_{\mathbf {T}}\), by (13) and (16), we can get

Thus, for all \(t\in (t_{k},t_{k+1}]_{\mathbf {T}}\), \(k\in \mathbf {N_{+}}\), (17) holds, which implies

and hence we can get \(\displaystyle {\lim _{t\rightarrow \infty }}V(t)=0\).

By Lemma 2 we have, for all \(i,j=1,2,\ldots ,N\),

which implies \(\displaystyle {\lim _{t\rightarrow \infty }}\parallel x_{i}(t)-x_{j}(t)\parallel =0\) and \(\displaystyle {\lim _{t\rightarrow \infty }}\parallel e_{i}(t)\parallel =0\) for all \(i,j=1,2,\ldots ,N\). The proof is thus completed. \(\square \)
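The elementary inequality \(x^{T}y+y^{T}x\le \theta x^{T}x+\frac{1}{\theta }y^{T}y\) used in the proof follows from expanding a squared norm. The short derivation below is added for completeness and holds for any \(\theta >0\) and any vectors x, y of equal dimension.

```latex
0 \le \left\| \sqrt{\theta}\,x - \tfrac{1}{\sqrt{\theta}}\,y \right\|^{2}
  = \theta\,x^{T}x - \left(x^{T}y + y^{T}x\right) + \tfrac{1}{\theta}\,y^{T}y
\quad\Longrightarrow\quad
x^{T}y + y^{T}x \le \theta\,x^{T}x + \tfrac{1}{\theta}\,y^{T}y .
```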

The result in Theorem 1 concerns two networks with non-identical topologies (i.e., \(C\ne D\)). For networks (1) and (2) with identical topologies (i.e., \(C=D\)), a sufficient condition can be obtained similarly if inequalities (9)–(13) are replaced by the corresponding forms.

Theorem 2

Networks (1) and (2) can achieve inner–outer synchronization by the controllers (3) and (4), if there exist three positive constants \(\theta \), \(\gamma \), \(\varepsilon \), two sets of positive constants \(\{\eta _{k}\}\) and \(\{b_{k}\}\), \(k\in \mathbf {N}\), such that the following inequalities hold:

where \(\varPhi _{i}(i=1,\ldots ,3)\) are given in (8), \(\varOmega =U\otimes I_{n}\), and U is defined in Theorem 1.

Proof

The proof can be directly derived from Theorem 1, so it is omitted here. \(\square \)

Remark 4

According to Remark 1, networks (1) and (2) are general and can be transformed into other forms. Thus, the sufficient criteria of Theorem 1 and Theorem 2 also apply to the inner–outer synchronization problems of different types of networks. For the case \(\mathbf {T}=\mathbf {R}\), we have \(\mu (t)\equiv 0\) and \(e_{\varepsilon }(t_{k+1},t_{k})=e^{\varepsilon (t_{k+1}-t_{k})}\); for the case \(\mathbf {T}=h\mathbf {Z}\), where \(h>0\) is a constant, we have \(\mu (t)\equiv h\) and \(e_{\varepsilon }(t_{k+1},t_{k}) =(1+h\varepsilon )^{\frac{t_{k+1}-t_{k}}{h}}\).
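Both special cases come from Definition 3 with a constant rate: a continuous piece of length \(\ell \) contributes the factor \(e^{\varepsilon \ell }\), and each right-scattered point with graininess \(\mu \) contributes the factor \(1+\mu \varepsilon \). A minimal sketch of this computation follows; the function name and its argument layout are illustrative choices, not the paper's notation.

```python
import math

def exp_constant_rate(eps, dense_length, jumps):
    """Time-scale exponential e_eps(t, s) for a constant rate eps on [s, t).

    dense_length: total length of the continuous pieces between s and t.
    jumps: graininess values mu at the right-scattered points in [s, t).
    Assumes 1 + mu * eps > 0 for every jump (positive regressivity).
    """
    value = math.exp(eps * dense_length)
    for mu in jumps:
        value *= 1.0 + mu * eps
    return value

eps = 0.1
# T = R over an interval of length 0.5: purely continuous.
continuous_case = exp_constant_rate(eps, 0.5, [])
# T = hZ with h = 0.25 over an interval of length 0.5: two jumps of size h.
discrete_case = exp_constant_rate(eps, 0.0, [0.25, 0.25])
# Time scale of Sect. 5 between t_k = 0.5k and t_{k+1}: length 0.2 of continuous
# flow and one gap of length 0.3.
hybrid_case = exp_constant_rate(eps, 0.2, [0.3])
```

The first two values reproduce the closed forms in Remark 4, while the third equals \(e^{0.2\varepsilon }(1+0.3\varepsilon )\) for the hybrid time scale of the example.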

Remark 5

It is noted that the configuration matrices C and D need not be symmetric, diffusive, or irreducible, which means that networks (1) and (2) can be undirected or directed, and may also contain isolated nodes or clusters.

The results in Theorem 1 and Theorem 2 concern the situation in which networks (1) and (2) achieve inner and outer synchronization simultaneously. For the separate cases of inner synchronization of network (1) and outer synchronization between networks (1) and (2), we have the following two corollaries.

Corollary 3

Network (1) can achieve inner synchronization by the controller (3), if there exist two positive constants \(\gamma \), \(\varepsilon \), two sets of positive constants \(\{\eta _{k}\}\) and \(\{b_{k}\}\), \(k\in \mathbf {N}\), such that the following inequalities hold:

where \(\varPhi _{1}\) and \(\varPhi _{3}\) are given in (8), \(\varOmega =U\otimes I_{n}\), and U is defined in Theorem 1.

Proof

Construct the Lyapunov function candidate \(V(t)=x^{T}(t)\varOmega x(t)\). When \(t\ne t_{k}\), by calculating the \(\varDelta \)-derivative of V(t) along the trajectories of system (7), we have

By (23), we can get

From (7), (8), and (24) we can get

The rest of the proof is similar to that of Theorem 1, so it is omitted here. \(\square \)

Corollary 4

Networks (1) and (2) can achieve outer synchronization by the controller (4) with \(q_{1,k}=0\) for any k, if there exist three positive constants \(\theta \), \(\gamma \), \(\varepsilon \), two sets of positive constants \(\{\eta _{k}\}\) and \(\{b_{k}\}\), \(k\in \mathbf {N}\), such that the following inequalities hold:

where \(\varPhi _{1}\) and \(\varPhi _{2}\) are given in (8), \(\varOmega =U\otimes I_{n}\), and U is defined in Theorem 1.

Proof

Construct the Lyapunov function candidate \(V(t)=e^{T}(t)e(t)\). When \(t\ne t_{k}\), by calculating the \(\varDelta \)-derivative of V(t) along the trajectories of system (8), we have

According to the fact that \(x^{T}y+y^{T}x\le \theta x^{T}x+\frac{1}{\theta }y^{T}y\), where \(\theta >0\), and by (26) and (27), we can get

From (7), (8), and (28) we can get

The rest of the proof is similar to that of Theorem 1, so it is omitted here. \(\square \)

5 An Example

In this section, we give a simulation example to illustrate the effectiveness of the theoretical results.

Consider dynamical networks (1) and (2) with \(n=2\), \(N=5\), \(\alpha =\beta =0.1\),

the time scale is given as \(\mathbf {T} =\displaystyle {\bigcup _{j\in \mathbf {Z}}[0.5j-0.1,0.5j+0.1]}\), then, the graininess function \(\mu (t)\) is given as follows:

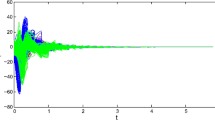
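For this time scale, the forward jump operator and graininess can be computed directly from the definitions in Sect. 2: \(\mu (t)=0\) on each interval except at its right endpoint \(0.5j+0.1\), where the next point of \(\mathbf {T}\) is \(0.5j+0.4\) and hence \(\mu (t)=0.3\). The sketch below is illustrative only; the function names and the floating-point tolerance `TOL` are assumptions.

```python
PERIOD, HALF_WIDTH, TOL = 0.5, 0.1, 1e-9

def in_time_scale(t):
    """True if t lies in some interval [0.5j - 0.1, 0.5j + 0.1]."""
    j = round(t / PERIOD)
    return abs(t - PERIOD * j) <= HALF_WIDTH + TOL

def sigma(t):
    """Forward jump operator on T = union of [0.5j - 0.1, 0.5j + 0.1]."""
    if not in_time_scale(t):
        raise ValueError("t is not a point of the time scale")
    j = round(t / PERIOD)
    right_end = PERIOD * j + HALF_WIDTH
    if abs(t - right_end) <= TOL:
        # Right-scattered point: jump to the left end of the next interval.
        return PERIOD * (j + 1) - HALF_WIDTH
    return t  # right-dense point

def graininess(t):
    """mu(t) = sigma(t) - t."""
    return sigma(t) - t
```

In particular \(\mu (t)\le 0.3\) for all \(t\in \mathbf {T}\), so the bound \(\mu (t)\le \mu \) required in Sect. 3 holds with \(\mu =0.3\), and every instant of the form \(0.5k\) belongs to \(\mathbf {T}\).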

The initial values are \(x_{1}(0)=(0.1,0.2)^{T}\), \(x_{2}(0)=-x_{5}(0)=(-0.1,-0.1)^{T}\), \(x_{3}(0)=x_{4}(0)=(0.1,-0.1)^{T}\), \(y_{1}(0)=y_{3}(0)=y_{5}(0)=(0.1,-0.1)^{T}\), \(y_{2}(0)=-y_{4}(0)=(-0.1,-0.1)^{T}\). Figures 1 and 2 show the state trajectories of networks (1) and (2) without any control, from which we can see that neither inner synchronization of network (1) nor outer synchronization between networks (1) and (2) is achieved.

To ensure networks (1) and (2) can achieve inner–outer synchronization, we design the distributed pinning impulsive controllers (3) and (4) with the control gains \(q_{1,2k}=-0.005\), \(q_{1,2k+1}=-0.45\) and \(q_{2,2k}=-0.1\), \(q_{2,2k+1}=-0.25\), and according to the form in [30], the impulsive sequence is chosen as \(t_{k}=0.5k~(k\in \mathbf {N})\). Let \(l_{2k}=3\), \(l_{2k+1}=5\), which means that 3 nodes are controlled at impulsive instants \(t_{2k}\), and 5 nodes are controlled at impulsive instants \(t_{2k+1}\). In the designed control scheme, the nodes \(x_{i}\) and \(y_{i}~(i=1,3,5)\) are chosen to be controlled at \(t_{2k}\), and the nodes \(x_{i}\), \(y_{i}~(i=1,\ldots ,5)\) are selected to be controlled at \(t_{2k+1}\).
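The alternating pinning scheme described above can be written as a small lookup. This is a sketch of the schedule only, using the gains, node sets and impulsive instants stated in the text; the function name `pinning_schedule` is hypothetical.

```python
def pinning_schedule(k):
    """Return (t_k, pinned node set, q_{1,k}, q_{2,k}) for impulse index k >= 0."""
    t_k = 0.5 * k
    if k % 2 == 0:
        # Even instants: only nodes 1, 3 and 5 are controlled (l_k = 3).
        pinned = {1, 3, 5}
        q1, q2 = -0.005, -0.1
    else:
        # Odd instants: all five nodes are controlled (l_k = 5).
        pinned = {1, 2, 3, 4, 5}
        q1, q2 = -0.45, -0.25
    return t_k, pinned, q1, q2

# First four impulses of the example.
schedule = [pinning_schedule(k) for k in range(4)]
```

Only three of the five nodes receive an impulse at every second instant, which is the source of the reduced control cost claimed in the Introduction.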

By using the Matlab LMI Toolbox to solve the inequalities in Theorem 1, we obtain \(\theta =0.05\), \(\gamma =0.01\), \(\varepsilon =0.1\), \(\eta _{2k}=3\), \(\eta _{2k+1}=0.25\), and, accordingly, \(b_{2k}=3\), \(b_{2k+1}=10~(k\in \mathbf {N})\). Therefore, networks (1) and (2) achieve inner–outer synchronization under the designed distributed pinning impulsive controllers; see Figs. 3 and 4 for illustration.

Trajectories \(\parallel x_{i}(t)-x_{j}(t)\parallel ~(i,j=1,\ldots ,5)\) of network (1) without control

Trajectories \(\parallel x_{i}(t)-x_{j}(t)\parallel ~(i,j=1,\ldots ,5)\) of closed-loop network (5)

Trajectories \(\parallel e_{i}(t)\parallel ~(i=1,\ldots ,5)\) of closed-loop network (6)

6 Conclusion

In this paper, we have proposed a kind of network synchronization, called inner–outer synchronization. Two dynamical networks achieve inner–outer synchronization when each of them achieves inner synchronization and outer synchronization between them is also achieved. By designing suitable distributed pinning impulsive controllers, we have studied the inner–outer synchronization problems of two dynamical networks with identical and non-identical topologies on time scales. According to the theory of time scales, by using the Lyapunov function method and the mathematical induction approach, we have established two sufficient conditions for inner–outer synchronization of two networks on time scales. It has been shown that the results in this paper are applicable not only to discrete/continuous dynamical networks, but also to networks on hybrid time domains. To illustrate the effectiveness of our results, a simulation example has been given. In future work, we will consider the effect of time delay on the synchronization results.

References

Strogatz S (2001) Exploring complex networks. Nature 410(6825):268–276

Barabasi A, Albert R (2002) Statistical mechanics of complex networks. Rev Mod Phys 74(1):47–97

Boccaletti S, Latora V, Moreno Y, Chavez M, Hwang D (2006) Complex networks, structure and dynamics. Phys Rep 424(4):175–308

Wang X, Chen G (2003) Complex networks, small-world, scale free and beyond. IEEE Circuits Syst Mag 3(1):6–20

Li H, Wang Y (2015) Controllability analysis and control design for switched Boolean networks with state and input constraints. SIAM J Control Optim 53(5):2955–2979

Liu X, Ho DWC, Cao J, Xu W (2016) Discontinuous observers design for finite-time consensus of multiagent systems with external disturbances. IEEE Trans Neural Netw Learn Syst 28(11):2826–2830

Liu X, Ho DWC, Song Q, Xu W (2018) Finite/fixed-time pinning synchronization of complex networks with stochastic disturbances. IEEE Trans Cybern 49(6):2398–2403

Nima M, Claudio D (2017) Agreeing in networks, unmatched disturbances, algebraic constraints and optimality. Automatica 75:63–74

Li H, Xie L, Wang Y (2016) On robust control invariance of Boolean control networks. Automatica 68:392–396

Acebrón JA, Bonilla LL, Vicente CJP, Ritort F, Spigler R (2005) The Kuramoto model: a simple paradigm for synchronization phenomena. Rev Mod Phys 77:137–185

DeLellis P, di Bernardo M, Liuzza D (2015) Convergence and synchronization in heterogeneous networks of smooth and piecewise smooth systems. Automatica 56(6):1–11

Chen Y, Yu W, Tan S, Zhu H (2016) Synchronizing nonlinear complex networks via switching disconnected topology. Automatica 70:189–194

Wu Y, Lu R, Shi P, Su H, Wu Z (2017) Adaptive output synchronization of heterogeneous network with an uncertain leader. Automatica 76:183–192

Tuna S (2017) Synchronization of harmonic oscillators under restorative coupling with applications in electrical networks. Automatica 75:236–243

Yang X, Lu J (2015) Finite-time synchronization of coupled networks with Markovian topology and impulsive effects. IEEE Trans Autom Control 61(8):2256–2261

Lu J, Zhong J, Li L, Ho D, Cao J (2015) Synchronization analysis of master-slave probabilistic Boolean networks. Sci Rep 5:13437

Li C, Sun W, Kurths J (2007) Synchronization between two coupled complex networks. Phys Rev E 76(4):046204

Li C, Xu C, Sun W, Xu J, Kurths J (2009) Outer synchronization of coupled discrete-time networks. Chaos 19(1):013106

Wu Z, Chen G, Fu X (2015) Outer synchronization of drive-response dynamical networks via adaptive impulsive pinning control. J Frankl Inst 352(10):4297–4308

Sun W, Chen Z, Lü J, Chen S (2012) Outer synchronization of complex networks with delay via impulse. Nonlinear Dyn 69(4):1751–1764

Shi H, Sun Y, Miao L, Duan Z (2016) Outer synchronization of uncertain complex delayed networks with noise coupling. Nonlinear Dyn 85(4):2437–2448

Lu W, Zheng R, Chen T (2016) Centralized and decentralized global outer-synchronization of asymmetric recurrent time-varying neural network by data-sampling. Neural Netw 75:22–31

Lu X, Wang Y, Zhao Y (2016) Synchronization of complex dynamical networks on time scales via Wirtinger-based inequality. Neurocomputing 216:143–149

Ali M, Yogambigai J (2016) Synchronization of complex dynamical networks with hybrid coupling delays on time scales by handling multitude Kronecker product terms. Appl Math Comput 291:244–258

Xiao Q, Zeng Z (2017) Scale-limited Lagrange stability and finite-time synchronization for memristive recurrent neural networks on time scales. IEEE Trans Cybern 47(10):2984–2994

Zhang Z, Liu K (2017) Existence and global exponential stability of a periodic solution to interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales. Neural Netw 24(5):427–439

Li Y, Yang L, Li B (2016) Existence and stability of pseudo almost periodic solution for neutral type high-order Hopfield neural networks with delays in leakage terms on time scales. Neural Process Lett 44(3):603–623

Ali MS, Yogambigai J (2019) Synchronization criterion of complex dynamical networks with both leakage delay and coupling delay on time scales. Neural Process Lett 49(2):453–466

Lu X, Zhang X (2019) Stability analysis of switched systems on time scales with all modes unstable. Nonlinear Anal Hybrid Syst 33:371–379

Liu X, Zhang K (2016) Synchronization of linear dynamical networks on time scales. Pinning control via delayed impulses. Automatica 72:147–152

Yu W, Lü J, Yu X, Chen G (2015) Distributed adaptive control for synchronization in directed complex networks. SIAM J Control Optim 53(5):2980–3005

Wang W, Huang J, Wen C, Fan H (2014) Distributed adaptive control for consensus tracking with application to formation control of nonholonomic mobile robots. Automatica 50(4):1254–1263

Li X, Song S (2013) Impulsive control for existence, uniqueness, and global stability of periodic solutions of recurrent neural networks with discrete and continuously distributed delays. IEEE Trans Neural Netw Learn Syst 24(6):868–877

He W, Qian F, Lam J, Chen G, Han Q, Kurths J (2015) Quasi-synchronization of heterogeneous dynamic networks via distributed impulsive control. Error estimation, optimization and design. Automatica 62:249–262

Chen W, Jiang Z, Lu X, Luo S (2015) \(H_{\infty }\) synchronization for complex dynamical networks with coupling delays using distributed impulsive control. Nonlinear Anal Hybrid Syst 17:111–127

Guan Z, Liu Z, Feng G, Wang Y (2010) Synchronization of complex dynamical networks with time-varying delays via impulsive distributed control. IEEE Trans Circuits Syst I Regul Pap 57(8):2182–2195

Zhang X, Lv X, Li X (2017) Sampled-data-based lag synchronization of chaotic delayed neural networks with impulsive control. Nonlinear Dyn 90(3):2199–2207

Yu W, Chen G, Lü J (2009) On pinning synchronization of complex dynamical networks. Automatica 45(2):429–435

Wang J, Wu H, Huang T, Ren S (2016) Pinning control strategies for synchronization of linearly coupled neural networks with reaction–diffusion terms. IEEE Trans Neural Netw Learn Syst 27(4):749–761

He W, Qian F, Cao J (2017) Pinning-controlled synchronization of delayed neural networks with distributed-delay coupling via impulsive control. Neural Netw 85:1–9

Lu J, Wang Z, Cao J, Ho D, Kurths J (2012) Pinning impulsive stabilization of nonlinear dynamical networks with time-varying delay. Int J Bifurc Chaos 22(7):1250176

Bohner M, Peterson A (2001) Dynamic equations on time scales—an introduction with applications. Birkhauser, Boston

Cheng Q, Cao J (2011) Global synchronization of complex networks with discrete time delays and stochastic disturbances. Neural Comput Appl 20(8):1167–1179

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grants 61873150 and 61503225, and the Natural Science Fund for Distinguished Young Scholars of Shandong Province under Grant JQ201613.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest to this work. There is no professional or other personal interest of any nature or kind in any product that could be construed as influencing the position presented in the manuscript entitled.

Cite this article

Lu, X., Li, H. Distributed Pinning Impulsive Control for Inner–Outer Synchronization of Dynamical Networks on Time Scales. Neural Process Lett 51, 2481–2495 (2020). https://doi.org/10.1007/s11063-020-10204-z

DOI: https://doi.org/10.1007/s11063-020-10204-z