Abstract

We address the problem of unsupervised visual domain adaptation for transferring category models from one visual domain or image data set to another. We present a new unsupervised domain adaptation algorithm based on subspace alignment. The core idea of our approach is to reduce the discrepancy between the source domain and the target domain in a latent discriminative subspace. Specifically, we first generate pseudo-labels for the target data by applying spectral clustering to a cross-domain similarity matrix, which is built from sparse coefficients found in a low-dimensional latent space. This coarse alignment between the two domains exploits the assumption that the collection of data of different classes from both domains can be viewed as samples from a union of low-dimensional subspaces. Then, we create discriminative subspaces for both domains using partial least squares correlation. Finally, a mapping which aligns the discriminative source subspace into the target one is learned by minimizing a Bregman matrix divergence function. Experimental results on benchmark cross-domain visual object recognition data sets and cross-view scene classification data sets demonstrate that the proposed method outperforms the baselines and several state-of-the-art competing methods.

1 Introduction

Traditional learning-based image classification algorithms rely heavily on the assumption that the training and test data are drawn from the same distribution. However, many real-world applications challenge this assumption. In a typical object recognition task, the labeled training data are often obtained from well-established object databases such as Caltech-256 [1], while the test data may be everyday street photos taken with mobile cameras. The same object category in the two domains is subject to arbitrary shifts caused by a combination of factors, including viewpoint, illumination, object location and pose, resolution and background clutter. In the more challenging cross-view scene classification task, we want to transfer the semantic knowledge of scene models learned from richly annotated ground-view images, such as those in the SUN database [2], to overhead aerial or satellite scene images, where the scarcity of semantic annotations impedes the understanding of remote sensing images (see Fig. 1 for examples of dataset bias for the same set of object and scene categories).

Recent studies have demonstrated a significant degradation in the performance of state-of-the-art image classifiers under mismatched training and testing conditions [7]. Visual domain adaptation aims to address the problem of transferring object models or scene models from one visual domain to another. Depending on the availability of labeled training examples from the target domain, two scenarios are usually distinguished: (i) the unsupervised setting, where the training data consist of labeled source data and unlabeled target data, and (ii) the semi-supervised setting, where many labels are available for the source domain but only a few labels are provided for the target domain. In this paper, we focus on the unsupervised case, where the common practice of discriminative training is not applicable: without target labels, it is not even clear how to define the right discriminative loss on the target domain.

Fig. 1 Dataset bias for visual recognition. (a) Example images from the BACKPACK category in Caltech-256, Amazon, Webcam and DSLR [3]. (b) Example images from the RIVER and INDUSTRIAL categories in two ground-view scene datasets, Scene-15 [4] and the SUN database [2], and two overhead-view scene datasets, the 19-class satellite scene dataset [5] and the UCMERCED aerial scene dataset [6].

In this letter, we introduce a new unsupervised visual domain adaptation algorithm based on subspace alignment. Our contributions are twofold: (i) we propose to roughly align the two domains using sparse subspace clustering; cross-domain sparse subspace clustering provides a natural way of passing label information from the source domain to the target domain and is robust to noise and outliers; (ii) we present a discriminative subspace alignment algorithm that maximizes the correlation between the data and their labels in the projected subspace and minimizes the data divergence by transforming the source data into the target-aligned source subspace.

The rest of this letter is organized as follows. Section 2 reviews related work and Sect. 3 introduces the motivation of our method. In Sect. 4, a detailed description of the discriminative sparse subspace clustering and alignment is presented. Section 5 reports the experimental results. Section 6 presents the discussion. Finally, Sect. 7 concludes the paper.

2 Related Work

Techniques for building classifiers that are robust to mismatched distributions have been investigated under the names of domain adaptation, covariate shift, or transfer learning. Recently, considerable effort has been devoted to domain adaptation in computer vision and machine learning communities. Several reviews can be found in [8–11]. Existing visual domain adaptation methods either try to find a common feature space where the data divergence between the source domain and the target domain can be significantly reduced or explicitly learn a new classifier model which minimizes the generalization error in the target domain.

Techniques that modify the data representation attempt to adjust the distribution of the source data, the target data, or both, to obtain a well-aligned feature space. In particular, subspace-based visual domain adaptation methods have demonstrated good performance. Si et al. [12] introduced Bregman divergence based regularization into several popular subspace learning algorithms for cross-domain face recognition and text categorization. Tuia et al. [13] proposed manifold alignment of different modalities of remote sensing images. Pan et al. [14] introduced transfer component analysis, which learns transfer components across domains in a reproducing kernel Hilbert space using the maximum mean discrepancy. In [15], Chang transforms the source data into an intermediate representation such that each transformed source sample can be linearly reconstructed by the target samples. In [16], Shao et al. present a low-rank transfer subspace learning technique that exploits locality-aware reconstruction in a way similar to manifold learning. In [17], Gopalan et al. generate intermediate representations in the form of subspaces along the geodesic path connecting the source and target subspaces on the Grassmann manifold. In [3], Gong et al. propose a geodesic flow kernel that models incremental changes between the source and target domains. In both [3] and [17], a set of intermediate subspaces is used to model the domain shift. Baktashmotlagh et al. [18] propose to learn a projection of the data into a low-dimensional latent space where the distance between the empirical distributions of the source and target samples is minimized. Fernando et al. [19] propose to align the PCA-based source and target subspaces directly: their method seeks a domain-invariant feature space by learning a mapping function that aligns the source subspace with the target one. The corresponding optimization problem has a simple closed-form solution, leading to an extremely fast algorithm.

Previous research [3, 17–19] suggests that partial least squares (PLS) is preferred over other supervised dimensionality reduction techniques for subspace-based domain adaptation when label information is available. PLS locates and emphasizes group structure in the data and is closely related to canonical correlation analysis (CCA) and linear discriminant analysis (LDA). The PLS family consists of PLS correlation (PLSC, sometimes also called PLS-SVD), PLS regression (PLSR) and PLS path modeling (PLS-PM). In this letter, we use PLSC to model the correlation between data samples and their labels.

3 Motivation

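As suggested by [20], reducing the divergence between the source and target data distributions is necessary for good adaptation. From a mutual information point of view, let \(H(\chi _S )\) denote the entropy of the source data and \(H(\chi _S ,\chi _T )\) the cross entropy between the source data and the target data. The mutual information between the two domains can then be written as

\[ I(\chi _S ;\chi _T )=H(\chi _S )+H(\chi _T )-H(\chi _S ,\chi _T )=H(\chi _T )-D_{KL} (\chi _S \Vert \chi _T ), \qquad (1) \]

where \(D_{KL} (\chi _S \Vert \chi _T )=H(\chi _S ,\chi _T )-H(\chi _S )\) is the Kullback–Leibler divergence between the source and target data.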

According to Eq. (1), maximizing the mutual information between the source and target distributions requires simultaneously increasing the target entropy and reducing the data divergence between the two domains. Projecting the data from all domains into a target subspace increases the term \(H(\chi _T )\) and hence the mutual information, and using a discriminative target subspace will further improve classification performance. For reducing cross-domain data discrepancy in low-dimensional subspaces, subspace alignment [19] provides a simple but effective framework for the unsupervised scenario. However, it projects all data into a PCA-based target subspace, which is not optimal for classification tasks. We aim to create a discriminative target subspace even though no labels are available from the target domain, and to project all data from both domains into the generated subspace to minimize the data divergence.

Despite the shift that has occurred, samples belonging to the same category from both domains can be well represented by a low-dimensional subspace of the high-dimensional ambient space, so the collection of data from multiple classes lies in a union of low-dimensional subspaces. Cross-domain subspace clustering therefore provides a natural way of passing labels from the source data to the target data, which can then be exploited to create a discriminative target subspace. Sparse representation and low-rank approximation based subspace clustering methods [21, 22] have gained attention in recent years because they can handle noise and outliers in data and do not require the dimensions or the number of subspaces to be known a priori.

4 Discriminative Subspace Alignment

4.1 Cross-Domain Subspace Clustering

We adopt the latent space sparse subspace clustering algorithm [22] for cross-domain subspace clustering. Let \(\mathbf{X}=[\mathbf{x}_1 ,\ldots ,\mathbf{x}_N ]\in R^{D\times N}\) be a collection of N samples drawn from a union of n linear subspaces \(S_1 \cup S_2 \cup \cdots \cup S_n \) of dimensions \(\{d_\ell \}_{\ell =1}^n\) in \(R^{D}\). Let \(\mathbf{X}_\ell \in R^{D\times N_\ell }\) be a submatrix of \(\mathbf{X}\) of rank \(d_\ell \) with \(N_\ell >d_\ell \) points that lie in \(S_\ell \). Each point in \(\mathbf{X}\) can then be represented by a linear combination of at most \(d_\ell \) other points in \(\mathbf{X}\) lying in the same subspace. That is, one can represent \(\mathbf{x}_i \) as

\[ \mathbf{x}_i =\mathbf{X}\mathbf{c}_i ,\quad c_{ii} =0, \]

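where \(\mathbf{c}_i =[c_{i1} ,c_{i2} ,\ldots ,c_{iN} ]^{T}\in R^{N}\) is the coefficient vector. Sparse subspace clustering obtains the coefficients by solving the following minimization problem:

\[ \min_{\mathbf{C}} \Vert \mathbf{C}\Vert _1 \quad \mathrm{s.t.}\quad \mathbf{X}=\mathbf{XC},\;\; \mathrm{diag}(\mathbf{C})=\mathbf{0}, \]

where \(\mathbf{C}=[\mathbf{c}_1 ,\ldots ,\mathbf{c}_N ]\in R^{N\times N}\).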

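Let \(\mathbf{P}\in \mathbf{R}^{d\times D}\) denote a linear transformation that maps signals from the original space \(\mathbf{R}^{D}\) to a latent space of dimension d. Latent space sparse subspace clustering learns the mapping and the sparse codes simultaneously by minimizing the following cost function:

\[ \min_{\mathbf{P},\mathbf{C}} \Vert \mathbf{C}\Vert _1 +\lambda _1 \Vert \mathbf{PX}-\mathbf{PXC}\Vert _F^2 +\lambda _2 \Vert \mathbf{X}-\mathbf{P}^{T}\mathbf{PX}\Vert _F^2 \quad \mathrm{s.t.}\quad \mathbf{PP}^{T}=\mathbf{I},\;\; \mathrm{diag}(\mathbf{C})=\mathbf{0}, \]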

where \(\mathbf{X}=[\mathbf{X}_S ,\mathbf{X}_T]\in \mathbf{R}^{D\times N}\) contains the multi-class data from both domains, \(\mathbf{C}\in \mathbf{R}^{N\times N}\) is the sparse coefficient matrix, and \(\lambda _1 \) and \(\lambda _2 \) are non-negative constants that control sparsity and regularization. The first two terms promote sparsity of the data in the latent subspace, and the third term ensures that the projection preserves the main statistics of the data.
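The LS3C cost can be minimized by alternating between the sparse codes \(\mathbf{C}\) and the projection \(\mathbf{P}\). The NumPy sketch below is a minimal illustration under stated assumptions, not the reference LS3C implementation [22]. The C-step uses proximal gradient descent (ISTA) instead of the ADMM solver mentioned in the text. The P-step uses the eigen-decomposition solution that follows from the orthonormality constraint \(\mathbf{PP}^{T}=\mathbf{I}\) assumed for LS3C. All function names, the iteration counts and the uncentred PCA initialization are hypothetical choices.

```python
import numpy as np


def soft_threshold(A, tau):
    """Element-wise soft-thresholding: the proximal operator of tau * ||A||_1."""
    return np.sign(A) * np.maximum(np.abs(A) - tau, 0.0)


def sparse_codes(Y, lam1, n_iter=300):
    """C-step (ISTA): min_C ||C||_1 + lam1 * ||Y - Y C||_F^2  s.t. diag(C) = 0."""
    G = Y.T @ Y                                        # N x N Gram matrix of latent features
    step = 1.0 / (2.0 * lam1 * np.linalg.norm(G, 2))   # 1 / Lipschitz constant of smooth term
    C = np.zeros_like(G)
    for _ in range(n_iter):
        grad = 2.0 * lam1 * (G @ C - G)                # gradient of lam1 * ||Y - Y C||_F^2
        C = soft_threshold(C - step * grad, step)
        np.fill_diagonal(C, 0.0)                       # a sample may not represent itself
    return C


def update_projection(X, C, d, lam1, lam2):
    """P-step: with C fixed, minimise lam1*||PX - PXC||_F^2 + lam2*||X - P^T P X||_F^2
    subject to P P^T = I; the rows of P are the top-d eigenvectors of S below."""
    E = X - X @ C                                      # X (I - C)
    S = lam2 * (X @ X.T) - lam1 * (E @ E.T)
    _, vecs = np.linalg.eigh(S)                        # eigenvalues in ascending order
    return vecs[:, ::-1][:, :d].T                      # d x D with orthonormal rows


def ls3c(X, d, lam1=0.5, lam2=0.5, n_outer=5):
    """Alternate P- and C-steps; X is D x N with samples as columns, [X_S, X_T]."""
    N = X.shape[1]
    P = update_projection(X, np.zeros((N, N)), d, 0.0, 1.0)  # uncentred PCA start
    for _ in range(n_outer):
        C = sparse_codes(P @ X, lam1)
        P = update_projection(X, C, d, lam1, lam2)
    return P, sparse_codes(P @ X, lam1)                # codes consistent with the final P
```

With \(\lambda _1 =\lambda _2 =0.5\), as in Sect. 5.1, the returned `C` is the sparse coefficient matrix used by the clustering step.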

This problem can be solved efficiently with the classical alternating direction method of multipliers (ADMM). Once \(\mathbf{C}\) is found, spectral clustering is applied to the affinity matrix \(\mathbf{W}=|\mathbf{C}|+|\mathbf{C}|^{\mathbf{T}}\) to segment the data. Each target sample is then assigned a pseudo-label by majority voting over the labels of the source samples in its cluster.
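The clustering and voting step might look as follows. This is a sketch under several assumptions. It uses normalized spectral clustering in the style of Ng, Jordan and Weiss, together with a small hand-written k-means (farthest-point initialization), so that only NumPy is needed. It assumes the columns of \(\mathbf{C}\) are ordered as \([\mathbf{X}_S ,\mathbf{X}_T ]\). It marks a target sample with -1 when its cluster contains no source sample, a case the text does not address. The function names are hypothetical.

```python
import numpy as np


def kmeans(Z, k, n_iter=100):
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centers = [Z[0]]
    for _ in range(1, k):
        d2 = np.min([((Z - c) ** 2).sum(1) for c in centers], axis=0)
        centers.append(Z[d2.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(-1).argmin(1)
        new = np.array([Z[labels == j].mean(0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


def spectral_clustering(W, k):
    """Normalised spectral clustering on a symmetric, non-negative affinity W."""
    d_is = 1.0 / np.sqrt(np.maximum(W.sum(1), 1e-12))
    A = d_is[:, None] * W * d_is[None, :]          # D^{-1/2} W D^{-1/2}
    _, vecs = np.linalg.eigh(A)
    U = vecs[:, -k:]                               # eigenvectors of the k largest eigenvalues
    U = U / np.maximum(np.linalg.norm(U, axis=1, keepdims=True), 1e-12)
    return kmeans(U, k)


def pseudo_labels(C, y_source, n_clusters):
    """Cluster the [source, target] columns of C, then give each target sample
    the majority source label of its cluster (-1 if the cluster has no source)."""
    W = np.abs(C) + np.abs(C).T                    # affinity W = |C| + |C|^T
    clusters = spectral_clustering(W, n_clusters)
    n_s = len(y_source)
    src, tgt = clusters[:n_s], clusters[n_s:]
    y_target = np.full(len(tgt), -1)
    for j in range(n_clusters):
        votes = y_source[src == j]
        if votes.size:
            values, counts = np.unique(votes, return_counts=True)
            y_target[tgt == j] = values[counts.argmax()]
    return y_target
```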

4.2 Discriminative Subspace Creation

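Let \(\mathbf{X}_T \) denote an \(N_T \times D\) data matrix from the target domain, whose rows are observations and whose columns are variables, and let \(\mathbf{Y}_T \) denote the corresponding \(N_T \times C\) pseudo-label matrix. With the target samples sorted by pseudo-label and \(N_c \) denoting the number of target samples assigned to category c, \(\mathbf{Y}_T \) is coded as

\[ \mathbf{Y}_T =\left[ {\begin{array}{cccc} \mathbf{1}_{N_1 \times 1} & \mathbf{0}_{N_1 \times 1} & \cdots & \mathbf{0}_{N_1 \times 1} \\ \mathbf{0}_{N_2 \times 1} & \mathbf{1}_{N_2 \times 1} & \cdots & \mathbf{0}_{N_2 \times 1} \\ \vdots & \vdots & \ddots & \vdots \\ \mathbf{0}_{N_C \times 1} & \mathbf{0}_{N_C \times 1} & \cdots & \mathbf{1}_{N_C \times 1} \end{array}} \right] \]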

where \(\mathbf{1}_{k\times 1} \) is a \(k\times 1\) vector of all ones, \(\mathbf{0}_{k\times 1} \) is a \(k\times 1\) vector of all zeros, and C is the number of categories common to both domains. Each column of \(\mathbf{X}_T \) is z-normalized (i.e., has zero mean and unit standard deviation). The correlation matrix is then computed as \(\mathbf{R}_T =\mathbf{Y}_T^\mathbf{T} \mathbf{X}_T \), and its SVD decomposes it into three matrices, \(\mathbf{R}_T =\mathbf{U}_T \Delta \mathbf{V}_T ^{\mathbf{T}}\). The subspace basis for \(\mathbf{X}_T \) is obtained as \(\mathbf{L}_{\mathbf{X}_T } =\mathbf{X}_T \mathbf{V}_T \). Similarly, we use PLSC on the labeled source data to create a discriminative source subspace \(\mathbf{L}_{\mathbf{X}_S } \).
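Here is a minimal NumPy sketch of PLSC subspace creation. It assumes integer (pseudo-)labels 0..C-1. A row-wise one-hot label matrix equals the block-coded \(\mathbf{Y}_T \) up to a reordering of the samples, and that reordering does not change \(\mathbf{R}_T \). The function names are hypothetical. The sketch returns both the orthonormal saliences \(\mathbf{V}_T \) and the latent variables \(\mathbf{X}_T \mathbf{V}_T \).

```python
import numpy as np


def zscore(X):
    """Column-wise z-normalisation; constant columns are mapped to zero."""
    sd = X.std(0)
    return (X - X.mean(0)) / np.where(sd > 0, sd, 1.0)


def plsc_subspace(X, y, n_classes):
    """PLS correlation between data X (N x D, rows = samples) and labels y.

    Returns the saliences V (D x r, orthonormal columns) and the latent
    variables L = Xz V, with r = min(n_classes, D)."""
    Xz = zscore(X)
    Y = np.eye(n_classes)[y]                            # N x C label indicator matrix
    R = Y.T @ Xz                                        # C x D correlation matrix
    _, _, Vt = np.linalg.svd(R, full_matrices=False)   # R = U diag(delta) V^T
    V = Vt.T
    return V, Xz @ V
```

The z-normalized columns sum to zero, and each row of the one-hot matrix sums to one. As a result, the rows of \(\mathbf{R}_T \) sum to zero, so \(\mathbf{R}_T \) has rank at most C - 1, and at most C - 1 of the returned directions carry label information.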

4.3 Discriminative Subspace Alignment

A mapping function \(\mathbf{M}\in \mathbf{R}^{d\times d}\) that transforms the source subspace \(\mathbf{L}_{\mathbf{X}_S } \) into the target subspace \(\mathbf{L}_{\mathbf{X}_T } \) is learned by minimizing the following Bregman matrix divergence:

\[ F(\mathbf{M})=\Vert \mathbf{L}_{\mathbf{X}_S } \mathbf{M}-\mathbf{L}_{\mathbf{X}_T } \Vert _F^2 , \]

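where \(\Vert \cdot \Vert _F\) denotes the Frobenius norm. Because the Frobenius norm is invariant to orthonormal operations, the objective function can be rewritten as

\[ F(\mathbf{M})=\Vert \mathbf{L}_{\mathbf{X}_S }^{\mathbf{T}} \mathbf{L}_{\mathbf{X}_S } \mathbf{M}-\mathbf{L}_{\mathbf{X}_S }^{\mathbf{T}} \mathbf{L}_{\mathbf{X}_T } \Vert _F^2 =\Vert \mathbf{M}-\mathbf{L}_{\mathbf{X}_S }^{\mathbf{T}} \mathbf{L}_{\mathbf{X}_T } \Vert _F^2 . \]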

The optimal mapping is therefore \(\mathbf{M}^{*}=\mathbf{L}_{\mathbf{X}_S }^{-1} \mathbf{L}_{\mathbf{X}_T } =\mathbf{L}_{\mathbf{X}_S }^{\mathbf{T}} \mathbf{L}_{\mathbf{X}_T } \) (the inverse reduces to the transpose for an orthonormal basis). It transforms the source subspace coordinate system into the target subspace coordinate system by aligning the source basis vectors with the target ones. When the source and target domains are the same, \(\mathbf{M}^{*}\) is the identity matrix. The pseudo-code of the proposed unsupervised discriminative subspace alignment algorithm is presented in Algorithm 1.
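The closed-form alignment takes only a few lines of NumPy. The sketch assumes, as the closed form requires, that the source and target bases are D × d matrices with orthonormal columns (for example, the PLSC saliences). Following subspace alignment [19], it projects the source data onto the target-aligned source basis \(\mathbf{L}_{\mathbf{X}_S } \mathbf{M}^{*}\) and the target data onto the target basis. The function name is hypothetical.

```python
import numpy as np


def subspace_alignment(Xs, Xt, Bs, Bt):
    """Closed-form subspace alignment (sketch).

    Xs: N_S x D source data, Xt: N_T x D target data (rows = samples).
    Bs, Bt: D x d source / target bases with orthonormal columns (assumed).
    Returns M* and the projected source and target data."""
    M = Bs.T @ Bt                  # least-squares minimiser of ||Bs M - Bt||_F^2
    Xa = Bs @ M                    # target-aligned source basis (D x d)
    return M, Xs @ Xa, Xt @ Bt     # source in aligned subspace, target in its own
```

When `Bs` equals `Bt`, the returned `M` is the identity, which matches the remark above about identical domains.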

5 Experimental Results

We evaluate our method on cross-domain visual object recognition and cross-view scene classification. All baseline methods and the competing unsupervised domain adaptation methods project the original high-dimensional features into a new feature space, where a nearest neighbor (NN) classifier is trained on the labeled source data and tested on the unlabeled target data. NN is chosen as the base classifier because it has no hyperparameters to tune by cross-validation. Under our experimental setup, such tuning would be difficult because the labeled and unlabeled data are sampled from different distributions.

5.1 Cross-Domain Visual Object Recognition

To evaluate how well our method transfers object models from one visual domain to another, we use the benchmark Office+Caltech-10 dataset [3] for cross-domain visual object recognition. The dataset includes four visual domains: Amazon (A: images downloaded from online merchants), Webcam (W: low-resolution images captured by a web camera), DSLR (D: high-resolution images captured by a digital SLR camera) and Caltech-256 (C). It consists of 2533 images of 10 classes common to all four domains: BACKPACK, TOURING-BIKE, CALCULATOR, HEAD-PHONES, COMPUTER-KEYBOARD, LAPTOP-101, COMPUTER-MONITOR, COMPUTER-MOUSE, COFFEE-MUG and VIDEO-PROJECTOR. There are 8 to 151 samples per category per domain. Figure 2 illustrates the differences among these domains with example images from the MONITOR category.

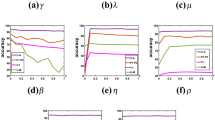

Taking each ordered pair of distinct domains as the source and target domains yields 12 cross-domain object recognition problems. In our experiments, we use the same image representation (SURF features encoded with an 800-word dictionary) and the same protocol for generating the source and target samples as in [3, 17, 19]. We set \(\lambda _1 =\lambda _2 =0.5\) for latent space sparse subspace clustering (LS3C) [22]. We compare our method with three baselines and several state-of-the-art methods, including SGF (sampling geodesic flow) [17], GFK (geodesic flow kernel) [3], transfer component analysis (TCA) [14], subspace alignment (SA) [19] and low-rank transfer subspace learning (LTSL) [16]. The accuracy of the pseudo-labels generated by LS3C is also reported.

- Baseline-NA (no adaptation): the original feature representation after z-normalization is used.
- Baseline-S: both source and target data are projected into the PCA-based source subspace.
- Baseline-T: both source and target data are projected into the PCA-based target subspace.
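The two PCA baselines differ only in which domain supplies the basis. Here is a minimal sketch, assuming rows are samples and that the subspace dimension d is given (for example, by an intrinsic-dimension estimate). Baseline-NA simply uses the z-normalized features unchanged. The function names are hypothetical.

```python
import numpy as np


def pca_basis(X, d):
    """Top-d principal directions of X (rows = samples) as a D x d orthonormal basis."""
    _, _, Vt = np.linalg.svd(X - X.mean(0), full_matrices=False)
    return Vt[:d].T


def baseline_projection(Xs, Xt, d, domain="target"):
    """Baseline-S (domain='source') or Baseline-T (domain='target'):
    project both domains onto a single PCA subspace."""
    B = pca_basis(Xt if domain == "target" else Xs, d)
    return Xs @ B, Xt @ B
```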

The subspace dimensionality of Baseline-S and Baseline-T is determined by MLE-based intrinsic dimensionality estimation [23, 24]. For each competing method, we report the best accuracy for each case from the corresponding paper to avoid implementation differences (the SGF results are based on the implementation in the LTSL paper). The results are presented in Table 1. The best performing methods in each column are in bold font, and the second-best group is in italics and underlined. Figure 3 shows the cross-domain similarity matrix built by LS3C for the case \(\hbox {D}\rightarrow \hbox {C}\). Despite the domain shift, cross-domain samples from the same category exhibit strong similarity.
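The Levina and Bickel MLE estimator [23] can be sketched as follows. This illustrative NumPy version is not the implementation used in the experiments. The neighbourhood size k = 10, the averaging of per-sample estimates and the rounding to an integer subspace dimension are all assumptions.

```python
import numpy as np


def mle_intrinsic_dim(X, k=10):
    """Levina-Bickel maximum-likelihood estimate of intrinsic dimension.

    X: N x D data (rows = samples); k: number of nearest neighbours (assumed).
    Per-sample estimates [1/(k-1) * sum_j log(T_k / T_j)]^{-1} are averaged."""
    sq = (X ** 2).sum(1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    T = np.sqrt(np.sort(D2, axis=1)[:, 1:k + 1])    # k-NN distances, self excluded
    log_ratios = np.log(T[:, -1:] / T[:, :-1])      # log(T_k / T_j), j = 1..k-1
    m_hat = (k - 1) / log_ratios.sum(1)             # per-sample estimate
    return float(m_hat.mean())
```

Under these assumptions, the subspace dimension would then be set to something like `round(mle_intrinsic_dim(X_T))` for the methods that use the target data.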

The best performing methods (differences up to one standard error) in each column are in bold font and the second best group is in italics and underlined.

5.2 Cross-View Scene Classification

We have collected a cross-view scene dataset from two ground-level scene datasets, the SUN database [2] (source domain 1, S1) and Scene-15 [4] (source domain 2, S2), and three overhead remote sensing scene datasets: the Banja Luka dataset [25] (target domain 1, T1), the UCMERCED dataset [6] (target domain 2, T2) and the 19-class satellite scene dataset [5] (target domain 3, T3). The dataset consists of 2768 images of four common categories (field/agriculture, forest/trees, river/water and industrial). Figure 4 shows example images (one per category per dataset), and Table 2 summarizes the number of images in the dataset.

For each image in the dataset, histogram of oriented gradients (HOG) features are extracted (each descriptor stacks 2\(\times \)2 neighboring cells of 8\(\times \)8 pixels). The HOG descriptors are quantized into 300 visual words by k-means. With locality-constrained linear coding (LLC), three-level spatial histograms are computed on grids of 1\(\times \)1, 2\(\times \)2 and 4\(\times \)4. Each image is therefore represented by a 300 \(\times \) (1 + 4 + 16) = 6300-dimensional z-normalized vector. The subspace dimensionality is determined by MLE-based intrinsic dimensionality estimation, using the target data for SGF, GFK, TCA, SA (PCA, PCA) and Baseline-T and the source data for Baseline-S. The sampling rate of SGF is set to [0.2, 0.4, 0.6, 0.8]. For SGF, GFK and SA, we use the implementations provided by the authors.
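The encoding and pooling part of this feature pipeline can be sketched as below. The sketch assumes the standard approximated LLC coding (k = 5 nearest codewords, regularization β = 1e-4) and max pooling over the spatial-pyramid cells; the text does not specify these choices. HOG extraction and k-means codebook learning are omitted. With a 300-word codebook, the 1 + 4 + 16 = 21 pyramid cells give 21 × 300 = 6300 dimensions. The function names are hypothetical.

```python
import numpy as np


def llc_codes(descs, codebook, knn=5, beta=1e-4):
    """Approximated LLC: each descriptor is coded on its knn nearest codewords
    with weights that sum to one. descs: N x F, codebook: M x F."""
    codes = np.zeros((len(descs), codebook.shape[0]))
    d2 = ((descs[:, None, :] - codebook[None, :, :]) ** 2).sum(-1)
    idx = np.argsort(d2, axis=1)[:, :knn]
    for i, x in enumerate(descs):
        z = codebook[idx[i]] - x                   # shift neighbours to the descriptor
        cov = z @ z.T
        cov += np.eye(knn) * (beta * np.trace(cov) + 1e-12)  # regularise local covariance
        w = np.linalg.solve(cov, np.ones(knn))
        codes[i, idx[i]] = w / w.sum()             # enforce the sum-to-one constraint
    return codes


def spm_feature(codes, xy, width, height, levels=(1, 2, 4)):
    """Max-pool codes over 1x1, 2x2 and 4x4 grids and concatenate the cells.
    xy: N x 2 descriptor centres in pixels (x, y)."""
    pooled = []
    for g in levels:
        cx = np.minimum((xy[:, 0] * g // width).astype(int), g - 1)
        cy = np.minimum((xy[:, 1] * g // height).astype(int), g - 1)
        for r in range(g):
            for c in range(g):
                mask = (cy == r) & (cx == c)
                pooled.append(codes[mask].max(0) if mask.any()
                              else np.zeros(codes.shape[1]))
    return np.concatenate(pooled)
```

The resulting vectors would then be z-normalized before the subspace steps.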

For each source-target problem, 20 images per category are randomly selected from the source domain as the training set, and all images in the target domain are used as the test set. Table 3 summarizes the classification accuracies of all the above methods, obtained with an NN classifier over 20 random trials.
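The evaluation protocol can be sketched as follows. `transform` is a hypothetical interface that stands for any of the compared methods; the identity corresponds to Baseline-NA. The reported figure is assumed to be the mean (with standard deviation) of the 1-NN accuracy over the random trials.

```python
import numpy as np


def nn_predict(Zs, ys, Zt):
    """1-nearest-neighbour labels for target rows Zt given labelled source rows Zs."""
    d2 = ((Zt[:, None, :] - Zs[None, :, :]) ** 2).sum(-1)
    return ys[d2.argmin(1)]


def evaluate(Xs, ys, Xt, yt, transform, n_per_class=20, n_trials=20, seed=0):
    """Mean and std of 1-NN target accuracy over random source subsets.

    transform(Xs_sub, ys_sub, Xt) -> (Zs, Zt) is a hypothetical interface for
    an adaptation method; returning the inputs unchanged gives Baseline-NA."""
    rng = np.random.default_rng(seed)
    accs = []
    for _ in range(n_trials):
        idx = np.concatenate([rng.choice(np.flatnonzero(ys == c), n_per_class,
                                         replace=False)
                              for c in np.unique(ys)])
        Zs, Zt = transform(Xs[idx], ys[idx], Xt)
        accs.append(np.mean(nn_predict(Zs, ys[idx], Zt) == yt))
    return float(np.mean(accs)), float(np.std(accs))
```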

The best performing methods (differences up to one standard error) in each column are in bold font and the second best group is in italics and underlined.

We have also evaluated our method on transferring scene category models from aerial scenes to satellite scenes. We collected 1377 images of nine common categories from the UCMERCED aerial scene dataset [6] and the 19-class satellite scene dataset [5]. Figure 5 shows example images from each category. We adopted the same parameter settings as in the cross-view scene classification experiments, and the results are reported in Table 4. The experiments were carried out with a MATLAB implementation of our algorithm on a machine with a 2.80 GHz Intel CPU and 2.98 GB RAM. The classification times of the different methods are reported in Table 5.

The best performing methods (differences up to one standard error) in each column are in bold font and the second best group is in italics and underlined.

6 Discussions

The proposed method adopts a coarse-to-fine adaptation strategy based on subspace analysis. It is a general framework for unsupervised domain adaptation and can be readily applied to other signal processing tasks. For cross-domain visual object recognition, Table 1 shows that the proposed method outperforms the baselines and the other competing methods on average. Our algorithm achieves the best performance on four problems (\(\hbox {A}\rightarrow \hbox {D}\), \(\hbox {A}\rightarrow \hbox {W}\), \(\hbox {D}\rightarrow \hbox {W}\) and \(\hbox {W}\rightarrow \hbox {D}\)) and the second-best performance on six of the twelve problems. Notably, the coarse alignment by cross-domain latent subspace clustering alone improves classification accuracy by 7 % over no adaptation and outperforms SGF, GFK, TCA and LTSL-PCA. For cross-view scene classification, the proposed method consistently outperforms the baselines and the other competing methods on all six problems, with SA achieving the second-best performance. These results confirm the effectiveness of projecting the source data into a discriminative target subspace. For aerial-to-satellite scene classification, our method improves classification accuracy by 6 % over the second-best performing method. The computational complexity of our algorithm comes mainly from two parts. The first is the cross-domain latent subspace clustering, which has a complexity of \(O(K''wn^{2}+D''wn^{2})\), where K and M are related to the number of iterations and the feature dimensionality, and n is the number of samples. The second is the SVD in PLSC, which has a complexity of \(O(L^{3})\), where L is the number of categories.

Compared with manifold-based methods such as SGF and GFK and kernel-based methods such as TCA, the subspace alignment strategy is simple and has a closed-form solution, leading to an extremely fast algorithm. Our method improves on the original subspace alignment work in two ways: (i) a cross-domain latent subspace clustering step passes the labels from the source data to the target data; (ii) PLSC models the correlation between the data samples and their labels in both domains. Note that our method relies on the assumption that the target samples can be represented by a sparse set of source samples in the latent subspace; when this assumption is violated, it is unlikely to perform well. Another weakness of our method is that the sparse representation step for cross-domain subspace clustering is computationally demanding. In future work, we will explore accelerated sparse approximation tools.

7 Conclusions

Inspired by the recent success of sparse subspace clustering and subspace-based visual domain adaptation, we propose a novel discriminative sparse subspace clustering and alignment framework for the unsupervised scenario. We aim to create a discriminative target subspace when no labels are available from the target domain and to project all data from both domains into the generated subspace to minimize the data divergence. Our method consists of three major components: cross-domain latent space sparse subspace clustering, discriminative subspace creation and subspace alignment. Experimental results on benchmark cross-domain visual object recognition datasets and cross-view scene datasets demonstrate the effectiveness of the proposed method.

References

[1] Griffin G, Holub A, Perona P (2007) Caltech-256 object category dataset (technical report), Caltech

[2] Xiao J, Ehinger K, Hays J, Torralba A, Oliva A (2014) SUN database: exploring a large collection of scene categories. Int J Comput Vis 108:1–8

[3] Gong B, Grauman K, Sha F (2014) Learning kernels for unsupervised domain adaptation with applications to visual object recognition. Int J Comput Vis 109:3–27

[4] Lazebnik S, Schmid C, Ponce J (2006) Beyond bags of features: spatial pyramid matching for recognizing natural scene categories. In: Proceedings of IEEE conference on computer vision and pattern recognition, vol 2, pp 2169–2178

[5] Dai D, Yang W (2011) Satellite image classification via two-layer sparse coding with biased image representation. IEEE Geosci Remote Sens Lett 8(1):173–176

[6] Yang Y, Newsam S (2010) Bag-of-visual-words and spatial extensions for land-use classification. In: Proceedings of the ACM international conference on advances in geographic information systems, ACM, New York, pp 270–279

[7] Torralba A, Efros A (2011) Unbiased look at dataset bias. In: Proceedings of IEEE conference on computer vision and pattern recognition, vol 2, pp 1521–1528

[8] Margolis A (2011) A literature review of domain adaptation with unlabeled data (technical report), University of Washington, Washington

[9] Pan SJ, Yang Q (2010) A survey on transfer learning. IEEE Trans Knowl Data Eng 22(10):1345–1359

[10] Shao L, Zhu F, Li X (2014) Transfer learning for visual categorization: a survey. IEEE Trans Neural Netw Learn Syst 26:1019–1034

[11] Patel VM, Gopalan R, Li R, Chellappa R (2015) Visual domain adaptation: an overview of recent advances. IEEE Signal Process Mag

[12] Si S, Tao D, Geng B (2010) Bregman divergence-based regularization for transfer subspace learning. IEEE Trans Knowl Data Eng 22(7):929–942

[13] Tuia D, Volpi M, Trolliet M, Camps-Valls G (2014) Semisupervised manifold alignment of multimodal remote sensing images. IEEE Trans Geosci Remote Sens 52(12):7708–7720

[14] Pan SJ, Tsang IW, Kwok JT, Yang Q (2011) Domain adaptation via transfer component analysis. IEEE Trans Neural Netw 22(2):199–210

[15] Chang SF (2012) Robust visual domain adaptation with low-rank reconstruction. In: Proceedings of IEEE conference on computer vision and pattern recognition, vol 2, pp 1–8

[16] Shao M, Kit D, Fu Y (2014) Generalized transfer subspace learning through low-rank constraint. Int J Comput Vis 109:74–93

[17] Gopalan R, Li R, Chellappa R (2011) Domain adaptation for object recognition: an unsupervised approach. In: Proceedings of IEEE international conference on computer vision, pp 999–1006

[18] Baktashmotlagh M, Harandi MT, Lovell BC, Salzmann M (2013) Unsupervised domain adaptation by domain invariant projection. In: Proceedings of IEEE international conference on computer vision, pp 769–776

[19] Fernando B, Habrard A, Sebban M, Tuytelaars T (2013) Unsupervised visual domain adaptation using subspace alignment. In: Proceedings of IEEE international conference on computer vision, pp 2960–2967

[20] Ben-David S, Blitzer J, Crammer K, Kulesza A, Pereira F, Wortman J (2010) A theory of learning from different domains. Mach Learn 79:151–175

[21] Elhamifar E, Vidal R (2013) Sparse subspace clustering: algorithm, theory, and applications. IEEE Trans Pattern Anal Mach Intell 35(11):2765–2781

[22] Patel VM, Nguyen HV, Vidal R (2013) Latent space sparse subspace clustering. In: Proceedings of IEEE international conference on computer vision, pp 225–232

[23] Levina E, Bickel PJ (2004) Maximum likelihood estimation of intrinsic dimension. In: Proceedings of NIPS, pp 1–8

[24] Van der Maaten L, Hinton G (2008) Visualizing data using t-SNE. J Mach Learn Res 9(11):1–8

[25] Risojevic V, Babic Z (2011) Aerial image classification using structural texture similarity. In: Proceedings of the IEEE international symposium on signal processing and information technology, pp 190–195

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant 61303186 and by the Ph.D. Programs Foundation of Ministry of Education of China under Grant 20124307120013.

Cite this article

Sun, H., Liu, S. & Zhou, S. Discriminative Subspace Alignment for Unsupervised Visual Domain Adaptation. Neural Process Lett 44, 779–793 (2016). https://doi.org/10.1007/s11063-015-9494-6

DOI: https://doi.org/10.1007/s11063-015-9494-6