Abstract

In this work, we consider a class of impulsive non-autonomous stochastic neural networks with mixed delays. By establishing a new generalized Halanay inequality with impulses, we obtain sufficient conditions ensuring global mean square exponential stability of the addressed neural networks. These conditions can be easily checked in practice by simple algebraic methods and apply to a wider range of systems. An example is given to illustrate our results.

1 Introduction

In the past few years, there has been increasing interest in neural networks due to their extensive applications in many fields such as pattern recognition, parallel computing, associative memory, signal and image processing and combinatorial optimization. As is well known, in both biological and man-made neural networks, delays occur due to the finite switching speed of the amplifiers and the communication time. The stability analysis problem for neural networks with delays has gained much research attention, and a large number of results related to this problem have been published (see, e.g., [1–12]).

However, besides delays, impulsive effects are also likely to exist in neural network systems. For example, in the implementation of electronic networks, the state is subject to instantaneous perturbations and experiences abrupt changes at certain moments, which may be caused by switching phenomena, frequency changes or other sudden noise; that is, such networks exhibit impulsive effects. Some interesting results on the stability of impulsive neural networks with delays have been obtained [13–18].

On the other hand, a real system is usually affected by external perturbations, which in many cases are highly uncertain and hence may be treated as random. As pointed out in [19], synaptic transmission in real nervous systems is a noisy process brought on by random fluctuations in the release of neurotransmitters and other probabilistic causes. Therefore, it is of prime importance to consider stochastic effects on the dynamical behavior of impulsive neural networks with delays [20, 21]. In recent years, a large number of stability criteria for neural networks with impulsive and stochastic effects have been reported; see [22–27]. For example, Song and Wang [26] investigated the existence, uniqueness and exponential p-stability of the equilibrium point for impulsive stochastic Cohen-Grossberg neural networks with mixed time delays by combining M-matrix theory with stochastic analysis techniques. In [27], Wang et al. studied impulsive stochastic Cohen-Grossberg neural networks with mixed delays by establishing an L-operator differential inequality with mixed delays and using the properties of M-cones and stochastic analysis techniques. In [22–24], Zhang et al. studied the dynamical behavior of impulsive stochastic autonomous nonlinear systems.

It is well known that non-autonomous phenomena often occur in realistic systems. In particular, when we consider the long-term dynamical behavior of a system, its parameters are usually subject to environmental disturbances and frequently vary with time. In this case, a non-autonomous neural network model can depict the evolutionary processes of networks more accurately. Thus research on non-autonomous neural networks is as important as research on autonomous ones [28–35]. Motivated by the above discussion, in this work we consider a class of impulsive non-autonomous stochastic neural networks with mixed delays. By establishing a new generalized Halanay inequality with impulses, which improves the generalized Halanay inequality with impulses established by Li in [18], we obtain sufficient conditions ensuring global mean square exponential stability of the addressed neural networks.

The rest of this paper is organized as follows. In the next section, we introduce our notation and some basic definitions. Section 3 is devoted to the global exponential stability of system (1). We end this paper in Sect. 4 with an illustrative example.

2 Preliminaries

For convenience, we introduce several notations and recall some basic definitions.

Let \(\mathcal {N}=\{1,2,\ldots ,n\}\). C(X, Y) denotes the space of continuous mappings from the topological space X to the topological space Y. In particular, \(C \buildrel \Delta \over = C( {\left( { -\infty ,0} \right] ,{R^n}} )\).

where \(J \subset R\) is an interval, H is a complete metric space, and \(\psi (s^{+})\) and \(\psi (s^{-})\) denote the right-hand and left-hand limits of the function \(\psi (s)\), respectively. In particular, let \(PC \buildrel \Delta \over = PC\left( {\left( { - \infty ,0} \right] ,{R^n}} \right) \).

where \(\lambda _0\) is a positive constant.

For any \(\varphi \in C\) or \(\varphi \in PC\), we always assume that \(\varphi \) is bounded and introduce the following norm:

Let \((\Omega ,\mathscr {F},\{\mathscr {F}_t\}_{t\ge 0},P)\) be a complete probability space with a filtration \(\{\mathscr {F}_t\}_{t\ge 0}\) satisfying the usual conditions (i.e., it is right continuous and \(\mathscr {F}_0\) contains all P-null sets). Denote by \(PC^b_{\mathscr {F}_0}\left( {\left( { - \infty ,0} \right] ,{R^n}} \right) \) the family of all bounded \(\mathscr {F}_0\)-measurable, PC-valued random variables \(\varphi \) satisfying \(\left\| \varphi \right\| _{{L^2}}^2 = \mathop {\sup } \limits _{ - \infty < s \le 0} E{\left| {\varphi \left( s \right) } \right| ^2} < \infty \), where E[f] denotes the mathematical expectation of f.

In this work, we consider the following impulsive non-autonomous stochastic neural networks:

where n corresponds to the number of units in a neural network; \(x_i(t)\) corresponds to the state of the ith unit at time t; \(f_j (x_j (t))\), \(g_j (x_j (t))\) and \(h_j (x_j (t))\) denote the activation functions of the jth unit at time t; \(a_i(t) \ge 0\) represents the rate with which the ith unit will reset its potential to the resting state in isolation when disconnected from the network and external inputs; \((b_{ij}(t))_{n\times n}\), \((c_{ij}(t))_{n\times n}\) and \((d_{ij}(t))_{n\times n}\) are connection matrices; the delay kernels \(k_j(t),\,j=1,2,\ldots ,n,\) are piecewise continuous and satisfy \(|k_{j}(t)|\le k(t)\in \wp \); \(0\le \tau _{ij}(t)\le \tau \) are the transmission delays, where \(\tau \) is a positive constant; \(\sigma \left( { \cdot , \cdot , \cdot } \right) = \left( {{\sigma _1}\left( { \cdot , \cdot , \cdot } \right) , \ldots ,{\sigma _n}\left( { \cdot , \cdot , \cdot } \right) } \right) :\left[ {{t_0},\infty } \right) \times {R^n} \times {R^n} \rightarrow {R^{n \times n}}\) is the diffusion coefficient matrix; \(w(t) = (w_1 (t), \cdots ,w_n (t))^T \) is an n-dimensional Brownian motion defined on \((\Omega , \mathscr {F},\{\mathscr {F}_t\}_{t\ge 0},P)\); the initial condition is \(\varphi (s)=(\varphi _1(s),\varphi _2(s),\ldots ,\varphi _n(s))^T \in PC^b_{\mathscr {F}_0}\left( { (- \infty ,0],{R^n}} \right) \); and \(t_0<t_1<t_2<\cdots \) are fixed impulsive points with \(\mathop {\lim }\limits _{k \rightarrow \infty } {t_k} = \infty \).

As a standing hypothesis, we assume that for any initial value \(\varphi \in PC^b_{\mathscr {F}_0}\left( { (- \infty ,0],{R^n}} \right) \) there exists a solution of system (1), which is denoted by \(x(t, t_0,\varphi )\), or simply x(t) if no confusion occurs. For the purpose of stability analysis, we also assume that \(f_j(0) = 0,\ g_j (0) = 0,\ h_j (0) = 0\) and \(\sigma _{ij}(t, 0,0) = 0\), \(i,j\in \mathcal {N},\,t\ge t_0\). Then system (1) admits the equilibrium solution \(x(t)\equiv 0\).

Definition 2.1

The zero solution of system (1) is said to be mean square globally exponentially stable if there exist positive constants \(\alpha \) and \(K\ge 1\) such that for any solution \(x(t,t_0,\varphi )\) with the initial condition \(\varphi \in PC^b_{\mathscr {F}_0}\left( { (- \infty ,0],{R^n}} \right) \),

3 Global Exponential Stability

In this section, we first establish a generalized Halanay inequality with impulses and then give sufficient conditions for the global exponential stability of the zero solution of Eq. (1).

Theorem 3.1

Let u(t) be a solution of the impulsive integro-differential inequality

where u(t) is continuous at \(t\ne t_k\), \(t\ge t_0\), \(\phi \in PC\), k(s) is as defined in Sect. 2, and \(\alpha (t)\), \(\beta (t)\) and \(\gamma (t)\) are nonnegative continuous functions with \(\alpha (t)\ge \alpha _0>0\) and \(0\le \beta (t)+\gamma (t) \int _0^\infty {k(s)}ds<q\alpha (t)\) for all \(t\ge t_0\), where \(0\le q<1.\) Further assume that there exists a constant \(\rho >0\) such that

where \(\delta _k : = \max \left\{ {1,\left| {p_k } \right| +\left| {q_k } \right| e^{\lambda \tau } } \right\} \). Then

where \(\lambda \in (0,\lambda _0)\) is defined as

Proof

Denote

By assumption, \(\alpha (t)\ge \alpha _0>0\) and \(0\le \beta (t)+\gamma (t) \int _0^\infty {k(s)}ds<q\alpha (t)\) for all \(t\ge t_0\) with \(0\le q<1\). Hence, for any fixed \(t\ge t_0\), we have \( H\left( 0 \right) = - \alpha \left( t \right) + \beta \left( t \right) +\gamma (t) \int _0^\infty {k(s)}ds \le - \left( {1 - q} \right) \alpha \left( t \right) \le - \left( {1 - q} \right) \alpha _0 < 0\) and \(\mathop {\lim }\limits _{\lambda \rightarrow \infty } H\left( \lambda \right) = \infty \); moreover, \(H(\lambda )\) is strictly increasing. Therefore, for any \(t\ge t_0\) there is a unique positive \(\lambda (t)\) such that \( \lambda \left( t \right) - \alpha \left( t \right) + \beta \left( t \right) e^{\lambda (t)\tau }+\gamma (t)\int _0^\infty {k\left( s \right) e^{\lambda \left( t \right) s} } ds = 0. \) From the definition, \(\lambda ^ * \ge 0\), and we have to prove that \(\lambda ^ *>0\). Suppose this is not true. Fix \(\tilde{q}\) satisfying \( 0 \le q < \tilde{q} < 1 \) and pick a sufficiently small \(\varepsilon >0\) satisfying \(e^{\varepsilon \tau }<\frac{1}{\tilde{q}},\, \int _0^\infty {k\left( s \right) e^{\varepsilon s} } ds < \frac{1}{{\tilde{q}}}\int _0^\infty {k(s)}ds \), and \( \varepsilon < \left( {1 - \frac{q}{{\tilde{q}}}} \right) \alpha _0. \) Then there is a \(t^*\ge t_0\) such that \(\lambda (t^*)<\varepsilon \) and

Now we have

This contradiction shows that \(\lambda ^*> 0\), so there exists at least one constant \(\lambda \) such that \(0 < \lambda < \min \{\lambda _0,\lambda ^*\}\); that is, the definition of \(\lambda \) in (6) is well posed.

As \(\phi \in PC\), we always have

We first prove that, for any given \(k > 1\),

If (8) is not true, then from (7) and the continuity of u(t) for \(t \in [t_0 ,t_1) \), there must exist a \(\hat{t} \in [t_0 ,t_1) \) such that

By using (3), (6) and (10), we obtain

which contradicts the inequality in (9), and so (8) holds for \(t\in [t_0,\,\,t_1)\). Letting \(k\rightarrow 1\), we have

Using the result above and the discrete part of (3), we can get

Therefore

Suppose that for all \(q = 1, 2,\ldots ,k\) the inequalities

hold, where \(\delta _0=1\). Then, from (12), the discrete part of (3) yields

This, together with (12), leads to

By mathematical induction, we conclude that

The proof is completed.\(\square \)
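As a numerical illustration of the first step of the proof, the sketch below computes \(\lambda (t)\), the unique positive root of \(\lambda - \alpha (t) + \beta (t)e^{\lambda \tau } + \gamma (t)\int _0^\infty k(s)e^{\lambda s}ds = 0\), by bisection. Bisection is justified because, for each fixed t, this function of \(\lambda \) is strictly increasing and negative at \(\lambda = 0\). All data are hypothetical choices, not taken from the paper: the kernel \(k(s)=e^{-s}\) (for which the integral equals \(1/(1-\lambda )\) when \(\lambda <1\)), \(\tau =1\), \(\alpha (t)=3+t\) and \(\beta (t)=\gamma (t)=0.5\). These satisfy the hypothesis of Theorem 3.1 with q = 1/2, since \(\beta (t)+\gamma (t)\int _0^\infty k(s)ds = 1 < 1.5 \le q\alpha (t)\). The infimum \(\lambda ^*\) is only approximated here by a minimum over a finite time grid.

```python
import math

TAU = 1.0  # hypothetical delay bound tau


def alpha(t):
    return 3.0 + t  # hypothetical, unbounded, alpha(t) >= alpha_0 = 3


def beta(t):
    return 0.5  # hypothetical


def gamma(t):
    return 0.5  # hypothetical


def H(lam, a, b, g, tau=TAU):
    """lam - a + b*e^{lam*tau} + g*int_0^inf e^{-s} e^{lam*s} ds,
    using the assumed kernel k(s) = e^{-s}; the integral is 1/(1 - lam)."""
    kernel_term = 0.0
    if g > 0.0:
        if lam >= 1.0:
            return math.inf  # kernel integral diverges for lam >= 1
        kernel_term = g / (1.0 - lam)
    return lam - a + b * math.exp(lam * tau) + kernel_term


def lambda_of_t(t, tau=TAU, iters=200):
    """Unique positive root lambda(t) of H for fixed t, found by bisection."""
    a, b, g = alpha(t), beta(t), gamma(t)
    if H(0.0, a, b, g, tau) >= 0.0:
        raise ValueError("hypothesis beta(t) + gamma(t)*int k < alpha(t) fails")
    lo, hi = 0.0, 1.0
    while H(hi, a, b, g, tau) <= 0.0:  # only needed when g == 0
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if H(mid, a, b, g, tau) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


grid = [0.1 * i for i in range(101)]          # t in [0, 10]
lam_star = min(lambda_of_t(t) for t in grid)  # grid approximation of inf_t lambda(t)
print(f"lambda(0) = {lambda_of_t(0.0):.6f}, lambda* on grid = {lam_star:.6f}")
```

Because \(\alpha (t)\) increases with t in this toy choice, \(\lambda (t)\) increases as well and the grid minimum is attained at t = 0. Any admissible \(\lambda \) must also stay below \(\lambda _0\), which for this kernel must be less than 1.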

Remark 3.1

If \(\alpha (t)\equiv \alpha \), \(\beta (t)\equiv \beta \) and \(\gamma (t)\equiv \gamma \) for \(t\ge t_0\), Theorem 3.1 reduces to Lemma 1 in [18].

Theorem 3.2

[18, Lemma 1] Let \(\alpha ,\beta ,\gamma \) and \(p_k,k=1,2,\ldots ,\) denote nonnegative constants and let u(t) be a solution of the impulsive integro-differential inequality

where u(t) is continuous at \(t\ne t_k\), \(t\ge t_0\), \(\phi \in PC\), k(s) is the same as defined in Sect. 2. Assume that

-

(i)

\(\alpha >\beta +\gamma \int _0^\infty k\left( s \right) ds\);

-

(ii)

there exists a constant \(\rho >0\) such that

$$\begin{aligned} \prod \limits _{k = 1}^n {\delta _k } \le e^{\rho \left( {t_n - t_0 } \right) } ,\quad n = 1,2, \ldots , \end{aligned}$$

where \(\delta _k : = \max \left\{ {1,\left| {p_k } \right| +\left| {q_k } \right| e^{\lambda \tau } } \right\} .\)

Then

where \(\lambda \in (0,\lambda _0)\) is defined as

We remark that Theorem 3.1 replaces the autonomous condition (14) with the non-autonomous condition (3), which is more useful in practice, as the following example shows.

Example 3.1

where \(t_k=t_{k-1}+2k, k=1,2,\ldots \). Theorem 3.2 cannot be applied to Eq. (15). However, from Theorem 3.1, we can easily see that the trivial solution of (15) satisfies \(x\left( t \right) \le e^{ - 0.1t} ,\, t\ge 0\).

Theorem 3.3

Assume that

\((A_1)\) There exist nonnegative constants \(L_j^f , L_j^g ,\) and \(L_{j}^h\), \(j = 1,\ldots ,n,\) such that

\((A_2)\) There exist nonnegative continuous functions \(\alpha _i(t)\) and \(\beta _i(t)\), \(i=1,2,\ldots ,n,\) such that

\((A_3)\) There exist nonnegative continuous functions \(\eta (t)\), \(\zeta (t)\) and \(\rho (t)\) , \(t\ge t_0\), such that

where

\((A_4)\) There exists a positive constant \(\gamma \) satisfying

where \( \gamma _k = \max \left\{ 1,\mathop {2n\max }\limits _{1 \le i \le n} \left\{ {\sum \limits _{j = 1}^n {\left( {w_{ij}^k } \right) ^2 } }\right\} +\mathop {2n\max }\limits _{1 \le i \le n} \left\{ {\sum \limits _{j = 1}^n {\left( {e_{ij}^k } \right) ^2 } }\right\} e^{\lambda \tau } \right\} \) and \(0<\lambda \le \lambda _0\) is defined as

Then the zero solution of (1) is mean square globally exponentially stable.
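The impulse factor \(\gamma _k\) in \((A_4)\) is a direct algebraic function of the impulse coefficients, which is what makes the condition easy to check. A minimal sketch, assuming the kth impulse is described by the n × n matrices \(W_k=(w_{ij}^k)\) and \(E_k=(e_{ij}^k)\) passed as nested lists (the function name `gamma_k` is hypothetical):

```python
import math


def gamma_k(W, E, lam, tau):
    """gamma_k = max{1, 2n max_i sum_j (w_ij^k)^2
                        + 2n max_i sum_j (e_ij^k)^2 * e^{lam*tau}}.
    W, E: n x n impulse matrices at the k-th impulse (lists of rows)."""
    n = len(W)
    w_part = 2 * n * max(sum(w * w for w in row) for row in W)
    e_part = 2 * n * max(sum(e * e for e in row) for row in E)
    return max(1.0, w_part + e_part * math.exp(lam * tau))


zero = [[0.0, 0.0], [0.0, 0.0]]
print(gamma_k([[0.1, 0.0], [0.0, 0.1]], zero, lam=0.3, tau=1.0))  # 1.0
print(gamma_k([[1.0, 0.0], [0.0, 1.0]], zero, lam=0.3, tau=1.0))  # 4.0
```

For example, with n = 2, \(W_k = 0.1I\) and \(E_k = 0\), the bracketed term is \(4\times 0.01 = 0.04\), so \(\gamma _k = 1\).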

Proof

By an argument similar to that for (6), the \(\lambda \) defined by (17) is well defined.

By assumptions \((A_1)\)–\((A_4)\), Theorem 4.1 in [36], and the following proof, we know that for any \(\varphi \in PC^b_{\mathscr {F}_0}\left( { (- \infty ,0],{R^n}} \right) \) there exists a unique global solution x(t) through \((t_0, \varphi )\).

Define \( V\left( t \right) = \sum \limits _{i = 1}^n { {x^2_i \left( t \right) } } \). From (1), \((A_1)\), and the Itô formula [37], we have

where \(LV\left( t \right) \) is defined by

It follows from \((A_1)-(A_3)\) that

Integrating (18) from \(t_k\) to t, \(t\in [t_k,t_{k+1}),\,k=0,1,2,\ldots ,\) we have

Taking the mathematical expectation, we get that

and for sufficiently small \(\Delta t > 0,\)

Thus, from (20) and (21), it follows that

which, together with (19), implies

On the other hand, from (1) and the Hölder inequality, we get

It follows from (16) in condition \((A_4)\) that

Then, all conditions of Theorem 3.1 are satisfied by (22), (23) and condition \((A_3)\), so

i.e.,

The proof is complete.\(\square \)
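The proof of Theorem 3.3 applies the Itô formula to \(V(t)=\sum _{i=1}^n x_i^2(t)\). For an Itô process \(dx = F\,dt + \sigma \, dw\) with an n × n diffusion matrix \(\sigma \) and an n-dimensional Brownian motion w, the standard result is \(LV = 2x^TF + \mathrm {trace}(\sigma \sigma ^T)\), since the gradient of V is 2x and its Hessian is 2I. The helper below evaluates this expression at a single point; F and \(\sigma \) are plain numbers supplied by the caller (for system (1) they would be the drift and diffusion evaluated at time t), and the function name is hypothetical.

```python
def LV_square_norm(x, drift, sigma):
    """Ito operator of V(x) = sum_i x_i^2 for dx = drift dt + sigma dw.
    sigma is an n x n nested list; w is an n-dimensional Brownian motion.
    LV = 2 * sum_i x_i * drift_i + sum_{i,j} sigma_ij^2,
    where the last sum equals trace(sigma sigma^T)."""
    first_order = 2.0 * sum(xi * fi for xi, fi in zip(x, drift))
    second_order = sum(s * s for row in sigma for s in row)
    return first_order + second_order


# x = (1, 2), drift = -x, sigma = 0.5 * I gives 2 * (-5) + 0.5
print(LV_square_norm([1.0, 2.0], [-1.0, -2.0], [[0.5, 0.0], [0.0, 0.5]]))  # -9.5
```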

Remark 3.2

The stability of non-autonomous neural networks has been investigated in [32, 33]. However, the parameters in [32, 33] are required to be bounded. Note that our results do not require the parameters \(a_i(t),\,b_{ij}(t),\,c_{ij}(t),\,d_{ij}(t),\,i,j=1,2,\ldots ,\) in system (1) to be bounded.

If \(w_{ij}^k = 0\) for \(i \ne j\) and \(e_{ij}^k = 0\), \(i,j = 1,2, \ldots ,n,\,k=1,2,\ldots \), then model (1) reduces to the following simpler impulsive system:

Corollary 3.1

Assume that \((A_1 )-(A_3 )\) and \((A_4)\) with \(\gamma _k=\max \{1,(w_{ii}^k)^2\}\) hold. Then the zero solution of (24) is mean square globally exponentially stable.

Proof

The proof is similar to that of Theorem 3.3 and is omitted.\(\square \)

Remark 3.3

In the particular case when the parameters \(a_i(t),\,b_{ij}(t),\,c_{ij}(t),\,d_{ij}(t)\) and \(\sigma _{ij}(t,x_i(t),x_i(t-\tau _{ij}(t))),\,i,j=1,2,\ldots ,\) in system (1) are independent of t, Li [25] obtained sufficient conditions ensuring global mean square exponential stability of system (24) by using Theorem 3.2 with \(q_k=0\), \(k=1,2,\ldots ,\) and the same Lyapunov function V(t) as in Theorem 3.3. Just as Theorem 3.1 generalizes Theorem 3.2 from the autonomous case to the non-autonomous case, Corollary 3.1 generalizes Theorem 3.2 in [25] from the autonomous case to the non-autonomous case.

Corollary 3.2

Assume that \((A_1 )-(A_3 )\) hold. Then the zero solution of (24) is mean square globally exponentially stable if \(-1\le w_{ii}^k\le 1 \).

Proof

As \(-1\le w_{ii}^k\le 1\), a direct calculation shows that when we take \(\gamma _k=1\) and \(\gamma =0\), condition \((A_4)\) is satisfied. It follows from Corollary 3.1 that the zero solution of (24) is mean square globally exponentially stable. The proof is completed.\(\square \)

If \(w_{ii}^k = 1\), \(w_{ij}^k = 0\) for \(i \ne j\), and \(e_{ij}^k = 0\), \(i,j = 1,2, \ldots ,n,\,k=1,2,\ldots \), then model (1) reduces to the following delayed stochastic neural network without impulses:

By Theorem 3.3, we easily obtain the following corollary.

Corollary 3.3

Assume that \((A_1 )-(A_3 )\) hold. Then the zero solution of (25) is mean square globally exponentially stable with exponential convergence rate \(\lambda \).

4 Example

In this section, we will give an example to illustrate the exponential stability of (1).

Example 4.1

Consider the following impulsive non-autonomous stochastic neural networks:

where \(t_k=t_{k-1}+k,\,k=1,2,\ldots ,\) and \(\int _0^\infty e^{-s}ds=1<\infty \), so condition (H) is satisfied. Conditions \((A_1)\) and \((A_2)\) are satisfied with \(L_j^f=L_j^g=L_j^h=1,\,j=1,2\), and \(\alpha _i(t)\equiv 2,\,\beta _i(t)\equiv 0,\,i=1,2.\) By simple computation, \((A_3)\) holds with \(\eta (t)=3+4t\), \(\zeta (t)=\frac{1}{2}+t\), and \(\rho (t)=\frac{1}{2}+\frac{3}{2}t\). Let \(\gamma _k=\max \left\{ {1,e^{0.2k} } \right\} =e^{0.2k}\) and \(\lambda =0.3\), which satisfies inequality (16). Therefore, there exists \(\gamma = 0.2>0 \) such that

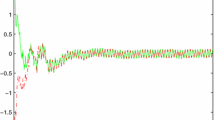

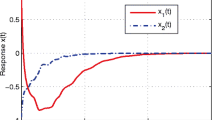

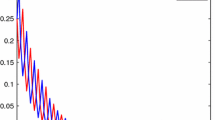

Since all the conditions of Corollary 3.1 are satisfied, we conclude that the zero solution of (26) is mean square globally exponentially stable with exponential convergence rate 0.1. We employ the Euler scheme to discretize this equation, where the integral term is approximated by the composite \(\Theta \)-rule quadrature [38]. The simulation results are illustrated in Fig. 1.
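The product condition used in Example 4.1 can be checked mechanically. Assuming, by analogy with condition (ii) of Theorem 3.2, that it has the form \(\prod _{j=1}^{k}\gamma _j \le e^{\gamma (t_k - t_0)}\) for every k, the sketch below verifies it for \(\gamma _k = e^{0.2k}\) and \(t_k = t_{k-1} + k\) over the first 50 impulses. Taking \(t_0 = 0\) is an assumption; only the differences \(t_k - t_0 = k(k+1)/2\) matter. Logarithms are compared to avoid overflow, and the function name is hypothetical.

```python
import math


def product_condition_holds(factors, times, t0, rate, tol=1e-9):
    """Check prod_{j<=m} factors[j] <= exp(rate * (times[m] - t0)) for every m,
    in log form: sum_j log(factors[j]) <= rate * (t_m - t0)."""
    log_prod = 0.0
    for f, t in zip(factors, times):
        log_prod += math.log(f)
        if log_prod > rate * (t - t0) + tol:
            return False
    return True


# Example 4.1 data: t_k = t_{k-1} + k (t_0 = 0 assumed), gamma_k = e^{0.2k}
t0, K = 0.0, 50
times, t = [], t0
for k in range(1, K + 1):
    t += k
    times.append(t)
gammas = [math.exp(0.2 * k) for k in range(1, K + 1)]

lam, gam = 0.3, 0.2
print(product_condition_holds(gammas, times, t0, gam))   # True
print(product_condition_holds(gammas, times, t0, 0.19))  # False
print(f"lambda - gamma = {lam - gam:.2f}")               # 0.10
```

Here the condition holds with equality, since \(\sum _{j\le k} 0.2j = 0.2\,k(k+1)/2 = 0.2(t_k - t_0)\), so no \(\gamma \) smaller than 0.2 works. The difference \(\lambda - \gamma = 0.3 - 0.2 = 0.1\) coincides with the convergence rate stated above.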

Fig. 1 Simulation result of Example 4.1

Remark 4.1

Since \( \mathop {\min }\limits _{t \ge 0} \left\{ {\eta \left( t \right) } \right\} = 3,\) \(\mathop {\sup }\limits _{t \ge 0} \left\{ {\zeta \left( t \right) } \right\} = + \infty \) and \(\mathop {\sup }\limits _{t \ge 0} \left\{ {\rho \left( t \right) } \right\} = + \infty \), Lemma 1 in [15] cannot be applied to Eq. (26).
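System (26) itself is not reproduced here, so the following is only a sketch of the kind of scheme described in Example 4.1, applied to a hypothetical scalar equation \(dx(t) = [-a x(t) + b\tanh (x(t-\tau )) + c\int _0^\infty e^{-s}\tanh (x(t-s))ds]dt + s_0 x(t)\,dw(t)\) with impulses \(x(t_k) = w\,x(t_k^-)\) and \(t_k = t_{k-1}+k\). It uses the Euler-Maruyama method with step h, truncates the infinite delay at S (an assumption; the neglected kernel weight is \(e^{-S}\)), and approximates the integral by the composite trapezoidal rule, i.e. the composite \(\Theta \)-rule with \(\Theta = 1/2\). All parameter values and the constant initial history \(\varphi \equiv 1\) are illustrative choices. The mean square \(E|x(t)|^2\) is estimated by averaging over M sample paths, which is one way to visualize mean square exponential stability.

```python
import numpy as np


def simulate(a=3.0, b=0.5, c=0.5, s0=0.5, w_imp=0.8, tau=1.0,
             T=10.0, h=0.02, S=8.0, M=500, seed=0):
    """Euler-Maruyama sketch for a hypothetical scalar impulsive stochastic
    equation with discrete delay tau and distributed delay (kernel e^{-s}).
    Returns the time grid and a Monte Carlo estimate of E|x(t)|^2."""
    rng = np.random.default_rng(seed)
    L = int(round(S / h))       # history length in steps (truncation at S)
    d = int(round(tau / h))     # discrete delay in steps (requires tau <= S)
    hist = np.ones((M, L + 1))  # hist[:, j] = x(t - j*h); history phi = 1
    s_nodes = h * np.arange(L + 1)
    wts = h * np.exp(-s_nodes)  # composite trapezoidal weights (Theta = 1/2)
    wts[0] *= 0.5
    wts[-1] *= 0.5
    # impulse times t_k = t_{k-1} + k with t_0 = 0, mapped to step indices
    imp_steps, t_k, k = set(), 0.0, 1
    while t_k + k <= T:
        t_k += k
        imp_steps.add(int(round(t_k / h)))
        k += 1
    n_steps = int(round(T / h))
    msq = np.empty(n_steps + 1)
    msq[0] = np.mean(hist[:, 0] ** 2)
    for n in range(n_steps):
        x = hist[:, 0]
        integral = np.tanh(hist) @ wts  # ~ int_0^S e^{-s} tanh(x(t-s)) ds
        drift = -a * x + b * np.tanh(hist[:, d]) + c * integral
        dw = rng.normal(0.0, np.sqrt(h), size=M)  # Brownian increments
        x_new = x + drift * h + s0 * x * dw
        if n + 1 in imp_steps:
            x_new = w_imp * x_new  # jump x(t_k) = w * x(t_k^-)
        hist = np.roll(hist, 1, axis=1)  # shift history by one step
        hist[:, 0] = x_new
        msq[n + 1] = np.mean(x_new ** 2)
    return h * np.arange(n_steps + 1), msq


t, msq = simulate()
for t_show in (0.0, 2.0, 5.0, 10.0):
    i = int(round(t_show / 0.02))
    print(f"t = {t_show:4.1f}   E|x|^2 ~ {msq[i]:.3e}")
```

Storing the truncated history as a fixed-size buffer keeps both the discrete delay lookup and the quadrature vectorized over all sample paths.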

References

Liao X, Wong K, Li C (2004) Global exponential stability for a class of generalized neural networks with distributed delays. Nonlinear Anal Real World Appl 5:527–547

Liao X, Wang J, Zeng Z (2005) Global asymptotic stability and global exponential stability of delayed cellular neural networks. IEEE Trans Circuits Syst II: Express Briefs 52(7):403–409

Zhao H (2004) Global asymptotic stability of Hopfield neural network involving distributed delays. Neural Netw 17:47–53

Chen A, Cao J, Huang L (2005) Global robust stability of interval cellular neural networks with time-varying delays. Chaos Solitons Fractals 23:787–799

Liu Y, Wang Z, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19:667–675

Wang B, Jian J, Jiang M (2010) Stability in Lagrange sense for Cohen-Grossberg neural networks with time-varying delays and finite distributed delays. Nonlinear Anal Hybrid Syst 4:65–78

Zhang G, Shen Y (2015) Novel conditions on exponential stability of a class of delayed neural networks with state-dependent switching. Neural Netw 71:55–61

Zhang G, Shen Y, Sun J (2012) Global exponential stability of a class of memristor-based recurrent neural networks with time-varying delays. Neurocomputing 97:149–154

Huang Z, Feng C, Mohamad S (2012) Multistability analysis for a general class of delayed Cohen-Grossberg neural networks. Inf Sci 187:233–244

Wu A, Zeng Z (2014) New global exponential stability results for a memristive neural system with time-varying delays. Neurocomputing 144:553–559

Sun J, Chen J (2013) Stability analysis of static recurrent neural networks with interval time-varying delay. Appl Math Comput 221:111–120

Bai Y, Chen J (2013) New stability criteria for recurrent neural networks with interval time-varying delay. Neurocomputing 121:179–184

Xu L, Xu D (2008) Exponential stability of nonlinear impulsive neutral integro-differential equations. Nonlinear Anal TMA 69:2910–2923

Xu D, Zhu W, Long S (2006) Global exponential stability of impulsive integro-differential equation. Nonlinear Anal 64:2805–2816

Long S, Xu D, Zhu W (2007) Global exponential stability of impulsive dynamical systems with distributed delays. Electron J Qual Theory Differ Equ 10:1–13

Li X, Chen Z (2009) Stability properties for Hopfield neural networks with delays and impulsive perturbations. Nonlinear Anal Real World Appl 10:3253–3265

Yang X (2009) Existence and global exponential stability of periodic solution for Cohen-Grossberg shunting inhibitory cellular neural networks with delays and impulses. Neurocomputing 72:2219–2226

Li X (2009) Existence and global exponential stability of periodic solution for impulsive Cohen-Grossberg-type BAM neural networks with continuously distributed delays. Appl Math Comput 215:292–307

Haykin S (1994) Neural networks. Prentice-Hall, New Jersey, NJ

Gan Q, Xu R, Yang P (2010) Stability analysis of stochastic fuzzy cellular neural networks with time-varying delays and reaction-diffusion terms. Neural Process Lett 32:45–57

Gan Q, Xu R (2010) Global robust exponential stability of uncertain neutral high-order stochastic Hopfield neural networks with time-varying delays. Neural Process Lett 32:83–96

Tang Y, Gao H, Zhang W, Kurths J (2015) Leader-following consensus of a class of stochastic delayed multi-agent systems with partial mixed impulses. Automatica 53:346–354

Zhang W, Tang Y, Wu X, Fang J (2014) Synchronization of nonlinear dynamical networks with heterogeneous impulses. IEEE Trans Circuits Syst I: Regul Pap 61:1220–1228

Wong W, Zhang W, Tang Y, Wu X (2013) Stochastic synchronization of complex networks with mixed impulses. IEEE Trans Circuits Syst I: Regul Pap 60:2657–2667

Li X (2010) Existence and global exponential stability of periodic solution for delayed neural networks with impulsive and stochastic effects. Neurocomputing 73:749–758

Song Q, Wang Z (2008) Stability analysis of impulsive stochastic Cohen-Grossberg neural networks with mixed time delays. Physica A 387:3314–3326

Wang X, Guo Q, Xu D (2009) Exponential p-stability of impulsive stochastic Cohen-Grossberg neural networks with mixed delays. Math Comput Simul 79:1698–1710

Gui Z, Ge W (2007) Periodic solutions of nonautonomous cellular neural networks with impulses. Chaos Solitons Fractals 32:1760–1771

Zhang Q, Wei X, Xu J (2008) Delay-dependent exponential stability criteria for non-autonomous cellular neural networks with time-varying delays. Chaos Solitons Fractals 36:985–990

Zhang Q, Wei X, Xu J (2009) Exponential stability for nonautonomous neural networks with variable delays. Chaos Solitons Fractals 39:1152–1157

Jiang M, Shen Y (2008) Stability of nonautonomous bidirectional associative memory neural networks with delay. Neurocomputing 71:863–874

Zhao H, Mao Z (2009) Boundedness and stability of nonautonomous cellular neural networks with reaction-diffusion terms. Math Comput Simul 79:1603–1617

Lou X, Cui B (2007) Boundedness and exponential stability for nonautonomous cellular neural networks with reaction-diffusion terms. Chaos Solitons Fractals 33:653–662

Niu S, Jiang H, Teng Z (2010) Boundedness and exponential stability for nonautonomous FCNNs with distributed delays and reaction-diffusion terms. Neurocomputing 73:2913–2919

Long S, Li H, Zhang Y (2015) Dynamic behavior of nonautonomous cellular neural networks with time-varying delays. Neurocomputing 168:846–852

Xu D, Li B, Long S, Teng L (2014) Moment estimate and existence for solutions of stochastic functional differential equations. Nonlinear Anal 108:128–143

Mao X (1997) Stochastic differential equations and applications. Horwood, Chichester

Song Y, Baker C (2004) Qualitative behaviour of numerical approximations to Volterra integro-differential equations. J Comput Appl Math 172:101–115

Acknowledgments

The work is supported by National Natural Science Foundation of China under Grants 11271270, 11326118, 11501065 and 11201320, Fundamental Research Funds for the Central Universities under Grant 2682015CX059 and Natural Science Foundation of Chongqing under Grant cstc2015jcyjA00033.

About this article

Cite this article

Li, D., Li, B. Global Mean Square Exponential Stability of Impulsive Non-autonomous Stochastic Neural Networks with Mixed Delays. Neural Process Lett 44, 751–764 (2016). https://doi.org/10.1007/s11063-015-9492-8

DOI: https://doi.org/10.1007/s11063-015-9492-8