Abstract

The paper presents an image denoising algorithm that combines a method based on directional quasi-analytic wavelet packets (qWPs) with the popular BM3D algorithm. The qWP-based denoising algorithm (qWPdn) decomposes the degraded image, applies adaptive localized soft thresholding to the transform coefficients using the Bivariate Shrinkage methodology, and restores the image from the thresholded coefficients of several decomposition levels. The combined method consists of several iterations of the qWPdn and BM3D algorithms, where at each iteration the output of one algorithm updates the input to the other. The proposed methodology couples the ability of qWPdn to capture edges and fine texture patterns, even in severely corrupted images, with the exploitation of the sparsity of real images and of the self-similarity of image patches that is inherent in BM3D. Multiple experiments, which compared the performance of the proposed methodology with that of six state-of-the-art denoising algorithms, confirmed that the combined algorithm is quite competitive.


1 Introduction

High-quality denoising is one of the main challenges in image processing. It aims to suppress noise while capturing and preserving edges and fine structures in the image. A huge number of publications are devoted to a variety of denoising methods (see, for example, the reviews Goyal et al., 2020; Ilesanmi & Ilesanmi, 2021; Tian et al., 2020).

Currently, two main groups of image denoising methods exist:

1. “Classical” schemes, which operate on single images;
2. Methodologies based on Deep Learning.

In turn, most up-to-date “classical” schemes, the group to which the proposed algorithm belongs, are based on one of two approaches.

Utilization of non-local self-similarity (NLSM) in images: Starting from the introduction of the non-local means (NLM) filter in Buades et al. (2005), which is based on the similarity between pixels in different parts of the image, the exploitation of various forms of non-local self-similarity in images has resulted in remarkable progress in image denoising. This progress is reflected in multiple publications (Dabov et al., 2007, 2009; Dong et al., 2013; Gu et al., 2014; Hou & Shen, 2018; Liu & Osher, 2019; Zhou et al., 2021, to name a very few). Non-local self-similarity is also exploited in some denoising schemes based on Deep Learning (see, for example, Chen & Pock, 2017; Zhang et al., 2017).

The BM3D algorithm (Dabov et al., 2007), presented as far back as 2007, remains a benchmark of sorts in image denoising. The algorithm exploits the self-similarity of patches and the sparsity of the image in a transform domain. It collects similar patches of the image into a 3D array, which is subjected to a decorrelating 3D transform followed by either hard thresholding or Wiener filtering. After the inverse transforms, the processed patches are returned to their original locations with corresponding weights. This method is highly efficient in restoring moderately noisy images. However, BM3D tends to over-smooth and smear the fine structure and edges of the image when the noise is strong. Also, BM3D is not successful when the image contains many edges oriented in multiple directions.
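The grouping step of BM3D described above can be illustrated by a minimal block-matching sketch. It is not the reference BM3D implementation: the patch size, the search radius, the optional distance threshold `max_dist` and the group size are illustrative assumptions, and `block_match` is a hypothetical helper operating on an image stored as a plain list of rows.

```python
def extract_patch(img, r, c, size):
    """Return the size x size patch whose top-left corner is (r, c), flattened."""
    return [img[r + i][c + j] for i in range(size) for j in range(size)]


def block_match(img, ref_r, ref_c, size=4, search=8, max_dist=None, max_group=16):
    """Collect the top-left corners of patches similar to the reference patch.

    Candidates lie in a window of radius `search` around the reference and are
    ranked by their mean squared difference to it; the reference itself comes first.
    """
    rows, cols = len(img), len(img[0])
    ref = extract_patch(img, ref_r, ref_c, size)
    scored = []
    for r in range(max(0, ref_r - search), min(rows - size, ref_r + search) + 1):
        for c in range(max(0, ref_c - search), min(cols - size, ref_c + search) + 1):
            cand = extract_patch(img, r, c, size)
            d = sum((a - b) ** 2 for a, b in zip(ref, cand)) / (size * size)
            if max_dist is None or d <= max_dist:
                # The boolean puts the reference ahead of other zero-distance patches.
                scored.append((d, (r, c) != (ref_r, ref_c), r, c))
    scored.sort()
    return [(r, c) for _, _, r, c in scored[:max_group]]
```

In BM3D proper, the patches at the returned positions would be stacked into a 3D array, transformed, thresholded or Wiener-filtered, and aggregated back; those stages are omitted from this sketch.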

Some improvement over the original BM3D algorithm was achieved by using shape-adaptive neighborhoods and by including PCA in the 3D transform (the BM3D-SAPCA algorithm, Dabov et al., 2009). Even better results than those of BM3D and BM3D-SAPCA are demonstrated by the so-called WNNM method (Gu et al., 2014), which is based on the assumption that the matrix formed by stacking non-local similar patch vectors should have low rank and, as such, sparse singular values. The low-rank matrix approximation in Gu et al. (2014) is achieved by adaptive weighted thresholding of the singular values of such matrices. Many denoising algorithms presented in recent years that are based on the NLSM concept report results close to those produced by BM3D-SAPCA and WNNM. At the same time, they share, to some extent, the shortcomings of the BM3D algorithm, especially the blurring of the fine structure of images restored from severely degraded inputs.
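The adaptive weighted thresholding of singular values can be sketched on the singular values alone. This is a simplified illustration, not the reference WNNM code: following the weighting idea of Gu et al. (2014), each threshold is inversely proportional to an estimate of the corresponding clean singular value, but the single constant `lam` (which absorbs the constants of the original formulation), the clean-value estimate and the function name are assumptions. Computing the SVD and recombining the shrunk values with the singular vectors are not shown.

```python
import math


def weighted_sv_shrink(singular_values, n_patches, sigma, lam, eps=1e-8):
    """Weighted soft thresholding of the singular values of a noisy patch matrix.

    singular_values: singular values of the noisy matrix, in descending order.
    n_patches: number of stacked similar patches (matrix columns).
    sigma: noise standard deviation.
    lam: hypothetical tuning constant scaling all thresholds.
    """
    shrunk = []
    for s in singular_values:
        # Rough estimate of the clean singular value: subtract the noise energy.
        s_clean = math.sqrt(max(s * s - n_patches * sigma * sigma, 0.0))
        # Threshold inversely proportional to the clean estimate, so dominant
        # (structural) components are shrunk less than weak (noise-like) ones.
        threshold = lam / (s_clean + eps)
        shrunk.append(max(s - threshold, 0.0))
    return shrunk
```

For example, with the singular values `[10.0, 3.0, 1.0]`, four patches, unit noise and `lam=4.0`, the largest value is reduced only slightly, the middle one noticeably, and the smallest, which the estimate attributes entirely to noise, is set to zero.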

Transform domain filtering using directional filters: One way to capture lines, edges and texture patterns while restoring degraded images is to use directional filters and, correspondingly, dictionaries of waveforms oriented in multiple directions. A number of such dictionaries have been reported in the literature and applied to image processing. We mention contourlets (Do & Vetterli, 2008), curvelets (Candès & Donoho, 2004; Candès et al., 2006), pseudo-polar Fourier transforms (Averbuch et al., 2008a, b) and the related shearlets (Kutyniok & Labate, 2012; Lim et al., 2012). However, while these transforms successfully capture edges in images, they have not demonstrated satisfactory texture restoration because of the shortage of oscillating waveforms in the dictionaries.

A number of publications (Bayram & Selesnick, 2008; Han & Zhao, 2014; Han et al., 2016, 2019; Jalobeanu et al., 2000; Ji et al., 2017, 2018, to name a few) present directional dictionaries built from tensor products of complex wavelets (Kingsbury, 1999; Selesnick et al., 2005), wavelet frames and wavelet packets (WPs). The tight tensor-product complex wavelet frames (TP_\(\mathbb {C}\)TF\(_{n}\))Footnote 1 with different numbers of directions are designed in Han and Zhao (2014) and Han et al. (2016, 2019), and some of them, in particular cptTP_\(\mathbb {C}\)TF\(_{6}\), TP_\(\mathbb {C}\)TF\(_{6}\) and TP_\(\mathbb {C}\)TF\(^{\downarrow }_{6}\), demonstrate impressive performance in image denoising and inpainting. The waveforms in these frames are oriented in 14 directions and, owing to the two-layer structure of their spectra, they possess certain, although limited, oscillatory properties.

In Che and Zhuang (2018) [the algorithm Digital Affine Shear Filter Transform with 2-Layer Structure (DAS-2)], the two-layer structure inherent in the TP_\(\mathbb {C}\)TF\(_{6}\) frames is incorporated into the shearlet-based directional filter banks introduced in Zhuang (2016). This improves the performance of DAS-2 compared with TP_\(\mathbb {C}\)TF\(_{6}\) on texture-rich images such as “Barbara”, but not on smoother images such as “Lena”.

Recently, we designed a family of complex WPs (Averbuch et al., 2020; a brief outline of the design is given in Averbuch et al., 2021), which are referred to as quasi-analytic WPs (qWPs). The design is based on the family of WPs originating from polynomial splines of different orders, which is described in Averbuch et al. (2019, Chapter 4). The two-dimensional (2D) qWPs are derived by standard tensor products of 1D qWPs. The real parts of the 2D qWPs possess a combination of properties valuable for image processing: they are oriented in multiple directions (see Table 1); the waveforms are close to directional cosines with multiple frequencies modulated by localized low-frequency 2D signals; and their DFT spectra form a refined split of the frequency domain. Both the one- and two-dimensional qWP transforms are implemented very efficiently using the Fast Fourier Transform (FFT). The directional qWPs have been successfully applied to image inpainting (Averbuch et al., 2021).

Owing to the above properties, a qWP-based denoising algorithm (qWPdn), which utilizes a version of the Bivariate Shrinkage algorithm (BSA; Şendur & Selesnick, 2002a, b), proved to be efficient for image denoising. Experiments with qWPdn demonstrate its ability to restore edges and texture details even in severely degraded images. In most experiments, qWPdn, described in Sect. 3.1, provides better resolution of edges and fine structures than the cptTP-\(\mathbb {C}\)TF\(_6\), DAS-2 and NLSM-based algorithms, which is reflected in higher SSIM (Wang et al., 2004; Wang & Bovik, 2009) values. On the other hand, the NLSM-based algorithms proved superior in noise suppression, especially in smooth regions of images, thus producing the highest PSNR values in almost all experiments. However, some over-smoothing of edges and fine texture persisted with the BM3D, BM3D-SAPCA, NCSR (Dong et al., 2013) and WNNM algorithms. This is especially the case for severely degraded images.

Therefore, we propose to combine the qWPdn and BM3D algorithms in order to retain the strong features of both algorithms and to get rid of their drawbacks. The combined qWPdn–BM3D algorithms presented in the paper consist of iterated executions of the qWPdn and BM3D algorithms such that, at each iteration, the output from one algorithm updates the input to the other. Typically, 2–3 (rarely more than 4) iterations are needed to get an excellent result. In multiple experiments, some of which are reported in Sect. 3.3, the performance of the qWPdn–BM3D algorithms is compared with that of the NLSM-based algorithms BM3D, BM3D-SAPCA, NCSR and WNNM and of the algorithms cptTP-\(\mathbb {C}\)TF\(_6\) and DAS-2, which use directional waveforms. The hybrid qWPdn–BM3D algorithms demonstrated noise suppression efficiency that is quite competitive with BM3D. Thus, they produce PSNR values higher than or very close to the values BM3D produces. On the other hand, their performance in resolving edges and fine structures is much better than that of the NLSM-based algorithms, thus producing significantly higher SSIM values. In almost all experiments, the performance of the cptTP-\(\mathbb {C}\)TF\(_6\) and DAS-2 algorithms was inferior to that of the qWPdn–BM3D algorithms.

Contribution of the paper:

- Development of a fast image denoising algorithm, qWPdn, based on the recently designed directional quasi-analytic wavelet packets.
- Design of the fast iterative hybrid qWPdn–BM3D algorithms, which are highly efficient in capturing edges and fine structures even in severely degraded images.
- Experimental comparison of the performance of the hybrid qWPdn–BM3D algorithms with that of multiple state-of-the-art algorithms, which demonstrated a decisive advantage of the hybrid algorithms in terms of SSIM and visual perception.

The paper is organized as follows: Sect. 2 briefly outlines the properties of the qWP transforms in one and two dimensions. Section 3.1 describes the qWPdn algorithm. Section 3.2 presents the combined qWPdn–BM3D algorithms. In the multiple experiments in Sect. 3.3, the performance of these algorithms is compared with that of the cptTP-\(\mathbb {C}\)TF\(_6\), DAS-2, BM3D, BM3D-SAPCA, NCSR and WNNM algorithms. Section 3.4 briefly discusses the relation of the proposed methodology to recently published Deep Learning denoising methods. Section 4 provides an overview of the achieved results. The Matlab code that counts the number of directions of 2D qWPs for different decomposition levels is given in the “Appendix”.

Notation and abbreviations: \(N=2^{j}\), \(\omega {\mathop {=}\limits ^{{\textit{def}}}}e^{2\pi \,i/N}\) and \(\Pi [N]\) is a space of real-valued N-periodic signals. \(\Pi [N,N]\) is the space of two-dimensional N-periodic arrays in both vertical and horizontal directions.

The abbreviations WP, dWP and qWP mean wavelet packet, orthonormal spline-based wavelet packet \(\psi ^{p}_{[m],l}\) and quasi-analytic wavelet packets \(\Psi ^{p}_{\pm [m],l}\), respectively, in the 1D case, and wavelet packet \(\psi ^{p}_{[m],j,l}\) and quasi-analytic wavelet packets \(\Psi ^{p}_{+\pm [m],j,l}\), respectively, in the 2D case.

qWPdn designates the qWP-based image denoising algorithm. qWPdn–BM3D denotes a hybrid image denoising algorithm combining qWPdn with BM3D.

PSNR means Peak Signal-to-Noise ratio in decibels (dB). SSIM means Structural Similarity Index (Wang et al., 2004). BSA stands for Bivariate Shrinkage algorithm (Şendur & Selesnick, 2002a, b).
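For reference, PSNR is computed as \(10\log_{10}(\mathrm{peak}^{2}/\mathrm{MSE})\). The short sketch below assumes 8-bit images (peak value 255) stored as lists of rows; the function name is illustrative. SSIM, which compares local means, variances and covariances, is more involved and is not sketched here.

```python
import math


def psnr(clean, estimate, peak=255.0):
    """PSNR in dB between two equally sized 2D images given as lists of rows."""
    squared_error = 0.0
    count = 0
    for row_c, row_e in zip(clean, estimate):
        for a, b in zip(row_c, row_e):
            squared_error += (a - b) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)
```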

BM3D stands for Block-matching and 3D filtering (Dabov et al., 2007), SAPCA means Shape-Adaptive Principal Component Analysis (Dabov et al., 2009), NCSR means Nonlocally Centralized Sparse Representation (Dong et al., 2013), WNNM means Weighted Nuclear Norm Minimization (Gu et al., 2014), cptTP-\(\mathbb {C}\)TF stands for Compactly Supported Tensor Product Complex Tight Framelets with Directionality (Zhuang & Han, 2019) and DAS-2 stands for Digital Affine Shear Filter Transform with 2-Layer Structure (Che & Zhuang, 2018).

2 Preliminaries: quasi-analytic directional wavelet packets

Recently we designed a family of wavelet packets (qWPs), which possess a collection of properties indispensable for image processing. A brief outline of the qWPs design and the implementation of corresponding transforms is provided in the paper Averbuch et al. (2021), which describes successful application of qWPs to image inpainting. A detailed description of the design and implementation is given in Averbuch et al. (2020). In this section we list properties of qWPs and present some illustrations.

2.1 Properties of qWPs

One-dimensional qWPs The qWPs are derived from the periodic WPs originating from orthonormal discretized polynomial splines of different orders (dWPs), which are described in Chapter 4 of Averbuch et al. (2019) (a brief outline is given in Averbuch et al., 2020). The dWPs are denoted by \(\psi ^{p}_{[m],l}\), where p is the order of the generating spline, m is the decomposition level and \(l=0,\ldots ,2^m-1\) is the index of an m-level wavelet packet. The \(2^{m}\)-sample shifts \(\left\{ \psi ^{p}_{[m],l}(\cdot -2^{m}\,k)\right\} ,\;l=0,\ldots ,2^m-1,\;k=0,\ldots ,N/2^m-1,\) of the m-level dWPs form an orthonormal basis of the space \(\Pi [N]\) of N-periodic discrete-time signals. Other orthonormal bases built from the wavelet packets \(\psi ^{p}_{[m],l}\) are, of course, possible, for example, wavelet and best bases (Coifman & Wickerhauser, 1992).

The waveforms \(\psi ^{p}_{[m],l}[k]\) are symmetric, well localized in the spatial domain and have an oscillatory structure; their DFT spectra form a refined split of the frequency domain. The shapes of the magnitude spectra tend to become rectangular as the spline order p grows. A common way to extend 1D WP transforms to multiple dimensions is the tensor-product extension. The 2D dWPs from level m are \(\psi _{[m],j ,l}^{p}[k,n]{\mathop {=}\limits ^{{\textit{def}}}}\psi _{[m],j}^{p}[k]\,\psi _{[m],l}^{p}[n]\). Their \(2^{m}\)-sample shifts along the vertical and horizontal directions form orthonormal bases of the space \(\Pi [N,N]\) of 2D signals that are N-periodic in both directions. The drawback is the lack of directionality, which can be achieved by switching to complex wavelet packets.
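The orthonormality of the 2D shifts is inherited from 1D because inner products of tensor products factorize: \(\langle u\otimes v,\,u'\otimes v'\rangle =\langle u,u'\rangle \,\langle v,v'\rangle \). The small check below uses a hypothetical stand-in, the orthonormal Haar basis of \(\mathbb{R}^4\), in place of the spline-based dWPs and their shifts; the factorization argument is the same for any orthonormal 1D system.

```python
import math
from itertools import product


def outer(u, v):
    """Tensor product: W[k][n] = u[k] * v[n]."""
    return [[a * b for b in v] for a in u]


def inner2d(A, B):
    """Inner product of two real 2D arrays of equal shape."""
    return sum(a * b for ra, rb in zip(A, B) for a, b in zip(ra, rb))


s = 1.0 / math.sqrt(2.0)
# Orthonormal Haar basis of R^4 (hypothetical stand-in for 1D dWPs and their shifts).
basis = [[0.5, 0.5, 0.5, 0.5],
         [0.5, 0.5, -0.5, -0.5],
         [s, -s, 0.0, 0.0],
         [0.0, 0.0, s, -s]]

# Gram matrix of all 16 tensor products; orthonormality means it is the identity.
tensors = [outer(u, v) for u, v in product(basis, basis)]
gram = [[inner2d(A, B) for B in tensors] for A in tensors]
```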

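For this, we start by applying the Hilbert transform (HT) to the dWPs \(\psi ^{p}_{[m],l}\), thus getting the signals \(\tau ^{p}_{[m],l}=H(\psi ^{p}_{[m],l}), \; m=1,\ldots ,M,\;l=0,\ldots ,2^m-1\). The HT annihilates the DFT values at the frequencies \(n=0\) and \(n=N/2\); a slight correction of these signals’ DFT, which restores those two values,

$$\begin{aligned} \hat{\phi }^{p}_{[m],l}[n]={\left\{ \begin{array}{ll} \hat{\tau }^{p}_{[m],l}[n], &{} n\ne 0,\,N/2,\\ \hat{\psi }^{p}_{[m],l}[n], &{} n=0,\,N/2, \end{array}\right. } \end{aligned}\tag{2.1}$$
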
provides us with a set of signals from the space \(\Pi [N]\), whose properties are similar to the properties of the dWPs \(\psi ^{p}_{[m],l}\). In particular, their shifts form orthonormal bases in \(\Pi [N]\) and the magnitude spectra of \(\phi ^{p}_{[m],l}\) coincide with the magnitude spectra of the dWPs \(\psi ^{p}_{[m],l}\). However, unlike the symmetric dWPs \(\psi ^{p}_{[m],l}\), the signals \(\phi ^{p}_{[m],l}\) are antisymmetric for all l except for \(l_{0}=0\) and \(l_{m}=2^{m}-1\). We refer to the signals \(\phi ^{p}_{[m],l}\) as the complementary orthonormal WPs (cWPs).

The sets of complex-valued WPs, which we refer to as quasi-analytic wavelet packets (qWPs), are defined as \( \Psi ^{p}_{\pm [m],l}{\mathop {=}\limits ^{{\textit{def}}}}\psi ^{p}_{[m],l} \pm i\phi ^{p}_{[m],l}, \quad m=1,\ldots ,M,\;l=0,\ldots ,2^{m}-1\), where \(\phi ^{p}_{[m],l}\) are the cWPs defined by Eq. (2.1). The qWPs \(\Psi ^{p}_{\pm [m],l}\) differ from the analytic WPs by the two values \(\pm i\,\hat{\psi }^{p}_{[m],l}[0]\) and \(\pm i\,\hat{\psi }^{p}_{[m],l}[N/2]\) added to their DFT spectra, respectively. The DFT spectra of the qWPs \( \Psi ^{p}_{+[m],l}\) are located within the positive half-band of the frequency domain, and those of the qWPs \( \Psi ^{p}_{-[m],l}\) within the negative half-band.
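The construction of the cWPs and qWPs from a real symmetric dWP can be checked numerically with a direct \(O(N^2)\) DFT. In this sketch a Gaussian-windowed cosine stands in for an actual spline-based dWP (an assumption), the discrete HT is taken to multiply the DFT by \(-i\,\mathrm{sign}(n)\) (the sign convention that places the spectrum of \(\Psi_{+}\) in the positive half-band), and the helper names are hypothetical.

```python
import cmath
import math


def dft(x):
    """Unnormalized DFT: X[n] = sum_k x[k] exp(-2*pi*i*n*k/N)."""
    N = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * n * k / N) for k in range(N)) for n in range(N)]


def idft(X):
    """Inverse DFT: x[k] = (1/N) sum_n X[n] exp(2*pi*i*n*k/N)."""
    N = len(X)
    return [sum(X[n] * cmath.exp(2j * math.pi * n * k / N) for n in range(N)) / N for k in range(N)]


def complementary(psi):
    """Hilbert transform of psi with the DFT values at n = 0 and n = N/2 restored."""
    N = len(psi)
    P = dft(psi)
    Phi = []
    for n in range(N):
        if n == 0 or n == N // 2:
            Phi.append(P[n])           # correction: keep psi_hat[0] and psi_hat[N/2]
        elif n < N // 2:
            Phi.append(-1j * P[n])     # positive frequencies: multiply by -i
        else:
            Phi.append(1j * P[n])      # negative frequencies: multiply by +i
    return [v.real for v in idft(Phi)]


N = 16
# Symmetric test signal, psi[k] = psi[N - k]: a stand-in for a spline dWP.
psi = [math.exp(-min(k, N - k) ** 2 / 4.0) * math.cos(2 * math.pi * 3 * k / N) for k in range(N)]
phi = complementary(psi)
psi_plus = [a + 1j * b for a, b in zip(psi, phi)]    # Psi_+ = psi + i*phi
psi_minus = [a - 1j * b for a, b in zip(psi, phi)]   # Psi_- = psi - i*phi
```

With these definitions, \(|\hat{\phi}[n]|=|\hat{\psi}[n]|\) for every n, the spectrum of `psi_plus` vanishes on the negative half-band (and that of `psi_minus` on the positive half-band), and removing the two restored DFT values from `phi` leaves an antisymmetric signal.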

Figure 1 displays the signals \({\psi }^{9}_{[3],l}\) and \({\phi }^{9}_{[3],l},\;l=0,\ldots ,7\), derived from ninth-order splines at the third decomposition level, together with their magnitude spectra (right half-band), which coincide with each other. Adding \(\hat{\psi }^{9}_{[3],l}[0]\) and \(\hat{\psi }^{9}_{[3],l}[N/2]\) to the spectra of \({\phi }^{9}_{[3],l},\;l=0,7,\) distorts their antisymmetry. These WPs provide a collection of diverse symmetric and antisymmetric, well-localized waveforms, which range from smooth wavelets for \(l=0,1\) to fast oscillating transients for \(l=5,6,7\). Thus, this collection is well suited to capturing smooth as well as oscillating local patterns in signals.

In the 2D case, these valuable properties of the spline-based wavelet packets are complemented by the directionality of the tensor-product waveforms.

Two-dimensional qWPs Similarly to the 2D dWPs \(\psi _{[m],j ,l}^{p}[k,n]\), the 2D cWPs are defined as the tensor products of 1D WPs such that \( \phi _{[m],j ,l}^{p}[k,n]{\mathop {=}\limits ^{{\textit{def}}}}\phi _{[m],j}^{p}[k]\,\phi _{[m], l}^{p}[n]. \) The \(2^{m}\)-sample shifts of the WPs \(\left\{ \phi _{[m],j ,l}^{p}\right\} ,\;j , l=0,\ldots ,2^{m}-1,\) in both directions form an orthonormal basis for the space \(\Pi [N,N]\) of arrays that are N-periodic in both directions.

2D qWPs and their spectra The 2D dWPs \(\left\{ \psi _{[m],j ,l}^{p}\right\} \) as well as the cWPs \(\left\{ \phi _{[m],j ,l}^{p}\right\} \) lack the directionality property which is needed in many applications that process 2D data. However, real-valued 2D wavelet packets oriented in multiple directions can be derived from tensor products of complex quasi-analytic qWPs \(\Psi _{\pm [m],\rho }^{p}\). The complex 2D qWPs are defined as follows:

where \( m=1,\ldots ,M,\;j ,l=0,\ldots ,2^{m}-1,\) and \(k ,n=0,\ldots ,N-1\). The real parts of these 2D qWPs are

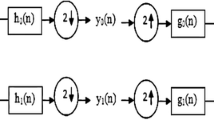

The diagram in Fig. 2 illustrates the design of the qWPs. The DFT spectra of the 2D qWPs \(\Psi _{++[m],j ,l}^{p},\;j ,l=0,\ldots ,2^{m}-1,\) are tensor products of the one-sided spectra of the 1D qWPs, \(\hat{ \Psi }_{++[m],j ,l}^{p}[\kappa ,\nu ] =\hat{ \Psi }_{+[m],j}^{p}[\kappa ]\,\hat{\Psi }_{+[m], l}^{p}[\nu ]\), and, as such, they fill the quadrant \(\mathbf {q}_{0} \) of the frequency domain, while the spectra of \(\Psi _{+-[m],j ,l}^{p},\;j ,l=0,\ldots ,2^{m}-1,\) fill the quadrant \(\mathbf {q}_{1}\) (see Fig. 2). Figure 3 displays the magnitude spectra of the ninth-order 2D qWPs \(\Psi _{++[2],j ,l}^{9}\) and \(\Psi _{+-[2],j ,l}^{9}\) from the second decomposition level. It shows that the DFT spectra of the qWPs \(\Psi _{+\pm [m],j ,l}^{9}\) effectively occupy relatively small squares in the frequency domain. For deeper decomposition levels, the sizes of the corresponding squares decrease geometrically. Such a configuration of the spectra leads to the directionality of the real-valued 2D WPs \( \theta _{\pm [m],j ,l}^{p}\), which is discussed in Averbuch et al. (2020). It is established there that if the spectrum of a WP \(\Psi _{+\pm [m],j ,l}^{p}\) occupies a square whose center lies at the point \([\kappa _{0},\nu _{0}]\), then the respective real-valued WP \( \theta _{\pm [m],j ,l}^{p}\) is approximately represented by \( \theta _{\pm [m],j ,l}^{p}[k,n] \approx {\cos \frac{2\pi (\kappa _{0}k+\nu _{0}n)}{N}}\,\underline{\theta }[k,n] , \) where \(\underline{\theta }[k,n]\) is a spatially localized low-frequency waveform that has no directionality. The 2D signal \(\cos \frac{2\pi (\kappa _{0}k+\nu _{0}n)}{N}\), in turn, is constant along the lines \(\kappa _{0}k+\nu _{0}n=\mathrm{const}\); hence it is oriented in the direction D, which is orthogonal to the vector \(\vec {V}=\kappa _{0}\vec {i}+\nu _{0}\vec {j}\). Therefore, the WP \(\theta _{\pm [m],j ,l}^{p}\) can be regarded as a directional cosine modulated by the localized low-frequency signal \(\underline{\theta }\). The cosine frequencies in the vertical and horizontal directions are determined by the indices j and l, respectively, of the WP \( \theta _{\pm [m],j ,l}^{p}\): the larger the index, the higher the frequency in the respective direction. The situation is illustrated in Fig. 4. The imaginary parts of the qWPs \(\Psi _{+\pm [m],j ,l}^{p}\) have a similar structure.
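The link between the location of a spectrum and the orientation of a waveform can be checked in reverse: build a cosine with frequency vector \([\kappa_0,\nu_0]\), modulate it by a localized, non-directional envelope, and look at its DFT. In this sketch a Gaussian stands in for the envelope \(\underline{\theta }\) (an assumption; the true envelope depends on p, m, j and l), \(\kappa_0=3,\ \nu_0=5\) are arbitrary, and the helper names are hypothetical.

```python
import cmath
import math


def dft2(a):
    """Unnormalized 2D DFT of an N x N array, computed separably."""
    N = len(a)
    w = [[cmath.exp(-2j * math.pi * p * k / N) for k in range(N)] for p in range(N)]
    # Transform along the horizontal index n (within each row) ...
    rows = [[sum(a[k][n] * w[q][n] for n in range(N)) for q in range(N)] for k in range(N)]
    # ... then along the vertical index k.
    return [[sum(rows[k][q] * w[p][k] for k in range(N)) for q in range(N)] for p in range(N)]


def modulated_cosine(N, kappa0, nu0, width):
    """cos(2*pi*(kappa0*k + nu0*n)/N) times a centred Gaussian envelope."""
    c = N // 2
    return [[math.cos(2 * math.pi * (kappa0 * k + nu0 * n) / N)
             * math.exp(-((k - c) ** 2 + (n - c) ** 2) / (2.0 * width ** 2))
             for n in range(N)] for k in range(N)]


N = 16
theta = modulated_cosine(N, kappa0=3, nu0=5, width=2.0)
mag = [[abs(v) for v in row] for row in dft2(theta)]
peak = max(((p, q) for p in range(N) for q in range(N)), key=lambda t: mag[t[0]][t[1]])
```

The magnitude spectrum peaks at \(\pm[\kappa_0,\nu_0]\) (that is, at \([3,5]\) or \([13,11]\) modulo 16), and nearly all of its energy lies in the pair of quadrants where both frequency indices have the same sign, the region occupied by the spectra of \(\theta_{+}\).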

Figure 5 displays WPs \(\theta _{+[2],j ,l}^{9},\;j,l=0,1,2,3,\) from the second decomposition level and their magnitude spectra.

Figure 6 displays the WPs \(\theta _{-[2],j ,l}^{9},\;j,l=0,1,2,3,\) from the second decomposition level and their magnitude spectra.

Figure 7 displays WPs \(\theta _{+[3],j ,l}^{9}\) and \(\theta _{-[3],j ,l}^{9},\;j,l=0,1,2,3,\) from the third decomposition level.

Remark 2.1

Note that all the WPs \(\theta _{+[m],j ,l}^{p}\) whose spectra are located along a vector \(\vec {V}\) have approximately the same orientation, as seen in Figs. 5, 6 and 7. Consequently, the number of different orientations of the m-th level WPs is smaller than the number of WPs, which is \(2\cdot 4^{m}\). For example, all the “diagonal” qWPs \(\left\{ \theta _{\pm [m],j ,j}^{p}\right\} ,\;j=0,\ldots ,2^{m}-1,\) oscillate with different frequencies in the directions of either \(135^{\circ }\) (for \( \theta _{+}\)) or \(45^{\circ }\) (for \( \theta _{-}\)). The numbers of distinct orientations are given in Table 1. The Matlab code for calculating these numbers is contained in the “Appendix”.
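Remark 2.1 can be made concrete with a short counting sketch. It is not the Matlab code from the Appendix: it assumes an idealized geometry in which the spectrum of the WP with indices (j, l) is centred at \((2j+1,\,2l+1)\) (in units of half a sub-band width) within its quadrant, consistent with the statement that larger indices mean higher frequencies, and it treats WPs whose spectral centres lie on one ray as having the same orientation. Under these assumptions its counts are only indicative and should be checked against Table 1.

```python
from math import gcd


def count_orientations(m):
    """Count distinct orientations among the 2 * 4**m WPs theta_{+/-[m],j,l}.

    Idealized geometry (assumption): the spectrum of theta_{+[m],j,l} is centred
    at (2j+1, 2l+1) in units of half a sub-band width, and that of
    theta_{-[m],j,l} at (2j+1, -(2l+1)); centres on one ray share an orientation.
    """
    directions = set()
    for sign in (1, -1):
        for j in range(2 ** m):
            for l in range(2 ** m):
                a, b = 2 * j + 1, 2 * l + 1
                g = gcd(a, b)
                directions.add((a // g, sign * (b // g)))  # reduced ray direction
    return len(directions)
```

Under this model, the first three levels give 6, 26 and 98 orientations, respectively, each fewer than the corresponding number \(2\cdot 4^{m}\) of WPs.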

2.2 Outline of the implementation scheme for 2D qWP transforms

The spectra of the 2D qWPs \(\left\{ \Psi _{++[m],j ,l}^{p}\right\} ,\;j ,l=0,\ldots ,2^{m}-1\), fill the quadrant \(\mathbf {q}_{0}\) of the frequency domain (see Fig. 2), while the spectra of the 2D qWPs \(\left\{ \Psi _{+-[m],j ,l}^{p}\right\} \) fill the quadrant \(\mathbf {q}_{1}\). Consequently, the spectra of the real-valued 2D WPs \(\left\{ \theta _{+[m],j ,l}^{p}\right\} ,\;j ,l=0,\ldots ,2^{m}-1\), and \(\left\{ \theta _{-[m],j ,l}^{p}\right\} \) fill the quadrant pairs \(\mathbf {q}_{+}=\mathbf {q}_{0}\bigcup \mathbf {q}_{2}\) and \(\mathbf {q}_{-}=\mathbf {q}_{1}\bigcup \mathbf {q}_{3}\), respectively.

For this reason, the WPs \(\left\{ \theta _{+[m],j ,l}^{p}\right\} \) and their shifts cannot serve as a basis of the signal space \(\Pi [N,N]\): their linear combinations only produce signals whose spectra lie in \(\mathbf {q}_{+}\). The same is true for the WPs \(\left\{ \theta _{-[m],j ,l}^{p}\right\} \). However, the WPs \(\left\{ \theta _{\pm [m],j ,l}^{p}\right\} \) taken together with their shifts provide frames of the space \(\Pi [N,N]\).

The transforms are implemented in the frequency domain using the modulation matrices of the filter banks, which are built from the corresponding wavelet packets. It is important to mention that the filter banks \(\mathbf {Q}_{+}\) and \(\mathbf {Q}_{-}\) used at the first decomposition level have different structures for the transforms with the “positive” qWPs \(\Psi _{+[m],l}^{p}\) and the “negative” qWPs \(\Psi _{-[m],l}^{p}\), respectively. However, the transforms from the first to the second and further decomposition levels are executed using the same filter banks \(\mathbf {H}_{m}\) for the “positive” and “negative” qWPs. This makes a parallel implementation of the transforms possible.

The one-level 2D qWP transforms of a signal \(\mathbf {X}=\left\{ X[k,n] \right\} \in \Pi [N,N]\) are implemented by a tensor-product scheme. To be specific, for the transform with \(\Psi ^{p}_{++[1]}\), the 1D transform of the columns of the signal \(\mathbf {X}\) is executed using the filter bank \(\mathbf {Q}_{+}\), followed by the 1D transform of the rows of the produced coefficient arrays using the same filter bank \(\mathbf {Q}_{+}\). These operations result in the transform coefficient array \(\mathbf {Z}_{+[1]}=\bigcup _{j,l=0}^{1}\mathbf {Z}_{+[1]}^{j,l}\), which comprises four blocks.

The transform with \(\Psi ^{p}_{+-[1]}\) is implemented by successively applying the filter banks \(\mathbf {Q}_{+}\) and \(\mathbf {Q}_{-}\) to the columns of the signal \(\mathbf {X}\) and to the rows of the produced coefficient arrays, respectively. This results in the coefficient array \(\mathbf {Z}_{-[1]}=\bigcup _{j,l=0}^{1}\mathbf {Z}_{-[1]}^{j,l}\).

Further transforms starting from the arrays \(\mathbf {Z}_{+[1]}\) and \(\mathbf {Z}_{-[1]}\) produce two sets of coefficients, \(\left\{ \mathbf {Z}_{+[m]}=\bigcup _{j,l=0}^{2^{m}-1}\mathbf {Z}_{+[m]}^{j,l}\right\} \) and \(\left\{ \mathbf {Z}_{-[m]}=\bigcup _{j,l=0}^{2^{m}-1}\mathbf {Z}_{-[m]}^{j,l}\right\} ,\;m=2,\ldots ,M\). These transforms are implemented by applying the same filter banks \(\mathbf {H}_{m}, \;m=2,\ldots ,M,\) to the rows and columns of the “positive” and “negative” coefficient arrays. The coefficients from level m comprise \(4^{m}\) “positive” blocks of coefficients \(\left\{ \mathbf {Z}_{+[m]}^{j,l}\right\} ,\;j,l=0,\ldots ,2^{m}-1,\) and the same number of “negative” blocks \(\left\{ \mathbf {Z}_{-[m]}^{j,l}\right\} \).
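The column-then-row bookkeeping of the one-level transform can be illustrated with any two-channel orthonormal filter bank. In the sketch below, a real Haar filter bank stands in for the complex spline-based banks \(\mathbf{Q}_{\pm}\) and \(\mathbf{H}_{m}\), which the paper implements in the frequency domain; this substitution and all function names are assumptions, and only the way the four blocks \(\mathbf{Z}^{j,l},\;j,l=0,1\), arise is meant to carry over.

```python
import math

S = 1.0 / math.sqrt(2.0)


def analysis_1d(x):
    """One level of a two-channel orthonormal filter bank (Haar stand-in)."""
    low = [S * (x[2 * k] + x[2 * k + 1]) for k in range(len(x) // 2)]
    high = [S * (x[2 * k] - x[2 * k + 1]) for k in range(len(x) // 2)]
    return low, high


def synthesis_1d(low, high):
    """Inverse of analysis_1d."""
    x = []
    for a, d in zip(low, high):
        x.extend([S * (a + d), S * (a - d)])
    return x


def transpose(A):
    return [list(r) for r in zip(*A)]


def analysis_2d(X):
    """Transform columns first, then rows; return the blocks Z[(j, l)], j, l = 0, 1."""
    col_bands = [analysis_1d(col) for col in transpose(X)]  # per column: (low, high)
    blocks = {}
    for j in (0, 1):
        band = transpose([cb[j] for cb in col_bands])  # (N/2) x N sub-band, as rows
        row_bands = [analysis_1d(row) for row in band]
        for l in (0, 1):
            blocks[(j, l)] = [rb[l] for rb in row_bands]
    return blocks


def synthesis_2d(blocks):
    """Invert analysis_2d: undo the row transforms, then the column transforms."""
    bands = []
    for j in (0, 1):
        rows = [synthesis_1d(lo, hi) for lo, hi in zip(blocks[(j, 0)], blocks[(j, 1)])]
        bands.append(transpose(rows))  # N columns of length N/2
    cols = [synthesis_1d(lo, hi) for lo, hi in zip(bands[0], bands[1])]
    return transpose(cols)
```

For an \(8\times 8\) input, `analysis_2d` returns four \(4\times 4\) blocks and `synthesis_2d` recovers the input; deeper levels would apply the same column-then-row step to each block.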

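The coefficients of a block are the inner products of the signal \(\mathbf {X}=\left\{ X[k,n] \right\} \in \Pi [N,N]\) with the shifts of the corresponding wavelet packet:

$$\begin{aligned} Z_{\pm [m]}^{j,l}[k,n]=\left\langle \mathbf {X},\,\Psi ^{p}_{+\pm [m],j,l}[\cdot -2^{m}k,\,\cdot -2^{m}n]\right\rangle ,\quad k,n=0,\ldots ,N/2^{m}-1. \end{aligned}$$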

The inverse transforms are implemented accordingly. Prior to the reconstruction, some structures, possibly different, are defined in the sets \(\left\{ \mathbf {Z}_{+[m]}^{j,l}\right\} \) and \(\left\{ \mathbf {Z}_{-[m]}^{j,l}\right\} ,\;m=1,\ldots ,M\) (for example, 2D wavelet, best basis or single-level structures), and some manipulations of the coefficients (for example, thresholding, shrinkage or \(l_1\) minimization) are executed. The reconstruction produces two complex arrays \( \mathbf {X}_{+}\) and \( \mathbf {X}_{-}\). The signal \(\mathbf {X}\) is restored by \({\tilde{\mathbf {X}}}=\mathfrak {Re}(\mathbf {X}_{+}+\mathbf {X}_{-})/8\).

Figure 8 illustrates the restoration of the image “Fingerprint” by the 2D signals \(\mathfrak {Re}(\mathbf {X}_{\pm })\). The signal \(\mathfrak {Re}(\mathbf {X}_{-})\) captures oscillations oriented to the north-east, while \(\mathfrak {Re}(\mathbf {X}_{+})\) captures oscillations oriented to the north-west. The signal \(\tilde{\mathbf {X}}=\mathfrak {Re}(\mathbf {X}_{+}+\mathbf {X}_{-})/8\) restores the image perfectly, achieving \(\mathrm{PSNR}=312.3538\) dB.

3 Image denoising

In this section, we describe the application of the directional qWP transforms presented in Sect. 2 to the restoration of an image \(\mathbf {X}\) from the data \({\check{\mathbf {X}}}=\mathbf {X}+\mathbf {E}\), where \(\mathbf {E}\) is zero-mean Gaussian noise with standard deviation \(\sigma \).

3.1 Denoising scheme for 2D qWPs

The degraded image \({\check{\mathbf {X}}}\) is decomposed into two sets, \(\left\{ {\check{\mathbf {Z}}}_{+[m]}^{j,l}\right\} \) and \(\left\{ {\check{\mathbf {Z}}}_{-[m]}^{j,l}\right\} ,\;m=1,\ldots ,M,\;j,l=0,\ldots ,2^{m}-1,\) of qWP transform coefficients; then a version of the Bivariate Shrinkage algorithm (BSA) (Şendur & Selesnick, 2002a, b) is applied, and the image \(\tilde{\mathbf {X}}\approx \mathbf {X}\) is restored from the shrunken coefficients. The restoration is executed separately from the sets of coefficients belonging to several decomposition levels, and the results are averaged with some weights.

3.1.1 Image restoration from single-level transform coefficients

Consider the estimation of an image \(\mathbf {X}\in \Pi [N,N]\) from the fourth-level transform coefficients of the degraded array \({\check{\mathbf {X}}}\) of size \(N\times N\).

The denoising algorithm, which we refer to as qWPdn, is implemented by the following steps:

1. To eliminate boundary effects, the degraded image \({\check{\mathbf {X}}}\) is symmetrically extended to an image \({\check{\mathbf {X}}}_{T}\) of size \(N_{1}\times N_{1}\), where \(N_{1}=N+2T\). Typically, either \(T=N/4\) or \(T=N/8\).

2. The Bivariate Shrinkage (BSA) utilizes the interscale dependency of the transform coefficients: each fourth-level coefficient is shrunk jointly with the fifth-level coefficients derived from it. Therefore, the direct 2D transforms of the image \({\check{\mathbf {X}}}_{T}\) with the complex qWPs \(\Psi ^{p}_{++}\) and \(\Psi ^{p}_{+-}\) are executed down to the fifth decomposition level. As a result, two sets of qWP transform coefficients, \({\check{\mathbf {Z}}}_{+[m]}^{j,l}=\left\{ {\check{Z}}_{+[m]}^{j,l}[k,n]\right\} \) and \({\check{\mathbf {Z}}}_{-[m]}^{j,l}=\left\{ {\check{Z}}_{-[m]}^{j,l}[k,n]\right\} ,\;m=1,\ldots ,5,\;j,l=0,\ldots ,2^{m}-1,\; k,n=0,\ldots ,N_{1}/2^{m}-1,\) are produced.

3. The noise standard deviation is estimated by \(\tilde{\sigma }_{e}=\frac{\mathrm {median}({\textit{abs}}({\check{\mathbf {Z}}}_{+[1]}^{1,1}[k,n]))}{0.6745}.\)

4. Let \(\check{c}_{4}[k,n]{\mathop {=}\limits ^{{\textit{def}}}}\check{Z}_{+[4]}^{j,l}[k,n]\) denote a coefficient from a block \({\check{\mathbf {Z}}}_{+[4]}^{j,l}\) at the fourth decomposition level. The following operations are applied to the coefficient \(\check{c}_{4}[k,n]\):

(a) The averaged variance \(\bar{\sigma }_{c}[k,n]^{2}=\frac{1}{W_{4}^{2}}\sum _{\kappa ,\nu =-W_{4}/2}^{W_{4}/2-1}\check{c}_{4}[k+\kappa ,n+\nu ]^{2}\) is calculated. The integer \(W_{4}\) determines the size of the neighborhood of \(\check{c}_{4}[k,n]\).

(b) The marginal variance of \(\check{c}_{4}[k,n]\) is estimated by \(\tilde{\sigma }[k,n]^{2}=(\bar{\sigma }_{c}[k,n]^{2}-\tilde{\sigma }_{e}^{2})_{+}.\)Footnote 2

(c) To estimate the clean transform coefficients of the fourth decomposition level, the coefficients of the fifth level are utilized. The size of the coefficient block \({\check{\mathbf {Z}}}_{+[4]}^{j,l}\) is \(N_{1}/16\times N_{1}/16\). The coefficients of that block are related to the qWP \(\Psi ^{p}_{++[4],j,l}\), whose spectrum occupies, approximately, the square \(\mathbf {S}_{+[4]}^{j,l}\) of size \(N_{1}/32\times N_{1}/32 \) within the quadrant \(\mathbf {q}_{0}\) (see Fig. 2). The location of the spectrum determines the directionality of the waveform \(\Psi ^{p}_{++[4],j,l}\). On the other hand, the four coefficient blocks \(\left\{ {\check{\mathbf {Z}}}_{+[5]}^{2j+\iota ,2l+\lambda }\right\} ,\;\iota ,\lambda =0,1,\) of size \(N_{1}/32\times N_{1}/32 \) are derived by filtering the coefficients of the block \({\check{\mathbf {Z}}}_{+[4]}^{j,l}\), followed by downsampling. The coefficients of those blocks are related to the qWPs \(\Psi ^{p}_{++[5],2j+\iota ,2l+\lambda }\), whose spectra occupy, approximately, the squares \(\mathbf {S}_{+[5]}^{2j+\iota ,2l+\lambda }\) of size \(N_{1}/64\times N_{1}/64 \), which fill the square \(\mathbf {S}_{+[4]}^{j,l}\). Therefore, the orientations of the waveforms \(\Psi ^{p}_{++[5],2j+\iota ,2l+\lambda }\) are close to the orientation of \(\Psi ^{p}_{++[4],j,l}\). Keeping this in mind, we form the joint fifth-level array \(\mathbf {c}_{5}^{j,l}\) of size \(N_{1}/16\times N_{1}/16 \) by interleaving the coefficients of the arrays \(\left\{ {\check{\mathbf {Z}}}_{+[5]}^{2j+\iota ,2l+\lambda }\right\} \). To be specific, the joint array \(\mathbf {c}_{5}^{j,l}\) consists of the quadruples

$$\begin{aligned} \mathbf {c}_{5}^{j,l}=\left\{ \left[ \begin{array}{cc} \check{Z}_{+[5]}^{2j,2l}[\kappa ,\nu ] &{} \check{Z}_{+[5]}^{2j,2l+1}[\kappa ,\nu ] \\ \check{Z}_{+[5]}^{2j+1,2l}[\kappa ,\nu ] &{} \check{Z}_{+[5]}^{2j+1,2l+1}[\kappa ,\nu ] \\ \end{array} \right] \right\} ,\quad \kappa ,\nu =0,\ldots ,N_{1}/32-1. \end{aligned}$$

(d) Let \(\breve{c}_{5}[k,n]\) denote a coefficient of the joint array \(\mathbf {c}_{5}^{j,l}\). Then the transform coefficient \(Z^{j,l}_{+[4]}[k,n]\) of the fourth decomposition level is estimated by bivariate shrinkage of the coefficient \(\check{Z}^{j,l}_{+[4]}[k,n]\):

$$\begin{aligned} Z^{j,l}_{+[4]}[k,n]\approx \tilde{Z}^{j,l}_{+[4]}[k,n]= \frac{\left( \sqrt{\check{c}_{4}[k,n]^{2}+\breve{c}_{5}[k,n]^{2}}- \frac{\sqrt{3}\,\tilde{\sigma }_{e}^{2}}{\tilde{\sigma }[k,n]}\right) _{+}}{\sqrt{\check{c}_{4}[k,n]^{2}+\breve{c}_{5}[k,n]^{2}}}\,\check{c}_{4}[k,n]. \end{aligned}$$

5. As a result of the above operations, the fourth-level coefficient array \({\tilde{\mathbf {Z}}}_{+[4]}=\left\{ {\tilde{\mathbf {Z}}}_{+[4],j,l}\right\} ,\;j,l=0,\ldots ,15\), is estimated, where \({\tilde{\mathbf {Z}}}_{+[4],j,l}=\left\{ \tilde{Z}^{j,l}_{+[4]}[k,n] \right\} ,\;k,n=0,\ldots ,N_{1}/16-1\).

6. The inverse qWP transform is applied to the coefficient array \({\tilde{\mathbf {Z}}}_{+[4]}\), and the result is reduced to the original image size \(N\times N\). This yields the sub-image \({\tilde{\mathbf {X}}}_{+}^{4}\).

7. The same operations are applied to \(\check{\mathbf {Z}}^{j,l}_{-[m]},\;m=4,5\), which results in the sub-image \({\tilde{\mathbf {X}}}_{-}^{4}\).

8. The clean image is estimated by \(\mathbf {X}\approx {\tilde{\mathbf {X}}}^{4}=\frac{\mathfrak {Re}({\tilde{\mathbf {X}}}_{+}^{4}+{\tilde{\mathbf {X}}}_{-}^{4})}{8}.\)
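The interleaving that forms the joint array \(\mathbf {c}_{5}^{j,l}\) and the bivariate shrinkage step can be illustrated with the following Python/NumPy sketch. It is not the authors' Matlab implementation: the function names are hypothetical, the coefficient blocks are assumed to be already available as arrays, the local signal STD \(\tilde{\sigma }[k,n]\) is assumed to be estimated as in Şendur and Selesnick (2002b) from a square window whose side `win` stands in for the parameters \(W_{m}\) of Remark 3.3, and \(|\cdot |^{2}\) replaces the squares of the shrinkage formula above so that complex-valued coefficients are also accepted (for real coefficients the two coincide).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def interleave_children(z00, z01, z10, z11):
    """Joint array c_5^{j,l}: the quadruple of child coefficients at (kappa, nu)
    fills the 2x2 cell whose top-left corner is (2*kappa, 2*nu)."""
    h, w = z00.shape
    c = np.empty((2 * h, 2 * w), dtype=np.result_type(z00, z01, z10, z11))
    c[0::2, 0::2] = z00  # child block (2j,   2l)
    c[0::2, 1::2] = z01  # child block (2j,   2l+1)
    c[1::2, 0::2] = z10  # child block (2j+1, 2l)
    c[1::2, 1::2] = z11  # child block (2j+1, 2l+1)
    return c


def local_signal_std(c, sigma_e, win=7):
    """Assumed estimate sigma[k,n] = sqrt(max(local mean of |c|^2 - sigma_e^2, 0)),
    the mean taken over a win x win window (win odd, value hypothetical)."""
    r = win // 2
    padded = np.pad(np.abs(c) ** 2, r, mode="reflect")
    local_mean = sliding_window_view(padded, (win, win)).mean(axis=(-2, -1))
    return np.sqrt(np.maximum(local_mean - sigma_e ** 2, 0.0))


def bivariate_shrink(c4, c5, sigma_e, sigma_local):
    """Shrink c4 using its interleaved fifth-level partner c5."""
    mag = np.sqrt(np.abs(c4) ** 2 + np.abs(c5) ** 2)
    # threshold sqrt(3) * sigma_e^2 / sigma[k,n]; infinite where sigma[k,n] == 0
    thresh = np.full(mag.shape, np.inf)
    np.divide(np.sqrt(3.0) * sigma_e ** 2, sigma_local, out=thresh, where=sigma_local > 0)
    # (mag - thresh)_+ / mag; where mag == 0, c4 == 0 as well, so divide by 1 there
    gain = np.maximum(mag - thresh, 0.0) / np.where(mag > 0, mag, 1.0)
    return gain * c4
```

For instance, applying `interleave_children` to four 4×4 child blocks returns an 8×8 array whose entry `[2*kappa + 1, 2*nu]` comes from the block with indices \((2j+1,2l)\). Shrinking a single coefficient \(\check{c}_{4}=3\) with partner \(\breve{c}_{5}=4\), \(\tilde{\sigma }_{e}=1\) and \(\tilde{\sigma }=\sqrt{3}\) gives the threshold 1 and the estimate \(3\cdot (5-1)/5=2.4\).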

3.1.2 Image restoration from several decomposition levels

A more stable estimate of the image \(\mathbf {X}\) is obtained as a weighted average of several single-level estimates \(\left\{ {\tilde{\mathbf {X}}}^{m}\right\} \). In most cases, the estimates from the second, third and fourth levels are combined, so that \(m=2,3,4\).

The estimate \({\tilde{\mathbf {X}}}^{3}\) is derived from the third-level coefficients \({\check{\mathbf {Z}}}_{\pm [3]}^{j,l}\). The fourth-level coefficients needed for the Bivariate Shrinkage of the coefficients \({\check{\mathbf {Z}}}_{\pm [3]}^{j,l}\) are taken from the “cleaned” arrays \({\tilde{\mathbf {Z}}}_{\pm [4],j,l}\) rather than from the “raw” ones \({\check{\mathbf {Z}}}_{\pm [4],j,l}\).

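Similarly, the estimate \({\tilde{\mathbf {X}}}^{2}\) is derived from the coefficient arrays \({\check{\mathbf {Z}}}_{\pm [2]}^{j,l}\) and \({\tilde{\mathbf {Z}}}_{\pm [3]}^{j,l}\). The final operation is the weighted averaging

$$\begin{aligned} {\tilde{\mathbf {X}}}=\frac{\alpha _{2}\,{\tilde{\mathbf {X}}}^{2}+\alpha _{3}\,{\tilde{\mathbf {X}}}^{3}+\alpha _{4}\,{\tilde{\mathbf {X}}}^{4}}{\alpha _{2}+\alpha _{3}+\alpha _{4}}. \end{aligned}\tag{3.1}$$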
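The coarse-to-fine order of Sect. 3.1.2 and the final weighted averaging of the single-level estimates can be summarized in a short Python/NumPy sketch. The function names are hypothetical; the single-level estimates \({\tilde{\mathbf {X}}}^{m}\) are assumed to be already computed, and the averaging is assumed to be normalized by the sum of the weights \(\alpha _{m}\).

```python
import numpy as np


def parent_sources(levels=(2, 3, 4)):
    """For each restored level m, where the level-(m+1) coefficients used in
    its bivariate shrinkage come from."""
    deepest = max(levels)
    # only the deepest restored level is shrunk against raw coefficients;
    # every shallower level uses the already cleaned arrays one level down
    return {m: "raw" if m == deepest else "cleaned" for m in levels}


def combine_levels(estimates, weights):
    """Weighted average of single-level estimates X^m with weights alpha_m.
    Dividing by the sum of the weights is an assumption of this sketch."""
    if set(estimates) != set(weights):
        raise ValueError("every level needs exactly one weight")
    total = float(sum(weights[m] for m in estimates))
    acc = sum(weights[m] * np.asarray(estimates[m], dtype=float) for m in estimates)
    return acc / total
```

With the levels of Remark 3.2, `parent_sources((3, 4, 5))` marks level 5 as the one shrunk against raw (sixth-level) coefficients, which is why the decomposition must then go down to the sixth level.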

Remark 3.1

A Matlab implementation of all the operations needed to transform the degraded array \({\check{\mathbf {X}}}\) of size \(512\times 512\) into the estimate \( {\tilde{\mathbf {X}}}\) given by Eq. (3.1) takes 1 s. Note that the noise STD is not part of the input; it is estimated as described in Item 3.

Remark 3.2

In some cases, restoration from the third, fourth and fifth levels is preferable. In that case, the degraded array \({\check{\mathbf {X}}}_{T}\) is decomposed down to the sixth level.

Remark 3.3

The algorithm has a number of free parameters that allow flexible adaptation to the image being processed. These parameters are the order “p” of the generating spline; the integers \(W_{4}\), \(W_{3}\) and \(W_{2}\), which determine the sizes of the neighborhoods used to calculate the averaged variances; and the weights \(\alpha _{2}\), \(\alpha _{3}\) and \(\alpha _{4}\).

Remark 3.4

Fragments of the Matlab functions denoising_dwt.m and bishrink.m from the websites http://eeweb.poly.edu/iselesni/WaveletSoftware/denoising_dwt.html and http://eeweb.poly.edu/iselesni/WaveletSoftware/denoise2.html, respectively, were used as templates when writing our own denoising software.

3.2 qWPdn–BM3D: hybrid algorithm

Equation (2.2) implies that the coefficients of the qWP transforms reflect the correlation of the image being processed with a collection of waveforms that are well localized in the spatial domain and oscillate in multiple directions with multiple frequencies. For this reason, these transforms are well suited for capturing edges and texture patterns oriented in different directions. Experiments with qWPdn image denoising demonstrate its ability to restore edges and texture details even in severely degraded images. In most of the conducted experiments, qWPdn provides better resolution of edges and fine structures than most state-of-the-art algorithms, including BM3D, which is reflected in higher SSIM values. On the other hand, BM3D proved superior in noise suppression, especially in smooth regions of images, thus producing high PSNR values in almost all the experiments. However, some over-smoothing of edges and fine texture persisted with BM3D. This was especially the case for severely degraded images.

In this section, we propose to combine the qWPdn and BM3D algorithms in order to benefit from the strengths of both.

Denote by \(\mathbf {W}\) and \(\mathbf {B}\) the operators that apply the qWPdn algorithm (described in Sect. 3) and the BM3D denoising algorithm, respectively, to a degraded array \(\mathbf {A}\): \(\mathbf {W}\,\mathbf {A}=\mathbf {D}_{W}\) and \(\mathbf {B}\,\mathbf {A}=\mathbf {D}_{B}\).

Assume that we have an array \(\check{\mathbf {X}}^{0}=\mathbf {X}+\mathbf {E}\), which represents an image \(\mathbf {X}\) degraded by additive Gaussian noise \(\mathbf {E}\) with STD \(\sigma .\) Denoising is carried out by the following boosting scheme.

First step. Apply the operators \(\mathbf {W}\) and \(\mathbf {B}\) to the input array \(\check{\mathbf {X}}^{0}\): \(\mathbf {Y}_{W}^{1}=\mathbf {W}\,\check{\mathbf {X}}^{0}\) and \(\mathbf {Y}_{B}^{1}=\mathbf {B}\,\check{\mathbf {X}}^{0}\).

Iterations \(i=1,\ldots ,I-1\):

1. Form the new input arrays \(\check{\mathbf {X}}_{W}^{i}=\frac{\check{\mathbf {X}}^{0}+\mathbf {Y}_{W}^{i}}{2},\quad \check{\mathbf {X}}_{B}^{i}=\frac{\check{\mathbf {X}}^{0}+\mathbf {Y}_{B}^{i}}{2}.\)

2. Apply the operators \(\mathbf {W}\) and \(\mathbf {B}\) crosswise to the new input arrays: \(\mathbf {Y}_{W}^{i+1}=\mathbf {W}\,\check{\mathbf {X}}_{B}^{i}, \quad \mathbf {Y}_{B}^{i+1}=\mathbf {B}\,\check{\mathbf {X}}_{W}^{i}. \)

Estimates of the clean image. Three estimates are used:

1. The updated BM3D estimate \(\tilde{\mathbf {X}}_{uB}{\mathop {=}\limits ^{{\textit{def}}}}\mathbf {Y}_{B}^{I}\) (upBM3D).

2. The updated qWPdn estimate \(\tilde{\mathbf {X}}_{uW}{\mathop {=}\limits ^{{\textit{def}}}}\mathbf {Y}_{W}^{I}\) (upqWP).

3. The hybrid estimate \(\tilde{\mathbf {X}}_{H}{\mathop {=}\limits ^{{\textit{def}}}}(\mathbf {Y}_{B}^{I}+\mathbf {Y}_{W}^{I})/2\) (hybrid).
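The cross-boosting iteration is compact enough to state as code. In the Python sketch below, `W` and `B` are arbitrary callables standing in for the qWPdn and BM3D operators; neither algorithm is implemented here, and the function name `cross_boost` is hypothetical. The sketch only shows how the inputs and outputs of the two denoisers are exchanged and which arrays form the three estimates.

```python
from typing import Callable, Dict

import numpy as np

Denoiser = Callable[[np.ndarray], np.ndarray]


def cross_boost(x0: np.ndarray, W: Denoiser, B: Denoiser, n_iter: int) -> Dict[str, np.ndarray]:
    """Cross-boosting of two denoisers; n_iter plays the role of I (I >= 1).
    W and B are placeholders for the qWPdn and BM3D operators."""
    if n_iter < 1:
        raise ValueError("I must be at least 1")
    x0 = np.asarray(x0, dtype=float)
    y_w, y_b = W(x0), B(x0)          # first step: Y_W^1, Y_B^1
    for _ in range(1, n_iter):       # iterations i = 1, ..., I-1
        x_w = (x0 + y_w) / 2         # X_W^i
        x_b = (x0 + y_b) / 2         # X_B^i
        y_w, y_b = W(x_b), B(x_w)    # crossed: Y_W^{i+1} = W X_B^i, Y_B^{i+1} = B X_W^i
    return {"upBM3D": y_b, "upqWP": y_w, "hybrid": (y_b + y_w) / 2}
```

Note the crossed update: the qWPdn operator receives the input formed from the BM3D output, and vice versa. With `n_iter=1` the scheme reduces to applying both denoisers once to the noisy array.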

3.3 Experimental results

In this section, we compare the performance of our denoising schemes, designated upBM3D, upqWP and hybrid, in restoring degraded images with that of the state-of-the-art algorithms BM3D (Dabov et al., 2007), BM3D-SAPCA (Dabov et al., 2009), WNNM (Gu et al., 2014), NCSR (Dong et al., 2013), cptTP-\(\mathbb {C}\)TF\(_{6}\) (Zhuang & Han, 2019) and DAS-2 (Che & Zhuang, 2018). To produce results for the comparison, we used the software available at the websites http://www.cs.tut.fi/~foi/GCF-BM3D/index.html#ref_software (BM3D and BM3D-SAPCA), http://staffweb1.cityu.edu.hk/xzhuang7/softs/index.html#bdTPCTF (cptTP-\(\mathbb {C}\)TF\(_{6}\) and DAS-2), https://github.com/csjunxu/WNNM_CVPR2014 (WNNM), and https://www4.comp.polyu.edu.hk/~cslzhang/NCSR.htm (NCSR).

The restored images were evaluated by visual perception, by the Peak Signal-to-Noise Ratio (PSNR) [see Eq. (3.2) in Footnote 3] and by the Structural Similarity Index (SSIM) (Wang et al. 2004; the ssim.m function of Matlab 2020b). SSIM measures the structural similarity of small moving windows in two images. It ranges from 1 for identical windows to \(-1\) for completely dissimilar ones. The global index is the average of the local indices. SSIM is currently regarded as a more informative characteristic of image quality than PSNR and the Mean Square Error (MSE) (see the discussion in Wang and Bovik 2009).
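For reference, the PSNR of Eq. (3.2) can be computed as in the following Python/NumPy sketch (function name hypothetical). It assumes 8-bit images, so the peak value is 255 as in Eq. (3.2), and takes \(K\) to be the number of pixels. SSIM is not reproduced here; outside Matlab one may use, for example, `structural_similarity` from scikit-image, but its default window differs from that of Matlab's `ssim`, so its values need not match those reported in the tables.

```python
import numpy as np


def psnr(x, x_tilde, peak=255.0):
    """PSNR in dB: 10 log10(K * peak^2 / sum_k (x_k - x~_k)^2), K = number of pixels."""
    x = np.asarray(x, dtype=float)
    x_tilde = np.asarray(x_tilde, dtype=float)
    sq_err = np.sum((x - x_tilde) ** 2)
    if sq_err == 0:
        return float("inf")  # identical images: PSNR is unbounded
    return float(10.0 * np.log10(x.size * peak ** 2 / sq_err))
```

Since \(K\cdot 255^{2}/\sum _{k}(x_{k}-\tilde{x}_{k})^{2}=255^{2}/\mathrm{MSE}\), an error of one gray level at every pixel gives \(20\log _{10}255\approx 48.13\) dB.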

For the experiments, we used a standard set of benchmark images: “Lena”, “Boat”, “Hill”, “Barbara”, “Mandrill”, “Bridge”, “Man”, “Fabric” and “Fingerprint”. One image, which represents a stacked seismic section, is designated “Seismic”. The “clean” images are displayed in Fig. 9.

The images were corrupted by zero-mean Gaussian noise with STD \(\sigma =5\), 10, 25, 40, 50, 80 and 100. Then, the BM3D, BM3D-SAPCA, NCSR, WNNM, upBM3D, upqWP, hybrid, cptTP-\(\mathbb {C}\)TF\(_{6}\) and DAS-2 denoising algorithms were applied to restore the images. In most experiments, upBM3D performed better than upqWP. However, this was not the case for the “Seismic” image. Therefore, the “Seismic” block in Table 3 and the pictures in Fig. 16 show results from upqWP rather than from upBM3D.

Table 2 summarizes experimental results from the restoration of the “Barbara”, “Boat”, “Fingerprint”, “Lena” and “Mandrill” images corrupted by additive Gaussian noise. PSNR and SSIM values for each experiment are given.

Table 3 summarizes experimental results on the restoration of the “Hill”, “Seismic”, “Fabric”, “Bridge” and “Man” images corrupted by additive Gaussian noise. The PSNR and SSIM values for each experiment are given.

It is seen from Tables 2 and 3 that, while our methods upBM3D and hybrid produce PSNR values close to those of the other methods, in the overwhelming majority of cases the SSIM values produced by upBM3D and hybrid significantly exceed the SSIM values of all other methods. This is especially true for images corrupted by strong noise. This observation reflects the exceptional capability of directional qWPs to capture fine structures even in severely degraded images.

The diagrams in Fig. 10 illustrate the results reported in Tables 2 and 3. The top frame of Fig. 10 shows the PSNR values obtained by all eight methods when restoring images degraded by Gaussian noise with STD \(\sigma =5\), 10, 25, 40, 50, 80 and 100. The PSNR values are averaged over the ten images that participated in the experiments (see Fig. 9). The bottom frame of Fig. 10 does the same for the SSIM values. We observe that the averaged PSNR values for our methods upBM3D and hybrid practically coincide with each other and are very close to the values produced by BM3D and NCSR. The averaged PSNR values for BM3D-SAPCA and WNNM exceed those of all other methods. They practically coincide with each other for \(\sigma \le 40\), but for \(\sigma >40\), WNNM produces significantly higher PSNR values. The values from cptTP-\(\mathbb {C}\)TF\(_{6}\) and DAS-2 are inferior.

The bottom frame, which displays the averaged SSIM values, shows a different picture. Again, the averaged values for our methods upBM3D and hybrid practically coincide with each other, but they substantially exceed the values produced by all other methods. BM3D-SAPCA demonstrates some advantage over the rest of the methods. DAS-2 produces relatively good results, which are better than those of NCSR.

Figures 11–16 display the results of restoring several images corrupted by strong Gaussian noise. In all these cases, the SSIM values from the upBM3D and/or hybrid algorithms significantly exceed those from all other algorithms. Correspondingly, the upBM3D and/or hybrid algorithms restore the fine structure of the images much better than the other algorithms.

Each of these figures comprises 12 frames arranged in a \(4\times 3\) grid: \(\left( \begin{array}{ccc} f_{11} &{} f_{12} &{} f_{13} \\ f_{21} &{} f_{22} &{} f_{23} \\ f_{31} &{} f_{32} &{} f_{33} \\ f_{41} &{} f_{42} &{} f_{43} \\ \end{array} \right) .\) Frame \( f_{11} \) displays the noisy image; \( f_{21} \), the image restored by BM3D; \( f_{12} \), the image restored by BM3D-SAPCA; \( f_{22} \), the image restored by WNNM; \( f_{13} \), the image restored by upBM3D (see Footnote 4); \( f_{23} \), the image restored by hybrid. Frame \( f_{31} \) displays a fragment of the original image. The remaining frames \( \left\{ f_{32},f_{33}, f_{41} , f_{42} , f_{43}\right\} \) display the fragments of the restored images shown in frames \( \left\{ f_{12},f_{13}, f_{21} , f_{22} , f_{23}\right\} \), respectively.

3.4 Relation of the proposed algorithms to the deep learning methods

In recent years, the focus of image denoising research has shifted to Deep Learning methods, which has resulted in a huge number of publications. We mention a few of them: Chen and Pock (2017); Zhang et al. (2017); Cruz et al. (2018); Fang and Zeng (2020); Shi et al. (2019); Li et al. (2020); Quan et al. (2021); Binh et al. (2021). Many more references can be found in the reviews (Tian et al., 2020; Ilesanmi & Ilesanmi, 2021). One of the advantages of Deep Learning methods is that, once the neural network is trained (which can involve extended datasets and take several days), its application to test images is very fast. Therefore, the experimental results in most related publications are presented as PSNR and, sometimes, SSIM values averaged over test datasets such as Set12, introduced in Zhang et al. (2017).

Set12 partially overlaps with the set of 10 images (Set10) that we used in our experiments. Namely, the images “Barbara”, “Boat”, “Fingerprint”, “Hill”, “Lena” and “Man” belong to both sets. The structure of the remaining images from Set10 (“Seismic”, “Fabric”, “Mandrill” and “Bridge”) is more complicated than that of the images “Camera”, “Couple”, “House”, “Monarch”, “Pepper” and “Straw” from Set12. Therefore, comparing averaged results over these two datasets is justified. For even better comparability, we compare the gains of the different methods over the corresponding results from BM3D: \(P_{{\textit{method}}} {\mathop {=}\limits ^{{\textit{def}}}}\frac{{\textit{PSNR}}_{{\textit{method}}}}{{\textit{PSNR}}_{BM3D}}\) and \( S_{{\textit{method}}} {\mathop {=}\limits ^{{\textit{def}}}}\frac{{\textit{SSIM}}_{{\textit{method}}}}{{\textit{SSIM}}_{BM3D}}\). Recall that we calculate SSIM with the ssim function from Matlab 2020b, whereas most publications compute SSIM with other implementations.
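The normalization by BM3D amounts to one ratio per method and per metric. The minimal Python sketch below (function name hypothetical) computes both gains \(P_{{\textit{method}}}\) and \(S_{{\textit{method}}}\); the scores in the usage line are made-up numbers for illustration only, not results from Table 4.

```python
def gains_over_bm3d(scores, baseline="BM3D"):
    """Return {method: (P_method, S_method)}, where P = PSNR / PSNR_baseline and
    S = SSIM / SSIM_baseline; `scores` maps a method name to a (PSNR, SSIM) pair."""
    psnr0, ssim0 = scores[baseline]
    return {m: (p / psnr0, s / ssim0) for m, (p, s) in scores.items()}
```

For example, `gains_over_bm3d({"BM3D": (30.0, 0.80), "method A": (31.5, 0.88)})` gives about 1.05 (PSNR gain) and 1.10 (SSIM gain) for "method A" and exactly 1 for BM3D itself. Because each set is normalized by its own BM3D result, methods evaluated on Set12 and on Set10 can be placed side by side.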

We compare the results of the recent state-of-the-art algorithms Cola_Net (Mou et al., 2022), CDNet (Quan et al., 2021), FLCNN (Binh et al., 2021), DRCNN (Li et al., 2020), and the non-Deep Learning algorithm presented in Zhou et al. (2021), which we denote SRENSS, averaged over Set12, with the results of WNNM (Gu et al., 2014) and the proposed hybrid algorithm averaged over Set10 (see Footnote 5). The PSNR and SSIM values for all methods except WNNM and hybrid are taken from the corresponding publications. Table 4 shows the results of this comparison.

We observe from the table that all participating up-to-date schemes, including the non-Deep Learning algorithm SRENSS, demonstrate a moderate gain over BM3D in both PSNR and SSIM values averaged over Set12 (far from a breakthrough). The values from WNNM averaged over Set10 are very close to those from BM3D averaged over the same set. The same holds for the PSNR values from the hybrid algorithm. However, the SSIM values from the hybrid algorithm demonstrate a strong gain over BM3D, which is also clearly seen in Fig. 10. This is especially true for strong Gaussian noise with \(\sigma =80\) and \(100\). This fact highlights the ability of the qWP-based algorithms to restore edges and fine structures even in severely damaged images.

Note that the denoising results presented in the overwhelming majority of Deep Learning publications deal with noise levels not exceeding \(\sigma =50\).

4 Discussion

We presented a denoising scheme that combines the qWPdn algorithm, which is based on the directional quasi-analytic wavelet packets designed in Averbuch et al. (2020), with the popular BM3D algorithm (Dabov et al., 2007), which is considered one of the best in the field. The qWPdn and BM3D methods complement each other, so combining them is natural. In the iterative hybrid scheme qWPdn–BM3D proposed in Sect. 3.2, the output of one algorithm updates the input to the other. The scheme proved highly efficient, as confirmed by a series of experiments on the restoration of 10 multimedia images of various structures degraded by Gaussian noise of various intensities. In the experiments, the performance of the two combined qWPdn–BM3D algorithms was compared with that of six advanced denoising algorithms: BM3D, BM3D-SAPCA (Dabov et al., 2009), WNNM (Gu et al., 2014), NCSR (Dong et al., 2013), cptTP-\(\mathbb {C}\)TF\(_{6}\) (Zhuang & Han, 2019) and DAS-2 (Che & Zhuang, 2018). In the overwhelming majority of the experiments reported in Sect. 3.3, the two combined algorithms produce PSNR values that are very close to those of BM3D but lower than those of WNNM and BM3D-SAPCA. Their noise suppression efficiency is competitive with that of BM3D. On the other hand, their resolution of edges and fine structures is much better than that of all other algorithms participating in the experiments, as seen in the images presented in Sect. 3.3. Consequently, the SSIM values produced by the combined qWPdn–BM3D algorithms are significantly higher than those produced by BM3D and exceed those of all other participating algorithms. The combined algorithm runs fast: in most cases, two to three iterations (very rarely five to six) are sufficient to obtain an excellent result.
For example, the Matlab implementation of three-iteration processing of a \(512\times 512\) image takes about 20 s, of which 11 s are consumed by BM3D (MEX files) and 3 s by a non-compiled version of the qWP denoising algorithm. For comparison, processing the same image with the WNNM algorithm takes 470 s, and BM3D-SAPCA processing may take from 3 min to several hours.

The discussion in Sect. 3.4 shows that the qWPdn–BM3D algorithm can compete, in some aspects, with denoising methods based on Deep Learning. This is especially true for the restoration of images contaminated by strong noise.

It is worth noting that our combined methods are distantly related to the SOS boosting scheme presented in Romano and Elad (2015). The main distinction is that in qWPdn–BM3D each of the qWPdn and BM3D algorithms is “boosted” by the output of the other algorithm. Such a scheme can be regarded as CrossBoosting.

In summary, having such a versatile and flexible tool as the directional qWPs, which proved highly efficient in image inpainting and denoising, we are in a position to address data processing problems such as deblurring, superresolution, segmentation, classification and target detection (where directionality is of utmost importance). Another potential application is the extraction of characteristic features from multidimensional data in the deep learning framework. The 3D directional wavelet packets, whose design is underway, will be beneficial for seismic and hyperspectral processing.

Notes

The index n refers to the number of filters in the underlying one-dimensional complex tight framelet filter bank.

\(s_{+}{\mathop {=}\limits ^{{\textit{def}}}}\max \{s, 0\}\).

$$\begin{aligned}{\textit{PSNR}}(\mathbf {x},{\tilde{\mathbf {x}}}){\mathop {=}\limits ^{{\textit{def}}}}10\log _{10}\left( \frac{K\,255^2}{\sum _{k=1}^K(x_{k}-\tilde{x}_{k})^2}\right) \;\text{dB}. \end{aligned}\tag{3.2}$$

For the “Seismic” image upqWP instead of upBM3D

Averaged results from upBM3D are almost identical to those from hybrid algorithm.

References

Averbuch, A., Neittaanmäki, P., & Zheludev, V. (2020). Directional wavelet packets originating from polynomial splines. arXiv:2008.05364

Averbuch, A., Neittaanmäki, P., Zheludev, V., Salhov, M., & Hauser, J. (2021). Image inpainting using directional wavelet packets originating from polynomial splines. Signal Processing: Image Communication, 97. arXiv:2001.04899

Averbuch, A., Coifman, R. R., Donoho, D. L., Israeli, M., & Shkolnisky, Y. (2008). A framework for discrete integral transformations I—The pseudopolar Fourier transform. SIAM Journal on Scientific Computing, 30(2), 764–784.

Averbuch, A., Coifman, R. R., Donoho, D. L., Israeli, M., Shkolnisky, Y., & Sedelnikov, I. (2008). A framework for discrete integral transformations II—The 2D discrete Radon transform. SIAM Journal on Scientific Computing, 30(2), 785–803.

Averbuch, A., Neittaanmäki, P., & Zheludev, V. (2019). Splines and spline wavelet methods with application to signal and image processing, Volume III: Selected topics. Springer.

Bayram, I., & Selesnick, I. W. (2008). On the dual-tree complex wavelet packet and m-band transforms. IEEE Transactions on Signal Processing, 56, 2298–2310.

Binh, P. H. T., Cruz, C., & Egiazarian, K. (2021). Flashlight CNN image denoising. In 2020 28th European signal processing conference (EUSIPCO) (pp. 670–674).

Buades, A., Coll, B., & Morel, J.-M. (2005). A review of image denoising algorithms, with a new one. Multiscale Modeling & Simulation, 4(2), 490–530.

Candès, E., Demanet, L., Donoho, D., & Ying, L. X. (2006). Fast discrete curvelet transforms. Multiscale Modeling & Simulation, 5, 861–899.

Candès, E., & Donoho, D. (2004). New tight frames of curvelets and optimal representations of objects with piecewise \(C^{2}\) singularities. Communications on Pure and Applied Mathematics, 57, 219–266.

Chen, Y., & Pock, T. (2017). Trainable nonlinear reaction diffusion: A flexible framework for fast and effective image restoration. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(6), 1256–1272.

Che, Z., & Zhuang, X. (2018). Digital affine shear filter banks with 2-layer structure and their applications in image processing. IEEE Transactions on Image Processing, 27(8), 3931–3941.

Coifman, R. R., & Wickerhauser, V. M. (1992). Entropy-based algorithms for best basis selection. IEEE Transactions on Information Theory, 38(2), 713–718.

Cruz, C., Foi, A., Katkovnik, V., & Egiazarian, K. (2018). Nonlocality-reinforced convolutional neural networks for image denoising. IEEE Signal Processing Letters, 25(8), 1216–1220.

Dabov, K., Foi, A., Katkovnik, V., & Egiazarian, K. O. (2009). BM3D image denoising with shape adaptive principal component analysis. In Proceedings of the workshop on signal processing with adaptive sparse structured representations (SPARS’09).

Dabov, K., Foi, A., Katkovnik, V., & Egiazarian, K. (2007). Image denoising by sparse 3d transform-domain collaborative filtering. IEEE Transactions on Image Processing, 16(8), 2080–2095.

Dong, W. S., Zhang, L., Shi, G. M., & Li, X. (2013). Nonlocally centralized sparse representation for image restoration. IEEE Transactions on Image Processing, 22(4), 1620–1630.

Do, M. N., & Vetterli, M. (2008). Contourlets. In G. V. Welland (Ed.), Beyond wavelets. Academic Press.

Fang, Y., & Zeng, T. (2020). Learning deep edge prior for image denoising. Computer Vision and Image Understanding, 200, 103044.

Goyal, B., Dogra, A., Agrawal, S., Sohi, B. S., & Sharma, A. (2020). Image denoising review: From classical to state-of-the-art approaches. Information Fusion, 55, 220–244.

Gu, S., Zhang, L., Zuo, W., & Feng, X. (2014). Weighted nuclear norm minimization with application to image denoising. In 2014 IEEE conference on computer vision and pattern recognition (pp. 2862–2869).

Han, B., Mo, Q., Zhao, Z., & Zhuang, X. (2019). Directional compactly supported tensor product complex tight framelets with applications to image denoising and inpainting. SIAM Journal on Imaging Sciences, 12(4), 1739–1771.

Han, B., & Zhao, Z. (2014). Tensor product complex tight framelets with increasing directionality. SIAM Journal on Imaging Sciences, 7(2), 997–1034.

Han, B., Zhao, Z., & Zhuang, X. (2016). Directional tensor product complex tight framelets with low redundancy. Applied and Computational Harmonic Analysis, 41(2), 603–637.

Hou, Y., & Shen, D. (2018). Image denoising with morphology- and size-adaptive block-matching transform domain filtering. EURASIP Journal on Image and Video Processing, 59.

Ilesanmi, A. E., & Ilesanmi, T. O. (2021). Methods for image denoising using convolutional neural network: A review. Complex & Intelligent Systems, 7, 2179–2198.

Jalobeanu, A., Blanc-Féraud, L., & Zerubia, J. (2000). Satellite image deconvolution using complex wavelet packets. In Proc. IEEE Int. Conf. Image Process. (ICIP) (pp. 809–812).

Ji, H., Shen, Z., & Zhao, Y. (2017). Directional frames for image recovery: Multi-scale discrete Gabor frames. Journal of Fourier Analysis and Applications, 23(4), 729–757.

Ji, H., Shen, Z., & Zhao, Y. (2018). Digital Gabor filters with MRA structure. SIAM Journal on Multiscale Modeling and Simulation, 16(1), 452–476.

Kingsbury, N. G. (1999). Image processing with complex wavelets. Philosophical Transactions of the Royal Society of London. Series A: Mathematical, Physical and Engineering Sciences, 357(1760), 2543–2560.

Kutyniok, G., & Labate, D. (2012). Shearlets: Multiscale analysis for multivariate data. Birkhäuser.

Lim, W.-Q., Kutyniok, G., & Zhuang, X. (2012). Digital shearlet transforms. In Shearlets: multiscale analysis for multivariate data (pp. 239–282). Birkhäuser.

Liu, J., & Osher, S. (2019). Block matching local SVD operator based sparsity and TV regularization for image denoising. Journal of Scientific Computing, 78, 607–624.

Li, X., Xiao, J., Zhou, Y., Ye, Y., Lv, N., Wang, X., et al. (2020). Detail retaining convolutional neural network for image denoising. Journal of Visual Communication and Image Representation, 71, 102774.

Mou, C., Zhang, J., Fan, X., Liu, H., & Wang, R. (2022). Cola-net: Collaborative attention network for image restoration. IEEE Transactions on Multimedia, 24, 1366–1377.

Quan, Y., Chen, Y., Shao, Y., Teng, H., Xu, Y., & Ji, H. (2021). Image denoising using complex-valued deep CNN. Pattern Recognition, 111, 107639.

Romano, Y., & Elad, M. (2015). Boosting of image denoising algorithms. SIAM Journal on Imaging Sciences, 8(2), 1187–1219.

Selesnick, I. W., Baraniuk, R. G., & Kingsbury, N. G. (2005). The dual-tree complex wavelet transform. IEEE Signal Processing Magazine, 22(6), 123–151.

Şendur, L., & Selesnick, I. W. (2002a). Bivariate shrinkage functions for wavelet-based denoising exploiting interscale dependency. IEEE Transactions on Signal Processing, 50, 2744–2756.

Şendur, L., & Selesnick, I. (2002b). Bivariate shrinkage with local variance estimation. IEEE Signal Processing Letters, 9(12), 438–441.

Shi, W., Jiang, F., Zhang, S., Wang, R., Zhao, D., & Zhou, H. (2019). Hierarchical residual learning for image denoising. Signal Processing: Image Communication, 76, 243–251.

Tian, C., Fei, L., Zheng, W., Zuo, W., Xu, Y., & Lin, C.-W. (2020). Deep learning on image denoising: An overview. Neural Networks, 131.

Wang, Z., & Bovik, A. C. (2009). Mean squared error: Love it or leave it? IEEE Signal Processing Magazine, 26, 98–117.

Wang, Z., Bovik, A. C., Sheikh, H. R., & Simoncelli, E. P. (2004). Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4), 600–612.

Zhang, K., Zuo, W., Chen, Y., Meng, D., & Zhang, L. (2017). Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Transactions on Image Processing, 26(7), 3142–3155.

Zhou, T., Li, C., Zeng, X., & Zhao, Y. (2021). Sparse representation with enhanced nonlocal self-similarity for image denoising. Machine Vision and Applications, 32(5), 1–11.

Zhuang, X. (2016). Digital affine shear transforms: Fast realization and applications in image/video processing. SIAM Journal on Imaging Sciences, 9(3), 1437–1466.

Zhuang, X., & Han, B. (2019). Compactly supported tensor product complex tight framelets with directionality. In 2019 International conference on sampling theory and applications (SampTA), Bordeaux, France.

Acknowledgements

This research was partially supported by the Israel Science Foundation (ISF, 1556/17), the Blavatnik Computer Science Research Fund, and the Israel Ministry of Science and Technology (3-13601 and 3-14481).


Appendix: Matlab code for calculation of number of different orientations of real qWPs



Cite this article

Averbuch, A., Neittaanmäki, P., Zheludev, V. et al. An hybrid denoising algorithm based on directional wavelet packets. Multidim Syst Sign Process 33, 1151–1183 (2022). https://doi.org/10.1007/s11045-022-00836-w
