Abstract

Retinopathy of prematurity (ROP) is a leading cause of childhood blindness affecting the retina of low birth weight preterm infants. Plus disease in ROP characterised by abnormal tortuosity and dilation of posterior retinal blood vessels, is a benchmark that identifies treatment-requiring ROP cases. A Plus disease classifier with zero false negatives is a major requirement of an ROP screening system. In this paper, an efficient Artificial Neural Network (ANN) architecture with an optimal feature set is proposed which meets the above requirement. A total of 178 images with 81(45%) Plus and 97 (55%) No Plus are used for the analysis. A feature set derived from transform domain representation of retinal funds images is used along with the existing vascular features in the proposed work. Wavelet and Curvelet transforms are considered for deriving the additional feature set in the experimental analysis. The feature set containing Curvelet transform energy coefficient along with the vascular features gave an Accuracy of 96% and Specificity of 93% with 100% Sensitivity.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Retinopathy of Prematurity (ROP) is a vasoproliferative disorder of developing retina, which may start only in prematurely born infants within few weeks after their birth [15]. ROP is a potentially avoidable cause of infant blindness, which can be prevented by proper screening and treatment. The World Health Organization (WHO) has estimated that out of 15 million preterm births (defined as before 37 weeks of gestation) every year around the world, India has the largest number of premature infants, accounting for > 3.5 million annually out of 27.2 million live births per year. Along with other middle income countries, India suffers from “third” epidemic of blindness due to ROP [42, 43].

According to the Revised International Classification of Retinopathy of Prematurity (ICROP) [11, 31], the disease can be categorized with respect to the location, extent, stages and due to Posterior pole vascular abnormalities as aggressive posterior ROP, Plus and Pre Plus disease.

Plus disease is defined as the presence of dilation, tortuosity and irregular branching of the blood vessels in the posterior pole. Studies namely Cryotherapy for Retinopathy of Prematurity (CRYO-ROP) [27] and Early Treatment for Retinopathy of Prematurity (ETROP) [35] have indicated that the primary criterion for identifying treatment grade ROP is the presence of Plus disease. Even though the stage and zone of the disease indicate the progression of the disease, the presence of Plus disease always requires immediate treatment. Therefore, the examination of posterior retinal blood vessels, whose nature signifies the presence of Plus disease, is a crucial step in diagnosing treatment requiring or threshold ROP. A Pre Plus disease having abnormal vasculature that is insufficient for the diagnosis of Plus disease, but that gives more arterial tortuosity and venous dilation than normal is another sign for the detection of ROP. Figure 1 shows the digital fundus images of the normal infant retina and retina of infants with Plus disease. The difference between the characteristics of blood vessels in the normal and diseased images are clearly shown in this figure. We can notice that blood vessels of the images of the retina with Plus disease are more twisted and broader than the blood vessels in the normal images [32].

We can reduce blindness due to ROP if the disease is identified in its early stage and treated. But the accuracy and reliability of Plus disease diagnosis of ROP experts are imperfect. Therefore, it follows that accurate computer based analysis of the images of infant retina will be very useful to augment and assist clinical diagnosis.

Also the analysis of the retinal images in premature infants is considerably more challenging than in adult subjects due to clarity of the images. Another reason for the demand for a computerized system for ROP diagnosis is the high level of inter-expert disagreement over the diagnosis [16]. For instance,the presence or absence of the Plus disease is concluded based on comparing the observed blood vessel to that of the photograph showing the minimum standard of vascular tortuosity rather than by establishing standard cutoff values. Therefore, there may be significant variability in the accuracy and consistency of Plus disease diagnosis from wide-angle images, even among recognized ROP experts [4]. Therefore, a computer-based image analysis system, which extracts relevant information from the fundus images, could lead to ensure timely diagnosis and treatment of ROP. This work aims to develop suitable techniques to overcome these limitations while designing an accurate system for Plus disease detection. The novelty of our research includes:

-

Identification of better discriminating features for the analysis of Plus disease detection by combing the advantages of vascular and transform based features of the retinal images.

-

Design of a simple neural network classifier with the limited data set and performance comparable to that of existing convolutional neural network classifiers.

-

Development of a Plus disease classifier with zero false negatives to meet the requirements of an ROP screening system.

The paper is structured as follows: Section 2 reviews the previous works on the detection of Plus disease. The data set used is introduced in Section 3, and the overview of the proposed technique is provided in Section 4. Section 5 and Section 6 describes the performance metrics evaluated on the extracted features along with experimental setup and the results. Finally, in Section 7 conclusions are drawn.

2 Literature survey

In recent years, there has been a growing interest in developing diagnostic tools that use artificial intelligence (AI) techniques to diagnose various medical conditions like cancer [38], heart problems, eye abnormalities [20], gastrointestinal diseases, brain tumors and mental disorders [5] etc. One such area where AI-based diagnostic tools have shown potential is in the diagnosis of retinopathy of prematurity (ROP). With the latest advancements in digital imaging of ROP such as Retcam, fundus retinal images can be effectively analyzed using AI methods. These AI methods include traditional machine learning (ML) and modern deep learning approaches (DL). In traditional ML methods, conventional image processing and feature extraction techniques are used to identify pathological patterns such as fundus lesions and blood vessels. On the other hand, DL approaches use artificial neural networks to learn and classify features from the input images without explicit feature extraction. Overall, the development of AI-based diagnostic tools has the potential to improve the accuracy and efficiency of medical diagnoses, leading to better patient outcomes. However, further research is needed to validate the effectiveness of these methods in real-world clinical settings.

Many researchers have built computer-aided systems for the analysis of Plus disease in ROP. These systems may provide more objective for the diagnosis, and such studies have demonstrated that methods are capable of discriminating between cases with and without Plus disease as accurately as experts . The accuracy of the methods depends on the analysis of premature retinal infant images in terms of identifying the best features that classify the disease. There are many methods to classify ROP by analysing the features from blood vessel segments of retina [45]. Most of the works are deals with healthy/disease category of classification and have limited works for Plus/No Plus classification category.

Jomier et al. [22] developed a method to assess Retinopathy of Prematurity by capturing images of the retina to extract and quantify both tortuosity and dilation of blood vessels as features on 20 infant images. This approach demonstrates 80% sensitivity and 92% specificity in the prediction of Retinopathy as Plus/No Plus category by using a neural network classifier. In a study conducted by Ataer et al. [3], they introduced a diagnostic tool called “i-ROP” which utilizes 77 images to classify healthy, Pre-Plus, and Plus ROP disease. To achieve this classification, they implemented a support vector machine (SVM) classifier and were able to attain a high accuracy of 95%. These methods relied on manual feature extraction and segmentation of vessels, which may introduce inaccuracies in the diagnosis due to potential errors and professional bias in selecting target vessels. Additionally, the time required for image processing, such as segmentation and feature extraction, can be lengthy.

The increase in the tortuosity of retinal vessels can indicate the presence of various diseases, including Retinopathy of Prematurity (ROP). Precise detection and measurement of such changes can aid in the computer-aided diagnosis of Plus disease, which requires ROP treatment. Oloumi et al. [29] developed image processing methods for detecting and segmenting retinal vessels, measuring vessel tortuosity, and incorporating clinical definitions of Plus diagnosis into diagnostic decision-making criteria. And for 110 retinal fundus images of preterm infants (91 without Plus disease and 19 with Plus disease), demonstrate high sensitivity of 0.89 (17/19)in the diagnosis of Plus disease. In Pour et al. [30] eighty-seven well-focused fundal images taken with RetCam were classified to three groups of Plus, No Plus, and Pre Plus. Automated algorithms in this study were designed based on the curvature measure and distance transform, for assessment of tortuosity and vascular dilatation as two major parameters of Plus disease detection. The performance is compared with three different classifiers named k-nearest neighbor, support vector machine and multilayer perceptron network and achieved an accuracy rate of 72.3%, 83.7%, and 84.4% respectively. Samant et al. [36] shows the preprocessing of 30 affected and 30 normal images by using histogram equalization and morphological operations to detect the blood vessels from retina, and from this extracted blood vessels tortuosity is computed, and classified using conventional methods like SVM, K-NN and Ensemble with 92.5%, 95%, and 87.5% accuracy values respectively.

34 digital retinal images were interpreted by 22 ROP experts for presence of Plus disease in Chiang et al. [10] . These images were segmented using Retinal Image Multi Scale Analysis (RISA), yielding 6 retinal vessel features called arterial integrated curvature (AIC), arterial diameter (AD), arterial tortuosity index (ATI), venular integrated curvature (VIC), venular diameter (VD), and venular tortuosity index (VTI) and classified using Recursive partitioning and regression tree (RPART) classifier with a performance of 0.8077 AUC. The preprocessing using structure adaptive filter, guided filter and coherence enhancing diffusion filter along with segmentation using connectivity and proximity analysis and morphological operations are explained in nisha et al. [28]. This paper proposes the usage of additional retinal features namely leaf node count and vessel density to portray the abnormal growth and branching of the blood vessels and to complement the commonly used features namely tortuosity and width. The test results shows a better classification of Plus disease in terms of sensitivity (95%) and specificity (93%) using an SVM classifier on 178 infant retinal images.

Recently, several diagnostic tools for Retinopathy Of Prematurity(ROP) that rely on Deep Learning (DL) techniques have emerged. These systems use transfer learning (TL), a method that involves reusing pre-trained Convolutional Neural Networks (CNNs) that were trained with large datasets such as ImageNet to solve similar classification problems with a smaller number of images [7]. By using a pre-trained CNN that has already learned image features from a vast and diverse dataset, TL has been demonstrated to improve diagnostic accuracy [9].

Brown et al. [8] introduced a software tool known as “i-ROP”. The tool utilizes two convolutional neural networks (CNNs), with the first CNN being responsible for image segmentation, while the second CNN classifies retinal images into healthy, Pre-Plus, and Plus ROP disease categories. The authors trained and tested i-ROP using a dataset of 5511 retinal images, achieving an average sensitivity of 96.5%. An automated system called “DeepROP” was introduced by Wang et al. [46], which is based on deep learning. The system used 11,707 images and began by categorizing images as either normal or showing signs of ROP. Subsequently, ROP images were divided into severe or minor categories. The study found that the system had a sensitivity of 96.64% for distinguishing normal versus ROP images, 88.46% sensitivity and 92.31% specificity for categorizing severe versus minor ROP images. In a recent development [40], a new automated tool known as “ROP.AI” has been introduced for diagnosing both normal and Plus diseases. According to the researchers, the classification model utilized by this tool was able to attain an impressive sensitivity of 96.6%. In their study Lei et al. [25] utilized ResNet-50 as the base model and integrated an attention module and channel to facilitate the diagnosis of ROP. Likewise, Zhang et al [55] employed multiple variations of ResNet to develop their model for ROP diagnosis.

In a study conducted by Rani et al. [33], a tool based on Deep Learning (DL) and multiple instance learning (MIL) was introduced. The images were divided into equal patches, and then a Convolutional Neural Network (CNN) was utilized to extract features from these patches. The features from the same image were combined to differentiate between normal and Retinopathy of Prematurity (ROP) cases. The system achieved an accuracy of diagnosis 83.33%. Subsequently, the authors of [52] proposed a pipeline named “I-ROP ASSIST” to differentiate between healthy and Plus ROP diseases. The authors segmented the images using U-Net CNN and extracted handcrafted features from these segmented images to train multiple machine learning classifiers. The highest accuracy achieved in this study was 94%. In [17] a new convolutional neural network (CNN) architecture is presented for the detection and evaluation of retinopathy of prematurity (ROP). The proposed architecture comprises of two main components: a feature extraction sub-network and a feature aggregation operator for combining features from different images in an examination. The study evaluates the proposed architecture using several pre-trained ImageNet models, including VGG-16, Inception-V2, and ResNet50. The proposed architecture is tested on a large dataset consisting of 2668 examinations of fundus images in infants. The experimental results reveal that Inception-V2 with the max aggregate operator in module 2 performs better than the other networks for the recognition of the existence and severity of ROP with a sensitivity of 96%. Worrall et al. [48] implemented Convolutional Neural Network architecture using a modified GoogLeNet as classifiers on 1459 images and classified as diseased/healthy with 93.6% accuracy, 95.4% sensitivity and 94.6% specificity. An analysis on 2361 images were performed by wang et al [47] using id-Net architecture for diseased/healthy classification with 96.64% sensitivity. Mao et al. [26] performed the classification as Plus/Pre Plus and No Plus categories using a DenseNet architecture with 93.31% accuracy and 95.1% sensitivity values on 3311 images.

In Huang et al.[18] five different deep neural network architectures were utilized to identify ROP in preterm infants using applied transfer learning techniques. The findings indicated that among the tested models, the VGG19 architecture exhibited the highest level of performance in detecting ROP in preterm infants, with an accuracy of 96% and sensitivity of 96.6%. A deep convolutional neural network (DCNN) is developed for automated ROP detection using wide-angle retinal images in [24] . It mainly uses ResNet50 as base architecture and improve the ResNet by adding a channel and a spatial attention module. The classification was mainly as ROP/Normal cases on 3412 infant retinal images and obtains an accuracy of 96% and sensitivity of 98 %. Zhang et al. [54] used an automated system for diagnosing AP-ROP using two independent networks. The proposed system divides the task into two parts: Network 1 identifies the presence of ROP in fundus images, while Network 2 distinguishes between AP-ROP and Regular ROP in ROP fundus images. The feature representation of the two networks is improved using a channel attention module, and a bilinear pooling module is utilized to extract complementary information between layers. The transfer learning mechanism is applied to transfer the parameters of Network 1 to Network 2, resulting in improved classification performance. The system is trained on 6867 fundus images, and its performance is evaluated using 3654 additional images. The results show that the accuracy and sensitivity for diagnosing AP-ROP using Network 2 are 93.09% and 87.70%, respectively. In their recent study Yildiz et al. [51] introduce a novel method called Structural Visual Guidance Attention Networks (SVGA-Net), which utilizes structural domain knowledge to guide visual attention in Convolutional Neural Networks (CNNs). Their experiments were conducted on a dataset of 5512 posterior retinal images captured using a wide angle fundus camera. The results of the experiments demonstrate that SVGA-Net achieves impressive performance with an AUC of 0.987 and 0.979 for predicting plus and normal categories, respectively. This suggests that SVGA-Net has the potential to be a valuable tool in the diagnosis and treatment of retinal diseases. Overall, the proposed method represents a promising avenue for improving the accuracy and efficiency of CNNs in medical image analysis.

From the literature, we can see that there are only a few works which uses simple neural network architectures for the classification of Plus disease. The recent works in literature uses Convolutional Neural Network architecture based classification of ROP, but all are focusing on Normal/Diseased classification rather than Plus/No Plus classification and requires larger number of posterior retinal images for accurate classification. So in this work, we are mainly focused on the identification and extraction of optimal features to develop a neural network based classifiers for efficient classification of ROP as Plus/No Plus categories on a limited data set of around 178 images with 81 Plus and 97 No Plus images.

3 Materials

The data used in this research is obtained from Narayana Nethralaya Eye Hospital, Bangalore, India. The images are captured from premature infants with an average birth weight of 1750 grams and/or gestational age fewer than 34 weeks using RetCam3, as part of the KIDROP program, which is India’s largest telemedicine program to diagnose, treat and monitor ROP. A total of 178 images are taken for analysis, of which 97 (55%) images are healthy with the No-Plus disease, and 81 (45%) images show vessel abnormalities denoting the presence of Plus disease.

4 Methods

The accurate judgment of Plus disease is critical to identify the treatment requiring ROP, so that the vision of the baby is saved at the right time. The crucial step for the identification of Plus disease is the selection of optimal features that describe the nature of the retinal vasculature and design of an accurate classifier. Hence, as a first step, a detailed analysis was conducted to identify features that will improve the classification accuracy [34]. The idea here is to extract features from the images which will represent the nature of blood vessels using appropriate transforms along with the conventional vascular features. After the feature extraction stage a detailed analysis is being conducted to identify the best classifier for the given application using the extracted features. Simplified block diagram of the proposed Plus disease detection process is shown in Fig. 2.

The preprocessing stage is to reduce the noise, blurring effect and enhance the contrast for improving the efficiency of the segmentation and feature extraction stages. After the segmentation of vascular structure, various types of features are extracted. Which is mainly denoted as two classes, vascular features and transform domain features. The vascular features are extracted from the segmented vasculature, where as transform domain features are extracted from the entire image using various transforms. The detailed flow of work is explained below.

4.1 Preprocessing

Image preprocessing is an essential and crucial step that decides the accuracy of segmentation and feature extraction stages [39]. Preprocessing involves the removal of undesired artifacts in the image and enhancement of objects of interest. The fundus image (I) is a color image with three components: Red (IR), Blue (IB) and Green (IG) as shown in Fig. 3. For blood vessel detection, the color planes are analyzed in terms of vessel-to-background contrast. Green channel (IG) is selected for further processing because it provides better contrast between the background and the vessels compared to red and blue channels [50].

Figure 4 shows the block schematic of preprocessing stages for segmentation. Extracted green plane image is filtered using a median filter of size 3×3 and is named as IMF. A Gaussian filter as given in (1) with standard deviation σ = 9 is used as the smoothing filter, which reduces the noise in the flat region from being amplified and suppresses the high frequency details for further processing.

The Gaussian filtered image IG is subtracted from the median filtered image IMF to get detailed image which contains all the edge information which needs to be preserved. This detailed layer is amplified by multiplying with a constant K = 5 to boost the details further for analysis and is added with the Gaussian filtered image as in (2).

The preprocessed output is shown in Fig. 5. It can be seen that this enhanced image is better in terms of contrast and noise reduction. We can see that it reduces the noise amplification in homogeneous regions, which is useful in reducing the number of false artifacts appearing in the segmented output. This enhancement intends to ensure that the relevant blood vessels are clearly extracted from the background rather than just visual quality enhancement of the image.

4.2 Vascular segmentation

Segmentation is the process of extracting the objects from the background of an image. In the problem under consideration, the object of interest is the vascular structure of the retina. Thus segmentation involves the identification of blood vessels from the rest of the retinal fundus images and thus highlighting the region of interest for feature extraction. The segmentation method adopted in this work is from [28] which is summarized below.

Figure 6 shows the block schematic for proposed vessel tree segmentation. This approach extracts the thick and thin vessels separately and finally fuse them together to get the complete vessel structure. For the extraction of thick vessels Top hat transform is used. It is defined as difference between the input image and it’s opening by a structuring element, as given in (3), where ∘ denotes morphological opening operation. A disc-shaped structuring element b, with radius 5 is used in this work.

Thin vessel extraction is done using background subtraction with the best smoothing filter suitable for the infant funds images. The commonly used filters for background subtraction are the mean and median filters. But these filters do not segment the thinner vessels because severe blurring will eliminate the fragile vessels from the foreground. So a guided filter is employed for thin vessel extraction. Guided filter is a structure adaptive filter that has the property of smoothing only the homogeneous regions without affecting the edges. It enhances the image without increasing the noise present in the homogeneous regions. This edge preserving and unique filtering property of Guided Filter is utilized in this study. It is found that most of the small blood vessels can be extracted even from areas with low contrast, by varying the filter parameters. Thus the enhanced image is Guided filtered using a small neighborhood of 5*5, and the filtered output from the Guided filter (Ifilted) is taken as the smoothed background image. This background is subtracted from the original image to obtain the foreground image which contains the blood vessels.

Post-processing helps in further improving the result of segmentation by removing falsely detected vessels [6]. The resultant image after top hat transformation and guided filter, has both the required blood vessels and noise. Therefore the required foreground pixels are chosen based on a threshold T given by Otsu’s algorithm. Otsu’s thresholding is a method of selecting T, which is defined as the global threshold varying from 0,1,2,...L-1 such that it minimizes the intraclass or within-class variance of the object and the background class. All the pixels with intensity values less than T will belong to class C0 or background class and the rest of the pixels become the foreground class or class C1 as denoted by,

Thus applying Otsu’s thresholding on extracted blood vessels using top hat transform and guided filter, we get thin and thick blood vessels of retinal infant images, then we combine them to produce segmented vessel tree as shown in Fig. 7.

4.3 Feature extraction

Segmented output contains vascular tree with various structures, indicating abnormal growth of blood vessels. The convention features used for classification of Plus and No Plus in infant funds images are tortuosity and width. In this work two additional features leaf node count and vessel density suggested by authors in [28] are also used. In order to study the effect of transform domain features in the classification of the disease a detailed analysis is being performed on the transform domain feature based on the wavelet and curvelet transform.

4.3.1 Vascular feature extraction

Detection of blood vessels from the infant retinal images is a major task for the analysis of Plus disease. The characteristics of blood vessels like length, width, tortuosity and branching patterns have an important role in the disease diagnosis. The vascular features extracted in this study are listed below.

(i) Tortuosity (T): Tortuosity have a major role in the indication of Plus disease, it mainly indicates the twisting of blood vessels. The most simple and widely used measure of tortuosity is defined as the ratio between the total length of a vessel segment(as shown by continuous line in Fig. 8) and its chord (the line connecting the endpoints of the vessel segment), often called the Distance Metric [23].

A modified definition of tortuosity by adding the number of peaks as given in (5) is chosen as a measure of tortuosity. Parameter L is arc length using geodesic distance transform, mod(x1x2) is the euclidean distance between endpoints x1 and x2 and P is the number of peaks.

(ii) Width (W): Width is calculated using Distance Transform as in (6). For each pixel, the distance transform assigns a number that is the distance between that pixel and the nearest nonzero pixel [19]. The input to the width calculation algorithm is the segmented image with white pixels in the place of vessels and black elsewhere. n is the sample size and it depends on the number of vessel segments.

(iii) Leaf node Count (LNC): Leaf node is defined as the vessel segment between a branch point and an end-point. Increased number of leaf nodes indicate excessive branching which is an indication of abnormality in blood vessel structure.

(iv) Vessel Density (VD): It is the ratio of Area occupied by blood vessels (in pixels) into Size of the image (in pixels).

Table 1 shows a comparison of the feature values average tortuosity, maximum width, leaf node count and vessel density for a set of sample images taken from the Plus and No Plus cases. From Table 1 we can see the feature values are higher for Plus images compared to No Plus images [28].

4.3.2 Transform based feature extraction

For transform based feature extraction, the best transforms that represent the vascular structure, the main object of interest, are selected. From the literature [2] we know that wavelet and curvelet transforms are efficient in representing edges and other singularities along the curves in an image. Hence the behavior of these two transforms are analysed in this study to get the best representative features for the vascular structure of the retina.

4.3.2.1 Wavelet transform

The wavelet transform is a multi scale transform, which is recognized as a useful feature extraction method to represent image features at different scales. The wavelet transforms exhibit impressive performance in detecting point and line like features from an image [49]. After one-level decomposition on an original image, an approximate sub-image and three detail sub-images are obtained. Those detail sub-images are at horizontal, vertical and diagonal orientation respectively. In this work the Daubechies wavelet transform has been used. A wavelet transform can be defined as in (7), where ψ(a,b) is the wavelet with scale a, location b by dilations and translations from mother wavelet ψ centered on the origin.

Wavelet decomposition of an image is shown in Fig. 9. DWT algorithm decomposes the image into 4 sub-band (sub-image) ie, LL, LH, HL and HH [53]. In this LL is the approximation of the input image, which is used for further decomposition process. LH sub band extract the horizontal features of original image. HL sub band gives vertical features and HH sub band gives diagonal features.

In one level decomposition, the sub-bands labeled LH1, HL1 and HH1 represent the finest scale wavelet coefficients i.e., detail images while the sub-band LL1 corresponds to coarse level coefficients i.e., approximation image as in Fig. 10(a). To obtain the next coarse level of wavelet coefficients, the sub-band LL1 alone is further decomposed and critically sampled. This results in two level wavelet decomposition as shown in Fig. 10(b). Similarly, to obtain further decomposition, LL2 will be used. This process continues until some final scale is reached.

4.3.2.2 Curvelet Transform

Curvelet transform is a geometric transform used to overcome the inherent limitations of wavelet like transforms. It is a multi-scale and multi-directional transform. Curvelet transform basis functions are needle shaped and have high directional sensitivity and anisotropy. Curvelet obey parabolic scaling. Because of these properties, curvelet transform allows almost optimal sparse representation of curve singularities. The curvelet transform at different scales and directions span the entire frequency space. So, curvelet transform was designed to represent edges and other singularities along curves much more efficiently than traditional transforms [1]. In this work wrapping based discrete curvelet transform as in (8) is used to collect curvelet coefficients of the images.

Here, C implies curvelet coefficient functions of (j,l,k) with j as scale (level of decomposition of image using curvelet), l as orientation(angle at which curvelets are arranged), k as spacial location of curvelet, ϕ(x,y) as curvelet functions and f(x,y) as input image having dimension M × N. The algorithmic flow for the calculation of curvelet coefficients using curvelet transform with preprocessed infant retinal image as input is given in Fig. 11.

Figure 12 shows the curvelet digital tiling of an image for 5 level decomposition. The low frequency (coarse scale) coefficients are stored at the center of the display. The cartesian concentric coronae show the coefficients at different scales; the outer coronae correspond to higher frequencies. There are four strips associated to each corona, corresponding to the four cardinal points; these are further subdivided in angular panels. Each panel represent coefficients at a specified orientation and along the scale suggested by the position of the panel. In the retinal infant image decomposition using curvelets, input image is resized to 512×512 and then decomposed into a number of directional sub-bands as shown in Fig. 13.

In 5 level decomposition, we have 32 sub-band images at scale 2 and 64 sub-band images at scales 3 and 4 respectively. Thus in the first scale we have one sub-band which is coded by one feature value, in the second scale thirty two sub-bands which are coded by 32x1 feature values and in the third and fourth scale we get 64 sub-bands which are coded by 64x1 feature values, finally in the fifth scale we get one sub-band which is coded by a single features value. Thus, our feature vector have 1 row which contains 162 values of features extracted from 162 sub-bands for 5 level decomposition [21]. Similarly for 3 level and 4 level decomposition it contains 66 and 130 feature values with 1, 64, 1 and 1, 64, 64, 1 sub band features respectively.

4.4 Optimal feature set selection

In order to select the best transform based features in terms of curvelets and wavelets which represents the vascular structure, a detailed analysis is done by reconstructing the images with varying number of transform coefficients.

-

(i)

Wavelet based feature selection:

In this wavelet decomposition is performed on the infant retinal images using various families of Daubechies wavelets. Inorder to select the number of decomposition levels suited for our application, we have analysed the wavelet decomposition in different levels like level one, level two and level three using various Daubechies families. Figure 14 shows the images reconstructed by taking only the detail bands of level 1, 2 and 3 decomposition using various families. From these we can notice that detail bands of one level decomposition are more predominant in visualizing blood vessel structure of infant images compared to detail bands of two level and three level decomposition. This may be due to the presence of unwanted details or noises that get introduced (while going for more finer details) at the higher level decomposition bands. Also among this various Daubechies families the results shows that level 1 decomposition of Db1 wavelet represents the vascular structure more efficiently compared to other wavelets and hence level one decomposition of Db1 wavelets is used for all further analysis.

To select the best band of Db1(Haar) wavelet transform which is useful for the analysis of blood vessel extraction, images were reconstructed using different combinations of detailed frequency bands. Among the various reconstructions HH band of one level decomposition gives more accurate results for blood vessel structures as shown in Fig. 15. Thus our further analysis takes only the HH band of one level decomposition of Db1(Haar) wavelet to compute the feature vectors for Plus disease classification.

-

(ii)

Curvelet based feature selection:

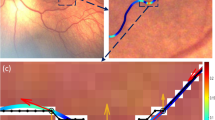

To select the best decomposition scales and levels of curvelet transform features suited for Plus disease classification, we have performed the reconstruction of curvelet coefficients in different scales and reached into a conclusion that detail bands of scale 3 gives better result for blood vessel extraction with segmented images as reference compared to other scales as in Fig. 16. This may be due to the presence of unwanted details that get imposed while going for more finer details.

The corresponding scale 3 coefficients are plotted in Fig. 17, these coefficients are arranged in a multi-scale, multi-directional way to reflect the geometric feature of the image and are organized in a hierarchical structure with the finest band capturing the highest frequency information such as fine details and noise, the intermediate or detail band capturing the medium-scale features such as edges and curves and the coarser band capturing the lower frequency information such as large-scale features and smooth variations. At the detail band, there are four strips in each band where the curvelet coefficients are further decomposed into sub-bands that correspond to different orientations in horizontal, vertical, and diagonal directions with the number of sub-bands depending on the choice of the directional filters we used in the decomposition.

The reconstruction using different sub bands is performed to analyse which sub band shows better results for extraction of vascular features. The analysis shows that sub band 2 represents blood vessels more accurately compared to other sub bands as shown in Fig. 18.

Because of the symmetry of the directional band pass images at each scale, only one half of the directional band pass images are sufficient for feature extraction. The reason why only first half of the total sub bands at a resolution level are considered for feature calculation is that, the curvelet at angle Θ produces the same coefficients as the curvelet at angle (Θ + π) in the frequency domain. Therefore, considering half of the total number of sub bands at each scale reduces the total computation time for the feature vector formation [37]. Thus we have performed a dimensionality reduction technique by employing this symmetric property using a thresholding method described in Fig. 19.

Reconstructed images at different thresholding levels of subband 2 of scale 3 decomposition is shown in Fig. 20. From this, we can notice that blood vessel structers are more predominant in the reconstruction of highest 50% of curvelet coefficients and thus follows the symmetric property.

The most interesting fact about curvelets is that it has been developed specially to represent objects with ’curve punctuated smoothness’ .i.e. objects which display smoothness except for discontinuity along a general curve; images with edges would be good example of this kind of objects. Wavelet transform has been profusely employed to address different problems of pattern recognition and computer vision because of their capability of detecting singularities. But, though wavelets are good at representing point singularities in both 1D and 2D signals, they fail to detect curved singularities efficiently. Figure 21 shows the edge representation capability of wavelet (left) and curvelet transform (right). For the square shape of wavelets at each scale, more wavelets are required for an edge representation than that compared to the number of required curvelets, which are of elongated needle shape. One more novelty of curvelet transform is that it is based on anisotropic scaling principal, whereas wavelets rely on isotropic scaling.

In the case of curvelet and wavelet transform, extracting features from these sub-band images and representing in a compact form is a major problem [44]. To overcome this we encode each sub-band images using its energy and standard deviation. Since the number of energy and standard deviation features of wavelet transform is less compared to curvelet transform, because of their lack of representing orientation features, we also extract the histogram features of wavelet tansform to make our analysis.

-

(i)

Energy: In signal processing, the energy of a signal X is defined as the area under the squared magnitude of the considered signal i.e., mathematically

-

(ii)

Standard deviation: It is a measure of contrast in an image. Larger the standard deviation, higher is the contrast.

Here, X is value in data points, μ is mean of data points and N is number of data points.

-

(iii)

Histogram Feature: Compute by partitioning the values in wavelet transform in to bins of uniform width by using an automatic binning algorithm.

4.4.1 Statistical analysis

Statistical analysis of features have an important role to validate their significance in Plus disease classification. Analysis of vascular features using kruskal-wallis test is shown in [28] and it can be concluded that vascular features namely average tortuosity, maximum width, leaf node count and vessel density have significant discrimination in the case of Plus and No Plus classes and hence significant for classification.

Here, we have evaluated the statistical significance of extracted transform based features using one-way analysis of variance (ANOVA) test. This test uses the variation (variance) within the classes and translates it into variation (i.e. differences) between the classes, taking into account the number of subjects in the classes. In this analysis the null hypothesis is that there is no statistically significant differences between the feature values of the two classes called Plus and No Plus. If the observed differences are high then it indicates that the difference between the features for the Plus and No Plus classes are statistically significant (lower ’p’ value). To analyse the effect of transform based features like wavelet and curvelet for Plus disease classification, we analysed them individually. If the p value is less than 0.05 then the feature calculated for each classes are statistically different and thus null hypothesis is rejected [14].

The graphical plots of ANOVA test for transform based features of Plus and No Plus images are shown in Fig. 22. The box plot function in Matlab, enables clear comparisons to be made between the significance of features to each classes by displaying a box and whisker plot. The box has lines at the lower quartile, median, and upper quartile values, while whiskers are lines extending from each end of the box to show the extent of the rest of the data and outliers are data with values beyond the ends of the whiskers. In a notched box plot, the notches represent a robust estimate of the uncertainty about the medians for box-to-box comparison. Boxes whose notches do not overlap indicate that the medians of the two groups differ at the 5% significance level.

We can see that there is a slight overlapping between the notches of wavelet transform features in terms of energy, standard deviation and histogram for the Plus and No Plus classes, while there is a remarkable difference between the notches of curvelet transform features in term of energy and standard deviation for the Plus and No Plus classes. Also the spacing between the different part of the boxes shows degree of skewness in the data points. In the case of curvelet energy features we can see a large skewness in the case of Plus disease than curvelet standard deviation features and wavelet features.

A small p-value of about 2.29863e-08 has been obtained for curvelet energy coefficient, showing that it rejects the null hypothesis that all class means are identical, while in the case of curvelet standard deviation features it is about 0.0045. Thus it implies that curvelet energy features are highly capable for distinguishing between Plus and No Plus images than curvelet standard deviation features, and have a major significance in the classification of Plus disease. In the case of wavelet energy, standard deviation and histogram features the p-value is not less than 0.05 indicating that it accepts the null hypothesis and thus shows its lower discriminating power for Plus disease classification.

4.5 Classification

An algorithms for the classification of Plus and No Plus cases are chosen based on the applications and the availability of data sets. Since we have limited data sets with 97 images of No Plus category and 81 images of Plus category, the study mainly aims to find a suitable set of features to aid better classification of Plus disease. The same set of features which works for one classifier may not give optimal performance for another classifier. However, since the task at hand is a binary classification problem with No Plus being the negative class and Plus being a positive class, we have performed the analysis by using an Artificial Neural Network (ANN) architecture.

Artificial Neural Networks (ANNs) classifier have been recognised as powerful and cost-effective tool for solving a large variety of problems using supervised learning approach. ANN uses the method of gradient descent and looks for the minimised error function in weight space by back propagation [41]. ANN architecture have input layer with neurons equal to the size of the feature vector. The output layer has only one neuron to distinguish between Plus and No Plus. The number of hidden layers and neuron in the hidden layers are empirically determined. We have varied the number of the neurons from 5 to 150 and chosen the one which gives the best accuracy. The Mean square error (MSE) to evaluate the errors for each output in individual iteration is represented mathematically by (11). Where, N is the number of iterations, Ei is actual output and E0 is out of the model.

In order to select the best architecture, we conducted the cross validation technique by using k-fold method. We analysed the ANN classifier result by tuning the hyper parameters like number of hidden layers, neurons in each layer, number of epoch, learning rate, training algorithms and train, validation and test split ratio as per syntax in (12) and the best network parameters are listed in Table 2.

5 Results

Plus/No Plus classification of ROP disease is performed using various supervised machine learning techniques. The results are evaluated in terms of Accuracy, Sensitivity and Specificity. The data used in this research is obtained from Narayana Nethralaya Eye Hospital, Bangalore, India. 178 Infant retinal images with 87 Plus and 99 No Plus cases have been assigned as the output target in machine learning techniques. All the results have been simulated in an i7 Processor with 64 GB RAM and using a 64-bit Windows-10 operating system in the MATLAB R2019a (64-bit) environment.

We have analyzed the performance of Plus disease classification system using various combinations of vascular features such as average tortuosity, leaf node count, vessel density, maximum width [28], and transform based features taking the energy and standard deviation of the curvelet coefficients and energy, standard deviation and histogram features of wavelet coefficients.

The performance parameters are calculated from the confusion matrix, which gives a summary of correct and incorrect predictions. Figure 23 shows the confusion matrix for a two-class problem. Diagonal entries show the count of all the correctly classified samples and the off-diagonal element represents misclassification, i.e., class i is predicted as class j. The performance matrices are computed using (13) to (15).

Where True Positives (TP) represents the case where abnormal images (Plus disease) are correctly detected as abnormal, True Negatives (TN) gives the case where normal images (No Plus) are correctly detected as normal. Similarly False Positives (FP) denotes images which are wrongly classified as abnormal (Plus disease) and in False Negatives (FN), the images wrongly classified as normal (No Plus).

The analysis of wavelet transform features in the classification of Plus disease in terms of energy, standard deviation and histogram features are shown in Tables 3, 4 and 5 respectively. The third column indicates the performance of the classifier considering only the wavelet features. The fourth column shows the results of adding the four vascular features along with the wavelet features. We have extracted the histogram features for various bin widths like 10, 20, 30 etc and noticed that bin width of 10 gives better result for Plus disease classification as in Table 5. From these tables we can reach in to a conclusion that HH1 band of energy of wavelet transform with four vascular features shows improvement in accuracy, sensitivity and specificity compared to other sub bands, standard deviation and histogram features.

Tables 6 and 7 shows the classification results for the proposed ANN architecture using the curvelet energy and curvelet standard deviation features respectively. The results are given for various thresholding levels of energy and standard deviation of subband 2 of scale 3 curvelet coefficients. The third column indicates the performance of the classifier considering only the curvelet features. The fourth column shows the results of adding the four vascular features along with the curvelet features.

From Tables 6 and 7 it can conclude that top 50% of the curvelet energy features along with four vascular features used have more significance in improving the accuracy, sensitivity and specificity values compared to curvelet standard deviation features and wavelet transform features because of their discriminating nature. Thus total number of features which gives improved accuracy for the proposed ANN architecture is 32 transform based features and 4 vascular features and totally 36 input features.

6 Discussions

The algorithm proposed for Plus disease classification has taken care of the various deformations that affects the ROP images by incorporating appropriate preprocessing techniques such as Gaussian filtering and high boost filtering. This eliminates the noise and illumination variations in the image. Also, this leads to improved contrast and enhanced vascular structure.

The training performance plot of the proposed ANN classifier for the best result in Table 6 is shown in Fig. 24. From this, we can see that both training loss and validation loss end up being roughly the same, hence there is perfect fitting.

Figure 25(a) shows ANN classifier’s testing confusion matrix. All confusion matrices with first two diagonal cells demonstrate the number and percentage of correct classifications by the network. This test confusion matrix plots 96.3% of classification are correct, and 3.7% are incorrect for the proposed system. Figure 25(b) shows the corresponding Receiver operating characteristics (ROC) curve which indicates the correct positive rate and false positive rate in different edge setting of the network, thus illustrates a premium percentage result for the system. The perfect results are obtained when all the test points are concentrated on the upper left corner of the ROC curve [12]. The ROC curve shown has more concentration of points towards upper left corner with the Area Under the Curve (AUC) as 0.9583.

Table 8 shows the performance analysis of Plus/No Plus classification with the other popular machine learning classifiers by using the same feature values which gives the best result for the proposed ANN architecture. From this table we can reach to a conclusion that our proposed ANN classifier gives better result compared to the conventional classifiers that are used for analysis. The parameter settings of used classifiers are given below,

-

Logistic regression : Batch size = 100, Regularization = L2 Regularization, Ridge parameter = 1.0E-8, Learning rate = 1.0E-5.

-

Naive Bayes : Batch size = 100.

-

Random Tree : Batch size = 100, Minimum total weight of the instances in a leaf = 1, Minimum Variance Probability = 0.001.

-

Random Forest : Batch size = 100, Bag Size Percent = 100, Number of iterations or number of trees = 100.

-

Support Vector Machines : Batch size = 100, Complexity parameter (C) = 0.1, Calibrator = Logistic, Epsilon for round-off error = 1.0E-12, Filter Type = Normalizing, kernel= Polykernal, Tolerance Parameter = 0.001.

The proposed method is also compared with similar existing works for Plus/No Plus classification. A direct comparison is not possible since there are wide variations with these methods in terms of number of images, features extracted for analysis and type of classifier employed as well the labelling of classes. However from the Table 9, it is clear that proposed method offers good performance on 178 images (81 Plus and 97 No Plus)in terms of accuracy, sensitivity and specificity by using vascular features like toruosity, width, leaf node count, and vessel density along with transform based feature in terms of energy of curvelet coefficients using a neural network classifier.

7 Conclusion

The major aim of this work is to develop a Neural Network classifier with optimal set of features with zero false negative to aid in the screening of ROP. A total of 178 images are taken for analysis, of which 97 images are healthy with the No Plus disease, and 81 images show vessel abnormalities denoting the presence of Plus Disease. This work is mainly focused on optimal feature extraction for the better classification of plus disease in ROP diagnosis. Along with vascular features available in literature, transform based features of Wavelet and Curvelet transforms are extracted for analysis. A neural network classifier with four vascular features representing the tortuosity, width and vessel abnormalities along with energy of the Curvelet coefficients (with highest 50% coefficients of sub band2 of level 3 decomposition) gave better results compared to the existing methods in literature in terms of accuracy (96%), sensitivity (100%) and specificity (92%). This shows the ability of Curvelet transform in representing the vascular features for the efficient classification of Plus disease. For the limited data set taken for experimentation, the proposed ANN architecture with 100% sensitivity gives promising results for a deployable ROP Screening system. The experimental analysis can be extended further by extracting new features using various descriptors like LBP, MLBP, LTP, SIFT and SURF algorithms [13] for identifying an improved feature set which can give better accuracy and specificity values. The improvement of performance matrices by employing deep neural network based architectures on an extended dataset to make a generalizable architecture which can be deployed in a hospital environment would be an immediate future scope of the work.

Data Availability

The datasets generated during and/or analysed during the current study are not publicly available due to ethical/legal/commercial restrictions.

References

AlZubi S, Islam N, Abbod M (2011) Multiresolution analysis using wavelet, ridgelet, and curvelet transforms for medical image segmentation. Journal of biomedical imaging, pp 1–8

AlZubi S, Islam N, Abbod M (2011) Multiresolution analysis using wavelet, ridgelet, and curvelet transforms for medical image segmentation. Journal of Biomedical Imaging, pp 1–8

Ataer-Cansizoglu E, Bolon-Canedo V, Campbell JP, Bozkurt A, Erdogmus D, Kalpathy-Cramer J, Patel S, Jonas K, Chan RP, Ostmo S, Chiang MF (2015) Computer-based image analysis for plus disease diagnosis in retinopathy of prematurity: performance of the “i-ROP” system and image features associated with expert diagnosis. Transl Vis Sci Techn 4(6):5

Ataer-Cansizoglu E, Kalpathy-Cramer J, You S, Keck K, Erdogmus D, Chiang MF (2015) Analysis of underlying causes of inter-expert disagreement in retinopathy of prematurity diagnosis. Methods Inf Med 54(01):93–102

Attallah O (2021) MB-AI-His: histopathological diagnosis of pediatric medulloblastoma and its subtypes via AI. Diagnostics 11(2):359

BahadarKhan K, A Khaliq A, Shahid M (2016) A morphological hessian based approach for retinal blood vessels segmentation and denoising using region based otsu thresholding. PloS one 7:e0158996

Bhuiyan MN, Shamsujjoha M, Ripon SH, Proma FH, Khan F (2019) Transfer learning and supervised classifier based prediction model for breast cancer. In: Big Data Analytics for Intelligent Healthcare Management Academic Press, pp 59–86

Brown JM, Campbell JP, Beers A, Chang K, Ostmo S, Chan RP, Dy J, Erdogmus D, Ioannidis S, Kalpathy-Cramer J, Chiang MF (2018) Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol 136(7):803–10

Byra M, Styczynski G, Szmigielski C, Kalinowski P, Michałowski Ł, Paluszkiewicz R, Ziarkiewicz-wróblewska B, Zieniewicz K, Sobieraj P, Nowicki A (2018) Transfer learning with deep convolutional neural network for liver steatosis assessment in ultrasound images. Int J Comput Assisted Radiol Surg 13:1895–903

Chiang MF, Gelman R, Jiang L, Martinez-Perez ME, Du YE, Flynn JT (2007) Plus disease in retinopathy of prematurity: an analysis of diagnostic performance. Trans Am Ophthalmol Soc 105:73

Chiang MF, Quinn GE, Fielder AR, Ostmo SR, Chan RP, Berrocal A, Binenbaum G, Blair M, Campbell JP, Capone Jr A, Chen Y (2021) International classification of retinopathy of prematurity. Ophthalmol 128 (10):e51–68

Erguzel TT, Ozekes S, Tan O, Gultekin S (2015) Feature selection and classification of electroencephalographic signals: an artificial neural network and genetic algorithm based approach. Clin EEG Neurosci 46(4):321–6

Fekri-Ershad S (2021) Cell phenotype classification using multi threshold uniform local ternary patterns in fluorescence microscope images. Multimed Tools Appl, pp 12103–16

Ferreira JE, Pinheiro MT, dos Santos WR, Maia RD (2016) Graphical representation of chemical periodicity of main elements through boxplot. Educació,n química 27(3):209–16

Gopal L, Sharma T, Ramachandran S, Shanmugasundaram R, Asha V (1995) Retinopathy of prematurity: a study. Indian J Ophthalmol 43 (2):59

Gschließer A, Stifter E, Neumayer T, Moser E, Papp A, Pircher N, Dorner G, Egger S, Vukojevic N, Oberacher-Velten I, Schmidt-Erfurth U (2015) Inter-expert and intra-expert agreement on the diagnosis and treatment of retinopathy of prematurity. Am J Ophthalmol 160(3):553–60

Hu J, Chen Y, Zhong J, Ju R, Yi Z (2018) Automated analysis for retinopathy of prematurity by deep neural networks. IEEE Trans Med Imaging 6;38(1):269–79

Huang YP, Vadloori S, Chu HC, Kang EY, Wu WC, Kusaka S, Fukushima Y (2020) Deep learning models for automated diagnosis of retinopathy of prematurity in preterm infants. Electronics 9(9):1444

Huang YP, Vadloori S, Kang EY, Wu WC (2022) Computer-Aided Detection of retinopathy of prematurity severity in preterm infants via measurement of temporal vessel width and angle. Front Pediatr 10:11

Jemshi KM, Gopi VP, Issac Niwas S (2018) Development of an efficient algorithm for the detection of macular edema from optical coherence tomography images. Int J CARS 1369–77

Jero SE, Ramu P, Ramakrishnan S (2015) ECG Steganography using curvelet transform. Biomed Signal Process Control 22:161–9

Jomier J, Wallace DK, Aylward SR (2003) Quantification of retinopathy of prematurity via vessel segmentation. In: Medical Image Computing and Computer-Assisted intervention-MICCAI 2003: 6th International Conference, montréal, Canada Proceedings Springer Berlin Heidelberg, pp 620–626

Kiely AE, Wallace DK, Freedman SF, Zhao Z (2010) Computer-assisted measurement of retinal vascular width and tortuosity in retinopathy of prematurity. Archives Ophthalmol 128(7):847–52

Lei B, Zeng X, Huang S, Zhang R, Chen G, Zhao J, Wang T, Wang J, Zhang G (2021) Automated detection of retinopathy of prematurity by deep attention network. Multimed Tools Appl 80:36341–60

Lei B, Zeng X, Huang S, Zhang R, Chen G, Zhao J, Wang T, Wang J, Zhang G (2021) Automated detection of retinopathy of prematurity by deep attention network. Multimed Tools Appl 80:36341–60

Mao J, Luo Y, Liu L, Lao J, Shao Y, Zhang M, Zhang C, Sun M, Shen L (2020) Automated diagnosis and quantitative analysis of plus disease in retinopathy of prematurity based on deep convolutional neural networks. Acta Ophthalmol 98(3):e339–45

Mills MD (2007) Evaluating the cryotherapy for retinopathy of prematurity study (CRYO-ROP). Arch Ophthalmol 125(9):1276–81

Nisha KL, Sreelekha G, Sathidevi PS, Mohanachandran P, Vinekar A (2019) A computer-aided diagnosis system for plus disease in retinopathy of prematurity with structure adaptive segmentation and vessel based features. Comput Med Imaging Graph 74:72–94

Oloumi F, Rangayyan RM, Casti P, Ells AL (2015) Computer-aided diagnosis of plus disease via measurement of vessel thickness in retinal fundus images of preterm infants. Comput Biol Med 1(66):316–29

Pour EK, Pourreza H, Zamani KA, Mahmoudi A, Sadeghi AM, Shadravan M, Karkhaneh R, Pour RR, Esfahani MR (2017) Retinopathy of prematurity-assist: Novel software for detecting plus disease. Korean J Ophthalmol 31(6):524–32

Quinn GE (2005) The international classification of retinopathy of prematurity revisited. Arch Ophthalmol 123:991–9

Rajashekar D, Srinivasa G, Vinekar A (2016) Comprehensive retinal image analysis for aggressive posterior retinopathy of prematurity. PloS one 11 (10):e0163923

Rani P, Elagiri Ramalingam R, Rajamani KT, Kandemir M, Singh D (2016) Multiple instance learning: Robust validation on retinopathy of prematurity. Int J Ctrl Theory 9:451–9

Rani P, Rajkumar ER (2016) Classification of retinopathy of prematurity using back propagation neural network. Int J Biomed Eng Technol 22(4):338–48

Repka MX, Tung B, Good WV, Capone A, Shapiro MJ (2011) Outcome of eyes developing retinal detachment during the Early Treatment for Retinopathy of Prematurity study. Arch Ophthalmol 129(9):1175–9

Samant A, Kadge S (2017) Classification of a retinal disease based on different supervised learning techniques. Int J Sci Res Netw Secur Commun 5 (3):9–13

Schmitt A, Wessel B, Roth A (2014) An innovative curvelet-only-based approach for automated change detection in multi-temporal SAR imagery. Remote Sens 6(3):2435–62

Shafiei F, Ershad SF (2020) Detection of lung cancer tumor in CT scan images using novel combination of super pixel and active contour algorithms. Traitement du Signal 37(6):1029–35

Shahriar MT, Li H (2020) A study of image pre-processing for faster object recognition. arXiv:2011.069281

Tan Z, Simkin S, Lai C, Dai S (2019) Deep learning algorithm for automated diagnosis of retinopathy of prematurity plus disease. Transl Vis Sci Technol 8(6):23

Thangaraj S, Periyasamy V, Balaji R (2018) Retinal vessel segmentation using neural network. IET Image Process 12(5):669–78

Vinekar A (2011) IT-enabled innovation to prevent infant blindness in rural India: the KIDROP experience. Journal of Indian Business Research

Vinekar A, Mangalesh S, Jayadev C, Gilbert C, Dogra M, Shetty B (2017) Impact of expansion of telemedicine screening for retinopathy of prematurity in India. Indian J Ophthalmol 65(5):390

Vyas R, Kanumuri T, Sheoran G, Dubey P (2019) Efficient iris recognition through curvelet transform and polynomial fitting. Optik 185:859–67

Wallace DK, Jomier J, Aylward SR, Landers IIIMB (2003) Computer-automated quantification of plus disease in retinopathy of prematurity. J Am Assoc Pediatr Ophthalmol Strabismus 7(2):126–30

Wang J, Ju R, Chen Y, Zhang L, Hu J, Wu Y, Dong W, Zhong J, Yi Z (2018) Automated retinopathy of prematurity screening using deep neural networks. EBioMedicine 35:361–8

Wang J, Ju R, Chen Y, Zhang L, Hu J, Wu Y, Dong W, Zhong J, Yi Z (2018) Automated retinopathy of prematurity screening using deep neural networks. EBioMedicine 35:361–8

Worrall DE, Wilson CM, Brostow GJ (2016) Automated retinopathy of prematurity case detection with convolutional neural networks. In: Deep Learning and data labeling for medical applications: First international workshop, LABELS 2016, and Second international workshop, DLMIA 2016, Held in conjunction with MICCAI 2016, Athens, Greece, Springer International Publishing, Proceedings 1 2016, pp 68–76

Yadav P (2018) A brief description of wavelet and wavelet transforms and their applications. Int J Stat Appl Math 3(1):266–71

Yavuz Z, Köse C (2017) Blood vessel extraction in color retinal fundus images with enhancement filtering and unsupervised classification. Journal of healthcare engineering

Yildiz V, Ioannidis S, Yildiz I, Tian P, Campbell JP, Ostmo S, Kalpathy-Cramer J, Chiang MF, Erdoğmuş D, Dy J (2021) Structural Visual Guidance Attention Networks In Retinopathy Of Prematurity. In: 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI) (pp 353–357). IEEE

Yildiz VM, Tian P, Yildiz I, Brown JM, Kalpathy-Cramer J, Dy J, Ioannidis S, Erdogmus D, Ostmo S, Kim SJ, Chan RP (2020) Plus disease in retinopathy of prematurity: convolutional neural network performance using a combined neural network and feature extraction approach. Transl Vis Sci Technol 9 (2):10

Zhang D, Zhang D (2019) Wavelet transform. Fundamentals of image data mining: Analysis, Features, Classification and Retrieval, pp 35–44

Zhang R, Zhao J, Xie H, Wang T, Chen G, Zhang G, Lei B (2022) Automatic diagnosis for aggressive posterior retinopathy of prematurity via deep attentive convolutional neural network. Expert Syst Appl 187:115843

Zhang R, Zhao J, Xie H, Wang T, Chen G, Zhang G, Lei B (2022) Automatic diagnosis for aggressive posterior retinopathy of prematurity via deep attentive convolutional neural network. Expert Syst Appl 187:115843

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by K M Jemshi, G. Sreelekha, P. S. Sathidevi and Poornima Mohanachandran. The first draft of the manuscript was written by K. M. Jemshi and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval

This study is approved by the institutional review board and ethics committee of Narayana Netralaya eye hospital, Bangalore, India.

Consent for Participate

Informed consent was taken from the parents of the study participants. Also, data were de-identified following encoding to ensure confidentiality of study participants.

Consent for Publication

The authors affirm that parents of human research participants provided informed consent for publication of the images in Fig. 1a, b.

Competing Interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jemshi, K.M., Sreelekha, G., Sathidevi, P. et al. Plus disease classification in Retinopathy of Prematurity using transform based features. Multimed Tools Appl 83, 861–891 (2024). https://doi.org/10.1007/s11042-023-15430-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15430-w