Abstract

In recent years, artificial intelligence tools have demonstrated considerable potential for analyzing medical images. Several deep learning models have been proposed in previous studies for detecting gastrointestinal (GI) tract abnormalities such as ulcers, polyps, bleeding, and other lesions. Manual investigation of these lesions requires time, cost, and an expert physician. Automatic detection and classification of GI tract lesions are vital because misdiagnosis can affect patients' quality of life. In this study, an effective model is proposed for GI tract lesion classification. Its main aim is to classify GI tract lesions from endoscopic video frames precisely and automatically. To achieve this aim, three scenarios are designed, assessed, and compared using 5-fold cross-validation on the KVASIR V1 dataset. This dataset includes anatomical landmarks (pylorus, z-line, and cecum), pathological findings (esophagitis, ulcerative colitis, and polyp), and polyp removals (dyed lifted polyps and dyed resection margins) as output classes. Each class includes 500 images, and image resolution varies from 720 × 576 to 1920 × 1072 pixels. The first and second scenarios are based on deep neural networks (DNNs). In the first scenario, a novel approach is additionally proposed for visualizing the features extracted by a convolutional auto-encoder (CAE) as 2-D data maps. The third scenario is based on pre-trained convolutional neural networks (CNNs). The experimental results show that the average accuracies of the first, second, and third scenarios are 99.87 ± 0.001, 92.07 ± 0.086, and 90.55 ± 0.111, respectively. The first scenario outperforms the others, with an average accuracy of 99.87 ± 0.001 and an AUC of 100.00 ± 0.000.


1 Introduction

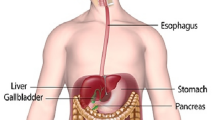

According to the GLOBOCAN 2020 estimates of cancer incidence and mortality produced by the International Agency for Research on Cancer, several cancers that affect the gastrointestinal (GI) tract, namely esophageal, stomach, and colorectal cancer, rank among the most common cancers worldwide [35]. In 2020, an estimated 1.9 million new cases of colorectal cancer and 935,000 deaths from it occurred, ranking it third in incidence and second in mortality [35]. Stomach cancer, with 1.1 million new cases and 769,000 deaths, ranks fifth in incidence and fourth in mortality globally [35]. Esophageal cancer ranks seventh in incidence, with 604,000 new cases, and sixth in mortality, with 504,000 deaths [35].

With the advent of minimally invasive surgery (MIS) techniques, such as endoscopy for examining the upper GI tract and colonoscopy for screening the lower GI tract, physicians use these techniques to find and remove lesions, ulcers, polyps, and other abnormalities [28]. Failing to diagnose these abnormalities during GI screening can allow diseases such as GI malignancies to develop in patients over the following years [12]. To reduce the rate of misdiagnosis, previous studies have focused on the automatic identification, classification, and localization of abnormalities in medical images [8, 11, 14, 20, 30, 32].

Previous studies have proposed methods for the automatic detection of polyps [32], tumors [36], cancer [19], erosions and ulcers [2], and bleeding [4, 16, 27].

Previous studies have proposed methods based on conventional machine learning models [19, 23, 36] and on deep neural networks (DNNs) [4, 32], a newer branch of machine learning [5]. DNNs can automatically extract, analyze, and learn valuable features from raw data [5, 11, 20, 30], whereas conventional machine learning models require handcrafted features to be extracted before training [5]. Some studies combine handcrafted features with DNN features to enhance performance [10, 16].

Different DNN architectures have been used in previous studies for the automatic identification of GI tract abnormalities, such as convolutional neural networks (CNNs) [7, 37], auto-encoders (AEs) [13], region-based convolutional neural networks (R-CNNs) [39], and generative adversarial networks (GANs) [31].

In [7], the researchers proposed a CNN to distinguish bleeding from non-bleeding capsule endoscopy video frames. They used a pre-trained AlexNet, of which they trained the last dense layers, together with SegNet for semantic segmentation of the bleeding zones [7].

Another study proposed a method consisting of two sequential convolutional encoder-decoders to extract features from images and detect polyps automatically [13]. The novelty of that approach is a hetero-associator (hetero-encoder) at the front of the model, which generates labeled images with a specific similarity to the actual image [13].

Another study introduced a feature learning method named stacked sparse auto-encoder with image manifold constraint (SSAEIM) to learn discriminative representations of polyps and recognize them in wireless capsule endoscopy (WCE) images [38]. It assumes that images of the same class should share similar features, while images of different classes should differ sufficiently [38].

Our previous study proposed a semi-supervised deep model for anatomical landmark detection from endoscopic video frames. This semi-supervised convolutional neural network (SSCNN) is useful when labeled video frames are difficult to obtain. We examined that method on the KVASIR V1 dataset [28].

Previous research has used the representational power of convolutional auto-encoder (CAE) networks for feature extraction. In [21], a CNN with three convolutional layers and average pooling layers is used to extract smooth features from Optical Emission Spectroscopy (OES) data. In another study, the researchers used a CAE to learn audio features as part of a voice conversion pipeline, obtaining high-level features that faithfully represent the audio file [6].

Feature extraction is a necessary step in many machine learning problems. Automatic feature extraction methods convert complex data into lower-dimensional representations while preserving as much information as possible. This approach to feature extraction underlies many recent advances in computer vision.

As previous studies demonstrate, researchers have increasingly turned to DNNs in recent years because of their capabilities in various areas. Combining several DNNs so that their advantages complement each other can be helpful for finding lesions in endoscopic frames.

Previous studies have shown that some classes are difficult to discriminate from each other, and some automatic models have not performed well in distinguishing them [2]. Some researchers have addressed this issue by working on a new extended version of the dataset [3, 25] or by focusing on specific lesions [15, 29].

The primary purpose of this study is to develop a novel approach based on a combination of DNNs for classifying GI tract lesions from endoscopic video frames. The second purpose is to provide a novel method that extracts high-level features from the endoscopic video frames and depicts them as a 2-D data map.

The main novelties of the proposed method can be summarized as follows:

- Proposing a new approach combining DNNs to classify GI tract lesions from endoscopic video frames.
- Utilizing the benefits of CAE and 2-D visualization together.
- Extracting high-level features with CAE and converting them into 2-D data maps.
- Training a CNN with the novel 2-D visualization data maps.

2 Materials and methods

In the proposed method, three different scenarios are designed and compared for classifying GI tract lesions from endoscopic video frames, as shown in Fig. 1.

Fig. 1 The three scenarios designed for classifying GI tract lesions from endoscopic video frames. a The first scenario (CAE-2DV-CNN). b The second scenario (CNN-Sc). c The third scenario (Incept_TL)

As illustrated in Fig. 1, the first proposed scenario consists of encoding N patches of each image using a CAE, visualizing the resulting features as a 2-D data map that is treated as an image, and feeding this 2-D data map image into a CNN as its input. The patches extracted from each image are fed into the convolutional auto-encoder as input. Since this scenario combines convolutional auto-encoders, 2-D visualization, and CNNs, we named it the CAE-2DV-CNN scenario. The second scenario consists of training an end-to-end CNN for classifying the KVASIR V1 dataset, so we named it CNN-Sc. The last scenario uses Inception-V3 for transfer learning, so we called it Incept_TL.

More details of the main steps of each scenario are described in the following subsections.

2.1 Dataset description

Several studies have used the KVASIR V1 dataset for designing and examining their proposed methods [2, 28, 29], and we use it as well. It consists of 4,000 images covering anatomical landmarks, pathological findings, and polyp removal [28]. The images are grouped into eight classes. Each class includes 500 images with resolutions ranging from 720 × 576 to 1920 × 1072 pixels [28].

K-fold cross-validation (C.V.) is a sampling technique in which the dataset is partitioned into k equal subsets [34]. In each of the k rounds, one subset serves as the test dataset and the remaining subsets serve as the training dataset. In all scenarios of the proposed method, five-fold cross-validation is used for sampling from the endoscopic video frames to build the training and test datasets. In each fold, 80% of the images (3,200) are used for training and 20% (800) for testing.
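The fold sizes quoted above follow directly from splitting 4,000 images into five equal parts. The sketch below uses plain Python and contiguous index blocks only to illustrate the arithmetic; the function name `k_fold_indices` is hypothetical, and whether the original experiments shuffled or stratified the images by class is not stated in the text.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous, near-equal folds."""
    # Distribute any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(4000, 5))
fold_shapes = [(len(train), len(test)) for train, test in folds]
print(fold_shapes)  # five (train, test) size pairs
```

In practice the indices would usually be shuffled, and often stratified by class, before splitting; that step is omitted here.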

2.2 The first proposed scenario (CAE-2DV-CNN) for classifying GI tract lesions from endoscopic video frames

The first scenario, as shown in Fig. 1, includes image preprocessing and patch extraction, encoding the image with the CAE, 2-D visualization of the feature map as a data map, training a CNN classifier on the data maps, and evaluation and validation.

2.2.1 Image preprocessing and patch extraction

First, images are resized to 64 × 64 pixels. Then N patches (N = 1089) of 32 × 32 pixels are extracted from each image.
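The patch count can be checked with a short calculation. The text does not state the stride of the sliding window; a stride of 1 pixel is assumed below because it is the value that reproduces N = 1089 for 32 × 32 patches on a 64 × 64 image (33 positions per axis, 33 × 33 = 1089). The helper names are hypothetical.

```python
def count_patches(image_size, patch_size, stride):
    """Number of square patches obtained by sliding a window over a square image."""
    per_axis = (image_size - patch_size) // stride + 1
    return per_axis * per_axis


def patch_origins(image_size, patch_size, stride):
    """Top-left (row, col) corner of every patch, in row-major order."""
    positions = range(0, image_size - patch_size + 1, stride)
    return [(r, c) for r in positions for c in positions]


# Assumed stride of 1 pixel (not stated in the text).
n_patches = count_patches(64, 32, 1)
origins = patch_origins(64, 32, 1)
print(n_patches, origins[0], origins[-1])
```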

2.2.2 Encoding image by convolutional auto-encoder (CAE)

An auto-encoder (AE) is a specific type of artificial neural network (ANN) whose aim is to regenerate its inputs in an unsupervised fashion [22]. A typical AE consists of two main blocks: an encoder block, which compresses the inputs into a low-dimensional representation, and a decoder block, which is trained to reconstruct the inputs from the features extracted by the encoder block [22]. The encoder block is a strong feature extractor that can be combined with suitable output layers and then fine-tuned to obtain the desired features [21]. The error between the input images and their reconstructions is minimized by optimizing Eq. (1).
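The reconstruction objective can be written out explicitly. The following is a standard mean-squared-error form, consistent with the MSE loss named in the training setup. The symbols \(f_\theta\) (encoder), \(g_\phi\) (decoder), and \(M\) (number of training patches) are notation assumed here rather than taken from the original Eq. (1).

```latex
\mathcal{L}(\theta, \phi) = \frac{1}{M} \sum_{m=1}^{M}
  \left\lVert x_m - g_\phi\!\left(f_\theta(x_m)\right) \right\rVert_2^2
```

After training, only the encoder \(f_\theta\) is needed to produce the features that are passed on to the visualization step.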

In the proposed method, the encoder block takes images as inputs, so its first layers should be convolutional. This type of AE is called a convolutional auto-encoder (CAE). CAEs have been used to exploit the power of CNNs in feature extraction [21]. The patches extracted from each image are fed into the CAE as input. The best-performing CAE architecture among those examined for classifying gastrointestinal (GI) tract lesions from endoscopic video frames is shown in Table 1.

The CAE is trained with the Adam optimizer [18], a learning rate of 0.001, and a mean squared error (MSE) loss function. Finally, for each image, the features produced by the encoder of our CAE are saved. The hyper-parameter values for each proposed scenario are listed in Table 4.

2.2.3 Visualizing data into two dimensions and generating a 2-D data map

In this step, to construct the inputs for training the CNN, the features generated by the encoder block are reshaped to size (N, 4*4*8). Next, the similarity relationships among the patches extracted from each image are visualized by drawing a scatter plot, which yields a 2-dimensional data map. The resolution of the resulting data maps is 378 × 248 pixels. Sample 2-D data maps for the patches of individual images are illustrated in Fig. 2.
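The two mechanical parts of this step can be sketched in plain Python: flattening each 4 × 4 × 8 encoder output into a 128-value row, and placing 2-D points on a 378 × 248 pixel canvas. How the 128-dimensional rows are turned into 2-D coordinates is not specified in the text, so that projection is deliberately left out; `flatten_feature_map` and `to_pixel` are hypothetical helper names, and the min-max scaling is an assumption about how a scatter plot maps points to pixels.

```python
def flatten_feature_map(feature_map):
    """Flatten one 4 x 4 x 8 encoder output (nested lists) into a 128-value row."""
    return [value for row in feature_map for cell in row for value in cell]


def to_pixel(points, width=378, height=248):
    """Min-max scale 2-D points onto a width x height pixel canvas."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_min = min(xs), min(ys)
    # Fall back to a span of 1.0 so identical coordinates do not divide by zero.
    x_span = (max(xs) - x_min) or 1.0
    y_span = (max(ys) - y_min) or 1.0
    return [
        (round((x - x_min) / x_span * (width - 1)),
         round((y - y_min) / y_span * (height - 1)))
        for x, y in points
    ]


# One dummy encoder output per patch: N = 1089 rows of 4*4*8 = 128 values.
dummy_map = [[[0.0] * 8 for _ in range(4)] for _ in range(4)]
features = [flatten_feature_map(dummy_map) for _ in range(1089)]
print(len(features), len(features[0]))

# Hypothetical 2-D coordinates standing in for the unspecified projection.
corners = to_pixel([(0.0, 0.0), (1.0, 1.0)])
print(corners)
```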

2.2.4 Designing and training end-to-end CNN classifier

The 2-D data map images are fed to a CNN in an end-to-end fashion to be classified into the different classes of GI tract lesions. A CNN is a type of deep neural network that can learn hierarchical features from low to high levels. The grid search method is used for tuning the hyper-parameters, which include the learning rate, the activation function, the dropout rate, the batch size, the optimizer, the number of convolutional blocks, and the number of neurons in the convolutional layers. The CNN architecture with the best performance on the validation dataset among those examined is shown in Table 2.
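Grid search simply evaluates every combination of candidate hyper-parameter values and keeps the best one. The sketch below shows the mechanics with the standard library. The candidate values in `param_grid` are hypothetical, and `toy_score` is a stand-in for "train the CNN with these settings and return its validation accuracy", not the scoring used in the study.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate score_fn on every combination in param_grid; return the best."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical candidate values; the grids actually searched are not given in the text.
param_grid = {
    "learning_rate": [0.01, 0.001, 0.0001],
    "dropout_rate": [0.25, 0.5],
    "batch_size": [128, 512],
}


def toy_score(params):
    """Placeholder objective that peaks at lr=0.001, dropout=0.5, batch=512."""
    return (-abs(params["learning_rate"] - 0.001)
            - abs(params["dropout_rate"] - 0.5)
            - abs(params["batch_size"] - 512) / 1000)


best_params, best_score = grid_search(param_grid, toy_score)
print(best_params)
```

The cost grows multiplicatively with each added hyper-parameter (here 3 × 2 × 2 = 12 training runs), which is why the searched grids are usually kept small.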

According to Table 2, the CNN architecture consists of several layer types, namely convolution, pooling, dropout, and fully connected (dense) layers. Any change in the structure of a CNN creates a new architecture. The convolutional layers consist of filters of different sizes that slide over the input images. Through these layers, the features of the image are learned and saved into a feature map [26]. If \(W\) denotes the height of a filter, a square filter has size \(W\times W\) (width by height). The pre-nonlinearity input of each layer, \({\text{x}}_{\text{i}\text{j}}^{\text{l}}\), is computed by the filter as in Eq. (2).
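For reference, a common way to write the pre-nonlinearity input of a convolutional layer with a \(W\times W\) filter is given below. It is a sketch in the notation of the surrounding text (x for the pre-nonlinearity input, y for the layer output, w for the filter, b for the bias). The summation indices p and q and the activation symbol \(\sigma\) are assumptions, and the original Eq. (2) may differ in detail.

```latex
x_{ij}^{l} = \sum_{p=0}^{W-1} \sum_{q=0}^{W-1} w_{pq}\, y_{(i+p)(j+q)}^{l-1} + b,
\qquad
y_{ij}^{l} = \sigma\!\left(x_{ij}^{l}\right)
```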

In Eq. (2), x is the input, y is the output, w is the convolution filter, and b is the bias. Sliding the convolution filters over the input images extracts features while keeping the number of parameters low. The pooling layer further reduces the number of parameters. It passes the relevant features on to the following layers and comes in several variants, including max-pooling, average-pooling, and sum-pooling. The max-pooling used in this study is given by Eq. (3).
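Max-pooling keeps the largest value in each window of the input matrix. The following plain-Python sketch illustrates the operation on a small example; the 2 × 2 window and stride of 2 are assumed defaults, since the pooling sizes used in the study are given in the architecture tables rather than in the text.

```python
def max_pool_2d(matrix, pool_size=2, stride=2):
    """Return the matrix of window maxima for a 2-D list of numbers."""
    rows, cols = len(matrix), len(matrix[0])
    pooled = []
    for r in range(0, rows - pool_size + 1, stride):
        pooled_row = []
        for c in range(0, cols - pool_size + 1, stride):
            window = [matrix[r + dr][c + dc]
                      for dr in range(pool_size)
                      for dc in range(pool_size)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


x = [[1, 3, 2, 0],
     [4, 6, 5, 1],
     [7, 2, 9, 8],
     [0, 1, 3, 4]]
pooled = max_pool_2d(x)
print(pooled)  # [[6, 5], [7, 9]]
```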

In Eq. (3), x is the input matrix. In our method, to mitigate vanishing and exploding gradients, the ReLU activation function is applied after the convolution operation, which introduces non-linearity into the DNN. ReLU is calculated as \(\max(0, x)\).

During training, ReLU units can die, i.e., they output zero for every input and stop updating. The Leaky ReLU, given by Eq. (4), is used to overcome this problem.

In the last dense layer, the softmax activation function, calculated as in Eq. (5), is used to classify the inputs.
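The three activation functions mentioned in this subsection can be sketched in a few lines of Python. The negative slope `alpha=0.01` for the Leaky ReLU is a commonly used default and an assumption here, since the value in the original Eq. (4) is not available. The softmax subtracts the maximum logit before exponentiating, a standard trick for numerical stability that does not change the result.

```python
import math


def relu(x):
    """ReLU: max(0, x)."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for positive inputs, a small slope alpha * x otherwise."""
    return x if x > 0 else alpha * x


def softmax(logits):
    """Softmax over a list of logits, shifted by the max for numerical stability."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Eight equal logits, one per class, give a uniform distribution.
uniform = softmax([0.0] * 8)
probs = softmax([2.0, 1.0, 0.1])
print(relu(-2.0), leaky_relu(-2.0), uniform[0])
```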

The CNN is trained for 100 epochs with the Adam optimizer [18], a learning rate of 0.001, and a batch size of 512. The activation function for all layers except the last is ReLU [24]. The last layer uses the softmax activation function.

As shown in Fig. 1a, the main steps of our proposed CAE-2DV-CNN are described in Algorithm 1.

2.2.5 Evaluation and validation

The scenarios should be assessed with precise metrics to weigh their strength and generalizability. These measures include accuracy, precision, recall, F1-score, and the area under the receiver operating characteristic (ROC) curve (AUC) [9].

Accuracy shows the strength of the model in classifying the data, as in Eq. (6) [9]:

TP is the number of true positives, TN is the number of true negatives, and N is the total number of data records.

Precision denotes the portion of samples predicted as positive that are actually positive [9]. This measure is calculated as in Eq. (7).

Recall, also known as the true positive rate, is given by Eq. (8) and shows the portion of actual positive samples that are predicted correctly.

FP is the number of false positives, and FN is the number of false negatives.

The F1-measure is the harmonic mean of precision and recall, as is shown in Eq. (9) [9].
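These four measures have standard definitions: accuracy = (TP + TN) / N, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). The sketch below computes them from raw counts; the counts in the example are hypothetical and are not results from this study.

```python
def accuracy(tp, tn, n):
    """Fraction of all records that are classified correctly."""
    return (tp + tn) / n


def precision(tp, fp):
    """Fraction of predicted positives that are actually positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are predicted as positive."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts for one class, for illustration only.
tp, fp, fn, tn = 90, 10, 30, 870
n = tp + fp + fn + tn
acc = accuracy(tp, tn, n)
prec = precision(tp, fp)
rec = recall(tp, fn)
f1 = f1_score(prec, rec)
print(acc, prec, rec, round(f1, 4))
```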

Eqs. (10)-(15) show the measures used to estimate the performance of multi-class classification [33]:

In the above equations, NOC is the number of different classes.
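Multi-class measures of this kind are typically obtained by computing per-class counts from the confusion matrix and then averaging, either per class (macro) or over pooled counts (micro). The sketch below assumes these standard definitions, since the exact form of Eqs. (10)-(15) is not reproduced here. Rows of the confusion matrix are taken to be actual classes and columns predicted classes, and the 3-class matrix is hypothetical.

```python
def per_class_counts(confusion, k):
    """Return (tp, fp, fn) for class k; rows are actual, columns are predicted."""
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(row[k] for row in confusion) - tp
    return tp, fp, fn


def macro_precision_recall(confusion):
    """Average the per-class precision and recall over the NOC classes."""
    noc = len(confusion)
    precisions, recalls = [], []
    for k in range(noc):
        tp, fp, fn = per_class_counts(confusion, k)
        precisions.append(tp / (tp + fp) if (tp + fp) else 0.0)
        recalls.append(tp / (tp + fn) if (tp + fn) else 0.0)
    return sum(precisions) / noc, sum(recalls) / noc


def micro_precision_recall(confusion):
    """Pool tp, fp, and fn over all classes before dividing."""
    counts = [per_class_counts(confusion, k) for k in range(len(confusion))]
    tp = sum(c[0] for c in counts)
    fp = sum(c[1] for c in counts)
    fn = sum(c[2] for c in counts)
    return tp / (tp + fp), tp / (tp + fn)


# Hypothetical 3-class confusion matrix (10 samples per actual class).
confusion = [[8, 1, 1],
             [2, 7, 1],
             [0, 2, 8]]
macro_p, macro_r = macro_precision_recall(confusion)
micro_p, micro_r = micro_precision_recall(confusion)
print(round(macro_p, 4), round(macro_r, 4), round(micro_p, 4), round(micro_r, 4))
```

With balanced classes, as in KVASIR V1 (500 images per class), macro and micro recall coincide.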

2.3 The second scenario (CNN-Sc) for classifying GI tract lesions from endoscopic video frames

In the second scenario, an end-to-end CNN model is designed, and the KVASIR V1 images are fed into it as inputs. The hyper-parameters are tuned with the grid search method. The architecture with the best performance among those examined is listed in Table 3.

The second scenario is trained for 100 epochs with Adam optimizer [18] with a learning rate of 0.001, and a batch size of 512.

2.4 The third scenario (Incept_TL) for classifying GI tract lesions from endoscopic video frames

For the last scenario, we assess different pre-trained CNN models, such as MobileNet [29], Inception-V3 [15], VGG16, and VGG19 [34], and compare their results. Figure 2 shows the performance measures of the models evaluated in the last scenario.

As is shown in Fig. 2, Inception-V3 demonstrates the best performance among the compared and examined pre-trained CNNs for transfer learning in the proposed method.

2.4.1 Transfer learning

We use CNNs pre-trained on the ImageNet dataset. The convolutional layers of the pre-trained CNNs are frozen so that their connection weights do not change. To avoid overfitting, dropout layers are added. The last layer of the CNN is replaced with a dense layer with eight neurons and the softmax activation function. Finally, the root-mean-square propagation (RMSprop) optimizer is used with a learning rate of 0.01 to tune the weights of the last dense layer. The model is trained for 100 epochs with a batch size of 512. The images are resized to 75 × 75 pixels and fed into the model as inputs.

Table 4 lists the best hyper-parameter values of the models in the different scenarios.

3 Results and discussion

In this section, the scenarios are compared on the classification performance measures to find the most effective one.

In Table 5, the performance measures for each proposed scenario for classifying GI tract lesions are reported based on five-fold cross validation strategy.

As shown in Table 5, accuracies of 99.87 ± 0.001, 92.07 ± 0.086, and 90.55 ± 0.111 are achieved for CAE-2DV-CNN, CNN-Sc, and Incept_TL, respectively. So, the first scenario (CAE-2DV-CNN) performs best among the three. This demonstrates that our approach of training the CNN on 2-D data maps instead of the original images is effective.

Some researchers have trained and evaluated their proposed methods on the KVASIR V1 dataset. Their techniques and results are listed and compared with the proposed method in Table 6.

The performances in Table 6 show that our first scenario outperforms the former studies that used the KVASIR V1 dataset.

Figure 3 illustrates the confusion matrix of each scenario for each data fold of the five-fold C.V. As Fig. 3 shows, the first scenario classifies the 2-D maps correctly. In the last scenario, the misclassification problem reported in previous studies is reduced.

Figure 4 displays the ROC curve of each class per fold for the different scenarios. As shown in Fig. 4, the AUC of all scenarios is high.

The confusion matrix for different scenarios per fold. a The first scenario (CAE-2DV-CNN). b The second scenario (CNN-Sc). c The third scenario (Incept_TL)

Figure 5 shows the accuracy and the loss function per epoch in each fold for the various scenarios. As illustrated in Fig. 5, the accuracy and loss curves of the first scenario are smooth for every fold except the first, while those of the second and third scenarios fluctuate (Fig. 6).

The ROC curve of each class per fold for different scenarios. a The first scenario (CAE-2DV-CNN). b The second scenario (CNN-Sc). c The third scenario (Incept_TL)

Training and validation curves of each fold (accuracy and loss function per epoch) for different scenarios. a The first scenario (CAE-2DV-CNN). b The second scenario (CNN-Sc). c The third scenario (Incept_TL)

Table 7 presents the processing time of each step of each scenario in the proposed method, measured on Google Colab. Each user is assigned at most 12.76 GB of RAM and 68.40 GB of disk. The GPU models available in Google Colab are the NVIDIA K80, P100, P4, T4, and V100. All deep learning models are implemented with Python libraries such as Scikit-learn, TensorFlow, and Keras.

The principal goal of the proposed method is to suggest novel scenarios that use the advantages of DNNs for classifying GI tract lesions from endoscopic video frames.

The first scenario of our proposed method can address some issues reported by previous studies, such as the misclassification of classes and the overfitting of models.

In addition, in the first scenario, visualizing a 2-D data map is an innovative approach that exploits the power of the CAE in extracting high-level features and leads to the outstanding performance of the model.

4 Conclusions

Misdiagnosis of GI tract abnormalities is exceedingly common during the screening of the GI tract. The accurate diagnosis of the mentioned abnormalities is highly related to the expertise level of the physician and the quality of the images captured by the endoscope and shown on the monitor. Several previous studies have proposed methods with the power of automatic recognition of GI tract abnormalities.

However, these methods suffer from limitations and drawbacks, such as low performance in diagnosing some abnormality types.

With the advent of computer vision and artificial intelligence, automatic detection has become a common area of research. DNNs are a subset of machine learning methods, within artificial intelligence, that are able to learn from data. Extracting high-level features from images enables these models to learn and achieve superior performance.

So, we aim to use DNN models to visualize the high-level features of each image as a 2-D data map and feed these maps into a CNN.

Therefore, in the proposed method, a novel approach is designed and introduced to classify GI tract lesions from endoscopic video frames with superior accuracy. The dataset analyzed is KVASIR V1.

In the proposed method, three scenarios are designed, and their results are compared. The average accuracies of the first, second, and third scenarios are 99.87 ± 0.001, 92.07 ± 0.086, and 90.55 ± 0.111, respectively. The experimental results demonstrate the superiority of the first scenario over the other two and over previous related works that used the KVASIR V1 dataset. The main novelty of the first scenario is visualizing the features extracted by the CAE as 2-D data maps and feeding these maps into the CNN as inputs.

As a future research direction, we propose using and analyzing extended datasets that contain more abnormalities, such as HyperKvasir, which has been gathered in recent years [10].

Another research opportunity is to use other data analysis techniques to extract highly correlated features from the original images, visualize them as 2-D data maps, feed these maps into a CNN, and evaluate the results.

Data availability

We use the KVASIR V1 dataset in this study for designing and examining our proposed method. It is publicly available at https://datasets.simula.no/kvasir/.

References

Ahmad J, Muhammad K, Lee MY, Baik SW (2017) Endoscopic image classification and retrieval using clustered convolutional features. J Med Syst 41(12):196. https://doi.org/10.1007/s10916-017-0836-y

Asperti A, Mastronardo C (2017) The effectiveness of data augmentation for detection of gastrointestinal diseases from endoscopical images. arXiv preprint arXiv:1712.03689

Borgli H et al (2020) HyperKvasir, a comprehensive multi-class image and video dataset for gastrointestinal endoscopy. Sci Data 7(1):1–14. https://doi.org/10.1038/s41597-020-00622-y

Caroppo A, Leone A, Siciliano P (2021) Deep transfer learning approaches for bleeding detection in endoscopy images. Comput Med Imaging Graph 88:101852. https://doi.org/10.1016/j.compmedimag.2020.101852

Chauhan NK, Singh K (2018) A review on conventional machine learning vs deep learning. In: 2018 International Conference on Computing, Power and Communication Technologies (GUCON), pp 347–352, https://doi.org/10.1109/GUCON.2018.8675097

Elhami G, Weber RM (2019) Audio feature extraction with convolutional neural autoencoders with application to voice conversion. Conference: infoscience

Ghosh T, Chakareski J (2021) Deep transfer learning for automated intestinal bleeding detection in Capsule Endoscopy Imaging. J Digit Imaging. https://doi.org/10.1007/s10278-021-00428-3

Guo X, Yuan Y (2020) Semi-supervised WCE image classification with adaptive aggregated attention. Med Image Anal 64:101733. https://doi.org/10.1016/j.media.2020.101733

Han J, Kamber M, Pei J (2011) Data mining concepts and techniques, 3rd edn. The Morgan Kaufmann Series in Data Management Systems 5(4):83–124. https://doi.org/10.1016/C2009-0-61819-5

Hasan MM, Hossain MM, Mia S, Ahammad MS, Rahman MM (2022) A combined approach of non-subsampled contourlet transform and convolutional neural network to detect gastrointestinal polyp. Multimedia Tools Appl 81(7):9949–9968. https://doi.org/10.1007/s11042-022-12250-2

Heidari M, Mirniaharikandehei S, Khuzani AZ, Danala G, Qiu Y, Zheng B (2020) Improving the performance of CNN to predict the likelihood of COVID-19 using chest X-ray images with preprocessing algorithms. Int J Med Inform 144:104284. https://doi.org/10.1016/j.ijmedinf.2020.104284

Hu H et al (2021) Content-based gastric image retrieval using convolutional neural networks. Int J Imaging Syst Technol 31(1):439–449. https://doi.org/10.1002/ima.22470

Hwang M et al (2020) An automated detection system for colonoscopy images using a dual encoder-decoder model. Comput Med Imaging Graph 84:101763. https://doi.org/10.1016/j.compmedimag.2020.101763

Jain S et al (2021) A deep CNN model for anomaly detection and localization in wireless capsule endoscopy images. Comput Biol Med 137:104789. https://doi.org/10.1016/j.compbiomed.2021.104789

Jha D et al (2020) Kvasir-seg: A segmented polyp dataset. In: International Conference on Multimedia Modeling, 2020. Springer, Berlin, pp 451–462. https://doi.org/10.1007/978-3-030-37734-2_37

Jia X, Meng MQ (2017) Gastrointestinal bleeding detection in wireless capsule endoscopy images using handcrafted and CNN features. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 11–15 July 2017, pp 3154–3157, https://doi.org/10.1109/EMBC.2017.8037526

Khan MA et al (2022) GestroNet: a framework of saliency estimation and optimal deep learning features based gastrointestinal diseases detection and classification. Diagnostics 12(11):2718. https://www.mdpi.com/2075-4418/12/11/2718

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980. https://doi.org/10.48550/arXiv.1412.6980

Leung WK, Cheung KS, Li B, Law SYK, Lui TKL (2021) Applications of machine learning models in the prediction of gastric cancer risk in patients after Helicobacter pylori eradication. Aliment Pharmacol Ther 53(8):864–872. https://doi.org/10.1111/apt.16272

Li L et al (2020) Multi-task deep learning for fine-grained classification and grading in breast cancer histopathological images. Multimedia Tools Appl 79:14509–14528. https://doi.org/10.1007/s11042-018-6970-9

Maggipinto M, Masiero C, Beghi A, Susto GA (2018) A convolutional autoencoder approach for feature extraction in virtual metrology. Procedia Manuf 17:126–133. https://doi.org/10.1016/j.promfg.2018.10.023

McClelland JL, Rumelhart DE, Group PR (1986) Parallel distributed processing. MIT Press, Cambridge. https://doi.org/10.7551/mitpress/5236.001.0001

Mohapatra S, Nayak J, Mishra M, Pati GK, Naik B, Swarnkar T (2021) Wavelet transform and deep convolutional neural network-based smart healthcare system for gastrointestinal disease detection. Interdiscip Sci. https://doi.org/10.1007/s12539-021-00417-8

Nair V, Hinton GE (2010) Rectified linear units improve restricted Boltzmann machines. In: ICML. https://dl.acm.org/doi/10.5555/3104322.3104425

Owais M, Arsalan M, Choi J, Mahmood T, Park KR (2019) Artificial intelligence-based classification of multiple gastrointestinal diseases using endoscopy videos for clinical diagnosis. J Clin Med 8(7):986. https://doi.org/10.3390/jcm8070986

Öztürk Ş, Özkaya U (2020) Gastrointestinal tract classification using improved LSTM based CNN. Multimedia Tools Appl 79(39):28825–28840. https://doi.org/10.1007/s11042-020-09468-3

Pannu HS, Ahuja S, Dang N, Soni S, Malhi AK (2020) Deep learning based image classification for intestinal hemorrhage. Multimedia Tools Appl 79:21941–21966. https://doi.org/10.1007/s11042-020-08905-7

Pogorelov K et al (2017) KVASIR: a Multi-Class Image dataset for computer aided gastrointestinal disease detection. https://doi.org/10.1145/3193289

Ponnusamy R, Sathiamoorthy S (2019) Prediction of esophagitis and Z-line from wireless capsule endoscopy images using fusion of low-level features. Int J Recent Technol Eng (IJRTE) 8(3):6024–6028. https://doi.org/10.35940/ijrte.C5568.098319

Raksasat R et al (2021) Accurate surface ultraviolet radiation forecasting for clinical applications with deep neural network. Sci Rep 11(1):5031. https://doi.org/10.1038/s41598-021-84396-2

Rau A et al (2019) Implicit domain adaptation with conditional generative adversarial networks for depth prediction in endoscopy. Int J Comput Assist Radiol Surg 14(7):1167–1176. https://doi.org/10.1007/s11548-019-01962-w

Safarov S, Whangbo TK (2021) A-denseunet: Adaptive densely connected unet for polyp segmentation in colonoscopy images with atrous convolution. Sensors 21(4):1–15, Art no. 1441. https://doi.org/10.3390/s21041441

Sokolova M, Lapalme G (2009) A systematic analysis of performance measures for classification tasks. Inf Process Manag 45(4):427–437. https://doi.org/10.1016/j.ipm.2009.03.002

Stone M (1974) Cross-validatory choice and assessment of statistical predictions. J R Stat Soc: Ser B (Methodological) 36(2):111–133. https://doi.org/10.1111/j.2517-6161.1974.tb00994.x

Sung H et al (2021) Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. Cancer J Clin. https://doi.org/10.3322/caac.21660

Vieira PM, Freitas NR, Valente J, Vaz IF, Rolanda C, Lima CS (2020) Automatic detection of small bowel tumors in wireless capsule endoscopy images using ensemble learning. Med Phys 47(1):52–63. https://doi.org/10.1002/mp.13709

Xing X, Yuan Y, Meng MQH (2020) Zoom in lesions for better diagnosis: attention guided deformation network for WCE image classification. IEEE Trans Med Imaging 39(12):4047–4059. https://doi.org/10.1109/TMI.2020.3010102

Yuan Y, Meng MQH (2017) Deep learning for polyp recognition in wireless capsule endoscopy images. Med Phys 44(4):1379–1389. https://doi.org/10.1002/mp.12147

Zhang C, Zhang N, Wang D, Cao Y, Liu B (2020) Artifact detection in endoscopic video with deep convolutional neural networks. In: 2020 Second International Conference on Transdisciplinary AI (TransAI), pp 1–8. https://doi.org/10.1109/TransAI49837.2020.00007

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest in this study.

About this article

Cite this article

Nezhad, S.A., Khatibi, T. & Sohrabi, M. Combining CNNs and 2-D visualization method for GI tract lesions classification. Multimed Tools Appl 83, 15825–15844 (2024). https://doi.org/10.1007/s11042-023-15347-4

DOI: https://doi.org/10.1007/s11042-023-15347-4