Abstract

Automatic text generation is the generation of natural language text by machines. Enabling machines to generate readable and coherent text is one of the most vital yet challenging tasks. Traditionally, text generation has been implemented either by using production rules of a predefined grammar or by performing statistical analysis of existing human-written texts to predict sequences of words. Recently, a paradigm shift has emerged in text generation, driven by technological advancements that include deep learning methods and pre-trained transformers. However, many open challenges in text generation still need to be addressed, including the generation of fluent, coherent, diverse, controllable, and consistent human-like text. This survey aims to provide a comprehensive overview of current advancements in automated text generation and to introduce the topic to researchers by offering pointers to, and a synthesis of, pertinent studies. It examines twelve years of relevant articles in the field of text generation, from 2011 onwards, and identifies a total of 146 primary studies relevant to the objective of this survey, which are thoroughly reviewed and discussed. It covers core text generation applications, including text summarization, question–answer generation, story generation, machine translation, dialogue response generation, paraphrase generation, and image/video captioning. The most commonly used datasets for text generation and existing tools, together with their application domains, are also described. Various text decoding and optimization methods are presented with their strengths and weaknesses. Automatic evaluation metrics for assessing the effectiveness of the generated text are discussed. Finally, the article discusses the main challenges and notable future directions in the field of automated text generation for potential researchers.

1 Introduction

Text generation is a sub-discipline of natural language processing that leverages computational linguistics and artificial intelligence to automatically generate natural language texts fulfilling specific communicative requirements [168]. Text generation has many real-world applications [51] that differ in their input (data, text, or multimodal). In every case, however, the output is natural language text. Based on the type of input, text generation is therefore mainly divided into three categories: text-to-text generation (T2T), data-to-text generation (D2T), and multimodality-to-text generation (M2T), as shown in Fig. 1. Text-to-text generation tasks take existing text as input and automatically generate new, coherent text as output. For T2T generation, the most common applications include summarizing the input document [13, 101], generating questions and answers from a text document [4, 48, 193], translating a sentence from one language to another [1, 11], and creating or completing a story outline [66, 171, 211]. Data-to-text generation tasks automatically generate text from numerical or structured data such as key-value lists and tables. For D2T generation, example applications include report generation from numerical data [148, 154] and generating text from meaning representations, which encode the meaning of natural language [113, 180]. Multimodality-to-text generation tasks transfer the semantics of multimodal input, such as videos or images, into natural language texts. For M2T generation, example applications include generating captions from images or videos [71, 126], video summarization, and visual storytelling.
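D2T input is structured, so the simplest systems map each field to a slot in a fixed sentence. The sketch below is a minimal, hypothetical template-based generator for one key-value weather record. The field names (`city`, `day`, `high_c`, `rain_prob`), the thresholds, and the wording are illustrative assumptions and are not taken from the systems cited above.

```python
def realize_weather(record: dict) -> str:
    """Render one key-value weather record as an English sentence.

    Hypothetical template-based D2T sketch: field names, thresholds,
    and phrasing are illustrative assumptions.
    """
    # Rule: map the numeric rain probability onto a verbal phrase.
    rain = record["rain_prob"]
    if rain >= 0.7:
        outlook = "rain is likely"
    elif rain >= 0.3:
        outlook = "there is a chance of rain"
    else:
        outlook = "it should stay dry"
    # Template: fill the fixed sentence frame with the record's slot values.
    return (
        f"In {record['city']} on {record['day']}, expect a high of "
        f"{record['high_c']} degrees Celsius; {outlook}."
    )


print(realize_weather({"city": "Oslo", "day": "Monday", "high_c": 14, "rain_prob": 0.8}))
# In Oslo on Monday, expect a high of 14 degrees Celsius; rain is likely.
```

Every word in the output comes from the template, which makes the output reliable. The same property also means that all forecasts with similar values read identically. This is the lack of diversity that the next paragraph attributes to template-based methods.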

Research on text generation has a long history. The earliest text generation systems used template- and rule-based methods to capture linguistic knowledge of vocabulary, syntax, and grammar. The next generation of models encoded the dependency between vocabulary and context as conditional probabilities, and these statistical methods were often combined with template-based methods. With the development of deep learning technologies, neural models then gradually came to dominate the field. Deep learning is a class of machine learning algorithms that learns patterns and features from text that help in solving several text generation tasks [97]. Deep neural networks can learn representations of varying degrees of complexity, and this capacity has helped them achieve state-of-the-art performance across different text generation tasks, such as machine translation, text summarization, storytelling, and dialogue systems [62]. The availability and accessibility of a vast number of corpora and massive computational resources have further supported the growth of deep learning. Most recently, pre-trained text generation models based on the Transformer architecture have proven better able to capture linguistic knowledge of vocabulary, syntax, and grammar. However, although these models generate fluent and grammatical text, they are prone to factual errors that contradict the input text. Generating fluent, informative, well-structured, and coherent text is pivotal for many text generation tasks. Even humans need significant effort to write text that stays consistent and respects long-term dependencies. Doing so automatically is equally challenging because textual data is discrete. Traditional template-based methods generate reliable texts but lack diversity, fluency, and informativeness.
Deep learning models generate fluent and informative texts but are limited in faithfulness and controllability. Transformer-based models generate fluent, informative, and controllable text, but inconsistency with the input information persists. The motivation for conducting this survey and the primary contributions of the paper are presented in the following subsection.
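The statistical models described above estimate the probability of a word given its context. The sketch below is a minimal illustration that makes several assumptions: the context is a single previous word (a bigram model), probabilities are maximum-likelihood estimates with no smoothing, and text is produced by greedy decoding. The toy corpus was invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(word | previous word) by maximum likelihood (no smoothing)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, cur in zip(tokens, tokens[1:]):
            counts[prev][cur] += 1
    # Normalize each row of counts into a conditional distribution.
    return {
        prev: {word: c / sum(nxt.values()) for word, c in nxt.items()}
        for prev, nxt in counts.items()
    }


def generate_greedy(model: dict[str, dict[str, float]], max_len: int = 10) -> str:
    """Repeatedly emit the most probable next word until </s> or max_len."""
    word, output = "<s>", []
    for _ in range(max_len):
        candidates = model.get(word)
        if not candidates:
            break
        word = max(candidates, key=candidates.get)
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


corpus = ["the cat sat on the mat", "the cat sat on the rug", "the dog ate"]
model = train_bigram(corpus)
print(model["the"]["cat"])                # 0.4
print(generate_greedy(model, max_len=6))  # the cat sat on the cat
```

The output loops back on itself. This shows why greedy decoding over a short context tends to repeat, and it is one motivation for the decoding strategies discussed in Sect. 6.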

1.1 Motivation and our contribution

Automated text generation (ATG) has been gaining attention with the advances in deep learning, and the field has evolved significantly over the last decade. Numerous appealing surveys [51, 59, 120, 123, 173, 216] have summarized the work done in this field. However, no comprehensive survey of ATG covers prominent benchmark datasets, real-world tools, decoding methods, evaluation metrics, and the challenges of automated text generation applications. This gap motivates us to perform a Systematic Literature Review of automatic text generation using deep learning techniques. Another motivation is the growing interest in this research field. An analysis of the articles published over the last twelve years shows a consistent increase, which indicates that automated text generation attracts more interest each year. With this in mind, this paper studies all the relevant articles from 2011 onwards to identify three things: methods for the automated generation of text in different application domains, existing tools and datasets together with their application domains, and evaluation metrics for assessing the effectiveness of the generated text. The purpose of this survey is to provide a comprehensive overview of current advancements in automated text generation and to introduce the topic to researchers by providing pointers to, and a synthesis of, pertinent studies.

The main contribution of this paper comprises the following key points:

I. This survey provides an up-to-date synthesis of automated text generation and its core applications (text summarization, question–answer generation, dialogue generation, machine translation, story generation, paraphrasing, and image captioning), together with the key techniques behind them.

II. It gives a comprehensive outline of the techniques and methods employed to generate text automatically, including traditional statistical methods, deep learning, and pre-trained transformer-based models.

III. It lists the standard datasets used to train, test, and validate text generation models for the automated generation of fluent and coherent texts.

IV. Text decoding strategies and optimization techniques significantly affect the quality of the generated text. This paper discusses these techniques and methods together with their strengths and limitations.

V. It provides real-time, task-specific tools for automated text generation, along with their features and URLs.

VI. It summarizes metrics and approaches for automatically evaluating text generation models. These capture different text attributes such as fluency, grammaticality, coherence, readability, and diversity.

VII. It identifies various challenges of text generation applications, including the generation of human-like text that is fluent, diverse, controllable, and consistent. It also outlines potential future research directions in automated text generation.

1.2 Comparison with other surveys

There have been related attempts and literature surveys on automated text generation. This subsection reviews them and contrasts the existing surveys with the current one, noting their strengths and limitations. Gatt et al. [51] surveyed natural language generation with an emphasis on image-to-text tasks. Liu et al. [120] reviewed deep learning architectures and a limited set of text generation applications. Xie [216] describes techniques for training and handling natural language generation models based on neural networks. Lu et al. [123] surveyed only neural text generation models. Santhanam et al. [173] review language generation with a focus on dialogue systems. Garbacea et al. [59] present an overview of natural language generation methods, tasks, and assessments. Yu et al. [232] reviewed knowledge-enhanced text generation. Although many surveys on text generation exist, their coverage is limited with respect to standard datasets, existing real-time tools, optimization methods, evaluation metrics, and the challenges of automated text generation applications. This limitation motivates us to perform a Systematic Literature Review on automatic text generation. This survey provides a comprehensive, up-to-date review of current advancements in text generation. It studies methods for the automated generation of text in different application domains, existing tools and datasets with their application domains, text decoding and optimization techniques, and evaluation metrics for assessing the effectiveness of the generated text. Table 1 compares these aspects with the surveys and contributions mentioned above.

The rest of this paper is organized as follows. Section 2 describes the review strategy and the research questions along with their significance. It also gives the search criteria and the research parameters used for writing this survey. The extraction of studies and the discussion are presented in Sects. 3 to 10. Section 3 reviews the core applications of text generation. Section 4 describes the methods and techniques employed for generating text. Section 5 lists application-specific standard datasets used to train, test, and validate the models. Section 6 covers text decoding and optimization techniques, which significantly affect the generated text. Section 7 presents real-time, task-specific tools for text generation, along with their access URLs. Section 8 reviews approaches to evaluating the effectiveness of the generated text. Section 9 discusses various open challenges in automating text generation. Finally, Sect. 10 concludes this paper and outlines potential directions for future research.

2 Research methodology

Research methodology is the process of conducting research systematically. It includes an empirical analysis of all concepts relevant to the field of research and generally involves phases, models, and both quantitative and qualitative techniques. This paper follows the review process suggested by Kitchenham and Charters [92], which consists of planning, conducting, and reporting the review, as shown in Fig. 2.

2.1 Planning review

The planning phase starts with identifying the need for a Systematic Literature Review (SLR) and ends with formulating and validating the review procedure. A systematic review is needed to identify, compare, and classify existing work on text generation. The studies published on text generation in the last twelve years were examined, but none of them provides a robust, systematic review of the field. This paper comprehensively analyzes emerging models, methods, tools, and deep-learning-based techniques for text generation applications in order to identify and compare them systematically.

The research questions (RQs) are formulated to make the review process more focused, clear, and consistent. Eight research questions (RQ 1 to RQ 8) have been framed to guide the SLR. The research questions and their significance for this literature review are listed in Table 2.

2.2 Conducting review

This phase involves selecting studies, extracting the required resources, and synthesizing knowledge. This SLR includes research papers from different publishers, retrieved from online electronic databases such as IEEE Xplore, ACM Digital Library, ScienceDirect (Elsevier), SpringerLink, Web of Science, and Wiley Online Library. The search string includes the keywords: “Text Generation” OR “Natural Language Generation” OR “Text Generation using Deep Learning” OR “Neural Text Generation” OR “Neural Language Generation” AND “Applications” OR “Text Generation Applications.” The sources contain documents of several types, such as book chapters, research articles, reviews, and proceedings papers, published in the last twelve years, i.e., from 2011 to 2022. They include papers from journals, magazines, conferences, workshops, and symposiums. The retrieved studies were screened against inclusion–exclusion criteria, yielding a total of 146 research papers, as shown in Fig. 3.
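The search string above does not state how AND and OR are grouped. The sketch below is a minimal illustration under several assumptions:

- The five topic phrases are OR-ed together and then AND-ed with the two application phrases.
- Matching is a case-insensitive substring test on a record's title and abstract.
- Only the 2011–2022 publication window is applied.

The record fields (`title`, `abstract`, `year`) are hypothetical. Each database applies its own query syntax, and the survey's other inclusion–exclusion criteria are not modeled here.

```python
TOPIC_TERMS = [
    "text generation",
    "natural language generation",
    "text generation using deep learning",
    "neural text generation",
    "neural language generation",
]
APPLICATION_TERMS = ["applications", "text generation applications"]


def matches_query(text: str) -> bool:
    """Assumed grouping: (any topic term) AND (any application term)."""
    lowered = text.lower()
    has_topic = any(term in lowered for term in TOPIC_TERMS)
    has_application = any(term in lowered for term in APPLICATION_TERMS)
    return has_topic and has_application


def screen(records: list[dict], start: int = 2011, end: int = 2022) -> list[dict]:
    """Keep records inside the publication window that match the query."""
    return [
        r for r in records
        if start <= r["year"] <= end
        and matches_query(r["title"] + " " + r["abstract"])
    ]


# Hypothetical bibliographic records.
records = [
    {"title": "Neural text generation", "abstract": "A survey of applications.", "year": 2019},
    {"title": "Neural text generation", "abstract": "A survey of applications.", "year": 2009},
    {"title": "Image segmentation", "abstract": "Medical applications.", "year": 2020},
]
print([r["year"] for r in screen(records)])  # [2019]
```

Under this substring reading, "applications" already matches anything that "text generation applications" matches. The second application phrase therefore adds no extra hits, which is one reason the intended grouping matters.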

These 146 research papers from the 2011–2022 time frame are thoroughly reviewed and discussed in this survey. Figure 4 shows the number of extracted research papers by year of publication. Before 2011, there was limited work on text generation using deep neural networks. From 2011 onwards, the number of research papers has increased gradually, reflecting the growth of automated text generation alongside developments in deep neural models.

Discussion on research question 1

This yearly analysis of papers helps answer RQ 1: “How the automated text generation study evolves with advancements in deep learning.” Limited work was done on text generation before 2011, and the number of research papers has increased gradually from 2011 onwards. This growth results from advancements in text generation methods, from traditional rule-based methods to deep neural networks and pre-trained transformer models. Template- or rule-based methods were typically used for text generation before 2013, but these rules and templates are difficult to design and very time-consuming to build. Developments in deep learning methods overcame these shortcomings in the following years, and research in text generation grew steadily thereafter. Powerful deep neural models and computationally intensive architectures have led to the widespread adoption of a variety of text generation applications. These include text summarization, machine translation, dialogue generation, and creative applications such as story generation. Pre-trained transformer models have since accelerated work on automated text generation immensely. Many sectors have started using automatically generated text to improve user experience, because recent technology can generate human-like text and automated tools can produce content quickly and cheaply. The analysis of the published articles indicates consistent growth in, and adoption of, automated text generation research since 2011. With this in mind, this paper studies all the relevant articles from 2011 onwards to find methods for the automated generation of text in different application domains, existing tools and datasets used to achieve the task, text decoding and optimization techniques, and evaluation metrics for assessing the effectiveness of the generated text.

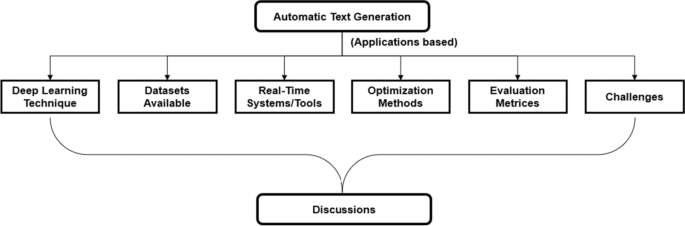

2.3 Reporting review

The reporting phase defines the research parameters followed in this SLR, which are organized around the core text generation applications. For each application, the survey presents the deep learning techniques used, standard datasets, existing real-time tools, optimization methods, evaluation metrics, and challenges. Figure 5 shows the parameters used in this paper.

These research parameters are discussed in-depth in the following sections, and the results or analysis of these parameters are also reviewed and mentioned in Sects. 3 to 10.

3 Applications of automated text generation

The field of artificial intelligence has developed techniques that generate text automatically in seconds, and automatic text generation has become a need of the hour. Many text generation applications are crucial for smart systems and enable better communication between humans and machines. Examples include the following:

- machine translation
- summarization and simplification of long or complex texts
- grammar and spelling correction
- generation of peer reviews for scientific papers
- questionnaire generation
- automatic documentation of large software systems
- question–answer generation
- business letter writing
- chatbots, and much more

Figure 6 shows the core text generation applications, which are discussed below.

3.1 Text summarization

Every day, diverse sources produce enormous amounts of data. This massive volume of data contains crucial facts, information, and knowledge that must be summarized effectively to be useful. Automatic text summarization emerged to tackle this problem of information overload [142]. A text summarization method generates an abbreviated version of a document by filtering the significant information from the original document [57]. A good summary conveys the relevant key points while also satisfying criteria such as coverage, non-redundancy, cohesion, relevance, and readability [145]. There are two prominent types of summarization techniques. Extractive techniques form summaries by copying parts of the input sentences [134]. Abstractive techniques [5, 132] generate summaries that may include words and phrases not present in the source [135]. Nallapati et al. [135] propose recurrent neural network-based encoder-decoder models for abstractive text summarization, and in follow-up work [134] they present extractive summarization techniques based on recurrent neural networks. Rush et al. [172] propose an attention-based network for the abstractive summarization of sentences, and Cheng et al. [28] propose an attentional encoder-decoder for extractive single-document summarization. See et al. [177] use a pointer-generator network for abstractive summarization. Paulus et al. [147] use a reinforcement learning model for abstractive summarization, while others use reinforcement learning for extractive single-document summarization [136, 212]. Mehta et al. [129] use Long Short-Term Memory (LSTM) and an attention model to summarize scientific papers. Liu et al. [118] focus on multi-document summarization, generating fluent, coherent multi-sentence Wikipedia articles using extractive summarization.
A modified BERT transformer [40] for extractive summarization can automatically extract features in its internal layers [116]. Abstractive methods have also been used for multi-document summarization [15, 239]. Xu et al. [221] propose a multi-task framework that combines extractive and abstractive models. The Transformer architecture also performs well in many NLG tasks [195]. Tan et al. [20] used a pre-trained model, GPT-2, for summarization, relying on the model to start generating a summary after a delimiter. More recent works leverage pre-trained transformer-based networks, such as GPT [162], BART [102], T5 [163], and PEGASUS [240], for summary generation [63, 119, 213].
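The sketch below makes the extractive/abstractive distinction concrete: it selects whole input sentences rather than writing new ones. It is a minimal, classic word-frequency heuristic, not any of the neural extractive models cited above. The following choices are simplifying assumptions:

- the stop-word list
- the scoring rule, which is the average in-document frequency of a sentence's content words
- the regex sentence splitter

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are", "for", "on", "with"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence: str) -> list[str]:
    """Lower-cased alphabetic tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOP_WORDS]


def extractive_summary(text: str, k: int = 2) -> str:
    """Return the k highest-scoring sentences, kept in their original order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:k])  # restore document order for readability
    return " ".join(sentences[i] for i in chosen)


doc = ("Neural models generate summaries. Extractive models copy sentences from the input. "
       "Abstractive models generate new words. The weather was pleasant.")
print(extractive_summary(doc))
# Neural models generate summaries. Abstractive models generate new words.
```

Every output sentence appears verbatim in the input, which is the defining property of extractive methods. An abstractive model would be free to write a sentence such as "Models can copy or generate text."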

3.2 Question answer generation

Automatic question generation (QG) aims to generate questions from some form of input, such as raw text or a database. Question answering (QA), in contrast, is the task of automatically providing precise natural language answers to questions, given a corresponding document. In recent years, QA-based personal assistants such as Microsoft’s Cortana, Apple’s Siri, Samsung’s Bixby, Amazon’s Alexa, and Google Assistant have come into widespread use and answer a wide variety of questions. QG systems proposed by [46, 185] automatically generate answer-unaware questions from within the given document, whereas [88, 180, 186] generate answer-agnostic questions. Du et al. [47] introduced a neural question generation model based on the attention-based sequence-to-sequence model [11], and [48, 69, 245] subsequently also adopted an attention mechanism. Zhao et al. [241] proposed a gated self-attention encoder. Most neural QG models [69, 95, 204, 245] employ a copying mechanism for question generation. Weston et al. [206] proposed memory networks to answer questions effectively. Dynamic Memory Networks [94] overcome the shortcomings of memory networks by combining them with attention mechanisms, and Xiong et al. [219] later extended this work to visual question answering. Other works in the visual domain [2, 9, 58, 122] generate natural and engaging questions about an image. [233] adopted policy gradient methods to diversify the generated questions. [40, 99, 225] use pre-trained models for question answering, and [100] uses transformer-based models to generate answer-aware questions. [203, 204] propose a neural model that jointly asks and answers questions given a document. Most of the earlier work focuses on a single QA dataset, such as SQuAD [165].
The generation of multi-hop [30], open-ended and controllable [23], and cause–effect [183] questions has gained attention. However, each direction has been studied in isolation because it usually requires a separate question–answer dataset. More recent works leverage pre-trained transformer-based networks, such as BART [102], T5 [163], and PEGASUS [240], for question generation, and these networks have been successful in many applications [6, 98, 107, 164, 194].
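Several of the QG models above let the decoder copy source tokens, as does the pointer-generator of See et al. [177] mentioned in Sect. 3.1. The sketch below is a minimal illustration of the mixture used by pointer-generator-style copying. It assumes that a decoder has already computed three quantities: a generation probability p_gen, a vocabulary distribution P_vocab, and attention weights a_i over source positions. All values below are hypothetical. The final probability of a word w is P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (sum of a_i over source positions i whose token is w). Individual papers differ in how they compute these quantities.

```python
def copy_mixture(p_gen: float, p_vocab: dict[str, float],
                 attention: list[float], source_tokens: list[str]) -> dict[str, float]:
    """Mix generating from a fixed vocabulary with copying from the source.

    p_gen: probability of generating (vs. copying) at this step.
    p_vocab: distribution over the fixed output vocabulary.
    attention: weights over source positions (assumed to sum to 1).
    """
    # Generation part: scale the vocabulary distribution by p_gen.
    final = {word: p_gen * p for word, p in p_vocab.items()}
    # Copy part: each source position adds its attention mass to its token,
    # so out-of-vocabulary source tokens (e.g. names) can still be produced.
    for weight, token in zip(attention, source_tokens):
        final[token] = final.get(token, 0.0) + (1.0 - p_gen) * weight
    return final


source = ["who", "founded", "Acme"]  # "Acme" is not in p_vocab
p_vocab = {"who": 0.5, "founded": 0.3, "the": 0.2}
dist = copy_mixture(0.6, p_vocab, [0.1, 0.2, 0.7], source)
print(round(dist["Acme"], 2), round(sum(dist.values()), 2))  # 0.28 1.0
```

The out-of-vocabulary name still receives probability mass through the copy term. This is why copying helps when questions must reuse rare entities from the input document.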

3.3 Dialogue response generation

Dialogue systems, or conversational agents, are computer programs capable of replying with natural, coherent, meaningful, and engaging responses. A good dialogue model generates dialogues with high similarity to human conversation [104]. [131, 181] build end-to-end dialogue generation systems using neural networks, whereas [178, 191] use hierarchical encoder-decoders to generate responses. [218, 228] use attention models, [103, 110] use reinforcement learning, and Li et al. [111] use generative adversarial networks for dialogue generation. Niu et al. [141] also use a reinforcement learning model, focusing on polite dialogue responses. Pre-trained models have also been used for conversational agents: [10, 242] use embeddings, and [41, 209] use transformers for response generation [98, 107]. These conversational models enable machines to interact with humans in natural language. For example, Microsoft’s Cortana, Google Assistant, Apple’s Siri, and Amazon’s Alexa are software and devices built on dialogue systems. [76, 153] propose dialogue generation with emotion recognition, and [56, 167] generate empathetic dialogues. [181, 243, 244] generate single-turn dialogue responses, while [159, 167, 235] generate multi-turn dialogues. [184] used text style transfer and GPT to create a dialogue generation system over gender-specific, emotion-specific, and sentiment-specific dialogue datasets.
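The single-turn/multi-turn distinction above is largely a question of what the model is conditioned on. The sketch below is a minimal illustration built on two assumptions. First, it uses a hypothetical `<sep>` turn separator. Second, it sets a token budget by splitting on whitespace, whereas real systems count tokens with the model's own tokenizer. A multi-turn input keeps as many recent turns as fit, with the newest turn last. A single-turn input is just the latest utterance.

```python
def build_context(history: list[str], max_tokens: int = 12, sep: str = "<sep>") -> str:
    """Concatenate the most recent dialogue turns that fit in a token budget.

    Tokens are approximated by whitespace splitting, and each kept turn is
    charged one extra token for its separator (both simplifying assumptions).
    """
    kept: list[str] = []
    used = 0
    for turn in reversed(history):  # walk from the newest turn backwards
        cost = len(turn.split()) + 1
        if used + cost > max_tokens:
            break  # older turns no longer fit
        kept.append(turn)
        used += cost
    return f" {sep} ".join(reversed(kept))  # restore chronological order


history = ["hi there", "hello how can i help", "book a table for two"]
print(build_context(history))       # hello how can i help <sep> book a table for two
print(build_context(history[-1:]))  # book a table for two  (single-turn input)
```

Dropping the oldest turns first is a simple design choice. The hierarchical encoders cited above are one alternative that encodes each turn separately instead of truncating.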

3.4 Neural machine translation

Language can be a barrier to making information accessible to everyone, and machine translation emerged to overcome it. Machine translation is the task of automatically translating written text from one natural language into another. Neural machine translation (NMT) uses neural networks to transform a source sentence into a target sentence [29, 82, 188]. [11] introduced the attention mechanism into NMT models, and this mechanism was later extended [124]. Luong et al. [124] used a unidirectional recurrent neural network model, while [214] used bidirectional recurrent neural networks (BRNN). [3] and [79] address translation for low-resource language pairs. [29, 31] use gated recurrent units and achieve better performance on NMT. Tu et al. [192] use a copy and coverage mechanism. Wang et al. [200] use a pre-computed word embedding layer, GloVe (Global Vectors for Word Representation). Park et al. [144] proposed a mobile device-based sign language translation system. [1] uses an attention-based multi-layer neural network. The Transformer architecture [195] also performs well in NMT, and [12, 200] proposed deep Transformer models for translation. Transformers such as BART [102], BERT [35, 40], and GPT [20] have also been used for NMT. More recent works leverage pre-trained transformer-based networks for machine translation [54, 64]. Traditional autoregressive machine translation methods decode target positions sequentially [215]. Recent work on non-autoregressive neural machine translation [65, 72, 161] improves efficiency by decoding all target positions in parallel.
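The attention mechanism mentioned above lets each decoding step look back at all encoder states instead of a single fixed vector. The sketch below is a minimal illustration using dot-product scoring, one of the scoring functions used in Luong-style attention. Additive scoring functions are another common choice. The code uses plain Python and toy hand-written vectors, and it works in three steps:

1. Compute a score for each encoder state as its dot product with the decoder state.
2. Apply a softmax to turn the scores into weights.
3. Form the context vector as the weighted sum of the encoder states.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot_attention(decoder_state: list[float],
                  encoder_states: list[list[float]]) -> tuple[list[float], list[float]]:
    """Return (context vector, attention weights) for one decoding step."""
    # Alignment score: dot product between the decoder state and each source state.
    scores = [sum(d * e for d, e in zip(decoder_state, h)) for h in encoder_states]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [sum(w * h[j] for w, h in zip(weights, encoder_states)) for j in range(dim)]
    return context, weights


# Three source positions with 2-dimensional (toy) hidden states.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
context, weights = dot_attention([4.0, 0.0], encoder_states)
print([round(w, 3) for w in weights])  # most of the weight falls on position 0
```

In a real NMT system, the decoder state and encoder states are learned vectors, and the context vector is fed into the prediction of the next target word.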

3.5 Story generation

Automated story generation is the task of automatically identifying a series of actions, events, or words that can be told as a story. Li [106] attempts to automatically generate a story about any domain without prior knowledge. To encode context, recurrent and convolutional networks have been used successfully to model sentences [38, 81]. A fusion mechanism [182] was introduced to help sequence-to-sequence models build dependencies between their input and output. Pawade et al. [149] implemented a recurrent neural network-based system that generates a new story from a series of input stories. Vaswani et al. [195] use multi-head attention. [108, 170] use LSTM networks to learn text hierarchically. Jain et al. [78] chain a series of variable-length independent descriptions into a well-formed, comprehensive story. Clark et al. [34] model entities in story generation. Martin et al. [128] present an event-based end-to-end story generation pipeline. Similarly, [68] generates summaries of movies as sequences of events using a recurrent neural network (RNN) and sample event representations. [53, 227] propose a hierarchical story generation framework that first plans a storyline and then generates a story based on that storyline. [151, 208] propose frameworks that enable controllable story generation. [7, 189] use policy gradient deep reinforcement learners to perform an event-to-event task. [26] uses the BERT language model for story plot generation.
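The hierarchical framework of [53, 227] separates what happens (a storyline) from how it is told. The sketch below illustrates only that two-stage control flow. The functions `toy_planner` and `toy_writer` are hypothetical stand-ins for the learned models: one is a keyword extractor and the other is a fixed template. Neither is one of the cited systems.

```python
from typing import Callable

Planner = Callable[[str], list[str]]
Writer = Callable[[str, list[str]], str]


def plan_and_write(title: str, planner: Planner, writer: Writer) -> tuple[list[str], str]:
    """Stage 1 plans a storyline; stage 2 realizes one sentence per plan item."""
    storyline = planner(title)
    sentences: list[str] = []
    for keyword in storyline:
        # Each sentence is conditioned on its plan item and the story so far.
        sentences.append(writer(keyword, sentences))
    return storyline, " ".join(sentences)


def toy_planner(title: str) -> list[str]:
    """Hypothetical planner: keep the title's content words as the storyline."""
    stop = {"the", "a", "an", "and", "of"}
    return [w for w in title.lower().split() if w not in stop]


def toy_writer(keyword: str, previous: list[str]) -> str:
    """Hypothetical writer: a fixed template that depends on story position."""
    opener = "It began with" if not previous else "Then came"
    return f"{opener} the {keyword}."


storyline, story = plan_and_write("The Storm and the Lighthouse", toy_planner, toy_writer)
print(storyline)  # ['storm', 'lighthouse']
print(story)      # It began with the storm. Then came the lighthouse.
```

The storyline is an explicit intermediate value, so it can be inspected or edited before the second stage runs. This is one plausible route to the kind of controllability pursued in [151, 208].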

3.6 Paraphrase generation

Texts that convey similar meanings through different expressions are referred to as paraphrases. Paraphrase generation is the task of creating paraphrases of a given sentence. Bowman et al. [18] use a variational autoencoder (VAE) to model holistic properties of sentences such as style, topic, and other features. Gupta et al. [67] use a VAE-LSTM to generate more diverse paraphrases. Prakash et al. [155] employ a stacked residual LSTM network in a sequence-to-sequence model. [105, 166] propose deep reinforcement learning (RL) to guide sequence-to-sequence training. Cao et al. [22] utilize a novel sequence-to-sequence model that joins copying and restricted generation. [237] tackle a comparable task with a sequence-to-sequence model coupled with deep reinforcement learning. See et al. [177] use a pointer-generator, while [125] utilizes an attention layer. Iyyer et al. [77] utilize syntactic information for controllable paraphrase generation. Yang et al. [224] propose an end-to-end conditional generative architecture for generating paraphrases. Qian et al. [160] propose an approach that generates a diverse variety of paraphrases. [21, 43] tackle the problem of QA-specific paraphrasing, while [223] helps diversify the responses of chatbot systems. [117] first applies abstract rules and then leverages neural networks to generate paraphrases by refining the transformed sentences.
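The VAE-based approaches above, Bowman et al. [18] and the VAE-LSTM of Gupta et al. [67], rely on a continuous latent space. Sampling different latent codes for the same input is one way such models can produce varied outputs. The sketch below shows two standard VAE ingredients in plain Python. The first is the reparameterization trick. The second is the closed-form KL term for a diagonal Gaussian posterior measured against a standard normal prior. The encoder and LSTM decoder are omitted, and `mu` and `log_var` are hypothetical encoder outputs.

```python
import math
import random


def reparameterize(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) and sigma = exp(log_var / 2).

    Drawing the noise separately is what lets gradients flow through mu and
    log_var when this is written in an autodiff framework.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


# Hypothetical encoder outputs for one input sentence (2-dimensional latent).
mu, log_var = [0.5, -1.0], [0.0, -0.5]
rng = random.Random(0)
z1, z2 = reparameterize(mu, log_var, rng), reparameterize(mu, log_var, rng)
print(z1 != z2)                            # True: two different latent codes
print(kl_to_standard_normal(mu, log_var))  # KL term added to the reconstruction loss
```

During training, the KL term pulls the posterior toward the prior so that sampled codes stay in regions the decoder has learned to handle.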

3.7 Image/Video caption generation

Image captioning is the generation of semantically and syntactically correct sentences that describe an image. It requires recognizing the vital objects in an image, their properties, and their relationships. Kiros et al. [90] proposed the initial work on extracting image features with a convolutional neural network (CNN) to generate image captions and later extended their work with LSTM [91]. Mao et al. [127] proposed a multimodal recurrent neural network (m-RNN), and [229] used hierarchical recurrent neural networks for generating image descriptions. [201] proposed a deep Bi-LSTM-based method for image captioning. [80, 220] proposed attention-based image captioning methods. [169, 238] introduced reinforcement learning-based image captioning methods. [37, 179] proposed image captioning methods based on Generative Adversarial Networks (GAN). Vinyals et al. [199] proposed a neural image caption generator. Donahue et al. [42] propose long-term recurrent convolutional networks that can process variable-length inputs. [150, 230] propose an attention-based image captioning model. Some methods use a CNN for image representations and an LSTM for generating image captions. Yao et al. [226] proposed a copying mechanism to generate descriptions for novel objects. [55, 85] use pre-computed word embedding layers to generate better image captions. [202] proposed a framework that unifies a diverse set of cross-modal and unimodal tasks, including image captioning and language modelling.

Discussion on research question 2

Text generation research consists of various tasks, topics, and trends. This section helps answer RQ 2: “What are the main core text generation applications that have arisen with advancements in the field.” Text generation has many real-world applications that differ in their input (data, text, or multimodal). In every case, however, the output is natural language text. As discussed earlier, text generation is therefore mainly divided by input type into three categories: text-to-text generation (T2T), data-to-text generation (D2T), and multimodality-to-text generation (M2T). For T2T generation, the most common applications include summarizing the input document, generating questions and answers from a text document, translating a sentence from one language to another, and creating or completing a story outline. For D2T generation, example applications include report generation from numerical data and generating text from meaning representations. For M2T generation, example applications include generating captions from images or videos, video summarization, and visual storytelling. Table 3 summarizes the text generation applications described above.

The availability of powerful deep neural models, computationally intensive architectures, and pre-trained transformer models has led to the widespread adoption of a variety of text generation applications. These applications use different methods: recurrent neural networks, long short-term memory networks, and gated recurrent units for learning language representations, and later sequence-to-sequence learning, which opened a new chapter characterized by the wide application of the encoder-decoder architecture. However, these sequence-to-sequence models struggle to capture long-term dependencies, which motivated the development of pointer networks and attention networks. Subsequently, the Transformer architecture, which combines an encoder and a decoder with a self-attention mechanism, became widely used for text generation tasks. Applying these models to different text generation tasks can yield different levels of performance because of differences in task-specific requirements, training data availability, model architecture, hyperparameters, and evaluation metrics. Even when the same or similar models are used for different tasks, the model architecture may need to be modified or fine-tuned to meet the requirements of the specific task.

The availability and quality of training data can significantly impact the performance of a text generation model. Models that are trained on large, diverse, and high-quality datasets specific to a given task or domain tend to perform better than those trained on more general datasets or limited data. Different text generation tasks may require different data preprocessing steps, such as tokenization, normalization, stemming, and stop-word removal. The choice of the model architecture and hyperparameters can also impact the performance of a text generation model. For example, transformer-based models such as GPT tend to perform well on a variety of text generation tasks due to their ability to capture long-term dependencies, but different hyperparameters, such as the number of layers or attention heads, can affect the model's performance. Different text generation tasks have different requirements and constraints that affect the effectiveness of the model. Models that are optimized for a specific task may perform better than those that are more general-purpose. Thus, it is important to carefully consider these factors when selecting a model for a particular task.

This section has shown how text generation applications have advanced with the rise of deep neural network approaches. The text generation approaches themselves are discussed in the next section.

4 Text generation approaches

Text generation is an emerging area of research. Recently, deep learning approaches have achieved remarkable success in various text generation tasks [138], including text summarization, machine translation, question answering, story generation, short dialogue generation, and paraphrasing. This section presents traditional approaches to text generation, deep learning techniques, and pre-trained transformer-based approaches.

4.1 Traditional approaches

Traditionally, text generation was done either by using templates or production rules of a predefined grammar or by performing statistical analysis of existing human-written texts to predict sequences of words [17, 60, 139, 222]. Template-based text generation systems use rules and templates to design different modules for text generation that reflect linguistic knowledge of vocabulary, syntax, and grammar. This approach decomposes the text generation task into several interacting subtasks that depend on the specific application. Template-based approaches usually consist of several components, each performing a specific function [137]: content planning (deciding on the input data and selecting and structuring content), sentence planning (choosing words, syntactic structures, and appropriate referring expressions to describe input entities), and text realization (converting specifications into actual text). Statistical text generation systems encode the dependency between vocabulary and context as conditional probabilities [93]. The most popular statistical text generation model is the n-gram language model, which is usually coupled with the template-based approach to re-order candidate outputs and select the most fluent ones. With traditional approaches, building systems that automatically generate human-like text is very time-consuming. Deep learning techniques have overcome these shortcomings. With the development of deep learning, neural text generation models have gradually become dominant because they better model the statistical relationship between vocabulary and context, which has significantly improved the performance of text generation, as discussed in the subsequent section.
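To make the coupling of templates with an n-gram model concrete, the following is a minimal sketch, not the method of any cited system. It assumes a hypothetical three-sentence corpus, a hypothetical input record, two hand-written templates, and add-one smoothing; the bigram model scores each template realization, and the most fluent one is kept.

```python
import math
from collections import Counter

# Hypothetical toy corpus standing in for existing human-written texts.
CORPUS = [
    "the team won the match",
    "the team lost the match",
    "the match was won by the team",
]

def train_bigram(sentences):
    """Count contexts and bigrams, padding each sentence with <s> and </s>."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        contexts.update(tokens[:-1])
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return contexts, bigrams

def log_prob(sentence, contexts, bigrams, vocab_size, alpha=1.0):
    """Add-alpha smoothed bigram log-probability of a whole sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    score = 0.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        score += math.log((bigrams[(prev, word)] + alpha) /
                          (contexts[prev] + alpha * vocab_size))
    return score

# Content plan: a hypothetical input record to be verbalized.
record = {"subject": "team", "verb": "won", "object": "match"}

# Sentence plan / realization: two candidate templates.
TEMPLATES = [
    "the {subject} {verb} the {object}",
    "{object} the {verb} {subject} the",   # deliberately disfluent word order
]

contexts, bigrams = train_bigram(CORPUS)
vocab_size = len({w for s in CORPUS for w in s.split()}) + 1   # +1 for </s>
candidates = [t.format(**record) for t in TEMPLATES]
scores = [log_prob(c, contexts, bigrams, vocab_size) for c in candidates]
best = candidates[scores.index(max(scores))]
print(best)
```

In a real system the templates and the language model would be far richer; the sketch only shows the statistical model ranking template outputs by fluency.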

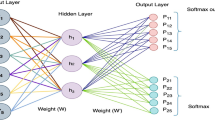

4.2 Deep learning techniques

Deep learning architectures and algorithms have recently achieved state-of-the-art results in question–answer generation, machine translation, text summarization, dialogue response generation, and other text generation tasks. Deep learning supports automatic, multi-level representation learning, and deep neural networks provide a uniform end-to-end framework for text generation. First, a neural network creates a representation of the input (for example, a user utterance). Then, this representation is fed to a decoder, which generates the output text (for example, a system response). Representation learning usually happens in a continuous space, so that text units of different granularity (words, sentences, and even paragraphs) are represented by dense vectors.

A variety of architectures based on deep neural networks have been developed for the different application tasks of text generation. This section introduces deep learning techniques that are commonly used in text generation application tasks.

4.2.1 The encoder-decoder framework

Much of the work on neural text generation adopts the encoder-decoder approach that was first advocated and shown to be successful for machine translation [32, 188]. First, the input is encoded into a continuous representation using an encoder. Then the text is produced using the decoder. Figure 7 illustrates this encoder-decoder framework for text generation. This network is often referred to as a sequence-to-sequence model as it takes as input a sequence, one element at a time, and then outputs a sequence, one element at a time.

Both the encoder and the decoder are neural networks. The encoder maps the input sequence to a hidden state vector and passes it to the decoder, which then produces the output sequence.
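A minimal sketch of the encoder-decoder data flow, assuming tiny hypothetical vocabularies, a plain tanh RNN for both the encoder and the decoder, and untrained random weights. It only illustrates how the input is compressed into one hidden state vector and how the decoder then emits one token per step; it is not a working translator.

```python
import math
import random

rng = random.Random(0)
HIDDEN = 4
SRC_VOCAB = ["ich", "bin", "hier"]                 # hypothetical source words
TGT_VOCAB = ["<bos>", "<eos>", "i", "am", "here"]  # hypothetical target words

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def one_hot(index, size):
    v = [0.0] * size
    v[index] = 1.0
    return v

def rnn_step(w_x, w_h, x, h):
    """One tanh RNN step: h_new = tanh(W_x x + W_h h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]

# Untrained random parameters: the outputs are arbitrary, only the flow matters.
enc_wx, enc_wh = rand_matrix(HIDDEN, len(SRC_VOCAB)), rand_matrix(HIDDEN, HIDDEN)
dec_wx, dec_wh = rand_matrix(HIDDEN, len(TGT_VOCAB)), rand_matrix(HIDDEN, HIDDEN)
out_w = rand_matrix(len(TGT_VOCAB), HIDDEN)

def encode(tokens):
    """Read the input one token at a time and return the final hidden state."""
    h = [0.0] * HIDDEN
    for tok in tokens:
        h = rnn_step(enc_wx, enc_wh, one_hot(SRC_VOCAB.index(tok), len(SRC_VOCAB)), h)
    return h

def decode(context, max_len=6):
    """Start from the encoder's vector and emit one token per step (greedy)."""
    h, prev, output = context, "<bos>", []
    for _ in range(max_len):
        h = rnn_step(dec_wx, dec_wh, one_hot(TGT_VOCAB.index(prev), len(TGT_VOCAB)), h)
        scores = matvec(out_w, h)
        prev = TGT_VOCAB[max(range(len(scores)), key=scores.__getitem__)]
        if prev == "<eos>":
            break
        output.append(prev)
    return output

context = encode(["ich", "bin", "hier"])
print(context, decode(context))
```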

4.2.2 Convolutional neural networks

Convolutional neural networks (CNNs) are specialized for processing data with a known grid-like topology. They have been successful in computer vision tasks, where images are represented as 2-dimensional grids of pixels [201, 217]. In recent years, CNNs have also been applied to natural language; in particular, they have been used effectively to learn word representations for language modelling [177] and for summarization [28, 136, 138]. CNNs use a specialized kind of linear operation called convolution (a filter that extracts a specific pattern), followed by a pooling operation (which subsamples the output of each filter to a fixed output dimension), to build a representation that is aware of spatial interactions among input data points, as shown in Fig. 8.
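The convolution-plus-pooling pipeline can be sketched for text as follows, assuming hypothetical 2-dimensional word embeddings, two hand-picked filters of width 2 (a real CNN learns its filter weights), a ReLU after each convolution, and max-over-time pooling. Whatever the sentence length, the output has one value per filter.

```python
# Hypothetical 2-dimensional word embeddings.
EMB = {
    "the": [0.1, 0.0], "movie": [0.7, 0.2], "was": [0.0, 0.1],
    "not": [-0.6, 0.5], "good": [0.8, -0.1],
}

def conv1d(seq, filt, bias=0.0):
    """Slide a filter (width x embedding_dim) over the sequence; stride 1, no padding."""
    width = len(filt)
    feature_map = []
    for i in range(len(seq) - width + 1):
        window = seq[i:i + width]
        s = bias + sum(f * x for f_row, x_row in zip(filt, window)
                       for f, x in zip(f_row, x_row))
        feature_map.append(max(0.0, s))   # ReLU non-linearity
    return feature_map

def max_pool(feature_map):
    """Max-over-time pooling: keep the strongest response of one filter."""
    return max(feature_map)

def encode(sentence, filters):
    seq = [EMB[w] for w in sentence.split()]
    return [max_pool(conv1d(seq, f)) for f in filters]

# Two hand-picked width-2 filters (learned in a real CNN).
FILTERS = [
    [[-1.0, 1.0], [1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
print(encode("the movie was not good", FILTERS))   # 5 words -> 2 values
print(encode("the movie was good", FILTERS))       # 4 words -> 2 values
```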

There are many variants of CNN that have different application areas, as mentioned in Table 4.

4.2.3 Recurrent neural networks

Recurrent neural networks (RNNs) are designed to process sequential data. They are termed “recurrent” because they perform the same computation for each token in the sequence, and each step depends on the results of the previous computations, as shown in Fig. 9.
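The recurrence can be sketched with a scalar hidden state, assuming hypothetical weights and inputs (real RNNs use vector states and weight matrices). The same weights are applied at every step, and each hidden state depends on the previous one, so the order of the inputs matters.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Elman RNN with a scalar state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h, states = h0, []
    for x in inputs:                            # same computation for every token
        h = math.tanh(w_x * x + w_h * h + b)    # depends on the previous state
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, -1.0], w_x=0.5, w_h=0.8, b=0.0)
reordered = rnn_forward([-1.0, 0.0, 1.0], w_x=0.5, w_h=0.8, b=0.0)
print(states[-1], reordered[-1])   # same inputs, different order, different final state
```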

Most work on neural text generation has used RNNs because they naturally capture the sequential nature of text and can process inputs and outputs of arbitrary length. There are various RNN variants, including Bidirectional RNN, Parallel-RNN, Quasi-RNN, RNN with external memory, and Convolutional RNN. Their features and application areas are listed in Table 5.

However, as the input sequence grows longer, RNNs tend to lose information from the beginning of the sequence because of the vanishing and exploding gradient problems [16, 146], so they fail to model the long-range dependencies of natural language. Consequently, long short-term memory (LSTM) [74] and gated recurrent unit (GRU) [32] networks have been proposed as alternative recurrent networks that are better able to learn long-distance dependencies. These units learn to memorize only the part of the input that is relevant for the future: at each time step, they dynamically update their states, deciding what to memorize and what to forget from the previous input. The LSTM cell has separate input and forget gates, as shown in Fig. 10, while the GRU cell combines both of these operations in a single update gate; its reset gate instead controls how much of the previous state is used when computing the new candidate state.

The forget gate decides which information in the long-term memory (the cell state) is useful and which to discard. The input gate determines which new information is added to the cell state, and the output gate determines the new hidden state. In a vanilla RNN, the entire hidden state is overwritten with the current activation at every step, whereas both LSTMs and GRUs have a mechanism for keeping memory from previous activations. This allows recurrent networks with LSTM or GRU cells to remember features for a long time and mitigates the vanishing gradient problem, which arises when gradients backpropagate through many bounded nonlinearities. LSTMs and GRUs have been very successful in modelling natural language in recent years.
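A scalar LSTM cell can be sketched as follows, assuming hypothetical hand-set weights (learned in practice). The forget-gate bias is set high so that the cell state, which is updated additively, retains the information written at the first step across several uninformative inputs.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell; params maps each gate to (w_x, w_h, b)."""
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the new candidate information
    o = gate("output", sigmoid)        # how much of the cell state to expose
    c = f * c_prev + i * g             # additive update of the long-term memory
    h = o * math.tanh(c)               # new hidden state
    return h, c

# Hypothetical hand-set weights: a large forget-gate bias keeps f close to 1.
PARAMS = {
    "forget": (0.0, 0.0, 5.0),
    "input": (1.0, 0.0, -2.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 1.0),
}

h, c = 0.0, 0.0
for x in [3.0, 0.0, 0.0, 0.0, 0.0]:   # one informative input, then nothing new
    h, c = lstm_step(x, h, c, PARAMS)
print(round(c, 3), round(h, 3))
```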

4.2.4 SeqGANs and reinforcement learning

SeqGAN (Sequence Generative Adversarial Network) is a variant of the Generative Adversarial Network (GAN) used to generate text. SeqGAN combines reinforcement learning and GANs to learn from discrete sequence data [231]. In SeqGAN, the generator is treated as an RL agent: the tokens generated so far form the state, the next token to be generated is the action, and the reward is the feedback given by the discriminator, which evaluates the generated sequence and thereby guides the generator.

Reinforcement learning (RL) is the gradual stamping-in of behaviour [86, 166], in which an agent learns how to act in an environment by performing actions and observing the outcomes, as shown in Fig. 11. RL allows software agents and machines to automatically determine the ideal behaviour within a specific context so as to maximize performance. The agents learn their behaviour from simple reward feedback, known as the reinforcement signal.

When using reinforcement learning for automated text generation, the actions are writing words, and the states are the words already written by the algorithm. The actions, rewards, and policies corresponding to text generation tasks are mentioned in Table 6.
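The RL view of generation can be sketched with the REINFORCE policy-gradient rule. This is a simplified illustration rather than SeqGAN itself: it assumes a hypothetical three-word vocabulary, a policy that ignores the state (the prefix) for brevity, and a fixed hypothetical scoring function in place of a trained discriminator. SeqGAN additionally trains the discriminator adversarially and uses Monte Carlo rollouts to reward partial sequences.

```python
import math
import random

VOCAB = ["good", "fine", "bad"]       # hypothetical vocabulary
rng = random.Random(42)

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def discriminator_reward(tokens):
    """Hypothetical stand-in for a trained discriminator's score of how
    'real' a finished sequence looks; here real text simply favours 'good'."""
    return sum(tok == "good" for tok in tokens) / len(tokens)

def generate(logits, length=4):
    """Sample one action (word index) per step. The state would be the prefix
    generated so far; this toy policy ignores it for brevity."""
    probs = softmax(logits)
    return [rng.choices(range(len(VOCAB)), weights=probs)[0] for _ in range(length)]

def reinforce_step(logits, baseline, lr=0.5):
    """One REINFORCE update: raise the log-probability of the sampled actions
    in proportion to (reward - baseline)."""
    actions = generate(logits)
    reward = discriminator_reward([VOCAB[a] for a in actions])
    probs = softmax(logits)
    advantage = reward - baseline
    for a in actions:
        for k in range(len(logits)):
            # d/d logit_k of log softmax(logits)[a] = 1[k == a] - probs[k]
            logits[k] += lr * advantage * ((1.0 if k == a else 0.0) - probs[k])
    return reward

logits, baseline = [0.0, 0.0, 0.0], 0.0
for _ in range(500):
    reward = reinforce_step(logits, baseline)
    baseline = 0.9 * baseline + 0.1 * reward   # moving-average baseline
print(dict(zip(VOCAB, (round(p, 3) for p in softmax(logits)))))
```

The moving-average baseline does not change the expected update direction; it only reduces the variance of the sampled updates.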

4.2.5 Transformer models

The Transformer model introduced in [195] has enabled improvements in a wide range of text generation tasks. Transformers rely on attention mechanisms to model global dependencies between input and output, and they can capture linguistic knowledge of vocabulary, syntax, and semantics. The Transformer is an encoder-decoder architecture. RNN and LSTM architectures have significant difficulties with longer sequences as a result of the vanishing gradient problem [16, 146]: the probability of keeping context from a word that is far away from the word being processed diminishes exponentially as the sentence grows longer. Parallelization is a practical way to train on larger datasets, and Transformers scale with data and model size, capture features of longer sequences, and enable parallel training because they do not process a sequence step by step. As a result, more effective and coherent language models are feasible. Before Transformers, most ATG models were trained with supervised learning. However, supervised models need a large amount of annotated data to learn a particular task, which is often not easily available, and they often fail to generalize to other tasks.

The Transformer is a sequence-to-sequence model consisting of an encoder and a decoder, each of which is a stack of identical blocks. The overall architecture of the Transformer is shown in Fig. 12. Each encoder block consists of a multi-head self-attention module followed by a position-wise feed-forward network (FFN). Each module is wrapped in a residual connection followed by layer normalization. Compared with the encoder blocks, decoder blocks insert a third module, known as encoder-decoder attention, between the multi-head self-attention module and the FFN module. Furthermore, the self-attention module in the decoder is masked to preserve the auto-regressive property, preventing each position from attending to subsequent positions.
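Scaled dot-product attention with the decoder's causal mask can be sketched as follows. This single-head sketch assumes, for brevity, that the queries, keys, and values are the raw input vectors; a real Transformer first applies learned projections and adds the residual, layer-normalization, and FFN sublayers described above.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    With causal=True, position i may only attend to positions j <= i,
    as in the masked self-attention of a Transformer decoder.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))   # masked: future position
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)              # masked positions get weight 0
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights

# Self-attention over three hypothetical 2-dimensional token vectors.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x, causal=True)
for row in weights:
    print([round(w, 3) for w in row])
```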

Transformer models such as BERT [40] and GPT-3 [20] are pre-trained on large corpora using unsupervised learning. These pre-trained transformers fall into three categories: encoder-only (like BERT), decoder-only (like GPT-n), and encoder-decoder (like T5). Bidirectional Encoder Representations from Transformers (BERT) is the first deep bidirectional, unsupervised language representation model [40]. It builds on earlier work on contextual representations and uses the Transformer's attention mechanism to learn contextual relations between words in a text [40]. The Generative Pre-trained Transformer (GPT) is an autoregressive language model for generating text; its largest version, GPT-3, has 175 billion parameters. GPT is pre-trained on unlabeled data and then fine-tuned for a specific downstream task. The GPT-n series (GPT-1 [162], GPT-2 [20], GPT-3 [20]) shows strong performance on various ATG tasks, and the larger models perform well even without fine-tuning or gradient updates. Transformer-based models such as GPT, T5, XLNet, and BERT [20, 40, 163] have shown impressive results on several tasks, including question answering, language modelling, machine translation, sentiment analysis, and summarization, as shown in Table 7.

Discussion on research question 3

With the advancements in deep neural networks, text generation models can generate realistic, fluent, and coherent natural language. This section helps answer RQ 3: “What are the various approaches and associated architectures in the field of text generation?” The field of text generation has undergone significant changes, from traditional template-based and statistical approaches to, most recently, pre-trained transformer-based models. As a result of these advancements, the field has witnessed remarkable progress and a surge of research interest. The shift began with recurrent neural networks, long short-term memory networks, and gated recurrent units for learning language representations, followed by sequence-to-sequence learning, which opened a new chapter characterized by the wide application of the encoder-decoder architecture. However, these sequence-to-sequence models struggle to capture long-term dependencies, which motivated the development of pointer networks and attention networks. Subsequently, the Transformer architecture, which combines an encoder and a decoder with a self-attention mechanism, became widely used for text generation tasks. The availability of powerful deep neural models, computationally intensive architectures, and pre-trained transformer models has led to the widespread adoption of a variety of text generation applications, including text summarization, machine translation, creative applications such as story generation, and dialogue generation. However, how well these models perform on different neural text generation tasks depends on various factors, including the type of task, the architecture of the model, the size and quality of the training data, and the evaluation metrics used to measure performance.

Deep learning approaches have flourished owing to the availability of large corpora and significant computational resources. The standard datasets for text generation applications are described in the next section.

5 Datasets for text generation tasks

In research, datasets are used to assess the performance of a proposed method. Deep learning models trained on large-scale datasets demonstrate unrivalled abilities to learn patterns in the data, opening up many new possibilities for creating realistic and coherent text. Several datasets have recently been created to support the training of text generation models; they vary in output length, generation task, and domain specificity. This section describes some of the datasets commonly used in text generation tasks. Table 8 provides a shortlist of standard task-specific datasets, organized by text generation application.

Each dataset consists of several files, including training, testing, and validation splits, in various formats: some datasets provide files in JSON, plain-text, or Excel format, while others use CSV. To give a better sense of these datasets, screenshots of a few of them are provided in Fig. 13.

Screenshots of datasets [a CNN/DM dataset for summarization task b Gigaword dataset for summarization task c SQuAD dataset for question-answering task d Empathetic dialogues dataset for dialogue generation task e ROC story dataset for story generation and question-answering task f WMT dataset for translation task g Quora question pairs dataset for paraphrasing task h Flickr30k dataset for captioning task]

Discussion on research question 4

The availability of large and diverse datasets has also contributed to recent progress in text generation. This section helps answer RQ 4: “What are the available datasets adopted in which the stated applications are organized?” Many datasets are available, and they vary in text generation task and domain specificity. The datasets are used to train, test, and validate text generation models. Nowadays, there is a trend toward training models on massive datasets, and models trained on massive datasets show an unmatched ability to generate fluent and coherent text automatically. In addition, training text generation models on diverse datasets offers an opportunity to improve their robustness. Thus, when training a text generation model for a particular task, it is critical to choose the dataset carefully. Fig. 14 shows the most commonly used datasets for each text generation task, together with the percentage of articles in which each dataset is used for that task.

Summarized percentage of datasets usage in particular text generation task [a Summary of text summarization datasets b Summary of question answering datasets c Summary of dialogue generation datasets d Summary of machine translation datasets e Summary of story generation datasets f Summary of paraphrasing datasets g Summary of image/video description datasets]

Once a model has been trained, text decoding plays a vital role in generating text. The following sections discuss text decoding techniques and optimization methods for text generation.

6 Text decoding techniques and optimization algorithms

The goal of an automatic text generation model is to generate text that is as good as human-written text. The quality of the generated text depends significantly on the optimization technique used during training and on the decoding strategy used after training. This section discusses decoding methods and optimization techniques that are important for text generation.

6.1 Decoding techniques

Decoding is the process of generating natural language text from a trained model. Because the output is produced one time step at a time, the way in which a token is chosen at each step, that is, the decoding strategy, is a crucial aspect of generation. The decoding approach in a neural text generation system describes how the system searches for potential output utterances when generating a sequence; it specifies how words are combined to form text and sentences. Without an appropriate decoding technique, the generated text tends to be vague and dull.

Broadly, decoding can be categorized as autoregressive or non-autoregressive [215]. In autoregressive generation, the target tokens are generated one by one in a sequential manner, as shown in Fig. 15. The beginning and end of decoding are controlled by special tokens, [BOS] (beginning of sentence) and [EOS] (end of sentence), which implicitly determine the target length during decoding.
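Autoregressive decoding with [BOS] and [EOS] can be sketched as follows, assuming a hypothetical next-token table that conditions only on the previous token (a real model conditions on the whole prefix and the input) and, for simplicity, picking the most probable token at each step.

```python
# Hypothetical next-token distributions P(next | previous token).
NEXT = {
    "[BOS]": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "[EOS]": 0.3},
    "dog": {"ran": 0.8, "[EOS]": 0.2},
    "sat": {"[EOS]": 1.0},
    "ran": {"[EOS]": 1.0},
}

def autoregressive_decode(max_len=10):
    """Generate one token per step, feeding each output back in as input.
    Decoding starts from [BOS] and stops at [EOS] (or max_len), so the
    output length is decided implicitly during decoding."""
    tokens = ["[BOS]"]
    for _ in range(max_len):
        dist = NEXT[tokens[-1]]
        nxt = max(dist, key=dist.get)   # most probable next token
        if nxt == "[EOS]":
            break
        tokens.append(nxt)
    return tokens[1:]

print(autoregressive_decode())
```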

Traditional models capture the true distribution of words with this strategy, the fundamental reason being its left-to-right conditional dependence property. One issue with this technique is that Transformer-based models cannot carry their training benefits over to inference: training can be non-sequential, but inference with autoregressive decoding remains sequential. Another issue with the autoregressive approach is that it is time-consuming, especially for generating long target sentences. To alleviate this problem and accelerate decoding, non-autoregressive generation was proposed [65, 72]. The non-autoregressive decoding technique can generate all the target tokens in parallel, as shown in Fig. 16.

Because all the target tokens are generated in parallel in this method, no special tokens or target information are needed to guide the termination of decoding, and inference speed is greatly increased. [115, 157] propose non-autoregressive techniques for summarization. Autoregressive techniques can be further divided into search-based and sampling-based techniques. Common decoding strategies include greedy search, beam search, random sampling, top-k sampling, and nucleus sampling, as discussed below.
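By contrast, non-autoregressive decoding can be sketched as below, assuming hypothetical per-position distributions that a model has produced in one forward pass after predicting a target length of three. Because no position reads another position's output, the positions could be decoded in parallel; the list comprehension simply makes that independence explicit.

```python
# Hypothetical output of one forward pass: a distribution for each of the
# 3 target positions (the target length is predicted beforehand).
POSITION_DISTS = [
    {"the": 0.8, "a": 0.2},
    {"cat": 0.5, "dog": 0.4, "sat": 0.1},
    {"sat": 0.6, "cat": 0.4},
]

def pick(dist):
    """Most probable token at one position."""
    return max(dist, key=dist.get)

def non_autoregressive_decode(position_dists):
    # Each position is decided independently of the others, so these picks
    # could run in parallel; no [BOS]/[EOS] tokens are involved.
    return [pick(dist) for dist in position_dists]

print(non_autoregressive_decode(POSITION_DISTS))
```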

Greedy search selects the most probable word according to the language model, uses it as the next word, and feeds it as input to the next step until the maximum length is reached [207]. However, greedy search can get stuck in loops that repeat the same words, a problem that random sampling alleviates [14]. Greedy search also lacks backtracking, which can result in unnatural and meaningless sentences. Moreover, greedy search is not guaranteed to find the highest-probability sentence [109]; this problem has been addressed by the beam search decoding method.
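The non-optimality of greedy search can be seen on a hypothetical two-step model: greedy search commits to the locally best first word and misses the sequence with the highest overall probability, which an exhaustive search over all two-word sequences finds. The probabilities are illustrative assumptions.

```python
# Hypothetical model: P(first word) and P(second word | first word).
FIRST = {"A": 0.6, "B": 0.4}
SECOND = {"A": {"C": 0.55, "D": 0.45}, "B": {"E": 0.9, "F": 0.1}}

def seq_prob(seq):
    w1, w2 = seq
    return FIRST[w1] * SECOND[w1][w2]

def greedy():
    """Pick the most probable word at each step, never revisiting a choice."""
    w1 = max(FIRST, key=FIRST.get)
    w2 = max(SECOND[w1], key=SECOND[w1].get)
    return (w1, w2)

def exhaustive():
    """Score every complete sequence (feasible only for toy examples)."""
    return max(((w1, w2) for w1 in FIRST for w2 in SECOND[w1]), key=seq_prob)

g, e = greedy(), exhaustive()
print(g, seq_prob(g))   # greedy choice
print(e, seq_prob(e))   # most probable sequence overall
```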

Random sampling picks the next word at random according to the conditional word probabilities produced by the text generation model [14]. However, sampling directly from these probabilities often leads to incoherent text, and this decoding method is not deterministic. This can be mitigated by applying a softmax with a temperature parameter to the model's scores: varying the temperature makes the distribution sharper (temperature below 1) or smoother (temperature above 1).
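Sampling with a temperature-scaled softmax can be sketched as below, assuming hypothetical logits for a three-word vocabulary. Dividing the scores by a temperature below 1 sharpens the distribution; a temperature above 1 flattens it.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(words, logits, temperature=1.0, rng=random):
    """Draw one word at random according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

words = ["cat", "dog", "banana"]
logits = [2.0, 1.5, -1.0]   # hypothetical model scores
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(sample(words, logits, temperature=0.7, rng=random.Random(0)))
```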

Beam search tracks multiple possible sequences at once: at each step, it keeps the k most probable partial sequences, where k is the beam size, and it aims to find the sentence with the highest overall probability [109, 198]. Text generated with beam search is more fluent than text generated with greedy search, and when the beam size is one, beam search reduces to greedy search. However, beam search tends to produce a list of nearly identical sequences that fail to capture the inherent ambiguity of complex text generation tasks. Diverse Beam Search addresses this problem by formulating beam search as an optimization problem and augmenting the objective with a diversity term [197].
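A compact beam search can be sketched over a hypothetical next-token table (a stand-in for a trained language model), scoring hypotheses by summed log-probabilities. With a beam size of 1 it returns the greedy result, while a beam size of 2 recovers the higher-probability sequence.

```python
import math

# Hypothetical model: P(next token | previous token).
NEXT = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"C": 0.55, "D": 0.45},
    "B": {"E": 0.9, "F": 0.1},
    "C": {"</s>": 1.0}, "D": {"</s>": 1.0},
    "E": {"</s>": 1.0}, "F": {"</s>": 1.0},
}

def beam_search(beam_size, max_len=5):
    """Keep the beam_size highest-scoring partial sequences at every step."""
    beams = [(["<s>"], 0.0)]            # (tokens, summed log-probability)
    finished = []
    for _ in range(max_len):
        candidates = [(tokens + [word], score + math.log(p))
                      for tokens, score in beams
                      for word, p in NEXT[tokens[-1]].items()]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            # Hypotheses that emitted </s> are complete; the rest stay in the beam.
            (finished if tokens[-1] == "</s>" else beams).append((tokens, score))
        if not beams:
            break
    best_tokens, best_score = max(finished + beams, key=lambda c: c[1])
    words = [t for t in best_tokens if t not in ("<s>", "</s>")]
    return words, math.exp(best_score)

print(beam_search(beam_size=1))   # behaves like greedy search
print(beam_search(beam_size=2))
```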

In top-k sampling, the k most likely next words are selected, and the next word is sampled only from these k words [53]. Because k is fixed, the number of words filtered from the next-word probability distribution does not adapt to the shape of that distribution [205]. As a result, unlikely words may be selected among these k words if the next-word probability distribution is very sharp.
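Top-k filtering and sampling can be sketched as follows, assuming a hypothetical, very sharp next-word distribution. With k = 3, the low-probability word "zebra" stays in the candidate set and is renormalized, illustrating the weakness described above.

```python
import random

def top_k_filter(probs, k):
    """Keep the k most likely words and renormalize their probabilities."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {word: p / total for word, p in top}

def sample_from(dist, rng=random):
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=1)[0]

# Hypothetical, very sharp next-word distribution.
sharp = {"the": 0.90, "a": 0.05, "zebra": 0.03, "qux": 0.02}
kept = top_k_filter(sharp, k=3)
print(kept)                                   # "zebra" survives the cut
print(sample_from(kept, random.Random(1)))
```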

Nucleus sampling (or top-p sampling) samples words from the smallest possible set whose cumulative probability reaches a threshold p. As a consequence, the number of words in the set can dynamically shrink or grow according to the next-word probability distribution [75]. According to [75], it is the best available decoding strategy for generating long-form text that is both high-quality, as measured by human evaluation, and as diverse as human-written text.
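Nucleus filtering can be sketched in the same style, assuming two hypothetical next-word distributions and p = 0.9. The candidate set shrinks to a single word when the distribution is sharp and grows to the whole vocabulary when it is flat; the sketch keeps words until the cumulative probability is at least p.

```python
import random

def top_p_filter(probs, p):
    """Smallest set of most likely words whose cumulative probability
    reaches p, renormalized."""
    kept, cumulative = {}, 0.0
    for word, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[word] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {word: q / total for word, q in kept.items()}

def sample_from(dist, rng=random):
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=1)[0]

# Hypothetical next-word distributions.
sharp = {"the": 0.90, "a": 0.05, "zebra": 0.03, "qux": 0.02}
flat = {"red": 0.30, "blue": 0.30, "green": 0.25, "gray": 0.15}
print(top_p_filter(sharp, 0.9))   # one word
print(top_p_filter(flat, 0.9))    # all four words
print(sample_from(top_p_filter(flat, 0.9), random.Random(0)))
```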

Other decoding techniques include semi-autoregressive, iterative, and mixed decoding [215]. Semi-autoregressive decoding generates multiple target tokens at each decoding step, while iterative decoding provides target information at each decoding step. Some works aim to combine these decoding strategies in a unified model; for example, Tian et al. [190] propose a unified approach for machine translation that supports several decoding modes, including autoregressive and iterative decoding.

Given these strengths and limitations, the choice of decoding method has a significant impact on the linguistic features and quality of the generated text.

6.2 Optimization techniques

With the tremendous growth in the amount of data, optimization has become an essential part of deep learning. The goal of an optimization algorithm is to minimize the loss function, ideally by reaching its global minimum, although in practice training often settles in a good local minimum. Optimization techniques help deep learning models train more efficiently and achieve better results. This section describes the optimization methods commonly used for neural text generation. There has been much interest in modifying the stochastic gradient descent algorithm with an adaptive learning rate for more stable training, e.g., AdaGrad, AdaDelta, and Adam, as shown in Fig. 17.

Table 9 summarizes these optimization algorithms in terms of their properties, pros, and cons.
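The update rules of plain SGD, AdaGrad, and Adam can be compared on a toy one-dimensional loss, f(θ) = (θ - 3)², whose minimum is at θ = 3. The loss, starting point, and learning rates are illustrative assumptions; AdaDelta is omitted for brevity, and the Adam settings β1 = 0.9 and β2 = 0.999 are the commonly used defaults.

```python
import math

def grad(theta):
    """Gradient of the toy loss f(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

def sgd(theta, steps, lr=0.1):
    for _ in range(steps):
        theta -= lr * grad(theta)              # fixed learning rate
    return theta

def adagrad(theta, steps, lr=0.5, eps=1e-8):
    g_sq_sum = 0.0
    for _ in range(steps):
        g = grad(theta)
        g_sq_sum += g * g                      # accumulate all squared gradients
        theta -= lr * g / (math.sqrt(g_sq_sum) + eps)   # step size shrinks over time
    return theta

def adam(theta, steps, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g        # moving average of gradients (momentum)
        v = beta2 * v + (1 - beta2) * g * g    # moving average of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for zero initialization
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

for name, optimizer, steps in [("SGD", sgd, 200), ("AdaGrad", adagrad, 500), ("Adam", adam, 500)]:
    print(name, round(optimizer(0.0, steps), 4))
```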

Discussion on research question 5

Text decoding methods and optimization techniques significantly affect the quality of the generated text. This section helps answer RQ 5: “What are various text decoding and optimization techniques used to automatically generate text?” These techniques can be applied to different text generation tasks, such as text summarization, story generation, paraphrasing, translation, and image captioning. Depending on the text generation task, autoregressive or non-autoregressive decoding can be used. Using a good model with a poor decoding strategy leads to repetitive loops and to inconsistent, incoherent text, so the decoding strategy should be chosen carefully. Decoding methods such as top-k sampling and nucleus sampling produce more fluent text than beam search and greedy search. However, top-k sampling has also been observed to generate repetitive word sequences. It has also been observed that greedy and beam search perform better if the text generation model is trained with a different training objective.

In an end-to-end neural framework, all inputs, including the target text, are first mapped into numeric embeddings, and the neural modules then feed information forward layer by layer. Finally, the last output of the neural framework is used both to generate the target tokens with a decoding strategy and to calculate the losses used to optimize the parameters. The optimization algorithm determines how these parameters are updated from the losses, so optimization algorithms are as important for text generation models as decoding strategies. The training performance of a model has been observed to depend on the choice of optimization algorithm, and text generation models perform better when the concepts behind the various optimization algorithms and the roles of their parameters are well understood. Fig. 18 shows the most commonly used optimizers for each text generation task, together with the percentage of articles in which each optimizer is used for that task. Many real-time text generation tools are also available; these are discussed in the next section.

Summarized percentage of optimizers used in particular text generation task [a Summary of text summarization optimizers b Summary of question answering optimizers c Summary of dialogue generation optimizers d Summary of machine translation optimizers e Summary of story generation optimizers f Summary of paraphrasing optimizers g Summary of image/video description optimizers]

7 Real-time text generation tools

Text generation plays an essential role in various applications, such as paraphrasing, question generation, summarization, and dialogue systems. Text generation systems assist human writers and make the writing process more effective and time-saving. This section describes several real-time tools for text generation, which are listed in Table 10.

Discussion on research question 6

Existing automatic text generation tools can generate interesting text but are limited in the consistency, fluency, controllability, and diversity of the generated text. This section helps answer RQ 6: “What real-time tools are available for automatic text generation tasks?” There are diverse categories of automated text generation tools for different real-world applications: some excel at generating short texts such as headlines or tweets, while others excel at generating long texts such as articles or blog entries. However, the authenticity of content produced by automated text generation tools cannot be guaranteed, and fake or inaccurate content is circulating as a result. Many sectors have started using automatically generated text to improve user experience, as the algorithms can generate human-like text. But these tools can also be misused; for example, students may use them to cheat on schoolwork, which hampers their learning. The content generated by these tools is fast and cheap to produce but lacks the artistry involved in expressing thoughts. Moreover, some of the observed tools produce text of only average quality, while others generate fluent text but are not freely accessible, and the generated text is sometimes superficial and repetitive. Thus, much research is still under way, and many problems remain to be solved, including long-term dependencies, redundancy in the generated text, word sense ambiguity, incorrect grammar, and consistency. These tools are limited by the data they were trained on and may lack a deep understanding of the topic being written about. Automated text generators cannot provide original and creative ideas, and they may make people lazy and dependent on automation. To support effective text generation and reliable assessment of text generation models, many task-specific evaluation measures have been developed, as described in the next section.

8 Evaluation metrics for text generation

This section discusses the automatic evaluation measures frequently used to assess advancements in text generation systems. Without proper evaluation, it is difficult to measure a system’s competitiveness, which hinders the development of advanced text generation algorithms. Evaluation metrics aim to assess the effectiveness of text generation systems, and for this purpose a robust and unbiased metric is important; ideally, an automatic metric correlates well with human judgements. It is also desirable to employ a variety of metrics to assess a system along multiple dimensions. The most popular automated methods for evaluating machine-generated text are listed in Table 11, together with the pros and cons of each metric.

Automated text evaluation metrics are used to assess models for text generation tasks such as question–answer generation, text summarization, and machine translation. These measures provide a score that reflects the similarity between a human-written reference text and an automatically generated text. The choice of metric for a given text generation task depends on several criteria, as shown in Table 12.

Discussion on research question 7

As the field of text generation continues to advance, evaluation is becoming critical for assessing progress in the area and for comparing text generation models. This section answers RQ 7: “Which metrics or indicators are used to evaluate automated text generation?” We observed that language models have traditionally been evaluated based on perplexity, which measures how probable the model finds a text, i.e., how well it predicts each word given the preceding ones; lower perplexity indicates a better fit. There are many well-established automated metrics for specific text generation tasks, such as METEOR and ROUGE for text summarization, BLEU for machine translation, and SPICE and CIDEr for image captioning. However, no universal metric suits all text generation tasks and reflects all relevant features of text. These metrics work well for judging whether a model has generated natural, human-like, and grammatically correct sentences. With open-ended generation tasks such as storytelling or dialogue generation, however, the model is expected not only to produce high-quality text but also to be creative and diverse. Another important aspect of open-ended text generation is commonsense reasoning, which is referred to as consistency. Since models are expected to produce much longer text in these tasks, they are more prone to generating illogical or factually incorrect sentences. BEAMetrics [176], a Benchmark to Evaluate Automatic Metrics, helps to better understand the strengths and limitations of current metrics across a broad spectrum of tasks. Fast and reliable evaluation metrics are key to research progress. Traditional natural language generation metrics are fast but not very reliable; conversely, newer metrics based on large pretrained language models are much more reliable but require significant computational resources [83].
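Perplexity is usually computed as the exponential of the average negative log-likelihood that a model assigns to each token of a held-out text. The minimal sketch below assumes that the per-token conditional probabilities have already been obtained from some language model; the function name `perplexity` and the example probabilities are hypothetical.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to each token.

    token_probs[i] is assumed to be p(w_i | w_1, ..., w_{i-1}) under the model.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token (natural logarithm).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    # Perplexity is the exponentiated average negative log-likelihood.
    return math.exp(avg_nll)


# Hypothetical probabilities for a four-token sentence.
print(perplexity([0.5, 0.25, 0.5, 0.125]))  # ≈ 3.36, the fourth root of 128
```

A model that assigned probability 0.25 to every token would have perplexity 4, which is why perplexity is often read as the effective number of equally likely choices per token.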
It is important to evaluate language models in all dimensions of open-ended text generation: quality, diversity, and consistency [140]. When language models are evaluated on open-ended text generation tasks, Corpus-BLEU has been observed to be the best metric for the quality of generated text because it agrees closely with human judgement. For diversity, Self-BLEU appears to be the best metric because it is simple to calculate. To evaluate the consistency of the generated text, selection accuracy on the MultiNLI dataset is sufficient in most cases, although other datasets, such as StoryCloze, can be considered for specific tasks such as story generation. Thus, the evaluation of large language models such as GPT is task-specific [24, 63, 119]. When evaluating text generation models, it is necessary to rely on multiple metrics that reflect different text attributes, such as fluency, grammaticality, coherence, readability, and diversity. Although human evaluation is the gold standard for assessing the quality of machine-generated text, it is costly and time-consuming, so automated measures are widely used instead. However, these automated measures should supplement human judgments rather than replace them, and they should be used only when they correlate reasonably well with human judgments.
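Self-BLEU treats each generated text as a hypothesis and the remaining generated texts as its references, then averages the resulting BLEU scores; a higher Self-BLEU means the generations resemble one another, i.e., lower diversity. The sketch below uses a simplified, from-scratch BLEU (clipped n-gram precision up to 4-grams, a brevity penalty, and a small epsilon floor in place of a published smoothing method). These simplifications are assumptions for illustration, so the numbers will not match established BLEU implementations exactly.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis, references, max_n=4, eps=1e-9):
    """Simplified sentence-level BLEU with an epsilon floor (assumed smoothing)."""
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hypothesis, n)
        # Clip each hypothesis n-gram count by its maximum count in any reference.
        max_ref_counts = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref_counts[gram] = max(max_ref_counts[gram], count)
        clipped = sum(min(c, max_ref_counts[g]) for g, c in hyp_counts.items())
        total = max(sum(hyp_counts.values()), 1)
        log_precision_sum += math.log(max(clipped / total, eps))
    # Brevity penalty against the reference length closest to the hypothesis length.
    hyp_len = len(hypothesis)
    ref_len = min((abs(len(r) - hyp_len), len(r)) for r in references)[1]
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / max(hyp_len, 1))
    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return bp * math.exp(log_precision_sum / max_n)


def self_bleu(generations, max_n=4):
    """Average BLEU of each generation against all others (higher = less diverse)."""
    if len(generations) < 2:
        raise ValueError("Self-BLEU needs at least two generations")
    scores = []
    for i, hyp in enumerate(generations):
        others = [g for j, g in enumerate(generations) if j != i]
        scores.append(sentence_bleu(hyp, others, max_n))
    return sum(scores) / len(scores)


repetitive = [s.split() for s in ["the cat sat on the mat",
                                  "the cat sat on the mat",
                                  "the cat sat on a mat"]]
diverse = [s.split() for s in ["the cat sat on the mat",
                               "a dog ran through the park",
                               "birds sing at dawn today"]]
print(self_bleu(repetitive) > self_bleu(diverse))  # True
```

The near-duplicate set scores close to 1, while the varied set scores close to 0, matching the intuition that Self-BLEU penalizes a model that keeps producing the same text.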

However, many open problems remain in automated text generation; these are discussed in the next section.

9 Challenges of automated text generation tasks

Generating fluent, meaningful, well-structured, and coherent text is pivotal for many text generation tasks. It takes humans significant effort to model long-term dependencies while producing consistent text, and doing so automatically is equally challenging because of the discrete nature of textual data. This section identifies the main challenges to effective text generation, as follows.

9.1 Text summarization

Text summarization is a challenging task because it requires thorough text analysis to produce a reliable summary [57]. A good summary must include the relevant details and be precise, but it must also satisfy criteria such as non-redundancy, significance, coverage, coherence, and readability [145]. Achieving all of these in a single summary is a major challenge. While many text summarization models produce tangible results, several issues are often overlooked: the models tend to reproduce factually inaccurate information, struggle with out-of-vocabulary (OOV) words, and repeat the same word or phrase several times [142]. Another challenge is to develop a system that summarizes multilingual texts and generates summaries whose quality matches that of human-generated ones [57]. For multi-document summarization, redundancy is the biggest problem [15, 239]. Systems proposed so far try to identify important sentences within groups of different themes and therefore suffer from the problem of sentence ordering. Richer datasets and more computational power are needed, which brought pre-trained models into the picture [195]. Hybrid approaches, which combine extractive and abstractive techniques, have recently gained attention [221]; developing efficient hybrid methods that generate good-quality summaries closely matching human-written ones is another major challenge. Automatic summarization evaluation metrics such as ROUGE [114] are not considered complete [147]. The challenge in summary evaluation is to determine how adequate or useful a summary is relative to its source. Thus, methods for generating and evaluating summaries should complement each other.
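ROUGE-N, in its basic recall form, counts how many of the reference summary’s n-grams also occur in the candidate summary. The minimal sketch below assumes whitespace tokenization, a single reference, and no stemming or stopword handling; the helper names and example sentences are hypothetical. It illustrates one reason the metric is considered incomplete: a summary that paraphrases the reference can convey essentially the same content yet score far lower than one that copies its wording.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate, reference, n=1):
    """Fraction of reference n-grams matched in the candidate (clipped counts)."""
    ref = ngram_counts(reference, n)
    cand = ngram_counts(candidate, n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total


reference = "the company reported a sharp rise in profits".split()
extractive = "the company reported a rise in profits".split()
paraphrase = "earnings at the firm increased steeply".split()

print(rouge_n_recall(extractive, reference))  # 0.875
print(rouge_n_recall(paraphrase, reference))  # 0.125
```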

9.2 Question-answering

Automatic question–answer generation is a significant advancement, but many challenging issues are yet to be resolved. One such issue is precisely understanding natural language questions and deducing their exact meaning to retrieve specific responses [50]. Another challenge is selecting questions that cover the content well and are of appropriate difficulty; for multiple-choice questions, generating distractors is a major challenge. As input texts grow longer, sequence-to-sequence models struggle to use the relevant context effectively while ignoring unnecessary information [47]. The models also pay little attention to the answer, which is critical for generating the question word (for example, “who” versus “when”); as a result, the generated question words often do not match the answer type [186]. Earlier neural question generation models suffer from a problem in which a large percentage of the generated questions contain words from the question target (i.e., the answer), so they generate unintended questions [88]. The models are also unaware of the positions of context words: instead of attending to words that are close and relevant to the answer, they copy context words that are far from and irrelevant to the answer [186]. The most frequent problem is the lexical gap between questions, which arises from variation in how questions are formulated in natural language: users phrase questions differently while asking for the same information, producing questions that differ lexically but are semantically equivalent. Word sense ambiguity is still a challenge in the QA field [180]. Leveraging the wide range of available datasets for question–answer generation is not trivial, and selecting an appropriate dataset is still an open problem [50]. Further challenges in interpreting a question arise from short user utterances and from ambiguous or missing information.
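A toy illustration of the lexical gap, assuming word-set (Jaccard) overlap as a stand-in for a purely surface-level matcher: two questions asking for the same information share fewer words than two questions asking for different information, so a system relying only on lexical overlap would rank them the wrong way round. The `jaccard` helper and the example questions are hypothetical and are not drawn from the surveyed studies.

```python
def jaccard(a, b):
    """Jaccard similarity between the sets of lowercase word types in two strings."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


q1 = "how tall is the eiffel tower"
q2 = "what is the height of the eiffel tower"  # same information need as q1
q3 = "how old is the eiffel tower"             # different information need

print(jaccard(q1, q2))  # 4/9 ≈ 0.444
print(jaccard(q1, q3))  # 5/7 ≈ 0.714
```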

9.3 Dialog response generation

Conversational systems usually rely on RNN models, which are not able to model high-level variability [178]. End-to-end conversational agents are prone to generating dull, generic, and boring responses [173]. To elicit coherent, novel, and insightful responses that are in line with the conversation, conversational agents require adequate, accessible data [178, 181]. Moreover, despite the strong performance of neural networks, conversational models lack style, which is an issue because users may not be entirely satisfied with the interaction. Another problem is encoding contextual data, such as world facts from knowledge bases or prior conversations. The generated response has to be contextually relevant to the conversation and also convey paralinguistic information accurately. Generating personalized dialogues is another challenging task [141].

9.4 Machine translation

Although neural machine translation (NMT) has seen rapid research progress, many challenges remain; the major ones are listed here. A major limitation of NMT is that it cannot efficiently incorporate larger contextual information because of the limited learning ability of the model itself. Word reordering has received little attention so far [124]. Problems with the alignment mechanism and vocabulary coverage affect most NMT models [192]. NMT also struggles with the translation of idioms [3]. MT for low-resource languages is another major challenge, owing to morphological complexity and diversity as well as a lack of resources for many languages [79].
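Vocabulary coverage can be quantified with the out-of-vocabulary (OOV) rate: the share of test tokens that a model’s fixed vocabulary does not contain. The sketch below is an illustrative assumption (whitespace tokens and a vocabulary truncated to the most frequent training words, as in word-level systems) showing how shrinking the vocabulary raises the OOV rate; it does not reproduce any specific NMT system, and the toy sentences are hypothetical.

```python
from collections import Counter


def oov_rate(train_tokens, test_tokens, max_vocab=None):
    """Share of test tokens not covered by a vocabulary built from training tokens.

    If max_vocab is given, only the max_vocab most frequent training words are
    kept, mimicking a fixed-size word vocabulary.
    """
    counts = Counter(train_tokens)
    # most_common(None) returns every word, i.e. an unrestricted vocabulary.
    vocab = {word for word, _ in counts.most_common(max_vocab)}
    if not test_tokens:
        return 0.0
    unknown = sum(1 for tok in test_tokens if tok not in vocab)
    return unknown / len(test_tokens)


train = "the cat sat on the mat the dog sat".split()
test = "the dog sat on the rug".split()

print(oov_rate(train, test))               # 1/6: only "rug" is unknown
print(oov_rate(train, test, max_vocab=2))  # 0.5: vocabulary is {"the", "sat"}
```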

9.5 Story generation

Automatic story generation is challenging because it requires modeling long-range dependencies and generating coherent natural language that describes a sensible sequence of events [227]. Another challenge is to create interactive narration along a story path such that the interactor can modify the story space or even the plot [208]. Commonly observed issues in generated stories are repeated plots, conflicting logic, and inter-sentence incoherence [20, 34, 53]. Another challenge is to use constraints to generate a creative story within the structure of the plot [151]. In most systems, evaluating the topicality, fluency, and overall quality of the generated stories poses a unique challenge [53].

9.6 Paraphrase generation

The ability to automatically generate alternative phrasings of the same content has been shown to be useful in several NLG areas, such as text summarization and question generation [155]. Automatically generating diverse and accurate paraphrases remains difficult because of the complexity of natural language [224]. Evaluating the generated paraphrases is the most difficult aspect [105]. Another issue to be addressed is generating multiple diverse, high-quality paraphrases to enhance generalization and robustness [160]. Modeling holistic properties of sentences, such as topic, style, and other features, is still challenging.

9.7 Image captioning/video description

The major challenge in describing visual information in text is learning an intermediate representation that bridges the visual domain and the natural language domain [127]. Another challenge is generating fine-grained, natural descriptions of images or videos [42]. For instance, occlusion of interacting objects and unclear unit boundaries make it harder to decode the intent behind human behavior in a video. Automatically generating textual reports for medical images, to help medical professionals produce reports more accurately and efficiently, poses two further challenges: generating long texts with several sentences or even paragraphs, and generating captions in a wide range of heterogeneous forms [229].

Discussion on research question 8

Automated text generation applications face various challenges, including generating human-like text that is fluent, unambiguous, and diverse. This section helps to answer RQ 8: “What challenges are faced in automated text generation tasks?” Because textual data is discrete, it takes time and effort to model long-term dependencies while generating consistent text. The success of applying neural models to different text generation tasks therefore depends on various factors, including the type of task, the model architecture, the size and quality of the training data, and the evaluation metrics used to measure performance. We also observed that research in automated text generation is hampered because reported results cannot always be reproduced. Since the datasets used for text generation models are not always publicly available, it is difficult to compare different approaches. The size of the training data and other key hyperparameters have a substantial impact on the quality of the generated text. Many open challenges remain, including the generation of fluent, coherent, diverse, controllable, and consistent human-like text. Inspired by these challenges, the future aspects of this research area are presented in the next section.

10 Conclusion and future aspects