Abstract

Camera calibration is a crucial step in 3D reconstruction. To improve reconstruction accuracy, we propose a calibration method for the paracatadioptric camera based on the projective properties of spheres. The method starts from the three great circles that are parallel to the projection of the sphere onto the unit viewing sphere in the imaging model of the paracatadioptric camera. The image of the absolute conic is determined from the orthogonal vanishing points obtained from the intersections of the projections of the three great circles on the image plane. The intrinsic parameters are then obtained from the algebraic constraints on the image of the absolute conic. Compared with the results obtained by Zhao’s, Yu’s and Li’s methods, 3D reconstruction using our method appears more accurate.

1 Introduction

Camera calibration is an essential step of 3D reconstruction algorithms [24, 25]. Catadioptric cameras are widely used because they overcome the limitation of a small imaging angle. They exist in two versions: non-central catadioptric cameras and central catadioptric cameras [17]. In this paper, we focus on central catadioptric cameras because algebraic constraints can be obtained from the unit viewing sphere model, which makes it easier to derive a calibration method from geometric constraints [11].

According to the type of mirror, central catadioptric cameras can be divided into four categories: planar, ellipsoidal, hyperboloidal and paraboloidal [3]. Lines and points have been used to calibrate the central catadioptric camera [23, 32]. Under the central catadioptric camera, a cluster of line images may be fitted [9]. Duan et al. [8] found algebraic constraints between the projection of a circle on the image plane and the image of the absolute conic (IAC), and used them to calibrate the central catadioptric camera. Compared with these geometric primitives, the projection of a sphere can be fitted more accurately, so the sphere is often chosen for calibration [1, 2, 10, 13, 26]. The projection of the sphere onto the image of a central catadioptric camera has double contact with the modified image of the absolute conic. Two methods of central catadioptric camera calibration have been presented based on the IAC [19, 28]. Zhang et al. [31] discussed the relationship between the dual sphere image and the dual absolute conic, and derived a central catadioptric camera calibration method from it. They also proposed two calibration algorithms based on the apparent contours of the projections of three spheres [30]. Geometric invariants have also been applied to calibrate the central catadioptric camera [27], but those invariants are not suitable for paracatadioptric camera calibration. Two calibration methods for the paracatadioptric camera using lines were presented in [4, 12]. Using spheres, Duan et al. [5, 6] presented two different methods for paracatadioptric camera calibration based on the antipodal sphere images. Projections of two spheres on the image plane were used to calibrate the paracatadioptric camera in [20, 21]. Zhao et al. [14, 15] used properties of the polar of a point at infinity with respect to a circle, together with antipodal sphere images, to obtain the imaged circular points. Yu et al. [34] suggested a method exploiting self-polar triangles to calibrate the paracatadioptric camera. Wang et al. [22] presented three methods to calibrate the paracatadioptric camera using the pole-polar relationship and the antipodal sphere image.

Ying et al. [28] found that, on the unit viewing sphere, the projection of a line is a special case of the projection of a sphere, but this relationship has not been applied to calibrate the paracatadioptric camera. The method of Duan et al. [5, 6] requires fitting the projected contour of the parabolic mirror and needs many images of the sphere. To reduce the number of sphere images required, three different configurations of two spheres were used to calibrate the paracatadioptric camera [20, 21]. However, the spheres must be placed in suitable positions when the images are taken, and the projections of the two spheres onto the image plane are hard to fit. Based on three images of a sphere, the methods in [14, 15, 22, 34] obtain the camera intrinsic parameters from the pole-polar relationship and the antipodal point images. These methods all need the antipodal sphere images, because the antipodal point images are obtained from them; the self-polar triangles and the pole-polar relationship can then be used to calibrate the paracatadioptric camera. These calibration methods are complex because the formulation of the antipodal sphere images must be obtained. If the antipodal sphere images are computed incorrectly, the intrinsic parameters are inaccurate. Therefore, these methods are sensitive to noise.

To improve on the abovementioned calibration methods for the paracatadioptric camera, we propose a calibration method that uses the sphere image directly instead of the antipodal sphere images. First, the projection of a sphere onto the unit viewing sphere is a small circle. We can then compute the great circle that passes through the center of the unit viewing sphere and is parallel to the small circle; it may be regarded as the projection of a line onto the unit viewing sphere. A pair of orthogonal vanishing points is determined by the intersections of the projections of the great circles on the image plane. Based on the constraints between the vanishing points and the image of the absolute conic (IAC), the intrinsic parameters of the paracatadioptric camera can be obtained using three sphere images. The proposed method is simpler, and therefore more robust to noise.

In this paper, we use the relationship between the projections of the sphere and of the line onto the unit viewing sphere to calibrate the paracatadioptric camera. In this way, calibration methods that use lines are connected with those that use spheres. For other types of central catadioptric cameras, however, the mirror parameter is unknown: the formulation of the sphere image then contains an unknown mirror parameter ξ, which cannot be solved directly with our method. Because the sphere image is still a conic, the principle of this paper is also suitable for solving for ξ by adding sphere images.

This paper is organized as follows. Section 2 reviews the unit viewing sphere model for the paracatadioptric camera, the calibration method using the line, and the geometric properties of the line and the sphere under the paracatadioptric camera. Section 3 describes in detail our calibration algorithm, which uses the projection properties of three spheres. Section 4 presents the results of simulated and real experiments, confirming the effectiveness of the algorithm. Section 5 closes the paper with some concluding remarks.

2 Preliminaries

In this section, we review the geometric properties of the line and the sphere under the central catadioptric camera [28]. The imaging and calibration processes for the line under the paracatadioptric camera are also discussed [33].

2.1 Paracatadioptric camera projection model

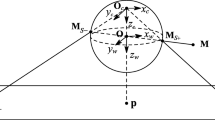

Geyer et al. [11] presented the unit viewing sphere model for central catadioptric camera calibration. There are two coordinate systems, as shown in Fig. 1. One is the global coordinate system O-xw yw zw, whose origin O is placed at the center of the unit viewing sphere and whose zw-axis is perpendicular to the image plane. The other is the coordinate system of the virtual camera, Oc-xc yc zc, whose zc-axis coincides with the zw-axis; this common axis is referred to as the optical axis. Based on the unit viewing sphere model, Ying and Zha [28] suggested a projection process of the sphere for the paracatadioptric camera. It consists of two steps, as follows.

- Step 1: The sphere Q is projected onto the unit viewing sphere, which gives the small circle s.

- Step 2: By the perspective projection of the virtual camera, the small circle s is projected onto the image plane ∏, which gives the conic Cs.

Let the intrinsic parameter matrix of the virtual camera be K. It can be expressed as

where fe, r and s are the effective focal length, the aspect ratio, and the skew factor, respectively, and [u0 v0 1]T is the homogeneous coordinate of the principal point P.
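The two-step model can be sketched numerically. The snippet below is a minimal illustration rather than the authors' implementation. It assumes the common layout K = [[r·fe, s, u0], [0, fe, v0], [0, 0, 1]], which is an assumption because the displayed form of K is not reproduced here, and it places the virtual camera center at Oc = (0, 0, −1), so that a point (x, y, z) on the unit viewing sphere maps to the normalized image point (x/(z+1), y/(z+1)). The function names and all sample values are hypothetical.

```python
def make_K(fe, r, s, u0, v0):
    """Intrinsic matrix under the ASSUMED layout [[r*fe, s, u0], [0, fe, v0], [0, 0, 1]]."""
    return [[r * fe, s, u0],
            [0.0, fe, v0],
            [0.0, 0.0, 1.0]]


def project_sphere_point(K, X):
    """Map a point X = (x, y, z) on the unit viewing sphere to pixel coordinates."""
    x, y, z = X
    if abs(z + 1.0) < 1e-12:
        raise ValueError("X coincides with the projection center Oc")
    # Perspective projection from Oc = (0, 0, -1) onto the normalized plane.
    mx, my = x / (z + 1.0), y / (z + 1.0)
    # Apply the upper-triangular K to the homogeneous point (mx, my, 1).
    u = K[0][0] * mx + K[0][1] * my + K[0][2]
    v = K[1][1] * my + K[1][2]
    return u, v


# Hypothetical sample values chosen only for illustration.
K = make_K(fe=600.0, r=1.1, s=0.5, u0=400.0, v0=300.0)
pole = project_sphere_point(K, (0.0, 0.0, 1.0))      # point on the optical axis
equator = project_sphere_point(K, (1.0, 0.0, 0.0))   # point on the equator
```

Under these assumptions the point (0, 0, 1) on the optical axis lands on the principal point (u0, v0), which is a quick sanity check for any implementation of the model.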

2.2 Geometric properties of the line and the sphere under the central catadioptric camera

Ying et al. [28] introduced the projective geometric properties of the line and the sphere, which are as follows:

Proposition 1

In Fig. 1, the projection of the sphere onto the unit viewing sphere is the small circle s, which can be written as:

where [nx ny nz]T is the unit normal vector of the plane containing s, |d0| is the distance from the origin O to that plane, and [xw yw zw]T is a point on s. Thus, there is a great circle S that is parallel to the small circle and passes through the origin O. It can be regarded as the projection of a line onto the unit viewing sphere. By setting d0 = 0, it can be written as:

The projection of the line may be considered as a special case of the projection of the sphere onto the image.
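The relation between the small circle s and the parallel great circle S can be illustrated by sampling both circles. The sketch below assumes that the plane of s is written as n·X + d0 = 0 with a unit normal n; the sign convention, the helper names and the sample normal are assumptions made for this illustration only.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def circle_on_sphere(n, d0, count=12):
    """Sample the circle {X : |X| = 1 and n.X + d0 = 0} for a unit normal n.

    d0 = 0 yields the great circle S parallel to the small circle s.
    """
    if abs(d0) >= 1.0:
        raise ValueError("the plane does not cut the unit sphere in a circle")
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    e1 = unit(cross(n, helper))          # first in-plane direction
    e2 = cross(n, e1)                    # second in-plane direction (unit length)
    center = tuple(-d0 * c for c in n)   # foot of the perpendicular from O
    rho = math.sqrt(1.0 - d0 * d0)       # radius of the circle
    points = []
    for k in range(count):
        t = 2.0 * math.pi * k / count
        points.append(tuple(
            center[i] + rho * (math.cos(t) * e1[i] + math.sin(t) * e2[i])
            for i in range(3)))
    return points


n = (1.0 / 3.0, 2.0 / 3.0, 2.0 / 3.0)          # hypothetical unit normal
small_circle = circle_on_sphere(n, d0=-0.5)    # small circle s
great_circle = circle_on_sphere(n, d0=0.0)     # parallel great circle S
```

Both circles share the normal n; only the offset d0 differs, which is why the great circle follows from the small circle as soon as n is known.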

Proposition 2

In Fig. 1, Hs is referred to as the viewing cone; it is formed by the projection Cs of the sphere onto the image plane and the center Oc of the virtual camera.

2.3 Using the line to calibrate the paracatadioptric camera

Gu et al. [33] proposed a method to calibrate the central catadioptric camera using the line. On the unit viewing sphere, the projection of a line is a great circle through the origin O. Because the lines joining any point of a circle to the two endpoints of a diameter are perpendicular, orthogonal vanishing points can be determined from the line. The intrinsic parameters of the camera may then be obtained from the algebraic constraints between the orthogonal vanishing points and the IAC.
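The perpendicularity property used here is the classical theorem on the angle subtended by a diameter. A minimal numerical check, with a hypothetical helper name and a circle placed at the origin purely for convenience, might look as follows.

```python
import math


def chord_dot(theta, radius=1.0):
    """Dot product of the chords from a circle point to the ends of a diameter."""
    a = (-radius, 0.0)                       # one end of the diameter
    b = (radius, 0.0)                        # the opposite end
    p = (radius * math.cos(theta), radius * math.sin(theta))
    pa = (a[0] - p[0], a[1] - p[1])
    pb = (b[0] - p[0], b[1] - p[1])
    return pa[0] * pb[0] + pa[1] * pb[1]


# The dot product vanishes (up to rounding) for any point off the diameter.
dots = [chord_dot(t) for t in (0.3, 1.0, 2.0, 4.5)]
```

Since the two chords are orthogonal in space, their directions project to a pair of orthogonal vanishing points in the image.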

3 Method of calibrating the intrinsic parameters of the paracatadioptric camera

This section discusses in detail how to use the sphere to calibrate the paracatadioptric camera.

3.1 Solution of the equation of the small circle

Proposition 3

In Fig. 1, the viewing cone Hs is formed by the projection Cs of the sphere onto the image and the center Oc of the virtual camera. The equation of Hs is

where fe is the effective focal length, and βij (i, j = 1, 2, 3, 4) are non-zero constants.

Proof

In homogeneous coordinate, Cs can be rewritten as

where βij = βji (i, j = 1, 2, 3, 4), and [x1y1z1 1]T is the homogeneous coordinate of a point on Cs. The global coordinate of the zw axis is fe+1. Cs can be also expressed as

where βij = βji (i, j = 1, 2, 3, 4). It is expressed as F′(x1, y1, z1) = 0.

The line formed by a point m on Cs and Oc is called the generating line, where Oc = [0 0 −1 1]T. The parametric equation of the generating line is

Thus, the equation of the viewing cone Hs can be obtained by merging Eqs. (6) and (7).

from which we have

where βij = βji (i, j = 1, 2, 3, 4). It also can be written as F′(x, y, z) = 0.

Proposition 4

If the equation of Cs is known, equation of the small circle s can be obtained.

Proof

From Fig. 1, one may see that the small circle s is the intersection of the unit viewing sphere and the viewing cone.

In the global coordinate system, the unit viewing sphere can be re-expressed as

It also can be expressed as F′(xw, yw, zw) = 0.

Thus, the equation of the small circle s can be obtained from
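The intersection of the viewing cone with the unit viewing sphere can also be traced point by point. The sketch below lifts a single image point onto the sphere by intersecting its generating line with the sphere. It works in normalized coordinates, that is, it assumes the effect of K has already been removed and that the generating line passes through Oc = (0, 0, −1) and the point (x, y, 0); the function names are hypothetical.

```python
def lift_to_sphere(x, y):
    """Back-project the normalized image point (x, y) onto the unit viewing sphere.

    The ray from Oc = (0, 0, -1) through (x, y, 0) meets the sphere again at X.
    """
    r2 = x * x + y * y
    return (2.0 * x / (1.0 + r2),
            2.0 * y / (1.0 + r2),
            (1.0 - r2) / (1.0 + r2))


def project(X):
    """Forward map used for the round-trip check (projection center Oc)."""
    return (X[0] / (X[2] + 1.0), X[1] / (X[2] + 1.0))


X = lift_to_sphere(0.4, -0.7)    # hypothetical normalized image point
back = project(X)                # should reproduce (0.4, -0.7)
```

Lifting every point of a fitted conic Cs in this way yields samples of the small circle s, which is a convenient alternative when only the sampled contour is needed.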

3.2 Calculation of the great circle on the unit viewing sphere

Proposition 5

From Fig. 1, one sees that if the small circle s is known, then the great circle S can be written as

Proof

The equation of the small circle s can be described as

where n = [nx ny nz 1]T is the normal direction of s. If we choose three points at random on the small circle s, the normal direction n can be calculated by Eq. (13). The distance of the small circle s from the origin O = [0 0 0 1]T can be computed as

The equation of the great circle S is then given by
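The computation in this proof amounts to fitting a plane through three points of s. A minimal sketch is given below; it assumes the plane is written as n·X + d0 = 0 with a unit normal n (the sign of n, and hence of d0, is a convention of this sketch), and the three sample points are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_through(p1, p2, p3):
    """Unit normal n and offset d0 of the plane n.X + d0 = 0 through three points."""
    n = cross(sub(p2, p1), sub(p3, p1))
    length = math.sqrt(dot(n, n))
    if length < 1e-12:
        raise ValueError("the three points are collinear")
    n = tuple(c / length for c in n)
    return n, -dot(n, p1)


# Three hypothetical points of a small circle at height z = 0.6 on the unit sphere.
p1, p2, p3 = (0.8, 0.0, 0.6), (0.0, 0.8, 0.6), (-0.8, 0.0, 0.6)
n, d0 = plane_through(p1, p2, p3)
distance = abs(d0)    # distance from the origin O to the plane of s
```

With n known, the parallel great circle is obtained by keeping the same normal and setting the offset to zero. In the presence of noise, more than three points and a least-squares plane fit would be a natural extension.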

Proposition 6

If the normal direction of the small circle s is known, the projection of the line onto the image plane CL can be written as

where fe is the effective focal length.

Proof

The sphere image Cs satisfies

where \( {K}_C=\left[\begin{array}{ccc}{f}_x& s& {u}_0\\ 0& {f}_y& {v}_0\\ 0& 0& 1\end{array}\right] \) describes the intrinsic parameters of the virtual camera.

It is the equation of the viewing cone, where d0 is the distance of the origin O from the small circle s. The great circle S can be regarded as the projection of the line onto the unit viewing sphere. The projection of the line onto the image plane CL also can be obtained from (17) and rewritten as

where \( {H}_S^{\prime }=\left[\begin{array}{ccc}{n_z}^2& 0& -{n}_z{n}_x\\ 0& {n_z}^2& -{n}_z{n}_y\\ -{n}_z{n}_x& -{n}_z{n}_y& -{n_z}^2\end{array}\right] \).
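The matrix H′S can be checked numerically. The sketch below samples a great circle with unit normal n, projects the samples from Oc = (0, 0, −1) to normalized image coordinates (K_C is taken as the identity, which is an assumption of this sketch), and evaluates mᵀ H′S m for each projected point. The helper names and the sample normal are hypothetical.

```python
import math


def line_image_conic(n):
    """H'_S for a great circle with unit normal n = (nx, ny, nz), as given in the text."""
    nx, ny, nz = n
    return [[nz * nz, 0.0, -nz * nx],
            [0.0, nz * nz, -nz * ny],
            [-nz * nx, -nz * ny, -nz * nz]]


def conic_residual(H, m):
    """Evaluate m^T H m for a homogeneous point m = (x, y, 1)."""
    Hm = [sum(H[i][j] * m[j] for j in range(3)) for i in range(3)]
    return sum(m[i] * Hm[i] for i in range(3))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def great_circle_points(n, count=10):
    """Points X on the unit sphere with n.X = 0 (n must be a unit vector)."""
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    e1 = cross(n, helper)
    norm = math.sqrt(sum(c * c for c in e1))
    e1 = tuple(c / norm for c in e1)
    e2 = cross(n, e1)
    return [tuple(math.cos(t) * e1[i] + math.sin(t) * e2[i] for i in range(3))
            for t in (2.0 * math.pi * k / count for k in range(count))]


n = (1.0 / 3.0, 2.0 / 3.0, 2.0 / 3.0)     # hypothetical unit normal with nz != 0
H = line_image_conic(n)
residuals = []
for X in great_circle_points(n):
    m = (X[0] / (X[2] + 1.0), X[1] / (X[2] + 1.0), 1.0)   # projection from Oc
    residuals.append(conic_residual(H, m))
```

All residuals vanish up to rounding, which confirms that H′S describes the image of the great circle in normalized coordinates. Transferring the conic to pixel coordinates would use the usual rule C = K⁻ᵀ H K⁻¹, stated here as the standard conic transformation rather than as the paper's exact equation.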

3.3 Calibrating the paracatadioptric camera by the projection of the small circle onto the image

Starting from the projection of three great circles onto the image plane, the vanishing points would be obtained by the intersections of two of them. This allows one to calibrate the paracatadioptric camera. To prove this statement, let us start from the following proposition.

Proposition 7

The vanishing points can be determined from the three small circles si(i = 1,2,3).

Proof

As shown in Fig. 2, every small circle si determines a unique great circle Si (i = 1, 2, 3). The intersections of their projections on the image plane are obtained from

where CLi (i = 1, 2, 3) is the projection of the great circle Si onto the image plane, “∧” represents the intersection of two conics, and \( {\overline{\boldsymbol{H}}}_{ij} \) and \( {\overline{\boldsymbol{K}}}_{ij} \) are the intersection points of CLi and CLj on the image plane [33].

As shown in Fig. 2, there are six intersections in total. According to the geometric properties of the circle, three pairs of orthogonal vanishing points can therefore be determined on the image plane. The intersection of \( {\overline{\boldsymbol{H}}}_{12}{\overline{\boldsymbol{H}}}_{13} \) and \( {\overline{\boldsymbol{K}}}_{12}{\overline{\boldsymbol{K}}}_{13} \) (see Fig. 3) can be obtained from

and the intersection of \( {\overline{\boldsymbol{H}}}_{12}{\overline{\boldsymbol{K}}}_{13} \) and \( {\overline{\boldsymbol{H}}}_{13}{\overline{\boldsymbol{K}}}_{12} \) can be obtained from

where f23 and u23 are the intersections, which form a pair of orthogonal vanishing points. The other two pairs of orthogonal vanishing points, f24 and u24, and f34 and u34, can be computed in the same way.

In Fig. 4, \( {\overline{\boldsymbol{H}}}_{12}{\overline{\boldsymbol{K}}}_{12} \), \( {\overline{\boldsymbol{H}}}_{13}{\overline{\boldsymbol{K}}}_{13} \) and \( {\overline{\boldsymbol{H}}}_{23}{\overline{\boldsymbol{K}}}_{23} \) intersect at a point P = (wx, wy), which is the principal point. It can be obtained from

If the origin of the image coordinate system is moved to the principal point P, all image points are shifted accordingly. This shift is obtained by multiplying by the matrix T0

In the new coordinate system, the coordinates of \( {\overline{\boldsymbol{H}}}_{ij}{\overline{\boldsymbol{K}}}_{ij} \) can be obtained from

The new coordinates of the orthogonal vanishing points are obtained from

Because f′ij and u′ij (i, j = 1, 2, 3) are a pair of orthogonal vanishing points, the algebraic constraint on the IAC may be written as

The IAC is written as

where K′ = T0K.

A pair of orthogonal vanishing points provides one constraint on the IAC, whereas the projection of a sphere onto the unit viewing sphere provides only one great circle. Since ω′ has five degrees of freedom, the projections of at least three spheres are necessary to obtain ω′. To calculate K′−1, one may decompose ω′ using Cholesky decomposition; the matrix K′ is then obtained by inverting K′−1. Since the principal point P is known, the intrinsic parameter matrix K can be obtained.
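The linear solve and the Cholesky step can be sketched on synthetic data. The example below is illustrative only and rests on several assumptions that are not taken from the paper: K′ is upper triangular with entries r·fe, s and fe and with its principal point at the origin, so that ω′ = K′⁻ᵀK′⁻¹ takes the form [[a, b, 0], [b, c, 0], [0, 0, 1]] up to scale, and exactly three pairs of orthogonal vanishing points are used. All function names and numeric values are hypothetical.

```python
import math


def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def solve3(A, b):
    """Solve a 3x3 linear system by Cramer's rule."""
    D = det3(A)
    solution = []
    for j in range(3):
        Aj = [row[:] for row in A]
        for i in range(3):
            Aj[i][j] = b[i]
        solution.append(det3(Aj) / D)
    return solution


def iac_from_pairs(pairs):
    """Solve f^T w u = 0 for w = [[a, b, 0], [b, c, 0], [0, 0, 1]].

    pairs holds three ((f1, f2), (u1, u2)) orthogonal vanishing point pairs,
    expressed in coordinates whose origin is the principal point.
    """
    A = [[f[0] * u[0], f[0] * u[1] + f[1] * u[0], f[1] * u[1]] for f, u in pairs]
    return solve3(A, [-1.0, -1.0, -1.0])


def intrinsics_from_iac(a, b, c):
    """Cholesky factor w = L L^T with L = K'^-T, then read off fe, r and s."""
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(c - l21 * l21)
    return 1.0 / l22, l22 / l11, -l21 / (l11 * l22)    # fe, r, s


def vanishing_point(Kp, d):
    """Image of the 3D direction d under the assumed upper-triangular K'."""
    return ((Kp[0][0] * d[0] + Kp[0][1] * d[1]) / d[2], Kp[1][1] * d[1] / d[2])


# Synthetic check with hypothetical values fe = 600, r = 1.1, s = 0.5.
Kp = [[660.0, 0.5, 0.0], [0.0, 600.0, 0.0], [0.0, 0.0, 1.0]]
directions = [((1, 2, 1), (1, -1, 1)),       # each pair is orthogonal in 3D
              ((2, 1, 1), (1, -1, -1)),
              ((1, 0, 1), (1, 1, -1))]
pairs = [(vanishing_point(Kp, d1), vanishing_point(Kp, d2)) for d1, d2 in directions]
fe, r, s = intrinsics_from_iac(*iac_from_pairs(pairs))
```

With more than three pairs, the same constraints would be stacked and solved in the least-squares sense. Cramer's rule is used here only to keep the sketch free of external dependencies.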

According to the above analysis, we may summarize the algorithm in six steps.

- Step 1: Use the projections of k (k ≥ 3) spheres onto the image plane as input, and extract the pixel coordinates of the conics Cs and of the projected contour of the mirror. Solve for the equation of each Cs [19].

- Step 2: Select points on Cs and estimate the effective focal length fe [7].

- Step 3: Solve for the equation of the viewing cone Hs by Proposition 3, and obtain the small circle s, which is the intersection of the viewing cone and the unit viewing sphere, by Proposition 4.

- Step 4: Obtain the image of the great circle S that is parallel to s on the unit viewing sphere by Propositions 5 and 6. The orthogonal vanishing points may then be obtained from Eqs. (21) and (22).

- Step 5: Determine the principal point P by Eq. (23). Move the origin of the image coordinate system to the principal point P, and obtain the new coordinates of each pair of vanishing points by multiplying by the matrix T0, according to Eqs. (26) and (27).

- Step 6: Find ω′ using Eq. (29). K′−1 may then be calculated by Cholesky decomposition of ω′, and the matrix K′ is obtained by inverting K′−1. Since the principal point P is known, the intrinsic parameter matrix K can be obtained.
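Step 5 can be sketched with homogeneous coordinates. Two great circles meet in a pair of antipodal points of the unit viewing sphere, and under the projection from Oc = (0, 0, −1) the line joining the images of two antipodal points passes through the principal point. The example below uses two such pairs to recover P and then builds a translation T0. The form of T0, the matrix K and all names and values are assumptions of this sketch, not the paper's equations.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def project_h(K, X):
    """Homogeneous pixel coordinates of a unit-sphere point X (center Oc = (0, 0, -1))."""
    mx, my = X[0] / (X[2] + 1.0), X[1] / (X[2] + 1.0)
    return (K[0][0] * mx + K[0][1] * my + K[0][2], K[1][1] * my + K[1][2], 1.0)


def intersect_lines(pair_a, pair_b):
    """Intersection of the line through pair_a with the line through pair_b."""
    p = cross(cross(*pair_a), cross(*pair_b))
    return (p[0] / p[2], p[1] / p[2])


def shift_to(p):
    """Translation T0 that moves the image origin to p (assumed form)."""
    return [[1.0, 0.0, -p[0]], [0.0, 1.0, -p[1]], [0.0, 0.0, 1.0]]


def apply(T, m):
    return tuple(sum(T[i][j] * m[j] for j in range(3)) for i in range(3))


def negate(V):
    return tuple(-c for c in V)


K = [[660.0, 0.5, 400.0], [0.0, 600.0, 300.0], [0.0, 0.0, 1.0]]   # hypothetical
X, Y = (0.6, 0.0, 0.8), (0.48, 0.64, 0.6)                          # points on the sphere
pair_x = (project_h(K, X), project_h(K, negate(X)))                # antipodal image pair
pair_y = (project_h(K, Y), project_h(K, negate(Y)))
P = intersect_lines(pair_x, pair_y)                                # principal point
T0 = shift_to(P)
origin = apply(T0, (P[0], P[1], 1.0))                              # P in the new frame
```

With noisy data the three lines of Fig. 4 would not meet exactly, so a least-squares intersection over all lines would be the natural replacement for the single cross product used here.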

4 Experiments

In order to assess the performance of the proposed method, we have performed experiments with simulated and real data.

4.1 Experiment with simulated data

The principal point of the simulated paracatadioptric camera was assumed to be P = (400, 300, 1). We generated three views to calibrate the camera. Figure 5 shows the projection of the sphere onto the image plane. The small blue conic and the large conic represent the projection of the sphere onto the image plane and the projected contour of the paraboloidal mirror, respectively. In Fig. 6, the small blue circle represents the projection of the sphere onto the unit viewing sphere, and the green great circle represents the great circle parallel to it, regarded as the projection of a line onto the unit viewing sphere. The red great circle represents the contour circle of the unit viewing sphere.

To test the stability of our method, we compared it with Li’s, Yu’s and Zhao’s methods under the same noise. We selected 100 points from each image and added Gaussian noise with zero mean and standard deviation σ. The noise level σ was varied from 0 to 4 pixels. For each noise level, we performed 100 independent trials to obtain the absolute errors of the five intrinsic parameters. The experimental results are shown in Figs. 7(a)-(e).
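The noise protocol can be sketched as a small Monte Carlo harness. The code below is a generic illustration: the estimator passed to the harness is a placeholder (the mean u-coordinate of the points), not a calibration routine, and the contour points, the seed and all names are hypothetical.

```python
import math
import random


def add_noise(points, sigma, rng):
    """Perturb each pixel coordinate with zero-mean Gaussian noise of std sigma."""
    return [(x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma)) for x, y in points]


def mean_abs_error(estimator, points, truth, sigma, trials=100, seed=0):
    """Average |estimate - truth| over independent noisy trials."""
    rng = random.Random(seed)
    errors = [abs(estimator(add_noise(points, sigma, rng)) - truth)
              for _ in range(trials)]
    return sum(errors) / trials


def centroid_u(points):
    """Placeholder estimator (NOT a calibration routine): mean u-coordinate."""
    return sum(x for x, _ in points) / len(points)


# 100 hypothetical contour points on a circle around (400, 300).
points = [(400.0 + 50.0 * math.cos(2.0 * math.pi * k / 100),
           300.0 + 50.0 * math.sin(2.0 * math.pi * k / 100)) for k in range(100)]
truth = centroid_u(points)
# One (sigma, mean absolute error) entry per noise level from 0 to 4 pixels.
curve = [(sigma, mean_abs_error(centroid_u, points, truth, sigma))
         for sigma in range(5)]
```

Replacing `centroid_u` with a function that returns one intrinsic parameter estimated from the noisy points would reproduce the structure of the experiment, one curve per parameter and per method.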

As is apparent from those plots, the absolute errors increase linearly with the noise. Our method uses the projection of the sphere onto the unit viewing sphere directly, which is simpler than the methods based on the antipodal sphere image, so it is more robust to noise. Although the other methods are also linear, Zhao’s [22] methods perform better among them because they use the simpler pole-polar relationship. The absolute-error trends of the three methods presented in Zhao’s study [22] are mostly the same because of their similar geometric relationships. Yu’s [34] method involves additional steps. Moreover, the common self-polar triangles depend strongly on four imaginary intersection points from the pair of antipodal circles and the great circle. Therefore, it is less robust than Zhao’s methods. Li’s [15] methods need two pairs of antipodal image points on every sphere image. In addition, the vanishing line passing through the orthogonal vanishing points becomes inaccurate under the added noise. Therefore, the absolute error of Li’s methods [15] is slightly larger than that of the other methods, and Figs. 7(a)-(e) show no obvious differences among Li’s methods [15]. The results indicate that our method has superior robustness.

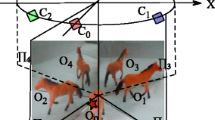

4.2 Experiment with real images

To check whether our method can estimate the intrinsic parameters successfully, the five intrinsic parameters were obtained using our method, Zhao’s [22] methods, Li’s [15] methods, and Yu’s method [34]. The results are presented in Table 1. Although the other methods are also linear, they rely heavily on the antipodal sphere images. Compared with our method, which obtains the intrinsic parameters directly from the sphere images, these methods are more complex because the antipodal sphere image must be obtained. Moreover, the common self-polar triangles in Yu’s method [34] can be inaccurate, because the four imaginary intersection points are difficult to obtain under the influence of noise. In Zhao’s method [22], the sphere image contour is acquired inaccurately because of noise. Therefore, the intrinsic parameters obtained by our method are closer to the ground truth, as shown in Table 1. The results indicate that the intrinsic parameters obtained by our method are more accurate.

In our experiment, we employed a central catadioptric camera designed by the Center for Machine Perception at Czech Technical University and used a yellow table tennis ball as the calibration object. The paraboloidal mirror parameter is ξ = 0.966. The table tennis ball was placed on a checkerboard in different positions (see the images in Figs. 8(a)-(c)), and the image resolution is 1204 × 1176 pixels. The edge points of the sphere images and of the mirror contour projection were extracted with the Canny edge detector, as shown in Figs. 9(a)-(c). The projections of the spheres and of the mirror contour onto the image plane were obtained by least-squares fitting.
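A least-squares conic fit of the kind mentioned above can be sketched as follows. This is one simple variant and not necessarily the one used in the experiment: it normalizes the conic as a·x² + b·xy + c·y² + d·x + e·y = 1, which is an assumption that fails for conics passing through the origin, and it solves the normal equations by Gaussian elimination. Pixel coordinates would normally be centered and scaled first to keep the system well conditioned. All names and sample points are hypothetical.

```python
import math


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fit_conic(points):
    """Least-squares fit of a*x^2 + b*x*y + c*y^2 + d*x + e*y = 1."""
    rows = [[x * x, x * y, y * y, x, y] for x, y in points]
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    Atb = [sum(r[i] for r in rows) for i in range(5)]
    return solve_linear(AtA, Atb)


def conic_value(coef, x, y):
    a, b, c, d, e = coef
    return a * x * x + b * x * y + c * y * y + d * x + e * y - 1.0


# Hypothetical edge points on an ellipse with center (0.3, -0.2) and semi-axes 2 and 1.
points = [(0.3 + 2.0 * math.cos(2.0 * math.pi * k / 20),
           -0.2 + math.sin(2.0 * math.pi * k / 20)) for k in range(20)]
coef = fit_conic(points)
residuals = [conic_value(coef, x, y) for x, y in points]
```

For noise-free points the residuals vanish up to rounding; with real edge points they measure how well the fitted conic explains the detected contour.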

To further assess the performance of our method, we used the intrinsic parameters obtained by our method to rectify the images of Figs. 8(a)-(c). The rectified results are shown in Figs. 10(a)-(c), in which all the deformed lines are straightened, so the rectified images display the real scene well. For a more detailed verification, we checked the orthogonal lines on the checkerboard. The intersections of the projections of corresponding parallel lines in the two orthogonal directions onto the image plane were detected by the Hough transform [16]. The results are shown in Figs. 11(a)-(c). In principle, a vanishing line is determined by a pair of orthogonal vanishing points, and the vanishing lines obtained from different pairs should coincide. In practice, they do not intersect at a single point because of noise, as shown in Figs. 12(a)-(c). The results show that our method is convincing and accurate.

To further verify the accuracy of the intrinsic parameters obtained by our method, we performed 3D reconstruction [18] of the checkerboard of Fig. 8 using the parameters reported in Table 1. Fifty checkerboard points were selected from each image using Harris corner detection [29], as shown in Figs. 13(a)-(c). The reconstruction results obtained with our method, Zhao’s [22] methods, Yu’s [34] method and Li’s [15] methods are shown in Figs. 14(a)-(g). As shown in Figs. 14(a)-(g), the 3D reconstruction results are similar. To compare the performance of 3D reconstruction based on the different methods, the parallelism and orthogonality of the reconstruction results were tested. The equation of each row and column line in Figs. 14(a)-(g) was obtained by least-squares fitting, and the angles between any two lines in parallel directions were calculated and averaged. The average angle in the orthogonal directions was computed analogously. The angles are listed in Table 2. On the checkerboard, the angles between parallel and orthogonal lines are 0° and 90°, respectively. Therefore, the closer the reconstructed angles are to 0° and 90°, respectively, the better the performance of the method. Li’s methods [15] require more antipodal image points as well as the projected contour of the mirror, so their results are not as good as the others. The common self-polar triangles depend strongly on four imaginary intersection points that are difficult to obtain, so the performance of Yu’s method [34] is not competitive with that of Zhao’s methods [22]. Zhao’s methods [22] need only one pair of antipodal image points, so the results of their three methods are similar and better than those of Li’s [15] and Yu’s [34] methods. Unlike these methods, our method is based only on the projection of the sphere onto the unit viewing sphere, without using the pole-polar relationship or the properties of the antipodal sphere image, so it is simpler. These results demonstrate the reliability of our method and its improved precision compared with the other six methods.
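The parallelism and orthogonality scores can be sketched as averages of angles between fitted line directions. The code below assumes that each reconstructed row or column has already been reduced to a 3D direction vector by a line fit; the function names and the sample directions are hypothetical and are not the values behind Table 2.

```python
import math
from itertools import combinations


def angle_deg(d1, d2):
    """Angle in degrees between two undirected 3D lines with directions d1 and d2."""
    dot = abs(sum(a * b for a, b in zip(d1, d2)))
    norms = math.sqrt(sum(a * a for a in d1)) * math.sqrt(sum(b * b for b in d2))
    return math.degrees(math.acos(min(1.0, dot / norms)))


def mean_parallel_angle(directions):
    """Average angle over all pairs within one family of nominally parallel lines."""
    pairs = list(combinations(directions, 2))
    return sum(angle_deg(a, b) for a, b in pairs) / len(pairs)


def mean_orthogonal_angle(rows, cols):
    """Average angle between every row direction and every column direction."""
    return sum(angle_deg(a, b) for a in rows for b in cols) / (len(rows) * len(cols))


# Hypothetical fitted directions for three rows and two columns.
rows = [(1.0, 0.0, 0.0), (1.0, 0.01, 0.0), (1.0, 0.0, -0.01)]
cols = [(0.0, 1.0, 0.0), (0.01, 1.0, 0.0)]
parallel_score = mean_parallel_angle(rows)            # ideal value: 0 degrees
orthogonal_score = mean_orthogonal_angle(rows, cols)  # ideal value: 90 degrees
```

Taking the absolute value of the dot product treats lines as undirected, so the reported angle always lies between 0° and 90°.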

5 Conclusion

In this paper, we propose a calibration method for the paracatadioptric camera. According to the unit viewing sphere model, the projection of a sphere in space onto the unit viewing sphere is a small circle. There exists a great circle parallel to this small circle, which represents the projection of a line onto the unit viewing sphere. Because the lines joining any point of the great circle to the two endpoints of a diameter are perpendicular, the orthogonal vanishing points can be obtained on the image plane using this geometric invariance. The intrinsic parameters of the paracatadioptric camera are then obtained from the algebraic constraints between the vanishing points and the intrinsic parameters. The experimental results indicate that our method can estimate the intrinsic parameters accurately.

The calibration methods [15, 22, 34] for the paracatadioptric camera using spheres are all based on the antipodal sphere image. Li et al. [15] used the antipodal sphere image to obtain the polar and the corresponding point at infinity. However, the vanishing lines do not intersect at the circular points because of noise. Yu et al. [34] used the antipodal sphere image to obtain the self-polar triangles, but the imaginary intersection points are difficult to obtain. Zhao et al. [22] obtained the symmetry axis from the antipodal sphere image, but the sphere image contour is obtained inaccurately because of noise. To improve on these methods, we obtain the intrinsic parameters by exploiting the properties of the projection of the sphere onto the unit viewing sphere. Our method makes direct use of the geometric properties of the projections of the line and the sphere onto the unit viewing sphere. As a result, calibration methods that use lines can also be applied when spheres are used as the calibration object.

References

Agrawal M, Davis LS (2003) Camera calibration using spheres: a semi-definite programming approach, in Proceedings of the IEEE International Conference on Computer Vision, pp. 782–789

Agrawal M, Davis LS (2007) Complete camera calibration using spheres: a dual-space approach. Anal. Int Math J Anal Appl 34(3):257–282

Baker S, Nayar SK (1999) A theory of single-viewpoint catadioptric image formation. Int J Comput Vis 35(2):175–196

Barreto JP, Araujo H (2003) Paracatadioptric camera calibration using lines, in Proceedings of the IEEE International Conference on Computer Vision, pp. 1–7

Duan H, Wu Y (2011) Paracatadioptric camera calibration using sphere images, in Proceedings of the IEEE International Conference on Image Processing, pp. 641–643

Duan H, Wu Y (2012) A calibration method for paracatadioptric camera from sphere images. Pattern Recogn Lett 33(6):677–684

Duan F, Wu F, Zhou M, Deng X, Tian Y (2012) Calibrating effective focal length for central catadioptric cameras using one space line. Pattern Recogn Lett 33(5):646–653

Duan HX, Wu YH, Song L, Wang J, Liu N (2014) Properties of central catadioptric circle images and camera calibration, in Springer International Conference on Rough Sets and Knowledge Technology, pp. 229–240

Duan HX, Wu YH, Song L, Wang J (2019) Fitting a cluster of line images under central catadioptric camera. Cluster Computer 22:781–793

Geyer C, Daniilidis K (2000) Equivalence of catadioptric projections and mappings of the sphere, in Proceedings of the IEEE Workshop on Omnidirectional Vision, pp. 91–96

Geyer C, Daniilidis K (2001) Catadioptric projective geometry. Int J Comput Vis 45(3):223–243

Geyer C, Daniilidis K (2002) Paracatadioptric camera calibration. IEEE Trans Pattern Anal Mach Intell 24(5):687–695

Jia J, Jiang G, Cheng-Ke WU (2010) Geometric interpretations and applications of the extrinsic parameters derived from the camera calibration based on spheres. IEEE Trans Pattern Anal Mach Intell 23(2):160–164

Li YZ, Zhao Y (2017) Calibration of paracatadioptric camera by projection imaging of single sphere. Appl Opt 10:2230–2240

Li YZ, Zhao Y, Zheng BH (2018) Calibrating a paracatadioptric camera by the property of the polar of a point at infinity with respect to a circle. Applied Optics 57(15):4345–4352

Illingworth J, Kittler J (1988) A survey of the Hough transform. Comput. Vis. Graph. Image Process 43:765–768

Marr D (1982) Vision. W. H. Freeman and Company, San Francisco

Micusik B, Pajdla T (2004) Para-catadioptric camera auto-calibration from epipolar geometry, in Proceedings of the Asian Conference on Computer Vision (AFCV), pp. 748–753

Teramoto H, Xu G (2002) Camera calibration by a single image of balls: from conics to the absolute conic, in Proceedings of the 5th Asian Conference on Computer Vision, pp. 499–506

Wang YL, Zhao Y (2015) Intrinsic parameter determination of a paracatadioptric camera by the intersection of two sphere projections. Optical Society of America A 32(1):2201–2209

Wang YL, Zhao Y (2019) Paracatadioptric camera calibration based on the projecting relationship of the relative position between two spheres. Springer Multimedia Tools and Applications 78:12223–12249

Wang SW, Zhao Y, You J (2020) Calibration of paracatadioptric cameras based on sphere images and pole-polar relationship. OSA Continuum 3(4):993–1012

Wu YH, Hu ZY (2005) Geometric invariants and applications under catadioptric camera model, in Proceedings of the IEEE International Conference on Computer Vision pp.1547–1554.

Xu G, Hao Z, Li X, Su J, Liu H, Zhang X (2016) Calibration method of the laser plane equation for vision measurement adopting objective function of uniform horizontal height of feature points. Opt Rev 23(1):33–39

Yang S, Liu M, Song J, Yin S, Guo Y, Ren Y, Zhu J (2017) Projector calibration method based on stereo vision system. Opt Rev 24(5):727–733

Ying X, Hu Z (2004) Spherical objects based motion estimation for catadioptric cameras, in Proceedings of the IEEE International Conference on Pattern Recognition, pp. 231–234

Ying X, Hu Z (2004) Catadioptric camera calibration using geometric invariants. IEEE Trans Pattern Anal Mach Intell 26(10):1260–1271

Ying X, Zha H (2008) Identical projective geometric properties of central catadioptric line images and sphere images with applications to calibration. Int J Comput Vis 78(1):89–105

Zhang X, Ji X (2012) An improved Harris corner detection algorithm for noised images, in International Conference on Materials Science and Information Technology, pp. 6151–6156

Zhang H, Zhang G, Wong KY (2005) Camera calibration with spheres: linear approaches, in Proceedings of the IEEE International Conference on Image Processing, pp. 1150–1153

Zhang H, Wong KY, Zhang G (2007) Camera calibration from images of spheres. IEEE Trans Pattern Anal Mach Intell 29(3):1499–1502

Zhang L, Du X, Hu JL (2010) Using concurrent lines in central catadioptric camera calibration. Zhejiang University Science C 12(3):239–249

Zhao Y, Gu WJ (2016) Method to solve intrinsic geometric parameters of a paracatadioptric camera using 3D lines. Optik 127(5):2325–2330

Zhao Y, Yu XJ (2019) Paracatadioptric camera calibration using sphere images and common self-polar triangles. Opt Rev 26(11):1–12

Funding

National Natural Science Foundation of China (62063034).

Cite this article

Yang, R., Zhang, J. A calibration method for paracatadioptric cameras based on circular sections. Multimed Tools Appl (2022). https://doi.org/10.1007/s11042-022-12918-9

DOI: https://doi.org/10.1007/s11042-022-12918-9