Abstract

Handling parallax, exposure differences, ghosting and computational efficiency remains challenging in image stitching. In this paper, we propose a novel stitching method that locates the overlapping regions of the input images and records the feature points at the same time. The warping of each image is then guided by a mesh interpolation map in a local warp model. We also propose an arc function weight model to eliminate image chromatic aberration. The validation cases show that our approach consistently performs better than AutoStitch, APAP, SPHP, ANAP, ELA and other state-of-the-art methods. Our method effectively avoids mismatched points, improves the matching efficiency of feature points for large images by about 60%, eliminates color-difference seams and ghosting, and retains good accuracy and stability in complex scenes.

1 Introduction

Image stitching combines a set of images with overlapping regions to form a new wide-angle image that contains the information of each input image [34]. It is widely used in panoramic images [5] and videos [12, 33, 37], virtual reality [32, 36, 40], remote sensing [17], real-time monitoring, military reconnaissance [10, 35] and other fields [16, 26, 30, 38]. The conventional procedure first registers the feature points of images I1 and I2. The images are then deformed and converted to the same coordinate system. Finally, the deformed images are fused [11, 27]. However, registering the feature points takes a long time, and mismatched feature points and image distortion cause ghosting and object distortion. To solve these problems, this paper proposes a new image stitching method based on feature positioning and stitching seam elimination.

The point mapping relationship between the images is determined by ORB [29], and the boundary line of the polygonal overlapping area is calculated. The feature points are extracted by SIFT [25], and parallel oriented registration is performed on the overlapping region. The image is then deformed with an optimized TPS model [1, 4]. To obtain a high-quality stitched image, we finally use the arc function model to smooth the pixels of the deformed images.

2 Related work

Feature extraction and image fusion are the two main challenges in image stitching. In recent years, the related algorithms have been continuously improved.

Feature matching is an important factor that affects both the real-time performance and the quality of image stitching, and many studies have sought to improve matching methods. Lowe [25] proposed the robust scale-invariant feature transform (SIFT) registration algorithm in 1999 and improved it in 2004. In 2006, Bay et al. proposed Speeded Up Robust Features (SURF) [2], an improvement on SIFT that uses the integral image to increase the computational speed. Rosten et al. optimized corner detection and proposed the Features from Accelerated Segment Test (FAST) in 2006 [28]. The FAST algorithm detects corners by comparing the gray value of a candidate pixel with those of the 16 pixels on a circle around it, but it depends heavily on the threshold and lacks scale and rotation invariance. Calonder et al. proposed the binary descriptor BRIEF [7]. This method selects N point pairs around the key point P according to a fixed pattern and then combines the intensity comparison results of the N point pairs to construct the descriptor of the key point. Rublee et al. [29] proposed Oriented FAST and Rotated BRIEF (ORB) in 2011, which its authors report to be about two orders of magnitude faster than SIFT. Bian et al. [3] proposed an ORB-based grid motion statistics (GMS) matching algorithm that refines brute-force matching point pairs to obtain a large number of correct matches. However, it depends on the threshold value and still produces many mismatches. In summary, compared with SIFT, the later matching algorithms are faster but sacrifice matching quality. Therefore, the performance of feature matching still needs to be improved.
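The binary-test idea behind BRIEF-style descriptors can be illustrated with a toy sketch. It is not the reference implementation: the patch size, the number of pairs, the random sampling pattern and the helper names (`make_pairs`, `brief_descriptor`, `hamming`) are assumptions chosen for brevity, and no smoothing is applied before the comparisons.

```python
import random

def make_pairs(n_pairs, half, seed=0):
    """Sample n_pairs random offset pairs inside a (2*half+1)^2 patch (assumed pattern)."""
    rng = random.Random(seed)
    def offset():
        return (rng.randint(-half, half), rng.randint(-half, half))
    return [(offset(), offset()) for _ in range(n_pairs)]

def brief_descriptor(image, keypoint, pairs):
    """Binary descriptor: one bit per intensity comparison around the keypoint."""
    r, c = keypoint
    bits = 0
    for (dr1, dc1), (dr2, dc2) in pairs:
        a = image[r + dr1][c + dc1]
        b = image[r + dr2][c + dc2]
        bits = (bits << 1) | (1 if a < b else 0)
    return bits

def hamming(d1, d2):
    """Number of differing bits between two descriptors."""
    return bin(d1 ^ d2).count("1")

# Toy 9x9 image with a horizontal intensity ramp.
image = [[10 * c for c in range(9)] for _ in range(9)]
pairs = make_pairs(n_pairs=32, half=3)
d_a = brief_descriptor(image, (4, 4), pairs)
d_b = brief_descriptor(image, (4, 4), pairs)       # same point, same descriptor
brighter = [[v + 5 for v in row] for row in image]
d_c = brief_descriptor(brighter, (4, 4), pairs)    # uniform brightness shift
```

Because each bit only records which of two pixels is darker, a uniform brightness shift leaves the descriptor unchanged, and descriptors are compared cheaply with the Hamming distance.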

AutoStitch [6] takes multiband fusion as its core and stitches together images whose camera optical centers nearly coincide. DHW [13] divides the scene into background and foreground planes and uses two homography matrices to align and stitch them separately. Zaragoza et al. proposed the milestone method APAP [39], which divides the image into dense grids and applies a local alignment. SPHP [8] adds similarity transformation constraints to the entire image, reducing projection distortion in non-overlapping regions. ANAP [24] linearizes the homography matrix and transforms it into a global similarity transformation that represents camera motion. GSP [9] uses linear alignment constraints to select the angle of the global similarity matrices, together with local and global similarity constraints. Li et al. introduced a Bayesian probability model to refine the feature point set and proposed the ELA [20] image stitching method. The stitching results of these methods show ghosting to different degrees, which affects the image quality. In sum, the problems of real-time performance and ghosting in image stitching still need to be solved.

3 Our method

Our method aims to improve the efficiency and quality of image stitching. Figure 1 gives an overview of the method. First, the overlapping area of the images is located. Second, the feature points are oriented and registered in parallel. The images are then deformed, and the deformed images are weighted and fused. Finally, the stitched image without color difference or ghosting is output. The positioning step acts as preprocessing, and the weighted fusion acts as post-processing. Section 3.1 introduces the localization and feature point registration, Section 3.2 the image deformation, and Section 3.3 the pixel smoothing process.

The target and reference images are represented by images I1 and I2. The matching points between I1 and I2 are represented by the homogeneous coordinates \( \mathbf{p}_1={\left[x\kern0.5em y\kern0.5em 1\right]}^T \) and \( \mathbf{p}_2={\left[u\kern0.5em v\kern0.5em 1\right]}^T \). The relationship between p1 and p2 is represented by a 3 × 3 homography matrix H, formula (1) [8, 9, 13, 20, 24, 39].

Rewriting formula (1) as the cross product \( \mathbf{0}_{3\times 1}=\mathbf{p}_1\times \mathbf{H}\mathbf{p}_2 \) yields a homogeneous linear system in the nine entries of H.

Given N matching points \( {\left\{\mathbf{P}{\mathbf{1}}_i\right\}}_{i=1}^N \) and \( {\left\{\mathbf{P}{\mathbf{2}}_i\right\}}_{i=1}^N \), H can be estimated as the unit-norm vector h of its entries that minimizes \( {\left\Vert \mathbf{A}\mathbf{h}\right\Vert}^2 \), where A is a 2N × 9 matrix. The solution is the least significant right singular vector of A.
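The estimation step can be illustrated with the standard direct linear transform (DLT). This is a minimal sketch, assuming NumPy and the convention `dst ~ H @ src` (which point set plays the role of `src` is a matter of convention); the function names `estimate_homography` and `project` and the test matrix `H_true` are illustrative, and no coordinate normalization or outlier rejection is included.

```python
import numpy as np

def estimate_homography(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from N >= 4 point pairs via DLT."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two independent rows of the cross-product constraint dst x (H src) = 0.
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
    A = np.asarray(rows, dtype=float)      # shape (2N, 9)
    _, _, vt = np.linalg.svd(A)
    h = vt[-1]                             # least significant right singular vector
    H = h.reshape(3, 3)
    return H / H[2, 2]                     # fix the arbitrary scale

def project(H, pts):
    """Apply H to an array of 2-D points and de-homogenize."""
    pts_h = np.hstack([np.asarray(pts, float), np.ones((len(pts), 1))])
    out = pts_h @ H.T
    return out[:, :2] / out[:, 2:3]

# Synthetic check: recover a known homography from exact correspondences.
H_true = np.array([[1.1, 0.05, 30.0], [-0.02, 0.95, 12.0], [1e-4, 2e-4, 1.0]])
src = [(0, 0), (100, 0), (100, 80), (0, 80), (50, 40), (20, 70)]
dst = project(H_true, src)
H_est = estimate_homography(src, dst)
```

With noisy real matches, A has no exact null vector, and the same singular vector gives the least-squares estimate.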

We use \( {\left\{\mathbf{P}{\mathbf{1}}_i\prime \right\}}_{i=1}^N \) to denote the projection of \( {\left\{\mathbf{P}{\mathbf{1}}_i\right\}}_{i=1}^N \) in I1 computed by (1).

3.1 Feature positioning

We propose a method of overlapping regional positioning to avoid a large number of invalid calculations.

First, the images I1 and I2 are each divided into 10 test sub-images. To speed up the calculation, we set the height of each sub-image to 1/2 of the height of the original image, because this segmentation gave the best matching results over multiple sets of experiments. From left to right, the sub-images of I1 are indexed \( {I}_1^9 \) to \( {I}_1^0 \) and the sub-images of I2 are indexed \( {I}_2^0 \) to \( {I}_2^9 \). An ORB-based feature point registration is then performed on these sub-images.
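The sub-image layout can be sketched as follows. The text does not say which half of the image height is kept, so the vertically centred band used here is an assumption, as are the function name `split_into_strips` and the example image size.

```python
def split_into_strips(width, height, n_strips=10, height_ratio=0.5):
    """Return (left, top, right, bottom) boxes of n_strips vertical sub-images."""
    band_h = int(height * height_ratio)
    top = (height - band_h) // 2           # assumption: band is vertically centred
    boxes = []
    for k in range(n_strips):
        left = k * width // n_strips
        right = (k + 1) * width // n_strips
        boxes.append((left, top, right, top + band_h))
    return boxes

boxes = split_into_strips(width=4000, height=3000)
```

Each box covers one tenth of the width and half of the height, so a detector run on a single strip processes about 5% of the pixels of the full image.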

The right side boundary of I1 and the left side boundary of I2 are respectively mapped by the formula (1) in the corresponding figures to obtain corresponding polygon overlapping areas. In the Parallel directional registration section of Fig. 1, we divide the corresponding overlapping regions into 5 sub-images from A to E in order. This split matching balances accuracy and efficiency. From the homography matrix H, the position of the feature points can be estimated. We separately align the feature points in the images A, B, and C with the feature points of D and E at the same time. The homography between images is obtained by sampled sub-image matching. This homography can accurately match all feature points in the coincident area to avoid mismatching.
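One plausible way to obtain a polygonal overlapping area from a homography is to project the corners of one image into the frame of the other and clip the result to that image's rectangle. The sketch below does this with Sutherland-Hodgman clipping in plain Python. It is an illustration under assumptions rather than the authors' procedure: the helper names, the corner-based projection and the pure-translation example are all hypothetical.

```python
def apply_h(H, pt):
    """Map a 2-D point through a 3x3 homography given as nested lists."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def clip_to_rect(poly, width, height):
    """Sutherland-Hodgman clipping of a polygon against [0,width] x [0,height]."""
    # Each clip edge: (axis, bound, keep points with coordinate >= bound?)
    edges = [(0, 0.0, True), (0, float(width), False),
             (1, 0.0, True), (1, float(height), False)]
    for axis, bound, keep_greater in edges:
        def inside(p):
            return p[axis] >= bound if keep_greater else p[axis] <= bound
        def intersect(p, q):
            t = (bound - p[axis]) / (q[axis] - p[axis])
            return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))
        out = []
        for i, cur in enumerate(poly):
            prev = poly[i - 1]
            if inside(cur):
                if not inside(prev):
                    out.append(intersect(prev, cur))
                out.append(cur)
            elif inside(prev):
                out.append(intersect(prev, cur))
        poly = out
        if not poly:
            break
    return poly

def overlap_polygon(H, size_src, size_ref):
    """Project the corners of the source image and clip to the reference frame."""
    w, h = size_src
    corners = [(0, 0), (w, 0), (w, h), (0, h)]
    projected = [apply_h(H, c) for c in corners]
    return clip_to_rect(projected, *size_ref)

# Pure translation: the source image lies 60 px to the right in the reference frame.
H_shift = [[1, 0, 60], [0, 1, 0], [0, 0, 1]]
poly = overlap_polygon(H_shift, size_src=(100, 80), size_ref=(100, 80))
```

Restricting feature extraction to the resulting polygon is what avoids computing descriptors in regions that cannot contain matches.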

3.2 Image warping

Thin-plate spline (TPS) functions are commonly used to transform images [20, 21, 31], with the expression given in Eq. (4),

where φ(s) is a radial basis function (RBF). The coefficients \( \mathbf{a}={\left({a}_0,{a}_1,{a}_2\right)}^T \) and \( \boldsymbol{\omega}={\left({\omega}_1,\cdots, {\omega}_n\right)}^T \) are calculated from the set of registered feature points by solving a linear system in which I is an identity matrix and the elements of the matrix S are determined by the distances between the current point and the other points in the point set.

To tolerate errors such as noise, a regularization parameter is usually introduced to control the smoothness of the TPS interpolation. In this paper, the average distance R between all feature points in the feature point set is used as the regularization parameter, instead of the empirical constant used previously, so that the smoothness of the surface is adjusted adaptively. Let λ = R.
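A regularized TPS fit with this adaptive parameter can be sketched as below. The sketch assumes NumPy, the common kernel φ(s) = s² log s and the usual block linear system for TPS; the function names and the five sample points are illustrative, and the exact system used in the paper may differ in scaling.

```python
import numpy as np

def tps_kernel(r):
    """phi(s) = s^2 log s, with phi(0) = 0 (assumed kernel)."""
    out = np.zeros_like(r)
    mask = r > 0
    out[mask] = r[mask] ** 2 * np.log(r[mask])
    return out

def fit_tps(points, values, lam=None):
    """Fit g(x, y) = a0 + a1 x + a2 y + sum_i w_i phi(|p - p_i|)."""
    P = np.asarray(points, dtype=float)            # (n, 2) control points
    v = np.asarray(values, dtype=float)            # (n,) target values
    n = len(P)
    dist = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=2)
    if lam is None:
        # Adaptive choice: mean distance R between distinct feature points.
        lam = dist[np.triu_indices(n, k=1)].mean()
    K = tps_kernel(dist) + lam * np.eye(n)
    Q = np.hstack([np.ones((n, 1)), P])            # (n, 3) affine part
    L = np.block([[K, Q], [Q.T, np.zeros((3, 3))]])
    rhs = np.concatenate([v, np.zeros(3)])
    sol = np.linalg.solve(L, rhs)
    return P, sol[:n], sol[n:]                     # control points, w, a

def eval_tps(model, xy):
    """Evaluate the fitted spline at an array of (x, y) positions."""
    P, w, a = model
    xy = np.asarray(xy, dtype=float)
    r = np.linalg.norm(xy[:, None, :] - P[None, :, :], axis=2)
    return a[0] + xy @ a[1:] + tps_kernel(r) @ w

pts = [(0, 0), (10, 0), (10, 10), (0, 10), (5, 5)]
dx = [0.0, 1.0, 0.5, -0.5, 2.0]                    # e.g. x-displacements at the matches
exact = fit_tps(pts, dx, lam=0.0)                  # passes through every sample
smooth = fit_tps(pts, dx)                          # lam = R, smoother surface
```

With λ = 0 the spline interpolates every match exactly, including noisy ones; a positive λ lets the surface deviate from the samples in exchange for less bending.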

Substituting the coordinates of any image point into Eq. (4) gives the corresponding coordinates in the deformed image. The image is deformed by Eq. (4) at a fixed pixel interval and is then mapped onto the grid map by an interpolation function. To make the image more natural, we add related constraints.

Here, \( {\overline{\boldsymbol{I}}}_{\mathbf{1}}\left(\boldsymbol{x},\boldsymbol{y}\right) \) and \( {\overline{\boldsymbol{I}}}_2\left(\boldsymbol{x},\boldsymbol{y}\right) \) are the deformation maps that will eventually be merged, I1(x, y) and I2(x, y) are the images I1 and I2 after TPS deformation, and \( {\mathbf{H}}_q=\alpha \cdot \mathbf{H}+\beta \cdot {\mathbf{H}}_s \) and \( {\mathbf{H}}_p={\mathbf{H}}_q\cdot {\mathbf{H}}^{-1} \) are obtained with the settings of ANAP [24] and ELA [20].
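Evaluating the warp only at a fixed pixel interval and interpolating in between can be sketched with bilinear interpolation over a coarse mesh. The interpolation function used in the paper is not specified, so bilinear interpolation is an assumption, and `warp_x` is a hypothetical stand-in for the per-point warp.

```python
def bilinear_from_mesh(mesh, step, x, y):
    """Interpolate a value at pixel (x, y) from samples stored every `step` pixels.

    mesh[i][j] holds the value at pixel (x = j * step, y = i * step).
    """
    j, i = int(x // step), int(y // step)
    j = min(j, len(mesh[0]) - 2)       # clamp so that cell (i+1, j+1) exists
    i = min(i, len(mesh) - 2)
    tx = (x - j * step) / step
    ty = (y - i * step) / step
    top = (1 - tx) * mesh[i][j] + tx * mesh[i][j + 1]
    bottom = (1 - tx) * mesh[i + 1][j] + tx * mesh[i + 1][j + 1]
    return (1 - ty) * top + ty * bottom

def warp_x(x, y):
    """Stand-in for the expensive per-point warp (hypothetical, affine here)."""
    return 1.5 * x + 0.25 * y + 3.0

step = 10
# Evaluate the warp only at mesh vertices: 5 columns x 4 rows cover 40 x 30 pixels.
mesh = [[warp_x(j * step, i * step) for j in range(5)] for i in range(4)]
value = bilinear_from_mesh(mesh, step, x=17, y=23)
```

The warp is evaluated 20 times here instead of once per pixel; the price is that any variation of the warp finer than the mesh spacing is smoothed out.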

3.3 Image weighting

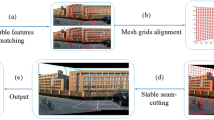

The traditional method linearly blends the warped images to reduce the color difference between them [8, 24]. Figure 2 shows the ghosting problem of this fusion algorithm. To handle ghosting and chromatic gaps better, we introduce nonlinear weights that assign pixel weights more flexibly.

In this paper, an arc function nonlinear weight model is proposed to achieve a smoother transition of pixel values in the overlapping regions.

Here, P1(x, y) and P2(x, y) represent the pixel values of the overlapping regions in the images \( {\overline{\boldsymbol{I}}}_{\mathbf{1}}\left(\boldsymbol{x},\boldsymbol{y}\right) \) and \( {\overline{\boldsymbol{I}}}_2\left(\boldsymbol{x},\boldsymbol{y}\right) \), respectively, P(x, y) represents the pixel values of the overlapping regions in the final fused image, and ω1 and ω2 are weighting coefficients,

where \( x=\frac{j-{j}_{Li}}{j_{Ri}-{j}_{Li}} \), \( x\in \left[0,0.5\right]\cup \left(0.5,1\right] \), j is the column of the pixel to be smoothed in the overlapping region, and \( {j}_{Li} \) and \( {j}_{Ri} \) are the columns of the left end and the right end, respectively, of the row of the pixel to be smoothed in the overlapping region.

The total weight change of the linear weight of the pixel value corresponding to the image I1 with the position is −1, and the total rate of change of the nonlinear weight is

That is, the overall change rate of non-linear and linear weighting is the same. Our method will not produce more error than the linear weight model, but will expand the range of smooth transition, thus reducing the problem of ghosting.
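To make the properties of such a weight concrete, the sketch below uses a hypothetical arc-shaped weight built from two quarter-circle arcs of radius 0.5 that meet at the midpoint x = 0.5. This specific formula is an assumption chosen for illustration and is not claimed to be the weight defined in this paper; it shares the stated properties that the weight of the left image falls from 1 to 0 (total change −1) and exceeds the linear weight 1 − x to the left of the midpoint. The function names and the constant test rows are also illustrative.

```python
import math

def arc_weight(x):
    """Hypothetical arc-shaped weight of the left image, x in [0, 1]."""
    if x <= 0.5:
        return 0.5 + math.sqrt(0.25 - x * x)           # arc centred at (0, 0.5)
    return 0.5 - math.sqrt(0.25 - (1.0 - x) ** 2)      # arc centred at (1, 0.5)

def blend_row(left_row, right_row, j_left, j_right):
    """Blend one image row over the overlap columns j_left..j_right."""
    out = []
    for j in range(j_left, j_right + 1):
        x = (j - j_left) / (j_right - j_left)          # normalized column position
        w1 = arc_weight(x)
        w2 = 1.0 - w1                                  # weights sum to one
        out.append(w1 * left_row[j] + w2 * right_row[j])
    return out

# Two constant rows with a 40-level exposure difference.
left = [100.0] * 11
right = [140.0] * 11
blended = blend_row(left, right, 0, 10)
```

The blended row starts at the left image's value, ends at the right image's value and passes through their average at the midpoint, so the exposure difference is absorbed without a visible step at either end of the overlap.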

Figure 3 compares the most advanced image stitching techniques on the Temple image dataset, showing the corresponding overlapping regions in the results of AutoStitch, APAP, SPHP+APAP, ANAP, ELA and our method. AutoStitch creates ghosting, misalignment and chromatic gaps. Algorithms such as SPHP also produce ghosting and chromatic gaps. In our method, the arc function model is used to fuse the images, which solves the chromatic aberration problem marked by the red circle. Compared with the traditional linear fusion scheme, the arc function can increase or decrease the proportion of each warped image at a suitable position to achieve a smoother pixel transition and suppress ghosting. For example, our method increases the influence of the left image to the left of the midpoint and reduces the proportion of the right image at the corresponding positions, while the opposite holds to the right of the midpoint. The optimized TPS function further improves the pixel alignment accuracy, which solves the overlap and ghosting problems marked by the blue circle. The result is a more natural image.

4 Experiments and results

We carried out comparative experiments with six methods on image datasets such as Temple, namely AutoStitch [6], SPHP [8], APAP [39], ANAP [24], ELA [20] and ours. In our experiments, we used the parameter settings suggested in the corresponding papers and the code provided by their authors to obtain the comparison results. More results are provided in the supplemental materials. Our experiments were run on a desktop with a 3.9 GHz CPU and 4 GB RAM.

Figure 4 compares all methods of stitching two challenging images we provide. Each row represents an effect image obtained by different methods, namely AutoStitch, APAP, SPHP, ANAP, ELA and ours. The image on the left is the output image containing the marker box that needs to be highlighted, and the middle red box and the green box on the right are images of the local area containing the parallax error and distortion area.

The AutoStitch result in row 1, which uses multiband fusion, does not align the overlapping region well. SPHP, with its global similarity transformation constraints, in row 2 and APAP, with its mesh optimization, in row 3 are misaligned in the red and green marker boxes. ANAP in row 4 eliminates the parallax of the overlapping region by the linearized homography matrix, but still does not solve the perspective distortion problem in non-overlapping regions. The results obtained by ELA in row 5, which uses the global homography and similarity, also have perspective distortion problems. The results in the last row show that our method is better aligned and handles parallax successfully, with no visible parallax errors or perspective distortion.

Table 1 compares the time consumption of our method with other methods without location processing to handle feature points, including feature point extraction and matching and total time consumption, as well as the number of registered feature points. Figure 5 is a comparison of the time consumption of our method with various advanced algorithms.

As shown in Table 1, after adding the positioning method, the time consumed by feature point extraction and registration is greatly reduced compared with the traditional SIFT under the same conditions, and the number of registered feature points is significantly increased. Furthermore, compared with the fast feature matching methods, our method obtains more features, which benefits the subsequent image alignment. The time consumption of each algorithm in Fig. 5 shows that our method is significantly more efficient than the other advanced algorithms. The various examples show that our method improves both the stitching efficiency and the quality of the output image.

Among the results of AutoStitch [6], SPHP [8], APAP [39], ANAP [24] and ELA [20], the visually best image, that is, the one with the least parallax and ghosting, is used as the reference image. The quality of the reference image and of our image was evaluated by SSIM [14] to obtain Table 2. The higher the SSIM score, the better the quality of the stitched image. The comparison results in Table 2 show that our method is ahead of the other advanced methods in most cases, that is, the quality of the image output by our method is better.
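For readers unfamiliar with the metric, the sketch below computes a simplified, single-window SSIM over two whole grayscale images. Practical SSIM implementations average the index over local windows, so this global version is a simplification; the constants 0.01 and 0.03 and the 8-bit dynamic range are the commonly used defaults, and the function name `global_ssim` and the tiny test images are illustrative.

```python
def global_ssim(img_a, img_b, dynamic_range=255.0):
    """Single-window SSIM of two equally sized grayscale images (lists of rows)."""
    a = [float(v) for row in img_a for v in row]
    b = [float(v) for row in img_b for v in row]
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((v - mu_a) ** 2 for v in a) / n
    var_b = sum((v - mu_b) ** 2 for v in b) / n
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / n
    c1 = (0.01 * dynamic_range) ** 2       # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2       # stabilizes the contrast/structure term
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))

reference = [[10, 50, 90], [130, 170, 210]]
shifted = [[v + 20 for v in row] for row in reference]
score_same = global_ssim(reference, reference)
score_shifted = global_ssim(reference, shifted)
```

Identical images score 1, and any luminance, contrast or structural difference lowers the score, which is why a higher value in Table 2 indicates a result closer to the reference.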

In terms of feature matching, our contributions are threefold. First, the proposed approach preserves the stability of SIFT as much as possible to obtain a considerable number of feature points. Second, it improves matching efficiency and quality through preprocessing. Third, the alignment accuracy of the image is also improved because the matching is more stable. A large number of matches effectively improves the robustness of the alignment and reduces or even avoids ghosting.

5 Conclusion

In this paper, a novel solution is proposed to address the poor feature extraction efficiency of existing image stitching methods and the visual problems of image fusion, such as chromatic aberration, deformation and ghosting. The example results show that the stitching effect of this method is better than that of some of the most advanced techniques, such as AutoStitch, APAP, SPHP, ANAP and ELA. There are no visible seams in the stitched image, and the distortion caused by the perspective transformation is alleviated.

Although our method accelerates image stitching and provides better fusion, objects in the image can still look somewhat unnatural. Next, we will continue to optimize the performance of the method, for example by improving the pixel weight model and reducing the image distortion in the non-overlapping regions, to make the result more natural. We have considered the latest feature matching methods such as GMS [3], and the latest image stitching methods such as QH [19], SPW [23] and TFA [22]. At the same time, line matching methods such as HLM [18] and CLPI [15] can also be introduced into feature matching to enrich the feature points.

References

Antonio R, Markus B (2010) Thin-plate spline analysis of allometry and sexual dimorphism in the human craniofacial complex. Am J Phys Anthropol 117(3):236–245

Bay H, Tuytelaars T, Gool LV (2006) SURF: Speeded up robust features. In Proc of European Conf. on Computer Vision (pp. 404–417)

Bian JW, Lin WY, Matsushita Y, Yeung SK, Nguyen TD, Cheng MM (2017) GMS: Grid-Based Motion Statistics for Fast, Ultra-Robust Feature Correspondence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2828–2837)

Bookstein FL (1989) Principal warps: thin-plate splines and the decomposition of deformations. IEEE Transactions on Pattern Analysis and Machine Intelligence 11(6):567–585

Brown M, Lowe DG (2003) Recognising panoramas. Proceedings Ninth IEEE International Conference on Computer Vision 2:1218–1225

Brown M, Lowe DG (2007) Automatic panoramic image stitching using invariant features. Int J Comput Vis 74(1):59–73

Calonder M, Lepetit V, Strecha C, Fua P (2010) BRIEF: binary robust independent elementary features. Lect Notes Comput Sci 6314(4):778–792

Chang CH, Sato Y, Chuang YY (2014) Shape-preserving half-projective warps for image stitching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 3254–3261)

Chen YS, Chuang YY (2016) Natural image stitching with the global similarity prior. In Proc of European Conf on Computer Vision (pp. 186–201)

Chen W-C, Xiong Y, Gao J, et al. (2007) Efficient extraction of robust image features on mobile devices. The Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan

Davis J (1998) Mosaics of scenes with moving objects. Proc IEEE Conf Comput Vision Patt Recog

Gaddam VR, Riegler M, Eg R, Griwodz C, Halvorsen P (2016) Tiling in interactive panoramic video: approaches and evaluation. IEEE Trans Multimed 18(9):1819–1831

Gao J, Kim SJ, Brown MS (2011) Constructing image panoramas using dual-homography warping. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (pp. 49–56)

Hore A, Ziou D (2010) Image Quality Metrics: PSNR vs. SSIM. International Conference on Pattern Recognition (ICPR 2010) 20th Istanbul. (pp. 2366–2369)

Jia Q, Gao X, Fan X et al (2016) Novel coplanar line-points invariants for robust line matching across views. In Proc of European Conf. on Computer Vision (pp 599–611)

Jiang Y, Xu K, Zhao R, Zhang G, Cheng K, Zhou P (2017) Stitching images of dual-cameras onboard satellite. ISPRS J Photogramm Remote Sens 128:274–286

Li X, Hui N, Shen H, Fu Y, Zhang L (2015) A robust mosaicking procedure for high spatial resolution remote sensing images. ISPRS J Photogramm Remote Sens 109:108–125

Li K, Yao J, Lu X, Li L, Zhang Z (2016) Hierarchical line matching based on line–junction–line structure descriptor and local homography estimation. Neurocomputing 184:207–220

Li N, Xu Y, Wang C (2017) Quasi-homography warps in image stitching. IEEE Trans Multimed 20(6):1365–1375

Li J, Wang Z, Lai S, Zhai Y, Zhang M (2018) Parallax-tolerant image stitching based on robust elastic warping. IEEE Trans Multimed 20(7):1672–1687

Li JL, Jiang PQ, Song SX et al (2019) As-aligned-as-possible image stitching based on deviation-corrected warping with global similarity constraints. IEEE Access 7:156603–156611

Li J, Deng BS, Tang RF, Wang ZM, Yan Y (2020) Local-adaptive image alignment based on triangular facet approximation. IEEE Trans Image Process 2356–2369

Liao TL, Li N (2019) Single-perspective warps in natural image stitching. IEEE Trans Image Process 29:724–735

Lin CC, Pankanti SU, Ramamurthy KN, et al. (2015) Adaptive as-natural-as-possible image stitching. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 7:1155–1163

Lowe DG (2004) Distinctive image features from scale-invariant Keypoints. Int J Comput Vis 60(2):91–110

Millis BA, Tyska MJ (2017) High-resolution image stitching as a tool to assess tissue-level protein distribution and localization. Methods Mol Biol 1606:281

Peleg S (1981) Elimination of seams from photomosaics. Comput Graph Image Process 16(1):90–94

Rosten E, Drummond T (2006) Machine learning for high-speed corner detection. Lecture Notes in Computer Science. pp 430–443

Rublee E, Rabaud V, Konolige K, Bradski G (2011) ORB: an efficient alternative to SIFT or SURF. Proceedings of the IEEE International Conference on Computer Vision (pp. 2564–2571)

Semenishchev EA, Voronin VV, Marchuk VI, et al. (2017) Method for stitching microbial images using a neural network. Proceedings of the mobile multimedia/image processing, security, and applications 10221:102210O

Sheng H, Lou C, Xu W et al (2014) A seamless approach to stitching lunar DOMs with TPS. Appl Math Inf Sci 555–562

Shum HY, Ng KT, Chan SC (2005) A virtual reality system using the concentric mosaic: construction, rendering, and data compression. IEEE Trans Multimed 7(1):85–95

Sun X, Foote J, Kimber D et al (2005) Region of interest extraction and virtual camera control based on panoramic video capturing. IEEE Trans Multimed 7(5):981–990

Szeliski R (2006) Image alignment and stitching: a tutorial. Foundations and Trends in Computer Graphics and Vision 2(1):1–10

Takacs G, Xiong Y, Grzeszczuk R, et al. (2008) Outdoors augmented reality on mobile phone using loxel-based visual feature organization. Proceedings of the 1st ACM International Conference on Multimedia Information Retrieval, Vancouver, British Columbia, Canada

Tang WK, Wong TT, Heng P (2005) A system for real-time panorama generation and display in tele-immersive applications. IEEE Trans Multimed 7(2):280–292

Tzavidas S, Katsaggelos AK (2005) A multicamera setup for generating stereo panoramic video. IEEE Trans Multimed 7(5):880–890

Yang F, Deng ZS, Fan QH (2013) A method for fast automated microscope image stitching. Micron 48:17–25

Zaragoza J, Chin TJ, Brown MS et al (2013) As-projective-as-possible image stitching with moving DLT. IEEE Transactions on Pattern Analysis and Machine Intelligence 36(7):1285–1298

Zhao Q, Wan L, Feng W, Zhang J, Wong TT (2013) Cube2Video: navigate between cubic panoramas in real-time. IEEE Trans Multimed 15(8):1745–1754

Acknowledgments

This work is supported by National Natural Science Foundation of China (61762018), the Guangxi 100 Youth Talent Program (F-KA16016), the Colleges and Universities Key Laboratory of Intelligent Integrated Automation, Guilin University of Electronic Technology, China (GXZDSY2016-03), the research fund of Guangxi Key Lab of Multi-source Information Mining & Security (18-A-02-02), Natural Science Foundation of Guangxi (2018GXNSFAA281310) and the Innovation Project of Guangxi Graduate Education (XYCSZ2019075).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Qin, Y., Li, J., Jiang, P. et al. Image stitching by feature positioning and seam elimination. Multimed Tools Appl 80, 20869–20881 (2021). https://doi.org/10.1007/s11042-021-10694-6

DOI: https://doi.org/10.1007/s11042-021-10694-6