Abstract

This paper presents three new approaches for the enhancement of Infrared (IR) night vision images. The first approach is based on merging gamma correction with Histogram Matching (HM). The second approach is a hybrid of gamma correction and Contrast Limited Adaptive Histogram Equalization (CLAHE). The third approach is based on a trilateral enhancement in which the IR images pass through three stages: segmentation, enhancement and sharpening. In the first stage, the IR image is divided into segments based on the optimum global thresholding (Otsu) method. The second stage, which is the heart of the enhancement approach, depends on the Additive Wavelet Transform (AWT) to decompose the image into an approximation and details. Homomorphic enhancement is performed on the detail components, while Plateau Histogram Equalization (PHE) is performed on the approximation plane. Then, the image is reconstructed and subjected to a post-processing high-pass filter. Average gradient, Sobel edge magnitude, entropy and spectral entropy have been used as quality metrics for the assessment of the three proposed approaches. The third proposed approach gives superior results to the other two from the quality metrics point of view. On the other hand, it takes a longer computation time than the other two. The first proposed approach gives the best results of the three from the computation time perspective.


1 Introduction

Image enhancement techniques have been widely used in many applications of image processing, where the subjective quality of images is important for human interpretation. Contrast is an important factor in any subjective evaluation of image quality. Contrast is the difference in visual properties that makes an object distinguishable from other objects and the background [5, 6, 9].

Night vision signifies the ability to see in the dark. This capability is naturally possessed by owls and cats, but with the development of science and technology, devices have been developed to enable human beings to see in the dark as well as in adverse atmospheric conditions such as fog, rain, and dust [3, 15, 17, 23, 24].

The main purpose for the development of night vision technology was military use to locate enemies at night [8, 13]. This technology is not only used extensively for military purposes, but also for navigation, surveillance, targeting and security [1, 2, 7].

IR astronomy uses sensor-equipped telescopes to penetrate dusty regions of space such as molecular clouds and to detect objects such as planets. It is also used to view highly red-shifted objects from the early days of the universe. IR cameras are used to detect heat loss in insulated systems and to observe changing blood flow in the skin [4, 20].

Some thermal IR datasets have been published in the past such as the OTCBVS Benchmark [11, 20] and the LITIV Thermal-Visible Registration Dataset [11, 18, 19, 21]. Some samples of these datasets will be used in the simulation experiments introduced in this paper. Edge information is one of the crucial features for IR images [10, 12, 14, 16, 22].

This paper presents three approaches for the enhancement of IR night vision images. The first approach is based on mixing gamma correction with the HM. The second approach depends on hybrid gamma correction with CLAHE. The third proposed approach is based on trilateral enhancement of IR night vision images. The paper is arranged as follows. Section 2 gives the motivation and related work. Section 3 explains the segmentation stage. Section 4 describes the plateau histogram equalization. Section 5 discusses the AWT homomorphic enhancement. Section 6 presents the first proposed approach. Section 7 presents the second proposed approach. Section 8 presents the third proposed approach. Section 9 presents the performance evaluation metrics. Section 10 discusses the simulation results. Finally, Section 11 gives the conclusion and future work.

2 Motivation and related work

This paper deals with a vital topic derived from the problems addressed for IR images. The objective is the development of image processing technologies to enhance IR night vision images. The proposed approach is based on a hybrid implementation of three stages: segmentation, enhancement and sharpening. Compared to the most relevant work [5, 6], this work depends on performance evaluation with average gradient, Sobel edge magnitude, entropy and spectral entropy [4]. The results obtained in this paper are better than those of the previous works, as shown in Tables 2, 3, 4 and 5 for four cases. Enhancement of night vision images and videos is very important for many computer vision tasks, such as visual tracking at night [3, 5]. The use of multiple features for tracking in IR videos can be enhanced with the proposed approach, since different types of variations, such as illumination, occlusion and pose, can be enhanced [4].

To intelligently analyze and understand video content, a main step is to accurately perceive the motion of the objects of interest in videos. The task of object tracking aims to determine the position and status of the objects of interest in consecutive video frames. This field is very important, and has received great research interest in the last decade. Although numerous algorithms have been proposed for object tracking in RGB videos, the task is still limited in IR videos [3, 4, 6].

3 Segmentation stage

This stage is based on Otsu's thresholding method. It is a nonparametric and unsupervised method of automatic threshold selection for image segmentation. It is a simple procedure that utilizes only the zeroth- and first-order cumulative moments of the gray-level histogram. It is optimum in the sense that it maximizes the between-class variance, a well-known measure used in statistical discriminant analysis [6, 14]. The normalized histogram of the image is:

\[ p_i = \frac{n_i}{MN} \]

where M × N is the size of the image and \( n_i \) is the number of pixels in the image having gray level i. Suppose we select a threshold k, and use it to threshold the image into two classes, C1 and C2. Class C1 consists of the pixels with intensity values in the range [0, k]. Class C2 consists of the pixels with intensity values in the range [k + 1, L − 1]. Using this threshold, the probability, P1(k), that a pixel is assigned to class C1 is given by the cumulative sum as follows [10, 16]:

\[ P_1(k) = \sum_{i=0}^{k} p_i \]

where the pixels of the input image are represented in L gray levels and k is a selected threshold with 0 < k < L − 1.

Similarly, the probability of class C2 occurring is:

\[ P_2(k) = \sum_{i=k+1}^{L-1} p_i = 1 - P_1(k) \]

where P1(k) is the probability of class C1.

The mean intensity value of the pixels assigned to class C1 is:

\[ m_1(k) = \frac{1}{P_1(k)} \sum_{i=0}^{k} i\, p_i \]

where P1(k) is the probability of class C1.

Similarly, the mean intensity value of the pixels assigned to class C2 is:

\[ m_2(k) = \frac{1}{P_2(k)} \sum_{i=k+1}^{L-1} i\, p_i \]

where P2(k) is the probability of class C2.

The global mean is given by this equation:

\[ m_G = \sum_{i=0}^{L-1} i\, p_i \]

The problem is to find an optimum value for k that maximizes the criterion defined by this equation:

\[ \eta(k) = \frac{\sigma_B^2(k)}{\sigma_G^2} \]

where \( \sigma_B^2(k) \) is the between-class variance defined as:

\[ \sigma_B^2(k) = P_1(k)\,[m_1(k) - m_G]^2 + P_2(k)\,[m_2(k) - m_G]^2 \]

and \( \sigma_G^2 \) is the global variance defined as:

\[ \sigma_G^2 = \sum_{i=0}^{L-1} (i - m_G)^2\, p_i \]

The optimum threshold is the value k* that maximizes \( \sigma_B^2(k) \).
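The threshold search described in this section can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `otsu_threshold` and the choice of a plain histogram list as input are assumptions, and the between-class variance is evaluated through the equivalent closed form (m_G P1 − m)² / (P1 P2), where m is the cumulative first moment up to k.

```python
def otsu_threshold(hist):
    """Return the threshold k* that maximizes the between-class variance.

    hist[i] is the number of pixels with gray level i (n_i in the text).
    """
    total = sum(hist)                               # M * N
    p = [n / total for n in hist]                   # normalized histogram p_i
    m_g = sum(i * pi for i, pi in enumerate(p))     # global mean m_G

    best_k, best_var = 0, -1.0
    p1 = 0.0          # cumulative probability P1(k)
    cum_mean = 0.0    # cumulative first moment: sum of i * p_i for i <= k
    for k in range(len(p) - 1):
        p1 += p[k]
        cum_mean += k * p[k]
        p2 = 1.0 - p1
        if p1 <= 0.0 or p2 <= 0.0:
            continue  # one class is empty, the variance is undefined
        # Same value as P1 (m1 - mG)^2 + P2 (m2 - mG)^2
        var_b = (m_g * p1 - cum_mean) ** 2 / (p1 * p2)
        if var_b > best_var:
            best_k, best_var = k, var_b
    return best_k


# Two well-separated groups of gray levels on an 8-level scale.
k_star = otsu_threshold([10, 10, 0, 0, 0, 0, 10, 10])
```

For a bimodal histogram such as the one in the usage line, every threshold lying between the two groups gives the same between-class variance; this sketch returns the smallest such k.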

4 Plateau histogram equalization

The PHE modifies the shape of the input histogram by reducing or increasing the values in the histogram's bins based on a threshold limit before the equalization takes place. An appropriate threshold value, denoted T, is selected first. The probability density function of the input image is:

\[ P(X_k) = \frac{n_k}{n_t} \]

where \( n_k \) represents the number of times that the level \( X_k \) appears in the input image and \( n_t \) is the total number of samples in the input image, for k = 0, 1, ..., L − 1. If the value of P(Xk) is greater than T, it is forced to equal T; otherwise it is unchanged, as shown below [6]:

\[ P_m(X_k) = \begin{cases} T, & P(X_k) > T \\ P(X_k), & \text{otherwise} \end{cases} \]

where Pm(Xk) is the modified probability density function and T is the selected threshold value.

Then, the Histogram Equalization (HE) is carried out using this modified probability density function. There is one main problem associated with the PHE. Most of the methods need the user to set the plateau threshold of the histogram manually, which makes these methods unsuitable for automatic systems. Although some methods can set the plateau threshold automatically, the process for deciding the threshold is often complicated.

The selection of the plateau threshold value is very important when the PHE is used for IR image improvement, because it affects the contrast enhancement of the image. An appropriate plateau threshold value greatly enhances the contrast of the image. In addition, a plateau value that is appropriate for some IR images may not be appropriate for others. As a result, the plateau threshold value should be selected adaptively for each IR image in the process of image enhancement.

The steps of this algorithm are performed as follows:

1. The IR image is obtained from an object through the optical lens of a thermal imager.
2. All the pixel values of the image are arranged in ascending order.
3. The histogram is generated.
4. The median value of the histogram is evaluated, rounded to the nearest integer value and set as the threshold.
5. The equalization is performed as if there were two separate histograms, split at the estimated threshold.
6. The histogram-equalized value for every pixel is calculated using the formula:

where HE is the histogram-equalized value, cdf is the cumulative distribution function (CDF) value, NO is the number of output levels, and NE is the total number of pixels.
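The plateau clipping and the subsequent equalization can be illustrated with a short Python sketch. It rests on several assumptions that are not taken from the paper: the threshold T is the rounded median of the non-empty histogram bins, the mapping uses the plain cumulative sum of the clipped histogram scaled to the output range, and the two-histogram split of step 5 is omitted. The name `plateau_equalize` is hypothetical.

```python
import statistics


def plateau_equalize(pixels, levels=256):
    """Plateau histogram equalization of a flat list of integer gray levels."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1

    # Assumption: plateau threshold T = rounded median of the non-empty bins.
    nonzero = [h for h in hist if h > 0]
    t = round(statistics.median(nonzero))

    # Modified histogram: bins above T are forced to T, others are unchanged.
    clipped = [min(h, t) for h in hist]

    # Cumulative sum of the clipped histogram.
    total = sum(clipped)
    cdf, running = [], 0
    for h in clipped:
        running += h
        cdf.append(running)

    # Map every gray level through the scaled cumulative distribution.
    lut = [round((levels - 1) * c / total) for c in cdf]
    return [lut[v] for v in pixels]


# A dark-dominated image: 100 pixels at level 0 and a few brighter ones.
out = plateau_equalize([0] * 100 + [1] * 2 + [2] * 2, levels=4)
```

In the usage line the dominant dark bin is clipped to the plateau, so levels 0, 1 and 2 map to 1, 2 and 3. Without clipping, the dominant bin alone would consume almost the whole output range.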

5 AWT Homomorphic enhancement

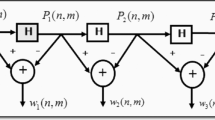

In this approach, we merge the benefits of the AWT and homomorphic enhancement. The idea of the AWT is to decompose the image into additive components by passing it through cascaded low-pass kernels with the same coefficients. The image is passed through the kernel H defined as follows [6]:

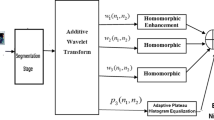

The steps of the AWT approach are shown in Fig. 1. Filtering the image with H gives an approximation image. The difference between the input image and the approximation image gives the first detail plane w1. If this process is repeated on the approximation image, we obtain more detail planes w2 up to wn and finally an approximation plane pn.

We avoid sub-sampling of the planes to preserve all the information in the image and avoid losses. All the detail planes and the approximation plane can be further processed with image enhancement techniques such as the homomorphic method, as shown in Fig. 2. Each sub-band is then processed separately using the homomorphic enhancement to reinforce its details.
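The additive decomposition and its inverse can be sketched as follows. The kernel H is available here only as an image, so this sketch assumes the separable taps [1, 4, 6, 4, 1]/16 that are commonly used with additive wavelet decompositions; it also assumes that the same kernel is reused at every stage without dilation and that borders are handled by edge replication. The function names are illustrative.

```python
B3 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]   # assumed 1-D low-pass taps


def _clamp(i, n):
    """Replicate the border by clamping an index to [0, n - 1]."""
    return min(max(i, 0), n - 1)


def smooth(img):
    """Separable low-pass filtering of a 2-D list, without sub-sampling."""
    rows, cols = len(img), len(img[0])
    horiz = [[sum(B3[t] * img[r][_clamp(c + t - 2, cols)] for t in range(5))
              for c in range(cols)] for r in range(rows)]
    return [[sum(B3[t] * horiz[_clamp(r + t - 2, rows)][c] for t in range(5))
             for c in range(cols)] for r in range(rows)]


def awt_decompose(img, n_planes=3):
    """Return (detail planes w1..wn, approximation plane pn)."""
    details, approx = [], img
    for _ in range(n_planes):
        smoother = smooth(approx)
        # Detail plane = current approximation minus its smoothed version.
        details.append([[a - s for a, s in zip(ra, rs)]
                        for ra, rs in zip(approx, smoother)])
        approx = smoother
    return details, approx


def awt_reconstruct(details, approx):
    """Inverse AWT: add the approximation plane and all detail planes."""
    out = [row[:] for row in approx]
    for w in details:
        out = [[o + d for o, d in zip(ro, rd)] for ro, rd in zip(out, w)]
    return out


img = [[float((r * 7 + c * 3) % 11) for c in range(6)] for r in range(5)]
details, approx = awt_decompose(img, n_planes=3)
rec = awt_reconstruct(details, approx)
```

Because every detail plane is a difference of successive approximations, summing all planes restores the input up to floating-point rounding, which is why no information is lost when the planes are not sub-sampled.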

- Homomorphic Image Enhancement

An image can be represented as a product of two components as in the following equation [4, 6]:

\[ f(m,n) = i(m,n)\, r(m,n) \tag{13} \]

where f(m, n) is the obtained image pixel value, i(m, n) is the light illumination incident on the object to be imaged and r(m, n) is the reflectance of that object.

It is known that illumination is approximately constant since the light falling on all objects is approximately the same. The only change between object images is in the reflectance component. If we apply a logarithmic process to Eq. (13), we can change the multiplication process into an addition process as follows:

\[ \log f(m,n) = \log i(m,n) + \log r(m,n) \tag{14} \]

The first term in the above equation has small variations, but the second term has large variations as it corresponds to the reflectivity of the object to be imaged. By attenuating the first term and reinforcing the second term of Eq. (14), we can reinforce the image details. It is expected that enhanced IR images can be obtained with this approach. To further improve the AWT homomorphic approach, we recommend also processing the approximation component with adaptive plateau histogram equalization.

The steps of the Additive Wavelet adaptive Plateau Histogram Homomorphic (AWPH) approach can be summarized as follows:

1. Decompose the IR image into four sub-bands p3, w1, w2 and w3 using the AWT.
2. Apply a logarithmic operation to each of the sub-bands w1, w2 and w3, as they contain the details, to get their illumination and reflectance components.
3. Perform a reinforcement operation on the reflectance component of each sub-band and an attenuation operation on the illumination component.
4. Reconstruct each sub-band from its illumination and reflectance using addition and exponentiation processes.
5. Apply adaptive plateau histogram equalization to the plane p3.
6. Perform an inverse AWT on the obtained sub-bands by adding p3, w1, w2 and w3 after the homomorphic processing to get the enhanced image.

- Sharpness Stage

In image processing, it is often desirable to emphasize the high-frequency components representing the image details without eliminating the low-frequency components. The high-boost filter can be used for this purpose. It amplifies the high-frequency components of an image via a procedure that subtracts a smoothed version of the image from the original one [1, 2, 6]:

where Whb is the high-boost filter, Whp is the high-pass filter, and A is a constant.
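One common way to realize a high-boost operation is to scale the original image by A and subtract a smoothed copy. The sketch below follows that textbook form and is only an assumption about the filter used here: the smoothing is a 3 × 3 mean with edge replication, and the name `high_boost` and the default A = 1.5 are illustrative.

```python
def high_boost(img, a=1.5):
    """High-boost filtering: a * original - smoothed(original)."""
    rows, cols = len(img), len(img[0])

    def clamp(i, n):
        # Replicate the border by clamping the index.
        return min(max(i, 0), n - 1)

    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # 3 x 3 mean as the assumed low-pass (smoothing) step.
            mean = sum(img[clamp(r + dr, rows)][clamp(c + dc, cols)]
                       for dr in (-1, 0, 1) for dc in (-1, 0, 1)) / 9.0
            row.append(a * img[r][c] - mean)
        out.append(row)
    return out


flat = high_boost([[2.0] * 3 for _ in range(3)], a=1.5)
spike = high_boost([[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]], a=1.5)
```

With A = 1 the operation reduces to a plain high-pass filter, so flat regions map to zero. Values of A above 1 keep a fraction (A − 1) of the original image, which is how the low-frequency content is retained while details are amplified.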

6 The first proposed approach

This approach depends on merging gamma correction with the HM as a tool to enhance IR images. Generally, visible images have better-distributed histograms than IR images, which have very band-limited histograms. If the histogram of the IR image is modified to spread over the range of the visible image, the IR image is enhanced visually. This process is known as HM. It can be carried out by modifying the statistics of the IR image, such as the mean and variance, based on their counterparts in the visual image.

- Gamma Correction Technique

Gamma Correction (GC) is the name of a nonlinear operation used to encode and decode luminance values in video or still image systems. Gamma correction is defined as follows [5]:

\[ v = a f^{\gamma} \tag{19} \]

where a is a constant, f is the original IR night vision image, v is the enhanced IR image and γ is a positive constant representing the gamma value.

The GC is implemented on normalized images with values varying from 0 to 1. If we assume a value of γ less than 1, this amounts to taking a root of degree 1/γ. It is known that roots of fractional numbers are larger than the numbers themselves and roots of 1 are equal to 1. This property gives some gain to low pixel values, while keeping high pixel values close to one. This, in turn, enhances the visibility of objects in IR images immersed in darkness. The block diagram of this approach is shown in Fig. 3.
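The gain that gamma correction gives to dark pixels is easy to see numerically. The following sketch applies the power law to a normalized image stored as a nested list; the function name `gamma_correct` and the default values γ = 0.5 and a = 1 are assumptions chosen for illustration.

```python
def gamma_correct(img, gamma=0.5, a=1.0):
    """Apply v = a * f**gamma to an image with values normalized to [0, 1]."""
    return [[a * (f ** gamma) for f in row] for row in img]


# With gamma = 0.5 every pixel is replaced by its square root.
out = gamma_correct([[0.0, 0.04, 0.25, 1.0]], gamma=0.5)
```

In the usage line a dark pixel of 0.04 is lifted to about 0.2 and 0.25 becomes 0.5, while 0 and 1 are left unchanged, which matches the behaviour described for γ less than 1.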

The steps of the first proposed approach, which merges gamma correction with the HM of the IR image to the visual image, are summarized as follows:

1. Acquire the IR night vision image from the camera.
2. Apply GC on the IR night vision image using Eq. (19).
3. Compute the mean of the resultant image g(m, n):

\[ \bar{g} = \frac{1}{MN} \sum_{m=1}^{M} \sum_{n=1}^{N} g(m,n) \]

where M and N are the dimensions of the IR image.
4. Compute the mean value of the reference visual image fr(k, l):

\[ \bar{f}_r = \frac{1}{KL} \sum_{k=1}^{K} \sum_{l=1}^{L} f_r(k,l) \]

where fr is the reference visual image, and K and L are the dimensions of the reference visual image.
5. Estimate the standard deviation of the resultant image, σ1.
6. Estimate the standard deviation of the reference image, σ2.
7. Estimate the correction factor Ce by dividing the standard deviation of the reference image by the standard deviation of the IR image:

\[ C_e = \frac{\sigma_2}{\sigma_1} \]

Compute a modified mean factor fc.
8. Estimate the histogram-matched image FH.
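The statistics-based matching in these steps can be sketched in Python under explicit assumptions. The expressions for the modified mean factor and the matched image are not available in this text, so the sketch assumes the usual linear mean and standard deviation matching, with an offset equal to the reference mean minus Ce times the IR mean. The function name `match_mean_std` and this offset are hypothetical.

```python
import statistics


def match_mean_std(ir, ref):
    """Linearly rescale `ir` so its mean and std match those of `ref`."""
    flat_ir = [v for row in ir for v in row]
    flat_ref = [v for row in ref for v in row]

    mean_ir = statistics.fmean(flat_ir)
    mean_ref = statistics.fmean(flat_ref)
    sigma1 = statistics.pstdev(flat_ir)     # std of the gamma-corrected IR image
    sigma2 = statistics.pstdev(flat_ref)    # std of the reference visual image

    ce = sigma2 / sigma1                    # correction factor Ce (sigma1 must be non-zero)
    fc = mean_ref - ce * mean_ir            # assumed form of the mean offset
    return [[ce * v + fc for v in row] for row in ir]


ir = [[0.1, 0.2], [0.3, 0.4]]
ref = [[0.0, 0.5], [0.5, 1.0]]
matched = match_mean_std(ir, ref)
```

After this mapping the output has the same mean and standard deviation as the reference image. In practice the result would also need clipping to the valid intensity range, which the sketch leaves out.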

7 The second proposed approach

This approach is concerned with enhancement of IR images based on merging the GC with the CLAHE. The hybrid approach of CLAHE and the GC is used to enhance the visual quality of the IR images. The conventional HE does not adapt to local contrast requirements. Minor contrast differences can be entirely missed, when the number of pixels falling in a particular gray range is relatively small. An adaptive technique to avert this drawback is block-based processing for the HE. The steps of this approach are shown in Fig. 4.

The steps of this proposed approach can be summarized as follows:

1. Apply the GC on the IR night vision image with Eq. (19).
2. Divide the resultant image into non-overlapping blocks.
3. Estimate the histogram and apply the HE on each sub-image or block.
4. Apply a clip limit to overcome the noise problem and limit the amplification by clipping the histogram at a given value before computing the cumulative distribution functions, to get the enhanced IR image.

The clip limit Ω is obtained by the following equation:

where Ω is the clip limit, ʘ is the clip factor, M and N are the region size in gray scale value, and Smax is the maximum of the new distribution after the HE.
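The effect of a clip limit on a block histogram can be illustrated with a small sketch. It takes the clip limit as a given number rather than computing it from the clip factor, and it redistributes the clipped excess uniformly over all bins in a single pass, which is a common simplification. The name `clip_histogram` is illustrative.

```python
def clip_histogram(hist, clip_limit):
    """Clip a block histogram and spread the excess uniformly over all bins."""
    excess = sum(max(h - clip_limit, 0) for h in hist)
    clipped = [min(h, clip_limit) for h in hist]
    bonus = excess / len(hist)
    # Single-pass redistribution: a bin that was already at the limit ends
    # slightly above it; iterative variants repeat the clipping to avoid this.
    return [h + bonus for h in clipped]


redistributed = clip_histogram([10, 2, 0, 0], clip_limit=4)
```

The total count is preserved, so the cumulative distribution computed afterwards still ends at the number of pixels in the block, but its steepest slope, and therefore the noise amplification, is reduced.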

8 The third proposed approach

This approach is concerned with the enhancement of IR night vision images based on trilateral visual quality enhancement. Trilateral here means three stages: the IR night images pass through segmentation, enhancement and sharpening. The steps of this approach are shown in Fig. 5.

The steps of the third proposed approach can be summarized as follows:

1. Acquire the IR night vision images from the IR night imaging camera.
2. Divide the IR night vision image into non-overlapping sub-images in a segmentation stage.
3. Apply the AWPH stage on the resultant image.
4. Apply a high-pass boosting filter on the enhanced image to get a high-quality enhanced IR night image.

9 Performance evaluation metrics

This section presents the quality metrics used for the evaluation of the enhancement results of the three approaches. These metrics include entropy (E), average gradient (AG), Sobel edge magnitude (∇f) and spectral entropy (Ef).

Entropy is a measure of the amount of information in a random variable. The histogram of an image can be interpreted as a PDF if it is normalized by the image dimensions. An equally spread histogram is equivalent to a uniform random variable, whose events have equal probabilities, leading to maximum entropy. On the other hand, discrete peaked histograms, as in black-and-white images, carry a low amount of information. Hence, the objective of processing IR images is to get close to histograms with uniform distributions. The entropy of an image is expressed as follows [5]:

\[ E = -\sum_{i=0}^{255} p_i \log_2 p_i \]

where E is the entropy of the IR image and 255 is the maximum level for an 8-bit gray-scale image. The larger the number of levels in an image, the higher the entropy. \( p_i \) is the probability of occurrence of pixels in the image having intensity i. If the number of pixels having intensity i is \( n_i \) and the image contains n pixels, then \( p_i = n_i / n \).
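The entropy computation can be sketched directly from the definition of the probabilities. The base-2 logarithm is an assumption (it gives entropy in bits, with a maximum of 8 for an 8-bit image), and the function name `image_entropy` is illustrative.

```python
import math


def image_entropy(pixels, levels=256):
    """Shannon entropy (in bits) of a flat list of integer gray levels."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    n = len(pixels)
    # Empty bins contribute nothing, so they are skipped to avoid log(0).
    return -sum((h / n) * math.log2(h / n) for h in hist if h > 0)


two_level = image_entropy([0, 0, 255, 255])      # black-and-white image
uniform = image_entropy(list(range(256)))        # perfectly flat histogram
```

The two usage lines reproduce the extremes discussed above: a two-level image has only 1 bit of entropy, while a perfectly uniform 8-bit histogram reaches the maximum of 8 bits.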

In image processing applications, many edges mean much information. Unfortunately, IR images are dark, which prevents the edges and details from appearing. So, our objective is to reinforce edges, revealing more details. Mathematically, edges can be obtained by differentiation in both directions of the image. Hence, the average gradient is expressed as follows [4, 6]:

where AG is the average gradient of the IR image, f is the original IR image, and M × N is the size of the IR image.
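Several definitions of the average gradient are in use, so the following sketch should be read as one common variant rather than the exact expression used here. It assumes forward differences in both directions and averages the root of their mean square over the (M − 1) × (N − 1) interior positions. The name `average_gradient` is illustrative.

```python
import math


def average_gradient(img):
    """Average gradient of a 2-D list using forward differences."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for r in range(rows - 1):
        for c in range(cols - 1):
            dx = img[r][c + 1] - img[r][c]     # horizontal difference
            dy = img[r + 1][c] - img[r][c]     # vertical difference
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))


ramp = average_gradient([[0, 1, 2], [0, 1, 2], [0, 1, 2]])
flat = average_gradient([[3, 3], [3, 3]])
```

A flat image gives zero, and any added edge or texture raises the value, which is why a larger average gradient is read as more visible detail.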

Another metric for edge intensity is the magnitude of both the horizontal and vertical derivatives. The Sobel edge magnitude is expressed as follows [4]:

\[ \nabla f = \sqrt{f_x^2 + f_y^2} \]

where ∇f is the Sobel edge magnitude, and fx and fy are two images that at each point contain the horizontal and vertical derivative approximations, respectively.

To estimate fx, the image is differentiated with respect to x using a differentiation mask, and to estimate fy, it is differentiated with respect to y using the corresponding mask, as shown in Fig. 6.

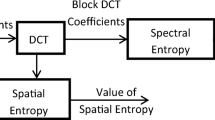
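The Sobel edge magnitude can be sketched as below. The masks in the figure are not reproduced in this text, so the standard 3 × 3 Sobel masks are assumed; border pixels are left at zero for simplicity, and the function name `sobel_magnitude` is illustrative.

```python
import math

# Standard Sobel masks (assumed): horizontal and vertical derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Return the per-pixel edge magnitude sqrt(fx^2 + fy^2) of a 2-D list."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            fx = sum(SOBEL_X[i][j] * img[r - 1 + i][c - 1 + j]
                     for i in range(3) for j in range(3))
            fy = sum(SOBEL_Y[i][j] * img[r - 1 + i][c - 1 + j]
                     for i in range(3) for j in range(3))
            out[r][c] = math.hypot(fx, fy)
    return out


# A vertical step edge between the second and third columns.
edges = sobel_magnitude([[0, 0, 1, 1] for _ in range(4)])
```

For the vertical step in the usage line only the horizontal derivative responds, so the interior pixels next to the edge have magnitude 4 and the untouched border stays at 0. A scalar metric can then be obtained by averaging the magnitude over the image.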

Another way to look at the quality of images is through their spectral content. Enhancing the spectral content of an image means more edges and details, as more frequency components appear in the image spectrum. A good metric to assess the spectral content of an image is the spectral entropy, which is evaluated using the block diagram in Fig. 7.

The image is first segmented into blocks and the DCT is estimated for each block. It is known that the first value of the DCT of a block is the largest value, that is, the DC value of the spatial-domain block. To use the DCT coefficients for spectral entropy estimation, the coefficient values need to be normalized so that they can be treated as a probability distribution function (PDF), as follows [5, 6]:

where 1 ≤ i ≤ 8, 1 ≤ j ≤ 8, i, j ≠ 1, and c(i, j) represents the DCT coefficients.

This process guarantees that each value of the coefficients after normalization is less than one. This allows the treatment of the values as if they were probabilities. We collect the normalized DC values of all blocks in the image after the DCT and estimate the PDF of these values to use them for the estimation of the spectral entropy as follows [5, 6]:

where p(k, l) is the probability density function estimated from the normalized DC values of all blocks, as shown in Fig. 8.

If we compare the PDFs of the normalized DC values for two IR images, the wider PDF span is evidence of better quality, as it corresponds to a higher spectral entropy.

10 Simulation results

This section presents several simulation experiments executed on four IR night vision images. The evaluation metrics for IR image quality are entropy, average gradient, Sobel edge magnitude and spectral entropy. The results are presented by showing the original IR images with their enhanced versions using the first, second and third proposed approaches, and comparing between them. The characteristics of the four IR images used in the experiments are given in Table 1.

The results of the first experiment are shown in Fig. 9. Fig. 9a gives the original IR night vision image. Fig. 9b gives the IR image after AWPH. Fig. 9c gives the IR image after APH. Fig. 9d gives the reference image for the first proposed approach. Fig. 9e gives the enhanced IR image using the first proposed approach. Fig. 9f gives the enhanced IR image using the second proposed approach. Fig. 9g gives the enhanced IR image using the third proposed approach.

Similar experiments have been carried out on other IR images and the results are given in Figs. 10, 11 and 12. The higher the values of the entropy, the average gradient and the Sobel edge magnitude, the better the image quality. It has been shown that this algorithm has succeeded in improving the visual quality of the IR image, and the best details have been obtained. From these results, it is clear that the third proposed approach has succeeded in obtaining the best results for the improvement of IR night vision images from both the visual quality and performance metrics perspectives, as given in Tables 2 and 3.

- Database IR Images

To further confirm the effectiveness of the first, second and third proposed approaches, experiments on several other datasets are presented [11, 18, 19, 21]. The “Dune” and “OTCBVS” images, with size 300 × 300 pixels, and the “car” images, with size 301 × 149 pixels, were provided by Shao et al. [12]. The results of the third proposed approach are shown in Figs. 11 and 12. The results illustrate that the third proposed approach is superior to the other methods. These results are given in Tables 2, 3, 4 and 5.

Table 2 shows the quality metrics of the first experiment. It gives the values of the entropy, the average gradient and the Sobel edge magnitude for the PHE, AWPH, BHE, the GC, the first proposed approach, the second proposed approach and the third proposed approach. Comparing the three proposed approaches with the other methods, it is clear that the third proposed approach has high values of the entropy, the average gradient and the Sobel edge magnitude. Comparing Fig. 9a with Fig. 9g, it is clear that the third proposed enhancement algorithm has enhanced the visual quality of the processed image for the first experiment.

Similar experiments have been carried out on other IR images and the results are given in Figs. 10 and 12. The higher the values of the entropy, the average gradient and the Sobel edge magnitude, the better the image quality. It has been shown that the third proposed algorithm has succeeded in improving the visual quality of the IR image, and the best details have been obtained. From these results, it is clear that the third proposed approach has succeeded in obtaining the best results for the improvement of IR night vision images from both the visual quality and performance metrics perspectives, as given in Tables 3, 4 and 5.

Table 3 shows the quality metrics of the second experiment. It gives the values of the entropy, the average gradient and the Sobel edge magnitude for the PHE, AWPH, BHE, the GC, the first proposed approach, the second proposed approach and the third proposed approach. Comparing the three proposed approaches with the other methods, it is clear that the third proposed approach has high values of the entropy, the average gradient and the Sobel edge magnitude. Comparing Fig. 10a with Fig. 10g, it is clear that the third proposed enhancement algorithm has enhanced the visual quality of the processed image for the second experiment.

Table 4 shows the quality metrics of the third experiment. It gives the values of the entropy, the average gradient and the Sobel edge magnitude for the PHE, AWPH, BHE, the GC, the first proposed approach, the second proposed approach and the third proposed approach. Comparing the three proposed approaches with the other methods, it is clear that the third proposed approach has high values of the entropy, the average gradient and the Sobel edge magnitude. Comparing Fig. 11a with Fig. 11g, it is clear that the third proposed enhancement algorithm has enhanced the visual quality of the processed image for the third experiment.

Table 5 shows the quality metrics of the fourth experiment. It gives the values of the entropy, the average gradient and the Sobel edge magnitude for the PHE, AWPH, BHE, the GC, the first proposed approach, the second proposed approach and the third proposed approach. Comparing the three proposed approaches with the other methods, it is clear that the third proposed approach has high values of the entropy, the average gradient and the Sobel edge magnitude. Comparing Fig. 12a with Fig. 12g, it is clear that the third proposed enhancement algorithm has enhanced the visual quality of the processed image for the fourth experiment.

The strategy of the quality evaluation based on spectral entropy depends on estimating the entropy of each block in the DCT domain. The spreading of the distribution of the HE, the BHE, the PHE and the AWPH is less than the spreading of the distribution of the third proposed approach. The more spreading of the distribution is a good indicator for better spectral quality of the enhanced image of the third proposed approach than the HE, the BHE, the PHE,the AWPH, the first proposed and the second proposed approaches. The obtained results of the spectral entropy for all experiments are shown in Figure. 13 and 16.

The Figure. 13a shows the distribution of the spectral quality of the original image for the first experiment is cramped with respect to the enhanced image after the for the AWPH. Figure. 13b shows that the distribution of the spectral quality of the original image is tight with respect to the enhanced image after the PHE. Figure. 13c shows that the distribution of the spectral quality of the original image is narrow with respect to the enhanced image after the first proposed approach. Figure. 13d shows the spreading distribution of the spectral quality of the enhanced image of the second proposed approach with respect to the original image. Figure. 13e shows the spreading distribution of the spectral quality of the enhanced image of the third proposed approach with respect to the original image. The obtained results show that, the third proposed approach is better than the AWPH, the PHE, the BHE, the HE, the first proposed and the second proposed approaches point view the spectral entropy for the first experiment.

The Figure. 14a shows the distribution of the spectral quality of the original image for the second experiment is tight with respect to the enhanced image after the for the AWPH. Figure. 14b shows that the distribution of the spectral quality of the original image is tight with respect to the enhanced image after the PHE. Figure. 14c shows that the distribution of the spectral quality of the original image is narrow with respect to the enhanced image after the first proposed approach. Figure. 14d shows the spreading distribution of the spectral quality of the enhanced image of the second proposed approach with respect to the original image. Figure. 14e shows the spreading distribution of the spectral quality of the enhanced image of the third proposed approach with respect to the original image. The obtained results show that, the third proposed approach is better than the AWPH, the PHE, the BHE, the HE, the first proposed and the second proposed approaches point view the spectral entropy for the second experiment.

The Figure. 15a shows the distribution of the spectral quality of the original image for the third experiment is narrow with respect to the enhanced image after the for the AWPH. Figure. 15b shows that the distribution of the spectral quality of the original image is tight with respect to the enhanced image after the PHE. Fig. 15c shows that the distribution of the spectral quality of the original image is narrow with respect to the enhanced image after the first proposed approach. Fig. 15d shows the spreading distribution of the spectral quality of the enhanced image of the second proposed approach with respect to the original image. Fig. 15e shows the spreading distribution of the spectral quality of the enhanced image of the third proposed approach with respect to the original image. The obtained results show that, the third proposed approach is better than the AWPH, the PHE, the BHE, the HE, the first proposed and the second proposed approaches point view the spectral entropy for the third experiment.

Figure 16a shows that, for the fourth experiment, the distribution of the spectral quality of the original image is narrow with respect to that of the image enhanced by the AWPH. Figure 16b shows that the distribution of the spectral quality of the original image is tight with respect to that of the image enhanced by the PHE. Figure 16c shows that the distribution of the spectral quality of the original image is narrow with respect to that of the image enhanced by the first proposed approach. Figure 16d shows the spread of the distribution of the spectral quality of the image enhanced by the second proposed approach with respect to the original image. Figure 16e shows the spread of the distribution of the spectral quality of the image enhanced by the third proposed approach with respect to the original image. The obtained results show that the third proposed approach is better than the AWPH, the PHE, the BHE, the HE, and the first and second proposed approaches from the spectral entropy point of view for the fourth experiment. From these results, it is clear that the third proposed approach gives superior results to the first and second proposed approaches from the quality metrics perspective. The first proposed approach is faster in implementation than the second and third proposed approaches.
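To make the notion of a tight versus a spread spectral distribution concrete, the following minimal sketch computes one common form of spectral entropy: the Shannon entropy of the normalized 2-D power spectrum. This definition is an assumption made for illustration and may differ in detail from the metric used in the experiments. The function names `dft_1d` and `spectral_entropy` are hypothetical, and a naive DFT is used only to keep the sketch dependency-free.

```python
import cmath
import math


def dft_1d(values):
    """Naive O(n^2) discrete Fourier transform of a sequence."""
    n = len(values)
    return [
        sum(values[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def spectral_entropy(image):
    """Shannon entropy (in bits) of the normalized 2-D power spectrum.

    `image` is a list of equal-length rows of numbers. This definition is an
    illustrative assumption, not necessarily the exact metric of the paper.
    """
    # 2-D DFT computed separably: transform the rows, then the columns.
    rows = [dft_1d(row) for row in image]
    cols = [dft_1d(col) for col in zip(*rows)]
    power = [abs(c) ** 2 for col in cols for c in col]
    total = sum(power)
    if total == 0:
        return 0.0
    # Treat the normalized power spectrum as a probability distribution.
    probs = [p / total for p in power]
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Flat image: all power sits in the DC term, so the spectrum is tight.
flat = [[100] * 8 for _ in range(8)]
# Single bright pixel: power is spread evenly over all 64 frequencies.
impulse = [[0] * 8 for _ in range(8)]
impulse[3][5] = 255

flat_entropy = spectral_entropy(flat)
impulse_entropy = spectral_entropy(impulse)
```

Under this definition, the flat image yields an entropy of essentially zero, while the single-pixel image reaches the maximum of 6 bits for an 8 x 8 grid. A wider spread of spectral energy therefore corresponds to a higher spectral entropy.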

11 Conclusion and future work

This paper has concentrated on enhancing IR night vision images from the visual quality and resolution perspectives. Toward this target, the paper has presented three proposed approaches for IR night vision image enhancement. For visual quality enhancement, histogram processing was the first direction followed.

Two approaches based on adaptive gamma correction were presented for IR night vision image enhancement. The first approach merges gamma correction with histogram matching, where the histogram matching is based on a reference visual image. The second approach is a hybrid of gamma correction with the CLAHE. Simulation results revealed that the first proposed approach gives superior results to the second one from the computation time perspective. On the other hand, the second proposed approach is better from the quality metrics perspective.
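The combination of gamma correction with histogram matching can be sketched as follows. This is a minimal illustration rather than the implementation used in the experiments. It assumes 8-bit gray levels stored in flat lists, a fixed gamma value, and gamma correction applied before the matching step. The function names and the sample pixel values are hypothetical.

```python
def gamma_correct(pixels, gamma):
    """Apply out = 255 * (in / 255) ** gamma to 8-bit gray levels."""
    lut = [round(255 * (v / 255) ** gamma) for v in range(256)]
    return [lut[p] for p in pixels]


def cdf(pixels):
    """Normalized cumulative histogram over the 256 gray levels."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total, running, out = len(pixels), 0, []
    for count in hist:
        running += count
        out.append(running / total)
    return out


def match_histogram(pixels, reference):
    """Map each gray level to the first reference level whose CDF reaches it."""
    src_cdf, ref_cdf = cdf(pixels), cdf(reference)
    lut, j = [], 0
    for level in range(256):
        # Both CDFs are non-decreasing, so j only ever moves forward.
        while j < 255 and ref_cdf[j] < src_cdf[level]:
            j += 1
        lut.append(j)
    return [lut[p] for p in pixels]


# Hypothetical data: a dark, low-contrast IR patch and a reference patch
# whose gray levels cover the full 8-bit range.
ir = [10, 10, 20, 20, 30, 30, 40, 40]
visible = [0, 36, 73, 109, 146, 182, 219, 255]

# Assumed order: gamma correction (gamma < 1 brightens) and then matching.
enhanced = match_histogram(gamma_correct(ir, 0.5), visible)
```

In this toy case the four dark levels of the IR patch are stretched across the dynamic range of the reference patch, which is the effect that histogram matching to a visual image is intended to produce.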

Simulation results revealed that histogram matching to visual images is a simple and efficient enhancement method for IR night vision images. Moreover, we found that limited histogram equalization is preferred to the unlimited one because of the constraints it places on the pixel values.
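The preference for limited over unlimited equalization can be illustrated with a small sketch. It assumes a single global clip on the histogram bins, in the spirit of plateau histogram equalization; it is not the tile-based CLAHE, and the function name `equalize`, the `plateau` parameter and the sample data are hypothetical.

```python
def equalize(pixels, plateau=None):
    """Histogram equalization of 8-bit pixels.

    If `plateau` is given, every histogram bin is clipped to that count
    before the cumulative mapping is built (plateau-limited equalization).
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    if plateau is not None:
        hist = [min(count, plateau) for count in hist]
    total = sum(hist)
    lut, running = [], 0
    for count in hist:
        running += count
        lut.append(round(255 * running / total))
    return [lut[p] for p in pixels]


# Hypothetical patch: a large uniform background (level 20) and a small,
# slightly warmer target (levels 21 and 22).
patch = [20] * 96 + [21] * 2 + [22] * 2

plain = equalize(patch)               # unlimited: background dominates
limited = equalize(patch, plateau=2)  # bins clipped to 2 counts each

plain_contrast = plain[-1] - plain[0]        # target minus background
limited_contrast = limited[-1] - limited[0]
```

With the unlimited mapping, the dominant background bin consumes almost the whole output range, leaving only 10 gray levels between background and target. Clipping the bins leaves 170 levels between them in this example.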

Another direction adopted in this framework for visual quality enhancement of IR night vision images is a trilateral contrast enhancement approach. It depends on three stages: segmentation, enhancement and sharpening. This third proposed approach is based on an enhancement stage using AWTH. Simulation results revealed that this approach gives superior results to the HE, the BHE, the PHE and the AWPH approaches from the quality metrics perspective. Comparing the three proposed approaches, it is clear that the third gives superior results to the other two from the quality metrics point of view. On the other hand, the third proposed approach takes a longer computation time than the other two. In future work, the three suggested approaches could be combined with deep learning concepts for object detection in the obtained IR images.
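The segmentation stage of the third approach relies on Otsu's global thresholding. A minimal sketch of that thresholding step is given below, assuming 8-bit gray levels in a flat list. The function name and the sample data are hypothetical, and the enhancement and sharpening stages are not covered.

```python
def otsu_threshold(pixels):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form one class and the remaining pixels the other.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below the candidate threshold
    sum0 = 0  # sum of gray levels at or below the candidate threshold
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the variance is undefined
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2.
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


# Hypothetical bimodal patch: cool background and warm target.
patch = [20] * 6 + [30] * 4 + [200] * 5 + [220] * 5
threshold = otsu_threshold(patch)
target_mask = [p > threshold for p in patch]
```

For this bimodal patch the threshold falls at the upper edge of the dark cluster, so the mask separates the ten warm pixels from the ten background pixels.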



Cite this article

Ashiba, H.I., Ashiba, M.I. Super-efficient enhancement algorithm for infrared night vision imaging system. Multimed Tools Appl 80, 9721–9747 (2021). https://doi.org/10.1007/s11042-020-09928-w
